Abstract

Post‐lingual deafness induces a decline in the ability to process phonological sounds or evoke phonological representations. This decline is paralleled with abnormally high neural activity in the right posterior superior temporal gyrus/supramarginal gyrus (PSTG/SMG). As this neural plasticity negatively relates to cochlear implantation (CI) success, it appears important to understand its determinants. We addressed the neuro‐functional mechanisms underlying this maladaptive phenomenon using behavioral and functional magnetic resonance imaging (fMRI) data acquired in 10 normal‐hearing subjects and 10 post‐lingual deaf candidates for CI. We compared two memory tasks where subjects had to evoke phonological (speech) and environmental sound representations from visually presented items. We observed dissociations in the dynamics of right versus left PSTG/SMG neural responses as a function of duration of deafness. Responses in the left PSTG/SMG to phonological processing and responses in the right PSTG/SMG to environmental sound imagery both declined. However, abnormally high neural activity was observed in response to phonological visual items in the right PSTG/SMG, i.e., contralateral to the zone where phonological activity decreased. In contrast, no such responses (overactivation) were observed in the left PSTG/SMG in response to environmental sounds. This asymmetry in functional adaptation to deafness suggests that maladaptive reorganization of the right PSTG/SMG region is not due to balanced hemispheric interaction, but to a specific take‐over of the right PSTG/SMG region by phonological processing, presumably because speech remains behaviorally more relevant to communication than the processing of environmental sounds. These results demonstrate that cognitive long‐term alteration of auditory processing shapes functional cerebral reorganization. Hum Brain Mapp, 2013. © 2012 Wiley Periodicals, Inc.

Keywords: disinhibition, fMRI, imagery, phonology, plasticity, sound memory, supramarginal gyrus

INTRODUCTION

Cochlear implant (CI) is a hearing restoration technique used in congenital and acquired severe to profound sensorineural deafness that relies on transforming acoustic information into electric input applied to the auditory nerve. In post‐linguistically deaf adults, duration of auditory deprivation is a factor known to contribute to speech recognition ability after implantation [Blamey et al., 1996]. Additional factors contribute to outcome variability, e.g., pre‐implant residual hearing [Rubinstein et al., 1999], but to date none of the identified clinical variables fully explains all of the variability [Blamey et al., 1996; Giraud and Lee, 2007; Green et al., 2007]. Other factors may intervene, including the amount and trajectory of cerebral plasticity that may have taken place during the period of deafness [Giraud and Lee, 2007; Lazard et al., 2010b; Strelnikov et al., 2010].

The right posterior superior temporal gyrus/supramarginal gyrus (PSTG/SMG) undergoes a profound reorganization during deafness that is manifest in a hypermetabolism at rest [Giraud and Lee, 2007; Lee et al., 2007a], and in abnormal activation levels during visual speech tasks (rhyming and lip‐reading tasks) [Lazard et al., 2010b; Lee et al., 2007b]. We previously argued that the involvement of this region in visually driven speech processing may be maladaptive, as it predicts poor phonological scores in deaf subjects and poor auditory speech perception after receiving a CI [Lazard et al., 2010b]. The progressive engagement of the right PSTG/SMG following auditory deprivation duration is observed in conjunction with the disengagement of the left PSTG/SMG [Lee et al., 2007b], a cortical region that normally underpins phonological processing [Binder and Price, 2001]. The engagement of the right PSTG/SMG hence presumably follows from reduced neural activity in the left homologue reflecting phonological decline when auditory feedback is lacking [Andersson et al., 2001; Lazard et al., 2010b; Lee et al., 2007b; Schorr et al., 2005]. The decline in phonological skills in post‐lingual deaf subjects [Anderson et al., 2002; Lazard et al., 2010b] could also arise from more general alterations of auditory memory (not exclusively phonological memory) due to the sparseness of auditory inputs [Andersson et al., 2001].

As the right PSTG/SMG is normally more involved in environmental sound processing [Lewis et al., 2004; Toyomura et al., 2007] than in phonological processing [Hartwigsen et al., 2010], we conducted a functional magnetic resonance imaging (fMRI) task in 10 post‐lingual deaf subjects and 10 normal‐hearing controls. Participants had to perform a mental imagery task in which they had to retrieve environmental sounds from pictures of noisy objects [Halpern and Zatorre, 1999]. Seven of the deaf subjects had previously participated in another fMRI study, in which they performed a rhyming task on visual material [Lazard et al., 2010b]. We re‐analyzed these data and compared the dynamics of functional interactions across the two PSTG/SMG regions during phonological and environmental sound processing using a cross‐sectional approach. This approach involves observing deaf patients at one single time point, where they exhibit different durations of auditory deprivation and evolving hearing loss (i.e., from the beginning of hearing aid use). This represents an alternative to more demanding longitudinal studies, in which neuroplasticity is followed‐up in each patient.

Unilateral cortical lesions can induce further dysfunction in the same cortical hemisphere [He et al., 2007; Price et al., 2001] or elicit a compensation by recruiting homologous areas in the contralateral hemisphere [Heiss et al., 1999; Winhuisen et al., 2005, 2007], e.g., through disinhibition of transcallosal connections [Thiel et al., 2006; Warren et al., 2009]. As most cognitive functions are asymmetrically implemented in the brain [Formisano et al., 2008; Hickok and Poeppel, 2007; Hugdahl, 2000], the engagement of the contralateral cortex may be less efficient than the primary functional organization, and hence maladaptive [Kell et al., 2009; Marsh and Hillis, 2006; Martin and Ayala, 2004; Naeser et al., 2005; Preibisch et al., 2003; van Oers et al., 2010].

We assumed that the ability to mentally evoke stored sounds, whether phonological or environmental, i.e., auditory memory, declines during acquired deafness. We thus expected to observe a parallel decrease in neural responses to phonological processing in the left PSTG/SMG and to environmental sound imagery in the right PSTG/SMG. If demonstrated, the latter loss of cortical function could play a facilitating role in the enhanced engagement of the right PSTG/SMG in phonological processing [Lazard et al., 2010b]. This asymmetry in brain plasticity could reflect the fact that maintaining environmental sound processing during deafness is less relevant than maintaining oral communication. Alternatively, however, if communication needs play no special role in neural plasticity, there should be no asymmetry in neural adaptation to the decline in the processing of phonological and environmental sounds. We, therefore, expected our results to distinguish between two possibilities: (i) if right phonological maladaptive plasticity results from reciprocal hemispheric interaction, a decline in environmental sound processing should prompt equivalent over‐activation in the left PSTG/SMG during the evocation of sounds; (ii) if phonological maladaptive plasticity is facilitated by environmental sound decline and the stronger need to maintain efficient oral communication, only the right PSTG/SMG should display an over‐activation. From a clinical perspective, validating the latter hypothesis would imply that it may be important to train environmental sound memory in profound hearing deficits because it prevents the reorganization of the right PSTG/SMG, a functional adaptation that is deleterious to the success of cochlear implantation.

METHODS

Subjects (Table I)

Table I.

Clinical data for the ten cochlear implant candidates enrolled in the sound imagery task

| Subject number | Sex | Age at experiment (years) | Duration of bilateral HL (years) | Duration of auditory deprivation (months) | WRS pre‐CI (a) (%, 60 dB HL) | WRS post‐CI (%, 60 dB HL) |
|---|---|---|---|---|---|---|
| 1 | F | 67.7 | 46 | 36 | 0 | 48 |
| 2 | M | 45 | 26 | 4 | 0 | 41 |
| 3 | F | 32 | 10 | 12 | 0 | 69 |
| 4 | M | 57.9 | 41 | 16 | 0 | 84 |
| 5 | F | 59 | 11 | 36 | 0 | 88 |
| 6 | F | 56.2 | 23 | 36 | 0 | 24 |
| 7 | F | 25.5 | 0.5 | 5 | 0 | 86 |
| 8 | F | 56 | 22 | 48 | 0 | 48 |
| 9 | F | 73 | 16 | 8 | 0 | 86 |
| 10 | F | 54.7 | 30 | n/a | 50 | 28 |

HL: hearing loss; WRS: word recognition scores.

The 7 patients who also participated in the phonological task are numbered 1, 2, 3, 6, 8, 9, and 10.

Duration of bilateral hearing loss represents the time elapsed since subjective auditory acuity decrease, leading to the use of hearing aids.

Duration of auditory deprivation represents the time elapsed since subjects could no longer communicate by hearing, even with the best‐fitted hearing aid, without lip‐reading. Because Subject 10 had some residual hearing, this definition was not applicable in her case.

(a) With optimally fitted hearing aids, Lafon test (three‐phoneme monosyllabic words).

Twenty adults participated in this fMRI study, which was approved by the local ethics committee (CPP, Sud‐Est IV, Centre Léon Bérard, Lyon, France). Written informed consent was obtained from all participants. The samples were composed of 10 post‐lingually severe to profound deaf subjects (8 women and 2 men, mean age ± standard deviation = 53 ± 14.7 years), who were candidates for cochlear implantation, and 10 age‐matched normal‐hearing controls (6 women and 4 men, mean age ± standard deviation = 41 ± 13.8 years). The hearing of subjects was assessed by audiometric testing. Normal hearing for control subjects was defined by pure tone thresholds ≤ 20 dB hearing loss (HL) for frequencies from 500 to 4,000 Hz. All subjects had normal or corrected‐to‐normal vision, no history of neurological pathology, and were right‐handed according to the Edinburgh handedness inventory [Oldfield, 1971]. CI candidates 1–9 (Table I) met the classical criteria for cochlear implantation, i.e., average of 0.5, 1, and 2 kHz hearing threshold >90 dB, <30% sentence recognition score with best‐fitted hearing aid (according to NIH Consensus Statement [1995]), and no word recognition using a list composed of three‐phoneme monosyllabic words presented at 60 dB (HL) [Lafon test, Lafon, 1964] with best‐fitted hearing aid. CI candidate number 10 was referred to cochlear implantation in agreement with extended indications for implantation (≤50% sentence recognition score with best‐fitted hearing aids) [Cullen et al., 2004]. The evolution of her deafness from mild to severe had been steep and occurred during childhood. It then stayed stable for many years until this study was performed. All but one CI candidate had progressive sensorineural hearing loss and wore hearing aids. Subject number 7 had bilateral petrous bone fracture, yielding sudden deafness. Hearing loss was first severe and rapidly deteriorated within 6 months into profound deafness. No attempt was made to fit a hearing aid for this subject.
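As a quick consistency check on the group description, the mean age and standard deviation of the CI candidates can be recomputed from the ages listed in Table I. The sketch below assumes the reported value is a sample standard deviation (n − 1 denominator), which the text does not state; under that assumption the result should land close to the reported 53 ± 14.7 years.

```python
from statistics import mean, stdev

# Ages at experiment (years) for CI candidates 1-10, copied from Table I.
ages = [67.7, 45, 32, 57.9, 59, 56.2, 25.5, 56, 73, 54.7]

age_mean = mean(ages)  # arithmetic mean
age_sd = stdev(ages)   # sample standard deviation (assumed n - 1 denominator)

print(f"CI candidates: {age_mean:.1f} ± {age_sd:.1f} years")
```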

Table I provides the duration of auditory deprivation, i.e., the time elapsed since subjects could no longer communicate by hearing, even with the best‐fitted hearing aids. Subject 10 was excluded from correlations between duration of auditory deprivation and imaging results because she presented some residual hearing. Table I also provides the duration of hearing loss, i.e., the time elapsed since subjective auditory acuity decreased, leading to the use of hearing aids. The use of hearing aids was bilateral in the case of bilateral progressive hearing loss (Subjects 2–6 and 8–10), or unilateral in the case of progressive hearing loss in one ear and sudden severe deafness in the other ear (idiopathic etiology 15 years before, Subject 1).

None of the ten CI candidates used sign language, and they all relied on speechreading and written language for communication. Deaf patients were implanted with either a Cochlear device (Melbourne, Australia) or an MXM device (Vallauris, France). Word recognition scores were measured 6 months after implantation using the Lafon test (as for the preoperative assessment).

Experimental Paradigm

fMRI data were acquired during mental imagery of sounds evoked by visually presented items. This experiment was performed by controls and CI candidates prior to surgery. Acquired data were related to post‐operative word recognition scores and clinical variables (auditory deprivation duration and hearing loss duration) in the latter group.

Subjects were asked to retrieve the sound produced by a visually presented object or animal (black and white pictures). Objects included musical instruments (piano, trumpet, etc.), means of transportation (car, train, plane, etc.), and other everyday objects such as a door and a clock. Two control tasks consisted of either imagining the color of those items (only silent objects, e.g., shirt, snowman) or silently naming them. The aim of the color imagery task was to isolate sound memory processing specifically. The aim of the naming task was to eliminate subjects with semantic dysfunctions. Conditions were randomized across subjects. The visual stimuli comprised 80 pictures, all shown once. Subjects were requested to self‐rate their ability to mentally evoke the sound or color of each item or to name them. They gave their response by button press (left button if possible, right button if not) after each presentation within the scanner. Answers and related reaction times were recorded. No objective control was provided in this experiment because the items used for the experiment were sampled from everyday life (piano, car, dog…) and could not be unknown to the participants [Bunzeck et al., 2005]. The fMRI experiment included two runs with an event‐related design. Images were presented for 1.5 sec and were followed by a fixation cross whose duration randomly varied from 1 to 7 sec. A screen showing written instructions (3.5 sec) preceded each image. All subjects performed a training session using Presentation software version 9.90 (Neurobehavioral Systems, Albany, CA).
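The trial timing described above (3.5 sec instruction screen, 1.5 sec picture, 1 to 7 sec fixation cross) can be sketched as a simple onset schedule. This is only an illustration: the uniform jitter distribution, the even split of the 80 pictures into 40 trials per run, and the names `build_schedule` and `seed` are assumptions that the text does not specify.

```python
import random

INSTRUCTION_S = 3.5             # written instruction screen
PICTURE_S = 1.5                 # picture presentation
FIXATION_RANGE_S = (1.0, 7.0)   # jittered fixation cross (assumed uniform)

def build_schedule(n_trials, seed=0):
    """Return (instruction_onset, picture_onset, fixation_s) for each trial."""
    rng = random.Random(seed)
    t = 0.0
    schedule = []
    for _ in range(n_trials):
        instruction_onset = t
        picture_onset = instruction_onset + INSTRUCTION_S
        fixation_s = rng.uniform(*FIXATION_RANGE_S)
        schedule.append((instruction_onset, picture_onset, fixation_s))
        # The next trial starts once the picture and the fixation cross are over.
        t = picture_onset + PICTURE_S + fixation_s
    return schedule

# Hypothetical run length: 80 pictures split evenly over two runs.
schedule = build_schedule(n_trials=40)
print(schedule[:3])
```

Picture onsets of this kind are what an event‐related model would typically take as regressor onsets.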

Cerebral activity of the left and right PSTG/SMG was compared in seven CI candidates who also performed a phonological (rhyming) task in a separate session. The phonological task consisted of comparing the endings of two visually presented common regular French words. The material involved three possible phonological endings with different orthographic spellings, so that judgements could not be based on orthography, but had to be based solely on phonology. The results from this session are reported in a separate manuscript [Lazard et al., 2010b]. The stimuli comprised 80 words. The same scanner and the same methods of acquisition as in the imagery task were used. Subjects were requested to determine whether words rhymed or not by a button press after each presentation of pairs of words. The seven subjects who performed this task are numbered 1–3, 6, and 8–10 in Table I.

Imaging Parameters for fMRI Experiment

Gradient echo‐planar fMRI data with blood oxygenation level dependent contrast were acquired with a 1.5 T magnetic resonance scanner (Siemens Sonata, Medical Systems, Erlangen, Germany) with standard head coil to obtain volume series with 33 contiguous slices (voxel size 3.4 mm × 3.4 mm × 4 mm, no gap, repetition time 2.95 sec, echo time 60 msec) covering the whole brain. Earplugs (mean sound attenuation 30 dB) and earmuffs (mean sound attenuation 20 dB) were provided both to controls and deaf subjects to equate as much as possible the experimental environments of both groups. We acquired 498 functional images in two runs per subject.
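The acquisition parameters above imply a slab coverage and a total scanning time that are easy to derive. The short sketch below does the arithmetic, assuming that the 498 functional images are the total over both runs (the sentence could also be read as 498 per run).

```python
N_SLICES = 33
SLICE_THICKNESS_MM = 4.0   # contiguous slices, no gap
TR_S = 2.95                # repetition time per volume
N_VOLUMES = 498            # assumed total over the two runs

coverage_mm = N_SLICES * SLICE_THICKNESS_MM   # extent covered along the slice axis
total_scan_s = N_VOLUMES * TR_S               # functional acquisition time
total_scan_min = total_scan_s / 60

print(f"coverage: {coverage_mm:.0f} mm, scan time: {total_scan_min:.1f} min")
```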

Data Analyses

Answers and reaction times (expressed as means ± standard deviation) were compared across groups using non‐parametric Mann–Whitney–Wilcoxon tests.
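For readers unfamiliar with the Mann–Whitney–Wilcoxon test, the following is a minimal standard‐library sketch of the rank‐sum statistic. It uses a tie‐corrected normal approximation without continuity correction, which is an assumption made for brevity; the source does not say which variant or software was used, and with groups of 10 an exact test would be preferable.

```python
import math
from collections import Counter

def midranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney_u(x, y):
    """Return (U statistic of x, two-sided p from the normal approximation)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction: sum of (t^3 - t) over groups of tied values.
    ties = sum(t**3 - t for t in Counter(pooled).values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var == 0:
        return u1, 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(var)
    return u1, math.erfc(abs(z) / math.sqrt(2))

# Toy values chosen only to exercise the function, not study data.
u, p = mann_whitney_u([1, 2, 3], [4, 5, 6])
print(u, p)
```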

The fMRI data were analyzed using SPM5 (Statistical Parametric Mapping, Centre for Neuroimaging, London, UK, http://www.fil.ion.ucl.ac.uk/spm) in a Matlab 7.1 (Mathworks, Natick, MA) environment and displayed using MRIcron software (http://www.sph.sc.edu/comd/rorden/mricron). We performed standard preprocessing (realignment, unwarping, normalization, and spatial smoothing with an 8‐mm full width at half‐maximum Gaussian kernel), and calculated contrast images versus baseline in each single subject for each task (imagine sound, imagine color, and naming). We performed group analyses of each contrast (one‐sample t‐tests), plus contrasts comparing each condition with every other. Group differences (simple group effects and group‐by‐task interactions) between CI candidates and controls in the sound imagery task (minus color) were also explored using ANOVA modeling groups and conditions, and two‐sample t‐tests. In group‐by‐task interactions, results were masked by the simple main effect of sound imagery in each sample (inclusive mask). One‐sample t‐test analyses were reported using the false discovery rate (FDR) correction thresholded at P < 0.05. Uncorrected statistics were used for the two‐sample t‐tests and interactions at P < 0.001. Correlations with behavioral data (i.e., hearing loss/deafness duration, post‐CI word recognition) were also tested using non‐parametric Spearman tests (P < 0.05).
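To make the FDR thresholding step concrete, here is a minimal sketch of the Benjamini–Hochberg step‐up procedure applied to a list of p‐values. It assumes that the FDR correction used here follows this procedure, which the text does not specify; the function name `benjamini_hochberg` and the example p‐values are illustrative only and are not taken from the study.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which tests survive FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k (1-based) with p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Every test ranked at or below k_max is declared significant.
    survives = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            survives[idx] = True
    return survives

# Arbitrary p-values used only to exercise the procedure.
example_p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
print(benjamini_hochberg(example_p, q=0.05))
```

Because the rule is step‐up, a p‐value that fails its own rank threshold can still survive if a larger p‐value passes a later threshold.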

For the CI candidate group, we entered contrast images into a regression analysis using post‐CI word recognition scores, hearing loss duration, and auditory deprivation duration. Because we used these whole‐brain analyses only to confirm post‐hoc correlations, we used a P = 0.01 threshold, which is acceptable when exploring a‐priori defined regions (see Table III).

Table III.

Areas of significant activation for the sound imagery task in deaf subjects, task‐by‐group interaction (sound more than color imagery), and brain correlation between neural response to sound imagery and auditory deprivation and CI outcomes

| Region | L/R | Contrast | MNI coordinates | P | Z score |
|---|---|---|---|---|---|
| Inf frontal gyrus | L | Sound>Baseline | −46, 24, −16 | FDR 0.02 | 4.41 |
| | | Controls>Patients | −42, 4, 2 | Uncorr 0.001 | 3.09 |
| Sup frontal gyrus | L | Sound>Baseline | −34, 56, 18 | FDR 0.04 | 3.93 |
| Cerebellum | L | Sound>Baseline | −22, −70, −30 | FDR 0.02 | 4.26 |
| Cerebellum | R | Sound>Baseline | 44, −72, −28 | FDR 0.008 | 5.34 |
| PSTG/SMG | R | Controls>Patients | 60, −66, 8 | Uncorr 0.002 | 2.91 |
| | | Neg correlation with HL | 62, −46, 26 | Uncorr 0.0001 | 4.03 |
| | | Pos correlation with CI | 60, −46, 26 | Uncorr 0.04 | 1.75 |
| Middle STG | R | Neg correlation with CI | 46, −12, 12 | Uncorr 0.0001 | 3.74 |

Inf: inferior, Sup: superior; Neg: negative; Pos: positive; HL: hearing loss.

Values of the peak voxel for the right and left PSTG/SMG in the two tasks (sound imagery and phonological processing) were extracted for the CI candidates (n = 10 for the imagery task and n = 7 in common for the phonology task) and the respective controls. Values were compared across groups using non‐parametric Mann–Whitney–Wilcoxon tests, and tested for correlations with deafness duration (i.e., either hearing loss or auditory deprivation durations) and post‐CI word recognition scores.
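The correlation step can be illustrated with a minimal standard‐library sketch of Spearman's rank correlation, computed as the Pearson correlation of mid‐ranks. The extracted peak‐voxel values are not listed in the text, so the example input below uses two clinical columns of Table I (Subjects 1–9) purely to show the mechanics; it does not reproduce any analysis reported in this study, and the function names are illustrative.

```python
import math

def midranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on mid-ranks."""
    return pearson(midranks(x), midranks(y))

# Table I, Subjects 1-9 (Subject 10 has no auditory deprivation duration).
deprivation_months = [36, 4, 12, 16, 36, 36, 5, 48, 8]
wrs_post_ci = [48, 41, 69, 84, 88, 24, 86, 48, 86]

rho_example = spearman_rho(deprivation_months, wrs_post_ci)
print(f"rho = {rho_example:.2f}")
```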

RESULTS

Behavioral Assessment and Control Tasks

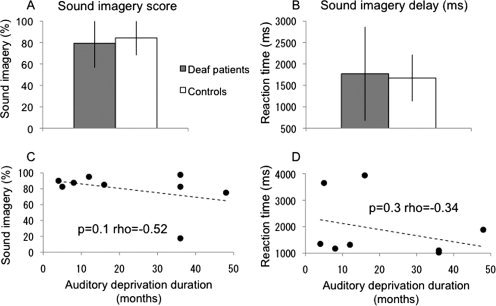

In the sound imagery task, the mean self‐estimations of sound imagery were 79% (s.d. ± 23) and 84% (s.d. ± 16), and the delays to imagine the sounds corresponding to the images were 1,771 msec (s.d. ± 1,096) and 1,673 msec (s.d. ± 546), for CI candidates and controls, respectively. These behavioral data did not statistically differ across groups (Fig. 1A,B). Although self‐estimated performance did not differ across groups, we tested for a possible decline in sound imagery as a function of deafness duration. There was no significant correlation for scores or reaction times (Fig. 1C,D).

Figure 1.

Behavioral results during fMRI imagery tasks: (A) Performance, (B) Reaction times. CI candidates and controls did not differ in either measure. C, D: Correlations between behavioral and clinical data (auditory deprivation duration). Sound imagery showed a slight trend to decrease with auditory deprivation.

Regarding the control tasks during scanning, we did not observe a group difference for the control task “imagine color.” Crucially, there was also no group difference in the naming task that we used to assure that CI candidates did not have any semantic deficit. This control task was entered in the statistical model but not used in specific contrasts, as it was not central to our hypotheses.

Sound Imagery in Normal Hearing and Post‐Lingually Deaf Subjects

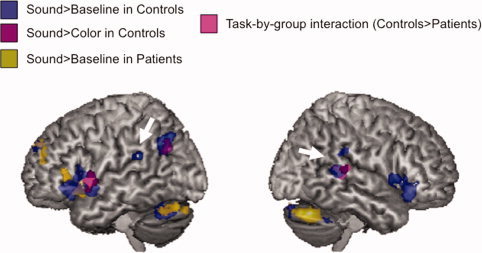

In normal hearing subjects, sound imagery relative to baseline (Fig. 2, blue blobs and Table II) activated the bilateral inferior frontal gyri, the bilateral PSTG/SMG, the bilateral cerebellum, the left anterior superior temporal gyrus (STG), the left superior frontal gyrus, and the left inferior parietal/angular gyrus. Sound imagery relative to color imagery (Fig. 2, purple blobs and Table II) activated the left inferior and superior frontal gyrus, the left inferior parietal lobule, and the right PSTG/SMG. Many of these regions were not significantly activated in deaf patients (Fig. 2, yellow blobs and Table III), who performed the task recruiting only the left inferior and superior frontal gyri and the bilateral cerebellum. Notably, they recruited none of the brain regions usually involved in environmental sound acoustic analysis and representation (i.e., the left parietal lobule and the right posterior temporal cortex) [Engel et al., 2009; Kraut et al., 2006; Lewis et al., 2004]. No activation during sound relative to color imagery in the CI candidate group was observed. We detected a task‐by‐group interaction. Control subjects showed stronger neural activation than patients (sound>color; p < 0.001, uncorrected, Fig. 2, pink blobs and Table III) in the left inferior frontal gyrus and the right PSTG/SMG.

Figure 2.

Sound imagery in normal hearing and post‐lingually deaf subjects. Surface rendering for the main contrasts: in controls, sound more than baseline (blue blobs) and sound more than color (purple blobs); in CI candidates, sound more than baseline (yellow blobs); in the task‐by‐group interaction, controls more than patients and sound imagery more than color (pink blobs). White arrows indicate our regions of interest. For illustration purposes, an uncorrected p < 0.01 voxel level threshold was used, minimum voxel size 50. Reported blobs were significant at p < 0.05 FDR corrected.

Table II.

Areas of significant activation for the sound imagery task in normal hearing subjects

| Region | L/R | Contrast | MNI coordinates | P | Z score |
|---|---|---|---|---|---|
| Inf frontal gy./insula anterior STG | L | Sound>Baseline | −50, 10, 21 | FDR 0.008 | 5.36 |
| | | Sound>Color | −42, 4, 6 | Uncorr 0.0001 | 3.89 |
| | | Sound>Baseline | −54, 4, −6 | FDR 0.01 | 4.51 |
| Inf frontal gyrus | R | Sound>Baseline | 60, 14, 0 | FDR 0.01 | 4.95 |
| Sup front gyrus | L | Sound>Baseline | −22, 32, 48 | FDR 0.01 | 4.06 |
| | | Sound>Color | −26, 42, 46 | Uncorr 0.0001 | 4.60 |
| Inf parietal lobule/angular gyrus | L | Sound>Baseline | −44, −66, 38 | FDR 0.01 | 4.81 |
| | | Sound>Color | −46, −70, 28 | Uncorr 0.0001 | 3.90 |
| PSTG/SMG | L | Sound>Baseline | −62, −40, 22 | FDR 0.01 | 4.73 |
| PSTG/SMG | R | Sound>Baseline | 70, −40, 10 | FDR 0.01 | 4.30 |
| | | Sound>Baseline | 70, −34, 28 | FDR 0.02 | 3.41 |
| | | Sound>Color | 70, −34, 10 | Uncorr 0.0001 | 3.55 |
| Cerebellum | L | Sound>Baseline | −28, −56, −32 | FDR 0.01 | 4.85 |
| Cerebellum | R | Sound>Baseline | 16, −82, −24 | FDR 0.01 | 4.71 |

Inf: inferior, Sup: superior, gy: gyrus.

Comparing Processing of Sound Imagery and Phonology in CI Candidates and Normal‐Hearing Controls

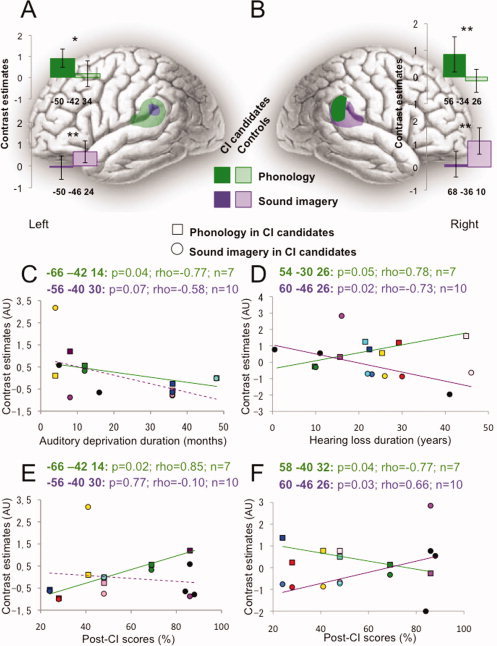

As 7 of the 10 CI candidates also performed a phonological rhyming task [Lazard et al., 2010b] in a separate session, we could compare responses in the left and right PSTG/SMG in each task. Patterns of activation in normal‐hearing subjects and in CI candidates, depicted in Figure 3A,B, were inverted in the two groups. In normal‐hearing controls during the sound imagery task, the region that was most strongly activated was the right PSTG/SMG (Fig. 3B, light purple, p < 0.01 relative to the activation of the left PSTG/SMG, 3A light purple), and during the phonological task, the left PSTG/SMG, even though slightly activated, was significantly more activated than the right PSTG/SMG (p < 0.01; Fig. 3A,B, light green). Conversely, the CI candidates over‐activated both right and left PSTG/SMG during the phonological task (significant group difference in the right PSTG/SMG, p < 0.01, and in the left PSTG/SMG, p = 0.02, Fig. 3A,B, dark green, and stars), but CI candidates did not activate these regions during the imagery task (significant group differences in the right and left PSTG/SMG, p < 0.01, Fig. 3A,B, dark purple, and stars).

Figure 3.

Comparing residual processing of environmental sound and phonology in CI candidates, and as a function of deafness and post‐CI scores. A: Left and (B) right PSTG/SMG activation during the phonological (green blobs and histograms) and sound imagery (purple blobs and histograms) tasks, in CI candidates (dark colors) and controls (transparent colors). *p < 0.05; **p < 0.01. C–F: Each subject who participated in both tasks (n = 7) is individualized by a specific color. Squares are for the phonological task, circles for the sound imagery task. The three other subjects are black‐colored. C: Neural activity of the left PSTG/SMG decreased with auditory deprivation duration in both tasks in CI candidates. D: Neural activity of the right PSTG/SMG decreased with hearing loss duration in the sound imagery task but increased in the phonological task, in CI candidates. E: Neural activity of the left PSTG/SMG positively correlated with post‐CI scores in the phonological task. F: Neural activity of the right PSTG/SMG positively correlated with post‐CI scores in the sound imagery task but negatively in the phonological task.

Activity in PSTG/SMG and Deafness Durations (Auditory Deprivation and Hearing Loss Durations)

We assessed how activity was related to deafness duration and post‐implant speech perception scores in bilateral PSTG/SMG. Despite low individual activation levels in these regions in the CI candidate group for the sound imagery task (not significantly different from baseline), correlations indicated that the response inter‐subject variability was meaningfully related to CI outcome (Fig. 3F). The levels of activation of the right PSTG/SMG during sound imagery and the left PSTG/SMG during phonological processing were both negatively correlated with the duration of deafness (with the duration of hearing loss for the right PSTG/SMG shown in Fig. 3D and Supporting Information Fig. 1, and with the duration of auditory deprivation for the left PSTG/SMG shown in Fig. 3C and Supporting Information Fig. 1). Regarding contralateral recruitment, different behaviors were observed between the right and left PSTG/SMG. The involvement of the left PSTG/SMG in sound imagery tended to decrease with the duration of auditory deprivation (Fig. 3C and Supporting Information Fig. 1), while the over‐activation in phonological processing of the right PSTG/SMG increased with hearing loss duration (Fig. 3D and Supporting Information Fig. 1).

Activity in PSTG/SMG and Cochlear Implant Outcome

Phonological processing by the left PSTG/SMG correlated positively with post‐implant speech perception measured at 6 months (Fig. 3E), as did the level of activation in the right PSTG/SMG during environmental sound imagery (Fig. 3F). Conversely, enhanced phonological responses by the right PSTG/SMG negatively correlated with post‐CI scores (Fig. 3F). Note that those deaf subjects who did not over‐activate the left PSTG/SMG during the phonological task were those for whom the right PSTG/SMG was recruited most strongly for the same task (Fig. 3E,F).

Whole Brain Regressions With Hearing Loss Duration and CI‐Outcome

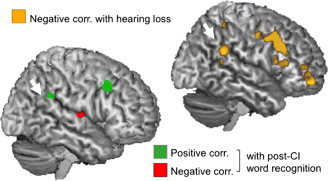

We confirmed our results using independent whole brain regression analyses. The activation of the right PSTG/SMG during sound imagery (Fig. 4 and Table III) correlated positively with post‐CI speech perception, and negatively with hearing loss duration. These results confirm that although the right PSTG/SMG progressively disengages from environmental sound processing, its activation is associated with good speech perception scores. The negative correlation with post‐CI scores showed that subjects who reorganized the right PSTG/SMG to process phonology performed environmental sound imagery by recruiting alternative regions, namely the right middle STG (Fig. 4, red blob and Table III).

Figure 4.

Right hemisphere and surface rendering for whole brain correlation with hearing loss (negative correlation, yellow blobs) and with post‐CI scores at 6 months after surgery (positive correlation, green blobs; negative correlation, red blobs). White arrows indicate our regions of interest. Effect displayed at p < 0.01 (T = 2.90), minimum voxel size 20.

DISCUSSION

Even though patients did not report difficulties with sound imagery, bilateral PSTG/SMG activation profiles denote profound functional reorganization compared to controls. When retrieving the sound of everyday objects, hearing subjects involved a large part of the network that has previously been related to the acoustic processing of environmental sounds, including the bilateral inferior prefrontal gyrus/insula, the left anterior temporal cortex [Leff et al., 2008; Scott et al., 2000] and the right posterior temporal lobe [Beauchamp et al., 2004; Binder et al., 1996; Lewis et al., 2004]. They additionally recruited the inferior parietal lobule/angular gyrus previously linked to long‐term memory retrieval [Kraut et al., 2006; Shannon and Buckner, 2004] and the bilateral supramarginal gyrus, where speech sensory‐motor transformation is carried out [Hickok et al., 2009]. These data confirmed that the right PSTG/SMG region is normally involved in environmental sound processing. They also suggest that mental evocation of sounds, e.g., animal sounds, relies to some extent on sound‐to‐articulation mapping (presumably inner onomatopoeia). This refers to the process by which a sound is mapped onto the motor routines that lead to its production. This process typically involves an interaction between the supramarginal gyrus/SPT area and the left inferior prefrontal gyrus. The left inferior frontal gyrus is a multimodal area involved in hierarchical language processing [Sahin et al., 2009] and in language production planning [Hickok and Poeppel, 2007; Turkeltaub and Coslett, 2010] and the supramarginal gyrus belongs to the articulatory sensory‐motor integration circuit [Hickok et al., 2009].

In contrast with normal hearing subjects, deaf subjects only involved the inferior prefrontal/insula/anterior temporal region and did not activate the bilateral PSTG/SMG during the imagery task. Assuming that their self‐report of being able to retrieve the sound of familiar objects and animals was accurate, they presumably invoked abstract, semantic‐level representations, as suggested by the activation of the left insula/ventral prefrontal cortex, known to participate in sound retrieval and categorization [Doehrmann and Naumer, 2008]. These data may indicate that a detailed acoustic representation of sounds, at least for the sounds presented during the task, was disrupted.

Activation profiles of the right and left PSTG/SMG in CI candidates were inverted compared to normal‐hearing controls. Because phonology may be (i) more relevant to oral communication and speech‐reading and (ii) more strongly reinforced by visual cues, we hypothesized that the over‐activation of the right PSTG/SMG during the phonological task [Lazard et al., 2010b] was part of a global reorganization involving the usual function of this region, i.e., environmental sound processing. The activation of the right PSTG/SMG observed during sound imagery in controls was an expected finding, as the task was designed to recruit it [Beauchamp et al., 2004; Binder et al., 1996; Bunzeck et al., 2005; Lewis et al., 2004]. In contrast, low activation levels in the left PSTG/SMG during the phonological task were presumably due to the fact that this task was too easy for normal‐hearing subjects. We cannot exclude the possibility, however, that this lack of sensitivity was due to the small number of subjects in the samples, or that lateralization may be different in the case of more difficult tasks [Hartwigsen et al., 2010]. That controls activated this region during sound imagery suggests that they may perform automatic sound‐to‐articulation mapping, e.g., transforming sounds into onomatopoeia when possible. Deaf subjects activated neither the right nor the left PSTG/SMG during the imagery task. This suggests that their ability to evoke non‐speech sounds through acoustic representations may be altered. The global over‐activation of the left PSTG/SMG in deaf subjects relative to controls during the phonological task may reflect difficulties in performing the task.

The goal of this study was to understand the interactions across bilateral PSTG/SMG in acquired deafness and their possible implication in CI outcome. The main issue was whether the declining activation of the right PSTG/SMG in environmental sounds memory following deafness onset could prompt or facilitate its take‐over in phonological processing, or whether its implication in phonological processing merely reflects hemispheric reciprocal interaction, presumably disinhibition [Thiel et al., 2006; Warren et al., 2009].

The neural response level during phonological processing in the left PSTG/SMG declined with the duration of auditory deprivation in deaf subjects. The fact that this decline was not correlated with the duration of hearing loss may indicate that phonological processing is maintained as long as the meaning of speech inputs is available without resorting to lip‐reading, and starts to decline when speech becomes unintelligible. In contrast, the engagement of the right PSTG/SMG in environmental sound memory was negatively correlated with the duration of hearing loss, but not with the duration of auditory deprivation. This negative correlation with the duration of hearing loss may be explained by an earlier onset of the decline of right environmental sound processing in the time course of the auditory handicap.

The dynamics of right and left PSTG/SMG activation in phonological processing and sound imagery were even more drastically different. In the course of deafness, we observed a growing phonological implication of the right PSTG/SMG, whereas the implication of the left PSTG/SMG in sound imagery decreased. Transcallosal disinhibition is an adaptive mechanism that is frequently reported after left strokes [Marsh and Hillis, 2006]; if contralateral compensation resulted from bilateral interhemispheric disinhibition following the declining efficiency of both PSTG/SMG, we should not observe this asymmetry, but instead a bilateral positive correlation with deafness (either with the duration of hearing loss or of auditory deprivation). We therefore conclude that the remarkable involvement of the right PSTG/SMG in phonological processing resulted from specific cognitive factors, i.e., the need to maintain phonological processing for visual communication, such as in speech‐reading [Lazard et al., 2010b; Mortensen et al., 2006; Suh et al., 2009]. Importantly, both growing phonological responses and declining responses to sound imagery in the right PSTG/SMG (Fig. 3D) correlated with hearing loss duration, meaning that these two functional reorganizations happened jointly and early in the evolution of auditory loss.

Central to our interpretation is the hypothesis that, during deafness, it is more important to maintain phonological representations than environmental sound representations, because oral and written communication depends on the former. Post‐lingually deaf individuals usually rely on lip‐reading to perceive speech, and this process has been shown to involve the left PSTG/SMG [Lee et al., 2007b]. Although normal‐hearing subjects do not use the left PSTG/SMG to lip‐read, but only to process auditory speech sounds, deafness prompts an immediate take‐over of this region [Lee et al., 2007b]. This indicates that the routing of visual speech information to auditory areas preexists even though it is not predominantly used under normal hearing conditions. The involvement of the left PSTG/SMG in lip‐reading presumably results from the patients resorting to phonological representations that have previously been associated with specific phonological oro‐facial configurations. While this cross‐modal mechanism does not prevent the decline in phonological processing, it presumably delays it until auditory cues are too distorted to be usable. Yet, the involvement of the left PSTG/SMG in visual speech may progressively enhance the visual specificity of this primarily auditory region [Champoux et al., 2009]. Thus, although the left PSTG/SMG progressively loses its specificity to phonology, its steady involvement in speech‐reading helps maintain a physiological level of activity. This may not be the case for the right PSTG/SMG, as environmental sounds, music, and prosody processing are not as systematically visually reinforced as phonological sounds. Therefore, the asymmetry in the visual take‐over of the PSTG/SMG could contribute to the asymmetric take‐over of the right PSTG/SMG by phonology. Accordingly, we previously showed that the right PSTG/SMG is recruited more slowly by the visual system during speech‐reading than its left homologue [Lee et al., 2007b]. The right PSTG/SMG might be available to phonological processing because it is under‐stimulated in deaf people's everyday life in its usual function (environmental sound processing), and not immediately taken over by the visual system.

Overall, present and previous data converge to suggest that the involvement of the right PSTG/SMG in phonological processing and speech‐reading results from an asymmetry in the use of the PSTG/SMG region during deafness, possibly arising from a difference in the propensity to be visually recycled.

The present data also show that the involvement of the right PSTG/SMG in environmental sound memory is positively correlated with CI speech perception outcome. As long as the right PSTG/SMG is still involved in environmental sound processing, it does not seem to be available to contralateral reorganization. Supporting this hypothesis, we observed a dissociation between the level of activation of the right PSTG/SMG by sound imagery vs. phonological processing in both correlations with hearing loss duration (Fig. 3D) and speech perception after CI (Fig. 3F). Although only marginally significant (interaction P = 0.09), this dissociation is visible in individual subjects in Figure 3F: the individuals with strong responses during sound imagery in the right PSTG/SMG had low responses during the rhyming task, and vice versa. This dissociation suggests that the implication of the right PSTG/SMG in phonological processing is maladaptive because this region is normally involved in non‐speech complex acoustic processing [Thierry et al., 2003; Toyomura et al., 2007]. The positive correlation with post‐CI scores in the sound imagery task may thus be explained by the fact that sustained use of the right PSTG/SMG protects against deleterious contralateral phonological take‐over.
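The logic of such a task‐by‐outcome dissociation can be illustrated with a minimal sketch. It is not the analysis pipeline used in this study: the function names, the permutation approach, and all numerical values below are assumptions chosen for illustration, and the data are synthetic. For paired measurements, the interaction between task and outcome score is equivalent to the slope of the within‐subject difference (sound imagery minus rhyming) regressed on the score, which can be assessed by shuffling the scores across subjects.

```python
import numpy as np


def interaction_slope(imagery, rhyming, score):
    """Slope of (imagery - rhyming) on score, i.e. the task-by-score interaction."""
    diff = np.asarray(imagery, dtype=float) - np.asarray(rhyming, dtype=float)
    score = np.asarray(score, dtype=float)
    centered = score - score.mean()
    return float(centered @ (diff - diff.mean()) / (centered @ centered))


def permutation_p(imagery, rhyming, score, n_perm=2000, seed=0):
    """Two-sided permutation p-value for the interaction slope."""
    rng = np.random.default_rng(seed)
    observed = interaction_slope(imagery, rhyming, score)
    score = np.asarray(score, dtype=float)
    hits = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(score)  # break the subject/score pairing
        if abs(interaction_slope(imagery, rhyming, shuffled)) >= abs(observed):
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return observed, (hits + 1) / (n_perm + 1)


# Synthetic values for 10 hypothetical subjects (NOT data from this study).
score = np.array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100], dtype=float)
noise = np.array([0.10, -0.10, 0.05, -0.05, 0.0, 0.0, 0.05, -0.05, 0.10, -0.10])
imagery = 0.02 * score + noise        # response rises with outcome score
rhyming = 2.0 - 0.02 * score - noise  # response falls with outcome score

slope, p_value = permutation_p(imagery, rhyming, score)
print(f"interaction slope = {slope:.4f}, permutation p = {p_value:.4f}")
```

A positive slope here means that subjects with better outcome scores show relatively stronger responses during sound imagery than during rhyming, which is the pattern of dissociation described above. With samples as small as those of the present study, such a test has limited power, so a trend‐level result should be interpreted with caution.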

Designing behavioral tests that detect these reorganizations before cochlear implantation is appealing. It seems possible to elaborate dedicated phonological tests based on written material that could identify CI candidates who have abandoned phonological strategies to communicate. Testing sound memory with purely visual material seems more arduous and presumably less directly relevant, since CI candidates did not report any difficulty in evoking environmental sounds. Yet, these results illustrate that communication needs play an important role in the cortical adaptation to function loss [Dye and Bavelier, 2010; Strelnikov et al., 2010]. They further suggest that it is beneficial to maintain normal functional organization during deafness, not only with respect to phonological processing, but more generally to sound memory.

Supporting information

Additional Supporting Information may be found in the online version of this article.

Supporting Information Figures 1‐11.

REFERENCES

- Anderson I, Weichbold V, D'Haese P (2002): Recent results with the MED‐EL COMBI 40+ cochlear implant and TEMPO+ behind‐the‐ear processor. Ear Nose Throat J 81: 229–233.

- Andersson U, Lyxell B, Ronnberg J, Spens KE (2001): Cognitive correlates of visual speech understanding in hearing‐impaired individuals. J Deaf Stud Deaf Educ 6: 103–116.

- Beauchamp MS, Lee KE, Argall BD, Martin A (2004): Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41: 809–823.

- Binder JR, Price CJ (2001): Functional neuroimaging of language. In: Cabeza R, Kingstone A, editors. Handbook of Functional Neuroimaging of Cognition. Cambridge, MA: MIT Press; pp 187–251.

- Binder JR, Frost JA, Hammeke TA, Rao SM, Cox RW (1996): Function of the left planum temporale in auditory and linguistic processing. Brain 119 (Part 4): 1239–1247.

- Blamey P, Arndt P, Bergeron F, Bredberg G, Brimacombe J, Facer G, Larky J, Lindstrom B, Nedzelski J, Peterson A, Shipp D, Staller S, Whitford L (1996): Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants. Audiol Neurootol 1: 293–306.

- Bunzeck N, Wuestenberg T, Lutz K, Heinze HJ, Jancke L (2005): Scanning silence: Mental imagery of complex sounds. Neuroimage 26: 1119–1127.

- Champoux F, Lepore F, Gagne JP, Theoret H (2009): Visual stimuli can impair auditory processing in cochlear implant users. Neuropsychologia 47: 17–22.

- Cullen RD, Higgins C, Buss E, Clark M, Pillsbury HC III, Buchman CA (2004): Cochlear implantation in patients with substantial residual hearing. Laryngoscope 114: 2218–2223.

- Doehrmann O, Naumer MJ (2008): Semantics and the multisensory brain: How meaning modulates processes of audio‐visual integration. Brain Res 1242: 136–150.

- Dye MW, Bavelier D (2010): Attentional enhancements and deficits in deaf populations: An integrative review. Restor Neurol Neurosci 28: 181–192.

- Engel LR, Frum C, Puce A, Walker NA, Lewis JW (2009): Different categories of living and non‐living sound‐sources activate distinct cortical networks. Neuroimage 47: 1778–1791.

- Formisano E, De Martino F, Bonte M, Goebel R (2008): “Who” is saying “what”? Brain‐based decoding of human voice and speech. Science 322: 970–973.

- Giraud AL, Lee HJ (2007): Predicting cochlear implant outcome from brain organisation in the deaf. Restor Neurol Neurosci 25: 381–390.

- Green KM, Bhatt YM, Mawman DJ, O'Driscoll MP, Saeed SR, Ramsden RT, Green MW (2007): Predictors of audiological outcome following cochlear implantation in adults. Cochlear Implants Int 8: 1–11.

- Halpern AR, Zatorre RJ (1999): When that tune runs through your head: A PET investigation of auditory imagery for familiar melodies. Cereb Cortex 9: 697–704.

- Hartwigsen G, Baumgaertner A, Price CJ, Koehnke M, Ulmer S, Siebner HR (2010): Phonological decisions require both the left and right supramarginal gyri. Proc Natl Acad Sci USA 107: 16494–16499.

- He BJ, Snyder AZ, Vincent JL, Epstein A, Shulman GL, Corbetta M (2007): Breakdown of functional connectivity in frontoparietal networks underlies behavioral deficits in spatial neglect. Neuron 53: 905–918.

- Heiss WD, Kessler J, Thiel A, Ghaemi M, Karbe H (1999): Differential capacity of left and right hemispheric areas for compensation of poststroke aphasia. Ann Neurol 45: 430–438.

- Hickok G, Poeppel D (2007): The cortical organization of speech processing. Nat Rev Neurosci 8: 393–402.

- Hickok G, Okada K, Serences JT (2009): Area Spt in the human planum temporale supports sensory‐motor integration for speech processing. J Neurophysiol 101: 2725–2732.

- Hugdahl K (2000): Lateralization of cognitive processes in the brain. Acta Psychol (Amst) 105: 211–235.

- Kell CA, Neumann K, von Kriegstein K, Posenenske C, von Gudenberg AW, Euler H, Giraud AL (2009): How the brain repairs stuttering. Brain 132 (Part 10): 2747–2760.

- Kraut MA, Pitcock JA, Calhoun V, Li J, Freeman T, Hart J Jr. (2006): Neuroanatomic organization of sound memory in humans. J Cogn Neurosci 18: 1877–1888.

- Lafon JC (1964): [Audiometry with the Phonetic Test.]. Acta Otorhinolaryngol Belg 18: 619–633.

- Lazard DS, Bordure P, Lina‐Granade G, Magnan J, Meller R, Meyer B, Radafy E, Roux PE, Gnansia D, Pean V, Truy E (2010a): Speech perception performance for 100 post‐lingually deaf adults fitted with Neurelec cochlear implants: Comparison between Digisonic® Convex and Digisonic® SP devices after a 1‐year follow‐up. Acta Otolaryngol 130: 1267–73.

- Lazard DS, Lee HJ, Gaebler M, Kell CA, Truy E, Giraud AL (2010b): Phonological processing in post‐lingual deafness and cochlear implant outcome. Neuroimage 49: 3443–51.

- Lee HJ, Giraud AL, Kang E, Oh SH, Kang H, Kim CS, Lee DS (2007a): Cortical activity at rest predicts cochlear implantation outcome. Cereb Cortex 17: 909–917.

- Lee HJ, Truy E, Mamou G, Sappey‐Marinier D, Giraud AL (2007b): Visual speech circuits in profound acquired deafness: A possible role for latent multimodal connectivity. Brain 130 (Part 11): 2929–2941.

- Leff AP, Schofield TM, Stephan KE, Crinion JT, Friston KJ, Price CJ (2008): The cortical dynamics of intelligible speech. J Neurosci 28: 13209–13215.

- Lewis JW, Wightman FL, Brefczynski JA, Phinney RE, Binder JR, DeYoe EA (2004): Human brain regions involved in recognizing environmental sounds. Cereb Cortex 14: 1008–1021.

- Marsh EB, Hillis AE (2006): Recovery from aphasia following brain injury: The role of reorganization. Prog Brain Res 157: 143–156.

- Martin N, Ayala J (2004): Measurements of auditory‐verbal STM span in aphasia: Effects of item, task, and lexical impairment. Brain Lang 89: 464–483.

- Mortensen MV, Mirz F, Gjedde A (2006): Restored speech comprehension linked to activity in left inferior prefrontal and right temporal cortices in postlingual deafness. Neuroimage 31: 842–852.

- Naeser MA, Martin PI, Nicholas M, Baker EH, Seekins H, Kobayashi M, Theoret H, Fregni F, Maria‐Tormos J, Kurland J, Doron KW, Pascual‐Leone A (2005): Improved picture naming in chronic aphasia after TMS to part of right Broca's area: An open‐protocol study. Brain Lang 93: 95–105.

- NIH Consensus Conference (1995): Cochlear implants in adults and children. JAMA 274: 1955–1961.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Preibisch C, Neumann K, Raab P, Euler HA, von Gudenberg AW, Lanfermann H, Giraud AL (2003): Evidence for compensation for stuttering by the right frontal operculum. Neuroimage 20: 1356–1364.

- Price CJ, Warburton EA, Moore CJ, Frackowiak RS, Friston KJ (2001): Dynamic diaschisis: Anatomically remote and context‐sensitive human brain lesions. J Cogn Neurosci 13: 419–429.

- Rubinstein JT, Parkinson WS, Tyler RS, Gantz BJ (1999): Residual speech recognition and cochlear implant performance: Effects of implantation criteria. Am J Otol 20: 445–452.

- Sahin NT, Pinker S, Cash SS, Schomer D, Halgren E (2009): Sequential processing of lexical, grammatical, and phonological information within Broca's area. Science 326: 445–449.

- Schorr EA, Fox NA, van Wassenhove V, Knudsen EI (2005): Auditory‐visual fusion in speech perception in children with cochlear implants. Proc Natl Acad Sci USA 102: 18748–18750.

- Scott SK, Blank CC, Rosen S, Wise RJ (2000): Identification of a pathway for intelligible speech in the left temporal lobe. Brain 123 (Part 12): 2400–6.

- Shannon BJ, Buckner RL (2004): Functional‐anatomic correlates of memory retrieval that suggest nontraditional processing roles for multiple distinct regions within posterior parietal cortex. J Neurosci 24: 10084–10092.

- Strelnikov K, Rouger J, Demonet JF, Lagleyre S, Fraysse B, Deguine O, Barone P (2010): Does brain activity at rest reflect adaptive strategies? Evidence from speech processing after cochlear implantation. Cereb Cortex 20: 1217–1222.

- Suh MW, Lee HJ, Kim JS, Chung CK, Oh SH (2009): Speech experience shapes the speechreading network and subsequent deafness facilitates it. Brain 132 (Part 10): 2761–2771.

- Thiel A, Schumacher B, Wienhard K, Gairing S, Kracht LW, Wagner R, Haupt WF, Heiss WD (2006): Direct demonstration of transcallosal disinhibition in language networks. J Cereb Blood Flow Metab 26: 1122–1127.

- Thierry G, Giraud AL, Price C (2003): Hemispheric dissociation in access to the human semantic system. Neuron 38: 499–506.

- Toyomura A, Koyama S, Miyamaoto T, Terao A, Omori T, Murohashi H, Kuriki S (2007): Neural correlates of auditory feedback control in human. Neuroscience 146: 499–503.

- Turkeltaub PE, Coslett HB (2010): Localization of sublexical speech perception components. Brain Lang 114: 1–15.

- van Oers CA, Vink M, van Zandvoort MJ, van der Worp HB, de Haan EH, Kappelle LJ, Ramsey NF, Dijkhuizen RM (2010): Contribution of the left and right inferior frontal gyrus in recovery from aphasia. A functional MRI study in stroke patients with preserved hemodynamic responsiveness. Neuroimage 49: 885–893.

- Warren JE, Crinion JT, Lambon Ralph MA, Wise RJ (2009): Anterior temporal lobe connectivity correlates with functional outcome after aphasic stroke. Brain 132 (Part 12): 3428–3442.

- Winhuisen L, Thiel A, Schumacher B, Kessler J, Rudolf J, Haupt WF, Heiss WD (2005): Role of the contralateral inferior frontal gyrus in recovery of language function in poststroke aphasia: A combined repetitive transcranial magnetic stimulation and positron emission tomography study. Stroke 36: 1759–1763.

- Winhuisen L, Thiel A, Schumacher B, Kessler J, Rudolf J, Haupt WF, Heiss WD (2007): The right inferior frontal gyrus and poststroke aphasia: A follow‐up investigation. Stroke 38: 1286–1292.
