Abstract

Regions of the fusiform gyrus (FG) respond preferentially to faces over other classes of visual stimuli. It remains unclear whether emotional face information modulates FG activity. In the present study, whole‐head magnetoencephalography (MEG) data were obtained from fifteen healthy adults who viewed emotionally expressive faces and made button responses based on emotion (explicit condition) or age (implicit condition). Dipole source modeling produced source waveforms for left and right primary visual and left and right fusiform areas. Stronger left FG activity (M170) to fearful than to happy or neutral faces was observed only in the explicit task, suggesting that directed attention to the emotional content of faces facilitates observation of M170 valence modulation. A strong association between M170 FG activity and reaction times in the explicit task provided additional evidence for a role of the fusiform gyrus in processing emotional information. Hum Brain Mapp, 2013. © 2011 Wiley Periodicals, Inc.

Keywords: magnetoencephalography, face processing, emotion, fusiform, event‐related fields

INTRODUCTION

Accurate identification of facial features is important to human verbal and nonverbal communication, and investigators have noted the evolutionary advantage of being able to quickly determine an individual's identity, approximate age, gender, attractiveness, race, and emotional state from facial features [e.g., Darwin, 1872; Fox et al., 2000]. In humans, PET and fMRI studies have localized face responses to the occipito‐temporal junction, the inferior parietal lobe, and the middle temporal lobe [e.g., Lu et al., 1991; Morris et al., 1998]. Intracranial EEG has localized both invariant (e.g., physical traits) and variant facial features (e.g., facial expressions) to the ventral temporal cortex [Tsuchiya et al., 2008]. Most reliably, the ventral temporal cortex of the fusiform gyrus, including the fusiform face area (FFA), is preferentially activated by faces compared with other classes of stimuli, such as cars, houses, hands, and scrambled or inverted faces [Allison et al., 1994; Grill‐Spector et al., 2004; Halgren et al., 2000; Haxby et al., 1999; Kanwisher et al., 1997; Pourtois et al., 2009; Watanabe et al., 1999].

Current theories of face processing suggest that emotional face information is processed by a brain system partly independent of the one used to decode structural facial features [Bruce and Young, 1986; Haxby et al., 2000]. Brain areas implicated in processing facial expressions and emotion include the medial prefrontal cortex, subcallosal cingulate, anterior cingulate, and insula [e.g., Fusar‐Poli et al., 2009; Phan et al., 2002]. An additional region is the amygdala, a brain area involved in the recognition of emotion [e.g., Adolphs et al., 1994, 1995; Costafreda et al., 2008; Sergerie et al., 2008]. Feedback loops between the amygdala and regions associated with processing facial structure have been suggested, and it has been postulated that the amygdala exerts a top‐down effect on fusiform gyrus activity [Morris et al., 1998; Vuilleumier et al., 2004; for a recent review, see Vuilleumier and Pourtois, 2007]. Thus, the FG may show sensitivity to facial expressions of emotion.

Regional brain activation to facial emotions has been investigated in studies contrasting explicit with implicit processing [Cunningham et al., 2004; Mathersul et al., 2009; Scheuerecker et al., 2007; Williams et al., 2009]. Although these studies report widespread increases in activity during explicit processing (e.g., in occipital, temporal, parietal, and frontal lobes), they also report greater amygdala and fusiform activity. For example, in an fMRI study contrasting explicit (emotion identification) with implicit processing (age identification), both the amygdala and bilateral fusiform cortices showed greater BOLD activation during the explicit task [Habel et al., 2007]. Along these lines, an event‐related fMRI study showed that amygdala and fusiform involvement in facial emotion recognition was associated with accurate recognition of threat‐related emotions [Loughead et al., 2008]. Finally, a recent meta‐analysis of fMRI studies showed greater activation in fusiform and amygdala regions in explicit than in implicit emotional face tasks [Fusar‐Poli et al., 2009].

Studies of the temporal features of face processing using electroencephalography (EEG) and magnetoencephalography (MEG) have identified several face‐related responses that appear to be modulated by emotion. For example, late‐latency occipito‐temporal event‐related potentials (ERPs) 250–550 ms post‐stimulus have been observed to differ with respect to facial emotion [Krolak‐Salmon et al., 2001; Streit et al., 2000]. A P200 responds preferentially to faces and is also modified by emotion [Ashley et al., 2004]. An even earlier response, referred to as N170 in EEG [Pizzagalli et al., 2002] and M170 in MEG [Liu et al., 2000], occurs ∼170 ms after stimulus onset and localizes to the FFA [Deffke et al., 2007; Henson et al., 2009]. Multiple studies support the hypothesis that the N170/M170 response reflects encoding of the structural components of faces and face familiarity [Eimer, 2000; Ewbank et al., 2008; Harris and Aguirre, 2008; Kloth et al., 2006; Liu et al., 2002; Pourtois et al., 2009; Sagiv and Bentin, 2001], and additional studies suggest that the N170/M170 is sensitive to faces of personal import [e.g., self or mother; see Caharel et al., 2005], or even to how much an individual likes a face [Pizzagalli et al., 2002]. In terms of emotion, several EEG/MEG studies indicate that the N170/M170 is also modulated by facial emotion [e.g., Blau et al., 2007; Caharel et al., 2005; Japee et al., 2009; Streit et al., 1999; Turetsky et al., 2007], although others have reported negative findings [e.g., Ashley et al., 2004; Balconi and Lucchiari, 2005]. Some of the conflicting 170 ms results may stem from variability in experimental paradigms and task demands.
Specifically, in many EEG studies employing implicit facial emotion tasks, N170 amplitude did not differ across emotional stimuli [Ashley et al., 2004; Balconi and Lucchiari, 2005; Eimer and Holmes, 2002; Herrmann et al., 2002; Holmes et al., 2003, 2005; Santesso et al., 2008]. In contrast, in EEG studies in which facial emotion was explicitly assessed, emotional face stimuli elicited greater responses than neutral faces [Japee et al., 2009; Krombholz et al., 2007; Turetsky et al., 2007], and in an MEG study using an explicit task, positive emotion (happiness) produced larger M170 FFA responses than disgust or neutral expressions [Lewis et al., 2003]. Although modulation of the M170 as a function of emotion appears more often in explicit tasks, some studies have observed amplitude differences during implicit tasks. For example, three EEG studies and one MEG study showed greater N170/M170 amplitudes to fearful faces than to other emotional face stimuli when emotion was judged implicitly [Batty and Taylor, 2003; Blau et al., 2007; Hung et al., 2010; Jiang et al., 2009], and one EEG study showed greater N170 amplitudes to disgusted than to neutral or smiling faces in both explicit and implicit conditions [Caharel et al., 2005]. Differences in study findings, however, are not always accounted for by explicit/implicit task demands, as one EEG study found no N170 amplitude differences between emotional and neutral expressions when emotion was explicitly judged [Eimer et al., 2003].

It remains unclear whether the N170/M170 reflects purely structural processing or both structural and emotional processing of faces. To better examine the effect of emotion on M170 activity, the present study examined the modulatory effect of task demand using a within‐subject design in which participants performed explicit and implicit emotion judgment tasks while whole‐cortex MEG data were obtained. Source localization allowed examination of face processing in primary and secondary visual areas. Compared with several previous studies, the present tasks used a large set of unique and well‐validated emotional face stimuli to reduce possible habituation effects on M170 amplitude [Ishai et al., 2006; Morel et al., 2009], a confound that may also account for some of the discrepant findings in previous studies.

Although the literature contains some inconsistent findings, most studies show FG activity modulation in explicit tasks. Thus, the primary study hypothesis was that the M170 would show stronger activation to emotional than to non‐emotional faces only in the explicit condition, as indicated by a valence × condition (explicit, implicit) interaction on M170 source strength. In addition, to assess associations between brain activity and performance, correlation analyses examined associations between behavioral measures and primary visual and FG activity. As previous studies have not examined associations between FG activity and performance, these analyses were exploratory.

MATERIALS AND METHODS

Subjects

Seventeen healthy adults (nine females) participated (mean age ± s.d. = 26.8 ± 5.4 years). All subjects were right‐handed. Subjects were familiarized with the MEG scanning procedures and tasks before the experiment. All subjects gave written informed consent as approved by the Institutional Review Board at The Children's Hospital of Philadelphia (CHOP).

Stimuli

Face stimuli were taken from a large set of faces developed by Gur et al.; a full description of the methods for obtaining these stimuli can be found in Gur et al. [2002a]. Pictures of 50 actors, each displaying fearful, happy, and neutral expressions, were used (150 faces in total). An oval crop was applied to remove clothing, hair, and other irrelevant features. Image color and brightness were normalized across the three images of each actor. Because the images depicted individuals with a wide range of skin colors, color and brightness could not be standardized across individuals.

Experimental Design

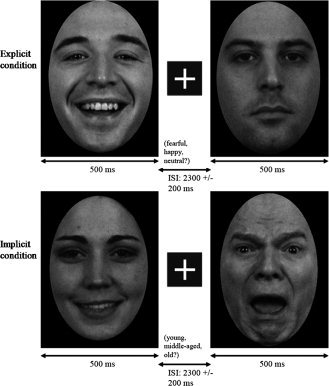

Subjects were seated in a dimly lit, sound‐attenuated room. Stimuli were presented using Presentation software (Neurobehavioral Systems, Albany, CA) and projected by a Sanyo Pro xtraX Multimedia LCD Projector PLC‐XP41 onto a screen positioned ∼45 cm from the subject. In both the explicit and implicit tasks, each face appeared for 500 ms and was followed by a fixation cross displayed for 2.3 ± 0.2 s (see Fig. 1). A photodiode trigger ensured accurate timing of the MEG data relative to stimulus presentation.

Figure 1.

Examples of emotion face stimuli: upper and lower left = happy; upper right = neutral; lower right = fearful. Order of explicit (upper row) and implicit tasks (lower row) was counterbalanced across subjects. Stimuli were presented for 500 ms with a 2,300 ± 200 ms ISI.

Faces were presented in pseudorandom order, with the constraint that neither the same emotion/age category nor the same actor appeared on two consecutive trials. Three hundred faces were shown during the explicit task (100 trials per emotion category), and three hundred during the implicit task (100 trials per age category). Because 50 actors each displayed fearful, happy, and neutral expressions (150 images), each image was shown twice to obtain 100 trials per condition. In the explicit task, subjects made a button response based on emotion (fearful, happy, neutral); in the implicit task, subjects viewed the same stimuli and made a button response based on age (young = <25, middle‐aged = 25–45, older = >45 years). Subjects were asked to respond as quickly and accurately as possible. Task order was counterbalanced across subjects.
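The trial arithmetic above (50 actors × 3 expressions × 2 presentations = 300 trials, i.e., 100 per emotion) and the ordering constraint can be made concrete with a short Python sketch. It is not the Presentation script used in the study: `make_sequence` is a hypothetical helper that builds an order by random draws that skip any trial sharing the previous trial's actor or emotion, restarting whenever it reaches a dead end. The age constraint of the implicit task would require per‐actor age labels, which are not given here, so only actor and emotion are checked.

```python
import random
from collections import Counter

EMOTIONS = ("fearful", "happy", "neutral")
N_ACTORS = 50   # actors in the stimulus set (from the text)
REPEATS = 2     # each image shown twice -> 100 trials per emotion

def make_sequence(seed=None, max_tries=1000):
    """Hypothetical helper: order all (actor, emotion) trials so that neither
    the actor nor the emotion repeats on consecutive trials."""
    rng = random.Random(seed)
    trials = [(a, e) for a in range(N_ACTORS) for e in EMOTIONS] * REPEATS
    for _ in range(max_tries):
        remaining = list(trials)
        seq = []
        while remaining:
            prev = seq[-1] if seq else None
            # candidates that differ from the previous trial in actor AND emotion
            ok = [i for i, (a, e) in enumerate(remaining)
                  if prev is None or (a != prev[0] and e != prev[1])]
            if not ok:
                break  # dead end: discard this attempt and start over
            seq.append(remaining.pop(rng.choice(ok)))
        if not remaining:
            return seq
    raise RuntimeError("no valid order found; increase max_tries")

seq = make_sequence(seed=1)
print(len(seq), Counter(e for _, e in seq))
```

Random draws with restarts keep the order unpredictable while guaranteeing the constraint; a fixed, hand‐built order would also satisfy it but could introduce predictable patterns.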

MEG and Anatomical MRI Data Acquisition and Coregistration

MEG recordings were performed at the CHOP Lurie Family Foundations MEG Imaging Center in a magnetically and electrically shielded room using a whole‐cortex 275‐channel MEG system (VSM MedTech, Coquitlam, BC). At the start of the session, three head‐position indicator coils were attached to the scalp at the nasion and the left and right pre‐auricular points. These coils were used to determine the position and orientation of the MEG sensors relative to the head. Because the participant's head needed to remain still in the MEG dewar throughout the recording session, foam wedges were inserted between the side of the participant's head and the inside of the dewar to minimize head movement. To aid in the identification of eye‐blink activity, the electro‐oculogram (EOG; bipolar oblique, upper right and lower left sites) was recorded. Data were sampled at 600 Hz and band‐pass filtered from 0.1 to 150 Hz (4th order), with 3rd‐order gradiometer environmental noise reduction.

For MEG source modeling, 3D volumetric magnetic resonance images (MRI) were acquired with 1 × 1 × 1 mm³ resolution (Siemens 3T Verio™). T1‐weighted images were acquired in the axial plane using a 3D‐MPRAGE pulse sequence (TR = 1,670 ms, TE = 3.41 ms, TI = 1,100 ms, flip angle = 15°, FOV = 256 mm, whole brain, slice thickness = 1 mm, 160 slices). MR data were available for 9 of the 17 participants; for the eight subjects without individual MR data, MEG data were co‐registered to the standard MNI brain. To co‐register the functional MEG and structural MR data, the three head‐position indicator coils and ∼400 other points across the surface of the head and upper face were digitized using a Polhemus digitizer system (Colchester, VT). A transformation matrix involving rotation and translation between the MEG digitized points and the structural MRI coordinate system was used to co‐register the MEG and MR data.
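The text states only that a rotation‐plus‐translation transformation matrix mapped the MEG digitized points into MRI coordinates. As a hedged illustration, the Python sketch below estimates such a rigid transform from corresponding point pairs (for example, the three coil positions identified in both spaces) with the Kabsch least‐squares algorithm. The function names are hypothetical, and fitting the ∼400 head‐shape points, which have no one‐to‐one MRI counterparts, would in practice need an iterative surface‐matching step that is not shown.

```python
import numpy as np

def rigid_fit(src, dst):
    """Kabsch least-squares fit: returns rotation R (3x3) and translation t (3,)
    such that dst ~= src @ R.T + t for corresponding (n, 3) point sets."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_src).T @ (dst - mu_dst)      # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_dst - R @ mu_src
    return R, t

def to_homogeneous(R, t):
    """Pack R and t into one 4x4 matrix acting on [x, y, z, 1] column vectors."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

Packing the result into a single 4×4 matrix lets every digitized point, or every source location, be moved between coordinate systems with one matrix product.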

Data Analysis

For source analyses, epochs from 800 ms pre‐stimulus to 1,500 ms post‐stimulus were defined from the continuous recording. Eye‐blink artifacts were removed using BESA 5.2 (MEGIS Software GmbH, Gräfelfing, Germany) [Berg and Scherg, 1994; Lins et al., 1993]. The eye‐blink‐corrected raw data were then scanned, and epochs with other artifacts were rejected using amplitude and gradient criteria (amplitude > 5,000 fT/cm, gradient > 2,500 fT/cm/sample). Artifact‐free epochs were averaged according to stimulus type, and a band‐pass filter from 1 Hz (24 dB/octave, zero‐phase) to 50 Hz (48 dB/octave, zero‐phase) was applied. Approximately 95 of 100 trials were included in each average.
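A minimal Python sketch of the rejection and averaging steps follows, assuming epochs stored as a NumPy array of shape (trials, channels, samples). Here the amplitude criterion is taken as the maximum absolute value in an epoch and the gradient criterion as the largest sample‐to‐sample difference; BESA's exact definitions may differ. The Butterworth band‐pass applied with `scipy.signal.sosfiltfilt` is zero‐phase like the filter described above, but its roll‐off only approximates the quoted 24 and 48 dB/octave slopes.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 600.0          # sampling rate (Hz), from the text
AMP_MAX = 5000.0    # amplitude criterion (same units as the data)
GRAD_MAX = 2500.0   # gradient criterion per sample (same units as the data)

def reject_and_average(epochs):
    """epochs: (n_trials, n_channels, n_samples). Drops every epoch in which any
    channel exceeds the amplitude or gradient criterion, then averages the rest.
    Returns (average, number of epochs kept)."""
    amp_bad = np.abs(epochs).max(axis=(1, 2)) > AMP_MAX
    grad_bad = np.abs(np.diff(epochs, axis=2)).max(axis=(1, 2)) > GRAD_MAX
    keep = ~(amp_bad | grad_bad)
    return epochs[keep].mean(axis=0), int(keep.sum())

def bandpass_1_50(evoked, fs=FS, order=4):
    """Zero-phase (forward-backward) 1-50 Hz Butterworth band-pass along the
    last axis; the slopes only approximate the BESA settings in the text."""
    sos = butter(order, [1.0, 50.0], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, evoked, axis=-1)
```

Forward‐backward filtering cancels the phase shift of the Butterworth filter, which matters here because peak latencies are scored from the filtered averages.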

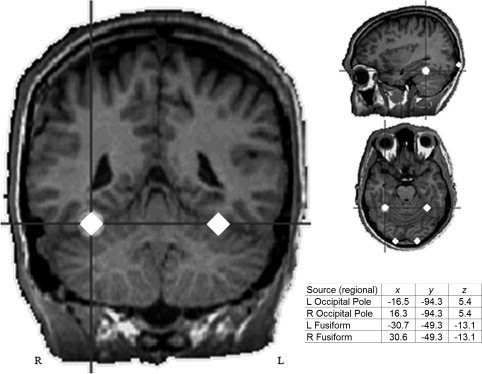

In the nine subjects with MR data, primary visual and FG responses were individually localized using regional sources (i.e., two orthogonally oriented dipoles per regional source). Left and right occipital pole visual areas, identified between 85 and 115 ms, were first localized using two symmetric regional sources (one per hemisphere). The positions of the left and right occipital pole visual sources were then fixed, and two further symmetric regional sources were added to model the M170 response, identified between 120 and 180 ms. In all nine subjects, the early left and right visual responses localized to or near the calcarine sulcus, and the M170 localized to the left and right fusiform gyrus. Talairach coordinates of each source were averaged across subjects, and the grand average locations were used to create a standard source model that was applied to each subject. As illustrated in Figure 2, the standard source model comprised (1) left and right occipital pole regional sources, (2) left and right fusiform regional sources, and (3) the subject's eye‐blink artifact source.

Figure 2.

Standard regional source model locations for visual and FG sources (Talairach coordinates). Average 3D distance from the mean values was 11.6 mm (s.d. = 4.5) and 9.8 mm (s.d. = 4.0) for visual and fusiform sources, respectively (left and right do not differ due to symmetry constraints).
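Building the standard model amounts to averaging each source's Talairach coordinates across the nine subjects and, as in the Figure 2 caption, summarizing the spread as the mean 3D (Euclidean) distance of individual locations from that average. The Python sketch below assumes coordinates in millimeters with negative x in the left hemisphere and a sample (n − 1) standard deviation; because the fits were symmetric, the right‐hemisphere location is taken as the left one with x mirrored. Function names are illustrative.

```python
import numpy as np

def grand_average_location(xyz):
    """xyz: (n_subjects, 3) Talairach coordinates (mm) of one source.
    Returns the mean location and the mean and s.d. of each subject's
    3D distance from that mean."""
    xyz = np.asarray(xyz, dtype=float)
    mean = xyz.mean(axis=0)
    dist = np.linalg.norm(xyz - mean, axis=1)
    return mean, dist.mean(), dist.std(ddof=1)

def mirror_to_right(left_xyz):
    """Symmetric model: right-hemisphere source = left source with x flipped."""
    return np.asarray(left_xyz, dtype=float) * np.array([-1.0, 1.0, 1.0])
```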

Using all 275 channels of MEG data, the strength and peak latency of left and right early visual and fusiform activity were determined by applying the standard source model to each individual, transforming the raw MEG surface activity into brain space [Scherg and Berg, 1996; Scherg and von Cramon, 1985]. The final source model serves as a source montage for the raw MEG data [Scherg and Ebersole, 1994; Scherg et al., 2002]. In contrast to signal‐space projection methods, the multiple source inverse separates activity in different brain regions and does not require orthogonality, with regularization in source space providing sufficient smoothing while maintaining a strong separation between regions. It is thus comparable to a distributed model with sources at fixed points within the brain serving as readout points of regional activity [Scherg and Berg, 1996; Scherg et al., 1989, 2002]. As a result, the MEG sensor data can be transformed from channel space (femtotesla, fT) into brain source space, where the waveforms are the modeled source activities (current dipole moment, nAm).
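The idea of a source montage, a fixed linear operator that maps every time sample of channel data (fT) to source activity (nAm), can be illustrated with a Tikhonov‐regularized least‐squares inverse of the lead‐field matrix. This is a generic sketch, not BESA's implementation: the lead field `leadfield` (channels × source components, two orthogonal components per regional source), the regularization factor `lam`, and its scaling by the mean eigenvalue of LᵀL are all assumptions.

```python
import numpy as np

def source_montage(leadfield, lam=0.01):
    """leadfield: (n_channels, n_components) forward field of each fixed source
    component (e.g., fT per nAm). Returns W (n_components, n_channels) so that
    source_waveforms = W @ channel_data. lam scales a Tikhonov term relative to
    the mean eigenvalue of L^T L (hypothetical choice)."""
    G = leadfield.T @ leadfield                 # (k, k) Gram matrix
    reg = lam * np.trace(G) / G.shape[0]        # trace / k = mean eigenvalue
    return np.linalg.solve(G + reg * np.eye(G.shape[0]), leadfield.T)
```

Because W is computed once and then applied to every sample, the source waveforms inherit the full temporal resolution of the MEG data; increasing `lam` trades some separation between sources for robustness to noise.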

Source strength and latency were scored from the root‐mean‐square (RMS) regional source waveforms. Using in‐house MATLAB software, pre‐stimulus baseline activity (−700 to −200 ms) was subtracted from the source waveforms, and left and right early visual and FG peak source strength and latency were identified as the maximum point within each scoring window. Early visual activity was scored in an 85–115 ms window. Because two distinct FG peaks were observed in all subjects, FG activity was scored in an early (120–180 ms) and a late (190–260 ms) latency window.
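The scoring procedure can be sketched in Python as below, assuming the epoch starts at −800 ms and is sampled at 600 Hz (both from the text) and that the baseline (−700 to −200 ms) is subtracted from each of the regional source's two orthogonal dipole waveforms before their RMS is taken; the text does not specify this order, so it is an assumption. `score_regional_source` is a hypothetical helper returning the peak value and its latency within a scoring window.

```python
import numpy as np

FS = 600.0      # sampling rate (Hz), from the text
T0_MS = -800.0  # epoch start relative to stimulus onset (ms), from the text

def ms_to_idx(ms, fs=FS, t0_ms=T0_MS):
    """Convert a latency in ms to the nearest sample index in the epoch."""
    return int(round((ms - t0_ms) * fs / 1000.0))

def score_regional_source(wave, window_ms, baseline_ms=(-700.0, -200.0)):
    """wave: (2, n_samples) waveforms (nAm) of one regional source's two
    orthogonal dipoles. Baseline-corrects each dipole, takes the RMS across
    them, and returns (peak strength, peak latency in ms) in window_ms."""
    b0, b1 = (ms_to_idx(x) for x in baseline_ms)
    corrected = wave - wave[:, b0:b1].mean(axis=1, keepdims=True)
    rms = np.sqrt((corrected ** 2).mean(axis=0))
    w0, w1 = (ms_to_idx(x) for x in window_ms)
    i = w0 + int(np.argmax(rms[w0:w1 + 1]))   # window end is inclusive
    return float(rms[i]), T0_MS + i * 1000.0 / FS
```

For example, `score_regional_source(wave, (120, 180))` would score the early FG peak and `(190, 260)` the late one.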

Statistical Analyses

Repeated‐measures ANOVAs with Condition (explicit, implicit), Hemisphere (left, right), and Valence (fearful, happy, neutral) as factors examined effects on source strength and latency for (1) primary visual, (2) early FG, and (3) late FG activity. Pearson's r correlations examined associations between source strength, latency, reaction time, and percent‐correct values (analyses involving reaction time excluded trials with incorrect responses). To approximate normal distributions, amplitude and latency scores were log‐transformed (Kolmogorov‐Smirnov tests, all P‐values > 0.05). Findings were unchanged when using the non‐transformed scores and excluding two subjects with amplitude or latency scores more than two standard deviations from the group mean. All statistical tests were performed using PASW for Windows, Release 18.
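A hedged Python sketch of the transformation, normality check, outlier rule, and correlation steps using `scipy.stats` follows (the ANOVAs themselves are not reproduced). Standardizing the log scores with the sample mean and s.d. before `kstest` is an assumption (strictly, estimating the parameters calls for a Lilliefors correction), and the 2‐s.d. rule mirrors the outlier criterion described above. The returned r² is the proportion of variance shared by the two measures.

```python
import numpy as np
from scipy import stats

def log_and_check_normal(scores):
    """Log-transform positive scores and return (logged, KS-test P-value)
    against a normal with the sample mean and s.d."""
    logged = np.log(np.asarray(scores, dtype=float))
    z = (logged - logged.mean()) / logged.std(ddof=1)
    return logged, stats.kstest(z, "norm").pvalue

def outliers_2sd(scores):
    """Boolean mask of scores more than two s.d. from the group mean."""
    x = np.asarray(scores, dtype=float)
    return np.abs(x - x.mean()) > 2.0 * x.std(ddof=1)

def correlate(strength, rt):
    """Pearson's r, its P-value, and r squared (shared variance)."""
    r, p = stats.pearsonr(strength, rt)
    return r, p, r ** 2
```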

RESULTS

Early visual or fusiform activity was not observed in 2 of the 17 subjects, and their data were excluded. The remaining 15 subjects showed early visual and early and late FG responses bilaterally.

Behavioral Results

Performance on both tasks was significantly above chance, with accuracy of judging emotion 93.1% ± 1.0% (mean ± s.e.m.), and accuracy of judging age 64.6% ± 1.4%. Although subjects judged emotion more accurately than age, reaction times (RT) did not differ between the explicit (averaging RT across emotion) and implicit (averaging RT across age) tasks, t(14) = 0.99, ns.
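With three response options in each task, chance accuracy is 1/3 (≈33.3%). The text does not name the test used to compare performance with chance; the Python sketch below assumes a one‐sided one‐sample t‐test on per‐subject proportions correct, alongside a paired t‐test on mean RTs, whose degrees of freedom (n − 1 = 14 for 15 subjects) match the reported t(14). Both helpers are hypothetical.

```python
import numpy as np
from scipy import stats

CHANCE = 1.0 / 3.0  # three response options in each task

def above_chance(accuracy):
    """accuracy: per-subject proportions correct; one-sided test vs. chance."""
    return stats.ttest_1samp(np.asarray(accuracy, dtype=float), CHANCE,
                             alternative="greater")

def rt_difference(rt_explicit, rt_implicit):
    """Paired t-test on per-subject mean RTs (df = n_subjects - 1)."""
    return stats.ttest_rel(rt_explicit, rt_implicit)
```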

Regarding accuracy in the explicit task, follow‐up tests of a Valence main effect, F(2,13) = 5.71, P < 0.05, indicated that happy faces (95.3% ± 0.01%) were identified more accurately than fearful faces (89.6% ± 0.02%). Also in the explicit task, a Valence main effect for reaction times, F(2,13) = 10.96, P < 0.01, indicated faster button responses to happy (766.1 ± 41.7 ms) than to fearful or neutral faces (841.4 ± 42.9 ms). In the implicit task, an Age main effect, F(2,13) = 28.0, P < 0.01, indicated faster responses to young and older faces (808.2 ± 36.0 ms) than to middle‐aged faces (926.5 ± 44.9 ms).

Brain Activity Results

Amplitude

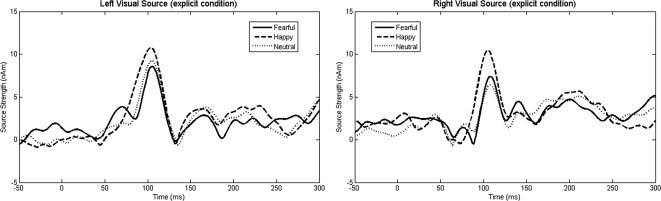

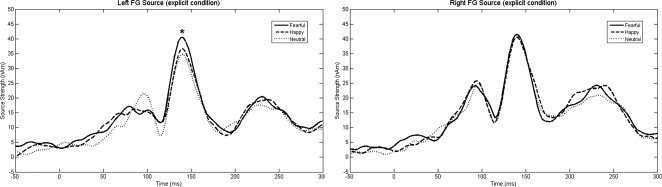

No significant main effects or interactions were observed for early visual source strength (all ps = ns; see Footnote 1). Source waveforms for the occipital pole sources are shown in Figure 3. As shown in Figure 4, for the early fusiform response, simple effects analysis of a significant hemisphere × valence × condition interaction, F(2,13) = 5.07, P = 0.02, showed greater activity for fearful (40.08 ± 5.10 nAm) than for happy or neutral faces (34.88 ± 4.76 nAm) only in the explicit condition and only in the left hemisphere (P < 0.05). For the late fusiform response, a significant Valence main effect, F(14) = 4.46, P < 0.05, indicated a stronger response to fearful (27.84 ± 3.73 nAm) than to happy or neutral faces (25.70 ± 3.48 nAm) bilaterally. Finally, a Hemisphere main effect, F(1,14) = 9.89, P < 0.01, indicated stronger late fusiform responses in the right (29.88 ± 3.46 nAm) than in the left hemisphere (22.95 ± 3.82 nAm).

Figure 3.

Left and right hemisphere early visual source waveforms for the fearful, happy, and neutral conditions (explicit task). No early visual source strength main effects or interactions were observed.

Figure 4.

Left and right hemisphere FG grand average source waveforms for the fearful, happy, and neutral conditions (explicit task), showing stronger early left fusiform source strength (∼ 150 ms) to fearful than happy or neutral faces (P < 0.05). Late FG activity (∼ 230 ms) can also be observed. It should be noted, however, that each individual's FG response was temporally shifted to the grand average M170 latency and, as such, activity before and after the M170 peak may be imprecise.

Latency

For the occipital pole and FG sources, the only significant latency effect was a Hemisphere main effect for the late fusiform response, F(1,14) = 5.87, P < 0.05, indicating earlier right (225.3 ± 4.27 ms) than left (230.0 ± 4.07 ms) hemisphere responses.

Associations With RT

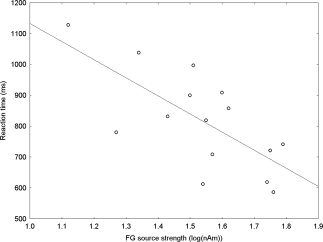

Associations between source strength, latency, task performance, and RT for each response measure (early visual and early and late fusiform) were examined for the explicit task. Significant or marginally significant associations were seen only between early left and right fusiform source strength and RT, with greater amplitudes predicting earlier button presses for all three emotion conditions (see Table I). As shown in Figure 5, collapsing across hemisphere and emotion, early fusiform source strength accounted for nearly 50% of RT variance (r = 0.70, P < 0.01).
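The "nearly 50%" figure follows from squaring the correlation coefficient, which gives the proportion of RT variance shared with early fusiform source strength:

$$
r^2 = 0.70^2 = 0.49 \approx 49\%
$$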

Table I.

Pearson's r values (with P‐values) for explicit‐task reaction time and left and right early and late fusiform (FG) activity, for each emotion condition

| Emotion | Left FG (early) r | P‐value | Right FG (early) r | P‐value | Left FG (late) r | P‐value | Right FG (late) r | P‐value |
|---|---|---|---|---|---|---|---|---|
| Fearful | 0.60 | 0.02 | 0.60 | 0.02 | 0.08 | 0.77 | 0.00 | 1.00 |
| Happy | 0.48 | 0.07 | 0.53 | 0.04 | 0.24 | 0.38 | 0.19 | 0.50 |
| Neutral | 0.49 | 0.07 | 0.69 | <0.01 | 0.03 | 0.89 | 0.24 | 0.39 |

Figure 5.

Pearson's r correlation between early fusiform source strength and RT, collapsed across hemisphere. The strong association (r = 0.70), accounting for nearly 50% of the RT variance, suggests involvement of the fusiform gyri in explicit emotion processing.

DISCUSSION

The present study examined the effect of explicitly versus implicitly processing the emotional content of faces on early visual and FG activity. In support of our hypothesis, stronger early left FG activity to fearful than to happy or neutral faces was observed only in the explicit task, suggesting that directed attention to the emotional content of faces modulates early FG activity. These findings are consistent with fMRI studies showing differential activation of fusiform regions for explicit compared with implicit task conditions [Gur et al., 2002b; Habel et al., 2007; Loughead et al., 2008], and increased FG activation to faces that are explicitly judged as threat‐related [Pinkham et al., 2008]. The finding of greater left FG activity to fearful than to happy or neutral faces during an explicit task also replicates previous reports [Critchley et al., 2000; Japee et al., 2009]. For example, using a task similar to the one employed here, Critchley et al. [2000] observed greater left middle temporal gyrus and left fusiform gyrus activity during explicit than implicit processing of facial expressions. The failure of some previous studies to observe FG emotion effects may be due to their use of an implicit task. The lack of an effect may also reflect repetition effects [Morel et al., 2009; Schweinberger et al., 2007]: to the extent that the M170 response decreases to repeated stimuli, studies with small stimulus sets may have more difficulty observing M170 amplitude differences to emotional face stimuli.

The influence of task demands did not extend to the early visual response, suggesting that the sensitivity seen in the FG was not due to a global increase in brain activity associated with explicitly processing emotion rather than age. Further, the association between early FG amplitude and RT in the explicit task for all emotion conditions also supports a role of the FG in processing emotion. Because neither early visual nor late fusiform activity was associated with RT, the cognitive processes needed to make a correct response may occur downstream of early visual activity but before late fusiform activity. Present results thus provide evidence for a multifaceted role of fusiform areas that includes encoding structural facial features [Eimer, 2000; Halgren et al., 2000], identity [Rotshtein et al., 2005], and emotion [Krombholz et al., 2007; Streit et al., 1999]. As such, present findings indicate that pathways for processing identity and emotion are not as segregated in the brain as postulated by traditional models of face processing [e.g., Bruce and Young, 1986].

In the present study, increased activity was observed to the fearful face stimuli. Several studies suggest that the FG is modulated by the amygdala when viewing fearful facial expressions. For example, two recent meta‐analyses of PET or fMRI amygdala emotion studies found that fear and disgust were more likely to be associated with amygdala activation than happiness and unspecified positive emotions [Costafreda et al., 2008; Fusar‐Poli et al., 2009]. Fusar‐Poli et al. [2009] also noted that the processing of fearful faces activated fusiform areas as well as the amygdala. Other findings support amygdala modulation of FG activity. Morris et al. [1998] showed a significant positive correlation between fusiform responses to fearful faces and amygdala activity (as measured by differential fearful vs. happy responses). Vuilleumier et al. [2004], studying patients with hippocampal sclerosis, found that in a subset of these patients with concomitant amygdala lesions there was no significant increase in fusiform activity when viewing fearful over neutral faces. In contrast, patients with lesions restricted to the hippocampus showed the expected pattern of increased fusiform activity to fearful faces, with the degree of amygdala sclerosis correlating inversely with the size of the differential response to fearful vs. happy faces. Evidence for a top‐down amygdala effect on the FG is provided by Luo et al. [2007], who observed that amygdala responses to fear were earlier than FG responses. As this finding did not generalize to other facial emotions, the amygdala/FG association may be specific to fear stimuli [see Vuilleumier and Pourtois, 2007 for a detailed discussion of this issue].

It is also possible that the left‐hemisphere emotion effect may be related to hemisphere differences in amygdala activation. A meta‐analysis examining differential amygdala responses to fearful versus happy or neutral stimuli found that significant emotion effects are observed most often in the left hemisphere [Baas et al., 2004; although for a more nuanced examination of this issue see Sergerie et al., 2008]. Other studies suggest that whereas the right amygdala is involved in a rapid and automatic detection of facial emotion, a more delayed and evaluative response is subserved by the left amygdala [Costafreda et al., 2008; Morris et al., 1998; Wright et al., 2001]; if true, this may account for the hemisphere differences observed in the present study. Additional evidence of a left hemisphere bias comes from an intracranial EEG study by Tsuchiya et al. [2008], who showed a possible left‐hemisphere dominance for emotion decoding in ventral temporal cortex. Future studies examining associations between the timing of amygdala and FG activity in both hemispheres, as well as functional connectivity between the amygdala and FG, are of interest.

In the present study, early and late fusiform responses were observed, a finding also reported in previous studies. For example, Halgren et al. [2000] observed fusiform responses at 165 and 256 ms. Whereas early fusiform emotion effects were observed only during the explicit task, late fusiform activity (∼228 ms) showed a stronger response to fearful than to happy or neutral faces bilaterally and in both the explicit and implicit tasks. Previous studies have reported similar findings, with augmented late potentials to threatening faces in explicit and implicit tasks [Ishai et al., 2006; Sato et al., 2001; Schupp et al., 2004]. It is possible that whereas one must attend to the emotional content of a face to elicit increased early fusiform activity to fearful faces, later fusiform activity may reflect the need to attend to potential environmental threats regardless of current task demands. Present results are similar to the increased FG activity to emotional stimuli observed in some fMRI studies. For example, Gur et al. [2002b] reported greater FFA activation during the explicit emotion identification condition, and Ganel et al. [2005] observed higher FFA activation when subjects judged expression or directed attention to identity. Present early and late FG results, however, suggest that FG activity to emotional stimuli varies over a relatively short time period, and thus underscore the need to use neuroimaging methods with high temporal resolution to fully understand the role of the FG in processing emotional stimuli.

A few issues are worth comment. A potential study confound is that subjects were more accurate in the explicit than in the implicit task. Performance on both tasks was well above chance, however, and RTs did not differ between the tasks. In addition, in an fMRI study examining the effect of task difficulty on fusiform activation, Bokde et al. [2005] showed that FFA activation increased with increasing task difficulty. Because the implicit (age) task was the more difficult one here, a task‐difficulty account would predict greater FG activity in the implicit than in the explicit task; the absence of such a main effect in the present study indicates that possible differences in task difficulty do not account for the present findings.

It is also worth commenting on the latency of the FG response observed in the present study. The average early latency was 140.8 ms in the left and 139.7 ms in the right FG. Although these latencies are earlier than those reported in some studies, they are similar to other N170/M170 studies reporting responses prior to 155 ms [Bayle and Taylor, 2010; Campanella et al., 2002; Henson et al., 2009; Krolak‐Salmon et al., 2001; Lueschow et al., 2004; Pourtois et al., 2009; Streit et al., 1999]. For example, using intracranial electrodes and recording directly from the right fusiform gyrus, Pourtois et al. [2009] observed a face‐specific response at ∼144 ms, a latency very similar to that observed in the present study. Here, as stimulus onset was determined from a photodiode rather than the trigger sent from the stimulus presentation program, our latencies may be more accurate (and thus earlier) than latencies reported in studies that time‐lock to the trigger sent from the stimulus presentation program (and especially in studies that do not account for the projector refresh rate). In general, however, there appears to be a fairly large range of “normal” N170/M170 values reported in the literature.
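The timing argument can be illustrated with a short Python sketch that takes stimulus onset from the rising edge of the photodiode signal and measures its delay from the software trigger. The threshold, signal values, and helper names are hypothetical. As an example of scale, if a display refreshed at 60 Hz (an assumed rate), a single frame would last ≈16.7 ms, so time‐locking to the trigger could make latencies appear later by up to a frame or more.

```python
import numpy as np

FS = 600.0  # MEG sampling rate (Hz), from the text

def photodiode_onsets(pd, threshold):
    """Sample indices where the photodiode signal crosses threshold upward."""
    above = np.asarray(pd) > threshold
    return np.flatnonzero(~above[:-1] & above[1:]) + 1

def trigger_delays_ms(trigger_idx, onset_idx, fs=FS):
    """Delay (ms) from each software trigger to the first photodiode onset at
    or after it; assumes every trigger is followed by an onset."""
    onset_idx = np.asarray(onset_idx)
    trigger_idx = np.asarray(trigger_idx)
    nxt = onset_idx[np.searchsorted(onset_idx, trigger_idx)]
    return (nxt - trigger_idx) * 1000.0 / fs
```

Re‐epoching on the photodiode onsets rather than the triggers removes this delay, and its trial‐to‐trial jitter, from the measured latencies.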

To conclude, stronger early left fusiform activity to fearful than to happy or neutral faces was observed in the explicit emotion judgment task, indicating that directed attention to the fearful content of faces facilitates observation of early fusiform valence modulation. The strong association between early fusiform activity and RT in the explicit task provides additional evidence for the involvement of the fusiform gyrus in processing emotional information. In the late FG response, a stronger fusiform response to fearful faces was observed in both the explicit and implicit tasks, perhaps reflecting the need to direct attention to potentially threatening environmental stimuli regardless of task demands. The present findings thus suggest that studies investigating the effect of emotion on FG activity should (1) employ an explicit task to increase the chance of observing emotion condition differences, (2) utilize a large number of novel face stimuli to minimize the likelihood of response habituation, and (3) use source analysis to specifically examine left and right hemisphere FG activity.

Acknowledgements

Dr. Roberts would like to extend thanks to the Oberkircher Family for the Oberkircher Family Endowed Chair in Pediatric Radiology.

Footnotes

To check whether results differed between subjects with and without individual MR images, analyses were rerun with MR availability as a between‐group factor. The main effect of group was not significant, nor were the group × condition (explicit vs. implicit) and group × valence interactions (P‐values > 0.30).

REFERENCES

- Adolphs R, Tranel D, Damasio H, Damasio A (1994): Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372: 669–672.

- Adolphs R, Tranel D, Damasio H, Damasio AR (1995): Fear and the human amygdala. J Neurosci 15: 5879–5891.

- Allison T, Ginter H, McCarthy G, Nobre AC, Puce A, Luby M, Spencer DD (1994): Face recognition in human extrastriate cortex. J Neurophysiol 71: 821–825.

- Ashley V, Vuilleumier P, Swick D (2004): Time course and specificity of event‐related potentials to emotional expressions. Neuroreport 15: 211–216.

- Baas D, Aleman A, Kahn RS (2004): Lateralization of amygdala activation: A systematic review of functional neuroimaging studies. Brain Res Brain Res Rev 45: 96–103.

- Balconi M, Lucchiari C (2005): Event‐related potentials related to normal and morphed emotional faces. J Psychol 139: 176–192.

- Batty M, Taylor MJ (2003): Early processing of the six basic facial emotional expressions. Brain Res Cogn Brain Res 17: 613–620.

- Bayle DJ, Taylor MJ (2010): Attention inhibition of early cortical activation to fearful faces. Brain Res 1313: 113–123.

- Berg P, Scherg M (1994): A multiple source approach to the correction of eye artifacts. Electroencephalogr Clin Neurophysiol 90: 229–241.

- Blau VC, Maurer U, Tottenham N, McCandliss BD (2007): The face‐specific N170 component is modulated by emotional facial expression. Behav Brain Funct 3: 7.

- Bokde AL, Dong W, Born C, Leinsinger G, Meindl T, Teipel SJ, Reiser M, Hampel H (2005): Task difficulty in a simultaneous face matching task modulates activity in face fusiform area. Brain Res Cogn Brain Res 25: 701–710.

- Bruce V, Young A (1986): Understanding face recognition. Br J Psychol 77(Part 3): 305–327.

- Caharel S, Courtay N, Bernard C, Lalonde R, Rebai M (2005): Familiarity and emotional expression influence an early stage of face processing: An electrophysiological study. Brain Cogn 59: 96–100.

- Campanella S, Quinet P, Bruyer R, Crommelinck M, Guerit JM (2002): Categorical perception of happiness and fear facial expressions: An ERP study. J Cogn Neurosci 14: 210–227.

- Costafreda SG, Brammer MJ, David AS, Fu CHY (2008): Predictors of amygdala activation during the processing of emotional stimuli: A meta‐analysis of 385 PET and fMRI studies. Brain Res Rev 58: 57–70.

- Critchley H, Daly E, Phillips M, Brammer M, Bullmore E, Williams S, Van Amelsvoort T, Robertson D, David A, Murphy D (2000): Explicit and implicit neural mechanisms for processing of social information from facial expressions: A functional magnetic resonance imaging study. Hum Brain Mapp 9: 93–105.

- Cunningham WA, Raye CL, Johnson MK (2004): Implicit and explicit evaluation: FMRI correlates of valence, emotional intensity, and control in the processing of attitudes. J Cogn Neurosci 16: 1717–1729.

- Darwin C (1872): The Expression of the Emotions in Man and Animals. London: John Murray.

- Deffke I, Sander T, Heidenreich J, Sommer W, Curio G, Trahms L, Lueschow A (2007): MEG/EEG sources of the 170‐ms response to faces are co‐localized in the fusiform gyrus. Neuroimage 35: 1495–1501.

- Eimer M (2000): The face‐specific N170 component reflects late stages in the structural encoding of faces. Neuroreport 11: 2319–2324.

- Eimer M, Holmes A (2002): An ERP study on the time course of emotional face processing. Neuroreport 13: 427–431.

- Eimer M, Holmes A, McGlone FP (2003): The role of spatial attention in the processing of facial expression: An ERP study of rapid brain responses to six basic emotions. Cogn Affect Behav Neurosci 3: 97–110.

- Ewbank MP, Smith WA, Hancock ER, Andrews TJ (2008): The M170 reflects a viewpoint‐dependent representation for both familiar and unfamiliar faces. Cereb Cortex 18: 364–370.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K (2000): Facial expressions of emotion: Are angry faces detected more efficiently? Cogn Emot 14: 61–92.

- Fusar‐Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009): Functional atlas of emotional faces processing: A voxel‐based meta‐analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34: 418–432.

- Ganel T, Valyear KF, Goshen‐Gottstein Y, Goodale MA (2005): The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43: 1645–1654.

- Grill‐Spector K, Knouf N, Kanwisher N (2004): The fusiform face area subserves face perception, not generic within‐category identification. Nat Neurosci 7: 555–562.

- Gur RC, Sara R, Hagendoorn M, Marom O, Hughett P, Macy L, Turner T, Bajcsy R, Posner A, Gur RE (2002a): A method for obtaining 3‐dimensional facial expressions and its standardization for use in neurocognitive studies. J Neurosci Methods 115: 137–143.

- Gur RC, Schroeder L, Turner T, McGrath C, Chan RM, Turetsky BI, Alsop D, Maldjian J, Gur RE (2002b): Brain activation during facial emotion processing. Neuroimage 16(3, Part 1): 651–662.

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin‐Exner I, Gur RC, Moser E (2007): Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia 45: 2369–2377.

- Halgren E, Raij T, Marinkovic K, Jousmaki V, Hari R (2000): Cognitive response profile of the human fusiform face area as determined by MEG. Cereb Cortex 10: 69–81.

- Harris AM, Aguirre GK (2008): The effects of parts, wholes, and familiarity on face‐selective responses in MEG. J Vis 8: 1–12.

- Haxby JV, Ungerleider LG, Clark VP, Schouten JL, Hoffman EA, Martin A (1999): The effect of face inversion on activity in human neural systems for face and object perception. Neuron 22: 189–199.

- Haxby JV, Hoffman EA, Gobbini MI (2000): The distributed human neural system for face perception. Trends Cogn Sci 4: 223–233.

- Henson RN, Mouchlianitis E, Friston KJ (2009): MEG and EEG data fusion: Simultaneous localization of face‐evoked responses. Neuroimage 47: 581–589.

- Herrmann MJ, Aranda D, Ellgring H, Mueller TJ, Strik WK, Heidrich A, Fallgatter AJ (2002): Face‐specific event‐related potential in humans is independent from facial expression. Int J Psychophysiol 45: 241–244.

- Holmes A, Vuilleumier P, Eimer M (2003): The processing of emotional facial expression is gated by spatial attention: Evidence from event‐related brain potentials. Brain Res Cogn Brain Res 16: 174–184.

- Holmes A, Winston JS, Eimer M (2005): The role of spatial frequency information for ERP components sensitive to faces and emotional facial expression. Brain Res Cogn Brain Res 25: 508–520.

- Hung T, Smith ML, Bayle DJ, Mills T, Cheyne D, Taylor MJ (2010): Unattended emotional faces elicit early lateralized amygdala‐frontal and fusiform activations. Neuroimage 50: 727–733.

- Ishai A, Bikle PC, Ungerleider LG (2006): Temporal dynamics of face repetition suppression. Brain Res Bull 70: 289–295.

- Japee S, Crocker L, Carver F, Pessoa L, Ungerleider LG (2009): Individual differences in valence modulation of face‐selective M170 response. Emotion 9: 59–69.

- Jiang Y, Shannon RW, Vizueta N, Bernat EM, Patrick CJ, He S (2009): Dynamics of processing invisible faces in the brain: Automatic neural encoding of facial expression information. Neuroimage 44: 1171–1177.

- Kanwisher N, McDermott J, Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311.

- Kloth N, Dobel C, Schweinberger SR, Zwitserlood P, Bolte J, Junghofer M (2006): Effects of personal familiarity on early neuromagnetic correlates of face perception. Eur J Neurosci 24: 3317–3321.

- Krolak‐Salmon P, Fischer C, Vighetto A, Mauguiere F (2001): Processing of facial emotional expression: Spatio‐temporal data as assessed by scalp event‐related potentials. Eur J Neurosci 13: 987–994.

- Krombholz A, Schaefer F, Boucsein W (2007): Modification of N170 by different emotional expression of schematic faces. Biol Psychol 76: 156–162.

- Lewis S, Thoma RJ, Lanoue MD, Miller GA, Heller W, Edgar C, Huang M, Weisend MP, Irwin J, Paulson K, et al. (2003): Visual processing of facial affect. Neuroreport 14: 1841–1845.

- Lins OG, Picton TW, Berg P, Scherg M (1993): Ocular artifacts in recording EEGs and event‐related potentials. II: Source dipoles and source components. Brain Topogr 6: 65–78.

- Liu J, Higuchi M, Marantz A, Kanwisher N (2000): The selectivity of the occipitotemporal M170 for faces. Neuroreport 11: 337–341.

- Liu J, Harris A, Kanwisher N (2002): Stages of processing in face perception: An MEG study. Nat Neurosci 5: 910–916.

- Loughead J, Gur RC, Elliott M, Gur RE (2008): Neural circuitry for accurate identification of facial emotions. Brain Res 1194: 37–44.

- Lu ST, Hamalainen MS, Hari R, Ilmoniemi RJ, Lounasmaa OV, Sams M, Vilkman V (1991): Seeing faces activates three separate areas outside the occipital visual cortex in man. Neuroscience 43: 287–290.

- Lueschow A, Sander T, Boehm SG, Nolte G, Trahms L, Curio G (2004): Looking for faces: Attention modulates early occipitotemporal object processing. Psychophysiology 41: 350–360.

- Luo Q, Holroyd T, Jones M, Hendler T, Blair J (2007): Neural dynamics for facial threat processing as revealed by gamma band synchronization using MEG. Neuroimage 34: 839–847.

- Mathersul D, Palmer DM, Gur RC, Gur RE, Cooper N, Gordon E, Williams LM (2009): Explicit identification and implicit recognition of facial emotions: II. Core domains and relationships with general cognition. J Clin Exp Neuropsychol 31: 278–291.

- Morel S, Ponz A, Mercier M, Vuilleumier P, George N (2009): EEG‐MEG evidence for early differential repetition effects for fearful, happy and neutral faces. Brain Res 1254: 84–98.

- Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, Dolan RJ (1998): A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121(Part 1): 47–57.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002): Functional neuroanatomy of emotion: A meta‐analysis of emotion activation studies in PET and fMRI. Neuroimage 16: 331–348.

- Pinkham AE, Hopfinger JB, Ruparel K, Penn DL (2008): An investigation of the relationship between activation of a social cognitive neural network and social functioning. Schizophr Bull 34: 688–697.

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual‐Marqui RD, Davidson RJ (2002): Affective judgments of faces modulate early activity (approximately 160 ms) within the fusiform gyri. Neuroimage 16(3, Part 1): 663–677.

- Pourtois G, Spinelli L, Seeck M, Vuilleumier P (2009): Modulation of face processing by emotional expression and gaze direction during intracranial recordings in right fusiform cortex. J Cogn Neurosci 22: 2086–2107.

- Roberts TP, Flagg EJ, Gage NM (2004): Vowel categorization induces departure of M100 latency from acoustic prediction. Neuroreport 15: 1679–1682.

- Rotshtein P, Henson RN, Treves A, Driver J, Dolan RJ (2005): Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat Neurosci 8: 107–113.

- Sagiv N, Bentin S (2001): Structural encoding of human and schematic faces: Holistic and part‐based processes. J Cogn Neurosci 13: 937–951.

- Santesso DL, Meuret AE, Hofmann SG, Mueller EM, Ratner KG, Roesch EB, Pizzagalli DA (2008): Electrophysiological correlates of spatial orienting towards angry faces: A source localization study. Neuropsychologia 46: 1338–1348.

- Sato W, Kochiyama T, Yoshikawa S, Matsumura M (2001): Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. Neuroreport 12: 709–714.

- Scherg M, Berg P (1996): New concepts of brain source imaging and localization. Electroencephalogr Clin Neurophysiol Suppl 46: 127–137.

- Scherg M, Ebersole JS (1994): Brain source imaging of focal and multifocal epileptiform EEG activity. Neurophysiol Clin 24: 51–60.

- Scherg M, von Cramon D (1985): A new interpretation of the generators of BAEP waves I‐V: Results of a spatio‐temporal dipole model. Electroencephalogr Clin Neurophysiol 62: 290–299.

- Scherg M, Vajsar J, Picton TW (1989): A source analysis of the late auditory evoked potentials. J Cogn Neurosci 1: 336–355.

- Scherg M, Ille N, Bornfleth H, Berg P (2002): Advanced tools for digital EEG review: Virtual source montages, whole‐head mapping, correlation, and phase analysis. J Clin Neurophysiol 19: 91–112.

- Scheuerecker J, Frodl T, Koutsouleris N, Zetzsche T, Wiesmann M, Kleemann AM, Bruckmann H, Schmitt G, Moller HJ, Meisenzahl EM (2007): Cerebral differences in explicit and implicit emotional processing—An fMRI study. Neuropsychobiology 56: 32–39.

- Schupp HT, Ohman A, Junghofer M, Weike AI, Stockburger J, Hamm AO (2004): The facilitated processing of threatening faces: An ERP analysis. Emotion 4: 189–200.

- Schweinberger SR, Kaufmann JM, Moratti S, Keil A, Burton AM (2007): Brain responses to repetitions of human and animal faces, inverted faces, and objects: An MEG study. Brain Res 1184: 226–233.

- Sergerie K, Chochol C, Armony JL (2008): The role of the amygdala in emotional processing: A quantitative meta‐analysis of functional neuroimaging studies. Neurosci Biobehav Rev 32: 811–830.

- Streit M, Ioannides AA, Liu L, Wolwer W, Dammers J, Gross J, Gaebel W, Muller‐Gartner HW (1999): Neurophysiological correlates of the recognition of facial expressions of emotion as revealed by magnetoencephalography. Brain Res Cogn Brain Res 7: 481–491.

- Streit M, Wolwer W, Brinkmeyer J, Ihl R, Gaebel W (2000): Electrophysiological correlates of emotional and structural face processing in humans. Neurosci Lett 278: 13–16.

- Tsuchiya N, Kawasaki H, Oya H, Howard MA III, Adolphs R (2008): Decoding face information in time, frequency and space from direct intracranial recordings of the human brain. PLoS ONE 3: e3892.

- Turetsky BI, Kohler CG, Indersmitten T, Bhati MT, Charbonnier D, Gur RC (2007): Facial emotion recognition in schizophrenia: When and why does it go awry? Schizophr Res 94: 253–263.

- Vuilleumier P, Pourtois G (2007): Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45: 174–194.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ (2004): Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci 7: 1271–1278.

- Watanabe S, Kakigi R, Koyama S, Kirino E (1999): Human face perception traced by magneto‐ and electro‐encephalography. Brain Res Cogn Brain Res 8: 125–142.

- Williams LM, Mathersul D, Palmer DM, Gur RC, Gur RE, Gordon E (2009): Explicit identification and implicit recognition of facial emotions: I. Age effects in males and females across 10 decades. J Clin Exp Neuropsychol 31: 257–277.

- Wright CI, Fischer H, Whalen PJ, McInerney SC, Shin LM, Rauch SL (2001): Differential prefrontal cortex and amygdala habituation to repeatedly presented emotional stimuli. Neuroreport 12: 379–383.