Abstract

Object categorization during ambiguous sensory stimulation generally depends on the activity of extrastriate sensory areas as well as on top‐down information. Both reflect internal representations of prototypical object knowledge against which incoming sensory information is compared. Beyond these general mechanisms, however, individuals might differ in their readiness to impose internal representations onto incoming ambiguous information. These individual differences might be based on what has been referred to as the “Schema Instantiation Threshold” (SIT; Lewicki et al. [1992]: Am Psychol 47:796–801), which defines a continuum from a very rapid (low threshold) to a rather controlled (high threshold) application of internal representations. We collected fMRI scans while subjects with a low SIT (“internal encoders”) and subjects with a high SIT (“external encoders”) made gender categorizations of ambiguous facial images. Internal encoders made faster gender decisions during high sensory ambiguity, showed higher fusiform activity, and had faster BOLD responses in the fusiform face area (FFA) and occipital face area (OFA), indicating a faster and stronger application of face‐gender representations due to a low SIT. External encoders made slower gender decisions and showed increased medial frontal activity, indicating a more controlled strategy during gender categorization and increased decisional uncertainty. Internal encoders showed higher functional connectivity of the orbito‐frontal cortex (OFC) to seed activity in the FFA, which might indicate both more readily generated predictive classificatory guesses and the subjective impression of accurate classification. Taken together, an “internal encoding style” is characterized by the fast, unsupervised, and unverified application of primary object representations, whereas the opposite seems evident for subjects with an “external encoding style.” Hum Brain Mapp, 2010. © 2010 Wiley‐Liss, Inc.

Keywords: encoding style, face perception, gender decision, individual differences, fMRI

INTRODUCTION

Visual object recognition results from the interplay of bottom‐up processing of sensory information and task‐specific expectations, which act as top‐down modulations of incoming information [Fenske et al., 2006; Mesulam, 2008]. The bottom‐up sensory signals are mainly feed‐forward signals originating in the primary and extrastriate visual cortex. Initial object categorization takes place in extrastriate occipito‐temporal brain regions according to prototypical configurations of stimulus features. Ventral extrastriate regions, for example, have been shown to be especially sensitive to facial configurations [e.g., Kanwisher et al., 1997; Rossion et al., 2003], and this sensitivity might have been shaped by long‐term experience with faces, forming an expertise network for this stimulus category [Johnson, 2005]. Representations in ventral extrastriate brain regions might therefore serve as prototypical templates, or primary object schemata, for faces [Goldstein and Chance, 1980], supporting and facilitating the recognition and categorization of incoming sensory information when it closely matches these schemata. The term “schema” thus refers to a unit of organized object knowledge, acquired through previous experience, that is subsequently applied to the encoding of new incoming information [see Rumelhart, 1980].

However, visual object recognition is affected not only by this bottom‐up processing but also by top‐down modulation. Such top‐down processes are imposed, for example, by selectively attending to a specific stimulus or stimulus feature [Banich et al., 2000], by expectations about the occurrence of specific objects [Li et al., 2009; Zhang et al., 2008], by predictions about forthcoming objects [Fenske et al., 2006; Summerfield and Egner, 2009], or by the monitoring of decision processes under uncertainty [Ridderinkhof et al., 2004]. This higher‐level top‐down modulation might facilitate the processing of attended, expected, or predicted objects, although this facilitation might rest on different brain mechanisms: attention might enhance, and expectation might reduce, visual processing in extrastriate object‐sensitive brain regions [see Summerfield and Egner, 2009]. In contrast to the “primary” object schemata in the extrastriate visual cortex described above, these top‐down modulatory processes might represent “secondary” schemata, since they also represent a preferred object category selected by attention, expectations, and/or predictions. These secondary schemata seem to be more transient in nature but, like primary schemata, might serve as templates against which incoming information is compared. Secondary schemata seem to originate predominantly in frontal brain regions and to modulate the information flow and categorization processes in extrastriate visual processing regions [Fenske et al., 2006; Li et al., 2009; Summerfield et al., 2006a, b; Zhang et al., 2008], whereby the orbito‐frontal cortex (OFC) in particular seems to signal subjectively successful object categorization [Fenske et al., 2006; Summerfield and Koechlin, 2008].

Object recognition and categorization therefore integrate both the bottom‐up stream of incoming sensory information and the application of primary and secondary schemata during stimulus encoding. However, the relative contribution of external sensory information and internal schemata to the final percept varies and depends on both the exact nature of the sensory stimulus properties and top‐down secondary schemata, such as those imposed by task instructions. With respect to stimulus properties, strongly filtered, morphed, or incomplete images of objects convey ambiguous sensory information. In the absence of strong top‐down guidance by, for example, expectations or selective attention, a reliable categorization of these ambiguous stimuli might be driven more strongly by the application of primary schemata as represented in object‐sensitive visual areas. Studies on the categorization of ambiguous stimuli in the absence of top‐down control demonstrated a relation between activation in extrastriate object‐sensitive regions and individual categorization behavior [Akrami et al., 2009; Li et al., 2009; Liu and Jagadeesh, 2008; Sterzer and Kleinschmidt, 2007; Summerfield et al., 2006b; Zhang et al., 2008]. Besides stimulus properties, however, top‐down control is the second important factor during percept formation, and it is quite often represented in frontal brain regions. Top‐down control by secondary schemata could be generated by context‐related expectations [Fenske et al., 2006; Kveraga et al., 2007a] or by task instructions [Li et al., 2009; Zhang et al., 2008], and this top‐down influence might affect bottom‐up processing and primary schema application in extrastriate brain regions. For example, object‐selective regions are active without any sensory stimulation when subjects are simply instructed to imagine objects of the category to which these regions are sensitive [O'Craven and Kanwisher, 2000].
In the presence of ambiguous sensory stimulation, such as ambiguous [Summerfield et al., 2006a, b] or random noise images [Li et al., 2009; Zhang et al., 2008], frontal brain regions seem to enhance signals in extrastriate object‐sensitive regions that match the object category predicted by task instructions. Such task instructions might involve selectively attending to one of two superimposed objects [O'Craven et al., 1999].

However, this dynamic interplay between incoming sensory information and internal schemata might depend not only on stimulus properties and task‐dependent top‐down modulation, as described above, but also on a third factor: inter‐individual differences, such as the individual readiness to impose internal schemata onto ambiguous external information. For example, when confronted with an ambiguous sensory image, some individuals seem to make rather fast object categorizations based on a first impression and guided by a coarse application of primary object schemata, whereas others are more conservative and try to accumulate more sensory evidence before committing to a controlled object categorization. Thus, individuals might differ in their threshold for applying an internal schema during object recognition. Within a cognitive theory of percept formation, this individual threshold has been termed the “Schema Instantiation Threshold” (SIT) [see Billieux et al., 2009]. Individuals who more readily impose primary schemata are more likely to experience consistency during the encoding of ambiguous stimuli even in the absence of objective consistency [Lewicki, 2005; Lewicki et al., 1992]. Such transient consistency can result in “split‐second illusions” in everyday life, caused by rapidly imposed internal schemata in the absence of reliable sensory evidence. Similar illusory perceptual consistencies can be replicated in experimental settings with tachistoscopically presented ambiguous stimuli [Lewicki, 2005]. This subjective experience of consistency resembles the neural process of perceptual closure in the inferior occipito‐temporal cortex, where inconsistent sensory information is filled in with missing information to generate a consistent percept [Sehatpour et al., 2006], and individuals might differ in their readiness to finalize the perceptual process when sensory evidence is inconsistent.

Individuals might therefore differ significantly in their SIT, which would result in a more internally or a more externally guided encoding style. These encoding styles differ in their balance between schema application and the accumulation of sensory evidence, especially under ambiguous sensory stimulation. According to this hypothesis, an “internal encoding style” relies more strongly on a fast application of internal primary schemata, especially when sensory evidence is scarce, as during high sensory ambiguity. This fast schema application is not accompanied by the accumulation of additional sensory information to verify whether the applied schema actually matches the sensory input. The “external encoding style,” in turn, is based on accumulated sensory evidence from external stimuli and on more controlled and iterative stimulus processing. This iterative processing provides a closer look at the sensory information, particularly when more accurate sensory information becomes available over time. To identify individual encoding styles parametrically, Lewicki et al. introduced the “Encoding Style Questionnaire” (ESQ) [Billieux et al., 2009; see Supporting Information S1], which measures how frequently subjects experience split‐second illusions and allows external encoding styles to be differentiated from internal ones.

The purpose of this study was to test the hypothesis that the dynamic interplay between the sensory bottom‐up and top‐down functional pathways of schema application in visual face perception is modulated by an individually varying SIT, that is, by the individual encoding style. To investigate the neural correlates of visual encoding style, we designed a face gender decision task using blurry facial images that successively became more recognizable in a sequence of images with decreasing levels of Gaussian filtering. The rationale for using blurry facial images was twofold. First, blurry [Li et al., 2009; Zhang et al., 2008], degraded [Summerfield et al., 2006a, b], or ambiguous [Akrami et al., 2009; Liu and Jagadeesh, 2008] images have been shown to facilitate the application of primary and secondary schemata during object recognition, as outlined above. Blurry images are therefore suitable for testing individual differences in the readiness to impose internal schemata onto incoming ambiguous sensory information. Second, facial images engage a specific network of extrastriate brain regions, consisting of a region in the fusiform gyrus termed the “fusiform face area” (FFA) [Kanwisher et al., 1997] and a region in the inferior occipital cortex termed the “occipital face area” (OFA) [Gauthier et al., 2000; Rossion et al., 2003]. These regions are sensitive to the presence of faces but also seem able to discriminate between faces on the basis of factors such as gender [Grill‐Spector et al., 2004]. For the latter, the integrity of the OFA seems to be important for receiving the signal fed forward from the FFA [Dricot et al., 2008; Rossion et al., 2003; Steeves et al., 2006].

Gender is a socially learned cognitive schema that is applied during the perception of human faces [Fiske, 1993]. Gender recognition relies on the activity of the extrastriate face‐sensitive regions described above. During the sequence of filtered facial images in the present experiment, subjects made a gender decision at the first point of subjective certainty. This gender decision requires the collaboration of distributed brain regions. According to SIT theory, we expected that face‐sensitive regions in extrastriate cortex would impose primary face‐gender schemata onto incoming sensory information and, ideally, accumulate sensory evidence through recursive application of these schemata [Akrami et al., 2009; Liu and Jagadeesh, 2008]. Besides activation in posterior face‐sensitive regions, we expected to find frontal activations representing transient secondary schemata related to specific task conditions and to the individual encoding style. Specifically, activations in the ventromedial frontal cortex were assumed to represent object templates reflecting task‐specific expectations that a particular object will most likely occur [Liu and Jagadeesh, 2008; Summerfield et al., 2006a, b; Zhang et al., 2008]. Activations in the dorso‐medial frontal and insular cortex, on the other hand, have been found during categorization uncertainty, when task instructions were less specific about the forthcoming object [Grinband et al., 2006]; they have been related to monitoring processes in the dorso‐medial frontal cortex [Ridderinkhof et al., 2004] and to decision making in the anterior insular cortex during the encoding of ambiguous stimuli [Singer et al., 2009].

More specifically, we expected internal and external encoders to differ significantly in the spatial and temporal dynamics of brain activity while making gender decisions on ambiguous facial images. Internal encoders more readily apply internal, and especially primary, schemata in the absence of sufficient sensory evidence; hence, we expected internal encoders to make more rapid judgments. Accordingly, posterior face‐sensitive regions should show earlier activation in internal than in external encoders [Liu and Jagadeesh, 2008; Summerfield et al., 2006b]. External encoders, on the other hand, should apply more controlled processing and, owing to a higher SIT, are expected to accumulate more sensory evidence to achieve a closer match between sensory input and schema. External encoders should therefore respond more slowly, with more recurrent activation in the functional connections between posterior extrastriate and frontal brain regions.

MATERIALS AND METHODS

Participants

We screened a total of 218 subjects (161 female; mean age 22.9 years, SD = 4.4, age range 18–46 years) with the Encoding Style Questionnaire (ESQ) [see Billieux et al., 2009] prior to experimental testing to identify subgroups of subjects with high internal encoding (IE) and high external encoding (EE) style scores. Subjects were mainly arts and sciences students from Bremen University and Jacobs University. From this sample, we selected an equal number of subjects for the internal and external encoder groups by including only individuals with the most extreme ESQ scores. The inclusion criteria were a cumulative ESQ score of ≥4.83 for internal and ≤2.33 for external encoders, corresponding to standardized scores of ≥0.8 and ≤−0.6, respectively.
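The group selection can be sketched as follows, assuming the cumulative ESQ scores of the screening sample are available as a numeric array. The cutoffs are the cumulative‐score criteria reported above; trimming the larger candidate pool to its most extreme scorers so that both groups have equal size is our reading of the text, and `select_groups` and `standardize` are hypothetical helper names. The standardization against the screening sample's mean and sample SD (ddof=1) is also an assumption, since the text does not state how the standardized scores were computed.

```python
import numpy as np

def standardize(scores):
    """z-score ESQ totals against the screening sample (assumed: sample SD, ddof=1)."""
    scores = np.asarray(scores, dtype=float)
    return (scores - scores.mean()) / scores.std(ddof=1)

def select_groups(scores, hi_cut=4.83, lo_cut=2.33):
    """Hypothetical helper: indices of internal (>= hi_cut) and external (<= lo_cut)
    encoders, trimmed to equal size by keeping the most extreme scorers."""
    scores = np.asarray(scores, dtype=float)
    internal = np.flatnonzero(scores >= hi_cut)
    external = np.flatnonzero(scores <= lo_cut)
    n = min(len(internal), len(external))
    internal = internal[np.argsort(-scores[internal])][:n]  # highest scores first
    external = external[np.argsort(scores[external])][:n]   # lowest scores first
    return internal, external

ie, ee = select_groups([5.0, 4.9, 3.0, 2.0, 2.3, 1.5])
print(ie.tolist(), ee.tolist())  # prints [0, 1] [5, 3]
```

In the toy example, three subjects fall below the external cutoff but only two exceed the internal one, so the external group is trimmed to its two lowest scorers.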

Twenty‐four healthy subjects participated in the fMRI session (three male; mean age 23.9 years, SD = 5.3, age range 18–43 years), 12 in the group of internal encoders and 12 in the group of external encoders. The groups differed significantly in their cumulative (IE: M = 4.85, SD = 0.09; EE: M = 2.07, SD = 0.16; t(22) = 15.232, P < 0.001) and standardized encoding style scores (IE: M = 1.16, SD = 0.06; EE: M = 2.07, SD = 0.16; t(22) = 15.911, P < 0.001).

All subjects were right‐handed [Oldfield, 1971] and had normal or corrected‐to‐normal vision. No subject reported a history of neurological or psychiatric conditions. All subjects gave written informed consent for their participation in accordance with the ethical and data security guidelines of the University of Bremen. The study was approved by the local ethics committee.

Stimulus Material and Trial Sequence

The stimuli were projected with a JVC video projector using Presentation® software (Neurobehavioral Systems; https://nbs.neuro-bs.com) onto a projection screen positioned at the rear end, at a viewing distance of about 38 cm. Facial stimuli were photographs of male and female faces (200 × 200 pixels; visual angle 7° × 7°) selected from the AR database [Martinez and Benavente, 1998], the Psychological Image Collection at Stirling (PICS, [http://pics.psych.stir.ac.uk/]), the Frontal Faces Dataset [Weber, 1999; http://www.vision.caltech.edu/html-files/archive.html], and our own database. We included only Caucasian faces without task‐irrelevant features such as a beard, eyeglasses, or excessive make‐up. All heads were upright, with the face in full frontal view and a neutral facial expression, and eye gaze was directed at the camera. Faces were overlaid with a gray mask (CIELab 70, 0, 0; visual angle 5.25° × 5.5°) to cover task‐irrelevant facial information (see Fig. 1a) and were adjusted to uniform average luminance and contrast. We initially created a set of 70 facial stimuli (35 male, 35 female) that were evaluated in a pilot study: prior to the scanning sessions, 15 subjects who did not participate in the fMRI experiment made forced‐choice gender classifications of these stimuli. On the basis of this pilot evaluation, a final set of 50 facial stimuli (25 male, 25 female) was selected, namely those classified correctly with at least 90% accuracy.
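The reported visual angles relate on‐screen size to the 38 cm viewing distance through the standard relation size = 2 · d · tan(θ/2). The sketch below converts in both directions; the helper names are hypothetical, and it assumes the viewing distance is measured to the center of the screen.

```python
import math

def size_for_angle(angle_deg, distance_cm):
    """On-screen extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def angle_for_size(size_cm, distance_cm):
    """Visual angle (deg) subtended by an extent of size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

face_cm = size_for_angle(7.0, 38.0)   # 200 x 200 pixel image spanning 7 deg
px_per_cm = 200 / face_cm
print(round(face_cm, 2), round(px_per_cm, 1))  # prints 4.65 43.0
```

Under these assumptions, the 200‐pixel images would have spanned roughly 4.65 cm on the screen, or about 43 pixels per cm.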

Figure 1.

Subjects made gender decisions on 40 face‐string sequences (20 male faces, 20 female faces) of blurred faces that became successively more recognizable. Blurring was achieved with a Gaussian filter of decreasing width within each sequence (a, left panel). Subjects were asked to indicate the gender of the face as soon as they had the impression that they could reliably identify it. The face‐string sequence stopped immediately after the response, or with the ninth picture of the sequence if no response was given. During the scrambled string, the same face was presented as a scrambled sequence of nine images with an equal stepwise decrease of the Gaussian filter. Ten short face‐string sequences were intermixed to prevent habituation to the long face‐string sequences and to keep subjects alert to the first picture of each sequence (a, right panel). Short strings were not included in the data analyses. (b) Behavioral results revealed that internal encoders made faster gender categorizations than external encoders (left panel). Mean accuracy did not differ significantly between internal and external encoders (right panel; error bars indicate the standard error of the mean [SEM]). [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

To create ambiguous facial images, each of the 50 facial stimuli was spatially low‐pass filtered with a two‐dimensional, circularly isotropic Gaussian filter of varying width, with σ denoting the standard deviation of the Gaussian. Filtering was applied stepwise (Δσ = 2) to the same facial stimulus, from extremely coarse (σ = 15) to fine (σ = 1), resulting in eight differently filtered images of each stimulus (σ = 15, 13, 11, 9, 7, 5, 3, 1). These images were presented in a “face‐string sequence” from extremely coarse to extremely fine filtering (see Fig. 1a). The experimental design included 40 long and 10 short face‐string sequences. Twenty male and 20 female facial stimuli were used for long face‐string sequences containing all filtering steps from extremely coarse to fine (see Fig. 1a, top left). The remaining 10 stimuli were used for short face‐string sequences (see Fig. 1a, top right), which contained only four filter steps, starting with intermediate filtering (σ = 7) and decreasing to fine filtering (σ = 1). These short sequences were included to prevent habituation to the long face‐string sequences and to maintain attention on the first image of each sequence. Note that in the long face‐string sequences, the first picture was always an extremely filtered facial image on which a correct gender decision was possible only at chance level. The randomly interspersed short sequences always started with an intermediately filtered image on which gender decisions were partly possible; subjects were therefore encouraged to attend to the first picture already in the short and, as a transfer effect, in the long sequences.
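A minimal sketch of the filtering schedule, assuming grayscale images stored as 2‐D arrays and σ expressed in pixels (the text does not state the unit). SciPy's `scipy.ndimage.gaussian_filter` with a scalar `sigma` applies a circularly isotropic Gaussian; its default border handling (`mode='reflect'`) is an assumption here, not a reported setting, and the helper names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def sigma_schedule(start=15, stop=1, step=2):
    """Filter widths from coarse to fine (default: 15, 13, ..., 1)."""
    return list(range(start, stop - 1, -step))

def face_string(image, sigmas):
    """One isotropically low-pass filtered copy of a 2-D grayscale image per sigma.

    Assumes sigma is in pixels; the default border mode ('reflect') is an assumption."""
    image = np.asarray(image, dtype=float)
    return [gaussian_filter(image, sigma=s) for s in sigmas]

long_sigmas = sigma_schedule()            # [15, 13, 11, 9, 7, 5, 3, 1]
short_sigmas = sigma_schedule(start=7)    # [7, 5, 3, 1]
frames = face_string(np.random.default_rng(0).random((200, 200)), long_sigmas)
```

Because stronger filtering removes more high‐frequency content, pixel variance falls as σ grows, so the first frame of each string is the least informative.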

Each image within a face‐string sequence was presented for 1,300 ms and was immediately followed by the next image. During both short and long face‐string sequences, subjects were asked to classify the gender as soon as they had the first subjective impression of being able to make a reliable decision. Subjects responded by pressing a button with either the right or the left index finger; button assignment was counterbalanced across subjects. The face‐string sequence ended immediately after the decision so that subjects received no feedback on the accuracy of their decision. If no response was given, the entire sequence of nine images was presented, with a red square superimposed on the last image to mark the end of the sequence and the beginning of the next. Each face sequence was followed by an intermediate sequence showing a scrambled version of the same face (8 × 8 pixel scramble), which was filtered identically to the facial images and presented in the same coarse‐to‐fine order (see Fig. 1a, bottom panel). During this period, a red square (8 × 8 pixels) appeared in the middle of the picture, and subjects were instructed to passively fixate on it. An enlarged red square superimposed on the ninth scrambled image announced a new face sequence. This scrambled sequence served as the baseline condition for the functional contrasts in the image analysis.
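Because images were replaced every 1,300 ms, a response time maps directly onto the filter level on screen at the moment of the decision. The sketch below assumes that response times are integers in milliseconds measured from the onset of the first image and that the long strings follow the eight‐step σ schedule described above; `image_at_response` is a hypothetical helper, and responses after the last filtered image are simply clamped to the finest step.

```python
SIGMAS = [15, 13, 11, 9, 7, 5, 3, 1]  # long face-string schedule, coarse -> fine
IMAGE_MS = 1300                        # presentation time per image (ms)

def image_at_response(rt_ms, sigmas=SIGMAS, image_ms=IMAGE_MS):
    """Return (1-based image number, sigma) on screen at response time rt_ms.

    Assumes rt_ms is an integer measured from the onset of the first image;
    later responses are clamped to the finest filter step."""
    idx = min(rt_ms // image_ms, len(sigmas) - 1)
    return idx + 1, sigmas[idx]

print(image_at_response(5850), image_at_response(6860))  # prints (5, 7) (6, 5)
```

Applied to the group mean response times reported under Behavioral Data (5.85 s and 6.86 s), this mapping would place the average internal encoder's decision on the fifth image (σ = 7) and the average external encoder's decision on the sixth (σ = 5). Group means are only a rough guide, since individual trials vary.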

The face images were presented in two blocks of 25 pictures, each containing a randomized sequence of male and female faces, with block order counterbalanced across subjects. Within each block, images appeared in random order with the constraint that no more than three faces of the same gender appeared in succession. At the beginning and end of each block, a gray square (200 × 200 pixels) appeared on the screen for 30 s.
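The ordering constraint can be met by simple rejection sampling: shuffle, check the longest run of same‐gender faces, and reshuffle if it exceeds three. This is one possible approach, not necessarily the procedure the authors used. The helper names and the 13/12 male/female split in the example are hypothetical, since the gender composition of each block is not reported.

```python
import random

def max_run(seq):
    """Length of the longest run of identical consecutive labels (seq non-empty)."""
    longest = current = 1
    for prev, cur in zip(seq, seq[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest

def constrained_shuffle(labels, max_repeat=3, seed=None, max_tries=10_000):
    """Shuffle labels until no label occurs more than max_repeat times in a row."""
    rng = random.Random(seed)
    order = list(labels)
    for _ in range(max_tries):
        rng.shuffle(order)
        if max_run(order) <= max_repeat:
            return order
    raise RuntimeError("no valid order found; relax the constraint")

block = ["M"] * 13 + ["F"] * 12   # hypothetical gender split for one block
order = constrained_shuffle(block, seed=1)
```

Rejection sampling keeps every valid order equally likely, at the cost of a few discarded shuffles per block.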

To localize face‐sensitive regions in extrastriate visual cortex, we used a face localizer task consisting of 18 blocks of rapid presentations of human faces, monkey faces, human hands, technical objects, fruits and vegetables, human bodies, and scrambled objects [see Tsao et al., 2006]. Blocks of faces and objects (200 × 200 pixels, 7° × 7°) alternated with blocks of scrambled versions of the same faces and objects (8 × 8 pixel scramble). Each image was presented for 500 ms and was immediately followed by the next; each block comprised 36 different faces or objects (18 s). Throughout the sequence, a red square (6 × 6 pixels) was presented in the middle of the screen, and subjects were instructed to fixate on it.

After the experiment, all participants took part in a post‐evaluation of all facial images used in the experiment, classifying the gender of each unfiltered image without time limit. This post‐experimental evaluation was performed to rule out the possibility that differences between internal and external encoders in the experiment could be attributed to general gender classification differences unrelated to the ambiguity manipulation.

Image Acquisition

Imaging data were acquired on a 3‐T SIEMENS Magnetom Allegra® system (Siemens, Erlangen, Germany) with a circularly polarized head coil, using a T2*‐weighted gradient‐echo echo‐planar imaging (EPI) sequence (28 contiguous axial slices aligned to the AC‐PC plane, slice thickness 4 mm, no gap, TR = 1.5 s, TE = 30 ms, FA = 73°, in‐plane resolution 3 × 3 mm, interleaved acquisition).
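With interleaved acquisition, spatially adjacent slices are sampled about half a TR apart, which is what the slice‐timing correction described under Image Analysis compensates for. The sketch below computes per‐slice acquisition onsets under two assumptions not stated in the text: an odd‐then‐even slice order (the actual order depends on the vendor and protocol) and slices spread evenly over the full TR. The helper names are hypothetical.

```python
import numpy as np

def interleaved_order(n_slices):
    """Assumed odd-then-even acquisition order (1-based slice numbers)."""
    return list(range(1, n_slices + 1, 2)) + list(range(2, n_slices + 1, 2))

def slice_times(n_slices=28, tr=1.5):
    """Acquisition onset (s) of each slice, indexed by spatial slice number - 1."""
    order = interleaved_order(n_slices)
    dt = tr / n_slices  # assumes slices are spread evenly over the whole TR
    times = np.empty(n_slices)
    for position, slice_no in enumerate(order):
        times[slice_no - 1] = position * dt
    return times

t = slice_times()
```

Slice 1 is acquired at 0 s and its spatial neighbour, slice 2, at 0.75 s under these assumptions, which is the half‐TR gap mentioned above.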

A high‐resolution T1‐weighted magnetization‐prepared rapid acquisition gradient echo (MPRAGE) sequence (176 contiguous slices, TR = 2.3 s, TE = 4.38 ms, TI = 900 ms, FA = 8°, FOV 296 × 296 mm, in‐plane resolution 1 × 1 mm, slice thickness 1 mm) was acquired in sagittal orientation to obtain structural images.

Image Analysis

We used the statistical parametric mapping software SPM5/8b (Wellcome Department of Cognitive Neurology, London, UK) for preprocessing and statistical analysis of the functional images. Functional images were first corrected for differences in slice acquisition time, using the middle slice as reference. After motion estimation, images were realigned to the tenth image of each data set. The anatomical images were coregistered to the functional images to estimate the warping parameters for normalizing the functional images to the Montreal Neurological Institute (MNI) stereotactic template brain. During normalization, the functional images were resampled to a voxel size of 2 × 2 × 2.66 mm³. Normalized images were spatially smoothed with a nonisotropic Gaussian kernel of FWHM 8 × 8 × 10.66 mm to reduce the effect of individual differences in brain anatomy and to increase the signal‐to‐noise ratio. Images were high‐pass filtered (cutoff 128 s) to remove low‐frequency signal drifts. A first‐order autoregressive model (AR(1)) was used to estimate temporal autocorrelations, with variance components estimated by restricted maximum likelihood.
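SPM specifies smoothing kernels by their full width at half maximum (FWHM); the standard deviation of a Gaussian is σ = FWHM / (2√(2 ln 2)) ≈ 0.4247 · FWHM. With the resampled voxel size above, each kernel dimension amounts to about four voxels FWHM. The sketch below uses `scipy.ndimage.gaussian_filter` as a stand‐in for SPM's own smoothing routine, so border handling and kernel truncation differ from SPM; the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # about 0.4247

def smoothing_sigma_vox(fwhm_mm, voxel_mm):
    """Per-axis Gaussian SD in voxels for a kernel given as FWHM in mm."""
    return tuple(f * FWHM_TO_SIGMA / v for f, v in zip(fwhm_mm, voxel_mm))

def smooth_volume(volume, fwhm_mm=(8.0, 8.0, 10.66), voxel_mm=(2.0, 2.0, 2.66)):
    """Anisotropic Gaussian smoothing of a 3-D volume (approximates SPM smoothing)."""
    sigma = smoothing_sigma_vox(fwhm_mm, voxel_mm)
    return gaussian_filter(np.asarray(volume, dtype=float), sigma=sigma)

sigma_vox = smoothing_sigma_vox((8.0, 8.0, 10.66), (2.0, 2.0, 2.66))
```

The resulting σ is about 1.70 voxels along every axis, so the anisotropic kernel in millimetres is effectively isotropic in voxel space.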

We defined boxcar functions on a trial‐by‐trial basis, starting at the onset of a face sequence and lasting until the response. These boxcar functions were convolved with a canonical hemodynamic response function (HRF). For the scrambled sequence following each facial image, a boxcar function of the same duration as the face sequence was aligned to the onset of the scrambled sequence. The four gray‐square periods at the beginning and end of each block were likewise modeled by 30 s boxcar functions convolved with the canonical HRF. Five experimental conditions were thus entered into a general linear model: two regressors for correct and incorrect responses, two regressors for the scrambled sequences corresponding to correctly and incorrectly classified facial images, and one regressor for the gray‐square periods. In addition, the six motion correction parameters were included in the design matrix as regressors of no interest to minimize false‐positive activations due to task‐correlated motion [Johnstone et al., 2006].
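A minimal sketch of how one such regressor can be built: trial‐wise boxcars from sequence onset to response are laid out on a fine time grid, convolved with a double‐gamma HRF, and sampled once per scan. The HRF below uses gamma densities with shape 6 and 16 and an undershoot ratio of 1/6, which resembles the shape of SPM's canonical HRF but is written here from scratch rather than taken from SPM. The function names, the 0.1 s grid, and the onsets in the example (with the group mean response times as durations) are illustrative assumptions.

```python
import numpy as np
from scipy.stats import gamma

def canonical_hrf(dt=0.1, length=32.0):
    """Double-gamma HRF (peak near 5 s, undershoot near 15 s), normalized to unit sum."""
    t = np.arange(0.0, length, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()

def boxcar_regressor(onsets, durations, n_scans, tr, dt=0.1):
    """Trial-wise boxcars (onset -> response) convolved with the HRF,
    sampled at the start of each scan."""
    n_fine = int(round(n_scans * tr / dt))
    box = np.zeros(n_fine)
    for onset, dur in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = int(round((onset + dur) / dt))
        box[start:max(stop, start + 1)] = 1.0
    conv = np.convolve(box, canonical_hrf(dt))[:n_fine]
    scan_idx = (np.arange(n_scans) * tr / dt).round().astype(int)
    return conv[scan_idx]

# hypothetical onsets (s); durations set to the group mean response times
reg = boxcar_regressor(onsets=[10.0, 40.0], durations=[5.85, 6.86], n_scans=100, tr=1.5)
```

Modeling each trial's duration up to the response lets longer decision periods, as expected for external encoders, produce correspondingly larger and broader predicted responses.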

First‐level linear contrasts were calculated for each subject by comparing activation during the face sequences with activation during the scrambled sequences. These individual contrasts were entered into a second‐level random effects analysis, using a two‐sample t‐test to assess activation differences between internal and external encoders. Statistical maps were thresholded at a voxel‐level threshold of P < 0.001 combined with a cluster extent threshold of k = 7 voxels.
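The second‐level thresholding combines a voxel‐wise P cutoff with a minimum cluster size. The sketch below uses SciPy's `stats.ttest_ind` for the group comparison and `ndimage.label` for cluster labelling. It assumes a one‐tailed threshold for each directional group contrast, df = 12 + 12 − 2 = 22, and 6‐neighbour (face) connectivity, which is SciPy's default; SPM's own cluster definition may use a different neighbourhood. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage, stats

def group_t_map(con_ie, con_ee):
    """Voxel-wise two-sample t-test; inputs are (n_subjects, x, y, z) contrast maps."""
    t, _ = stats.ttest_ind(con_ie, con_ee, axis=0)  # pooled-variance t-test
    return t

def cluster_threshold(t_map, df, p_voxel=0.001, k=7):
    """Keep supra-threshold voxels (one-tailed p < p_voxel) in clusters of >= k voxels."""
    t_crit = stats.t.isf(p_voxel, df)
    labels, _ = ndimage.label(t_map > t_crit)  # 6-neighbour connectivity by default
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0                               # label 0 is background
    return sizes[labels] >= k, t_crit

rng = np.random.default_rng(0)
t_map = group_t_map(rng.standard_normal((12, 4, 4, 4)), rng.standard_normal((12, 4, 4, 4)))
```

For the reverse contrast (external > internal encoders), the same function can be applied to the negated t map.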

We identified extrastriate visual regions involved in face perception with a face localizer task. Blocks of faces and objects were modeled by 18 s boxcar functions convolved with a canonical HRF. Activation during face blocks was contrasted against activation during object blocks, and contrasts were thresholded at a voxel‐level threshold of P < 0.005 combined with a cluster extent of k = 7. To determine the individual locations of the fusiform and occipital face areas, we analyzed activation in the fusiform gyrus and the inferior occipital gyrus at the coordinates reported in previous studies [Kanwisher et al., 1997; Rossion et al., 2003]. Individual regions of interest (ROIs) were defined as 3 mm radius spheres around the peak activations in the fusiform and inferior occipital gyrus. In addition, 3 mm ROIs were defined in the right insula (INS) and the medial frontal gyrus (MeFG).
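A spherical ROI is simply the set of voxels whose centres lie within the radius of the peak. The sketch below assumes the peak is given in voxel indices and that the ROI is drawn on images with the resampled voxel size of 2 × 2 × 2.66 mm; both are assumptions, and the helper names are hypothetical.

```python
import numpy as np

def sphere_mask(shape, center_vox, radius_mm=3.0, voxel_mm=(2.0, 2.0, 2.66)):
    """Boolean mask of voxels whose centres lie within radius_mm of center_vox."""
    grids = np.indices(shape)
    dist2 = sum(((g - c) * v) ** 2 for g, c, v in zip(grids, center_vox, voxel_mm))
    return dist2 <= radius_mm ** 2

def roi_mean(data_4d, mask):
    """Mean time course over ROI voxels; data_4d has shape (x, y, z, time)."""
    return data_4d[mask].mean(axis=0)

mask = sphere_mask((20, 20, 20), (10, 10, 10))
```

With these voxel dimensions, a 3 mm sphere contains only 11 voxels: the peak voxel, its six face neighbours, and the four in‐plane diagonal neighbours. ROI time courses are therefore dominated by the immediate vicinity of each individual peak.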

We expected internal encoders to impose face‐gender schemata more rapidly onto incoming ambiguous information. To analyze BOLD time courses within the ROIs assumed to represent face‐gender schemata, we extracted BOLD signal changes in time bins of one TR for each face string, time‐locked to the onset of the first picture of each face‐string sequence, using a finite impulse response (FIR) model. Group differences in the time courses were tested separately for each time bin.
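An FIR model estimates one free parameter per post‐onset time bin instead of assuming an HRF shape, which is what makes latency differences between groups visible. The sketch below assumes onsets rounded to whole scans and an illustrative number of bins; SPM's FIR implementation differs in detail. The subject‐wise bin estimates could then be compared between groups bin by bin, for example with `scipy.stats.ttest_ind(..., axis=0)`. The helper names are hypothetical.

```python
import numpy as np

def fir_design(onset_scans, n_scans, n_bins):
    """One column per post-onset time bin (one TR each), plus a constant column."""
    X = np.zeros((n_scans, n_bins + 1))
    for onset in onset_scans:
        for b in range(n_bins):
            if onset + b < n_scans:
                X[onset + b, b] = 1.0
    X[:, -1] = 1.0  # constant
    return X

def fir_estimate(y, onset_scans, n_bins):
    """Least-squares FIR estimates of the mean response in each time bin."""
    X = fir_design(onset_scans, len(y), n_bins)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[:n_bins]

# noise-free simulation with a hypothetical response shape
true_resp = np.array([0.0, 0.5, 2.0, 3.0, 2.0, 1.0, 0.3, 0.0, 0.0, 0.0])
onsets = list(range(5, 195, 20))
y = np.full(200, 10.0)
for o in onsets:
    y[o:o + 10] += true_resp
est = fir_estimate(y, onsets, n_bins=10)
```

In this simulation the trials do not overlap, so the estimates recover the simulated response exactly; with overlapping trials, the least‐squares fit separates the contributions of neighbouring trials.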

Psychophysiological Interaction

Activation in the fusiform gyrus is associated with the detection of relevant facial information, which is fed forward to other brain regions involved in response control. To examine the functional coupling of the fusiform gyrus during the face and scrambled sequences, we extracted the time course of activation in the FFA within a 3 mm radius sphere around the peak activation taken from the face localizer scan. To search for other brain regions whose activity correlates with the FFA time course, we deconvolved the time courses and multiplied them with a “psychological variable” defined as the contrast between the face and the scrambled sequences. These data were then entered into a psychophysiological interaction (PPI) analysis [Friston et al., 1997], with the psychological variable and the deconvolved time course as additional regressors of no interest. Including these regressors ensures that the resulting functional activation is determined solely by the interaction between a physiological variable (time course in the seed region) and a psychological variable (face sequence > scrambled sequence). Individual PPI results were again entered into a second‐level random effects analysis using a two‐sample t‐test with group (internal vs. external encoders) as the between‐subject factor. Statistical maps were thresholded at a voxel‐level threshold of P < 0.05 (FWE corrected) with a cluster extent of k = 7 for the analysis of the entire sample, and at P < 0.001 with k = 7 for the group comparison.
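The PPI regressor is the product of the seed's neural‐level signal and the psychological variable, re‐convolved with the HRF. The sketch below is a simplified illustration, not SPM's implementation: it assumes a neural‐level seed estimate is already available (the deconvolution step itself is not implemented), works at TR resolution rather than on a finer time grid, uses the seed BOLD series as the physiological nuisance regressor, and codes the psychological variable as +1 for face and −1 for scrambled scans. The double‐gamma HRF and all function names are hypothetical.

```python
import numpy as np
from scipy.stats import gamma

def hrf_at_tr(tr, length=32.0):
    """Double-gamma HRF sampled once per TR, normalized to unit sum."""
    t = np.arange(0.0, length, tr)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()

def ppi_design(seed_bold, seed_neural, psy, tr):
    """Columns: [PPI interaction, psychological, physiological, constant]."""
    n = len(seed_bold)
    h = hrf_at_tr(tr)
    ppi = np.convolve(seed_neural * psy, h)[:n]   # interaction formed at neural level
    psy_conv = np.convolve(psy, h)[:n]
    return np.column_stack([ppi, psy_conv, seed_bold, np.ones(n)])

def ppi_beta(voxel_ts, design):
    """Least-squares weight of the interaction regressor for one voxel."""
    beta, *_ = np.linalg.lstsq(design, voxel_ts, rcond=None)
    return beta[0]

# noise-free simulation: a voxel whose coupling with the seed depends on condition
rng = np.random.default_rng(0)
n, tr = 200, 1.5
neural = rng.standard_normal(n)
psy = np.tile(np.r_[np.ones(10), -np.ones(10)], 10)
bold = np.convolve(neural, hrf_at_tr(tr))[:n]
X = ppi_design(bold, neural, psy, tr)
y = 2.0 * X[:, 0] + 0.5 * X[:, 1] + 1.0 * X[:, 2] + 3.0
```

Because the psychological and physiological main effects are in the model, the interaction weight reflects only the condition‐dependent change in coupling with the seed.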

RESULTS

Behavioral Data

Internal encoders responded significantly faster during the face‐string sequences (IE: M = 5.85 s, SEM = 0.29; EE: M = 6.86 s, SEM = 0.27; F(1,22) = 6.54, P = 0.028), irrespective of the correctness of the response (F(1,22) = 1.649, P = 0.213). There was no interaction between encoding style and response correctness (F(1,22) = 0.307, P = 0.585). Performance accuracy did not differ between internal and external encoders (IE: M = 79.16%, SEM = 3.20; EE: M = 84.83%, SEM = 2.53; t(22) = 0.816, P = 0.423) (see Fig. 1b).

Imaging Data

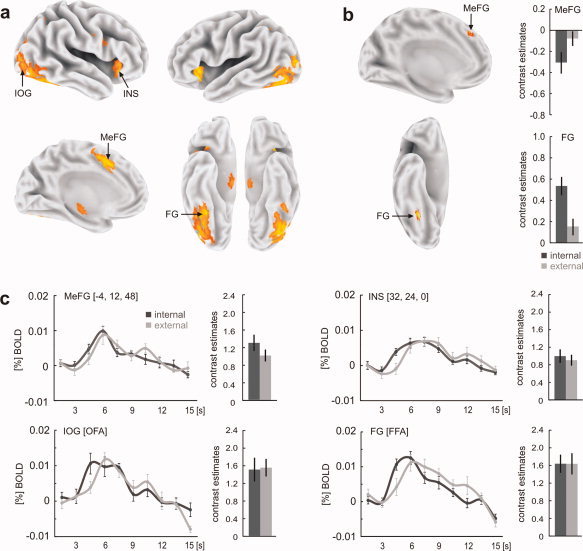

In a first step, we compared functional activations during the face‐string sequence with activations during the sequence of scrambled pictures across the whole group of subjects (see Fig. 2a and Table I). This comparison revealed an activation pattern comprising medial (BA 6, 32) and right lateral inferior frontal brain regions (BA 44), as well as the bilateral anterior insula extending into the lentiform nuclei. Additional activations were found in the superior and medial parietal cortex (BA 7), in extrastriate regions along the ventral processing stream in the middle and inferior occipital cortex (BA 18, 19), and in the right fusiform gyrus (BA 37). Subcortical activations were located in the dorso‐medial and inferior thalamic regions and the right tectum. Internal encoders showed significantly higher activation than external encoders in the right mid‐fusiform gyrus (BA 37; t(22) = 4.769, P < 0.001), whereas external encoders showed more activation in the left medial frontal cortex (BA 8; t(22) = 2.606, P = 0.016) (see Fig. 2b and Table Ib,c).

Figure 2.

(a) Comparing functional activations during the face‐string sequence with those during the scrambled sequence, irrespective of encoding style, revealed a distributed activation pattern including the inferior occipital gyrus (IOG), fusiform gyrus (FG), anterior insular cortex (INS), and medial frontal gyrus (MeFG). (b) Internal encoders showed higher activation in the right FG, whereas external encoders showed higher activation in the MeFG. (c) Individual face‐responsive regions in extrastriate cortex were determined using functional face localizer scans. Across all subjects, we found right hemispheric regions in the inferior occipital gyrus and the fusiform gyrus that most probably represent the occipital face area (OFA) and the fusiform face area (FFA), respectively. Although neither area showed a significant difference in activation between internal and external encoders, the time course of the BOLD response in both areas revealed an earlier peak latency in the group of internal encoders. Functional contrasts were rendered on the human Colin atlas implemented in the CARET software [Van Essen et al., 2001]. Coordinates refer to MNI space; error bars indicate SEM. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Table I.

Regions of significant activation in the comparison between the face‐string sequence and the scrambled string

| Region | BA | MNI coordinates (x, y, z) | t | Cluster size |
|---|---|---|---|---|
| (a) face‐string > scrambled string | | | | |
| L medial frontal gyrus | 6 | −4, 12, 48 | 6.46 | 853 |
| R medial frontal gyrus | 6 | 0, 2, 53 | | |
| R cingulate gyrus | 32 | 8, 18, 43 | | |
| R inferior frontal gyrus | 44 | 52, 8, 27 | 5.59 | 125 |
| L superior parietal lobule | 7 | −26, −64, 51 | 5.17 | 27 |
| R superior parietal lobule | 7 | 32, −56, 51 | 5.05 | 12 |
| L precuneus | 7 | −26, −52, 48 | 4.82 | 7 |
| R precuneus | 7 | 22, −62, 37 | 4.96 | 16 |
| L insula | | −30, 22, 3 | 6.74 | 219 |
| R insula | | 32, 24, 0 | 6.37 | 293 |
| R fusiform gyrus | 37 | 38, −48, −16 | 7.08 | 1,733 |
| L middle occipital gyrus | 18 | −32, −90, 13 | 6.36 | 1,487 |
| | 18 | −32, −82, 8 | | |
| R middle occipital gyrus | 19 | 46, −74, −8 | | |
| | 19 | 34, −80, 5 | | |
| L inferior occipital gyrus | 18 | −40, −78, −11 | | |
| R tectum | | 6, −28, −11 | 6.84 | 836 |
| R subthalamic nucleus | | 8, −14, −3 | | |
| L thalamus (dm) | | −2, −14, −8 | | |
| L lentiform nucleus | | −14, 0, −5 | 5.05 | 9 |
| R lentiform nucleus | | 16, 6, −5 | 5.21 | 69 |
| (b) internal encoders > external encoders | | | | |
| R fusiform gyrus | 37 | 38, −48, −13 | 5.04 | 44 |
| (c) external encoders > internal encoders | | | | |
| L medial frontal gyrus | 8 | −2, 36, 48 | 2.97 | 12 |

Functional activations in correctly classified trials (a) for the entire sample thresholded at P < 0.05 (FWE corrected) and k = 7, and for the comparison of (b) internal against external and (c) external against internal encoders thresholded at P < 0.001 (uncorrected) and k = 7.
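The thresholds above combine a voxel‐level significance criterion with a minimum cluster extent of k = 7 voxels. The extent step can be sketched as a connected‐component filter over a 3‐D statistic map. This sketch assumes the voxel‐level P value has already been converted to a critical t value (`t_crit`, supplied by the caller) and uses 6‐neighbour (face‐sharing) connectivity; the software used in the study may define neighbourhoods differently, and the function name is hypothetical.

```python
from collections import deque

import numpy as np

NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_extent_mask(stat_map, t_crit, k=7):
    """Boolean mask of voxels with stat > t_crit lying in clusters of >= k voxels.

    Clusters are 6-connected (face-sharing neighbours), an assumption.
    """
    supra = np.asarray(stat_map) > t_crit
    visited = np.zeros(supra.shape, dtype=bool)
    keep = np.zeros(supra.shape, dtype=bool)
    for start in zip(*np.nonzero(supra)):
        if visited[start]:
            continue
        visited[start] = True
        queue, members = deque([start]), [start]
        while queue:  # breadth-first flood fill of one cluster
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, supra.shape))
                if inside and supra[nb] and not visited[nb]:
                    visited[nb] = True
                    queue.append(nb)
                    members.append(nb)
        if len(members) >= k:
            for voxel in members:
                keep[voxel] = True
    return keep


# Toy statistic map (hypothetical values)
rng = np.random.default_rng(1)
stat = rng.standard_normal((20, 20, 20))
stat[5:8, 5:8, 5:8] = 6.0    # 27-voxel cluster: survives k = 7
stat[15, 15, 15:18] = 6.0    # 3-voxel cluster: removed by k = 7
mask = cluster_extent_mask(stat, t_crit=3.5, k=7)
```

Isolated suprathreshold noise voxels are discarded together with the small cluster, which is the purpose of the extent criterion.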

Face‐sensitive extrastriate regions were defined for each subject with the face localizer described above. Whereas the face localizer revealed left hemispheric face‐sensitive regions in the inferior occipital gyrus (n = 9 subjects) and the fusiform gyrus (n = 12 subjects) in only some subjects, we consistently found right hemispheric activation in the inferior occipital and fusiform gyri, closely corresponding to the OFA and FFA, in every subject (see Table II). Further data analysis was therefore restricted to the right hemispheric face‐sensitive regions. Although the BOLD signal did not differ significantly between internal and external encoders in the right OFA (t(22) = 0.049, P = 0.962) or the right FFA (t(22) = 0.226, P = 0.823), internal encoders showed an earlier peak latency in both the OFA and FFA (see Fig. 2c). Comparing the BOLD signal time course between internal and external encoders within each TR time bin, we found a significantly higher signal for internal encoders in the third time bin (3–4.5 s) in the OFA (t(22) = 2.086, P = 0.049) and FFA (t(22) = 2.385, P = 0.026). The same effect was present in the right insular cortex (t(22) = 2.582, P = 0.017) but not in the MeFG region (P < 0.124).
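The peak‐latency and bin‐wise comparisons above can be sketched as follows. The TR of 1.5 s is inferred from the third bin spanning 3–4.5 s; the parabolic refinement of the peak and the use of bin centres are assumed choices (the text does not state how latency was estimated), and the function names and example time courses are hypothetical.

```python
import numpy as np

TR = 1.5  # seconds; inferred from "third time bin (3–4.5 s)"


def peak_latency(timecourse, tr=TR):
    """Peak time (s) of a binned time course, refined by a 3-point parabolic fit.

    Bin i spans [i*tr, (i+1)*tr); its centre is used as the sample time.
    """
    y = np.asarray(timecourse, dtype=float)
    i = int(np.argmax(y))
    offset = 0.0
    if 0 < i < len(y) - 1:
        y0, y1, y2 = y[i - 1], y[i], y[i + 1]
        denom = y0 - 2.0 * y1 + y2
        if denom != 0:
            offset = 0.5 * (y0 - y2) / denom  # vertex of the fitted parabola
    return (i + 0.5 + offset) * tr


def binwise_t(group_a, group_b):
    """Pooled-variance two-sample t per time bin; inputs are (subjects, bins)."""
    a, b = np.asarray(group_a, float), np.asarray(group_b, float)
    na, nb = len(a), len(b)
    pooled = ((na - 1) * a.var(axis=0, ddof=1) + (nb - 1) * b.var(axis=0, ddof=1)) / (na + nb - 2)
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(pooled * (1 / na + 1 / nb))


# Hypothetical group-average time courses (arbitrary units)
early = [0.0, 0.4, 1.0, 0.8, 0.3]
late = [0.0, 0.2, 0.7, 1.0, 0.5]
print(peak_latency(early), peak_latency(late))
```

With 12 subjects per group, `binwise_t` yields one t value with 22 degrees of freedom per bin, the form of the statistics reported for the third bin.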

Table II.

Results of the face localizer scan

| Subject | OFA (MNI x, y, z) | FFA (MNI x, y, z) |
|---|---|---|
| Internal encoders | | |
| 1 | 46, −80, −3 | 42, −56, −24 |
| 2 | 38, −92, −13 | 36, −62, −19 |
| 3 | 44, −74, −13 | 46, −64, −24 |
| 4 | 42, −86, −11 | 46, −66, −19 |
| 5 | 42, −88, −8 | 42, −54, −19 |
| 6 | 38, −82, −8 | 48, −54, −28 |
| 7 | 40, −86, −8 | 42, −50, −24 |
| 8 | 44, −82, −11 | 40, −64, −19 |
| 9 | 44, −76, −16 | 42, −50, −24 |
| 10 | 46, −82, −11 | 42, −54, −21 |
| 11 | 48, −74, −8 | 40, −54, −19 |
| 12 | 46, −80, −11 | 44, −48, −16 |
| External encoders | | |
| 1 | 46, −84, −11 | 38, −64, −19 |
| 2 | 50, −74, −8 | 40, −68, −14 |
| 3 | 42, −80, −19 | 40, −54, −31 |
| 4 | 46, −74, −13 | 48, −54, −24 |
| 5 | 44, −74, −11 | 40, −46, −21 |
| 6 | 48, −76, −13 | 46, −52, −24 |
| 7 | 42, −80, −11 | 44, −42, −24 |
| 8 | 38, −86, −21 | 44, −44, −24 |
| 9 | 48, −70, −14 | 38, −56, −19 |
| 10 | 48, −82, −8 | 48, −62, −16 |
| 11 | 48, −76, −11 | 42, −60, −19 |
| 12 | 40, −74, −11 | 42, −54, −21 |

MNI coordinates of right hemispheric regions in extrastriate cortex that showed significant activation (P < 0.005, uncorrected) for each subject in the comparison of blocks containing human or monkey faces with blocks containing other objects such as hands, bodies, fruits, or technical devices. We consistently found activations in the inferior occipital gyrus, most likely reflecting the occipital face area (OFA), and in the fusiform gyrus, most likely reflecting the fusiform face area (FFA).
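The individual FFA peaks listed in Table II served as centres for the 3 mm seed spheres used in the PPI analysis. The sketch below summarizes each group's FFA peaks (centroid and mean distance from it) and enumerates voxel centres within a 3 mm sphere around a peak. The 2 mm voxel grid centred on the peak is an assumption, and the function names are hypothetical.

```python
import numpy as np

# Right-hemisphere FFA peaks from Table II (MNI x, y, z in mm)
ffa_internal = np.array([
    [42, -56, -24], [36, -62, -19], [46, -64, -24], [46, -66, -19],
    [42, -54, -19], [48, -54, -28], [42, -50, -24], [40, -64, -19],
    [42, -50, -24], [42, -54, -21], [40, -54, -19], [44, -48, -16],
])
ffa_external = np.array([
    [38, -64, -19], [40, -68, -14], [40, -54, -31], [48, -54, -24],
    [40, -46, -21], [46, -52, -24], [44, -42, -24], [44, -44, -24],
    [38, -56, -19], [48, -62, -16], [42, -60, -19], [42, -54, -21],
])


def summarize_peaks(coords):
    """Group centroid and mean Euclidean distance (mm) of the peaks from it."""
    coords = np.asarray(coords, dtype=float)
    centroid = coords.mean(axis=0)
    return centroid, np.linalg.norm(coords - centroid, axis=1).mean()


def sphere_voxels(peak, radius_mm=3.0, voxel_mm=2.0):
    """Voxel centres within radius_mm of `peak` on a cubic grid centred on the peak
    (the 2 mm grid is an assumption)."""
    n = int(radius_mm // voxel_mm)
    steps = np.arange(-n, n + 1) * voxel_mm
    grid = np.stack(np.meshgrid(steps, steps, steps, indexing="ij"), axis=-1).reshape(-1, 3)
    return np.asarray(peak, dtype=float) + grid[np.linalg.norm(grid, axis=1) <= radius_mm]


for name, peaks in (("internal", ffa_internal), ("external", ffa_external)):
    centroid, spread = summarize_peaks(peaks)
    print(name, np.round(centroid, 1), round(float(spread), 1))
print(len(sphere_voxels(ffa_internal[0])), "voxels in a 3 mm seed sphere")
```

On a 2 mm grid, a 3 mm radius keeps the centre voxel plus its face and edge neighbours but excludes the corner voxels (distance ≈ 3.46 mm), so each seed contains only a handful of voxels.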

PPI Analysis

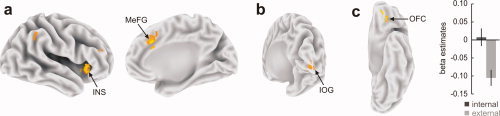

The individually determined cortical regions most likely representing the FFA were used as seed regions for a PPI analysis, which aimed to identify other brain regions whose activity was closely associated with activity in the FFA. Brain regions showing positive functional coupling with FFA activity were found in the right medial (BA 8, 32) and lateral frontal cortices (BA 46), as well as in the right insular cortex (BA 13) and inferior parietal cortex (BA 40) (Fig. 3a, Table IIIa). Negative coupling was found in the right inferior occipital cortex (BA 18), the left posterior cingulate cortex (BA 23), and the left superior frontal gyrus (BA 9) (Fig. 3b, Table IIIb).

Figure 3.

The functional connectivity analysis using the PPI approach (P < 0.001, k = 7) with the fusiform face area (FFA) as the seed region revealed (a) significant positive associations of activity in the right insula and medial frontal cortex with activity in the FFA during the face‐string compared with the scrambled sequence. (b) A negative relationship to activity in the FFA was found in the right inferior occipital gyrus. (c) Compared with external encoders, internal encoders showed higher functional connectivity of the orbito‐frontal cortex (OFC) with activity in the FFA during the face‐string sequence. The left panel shows the left hemisphere from a ventral view with the activation cluster in the left OFC. The right panel shows the corresponding beta estimates in the OFC, where internal encoders showed a higher signal than external encoders. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Table III.

Regions of significant activation for the PPI analysis

| Region | BA | MNI coordinates (x, y, z) | t | Cluster size |
|---|---|---|---|---|
| (a) face‐string > scrambled string | | | | |
| R medial frontal gyrus/cingulate gyrus | 8/32 | 8, 24, 37 | 11.01 | 265 |
| R middle frontal gyrus | 46 | 44, 40, 24 | 6.78 | 30 |
| R insula | 13 | 40, 18, 3 | 9.76 | 208 |
| R inferior parietal lobule | 40 | 54, −46, 45 | 7.42 | 68 |
| (b) scrambled string > face‐string | | | | |
| R inferior occipital gyrus | 18 | 18, −98, 3 | 7.98 | 78 |
| L cingulate gyrus | 23 | −6, −60, 13 | 7.25 | 93 |
| L superior frontal gyrus | 9 | −10, 50, 40 | 6.98 | 59 |
| (c) internal encoders > external encoders | | | | |
| L medial orbital gyrus | 11 | −18, 32, −8 | 5.09 | 202 |

Individual face‐responsive regions in the right fusiform gyrus, most likely representing the fusiform face area, were defined as seed regions. The table lists regions showing (a) a positive and (b) a negative association with activation in the FFA, thresholded at P < 0.05 (FWE corrected) and k = 7, and (c) the comparison of internal against external encoders, thresholded at P < 0.001 (uncorrected) and k = 7.

A region in the orbito‐frontal cortex (OFC) showed an interaction between encoding style and functional connectivity with the FFA when comparing the face‐string and scrambled sequences. Specifically, internal encoders showed significantly stronger functional coupling between the FFA and the OFC (t(22) = 3.837, P = 0.001) (Fig. 3c, Table IIIc). External encoders showed no significantly higher coupling between the FFA and any other brain region relative to internal encoders.

DISCUSSION

In this experiment, two groups of subjects viewed a series of male and female faces. Each face was arranged in a face‐string sequence of filtered images gradually changing from coarse (high sensory ambiguity) to fine filtering (low sensory ambiguity). During this face‐string sequence, sensory stimulus information necessary for a gender classification task was progressively increased. We expected that this sequence of facial images would differentially stimulate individual “Schema Instantiation Thresholds” (SIT) [Lewicki, 2005] for the application of primary face‐gender schemata [Akrami et al., 2009; Liu and Jagadeesh, 2008]. We found strong behavioral and functional evidence that internal encoders in contrast with external encoders have a lower SIT and that this lower SIT triggers faster gender decisions even in conditions of high sensory ambiguity. An external encoding style was associated with delayed responses, indicating a higher SIT. In this case, gender decisions were more strongly based on controlled and detailed stimulus processing through accumulating sensory evidence.
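The coarse‐to‐fine structure of the face‐string sequence can be illustrated with a Gaussian low‐pass filter whose bandwidth grows from frame to frame. This is only an illustration: the filter shape, the bandwidths in cycles per image, the random stand‐in image, and the function names are assumptions, and the filtering actually used to build the stimuli may differ.

```python
import numpy as np


def gaussian_lowpass(image, sigma_cpi):
    """Attenuate spatial frequencies with a Gaussian transfer function whose
    standard deviation is `sigma_cpi` cycles per image (assumed filter shape)."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    fy = np.fft.fftfreq(h) * h  # vertical frequency, cycles per image
    fx = np.fft.fftfreq(w) * w  # horizontal frequency, cycles per image
    radius = np.hypot(fy[:, None], fx[None, :])
    transfer = np.exp(-0.5 * (radius / sigma_cpi) ** 2)
    return np.real(np.fft.ifft2(np.fft.fft2(img) * transfer))


def coarse_to_fine_sequence(image, sigmas=(2, 4, 8, 16, 32)):
    """Frames ordered from coarse (high ambiguity) to fine (low ambiguity)."""
    return [gaussian_lowpass(image, s) for s in sorted(sigmas)]


rng = np.random.default_rng(3)
face = rng.random((64, 64))  # stand-in for a face photograph
sequence = coarse_to_fine_sequence(face)
# Distance to the unfiltered image shrinks as more spatial detail is admitted
errors = [float(np.linalg.norm(frame - face)) for frame in sequence]
print([round(e, 2) for e in errors])
```

Because the transfer function at every nonzero frequency increases with the bandwidth, each successive frame is closer to the original image, i.e., sensory evidence accumulates monotonically across the sequence.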

This difference between internal and external encoders in imposing primary face‐gender schemata was also confirmed by the imaging data, which revealed both common and differential activation patterns for the two encoding style groups. In both groups, activations were found in the occipital and fusiform face areas. These regions are thought to classify incoming sensory information according to the primary face‐gender schema [Gauthier et al., 2000; Steeves et al., 2006]. Activations were also found in the dorsal medial frontal and insular cortices. These regions most likely represent secondary schemata temporarily generated during perceptually ambiguous stimulation and decisional uncertainty [Grinband et al., 2006; Huettel et al., 2005; Li et al., 2009; Ridderinkhof et al., 2004; Summerfield et al., 2006a, b; Zhang et al., 2008]. However, apart from these common activations, internal and external encoders also differed in functional activation in brain areas most likely representing the differential application of primary and secondary schemata. Compared with external encoders, internal encoders showed higher activation in the fusiform gyrus and significantly earlier peak BOLD latencies in the OFA and the FFA. This finding might indicate a coarser but more rapid gender classification process according to the primary face‐gender schemata. Although the BOLD signal has rather poor temporal resolution, differences in BOLD peak latencies have been shown to be a reliable temporal index of perceptual inferences during sensory ambiguity in the range of several hundred milliseconds [Sterzer and Kleinschmidt, 2007].

External encoders showed higher activation in the medial frontal cortex, a brain area reported to underlie the formation and representation of secondary schemata under enhanced task‐specific uncertainty [Isoda and Hikosaka, 2007; Ridderinkhof et al., 2004]. These secondary schemata might act as a temporary supervisory control instance that adjusts the brain system toward accurate gender classification [Isoda and Hikosaka, 2007; Ridderinkhof et al., 2004], based on a more controlled application of the primary face‐gender schemata in posterior brain regions. In this study, the formation of secondary schemata occurred in the context of perceptual ambiguity leading to decisional uncertainty. The processing of decisional uncertainty seems to be associated with activity in the dorsal medial frontal cortex [Grinband et al., 2006; Huettel et al., 2005; Li et al., 2009; Ridderinkhof et al., 2004; Zhang et al., 2008]. We assume that external encoders base their gender classifications more strongly on accumulated sensory evidence, as indicated by their longer reaction times, and that this accumulation is persistently accompanied by increased decisional uncertainty, as indicated by increased medial frontal activity. The latter, in turn, might have further increased the need to accumulate sensory evidence.

We note that although internal and external encoders differed significantly in activation during gender decisions in the face‐string task, these differences might partly be attributable to the total time taken to perform the task, since response times differed significantly between groups. The BOLD response was modeled from the onset of each face‐string sequence until the individual response. Longer reaction times in external encoders might therefore imply longer activation of the underlying brain regions, which could produce higher functional activation and, consequently, functional differences between groups. However, several factors suggest that this may not be the case. First, internal encoders made faster gender decisions but still showed higher functional activation in the fusiform gyrus than external encoders. Second, face‐string sequences were contrasted with corresponding scrambled sequences that were modeled with the same length as the face‐string sequence on a trial‐by‐trial basis; this contrast should partly eliminate the effect of time‐on‐task within and across groups. Finally, longer stimulus durations or response latencies have been shown to affect the width rather than the height of the BOLD response [see, for example, Menon and Kim, 1999], suggesting that differences in the height of BOLD activations between internal and external encoders are largely unaffected by stimulus duration.

Taken together, the present data indicate that internal encoders made faster gender decisions based on less sensory evidence and a more rapid application of the primary face‐gender schemata, whereas external encoders based their decisions more strongly on sensory evidence, presumably using a more controlled and detailed processing strategy under stronger top‐down control. This first conclusion about differences in encoding style during recognition of ambiguous images was further corroborated by differences in the functional connectivity of the FFA, a central region for face processing and for the application of primary face‐gender schemata. A PPI analysis [Friston et al., 1997] with the FFA as seed region revealed a positive association of the FFA signal with activity in the medial frontal cortex and right insula, in addition to a negative association with activity in the OFA. These functional connectivity data are consistent with the common functional activations but additionally suggest a specific functional relation of the FFA with other brain regions active during the face‐string sequence. More specifically, internal encoders showed significantly higher functional coupling of the orbito‐frontal cortex with FFA activity. The orbito‐frontal cortex has recently been reported to be active during different types of object classification tasks under perceptual uncertainty [for reviews, see Fenske et al., 2006; Kveraga et al., 2007b]. Based on low spatial frequency information, the OFC might initially form predictions about the most probable gender category to which an ambiguous facial stimulus belongs [Kveraga et al., 2007b]. Apart from these predictions, a second function of the OFC might relate to successful object recognition [Fenske et al., 2006].
In this case, success is primarily determined by the match between the percept and internal predictions and expectations rather than external sensory criteria [Kveraga et al., 2007b], as seen, for example, during illusory face perception [Li et al., 2009]. Higher functional coupling of the OFC to seed activity in the FFA might indicate both a rapid formation of gender classification predictions and subjectively reliable gender recognition in the group of internal encoders. In external encoders, weaker OFC coupling combined with the increased medial frontal activity discussed above might indicate greater perceptual and decisional uncertainty. Such increased task‐specific uncertainty is presumably formed and represented as secondary schemata in frontal brain regions, which should dynamically interact with the primary face‐gender schemata in posterior brain regions to support detailed stimulus processing.

Altogether, the present data provide strong evidence for different neural encoding dynamics during ambiguous visual stimulation in internally and externally encoding participants. The internal encoding style is primarily based on a fast application of the primary schemata for object classification in response to high sensory ambiguity, with apparently very little verification of whether the schemata actually match the sensory input. These fast classifications seem to be accompanied by less decisional uncertainty about successful object recognition. The external encoding style, on the other hand, involves a higher degree of decisional uncertainty and less subjective confidence in successful object recognition. This uncertainty seems to be represented as a secondary task‐specific schema, which adjusts the brain system toward more controlled stimulus processing based on accumulated sensory evidence.

In conclusion, the present data show that, apart from the sensory properties of the stimulus and top‐down factors such as expectations and predictions, individual differences also affect the processing of visual information through the collaboration of extrastriate and frontal brain areas. Here, individual differences were operationalized in terms of differences in “encoding styles” [Lewicki, 2005]. The current data demonstrate that the primary object schemata of some individuals (internal encoders) exert a larger and more immediate influence on perception than those of other individuals (external encoders). Models assuming that visual recognition results from the interplay of bottom‐up and top‐down functional pathways [Fenske et al., 2006; Mesulam, 2008] thus appear to be partly incomplete. This interplay seems to be controlled not only by situational factors and task conditions [Li et al., 2009; Zhang et al., 2008], but also to a considerable degree by individual characteristics, such as encoding style acting as a moderator variable.

Supporting information

Additional Supporting Information may be found in the online version of this article.


Acknowledgements

The authors thank Michael Koch, Canan Basar‐Eroglu, and Klaus Pawelzik for their help during the screening procedure, which gave them access to a wide range of student populations. Mikhael Babanin was involved in the preparation of the study. The authors also thank Sebastian Möller for providing support with the face localizer sequence.

REFERENCES

- Akrami A, Liu Y, Treves A, Jagadeesh B (2009): Converging neuronal activity in inferior temporal cortex during the classification of morphed stimuli. Cereb Cortex 19: 760–776.

- Banich MT, Milham MP, Atchley RA, Cohen NJ, Webb A, Wszalek T, Kramer AF, Liang Z, Barad V, Gullett D, Shah C, Brown C (2000): Prefrontal regions play a predominant role in imposing an attentional ‘set’: Evidence from fMRI. Brain Res Cogn Brain Res 10: 1–9.

- Billieux J, D'Argembeau A, Lewicki P, Van der Linden M (2009): A French adaptation of the internal and external encoding style questionnaire and its relationships with impulsivity. Eur Rev Appl Psychol 59: 3–8.

- Dricot L, Sorger B, Schiltz C, Goebel R, Rossion B (2008): The roles of “face” and “non‐face” areas during individual face perception: Evidence by fMRI adaptation in a brain‐damaged prosopagnosic patient. Neuroimage 40: 318–332.

- Fenske MJ, Aminoff E, Gronau N, Bar M (2006): Top‐down facilitation of visual object recognition: Object‐based and context‐based contributions. Prog Brain Res 155: 3–21.

- Fiske ST (1993): Social cognition and social perception. Annu Rev Psychol 44: 155–194.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ (1997): Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6: 218–229.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW (2000): The fusiform “face area” is part of a network that processes faces at the individual level. J Cogn Neurosci 12: 495–504.

- Goldstein AG, Chance JE (1980): Memory for faces and schema theory. J Psychol 105: 47–59.

- Grill‐Spector K, Knouf N, Kanwisher N (2004): The fusiform face area subserves face perception, not generic within‐category identification. Nat Neurosci 7: 555–562.

- Grinband J, Hirsch J, Ferrera VP (2006): A neural representation of categorization uncertainty in the human brain. Neuron 49: 757–763.

- Huettel SA, Song AW, McCarthy G (2005): Decisions under uncertainty: Probabilistic context influences activation of prefrontal and parietal cortices. J Neurosci 25: 3304–3311.

- Isoda M, Hikosaka O (2007): Switching from automatic to controlled action by monkey medial frontal cortex. Nat Neurosci 10: 240–248.

- Johnson MH (2005): Subcortical face processing. Nat Rev Neurosci 6: 766–774.

- Johnstone T, Ores Walsh KS, Greischar LL, Alexander AL, Fox AS, Davidson RJ, Oakes TR (2006): Motion correction and the use of motion covariates in multiple‐subject fMRI analysis. Hum Brain Mapp 27: 779–788.

- Kanwisher N, McDermott J, Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311.

- Kveraga K, Boshyan J, Bar M (2007a): Magnocellular projections as the trigger of top‐down facilitation in recognition. J Neurosci 27: 132–140.

- Kveraga K, Ghuman AS, Bar M (2007b): Top‐down predictions in the cognitive brain. Brain Cogn 65: 145–168.

- Lewicki P (2005): Internal and external encoding style and social motivation. In: Forgas JP, Williams KD, Laham SM, editors. Social Motivation: Conscious and Unconscious Processes. New York, NY: Cambridge University Press; pp 194–209.

- Lewicki P, Hill T, Czyzewska M (1992): Nonconscious acquisition of information. Am Psychol 47: 796–801.

- Li J, Liu J, Liang J, Zhang H, Zhao J, Huber DE, Rieth CA, Lee K, Tian J, Shi G (2009): A distributed neural system for top‐down face processing. Neurosci Lett 451: 6–10.

- Liu Y, Jagadeesh B (2008): Modulation of neural responses in inferotemporal cortex during the interpretation of ambiguous photographs. Eur J Neurosci 27: 3059–3073.

- Martinez AM, Benavente R (1998): The AR Face Database: CVC Technical Report #24. Available at: http://cobweb.ecn.purdue.edu/~aleix/aleix_face_DB.html.

- Menon RS, Kim SG (1999): Spatial and temporal limits in cognitive neuroimaging with fMRI. Trends Cogn Sci 3: 207–216.

- Mesulam M (2008): Representation, inference, and transcendent encoding in neurocognitive networks of the human brain. Ann Neurol 64: 367–378.

- O'Craven KM, Kanwisher N (2000): Mental imagery of faces and places activates corresponding stimulus‐specific brain regions. J Cogn Neurosci 12: 1013–1023.

- O'Craven KM, Downing PE, Kanwisher N (1999): fMRI evidence for objects as the units of attentional selection. Nature 401: 584–587.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Ridderinkhof KR, Ullsperger M, Crone EA, Nieuwenhuis S (2004): The role of the medial frontal cortex in cognitive control. Science 306: 443–447.

- Rossion B, Caldara R, Seghier M, Schuller AM, Lazeyras F, Mayer E (2003): A network of occipito‐temporal face‐sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain 126: 2381–2395.

- Rumelhart DE (1980): Schemata: The building blocks of cognition. In: Spiro RJ, Bruce BC, Brewer WF, editors. Theoretical Issues in Reading Comprehension. Hillsdale, NJ: Erlbaum; pp 38–58.

- Sehatpour P, Molholm S, Javitt DC, Foxe JJ (2006): Spatiotemporal dynamics of human object recognition processing: An integrated high‐density electrical mapping and functional imaging study of “closure” processes. Neuroimage 29: 605–618.

- Singer T, Critchley HD, Preuschoff K (2009): A common role of insula in feelings, empathy and uncertainty. Trends Cogn Sci 13: 334–340.

- Steeves JK, Culham JC, Duchaine BC, Pratesi CC, Valyear KF, Schindler I, Humphrey GK, Milner AD, Goodale MA (2006): The fusiform face area is not sufficient for face recognition: Evidence from a patient with dense prosopagnosia and no occipital face area. Neuropsychologia 44: 594–609.

- Sterzer P, Kleinschmidt A (2007): A neural basis for inference in perceptual ambiguity. Proc Natl Acad Sci USA 104: 323–328.

- Summerfield C, Koechlin E (2008): A neural representation of prior information during perceptual inference. Neuron 59: 336–347.

- Summerfield C, Egner T (2009): Expectation (and attention) in visual cognition. Trends Cogn Sci 13: 403–409.

- Summerfield C, Egner T, Greene M, Koechlin E, Mangels J, Hirsch J (2006a): Predictive codes for forthcoming perception in the frontal cortex. Science 314: 1311–1314.

- Summerfield C, Egner T, Mangels J, Hirsch J (2006b): Mistaking a house for a face: Neural correlates of misperception in healthy humans. Cereb Cortex 16: 500–508.

- Tsao DY, Freiwald WA, Tootell RB, Livingstone MS (2006): A cortical region consisting entirely of face‐selective cells. Science 311: 670–674.

- Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH (2001): An integrated software suite for surface‐based analyses of cerebral cortex. J Am Med Inform Assoc 8: 443–459.

- Weber M (1999): Frontal Face Dataset: California Institute of Technology. Available at: http://www.vision.caltech.edu/html-files/archive.html.

- Zhang H, Liu J, Huber DE, Rieth CA, Tian J, Lee K (2008): Detecting faces in pure noise images: A functional MRI study on top‐down perception. Neuroreport 19: 229–233.
