Abstract

Psychophysical and neuroimaging studies in both animal and human subjects have clearly demonstrated that cortical plasticity following sensory deprivation leads to a functional brain reorganization that favors the spared modalities. In postlingually deaf patients, a cochlear implant (CI) allows recovery of auditory function, which is likely to counteract the crossmodal cortical reorganization induced by hearing loss. To study the dynamics of such reversed crossmodal plasticity, we designed a longitudinal neuroimaging study involving the follow‐up of 10 postlingually deaf adult CI users engaged in a visual speechreading task. While speechreading activates Broca's area in normally hearing subjects (NHS), the activity elicited in this region in CI patients is abnormally low and increases progressively with post‐implantation time. Furthermore, speechreading in CI patients induces abnormal crossmodal activations in right anterior regions of the superior temporal cortex that are normally devoted to processing human voice stimuli (temporal voice‐sensitive areas, TVA). These abnormal activity levels diminish with post‐implantation time and tend towards the levels observed in NHS. First, our study reveals that neuroplasticity after cochlear implantation involves not only auditory but also visual and audiovisual speech processing networks. Second, our results suggest that during deafness, the functional links between cortical regions specialized in face and voice processing are reallocated to support speech‐related visual processing through crossmodal reorganization. Such reorganization allows more efficient audiovisual integration of speech after cochlear implantation. These compensatory sensory strategies are later complemented by the progressive restoration of the visuo‐audio‐motor speech processing loop, including Broca's area. Hum Brain Mapp, 2012. © 2011 Wiley Periodicals, Inc.

Keywords: crossmodal compensation, cochlear implant, deafness, voice area, speechreading, multisensory integration

INTRODUCTION

In humans and animals, the loss of a sensory modality triggers compensatory mechanisms that lead to superior performance in the remaining modalities [Bavelier et al., 2006; Merabet and Pascual‐Leone, 2010] and to substantial functional reorganization, such as the colonization of the deprived cortical areas by the remaining modalities [Röder et al., 2002; Sadato et al., 1996; Weeks et al., 2000]. For example, in congenitally deaf subjects, although the pattern of brain activation during visual lipreading largely overlaps with that described in normally hearing subjects (NHS) [Capek et al., 2008], crossmodal reorganization affecting the auditory areas can be evoked by visual sign language [Campbell and Capek, 2008; Nishimura et al., 1999; Petitto et al., 2000] or even by simple, nonbiological moving visual stimuli [Finney et al., 2001].

Does such crossmodal reorganization affect the cortical speech comprehension network in deaf subjects who receive a cochlear implant? Cochlear implantation is highly effective for auditory recovery from profound deafness [Moore and Shannon, 2009] despite the reduced spectral information delivered by the implant [Shannon et al., 1995]. After implantation, postlingually deaf adults with a cochlear implant (CI patients) maintain supranormal speechreading performance [Rouger et al., 2007; Strelnikov et al., 2009b], because visual information provides complementary cues that are crucial for speech comprehension, especially in noisy environments. Indeed, postlingually deaf CI patients show almost perfect audiovisual speech comprehension [Moody‐Antonio et al., 2005], which reflects the development of “supra‐normal” audiovisual integration skills [Rouger et al., 2007]. Furthermore, CI patients rely on visual speech cues when audiovisual stimuli are ambiguous [Desai et al., 2008; Rouger et al., 2008]. Altogether, these behavioral results indicate a progressive reorganization of visual and auditory speech processing strategies after deafness and cochlear implantation.

The aim of this study was to analyze how these changes in speech comprehension strategies relate to progressive modifications of crossmodal cortical reorganization. In parallel with the reactivation of auditory cortical areas [Mortensen et al., 2006], crossmodal activations of low‐level visual areas are observed during auditory‐only speech perception [Giraud et al., 2001], indicating a synergy between the two modalities. Our hypothesis is that the network supporting visual speech perception in CI patients is reorganized as the auditory system is functionally reactivated. To date, no study has described the progressive modifications that occur after cochlear implantation or how these changes affect the cortical network of visual speech perception. To understand how changes in speech processing strategies are expressed in terms of cortical activity, we designed a longitudinal positron emission tomography (PET) follow‐up study in postlingually deaf adult CI users. This study revealed crossmodal activation of auditory associative areas, including the voice‐sensitive region in the anterior part of the right superior temporal sulcus (STS). These crossmodal activations declined during the first year after implantation, while visual speech cues progressively reactivated the auditory‐motor loop, including Broca's area in the frontal lobe.

MATERIALS AND METHODS

Participants

Ten cochlear implant (CI) patients (Table I) and six normally hearing (NH) subjects took part in this H₂¹⁵O PET brain imaging study. Participants were native French speakers with self‐reported normal or corrected‐to‐normal vision and no known language or cognitive disorders. All CI patients had postlingually acquired profound bilateral deafness (bilateral hearing loss above 90 dB) of diverse etiologies (meningitis, chronic otitis, and otosclerosis) and durations. Only one patient (IC10) had sudden deafness, which occurred more than 10 years before cochlear implantation. In all other patients, deafness was progressive; the duration of hearing loss for each patient is shown in Table I. Because hearing impairment was progressive, the duration of deafness could not be reliably defined, and we therefore did not attempt to correlate this measure with any brain activity pattern. In 8 of the 10 patients, contralateral residual hearing was either absent or weak, with thresholds of 70 dB SPL or higher and only in the low‐frequency ranges (Table I). Two patients had thresholds of 40–55 dB SPL in the 125–500 Hz frequency range.

Table I.

Summary of the patients

| Patient | Age (years) | Sex | Duration of hearing loss | Implant | Side | Residual hearing* | Onset | T0 | T1 | A (T0) | A (T1) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| IC02 | 81 | F | >20 years | Nucleus CI24 | R | 70–80 dB 125–2,000 Hz | 31d | 2d | 10 m (X) | 40% | X |
| IC03 | 39 | M | >20 years | Nucleus CI24 | R | None | 36d | 22d (X) | 10 m | X | 70% |
| IC04 | 39 | F | >20 years | Nucleus CI24 | R | 75–80 dB 125–250 Hz | 35d | 8d | 3 m | 40% | 85% |
| IC06 | 57 | F | >20 years | Nucleus CI24 | L | 85 dB at 500 Hz | 27d | 2d | 15 m | 15% | 40% |
| IC07 | 69 | M | >5 years | MED‐EL | L | 70–85 dB 125–2,000 Hz | 29d | 5d | 11 m | 50% | 90% |
| IC08 | 39 | M | >20 years | Nucleus CI24 | L | 85 dB at 250 Hz | 32d | 9d | (X) | 20% | X |
| IC09 | 62 | F | >5 years | Clarion | L | 40–75 dB 125–500 Hz | 34d | 3d | 4 m | 50% | 90% |
| IC10 | 64 | F | >10 years | Advanced Bionics | L | None | 33d | 9d | 8 m | 20% | 60% |
| IC11 | 54 | F | >20 years | Nucleus CI24 | R | 55–75 dB 125–500 Hz | 33d | 15d | 7 m | 50% | 60% |
| IC12 | 35 | F | >20 years | Nucleus CI24 | R | 80 dB at 250 Hz | 31d | 1d | 6 m | 45% | 60% |

Duration of hearing loss: estimated period during which the patient had experienced hearing loss. *Residual hearing: thresholds for the nonimplanted ear, obtained by tonal (pure‐tone) audiometry; at frequencies higher than those reported here, thresholds exceed 90 dB SPL. Onset: time (in days) between cochlear implantation and implant onset. T0: time (in days) between implant onset and the first PET session; T1: time (in months) between implant onset and the second PET session. A (T0), A (T1): auditory speech recognition scores at the corresponding session. (X): session excluded from the analyses (see Participants); X: not available.

CI patients were recipients of a unilateral CI (five on the left side and five on the right side) of various types (Table I). Because of the small subgroup sizes, and because stimulation was restricted to the visual modality, we did not analyze differences between left‐ and right‐implanted patients. Post‐surgery implant onset time varied from 27 to 36 days (mean 32.1 days). Patients were aged between 35 and 81 years (mean 53.9 years), while the NH group ranged from 20 to 49 years (mean 34.2 years). Patient IC03 at T0 and patients IC02 and IC08 at T1 were excluded a priori from the analyses because of protocol deviations and cochlear explantation, leaving 9 patients at T0, 8 at T1, and 7 patients scanned at both T0 and T1.

At the time of CI activation, the patients had profound hearing loss, with a mean auditory speech comprehension score of 28% (±18) as assessed by a speech therapist (see Procedure). At the time of the second PET session, their auditory recovery was in the normal range [Rouger et al., 2007], with a mean word recognition score of 70% (±16). Speechreading scores obtained outside the scanner by the speech therapist (see Procedure), with explicit responses, were 30.3 ± 8.9% for patients, significantly higher than the 9.4 ± 7.1% obtained for controls (P < 0.05, Mann‐Whitney test).
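As an illustration of the Mann‐Whitney comparison reported above, the following Python sketch computes the U statistic and an exact two‐sided P value by enumerating every possible split of the pooled scores. It is a minimal sketch under stated assumptions: the scores are hypothetical placeholders, not the study's data; the function names are illustrative; and exact enumeration is only one valid way to obtain the P value (library routines such as `scipy.stats.mannwhitneyu` are the usual choice).

```python
from itertools import combinations


def mann_whitney_u(x, y):
    """U statistic for sample x: pairs (xi, yj) with xi > yj, ties counted as 0.5."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def exact_two_sided_p(x, y):
    """Exact two-sided P value from all ways of splitting the pooled scores.

    Feasible only for small samples: the loop visits C(n1 + n2, n1) splits.
    """
    pooled = list(x) + list(y)
    n, n1 = len(pooled), len(x)
    observed = mann_whitney_u(x, y)
    expected = n1 * (n - n1) / 2.0  # mean of U under the null hypothesis
    extreme = total = 0
    for idx in combinations(range(n), n1):
        chosen = set(idx)
        gx = [pooled[i] for i in idx]
        gy = [pooled[i] for i in range(n) if i not in chosen]
        total += 1
        # Count splits at least as far from the null mean as the observed U.
        if abs(mann_whitney_u(gx, gy) - expected) >= abs(observed - expected) - 1e-9:
            extreme += 1
    return extreme / total


# Hypothetical speechreading scores (% correct), NOT the study's data.
patients = [30, 25, 40, 20, 35, 28, 45, 22, 33, 25]
controls = [10, 5, 15, 0, 12, 14]
u = mann_whitney_u(patients, controls)
p = exact_two_sided_p(patients, controls)
print(f"U = {u:.0f}, exact two-sided P = {p:.5f}")
```

With 10 patients and 6 controls, the enumeration covers C(16, 10) = 8,008 splits, which is why an exact test remains practical at this sample size.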

All participants gave their full informed consent prior to inclusion in this study, in accordance with the local ethics committee (n° 1‐04‐47, Toulouse, France) and the Declaration of Helsinki.

Stimuli

Stimuli were French bisyllabic words (e.g., /sitrõ/, English “lemon”) and meaningless time‐reversed bisyllabic words (nonwords). Words and nonwords were pooled into lists of 40 stimuli each, including 20 words and 20 nonwords in random order. The lists were balanced for syllabic structure (CV/CVC/CCV), lexical frequency (Brulex database), and anterior‐posterior phonemic composition. All stimuli were uttered by a female French speech therapist using normal pronunciation, with an even intonation, tempo, and vocal intensity. Utterances were recorded in a soundproof booth with a professional digital video camera. Video was digitized at 25 frames per second with a resolution of 720 × 576 pixels. Visual stimuli were extracted using Adobe Premiere Pro 7.0 (Adobe Systems, Mountain View, CA), including a 200 ms rest period before and after each word. All stimuli were finally exported in MPEG‐2 video format at maximum encoding quality.
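The stimulus construction described above can be sketched in Python as follows. This is a hypothetical illustration, assuming clips are handled as lists of frames; `make_nonword` and `make_list` are illustrative helpers, not the authors' tools (the actual editing was done in Adobe Premiere). It shows that the 200 ms rest period spans 5 frames at 25 frames per second and that a nonword can be obtained by reversing the frame order.

```python
import random

FPS = 25       # video frame rate of the digitized recordings
PAD_MS = 200   # rest period kept before and after each word


def pad_frames(ms=PAD_MS, fps=FPS):
    """Number of video frames spanned by a padding period of `ms` milliseconds."""
    return round(ms * fps / 1000)


def make_nonword(frames):
    """Hypothetical helper: time-reverse a word clip (a list of frames)."""
    return list(reversed(frames))


def make_list(words, nonwords, seed=None):
    """Hypothetical helper: one 40-item list of 20 words and 20 nonwords in random order.

    Balancing for syllabic structure, lexical frequency, and phonemic
    composition is assumed to be done beforehand and is not modelled here.
    """
    if len(words) != 20 or len(nonwords) != 20:
        raise ValueError("each list needs exactly 20 words and 20 nonwords")
    items = [(w, "word") for w in words] + [(n, "nonword") for n in nonwords]
    random.Random(seed).shuffle(items)
    return items


print(pad_frames())  # rest frames before and after each word
```

Because the rest period is symmetric, reversing a padded clip keeps 5 rest frames at each end of the resulting nonword.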

Procedure

A speech therapist evaluated all patients monthly by free‐field vocal audiometry with French disyllabic words to monitor their auditory and speechreading performance (the latter measured with the implant switched off). Each CI patient was scanned twice. The first PET session (T0) was performed as early as possible after implant onset. The second PET session (T1) was performed as soon as the patient's auditory speech recognition score (as measured by the speech therapist) had reached 60% or above, or at most 1 year after implant activation (see Table I). The duration of auditory stimulation through the CI varied from 1 to 22 days (mean 7.6 days) at the T0 session and from 3 to 15 months (mean 8.2 months) at the T1 session (Table I).

Each PET session comprised two experimental conditions: a rest condition and a speechreading condition. Neither involved any auditory stimulation apart from the low‐level, continuous background noise in the scanner room. During rest, subjects lay in the scanner with their eyes closed. During speechreading, silent video recordings of the speaker's face uttering words and nonwords were presented at a rate of one item every 5 s. Each video was followed by a black screen with a white central fixation cross. Subjects had to classify each item as a word or a nonword (yes/no two‐alternative forced choice) by pressing one of the two buttons of a computer mouse with their right hand. This task was chosen to maintain a high level of attention on the speechreading stimuli while avoiding the artefacts that overt speech production, and the resulting auditory feedback of the subjects' own voice, would have introduced. For each subject, a during‐scan speechreading score was computed as the percentage of correctly categorized items (words and nonwords combined).

Positron Emission Tomography

Subjects were scanned in a shielded, darkened room with their head immobilized and transaxially aligned along the orbitomeatal line using a laser beam; head position was checked before each acquisition. The regional distribution of radioactivity was measured with an ECAT HR+ PET camera (Siemens®) in full‐volume acquisition mode (63 planes, slice thickness 2.4 mm, axial field of view 158 mm, in‐plane resolution ≈ 4.2 mm). Each scan lasted 80 s, and about 6 mCi of H₂¹⁵O was administered to each subject for each scan. Stimulation started ≈ 20 s before the beginning of data acquisition and continued until the end of the scan. Experimental instructions were given to subjects before each session and repeated before each run.

Data Analysis

Neuroimaging data were analyzed with SPM2 using standard procedures for image preprocessing (realignment, spatial normalization to the Montreal Neurological Institute (MNI) brain template, and smoothing with an 8 mm FWHM isotropic Gaussian kernel), model definition, and statistical assessment (first‐level analysis). In all cases, activations were computed in individual subjects by contrasting the speechreading condition with the rest condition, as usually recommended [Penny and Holmes, 2004]. This was especially relevant because we have previously shown that activity levels at rest differ regionally between normally hearing subjects and CI patients [Strelnikov et al., 2010]. The individual contrasts were then entered into a second‐level random‐effects (RFX) analysis for group comparisons. Comparisons between NH subjects and CI patients used a one‐way ANOVA, whereas within‐patient comparisons between T0 and T1 used a paired t‐test. All differences were assessed at P < 0.05 with family‐wise error (FWE) correction for multiple nonindependent comparisons. The regional cerebral blood flow (rCBF) levels used in the functional connectivity analyses were extracted from 4 mm‐radius spheres centered on the peak voxels (the voxels with the highest significance level). These regional levels were then analyzed with post‐hoc Pearson correlation tests.
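Two quantities in this pipeline can be made concrete with a short Python sketch: the standard deviation of the 8 mm FWHM smoothing kernel (σ = FWHM / (2√(2 ln 2)) ≈ 3.4 mm) and the set of voxels inside a 4 mm‐radius sphere around a peak. The sketch assumes isotropic 2 mm voxels and stores the image as a dictionary keyed by voxel indices; both are illustrative assumptions, and the functions are hypothetical helpers rather than SPM2 routines.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian kernel from its FWHM: FWHM / (2 * sqrt(2 ln 2))."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def sphere_offsets(radius_mm, voxel_mm):
    """Integer voxel offsets whose centres lie within radius_mm of the sphere centre.

    Assumes isotropic voxels of size voxel_mm.
    """
    r = int(radius_mm // voxel_mm)
    offsets = []
    for dx in range(-r, r + 1):
        for dy in range(-r, r + 1):
            for dz in range(-r, r + 1):
                if voxel_mm * math.sqrt(dx * dx + dy * dy + dz * dz) <= radius_mm:
                    offsets.append((dx, dy, dz))
    return offsets


def sphere_mean(volume, peak, radius_mm, voxel_mm):
    """Mean value of `volume` (dict {(i, j, k): value}) in a sphere around `peak`."""
    values = []
    for dx, dy, dz in sphere_offsets(radius_mm, voxel_mm):
        key = (peak[0] + dx, peak[1] + dy, peak[2] + dz)
        if key in volume:  # voxels outside the image or mask are skipped
            values.append(volume[key])
    if not values:
        raise ValueError("sphere contains no voxels of the volume")
    return sum(values) / len(values)


print(round(fwhm_to_sigma(8.0), 2))       # kernel sigma in mm
print(len(sphere_offsets(4.0, 2.0)))      # voxels in a 4 mm sphere at 2 mm resolution
```

With 2 mm voxels, a 4 mm‐radius sphere contains 33 voxels, so each regional rCBF value is an average over a small neighbourhood rather than a single peak voxel.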

RESULTS

Behavioural Results

During the PET sessions, overall performance on the visual lexical decision task (word/nonword discrimination) was 68.9% (±9.7) for CI patients and 56.0% (±8.6) for NHS. In NH controls, this level of performance did not differ from chance (binomial test, P = 0.34, ns), whereas in CI patients performance was well above chance at both T0 and T1 (65.5% and 65.3%, respectively; binomial test, both P < 0.01). We further applied signal detection theory, with words as targets, to compute d′, a measure of perceptual sensitivity that is unaffected by response bias [Tanner and Swets, 1954]. d′ values were significantly higher in CI patients than in NHS (0.95 ± 0.7 vs. 0.29 ± 0.5; Mann‐Whitney U test, P = 0.0069), providing further evidence that during the period of deafness, CI patients had developed supranormal speechreading abilities (see also Materials and Methods, Participants).
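The two behavioral measures used here can be sketched in Python as follows: an exact binomial test of performance against 50% chance, and d′ = z(H) − z(FA) with words as targets. This is a minimal sketch: the text does not specify whether the binomial test was one‐ or two‐sided or how hit or false‐alarm rates of 0 or 1 were handled, so the one‐sided test and the +0.5 log‐linear correction below are assumptions, and the counts in the usage line are hypothetical.

```python
from math import comb
from statistics import NormalDist


def binomial_p_above_chance(correct, total, chance=0.5):
    """One-sided exact binomial P value: P(X >= correct) under chance performance."""
    return sum(comb(total, k) * chance**k * (1 - chance)**(total - k)
               for k in range(correct, total + 1))


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity d' = z(H) - z(FA), with words treated as targets.

    A log-linear correction (+0.5 per cell, an assumption) keeps rates away
    from 0 and 1 so that the z-scores stay finite.
    """
    h = (hits + 0.5) / (hits + misses + 1.0)
    fa = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf
    return z(h) - z(fa)


# Hypothetical counts for one 40-item list (20 words, 20 nonwords).
print(binomial_p_above_chance(28, 40), d_prime(15, 5, 7, 13))
```

Because d′ separates sensitivity from the tendency to answer "word", it can distinguish genuinely better speechreading from a mere response bias, which percent correct alone cannot.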

Neuroimaging Results

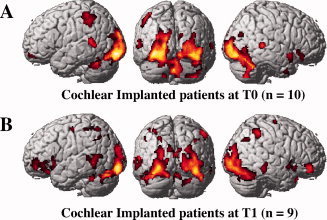

We compared activity levels between groups by computing brain activity relative to the resting baseline. In both groups, the speechreading discrimination task (words/nonwords) elicited activations in a complex cortical network including the occipital, superior temporal, and frontal lobes (Fig. 1; see Tables II and III), as previously reported in normal listeners [Campbell, 2008]. The areas activated by speechreading in CI patients at T0 and T1 and in NHS partly overlap (see Supporting Information for data in NHS); however, activation levels in the auditory areas of the superior temporal cortex and in the frontal lobes vary with time since implantation, suggesting a gradient from inexperienced (T0) to experienced (T1) CI users and then to NHS.

Figure 1.

Brain activation patterns during speech‐reading. A: cochlear‐implant patients at T0 (n = 9). B: cochlear‐implant patients at T1 (n = 8). Speech‐reading elicits auditory activations in cochlear‐implant patients. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Table II.

Group activations: cortical areas activated during speechreading in cochlear‐implant patients scanned early after their implant onset (T0) and after auditory speech recovery (T1)

| Cortical area | BA | P‐value | Voxels | Z | x | y | z |
|---|---|---|---|---|---|---|---|
| Cochlear‐implant patients at T0 | | | | | | | |
| L inf. occip. gyrus | 17 | < 0.0005 | 2,063 | 7.15 | −34 | −88 | −8 |
| L inf. occip. gyrus | 17 | | | 6.92 | −24 | −94 | −10 |
| L mid. occip. gyrus | 17/18 | | | 6.30 | −18 | −102 | 4 |
| L fusiform gyrus | 37 | < 0.0005 | 287 | 6.01 | −34 | −54 | −24 |
| R fusiform gyrus | 18 | < 0.0005 | 2,461 | 7.04 | 34 | −62 | −18 |
| R mid. occip. gyrus | 17/18 | | | 6.66 | 28 | −94 | 4 |
| R inf. occip. gyrus | 17 | | | 6.20 | 44 | −82 | −10 |
| L post. sup. temp. sulcus | 22 | < 0.0005 | 124 | 5.19 | −56 | −46 | 10 |
| L medio‐dorsal cereb. (decl./uvula) | | < 0.0005 | 154 | 4.84 | −2 | −74 | −20 |
| R medio‐dorsal cereb. (decl./uvula) | | | | 4.69 | 4 | −68 | −14 |
| L inf. parietal lobule | 40 | 0.003 | 86 | 4.67 | −48 | −42 | 46 |
| L inf. parietal lobule | 40 | | | 4.34 | −48 | −52 | 54 |
| R post. sup. temp. sulcus | 22 | 0.006 | 74 | 5.59 | 62 | −38 | 6 |
| R ant. sup. temp. sulcus | 38 | 0.013 | 60 | 4.96 | 58 | 12 | −14 |
| Cochlear‐implant patients at T1 | | | | | | | |
| L inf. occip. gyrus | 17/18 | < 0.0005 | 1,852 | 6.69 | −32 | −96 | −12 |
| L inf. occip. gyrus | 18 | | | 6.56 | −26 | −90 | −8 |
| L mid. occip. gyrus | 18/19 | | | 5.45 | −22 | −98 | 16 |
| R mid. occip. gyrus | 17/18 | < 0.0005 | 2,439 | 5.72 | 36 | −86 | 8 |
| R fusiform gyrus | 19/37 | | | 5.60 | 40 | −78 | −16 |
| R inf. occip. gyrus | 17 | | | 5.41 | 32 | −94 | −2 |
| L inf. front. gyrus | 47 | 0.003 | 346 | 4.88 | −44 | 30 | −20 |
| L mid. front. gyrus | 46/10 | | | 4.49 | −46 | 50 | 0 |
| L inf. front. gyrus | 47 | | | 4.22 | −30 | 20 | −10 |
| R inf. front. gyrus | 46/47 | 0.022 | 97 | 4.10 | 46 | 46 | −10 |
| R inf. front. gyrus | 46/10 | | | 3.60 | 36 | 36 | −6 |
| L post. inf. temp. gyrus | 37 | 0.026 | 233 | 3.96 | −64 | −52 | −8 |
| L post. mid. temp. gyrus | 37 | | | 3.76 | −52 | −50 | 2 |
| L post. sup. temp. sulcus | 22/37 | | | 3.74 | −58 | −56 | 8 |

BA indicates Brodmann areas. x, y, and z coordinates are in MNI space.

Abbreviations: ant.: anterior; cereb.: cerebellum; decl.: declive; front.: frontal; inf.: inferior; L: left; mid.: middle; occip.: occipital; post.: posterior; R: right; sup.: superior; temp.: temporal.

Table III.

Longitudinal comparisons in patients: cortical areas significantly more activated at T1 than at T0, and at T0 than at T1

| Cortical area | BA | P‐value | Voxels | Z | x | y | z |
|---|---|---|---|---|---|---|---|
| Patients at T1 > Patients at T0 | | | | | | | |
| L inf. front. gyrus | 47 | 0.044 | 85 | 3.44 | −22 | 14 | −22 |
| L inf. front. gyrus | 47 | | | 3.20 | −18 | 20 | −16 |
| L inf. front. gyrus | 47 | | | 3.11 | −22 | 26 | −10 |
| Patients at T0 > Patients at T1 | | | | | | | |
| R ant. sup. temp. sulcus | 38 | 0.003 | 628 | 3.83 | 58 | 6 | −16 |
| R ant. mid. temp. gyrus | 38 | | | 3.49 | 62 | 2 | −30 |
| R ant. sup. temp. gyrus | 22 | | | 3.46 | 64 | −14 | −10 |

BA indicates Brodmann areas. x, y, and z coordinates are in MNI space.

Abbreviations: ant.: anterior; front.: frontal; inf.: inferior; L: left; mid.: middle; R: right; sup.: superior; temp.: temporal.

Visual Areas

During speechreading, all groups displayed highly significant activations (P < 0.0005) in visual cortical areas, including the bilateral inferior/middle occipital gyri and fusiform gyri (see Fig. 1). This set of areas, activated in all three groups, includes the visual motion area MT and the fusiform face area (FFA), as previously reported [Paulesu et al., 2003]. No significant differences in activation were found between any of the three groups in these occipital areas (P > 0.05).

Auditory Areas

During the speechreading task, we observed activation of the auditory temporal areas (BA 22) in the deaf patients at both scanning sessions (Fig. 1A,B). More precisely, CI patients showed activations in the posterior part of the left superior temporal sulcus (parieto‐temporo‐occipital junction, PTO) both at T0 (P < 0.0005) and at T1 (P = 0.026). Pairwise comparison in patients did not reveal any statistically significant difference between the T0 and T1 sessions (paired t‐test). Patients also displayed some activation in the posterior part of the right superior temporal sulcus, but only at T0 (P = 0.006). However, the activation of auditory regions by visual speech cues in patients did not encompass the primary auditory cortex as defined by the anatomical maps of the Talairach Daemon. NH controls did not show activation in any of these posterior temporal auditory regions (Supporting Information Fig. 1), although such activations have been reported in some speechreading studies [Bernstein et al., 2002; Paulesu et al., 2003].

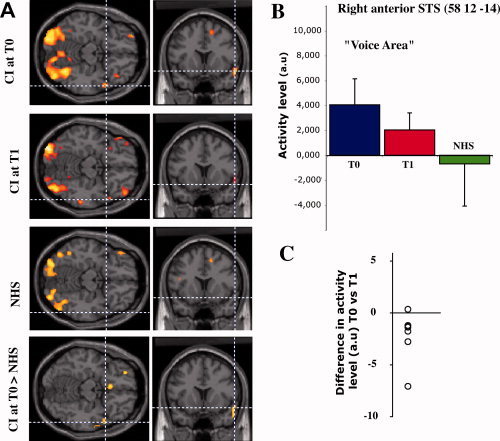

During speechreading, patients scanned at implant onset (T0) displayed a significant activation (P = 0.013) in the right anterior superior temporal sulcus (Fig. 2A). The same patients scanned at T1 displayed a nonsignificant activation (P = 0.708) in this anterior temporal region. Pairwise comparison between the two scanning sessions confirmed a significantly greater activation of the anterior part of the right STS at T0 than at T1 (Fig. 2B, activation peak at [57 5 −14]; P = 0.003, paired t‐test). This cortical locus closely matches the right anterior superior temporal clusters reported in the literature as voice‐sensitive regions (see Table IV) belonging to the temporal voice area (TVA) network [Belin et al., 2000; Kriegstein and Giraud, 2004].

Figure 2.

Activity in the voice identity area during speech‐reading. A: activation patterns for each subject group and differential activation pattern between patients at T0 and NH controls. B: normalized regional cerebral blood flow (arbitrary units) in the right anterior superior temporal sulcus for each subject group. Activity level in the voice identity area decreases with the auditory experience, being high for inexperienced cochlear‐implant patients, and low for NH controls. Panel C shows the individual differences of activity between T0 and T1 (7 paired comparisons; the single outlier is a different patient than the one in Fig. 3C).

Table IV.

Location of voice‐sensitive right anterior superior temporal clusters

| Study | Type | x | y | z | d | r | Task |
|---|---|---|---|---|---|---|---|
| This study | PET | 57 | 5 | −14 | 0 | 8 | Lipreading |
| Belin et al., 2000, 2002 | fMRI | 58 | 6 | −10 | 4.2 | 6 | Nonspeech vocal |
| Belin and Zatorre, 2003 | fMRI | 58 | 2 | −8 | 6.8 | 6 | Speaker vs. syllable |
| von Kriegstein et al., 2003 | fMRI | 54 | 12 | −15 | 7.7 | 10 | Speaker vs. speech |
| | | 57 | 9 | −21 | 8.1 | 10 | |
| von Kriegstein & Giraud, 2004 | fMRI | 51 | 18 | −15 | 14.4 | 10 | Speaker vs. speech |

x, y, and z: coordinates of the activation peak in Talairach space. d: peak‐to‐peak distance from the present study. r: full width at half maximum of the Gaussian smoothing kernel. All distances are in millimeters. All cited studies used auditory vocal stimuli and functional magnetic resonance imaging (fMRI) in NH groups.
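The d column of Table IV can be reproduced as the Euclidean distance between each reported peak and the present peak. The short Python check below recomputes it from the tabulated coordinates; the rounded values match the table. The dictionary keys are simply labels for the table rows.

```python
import math

# Peak coordinates (x, y, z in mm) copied from Table IV.
THIS_STUDY = (57, 5, -14)
PEAKS = {
    "Belin et al., 2000, 2002": (58, 6, -10),
    "Belin and Zatorre, 2003": (58, 2, -8),
    "von Kriegstein et al., 2003 (peak 1)": (54, 12, -15),
    "von Kriegstein et al., 2003 (peak 2)": (57, 9, -21),
    "von Kriegstein & Giraud, 2004": (51, 18, -15),
}


def peak_distance(a, b):
    """Euclidean peak-to-peak distance in mm."""
    return math.dist(a, b)


for study, peak in PEAKS.items():
    print(f"{study}: d = {peak_distance(THIS_STUDY, peak):.1f} mm")
```

Since the kernels used (r = 6 to 10 mm) are of the same order as most of these distances, peaks within roughly one kernel width can reasonably be treated as the same cortical locus.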

During the same task, NH controls did not show activation in any auditory cortical region (see Supporting Information Tables I and II), including the right anterior STS region observed in the patient group (Fig. 2A,B). Direct group comparisons showed that the right anterior STS was significantly overactivated in CI patients scanned at T0 compared with NHS (P < 0.007, ANOVA, Fig. 2A), whereas patients scanned at T1 did not show any significantly greater activation relative to NHS (P > 0.05, ANOVA). Across groups, activity in the anterior superior temporal area during speechreading decreased progressively: it was high in inexperienced CI users (T0), intermediate in experienced CI users (T1), and low in NHS (Fig. 2B). Importantly, our longitudinal approach revealed that all but one of the patients showed decreased activity in the anterior superior temporal area during speechreading between T0 and T1 (Fig. 2C), indicating that this effect is consistent across individuals.
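The longitudinal T0/T1 comparison can be illustrated at the level of a single region with a paired t statistic on per‐patient differences, together with a count of patients whose activity decreased. This is a hypothetical ROI‐level sketch with placeholder values: the reported comparisons were voxelwise SPM2 paired t‐tests, and a P value would be read from a t distribution with n − 1 degrees of freedom (for example with `scipy.stats.ttest_rel`).

```python
import math
from statistics import mean, stdev


def paired_t(before, after):
    """Paired t statistic and degrees of freedom for the after - before differences."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    diffs = [a - b for b, a in zip(before, after)]
    t = mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def n_decreasing(before, after):
    """Number of subjects whose value decreased between the two sessions."""
    return sum(1 for b, a in zip(before, after) if a < b)


# Hypothetical normalized rCBF values for patients scanned at both sessions.
t0 = [1.0, 2.0, 3.0]
t1 = [0.0, 1.0, 1.0]
print(paired_t(t0, t1), n_decreasing(t0, t1))
```

Pairing removes between‐patient variability in baseline activity, which is why a within‐patient design can detect a consistent T0 to T1 change with only seven complete pairs.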

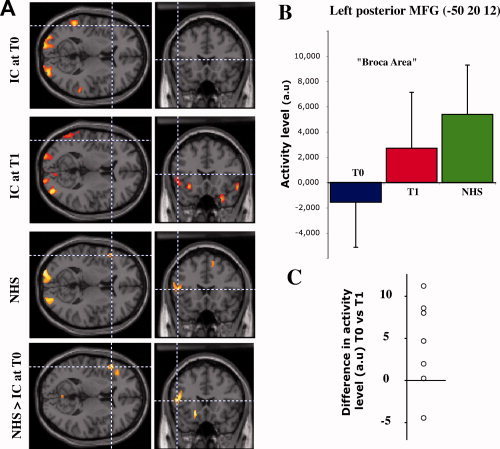

Frontal Areas

Visual presentation of speech elicited bilateral responses in the dorsolateral prefrontal cortex in CI patients after their speech performance had stabilized (at T1; see Table II and Fig. 1B), but not at implant onset (at T0, Fig. 1A). These responses were located in the left posterior inferior and middle frontal gyri (P = 0.003) and in the right inferior frontal gyrus (P = 0.022). Pairwise comparison in patients indicated significantly greater activation at T1 in the left posterior inferior frontal gyrus (T1 > T0; P = 0.044, paired t‐test). NH controls showed significant activation in the left posterior middle frontal gyrus (Broca's area) during speechreading (P = 0.022, Supporting Information Table I, Fig. 3A), but no significant activation in the right prefrontal cortex. Direct group comparison showed that Broca's area was significantly hypoactivated in CI patients scanned at T0 (T0 < controls, P = 0.002, ANOVA, Fig. 3A), whereas there was no significant difference between patients at T1 and NH subjects (Supporting Information Table II).

Figure 3.

Activity in Broca's area during speech‐reading. A: activation patterns for each subject group and differential activation pattern between NH controls and patients at T0. B: normalized regional cerebral blood flow (arbitrary units) in the left posterior middle frontal gyrus for each subject group. Activity level in Broca's area increases with the auditory experience, being low for inexperienced cochlear‐implant patients and high for NH controls. Panel C shows the individual differences of activity between T0 and T1 (7 paired comparisons; the single outlier is a different patient than the one in Fig. 2C).

The level of activation in Broca's area during speechreading tended to show a graded effect according to the degree of auditory experience, although in the opposite direction to that seen in the right STS. Broca's area activation was low in CI patients at implant onset (T0), intermediate after several months of auditory recovery (T1), and high in NH subjects (Fig. 3B). Again, the longitudinal follow‐up of the CI users shows that all but two of the patients exhibited an increase in Broca's area activation during speechreading between T0 and T1 (Fig. 3C).

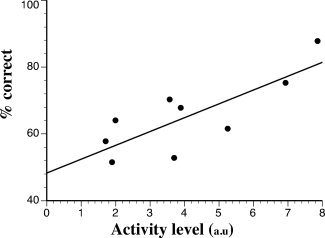

Correlation Analysis

In CI patients, regional cerebral blood flow (rCBF) in the activated part of the right anterior STS at T0 was positively correlated with the speechreading word/nonword discrimination scores obtained during PET scanning (P = 0.0008, Fig. 4). A positive correlation was also found with the speechreading word recognition scores measured by the speech therapist outside the scanner (P = 0.0196). After speech rehabilitation (T1), activity in the right anterior STS was strongly reduced and neither correlation was observed any longer (P = 0.58 and 0.42, respectively). Although strongly activated during speechreading in CI users, the posterior temporal regions did not show any correlation between rCBF and speechreading scores (P > 0.10 for all correlations). Furthermore, correlation analysis between cortical activity and patients' auditory speech performance at T1 did not reveal any cortical region (P > 0.05).
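The correlation analyses in this section rely on Pearson's r. A minimal Python sketch of the coefficient and of the t statistic used to test it (df = n − 2) is given below; the function names are hypothetical, and converting t to a P value would require a t‐distribution routine, or a function such as `scipy.stats.pearsonr`, which returns r and P directly.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for testing H0: rho = 0 (|r| < 1)."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Hypothetical rCBF values and speechreading scores, NOT the study's data.
rcbf = [48.0, 50.5, 51.0, 53.5, 55.0]
score = [55.0, 60.0, 62.5, 70.0, 72.5]
r = pearson_r(rcbf, score)
print(r, r_to_t(r, len(rcbf)))
```

With the small group sizes here (n = 7 to 9), the same r yields a much larger P value than it would in a large sample, because the t statistic scales with √(n − 2).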

Figure 4.

Functional/behavioral relationships observed in patients at T0. Inexperienced cochlear‐implant patients present a positive correlation (P = 0.0008) between the normalized regional cerebral blood flow (arbitrary units) in the voice identity area and their speech‐reading word/nonword recognition score (in % correct).

A similar analysis was performed for Broca's area. Here, the level of activation in the left inferior frontal cortex was not correlated with during‐scan speechreading performance (P = 0.90), nor with the visual (P = 0.54) or auditory (P = 0.17) speech scores measured by the speech therapist outside the scanner. However, a functional connectivity analysis revealed a significant positive correlation between activation levels in Broca's area and the left posterior STS (PTO junction) in CI patients scanned at T0 as well as at T1 (P = 0.039 and P = 0.050, respectively), whereas NH controls did not show such a correlation (P = 0.542). These results illustrate the progressive reactivation of the auditory‐motor loop as patients experience the altered auditory information provided by the implant.

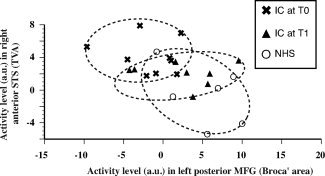

Our neuroimaging results show that, as the hearing expertise of the subjects increases, activity in Broca's area progressively increases while activity in the right anterior STS progressively decreases. To analyze this further, we computed the correlation between the activity levels in these two areas. Within any given group (NHS, CI at T0, or CI at T1), no significant correlation was found. However, at the population level combining all subjects (NHS and CI at T0 and T1), activity levels in these two areas were significantly negatively correlated (Fig. 5, P = 0.0093): low activity in Broca's area was associated with high activity in the right anterior STS, and vice versa.

Figure 5.

Relationship between activity levels in the voice identity area and Broca's area. At the population level there is a negative correlation (P = 0.0093) between normalized regional cerebral blood flow (arbitrary units) in the voice identity area and in Broca's area. Inexperienced cochlear‐implant users display high activity in the voice identity area and low activity in Broca's area; NH controls show the reverse pattern, and experienced CI users display intermediate activity levels in both areas.

At the second scanning session (T1), the time since implant onset varied from 3 to 15 months, because T1 was scheduled so as to ensure a homogeneous level of recovery across CI patients. To investigate whether the duration of auditory experience itself could affect the pattern of cortical activation during speechreading, we computed correlations between T1 time and activity levels at T1, and between T1–T0 time differences and T1–T0 differences in activity level. None of these analyses revealed any correlation between the amount of experience with the implant and the observed activity levels.

Because a few patients had some residual hearing (Table I), we also checked whether it could have affected crossmodal plasticity. Residual hearing can improve speech perception in noise, so it might reduce the need for visual compensation and thereby alter crossmodal reorganization. For each patient, we computed an average hearing threshold from the pure‐tone audiometry thresholds, assigning a ceiling threshold of 120 dB (the human physiological limit) to frequencies at which no response was obtained. These averaged thresholds were then entered in a correlation analysis with cortical activity. This analysis did not reveal any cortical region for any contrast at either session, suggesting that residual hearing did not influence the pattern of cortical crossmodal reorganization at T0 or at T1.
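The averaged hearing threshold described above can be written as a short function. This is a sketch under stated assumptions: the audiogram is represented as a dictionary from test frequency to threshold, with `None` marking frequencies where no response was obtained; the function name and the example frequencies are hypothetical, and only the 120 dB ceiling comes from the text. The subsequent correlation with cortical activity is not reproduced.

```python
# Ceiling value taken from the text, assigned to frequencies with no response.
CEILING_DB = 120.0


def mean_hearing_threshold(audiogram):
    """Average pure-tone threshold in dB for one patient.

    `audiogram` maps test frequency (Hz) to the measured threshold in dB,
    or to None when no response was obtained at that frequency; such
    nonaudible frequencies are scored at CEILING_DB before averaging.
    """
    if not audiogram:
        raise ValueError("audiogram has no tested frequencies")
    values = [CEILING_DB if t is None else float(t) for t in audiogram.values()]
    return sum(values) / len(values)


# Hypothetical audiogram, NOT a patient from the study; the tested
# frequencies are placeholders because the text does not list them.
example = {250: 80, 500: 100, 1000: None, 2000: None}
print(mean_hearing_threshold(example))  # 105.0
```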

DISCUSSION

This study yields two main findings that are important for understanding the functional reorganization of the speech network in CI users. First, we observed a crossmodal reorganization of the cortical areas normally devoted to processing human voice stimuli. Second, using a speechreading task, we demonstrated a progressive reactivation of the perceptuo‐motor loop that includes Broca's area.

Activation of the Right Anterior STS During Speechreading in CI Patients

Recent voice cognition studies have revealed a set of temporal cortical areas that respond specifically to the human voice, referred to as the TVA [Belin et al., 2000]. Among these areas, the anterior part of the right STS (rSTS) contains regions specifically involved in human voice recognition [Kriegstein and Giraud, 2004]. During the speechreading task, activity in this region was significantly higher in inexperienced CI patients than in experienced CI patients or NHS, in whom no significant activation was observed.

The rSTS is known to respond to auditory stimuli [Belin et al., 2000] but not to visual stimuli such as human faces. However, this region is functionally coupled with the fusiform face area (FFA) during voice/face associations [von Kriegstein et al., 2005], which suggests that the rSTS might be involved in face‐related visual tasks. In our experiment, the FFA was activated during the speechreading task in all groups, but we did not observe a functional correlation between the FFA and the rSTS (Pearson post‐hoc correlation, P > 0.05). We hypothesize that face processing originally performed in the FFA is functionally extended to the processing of lip movements, leading to a crossmodal reorganization of the rSTS. Our data do show a significant correlation between rCBF in the rSTS and speechreading performance in inexperienced CI patients. Altogether, these findings suggest that the right anterior TVA might play a functional role during speechreading in inexperienced CI patients, possibly via audiovisual facilitation mechanisms involving auditory‐to‐visual matching strategies.

We propose that during deafness, the voice‐sensitive areas become progressively de‐specialized for auditory voice‐related stimuli while becoming progressively involved in processing visual voice‐related stimuli, as in speechreading. The ability to discriminate human voices is indeed strongly impaired in CI users [Fu et al., 2004], especially when patients are tested shortly after cochlear implantation [Massida et al., 2008]. Further, in CI deaf patients who did not recover auditory speech comprehension, human voice stimuli failed to activate the right STS [Coez et al., 2008]. These failures of the voice‐processing network could be explained by the poor auditory spectral information delivered by the CI, which impairs voice discrimination [Massida et al., in press]. In addition, the visual colonization of the voice‐sensitive areas reported here could hinder their functional recovery. Consequently, in CI users who become proficient in auditory speech comprehension, we expect that once auditory input has been restored by the neuroprosthesis, the voice‐sensitive areas progressively shift from an initially visually focused (i.e., speechreading) strategy to an auditory‐focused vocal processing strategy. Indeed, in our group of patients, the level of activation of the rSTS decreases within the first months post‐implantation, while patients recover auditory speech comprehension. This rapid decrease could also explain why no such activation has previously been reported in CI patients during speechreading: recording brain activity early after implantation appears critical to reveal this effect in a follow‐up study.

The crossmodal activations of auditory areas (rSTS) reported here might be neural correlates of the highly synergistic audiovisual speech integration observed in CI patients [Rouger et al., 2007; Strelnikov et al., 2009a]. Previous work has shown that CI patients display crossmodal activations in low‐level visual areas (BA 17/18) during auditory‐only word perception [Giraud et al., 2001]. Interestingly, in that work, speechreading performance was significantly correlated with the crossmodal activation of visual areas by auditory speech stimulation, whereas in our study speechreading performance is significantly correlated with the crossmodal activation of auditory areas by visual speech stimulation. Together, these findings indicate supranormal auditory‐to‐visual and visual‐to‐auditory crossmodal activations after deafness and cochlear implantation. Such tight cooperation between auditory and visual networks seems especially important given the role of visuo‐auditory interactions in speech comprehension.

Lastly, the crossmodal activations elicited in the auditory regions of CI patients during speechreading did not encompass the primary auditory cortex as defined by anatomical maps. This is consistent with previous studies in congenitally deaf patients that reported activation of secondary auditory areas, but not of A1, during exposure to visual sign language [Lambertz et al., 2005; Nishimura et al., 1999] or speechreading [Campbell and Capek, 2008; Capek et al., 2008; Lee et al., 2007]. However, NHS did not show any activation in auditory regions, whereas such activation has been reported in some speechreading studies [Bernstein et al., 2002; Molholm and Foxe, 2005; Paulesu et al., 2003; Pekkola et al., 2005] and during a visually driven multisensory illusion [Saint‐Amour et al., 2007]. This discrepancy could result from differences in the speechreading tasks in which subjects were engaged.

Activations of Broca's Area and the Left Posterior STS During Speechreading in CI Patients

The use of a longitudinal approach to study speechreading in CI patients allowed us to show that the auditory‐motor stream [Hickok and Poeppel, 2007] can be reactivated through the visual channel as posterior temporal auditory regions undergo crossmodal reorganization.

Our follow‐up of CI patients revealed a progressive increase in the activation of Broca's area by visual speech, with a significantly higher level of activity at T1 (after auditory recovery) than at T0 (before auditory recovery), tending toward the level observed in NHS. In CI deaf patients, activation of Broca's area by auditory speech has been observed only in proficient CI users [Mortensen et al., 2006]. This result agrees with the involvement of Broca's area in auditory phonological tasks [Demonet et al., 1992, 2005] and in speechreading tasks in hearing subjects [Campbell et al., 2001; MacSweeney et al., 2000; Paulesu et al., 2003], as observed in our control group. This activation indicates that visual lip and face movements are linked to matching internal motor representations [Paulesu et al., 2003].

In NHS, Broca's area and the posterior part of the left superior temporal sulcus (or parieto‐temporo‐occipital junction, PTO) are two key cortical regions for speech processing. The PTO might support an amodal encoding of phonological information, allowing higher‐level semantic processing (the “sound‐meaning” interface [Hickok and Poeppel, 2007]), whereas speech processing in the left inferior lateral premotor cortex is likely to involve the mapping of phonological representations onto motor commands, leading to unambiguous coding of the speech signal [Dufor et al., 2009; Liberman and Whalen, 2000]. Both areas are crucial components of the visuo‐audio‐motor speech loop and are anatomically connected via the arcuate fasciculus [Saur et al., 2008].

Our results indicate persistent crossmodal activations in the left posterior superior temporal cortex of CI patients, both shortly after implantation (T0) and after auditory recovery (T1). The same observation held for the right posterior STS, but only at T0. Further, our previous study [Strelnikov et al., 2010] revealed that the posterior region of the right STS/STG also shows a higher activity level at rest in CI patients than in NHS. These activations were not observed in NH controls during the speechreading task, although auditory voice stimulation in NH subjects is known to elicit activations extending into the posterior regions of the right STS. This reinforces the view that crossmodal cooperation plays a key role in the recovery of auditory speech perception after cochlear implantation. The crossmodal activation of the left posterior temporal cortex in CI users could indicate that CI patients have learned to extract useful information from speech‐related face and lip movements and to link the corresponding articulatory representations efficiently to phonological representations, leading to better speechreading performance than NH controls. In this view, the visuo‐audio‐motor speech‐processing network would process visual speech cues highly efficiently during speechreading in CI patients, allowing appropriate phonological and articulatory internal representations of visual speech. Such a functional link is supported by the positive correlation observed in CI patients between activity levels in Broca's area and the left posterior STS.

Altogether, these findings suggest that the restoration of the perceptuo‐motor speech loop after cochlear implantation is not limited to auditory input but also encompasses visual input, so that the visuo‐audio‐motor speech processing network is reactivated as a whole. Such a functional reactivation might reflect a global mechanism, which could explain the lack of a direct correlation with speechreading performance. This network might then play a similar role during the recovery of speech perception after cochlear implantation as during the normal developmental acquisition of speech in NH people [Hickok and Poeppel, 2000].

CONCLUSION

Our results using visual speech stimulation suggest the emergence of a “reversed” pattern of neuroplasticity after cochlear implantation, expressed as a regression of abnormal crossmodal activations in the temporal areas and a progressive reactivation of the frontal areas normally involved in speech comprehension. After cochlear implantation, deaf patients thus undergo functional reorganizations involving not only auditory and audiovisual speech processing networks, but also the visual speech‐processing network. This would allow low‐level integration of audiovisual information early after auditory stimulation through the implant begins, facilitating auditory‐matching processes during speechreading. Such audiovisual integration is later complemented by an increased use of higher‐level speech processing strategies, including a progressive reactivation of the visuo‐audio‐motor loop that allows efficient matching of articulatory and phonological information. This articulatory processing is probably coupled with higher‐level speech processing, including syntactic and lexical predictive amodal strategies used to understand visual speech. Lastly, the crossmodal reorganization of the voice‐sensitive region could indicate a progressive reconstruction of the multimodal representation of a speaker's identity after cochlear implantation (“Person Identity Nodes,” cf. [Belin et al., 2004]).

Supporting information

Additional Supporting Information may be found in the online version of this article.


Sup. Figure 1. Brain activation patterns during speechreading in normal‐hearing subjects (n = 6).

Acknowledgements

We would like to thank the CI patients and NH subjects for their participation, M.‐L. Laborde and M. Jucla for their help in stimulus preparation, J. Foxton and K. Strelnikov for corrections of the manuscript, and G. Viallard and H. Gros for their help in data acquisition.

Author contributions: Julien Rouger and Sébastien Lagleyre contributed equally to this work.

REFERENCES

- Bavelier D, Dye MW, Hauser PC (2006): Do deaf individuals see better? Trends Cogn Sci 10: 512–518.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B (2000): Voice‐selective areas in human auditory cortex. Nature 403: 309–312.

- Bernstein LE, Auer ET Jr., Moore JK, Ponton CW, Don M, Singh M (2002): Visual speech perception without primary auditory cortex activation. Neuroreport 13: 311–315.

- Campbell R (2008): The processing of audio‐visual speech: Empirical and neural bases. Philos Trans R Soc Lond B Biol Sci 363: 1001–1010.

- Campbell R, Capek C (2008): Seeing speech and seeing sign: Insights from a fMRI study. Int J Audiol 47(Suppl 2): S3–S9.

- Campbell R, MacSweeney M, Surguladze S, Calvert G, McGuire P, Suckling J, Brammer MJ, David AS (2001): Cortical substrates for the perception of face actions: An fMRI study of the specificity of activation for seen speech and for meaningless lower‐face acts (gurning). Brain Res Cogn Brain Res 12: 233–243.

- Capek CM, Macsweeney M, Woll B, Waters D, McGuire PK, David AS, Brammer MJ, Campbell R (2008): Cortical circuits for silent speechreading in deaf and hearing people. Neuropsychologia 46: 1233–1241.

- Coez A, Zilbovicius M, Ferrary E, Bouccara D, Mosnier I, Ambert‐Dahan E, Bizaguet E, Syrota A, Samson Y, Sterkers O (2008): Cochlear implant benefits in deafness rehabilitation: PET study of temporal voice activations. J Nucl Med 49: 60–67.

- Demonet JF, Chollet F, Ramsay S, Cardebat D, Nespoulous JL, Wise R, Rascol A, Frackowiak R (1992): The anatomy of phonological and semantic processing in normal subjects. Brain 115(Pt 6): 1753–1768.

- Demonet JF, Thierry G, Cardebat D (2005): Renewal of the neurophysiology of language: Functional neuroimaging. Physiol Rev 85: 49–95.

- Desai S, Stickney G, Zeng FG (2008): Auditory‐visual speech perception in normal‐hearing and cochlear‐implant listeners. J Acoust Soc Am 123: 428–440.

- Dufor O, Serniclaes W, Sprenger‐Charolles L, Demonet JF (2009): Left premotor cortex and allophonic speech perception in dyslexia: A PET study. Neuroimage 46: 241–248.

- Finney EM, Fine I, Dobkins KR (2001): Visual stimuli activate auditory cortex in the deaf. Nat Neurosci 4: 1171–1173.

- Fu QJ, Chinchilla S, Galvin JJ (2004): The role of spectral and temporal cues in voice gender discrimination by normal‐hearing listeners and cochlear implant users. J Assoc Res Otolaryngol 5: 253–260.

- Giraud AL, Price CJ, Graham JM, Truy E, Frackowiak RS (2001): Cross‐modal plasticity underpins language recovery after cochlear implantation. Neuron 30: 657–663.

- Hickok G, Poeppel D (2000): Towards a functional neuroanatomy of speech perception. Trends Cogn Sci 4: 131–138.

- Hickok G, Poeppel D (2007): The cortical organization of speech processing. Nat Rev Neurosci 8: 393–402.

- Kriegstein KV, Giraud AL (2004): Distinct functional substrates along the right superior temporal sulcus for the processing of voices. Neuroimage 22: 948–955.

- Lambertz N, Gizewski ER, de Greiff A, Forsting M (2005): Cross‐modal plasticity in deaf subjects dependent on the extent of hearing loss. Brain Res Cogn Brain Res 25: 884–890.

- Lee HJ, Giraud AL, Kang E, Oh SH, Kang H, Kim CS, Lee DS (2007): Cortical activity at rest predicts cochlear implantation outcome. Cereb Cortex 17: 909–917.

- Liberman AM, Whalen DH (2000): On the relation of speech to language. Trends Cogn Sci 4: 187–196.

- MacSweeney M, Amaro E, Calvert GA, Campbell R, David AS, McGuire P, Williams SC, Woll B, Brammer MJ (2000): Silent speechreading in the absence of scanner noise: An event‐related fMRI study. Neuroreport 11: 1729–1733.

- Massida Z, Belin P, James C, Rouger J, Fraysse B, Barone P, Deguine O: Voice discrimination in cochlear‐implanted deaf subjects (in press).

- Massida Z, Rouger J, James CJ, Belin P, Barone P, Deguine O (2008): Voice gender perception in cochlear implanted patients, Vol. 4. The Federation for European Neuroscience Societies: Geneva: pp 087.12.

- Merabet LB, Pascual‐Leone A (2010): Neural reorganization following sensory loss: The opportunity of change. Nat Rev Neurosci 11: 44–52.

- Molholm S, Foxe JJ (2005): Look ‘hear’, primary auditory cortex is active during lip‐reading. Neuroreport 16: 123–124.

- Moody‐Antonio S, Takayanagi S, Masuda A, Auer ET Jr., Fisher L, Bernstein LE (2005): Improved speech perception in adult congenitally deafened cochlear implant recipients. Otol Neurotol 26: 649–654.

- Moore DR, Shannon RV (2009): Beyond cochlear implants: Awakening the deafened brain. Nat Neurosci 12: 686–691.

- Mortensen MV, Mirz F, Gjedde A (2006): Restored speech comprehension linked to activity in left inferior prefrontal and right temporal cortices in postlingual deafness. Neuroimage 31: 842–852.

- Nishimura H, Hashikawa K, Doi K, Iwaki T, Watanabe Y, Kusuoka H, Nishimura T, Kubo T (1999): Sign language ‘heard’ in the auditory cortex. Nature 397: 116.

- Paulesu E, Perani D, Blasi V, Silani G, Borghese NA, De Giovanni U, Sensolo S, Fazio F (2003): A functional‐anatomical model for lipreading. J Neurophysiol 90: 2005–2013.

- Pekkola J, Ojanen V, Autti T, Jaaskelainen IP, Mottonen R, Tarkiainen A, Sams M (2005): Primary auditory cortex activation by visual speech: An fMRI study at 3 T. Neuroreport 16: 125–128.

- Penny W, Holmes A (2004): Random‐effects analysis. In: Frackowiak R, Friston K, Frith C, Dolan R, Price C, Zeki S, Ashburner J, Penny W, editors. Human Brain Function. Elsevier: London: pp 842–850.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC (2000): Speech‐like cerebral activity in profoundly deaf people processing signed languages: Implications for the neural basis of human language. Proc Natl Acad Sci U S A 97: 13961–13966.

- Röder B, Stock O, Bien S, Neville H, Rosler F (2002): Speech processing activates visual cortex in congenitally blind humans. Eur J Neurosci 16: 930–936.

- Rouger J, Lagleyre S, Fraysse B, Deneve S, Deguine O, Barone P (2007): Evidence that cochlear implanted deaf patients are better multisensory integrators. Proc Natl Acad Sci U S A 104: 7295–7300.

- Rouger J, Fraysse B, Deguine O, Barone P (2008): McGurk effects in cochlear‐implanted deaf subjects. Brain Res 1188: 87–99.

- Sadato N, Pascual‐Leone A, Grafman J, Ibanez V, Deiber MP, Dold G, Hallett M (1996): Activation of the primary visual cortex by Braille reading in blind subjects. Nature 380: 526–528.

- Saint‐Amour D, De Sanctis P, Molholm S, Ritter W, Foxe JJ (2007): Seeing voices: High‐density electrical mapping and source‐analysis of the multisensory mismatch negativity evoked during the McGurk illusion. Neuropsychologia 45: 587–597.

- Saur D, Kreher BW, Schnell S, Kümmerer D, Kellmeyer P, Vry MS, Umarova R, Musso M, Glauche V, Abel S, Huber W, Rijntjes M, Hennig J, Weiller C (2008): Ventral and dorsal pathways for language. Proc Natl Acad Sci U S A 105: 18035–18040.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M (1995): Speech recognition with primarily temporal cues. Science 270: 303–304.

- Strelnikov K, Rouger J, Barone P, Deguine O (2009a): Role of speechreading in audiovisual interactions during the recovery of speech comprehension in deaf adults with cochlear implants. Scand J Psychol 50: 437–444.

- Strelnikov K, Rouger J, Lagleyre S, Fraysse B, Deguine O, Barone P (2009b): Improvement in speech‐reading ability by auditory training: Evidence from gender differences in normally hearing, deaf and cochlear implanted subjects. Neuropsychologia 47: 972–979.

- Strelnikov K, Rouger J, Demonet JF, Lagleyre S, Fraysse B, Deguine O, Barone P (2010): Does brain activity at rest reflect adaptive strategies? Evidence from speech processing after cochlear implantation. Cereb Cortex 20: 1217–1222.

- Tanner WP Jr., Swets JA (1954): A decision‐making theory of visual detection. Psychol Rev 61: 401–409.

- von Kriegstein K, Kleinschmidt A, Sterzer P, Giraud AL (2005): Interaction of face and voice areas during speaker recognition. J Cogn Neurosci 17: 367–376.

- Weeks R, Horwitz B, Aziz‐Sultan A, Tian B, Wessinger CM, Cohen LG, Hallett M, Rauschecker JP (2000): A positron emission tomographic study of auditory localization in the congenitally blind. J Neurosci 20: 2664–2672.
