Abstract

Animal experiments provide evidence that learning to associate an auditory stimulus with a reward causes representational changes in auditory cortex. However, most studies did not investigate how learning‐dependent plasticity forms over time during the task, but rather compared auditory cortex receptive fields before and after conditioning. Here, we present a functional magnetic resonance imaging study on learning‐related plasticity in the human auditory cortex during operant appetitive conditioning. Participants had to learn to associate a specific category of frequency‐modulated tones with a reward. Only participants who learned this association developed learning‐dependent plasticity in left auditory cortex over the course of the experiment. In nonlearners, no differential responses to reward‐predicting and nonreward‐predicting tones were found in auditory cortex. In addition, learners showed similar learning‐induced differential responses to reward‐predicting and nonreward‐predicting tones in the ventral tegmental area and the nucleus accumbens, two core regions of the dopaminergic neurotransmitter system. This may indicate a dopaminergic influence on the formation of learning‐dependent plasticity in auditory cortex, as has been suggested by previous animal studies. Hum Brain Mapp 34:2841–2851, 2013. © 2012 Wiley Periodicals, Inc.

Keywords: neuroimaging, fMRI, auditory system, plasticity, appetitive conditioning

INTRODUCTION

Cortical plasticity is a fundamental attribute of the brain and a prerequisite for lifelong learning and recovery after damage to the central nervous system. Prior studies have shown that even the very early representation of stimuli within primary and secondary sensory cortices is modulated by behavioral relevance and experience [Feldman, 2005; Froemke and Jones, 2011; Gilbert et al., 2009; Jäncke, 2009; Münte et al., 2002; Ohl and Scheich, 2005; Op de Beeck and Baker, 2010]. In the auditory domain, plasticity has been mainly studied using aversive conditioning tasks, where a previously neutral auditory stimulus (conditioned stimulus; CS) acquires significance through its prediction of a future aversive event, such as an electric shock (unconditioned stimulus; US). Several experiments in rodents provide evidence that learning‐related changes such as receptive‐field shifts can arise rapidly after only a few pairings of the auditory stimulus with the footshock [Bakin et al., 1996; Condon and Weinberger, 1991; Edeline and Weinberger, 1991; Ohl et al., 2001]. Conducting similar experiments with functional magnetic resonance imaging (fMRI) in human subjects, Thiel et al. [2002a, 2002b] reported increased conditioning‐related BOLD activity in secondary auditory cortex.

In addition, animal data suggest that similar learning‐related effects can also be observed using operant appetitive conditioning tasks [Beitel, 2003; Blake et al., 2006; Brosch et al., 2011; Hui et al., 2009; Polley, 2004; Takahashi et al., 2010]. In these experiments, the animal learns to execute an appropriate response to a specific auditory stimulus (CS+) to gain a reward, whereas responses to other auditory stimuli (CS−) are not rewarded. However, in most of these appetitive studies, learning‐dependent plasticity has been investigated by comparing auditory cortex receptive fields before and after conditioning [e.g., Beitel, 2003; Takahashi et al., 2010].

Here, we present an fMRI study on learning‐induced plasticity in human auditory cortex in which learning took place during the fMRI measurements. We adopted a frequently used appetitive operant conditioning paradigm [Knutson et al., 2000; Wittmann et al., 2005] in which participants had to learn by trial and error to associate a specific category of frequency‐modulated (FM) tones with the chance to gain a monetary reward in a subsequent reaction time task. Tone duration served as the reward‐predicting stimulus feature: half of the participants had the chance to gain a reward in trials with long FM tones, the other half in trials with short FM tones. Because an fMRI study by Brechmann and Scheich [2005] showed that the processing of FM duration preferentially involves the left auditory cortex, we expected learning‐induced plasticity to occur predominantly in left auditory cortex.

Studies investigating the neurochemical modulation of learning‐induced plasticity provide compelling evidence that the dopaminergic neurotransmitter system plays a critical role in this process [Camara et al., 2009; Glimcher, 2011; Kubikova and Kostal, 2010; Morris et al., 2010; Samson et al., 2010]. Several animal studies showed that stimulation of the ventral tegmental area (VTA) modulates plasticity in auditory cortex [Bao et al., 2001; Hui et al., 2009; Kisley and Gerstein, 2001]. For example, Bao et al. [2001] reported an enlarged auditory cortex representation of a CS that was paired with direct electrical stimulation of the VTA. This effect was abolished by blocking dopaminergic D2 receptors. We therefore hypothesized that plasticity within the auditory system induced by our operant conditioning task would be accompanied by changes within the dopaminergic neurotransmitter system, in particular within the VTA and the nucleus accumbens, which is the main striatal target of dopaminergic projections from the VTA [Fields et al., 2007].

MATERIAL AND METHODS

Subjects

Thirty‐nine volunteers (18 female; age range 18–31 years, mean age 24 years) participated in the experiment. All participants were right‐handed, had normal hearing (hearing loss of less than 15 dB HL between 100 Hz and 8 kHz), and had no history of neurological disorder. The study was conducted in accordance with the Declaration of Helsinki (World Medical Association, 2008). All experimental procedures were approved by the ethics committee of the University of Magdeburg, and written informed consent was obtained from all participants. Five subjects were excluded from all further analyses due to excessive head movement during fMRI scanning (overall head movement > 3 mm or scan‐to‐scan movement > 1 mm).
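The motion exclusion criterion can be sketched as a check on the realignment parameters. This is a minimal illustration, not the authors' code: it assumes SPM‐style parameters (six columns per scan, x/y/z translations in mm followed by three rotations in radians), assumes that only translations were evaluated, and assumes "overall" movement means the largest displacement from the first volume on any axis. The function name `exceeds_motion_limits` is hypothetical.

```python
import numpy as np


def exceeds_motion_limits(rp, max_total_mm=3.0, max_step_mm=1.0):
    """Return True if a run violates either motion limit.

    rp: (n_scans, 6) realignment parameters; columns 0-2 are x/y/z translations
    in mm. Rotations (columns 3-5) are ignored here, which is an assumption.
    """
    trans = np.asarray(rp, float)[:, :3]
    total = np.abs(trans - trans[0]).max()       # largest displacement from the reference (first) volume
    step = np.abs(np.diff(trans, axis=0)).max()  # largest scan-to-scan change on any axis
    return bool(total > max_total_mm or step > max_step_mm)
```

In practice the array could be loaded from an SPM realignment text file (e.g. `np.loadtxt` on an `rp_*.txt` file); a subject would be excluded if the function returns `True`.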

Task

We used an appetitive operant conditioning task in which participants had to learn to associate a specific category of FM tones with the chance to gain a monetary reward in a subsequent reaction time task. Before the actual experiment, we measured each subject's individual reaction time distribution in a number comparison task, in which participants had to indicate via key‐press whether a number (1, 4, 6, or 9) presented on a screen was smaller or larger than five [Pappata et al., 2002; Wittmann et al., 2005]. We recorded 100 reaction times and calculated the 85th percentile of the individual reaction time distribution from the subset of correct responses. This value was used as the reaction time threshold for gaining a reward in the subsequent paradigm.
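The threshold calibration amounts to taking a percentile over the correct calibration trials. The sketch below assumes NumPy's default linear‐interpolation percentile; the paper does not state which percentile definition was used, and the function name is hypothetical.

```python
import numpy as np


def reward_rt_threshold(rts_ms, correct, percentile=85.0):
    """RT threshold (ms) from the correct trials of the calibration run.

    Uses NumPy's default linear interpolation between order statistics
    (an assumption; other percentile definitions give slightly different values).
    """
    rts = np.asarray(rts_ms, float)[np.asarray(correct, bool)]
    return float(np.percentile(rts, percentile))
```

In the main task, a correct CS+ response faster than this threshold would then count as rewarded.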

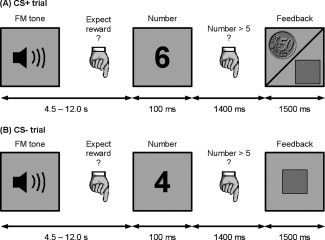

As depicted in Figure 1, the response‐reward part of the experimental paradigm also consisted of manual responses in a number comparison task. In half of the trials (CS+), correct and fast responses were financially rewarded, which was indicated by the image of a 50 Euro‐Cent coin. Slow or incorrect responses in these trials resulted in neutral feedback (gray square) and were not rewarded (see Fig. 1A). The other half of the trials (CS−) was never rewarded: subjects were always presented with the neutral feedback, independent of their response (see Fig. 1B). Whether the upcoming number comparison was potentially rewarded (CS+) or not (CS−) was indicated by an FM tone presented at the beginning of each trial. The tones differed in five stimulus dimensions: frequency range, modulation rate, loudness, modulation direction, and duration. Participants were instructed that one category of tones predicted a reward chance, but they had to learn by trial and error which feature defined the target category. In this experiment, sound duration served as the reward‐predicting feature: for one half of the participants, long tones predicted a reward (i.e., served as CS+); for the other half, short tones served as CS+. To assess the current learning status individually, participants had to indicate their reward expectancy for the current trial via key‐press after each FM tone. In total, the experiment consisted of 160 trials, 80 of which were potentially rewarded.

Figure 1.

Participants worked on a simple reaction time task in which they had to indicate whether a number shown on a screen was smaller or larger than five. In half of the trials (CS+), fast and correct responses in this task were rewarded with 50 Euro‐Cent. Slow or incorrect answers in these trials resulted in no reward (indicated by a square). In the other half of trials (CS−), participants were never rewarded, independent of the speed of their response. At the beginning of each trial, an FM tone indicated whether the upcoming number comparison was potentially rewarded (CS+ tones) or not (CS− tones). Participants were instructed that a specific category of tones predicted a reward chance but had to learn this category by trial and error. After each FM tone, they had to state their current reward expectancy for the upcoming trial via key press. This rating was taken as an index of learning.

Responses were made with the index finger (reward expected = YES; number < 5) or the middle finger (reward expected = NO; number > 5) of the right hand. In the number comparison task, numbers were presented for 100 ms, and feedback was presented 1.5 s after the onset of the number. Delays between the FM tone and the number comparison task were jittered in steps of 1.5 s, ranging from 4.5 to 12.0 s. The inter‐trial interval ranged from 3.0 to 12.0 s in steps of 1.5 s. During all delays, a fixation cross was presented on the screen.
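The jitter scheme described above can be made concrete as two discrete grids: six possible tone‐to‐number delays and seven possible inter‐trial intervals, all multiples of the 1.5 s step (which equals the repetition time reported in the acquisition section). The paper does not say how values were drawn from these grids; uniform random sampling below is an assumption, and `draw_jitter` is a hypothetical helper.

```python
import numpy as np

STEP = 1.5  # s, jitter step
DELAY_GRID = np.arange(4.5, 12.0 + STEP / 2, STEP)  # tone-to-number delays: 4.5, 6.0, ..., 12.0 s
ITI_GRID = np.arange(3.0, 12.0 + STEP / 2, STEP)    # inter-trial intervals: 3.0, 4.5, ..., 12.0 s


def draw_jitter(n_trials=160, seed=0):
    """Draw one delay and one ITI per trial, uniformly from the grids (sampling scheme assumed)."""
    rng = np.random.default_rng(seed)
    return rng.choice(DELAY_GRID, size=n_trials), rng.choice(ITI_GRID, size=n_trials)
```

Tying the jitter step to the TR means tone onsets are sampled at a fixed set of phases relative to volume acquisition, which is one common reason for this design choice.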

Stimuli

For each stimulus dimension (frequency range, modulation rate, loudness, direction, and duration), two principal levels were defined. We specified a low and a high frequency band, each containing five onset frequencies separated by half‐tone steps (low: 500, 530, 561, 595, and 630 Hz; high: 1,630, 1,732, 1,826, 1,915, and 2,000 Hz). Frequency was modulated at a rate of either 0.25 or 0.5 octaves/s. Sound level was individually adjusted under scanner noise to be most comfortable, with the quieter and the louder sounds differing by ∼10 phon. Sound duration was either 400 ms (short) or 800 ms (long). The direction of modulation was either rising or falling. Combining all values of these five dimensions (10 onset frequencies × 2 modulation rates × 2 levels × 2 directions × 2 durations) resulted in 160 different stimuli, 80 of which (all short or all long FM tones, depending on the participant) predicted a potential reward.
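The stimulus set can be enumerated directly, which confirms the count of 160 tones and the 80/80 split by duration. The synthesis function is a hypothetical sketch: it assumes a logarithmic sweep, f(t) = f0 · 2^(±rate·t), whose phase is the analytic integral of f(t); the sampling rate, the absence of onset/offset ramps, and the omission of level scaling are all assumptions not taken from the paper.

```python
import itertools

import numpy as np

LOW_BAND = [500, 530, 561, 595, 630]        # Hz, onset frequencies as listed
HIGH_BAND = [1630, 1732, 1826, 1915, 2000]  # Hz, onset frequencies as listed
RATES = [0.25, 0.5]                         # octaves/s
LEVELS = ["quiet", "loud"]                  # ~10 phon apart, set per listener (not synthesized here)
DIRECTIONS = ["rising", "falling"]
DURATIONS = [0.4, 0.8]                      # s

stimuli = [
    dict(f0=f0, rate=r, level=lv, direction=d, duration=dur)
    for f0, r, lv, d, dur in itertools.product(
        LOW_BAND + HIGH_BAND, RATES, LEVELS, DIRECTIONS, DURATIONS)
]


def fm_tone(f0, rate, direction, duration, fs=44100):
    """Hypothetical log-frequency sweep: f(t) = f0 * 2**(+/-rate * t)."""
    sign = 1.0 if direction == "rising" else -1.0
    k = sign * rate * np.log(2.0)                # sweep constant in 1/s (natural-log units)
    t = np.arange(int(round(duration * fs))) / fs
    phase = 2 * np.pi * f0 * np.expm1(k * t) / k  # integral of f0 * exp(k * t) from 0 to t
    return np.sin(phase)
```

For example, a long tone at 0.5 octaves/s sweeps 0.4 octaves in total, while a short tone at 0.25 octaves/s sweeps only 0.1 octaves.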

fMRI Data Acquisition

Data were acquired on a 3 T Siemens MAGNETOM Trio MRI scanner (Siemens AG, Erlangen, Germany) with an eight‐channel head array. Key‐presses were recorded using an MR‐compatible response keypad (Covilex GmbH, Magdeburg, Germany). Acoustic stimuli were delivered via MR‐compatible headphones (MR confon OPTIME 1; MR confon GmbH, Magdeburg, Germany). To ensure that participants could hear the FM tones during data acquisition, sound level and balance were individually adjusted under scanner noise before the experiment started.

During functional measurements, 1,680 T2*‐weighted gradient echo‐planar imaging volumes with BOLD contrast were obtained (repetition time (TR) = 1,500 ms, echo time (TE) = 30 ms, flip angle α = 80°, field of view (FoV) = 192 × 192 mm², voxel size = 3.0 × 3.0 × 3.0 mm³). Volumes consisted of 24 interleaved slices (gap of 0.3 mm) covering the brain from the anterior cingulate cortex dorsally to the inferior colliculus in the brainstem. After the experimental task, a high‐resolution T1‐weighted structural volume was obtained from each subject.

Behavioral Data Analysis

To investigate the learning rate, i.e., the formation of an association between the FM tones and the potential reward in the number comparison task, we determined individual learning curves from the subjects' reward‐expectancy responses after the tones. Using a sliding average over 31 trials (i.e., ±15 trials around each data point), we visualized the temporal development of the percentage of correct responses in this task. On the basis of the resulting plots, we assigned each participant to one of three groups: learner, nonlearner, or nonassignable. We defined learners as participants who showed a clear increase in the percentage of correct responses over time and reached a stable plateau of at least 90% correct responses within the first 120 trials (i.e., within the first three quarters of the experiment). Nonlearners were defined as participants who never reached 66.4% correct responses over the course of the experiment. This cut‐off was chosen because it is the lower limit of above‐chance performance (at P < 0.05 for n = 31, binomial test). Because we were interested in directly comparing true learners and nonlearners, we excluded from all further analyses the subjects who did not meet the criteria of either group and therefore showed neither clear learning nor clear nonlearning behavior. In total, 16 participants were assigned to the learner group and nine to the nonlearner group; the remaining nine were excluded. Within the group of learners, 10 of 16 subjects were potentially rewarded following long FM tones; within the nonlearners, four of nine subjects were potentially rewarded following long FM tones. Average reaction time thresholds as calibrated in the behavioral pretest and the monetary reward gained by both groups are reported in the Results section.
The group of excluded subjects performed heterogeneously in the task: four subjects learned only in the very last trials and therefore did not show a stable performance plateau, four subjects showed some learning but did not exceed 80% correct responses, and one subject identified the critical dimension right from the start of the experiment and did not show any learning‐induced change in performance.
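The smoothing and group assignment can be approximated programmatically. This is only a sketch: the authors classified participants from visual inspection of the plots, so the "stable plateau" rule below (the curve reaches 90% at a window centered within the first 120 trials and never drops below it afterwards) is a hypothetical formalization, the "clear increase" criterion is not modeled, and edge trials are handled by keeping only full 31‐trial windows. The 66.4% cut‐off is taken from the text as given.

```python
import numpy as np


def learning_curve(correct, half_window=15):
    """Centered moving average of 0/1 responses over 2*half_window+1 trials (full windows only)."""
    w = 2 * half_window + 1
    return np.convolve(np.asarray(correct, float), np.ones(w) / w, mode="valid")


def classify(correct, half_window=15, plateau=0.90, by_trial=120, chance_cutoff=0.664):
    """Hypothetical rule-based version of the learner / nonlearner / nonassignable split."""
    curve = learning_curve(correct, half_window)
    centers = np.arange(half_window, half_window + curve.size)  # 0-based trial index of each window center
    if curve.max() < chance_cutoff:
        return "nonlearner"
    above = curve >= plateau
    for i, center in enumerate(centers):
        if center >= by_trial:   # plateau must be reached within the first `by_trial` trials
            break
        if above[i:].all():      # assumed "stable plateau": never drops below `plateau` again
            return "learner"
    return "nonassignable"
```

A participant at 50% for 40 trials and perfect thereafter would be labeled a learner, whereas one who improves only in the last 20 trials would be nonassignable, mirroring the late learners described above.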

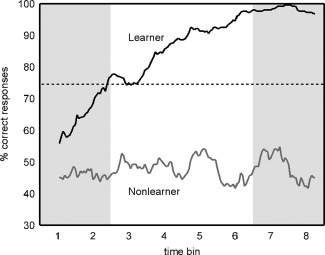

For both the learner and nonlearner groups, we calculated average learning curves, smoothed using a sliding average over 15 trials. At the group level, the lower limit of above‐chance performance was set to 74.6% (binomial test at P < 0.05, n = 15). Using the average curve of the learners' group, we defined two phases of the experiment: an “unlearned” phase, in which the subjects were not able to associate specific FM tones with a reward chance (i.e., the learning curve is at chance level), and a “learned” phase, in which performance reached a stable plateau of peak performance. Note that for the learners, the prediction of receiving a reward is at chance in the first quarter of the experiment and at ceiling in the last quarter (see Fig. 2). Given this average curve progression, we assigned the first quarter of the experiment (trials 1–40) to the “unlearned” phase and the fourth quarter (trials 121–160) to the “learned” phase. All subsequent analyses therefore focus on these parts of the experiment.

Figure 2.

Learning curves derived from the participants' reward‐expectancy responses for the upcoming trial after hearing the FM tone: lines depict average learning curves for learners and nonlearners; dotted lines indicate the lower limit of above‐chance performance. Using the average curve of the learners' group, we defined two phases of the experiment: an “unlearned” phase, in which subjects were not able to associate specific FM tones with a reward chance, and a “learned” phase, in which discrimination performance reached a stable plateau. Given the learners' average curve progression, we assigned the first quarter of the experiment to the “unlearned” phase and the last quarter to the “learned” phase. The corresponding time intervals are marked with gray boxes. All subsequent analyses focused on these parts of the experiment.

We investigated reaction times in the number comparison task for learners and nonlearners during the “unlearned” and “learned” phases of the experiment. For each subject, median reaction times in CS+ and CS− trials were extracted for the first and the last quarter of the experiment; each of these four conditions contained 20 trials. We then calculated group means and standard errors for both groups. To test for reaction time differences between CS+ and CS− trials in the first and fourth quarters of the experiment, we conducted a two‐way repeated‐measures analysis of variance (ANOVA) separately for each group, with the factors time (first/fourth quarter of the experiment) and conditioning (CS+/CS−). Post hoc analyses were conducted using two‐tailed paired t tests (P < 0.05, Bonferroni corrected for multiple comparisons).
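Because both factors have only two levels and are fully within‐subject, each effect of this 2 × 2 repeated‐measures ANOVA can be computed from one subject‐wise contrast: F(1, n − 1) equals the squared one‐sample t statistic of that contrast. The sketch below illustrates this identity with NumPy; the trial coding (`quarter` values 1–4, boolean `is_cs_plus`) and function names are assumptions, and p‐values are omitted to avoid depending on a statistics library.

```python
import numpy as np


def cell_medians(rt, quarter, is_cs_plus):
    """Per-subject 2x2 table of median RTs: rows = (first, fourth) quarter, cols = (CS+, CS-)."""
    rt, quarter, is_cs_plus = map(np.asarray, (rt, quarter, is_cs_plus))
    return np.array([[np.median(rt[(quarter == q) & (is_cs_plus == cs)]) for cs in (True, False)]
                     for q in (1, 4)])


def rm_anova_2x2(cells):
    """cells: (n_subjects, 2 time, 2 conditioning). Returns F(1, n-1) per effect via t**2."""
    x = np.asarray(cells, float)
    n = x.shape[0]
    contrasts = {
        "time": x[:, 1, :].mean(axis=1) - x[:, 0, :].mean(axis=1),
        "conditioning": x[:, :, 0].mean(axis=1) - x[:, :, 1].mean(axis=1),
        "time_x_conditioning": (x[:, 1, 0] - x[:, 1, 1]) - (x[:, 0, 0] - x[:, 0, 1]),
    }
    f_values = {}
    for name, d in contrasts.items():
        t = d.mean() / (d.std(ddof=1) / np.sqrt(n))  # one-sample t on the subject-wise contrast
        f_values[name] = t ** 2
    return f_values
```

With 16 learners, each F has (1, 15) degrees of freedom, matching the statistics reported for this group.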

fMRI Data Analysis

MRI data were processed and analyzed using SPM8 (FIL, Wellcome Trust Centre for Neuroimaging, UCL, London, UK). To correct for head motion, the functional time series were spatially realigned to the first image of the session. The structural T1‐weighted volume was coregistered to the mean functional image and segmented to obtain spatial normalization parameters. Using these parameters, functional and structural images were normalized to the Montreal Neurological Institute (MNI) template brain. Finally, normalized functional volumes were smoothed with a three‐dimensional Gaussian kernel of 4 mm full width at half maximum (FWHM).
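A Gaussian kernel specified by its FWHM corresponds to a standard deviation σ = FWHM / (2√(2 ln 2)), so the 4 mm kernel has σ ≈ 1.70 mm. The sketch below applies separable smoothing with NumPy only; it is not SPM's `spm_smooth` implementation, it zero‐pads at the volume edges, and the 2 mm normalized voxel size is an assumption (the text does not state the resampled voxel size).

```python
import numpy as np


def fwhm_to_sigma(fwhm):
    """Convert Gaussian full width at half maximum to standard deviation (same units)."""
    return fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def gaussian_kernel_1d(sigma_vox, truncate=4.0):
    """Normalized 1D Gaussian kernel, truncated at `truncate` standard deviations."""
    radius = max(1, int(np.ceil(truncate * sigma_vox)))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return k / k.sum()


def smooth_volume(vol, fwhm_mm=4.0, voxel_mm=(2.0, 2.0, 2.0)):
    """Separable 3D Gaussian smoothing; voxel size is an assumed parameter."""
    out = np.asarray(vol, float)
    for axis, vsize in enumerate(voxel_mm):
        k = gaussian_kernel_1d(fwhm_to_sigma(fwhm_mm) / vsize)
        out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), axis, out)
    return out
```

Smoothing a single bright voxel shows the kernel preserves total signal while spreading it over neighbouring voxels, as long as the volume is larger than the kernel along each axis.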

A mixed‐effects model was used for statistical analysis. The single‐subject model contained separate regressors modeling BOLD responses to CS+ and CS− tones. To investigate changes in neural activity related to learning the reward association, we split each of these regressors into eight time bins. This resulted in 16 tone regressors in total, each accounting for 10 FM tones. Feedback was modeled using four regressors: for CS+ trials, one regressor accounted for reward after fast responses and another for neutral feedback after slow responses; for CS− trials, one regressor modeled correct responses; and feedback after response errors (i.e., no button press or wrong button) in both CS+ and CS− trials was pooled into one additional regressor. Signal changes related to head movement were accounted for by including the six movement parameters estimated by the SPM8 realignment procedure. In total, the single‐subject model consisted of 26 regressors. Time series in each voxel were high‐pass filtered with a cutoff of 1/128 Hz, and temporal autocorrelation across scans was modeled with an AR(1) process.
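The binned tone regressors can be illustrated as follows: each condition's 80 tone onsets are split chronologically into eight bins of 10, and each bin's stick function is convolved with a hemodynamic response function. This is a simplified stand‐in, not SPM's design‐matrix code: the double‐gamma HRF (shape 6 peak, shape 16 undershoot, ratio 1/6) is chosen to resemble SPM's canonical HRF, onsets are rounded to the nearest scan rather than modeled at SPM's finer microtime resolution, and all names are hypothetical.

```python
import math

import numpy as np

TR = 1.5  # s, repetition time


def double_gamma_hrf(tr=TR, length=32.0):
    """Double-gamma HRF sampled every `tr` s, normalized to unit sum (parameters assumed)."""
    t = np.arange(0.0, length, tr)

    def gamma_pdf(x, shape):  # unit-scale gamma density
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def binned_tone_regressors(onsets, labels, n_scans, n_bins=8, tr=TR):
    """Split CS+ and CS- tone onsets (s) into `n_bins` chronological bins each and
    convolve every bin's stick function with the HRF.
    Returns an (n_scans, 2 * n_bins) matrix and matching column names."""
    hrf = double_gamma_hrf(tr)
    onsets = np.asarray(onsets, float)
    labels = np.asarray(labels)
    columns, names = [], []
    for cond in ("CS+", "CS-"):
        cond_onsets = np.sort(onsets[labels == cond])
        for b, chunk in enumerate(np.array_split(cond_onsets, n_bins)):
            stick = np.zeros(n_scans)
            np.add.at(stick, np.round(chunk / tr).astype(int), 1.0)  # one unit impulse per tone
            columns.append(np.convolve(stick, hrf)[:n_scans])
            names.append(f"{cond}_bin{b + 1}")
    return np.column_stack(columns), names
```

The remaining 10 columns of the 26‐regressor model (four feedback regressors and six motion parameters) would be appended in the same way.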

For each subject, we calculated a time‐by‐conditioning interaction contrast to investigate changes in BOLD responses to CS+ and CS− stimuli between the first and the last quarter of the experiment. At the group level, we tested this contrast within the learner and nonlearner groups using one‐sample t tests and between groups using a two‐sample t test. Given the average time course of learning in the learner group, this contrast was designed to reveal learning‐induced plasticity within this group. For the nonlearners, in contrast, we expected no differential effect.
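With eight bins of 10 tones per condition, the first quarter of the experiment (20 CS+ and 20 CS− tones) corresponds to bins 1–2 and the fourth quarter to bins 7–8, assuming tones of both conditions were evenly distributed over quarters (consistent with the 20 trials per condition and quarter in the behavioral analysis). The sketch below builds the corresponding contrast weights over the 26 regressors; the column ordering (CS+ bins, then CS− bins, then feedback and motion) is an assumption.

```python
import numpy as np

N_BINS = 8
N_REGRESSORS = 26  # 16 tone bins + 4 feedback + 6 motion


def time_by_conditioning_contrast(first_bins=(0, 1), last_bins=(6, 7)):
    """(CS+ - CS-)_last quarter - (CS+ - CS-)_first quarter, assuming columns 0-7 = CS+ bins
    and columns 8-15 = CS- bins; feedback and motion columns get zero weight."""
    c = np.zeros(N_REGRESSORS)
    cs_plus = np.arange(N_BINS)
    cs_minus = N_BINS + np.arange(N_BINS)
    for b in last_bins:
        c[cs_plus[b]] += 1.0
        c[cs_minus[b]] -= 1.0
    for b in first_bins:
        c[cs_plus[b]] -= 1.0
        c[cs_minus[b]] += 1.0
    return c
```

The weights sum to zero, so the contrast is insensitive to any effect common to all tone regressors.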

We restricted our analyses and interpretations to three main regions‐of‐interest: the left auditory cortex, the VTA, and the nucleus accumbens. All regions were specified using literature‐based peak coordinates. Left auditory cortex was defined as a sphere of radius 8 mm around [x,y,z] = [−45,−27,6] in MNI space. This peak coordinate originates from a previous study by Thiel et al. [2002a], in which this particular part of left auditory cortex showed learning‐related plasticity in an aversive conditioning task. To restrict the search volume to the temporal lobe, the final volume for this region was specified as the intersection of the 8 mm sphere with a left temporal lobe mask as provided by the WFU PickAtlas extension for SPM [Maldjian et al., 2003]. Similarly, the VTA was anatomically defined as the intersection of a brainstem mask (WFU PickAtlas) and a sphere of radius 6 mm around [x,y,z] = [0,−10,−12] in MNI space [peak coordinate: Aron et al., 2005]. The nucleus accumbens was specified using spheres of radius 6 mm around [x,y,z] = [−12,10,−6] and [x,y,z] = [12,10,−4] in MNI space [peak coordinates: Liu et al., 2011]. The smaller radius used for both subcortical structures accounts for the comparatively small size of these regions. Statistical tests in the region‐of‐interest analysis were restricted to these four volumes (left auditory cortex, VTA, and left and right nucleus accumbens). Results were considered statistically significant at P < 0.05, corrected for family‐wise error (FWE) at the cluster level.
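The ROI construction (a sphere around an MNI peak, optionally intersected with an anatomical mask) can be sketched with a voxel‐to‐world affine. The 2 mm grid below (shape and affine) is a hypothetical example, not necessarily the grid used in the study; the anatomical masks themselves (WFU PickAtlas) are not reproduced and would be passed in as boolean arrays on the same grid.

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm):
    """Boolean mask of voxels whose world (mm) coordinates lie within `radius_mm` of `center_mm`."""
    ijk1 = np.stack(list(np.indices(shape)) + [np.ones(shape)], axis=-1)  # homogeneous voxel indices
    xyz = (ijk1 @ np.asarray(affine, float).T)[..., :3]                    # voxel -> mm
    d2 = ((xyz - np.asarray(center_mm, float)) ** 2).sum(axis=-1)
    return d2 <= radius_mm ** 2


def roi_mask(shape, affine, center_mm, radius_mm, anatomical_mask=None):
    """Sphere, optionally intersected with an anatomical mask (e.g. temporal lobe or brainstem)."""
    mask = sphere_mask(shape, affine, center_mm, radius_mm)
    return mask if anatomical_mask is None else mask & np.asarray(anatomical_mask, bool)


# Hypothetical 2 mm grid in MNI space, used only for illustration
SHAPE_2MM = (91, 109, 91)
AFFINE_2MM = np.array([[2.0, 0, 0, -90], [0, 2.0, 0, -126], [0, 0, 2.0, -72], [0, 0, 0, 1]])
vta_sphere = roi_mask(SHAPE_2MM, AFFINE_2MM, (0, -10, -12), 6.0)
```

On this example grid the VTA peak falls exactly on a voxel center, and the 6 mm sphere contains 123 voxels before intersection with a brainstem mask.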

Post hoc analyses were performed to verify that the statistical differences obtained in this analysis reflect significant differences between responses to CS+ and CS− stimuli after learning the reward association, and that these differences were not present before learning had taken place. For all activation clusters obtained in the region‐of‐interest analysis, we extracted individual beta values as a function of time and conditioning from spheres of 4 mm radius around the group activation peak. These beta values were tested for statistically significant differences using two‐tailed (before learning) and one‐tailed (after learning) t tests (P < 0.05, Bonferroni corrected).

To visualize the time course of learning‐related effects in our regions‐of‐interest, we extracted the individual beta values for each of the eight time bins as a function of stimulus type and group. Data are depicted as group medians and standard errors of the median and represent averaged values of voxels within a sphere of 4 mm radius around the activation peak.

In addition to the main analysis restricted to our hypothesized regions‐of‐interest, we conducted an exploratory whole‐brain analysis for each comparison, using a more liberal combined threshold of P < 0.001 (uncorrected) and a minimum cluster size of k = 10 voxels.
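A combined voxel‐ and extent‐threshold like this one keeps only voxels with P < 0.001 that belong to connected clusters of at least 10 voxels. The sketch below implements it with a breadth‐first flood fill in NumPy; the 6‐connectivity (face neighbours) is an assumption, and SPM's own cluster definition may use a larger neighbourhood, which can merge clusters that are separate here.

```python
from collections import deque

import numpy as np

# 6-connectivity (face neighbours); an assumption, SPM may define clusters differently
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_extent_threshold(p_map, p_thresh=0.001, k_min=10):
    """Keep supra-threshold voxels (p < p_thresh) in clusters of at least k_min voxels."""
    supra = np.asarray(p_map) < p_thresh
    visited = np.zeros(supra.shape, dtype=bool)
    keep = np.zeros(supra.shape, dtype=bool)
    for start in zip(*np.nonzero(supra)):
        if visited[start]:
            continue
        visited[start] = True
        queue, members = deque([start]), [start]
        while queue:  # breadth-first flood fill of one cluster
            x, y, z = queue.popleft()
            for dx, dy, dz in OFFSETS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= nb[a] < supra.shape[a] for a in range(3))
                if inside and supra[nb] and not visited[nb]:
                    visited[nb] = True
                    queue.append(nb)
                    members.append(nb)
        if len(members) >= k_min:
            keep[tuple(np.array(members).T)] = True
    return keep
```

A 10‐voxel cluster survives this threshold, whereas an isolated 3‐voxel cluster with equally low P values is discarded.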

RESULTS

Behavioral Data

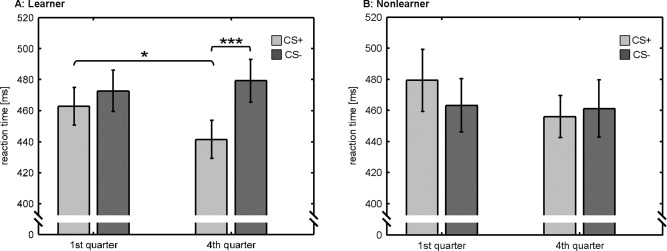

For both groups, we compared reaction times in the task as a function of conditioning (CS+/CS−) and time (first/fourth quarter of the experiment). Within the group of learners, a two‐way repeated‐measures ANOVA revealed a statistically significant main effect of conditioning (F(1,15) = 19.23, P < 0.001) as well as a significant conditioning‐by‐time interaction (F(1,15) = 8.63, P < 0.05). Post hoc t tests showed that reaction times in potentially rewarded (CS+) trials were significantly faster in the fourth quarter of the experiment (i.e., after learners had learned the reward association) than in the first quarter (i.e., before learning the reward association; P < 0.05, Bonferroni corrected). Moreover, in the fourth quarter of the experiment, reaction times in CS+ trials were significantly faster than in nonreward‐predicting (CS−) trials. Within the group of nonlearners, no significant reaction time differences were observed. Average reaction times for both groups are depicted in Figure 3. Note that learners and nonlearners did not differ in the individually calibrated reaction time threshold used in the number comparison task (learners: 405 ± 17 ms, nonlearners: 396 ± 14 ms, P = 0.50). However, learners gained significantly more reward than nonlearners in the actual experiment (learners: 31.0 ± 1.5 €, nonlearners: 25.0 ± 2.0 €, P < 0.05).

Figure 3.

Reaction times in the number comparison task for learners and nonlearners: within the learners, reaction times in potentially rewarded (CS+) trials were significantly shorter than in nonreward‐predicting (CS−) trials after learning the reward association (i.e., in the fourth quarter of the experiment). In addition, reaction times in CS+ trials were significantly shorter than in the first quarter of the experiment (i.e., before learning the reward association). Nonlearners showed no statistically significant reaction time differences related to conditioning (CS+/CS−) or time (first/last quarter of the experiment). The figure depicts average reaction times and standard errors. Statistically significant differences are marked by asterisks (two‐tailed t tests at P < 0.05, corrected for multiple comparisons).

Functional MRI Data

In analogy to the behavioral analysis, we focused on the conditioning‐by‐time interaction $(\mathrm{CS}^{+} - \mathrm{CS}^{-})_{\text{4th quarter}} - (\mathrm{CS}^{+} - \mathrm{CS}^{-})_{\text{1st quarter}}$ to identify brain regions showing learning‐induced functional plasticity. In line with our hypothesis on the regions involved in the formation of this kind of plasticity, we restricted our main analysis to three regions‐of‐interest: the left auditory cortex, the VTA, and the nucleus accumbens.

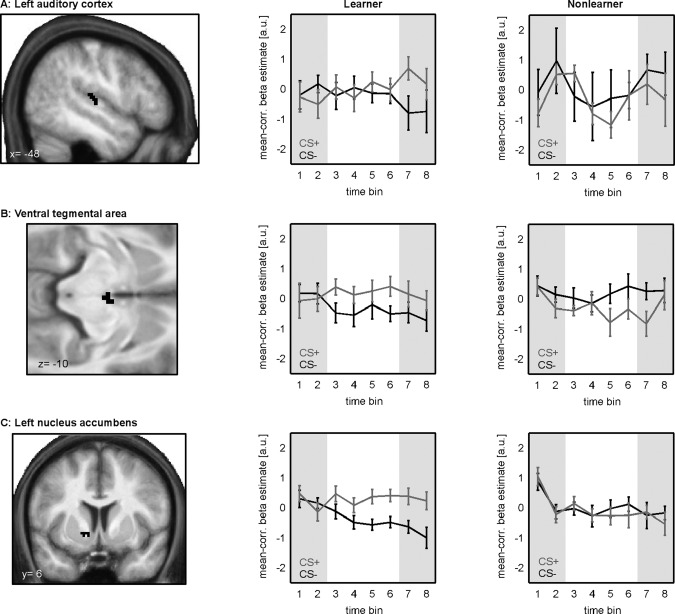

Within the group of learners, we found positive interaction effects (at P < 0.05, FWE corrected) in left auditory cortex ([x,y,z] = [−48,−24,12], k = 11), left nucleus accumbens ([x,y,z] = [−16,6,−4], k = 6), and in the VTA ([x,y,z] = [2,−12,−10], k = 7). No effects were observed in right nucleus accumbens. Activation clusters obtained from this analysis are depicted in Figure 4. Within all regions, post hoc t tests showed that responses to CS+ stimuli were significantly increased as compared to CS− stimuli in the fourth quarter of the experiment (P < 0.05, Bonferroni corrected), whereas no difference was observed before learning the reward association. Nonlearners showed no effects within those regions.

Figure 4.

Learning‐related changes in BOLD responses to reward‐predicting (CS+) and nonreward‐predicting (CS−) stimuli: the figure depicts activation clusters in learners obtained in a region‐of‐interest analysis on the conditioning‐by‐time interaction, restricted to left auditory cortex, the VTA, and the nucleus accumbens. Learners showed statistically significant positive interaction effects in all regions except the right nucleus accumbens (P < 0.05, FWE corrected). No effects were observed in the group of nonlearners. The right columns depict median time courses of beta estimates as a function of conditioning (CS+/CS−) for both groups. Beta values are mean‐corrected at the individual level. The first and the fourth quarter of the experiment are highlighted in gray.

To visualize the time courses of beta estimates for rewarded (CS+) and nonrewarded (CS−) stimuli, we extracted mean‐corrected beta values averaged around the activation peaks for each subject. Figure 4 (central column) shows median beta values for the learner group as a function of time and conditioning. Note the higher BOLD activity for CS+ than for CS− trials at the end of the experiment, i.e., after learning had taken place. For the group of nonlearners, we found no significant interaction effects in any of the specified regions‐of‐interest. To compare the time courses of beta values, we extracted mean‐corrected values for this group from the same regions as for the learners [see Fig. 4 (right column)].
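The mean correction and group median used for these time courses can be sketched as below. The text does not define "mean‐corrected" precisely; subtracting each subject's mean over all 16 binned betas (both conditions, eight bins) is an assumption, as is the array layout.

```python
import numpy as np


def group_median_timecourse(betas):
    """betas: (n_subjects, 2 conditions [CS+, CS-], 8 bins).

    Subtract each subject's mean over all 16 betas (assumed meaning of
    'mean-corrected'), then take the median across subjects per condition and bin.
    """
    b = np.asarray(betas, float)
    centered = b - b.mean(axis=(1, 2), keepdims=True)
    return np.median(centered, axis=0)  # shape (2, 8)
```

Removing the subject mean discards between‐subject offsets in overall BOLD amplitude, so the plotted curves emphasize the relative CS+/CS− difference over time.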

A direct comparison between learners and nonlearners revealed a statistically significant positive effect (learners > nonlearners) in the left auditory cortex (activation peak at [x,y,z] = [−48,−22,10], cluster size k = 22) and bilaterally in the nucleus accumbens (left: [x,y,z] = [−12,4,−6], k = 25; right: [x,y,z] = [12,6,−6], k = 33), but not in the VTA (P < 0.05, FWE corrected). Again, we performed post hoc t tests within the identified activation clusters to compare responses to CS+ and CS− stimuli before and after learning the reward association. Within all regions, learners showed significantly increased responses to CS+ compared with CS− stimuli in the fourth quarter of the experiment, whereas no significant differences were observed in the first quarter. For nonlearners, post hoc tests revealed significantly increased responses to CS− compared with CS+ stimuli in the fourth quarter of the experiment in the left nucleus accumbens; no such effects were observed for this group in auditory cortex or right nucleus accumbens (all tests: P < 0.05, Bonferroni corrected).

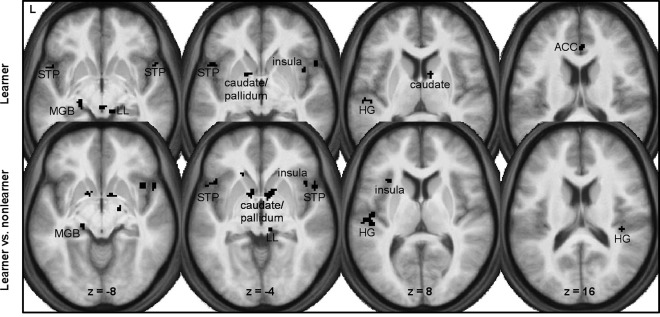

We additionally conducted an exploratory whole‐brain analysis on the conditioning‐by‐time interaction (at P < 0.001, k = 10). Within the group of learners, we found a pattern of activation including, among others, the right anterior cingulate cortex, the caudate nucleus, the globus pallidus, the left auditory cortex, and the left medial geniculate nucleus (see Fig. 5A). Again, no significant activations were found in the group of nonlearners. As depicted in Figure 5B, a direct comparison of interaction effects between learners and nonlearners showed significant differences in the caudate nucleus, the globus pallidus, the left medial geniculate nucleus, and bilateral auditory cortex. A complete list of all activation clusters revealed by the whole‐brain analysis is given in Table 1.

Figure 5.

Results of the additional whole‐brain analysis: the figure shows statistically significant positive effects of the conditioning‐by‐time interaction for the group of learners (upper row) and between learners and nonlearners (lower row) at a threshold of P < 0.001 (uncorrected, minimum cluster size k = 10). For both comparisons, interaction effects were observed in the caudate, Heschl's gyrus (HG), the insula, the lateral lemniscus (LL), the medial geniculate nucleus (MGB), the pallidum, and the superior temporal pole (STP). Within the group of learners, we found additional learning‐related changes in the anterior cingulate cortex (ACC).

Table 1.

Results of the exploratory whole‐brain analysis on the conditioning by time interaction within the group of learners (A) and in a between‐group comparison of learners and nonlearners (B)

| Comparison | x | y | z | Volume (mm³) | Z | Region |
|---|---|---|---|---|---|---|
| (A) Learner | −54 | −24 | 10 | 288 | 4.06 | Left Heschl's gyrus/sulcus |
| | −48 | 14 | −6 | 152 | 3.50 | Left superior temporal pole |
| | 52 | 14 | −6 | 136 | 3.84 | Right superior temporal pole |
| | −20 | −24 | −8 | 96 | 3.78 | Left medial geniculate nucleus |
| | 4 | −26 | −10 | 160 | 3.60 | Oculomotor nucleus |
| | 14 | −30 | −10 | 88 | 3.63 | Right lateral lemniscus |
| | −16 | 6 | −4 | 88 | 3.89 | Left pallidum |
| | 10 | 6 | 8 | 120 | 3.83 | Right caudate nucleus |
| | 42 | 10 | −4 | 80 | 3.59 | Right insula |
| | 2 | 32 | 16 | 128 | 3.98 | Right anterior cingulate cortex |
| | −24 | 42 | 18 | 80 | 3.59 | Left middle frontal gyrus |
| (B) Learner vs. nonlearner | −48 | −22 | 10 | 560 | 3.68 | Left Heschl's gyrus/sulcus |
| | 40 | −28 | 14 | 96 | 3.83 | Right Heschl's gyrus |
| | −18 | −24 | −8 | 88 | 3.55 | Left medial geniculate nucleus |
| | 10 | −28 | −4 | 112 | 3.64 | Right superior colliculus |
| | 14 | −34 | −18 | 104 | 3.84 | Right lateral lemniscus |
| | −12 | 4 | −6 | 272 | 4.10 | Left pallidum/caudate nucleus |
| | 8 | 4 | 6 | 792 | 4.09 | Right pallidum/caudate nucleus |
| | −46 | 16 | −4 | 200 | 3.57 | Left insula/superior temporal pole |
| | −42 | −10 | 2 | 80 | 3.59 | Left insula |
| | −32 | 20 | 8 | 88 | 3.62 | Left insula |
| | 40 | 14 | −8 | 496 | 3.87 | Right insula/superior temporal pole |

The table states peak MNI coordinates, cluster volume in mm³, Z values, and corresponding brain regions. All clusters were identified using a combined threshold of P < 0.001 (uncorrected) and a minimum cluster size of k = 10.

DISCUSSION

These data show that learning‐dependent plasticity in human auditory cortex can be induced by appetitive operant conditioning. We demonstrate that this kind of plasticity depends on learning the association between a reward‐predicting tone and the reward, because differential neural activity to the CS+ and CS− was observed only after learning had taken place and only in subjects who had learned the tone‐reward association. Additionally, in line with earlier studies indicating a role of the dopaminergic system in reward learning, we found learning‐related differences in neural activity in the VTA and the nucleus accumbens.

Learning‐Related Plasticity in Auditory Areas

Herein, we show that auditory plasticity can be observed in humans not only in aversive but also in appetitive conditioning paradigms. This complements several earlier animal studies showing changes in auditory cortex receptive fields, phase locking, and response amplitudes during appetitive conditioning [Beitel, 2003; Blake et al., 2006; Brosch et al., 2011; Hui et al., 2009; Polley, 2004; Takahashi et al., 2010]. Note, however, that the differential responses we observed in this experiment cannot be explained by receptive field shifts, because sounds of both categories share the same frequency range. The changes in cortical stimulus representation may rather reflect the formation of a distinct categorization pattern. This assumption is based on findings reported by Ohl et al. [2001], who showed that gerbils trained to categorize rising and falling FM tones to avoid a footshock developed distinct spatial patterns of auditory cortex activity for the two tone categories after learning the categorization principle.

In humans, a differential, task‐dependent involvement of left auditory cortex during categorization of FM tones has been demonstrated by Brechmann and Scheich [2005]. They reported that right auditory cortex activation changed systematically with task performance when subjects categorized FM tones by their direction, whereas left auditory cortex was involved when subjects categorized FM tones by their duration. The left‐hemispheric effect was initially interpreted as consistent with the specialization of left auditory cortex for temporal processing [Zatorre and Belin, 2001]. However, it is also consistent with a specialization of the left hemisphere for sequential processing [Bradshaw and Nettleton, 1981; Brechmann et al., 2007], because, in contrast to the direction of an FM tone, the duration of a tone can only be evaluated by comparison with previous tones in a sequence. In the present fMRI study, subjects had to learn to categorize a similar set of FM stimuli according to their duration. In contrast to the study of Brechmann and Scheich [2005], the categorization had to be learned by trial and error rather than by instruction. Nevertheless, we found learning‐related changes in activity in left auditory cortex, which underlines the specific role of left auditory cortex in categorizing sounds by their duration.

We found that functional plasticity in auditory cortex emerged only after participants had learned the association between FM tone categories and reward chance. This agrees well with a recent study in rats by Takahashi et al. [2010], who reported that rats trained to nose‐poke in response to a conditioned tone to obtain a food reward showed progressive changes in the tonotopic organization of auditory cortex that were related to the current stage of training. Although the techniques used to measure auditory plasticity in animals and humans differ in several respects, both our data and the animal data suggest that the plasticity observed in appetitive conditioning is related to the learning status of the subject. In line with this, the group of nonlearners did not show differential BOLD responses to CS+ and CS− stimuli. On a descriptive level, however, nonlearners showed fluctuations in the auditory cortex BOLD signal that were not specific to CS+ or CS− stimuli. We propose that these fluctuations over time might be related to changes in the nonlearners' motivation to solve the task when they were unable to find a suitable sound categorization pattern. Such changes in motivation might in turn influence attention to the FM tones and thus the BOLD signal observed in auditory cortex.

The learning‐related effect was not restricted to the cortical level: our whole‐brain analysis indicates similar changes in the medial geniculate nucleus. Previous animal studies have not only reported receptive‐field retuning of neurons in the ventral medial geniculate body during classical conditioning [e.g., Edeline and Weinberger, 1991] but also provided evidence that this region promotes the formation of functional plasticity in the auditory system. In particular, direct electrical microstimulation of the medial geniculate body has been shown to shift or broaden frequency‐tuning curves in primary auditory cortex [Ma and Suga, 2009] and in the inferior colliculus [Wu and Yan, 2007].

Role of the Reward System in Learning‐Related Plasticity

Learners not only developed a learning‐related dissociation of BOLD responses to CS+ and CS− stimuli within the auditory system but also showed very similar effects in the VTA and nucleus accumbens. This result agrees well with many previous studies on reward processing demonstrating that both regions respond differentially to reward‐predicting compared with neutral stimuli [Knutson and Gibbs, 2007; Liu et al., 2011; O'Doherty et al., 2002]. In our data, however, the dissociation of VTA and nucleus accumbens responses to CS+ and CS− relied mainly on a decrease in neural activity to the CS−, whereas most prior human and animal studies reported increased neural activity to reward‐predicting stimuli (see Schultz [2010] for a review). Recordings from awake monkeys nevertheless suggest that responses of dopaminergic midbrain neurons can also decrease to nonrewarded stimuli or to overtrained reward‐predicting stimuli [Ljungberg et al., 1992]. More data are therefore needed to clarify the significance of the decrease in VTA and nucleus accumbens responses to nonrewarded stimuli that we observed over the course of learning.

Our whole‐brain analysis also revealed increased activity to CS+ compared with CS− stimuli in further reward‐related areas that have previously been shown to be involved in anticipating primary and secondary reinforcers, such as the caudate nucleus/pallidum, the insula, and the anterior cingulate cortex [Liu et al., 2011; O'Doherty et al., 2002; Wittmann et al., 2005, for review].

Reward‐related increases in neural activity in VTA and nucleus accumbens are usually attributed to the dopaminergic neurotransmitter system [Arias‐Carrión and Poppel, 2007; O'Doherty, 2004; Ye et al., 2011]. Note, however, that fMRI cannot directly measure the contribution of dopamine to these changes in BOLD activity. Nevertheless, a combined [¹¹C]raclopride positron emission tomography and fMRI study using a similar paradigm suggests that CS+‐induced BOLD activity in the VTA correlates with reward‐related dopamine release in the ventral striatum [Schott et al., 2008].

Previous work has provided strong evidence that differential dopaminergic responses to reward‐predicting and neutral sensory stimuli are crucial for the development of learning‐induced plasticity [Camara et al., 2009; Glimcher, 2011; Kubikova and Kostal, 2010; Morris et al., 2010; Samson et al., 2010]. Stark and Scheich [1997] suggested that dopamine might be involved in the initial formation of behaviorally relevant stimulus associations in auditory cortex. Using chronic brain microdialysis during footshock avoidance training in gerbils, they reported an increased concentration of homovanillic acid, a major dopamine metabolite, within auditory cortex. This increase was observed only during initial training, not in later relearning sessions. In line with this, Schicknick et al. [2008] found that administering the D1/D5 dopamine receptor agonist SKF‐38393 before or shortly after initial training on a footshock avoidance task improved FM tone discrimination performance in gerbils, whereas SKF‐38393 was ineffective when administered in later retraining sessions. In addition, animal work has shown that pairing tones with direct electrical stimulation of the VTA changes the cortical representation of the CS [Bao et al., 2001; Hui et al., 2009; Kisley and Gerstein, 2001]. Interestingly, Bao et al. [2001] reported that this effect was abolished by blocking dopaminergic D1 and D2 receptors, suggesting that dopamine might mediate the plasticity‐promoting effects of VTA stimulation. To explore this hypothesis further, we conducted a post hoc dynamic causal modeling (DCM) analysis of effective connectivity between auditory cortex and VTA while participants learned the association between FM tones and reward (see Supporting Information). The DCM results suggest learning‐related changes in the strength of VTA input into auditory cortex.
Interestingly, post hoc tests on the connection strengths within the winning model provided no evidence for a stable intrinsic connection between the two regions during the experiment, but showed that connection strength depended on stimulus type and learning. These findings suggest that VTA inputs into auditory cortex are not permanently present during all phases of the experiment but are directly linked to learning the association between FM tone and reward chance. These additional results support the view that dopaminergic inputs into auditory cortex are critical for the formation of learning‐dependent plasticity in auditory cortex.
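What it means for a connection to be absent from the intrinsic (fixed) connectivity yet switched on by stimulus type and learning can be illustrated with the bilinear neural state equation of DCM, dz/dt = (A + Σ_j u_j B^(j)) z + C u, where A holds the fixed connections, B^(j) the changes in coupling induced by modulatory input u_j, and C the weights of the driving inputs. The sketch below is a toy two‐region example (auditory cortex and VTA), not the model reported in the Supporting Information. The region ordering, the assumption that the tone drives both regions, the single modulatory input standing for "CS+ after learning", all parameter values, and the omission of the haemodynamic forward model are illustrative assumptions.

```python
# Toy two-region bilinear DCM at the neural level (no haemodynamic model).
# State equation: dz/dt = (A + u_mod * u * B) z + C * u
# z[0] = auditory cortex (AC), z[1] = VTA. All values are hypothetical.

A = [[-1.0, 0.0],  # AC self-decay; no fixed VTA -> AC connection (A[0][1] = 0)
     [0.0, -1.0]]  # VTA self-decay
B = [[0.0, 0.8],   # modulatory input adds a VTA -> AC connection
     [0.0, 0.0]]
C = [1.0, 1.0]     # the tone drives both regions (assumption)


def peak_ac_response(u_mod, dt=0.01, t_end=4.0, stim_dur=1.0):
    """Euler-integrate one trial and return the peak AC neural state.

    u_mod = 1.0 stands for a hypothetical 'CS+ after learning' trial,
    u_mod = 0.0 for CS- or pre-learning trials.
    """
    z = [0.0, 0.0]
    peak = 0.0
    for n in range(int(round(t_end / dt))):
        u = 1.0 if n * dt < stim_dur else 0.0  # tone on for stim_dur seconds
        # effective coupling in this time step; modulation acts only while the tone is on
        J = [[A[r][c] + u_mod * u * B[r][c] for c in range(2)] for r in range(2)]
        dz = [sum(J[r][c] * z[c] for c in range(2)) + C[r] * u for r in range(2)]
        z = [z[r] + dt * dz[r] for r in range(2)]
        peak = max(peak, z[0])
    return peak


unmodulated = peak_ac_response(u_mod=0.0)
modulated = peak_ac_response(u_mod=1.0)
print(f"peak AC state: {unmodulated:.3f} (no modulation) vs {modulated:.3f} (modulated)")
```

Setting B[0][1] or u_mod to zero removes the difference between the two trial types, which is the toy analogue of "no stable intrinsic connection". In an actual DCM, A, B, and C are estimated from the BOLD time series by Bayesian model inversion and alternative models are compared by their evidence; this sketch only simulates fixed, made‐up parameters.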

In summary, these data show the formation of learning‐related plasticity in human auditory cortex during operant appetitive conditioning. This plasticity is strongly related to learning which auditory stimulus class predicts reward, as the effects occurred only after the reward association had been learned and were absent in nonlearning subjects. Our observation of similar learning‐related differences in VTA and nucleus accumbens may point to a dopaminergic influence on this process, as suggested by previous studies in animals.

Supporting information

Supporting Information Figure 1.

Supporting Information Figure 2.

Supporting Information

ACKNOWLEDGMENTS

The authors thank Astrid Gieske for conducting the pilot experiment, Anja Lindig and Monika Dobrowolny for fMRI data acquisition in Magdeburg, and Marcus Jörn for programming the experimental control software. The helpful comments by four anonymous reviewers are acknowledged.

REFERENCES

- Arias‐Carrión O, Poppel E (2007): Dopamine, learning, and reward‐seeking behavior. Acta Neurobiol Exp (Wars) 67:481–488.

- Aron A, Fisher H, Mashek DJ, Strong G, Li H, Brown LL (2005): Reward, motivation, and emotion systems associated with early‐stage intense romantic love. J Neurophysiol 94:327–337.

- Bakin JS, South DA, Weinberger NM (1996): Induction of receptive field plasticity in the auditory cortex of the guinea pig during instrumental avoidance conditioning. Behav Neurosci 110:905–913.

- Bao S, Chan VT, Merzenich MM (2001): Cortical remodelling induced by activity of ventral tegmental dopamine neurons. Nature 412:79–83.

- Beitel RE (2003): Reward‐dependent plasticity in the primary auditory cortex of adult monkeys trained to discriminate temporally modulated signals. Proc Natl Acad Sci USA 100:11070–11075.

- Blake DT, Heiser MA, Caywood M, Merzenich MM (2006): Experience‐dependent adult cortical plasticity requires cognitive association between sensation and reward. Neuron 52:371–381.

- Bradshaw JL, Nettleton NC (1981): The nature of hemispheric specialization in man. Behav Brain Sci 4:51–91.

- Brechmann A, Scheich H (2005): Hemispheric shifts of sound representation in auditory cortex with conceptual listening. Cereb Cortex 15:578–587.

- Brechmann A, Gaschler‐Markefski B, Sohr M, Yoneda K, Kaulisch T, Scheich H (2007): Working memory specific activity in auditory cortex: Potential correlates of sequential processing and maintenance. Cereb Cortex 17:2544–2552.

- Brosch M, Scheich H, Selezneva E (2011): Representation of reward feedback in primate auditory cortex. Front Syst Neurosci 5:1–12.

- Camara E, Rodriguez‐Fornells A, Ye Z, Munte TF (2009): Reward networks in the brain as captured by connectivity measures. Front Neurosci 3:350–362.

- Condon CD, Weinberger NM (1991): Habituation produces frequency‐specific plasticity of receptive fields in the auditory cortex. Behav Neurosci 105:416–430.

- Edeline JM, Weinberger NM (1991): Thalamic short‐term plasticity in the auditory system: Associative retuning of receptive fields in the ventral medial geniculate body. Behav Neurosci 105:618–639.

- Feldman DE (2005): Map plasticity in somatosensory cortex. Science 310:810–815.

- Fields HL, Hjelmstad GO, Margolis EB, Nicola SM (2007): Ventral tegmental area neurons in learned appetitive behavior and positive reinforcement. Annu Rev Neurosci 30:289–316.

- Froemke RC, Jones BJ (2011): Development of auditory cortical synaptic receptive fields. Neurosci Biobehav Rev 35:2105–2113.

- Gilbert CD, Li W, Piech V (2009): Perceptual learning and adult cortical plasticity. J Physiol 587:2743–2751.

- Glimcher PW (2011): Understanding dopamine and reinforcement learning: The dopamine reward prediction error hypothesis. Proc Natl Acad Sci USA 108(Suppl 3):15647–15654.

- Hui GK, Wong KL, Chavez CM, Leon MI, Robin KM, Weinberger NM (2009): Conditioned tone control of brain reward behavior produces highly specific representational gain in the primary auditory cortex. Neurobiol Learn Mem 92:27–34.

- Jäncke L (2009): The plastic human brain. Restor Neurol Neurosci 27:521–538.

- Kisley MA, Gerstein GL (2001): Daily variation and appetitive conditioning‐induced plasticity of auditory cortex receptive fields. Eur J Neurosci 13:1993–2003.

- Knutson B, Gibbs SE (2007): Linking nucleus accumbens dopamine and blood oxygenation. Psychopharmacology (Berl) 191:813–822.

- Knutson B, Westdorp A, Kaiser E, Hommer D (2000): FMRI visualization of brain activity during a monetary incentive delay task. Neuroimage 12:20–27.

- Kubikova L, Kostal L (2010): Dopaminergic system in birdsong learning and maintenance. J Chem Neuroanat 39:112–123.

- Liu X, Hairston J, Schrier M, Fan J (2011): Common and distinct networks underlying reward valence and processing stages: A meta‐analysis of functional neuroimaging studies. Neurosci Biobehav Rev 35:1219–1236.

- Ljungberg T, Apicella P, Schultz W (1992): Response of monkey dopamine neurons during learning of behavioural reactions. J Neurophysiol 67:145–163.

- Ma X, Suga N (2009): Specific and nonspecific plasticity of the primary auditory cortex elicited by thalamic auditory neurons. J Neurosci 29:4888–4896.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH (2003): An automated method for neuroanatomic and cytoarchitectonic atlas‐based interrogation of fMRI data sets. Neuroimage 19:1233–1239.

- Morris G, Schmidt R, Bergman H (2010): Striatal action‐learning based on dopamine concentration. Exp Brain Res 200:307–317.

- Münte TF, Altenmuller E, Jäncke L (2002): The musician's brain as a model of neuroplasticity. Nat Rev Neurosci 3:473–478.

- O'Doherty JP (2004): Reward representations and reward‐related learning in the human brain: Insights from neuroimaging. Curr Opin Neurobiol 14:769–776.

- O'Doherty JP, Deichmann R, Critchley HD, Dolan RJ (2002): Neural responses during anticipation of primary taste reward. Neuron 33:815–826.

- Ohl FW, Scheich H (2005): Learning‐induced plasticity in animal and human auditory cortex. Curr Opin Neurobiol 15:470–477.

- Ohl FW, Scheich H, Freeman WJ (2001): Change in pattern of ongoing cortical activity with auditory category learning. Nature 412:733–736.

- Op de Beeck HP, Baker CI (2010): The neural basis of visual object learning. Trends Cogn Sci 14:22–30.

- Pappata S, Dehaene S, Poline JB, Gregoire MC, Jobert A, Delforge J, Frouin V, Bottlaender M, Dolle F, Di Giamberardino L, Syrota A (2002): In vivo detection of striatal dopamine release during reward: A PET study with [(11)C]raclopride and a single dynamic scan approach. Neuroimage 16:1015–1027.

- Polley DB (2004): Associative learning shapes the neural code for stimulus magnitude in primary auditory cortex. Proc Natl Acad Sci USA 101:16351–16356.

- Samson RD, Frank MJ, Fellous J‐M (2010): Computational models of reinforcement learning: The role of dopamine as a reward signal. Cogn Neurodyn 4:91–105.

- Schicknick H, Schott BH, Budinger E, Smalla KH, Riedel A, Seidenbecher CI, Scheich H, Gundelfinger ED, Tischmeyer W (2008): Dopaminergic modulation of auditory cortex‐dependent memory consolidation through mTOR. Cereb Cortex 18:2646–2658.

- Schott BH, Minuzzi L, Krebs RM, Elmenhorst D, Lang M, Winz OH, Seidenbecher CI, Coenen HH, Heinze HJ, Zilles K, Düzel E, Bauer A (2008): Mesolimbic functional magnetic resonance imaging activations during reward anticipation correlate with reward‐related ventral striatal dopamine release. J Neurosci 28:14311–14319.

- Schultz W (2010): Dopamine signals for reward value and risk: Basic and recent data. Behav Brain Funct 6:24.

- Stark H, Scheich H (1997): Dopaminergic and serotonergic neurotransmission systems are differentially involved in auditory cortex learning: A long‐term microdialysis study of metabolites. J Neurochem 68:691–697.

- Takahashi H, Funamizu A, Mitsumori Y, Kose H, Kanzaki R (2010): Progressive plasticity of auditory cortex during appetitive operant conditioning. Biosystems 101:37–41.

- Thiel CM, Bentley P, Dolan RJ (2002a): Effects of cholinergic enhancement on conditioning‐related responses in human auditory cortex. Eur J Neurosci 16:2199–2206.

- Thiel CM, Friston KJ, Dolan RJ (2002b): Cholinergic modulation of experience‐dependent plasticity in human auditory cortex. Neuron 35:567–574.

- Wittmann BC, Schott BH, Guderian S, Frey JU, Heinze H‐J, Düzel E (2005): Reward‐related fMRI activation of dopaminergic midbrain is associated with enhanced hippocampus‐dependent long‐term memory formation. Neuron 45:459–467.

- World Medical Association (2008): World Medical Association Declaration of Helsinki. Ethical principles for medical research involving human subjects. Available online under http://www.wma.net/en/30publications/10policies/b3/index.html (accessed 16 April 2012).

- Wu Y, Yan J (2007): Modulation of the receptive fields of midbrain neurons elicited by thalamic electrical stimulation through corticofugal feedback. J Neurosci 27:10651–10658.

- Ye Z, Hammer A, Camara E, Münte TF (2011): Pramipexole modulates the neural network of reward anticipation. Hum Brain Mapp 32:800–811.

- Zatorre RJ, Belin P (2001): Spectral and temporal processing in human auditory cortex. Cereb Cortex 11:946–953.
