Abstract

Being looked at by a person enhances the subsequent memorability of her/his identity. Here, we tested the specificity of this effect and its underlying brain processes. We manipulated three social cues displayed by an agent: Gaze Direction (Direct/Averted), Emotional Expression (Anger/Neutral), and Pointing gesture (Presence/Absence). Our behavioral experiment showed that perceiving direct as compared with averted gaze enhanced subsequent retrieval of face identity. A similar retrieval enhancement was found when a pointing gesture was absent as compared with present, but not for angry as compared with neutral expressions. The fMRI results revealed amygdala activity in both the Anger and Direct gaze conditions, suggesting emotional arousal. Yet the right hippocampus, known to play a role in self‐relevant memory processes, was activated only during direct gaze perception. Further analyses suggest that right hippocampal activity was maximal for the most self‐relevant social event (i.e. an actor expressing anger and pointing toward the participant with direct gaze). Altogether, our results suggest that the perception of self‐relevant social cues such as direct gaze automatically prompts “self‐relevant memory” processes. Hum Brain Mapp 33:2428–2440, 2012. © 2011 Wiley Periodicals, Inc.

Keywords: direct gaze, emotion, fMRI, hippocampus, memory

INTRODUCTION

The direction of the body and gaze of surrounding agents is fundamental information that must be decoded in order to infer the focus of their attention and adapt one's interpersonal behavior accordingly [George and Conty, 2008]. Notably, being looked at by someone (i.e. perceiving direct gaze) indicates that she or he may want to communicate with us [Kampe et al., 2003], which leads us to attach special meaning to the information this person conveys. Experimental findings converge toward the view that, from birth, humans are prone to process direct gaze, which in turn automatically modulates emotion, attention, and performance [Conty et al., 2006, 2010; Farroni et al., 2002; Senju et al., 2005; Von Grünau and Anston, 1995]. In particular, the perception of another's direct gaze facilitates person construal [Mason et al., 2006] and affects the subsequent memorability of face identity: Mason et al. [2004] showed enhanced retrieval for faces that had previously displayed direct as compared with averted gaze. How does such an effect occur?

A first hypothesis is that the memory effect of perceiving direct gaze results from an emotional arousal bias. In line with this view, the perception of both emotional expressions and direct gaze enhances amygdala activation during face processing [Adolphs, 2002; Kawashima et al., 1999]. Electrophysiological and lesion studies provide strong evidence that the amygdala plays a critical role in rapidly mediating the effects of emotionally arousing events on memory consolidation, and more particularly on storage processes in (para‐)hippocampal structures [Cahill and McGaugh, 1998; McGaugh, 2000, 2004]. Such findings support the concept of “emotional tagging”, the idea that “emotional arousing events mark an experience as important” [Richter‐Levin and Akirav, 2003]. However, if the memory effect of direct gaze results purely from emotional arousal, enhanced retrieval of face identity should also be found for emotional as compared with neutral faces. Yet, in healthy subjects, such a global memory bias has not been consistently reported [see Armony and Sergerie, 2007; Johansson et al., 2004]. Hence the question arises as to which aspect of an emotional event marks it as important.

In healthy subjects, the face memory literature usually reports better recognition for happy faces than for other emotional expressions or neutral faces [D'Argembeau and Van der Linden, 2007; Sweeny et al., 2009], rather than a global memory bias for all emotions compared with neutral expressions. Patient studies reveal specific face memory biases in pathologies associated with social impairments. For example, clinically depressed patients, in contrast to healthy subjects, show a robust memory bias for sad as compared with neutral or other emotional faces [Gilboa‐Schechtman et al., 2002; see also Ridout et al., 2009]. These results led D'Argembeau et al. [2003] to propose that emotional face memory biases mainly depend on the social meaning that the perceived emotional expressions carry for the self. Altogether, these findings call for a refinement of the first hypothesis: the memory effect for faces with direct gaze could be related to a self‐relevant memory bias. Indeed, the evaluation of emotional stimuli (and in particular of emotional faces) has been suggested to depend on the degree of self‐relevance of the emotional event [Sander et al., 2003, 2007; Vrticka et al., 2009]. For instance, angry faces are perceived as more intense with direct than with averted gaze, possibly because anger directed toward the observer poses a direct threat [Cristinzio et al., 2010; N'Diaye et al., 2009; Sander et al., 2007]. Thus, being observed by another could specifically mark a social event as important for the self and, as such, incidentally affect the memorability of her/his identity. In line with this view, high self‐involvement during the encoding of an emotional event increases the memorability of the event and leads to specific activation in the amygdala‐hippocampal region [Muscatell et al., 2009].

Yet another hypothesis was discussed by Mason et al. [2004]: the memory effect of perceiving direct gaze could reflect a mere spatial attentional bias. Investigations of spatial orienting of attention have demonstrated automatic attentional shifts when perceiving averted eyes [for a review, see Frischen et al., 2007]. Indeed, both direct and averted gaze are communicative cues that can drive the observer's attentional focus either toward the agent's eyes in the case of direct gaze (mutual attention) or toward an external object (joint attention) [Itier and Batty, 2009]. Hence, averted gaze is a powerful attentional and spatial cue that can temporarily divert the observer's attention away from the facial area. As such, its perception would diminish face encoding and therefore identity memorability.

Here, we tested whether perceiving direct social attention activates specific memory‐related processes when compared with other social cues. This required disentangling the three processing biases mentioned above: (i) the self‐relevant bias, (ii) the emotional arousal bias, and (iii) the spatial attentional bias. To do so, we manipulated three visual social parameters displayed by an agent: Gaze Direction (Direct/Averted), Emotional Expression (Anger/Neutral), and Pointing Gesture (Presence/Absence). Together, these parameters offered a coherent representation of another's communicative intention. We expected anger expressions to elicit emotional arousal, as anger has been described as a strongly interactive emotion that robustly activates the amygdala and is less socially ambiguous than fear [Pichon et al., 2009] or happy expressions [Niedenthal et al., 2010]. Moreover, we expected the pointing gesture to act as an attentional and spatial signal that would diminish the memorability of the agent's face, because its perception, like that of averted gaze, has been shown to trigger automatic attentional shifts even when it has no predictive value [Belopolsky et al., 2008]. Finally, both anger expression and pointing gesture have a self‐relevant dimension. A gesture pointing toward the participant should increase his/her feeling of self‐involvement, just as direct gaze does on its own, and especially when paired with direct gaze, since both are primary signals of communicative intention. Moreover, as explained above, anger expression is expected to be judged as more self‐relevant when paired with direct gaze. Thus, when directed toward the participant, both anger expression and pointing gesture were expected to increase the self‐relevance of the social event.

In a behavioral experiment, we first assessed whether perceiving direct as compared with averted gaze enhances subsequent recognition of face identity during a later recognition stage, and whether such a memory effect is related to a self‐relevance bias. To test the specificity of such an effect, we examined whether the other two manipulated social parameters (i.e. Emotional Expression (Anger/Neutral) and Pointing Gesture (Presence/Absence)) also affected memorability. If memory effects result from emotional arousal, enhanced retrieval of face identity should be found for angry as compared with neutral faces. Similarly, if memory effects result from a spatial attentional bias, enhanced retrieval of face identity should be found when an obvious attentional and spatial cue (the pointing gesture) is absent. These effects are not mutually exclusive and can occur simultaneously. Nevertheless, if detecting self‐relevant social cues prompts particular memory processes, the brain network underlying direct gaze processing should display a unique activation pattern (compared with the other two manipulated social parameters), notably in amygdalar and hippocampal regions, which have been shown to be specifically activated by self‐relevant emotional memories [Botzung et al., 2010; Rabin et al., 2010]. In line with this view, we expected both anger expression and pointing gesture to increase the self‐relevance of a social event only when directed toward the participant, and therefore to elicit higher activity in amygdala and hippocampal regions when associated with direct gaze. We tested these hypotheses using functional magnetic resonance imaging (fMRI).

BEHAVIORAL EXPERIMENTS: THE EFFECT OF VISUAL SOCIAL CUES ON SUBSEQUENT IDENTITY RETRIEVAL

To test whether the three manipulated social parameters affected subsequent facial memorability, we performed three experiments on three independent groups of participants. Our main hypothesis was that retrieval of face identity would be automatically enhanced for targets that had previously displayed direct as compared with averted gaze. However, such a memory effect may not be triggered exclusively by self‐relevant social cues; a similar outcome could also result from emotional arousal and/or spatial attentional biases.

Methods

Participants

Each group consisted of 16 participants (Group 1: 8 females, mean age = 23.9 ± 0.8 years; Group 2: 8 females, mean age = 24.7 ± 0.06 years; Group 3: 8 females, mean age = 23.8 ± 0.7 years). All had normal or corrected‐to‐normal vision, were right‐handed, were naive to the aim of the experiment, and had no history of neurological or psychiatric disorders. All provided written informed consent according to the institutional guidelines of the local research ethics committee, which confirmed compliance with the Declaration of Helsinki, and were paid for their participation.

Material

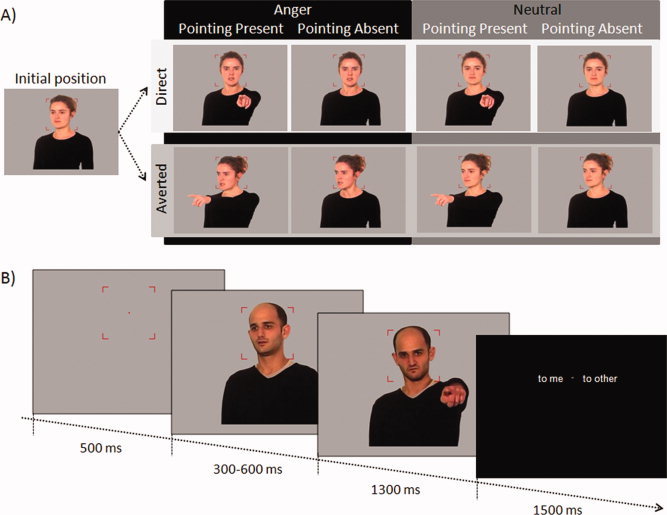

Stimuli consisted of photographs of 12 actors (six males) taken from the top of the head to the middle of the bust, selected from a wider set of stimuli on the basis of performance in pilot studies (see Supporting Information). For each actor, three displayed social parameters were manipulated: Gaze direction (head, eye gaze, and bust either directed toward the participant in the Direct gaze condition, or rotated by 30° toward the left in the Averted gaze condition), Emotional expression (Angry or Neutral), and Pointing Gesture (Presence or Absence). This manipulation resulted in eight different stimuli (i.e. conditions of interest) for each actor, varying in a 2 × 2 × 2 factorial design (Fig. 1A). For each actor, we also created an additional photograph with a neutral expression, the arms along the body, and an intermediate body/eye direction of 15° toward the left. This posture is hereafter referred to as the “initial position.” In each photograph, the actor's body was cut out, pasted onto a uniform grey background, and converted to 256 colors. For each actor, the nine photographs were resized with identical parameters so that the actor's face fell within the participant's central vision (less than 6° of visual angle both horizontally and vertically in all experimental contexts). During the experiments, the actor's body subtended less than 15° of visual angle vertically and 12° horizontally (pointing stimuli subtended approximately 1.5° more horizontally than no‐pointing stimuli). For all stimuli, rightward versions were obtained by mirror‐imaging, resulting in 216 images in total (24 initial positions and 192 conditions of interest).
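The image count above follows directly from the design. As a quick check, the Python sketch below enumerates the set. The labels and tuple layout are illustrative and do not reflect the authors' stimulus files.

```python
from itertools import product

N_ACTORS = 12
GAZE = ("direct", "averted")
EMOTION = ("anger", "neutral")
POINTING = ("present", "absent")
SIDES = ("left", "right")  # rightward images are mirror images of leftward ones


def build_stimulus_set():
    """Enumerate (actor, side, condition) for every photograph in the set."""
    stimuli = []
    for actor, side in product(range(N_ACTORS), SIDES):
        # One neutral, arms-down "initial position" photograph per actor and side.
        stimuli.append((actor, side, "initial"))
        # Eight conditions of interest from the 2 x 2 x 2 factorial design.
        for condition in product(GAZE, EMOTION, POINTING):
            stimuli.append((actor, side, condition))
    return stimuli


stimuli = build_stimulus_set()
n_initial = sum(1 for _, _, cond in stimuli if cond == "initial")
n_interest = len(stimuli) - n_initial
print(len(stimuli), n_initial, n_interest)  # 216 24 192
```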

Figure 1.

Experimental design. (A) Example of an actor in the eight experimental conditions. (B) Time course of one trial. Before stimulus presentation, a central fixation area was presented for 500 ms to focus the participant's attention on the actor's face. The initial position was then presented for 300 to 600 ms before the appearance of a second photograph, creating an apparent movement of the actor. The second photograph remained for 1,300 ms before the appearance of the response screen.

Procedure

The experiment was divided into two parts: an initial encoding phase and a surprise recognition test. In the encoding phase, each target actor (12 in total) was seen in only four conditions and only with leftward deviation. For Group 1, half of the actors had direct gaze while the other half had averted gaze (this assignment was reversed for half of the participants); each actor was seen in four conditions: Emotional Expression (Anger/Neutral) × Pointing Gesture (Presence/Absence). For Group 2, half of the actors expressed anger while the other half displayed a neutral expression (this assignment was reversed for half of the participants); each actor was seen in four conditions: Gaze direction (Direct/Averted) × Pointing Gesture (Presence/Absence). For Group 3, half of the actors pointed a finger while the other half did not (this assignment was reversed for half of the participants); each actor was seen in four conditions: Gaze direction (Direct/Averted) × Emotional expression (Anger/Neutral).
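The Group 1 assignment can be sketched as follows. The text only says that the assignment was reversed for half of the participants. The parity rule used below to alternate the two halves of the actor set is a hypothetical scheme, and trial-order randomization is left out.

```python
from itertools import product

ACTORS = list(range(12))


def group1_encoding_trials(participant):
    """Encoding trials for one Group 1 participant (hypothetical scheme).

    Gaze direction is a between-actor factor: half of the actors look at the
    participant, the other half look away, and the halves swap on alternate
    participants.
    """
    first_half = set(ACTORS[:6])
    second_half = set(ACTORS[6:])
    direct_actors = first_half if participant % 2 == 0 else second_half
    trials = []
    for actor in ACTORS:
        gaze = "direct" if actor in direct_actors else "averted"
        # Each actor appears in the four Emotion x Pointing cells,
        # always with leftward deviation during encoding.
        for emotion, pointing in product(("anger", "neutral"), ("present", "absent")):
            trials.append((actor, gaze, emotion, pointing, "left"))
    return trials


trials_p0 = group1_encoding_trials(0)
trials_p1 = group1_encoding_trials(1)
print(len(trials_p0))  # 48 = 12 actors x 4 conditions
```

Groups 2 and 3 follow the same pattern. Only the factor held constant within each actor changes.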

As dynamic stimuli are known to favor natural social processes [Sato et al., 2004], we created an apparent movement of the actor by presenting two photographs in succession [Conty et al., 2007]. The first photograph showed the actor in the initial position for a random duration of 300 to 600 ms. It was immediately followed by a second photograph showing the same actor in one of the conditions of interest (Fig. 1B), which remained on the screen for 1.3 s. Thus, participants saw an actor moving either toward or away from them, with a neutral or angry expression, and either pointing in the attended direction or not. On each trial, the actor's appearance was preceded by a 500‐ms fixation area consisting of a central red fixation point and four red corner marks delimiting a square subtending 6° of central visual angle in the experimental setting. The fixation area remained on the screen throughout the trial until the response screen appeared. Participants were instructed to fixate the central point and keep their attention inside the fixation area during actor presentation. The actor's face remained inside the fixation area throughout the trial.
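The visual angles quoted here relate on-screen size s and viewing distance d through θ = 2·arctan(s / 2d). This section does not report the viewing distance. The 57 cm used below is a hypothetical value, chosen because at that distance 1 cm subtends roughly 1°.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Full angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg, distance_cm):
    """On-screen size (cm) needed for an object to subtend angle_deg."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_CM = 57.0  # hypothetical value, not reported in this section
square_cm = size_for_angle_cm(6.0, VIEWING_DISTANCE_CM)  # side of the fixation square
print(f"{square_cm:.2f} cm -> {visual_angle_deg(square_cm, VIEWING_DISTANCE_CM):.1f} deg")
```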

Participants performed an explicit task on the parameter of interest, namely judging the direction of attention of the perceived agent [Schilbach et al., 2006]. After each actor presentation, they indicated whether the actor was addressing them or someone else. A response screen displayed the labels “to me” and “to other”, randomly assigned to the left and right of the central area of the screen (Fig. 1B). Participants responded by pressing the button (left or right) on the side of their chosen label, so the association between button and correct answer was randomized across trials. The response screen remained for 1.5 s and was followed by a black screen of 0.5 s before the next trial. The order of trial presentation was randomized across participants in all experiments.
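Taken together, the phase durations above fix the length of each encoding trial. The sketch below assembles one trial timeline. The text only gives the 300 to 600 ms range for the jitter, so drawing it uniformly in whole milliseconds is an assumption.

```python
import random

# Phase durations in ms, taken from the procedure text; uniform integer
# sampling of the 300-600 ms jitter is an assumption.
FIXATION_MS = 500
INITIAL_JITTER_MS = (300, 600)
CONDITION_MS = 1300
RESPONSE_MS = 1500
BLANK_MS = 500


def make_trial(rng):
    """Return (phase, onset_ms, duration_ms) tuples for one trial."""
    phases = [
        ("fixation", FIXATION_MS),
        ("initial_position", rng.randint(*INITIAL_JITTER_MS)),  # inclusive bounds
        ("condition_of_interest", CONDITION_MS),
        ("response_screen", RESPONSE_MS),
        ("blank", BLANK_MS),
    ]
    schedule, onset = [], 0
    for name, duration in phases:
        schedule.append((name, onset, duration))
        onset += duration
    return schedule


def trial_length_ms(schedule):
    """Total trial length: onset of the last phase plus its duration."""
    _, onset, duration = schedule[-1]
    return onset + duration


rng = random.Random(0)
lengths = [trial_length_ms(make_trial(rng)) for _ in range(1000)]
print(min(lengths), max(lengths))  # always within 4100-4400 ms
```

Every trial lasts between 4.1 and 4.4 s. This range matches the two null-event durations used in the fMRI design.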

Participants then completed another 30‐min experiment using an entirely different set of stimuli. This was immediately followed by a surprise recognition test in which 22 actors (12 initial targets and 10 lures) were presented in the center of the computer screen. To ensure that face identity recognition, rather than pattern matching, was assessed, all 22 actors were presented in the initial position, with rightward deviation, and at a smaller size than during encoding. The order of actor presentation was randomized across participants. In a two‐choice key‐press paradigm, participants reported whether each actor was “old” (i.e. had been seen in the initial task) or “new” (i.e. had not been seen before). Each static stimulus remained on the screen until a response was made. Participants were then debriefed and thanked.

Training

Before the encoding phase, participants were instructed to imagine that they were part of a virtual three‐dimensional scene with two virtual individuals located on their right and left sides, at an angular distance of approximately 15°. The perceived actor could thus be directed either toward the participant (Direct gaze condition) or toward one of the virtual individuals (Averted gaze condition). Participants were trained to perform the experimental task and to keep their gaze on the fixation point within the fixation area during each trial. Training consisted of 12 trials using photographs of two actors who did not appear in the experiment proper, with the same timing as in the encoding phase.

Statistical Analyses

The mean percentage of correct recognition (% CR) and mean reaction times (RTs) in the surprise recognition stage were computed separately for old and new stimuli. For old stimuli, they were further split into two categories according to the manipulated factor (Group 1: Direct vs. Averted gaze; Group 2: Anger vs. Neutral expression; Group 3: Pointing present vs. absent). For each group, a t‐test compared % CR between these two categories.
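Each participant contributes a % CR score to both categories, so a paired-samples t-test is the natural reading of this comparison. The text does not name the variant, so treat that choice as an assumption. Below is a minimal pure-Python sketch with made-up scores.

```python
import math
from statistics import mean, stdev


def paired_t(scores_a, scores_b):
    """Paired-samples t statistic and degrees of freedom.

    scores_a[i] and scores_b[i] are the same participant's % CR in the
    two categories (e.g., direct vs. averted gaze).
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs) / se, n - 1


# Made-up % CR values for four participants, for illustration only.
direct = [75.0, 80.0, 85.0, 90.0]
averted = [70.0, 72.0, 74.0, 76.0]
t, df = paired_t(direct, averted)
print(f"t({df}) = {t:.2f}")
```

If SciPy is installed, `scipy.stats.ttest_rel(direct, averted)` returns the same statistic together with a two-sided P value.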

Results

During the encoding phase, performance in identifying the target of attention was very accurate (mean = 96 ± 1.0%) and fast (mean = 618 ± 12 ms) in all experimental conditions, and did not differ between experimental groups (all P > 0.5).

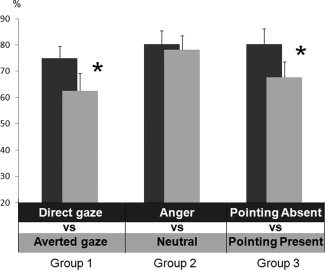

During the surprise recognition stage, new actors were correctly categorized on 74 ± 0.4% of trials on average, with no difference between experimental groups (P > 0.1). Mean response time was 1,150 ± 150 ms, and no effects were found on RTs. However, as expected, Group 1 showed a significant effect of gaze direction on % CR during the recognition stage (Fig. 2). Actors who had displayed direct gaze during encoding were better recognized than those who had displayed averted gaze (t(15) = 2.15, P < 0.05, mean effect = 14.0 ± 5%). Group 2 showed no significant difference in recognition performance between actors initially expressing anger and those with a neutral expression (P > 0.7). In contrast, Group 3 recognized actors who had not displayed a pointing gesture significantly better than those who had (t(15) = 2.32, P < 0.04, mean effect = 20.0 ± 6%).

Figure 2.

Mean percentage of correct recognition (with standard error) for the three experimental groups. *P < 0.05.

Discussion

As expected, we found that perceiving direct gaze enhanced subsequent identity memorability, replicating the effect obtained by Mason et al. [2004], who used isolated frontal faces as stimuli. This allows us to exclude the possibility that the present effect is related to low‐level visual properties of the stimuli, i.e. that actors directing their attention toward participants were better encoded simply because of their frontal posture in the direct gaze condition. Unlike our study, Mason et al. [2004] presented averted gaze in a frontal head view. This suggests that a frontal head configuration is not sufficient to explain the memory effect obtained for the direct gaze condition in our data.

Interestingly, we did not observe increased memorability for emotional expressions: actors initially expressing anger were not better recognized than those with a neutral expression. Like previous studies, we therefore failed to find a global memory bias for emotional as compared with neutral faces [Armony and Sergerie, 2007; Johansson et al., 2004]. At first sight, this speaks in favor of the idea that the increased memorability of faces with direct gaze is related to a self‐relevance bias rather than an emotional arousal bias. However, the absence of a behaviorally observable memory improvement for anger expressions could reflect a ceiling effect for both neutral and anger expressions. In the behavioral experiment, participants in Group 2 saw each actor four times, with direct and averted gaze, each with and without a pointing gesture. In other words, every actor, whether displaying a neutral or an angry expression, was encoded at least once with direct gaze and at least once without a pointing gesture, i.e. in the two conditions that led to the best recognition performance. The perception of an angry face could therefore still be linked to an additional emotional memory effect that the present behavioral study could not reveal because of this potential ceiling effect. If this is the case, we may observe greater amygdala and hippocampus activity for angry than for neutral faces.

Yet the fact that actors who did not point were better recognized than those who did suggests that the present memory effect could also result from a spatial attentional bias. Indeed, both averted gaze and pointing gestures are communicative cues known to induce automatic attentional shifts [Belopolsky et al., 2008; Driver et al., 1999; Langton et al., 2000]. Such shifts can temporarily, and even covertly, direct the participant's attention away from the actor's face area. As a consequence, perceiving averted gaze and/or a pointing gesture could disrupt the encoding of face identity, decreasing subsequent retrieval performance.

However, the memory effects for direct gaze and for the absence of a pointing gesture may still be mediated by different processes. While the absence (as compared with the presence) of a pointing gesture may have favored attentional focus on the actor's face, allowing better encoding, direct (as compared with averted) gaze may prompt automatic processing of a personally relevant episode. Thus, both the presence of direct gaze and the absence of a pointing gesture incidentally facilitate the retrieval of face identity information, yet the brain networks sustaining these two conditions may differ. We therefore performed an fMRI experiment to test this hypothesis. We expected specific involvement of amygdalar and hippocampal regions during direct gaze perception. Moreover, if amygdala and hippocampus involvement reflects self‐relevant memory processes, activity within these structures should be particularly high when direct gaze is accompanied by an anger expression and/or a pointing gesture.

FMRI EXPERIMENT: NEURAL BASES OF PERCEIVING SOCIAL PARAMETERS

Method

Participants

Twenty‐two healthy volunteers (10 females; mean age = 23.3 ± 0.5 years) participated in the experiment. All had normal or corrected‐to‐normal vision, were right‐handed, were naive to the aim of the different experiments, and had no history of neurological or psychiatric disorders. All provided written informed consent according to the institutional guidelines of the local research ethics committee, which confirmed compliance with the Declaration of Helsinki, and were paid for their participation.

Material and procedure

Material, procedure, and instructions were the same as in the encoding phase of the behavioral experiments. However, each actor was seen in all 16 conditions: Gaze direction (Direct/Averted) × Emotional expression (Anger/Neutral) × Pointing gesture (Presence/Absence) × Side of deviation (rightward/leftward). The resulting 192 trials were presented in two 18‐min blocks, each including 68 null events (34 black screens of 4.1 s and 34 of 4.4 s duration). The order of trial presentation was randomized across blocks and participants. After each stimulus, participants judged whether the actor was addressing them or someone else.
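The trial bookkeeping in this paragraph can be checked in a few lines. The even split of the 192 trials across the two blocks is consistent with the text but not stated in it, so treat it as an assumption.

```python
N_ACTORS = 12
N_CONDITIONS = 2 * 2 * 2 * 2  # gaze x emotion x pointing x side of deviation
N_BLOCKS = 2
NULL_EVENTS_MS = [4100] * 34 + [4400] * 34  # null events in one block

total_trials = N_ACTORS * N_CONDITIONS
trials_per_block = total_trials // N_BLOCKS  # assumes an even split
null_time_s = sum(NULL_EVENTS_MS) / 1000     # total null-event time per block

print(total_trials, trials_per_block, len(NULL_EVENTS_MS), null_time_s)
# 192 96 68 289.0
```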

fMRI data acquisition

Imaging data were collected at the Centre for NeuroImaging Research (CENIR) of Pitié‐Salpêtrière Hospital using a Siemens Trio scanner operating at 3T. Gradient‐echo T2*‐weighted transverse echo‐planar images (EPI) with BOLD contrast were acquired. Each volume contained 40 axial slices acquired in interleaved order (repetition time (TR) = 2,000 ms, echo time (TE) = 50 ms, slice thickness = 3.0 mm with no gap, flip angle = 78°, field of view (FOV) = 192 mm, matrix = 64 × 64), yielding isotropic 3.0 × 3.0 × 3.0 mm voxels. We collected a total of 1,120 functional volumes for each participant, as well as high‐resolution T1‐weighted anatomical images (TR = 2,300 ms, TE = 9.6 ms, slice thickness = 1 mm, 176 sagittal slices, flip angle = 9°, FOV = 256 mm, matrix = 256 × 256).
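The voxel size and scan duration follow from the acquisition parameters. The arithmetic below only restates the reported values.

```python
# EPI parameters from the acquisition description.
EPI_FOV_MM, EPI_MATRIX, SLICE_MM, N_SLICES = 192, 64, 3.0, 40
TR_S, N_VOLUMES = 2.0, 1120
# T1 parameters.
T1_FOV_MM, T1_MATRIX = 256, 256

epi_inplane_mm = EPI_FOV_MM / EPI_MATRIX          # 3.0 mm in-plane resolution
epi_voxel_mm3 = epi_inplane_mm ** 2 * SLICE_MM    # 27.0 mm^3 (3 x 3 x 3 mm voxel)
slab_mm = N_SLICES * SLICE_MM                     # 120 mm coverage with no gap
total_min = N_VOLUMES * TR_S / 60                 # about 37.3 min of EPI in total
t1_voxel_mm = T1_FOV_MM / T1_MATRIX               # 1.0 mm in-plane resolution

print(epi_inplane_mm, epi_voxel_mm3, slab_mm, round(total_min, 1), t1_voxel_mm)
```

In total, 2,240 s of EPI (about 37.3 min) fits two blocks of roughly 18 to 19 min each, if the volumes are assumed to be split evenly between them.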

fMRI data processing

Image processing was carried out using Statistical Parametric Mapping (SPM5, Wellcome Department of Imaging Neuroscience; available at: http://www.fil.ion.ucl.ac.uk/spm) implemented in MATLAB (Mathworks Inc., Sherborn, MA). For each subject, the 1,120 functional images were reoriented to the AC‐PC line, corrected for differences in slice acquisition time using the middle slice as reference, spatially realigned to the first volume by rigid‐body transformation, spatially normalized to the standard Montreal Neurological Institute (MNI) EPI template to allow group analysis, resampled to an isotropic voxel size of 2 mm, and spatially smoothed with an isotropic 8‐mm full‐width at half‐maximum (FWHM) Gaussian kernel. To remove low‐frequency drifts, we applied a high‐pass filter with the standard cut‐off period of 128 s (i.e. a cut‐off frequency of 1/128 ≈ 0.008 Hz).
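SPM implements this high-pass filter by regressing out a discrete cosine basis set. The sketch below reproduces that idea in pure Python, under the following assumptions:

- The order formula mirrors our reading of SPM's convention.
- Each run has 560 volumes, i.e. the 1,120 volumes were split evenly over two runs.
- The function names are hypothetical and are not SPM's API.

```python
import math


def cosine_vector(n_scans, k):
    """k-th orthonormal DCT-II basis vector of length n_scans (k >= 1)."""
    scale = math.sqrt(2.0 / n_scans)
    return [scale * math.cos(math.pi * (2 * n + 1) * k / (2 * n_scans))
            for n in range(n_scans)]


def highpass_basis(n_scans, tr_s, cutoff_s=128.0):
    """Drift regressors whose period exceeds cutoff_s (constant term excluded).

    Vector k completes k/2 cycles over the run, so its period is
    2 * n_scans * tr_s / k seconds.
    """
    order = int(2 * n_scans * tr_s / cutoff_s + 1)
    return [cosine_vector(n_scans, k) for k in range(1, order)]


def highpass(signal, basis):
    """Subtract the projection of signal onto the orthonormal drift basis."""
    out = list(signal)
    for vec in basis:
        coef = sum(s * v for s, v in zip(signal, vec))
        out = [o - coef * v for o, v in zip(out, vec)]
    return out


N_SCANS, TR_S = 560, 2.0  # assumes 1,120 volumes split evenly over two runs
basis = highpass_basis(N_SCANS, TR_S)
print(len(basis), round(2 * N_SCANS * TR_S / len(basis), 1))  # 17 131.8
```

Under these assumptions, the filter removes only cosine components with a period longer than 128 s, which gives 17 regressors per run. It leaves faster fluctuations, including task-related signal, intact.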

Statistical analysis was carried out using SPM5. At the subject level, the eight conditions of interest were modeled from the appearance of the actor to the end of the response screen (rightward and leftward sides of deviation were not modeled separately). Thus, each condition of interest contained 24 trials (duration between 3.6 s and 3.9 s). For each participant, the fixation (192 trials of 500 ms duration), the button presses (192 trials of 0 ms duration), and six additional covariates of no interest capturing residual movement‐related artifacts were also modeled. At the group level, we used a random‐effects model: the subject‐level parameter estimate images were entered into a repeated‐measures ANOVA with three within‐subject factors. A nonsphericity correction was applied to account for variance differences across conditions and subjects.
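In such a model, each condition regressor is essentially a boxcar covering the modeled trial window, convolved with a hemodynamic response function. The sketch below is illustrative only, and several of its choices are assumptions:

- It uses a double-gamma HRF with SPM-like default shape parameters: response shape 6, undershoot shape 16, undershoot ratio 1/6, time in seconds.
- It samples the regressor at scan onsets.
- It ignores SPM's microtime and slice-timing reference details.

`condition_regressor` is a hypothetical helper name.

```python
import math


def double_gamma_hrf(t):
    """Double-gamma HRF at time t (s); SPM-like default shapes are assumed."""
    if t <= 0:
        return 0.0
    response = t ** 5 * math.exp(-t) / math.gamma(6)      # gamma pdf, shape 6
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)  # gamma pdf, shape 16
    return response - undershoot / 6.0


def condition_regressor(onsets, durations, n_scans, tr, dt=0.1, hrf_len_s=32.0):
    """Boxcar for one condition convolved with the HRF, sampled once per scan."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset, duration in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    kernel = [double_gamma_hrf(j * dt) * dt for j in range(int(round(hrf_len_s / dt)))]
    convolved = [0.0] * n_fine
    for i, value in enumerate(boxcar):
        if value == 0.0:
            continue
        for j, h in enumerate(kernel):
            if i + j >= n_fine:
                break
            convolved[i + j] += value * h
    step = int(round(tr / dt))
    return [convolved[s * step] for s in range(n_scans)]


# One hypothetical 3.6-s trial starting 10 s into a 30-scan run (TR = 2 s).
reg = condition_regressor([10.0], [3.6], n_scans=30, tr=2.0)
print(max(range(len(reg)), key=reg.__getitem__))  # index of the peak scan
```

The predicted response peaks roughly 6 to 8 s after trial onset. This delay is why condition regressors are convolved rather than entered as raw boxcars.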

A statistical threshold of P < 0.05 corrected for multiple comparisons across the whole brain (family‐wise error, FWE) was used, except for a priori hypothesized regions, which were thresholded at P < 0.001 uncorrected. These a priori regions of interest were the bilateral amygdala and hippocampus. A small volume correction (SVC, P < 0.05 FWE corrected) was applied to these areas using an anatomical mask built with the Anatomy Toolbox (v17), which was also used to localize the active clusters. Coordinates of activations are reported in millimeters in MNI space.

Results

Behavioral results

During scanning, accuracy in identifying the target of attention was very high in all experimental conditions (mean = 99 ± 1.0%). A repeated‐measures analysis of variance (ANOVA) was carried out on RTs with Gaze direction (Direct/Averted), Emotional expression (Anger/Neutral), and Pointing gesture (Presence/Absence) as within‐subject factors, pooling over rightward and leftward sides of deviation.

Participants were faster at identifying the target of attention for Direct than for Averted gaze (F(1,21) = 80.9; P < 0.001; mean effect = 32 ± 3 ms). This is in accordance with previous results showing that human observers are faster at detecting a face with direct than with averted gaze [Conty et al., 2006; Senju et al., 2005; Von Grünau and Anston, 1995]. They were also faster for Neutral than for Anger stimuli (F(1,21) = 4.89; P < 0.05; mean effect = 9 ± 4 ms). This converges with previous evidence that negative faces capture attention and disrupt performance on an ongoing task [Eastwood et al., 2003; Vuilleumier et al., 2001]. Finally, participants were faster when a pointing gesture was present than when it was absent (F(1,21) = 14.7; P < 0.001; mean effect = 14 ± 4 ms). This is consistent with the view that pointing emphasizes the actor's direction of attention [Belopolsky et al., 2008; Langton et al., 2000; Liszkowski et al., 2004; Materna et al., 2008a]. No interaction between factors was observed (all F < 1).
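For a two-level within-subject factor, the repeated-measures ANOVA F(1, n − 1) equals the squared paired t computed on each participant's difference between the two marginal means. The sketch below relies on that identity. The cell layout and the toy RTs are made up for illustration.

```python
import math
from itertools import product
from statistics import mean, stdev

LEVELS = {
    "gaze": ("direct", "averted"),
    "emotion": ("anger", "neutral"),
    "pointing": ("present", "absent"),
}
FACTORS = list(LEVELS)


def main_effect_F(subject_cells, factor):
    """F(1, n-1) for a two-level within-subject factor.

    subject_cells: list of dicts mapping (gaze, emotion, pointing) -> mean RT.
    F equals the squared paired t on each participant's difference between
    the factor's two marginal means (averaged over the other factors).
    """
    idx = FACTORS.index(factor)
    level_a, level_b = LEVELS[factor]
    diffs = []
    for cells in subject_cells:
        a = mean([v for k, v in cells.items() if k[idx] == level_a])
        b = mean([v for k, v in cells.items() if k[idx] == level_b])
        diffs.append(b - a)
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)


def make_subject(base, gaze_cost):
    """Toy RTs: averted gaze adds gaze_cost ms, anger adds 5 ms."""
    return {cell: base + (gaze_cost if cell[0] == "averted" else 0.0)
            + (5.0 if cell[1] == "anger" else 0.0)
            for cell in product(*LEVELS.values())}


subjects = [make_subject(600.0, 10.0), make_subject(620.0, 20.0), make_subject(580.0, 30.0)]
F, df = main_effect_F(subjects, "gaze")
print(f"F{df} = {F:.1f}")  # F(1, 2) = 12.0
```

Passing "emotion" or "pointing" gives the other main effects. Interaction terms would need the corresponding difference-of-differences contrast.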

fMRI Results

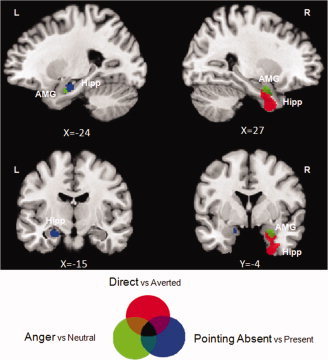

Main effect of direct gaze (Direct vs. Averted)

The perception of direct gaze was associated with activation in a cluster in the anterior part of the right hippocampal formation, with one subpeak in the entorhinal cortex (HIPP‐EC x = 28, y = 0, z = −40) and one in the cornu ammonis of the hippocampus (HIPP‐CA x = 32, y = −8, z = −28) (see Table I). The same cluster also included a subpeak in the right amygdala (AMG x = 26, y = −6, z = −22) (see Fig. 3).

Table I.

MNI Coordinates of brain areas selectively activated in main contrasts of interest

| Hemisphere | Anatomical region | MNI X | MNI Y | MNI Z | Z value | Cluster size |
|---|---|---|---|---|---|---|
| **Main contrasts of interest** | | | | | | |
| *Main effect Direct vs. Averted gaze* | | | | | | |
| R | Hippocampus (EC 90%)ᵃ | 28 | 0 | −40 | 4.66 | 319 |
| R | Hippocampus (CA 90%)ᵃ | 32 | −8 | −28 | 3.72 | 319↓ |
| R | Amygdala (90%)ᵃ | 26 | −6 | −22 | 3.85 | 319↓ |
| *Main effect Anger vs. Neutral* | | | | | | |
| L | Inferior frontal gyrus (pars orbitalis, BA 47) | −44 | 32 | −12 | 4.72 | 13 |
| L | Amygdala (70%)ᵃ | −24 | −6 | −20 | 3.85 | 51 |
| R | Amygdala (90%)ᵃ | 26 | −6 | −18 | 3.70 | 179 |
| R | Fusiform gyrus (BA 37) | 42 | −46 | −20 | 5.45 | 96 |
| L | Inferior occipital gyrus (hOC4v, BA 18) | −34 | −86 | −10 | 6.00 | 413 |
| R | Inferior occipital gyrus (hOC4v, BA 18) | 40 | −90 | −4 | 6.66 | 593 |
| *Main effect Pointing Absence vs. Presence* | | | | | | |
| L | Hippocampus (CA 60%)ᵃ | −24 | −14 | −16 | 4.05 | 88 |
| R | Middle occipital gyrus (BA 18) | 30 | −98 | 6 | 5.31 | 88 |
| **Supplementary main contrasts** | | | | | | |
| *Main effect Averted vs. Direct gaze* | | | | | | |
| R | Superior parietal lobule (SPL) | 18 | −66 | 60 | 6.06 | 162 |
| L | Middle occipital gyrus (BA 17) | −28 | −92 | 26 | 4.99 | 17 |
| L | Middle occipital gyrus | −28 | −74 | 30 | 4.59 | 13 |
| *Main effect Pointing Presence vs. Absence* | | | | | | |
| L | Cuneus (BA 18) | −10 | −96 | 22 | Inf | 1806 |
| L | Middle occipital gyrus (BA 19) | −50 | −78 | 4 | Inf | 1279 |
| R | Middle temporal gyrus (MT/V5) | 52 | −72 | 8 | Inf | 1200 |
| R | Superior occipital gyrus (BA 18) | 18 | −92 | 18 | Inf | 1806↓ |

Results reported at P FWE < 0.05.

ᵃ Regions of interest (ROIs) reported at P < 0.001 uncorrected but surviving small volume correction (SVC). Extent threshold = 10. Subpeaks within clusters are marked with ↓. Percentages give the probability that the cluster is located in the named area.

Figure 3.

Temporal pole activations revealed during direct gaze, anger, and absence of pointing gesture: discrepancies and overlaps. The clusters activated in the hippocampus (EC and CA) and amygdala in the Direct versus Averted (red), Anger versus Neutral (green), and Pointing absent versus present (blue) contrasts were projected onto sagittal (upper part) and coronal (bottom part) sections of the MNI template. Direct gaze was associated with right hippocampus activation, whereas the absence of a pointing gesture was associated with left hippocampus activation. The right amygdala showed significant but independent effects for both the direct gaze and anger conditions. AMG = amygdala; Hipp = hippocampus.

Main effect of Anger expression (Anger vs. Neutral)

Regions specific to anger expressions included the right (x = 26, y = −6, z = −18) and left (x = −24, y = −6, z = −20) amygdala (see Fig. 3). We also observed bilateral activation in extra‐striate visual areas, in the left ventrolateral prefrontal cortex (BA 47) and in the right fusiform gyrus.

Main effect of the absence of pointing gesture (Absence vs. Presence)

Regions specific to the absence of a pointing gesture included the posterior part of the left hippocampus (HIPP‐CA x = −24, y = −14, z = −16). We also observed activation in the right extra‐striate visual cortex (V2 and V3).

Main effect of spatial attentional biases (Averted vs. Direct gaze; Presence vs. Absence of Pointing gesture)

Regions specific to averted gaze perception included extra‐striate visual areas (BA18, MT/V5) as well as the superior parietal lobule. Regions specific to the presence of a pointing gesture included extra‐striate visual areas (BA18, MT/V5, and cuneus). Interestingly, all these regions have been shown to be activated during shifts of attention [Greene et al., 2009; Materna et al., 2008b; Nummenmaa et al., 2010].

Post hoc analyses

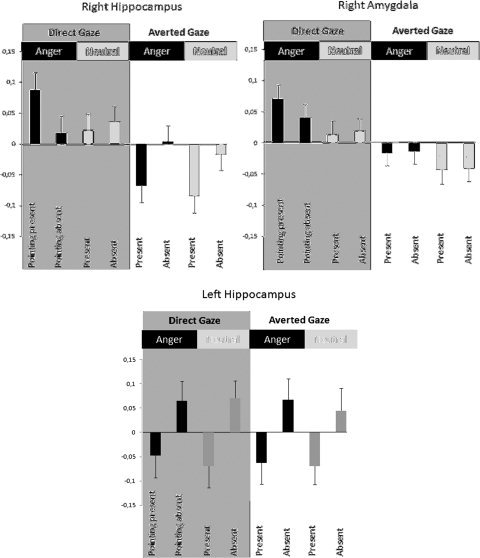

To test whether hippocampal involvement was specific to direct gaze as compared with anger stimuli, in contrast to the amygdala (see Fig. 3 for illustration), we conducted post hoc analyses on the amygdala (AMG) and hippocampus (EC and CA) peaks revealed in two contrasts: Direct versus Averted gaze and Anger versus Neutral faces. For each peak revealed in a given contrast, we tested whether the null hypothesis could be rejected for the other experimental factor. The t‐tests were performed on the mean parameter estimates (betas extracted at the subject level for each condition) within 3‐mm radius spheres centered on the coordinates of the maximal voxel of each region of interest. t‐Tests on the AMG activity observed in the Anger versus Neutral contrast revealed a main effect of Gaze direction: this structure was not only more activated by anger than by neutral stimuli, but also more activated by direct than by averted gaze (right: t (1,21) = 4.1, P < 0.001; left: t (1,21) = 3.1, P < 0.006). Reciprocally, the t‐test run on the right AMG activity observed in the Direct versus Averted gaze contrast revealed that anger elicited greater AMG activity than neutral expressions (t (1,21) = 3.2, P < 0.005). By contrast, no main effect of Emotional expression was found in the right HIPP (EC and CA) peaks observed in the Direct versus Averted gaze contrast (both t (1,21) < 1.5, P > 0.1).
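The post hoc ROI procedure described above (averaging betas within a 3‐mm radius sphere around each peak, then testing the other factor across participants) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: it assumes isotropic 2‐mm voxels (the voxel size is not given in this section), a sphere center already expressed in voxel indices rather than MNI millimetres, beta maps stored as nested lists, and a paired t‐test on per‐participant values for the two levels of the tested factor. All function names are hypothetical.

```python
import math
import statistics
from itertools import product


def sphere_indices(center, radius_mm, voxel_mm, shape):
    """Voxel indices (i, j, k) whose centers lie within radius_mm of `center`.

    Assumes isotropic voxels of size voxel_mm and a center given in voxel
    indices; converting MNI millimetres to indices (via the image affine)
    is omitted in this sketch.
    """
    r = int(radius_mm // voxel_mm)  # largest offset that can fit along one axis
    ci, cj, ck = center
    indices = []
    for di, dj, dk in product(range(-r, r + 1), repeat=3):
        i, j, k = ci + di, cj + dj, ck + dk
        in_volume = 0 <= i < shape[0] and 0 <= j < shape[1] and 0 <= k < shape[2]
        dist_mm = voxel_mm * math.sqrt(di * di + dj * dj + dk * dk)
        if in_volume and dist_mm <= radius_mm:
            indices.append((i, j, k))
    return indices


def roi_mean(beta_map, indices):
    """Mean parameter estimate over the sphere; beta_map is nested as [i][j][k]."""
    return statistics.mean(beta_map[i][j][k] for i, j, k in indices)


def paired_t(level_a, level_b):
    """Paired t statistic and df for per-participant ROI values (level A vs. B)."""
    diffs = [a - b for a, b in zip(level_a, level_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    idx = sphere_indices((5, 5, 5), 3.0, 2.0, (10, 10, 10))
    print(len(idx))  # 19 voxels
    anger = [1.0, 2.0, 3.0, 4.0]  # hypothetical per-participant ROI means
    neutral = [0.0, 0.0, 0.0, 0.0]
    print(paired_t(anger, neutral))
```

Under the 2‐mm assumption, a 3‐mm sphere away from the volume edges holds 19 voxels (the center, its 6 face neighbours and its 12 edge neighbours); corner neighbours lie about 3.46 mm away and are excluded. A paired t‐test over 22 participants would have 21 degrees of freedom, consistent with the df reported above.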

Interactions

Regarding the interactions between factors, no region survived FWE or small volume correction. In particular, and contrary to our hypothesis, neither the AMG nor the HIPP (EC and CA) displayed greater activity when actors pointed to and looked at the participant, looked at the participant with an angry expression, or pointed to and looked at the participant with an angry expression. However, when we restricted the analysis to our ROIs and lowered the threshold, we observed a triple interaction in the right HIPP‐EC (P < 0.05 uncorrected; x = 32, y = 0, z = −38), suggesting a tendency toward greater activity when actors pointed to and looked at the participant with an angry expression. Notably, even at such a liberal threshold, this triple interaction did not appear in the bilateral AMG or in the left HIPP‐CA.
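In a 2 × 2 × 2 design such as this one (Gaze × Emotion × Pointing, eight conditions), main effects and interactions can be written as contrast weights over the eight condition betas. The sketch below assumes ±1 effect coding with +1 for Direct gaze, Anger and Pointing present (a labelling choice made here, not taken from the paper); the weight of each condition for an interaction is the product of its codes on the factors involved. It is illustrative only and does not describe how the authors specified their contrasts.

```python
from itertools import product

FACTORS = ("gaze", "emotion", "pointing")
# Assumed effect coding: +1 = Direct / Anger / Pointing present,
#                        -1 = Averted / Neutral / Pointing absent.
CONDITIONS = list(product((1, -1), repeat=len(FACTORS)))


def contrast_weights(*factors):
    """Weights for a main effect (one factor) or an interaction (several factors).

    Each condition's weight is the product of its codes on the named factors,
    so every set of weights sums to zero across the eight conditions.
    """
    positions = [FACTORS.index(f) for f in factors]
    weights = {}
    for cond in CONDITIONS:
        w = 1
        for p in positions:
            w *= cond[p]
        weights[cond] = w
    return weights


def contrast_value(betas, weights):
    """Apply contrast weights to one participant's betas (dict keyed by condition)."""
    return sum(weights[c] * betas[c] for c in CONDITIONS)


if __name__ == "__main__":
    triple = contrast_weights("gaze", "emotion", "pointing")
    # Cells weighted +1 by the triple interaction:
    print(sorted(c for c, w in triple.items() if w == 1))
    # [(-1, -1, 1), (-1, 1, -1), (1, -1, -1), (1, 1, 1)]
```

The triple‐interaction contrast gives the Direct/Anger/Pointing‐present cell a weight of +1 together with three other cells, so a positive interaction on its own does not show that this particular cell is maximal; inspecting the condition means (as in Fig. 4) is what supports the reading given above.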

In summary, our results revealed that the right HIPP (EC and CA) was specifically involved during direct gaze perception as compared with the other manipulated social cues, while the left HIPP‐CA was particularly activated in the absence of a pointing gesture. The AMG, especially in the right hemisphere, was involved in processing both direct gaze and anger stimuli (see Fig. 3 for illustration). Finally, right HIPP‐EC activity seemed to be sensitive to the degree of self‐relevance of the perceived social scene, whereas the AMG seemed sensitive to the salience of the event, whether social or emotional (see Fig. 4).

Figure 4.

Mean parameter estimates of activity for the right amygdala (AMG) and hippocampus (HIPP‐EC) activated in the Direct versus Averted gaze contrast, and for the left hippocampus (HIPP‐CA) activated in the Pointing absent versus present contrast. Betas were extracted at the subject level from 3‐mm radius spheres centered on the coordinates of the maximal voxel of each region of interest. Values were centered and are shown in arbitrary units, with standard errors, for the eight experimental conditions: right HIPP‐EC (top left panel), right AMG (top right panel), and left HIPP‐CA (bottom panel).

Discussion

The goal of the present fMRI study was to identify the neural bases of a potential self‐relevant memory bias for faces whose attention is directed at the observer. We found that direct gaze perception specifically activated the right hippocampus, whereas angry expression and pointing gesture by themselves did not. Right hippocampal activity tended to be higher when the actor expressed anger, pointed to and looked at the participant, i.e. in the situation most relevant to the self. We therefore suggest that our main results accord with the appraisal theory of emotion, which argues that the impact of an emotional event depends on the degree of its significance for the self [Sander et al., 2007]. They further bring to light the role of right hippocampal regions in the underlying processes.

Both direct gaze and anger conditions triggered activity in the amygdala, which maintains strong connections with the hippocampus [LaBar and Cabeza, 2006] and is an important component of the neural systems that help mark socially relevant information, in particular on the basis of facial appearance [Adolphs, 2009]. The present effects are therefore in agreement with data showing that the amygdala possesses different cell populations (and thus dissociable representations) for the perception of facial expressions and of face/gaze direction [Calder and Nummenmaa, 2007; Hoffman et al., 2007]. Yet, they do not entirely replicate previous fMRI findings in which amygdala activity reflected an interaction between emotional expression and gaze direction [Hadjikhani et al., 2008; N'Diaye et al., 2009; Sato et al., 2004, 2010]. One possible explanation for this difference lies in the initial eye position of dynamic stimuli, which can affect amygdala activity. Here, we used the same initial eye position for all conditions [in contrast to N'Diaye et al., 2009; Sato et al., 2004] and manipulated the dynamic aspect of all visual parameters [in contrast to Sato et al., 2010]. These experimental manipulations allowed us to reveal distinct clusters in the right hippocampus and in the right amygdala during direct gaze perception. Yet, in our data, only right hippocampal activity showed a tendency toward an interaction between our three factors. We therefore suggest that the amygdala directly relays salient social information to the hippocampus, which is, in turn, particularly sensitive to self‐relevance.

Surprisingly, neither amygdalar nor hippocampal activity was greater when the pointing gesture was present than when it was absent, even when it was directed toward the participant with direct gaze. This may be related to the present protocol and task. Participants were asked to focus their attention within a clearly defined fixation area surrounding the actor's face/head. The task consisted of determining whether the actor was addressing the participant (“to me”) or someone else (“to other”). Importantly, eye/head orientation alone was sufficient to perform the task: when present, the pointing gesture always pointed in the same direction as the eyes/head. This close correspondence between eye/head and pointing orientation may have diminished the impact of the pointing gesture on the processing of self‐relevant social cues. In addition, the pointing gesture probably drew the participants' attention away from the requested fixation area, potentially reducing the time spent on the face. This would be consistent with the observation that the pointing present versus absent contrast revealed activity in several visual areas. Altogether, these effects may have contributed to the absence of amygdalar and hippocampal activity for the pointing condition.

In contrast to the right hippocampus, activity in the left posterior hippocampus was enhanced in the absence of a pointing gesture in the social scene. Like averted gaze, pointing is a communicative cue that may, in some cases, prompt a shift in the observer's direction of attention. Therefore, left posterior hippocampal activity in the absence of a pointing gesture may reflect participants' focus on the other social cues displayed by the agent. Interestingly, this view converges with the proposal that primary attentional focus on the details of a stimulus results in better encoding of those details, which in turn modulates long‐term memory retention [Sharot and Phelps, 2004].

To summarize, our data suggest that, during social interactions, the right anterior hippocampus deals more specifically with significance for the self, while the left posterior hippocampus deals with the attentional focus paid to other agents. This view converges with previous evidence that damage to right medial temporal lobe structures disrupts aspects of social perception more than similar lesions in the left hemisphere [Cristinzio et al., 2010].

General Discussion

While our behavioral study demonstrates that both direct gaze perception and the absence of a pointing gesture during the perception of other social agents enhance the subsequent retrieval of their identity, our fMRI results reveal that the brain structures related to the encoding of the social event diverged between the two conditions. The direct gaze condition activated the right anterior hippocampus, whereas the absence of a pointing gesture was associated with left posterior hippocampus activation. Although the general role of the hippocampus in memorizing events needs no further proof, the present data strongly suggest a specific function of the right hippocampus in memorizing self‐relevant social events.

Neuroimaging studies have revealed a consistent overlap between brain regions involved in self‐processing and those implicated in autobiographical memory. In particular, events in which a person was highly involved have been shown to particularly activate the bilateral amygdala‐hippocampal region [Muscatell et al., 2009]. Another study reported a unique pattern of activity in the right hippocampus during personally relevant memory construction (compared with other relevant mental constructions), while the left hippocampus was activated by event vividness, whether for the self or for another [Rabin et al., 2010]. The present activation of the right hippocampus converges with the view that direct gaze perception prompts self‐referential processing [Argyle, 1981; Hietanen et al., 2008], here possibly a “self‐relevant memorizing” process that incidentally allows one to better retrieve information concerning the identity of an encountered face.

Why was the perception of an angry face not associated with such an enhanced memory effect? If the perception of angry expressions were linked to a specific emotional memory effect, greater hippocampal activity should have been found in the present fMRI study for anger than for neutral stimuli. This was not the case. Angry faces activated the amygdala bilaterally, showing that these stimuli are particularly salient. However, they did not trigger specific activation in the right hippocampus, consistent with the claim that, in the absence of direct gaze, an angry expression may not be processed as particularly self‐relevant. Our results fit with the idea that the emotional memory effect depends on the relevance of the emotion in context [Levine and Edelstein, 2009; Nairne et al., 2009].

The question arises as to whether the present explicit task involving gaze direction partly drove our effects. We consider this unlikely in light of previous research. Indeed, there is no reason to believe that the role of the hippocampus in memory is limited to conscious encoding [Henke, 2010]. While Calder et al. [2002] found right hippocampus activity during an implicit task on direct versus averted gaze, Schilbach et al. [2006] failed to do so with an explicit task similar to the present one. Moreover, most previous studies using emotional faces or implicit gender categorization tasks did not reveal hippocampal activity. Rather than being related to the task, this absence of hippocampal activity may be explained by other experimental manipulations, such as the use of a single facial identity [Kawashima et al., 1999] or the presentation of all facial stimuli before scanning [George et al., 2001]. Finally, reported imaging results are often restricted to the amygdala [Adams and Kleck, 2003; Kampe et al., 2001; Sato et al., 2010].

One potential limitation of our study is that the memory effects were investigated separately from the brain processes of perceiving direct gaze. To confirm the role of the right hippocampus in memorizing self‐relevant social cues, correlations between right hippocampal activity during encoding of the visual scene and behavioral performance in retrieving another's identity need further investigation. Moreover, our data suggest that right hippocampal activity varied with the degree of self‐relevance of the social scene. Such interactions require greater statistical power to be investigated and were not explored in the present behavioral studies. It would also be interesting to investigate the conscious aspect of such a self‐relevant memory bias.

CONCLUSION

Appraisal theories of emotion have recently proposed that the evaluation of emotional stimuli depends on the degree of self‐relevance of the emotional event. Here, we confirm and extend this view. We demonstrate that the memory effect of perceiving direct gaze is robust and reproducible. Moreover, we propose that it is related to a self‐relevant memory bias that relies on right hippocampal activity when one is the target of another's attention.

Supporting information

Additional Supporting Information may be found in the online version of this article.


Acknowledgements

The authors are grateful to Eric Bertasi, Guillaume Dezecache, and Marie‐Sarah Adenis for their assistance in experimental settings and data collection.

REFERENCES

- Adams RB Jr, Kleck RE (2003): Perceived gaze direction and the processing of facial displays of emotion. Psychol Sci 14: 644–647.

- Adolphs R (2002): Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behav Cogn Neurosci Rev 1: 21–62.

- Adolphs R (2009): The social brain: Neural basis of social knowledge. Annu Rev Psychol 60: 693–716.

- Argyle M (1981): Bodily Communication. London: Methuen & Co. Ltd.

- Armony JL, Sergerie K (2007): Own‐sex effects in emotional memory for faces. Neurosci Lett 426: 1–5.

- Belopolsky AV, Olivers CN, Theeuwes J (2008): To point a finger: Attentional and motor consequences of observing pointing movements. Acta Psychol (Amst) 128: 56–62.

- Botzung A, Labar KS, Kragel P, Miles A, Rubin DC (2010): Component neural systems for the creation of emotional memories during free viewing of a complex, real‐world event. Front Hum Neurosci 4: 34.

- Cahill L, McGaugh JL (1998): Mechanisms of emotional arousal and lasting declarative memory. Trends Neurosci 21: 294–299.

- Calder AJ, Lawrence AD, Keane J, Scott SK, Owen AM, Christoffels I, Young AW (2002): Reading the mind from eye gaze. Neuropsychologia 40: 1129–1138.

- Calder AJ, Nummenmaa L (2007): Face cells: Separate processing of expression and gaze in the amygdala. Curr Biol 17: R371–R372.

- Conty L, Gimmig D, Belletier C, George N, Huguet P (2010): The cost of being watched: Stroop interference increases under concomitant eye contact. Cognition 115: 133–139.

- Conty L, N'Diaye K, Tijus C, George N (2007): When eye creates the contact! ERP evidence for early dissociation between direct and averted gaze motion processing. Neuropsychologia 45: 3024–3037.

- Conty L, Tijus C, Hugueville L, Coelho E, George N (2006): Searching for asymmetries in the detection of gaze contact versus averted gaze under different head views: A behavioural study. Spat Vis 19: 529–545.

- Cristinzio C, N'Diaye K, Seeck M, Vuilleumier P, Sander D (2010): Integration of gaze direction and facial expression in patients with unilateral amygdala damage. Brain 133: 248–261.

- D'Argembeau A, Van der Linden M, Etienne AM, Comblain C (2003): Identity and expression memory for happy and angry faces in social anxiety. Acta Psychol (Amst) 114: 1–15.

- D'Argembeau A, Van der Linden M (2007): Facial expressions of emotion influence memory for facial identity in an automatic way. Emotion 7: 507–515.

- Driver J, Davis G, Ricciardelli P, Kidd P, Maxwell E, Baron‐Cohen S (1999): Gaze perception triggers reflexive visuospatial orienting. Vis Cogn 6: 509–540.

- Eastwood JD, Smilek D, Merikle PM (2003): Negative facial expression captures attention and disrupts performance. Percept Psychophys 65: 352–358.

- Farroni T, Csibra G, Simion F, Johnson MH (2002): Eye contact detection in humans from birth. Proc Natl Acad Sci USA 99: 9602–9605.

- Frischen A, Bayliss AP, Tipper SP (2007): Gaze cueing of attention: Visual attention, social cognition, and individual differences. Psychol Bull 133: 694–724.

- George N, Conty L (2008): Facing the gaze of others. Neurophysiol Clin 38: 197–207.

- George N, Driver J, Dolan RJ (2001): Seen gaze‐direction modulates fusiform activity and its coupling with other brain areas during face processing. Neuroimage 13: 1102–1112.

- Gilboa‐Schechtman E, Erhard‐Weiss D, Jeczemien P (2002): Interpersonal deficits meet cognitive biases: Memory for facial expressions in depressed and anxious men and women. Psychiatry Res 113: 279–293.

- Greene DJ, Mooshagian E, Kaplan JT, Zaidel E, Iacoboni M (2009): The neural correlates of social attention: Automatic orienting to social and nonsocial cues. Psychol Res 73: 499–511.

- Hadjikhani N, Hoge R, Snyder J, de Gelder B (2008): Pointing with the eyes: The role of gaze in communicating danger. Brain Cogn 68: 1–8.

- Henke K (2010): A model for memory systems based on processing modes rather than consciousness. Nat Rev Neurosci 11: 523–532.

- Hietanen JK, Leppanen JM, Peltola MJ, Linna‐Aho K, Ruuhiala HJ (2008): Seeing direct and averted gaze activates the approach‐avoidance motivational brain systems. Neuropsychologia 46: 2423–2430.

- Hoffman KL, Gothard KM, Schmid MC, Logothetis NK (2007): Facial‐expression and gaze‐selective responses in the monkey amygdala. Curr Biol 17: 766–772.

- Itier RJ, Batty M (2009): Neural bases of eye and gaze processing: The core of social cognition. Neurosci Biobehav Rev 33: 843–863.

- Johansson M, Mecklinger A, Treese AC (2004): Recognition memory for emotional and neutral faces: An event‐related potential study. J Cogn Neurosci 16: 1840–1853.

- Kampe KK, Frith CD, Dolan RJ, Frith U (2001): Reward value of attractiveness and gaze. Nature 413: 589.

- Kampe KK, Frith CD, Frith U (2003): “Hey John”: Signals conveying communicative intention toward the self activate brain regions associated with “mentalizing,” regardless of modality. J Neurosci 23: 5258–5263.

- Kawashima R, Sugiura M, Kato T, Nakamura A, Hatano K, Ito K, Fukuda H, Kojima S, Nakamura K (1999): The human amygdala plays an important role in gaze monitoring. A PET study. Brain 122: 779–783.

- LaBar KS, Cabeza R (2006): Cognitive neuroscience of emotional memory. Nat Rev Neurosci 7: 54–64.

- Langton SR, Watt RJ, Bruce V (2000): Do the eyes have it? Cues to the direction of social attention. Trends Cogn Sci 4: 50–59.

- Levine LJ, Edelstein RS (2009): Emotion and memory narrowing: A review and goal‐relevance approach. Cogn Emot 23: 833–875.

- Liszkowski U, Carpenter M, Henning A, Striano T, Tomasello M (2004): Twelve‐month‐olds point to share attention and interest. Dev Sci 7: 297–307.

- Mason MF, Cloutier J, Macrae CN (2006): On construing others: Category and stereotype activation from facial cues. Soc Cogn 24: 540–562.

- Mason MF, Hood BM, Macrae CN (2004): Look into my eyes: Gaze direction and person memory. Memory 12: 637–643.

- Materna S, Dicke PW, Thier P (2008a): Dissociable roles of the superior temporal sulcus and the intraparietal sulcus in joint attention: A functional magnetic resonance imaging study. J Cogn Neurosci 20: 108–119.

- Materna S, Dicke PW, Thier P (2008b): The posterior superior temporal sulcus is involved in social communication not specific for the eyes. Neuropsychologia 46: 2759–2765.

- McGaugh JL (2000): Memory: A century of consolidation. Science 287: 248–251.

- McGaugh JL (2004): The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annu Rev Neurosci 27: 1–28.

- Muscatell KA, Addis DR, Kensinger EA (2009): Self‐involvement modulates the effective connectivity of the autobiographical memory network. Soc Cogn Affect Neurosci 5: 68–76.

- N'Diaye K, Sander D, Vuilleumier P (2009): Self‐relevance processing in the human amygdala: Gaze direction, facial expression, and emotion intensity. Emotion 9: 798–806.

- Nairne JS, Pandeirada JN, Gregory KJ, Van Arsdall JE (2009): Adaptive memory: Fitness relevance and the hunter‐gatherer mind. Psychol Sci 20: 740–746.

- Niedenthal PM, Mermillod M, Maringer M, Hess U (2010): The Simulation of Smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behav Brain Sci 33: 417–433.

- Nummenmaa L, Passamonti L, Rowe J, Engell AD, Calder AJ (2010): Connectivity analysis reveals a cortical network for eye gaze perception. Cereb Cortex 20: 1780–1787.

- Pichon S, de Gelder B, Grezes J (2009): Two different faces of threat. Comparing the neural systems for recognizing fear and anger in dynamic body expressions. Neuroimage 47: 1873–1883.

- Rabin JS, Gilboa A, Stuss DT, Mar RA, Rosenbaum RS (2010): Common and unique neural correlates of autobiographical memory and theory of mind. J Cogn Neurosci 22: 1095–1111.

- Richter‐Levin G, Akirav I (2003): Emotional tagging of memory formation: In the search for neural mechanisms. Brain Res Brain Res Rev 43: 247–256.

- Ridout N, Noreen A, Johal J (2009): Memory for emotional faces in naturally occurring dysphoria and induced sadness. Behav Res Ther 47: 851–860.

- Sander D, Grafman J, Zalla T (2003): The human amygdala: An evolved system for relevance detection. Rev Neurosci 14: 303–316.

- Sander D, Grandjean D, Kaiser S, Wehrle T, Scherer KR (2007): Interaction effects of perceived gaze direction and dynamic facial expression: Evidence for appraisal theories of emotion. Eur J Cogn Psychol 19: 470–480.

- Sato W, Kochiyama T, Uono S, Yoshikawa S (2010): Amygdala integrates emotional expression and gaze direction in response to dynamic facial expressions. Neuroimage 50: 1658–1665.

- Sato W, Kochiyama T, Yoshikawa S, Naito E, Matsumura M (2004): Enhanced neural activity in response to dynamic facial expressions of emotion: An fMRI study. Brain Res Cogn Brain Res 20: 81–91.

- Schilbach L, Wohlschlaeger AM, Kraemer NC, Newen A, Shah NJ, Fink GR, Vogeley K (2006): Being with virtual others: Neural correlates of social interaction. Neuropsychologia 44: 718–730.

- Senju A, Hasegawa T, Tojo Y (2005): Does perceived direct gaze boost detection in adults and children with and without autism? The stare‐in‐the‐crowd effect revisited. Vis Cogn 12: 1474–1496.

- Sharot T, Phelps EA (2004): How arousal modulates memory: Disentangling the effects of attention and retention. Cogn Affect Behav Neurosci 4: 294–306.

- Sweeny TD, Grabowecky M, Suzuki S, Paller KA (2009): Long‐lasting effects of subliminal affective priming from facial expressions. Conscious Cogn 18: 929–938.

- Von Grünau M, Anston C (1995): The detection of gaze direction: A stare‐in‐the‐crowd effect. Perception 24: 1297–1313.

- Vrticka P, Andersson F, Sander D, Vuilleumier P (2009): Memory for friends or foes: The social context of past encounters with faces modulates their subsequent neural traces in the brain. Soc Neurosci 4: 384–401.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2001): Effects of attention and emotion on face processing in the human brain: An event‐related fMRI study. Neuron 30: 829–841.
