Abstract

Humans often watch interactions between other people without taking part in the interaction themselves. Strikingly little is, however, known about how gestures and expressions of two interacting humans are processed in the observer's brain, since the study of social cues has mostly focused on the perception of solitary humans. To investigate the neural underpinnings of the third‐person view of social interaction, we studied brain activations of subjects who observed two humans either facing toward or away from each other. Activations within the amygdala, posterior superior temporal sulcus (pSTS), and dorsomedial prefrontal cortex (dmPFC) were sensitive to the interactional position of the observed people and distinguished humans facing toward from humans facing away. The amygdala was most sensitive to face‐to‐face interaction and did not differentiate the humans facing away from the pixelated control figures, whereas the pSTS dissociated both human stimuli from the pixel figures. The results of the amygdala reactivity suggest that, in addition to regulating interpersonal distance towards oneself, the amygdala is involved in the assessment of the proximity between two other persons. Hum Brain Mapp, 2012. © 2011 Wiley Periodicals, Inc.

Keywords: fMRI, body, human, social interaction, amygdala, superior temporal sulcus

INTRODUCTION

Healthy human adults are able to read nonverbal social cues from others, relying heavily on facial and bodily expressions [for reviews, see e.g. Adolphs, 2010a; de Gelder et al., 2010; Hari and Kujala, 2009; Puce and Perrett, 2003]. To date, neuroimaging studies of social cognition have mainly focused on the perception of faces, body postures, or gestures of solitary humans, or on brain activity related to thinking about other people. Such studies have revealed activation of a socio‐emotional network that comprises, e.g., the amygdala [for a review, see Adolphs, 2010b], fusiform gyrus [Kanwisher et al., 1997], posterior superior temporal sulcus (pSTS) [for a review, see Allison et al., 2000], and extrastriate body area [Downing et al., 2001]; the network also involves the temporal poles [for a review, see Olson et al., 2007], temporo‐parietal junction [Saxe and Kanwisher, 2003], and the dorsomedial frontal areas [for a review, see Frith and Frith, 2003].

Brain imaging studies of visually observed social interaction between people are still rare and have relied on, e.g., cartoon [Walter et al., 2004] or biological‐motion [Centelles et al., 2011] stimuli, or have compared observation of two interacting persons vs. one person [Iacoboni et al., 2004]. One earlier study investigated brain activity in subjects observing two persons (faces blurred) interacting in different situations: one person either innocently teasing or violently threatening the other [Sinke et al., 2010]. The amygdala was activated more strongly by threatening than by teasing interactions, regardless of whether the emotional context was attended or unattended.

Relatively little is still known about how gestures and expressions of two naturally interacting humans are processed in the observer's brain. Many questions remain unanswered, for example, how the brain areas known to process visual images of single humans contribute to the analysis of the observed interactive stance between two people, how the mere direction of the observed interaction affects the processing, or how the viewer's gaze lands on the observed images of social interaction.

Here, we investigated the brain processes involved in observing still images of friendly social interaction between two humans. We measured hemodynamic brain responses with functional magnetic resonance imaging (fMRI) from a group of healthy adult subjects while they observed photos of two persons either engaged in a friendly face‐to‐face interaction (Toward) or facing away from each other (Away). Pixelated and crystallized photos served as control stimuli (Pixel; Fig. 1). We hypothesized that observing photos of interaction between two persons activates brain areas that have earlier been associated with socio‐emotional processing of single persons. We also expected to find differences between responses in the Toward and Away conditions, which would clarify the roles of the different brain areas that support the interpretation of social interaction between others.

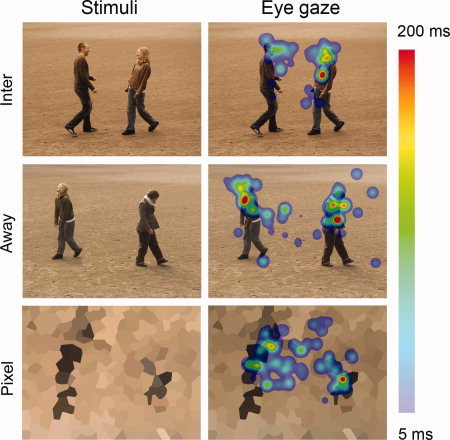

Figure 1.

Examples of stimuli and eye gaze patterns. Left: The stimuli contained humans either engaged in interaction (Toward) or together but facing away from each other (Away), as well as crystallized pixel figures (Pixel). Right: The corresponding average eye gaze maps of the subjects. The color coding represents the average fixation duration (from a minimum of 5 ms, light blue, to a maximum of 200 ms or more, bright red).

MATERIALS AND METHODS

Subjects

Twenty‐two (22) healthy subjects participated in the measurements: 3 subjects in the pilot recordings and 19 subjects in the final study. The study had prior approval from the ethics committee of the Helsinki and Uusimaa district. All participants gave their written informed consent prior to the experiment, and a similar consent was also obtained from the actors before they were videotaped for stimulus production.

Data from one subject were discarded due to excess head motion; thus, data from 18 subjects (9 females and 9 males, aged 19–41 years, mean ± SD 28.3 ± 6.8 years) were fully analyzed. The subjects were mostly right‐handed according to the Edinburgh Handedness Inventory [Oldfield, 1971]: on a scale from −1 (left) to +1 (right), the mean ± SD score was 0.77 ± 0.41. Subjects were compensated monetarily for lost working hours and travel expenses.
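Oldfield's laterality quotient is commonly computed as (R − L)/(R + L), usually multiplied by 100. The −1 to +1 scale reported above corresponds to omitting that factor. The minimal sketch below assumes that R and L are the summed right‑ and left‑hand preference counts over the inventory items. The function name is illustrative, and the per‑item scoring used in this study is not described.

```python
def laterality_quotient(right: int, left: int) -> float:
    """Handedness on a -1 (fully left) to +1 (fully right) scale.

    `right` and `left` are summed preference counts from the inventory items
    (hypothetical inputs; the study's item-level scoring is not given).
    """
    total = right + left
    if total == 0:
        raise ValueError("at least one hand preference must be recorded")
    return (right - left) / total


# A respondent with 18 right-hand and 2 left-hand preference marks:
print(laterality_quotient(18, 2))
```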

Stimuli

Preparation

For the stimulus material, students from the Theatre Academy of Finland were recruited for a photo and video shoot in the sand field of a recreational park. Only still pictures, clipped from the videos, were used in the experiment, because the direction of interaction was clearly deducible from them and because still images are more straightforward than videos for eye gaze analysis. The actors, two males and two females, were filmed in various situations; e.g., two actors were either facing toward or away from each other.

After digital editing with Adobe Photoshop CS4 v.11.0.2, 10 photos from each of four actor pairs were selected for both the facing‐toward and facing‐away conditions, resulting in 40 photos per category. The photos fulfilled the following criteria: (i) the photo was technically in focus and clearly presented the event in question (e.g., face‐to‐face interaction between two actors), and (ii) a variety of different postures was captured for each actor (i.e., the different photos of interacting actors did not appear identical). Furthermore, a visual control category of pixel photos was created. These photos were designed to show no natural social communication while still forming separate figures that could attract eye gaze in a manner similar to the other photos.

This process resulted in 120 photos, divided into three categories of 40 images each. Each of the two human categories included all four actors, each actor appearing 20 times per category. The stimulus categories were as follows:

(1) Two persons facing each other and greeting by shaking hands, hugging, or touching each other on the shoulder (Toward). Explicit touch, or overlap of bodies in the image caused by actors standing partly side by side, was evident in 21/40 of these stimuli.

(2) Two persons in the same photo but facing away from each other (Away).

(3) Crystallized pixel figures as control stimuli (Pixel) (see Fig. 1).

Presentation

The images, displayed on a projection screen by a data projector (Christie Vista X3, Christie Digital Systems, USA), were 640 × 480 pixels in size (width × height; 20 cm × 14 cm on the screen). They were overlaid on a gray background of 1,024 × 768 pixels and presented at a frame rate of 75 Hz. Stimulus presentation was controlled with Presentation® software (http://nbs.neuro-bs.com/) running on a PC. The stimuli were viewed binocularly from a distance of 34 cm and presented in a block design.
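The on‑screen image size and viewing distance given above can be converted to visual angle with θ = 2·atan(s / 2d). The minimal sketch below assumes that 34 cm is the full optical path from the eye to the screen; the mirror geometry is not described further. With that assumption, the 20 cm × 14 cm images subtend roughly 32.8° × 23.3°.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) of an object of `size_cm` centred at `distance_cm`."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


width_deg = visual_angle_deg(20, 34)   # image width on the screen
height_deg = visual_angle_deg(14, 34)  # image height on the screen
print(f"{width_deg:.1f} x {height_deg:.1f} deg")
```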

The experiment included four kinds of blocks (Toward, Away, Pixel, and Rest). Each block consisted of one type of stimulus or, in Rest blocks, a fixation cross displayed on a gray background. Other human and animal stimuli that were irrelevant for this study were also shown in the same experiment. Each stimulus was displayed for 2.5 s within a continuous 25‐s stimulus block, which thus contained 10 stimuli; the stimulus blocks alternated with 25‐s Rest blocks. The data were gathered in two successive recording sessions, each 12 min 5 s in duration and comprising 140 stimuli presented in a pseudorandomized order. The order of the stimulus blocks was pseudorandomized within each session, and the order of the sessions was counterbalanced across subjects. Each recording session started and ended with a rest block and thus included altogether 14 stimulus blocks and 15 rest blocks.
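The session timing follows directly from the block structure described above: 15 rest + 14 stimulus blocks × 25 s = 725 s = 12 min 5 s, with 14 × 10 = 140 images. The sketch below checks this arithmetic. The block labels are placeholders, since the pseudorandomized assignment of Toward, Away, Pixel, and other stimulus types to blocks is not given. The volume count uses the 2.5‑s repetition time from Data Acquisition and assumes that the six dummy volumes are excluded.

```python
BLOCK_S = 25.0       # duration of every stimulus and rest block (s)
STIM_S = 2.5         # display time of one image (s)
TR_S = 2.5           # EPI repetition time (s)
N_STIM_BLOCKS = 14   # stimulus blocks per recording session


def session_schedule(n_stim_blocks: int) -> list:
    """Rest and stimulus blocks alternating, starting and ending with rest.

    "STIM" is a placeholder label; the actual stimulus type of each block
    was pseudorandomized and is not reproduced here.
    """
    schedule = ["REST"]
    for _ in range(n_stim_blocks):
        schedule += ["STIM", "REST"]
    return schedule


blocks = session_schedule(N_STIM_BLOCKS)
duration_s = len(blocks) * BLOCK_S
n_images = blocks.count("STIM") * int(BLOCK_S / STIM_S)
n_volumes = int(duration_s / TR_S)  # excludes the 6 dummy volumes (assumption)
minutes, seconds = divmod(int(duration_s), 60)
print(f"{blocks.count('REST')} rest blocks, {n_images} images, "
      f"{minutes} min {seconds} s, {n_volumes} volumes")
```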

Subject Instruction

Prior to the experiment, the subjects were informed that they would see images of people and abstract pixel compositions. They were instructed to explore the images freely and inspect the attitude of the individuals towards each other or towards their surroundings, whenever possible. They were also asked to avoid overt and covert verbalizing, and to keep the head stable.

Data Acquisition

The magnetic resonance data were acquired with a whole‐body General Electric Signa® 3.0 T MRI scanner at the Advanced Magnetic Imaging Centre. During the experiment, the subject lay supine in the scanner and viewed the stimulus images through a mirror attached to the standard 8‐channel head coil.

Functional MR images were acquired using a gradient‐echo echo‐planar imaging sequence with the following parameters: field of view = 240 × 240 mm², repetition time = 2,500 ms, echo time = 32 ms, number of excitations = 1, flip angle = 75°, and matrix size = 64 × 64. Before the stimulation, six dummy volumes were acquired to allow the MR signal to stabilize. Altogether 42 slices (thickness 3.0 mm) were acquired in interleaved order. The resulting functional voxels were 3.75 × 3.75 × 3 mm³ in size.

Structural T1‐weighted images were acquired using a standard spoiled gradient‐echo sequence with a matrix size of 256 × 256, repetition time of 9.2 ms, field of view of 260 × 260 mm², flip angle of 15°, and slice thickness of 1 mm, resulting in 1 × 1.016 × 1.016 mm³ voxels.
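The in‑plane voxel sizes quoted for both sequences are the field of view divided by the matrix size: 240/64 = 3.75 mm for the functional images and 260/256 ≈ 1.016 mm for the structural images. The slice direction is set by the slice thickness. The sketch below assumes that the reconstruction matrix equals the stated acquisition matrix.

```python
def in_plane_voxel_mm(fov_mm: float, matrix: int) -> float:
    """In-plane voxel edge length = field of view / matrix size."""
    return fov_mm / matrix


epi_mm = in_plane_voxel_mm(240, 64)   # functional EPI
t1_mm = in_plane_voxel_mm(260, 256)   # structural T1
print(f"EPI voxel: {epi_mm} x {epi_mm} x 3.0 mm")
print(f"T1 voxel:  1.0 x {t1_mm:.3f} x {t1_mm:.3f} mm")
```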

Data Preprocessing

The fMRI data were analyzed with BrainVoyager QX software version 1.10.2/3 (Brain Innovation B.V., The Netherlands). Preprocessing included slice scan time correction, 3D motion correction with the first volume as the reference, linear trend removal, and high‐pass filtering at 0.008 Hz.
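The 0.008 Hz cut‑off corresponds to a period of 125 s. It lies well below the 0.02 Hz fundamental of the 50‑s stimulus–rest cycle, so slow drifts are removed while the task signal survives. The sketch below illustrates linear trend removal plus high‑pass filtering by regressing out a linear term and low‑frequency cosines. It uses a DCT‑II basis, as in SPM‑style filtering, which is a swapped‑in technique and not BrainVoyager's own implementation. The function name is hypothetical.

```python
import numpy as np


def highpass_detrend(y, tr_s, cutoff_hz):
    """Regress out a linear trend and DCT-II cosines slower than `cutoff_hz`.

    DCT-II basis function k oscillates at k / (2 * n * tr_s) Hz; all k with a
    frequency at or below the cut-off are removed. The mean is kept.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    t = np.arange(n)
    k_max = int(np.floor(cutoff_hz * 2 * n * tr_s))
    drifts = [t - t.mean()]
    drifts += [np.cos(np.pi * k * (2 * t + 1) / (2 * n)) for k in range(1, k_max + 1)]
    X = np.column_stack([np.ones(n)] + drifts)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X[:, 1:] @ beta[1:]  # subtract drift terms only, not the constant


# One 290-volume session: rest first, then alternating 25-s blocks, plus a linear drift.
TR_S, N_VOLS = 2.5, 290
t = np.arange(N_VOLS)
block = ((t * TR_S) // 25 % 2 == 1).astype(float)
filtered = highpass_detrend(block + 0.01 * t, TR_S, 0.008)
```

Because the block cycle (k = 29 in this basis) lies far above the highest removed component (k = 11), `filtered` stays almost perfectly correlated with the block regressor.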

Functional and anatomical data were resampled to isotropic 3 × 3 × 3 mm³ and 1 × 1 × 1 mm³ voxels, respectively, and normalized to Talairach space [Talairach and Tournoux, 1988]. All data were analyzed further at this resolution. For visualization of the statistical maps, the functional data were subsequently interpolated to the resolution of the anatomical images.

Statistical Analysis

A whole‐brain analysis was conducted to identify activation differences between the stimulus conditions. Brain activations were analyzed statistically using a random‐effects general linear model (RFX‐GLM). The individual time courses were normalized with a z‐transformation, i.e., by subtracting the mean signal and dividing by the standard deviation of the signal. The RFX‐GLM predictors were obtained by convolving the time courses of the stimulus blocks with a canonical hemodynamic response function to model blood‐oxygenation‐level‐dependent (BOLD) activations.
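The first‑level steps above can be sketched as follows: z‑transform a voxel time course, build a block predictor by convolving a boxcar with a two‑gamma HRF, and fit the GLM by least squares. Several choices here are assumptions. The HRF shapes (6 and 16) and undershoot ratio (1/6) are common SPM‑style defaults, not necessarily BrainVoyager's parameters. The onsets are hypothetical, and all stimulus blocks are treated as one condition, whereas the study used separate Toward, Away, and Pixel predictors.

```python
import numpy as np
from scipy.stats import gamma

TR_S = 2.5
N_VOLS = 290  # one 12 min 5 s session at TR = 2.5 s


def zscore(ts):
    """Subtract the mean signal, then divide by its standard deviation."""
    ts = np.asarray(ts, dtype=float)
    return (ts - ts.mean()) / ts.std()


def double_gamma_hrf(tr_s, length_s=30.0):
    """Two-gamma HRF sampled every tr_s seconds (assumed SPM-style shape)."""
    t = np.arange(0.0, length_s, tr_s)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def block_predictor(onsets_s, block_s, n_vols, tr_s):
    """Boxcar for one condition, convolved with the HRF and cut to n_vols."""
    box = np.zeros(n_vols)
    for onset in onsets_s:
        box[int(onset / tr_s):int((onset + block_s) / tr_s)] = 1.0
    return np.convolve(box, double_gamma_hrf(tr_s))[:n_vols]


def fit_glm(y, predictors):
    """Ordinary least squares with an added constant; one beta per column."""
    X = np.column_stack(list(predictors) + [np.ones(len(y))])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Hypothetical onsets: all 14 stimulus blocks (25, 75, ..., 675 s) as one condition.
pred = block_predictor(np.arange(25, 725, 50), 25, N_VOLS, TR_S)
y = zscore(2.0 * pred + 5.0)   # noiseless toy voxel
beta = fit_glm(y, [pred])
```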

All statistical maps were corrected for multiple comparisons using false discovery rate (FDR) correction [Genovese et al., 2002] as implemented in the BVQX software, and a cluster‐size (k) threshold of seven contiguous voxels was applied. The main effects of human stimuli were inspected with bidirectional statistical maps (showing contrasts in both directions within the whole brain) of “Humans vs. Rest” (the term “Rest” referring to the baseline level of the signal). The main effects of pixel stimuli were inspected with similar statistical maps of “Pixels vs. Rest”. Brain activations related specifically to human stimuli were inspected with the contrast “Humans vs. Pixels” (balanced for the number of stimuli), and the specific contributions of the two types of human stimuli were clarified with the contrasts “Toward vs. Pixel” and “Away vs. Pixel”. The difference between humans engaged in face‐to‐face interaction and humans facing away from each other was obtained with the comparison “Toward vs. Away”.
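One common way to express the contrasts above is as weight vectors over the condition betas. In a random‑effects analysis, each subject's contrast value is then tested against zero across subjects. In the sketch below, the predictor order [Toward, Away, Pixel] and the beta values are made up for illustration. The weights (0.5, 0.5, −1) show what "balanced" means for “Humans vs. Pixels”: the two human conditions together carry the same weight as Pixel.

```python
import numpy as np
from scipy.stats import ttest_1samp

# Hypothetical predictor order for illustration: [Toward, Away, Pixel].
CONTRASTS = {
    "Humans vs. Pixels": np.array([0.5, 0.5, -1.0]),  # balanced: weights sum to zero
    "Toward vs. Pixel": np.array([1.0, 0.0, -1.0]),
    "Away vs. Pixel": np.array([0.0, 1.0, -1.0]),
    "Toward vs. Away": np.array([1.0, -1.0, 0.0]),
}


def rfx_contrast_test(subject_betas, weights):
    """Second-level random-effects test at one voxel.

    subject_betas: array (n_subjects, 3) of first-level betas.
    Each subject's contrast value is tested against zero (one-sample t-test).
    """
    contrast_values = np.asarray(subject_betas, dtype=float) @ weights
    return ttest_1samp(contrast_values, 0.0)


# Made-up betas for four subjects, only to show the call.
betas = np.array([[1.0, 0.5, 0.2],
                  [1.2, 0.4, 0.1],
                  [0.9, 0.6, 0.3],
                  [1.1, 0.5, 0.0]])
result = rfx_contrast_test(betas, CONTRASTS["Toward vs. Away"])
print(result.statistic, result.pvalue)
```

With the 18 analyzed subjects, such a test would have 17 degrees of freedom.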

The FDR‐corrected statistical threshold was q(FDR) < 0.005 for stimulus vs. baseline comparisons, q(FDR) < 0.01 for the comparison of human and pixel stimuli, and q(FDR) < 0.05 for the contrasts Toward vs. Away, Away vs. Pixel, and Toward vs. Pixel. For the latter two contrasts, a stricter threshold of q(FDR) < 0.005 was additionally applied, owing to the widespread activation, to separate conjoined brain areas for classification in Table I. Brain activations in all contrasts were identified with standard brain atlases [Duvernoy, 1999; Talairach and Tournoux, 1988].
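The thresholding described above combines an FDR cut‑off with a minimum cluster size. The sketch below uses the Benjamini–Hochberg step‑up rule, the standard FDR procedure discussed by Genovese et al. (2002), followed by a k = 7 cluster‑extent filter. Two aspects are assumptions and may differ from what BVQX does: neighbouring voxels are taken to share a face (6‑connectivity, SciPy's default), and the function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def bh_threshold(p_values, q):
    """Benjamini-Hochberg cut-off: the largest sorted p(i) with p(i) <= q * i / m.

    Voxels with p <= the returned value survive. Returns 0.0 when nothing
    survives (any p of exactly 0 would itself survive, so none pass then).
    """
    p_sorted = np.sort(np.ravel(p_values))
    m = p_sorted.size
    passes = p_sorted <= q * np.arange(1, m + 1) / m
    return float(p_sorted[passes].max()) if passes.any() else 0.0


def cluster_filter(mask, k=7):
    """Keep only clusters of at least k face-connected voxels."""
    labels, _ = ndimage.label(mask)
    sizes = np.bincount(labels.ravel())
    keep = sizes >= k
    keep[0] = False  # label 0 is the background
    return keep[labels]


p = np.array([0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216])
print(bh_threshold(p, q=0.05))

mask = np.zeros((5, 5, 5), dtype=bool)
mask[0, 0, 0:3] = True        # 3-voxel cluster: removed
mask[2:4, 2:4, 2:4] = True    # 8-voxel cluster: kept
print(cluster_filter(mask).sum())
```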

Table I.

Differences between human and pixel stimuli

| Activations | Toward vs. Away: mm³ | Toward vs. Away: peak (x, y, z) | Toward vs. Pixel: mm³ | Toward vs. Pixel: peak (x, y, z) | Away vs. Pixel: mm³ | Away vs. Pixel: peak (x, y, z) |
|---|---|---|---|---|---|---|

| Amygdala | 2,916a | 32, 2, −3 | 2,511b | 21, −10, −11 | ||

| 1,701 | −18, −9, −8 | |||||

| 351 | −27, −7, −14 | |||||

| pSTS | 1,566 | 51, −67, −7 | 9,288 | 46, −64, 8 | 46,602d | 46, −64, 8 |

| 3,969 | −45, −63, 16 | 3,429 | −45, −64, 6 | 9,774 | −41, −69, 7 | |

| Superior frontal gyrus (dmPFC) | 999 | 4, 39, 44 | 540 | 4, 50, 34 | ||

| 945 | −12, 39, 35 | 189 | −12, 59, 34 | |||

| 531 | −15, 21, 33 | |||||

| Middle STS | 216 | 52, −10, −10 | 1,134 | 57, −3, −11 | ||

| 189 | −51, −10, −13 | |||||

| Superior parietal gyrus | 891 | 22, −40, 49 | ||||

| Cingulate gyrus | 702 | 10, −1, 23 | ||||

| 324 | 3, −16, 34 | |||||

| Insula | 486 | −35, 11, −1 | ||||

| Superior temporal gyrus | 324 | −57, −49, 13 | ||||

| Middle temporal gyrus | 324 | 60, −39, −2 | ||||

| Inferior parietal gyrus | 324 | −57, −40, 16 | ||||

| Temporal pole | 189 | 45, 11, −23 | 675 | 40, 8, −26 | ||

| 189 | −48, 6, −20 | |||||

| Fusiform gyrus | 324 | −39, −78, −14 | ||||

| 594 | −44, −48, −26 | |||||

| Precuneus | 5,967 | 1, −66, 26 | ||||

| Angular gyrus | 243 | −48, −73, 23 | ||||

| Occipitopolar sulcus | 1,566 | 19, −97, −17 | ||||

| 513 | −24, −100, −25 | |||||

| Caudate nucleus | 621 | 20, 21, 7 | 648 | 0, 8, 5 | ||

| 4,482 | −18, −10, 15 | |||||

| 324 | −12, −19, 22 | |||||

| 405 | −25, −16, 24 | |||||

| Cerebellum‐pons | 459 | 6, −30, −13 | 216 | 3, −61, −36 | ||

| 324 | −9, −28, −10 | 216 | −5, −64, −32 | |||

| Thalamus | 513c | 9, −1, −5 | 270 | 1, −13, 8 | ||

| 783 | −6, −4, 4 | 189 | 0, −32, 12 | |||

| Hippocampus | 378 | −21, −16, −14 | 297 | −28, −21, −15 | ||

| Deactivations | ||||||

| Calcarine sulcus | 7,182 | −14, −74, −8 | 6,399 | −8, −78, −8 | 19,872 | −17, −81, −18 |

| Middle occipital gyrus | 324 | −26, −85, 13 | 432 | −14, −91, 7 | ||

| Intraparietal sulcus | 1,539 | 50, −37, 48 | ||||

| 216 | 38, −46, 57 | |||||

| 6,696 | 22, −84, 17 | |||||

| 891 | −24, −72, 35 | |||||

| 2,565 | −29, −90, 11 | |||||

| 1,512 | −42, −40, 39 | |||||

| Middle frontal gyrus | 783 | 35, 32, 24 | ||||

| 351 | −41, −4, 31 | |||||

| Inferior occipital gyrus | 2,241 | −50, −61, −13 | ||||

Activations = signal stronger in the [1st] vs. [2nd] condition; deactivations = signal stronger in the [2nd] vs. [1st] condition. Peak coordinates are given in Talairach space (mm).

a: Conjoined with insula.

b: Conjoined with hippocampus.

c: Conjoined with hypothalamus.

d: Conjoined with precuneus and posterior cingulate.

Eye Tracking

The subjects' eye gaze was tracked during the fMRI scanning with SMI MEye Track long‐range eye‐tracking system (Sensomotoric Instruments GmbH, Germany), based on video‐oculography and the dark pupil‐corneal reflection method.

The infrared camera was placed at the foot of the bed to monitor the subject's eye via a mirror attached to the head coil, and an infrared light source was placed on the mirror box to illuminate the eye. The camera was shielded in‐house and did not affect the signal‐to‐noise ratio of the fMRI data. The eye tracker was calibrated prior to the experiment using 5 fixation points, and the data were sampled at 60 Hz.

Eye gaze was tracked successfully in 11 subjects, and the data were analyzed with BeGaze 2.0 (Sensomotoric Instruments GmbH, Germany). Blinks were removed from the data, and fixations were detected with a dispersion‐threshold identification algorithm using a 2° dispersion window and a minimum fixation duration of 120 ms. The gaze maps were calculated with a 70‐pixel smoothing kernel and color‐coded for average fixation durations from 5 ms to 200 ms or more (see Fig. 1).
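Dispersion‑threshold identification (I‑DT) slides a window spanning the minimum fixation duration over the gaze samples. If the points in the window stay within the dispersion limit, the window grows until the limit is exceeded, and the covered samples are labelled one fixation. At 60 Hz, 120 ms corresponds to a minimum window of 8 samples. The sketch below follows Salvucci and Goldberg's formulation, with dispersion taken as (max x − min x) + (max y − min y) in degrees. BeGaze's exact definition is not documented here and may differ. The gaze data are synthetic.

```python
import numpy as np


def _dispersion(x, y, a, b):
    """(max x - min x) + (max y - min y) over samples a..b-1."""
    return (x[a:b].max() - x[a:b].min()) + (y[a:b].max() - y[a:b].min())


def idt_fixations(x_deg, y_deg, fs_hz=60.0, max_disp_deg=2.0, min_dur_ms=120.0):
    """Dispersion-threshold identification (I-DT) of fixations.

    x_deg, y_deg: gaze samples in degrees of visual angle (blinks removed).
    Returns (start, end_exclusive, duration_ms) for each detected fixation.
    """
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    n = len(x)
    win = int(np.ceil(min_dur_ms * fs_hz / 1000.0))  # 120 ms at 60 Hz -> 8 samples
    fixations = []
    start = 0
    while start + win <= n:
        end = start + win
        if _dispersion(x, y, start, end) <= max_disp_deg:
            # Grow the window while the points stay within the dispersion limit.
            while end < n and _dispersion(x, y, start, end + 1) <= max_disp_deg:
                end += 1
            fixations.append((start, end, (end - start) * 1000.0 / fs_hz))
            start = end
        else:
            start += 1  # drop the first sample and try again
    return fixations


# Synthetic gaze: 0.5 s at one location, then 0.5 s about 10 degrees away.
gaze_x = np.r_[np.zeros(30), np.full(30, 10.0)]
gaze_y = np.r_[np.zeros(30), np.full(30, 10.0)]
print(idt_fixations(gaze_x, gaze_y))
```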

Fixations in the Toward and Away categories were compared using all stimuli from both categories. For each photo, regions of interest were drawn manually around the human heads and bodies. The subjects' fixation durations within these regions were calculated separately for the humans positioned on the left and right sides of the stimulus (left head, left body, right head, and right body).
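Given detected fixations and the region‑of‑interest outlines, the per‑region fixation time is the summed duration of the fixations falling inside each region. The sketch below simplifies the hand‑drawn regions to axis‑aligned rectangles in image pixels. This simplification, the `Rect` class, and all coordinates are hypothetical. Each fixation is assigned to the first region containing it.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """Axis-aligned ROI in image pixels (a stand-in for hand-drawn outlines)."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def dwell_times(fixations, rois):
    """Sum fixation durations (ms) per ROI; fixations are (x, y, duration_ms).

    Each fixation is counted in the first ROI that contains it, so overlapping
    ROIs should be listed in priority order.
    """
    totals = {roi.name: 0.0 for roi in rois}
    for x, y, dur in fixations:
        for roi in rois:
            if roi.contains(x, y):
                totals[roi.name] += dur
                break
    return totals


rois = [Rect("left head", 100, 50, 160, 110), Rect("left body", 80, 110, 180, 400),
        Rect("right head", 460, 50, 520, 110), Rect("right body", 440, 110, 540, 400)]
fixations = [(130, 80, 200.0), (120, 300, 150.0), (490, 70, 250.0), (10, 10, 100.0)]
print(dwell_times(fixations, rois))
```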

RESULTS

Eye Gaze

The durations of fixations within the areas of interest for the Toward and Away conditions, respectively, were:

- left head: 408 ± 70 ms and 248 ± 70 ms
- left body: 245 ± 46 ms and 269 ± 51 ms
- right head: 451 ± 96 ms and 225 ± 50 ms
- right body: 215 ± 59 ms and 280 ± 60 ms

These results are based on all 40 Toward and 40 Away stimuli. Fixation times differed for the heads, which were viewed on average 193 ms longer in the Toward than in the Away condition (left head t(10) = 2.8, P < 0.05; right head t(10) = 2.7, P < 0.05; paired‐samples t‐test).
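The 193 ms figure is the mean of the two head differences: (408 − 248) = 160 ms and (451 − 225) = 226 ms, averaging 193 ms. The t(10) values follow from a paired test across the 11 eye‑tracked subjects (df = n − 1). The per‑subject values are not reported, so the arrays passed to the test below are made up purely to show the call and the degrees of freedom. They are not the study's data.

```python
from scipy.stats import ttest_rel

# Group means (ms) reported above, as (Toward, Away).
head_means = {"left head": (408, 248), "right head": (451, 225)}

diffs = {roi: toward - away for roi, (toward, away) in head_means.items()}
mean_diff = sum(diffs.values()) / len(diffs)
print(diffs, mean_diff)


def paired_head_test(toward_ms, away_ms):
    """Paired-samples t-test across subjects; returns (t, p, df) with df = n - 1."""
    res = ttest_rel(toward_ms, away_ms)
    return res.statistic, res.pvalue, len(toward_ms) - 1


# Made-up per-subject values for 11 subjects (illustration only).
toward = [420, 390, 455, 380, 410, 430, 400, 395, 445, 415, 405]
away = [250, 260, 240, 270, 235, 255, 245, 265, 230, 250, 240]
t_stat, p_val, df = paired_head_test(toward, away)
```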

Hemodynamic Activation Related to Observing Human Interaction

The main effects of humans (“Humans vs. Rest”) displayed bilateral activation within, e.g., the amygdala, thalamic nuclei, posterior superior temporal sulcus (pSTS), intraparietal sulcus (IPS), premotor cortex, and supplementary motor area (SMA) (top section of Fig. 2). Pixel images (“Pixels vs. Rest”) activated other parts of this circuitry but neither the amygdala nor the pSTS.

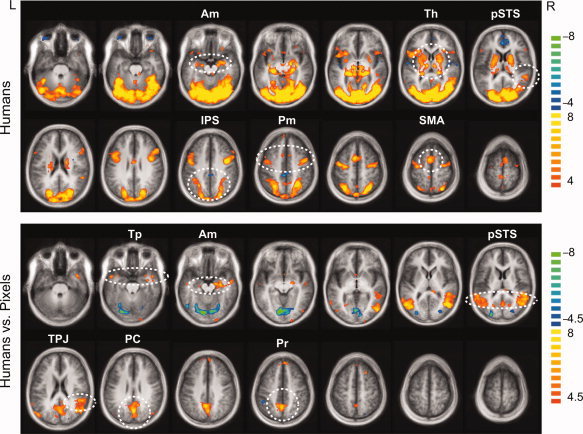

Figure 2.

Spatial distribution of the hemodynamic signals. Top: The main effects of Humans (Toward + Away). Human stimuli activated the amygdala, thalamus, pSTS and IPS, premotor cortex, and SMA (encircled). Bottom: Activation was stronger to Humans than to Pixels, e.g., in the temporal poles, amygdala, pSTS, TPJ, posterior cingulate, and precuneus (encircled). The statistical maps are overlaid on the anatomical MR image averaged across all subjects; t‐values are given on the right; q(FDR) < 0.005 for main effects and q(FDR) < 0.01 for Humans vs. Pixels. Axial slices from left to right are at the Talairach z‐coordinates of −28, −21, −14, −7, 0, 7, and 14 on the first row and 21, 28, 35, 42, 49, 56, and 63 on the second row. Am, amygdala; Th, thalamus; pSTS, posterior superior temporal sulcus; IPS, intraparietal sulcus; PM, premotor cortex; SMA, supplementary motor area; TPJ, temporo‐parietal junction; PC, posterior cingulate; Pr, precuneus.

Brain areas generally sensitive to images of humans were identified by contrasting the stimuli containing humans (Toward and Away) with pixel images (“Humans vs. Pixels”, balanced for the total number of stimuli). Responses were stronger to human than to pixel images, e.g., in the bilateral temporal poles, bilateral amygdala, bilateral pSTS (the right pSTS conjoined with the temporo‐parietal junction), posterior cingulate, and precuneus (“Humans vs. Pixels”, bottom section of Fig. 2).

Brain processing modified by social interaction was inspected by contrasts Toward vs. Away, Toward vs. Pixel and Away vs. Pixel (Table I). The activation within the amygdala, pSTS, and dorsomedial prefrontal cortex (dmPFC) in the superior frontal gyrus was robustly stronger in Toward than Away conditions (Top section of Fig. 3), and likewise, the activation in these areas was stronger in Toward than Pixel conditions (Table I).

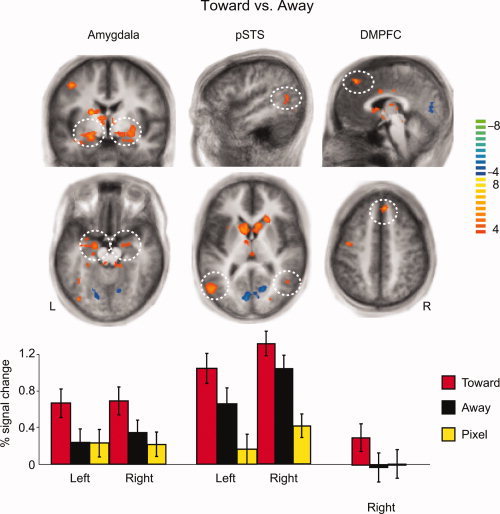

Figure 3.

Different response patterns of the amygdala, pSTS, and dmPFC in the Toward, Away, and Pixel conditions. Top and middle panels: The contrast Toward vs. Away was associated with activation in the amygdala, pSTS, and right dmPFC. Bottom panel: The signal changes within these areas show three patterns. The amygdala response was stronger in the Toward condition than in either the Away or Pixel condition. The pSTS showed enhanced responses to both types of human stimuli compared with the pixel images. The dmPFC showed activation only in the Toward condition. The cluster volumes obtained from the contrast are 888 mm³ in the left amygdala, 309 mm³ in the right amygdala, 1,678 mm³ in the left pSTS, 348 mm³ in the right pSTS, and 629 mm³ in the right dmPFC. The color bar on the right represents t‐values, and the axial slices are at Talairach z‐coordinates of −14, 7, and 44, respectively, from left to right.

Each of these areas (the amygdala, pSTS, and dmPFC) had a unique response profile. The percentage signal changes in the bottom panel of Figure 3 show that the amygdala responses were stronger in the Toward condition than in either the Away or Pixel condition, whereas responses did not differ between the Away and Pixel conditions. The pSTS activation, however, was stronger in both the Toward and Away conditions than in the Pixel condition, and also stronger in the Toward than in the Away condition. The dmPFC responded only to Toward stimuli, and its activity was weaker than that in the amygdala or the pSTS.
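Percentage signal change is one common way to express such ROI responses: the mean signal in a condition relative to the Rest baseline. The text does not state exactly how the plotted values were derived, for example from raw volumes or from GLM betas. The sketch below is therefore a generic illustration with a toy time course and hypothetical volume indices. In practice, the volume indices would be shifted by a few seconds to allow for the hemodynamic delay.

```python
import numpy as np


def percent_signal_change(ts, condition_vols, rest_vols):
    """Mean signal in a condition relative to the mean Rest signal, in percent."""
    ts = np.asarray(ts, dtype=float)
    baseline = ts[rest_vols].mean()
    return 100.0 * (ts[condition_vols].mean() - baseline) / baseline


# Toy ROI time course: two Rest volumes at 100, two Toward volumes at 101.
print(percent_signal_change([100, 100, 101, 101], [2, 3], [0, 1]))
```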

Only early visual areas, the cuneus and lingual gyrus, were activated more strongly in the Away than in the Toward condition (color‐coded blue–green in Fig. 3).

DISCUSSION

Third‐Person View to Interaction Between Two Individuals

This study explored brain activation patterns related to third‐person observation of interaction between two persons. Activation was stronger to human than to pixel stimuli in a brain circuitry comprising the temporal poles, amygdala, pSTS, temporo‐parietal junction, posterior cingulate, and precuneus. The nodes of this circuitry have previously been associated with socio‐emotional aspects of perceiving single humans, for example human bodies [Downing et al., 2007; Peelen and Downing, 2005; Peelen et al., 2006; Pourtois et al., 2007; Spiridon et al., 2006; Taylor et al., 2007], biological motion [Grossman and Blake, 2002; Pyles et al., 2007; Santi et al., 2003; Vaina et al., 2001], gestures or expressions [Adams and Kleck, 2003; Allison et al., 2000; Haxby et al., 2000], and theory of mind and mentalizing [Ruby and Decety, 2004; Saxe and Kanwisher, 2003]. According to the current results, the brain areas that process social cues of single humans also participate in the processing of social interaction observed between two individuals.

Somewhat surprising was the lack of difference between fusiform activations to human and Pixel stimuli. One explanation could be that our pixelated control stimuli, which were created from similar scenes, contextually triggered the perception or imagination of faces. Imagined faces [Ishai et al., 1999; O'Craven and Kanwisher, 2000] and contextual information regarding faces [Cox et al., 2004] are known to activate face‐sensitive brain areas. However, the stronger responses to human than to pixel images in the temporal poles, amygdala, pSTS, TPJ, posterior cingulate, and precuneus (see Fig. 2) suggest that other contextual information in the Pixel stimuli, apart from imagined faces, had a negligible effect.

Direction of Interaction as a Social Cue

Previously, the third‐person view of social interaction has been studied with subjects, e.g., observing audiovisual movies of everyday social situations [Iacoboni et al., 2004], reading comic strips involving the intentions of two persons [Walter et al., 2004], and viewing dynamic interactional bodily gestures of two persons whose faces were blurred [Sinke et al., 2010]. All these experiments demonstrated activation of the posterior cingulate/precuneus and dmPFC in association with the third‐person view of social interactions. Our findings support the involvement of the dmPFC in assessing social interaction, as we found dmPFC activation in both the Toward vs. Away and Toward vs. Pixel comparisons.

In addition to the dmPFC and cingulate‐cortex activations, our data showed stronger activation of the temporal poles to Toward than to Pixel stimuli, and stronger right temporal‐pole activation in the Away than in the Pixel condition, with no difference between the Toward and Away conditions. These findings suggest that the temporal poles reflect theory‐of‐mind‐related processing of the stimuli [Frith and Frith, 2003; Olson et al., 2007], which was applicable to both the Toward and Away conditions.

We used still images of humans as stimuli, whereas earlier studies of visually observed social interaction used dynamic video clips of two‐person interactions [Iacoboni et al., 2004; Sinke et al., 2010]. Interestingly, however, all these studies have found rather similar brain networks engaged when observing the social interactions of others from a third‐person perspective. In our study, merely observing a photo of face‐to‐face interaction between two people was enough to trigger this brain activity.

Analysis of Personal Space or Relational Interactions Between Others

Regulation of personal space has recently been linked to the function of the human amygdala. An individual with complete bilateral amygdala lesions had a diminished sense of personal space, and the amygdala was suggested to “trigger the strong emotional reactions normally following personal space violations, thus regulating interpersonal distance in humans” [Kennedy et al., 2009].

In our study, the amygdala was activated more strongly during observation of two people engaged in friendly face‐to‐face interaction than during observation of the same people facing in opposite directions. This difference might also be related to the perception of personal space, now between other persons, since in some of our stimuli the humans facing each other were physically closer to each other than those facing away. Whether the key factor here was the nature of the social interaction (i.e., whether the humans were facing toward or away from each other), the distance between the observed persons, or both, remains to be explored in future studies.

In some of the Toward stimuli, the face‐to‐face interacting humans were also touching or standing partly side by side, resulting in an overlap of their bodies in the 2D picture. However, the presence of touch itself, in the absence of social context, is unlikely to have caused the amygdala activation observed in our study, since observing other persons being touched is associated with somatosensory rather than amygdala activation [Banissy and Ward, 2007; Blakemore et al., 2005; Keysers et al., 2004].

Although both the amygdala and pSTS are known to respond to a variety of socio‐emotional stimuli [Adolphs, 2010b; Frith, 2007], the response profiles of these areas to the observed social interaction appeared very different in our study. The amygdala was activated more strongly in the Toward than in the Away or Pixel conditions, but it did not differentiate between the Away and Pixel conditions. In contrast, the pSTS systematically distinguished both the Toward and Away conditions from the Pixel condition, and further distinguished Toward from Away. In other words, the amygdala was not sensitive to the mere presence of people within the scene, whereas the pSTS was. The amygdala thus seems sensitive to face‐to‐face interaction between others, which may reveal a social relationship or a rising threat among others. The activation of the pSTS, on the other hand, suggests that its function is related to analyzing human gestures at a more detailed level, sampling both the presence of humans within the scene and the interactional positions of the observed humans.

Eye Movements and Attention in Observing Social Interaction

The longer fixation times on human heads (including faces) in the Toward than in the Away condition may explain the stronger activation of the fusiform gyrus in the Toward than in the Away condition. Furthermore, the foci of attention reflected by the eye fixations may have affected the amygdala activation in our study, since attention and eye gaze modulate amygdala activation in many ways. For example, diverting attention from the emotional content may suppress amygdala activation to faces [Morawetz et al., 2010], increased amygdala activation predicts gaze changes toward the eye region of fearful faces [Gamer and Buchel, 2009], and lesions of the amygdala impair gaze shifts to the eye region of fearful faces [Adolphs et al., 2005].

The majority of research on amygdala reactivity has used stimuli related to fear, whereas the current stimuli completely lacked fear expressions and merely showed friendly interaction. On the other hand, impaired reactions to gaze cues in amygdala‐lesioned patients imply that the amygdala is also involved in social orienting [Akiyama et al., 2007]. Such social orienting cues were provided in our Toward condition, where two persons were facing and gazing at each other. Thus, our results on amygdala reactivity are in line with the proposed role of the amygdala in detecting and analyzing not only the emotional but also the social valence of a stimulus [Akiyama et al., 2007].

CONCLUSION

We investigated brain activation during observation of social interaction between two people. The results demonstrate the involvement of brain regions that have previously been shown to process biological motion and gestures of solitary humans. Temporal poles and precuneus together with posterior cingulate were similarly activated regardless of whether the humans in the stimuli were facing toward or away from each other. Instead, the activity in the amygdala, pSTS, and dmPFC distinguished humans facing toward each other from both humans facing away and pixel compositions.

Although both the amygdala and pSTS responded differentially to the two types of social interaction (Toward and Away), they had distinct response profiles. The amygdala response was sensitive to humans facing each other, whereas the pSTS activation was strongest during Toward, intermediate during Away and weakest during Pixel condition. These results suggest that the nodes of the socio‐emotional network have distinct roles. Particularly, the results suggest that in addition to having a role in the assessment of the proximity of another person towards oneself, the amygdala may be involved in the assessment of the proximity between two other persons.

Acknowledgements

The authors thank V.‐M. Saarinen for carrying out the eye tracking measurements and the related data analysis, J. Kujala for the help in photographing the stimuli and for providing Matlab scripts, S. Vanni for advice for the control stimuli, M. Kattelus for the help in fMRI measurements, and Theatre Academy of Finland for the help in producing the stimuli.

REFERENCES

- Adams RB Jr, Kleck RE (2003): Perceived gaze direction and the processing of facial displays of emotion. Psychol Sci 14: 644–647.

- Adolphs R (2010a): Conceptual challenges and directions for social neuroscience. Neuron 65: 752–767.

- Adolphs R (2010b): What does the amygdala contribute to social cognition? Ann NY Acad Sci 1191: 42–61.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR (2005): A mechanism for impaired fear recognition after amygdala damage. Nature 433: 68–72.

- Akiyama T, Kato M, Muramatsu T, Umeda S, Saito F, Kashima H (2007): Unilateral amygdala lesions hamper attentional orienting triggered by gaze direction. Cereb Cortex 17: 2593–2600.

- Allison T, Puce A, McCarthy G (2000): Social perception from visual cues: role of the STS region. Trends Cogn Sci 4: 267–278.

- Banissy MJ, Ward J (2007): Mirror‐touch synesthesia is linked with empathy. Nat Neurosci 10: 815–816.

- Blakemore SJ, Bristow D, Bird G, Frith C, Ward J (2005): Somatosensory activations during the observation of touch and a case of vision‐touch synaesthesia. Brain 128: 1571–1583.

- Centelles L, Assaiante C, Nazarian B, Anton J‐L, Schmitz C (2011): Recruitment of both the mirror and the mentalizing networks when observing social interactions depicted by point‐lights: a neuroimaging study. PLoS ONE 6: e15749.

- Cox D, Meyers E, Sinha P (2004): Contextually evoked object‐specific responses in human visual cortex. Science 304: 115–117.

- de Gelder B, Van den Stock J, Meeren HK, Sinke CB, Kret ME, Tamietto M (2010): Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci Biobehav Rev 34: 513–527.

- Downing PE, Jiang Y, Shuman M, Kanwisher N (2001): A cortical area selective for visual processing of the human body. Science 293: 2470–2473.

- Downing PE, Wiggett AJ, Peelen MV (2007): Functional magnetic resonance imaging investigation of overlapping lateral occipitotemporal activations using multi‐voxel pattern analysis. J Neurosci 27: 226–233.

- Duvernoy HM (1999): The Human Brain: Surface, Three‐Dimensional Sectional Anatomy with MRI, and Blood Supply. Wien, New York: Springer‐Verlag.

- Frith CD (2007): The social brain? Philos Trans R Soc Lond B Biol Sci 362: 671–678.

- Frith U, Frith CD (2003): Development and neurophysiology of mentalizing. Philos Trans R Soc Lond B Biol Sci 358: 459–473.

- Gamer M, Büchel C (2009): Amygdala activation predicts gaze toward fearful eyes. J Neurosci 29: 9123–9126.

- Genovese CR, Lazar NA, Nichols T (2002): Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15: 870–878.

- Grossman ED, Blake R (2002): Brain areas active during visual perception of biological motion. Neuron 35: 1167–1175.

- Hari R, Kujala MV (2009): Brain basis of human social interaction: From concepts to brain imaging. Physiol Rev 89: 453–479.

- Haxby JV, Hoffman EA, Gobbini MI (2000): The distributed human neural system for face perception. Trends Cogn Sci 4: 223–233.

- Iacoboni M, Lieberman MD, Knowlton BJ, Molnar‐Szakacs I, Moritz M, Throop CJ, Fiske AP (2004): Watching social interactions produces dorsomedial prefrontal and medial parietal BOLD fMRI signal increases compared to a resting baseline. Neuroimage 21: 1167–1173.

- Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV (1999): Distributed representation of objects in the human ventral visual pathway. Proc Natl Acad Sci USA 96: 9379–9384.

- Kanwisher N, McDermott J, Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311.

- Kennedy DP, Gläscher J, Tyszka JM, Adolphs R (2009): Personal space regulation by the human amygdala. Nat Neurosci 12: 1226–1227.

- Keysers C, Wicker B, Gazzola V, Anton JL, Fogassi L, Gallese V (2004): A touching sight: SII/PV activation during the observation and experience of touch. Neuron 42: 335–346.

- Morawetz C, Baudewig J, Treue S, Dechent P (2010): Diverting attention suppresses human amygdala responses to faces. Front Hum Neurosci 4: 226.

- O'Craven KM, Kanwisher N (2000): Mental imagery of faces and places activates corresponding stimulus‐specific brain regions. J Cogn Neurosci 12: 1013–1023.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Olson IR, Plotzker A, Ezzyat Y (2007): The enigmatic temporal pole: A review of findings on social and emotional processing. Brain 130: 1718–1731.

- Peelen MV, Downing PE (2005): Is the extrastriate body area involved in motor actions? Nat Neurosci 8: 125.

- Peelen MV, Wiggett AJ, Downing PE (2006): Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron 49: 815–822.

- Pourtois G, Peelen MV, Spinelli L, Seeck M, Vuilleumier P (2007): Direct intracranial recording of body‐selective responses in human extrastriate visual cortex. Neuropsychologia 45: 2621–2625.

- Puce A, Perrett D (2003): Electrophysiology and brain imaging of biological motion. Philos Trans R Soc Lond B Biol Sci 358: 435–445.

- Pyles JA, Garcia JO, Hoffman DD, Grossman ED (2007): Visual perception and neural correlates of novel ‘biological motion’. Vis Res 47: 2786–2797.

- Ruby P, Decety J (2004): How would you feel versus how do you think she would feel? A neuroimaging study of perspective‐taking with social emotions. J Cogn Neurosci 16: 988–999.

- Santi A, Servos P, Vatikiotis‐Bateson E, Kuratate T, Munhall K (2003): Perceiving biological motion: Dissociating visible speech from walking. J Cogn Neurosci 15: 800–809.

- Saxe R, Kanwisher N (2003): People thinking about thinking people. The role of the temporo‐parietal junction in “theory of mind”. Neuroimage 19: 1835–1842.

- Sinke CB, Sorger B, Goebel R, de Gelder B (2010): Tease or threat? Judging social interactions from bodily expressions. Neuroimage 49: 1717–1727.

- Spiridon M, Fischl B, Kanwisher N (2006): Location and spatial profile of category‐specific regions in human extrastriate cortex. Hum Brain Mapp 27: 77–89.

- Talairach J, Tournoux P (1988): Co‐Planar Stereotaxic Atlas of the Human Brain: Three‐Dimensional Proportional System: An Approach to Cerebral Imaging. Stuttgart: Thieme Medical Publishers; p 122.

- Taylor JC, Wiggett AJ, Downing PE (2007): Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. J Neurophysiol 98: 1626–1633.

- Vaina LM, Solomon J, Chowdhury S, Sinha P, Belliveau JW (2001): Functional neuroanatomy of biological motion perception in humans. Proc Natl Acad Sci USA 98: 11656–11661.

- Walter H, Adenzato M, Ciaramidaro A, Enrici I, Pia L, Bara BG (2004): Understanding intentions in social interaction: The role of the anterior paracingulate cortex. J Cogn Neurosci 16: 1854–1863.