Abstract

In everyday life we process information from different modalities simultaneously and with great ease. The current study had two goals: (1) to detect the neural correlates of automatic semantic processing of associates and (2) to investigate the influence of different visual modalities on semantic processing. Stimuli were presented with a short SOA (350 ms) while subjects performed a lexical decision task. To minimize variance and increase the homogeneity of our sample, only male subjects were measured. Three experimental conditions were compared while brain activation was measured with a 3 T fMRI scanner: related word‐pairs (e.g., frame–picture), unrelated word‐pairs (e.g., frame–car), and word‐nonword pairs (e.g., frame–fubber). They were presented unimodally (word–word) and cross‐modally (picture–word). Behavioral data revealed a priming effect for both cross‐modal and unimodal word‐pairs. On the neural level, the unimodal condition revealed response suppression in bilateral fronto‐parietal regions. Cross‐modal priming led to response suppression within the right inferior frontal gyrus. Common areas of deactivation for both modalities were found in bilateral fronto‐temporo‐parietal regions. These results suggest that the processing of semantic associations presented in different modalities leads to modality‐specific activation caused by early access routes. However, the areas shared by both modalities point to a common neural network for semantic processing, suggesting amodal processing. Hum Brain Mapp, 2009. © 2009 Wiley‐Liss, Inc.

Keywords: semantic priming, lexical decision, semantic processing, language, fMRI

INTRODUCTION

In everyday life we process information from different modalities (e.g., auditory, visual) simultaneously. For proper comprehension, the sensory input is converted into concepts linked to the distributed semantic associations that encode their meaning [Mesulam,1998]. As semantic priming studies have shown, comprehension is a very fast, automatic process, both for single items and for the linkage of two or more concepts. In recent years, the neural correlates of unimodal (visual word) semantic priming have been elucidated. Whether cross‐modal integration of meaning (visual objects vs. visual word forms) activates common or distinct neural networks, however, remains debated.

Two general classes of models describe how conceptual representations of information presented in different modalities are stored and accessed. The first class postulates multiple semantic systems [e.g., Paivio,1991; Shallice,1988; Warrington and Shallice,1984]. In these models, verbal (words) and visual (pictures) input modalities have separate conceptual representations [Paivio,1991], and each system has its own organization and processing parameters. The second class suggests a single amodal semantic system, as incorporated in the Organized Unitary Content Hypothesis [OUCH; Caramazza et al.,1990]. Here, all processing routes converge on a single set of conceptual representations common to the different modalities. The model assumes a common semantic representation of visual objects and their verbal descriptions, but different access routes from the two modalities.

A number of functional imaging studies have addressed the question of a modality‐independent semantic system, usually applying simple naming tasks that allow ample time for processing. For example, Moore and Price [1999] found activation of the left fusiform gyrus, the right inferior frontal gyrus, and the right anterior cingulate for meaningful over non‐meaningful stimuli, irrespective of input modality (pictures and words). Imaging studies of cross‐modal semantic processing have found mainly left‐lateralized activations common to both input modalities. For example, auditory‐to‐visual semantic tasks revealed common activation in left inferior prefrontal and anterior temporal regions [Heim et al.,2007; Marinkovic et al.,2003]. It was assumed that there is a modality‐specific memory system but that supramodal semantic stores can be accessed from any modality. Common areas of activation for the semantic processing of pictures and words were found within the left fusiform, parahippocampal, and superior temporal gyri, as well as the left inferior frontal gyrus [Bright et al.,2004]. Modality‐specific activations were found in the temporal pole for words and in occipitotemporal regions for pictures. Furthermore, in an imaging study by Vandenberghe et al. [1996], semantic processing of pictures and words led to largely overlapping activation in the left hemisphere, including the temporo‐parietal junction, the fusiform gyrus, and the middle temporal and inferior frontal gyri. Modality‐specific activations were present in the left fusiform and right occipital gyri for pictures and in the left inferior parietal and inferior frontal gyri for words. Altogether, these results seem to support an amodal semantic system that is distributed across the brain and shared by both input modalities. Nevertheless, besides common areas of activation in mainly left frontal and temporal regions, all studies revealed modality‐specific activations.
However, the tasks used to investigate cross‐modal processing so far have been limited to simple word or picture naming [e.g., DeLeon et al.,2007; Moore and Price,1999], categorization or semantic judgment tasks [e.g., Booth et al.,2002; Bright et al.,2004; Marinkovic et al.,2003; Vandenberghe et al.,1996], and simple lexical decisions [e.g., Heim et al.,2007; Xiao et al.,2005]. Only a few studies have investigated cross‐modal processing with semantic priming. These studies often used auditory‐to‐visual stimuli, i.e., they did not require the cross‐modal integration of visual information [e.g., Badgaiyan et al.,2001; Bergerbest et al.,2004; Carlesimo et al.,2003; Giesbrecht et al.,2004]. Alternatively, they used long stimulus presentation times, i.e., they investigated controlled rather than automatic processing across modalities [Kahlaoui et al.,2007; Koivisto and Revonsuo,2000].

In behavioral studies of semantic processing, a widely applied technique is automatic semantic priming using short SOAs, commonly combined with a lexical decision task. Typically, a prime word (e.g., frame) is presented on a computer screen, followed by a letter string (target) that can be a real word (e.g., picture) or a nonword (e.g., fubber). The subject has to decide whether the target is a real word. The main outcome is the priming effect: subjects respond faster to a target preceded by a related prime word (e.g., frame–picture) than to one preceded by an unrelated word (e.g., frame–stone). The priming effect is explained by an automatic “spread of activation” between related word representations within the semantic network. This spread occurs at short stimulus onset asynchronies [SOA < 400 ms; see Neely,1991 for a review]. Thus, when a prime is presented, its concept is activated, and this activation automatically spreads through associative pathways to related concepts.
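The following toy sketch in Python makes the spreading‐activation account concrete. The network, link weights, and decay factor are invented for illustration and come neither from the study nor from Neely [1991]. The sketch only shows how activation passed on from a prime can pre‐activate a related target (picture) more than an unrelated one (stone).

```python
# Toy spreading-activation network. Concepts, link weights and the decay
# factor are hypothetical illustration values, not study parameters.
NETWORK = {
    "frame": {"picture": 0.8, "wall": 0.5},
    "picture": {"frame": 0.8, "camera": 0.4},
    "wall": {"frame": 0.5, "stone": 0.6},
    "stone": {"wall": 0.6},
    "camera": {"picture": 0.4},
}


def spread(prime, steps=2, decay=0.5):
    """Return each concept's activation after `steps` rounds of spreading from `prime`.

    The prime starts at 1.0. In each round, every node that received activation
    in the previous round passes activation * link weight * decay to each of its
    neighbours; incoming activation is summed.
    """
    activation = {node: 0.0 for node in NETWORK}
    activation[prime] = 1.0
    frontier = {prime: 1.0}
    for _ in range(steps):
        next_frontier = {}
        for node, act in frontier.items():
            for neighbour, weight in NETWORK[node].items():
                passed = act * weight * decay
                activation[neighbour] += passed
                next_frontier[neighbour] = next_frontier.get(neighbour, 0.0) + passed
        frontier = next_frontier
    return activation


if __name__ == "__main__":
    act = spread("frame")
    # The related target is pre-activated more strongly than the unrelated one.
    print(f"picture: {act['picture']:.3f}, stone: {act['stone']:.3f}")
```

In models of this kind, faster lexical decisions for related targets are attributed to this head start in activation. Mapping activation onto reaction times would require further assumptions that are not made here.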

The neural correlates underlying this spread of activation have been examined in functional imaging studies [e.g., Kotz et al.,2002; Raposo et al.,2006; Sachs et al.,2008; Wible et al.,2006]. In summary, compared with unrelated word‐pairs, related word‐pairs led to either reduced activity (response suppression) or enhanced activity (response enhancement). These effects were found within lateral temporal and inferior or middle frontal regions [Rissman et al.,2003; Wible et al.,2006] and in temporo‐parietal regions [Kotz et al.,2002; Raposo et al.,2006]. Response suppression is thought to reflect the attenuation of hemodynamic activity as a consequence of priming, i.e., faster or “more efficient” processing of primed stimuli [Henson,2003]. Response enhancement is thought to be a correlate of cognitive processes that index the spread of activation itself [Henson,2003; Marinkovic et al.,2003]. Thus, in the context of fMRI semantic priming experiments, response suppression is a special form of BOLD “deactivation” and response enhancement a special form of BOLD “activation”.

The aim of the current study was to investigate between‐modality (picture–word) and within‐modality (word–word) neural processing using semantic priming. Strategic influences or expectancy can affect priming‐specific patterns of activation [Grossman et al.,2006]. To avoid them, we aimed at fast, automatic, implicit processing, i.e., semantic priming with a short stimulus onset asynchrony (SOA). According to Neely [1991], automatic processing occurs if the SOA is below 400 ms; we therefore used an SOA of 350 ms. Compared with existing studies, the novelty of our design lies in combining a short SOA, which targets automatic lexico‐semantic processing of associatively related concepts, with different modalities. Associations were defined as “external or complementary relations among objects” [Lin and Murphy,2001] that shared functional (e.g., chalk–blackboard) or part‐whole links (e.g., ladder–rung).

We predict that if cross‐modal and unimodal stimuli are processed in an amodal system, comparable activation clusters should be found in areas specific to semantic processing, such as the lateral prefrontal and temporal cortices [e.g., Kotz et al.,2002; Raposo et al.,2006; Rissman et al.,2003; Wible et al.,2006]. If, alternatively, cross‐modal and unimodal stimuli are processed in multiple semantic systems, differential activation clusters should be revealed. In that case, because perceptual features must be processed, visual picture–word priming is hypothesized to activate occipital regions [Kohler et al.,2000], and verbal word–word priming to activate left temporal regions. In addition, because of possible gender effects in neural processing, we included only male subjects [e.g., Baxter et al.,2003; Bermeitinger et al.,2008; Laws,1999; Lloyd‐Jones and Humphreys,1997].

MATERIALS AND METHODS

Participants

Sixteen healthy male subjects with an average age of 26.0 years (SD = 3.4) were recruited from the staff of the RWTH University Hospital Aachen and were paid a fee for participation. All subjects were native German speakers, had normal or corrected‐to‐normal vision, and were right‐handed according to the Edinburgh Inventory of Handedness [Oldfield,1971]. Subjects were excluded if they had been diagnosed with a past or present psychiatric, neurological, or medical disorder. None of the subjects was taking psychopharmacologically active medication at the time of the study or within the preceding two months. The study was approved by the local ethics committee, and all subjects gave informed consent to participate.

Stimuli and Design

In the semantic priming task, a prime was presented to the subjects (e.g., frame). Depending on the session, the prime stimulus was either a picture or a written word. A visually presented string of letters (target) followed the prime. Subjects had to decide whether the target was a real word (e.g., picture) or not (e.g., fubber) by pressing one of two buttons.

Three experimental conditions were used: (1) related word‐pairs, e.g., frame–picture, (2) unrelated word‐pairs, e.g., frame–car, and (3) word‐nonword pairs, e.g., frame–fubber. The two modalities (unimodal word–word and cross‐modal picture–word) were presented in different sessions with different stimuli. There were two reasons for this. First, we wanted to avoid confusing instructions: before a new session started, the subject was told whether it would contain pictures and words or only words. Second, the experiment had to be short so as not to compromise the subjects' attention. Presenting the modalities in separate sessions shortened the experiment because the subjects had to be instructed only twice. To account for session‐specific effects, the session effect was modeled in the fMRI data analysis. Altogether, two sessions per modality were presented, leading to 30 related trials per condition.

The related word‐pairs consisted of a prime and an associatively related target. The associative relation was defined by a functional or a part‐whole relationship between prime and target. A pre‐test was conducted to ensure that the word‐pairs had a strong relationship. Twelve volunteers not participating in the fMRI study rated word‐pair relations on a scale from 1 (= unrelated) to 7 (= highly related). They were instructed to rate the target words for their contextual relatedness and their interaction in time and space. Selected related pairs had to receive ratings of 5 or higher. The selected pairs for pictures and written words did not differ in their average rating (pictures: M = 6.58, SD = 0.46; words: M = 6.43, SD = 0.53; P = 0.24). Unrelated targets had to be rated 2 or lower in the same test. Nonwords were pronounceable German pseudowords constructed by changing one or two consonants in real target words. All selected stimuli belonged to the same overall conceptual domain (all words denoted objects) and were concrete and imageable. Different stimuli were used for the two modalities, but stimuli were repeated within a session. Each prime word appeared in the related, the unrelated, and the nonword condition, and each target word appeared twice, once in the related and once in the unrelated condition. The stimuli (primes, targets, and prime‐target pairs) were matched within and between modalities (i.e., sessions). Prime and target were also matched within modality. The matching criteria were lexical frequency [CELEX database; Baayen et al.,1993], word length, and relationship (part‐whole vs. functional; see Table I for the stimuli used in the experiment). The stimuli did not differ between lists or modalities on any of these criteria (P > 0.25; see Table II for the results of the matching).
In an additional test, all prime‐target pairs were rated on a 10‐point scale for their visual similarity [Kalenine and Bonthoux,2008]. The results demonstrate that the associated (thematic) pairs were visually dissimilar (unimodal: M = 2.8, SD = 1.6; cross‐modal: M = 2.6, SD = 1.3). Similarity did not differ between modalities (P = 0.37).
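The selection rule of the pre‐test can be sketched as a simple filter. This is a hypothetical helper, not the authors' procedure. It assumes that the cut‐offs (5 or higher for related pairs, 2 or lower for unrelated pairs) were applied to the mean rating across raters, which the text does not state explicitly. The demo ratings are invented.

```python
from statistics import mean

# Cut-offs from the pre-test description (1-7 relatedness scale).
RELATED_MIN = 5
UNRELATED_MAX = 2


def select_pairs(ratings):
    """Split candidate (prime, target) pairs by their mean relatedness rating.

    `ratings` maps a (prime, target) tuple to the list of ratings given by the
    raters. Applying the cut-offs to the mean rating is an assumption; pairs
    with intermediate means are discarded.
    """
    related, unrelated = [], []
    for pair, scores in ratings.items():
        m = mean(scores)
        if m >= RELATED_MIN:
            related.append(pair)
        elif m <= UNRELATED_MAX:
            unrelated.append(pair)
    return related, unrelated


if __name__ == "__main__":
    # Hypothetical ratings from three raters (the study used twelve).
    demo = {
        ("Fahne", "Mast"): [7, 6, 7],
        ("Fahne", "Bahn"): [1, 2, 1],
        ("Dom", "Bahn"): [4, 3, 5],
    }
    print(select_pairs(demo))
```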

Table I.

Stimuli used in the experiment

| Related condition (English translation) | Unrelated condition (English translation) | Nonword condition (English translation) |
|---|---|---|
| UNIMODAL CONDITION | | |
| Fahne–Mast (flag–mast) | Fahne–Bahn (flag–train) | Fahne–Deisil (flag–deisil) |
| Dom–Altar (cathedral–altar) | Dom–Kohle (cathedral–coal) | Dom–Laugu (cathedral–laugu) |
| Film–Kamera (film–camera) | Film–Hebel (film–lever) | Film–Makri (film–makri) |
| Pflug–Scheune (plough–barn) | Pflug–Altar (plough–altar) | Pflug–Tarla (plough–tarla) |
| Tapete–Leim (wallpaper–glue) | Tapete–Bullauge (wallpaper–porthole) | Tapete–Petul (wallpaper–petul) |
| Ofen–Kohle (oven–coal) | Ofen–Plastik (oven–plastic) | Ofen–Stikpla (oven–stikpla) |
| Kompass–Nadel (compass–needle) | Kompass–Marmor (compass–marble) | Kompass–Soram (compass–soram) |
| Dampfer–Bullauge (steamboat–porthole) | Dampfer–Nadel (steamboat–needle) | Dampfer–Lune (steamboat–lune) |
| Naht–Saum (seam–border) | Naht–Mast (seam–mast) | Naht–Musa (seam–musa) |
| Messer–Schneide (knife–edge) | Messer–Pfeiler (knife–pillar) | Messer–Deische (knife–deische) |
| Terrasse–Markise (patio–awning) | Terrasse–Geige (patio–electricity) | Terrasse–Ramke (patio–ramke) |
| Bagger–Schaufel (excavator–bucket) | Bagger–Girlande (excavator–garland) | Bagger–Felau (excavator–felau) |
| Magnet–Eisen (magnet–iron) | Magnet–Abfluss (magnet–drain) | Magnet–Eisam (magnet–eisam) |
| Fliese–Marmor (tile–marble) | Fliese–Scheune (tile–barn) | Fliese–Siesch (tile–siesch) |
| Ablage–Zettel (clipboard–note) | Ablage–Markise (clipboard–awning) | Ablage–Kise (clipboard–kise) |
| Apparat–Hebel (apparatus–lever) | Apparat–Rauch (apparatus–smoke) | Apparat–Hebap (apparatus–hebap) |
| Beton–Pfeiler (concrete–pillar) | Beton–Schneide (concrete–edge) | Beton–Feito (concrete–feito) |
| Boden–Teppich (floor–carpet) | Boden–Schaufel (floor–bucket) | Boden–Peti (floor–peti) |
| Klingel–Rezeption (bell–reception) | Klingel–Geige (bell–violin) | Klingel–Gelik (bell–gelik) |
| Lampion–Girlande (lampion–garland) | Lampion–Leim (lampion–glue) | Lampion–Mielal (lampion–mielal) |
| Leitung–Strom (cable–electricity) | Leitung–Saum (cable–border) | Leitung–Musal (cable–musal) |
| Mine–Kuli (refill–ballpoint pen) | Mine–Kamera (refill–camera) | Mine–Lune (refill–lune) |
| Orchester–Geige (orchestra–violin) | Orchester–Teppich (orchestra–carpet) | Orchester–Otor (orchestra–otor) |
| Rohr–Abfluss (tube–drain) | Rohr–Zettel (tube–note) | Rohr–Tehz (tube–tehz) |
| Schild–Schwert (buckler–sword) | Schild–Seide (buckler–silk) | Schild–Deisil (buckler–deisil) |
| Schranke–Bahn (gate–railroad) | Schranke–Schwert (gate–sword) | Schranke–Schrew (gate–schrew) |
| Seife–Wasser (soap–water) | Seife–Kuli (soap–ballpoint pen) | Seife–Feisuk (soap–feisuk) |
| Tuch–Seide (shawl–silk) | Tuch–Rezeption (shawl–reception) | Tuch–Deisu (shawl–deisu) |
| Tüte–Plastik (bag–plastic) | Tüte–Eisen (bag–iron) | Tüte–Lastüt (bag–lastüt) |
| Zigarre–Rauch (cigar–smoke) | Zigarre–Wasser (cigar–water) | Zigarre–Chag (cigar–chag) |
| CROSS‐MODAL CONDITION | | |
| Pinsel–Farbe (paintbrush–paint) | Pinsel–Lampe (paintbrush–lamp) | Pinsel–Rafins (paintbrush–rafins) |
| Ring–Diamant (ring–diamond) | Ring–Farbe (ring–paint) | Ring–Tantia (ring–tantia) |
| Schublade–Kommode (drawer–bureau) | Schublade–Flügel (drawer–wing) | Schublade–Modos (drawer–modos) |
| Absatz–Stiefel (heel–boot) | Absatz–Schleife (heel–bow) | Absatz–Schliefa (heel–schliefa) |
| Bohrer–Dübel (borer–dowel) | Bohrer–Container (borer–container) | Bohrer–Tocob (borer–tocob) |
| Dose–Öffner (can–opener) | Dose–Theater (can–theater) | Dose–Atera (can–atera) |
| Geschenk–Schleife (present–bow) | Geschenk–Sprosse (present–rung) | Geschenk–Rosek (present–rosek) |
| Gewehr–Patrone (gun–round) | Gewehr–Bikini (gun–bikini) | Gewehr–Kineb (gun–kineb) |
| Helm–Mofa (helmet–moped) | Helm–Kommode (helmet–bureau) | Helm–Modehl (helmet–modehl) |
| Herd–Pfanne (cooker–pan) | Herd–Spitze (cooker–lace) | Herd–Tize (cooker–tize) |
| Kassette–Radio (cassette–radio) | Kassette–Schminke (cassette–make‐up) | Kassette–Minkat (cassette–minkat) |
| Leiter–Sprosse (ladder–rung) | Leiter–Diamant (ladder–diamond) | Leiter–Rosei (ladder–rosei) |
| Pullover–Wolle (pullover–wool) | Pullover–Tabak (pullover–tobacco) | Pullover–Ellov (pullover–ellov) |
| Schlüssel–Schloss (key–lock) | Schlüssel–Schwamm (key–sponge) | Schlüssel–Olsch (key–olsch) |
| BH–Spitze (bra–lace) | BH–Schraube (bra–screw) | BH–Sarub (bra–sarub) |
| Glühbirne–Lampe (bulb–lamp) | Glühbirne–Wäsche (bulb–laundry) | Glühbirne–Schäb (bulb–schäb) |
| Flugzeug–Flügel (airplane–wing) | Flugzeug–Schloss (airplane–lock) | Flugzeug–Slog (airplane–slog) |
| Grill–Rost (barbecue–gridiron) | Grill–Note (barbecue–note) | Grill–Etir (barbecue–etir) |
| Klammer–Wäsche (peg–laundry) | Klammer–Mofa (peg–moped) | Klammer–Famo (peg–famo) |
| Klavier–Note (piano–note) | Klavier–Stiefel (piano–boot) | Klavier–Felav (piano–felav) |
| Laster–Container (truck–container) | Laster–Wolle (truck–wool) | Laster–Lowst (truck–lowst) |
| Maske–Theater (mask–theater) | Maske–Antenne (mask–antenna) | Maske–Ethas (mask–ethas) |
| Muschel–Sand (clam–sand) | Muschel–Patrone (clam–round) | Muschel–Danusch (clam–danusch) |
| Mutter–Schraube (screw nut–screw) | Mutter–Radio (screw nut–radio) | Mutter–Rautu (screw nut–rautu) |
| Pfeife–Tabak (pipe–tobacco) | Pfeife–Dübel (pipe–dowel) | Pfeife–Batei (pipe–batei) |
| Pool–Bikini (pool–bikini) | Pool–Rost (pool–gridiron) | Pool–Ikino (pool–ikino) |
| Spiegel–Schminke (mirror–make‐up) | Spiegel–Geschirr (mirror–dishes) | Spiegel–Ligisch (mirror–ligisch) |
| Spüle–Geschirr (sink–dishes) | Spüle–Sand (sink–sand) | Spüle–Schegü (sink–schegü) |
| Fernseher–Antenne (television–antenna) | Fernseher–Pfanne (television–pan) | Fernseher–Entav (television–entav) |
| Wanne–Schwamm (tub–sponge) | Wanne–Öffner (tub–opener) | Wanne–Waman (tub–waman) |

Different stimuli were used for the two modalities. Primes, targets, and prime‐target pairs were matched within and between modalities for lexical frequency, word length, familiarity, and relationship. In the cross‐modal condition, the prime word was replaced by a picture.

Table II.

Results of the normative rating studies

| Matching criteria | Within modality (primes vs. targets) | Between modality: primes | Between modality: targets | Between modality: word‐pairs |
|---|---|---|---|---|
| Lexical frequency | 0.69 | 0.61 | 0.45 | 0.96 |
| Number of letters | 0.33 | 0.86 | 0.50 | 0.61 |
| Number of syllables | 0.26 | 0.38 | 0.54 | 0.88 |
| Mean rating score | 0.25 | | | |

The within modality matching was done between primes and targets. Between modality the matching was done between uni‐ and cross‐modal primes, uni‐ and cross‐modal targets and uni‐ and cross‐modal word‐pairs. The results represent the P values of the paired‐sample t‐tests.
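A paired‐sample t statistic like those behind Table II can be computed from two matched lists. The sketch below uses only the standard library, and the helper name `paired_t` is our own. Its demo compares the word lengths of the first five unimodal primes and targets of Table I; this is an illustration, not a re‐analysis of Table II. Obtaining the P value requires a t‐distribution CDF. A library routine such as `scipy.stats.ttest_rel` returns both the statistic and the P value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(a, b):
    """Return (t, df) for a paired-sample t-test of matched lists a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long lists with at least two items")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / (sd / sqrt(len(diffs))), len(diffs) - 1


if __name__ == "__main__":
    # First five unimodal prime-target pairs from Table I, compared on word length.
    primes = ["Fahne", "Dom", "Film", "Pflug", "Tapete"]
    targets = ["Mast", "Altar", "Kamera", "Scheune", "Leim"]
    t, df = paired_t([len(w) for w in primes], [len(w) for w in targets])
    print(f"t({df}) = {t:.2f}")
```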

The pictures for the cross‐modal condition were taken from the International Picture Naming Project (IPNP; http://crl.ucsd.edu/~aszekely/ipnp/index.html). The pictures were normed across seven languages [for details see Bates et al.,2003]. In addition, we conducted a pre‐test in which 12 volunteers not participating in the fMRI study were asked to name the pictures. Only pictures that were recognized by every volunteer were selected. The final selection of pictures was based on the already selected and matched word‐pair list, in which the prime words were replaced with pictures. The pictures were black line drawings of concrete objects on a white background, corresponding to simplex words.

Design and Procedure

A rapid event‐related fMRI (erfMRI) design was used to present (unimodally and cross‐modally) related, unrelated, and word‐nonword trials [Amaro and Barker,2006; Gold et al.,2006; Sass et al.,2009a,b]. Some trials of the same condition were grouped into small blocks. Within these blocks, the inter‐stimulus interval (ISI) was shorter than the duration of the hemodynamic response function (HRF) generated by previous trials. The rationale was that presenting trials of the same condition in sequence leads to better sampling of the HRF curve and hence to a better signal. For each word condition (related and unrelated), there were three blocks with two trials and six blocks with three trials, i.e., within one block the same condition occurred two or three times. The remaining stimuli (six per condition and all nonwords) were distributed pseudo‐randomly. The ISI between the small blocks and between the remaining stimuli was longer, so that the overlapping HRFs could be deconvolved. The modalities (cross‐modal picture–word and unimodal word–word) were presented in different sessions. Subjects received no information about the construction and arrangement of stimuli and conditions. Four pseudo‐randomized versions of the experiment were counterbalanced across subjects to avoid systematic effects of conditions on each other. Stimulus presentation was controlled by a Presentation script (software package version 11.0, Neurobehavioral Systems, http://www.neurobs.com/). Stimuli were projected through MR‐compatible video goggles (VisuaStim XGA, Resonance Technology, Inc., http://www.mri-video.com/).
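The mini‐block arrangement can be sketched as follows for one run with 30 trials per condition. The sketch assumes that the related and unrelated trials form the mini‐blocks: three blocks of two trials and six blocks of three trials, plus six single trials each. It also assumes that all 30 nonword trials are single trials. The study's actual pseudo‐randomization constraints are not reported, so a plain seeded shuffle stands in for them.

```python
import random

# Mini-block layout per word condition, as described in the text:
# three blocks of two trials and six blocks of three trials (24 trials),
# plus single trials to reach 30 trials per condition.
BLOCK_SIZES = [2] * 3 + [3] * 6
TRIALS_PER_CONDITION = 30


def build_sequence(seed=0):
    """Return a shuffled list of presentation units for one run.

    Each unit is a list of condition labels: units of two or three labels are
    mini-blocks, units of one label are single trials. Nonword trials are only
    presented as single trials.
    """
    rng = random.Random(seed)
    units = []
    for cond in ("related", "unrelated"):
        units += [[cond] * size for size in BLOCK_SIZES]
        n_single = TRIALS_PER_CONDITION - sum(BLOCK_SIZES)  # 6 single trials
        units += [[cond] for _ in range(n_single)]
    units += [["nonword"] for _ in range(TRIALS_PER_CONDITION)]
    # Plain shuffle: the study's pseudo-randomisation constraints are not specified.
    rng.shuffle(units)
    return units


if __name__ == "__main__":
    seq = build_sequence(seed=1)
    flat = [cond for unit in seq for cond in unit]
    print(len(seq), "units,", len(flat), "trials")
```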

Each trial began with an attention cue “+” (500 ms), followed by the prime (350 ms; either word or picture) and then by the target, which was shown for 1000 ms. After the target, a hash sign (#) appeared for a jittered duration. Within the small blocks, this duration was 1.5–2.5 s (short jitter; mean ISI = 2 s). Between small blocks and around the remaining stimuli, it was 3–5 s (long jitter; mean ISI = 4 s). Subjects had to press one of two buttons as quickly and accurately as possible, depending on the type of target (real word: right button; nonword: left button). Responses were given with the index or middle finger of the left hand to avoid motor‐related activation in the left hemisphere.
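The timing of a single trial follows directly from these durations. In the sketch below, the helper names are hypothetical. The target appears as soon as the prime disappears, so the SOA equals the 350‐ms prime duration. Only the ranges and means of the # duration are reported, so drawing it from a uniform distribution over those ranges is an assumption.

```python
import random

# Event durations in ms, taken from the trial description.
CUE_MS, PRIME_MS, TARGET_MS = 500, 350, 1000


def trial_events(start_ms, hash_ms):
    """Return onset times (ms) of the events of one trial starting at `start_ms`.

    `hash_ms` is the jittered duration of the '#' screen after the target.
    """
    prime = start_ms + CUE_MS
    target = prime + PRIME_MS  # target follows the prime directly: SOA = 350 ms
    hash_sign = target + TARGET_MS
    return {"cue": start_ms, "prime": prime, "target": target,
            "hash": hash_sign, "end": hash_sign + hash_ms}


def jittered_hash_ms(rng, within_block):
    """Draw the '#' duration: 1.5-2.5 s inside mini-blocks, 3-5 s otherwise.

    A uniform distribution is an assumption; only the ranges and means are reported.
    """
    low, high = (1500, 2500) if within_block else (3000, 5000)
    return rng.uniform(low, high)


if __name__ == "__main__":
    rng = random.Random(0)
    t = 0.0
    for within in (True, False):  # a two-trial mini-block: short gap inside, long gap after
        events = trial_events(t, jittered_hash_ms(rng, within))
        print({k: round(v) for k, v in events.items()})
        t = events["end"]
```

With the mean # durations, a trial inside a mini‐block lasts 0.5 + 0.35 + 1.0 + 2 = 3.85 s, and a trial followed by the long interval lasts 5.85 s.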

Data Acquisition

Scanning was performed on a 3T scanner (Gyroscan Achieva, Philips Medical Systems, Best, The Netherlands) using standard gradients and a circularly polarized phased‐array head coil. Subjects lay in a supine position, and head movement was limited by foam padding within the head coil. To ensure optimal visual acuity, subjects were offered fMRI‐compatible glasses that could be attached to the video goggles. For each subject and each run, a series of 216 EPI scans, lasting about 7 min, was acquired. Stimuli were presented in an erfMRI design, with 30 stimuli per condition and a trial length of approximately 5 s.

Scans covered the whole brain and were acquired parallel to the AC/PC line. Five initial dummy scans were included. The EPI parameters were as follows: number of slices (NS), 31; slice thickness (ST), 3.5 mm; interslice gap (IG), 0.35 mm; matrix size (MS), 64 × 64; field of view (FOV), 240 × 240 mm; echo time (TE), 30 ms; repetition time (TR), 2 s. For anatomical localization, we acquired high‐resolution images with a T1‐weighted 3D FFE sequence (TR = 25 ms; TE = 2.04 ms; NS = 160 (sagittal); ST = 2 mm; IG = 1 mm; FOV = 256 × 256 mm; voxel size = 1 × 1 × 2 mm).
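The following sketch is a quick arithmetic check of these parameters. It assumes that the five dummy scans are counted among the 216 scans per run; the analysis section states that the first five volumes were discarded. Under that reading, 211 volumes per run enter the analysis.

```python
# Acquisition parameters from the text; whether the five dummy scans are part
# of the 216 is an assumption (the first five volumes were discarded).
TR_S = 2.0
N_SCANS = 216
N_DUMMY = 5
N_SLICES = 31
SLICE_MM, GAP_MM = 3.5, 0.35
FOV_MM, MATRIX = 240, 64

run_duration_s = N_SCANS * TR_S                             # total run length
slab_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM     # coverage along z
inplane_mm = FOV_MM / MATRIX                                # in-plane voxel edge
analysed_scans = N_SCANS - N_DUMMY

if __name__ == "__main__":
    print(f"run: {run_duration_s:.0f} s ({run_duration_s / 60:.1f} min)")
    print(f"slab: {slab_mm:.1f} mm, in-plane voxel: {inplane_mm:.2f} mm")
    print(f"scans analysed per run: {analysed_scans}")
```

The run length comes out at 432 s (7.2 min, consistent with "about 7 min"), the slab at 119 mm, and the in‐plane voxel edge at 3.75 mm.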

Behavioral Data Analysis

Reaction time was measured from target onset until the subject made a correct response. To reduce skew, raw reaction time data were trimmed by eliminating responses that exceeded the mean of the respective condition by more than two standard deviations [3.9% of responses; Ratcliff,1993]. Trimmed data were entered into a repeated‐measures ANOVA with modality (cross‐modal, unimodal) and relation (related, unrelated) as within‐subject factors.
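The trimming rule can be sketched as below. Two points are assumptions. First, the rule is applied separately to each subject and condition. Second, only slow responses (above the mean + 2 SD) are removed, following the wording "exceeding the mean"; the text does not say whether fast outliers were also trimmed.

```python
from statistics import mean, stdev


def trim_rts(rts_by_condition, n_sd=2.0):
    """Drop reaction times more than `n_sd` SDs above their condition mean.

    `rts_by_condition` maps a condition label to one subject's correct-response
    RTs (ms). Returns (trimmed dict, number of removed responses). Only the slow
    tail is trimmed, following the wording 'exceeding the mean'.
    """
    trimmed, n_removed = {}, 0
    for cond, rts in rts_by_condition.items():
        cutoff = mean(rts) + n_sd * stdev(rts)
        kept = [rt for rt in rts if rt <= cutoff]
        n_removed += len(rts) - len(kept)
        trimmed[cond] = kept
    return trimmed, n_removed


if __name__ == "__main__":
    # Hypothetical RTs (ms) of one subject in one condition, with one slow outlier.
    demo = {"related": [600, 610, 620, 630, 640, 650, 660, 670, 680, 1500]}
    kept, removed = trim_rts(demo)
    print(removed, kept["related"])
```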

fMRI Data Analysis

Image processing and statistical analyses were performed using statistical parametric mapping software (SPM5, http://www.fil.ion.ucl.ac.uk) implemented in MATLAB 7.0 (Mathworks, Inc., Sherborn, MA). The first five volumes were discarded to minimize T1‐saturation effects arising from the non‐equilibrium state of magnetization.

Each subject's fMRI images were realigned to the first functional image to correct for head movement. The resliced volumes were normalized to the standard stereotaxic MNI space. Normalization used the transformation matrix calculated from each subject's first EPI scan and the EPI template, with the default SPM5 settings (16 nonlinear iterations) and the standard SPM5 EPI template. Each normalized image was then smoothed with an 8‐mm Gaussian kernel to accommodate anatomical differences between subjects. The time series were high‐pass filtered with a cut‐off of 1/128 Hz. First‐order autocorrelations of the data were estimated and corrected for.
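SPM implements its high‐pass filter with a discrete cosine transform (DCT) basis set. The pure‐Python sketch below follows that idea for a single voxel time series: it builds the orthonormal cosine regressors whose periods exceed roughly 128 s and projects them out. The helper names are our own, and this is not SPM code. The regressor count, int(2·N·TR/128 + 1) including the constant, is our reading of SPM's convention and should be checked against `spm_filter.m`.

```python
import math


def dct_drift_basis(n_scans, tr_s, cutoff_s=128.0):
    """Orthonormal DCT-II cosine regressors slower than ~1/cutoff_s Hz (constant excluded)."""
    n_basis = int(2 * n_scans * tr_s / cutoff_s + 1)  # count includes the constant term
    scale = math.sqrt(2.0 / n_scans)
    return [[scale * math.cos(math.pi * (2 * n + 1) * k / (2 * n_scans)) for n in range(n_scans)]
            for k in range(1, n_basis)]


def high_pass(ts, tr_s, cutoff_s=128.0):
    """Remove slow drifts from one time series by projecting out the cosine regressors."""
    residual = list(ts)
    for b in dct_drift_basis(len(ts), tr_s, cutoff_s):
        beta = sum(bi * yi for bi, yi in zip(b, ts))  # orthonormal basis: beta = <b, y>
        residual = [r - beta * bi for r, bi in zip(residual, b)]
    return residual


if __name__ == "__main__":
    n_scans, tr = 211, 2.0  # assumes 216 scans minus 5 discarded, TR = 2 s
    drift = [5 + 3 * math.cos(math.pi * (2 * n + 1) / (2 * n_scans)) for n in range(n_scans)]
    filtered = high_pass(drift, tr)
    print(f"{len(dct_drift_basis(n_scans, tr))} drift regressors; "
          f"max deviation from the mean after filtering: {max(abs(v - 5) for v in filtered):.2e}")
```

For 211 volumes at TR = 2 s, this yields six drift regressors. The sixth has a period of 2·211·2/6 ≈ 141 s, just above the 128‐s cut‐off, while a seventh (≈ 121 s) would fall below it.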

Following preprocessing, statistical analyses were conducted for each individual subject. The hemodynamic response triggered by the target word in each condition was modeled with a canonical HRF. Model parameters were estimated using restricted maximum likelihood (ReML). Parameter estimates of the HRF regressor for each condition were obtained from the least‐squares fit of the model to the time series. Because the two modalities were presented in different sessions, the session effect was additionally modeled. At the second level, an SPM5 random‐effects group analysis was performed by entering the parameter estimates for all conditions into a within‐subject one‐way ANOVA to create a statistical parametric map (SPM). Because we assumed that the differences between the processing of the two modalities might be small, we used a Monte‐Carlo simulation of the brain volume to establish an appropriate voxel contiguity threshold [Slotnick et al.,2003]. This correction has the advantage of higher sensitivity to smaller effect sizes while still correcting for multiple comparisons across the whole brain volume. The Monte‐Carlo simulations were based on 4 × 4 × 4 mm interpolated voxels. Further inputs to the cluster‐size calculations were the 8‐mm smoothing kernel, the acquisition matrix (64 × 64), and the number of slices (31). Assuming an individual voxel type I error of P < 0.00005, a cluster extent of 4 contiguous resampled voxels was indicated as necessary to correct for multiple voxel comparisons at P < 0.05. The extent threshold was set at k = 20 voxels.
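The logic of a Monte‐Carlo cluster‐extent correction is as follows. Simulate smoothed Gaussian noise volumes, threshold each at the voxel‐wise P, and record the largest cluster in each. Then choose the smallest extent that chance clusters reach in at most 5% of the simulations. The sketch below is a generic pure‐Python illustration of this logic, not the program of Slotnick et al. [2003]. It assumes a rectangular box instead of a brain mask, 6‐connectivity, and a one‐sided voxel threshold. It also assumes 4‐mm voxels, so that the 8‐mm kernel spans 2 voxels (FWHM). It will not reproduce the reported values.

```python
import math
import random
from collections import deque
from statistics import NormalDist, fmean, pstdev

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def gaussian_kernel(fwhm_vox):
    """Normalised 1D Gaussian kernel for a FWHM given in voxels."""
    sigma = fwhm_vox / (2 * math.sqrt(2 * math.log(2)))
    r = max(1, math.ceil(3 * sigma))
    k = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-r, r + 1)]
    total = sum(k)
    return [v / total for v in k]


def smooth(vol, shape, kernel):
    """Separable Gaussian smoothing of a flattened (x, y, z) volume, zero-padded."""
    _, ny, nz = shape
    strides = (ny * nz, nz, 1)
    r = len(kernel) // 2
    for axis in range(3):
        out = [0.0] * len(vol)
        for idx in range(len(vol)):
            c = (idx // strides[0], (idx // nz) % ny, idx % nz)[axis]  # coordinate along axis
            acc = 0.0
            for j in range(-r, r + 1):
                if 0 <= c + j < shape[axis]:
                    acc += kernel[j + r] * vol[idx + j * strides[axis]]
            out[idx] = acc
        vol = out
    return vol


def max_cluster_size(mask, shape):
    """Size of the largest face-connected (6-neighbour) cluster of True voxels."""
    nx, ny, nz = shape
    seen = [False] * len(mask)
    best = 0
    for start, on in enumerate(mask):
        if not on or seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:
            idx = queue.popleft()
            size += 1
            x, y, z = idx // (ny * nz), (idx // nz) % ny, idx % nz
            for dx, dy, dz in NEIGHBOURS:
                X, Y, Z = x + dx, y + dy, z + dz
                if 0 <= X < nx and 0 <= Y < ny and 0 <= Z < nz:
                    n = (X * ny + Y) * nz + Z
                    if mask[n] and not seen[n]:
                        seen[n] = True
                        queue.append(n)
        best = max(best, size)
    return best


def cluster_extent_threshold(shape, fwhm_vox, p_voxel, alpha=0.05, n_iter=1000, seed=0):
    """Smallest extent k whose chance rate (max null cluster >= k) is at most alpha."""
    rng = random.Random(seed)
    kernel = gaussian_kernel(fwhm_vox)
    z_thr = NormalDist().inv_cdf(1 - p_voxel)  # one-sided voxel threshold
    n_vox = shape[0] * shape[1] * shape[2]
    maxima = []
    for _ in range(n_iter):
        vol = smooth([rng.gauss(0.0, 1.0) for _ in range(n_vox)], shape, kernel)
        m, s = fmean(vol), pstdev(vol)  # re-standardise the smoothed noise
        maxima.append(max_cluster_size([(v - m) / s > z_thr for v in vol], shape))
    for k in range(1, max(maxima) + 2):
        if sum(mx >= k for mx in maxima) / n_iter <= alpha:
            return k


if __name__ == "__main__":
    # Toy box of 4-mm voxels; 8-mm FWHM = 2 voxels. Lenient P so that clusters appear.
    print(cluster_extent_threshold((12, 12, 8), fwhm_vox=2.0, p_voxel=0.001, n_iter=100))
```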

First, we examined the semantic priming effect in terms of response suppression (RS) and response enhancement (RE). To investigate RS, the related condition was subtracted from the unrelated condition (unrelated > related). The opposite contrast (related > unrelated) was calculated to obtain RE. Both contrasts were exclusively masked with the word‐nonword conditions (cross‐modal picture‐nonword and unimodal word‐nonword) to ensure that the RS and RE effects resulted from differences between the related and unrelated conditions. The mask was thresholded at P < 0.05 uncorrected. Second, to investigate modality‐specific effects and interactions, we subtracted the unimodal priming contrast from the cross‐modal priming contrast (i.e., [cross‐modal related > unrelated] > [unimodal related > unrelated]) and vice versa. The results were inclusively masked with the corresponding contrasts (related > unrelated) to assess only those voxels that fall within the area determined by the mask [Bright et al.,2004; Devlin et al.,2002]. This mask was also thresholded at P < 0.05 uncorrected. Finally, modality‐independent regions were identified in two ways. (a) To assess common activation of the related conditions, both unrelated conditions were subtracted from both related conditions ([unimodal related + cross‐modal related] > [unimodal unrelated + cross‐modal unrelated]). (b) To assess common deactivation of the related conditions, both related conditions were subtracted from both unrelated conditions ([unimodal unrelated + cross‐modal unrelated] > [unimodal related + cross‐modal related]). In addition, these results were inclusively masked (thresholded at P < 0.05) with the individual contrasts: (a) [unimodal related > unrelated] and [cross‐modal related > unrelated]; (b) [unimodal unrelated > related] and [cross‐modal unrelated > related].
With this procedure, the contrasts show only voxels that fall within the areas determined by the mask, so that regions of overlapping activation can be identified [Bright et al.,2004; Devlin et al.,2002]. Peak voxel coordinates are reported in MNI space (ICBM standard). For anatomical localization, the functional data were referenced to probabilistic cytoarchitectonic maps [Eickhoff et al.,2005].
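The contrasts and masks described above can be written out explicitly. The sketch below assumes a hypothetical column order for the six conditions of the second‐level model and represents thresholded maps as lists of booleans. It illustrates the contrast weights and the inclusive/exclusive masking logic, not the SPM5 batch that was actually used.

```python
# Hypothetical column order of the second-level design (one column per condition).
CONDITIONS = ["uni_rel", "uni_unrel", "uni_nonword",
              "cross_rel", "cross_unrel", "cross_nonword"]


def contrast(**weights):
    """Build a contrast vector over CONDITIONS from keyword weights."""
    unknown = set(weights) - set(CONDITIONS)
    if unknown:
        raise ValueError(f"unknown conditions: {sorted(unknown)}")
    vec = [weights.get(c, 0) for c in CONDITIONS]
    if sum(vec) != 0:
        raise ValueError("contrast weights should sum to zero")
    return vec


CONTRASTS = {
    "RS unimodal (unrelated > related)": contrast(uni_unrel=1, uni_rel=-1),
    "RE unimodal (related > unrelated)": contrast(uni_rel=1, uni_unrel=-1),
    "RS cross-modal (unrelated > related)": contrast(cross_unrel=1, cross_rel=-1),
    "RE cross-modal (related > unrelated)": contrast(cross_rel=1, cross_unrel=-1),
    # [cross-modal related > unrelated] > [unimodal related > unrelated], and vice versa
    "cross > uni": contrast(cross_rel=1, cross_unrel=-1, uni_rel=-1, uni_unrel=1),
    "uni > cross": contrast(uni_rel=1, uni_unrel=-1, cross_rel=-1, cross_unrel=1),
    # modality-independent effects
    "common related > unrelated": contrast(uni_rel=1, cross_rel=1, uni_unrel=-1, cross_unrel=-1),
    "common unrelated > related": contrast(uni_unrel=1, cross_unrel=1, uni_rel=-1, cross_rel=-1),
}


def apply_masks(main_sig, inclusive=(), exclusive=()):
    """Combine voxel-wise boolean maps of equal length.

    A voxel survives if it is significant in `main_sig`, in every inclusive mask,
    and in none of the exclusive masks (masks thresholded beforehand, e.g. P < 0.05).
    """
    return [sig and all(m[i] for m in inclusive) and not any(m[i] for m in exclusive)
            for i, sig in enumerate(main_sig)]
```

Inclusive masking of a common contrast with both single‐modality contrasts keeps only voxels that pass every map, i.e., the regions of overlap.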

RESULTS

Behavioral Data

Accuracy

The percentage of errors was entered into a repeated‐measures ANOVA with modality and relation as within‐subject factors. There was no main effect of modality (F (1, 15) = 0.05, P = 0.83) or relation (F (1, 15) = 1.05, P = 0.32), and no interaction between modality and relation (F (1, 15) = 1.15, P = 0.30). Incorrect responses were excluded from further analysis.

Reaction time

The results of the ANOVA indicated that there was no main effect of modality (F (1, 15) = 0.06, P = 0.81), but that subjects were faster in the related compared to the unrelated condition (F (1, 15) = 14.73, P < 0.005). There was no interaction between modality and relation (F (1, 15) = 0.20, P = 0.66). The difference between the related and the unrelated condition of the two modalities was used to calculate the priming effects of the modalities. In both modalities, subjects were faster in the related compared to the unrelated condition (cross‐modal condition: t (15) = 2.92, P < 0.01; unimodal condition: t (15) = 2.66, P < 0.05). A further t‐test of priming effect sizes in cross‐modal and unimodal conditions revealed that the picture–word priming effect did not differ from the word–word priming effect (t (15) = −0.45, P = 0.66; see Table III for mean reaction times, standard deviations and size of the priming effects).

Table III.

Mean reaction times and standard deviations by condition

| Condition | Mean RT (ms) | SD (ms) | Priming effect, ms (SD) |
|---|---|---|---|
| Cross‐modal related | 638.54 | 79.29 | 39.13 (53.69) |
| Cross‐modal unrelated | 677.67 | 100.01 | |
| Unimodal related | 644.46 | 85.20 | 31.34 (47.17) |
| Unimodal unrelated | 675.80 | 84.63 | |

RT, reaction time; SD, standard deviation. Priming effect = mean RT (unrelated) − mean RT (related) within each modality.
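Assuming that the value in parentheses is the standard deviation of the per‐subject priming scores and that n = 16 (inferred from df = 15), the reported priming t values can be recomputed from Table III, because the mean of the difference scores equals the difference of the condition means. A minimal check:

```python
import math

N_SUBJECTS = 16  # inferred from the reported df = 15

# Means and priming-effect SDs copied from Table III (ms).
TABLE_III = {
    "cross-modal": {"related": 638.54, "unrelated": 677.67, "priming_sd": 53.69},
    "unimodal": {"related": 644.46, "unrelated": 675.80, "priming_sd": 47.17},
}


def priming_effect(row):
    """Priming effect = mean RT (unrelated) - mean RT (related)."""
    return row["unrelated"] - row["related"]


def t_from_summary(mean_diff, sd_diff, n):
    """One-sample t of difference scores against zero: mean / (SD / sqrt(n))."""
    return mean_diff / (sd_diff / math.sqrt(n))


for modality, row in TABLE_III.items():
    effect = priming_effect(row)
    t = t_from_summary(effect, row["priming_sd"], N_SUBJECTS)
    print(f"{modality}: priming = {effect:.2f} ms, t(15) = {t:.2f}")
```

This reproduces t (15) = 2.92 (cross‐modal) and t (15) = 2.66 (unimodal) to two decimals, which supports the assumed reading of the table.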

Imaging Data

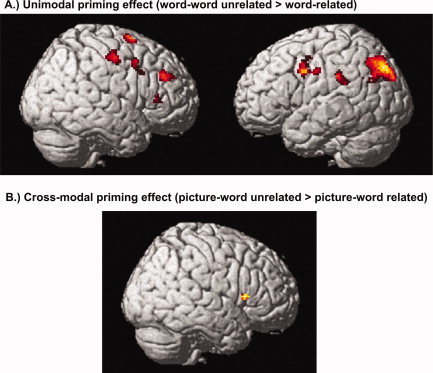

Priming effects in the unimodal word–word condition

Subtraction of the unimodal related from the unimodal unrelated trials (unrelated > related; excl. masked with unimodal word‐nonword) revealed bilateral fronto‐parietal clusters in the bilateral inferior frontal gyrus (IFG), left inferior parietal lobule (IPL), right middle frontal gyrus (MFG), right superior frontal gyrus (SFG) and right precentral gyrus. A further significant cluster was found in the right precuneus. For the opposite contrast (related > unrelated), no activation reached significance (see Table IV, Fig. 1A).

Table IV.

Results of fMRI semantic priming effects and modality‐specific activations

| Anatomical region | BA | x | y | z | Z score | No. voxels |
|---|---|---|---|---|---|---|
| WORD–WORD UNRELATED > WORD–WORD RELATED (EXCL. MASKED WITH WORD–NONWORD) | | | | | | |
| Left inferior parietal lobule | 40 | −30 | −74 | 40 | 5.25 | 1495 |
| Right middle frontal gyrus | 46 | 44 | 40 | 30 | 4.91 | 112 |
| Left inferior parietal lobule | 40 | −42 | −34 | 30 | 4.89 | 284 |
| Left inferior frontal gyrus | 44 | −42 | 4 | 32 | 4.79 | 1722 |
| Right superior frontal gyrus | 6 | 26 | 6 | 68 | 4.75 | 78 |
| Right precentral gyrus | 4a | 40 | −14 | 48 | 4.52 | 129 |
| Right inferior frontal gyrus | 45 | 56 | 34 | 8 | 4.30 | 22 |
| Right precuneus | 7 | 26 | −60 | 24 | 4.19 | 27 |
| Right precentral gyrus | 44 | 52 | 10 | 44 | 4.18 | 55 |
| PICTURE–WORD UNRELATED > PICTURE–WORD RELATED (EXCL. MASKED WITH PICTURE–NONWORD) | | | | | | |
| Right inferior frontal gyrus | 45 | 44 | 18 | 8 | 4.22 | 27 |
| COMMON AREAS OF ACTIVATION: [PICTURE–WORD UNRELATED + WORD–WORD UNRELATED] > [PICTURE–WORD RELATED + WORD–WORD RELATED]; INCL. MASKED WITH [PICTURE–WORD UNRELATED > RELATED] AND [WORD–WORD UNRELATED > RELATED] | | | | | | |
| Right inferior frontal gyrus | 45 | 44 | 18 | 8 | 4.95 | 539 |
| Left medial frontal gyrus | 9 | −16 | 32 | 30 | 4.87 | 407 |
| Left inferior parietal lobule | 40 | −38 | −34 | 32 | 4.41 | 55 |
| Right fusiform gyrus | 20 | 42 | −34 | −16 | 4.38 | 28 |
| Left superior temporal gyrus | 22 | −32 | −54 | 18 | 4.36 | 94 |
| Right inferior frontal gyrus | 44, 45 | 54 | 18 | 32 | 4.36 | 64 |
| Right middle temporal gyrus | 21 | 44 | −44 | 8 | 4.34 | 79 |
| Right middle frontal gyrus | 9 | 40 | 38 | 38 | 4.31 | 39 |
| Right inferior frontal gyrus | 9 | 32 | 2 | 32 | 4.11 | 30 |
| Left caudate | – | −12 | 24 | 4 | 4.10 | 27 |

Z scores and cluster sizes (number of voxels) are reported at P < 0.05, corrected. Coordinates (x, y, z) are listed in MNI atlas space. BA is the Brodmann area nearest to the coordinate and should be considered approximate.

Figure 1.

Correlates of the uni‐ and cross‐modal priming effect. Significant areas of activation for the comparison of unrelated and related word‐pairs. (A) Unimodal response suppression (unimodal unrelated > related; excl. masked with unimodal word‐nonword) within bilateral fronto‐parietal regions. (B) Cross‐modal response suppression (cross‐modal unrelated > related; excl. masked with cross‐modal picture‐nonword) within the right inferior frontal gyrus. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

Priming effects in the cross‐modal picture–word condition

The contrast cross‐modal unrelated > cross‐modal related (excl. masked with cross‐modal picture‐nonword) revealed activation in the right IFG. For the opposite contrast (related > unrelated) no significant activation cluster was found (see Table IV, Fig. 1B).

Modality specific semantic effects and interactions

For the subtraction of the unimodal condition from the cross‐modal condition ([cross‐modal related > unrelated] > [unimodal related > unrelated]; incl. masked with [(unimodal/cross‐modal) related > unrelated]) and vice versa ([unimodal related > unrelated] > [cross‐modal related > unrelated]; incl. masked with [(unimodal/cross‐modal) related > (unimodal/cross‐modal) unrelated]) no cluster reached significance.

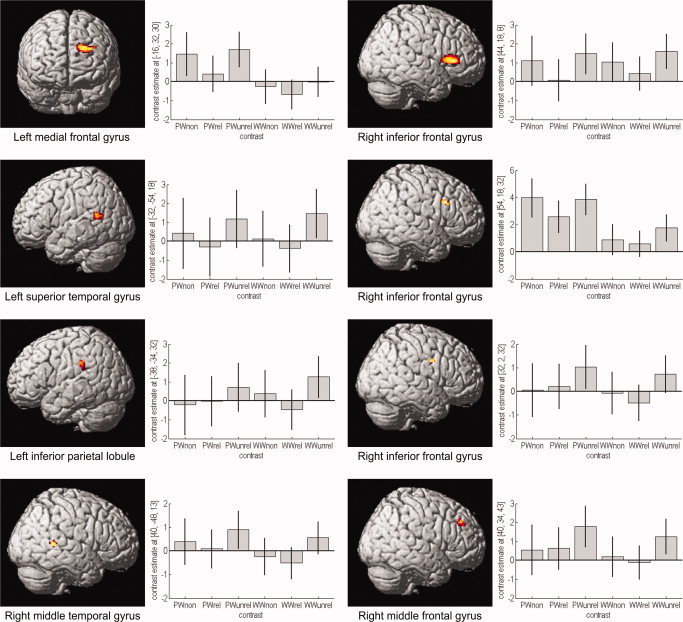

Regions of common semantic effects for both modalities

To identify regions of overlapping activation, both unrelated conditions were subtracted from the related conditions ([unimodal related + cross‐modal related] > [unimodal unrelated + cross‐modal unrelated]; incl. masked with [(unimodal/cross‐modal) related > (unimodal/cross‐modal) unrelated]). No cluster reached significance. For the opposite contrast ([unimodal unrelated + cross‐modal unrelated] > [unimodal related + cross‐modal related]; incl. masked with [(unimodal/cross‐modal) unrelated > (unimodal/cross‐modal) related]), bilateral fronto‐temporo‐parietal clusters were found in the right IFG, MFG, fusiform gyrus and middle temporal gyrus (MTG), and in the left medial frontal gyrus, IPL, superior temporal gyrus (STG) and caudate nucleus. Because the clusters found in this contrast were relatively small but complex, we examined them further by plotting the parameter estimates for each condition, to verify that the effect was present in both modalities rather than driven by only one of them. The parameter estimates showed a decrease for the related compared with the unrelated condition in both modalities for all main clusters (see Table IV and Fig. 2).

Figure 2.

Correlates of modality‐independent semantic processing and parameter estimates. Significant areas of common deactivation for the contrast (unimodal unrelated + cross‐modal unrelated) > (unimodal related + cross‐modal related), incl. masked with (unimodal unrelated > related) and (cross‐modal unrelated > related). Areas of overlapping activation were found in bilateral fronto‐temporal and left inferior parietal regions. The plots show mean parameter estimates with 90% confidence intervals for each significant cluster, giving the response amplitude for every condition: picture–word nonword (PWnon), picture–word related (PWrel), picture–word unrelated (PWunrel), word–word nonword (WWnon), word–word related (WWrel) and word–word unrelated (WWunrel). Parameter estimates on the y axis are in arbitrary units; note that the plots differ in scale and scale steps. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]
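One common way to compute such intervals is mean ± t(0.95, df) × SE across subjects; the software used for Figure 2 may compute them differently. The sketch below assumes 16 subjects (df = 15, critical t ≈ 1.753) and uses invented per‐subject estimates for a single hypothetical cluster. It also implements the per‐modality check described in the text: the related condition must be lower than the unrelated condition for both picture–word and word–word pairs.

```python
import math
import statistics

# Two-sided 90% critical t for df = 15 (the 0.95 quantile); valid only for n = 16.
T_CRIT_90_DF15 = 1.753


def mean_ci90(values, t_crit=T_CRIT_90_DF15):
    """Mean and 90% CI (mean +/- t_crit * SE) across subjects."""
    m = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(len(values))
    return m, (m - t_crit * se, m + t_crit * se)


def decrease_in_both_modalities(betas):
    """True if mean(related) < mean(unrelated) for picture-word and word-word pairs."""
    return all(
        statistics.mean(betas[f"{mod}rel"]) < statistics.mean(betas[f"{mod}unrel"])
        for mod in ("PW", "WW")
    )


# Invented per-subject estimates (arbitrary units) for one cluster, 16 subjects.
offsets = [0.1 * (i % 4) for i in range(16)]
betas = {
    "PWrel": [0.4 + o for o in offsets],
    "PWunrel": [0.9 + o for o in offsets],
    "WWrel": [0.3 + o for o in offsets],
    "WWunrel": [0.8 + o for o in offsets],
}

for cond, vals in betas.items():
    m, (lo, hi) = mean_ci90(vals)
    print(f"{cond:8s} mean = {m:.3f}, 90% CI = [{lo:.3f}, {hi:.3f}]")
print(decrease_in_both_modalities(betas))  # True for these toy values
```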

DISCUSSION

The present study examined the neural correlates of uni‐ and cross‐modal priming using fMRI with a word–word and a picture–word semantic priming task. Behaviorally, both modalities produced comparable semantic priming effects. On a neural level, unimodal priming was associated with response suppression in bilateral fronto‐parietal regions, whereas cross‐modal priming was associated with response suppression in the right IFG. A direct comparison revealed no differences between the two modalities but rather modality‐independent signal changes in bilateral fronto‐temporo‐parietal regions.

In the unimodal (word–word) condition, we found a deactivation of frontal and parietal areas for related compared with unrelated stimuli, accompanied by a significant behavioral priming effect. The bilateral frontal deactivation is consistent with earlier priming studies that linked prefrontal regions to the selection and retrieval of semantic information [Cabeza and Nyberg,2000; Rossell et al.,2003]. Hence, the response suppression may reflect reduced demands on semantic memory retrieval, or it may result from selection processes triggered by the automatic spread of activation [Matsumoto et al.,2005; Tivarus et al.,2006]. For example, Kuperberg et al. [2008] found deactivation of bilateral prefrontal regions in response to directly related word‐pairs. They assumed that this reflects the relative ease of accessing target words that had been predicted by their directly related primes. Furthermore, they proposed that the deactivation of the right prefrontal cortex might reflect a more general involvement of inhibitory processes, “possibly inhibiting predictions that did not match related targets.” In addition, the reduced activity of the right MFG for related pairs could reflect the more extensive search within the semantic network required for unrelated word‐pairs, which leads to higher retrieval effort and reduced decision certainty [Kotz et al.,2002; Rissman et al.,2003]. This interpretation is supported by the deactivation of the left IPL, a region associated with semantic memory processing and retrieval, especially for semantic associations [Assaf et al.,2006]. The effect in the right precuneus is particularly interesting. The precuneus has been implicated in mental imagery, episodic memory, contextual associations and self‐processing [Cavanna and Trimble,2006; Kircher et al.,2000]. 
We tentatively suggest that the precuneus effect in our study reflects the involvement of episodic and, more speculatively, self‐relevant (semantic autobiographical) memory retrieval in semantic priming.

In the cross‐modal (picture–word) condition, the behavioral priming effect was accompanied by neural response suppression in the right IFG. Again, this signal decrease might be related to the more general involvement of right prefrontal regions in inhibitory processes [Kuperberg et al.,2008].

The comparison of both modalities revealed no differences between uni‐ and cross‐modal semantic priming. Instead, common regions of deactivation were found within bilateral fronto‐temporo‐parietal regions. Several studies have demonstrated an extensive involvement of right‐lateralized fronto‐temporal regions in semantic processing. One hypothesis holds that the right frontal and temporal lobes maintain weak, diffuse semantic activation within a broader semantic field [Beeman and Chiarello,1998; Jung‐Beeman,2005; Raposo et al.,2006; Rossell et al.,2001]. For example, activation was found for distant semantic relations of words [Beeman et al.,1994], for the extraction of overall meaning from sentences [Kircher et al.,2001] and for related words that do not share many semantic features [Chiarello,1998]. In addition, bilateral MTG activation was found for semantic category judgments, suggesting that the temporal lobe plays a critical role in semantic processing [Pugh et al.,1996]. For our results, we assume that the deactivation of right fronto‐temporal regions for related pairs reflects the ease of semantic processing for associatively related concepts, independent of modality. Support comes from several studies investigating semantic priming in the left and right hemispheres [Bright et al.,2004; Raposo et al.,2006; Rossell et al.,2001], in line with the proposal that “both hemispheres are capable of activating a large set if that information is associated” [Richards and Chiarello,1995]. We therefore interpret our results along this line and assume that the deactivations of the right lateral temporal and frontal lobes reflect the semantic processing of associations, together with the higher retrieval effort and more extensive search within the semantic network required for unrelated word‐pairs. 
This assumption is supported by signal decreases within left fronto‐temporal regions that have been found during amodal, implicit priming [Henson,2003] and that have been related to the greater processing resources required for semantically unrelated compared with related words [Cabeza and Nyberg,2000; Rissman et al.,2003; Ruff et al.,2008].

In the current study, we used only artifacts to investigate the influence of different visual modalities on the processing of semantic associations. However, associative relationships exist not only between artifacts but also between other concepts, for example, living/natural objects (e.g., lion–mane, tiger–stripes). For natural objects, different areas of activation would be expected than for artifacts [e.g., Perani et al.,1999; Thompson‐Schill et al.,1999]. For example, Perani et al. [1999] found that the left fusiform gyrus was involved in processing pictures and words of living entities, and the left middle temporal gyrus in processing words and pictures of tools. Furthermore, Kalenine et al. [2009] showed that the domain (natural vs. artifact objects) influenced the neural correlates of semantic relations. Based on our study, we would therefore expect different areas of activation for natural objects [e.g., within bilateral visual areas; Kalenine et al.,2009], but these activations should be independent of presentation modality. Furthermore, the current literature indicates that associative relationships between object concepts and action semantics [Beauchamp and Martin,2007; Tyler et al.,2003] are mainly processed within the left temporal cortex, independent of presentation modality [words or pictures; Perani et al.,1999]. A further distinction can be made between manipulable (e.g., cherry–basket) and non‐manipulable objects (e.g., bed–person asleep). Kalenine et al. [2009] found that manipulable associative relations led to enhanced activation within left inferior parietal and middle temporal regions compared with non‐manipulable relations, whereas artifacts elicited greater activation within right temporo‐parietal regions than natural objects. 
Overall, across different types of associatively related object concepts, we would expect category‐specific differences between man‐made and natural objects [Martin et al.,1996] within distinct neural networks. This differentiation might be based on perceptual/visual attributes (natural kinds) and functional/motor features (artifacts); that is, object knowledge may be organized around sensory and functional features, as suggested by the sensory‐motor hypothesis [Warrington and Shallice,1984]. However, according to the results of the current study and of earlier studies [e.g., Kahlaoui et al.,2007; Sass et al.,2009b], these differences between object domains (living, non‐living) and categories (animals, tools) should be independent of presentation modality.

Limitations

We restricted our sample to male subjects because of the ongoing debate about sex‐specific effects in almost all types of cognitive demands, including language processing and lateralization [Baxter et al.,2003; Bermeitinger et al.,2008; Laws,1999; Lloyd‐Jones and Humphreys,1997]. As a consequence, our findings may not generalize to female participants.

Summary

Our results indicate that cross‐modal and unimodal processing share a common neural network within the bilateral fronto‐temporal cortex. Our data thus support the amodal semantic system hypothesis of Caramazza et al. [1990] rather than the hypothesis of multiple semantic systems [e.g., Paivio,1991]. First, consistent with other studies, we found no difference between cross‐modal and unimodal priming at either the behavioral or the neural level [Caramazza et al.,1990; Vandenberghe et al.,1996]. Second, both priming types elicited common deactivations in bilateral fronto‐temporal regions. These areas may reflect the semantic processing and retrieval of associatively related concepts irrespective of modality. Conceivably, the processing of different modalities leads to modality‐specific activation reflecting processing routes that converge on a single set of conceptual representations common to different modalities [Caramazza et al.,1990; Carlesimo et al.,2003]; that is, semantic processing itself is independent of modality.

Acknowledgements

We thank two anonymous reviewers for their helpful comments on this manuscript.

REFERENCES

- Amaro E Jr, Barker GJ (2006): Study design in fMRI: Basic principles. Brain Cogn 60: 220–232.

- Assaf M, Calhoun VD, Kuzu CH, Kraut MA, Rivkin PR, Hart J Jr, Pearlson GD (2006): Neural correlates of the object‐recall process in semantic memory. Psychiatry Res 147: 115–126.

- Baayen RH, Piepenbrock R, van Rijn H (1993): The CELEX Lexical Database [CD‐ROM]. Version Release 1. Philadelphia: Linguistic Data Consortium, University of Pennsylvania.

- Badgaiyan RD, Schacter DL, Alpert NM (2001): Priming within and across modalities: Exploring the nature of rCBF increases and decreases. Neuroimage 13: 272–282.

- Bates E, D'Amico S, Jacobsen T, Szekely A, Andonova E, Devescovi A, Herron D, Lu CC, Pechmann T, Pleh C, Wicha N, Federmeier K, Gerdjikova I, Gutierrez G, Hung D, Hsu J, Iyer G, Kohnert K, Mehotcheva T, Orozco‐Figueroa A, Tzeng A, Tzeng O (2003): Timed picture naming in seven languages. Psychon Bull Rev 10: 344–380.

- Baxter LC, Saykin AJ, Flashman LA, Johnson SC, Guerin SJ, Babcock DR, Wishart HA (2003): Sex differences in semantic language processing: A functional MRI study. Brain Lang 84: 264–272.

- Beauchamp MS, Martin A (2007): Grounding object concepts in perception and action: Evidence from fMRI studies of tools. Cortex 43: 461–468.

- Beeman MJ, Chiarello C (1998): Complementary right and left hemisphere language comprehension. Curr Directions Psychol Sci 7: 2–8.

- Beeman MJ, Friedman RB, Grafman J, Perez E, Diamond S, Lindsay MB (1994): Summation priming and coarse semantic encoding in the right hemisphere. J Cogn Neurosci 6: 26–45.

- Bergerbest D, Ghahremani DG, Gabrieli JD (2004): Neural correlates of auditory repetition priming: Reduced fMRI activation in the auditory cortex. J Cogn Neurosci 16: 966–977.

- Bermeitinger C, Wentura D, Frings C (2008): Nature and facts about natural and artifactual categories: Sex differences in the semantic priming paradigm. Brain Lang 106: 153–163.

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM (2002): Modality independence of word comprehension. Hum Brain Mapp 16: 251–261.

- Bright P, Moss H, Tyler LK (2004): Unitary vs multiple semantics: PET studies of word and picture processing. Brain Lang 89: 417–432.

- Cabeza R, Nyberg L (2000): Imaging cognition. II. An empirical review of 275 PET and fMRI studies. J Cogn Neurosci 12: 1–47.

- Caramazza A, Hillis AE, Rapp BC, Romani C (1990): The multiple semantics hypothesis: Multiple confusions? Cogn Neuropsychol 7: 161–189.

- Carlesimo GA, Turriziani P, Paulesu E, Gorini A, Caltagirone C, Fazio F, Perani D (2003): Brain activity during intra‐ and cross‐modal priming: New empirical data and review of literature. Neuropsychologia 42: 14–24.

- Cavanna AE, Trimble MR (2006): The precuneus: A review of its functional anatomy and behavioural correlates. Brain 129 (Part 3): 564–583.

- Chiarello C (1998): On codes of meaning and the meaning of codes: Semantic access and retrieval within and between hemispheres. In: Beeman M, Chiarello C, editors. Right Hemisphere Language Comprehension: Perspectives from Cognitive Neuroscience. Mahwah, NJ: Erlbaum; pp 141–160.

- DeLeon J, Gottesman RF, Kleinman JT, Newhart M, Davis C, Heidler‐Gary J, Lee A, Hillis AE (2007): Neural regions essential for distinct cognitive processes underlying picture naming. Brain 130 (Part 5): 1408–1422.

- Devlin JT, Russell RP, Davis MH, Price CJ, Moss HE, Fadili MJ, Tyler LK (2002): Is there an anatomical basis for category‐specificity? Semantic memory studies in PET and fMRI. Neuropsychologia 40: 54–75.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K (2005): A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage 25: 1325–1335.

- Giesbrecht B, Camblin CC, Swaab TY (2004): Separable effects of semantic priming and imageability on word processing in human cortex. Cereb Cortex 14: 521–529.

- Gold BT, Balota DA, Jones SJ, Powell DK, Smith CD, Andersen AH (2006): Dissociation of automatic and strategic lexical‐semantics: Functional magnetic resonance imaging evidence for differing roles of multiple frontotemporal regions. J Neurosci 26(24): 6523–6532.

- Grossman M, Koenig P, Kounios J, McMillan C, Work M, Moore P (2006): Category‐specific effects in semantic memory: Category–task interactions suggested by fMRI. Neuroimage 30: 1003–1009.

- Heim S, Eickhoff SB, Ischebeck AK, Supp G, Amunts K (2007): Modality‐independent involvement of the left BA 44 during lexical decision making. Brain Struct Funct 212: 95–106.

- Henson R (2003): Neuroimaging studies of priming. Prog Neurobiol 70: 53–81.

- Jung‐Beeman M (2005): Bilateral brain processes for comprehending natural language. Trends Cogn Sci 9(11): 512–518.

- Kahlaoui K, Baccino T, Joanette Y, Magnié M‐N (2007): Pictures and Words: Priming and Category Effects in Object Processing. Curr Psychol Lett [Online] 23, URL: http://cpl.revues.org/index2882.html.

- Kalenine S, Bonthoux F (2008): Object manipulability affects children's and adults' conceptual processing. Psychon Bull Rev 15: 667–672.

- Kalenine S, Peyrin C, Pichat C, Segebarth C, Bonthoux F, Baciu M (2009): The sensory‐motor specificity of taxonomic and thematic conceptual relations: A behavioral and fMRI study. Neuroimage 44: 1152–1162.

- Kircher TT, Brammer M, Tous Andreu N, Williams SC, McGuire PK (2001): Engagement of right temporal cortex during processing of linguistic context. Neuropsychologia 39: 798–809.

- Kircher TT, Senior C, Phillips ML, Benson PJ, Bullmore ET, Brammer M, Simmons A, Williams SC, Bartels M, David AS (2000): Towards a functional neuroanatomy of self processing: Effects of faces and words. Brain Res Cogn Brain Res 10: 133–144.

- Kohler S, Moscovitch M, Winocur G, McIntosh AR (2000): Episodic encoding and recognition of pictures and words: Role of the human medial temporal lobes. Acta Psychol (Amst) 105: 159–179.

- Koivisto M, Revonsuo A (2000): Semantic priming by pictures and words in the cerebral hemispheres. Brain Res Cogn Brain Res 10: 91–98.

- Kotz SA, Cappa SF, von Cramon DY, Friederici AD (2002): Modulation of the lexical‐semantic network by auditory semantic priming: An event‐related functional MRI study. Neuroimage 17: 1761–1772.

- Kuperberg GR, Lakshmanan BM, Greve DN, West WC (2008): Task and semantic relationship influence both the polarity and localization of hemodynamic modulation during lexico‐semantic processing. Hum Brain Mapp 29: 544–561.

- Laws KR (1999): Gender affects naming latencies for living and nonliving things: Implications for familiarity. Cortex 35: 729–733.

- Lin EL, Murphy GL (2001): Thematic relations in adults' concepts. J Exp Psychol Gen 130: 3–28.

- Lloyd‐Jones TJ, Humphreys GW (1997): Perceptual differentiation as a source of category effects in object processing: Evidence from naming and object decision. Mem Cognit 25: 18–35.

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E (2003): Spatiotemporal dynamics of modality‐specific and supramodal word processing. Neuron 38: 487–497.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV (1996): Neural correlates of category‐specific knowledge. Nature 379: 649–652.

- Matsumoto A, Iidaka T, Haneda K, Okada T, Sadato N (2005): Linking semantic priming effect in functional MRI and event‐related potentials. Neuroimage 24: 624–634.

- Mesulam MM (1998): From sensation to cognition. Brain 121 (Part 6): 1013–1052.

- Moore CJ, Price CJ (1999): Three distinct ventral occipitotemporal regions for reading and object naming. Neuroimage 10: 181–192.

- Neely JH (1991): Semantic priming effects in visual word recognition: A selective review of current findings and theories. In: Besner D, Humphreys GW, editors. Basic Processes in Reading: Visual Word Recognition. Hillsdale, NJ: Lawrence Erlbaum; pp 264–336.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Paivio A (1991): Dual coding theory: Retrospect and current status. Can J Psychol 45: 255–287.

- Perani D, Schnur T, Tettamanti M, Gorno‐Tempini M, Cappa SF, Fazio F (1999): Word and picture matching: A PET study of semantic category effects. Neuropsychologia 37: 293–306.

- Pugh KR, Shaywitz BA, Shaywitz SE, Constable RT, Skudlarski P, Fulbright RK, Bronen RA, Shankweiler DP, Katz L, Fletcher JM, et al. (1996): Cerebral organization of component processes in reading. Brain 119 (Part 4): 1221–1238.

- Raposo A, Moss HE, Stamatakis EA, Tyler LK (2006): Repetition suppression and semantic enhancement: An investigation of the neural correlates of priming. Neuropsychologia 44: 2284–2295.

- Ratcliff R (1993): Methods for dealing with reaction time outliers. Psychol Bull 114: 510–532.

- Richards L, Chiarello C (1995): Depth of associated activation in the cerebral hemispheres: Mediated versus direct priming. Neuropsychologia 33: 171–179.

- Rissman J, Eliassen JC, Blumstein SE (2003): An event‐related FMRI investigation of implicit semantic priming. J Cogn Neurosci 15: 1160–1175.

- Rossell SL, Bullmore ET, Williams SC, David AS (2001): Brain activation during automatic and controlled processing of semantic relations: A priming experiment using lexical‐decision. Neuropsychologia 39: 1167–1176.

- Rossell SL, Price CJ, Nobre AC (2003): The anatomy and time course of semantic priming investigated by fMRI and ERPs. Neuropsychologia 41: 550–564.

- Ruff I, Blumstein SE, Myers EB, Hutchison E (2008): Recruitment of anterior and posterior structures in lexical‐semantic processing: An fMRI study comparing implicit and explicit tasks. Brain Lang 105: 41–49.

- Sachs O, Weis S, Zellagui N, Huber W, Zvyagintsev M, Mathiak K, Kircher T (2008): Automatic processing of semantic relations in fMRI: Neural activation during semantic priming of taxonomic and thematic categories. Brain Res 1218: 194–205.

- Sass K, Krach S, Sachs O, Kircher T (2009a): Lion–tiger–stripes: Neural correlates of indirect semantic priming across processing modalities. NeuroImage 45: 224–236.

- Sass K, Sachs O, Krach S, Kircher T (2009b): Taxonomic and thematic categories: Neural correlates of categorization in an auditory‐to‐visual priming task using fMRI. Brain Res 1270: 78–87.

- Shallice T (1988): Specialisation within the semantic system. Cogn Neuropsychol 5: 133–142.

- Slotnick SD, Moo LR, Segal JB, Hart J Jr (2003): Distinct prefrontal cortex activity associated with item memory and source memory for visual shapes. Brain Res Cogn Brain Res 17: 75–82.

- Thompson‐Schill SL, Aguirre GK, D'Esposito M, Farah MJ (1999): A neural basis for category and modality specificity of semantic knowledge. Neuropsychologia 37: 671–676.

- Tivarus ME, Ibinson JW, Hillier A, Schmalbrock P, Beversdorf DQ (2006): An fMRI study of semantic priming: Modulation of brain activity by varying semantic distances. Cogn Behav Neurol 19: 194–201.

- Tyler LK, Stamatakis EA, Dick E, Bright P, Fletcher P, Moss H (2003): Objects and their actions: Evidence for a neurally distributed semantic system. Neuroimage 18: 542–557.

- Vandenberghe R, Price C, Wise R, Josephs O, Frackowiak RS (1996): Functional anatomy of a common semantic system for words and pictures. Nature 383: 254–256.

- Warrington EK, Shallice T (1984): Category specific semantic impairments. Brain 107 (Part 3): 829–854.

- Wible CG, Han SD, Spencer MH, Kubicki M, Niznikiewicz MH, Jolesz FA, McCarley RW, Nestor P (2006): Connectivity among semantic associates: An fMRI study of semantic priming. Brain Lang 97: 294–305.

- Xiao Z, Zhang JX, Wang X, Wu R, Hu X, Weng X, Tan LH (2005): Differential activity in left inferior frontal gyrus for pseudowords and real words: An event‐related fMRI study on auditory lexical decision. Hum Brain Mapp 25: 212–221.