Abstract

Learning to articulate novel combinations of phonemes that form new words through a small number of auditory exposures is crucial for the development of language and our capacity for fluent speech, yet the underlying neural mechanisms are largely unknown. We used functional magnetic resonance imaging to reveal repetition–suppression effects accompanying such learning and reflecting discrete changes in brain activity due to stimulus‐specific fine‐tuning of neural representations. In an event‐related design, subjects were repeatedly exposed to auditory pseudowords, which they covertly repeated. Covert responses during scanning and overt responses after scanning showed evidence of learning. An extensive set of regions was activated bilaterally when subjects listened to and covertly repeated novel pseudoword stimuli. With repeated exposures, activity decreased in a subset of these areas, mostly in the left hemisphere, including premotor cortex, supplementary motor area, inferior frontal gyrus, superior temporal cortex, and cerebellum. These changes most likely reflect more efficient representation of the articulation patterns of the novel words in two connected systems, one involved in the perception of pseudoword stimuli (in the left superior temporal cortex) and one in the output of speech (in the left frontal cortex). Both systems contribute to vocal learning. Hum Brain Mapp 2008. © 2007 Wiley‐Liss, Inc.

Keywords: articulation, fMRI, language, repetition suppression, speech

INTRODUCTION

One of the key features of human language is the ability to learn new words, allowing us to efficiently communicate existing and novel ideas. The process of learning new words involves both semantic and phonological components, and learning to articulate new words usually requires repetition of exemplars [Jarvis, 2004]. Vocal learning in birds and humans is based on an auditory comparison between a heard sound and one's imitated repetition, allowing for correction of the vocal output for the next time the sound is produced [Konishi, 1965, 2004].

A kind of fast‐track version of the overt repetition process for vocal learning is the phonological loop. This working‐memory loop can serve the same purpose as overt articulatory repetition through subvocal rehearsal of novel vocal exemplars [Baddeley, 1992; Gathercole et al., 1994, 1997]. Importantly, the neural mechanisms underlying this subvocal repetition closely resemble those involved in overt articulation, as evidenced by interference between these mechanisms [Baddeley, 1992], action potentials in vocal musculature during subvocal repetition [Sokolov, 1972], and similar patterns of neural activation for overt and covert speech and language tasks in functional magnetic resonance imaging (fMRI) [Yetkin et al., 1995].

Speech articulation relies heavily on the phonological components of language and very little on the semantic ones. Therefore, if we are to understand speech mechanisms and the common conditions that selectively affect articulation, phonology should be studied separately from other aspects of language. In an experimental setting, the phonological component of articulatory learning for new words can be largely separated from cognitive, semantic, and lexical processes by using novel pseudoword stimuli: stimuli that sound like words and follow the same phonological rules, but carry little or no meaning [see e.g., Gathercole et al., 1994, 1997; Klein et al., 2006]. This separation matters because different routes of processing word stimuli (e.g., phonological vs. semantic) have been shown to activate separate cortical areas [Devlin et al., 2003; Petersen et al., 1988; Poldrack et al., 1999b; Wagner et al., 2000].

The regions involved in speech articulation have been a strong focus of previous study, but it is not known whether all or some of these regions, or other regions, are also involved in articulatory learning. The discovery of the KE family, half of whose members are affected by an inherited mutation of the FOXP2 gene that selectively impairs their ability to articulate speech sounds, manifested as verbal dyspraxia, has led to valuable insights into the genetic and neural basis of articulation [Vargha‐Khadem et al., 1998; Watkins et al., 2002a, b]. Affected family members are particularly impaired at repetition of words and nonwords [Watkins et al., 2002a]. Functional imaging revealed abnormal activity during word repetition in the striatum, inferior and medial frontal cortex [Watkins et al., 2002b]. Gray matter regions affected by the genetic mutation in these individuals include caudate nucleus, putamen, parts of sensorimotor cortex, posterior inferior and superior temporal cortex, temporal pole, the supplementary motor area, left inferior frontal gyrus, and the cerebellum [Vargha‐Khadem et al., 1998; Watkins et al., 2002b]. It is also clear from stroke and lesion studies that motor cortical areas, basal ganglia, and the cerebellum all contribute different aspects to oral syllable production [Wildgruber et al., 2001]. The role of the cerebellum in articulation has been especially well documented [e.g., Ackermann et al., 1992; Gasparini et al., 1999], particularly in relation to dysarthria, usually caused by cerebellar damage or disease [Wise et al., 1999].

There is some controversy over the relative contributions of Broca's area [Hillis et al., 2004; Price et al., 1996] and the anterior insular cortex [Dronkers, 1996; Wise et al., 1999] to speech articulation, though this disagreement may simply reflect the fact that the recruitment of these and other regions can depend strongly on specific task and stimulus parameters [Price et al., 1996]. Moreover, recent studies support the view of the left inferior frontal gyrus as the main component of articulatory movements subserving phonology [Bonilha et al., 2006; Lu et al., 2007]. Some of the organization of articulatory movements also seems to be centered on cortical areas such as the lateral aspect of the precentral gyrus [Alexander et al., 1990], where neural representations of facial and articulatory muscles reside, and the supplementary motor area (SMA) [Ziegler et al., 1997], which helps prepare and initiate the sequential movements so important for fluent speech.

Although the neural underpinnings of speech articulation have been studied extensively, surprisingly little attention has been paid to the changes in neural activation that accompany learning to pronounce new words, a task essential to our language abilities. Few, if any, studies to date have examined the neural changes accompanying learning within phonological and articulatory levels of auditory word processing, despite the demonstration of repetition priming effects in various language areas with visual nonword stimuli [Frith et al., 1995], spoken words and pseudowords [Orfanidou et al., 2006], spoken sentences [Dehaene‐Lambertz et al., 2006; Hasson et al., 2006], and at the lexicosemantic level of word learning [van Turennout et al., 2000, 2003]. One study showed unimodal and cross‐modal repetition suppression effects after conscious repetition of common nouns [Cohen et al., 2004], but phonological components cannot be separated from semantic ones in that paradigm. Similarly, another study [Breitenstein et al., 2005] used pseudowords to show changes accompanying the learning of a novel lexicon of words matched to meaningful pictures, but neither this study nor others like it assessed pure articulatory learning. The phonological aspect of language is critically important in the context of selective articulatory disorders such as verbal dyspraxia, apraxia of speech, dysarthria, or dysphonia, and therefore it must be understood separately from semantic learning.

We used fMRI to examine the neural changes associated with repeated exposure to novel auditory pseudoword stimuli. Our paradigm involved auditory presentation of novel but legal combinations of phonemes (i.e., pseudowords), such that semantic associations were separated from the perceptual and articulatory demands of word processing. We hypothesized that changes would be present in various auditory and motor areas after a small number of exposures, since humans need very few exposures to a stimulus to show learning effects. Specifically, we tested the hypothesis that all areas involved in speech articulation show changes in activation as a result of learning to articulate novel pseudoword stimuli. Alternatively, only a subset of the areas involved in articulation, or even areas outside of this network, might be involved in the process of learning to articulate novel pseudowords. Given the nature of our task, which requires accurate listening (input) and speech (output), changes were expected in both the auditory and motor systems as they relate to novel word‐like stimuli.

MATERIALS AND METHODS

Subjects

Of the 19 subjects who participated in the study, 14 were included in the fMRI analysis (six female; age range 20–34 years; mean age: 23.3 years). Of the remaining five subjects, three were excluded because of problems with image quality. The other two were excluded because of clinically insignificant structural differences, namely enlarged lateral ventricles, which resulted in poor registration of the basal ganglia structures for these subjects. All subjects were right‐handed, as confirmed by a standardized handedness questionnaire, and all were native speakers of English. The study was conducted under ethical approval from the local NHS Research Ethics Committee. Informed consent was obtained from all subjects, who were compensated for their participation.

Stimuli

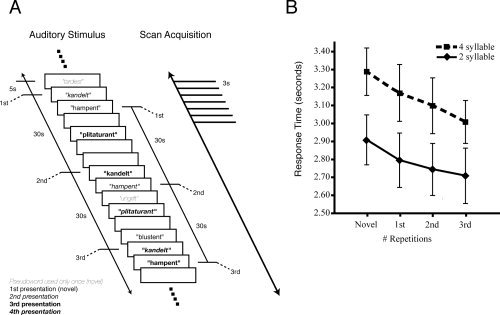

Stimuli consisted of 60 nonwords (pseudowords) based on the Test of Nonword Repetition [Gathercole and Baddeley, 1996]. Pseudowords were either two‐syllable (e.g., “terscoft”; n = 30) or four‐syllable (e.g., “frackerister”; n = 30) and varied in phonological complexity. Audio recordings of the stimuli spoken by a female speaker of British English were saved as separate sound files and presented over headphones during the experiment. Half of the pseudowords were presented four times in total (a novel presentation followed by three repetitions) at 30‐s intervals, in pseudorandom order throughout the scanning session, intermixed with the other half, which were presented only once to each subject (see Fig. 1A for further examples of pseudowords and the structure of the experimental design). Within both the repeated and the nonrepeated sets, half of the stimuli were two‐syllable and half four‐syllable pseudowords. This yielded 150 stimulus presentations in total (30 × 4 repeated + 30 nonrepeated). The pseudoword stimuli varied in duration, and the onsets of consecutive stimuli were separated by intervals of 5–15 s. The scan lasted 21 min (see Fig. 1A).
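The trial structure can be made concrete with a short Python sketch that builds a pseudorandom sequence of 150 presentations and labels each one by exposure number, the labeling needed for the exposure‐specific explanatory variables described under Single subject analysis. The item names, random seed, and uniform 5–15 s onset jitter are illustrative assumptions; the sketch does not enforce the 30‐s spacing between repetitions used in the study and is not the presentation script actually used.

```python
import random

# Exposure labels matching the four EVs of the single-subject model.
EXPOSURE_LABELS = ["Novel", "Rep1", "Rep2", "Rep3"]


def build_schedule(repeated, single, seed=0, soa_min=5.0, soa_max=15.0):
    """Return (onset_s, item, exposure_label) tuples in presentation order.

    Sketch only: repeated items appear four times and single items once,
    the order is shuffled, and onset-to-onset intervals are drawn uniformly
    from [soa_min, soa_max]. The study's 30-s spacing of repeats is NOT enforced.
    """
    rng = random.Random(seed)
    trials = list(repeated) * 4 + list(single)
    rng.shuffle(trials)
    times_seen = {}
    schedule = []
    onset = 0.0
    for item in trials:
        k = times_seen.get(item, 0)  # number of earlier presentations of this item
        times_seen[item] = k + 1
        schedule.append((onset, item, EXPOSURE_LABELS[k]))
        onset += rng.uniform(soa_min, soa_max)
    return schedule


# Hypothetical placeholder names for the 30 repeated and 30 single items.
repeated = [f"rep{i:02d}" for i in range(30)]
single = [f"once{i:02d}" for i in range(30)]
schedule = build_schedule(repeated, single)

counts = {}
for _, _, label in schedule:
    counts[label] = counts.get(label, 0) + 1
print(len(schedule), counts)
```

Because the first presentation of every item is labeled Novel, the Novel category pools 60 presentations (30 to‐be‐repeated plus 30 single items), while each repetition category contains 30.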

Figure 1.

A. Schematic of experimental design. Auditory pseudoword stimuli were presented for covert repetition in a pseudorandom order. Half the stimuli (light gray) were presented only once; the other half were presented four times (shown in regular, italic, bold, and bold italic). Onsets of consecutive stimuli were separated by 5–15 s. EPI volumes were acquired every 3 s. B. Response times for covert pseudoword repetition across four stimulus presentations. Subjects were asked to press a button with their left index finger after silently repeating each pseudoword. Response times were measured from the start of stimulus presentation to the button press. Response times for four‐syllable stimuli were significantly longer than those for two‐syllable stimuli; there was also a significant decrease in response times across the four presentations (see text). Error bars represent between‐subject standard errors of the mean.

Procedure

All data were collected on a 3‐T Siemens Trio MR system using an eight‐channel head coil. Since this was a completely auditory task, subjects were asked to keep their eyes closed throughout the experiment. Auditory stimuli were presented using the Commander XG (Resonance Technologies, Northridge, CA) MR‐compatible headphones at a comfortable listening level and were clearly audible above the background scanner noise, which was attenuated by up to 30 dB.

Subjects heard pseudoword stimuli, which they were asked to repeat covertly. Covert repetition was used in order to minimize artifacts in the images due to muscle activations and changes in air spaces near the brain (caused by mouth opening and closing). Subjects were asked to respond by button‐press with their left index finger immediately after covertly repeating each pseudoword, thereby providing a correlate of the time taken to repeat each stimulus. The button press served two purposes: it provided a simple behavioral measure of learning during our task, and it indicated whether the subject was awake and concentrating for the duration of the task. Subject compliance with button presses was 100%, indicating that all subjects performed the task adequately. In this event‐related design, 403 whole‐brain volumes were obtained in an echo‐planar scanning sequence (TR = 3 s; TE = 30 ms; 48 axial slices; 3 × 3 × 3 mm³ voxels).

A high‐resolution (1 × 1 × 1 mm³) T1‐weighted structural image was also acquired for each subject during the same scanning session. Immediately after scanning, subjects performed a behavioral test outside the scanner: they listened to and repeated aloud a list of 60 pseudowords, half of which they had heard and covertly repeated four times in the scanner and half only once. Audio recordings of these responses were made for off‐line analysis. A judge who was blind to item status (i.e., presented four times vs. only once) counted the number of errors made by each subject in overt repetition of the stimuli.

Data Analysis

Analysis of the imaging data was carried out using the general linear model within FEAT (FMRI Expert Analysis Tool) Version 5.42, part of FSL (FMRIB's Software Library, http://www.fmrib.ox.ac.uk) [Smith et al., 2004].

Prestatistics

The following prestatistics processing was applied: motion correction of each 3D volume in the time series to the middle volume as a reference using MCFLIRT (Motion Correction using FMRIB's Linear Image Registration Tool) [Jenkinson et al., 2002]; removal of signal from nonbrain tissue using BET (Brain Extraction Tool) [Smith, 2002]; spatial smoothing using a Gaussian kernel of full‐width half‐maximum 6 mm; mean‐based intensity normalization of all volumes by the same factor; and highpass temporal filtering (highpass filter cutoff = 75 s) to remove low‐frequency noise components. The functional data for each subject were linearly registered using FLIRT (FMRIB's Linear Image Registration Tool) [Jenkinson and Smith, 2001; Jenkinson et al., 2002] to his or her own structural image, and this image was then registered to a standard‐space template (the MNI152 average brain).
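The smoothing kernel above is specified as a full width at half maximum (FWHM), whereas Gaussian filters are usually parameterized by their standard deviation, σ = FWHM / (2√(2 ln 2)). The Python sketch below performs this conversion for the 6‐mm kernel and 3‐mm voxels, and also expresses the 75‐s highpass cutoff as a temporal σ in volumes. The cutoff / (2 × TR) rule for the highpass σ reflects our understanding of how FEAT sets up its temporal filter and should be treated as an assumption to check against the FSL documentation; none of this is code from the original analysis.

```python
import math


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def highpass_sigma_volumes(cutoff_s, tr_s):
    """Temporal sigma in volumes, assuming the cutoff / (2 * TR) convention
    (an assumption to verify against the FSL version in use)."""
    return cutoff_s / (2.0 * tr_s)


sigma_mm = fwhm_to_sigma(6.0)                 # 6-mm FWHM spatial kernel
sigma_vox = sigma_mm / 3.0                    # 3-mm isotropic EPI voxels
hp_sigma = highpass_sigma_volumes(75.0, 3.0)  # 75-s cutoff, TR = 3 s

# Sanity check: the Gaussian falls to half its peak at +/- FWHM / 2 (= 3 mm).
half = math.exp(-(3.0 ** 2) / (2.0 * sigma_mm ** 2))
print(f"sigma = {sigma_mm:.3f} mm ({sigma_vox:.3f} voxels); "
      f"value at FWHM/2 = {half:.3f}; highpass sigma = {hp_sigma} volumes")
```

Under these assumptions the 6‐mm kernel corresponds to σ of about 2.55 mm, i.e. a little under one voxel.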

Single subject analysis

The statistical model consisted of four explanatory variables (EVs) and their temporal derivatives: novel stimuli (the first presentation of both to‐be‐repeated and nonrepeated items), first repetition, second repetition, and third repetition. Each stimulus onset was modeled with a 5‐s duration, intended to capture both auditory perception and covert production. Six subject‐specific motion parameters obtained from motion correction (translations along and rotations about each of the three axes) were included as additional EVs (covariates of no interest) to remove movement‐related artifacts. Each EV was convolved with a double‐γ hemodynamic response function [Friston et al., 1998; Glover, 1999], whose parameters are identical to those of the “canonical HRF” used in SPM (Statistical Parametric Mapping) since SPM99 [Glover, 1999]. Effects were tested with two sets of contrasts over the four EVs: (1) a linear increase (−2, −1, 1, 2) and a linear decrease (2, 1, −1, −2) across the four presentations; and (2) a decrease (1, 0, 0, −1) and an increase (−1, 0, 0, 1) between the novel presentation and the third repetition. This analysis was designed to capture learning‐related changes across the whole brain.
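To illustrate how one EV in this model is constructed, the following Python sketch (standard library only) convolves a 5‐s boxcar at each onset with a double‐γ HRF, samples the result at TR = 3 s, and then applies the contrast weights listed above to a set of parameter estimates. The HRF parameters (response shape 6, undershoot shape 16, undershoot ratio 1/6, unit scale) are our assumption of the SPM defaults; the temporal derivatives, motion covariates, and FEAT's own convolution and prewhitening are omitted, and the beta values are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(t):
    """Double-gamma HRF with assumed SPM-style defaults: response shape 6,
    undershoot shape 16, undershoot scaled by 1/6."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def regressor(onsets, n_vols, tr=3.0, duration=5.0, dt=0.1, hrf_len=32.0):
    """Boxcar of `duration` s at each onset convolved with the HRF
    (midpoint Riemann sum, step dt), sampled at the start of each volume."""
    x = [0.0] * n_vols
    n_steps = int(round(duration / dt))
    for v in range(n_vols):
        t = v * tr
        for onset in onsets:
            if t < onset or t > onset + duration + hrf_len:
                continue  # this event cannot contribute at time t
            for k in range(n_steps):
                s = onset + (k + 0.5) * dt  # midpoint of one boxcar slice
                x[v] += double_gamma_hrf(t - s) * dt
    return x


# Contrast weights over the EVs [Novel, Rep1, Rep2, Rep3], as listed in the text.
CONTRASTS = {
    "linear_increase": [-2, -1, 1, 2],
    "linear_decrease": [2, 1, -1, -2],
    "novel_gt_rep3": [1, 0, 0, -1],
    "rep3_gt_novel": [-1, 0, 0, 1],
}


def contrast_estimate(betas, weights):
    """Weighted sum c'b of the parameter estimates for one voxel."""
    return sum(w * b for w, b in zip(weights, betas))


# Hypothetical betas for one voxel whose response shrinks with repetition.
betas = [1.0, 0.7, 0.6, 0.5]
for name, c in CONTRASTS.items():
    print(name, round(contrast_estimate(betas, c), 3))
```

With these hypothetical betas, the decrease contrasts come out positive and their increase counterparts negative, which is how a repetition‐suppression effect would register.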

Group analysis

The higher‐level analysis across subjects used FLAME (FMRIB's Local Analysis of Mixed Effects) [Beckmann et al., 2003; Woolrich et al., 2004]. Statistical images were cluster thresholded (Z > 4.3 for the contrast of novel stimuli versus baseline and Z > 2.3 for the learning contrasts; cluster significance P < 0.05, corrected), and the resulting z‐maps were overlaid onto a standard‐space average of the high‐resolution T1‐weighted structural images obtained from the 14 subjects.
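Cluster‐based thresholding first keeps voxels whose Z value exceeds a cluster‐forming threshold, groups them into spatially contiguous clusters, and then assesses each cluster's size; in FSL the size‐based P value comes from Gaussian random field theory. The Python sketch below covers only the first two steps, grouping supra‐threshold voxels and reporting each cluster's size and peak voxel (the “Z max” of Tables I and II). The 26‐voxel neighborhood, the dictionary representation of the Z map, and the names `find_clusters` and `peak` are assumptions for illustration; no random‐field P values are computed.

```python
from collections import deque
from itertools import product


def find_clusters(zmap, z_thresh):
    """Group supra-threshold voxels into 26-connected clusters.

    zmap: dict mapping (x, y, z) voxel indices to Z values.
    Returns a list of clusters (lists of voxel coordinates), largest first.
    """
    supra = {v for v, z in zmap.items() if z > z_thresh}
    offsets = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    clusters = []
    while supra:
        seed = supra.pop()
        cluster, queue = [seed], deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in offsets:
                nb = (x + dx, y + dy, z + dz)
                if nb in supra:
                    supra.remove(nb)
                    cluster.append(nb)
                    queue.append(nb)
        clusters.append(cluster)
    return sorted(clusters, key=len, reverse=True)


def peak(cluster, zmap):
    """Voxel with the maximum Z value within a cluster."""
    return max(cluster, key=lambda v: zmap[v])


# Toy Z map: one three-voxel blob, one isolated voxel, one subthreshold voxel.
toy = {(0, 0, 0): 5.0, (1, 0, 0): 4.6, (1, 1, 1): 4.4, (5, 5, 5): 4.9, (9, 9, 9): 3.0}
clusters = find_clusters(toy, 4.3)
print([len(c) for c in clusters], peak(clusters[0], toy))
```

Lowering the cluster‐forming threshold (e.g. from 4.3 to 2.3) admits more voxels, so clusters tend to be larger and more numerous, which is one reason the learning contrasts and the novel‐versus‐baseline contrast were thresholded differently.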

ROI analysis

In a separate analysis, we extracted the signal change associated with each exposure (novel and the three subsequent repetitions) within several regions of interest (ROIs). The ROIs were masks of the clusters from the contrast of stimuli presented for the first time (novel condition, including both repeated and nonrepeated items) versus baseline. This contrast was thresholded at Z > 4.3, and clusters whose size was significant at P < 0.05, corrected, were retained. The same ROI masks were applied to every subject, and the mean signal change (effect size) across the voxels in each mask was extracted from each subject's data for each presentation of the repeated stimuli. These data were analysed using repeated measures analysis of variance (ANOVA) with one four‐level within‐subject factor (Novel, 1st, 2nd, and 3rd repetitions). This allowed us to compare effect sizes and the specific pattern of learning‐related change in each area.
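For a single ROI, the one‐factor repeated measures ANOVA partitions the total sum of squares into a presentation term, a subject term, and a residual (presentation × subject) term. A minimal Python sketch using only the standard library and hypothetical data, not the study's effect sizes, is shown below. With 14 subjects and four presentations it yields F with (3, 39) degrees of freedom, matching those reported in the Results; the P value would then come from the F distribution (for example, `scipy.stats.f.sf(F, 3, 39)`). Sphericity corrections are not included.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of per-subject lists, one value per condition
          (here: Novel, Rep1, Rep2, Rep3 effect sizes from one ROI).
    Returns (F, df_conditions, df_error).
    """
    n = len(data)     # subjects
    k = len(data[0])  # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error


# Hypothetical toy data: 3 subjects x 3 conditions.
f, df1, df2 = rm_anova_oneway([[1, 2, 3], [2, 3, 5], [3, 4, 4]])
print(f"F({df1}, {df2}) = {f:.2f}")
```

Removing the between‐subject variance before forming the error term is what makes this design sensitive to consistent within‐subject decreases even when overall activation levels differ widely across subjects.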

RESULTS

Behavioral Data

Repeated presentation of stimuli for covert repetition in the scanner produced a behavioral learning effect in every subject, as measured by button‐press response times during the task. All subjects were significantly faster to covertly repeat pseudowords on the fourth exposure (mean time: 2.86 s) than on the first exposure (i.e., novel stimuli; mean time: 3.10 s). Across all subjects and stimuli, the time to silently repeat each stimulus decreased with each repeated presentation for both two‐syllable and four‐syllable pseudowords (Fig. 1B). A repeated‐measures ANOVA revealed main effects of both the number of syllables [F(1,12) = 123.14, P < 0.001; 4‐syllable > 2‐syllable pseudowords] and the number of presentations [F(3,36) = 23.40, P < 0.001; decrease in response times across four presentations] and no interaction between these factors (P = 0.67). Planned pairwise comparisons showed significant decreases in response times between every pair of consecutive presentations (P < 0.05).

Analysis of the postscan overt repetition errors revealed that, in addition to the in‐scan improvement in repetition time discussed above, subjects made a larger number of errors in overt repetition of pseudowords presented once only (mean number of errors, 5.43, standard deviation, 2.34) as compared with pseudowords presented and covertly repeated four times during the scan (mean number of errors, 3.07, standard deviation, 1.49). A paired t‐test showed that this effect was significant (t(13) = 4.83, P < 0.0005). This result suggests that subjects benefited from repeated presentation and covert repetition.
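The paired t‐test compares, within each subject, the number of errors for pseudowords presented once with the number for pseudowords presented four times, and tests whether the mean of these within‐subject differences departs from zero. A minimal Python sketch using the standard `statistics` module follows; the error counts are hypothetical and are not the study's data. With 14 subjects the test has 13 degrees of freedom, as in t(13) above.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))  # mean difference / its standard error
    return t, n - 1


# Hypothetical error counts for four subjects.
errors_once = [5, 6, 7, 8]   # pseudowords heard once
errors_four = [3, 5, 4, 6]   # pseudowords heard four times
t, df = paired_t(errors_once, errors_four)
print(f"t({df}) = {t:.2f}")
```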

Debriefing reports indicate that participants, while aware that some pseudowords were repeated, had no explicit knowledge of the repetition structure of the experiment, including the number of repetitions of pseudowords or the length of the time interval between them.

fMRI Data

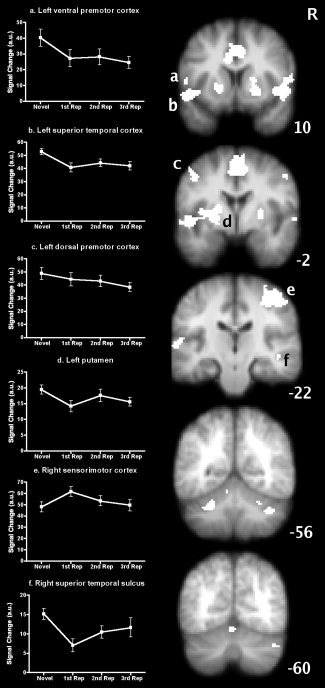

The whole‐brain analyses revealed several areas with significant activity during listening to and silently repeating novel pseudoword stimuli compared with baseline. These areas form a bilaterally symmetric network including posterior inferior frontal cortex, superior temporal cortex, putamen, dorsal premotor cortex, and the anterior lobe of the cerebellum, and, medially, the SMA/preSMA/cingulate motor area (CMA) complex and the cerebellar vermis (see Table I and Fig. 2). A large cluster in the right sensorimotor cortex at about the level of the hand representation was also seen in this contrast and most likely reflects the expected response to the button press with the left hand.

In the ROI analysis, masks of these areas were derived from the activation clusters. The extensive cluster in the left superior temporal lobe, which extended medially to the left putamen, was divided into two masks: one comprising the voxels in the putamen and one comprising the remainder in the left superior temporal lobe. The other cluster masks were: right frontotemporal cortex, right putamen, left ventral premotor cortex, the SMA/preSMA/CMA, left dorsal premotor cortex, right dorsal premotor cortex, right sensorimotor cortex, right superior temporal sulcus (STS), left anterior lobe of the cerebellum, right anterior lobe of the cerebellum, the cerebellar vermis, and right posterior lobe of the cerebellum (see Table I and Fig. 2). Repeated measures analysis of the mean signal change in these masks across repeated presentations of the stimuli revealed a significant interaction between presentation number and ROI (F(39, 546) = 1.842, P = 0.002). This interaction was due to significant decreases in the left dorsal premotor cortex (F(3,39) = 3.50, P = 0.024), left superior temporal cortex (F(3,39) = 6.37, P = 0.001), left putamen (F(3,39) = 3.36, P = 0.028), and left ventral premotor cortex (F(3,39) = 4.31, P = 0.01).
No significant decreases were observed in the right hemisphere homologues of these regions. The right STS, however, also showed a significant decrease in activity with repeated presentation (F(3,39) = 5.864, P = 0.002). Significant increases were found only in the right sensorimotor cortex (F(3,39) = 7.31, P = 0.001), and examination of the means suggests that this increase is confined to the first repetition relative to the novel stimuli; activity in this region is at the level of the hand representation and most likely reflects the button press made by subjects after covertly repeating the stimuli. Figure 2 shows the pattern of these changes across the four presentations. The right STS, left superior temporal cortex, left ventral premotor cortex, and left putamen show a large decrease in activity after just one repetition, followed by small changes on the 2nd and 3rd repetitions. Changes in the left dorsal premotor cortex were more gradual across the four presentations.

Table I.

Brain areas activated during listening to and covertly repeating novel pseudoword stimuli

| Cluster (number of voxels) | Z max | X | Y | Z | ROI activation change |
|---|---|---|---|---|---|
| Left superior temporal cortex and putamen (1399) | 6.27 | −54 | 12 | −12 | * |
| (local maximum, putamen) | 5.70 | −20 | 4 | −2 | * |
| Left ventral premotor cortex (18) | 4.73 | −52 | 12 | 6 | * |
| Right frontotemporal cortex (453) | 5.23 | 52 | 10 | −2 | n.s. |
| Right putamen (483) | 5.22 | 18 | 6 | 10 | n.s. |
| Supplementary motor area and cingulate cortex (1219) | 5.71 | −2 | 0 | 52 | n.s. |
| (local maximum) | 5.54 | 6 | 8 | 40 | |
| Right dorsal premotor cortex (20) | 4.88 | 54 | 0 | 44 | n.s. |
| Left dorsal premotor cortex (69) | 4.96 | −48 | −2 | 50 | * |
| Right sensorimotor cortex (542) | 5.72 | 48 | −20 | 50 | * |
| Right superior temporal sulcus (15) | 4.78 | 46 | −24 | −6 | * |
| Left anterior lobe of cerebellum (147) | 5.10 | −20 | −50 | −30 | n.s. |
| Right anterior lobe of cerebellum (117) | 5.15 | 40 | −56 | −36 | n.s. |
| Midline cerebellum (vermis lobule VI) (65) | 4.89 | −2 | −68 | −14 | n.s. |
| Right posterior lobe of cerebellum (Crus I) (19) | 4.82 | 44 | −68 | −32 | n.s. |

Coordinates indicate local maxima of activation in the x (medial–lateral; negative, left), y (anterior–posterior; negative, posterior), and z (dorsal–ventral; negative, ventral) axes. Statistical maps were cluster thresholded at Z > 4.3 and P < 0.05, corrected. See also Figure 2.

Asterisk (*) indicates significant activation change across the four presentations at P < 0.05; n.s., not significant.

Figure 2.

Brain areas activated by covert repetition of auditory pseudowords. Statistical maps of the areas activated by listening to and covertly repeating novel pseudowords are overlaid onto the average structural image of the subjects in this study. White areas indicate masks of significant clusters thresholded at Z > 4.3, P < 0.05, corrected. R ‐ right hemisphere; numbers next to each coronal slice indicate its position in mm relative to the vertical plane through the anterior commissure. The superimposed lowercase letters correspond to graphs a–f, which identify the areas in which there was a significant change (P < 0.05) in activity across repetitions. a.u. ‐ arbitrary units. See Table I and text for further details.

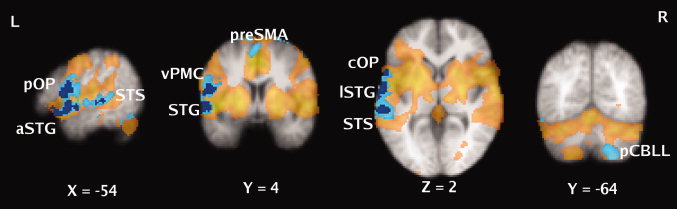

For the whole‐brain analyses, the contrasts examining linear changes across the four presentations of the stimuli revealed significant decreases in activity in a cluster encompassing the lateral inferior frontal cortex and temporal cortex in the left hemisphere only. The local maxima within this cluster were in the pars opercularis, ventral premotor cortex, central (Rolandic) opercular cortex, the anterior and lateral superior temporal gyrus, and the posterior STS (see Table II and Fig. 3). We also compared brain activity during the first presentation and the last (3rd repetition) to capture any change between these two states, ignoring the intermediate (1st and 2nd) repetitions. In addition to the areas revealed by the linear contrast, the preSMA, SMA, and right posterior lobe of the cerebellum all showed significantly decreased activity between the first and last presentations of the stimuli (see Table II and Fig. 3). No brain areas showed significant linear increases in activity or a significant increase in activity between the first and last presentations.

Table II.

Brain areas showing decreased activity across repeated presentations (including listening and covert repetition) of pseudoword stimuli

| Cluster (number of voxels), location of statistical peak | Z max | X | Y | Z |
|---|---|---|---|---|
| Linear decreases across four presentations | | | | |
| Left frontotemporal cortex (1048) | | | | |
| Posterior IFG (pars opercularis) | 3.10 | −54 | 12 | 8 |
| Anterior STG | 3.36 | −56 | 8 | −4 |
| Ventral premotor cortex | 3.19 | −50 | 6 | 16 |
| Lateral STG | 3.37 | −62 | −8 | 2 |
| Lateral STG/central operculum | 3.34 | −62 | −8 | 6 |
| Posterior STS | 3.42 | −60 | −30 | 0 |
| Additional areas showing decreases from novel to third repetition | | | | |
| Medial frontal cortex (772) | | | | |
| PreSMA | 3.20 | −2 | 4 | 60 |
| SMA | 3.16 | −2 | 0 | 62 |
| Right posterior lobe of cerebellum (730) | | | | |
| Lobule VIIIB | 3.22 | 28 | −56 | −54 |
| Lobule VIIIA | 3.22 | 20 | −62 | −46 |
| (local maximum) | 3.59 | 24 | −64 | −58 |

No significant increases in activity were seen.

See legend to Table I for details. Statistical maps were cluster thresholded at Z > 2.3 and P < 0.05; local maxima within a cluster with Z > 3.1 are reported. IFG – inferior frontal gyrus; STG – superior temporal gyrus; STS – superior temporal sulcus.

Figure 3.

Brain areas showing learning‐related decreases in activity. Statistical maps are overlaid onto the average structural image of subjects in this study. Colored areas indicate significant clusters thresholded at Z > 2.3, P < 0.05, corrected. Areas showing a significant linear decrease in activity across four presentations of the stimuli are shown in dark blue; those showing a significant decrease between the first (Novel) and last presentations (3rd repetition) are shown in light blue. The areas involved in listening to and repeating novel pseudowords are shown in semi‐transparent yellow (Note: for display purposes, this statistical image was thresholded at Z > 2.3, P < 0.05, corrected, for comparison with the contrasts examining learning). L ‐ left hemisphere; R ‐ right hemisphere; numbers below each slice indicate its position in mm relative to the horizontal and vertical planes through the anterior commissure; pOP ‐ pars opercularis; aSTG ‐ anterior superior temporal gyrus; STS ‐ superior temporal sulcus; vPMC ‐ ventral premotor cortex; preSMA ‐ presupplementary motor area; cOP ‐ central operculum; lSTG ‐ lateral superior temporal gyrus; pCBLL ‐ posterior cerebellum. See Table II for further details.

DISCUSSION

This study describes the neural changes accompanying learning of novel combinations of phonemes comprising new words. A repetition learning paradigm using auditory pseudoword stimuli drove subjects to learn to articulate new word‐like stimuli, presumably through the phonological loop [Baddeley, 1992; Gathercole et al., 1997], allowing us to separate out the neural systems involved in articulatory learning, as opposed to the type of procedural word learning using lexicosemantic rules [Breitenstein et al., 2005; van Turennout et al., 2003; Wheeldon and Monsell, 1992].

We show that behavioral improvements are associated with distinct neural changes in motor and language areas of the brain. It is not surprising that auditory stimulation and (subvocal) repetition should lead to articulatory learning, since this is perhaps the most common system for natural vocal learning in humans. Because of strong connections between the speech perception and motor systems [Pulvermuller, 2005], as well as previous studies implicating motor structures in speech articulation (see Introduction), we hypothesized that language and motor structures would be functionally affected by our paradigm. Indeed, we show a decrease in activation across several motor cortical, cerebellar, and left temporal and frontal language areas. Surprisingly, however, only a subset of the areas involved in speech articulation showed a change in activation, and in all but one region that change was a decrease. Specifically, despite bilateral, statistically significant activations during articulation of novel pseudowords, decreases in activation due to repeated stimulus presentation were almost completely left lateralized. Equally notable, these changes emerged quickly, with major changes after a single repetition, suggesting that the neural networks underlying this type of learning are highly plastic.

Behaviorally, the time to covertly repeat pseudoword stimuli decreased with each additional exposure, and postscan behavioral testing showed fewer errors for pseudowords heard and covertly repeated four times than for those heard and covertly repeated only once. These data indicate that subjects learned the articulation patterns associated with the new words. Behavioral improvement was coupled to decreased activity in two distinct but interrelated systems: (1) left hemisphere cortical motor areas (interacting with the right cerebellum) used for speech articulation and (2) a left temporal cortical system used for the phonological representation of auditory word‐like stimuli. The precise pattern of decreased activation varied across areas, especially between motor and perceptual areas (Fig. 2), suggesting that different forms of learning or priming may have occurred.

Two separate analyses (whole‐brain and ROI) showed very similar areas of decreased activation across repeated presentations. The ROI analysis (described below) addressed whether all or only some of the areas involved in articulating pseudowords showed learning‐related changes. However, because activation changes could also occur in areas not significantly activated (i.e., subthreshold) by the task of repeating novel stimuli, we also performed a whole‐brain analysis. Within that analysis (Fig. 3), we examined both linear changes in activation across the four presentations and changes between the novel presentation and the third repetition. Linear decreases were seen only in one large cluster of left frontotemporal cortex (see Table II for local maxima). The second comparison, in addition to showing decreased activation across the left frontotemporal cluster seen in the linear analysis, revealed decreases in SMA, preSMA, and the right posterior lobe of the cerebellum. Importantly, these areas were all activated by the task of hearing and covertly repeating novel pseudowords (Table I). However, not all of the originally activated areas showed changes, suggesting that the areas modified by this type of learning are a subset of those involved in articulating pseudowords. Specifically, all changes seen in the whole‐brain analysis were left lateralized, with a corresponding change in the right cerebellum.

The ROI analysis used the areas of significant activation for novel stimuli (Table I) as masks, so it was sensitive to changes in any area engaged by the task (Fig. 2). Again, changes were lateralized to the left hemisphere despite bilateral activation for novel stimuli. The areas that changed significantly in this analysis matched the whole‐brain results well, with significant changes in left superior temporal cortex, left dorsal and ventral premotor cortex, and left putamen. Significant changes were also seen in right sensorimotor cortex (related to the button press) and right STS (see below for explanation). All changes in activation except those in right sensorimotor cortex were decreases. Importantly, with the exception of the more medially located SMA and possibly the right STS, right‐hemisphere homologues did not show changes in either the whole‐brain or the ROI analysis. These hemispheric differences may reflect the left hemisphere's specialization for many aspects of speech perception and production, and the involvement of the right hemisphere in extralinguistic processing such as the speaker's voice [Dehaene‐Lambertz et al., 2006].
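
A minimal sketch of a mask‐based ROI summary follows, assuming each condition's statistic map has been flattened to a list of voxel values aligned with a binary mask derived from the novel‐stimulus activation map. The function name `roi_means` and the six‐voxel data are hypothetical; a real analysis would operate on registered 3D image volumes with dedicated neuroimaging software.

```python
from statistics import fmean


def roi_means(mask, condition_maps):
    """Average each condition's voxel values inside a binary ROI mask.

    mask: sequence of booleans, one per voxel (True = voxel belongs to the ROI).
    condition_maps: dict mapping condition name -> sequence of voxel values
        aligned with the mask.
    Returns a dict mapping condition name -> mean value within the ROI.
    """
    if not any(mask):
        raise ValueError("mask selects no voxels")
    means = {}
    for name, values in condition_maps.items():
        if len(values) != len(mask):
            raise ValueError(f"map for {name!r} does not match mask size")
        # Keep only voxels inside the mask, then average them.
        means[name] = fmean(v for v, keep in zip(values, mask) if keep)
    return means


# Hypothetical example: the mask stands in for voxels significantly active
# for novel pseudowords; voxel 2 lies outside the mask and is ignored.
mask = [True, True, False, True, False, False]
maps = {
    "novel": [2.0, 4.0, 9.0, 3.0, 0.0, 1.0],
    "rep3": [1.0, 2.0, 9.0, 3.0, 0.0, 1.0],
}
print(roi_means(mask, maps))  # {'novel': 3.0, 'rep3': 2.0}
```

Restricting the average to voxels defined by the novel condition is what makes this analysis blind to changes outside the originally activated areas, which is why it complements rather than replaces the whole‐brain analysis.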

We suggest that, in learning to articulate novel words, changes in activation occur in systems traditionally separated into “motor” and “language” but which may be better thought of as an interconnected network linking action and perception. Recent transcranial magnetic stimulation [Pulvermuller et al., 2005; Watkins and Paus, 2004; Watkins et al., 2003] and neuroimaging [Pulvermuller et al., 2003, 2006; Wilson et al., 2004] studies support this notion, showing increased activity in motor areas during speech perception. In nonhuman primates, corticocortical connections link premotor and inferior frontal regions [Rizzolatti and Luppino, 2001; Young et al., 1994] as well as premotor and auditory (temporal cortex) areas [Romanski et al., 1999]. Human homologues of these connections may provide the anatomical substrate linking the activation changes we observed across premotor, inferior frontal, and temporal cortex. One such connection, the arcuate fasciculus, links the posterior superior temporal gyrus with the inferior frontal gyrus and has been studied in detail in humans because of its involvement in aphasia [Bartha and Benke, 2003; Catani et al., 2005; Damasio and Damasio, 1980]. This fiber pathway and the two regions it connects are likely crucial for our task, which requires the kind of repetition of auditory stimuli that patients with conduction aphasia are unable to perform. Although we did not examine the neural correlates of learning to articulate visually presented pseudowords, a previous study of auditory and visual pseudoword processing found modality‐specific activity for auditory processing in the left superior temporal gyrus, whereas posterior inferior frontal gyrus activity was present in both modalities [Burton et al., 2005]. This supports the idea of two distinct but interconnected systems converging on the inferior frontal gyrus.

The involvement of the left superior temporal cortex and posterior inferior frontal cortex in articulatory learning is consistent with the known roles of these areas in the perception and production of speech. Furthermore, the difficulty of a repetition task can strongly affect left superior temporal cortex activation: greater activation has been reported for second‐language stimuli, for stimuli with more syllables, and for more complex words and pseudowords [Klein et al., 2006]. Similar differences between native and second‐language stimuli exist in frontal lobe regions [Ruschemeyer et al., 2006; Yetkin et al., 1996]. Difficulty level may simply reflect the efficiency with which a particular stimulus or task is processed [Grill‐Spector et al., 2006]. In our study, covert articulation of pseudowords became easier and more accurate, in a stimulus‐specific manner, with increasing exposure, as measured by decreased response times (Fig. 1B) and fewer errors in postscanning overt repetition. Correspondingly, activation decreased across superior temporal cortex (Fig. 3). The learning‐related decreases in activity that we observed in left ventral premotor cortex and the pars opercularis of Broca's area are consistent with fMRI and transcranial magnetic stimulation studies identifying this region as crucial for phonological processing [Burton et al., 2005; Gough et al., 2005; Nixon et al., 2004].

As noted above, together with the changes in left cortical motor and premotor areas, the right posterior lobe of the cerebellum showed learning‐related decreases in activity. The cerebellum's important role in error detection, especially in motor tasks [Doyon et al., 2002; Friston et al., 1992], and more generally in procedural learning [Fiez et al., 1992; Raichle et al., 1994], makes it a strong candidate for involvement in articulatory learning. Recent studies have proposed that regions in the posterior lobe of the cerebellum may be involved in auditory processing in particular [Pastor et al., 2002; Petacchi et al., 2005], which may help explain the results we observed here.

The SMA also showed learning‐related decreases in our study, consistent with its involvement in producing complex movement sequences [Gerloff et al., 1997; Lee and Quessy, 2003], a key aspect of fluent articulation and speech. Damage to this area can cause problems with speech initiation, mutism, or dysphonia [Jonas, 1981; Ziegler et al., 1997]. It is therefore surprising that we did not see more significant changes in the basal ganglia, since the SMA is thought to receive timing cues relevant for sequential movement from the output nuclei of the basal ganglia [Mushiake and Strick, 1995]. The absence of such changes is also surprising given the known involvement of the basal ganglia in procedural motor and nonmotor learning [Harrington et al., 1990; Poldrack et al., 1999a]. Activity in the putamen was observed for listening to and covertly repeating novel pseudoword stimuli (Table I and Fig. 2). These data fit with previous results showing that the left putamen is activated to a greater extent by the increased articulatory demands of a second language [Klein et al., 1994]. Notably, we did not find any activation related to either articulation or learning in the caudate nucleus. This negative result may be unexpected given the structural and functional abnormalities of the caudate nucleus seen in affected members of the KE family, who carry a FOXP2 mutation and have resulting difficulties in speech articulation [Watkins et al., 2002b]. Caudate activation may require overt speech, since covert speech does not allow a comparison of actual auditory feedback with the expected output. Moreover, in a pilot study of five subjects, we did find a statistical trend toward increased caudate activation with increasing numbers of stimulus exposures. Future experiments should help tease out the specific contributions of the various parts of the basal ganglia to vocal learning.

Several neural mechanisms could underlie the repetition suppression effect that we observed [for a recent review, see Grill‐Spector et al., 2006]. Repetition suppression, defined here as a reduced neural response, as measured by fMRI, following stimulus repetition, is usually attributed either to shorter (more efficient) processing time or to a sharpening of responses at the neural level [Poldrack, 2000]. Neurophysiological recordings in monkeys have shown that, with stimulus repetition, a minority of neurons in a network fire more vigorously while the majority decrease their firing rates in a highly stimulus‐specific manner [Desimone, 1996; Miller and Desimone, 1994]. These changes are usually interpreted as more efficient encoding of repeatedly presented stimuli, which can appear as decreased activation in the fMRI signal. Thus, the observed decrease in activation coupled to improvement in behavior (which we define as learning) most likely results from more efficient processing at the input and output levels. The precise mechanism by which such efficiency is achieved is unknown and may or may not involve altered input from other cortical or subcortical structures.
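
The toy simulation below illustrates how a net decrease in a summed regional signal can coexist with increased firing in a minority of neurons. It assumes, as a deliberate simplification, that a region's fMRI signal scales with the summed firing of its neurons; the population size, firing rate, scaling factors, and function names are arbitrary and hypothetical, not estimates from the cited recordings.

```python
def population_response(rates):
    """Crude proxy for a regional fMRI signal: summed firing of all neurons."""
    return sum(rates)


def apply_repetition(rates, n_increase, up=1.3, down=0.6):
    """Toy repetition effect: the first n_increase neurons fire more vigorously
    and all remaining neurons reduce their firing. Scaling factors are arbitrary."""
    return [r * up if i < n_increase else r * down for i, r in enumerate(rates)]


# Hypothetical population of 100 neurons, each firing at 10 spikes/s to a novel stimulus.
before = [10.0] * 100
after = apply_repetition(before, n_increase=20)

# Count the neurons whose firing increased after repetition.
n_up = sum(1 for b, a in zip(before, after) if a > b)
print(n_up)  # 20
print(population_response(before), population_response(after))
```

Under these assumptions the summed signal falls even though one neuron in five responds more strongly, which is the sense in which stimulus‐specific changes at the neural level can be picked up as decreased activation at the coarser fMRI level.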

We are confident that the activation changes we report reflect real plastic changes in neural activity rather than artifacts. By using an event‐related design, we were able to intermix stimuli and counterbalance repetition number so that presentation number did not covary with time into the scan. Therefore, our results cannot be explained by a decline in attention over time. Slow, time‐related artifacts would also have been removed by high‐pass temporal filtering. It has been argued that awareness of stimuli or task demands can lead to changes in activation [Poldrack, 2000]. It is highly unlikely that our results reflect changes in awareness of the stimuli, since postscanning reports indicated that subjects were completely unaware of how often stimuli were repeated in our study.
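
As an illustration of why high‐pass filtering removes slow time‐related drift while sparing brief event‐related responses, the sketch below subtracts a centered running mean from a simulated time series. This simple running‐mean filter is swapped in for illustration only; the filter actually applied in this study may differ, and the function name, window width, and simulated signal are hypothetical.

```python
def running_mean_highpass(signal, half_width):
    """Crude high-pass filter: subtract a centered running mean from each sample.

    Slow drifts change little within the window, so the running mean captures
    them and subtraction removes them; a brief response is mostly preserved.
    Near the start and end of the series the window is truncated.
    """
    n = len(signal)
    filtered = []
    for i in range(n):
        lo = max(0, i - half_width)
        hi = min(n, i + half_width + 1)
        window = signal[lo:hi]
        filtered.append(signal[i] - sum(window) / len(window))
    return filtered


# Hypothetical time series: a slow linear drift plus one brief response at t = 50.
drift = [0.05 * t for t in range(100)]
signal = [d + (1.0 if t == 50 else 0.0) for t, d in enumerate(drift)]
clean = running_mean_highpass(signal, half_width=10)
print(round(clean[50], 3))  # 0.952
```

For a purely linear drift, a symmetric window's mean equals the central sample, so the drift is removed exactly away from the edges, while the single‐sample response survives with most of its amplitude.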

We cannot rule out the possibility, however, that subjects attributed semantic content to the pseudoword stimuli and that these semantic attributes changed in a stimulus‐specific manner over the course of the experiment. This possibility offers an alternative explanation for the changes in left inferior frontal gyrus activation, since this region likely plays some role in semantic associations [Chou et al., 2006]. We believe this explanation is less likely, because semantic processing usually implicates a more anterior region of the left inferior frontal gyrus than the one we observed. Nonetheless, our paradigm implicitly involves a decision about the semantic content (or lack thereof) of each stimulus, since every stimulus has all the phonological features of a potential real word. Our experiment was designed so that this search for semantic content should be constant across all stimuli, but the search process may still have changed across stimulus repetitions (much as a child learning a new word may at first treat it as a “pseudoword”).

In the future, it would be especially valuable to examine the time course of repetition‐related plasticity in the systems we have studied here. In various learning tasks, some performance gains appear at the behavioral and neural levels only some time after practice [Karni and Sagi, 1993; Ungerleider et al., 2002]. Some gains in performance on our task may likewise emerge only after such a consolidation period, perhaps only after sleep [Maquet et al., 2003]. Such additional changes could provide stronger evidence for the mechanisms of plasticity in vocal learning that we have outlined here.

Acknowledgements

We thank the participants in this study; Peter Hobden and Clare Mackay for assistance with scanning; Paul Matthews for his support; and Joseph Devlin for useful comments on the manuscript. The study was conducted as part of the MSc in Neuroscience course at the University of Oxford.

REFERENCES

- Ackermann H, Vogel M, Petersen D, Poremba M (1992): Speech deficits in ischaemic cerebellar lesions. J Neurol 239: 223–227.

- Alexander MP, Naeser MA, Palumbo C (1990): Broca's area aphasias: Aphasia after lesions including the frontal operculum. Neurology 40: 353–362.

- Baddeley A (1992): Working memory. Science 255: 556–559.

- Bartha L, Benke T (2003): Acute conduction aphasia: An analysis of 20 cases. Brain Lang 85: 93–108.

- Beckmann CF, Jenkinson M, Smith SM (2003): General multilevel linear modeling for group analysis in FMRI. Neuroimage 20: 1052–1063.

- Bonilha L, Moser D, Rorden C, Baylis GC, Fridriksson J (2006): Speech apraxia without oral apraxia: Can normal brain function explain the physiopathology? Neuroreport 17: 1027–1031.

- Breitenstein C, Jansen A, Deppe M, Foerster AF, Sommer J, Wolbers T, Knecht S (2005): Hippocampus activity differentiates good from poor learners of a novel lexicon. Neuroimage 25: 958–968.

- Burton MW, Locasto PC, Krebs‐Noble D, Gullapalli RP (2005): A systematic investigation of the functional neuroanatomy of auditory and visual phonological processing. Neuroimage 26: 647–661.

- Catani M, Jones DK, ffytche DH (2005): Perisylvian language networks of the human brain. Ann Neurol 57: 8–16.

- Chou TL, Booth JR, Bitan T, Burman DD, Bigio JD, Cone NE, Lu D, Cao F (2006): Developmental and skill effects on the neural correlates of semantic processing to visually presented words. Hum Brain Mapp 27: 915–924.

- Cohen L, Jobert A, Le Bihan D, Dehaene S (2004): Distinct unimodal and multimodal regions for word processing in the left temporal cortex. Neuroimage 23: 1256–1270.

- Damasio H, Damasio AR (1980): The anatomical basis of conduction aphasia. Brain 103: 337–350.

- Dehaene‐Lambertz G, Dehaene S, Anton JL, Campagne A, Ciuciu P, Dehaene GP, Denghien I, Jobert A, Lebihan D, Sigman M, Pallier C, Poline JB (2006): Functional segregation of cortical language areas by sentence repetition. Hum Brain Mapp 27: 360–371.

- Desimone R (1996): Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci USA 93: 13494–13499.

- Devlin JT, Matthews PM, Rushworth MF (2003): Semantic processing in the left inferior prefrontal cortex: A combined functional magnetic resonance imaging and transcranial magnetic stimulation study. J Cogn Neurosci 15: 71–84.

- Doyon J, Song AW, Karni A, Lalonde F, Adams MM, Ungerleider LG (2002): Experience‐dependent changes in cerebellar contributions to motor sequence learning. Proc Natl Acad Sci USA 99: 1017–1022.

- Dronkers NF (1996): A new brain region for coordinating speech articulation. Nature 384: 159–161.

- Fiez JA, Petersen SE, Cheney MK, Raichle ME (1992): Impaired non‐motor learning and error detection associated with cerebellar damage. A single case study. Brain 115 (Part 1): 155–178.

- Friston KJ, Frith CD, Passingham RE, Liddle PF, Frackowiak RS (1992): Motor practice and neurophysiological adaptation in the cerebellum: A positron tomography study. Proc Biol Sci 248: 223–228.

- Friston KJ, Fletcher P, Josephs O, Holmes A, Rugg MD, Turner R (1998): Event‐related fMRI: Characterizing differential responses. Neuroimage 7: 30–40.

- Frith C, Kapur N, Friston KJ, Liddle PF, Frackowiak RS (1995): Regional cerebral activity associated with the incidental processing of pseudo‐words. Hum Brain Mapp 3: 153–160.

- Gasparini M, Di Piero V, Ciccarelli O, Cacioppo MM, Pantano P, Lenzi GL (1999): Linguistic impairment after right cerebellar stroke: A case report. Eur J Neurol 6: 353–356.

- Gathercole SE, Baddeley A (1996): The Children's Test of Nonword Repetition. London: Psychological Corp.

- Gathercole SE, Willis CS, Baddeley AD, Emslie H (1994): The Children's Test of Nonword Repetition: A test of phonological working memory. Memory 2: 103–127.

- Gathercole SE, Hitch GJ, Service E, Martin AJ (1997): Phonological short‐term memory and new word learning in children. Dev Psychol 33: 966–979.

- Gerloff C, Corwell B, Chen R, Hallett M, Cohen LG (1997): Stimulation over the human supplementary motor area interferes with the organization of future elements in complex motor sequences. Brain 120 (Part 9): 1587–1602.

- Glover GH (1999): Deconvolution of impulse response in event‐related BOLD fMRI. Neuroimage 9: 416–429.

- Gough PM, Nobre AC, Devlin JT (2005): Dissociating linguistic processes in the left inferior frontal cortex with transcranial magnetic stimulation. J Neurosci 25: 8010–8016.

- Grill‐Spector K, Henson R, Martin A (2006): Repetition and the brain: Neural models of stimulus‐specific effects. Trends Cogn Sci 10: 14–23.

- Harrington DL, Haaland KY, Yeo RA, Marder E (1990): Procedural memory in Parkinson's disease: Impaired motor but not visuoperceptual learning. J Clin Exp Neuropsychol 12: 323–339.

- Hasson U, Nusbaum HC, Small SL (2006): Repetition suppression for spoken sentences and the effect of task demands. J Cogn Neurosci 18: 2013–2029.

- Hillis AE, Work M, Barker PB, Jacobs MA, Breese EL, Maurer K (2004): Re‐examining the brain regions crucial for orchestrating speech articulation. Brain 127 (Part 7): 1479–1487.

- Jarvis ED (2004): Learned birdsong and the neurobiology of human language. Ann NY Acad Sci 1016: 749–777.

- Jenkinson M, Smith S (2001): A global optimisation method for robust affine registration of brain images. Med Image Anal 5: 143–156.

- Jenkinson M, Bannister P, Brady M, Smith S (2002): Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage 17: 825–841.

- Jonas S (1981): The supplementary motor region and speech emission. J Commun Disord 14: 349–373.

- Karni A, Sagi D (1993): The time course of learning a visual skill. Nature 365: 250–252.

- Klein D, Zatorre RJ, Milner B, Meyer E, Evans AC (1994): Left putaminal activation when speaking a second language: Evidence from PET. Neuroreport 5: 2295–2297.

- Klein D, Watkins KE, Zatorre RJ, Milner B (2006): Word and nonword repetition in bilingual subjects: A PET study. Hum Brain Mapp 27: 153–161.

- Konishi M (1965): The role of auditory feedback in the control of vocalization in the white‐crowned sparrow. Z Tierpsychol 22: 770–783.

- Konishi M (2004): The role of auditory feedback in birdsong. Ann NY Acad Sci 1016: 463–475.

- Lee D, Quessy S (2003): Activity in the supplementary motor area related to learning and performance during a sequential visuomotor task. J Neurophysiol 89: 1039–1056.

- Lu L, Leonard C, Thompson P, Kan E, Jolley J, Welcome S, Toga A, Sowell E (2007): Normal developmental changes in inferior frontal gray matter are associated with improvement in phonological processing: A longitudinal MRI analysis. Cereb Cortex 17: 1092–1099.

- Maquet P, Schwartz S, Passingham R, Frith C (2003): Sleep‐related consolidation of a visuomotor skill: Brain mechanisms as assessed by functional magnetic resonance imaging. J Neurosci 23: 1432–1440.

- Miller EK, Desimone R (1994): Parallel neuronal mechanisms for short‐term memory. Science 263: 520–522.

- Mushiake H, Strick PL (1995): Pallidal neuron activity during sequential arm movements. J Neurophysiol 74: 2754–2758.

- Nixon P, Lazarova J, Hodinott‐Hill I, Gough P, Passingham R (2004): The inferior frontal gyrus and phonological processing: An investigation using rTMS. J Cogn Neurosci 16: 289–300.

- Orfanidou E, Marslen‐Wilson WD, Davis MH (2006): Neural response suppression predicts repetition priming of spoken words and pseudowords. J Cogn Neurosci 18: 1237–1252.

- Pastor MA, Artieda J, Arbizu J, Marti‐Climent JM, Penuelas I, Masdeu JC (2002): Activation of human cerebral and cerebellar cortex by auditory stimulation at 40 Hz. J Neurosci 22: 10501–10506.

- Petacchi A, Laird AR, Fox PT, Bower JM (2005): Cerebellum and auditory function: An ALE meta‐analysis of functional neuroimaging studies. Hum Brain Mapp 25: 118–128.

- Petersen SE, Fox PT, Posner MI, Mintun M, Raichle ME (1988): Positron emission tomographic studies of the cortical anatomy of single‐word processing. Nature 331: 585–589.

- Poldrack RA (2000): Imaging brain plasticity: Conceptual and methodological issues—A theoretical review. Neuroimage 12: 1–13.

- Poldrack RA, Prabhakaran V, Seger CA, Gabrieli JD (1999a): Striatal activation during acquisition of a cognitive skill. Neuropsychology 13: 564–574.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD (1999b): Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage 10: 15–35.

- Price CJ, Wise RJ, Warburton EA, Moore CJ, Howard D, Patterson K, Frackowiak RS, Friston KJ (1996): Hearing and saying. The functional neuro‐anatomy of auditory word processing. Brain 119 (Part 3): 919–931.

- Pulvermuller F (2005): Brain mechanisms linking language and action. Nat Rev Neurosci 6: 576–582.

- Pulvermuller F, Shtyrov Y, Ilmoniemi R (2003): Spatiotemporal dynamics of neural language processing: An MEG study using minimum‐norm current estimates. Neuroimage 20: 1020–1025.

- Pulvermuller F, Hauk O, Nikulin VV, Ilmoniemi RJ (2005): Functional links between motor and language systems. Eur J Neurosci 21: 793–797.

- Pulvermuller F, Huss M, Kherif F, Moscoso del Prado Martin F, Hauk O, Shtyrov Y (2006): Motor cortex maps articulatory features of speech sounds. Proc Natl Acad Sci USA 103: 7865–7870.

- Raichle ME, Fiez JA, Videen TO, MacLeod AM, Pardo JV, Fox PT, Petersen SE (1994): Practice‐related changes in human brain functional anatomy during nonmotor learning. Cereb Cortex 4: 8–26.

- Rizzolatti G, Luppino G (2001): The cortical motor system. Neuron 31: 889–901.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman‐Rakic PS, Rauschecker JP (1999): Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci 2: 1131–1136.

- Ruschemeyer SA, Zysset S, Friederici AD (2006): Native and non‐native reading of sentences: An fMRI experiment. Neuroimage 31: 354–365.

- Smith S (2002): Fast robust automated brain extraction. Hum Brain Mapp 17: 143–155.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen‐Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM (2004): Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23 (Suppl 1): S208–S219.

- Sokolov A (1972): Inner Speech and Thought. New York: Plenum.

- Ungerleider LG, Doyon J, Karni A (2002): Imaging brain plasticity during motor skill learning. Neurobiol Learn Mem 78: 553–564.

- van Turennout M, Ellmore T, Martin A (2000): Long‐lasting cortical plasticity in the object naming system. Nat Neurosci 3: 1329–1334.

- van Turennout M, Bielamowicz L, Martin A (2003): Modulation of neural activity during object naming: Effects of time and practice. Cereb Cortex 13: 381–391.

- Vargha‐Khadem F, Watkins KE, Price CJ, Ashburner J, Alcock KJ, Connelly A, Frackowiak RS, Friston KJ, Pembrey ME, Mishkin M, Gadian DG, Passingham RE (1998): Neural basis of an inherited speech and language disorder. Proc Natl Acad Sci USA 95: 12695–12700.

- Wagner AD, Koutstaal W, Maril A, Schacter DL, Buckner RL (2000): Task‐specific repetition priming in left inferior prefrontal cortex. Cereb Cortex 10: 1176–1184.

- Watkins K, Paus T (2004): Modulation of motor excitability during speech perception: The role of Broca's area. J Cogn Neurosci 16: 978–987.

- Watkins KE, Dronkers NF, Vargha‐Khadem F (2002a): Behavioural analysis of an inherited speech and language disorder: Comparison with acquired aphasia. Brain 125 (Part 3): 452–464.

- Watkins KE, Vargha‐Khadem F, Ashburner J, Passingham RE, Connelly A, Friston KJ, Frackowiak RS, Mishkin M, Gadian DG (2002b): MRI analysis of an inherited speech and language disorder: Structural brain abnormalities. Brain 125 (Part 3): 465–478.

- Watkins KE, Strafella AP, Paus T (2003): Seeing and hearing speech excites the motor system involved in speech production. Neuropsychologia 41: 989–994.

- Wheeldon LR, Monsell S (1992): The locus of repetition priming of spoken word production. Q J Exp Psychol A 44: 723–761.

- Wildgruber D, Ackermann H, Grodd W (2001): Differential contributions of motor cortex, basal ganglia, and cerebellum to speech motor control: Effects of syllable repetition rate evaluated by fMRI. Neuroimage 13: 101–109.

- Wilson SM, Saygin AP, Sereno MI, Iacoboni M (2004): Listening to speech activates motor areas involved in speech production. Nat Neurosci 7: 701–702.

- Wise RJ, Greene J, Buchel C, Scott SK (1999): Brain regions involved in articulation. Lancet 353: 1057–1061.

- Woolrich MW, Behrens TE, Beckmann CF, Jenkinson M, Smith SM (2004): Multilevel linear modelling for FMRI group analysis using Bayesian inference. Neuroimage 21: 1732–1747.

- Yetkin FZ, Hammeke TA, Swanson SJ, Morris GL, Mueller WM, McAuliffe TL, Haughton VM (1995): A comparison of functional MR activation patterns during silent and audible language tasks. AJNR Am J Neuroradiol 16: 1087–1092.

- Yetkin O, Zerrin Yetkin F, Haughton VM, Cox RW (1996): Use of functional MR to map language in multilingual volunteers. AJNR Am J Neuroradiol 17: 473–477.

- Young MP, Scannell JW, Burns GA, Blakemore C (1994): Analysis of connectivity: Neural systems in the cerebral cortex. Rev Neurosci 5: 227–250.

- Ziegler W, Kilian B, Deger K (1997): The role of the left mesial frontal cortex in fluent speech: Evidence from a case of left supplementary motor area hemorrhage. Neuropsychologia 35: 1197–1208.