Abstract

Investigations of the neural correlates of face recognition have typically used old/new paradigms where subjects learn to recognize new faces or identify famous faces. Familiar faces, however, include one's own face, partner's and parents' faces. Using event‐related fMRI, we examined the neural correlates of these personally familiar faces. Ten participants were presented with photographs of own, partner, parents, famous and unfamiliar faces and responded to a distinct target. Whole brain, two regions of interest (fusiform gyrus and cingulate gyrus), and multiple linear regression analyses were conducted. Compared with baseline, all familiar faces activated the fusiform gyrus; own faces also activated occipital regions and the precuneus; partner faces activated similar areas and, in addition, the parahippocampal gyrus, middle and superior temporal gyri and middle frontal gyrus. Compared with unfamiliar faces, only personally familiar faces activated the cingulate gyrus, and the extent of activation varied with face category. Partner faces also activated the insula, amygdala and thalamus. Regions of interest analyses and laterality indices showed anatomical distinctions in the processing of personally familiar faces within the fusiform and cingulate gyri. Famous faces were right lateralized whereas personally familiar faces, particularly partner and own faces, elicited bilateral activations. Regression analyses showed that experiential predictors modulated neural activity related to own and partner faces. Thus, personally familiar faces activated the core visual areas and extended frontal regions related to semantic and person knowledge, and the extent and areas of activation varied with face type. Hum Brain Mapp, 2009. © 2008 Wiley‐Liss, Inc.

Keywords: face processing, fMRI, partner, parents, own, familiar faces

INTRODUCTION

Considerable progress has been made in our understanding of face processing over the last years through a variety of functional neuroimaging investigations. That faces are particular visual stimuli has been proposed for many years [Bodamer,1947; Charcot,1883; Wilbrand,1892], but recent technological advances have allowed the identification of the neural substrates of face processing, leading to detailed anatomo‐functional models [Allison et al.,2000; Gobbini and Haxby,2007; Haxby et al.,2000].

Although most neuroimaging face processing studies have used faces unfamiliar to the participants to preclude effects of familiarity, others have focused precisely on the effect of familiarity. The latter use either old/new paradigms, in which participants have to recognize faces they have just learned (“old faces”) among distractors (“new faces”) [e.g., Dubois et al.,1999; Ishai and Yago,2006], or ask participants to recognize famous among unknown faces [e.g., Elfgren et al.,2006; Gobbini et al.,2004; Ishai et al.,2005]. Fewer studies on familiar face processing have used stimuli with the ecological validity of being personally familiar.

Personally familiar faces can range from casual acquaintances to colleagues, close family, and lovers to one's own face, a highly familiar face. All personally familiar faces have associations with an accumulation of experiences and social interactions. As they are processed frequently and repeatedly, in a range of conditions, this extensive experience is likely reflected in neural processes [Balas et al.,2007]. We wished to determine whether personally familiar faces are processed similarly to unfamiliar faces and whether there are distinct neural activation patterns across the different types of personally familiar faces.

Face Perception and Identification

In their behavioural cognitive model, Bruce and Young [1986] described the putative stages required for face identification, postulating that a face is first recognized as known and is then matched to a particular individual, before retrieval of multimodal and semantic knowledge about the person. This model has proven very useful and has been updated with recent data [Breen et al.,2001; Schweinberger and Burton,2003]. Face identification in these general models is thought to rely on a posterior‐to‐anterior ventral‐temporal axis, predominant in the right hemisphere [De Renzi et al.,1994; Kanwisher et al.,1997; McCarthy et al.,1999; Puce et al.,1996; Rossion et al.,2000], with posterior regions processing visuospatial aspects of faces and anterior regions processing integrated modality‐independent and social aspects of faces. Early processing of facial features is associated with activation of the inferior occipital gyri [Haxby et al.,2000]. Information is then proposed to follow two main pathways: one through the superior temporal sulcus, specialized in changeable aspects of faces such as eye gaze, expression, and lip movement [e.g., Allison et al.,2000]; the other through the fusiform gyrus up to the anterior temporal pole, a pathway specialized for face identification and multi‐modal integration with voice, name and biographical elements [Gorno‐Tempini et al.,2001; Joubert et al.,2004,2006; Leveroni et al.,2000; Paller et al.,2000]. The model of Haxby et al. [2000] was recently revised to include recognition of personally familiar faces [Gobbini and Haxby,2007]; the authors proposed that these faces recruit a distributed network including areas of visual processing such as the fusiform and inferior occipital gyri and posterior superior temporal sulcus as well as areas associated with both person knowledge and emotion.
They suggest that full recognition of a person includes not only recognizing the visual appearance and retrieving biographical information, but also retrieving personal traits, attitude, mental states and intentions, involving areas such as the anterior paracingulate, the posterior superior temporal sulcus and the precuneus. Lastly, they argued that social interactions and familiarity recognition modulate the emotional response to familiar faces, implicating areas such as the insula and amygdala. Thus, face recognition depends on a network of brain areas [Barbeau et al.,2008; Ishai,2008; Leveroni et al.,2000]; we hypothesize that aspects of this network are modulated according to the type of face viewed.

Identification of Personally Familiar Faces

Most of us are able to recognize hundreds of faces; however, personally familiar faces share extended exposure and increased person knowledge attained through social interactions. Although differences in personal relevance of these faces are important, there are also obvious distinctions among these familiar faces. For example, parents' faces are the first to be learned and recognized in infancy, thereby having personal relevance for the longest period of time. They are the visual stimuli stored when visual and memory systems were in the earliest stages of maturation, and parents' faces undergo steady updating with age. For most couples, a partner's face is one of the faces seen most often: every morning, every evening and at weekends. A partner's face also holds emotional valence and person knowledge attributed to personal closeness. Along the same lines, one's own face is seen as a mirrored reflection many times a day, often because we need to act on it (e.g., for brushing teeth, shaving, combing hair) and it may be a cue for self‐knowledge or awareness, a special kind of person knowledge.

Behavioural studies

Behavioural investigations have examined possible hemispheric asymmetries in processing personally familiar faces. Some authors have found a right‐hemisphere advantage for one's own face [Keenan et al.,1999,2000], whereas others found a left‐hemisphere advantage [Brady et al.,2004]. When viewing one's own face was compared with viewing other familiar faces such as colleagues or famous faces, the reverse hemispheric effect was found [Brady et al.,2004; Keenan et al.,1999]. However, some of these studies used mirror images of the participants' own faces whereas others did not, which may affect lateralization of own face processing. In split‐brain patients, the initial studies by Sperry et al. [1979] found that both hemispheres were able to recognize one's self‐image, whereas Turk et al. [2002] found a left hemisphere advantage and Keenan et al. [2003] found a right hemisphere advantage. As the results from these various studies are contradictory, research using controlled protocols is warranted.

Neuroimaging studies

A few recent PET and fMRI studies have investigated possible differences in the neural substrate underlying processing of personally familiar faces, although none investigated the processing of parents' faces (except Gobbini et al. [2004] but combined with other personally familiar faces). Investigations of mothers viewing photographs of their child found differential brain activations in occipito‐temporal areas and the fusiform gyrus as well as orbito‐frontally, that correlated with mothers' mood, reflecting positive emotional arousal related to their children's faces [Nitschke et al.,2004]. In another report, increased activation was found in mothers viewing their own child (compared with their child's friends) in the amygdala, the insula and the superior temporal sulcus and anterior paracingulate gyrus, areas thought to reflect emotional response, attachment, and empathy [Leibenluft et al.,2004].

In studies of viewing one's own face, some report increased activity of the left fusiform gyrus [Kircher et al.,2001; Sugiura et al.,2000,2005], while the right fusiform gyrus usually shows increased activity to other face stimuli. In contrast, Platek et al. [2004] and Devue et al. [2007] identified a right‐sided network for own faces. A later study found a bilateral network of brain areas associated with subjects' own faces [Platek et al.,2006], which was thought to be similar to the network engaged in self‐awareness and the executive aspects of own face processing. Uddin et al. [2005] showed participants digital morphs between their own face and a familiar face and found that activation of the right inferior parietal lobule, inferior frontal gyrus and inferior occipital gyrus increased as the stimuli contained more of one's own face and less of the familiar face. Thus, according to the above studies, viewing one's own face could trigger a strongly lateralized “self” network, but with no consensus as to whether it is right or left lateralized.

In contrast to one's parents' and own face, one's spouse or partner's face should activate areas associated with previous romantic, emotional and social interactions. Two functional imaging studies examined neural responses in intensely “in love” young adults with mean relationship lengths of 0.61 years [Fisher et al.,2005] and 2.4 years [Bartels and Zeki,2000]. Fisher et al. [2005] found increased activation in the right ventral tegmental area and right posterior‐dorsal body of the caudate nucleus; some of their participants also showed activity in the right insula, and right anterior and posterior cingulate cortex. The latter findings are in general agreement with the study of Bartels and Zeki [2000], who found activity bilaterally in the medial insula, the anterior cingulate, the caudate nucleus, and the putamen. Kircher et al. [2001] found higher activity in the right insula for partner faces. Bartels and Zeki [2000] also reported deactivation in the cingulate gyrus, the amygdala as well as prefrontal, parietal, and middle temporal cortex. Overall, these studies suggest that partners' faces in early stages of a relationship activate areas in the limbic system [Fisher et al.,2005], which include areas associated with person knowledge at later stages in a relationship [Bartels and Zeki,2000]. Relationships, however, last much longer; thus to fully characterize processing related to partners/spouses, it is essential to investigate the neural correlates of partner faces well past the stage of the initial “in love” period.

Although we readily recognize famous faces such as politicians or actors, we do not typically have personal experience with them. This is likely reflected in the processing of famous faces, which only implicates the inferior occipital and fusiform gyri, the superior temporal sulcus bilaterally and the amygdala [Ishai et al.,2002]. However, there is disagreement whether right or left hemisphere activation to famous faces is stronger [Denkova et al.,2006; Eger et al.,2005; Ishai et al.,2005; Pourtois et al.,2005].

Thus, a number of functional imaging studies have investigated the neural substrates of personally familiar faces, but little convergence is found among the reported results, perhaps due to dissimilar protocols. The marked discrepancies suggest that there are variables such as the types of personally familiar faces, which could affect the results.

Here we wished to answer the following questions: (i) are personally familiar faces processed with the same functional mechanisms as unfamiliar faces? and (ii) are there activation differences across types of personally familiar faces? Brain responses to the presentation of parents, partner, own and famous faces were compared with unknown faces. We expected that personally familiar faces would produce wider activation patterns than unfamiliar faces, particularly including frontal structures. Unlike one's own face and parents' faces, partner faces were expected to recruit areas associated with emotional control, such as the insula and the amygdala.

METHODS

Participants

We studied 10 participants (4 males; mean age 35.4 years, ±7.7 years SD) who met the following criteria: they had lived with their partner for at least 2 years (average relationship length 9.1 years, ±5.01 years), they grew up in the same house as both parents, both of whom were still alive, and they could provide recent high quality photographs of themselves, their partner and their parents.

Material

Stimuli

Each subject provided digitized photographs of his/her partner's, parents' and own face, following a standardized protocol controlling for neutrality (no emotion on the face), gaze direction (looking straight at the camera), and lighting conditions. The subject's own face was reversed right/left, as when viewing one's face in a mirror. All subjects also provided a short list of famous people, men and women, whom they would easily recognize from a picture, from which we chose two (a male and a female). We also included photographs of two unfamiliar people (male and female). Thus, the set of eight photographs was unique for each subject. The set was processed to remove all information unrelated to the faces themselves (background, paraphernalia, etc.). The photographs were converted to grayscale. Figure 1 shows an example of a set of photographs for a single subject. A “ghost” image was prepared for each set by superimposing all photographs and adjusting levels of transparencies. Stimuli were repeated such that at least 40 trials of each face type (parent, partner, own, famous, unfamiliar, and scrambled) were presented. The order was pseudo‐random such that one stimulus type did not repeat without at least four intervening faces. The faces were presented for 500 ms and the ISI was jittered between 1.7 and 2.0 s. The ghost image occurred with a probability of 8% and was included to maintain subjects' attention. Participants were asked to focus on the photographs and press a button in response to the rare “ghost” image.
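The ordering constraint described above (no stimulus type repeating without at least four intervening faces) can be illustrated with a short Python sketch. The procedure actually used to build the sequences is not described, so the block‐wise rejection sampling below is only one possible approach, swapped in for illustration. The names `FACE_TYPES`, `next_block`, `make_sequence` and `jittered_isis` are hypothetical, and the rare ghost trials are left out for brevity.

```python
import random

# Six face types, as listed in the text; names are illustrative labels only.
FACE_TYPES = ["parent", "partner", "own", "famous", "unfamiliar", "scrambled"]
MIN_GAP = 4            # at least four intervening faces between repeats of a type
TRIALS_PER_TYPE = 40   # minimum number of trials per face type


def next_block(prev_block, rng):
    """Draw a shuffled block of all types that respects MIN_GAP across blocks."""
    n = len(FACE_TYPES)
    while True:
        block = rng.sample(FACE_TYPES, n)
        if prev_block is None:
            return block
        # Faces between two occurrences of a type: the remainder of the
        # previous block (n - 1 - j) plus the start of the new block (i).
        if all((n - 1 - prev_block.index(t)) + i >= MIN_GAP
               for i, t in enumerate(block)):
            return block


def make_sequence(seed=None):
    """Concatenate TRIALS_PER_TYPE constrained blocks into one trial order."""
    rng = random.Random(seed)
    sequence, prev = [], None
    for _ in range(TRIALS_PER_TYPE):
        prev = next_block(prev, rng)
        sequence.extend(prev)
    return sequence


def jittered_isis(n_trials, seed=None):
    """Inter-stimulus intervals drawn uniformly between 1.7 and 2.0 s."""
    rng = random.Random(seed)
    return [rng.uniform(1.7, 2.0) for _ in range(n_trials)]
```

Because every block contains each type exactly once, each type appears exactly 40 times. The block structure is more restrictive than the stated constraint requires, which is a simplification of this sketch rather than a property of the original design.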

Figure 1.

An example of the stimulus set used in the experiment for one subject. The task was to press a button each time the “ghost” (the superimposition of all faces) appeared.

Procedure

Participants gave informed written consent and the Research Ethics Board at the Hospital for Sick Children approved all procedures. After standard MR screening, participants completed the task in the scanner, followed by a short questionnaire including questions such as “How many minutes do you look at yourself (in a mirror) a day on average?” and “For how many years have you been with your partner?”.

All MR imaging was conducted on a 1.5T Signa Twin EXCITE3 scanner (GE Medical Systems, WI; software rev.12M4) with a standard quadrature head coil. Foam padding comfortably restricted head motion. A set of high‐resolution T1‐weighted 3D SPGR images covering the whole brain was acquired (TE/TR/α = 9 ms/4.2 ms/15°, 116 slices, voxel size = 1 × 1 × 1.5 mm³, 2 NEX, 7 min) as an anatomical reference prior to the acquisition of functional images. Functional images were acquired with a standard gradient‐recalled echo‐planar imaging sequence (TE/TR/α = 40 ms/2,000 ms/90°, voxel size = 3.75 × 3.75 × 5 mm³) over 27 contiguous axial slices with interleaved acquisition.

Face stimuli were displayed on MR compatible goggles (CinemaVision, Resonance Technology, CA). Subjects responded to ghost trials using an MR compatible keypad (Lumitouch, Photonics Control, CA). Stimuli were controlled and responses recorded using the software Presentation (Neurobehavioral Systems, CA) on a personal computer. A TTL trigger pulse from the scanner ensured stimulus synchronization with image acquisition.

Data processing and analyses

Data analyses were carried out in AFNI [Cox,1996], using motion correction, 8‐mm spatial blur (FWHM), signal intensity normalization for percent signal change and deconvolution using a fixed haemodynamic response function, for all subjects. Images were spatially normalized to the MNI N27 brain in Talairach stereotaxic space and resampled to 3‐mm cubic voxels. Group images were analyzed using a random effects analysis of variance. The results were thresholded at P < 0.01 [corrected for cluster size; Xiong et al.,1995] for the whole brain and for the regions of interest (ROIs) in fusiform and cingulate gyri. These ROIs were chosen as they were the only two regions activated by all personally familiar faces in the whole‐brain results. The fusiform gyrus was active in contrasts with the baseline, and the cingulate gyrus was active in contrasts with unfamiliar faces. The selection of ROI masks was anatomically based on the Talairach structural template defined in AFNI [MNI N27 brain in TLRC space; Eickhoff et al.,2007]. The probability map was thresholded at P = 0.01 (t = 3.25) for familiar faces versus baseline and P = 0.01 (t = 2.88) for personally familiar faces versus unknown faces. The ROI masks were then applied to the thresholded data. To control for multiple comparisons in the whole brain, fusiform and cingulate ROI analyses, we performed 1,000 Monte Carlo iterations at an uncorrected P‐value of 0.01 on the 43,349 voxels, 596 voxels, and 1,878 voxels in the respective masks. This yielded a minimum volume of 5.70 cc (210 voxels), 0.49 cc (18 voxels), and 1.6 cc (59 voxels) at a P‐value of 0.05 for the whole brain, the fusiform gyrus, and the cingulate gyrus, respectively. A laterality index (LI: [Left − Right]/[Left + Right]) of activated voxels was calculated for the whole brain and each ROI. LI > 0.20 was deemed left dominant and LI < −0.20 right dominant; values in between were considered bilateral.
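The laterality index and the cluster‐size arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis code used in the study; the function names and the `threshold` and `voxel_edge_mm` parameters are hypothetical, with defaults taken from the ±0.20 cut‐off and the 3‐mm cubic voxels stated in the text.

```python
def laterality_index(left_voxels, right_voxels):
    """LI = (Left - Right) / (Left + Right), computed on activated voxel counts."""
    total = left_voxels + right_voxels
    if total == 0:
        raise ValueError("LI is undefined when no voxels are activated")
    return (left_voxels - right_voxels) / total


def classify_laterality(li, threshold=0.20):
    """Label an LI value using the +/-0.20 cut-off given in the text."""
    if li > threshold:
        return "left"
    if li < -threshold:
        return "right"
    return "bilateral"


def cluster_volume_cc(n_voxels, voxel_edge_mm=3.0):
    """Volume in cc of a cluster of cubic voxels (1 cc = 1000 cubic mm)."""
    return n_voxels * voxel_edge_mm ** 3 / 1000.0
```

With 3‐mm cubic voxels (27 mm³ each), the 210‐, 18‐ and 59‐voxel thresholds correspond to about 5.67, 0.49 and 1.59 cc, in line with the rounded volumes reported above. An LI of −1.0 arises only when every activated voxel lies in the right hemisphere.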

Three multiple linear regression models were performed on activity related to own, partner, and parent faces based on the questionnaire. Predictors of the activity related to own faces included age, time spent looking at one's self in the mirror in minutes per day, and a rating out of 10 of how comfortable they were with their own face. Age, hours spent with partner per day and relationship length in years were the predictors for activity related to partner faces. Activity related to parent faces was regressed on age, time it would take to reach the parents' home, and days spent with parents per year. Similar to above, clusterwise multiple comparison controls were used at a P‐value of 0.01 yielding a minimum volume of 5.1 cc (188 voxels) for own faces, 2.2 cc (81 voxels) for partner faces, and 2.3 cc (85 voxels) for parent faces with a P‐value of 0.05.
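For readers unfamiliar with the approach, the structure of one such regression model (an intercept plus three questionnaire predictors, fitted to the activity of a single voxel or region across participants) can be sketched as follows. This is a plain ordinary least squares fit written in pure Python under our own assumptions; it is not the AFNI procedure used in the study, and `fit_ols` is a hypothetical helper name.

```python
def fit_ols(predictor_rows, activity):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    predictor_rows: one row per participant, e.g. (age, mirror_minutes, rating).
    activity: one value per participant (e.g. percent signal change).
    Returns [intercept, b1, b2, ...].
    """
    X = [[1.0] + [float(v) for v in row] for row in predictor_rows]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(x[i] * x[j] for x in X) for j in range(k)]
         + [sum(x[i] * y for x, y in zip(X, activity))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]
```

In a whole‐brain analysis the same fit would be repeated at every voxel, after which the resulting statistical map is thresholded and cluster‐corrected as described above. With only 10 participants and four fitted parameters, each model leaves six residual degrees of freedom.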

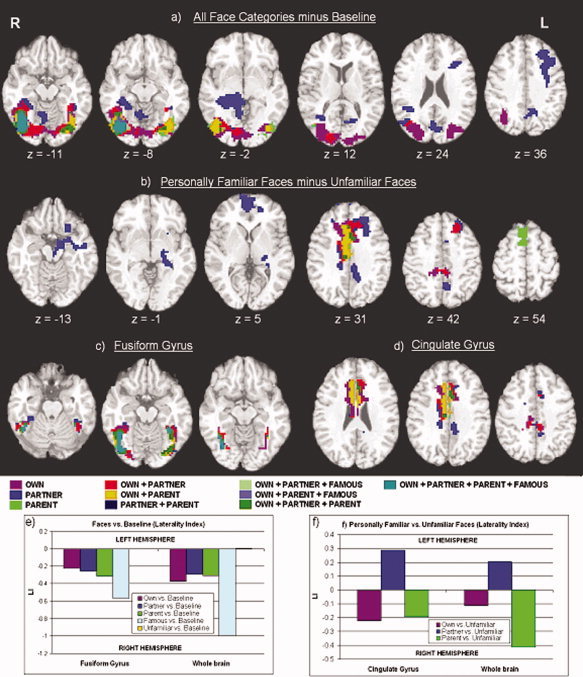

RESULTS

To examine the neural correlates of face type over the whole brain, each face type was compared to rest/baseline (the fixation cross; Table I) and unfamiliar faces (Table II). Based on the stringent cluster size threshold, the reported regions appear as part of clusters, which are equal to or larger than 210 voxels (Fig. 2a,b). Compared with baseline, partner faces activated bilateral (L/R) fusiform gyrus, right (R) lingual gyrus, cuneus (R), parahippocampal gyrus (R), middle temporal gyrus (R), superior temporal gyrus (R) and left (L) precuneus and middle frontal gyrus (L). Parent faces, compared to baseline, only activated the fusiform gyrus (L/R). Pictures of the participant's face (i.e., own face) activated fusiform gyrus (L/R), inferior occipital gyrus (L/R), lingual gyrus (L/R), cuneus (L/R) and precuneus (R). Famous faces activated the fusiform gyrus (R) and unfamiliar faces showed decreased activation of the anterior cingulate (L/R) and cingulate gyrus (L/R). The LI results over the whole brain showed that, compared to baseline, unfamiliar faces activation was bilateral (LI = 0.002; Fig. 2e). All other faces were right lateralized with partner faces the least and famous faces the most right lateralized [LI = −0.288 for partner; LI = −0.311 for parent; LI = −0.373 for own; and LI = −1.0 for famous faces].

Table I.

Areas of significant activation during recognition of all face types minus baseline

| Anatomical region | Brodmann area | Hemisphere | x | y | z | t‐value |
|---|---|---|---|---|---|---|
| Own faces minus baseline | | | | | | |
| Fusiform gyrus | BA 37 | R | 42 | −48 | −14 | 5.507 |
| | | L | −40 | −47 | −14 | 4.695 |
| Inferior occipital gyrus | BA 19 | R | 43 | −77 | −4 | 5.184 |
| | | L | −34 | −76 | −4 | 4.678 |
| Lingual gyrus | BA 18 | R | 17 | −78 | −3 | 3.641 |
| | | L | −5 | −79 | −3 | 3.837 |
| Cuneus | BA 17/18 | R | 24 | −86 | 13 | 4.640 |
| | | L | −22 | −82 | 18 | 4.339 |
| Precuneus | BA 7 | R | 20 | −70 | 41 | 3.821 |
| Partner's faces minus baseline | | | | | | |
| Fusiform gyrus | BA 37 | R | 47 | −45 | −16 | 5.262 |
| | | L | −38 | −46 | −16 | 6.417 |
| Lingual gyrus | BA 18 | R | 12 | −76 | −3 | 3.398 |
| | | L | −17 | −76 | −6 | 4.587 |
| Cuneus | BA 18 | R | 19 | −85 | 18 | 3.510 |
| Parahippocampal gyrus | BA 36 | R | 14 | 42 | −6 | 4.202 |
| Middle temporal gyrus | BA 39 | R | 45 | −64 | 20 | 5.209 |
| Superior temporal gyrus | BA 13 | R | 45 | −43 | 20 | 3.394 |
| Precuneus | BA 7 | L | −6 | −58 | 37 | 3.589 |
| Middle frontal gyrus | BA 9 | L | −37 | 26 | 29 | 4.664 |
| Parent faces minus baseline | | | | | | |
| Fusiform gyrus | BA 37 | R | 40 | −49 | −16 | 4.933 |
| | | L | −38 | −60 | −11 | 3.970 |
| Famous faces minus baseline | | | | | | |
| Fusiform gyrus | BA 37 | R | 40 | −55 | −14 | 4.329 |
| Unfamiliar faces minus baseline | | | | | | |
| Anterior cingulate | BA 32 | R | 7 | 30 | 25 | −4.217 |
| | | L | −3 | 33 | 24 | −3.837 |
| Cingulate gyrus | BA 24 | R | 6 | −10 | 28 | −3.772 |
| | | L | −5 | 13 | 30 | −4.754 |

Table II.

Areas of significant activation during recognition of personally familiar minus unfamiliar faces

| Anatomical region | Brodmann area | Hemisphere | x | y | z | t‐value |
|---|---|---|---|---|---|---|
| Own faces minus unfamiliar faces | | | | | | |
| Anterior cingulate | BA 32 | R | 3 | 35 | 20 | 3.930 |
| | | L | −1 | 36 | 20 | 3.857 |
| Cingulate gyrus | BA 32/24 | R | 7 | 18 | 30 | 3.946 |
| | | L | −1 | 20 | 30 | 3.716 |
| Medial frontal gyrus | BA 9 | R | 18 | 27 | 31 | 3.698 |
| | | L | −15 | 29 | 30 | 4.272 |
| Middle frontal gyrus | BA 46 | L | −38 | 36 | 17 | 3.342 |
| Partner's faces minus unfamiliar faces | | | | | | |
| Anterior cingulate | BA 32 | R | 5 | 33 | 24 | 4.133 |
| | | L | −3 | 31 | 24 | 3.377 |
| Cingulate gyrus | BA 32 | R | 5 | 31 | 29 | 3.639 |
| | | L | −2 | 31 | 28 | 4.032 |
| Medial frontal gyrus | BA 10 | R | 7 | 59 | 8 | 3.787 |
| | | L | −6 | 49 | 8 | 3.450 |
| Middle frontal gyrus | BA 8 | L | −23 | 36 | 38 | 4.279 |
| Inferior frontal gyrus | BA 47 | L | −22 | 17 | −14 | 4.074 |
| Middle temporal gyrus | BA 20/21 | L | −49 | −11 | −14 | 3.322 |
| Insula | BA 13 | L | −28 | 15 | −4 | 3.300 |
| Amygdala | BA 34 | L | −25 | −2 | −10 | 3.218 |
| Thalamus | | L | −20 | −26 | 5 | 3.303 |
| Parahippocampal gyrus | BA 34/28 | L | −14 | −9 | −14 | 3.314 |
| Precuneus | BA 7 | L | −6 | −56 | 41 | 3.375 |
| Parent faces minus unfamiliar faces | | | | | | |
| Cingulate gyrus | BA 32/24 | R | 5 | 26 | 30 | 3.659 |
| | | L | −1 | 21 | 30 | 3.901 |
| Superior frontal gyrus | BA 6/8 | R | 6 | 22 | 56 | 3.831 |

Figure 2.

Representation of the regions that showed significant BOLD responses for (a) contrast for all face categories > Baseline, (b) contrast for personally familiar faces > unfamiliar faces, (c) ROI for the fusiform gyrus for all face types, and (d) ROI for the cingulate gyrus for personally familiar faces. Laterality indices (LI) are presented on graphs (e) for all faces versus baseline in the fusiform gyrus and whole brain analysis and (f) for personally familiar versus unfamiliar faces in the cingulate gyrus and the whole brain analysis.

All personally familiar faces were contrasted with unfamiliar faces. Results showed that partner faces had the most extended activations, including the anterior cingulate (L/R), cingulate gyrus (L/R), medial frontal gyrus (L/R), middle frontal gyrus (L), inferior frontal gyrus (BA 47; L), middle temporal gyrus (L), parahippocampal gyrus (L), amygdala (L), insula (L), thalamus (L) and precuneus (L). The parent faces only activated the cingulate gyrus (L/R) and the superior frontal gyrus (BA 6; R). Own faces compared with unfamiliar faces activated the anterior cingulate (L/R), cingulate gyrus (L/R), medial frontal gyrus (L/R) and middle frontal gyrus (L). The LI results showed that compared with unfamiliar faces, partner faces were left lateralized (LI = 0.205), parent faces were right lateralized (LI = −0.413), and own faces were bilateral (LI = −0.112; Fig. 2f).

The ROI for the fusiform gyrus showed bilateral activation for personally familiar faces, while famous faces activated only the right fusiform (Fig. 2c). Activation associated with own and partner faces extended most anteriorly in both hemispheres, while own faces also extended into inferior parts of the fusiform gyrus. Activation related to parent faces occupied primarily central regions of the fusiform gyrus, overlapping with both own and partner faces. The laterality index showed that famous and all personally familiar face types showed higher voxel counts in the right fusiform (own, LI = −0.220; partner, LI = −0.251; parents, LI = −0.316; famous, LI = −0.566), with increasing asymmetry from own to famous faces (Fig. 2e).

The ROI analysis of the cingulate gyrus showed greater activation for personally familiar faces than unfamiliar faces, with the most extensive activation seen for own faces, and the least for parents (Fig. 2d). Parent faces activated mostly the right cingulate gyrus. Activation associated with own and partner faces extended most anteriorly in the left hemisphere, whereas own and parent faces extended most anteriorly in the right hemisphere. Activation of own and partner faces overlapped in posterior parts of the cingulate gyrus. The laterality index confirmed the initial observation of slightly more voxels activated in the right cingulate gyrus for parents' faces (LI = −0.194) and own face (LI = −0.222) and more voxels activated in the left cingulate gyrus for partner faces (LI = 0.288). Famous faces did not elicit significant activation in this area.

Significant predictors of activation related to partner faces were time spent daily with partner and relationship length, and included activity in right superior temporal gyrus and left insula (see Table III). The overall regression model was significant, suggesting that these predictors adequately explained the data. All predictors of own faces (age, time spent in front of a mirror, rating of own face) correlated significantly with medial frontal gyrus activation (Table III). However, the overall model did not fully explain the data, suggesting that additional predictor variables are needed to describe activation related to own faces. Activation elicited by parents' faces did not correlate with age, distance from parents, or days spent with parents per year.

Table III.

Areas of significant activation as a function of predictors for partner and own faces

| Predictor | Anatomical region | Direction | Brodmann area | Hemisphere | x | y | z | t‐value |
|---|---|---|---|---|---|---|---|---|
| Partner faces | | | | | | | | |
| Time spent together with partner daily | | | | | | | | |
| | Precentral gyrus | ↓ | BA 6 | R | 48 | −10 | 34 | −4.10 |
| | | | | L | −54 | −5 | 30 | −4.24 |
| | Inferior frontal gyrus | ↓ | BA 9 | R | 60 | 7 | 26 | −4.59 |
| | Postcentral gyrus | ↓ | BA 4 | L | −54 | −14 | 31 | −4.52 |
| Relationship length | | | | | | | | |
| | Precentral gyrus | ↓ | BA 42/43 | R | 56 | −10 | 12 | −4.17 |
| | | | | L | −55 | −10 | 13 | −4.83 |
| | Superior temporal gyrus | ↓ | BA 22 | R | 59 | 1 | 4 | −4.86 |
| | Transverse temporal gyrus | ↓ | BA 42 | R | 56 | −13 | 12 | −4.01 |
| | Insula | ↓ | BA 13 | L | −39 | −10 | 12 | −4.38 |
| Own faces | | | | | | | | |
| Age | | | | | | | | |
| | Paracentral lobule | ↓ | BA 6 | R | 7 | −29 | 64 | −6.23 |
| | Postcentral gyrus | ↓ | BA 3 | R | 28 | −29 | 63 | −5.01 |
| | Precuneus | ↓ | BA 7 | R | 4 | −47 | 59 | −4.31 |
| Time spent in front of mirror daily | | | | | | | | |
| | Medial frontal gyrus | ↑ | BA 9 | R | 10 | 48 | 23 | 4.22 |
| | | | | L | −3 | 50 | 18 | 4.53 |
| | Superior frontal gyrus | ↑ | BA 9 | L | −8 | 41 | 33 | 11.81 |
| Own face rating | | | | | | | | |
| | Superior frontal gyrus | ↑ | BA 9 | R | 6 | 56 | 23 | 5.08 |
| | | | | L | −3 | 57 | 24 | 6.74 |
| | Superior frontal gyrus | ↑ | BA 10 | R | 19 | 57 | 21 | 3.73 |
| | Medial frontal gyrus | ↑ | BA 9 | R | 2 | 48 | 21 | 4.81 |
| | | | | L | −3 | 48 | 21 | 4.03 |
| | Precuneus | ↑ | BA 7 | L | −4 | −53 | 61 | 4.45 |
| | Paracentral lobule | ↑ | BA 6 | R | 6 | −32 | 66 | 5.33 |
| | Postcentral gyrus | ↑ | BA 3 | R | 31 | −29 | 63 | 5.58 |

Arrows show the direction of correlation: ↑ is positive, ↓ is negative.

DISCUSSION

We examined the neural substrates associated with processing partner, parents and own faces, each in comparison with baseline and unknown faces. We found that personally familiar faces all activated the fusiform and the cingulate gyri, with other areas activated depending on the face. Notably, partner faces showed the most extensive activation. Parent faces, which have been known for the longest time, showed the least activation, an intriguing finding considering that one could expect these faces to trigger a network of brain areas involved in autobiographical memory. Laterality index analyses revealed that, in both whole brain and regional analyses, personally familiar faces were less right lateralized than famous faces. Compared with unfamiliar faces, partner faces were left lateralized while own faces were bilateral.

These results, the first to distinguish long‐term partner and parents face activations, indicate that the network model of familiar face processing [Gobbini and Haxby,2007; Haxby et al.,2000] is modulated according to the type of familiar face. These results contribute to our understanding of some features presented by prosopagnosic patients. These patients usually recognize the faces of close relatives better than other faces. This has usually been explained by higher frequency and deeper memory traces for these faces. However, these results suggest that it could be related to distinct networks which are lateralized differently for personally familiar faces than for other faces. Also, these findings make important predictions for future studies, particularly in neurophysiology, as components associated with face processing (e.g., N170‐P2) which are usually larger over the right hemisphere, should be modulated by whether the face is famous, the partner's or one's own face.

All Face Types Versus Baseline

As expected, activations elicited by all faces compared to a fixation cross recruited areas associated with the visual recognition of faces [e.g., McCarthy et al.,1997; Puce et al.,1996] such as the fusiform gyrus. The partner, parent, and own faces evoked bilateral activation in the fusiform gyrus; famous faces activation was significant only in the right hemisphere. Partner and own faces also activated bilateral lingual gyrus and cuneus; inferior occipital gyrus activation was only seen for own faces.

In addition to the core visual system [e.g., Gobbini and Haxby,2007], further areas were involved for processing partner and own faces; both activated the precuneus, but partner faces activated the left precuneus while own faces activated the right. In the face processing literature, the precuneus is associated with person knowledge processing [Gobbini and Haxby,2006,2007], but more generally the precuneus is linked with visuospatial analysis of objects [Faillenot et al.,1999], and a recognition network supporting retrieval [Nagahama et al.,1999; Reber et al.,2002]. Thus, the precuneus may well be involved in retrieval of person knowledge. The intriguing lateralizations may be attributed to the differences between person knowledge and self‐knowledge, and merit further study.

Partner faces also generated activation in the middle and superior temporal gyri, the parahippocampal gyrus, and the middle frontal gyrus (BA 9). The superior temporal gyrus is part of the network linked with the social cognition of faces [Allison et al.,2000]. The parahippocampal gyrus is involved in encoding visual information [e.g., Kirchhoff et al.,2000; Rombouts et al.,1999], which in face studies is related to the recognition of familiar faces [Barbeau et al.,2008; Leveroni et al.,2000] and memory retrieval of personal and social knowledge [Sugiura et al.,2005]. Memory retrieval is also likely to be supported by activity in the middle frontal gyrus, which has been linked with cognitive functions such as working memory and attention [e.g., Christoff and Gabrieli,2000; Petrides,1996]. Activation in this area has also been associated with personally familiar faces [Bartels and Zeki,2000; Gobbini and Haxby,2006; Platek et al.,2006]; we suggest that in this study, the middle frontal gyrus was involved in holding the person information in mind.

Thus, compared to baseline, all personally familiar and famous faces activated the core visual system for processing faces, with an interesting laterality differentiation in the precuneus in the comparisons of partner and own faces. Partner faces activated an extensive network of regions, likely linked with the mnemonic and semantic attributes of the partner.

Personally Familiar Versus Unfamiliar Faces

Partner, parent and own faces elicited significantly more cortical activation than unfamiliar faces. All personally familiar faces evoked activation in the cingulate gyrus bilaterally, while partner and own faces also activated the anterior cingulate bilaterally. The cingulate gyrus is a multimodal area [Turak et al.,2002]. In face processing studies it shows a stronger response to familiar faces [Platek et al.,2006] and self‐resembling faces [i.e., kin recognition; Platek et al.,2008], and it is likely involved in the integration of information elicited by the face [Devue et al.,2007]. Significant cingulate gyrus activation was evident in the comparisons between unfamiliar and personally familiar faces, but not with famous faces. Anterior cingulate regions are associated with emotional processing and posterior regions with cognitive processing [Bush et al.,2000]. The proposed emotional and cognitive subdivisions of the cingulate gyrus have reciprocal interconnections with areas including the amygdala and lateral prefrontal cortices, respectively [Bush et al.,2000], areas also activated by personally familiar faces in the current study. Therefore, given the link between the cingulate gyrus and person knowledge (including both emotional and semantic information), we suggest that the cingulate activation to personally familiar faces is related to shared experiences at a personal level.

In addition, partner and own faces elicited activation in bilateral medial frontal and left middle frontal gyri; the inferior frontal gyrus responded only to partner faces. Prefrontal regions underlie cognitive functions such as memory and attention, although the exact nature of their involvement remains a matter of debate. The middle frontal and medial frontal gyri have been linked to manipulation and monitoring of information effortfully held in mind [Christoff and Gabrieli,2000], while the inferior frontal gyrus (BA 47) is associated with processing syntax and speech [De Carli et al.,2007], as well as maintaining one or a few items in mind [Christoff and Gabrieli,2000]. The inferior frontal gyrus has also been related to processing of emotional stimuli and maintains strong connections with inferior temporal regions and limbic areas such as the parahippocampal gyrus [Petrides and Pandya,2002], linked to the active judgement of stimuli. Thus, as a partner's face is likely to evoke specific memories or events with some emotional overlay, we propose that prefrontal activation related to viewing a partner's face reflects these types of information being reviewed or monitored.

Besides the anterior cingulate and prefrontal activity, partner faces activated areas associated specifically with emotional processing or emotional valence [Britton et al.,2006] such as the insula, amygdala and thalamus. The amygdala has been shown to respond more strongly to faces than to pictures [Sergerie et al.,2008], and the reciprocal functional connections between the amygdala and the ventral prefrontal cortex impact cognitive judgement [Nomura et al.,2004] and the maturation of cognitive and affective control [Hare and Casey,2005]. Insula activity has been reported in response to partner faces in the early “in love” stage of a relationship [Bartels and Zeki,2000; Fisher et al.,2005] and has been associated with processing emotions as part of face recognition [Gobbini and Haxby,2007]. Thus, although our participants had experienced much longer relationships than participants in previous studies, the limbic activation is consistent with these other reports, and suggests some similarities between relationships that start well and those that last for many years.

The superior frontal gyrus was activated uniquely by parent faces. Previous face imaging studies have linked the superior frontal gyrus with a self‐awareness network [Platek et al.,2006], kin recognition [Platek et al.,2005], personal choice [Turk et al.,2004] and self‐related processing [Goldberg et al.,2006]. So why would parent faces activate the superior frontal gyrus more than any of the other faces? The right superior frontal gyrus has been implicated in a network of areas associated with facial resemblance, linked with detection of facial familiarity and kin recognition [Platek et al.,2005]. Parents would be associated with self‐awareness or self‐resemblance, as we all see similarities and differences between ourselves and our parents; these comparisons may contribute to our self‐image and self‐related processes and be evoked by seeing parents' photographs.

Overall, these findings suggest that the anterior cingulate and cingulate gyrus together with prefrontal activations may facilitate the integration and monitoring of personal information present in one's own face, parents' faces and partner's face, but not in unfamiliar faces. Compared to unfamiliar faces, own faces recruited areas primarily associated with person knowledge (or self‐knowledge), while partner and parent faces recruited areas associated with person knowledge, memory, emotional processing and self‐image.

ROI Analyses and Laterality Indices

Despite the fusiform gyrus being related to physiognomic processing of faces generally, regions of interest (ROI) analyses showed differences with personal familiarity. Areas in the mid‐fusiform, particularly in the right hemisphere, were activated for all faces, but the extent varied with the type of face (Fig. 2c). Partner and own faces activated the most anterior parts of the fusiform gyrus bilaterally, and own faces activation was also seen in superior areas in the left posterior fusiform. Parent faces activation was the most restricted, being similar to famous faces, except that it occupied central areas of the fusiform gyrus bilaterally, while activation to famous faces was mostly in the right central fusiform. Thus, the faces seen most often on a daily basis activated larger areas of the fusiform. This is interesting in light of developmental data which show that with increased skill or familiarity with faces between 12‐year‐olds and adults, the fusiform area activated becomes smaller [e.g., Passarotti et al.,2003]. Clearly, the personal significance of one's own and one's partner's face impacts processing from the early stages, producing distinct and special activation patterns.

The laterality index for the fusiform (Fig. 2e) suggested that compared to baseline, activation for all faces is strongly lateralized to the right, with the most lateralized being the famous faces and the least being own faces. A contrast of these patterns may have produced the apparent left‐lateralization reported in the literature for own faces. A laterality index of all faces compared to baseline was also performed for the whole brain, and results were very similar to the index generated for the fusiform gyrus. The similarity of the whole brain and fusiform gyrus laterality indices suggests that for faces, the laterality may be driven by activation in the fusiform gyrus. These results suggest that the accepted view that face processing relies predominantly on the right hemisphere may be modulated according to the type of face being processed.

All personally familiar faces activated large parts of the cingulate gyrus, compared to unfamiliar faces, and as with the fusiform, the ROI analyses showed that the extent differed with face type (see Fig. 2d). Own faces elicited the greatest spread of activation, sharing a large anterior area of right cingulate gyrus with parent faces and a large anterior area in the left with partner faces. Partner faces were represented more dorsally in both anterior and posterior areas of the cingulate gyrus. The cingulate cortex has been strongly linked with various cognitive functions related to self‐control or self‐reflection, and the larger activation to own faces is consistent with this. Also, the cingulate gyrus is associated with monitoring emotions and empathy, aspects likely invoked by the faces of parents or partners. The lateralization of cingulate gyrus activation may be related to the duration of the familiarity. Parents' and own faces have the longest extent of familiarity and tend to elicit more activation in the right cingulate. Partner's faces on the other hand are newer and are represented largely in the left cingulate. Investigating the effect of duration of familiarity requires further research; however, the design would be complicated, and it would be difficult to partial out duration of the relationship while controlling for personal familiarity.

Unlike the fusiform, the laterality effects in the cingulate were not closely linked to the whole‐brain results. For example, laterality indices showed that own faces were right lateralized in the cingulate gyrus, but the whole brain index suggested that own faces activate areas bilaterally (Fig. 2f). The latter is in agreement with a recent report that own face activations are sustained by a bilateral network [Platek et al.,2006]. The type or area of activation examined may lead to a more right‐ or left‐sided pattern and perhaps explain the discrepant laterality reports in the literature [e.g., Brady et al.,2004; Keenan et al.,2000].
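
As an illustration of how a laterality index of this kind can be computed, the following Python sketch uses a common definition, LI = (R − L)/(R + L), applied to counts of suprathreshold voxels in each hemisphere. The formula, the sign convention (positive values indicating right lateralization), the ±0.2 cut‐off for calling a pattern bilateral, and the voxel counts are all assumptions made for illustration; they are not values or procedures taken from the present study.

```python
def laterality_index(left: float, right: float) -> float:
    """Return (right - left) / (right + left).

    Assumed convention: +1 means activation only on the right,
    -1 only on the left, and 0 a perfectly balanced pattern.
    """
    total = left + right
    if total == 0:
        raise ValueError("no suprathreshold voxels in either hemisphere")
    return (right - left) / total


def classify(li: float, threshold: float = 0.2) -> str:
    """Label an index as 'right', 'left' or 'bilateral'.

    The 0.2 threshold is a conventional, hypothetical choice.
    """
    if li > threshold:
        return "right"
    if li < -threshold:
        return "left"
    return "bilateral"


if __name__ == "__main__":
    # Hypothetical voxel counts (left, right) for one region of interest.
    example_counts = {"condition_a": (10, 30), "condition_b": (22, 18)}
    for name, (left, right) in example_counts.items():
        li = laterality_index(left, right)
        print(f"{name}: LI = {li:+.2f} ({classify(li)})")
```

Because the index is normalized by total activation, it can be compared between a single region and the whole brain, which is the kind of comparison made above for the fusiform and cingulate gyri.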

Predictors of Activation Related to One's Own and Partner's Faces

Personal familiarity is based on many factors, which would include not only the length of the relationship, but also the time spent together on a daily basis; both of these factors were significantly related to activation elicited by partner's faces. As time spent together daily increased, activation in the inferior frontal, precentral, and postcentral gyri decreased. Christoff and Gabrieli [2000] found that activation in the inferior frontal gyrus was linked to monitoring items online; the present result suggests that increases in daily time together may reduce the need for effortful maintenance of information about the person. Similarly, activation in the right superior and transverse temporal gyri and the left insula decreased as relationship length increased. The insula is associated with processing of partners' faces [Bartels and Zeki,2000; Fisher et al.,2005] and perhaps the emotional component decreases as relationship length increases.

Predictors of activation related to one's own face included age, time spent looking at one's self in the mirror, and one's rating of one's own face. Activation in the right precuneus, paracentral lobule and postcentral gyrus decreased with age. In contrast, activation in the left precuneus increased with higher ratings of one's own face. This is an interesting distinction as it highlights a difference between a current reference (i.e., how one rates one's face at a point in time), which is represented in the left precuneus, and a reference across time from chronological age in the right precuneus. The precentral gyrus was previously linked with activation elicited by own faces, consistent with Morita et al. [2008], who suggested that this area is specifically involved in own‐face recognition, although the activation they reported was more lateral and inferior than in the present study. The superior frontal and medial frontal gyri (BA 9) activation increased as both the time one spends in front of the mirror and the rating of one's own face increased. A number of studies have shown that the prefrontal cortex, particularly on the right, is activated specifically under circumstances of self‐evaluation or self‐recognition [Fossati et al.,2003; Morita et al.,2008; Uddin et al.,2005].

Overall, evidence from regression analyses indicates that experiential predictors such as time spent with one's partner or looking at one's self in the mirror every day modulate neural activity.

CONCLUSIONS

This study determined neural responses to personally familiar faces and extends previous results to direct comparisons of several types of personally familiar faces, including partner, own and parent faces. Despite the small sample size, we present important data which support prior work and give preliminary results on the differentiation of the neural substrates of personally familiar faces. The current results provide new evidence on the brain areas implicated in processing parent faces, which were the only ones to exhibit activation in the superior frontal gyrus. Partner faces engaged the most extensive cortical areas including those associated with emotional processing and uniquely activated the inferior frontal gyrus. The pattern observed in the ROI analyses of the fusiform and cingulate gyri showed anatomical distinctions in processing partner, parent and own faces. Laterality indices suggested that fusiform gyrus activity, associated with the core visual system, is right lateralized. However, cingulate gyrus activation associated with extended and emotional processing was more complex; partner faces were left‐lateralized, own faces were right‐lateralized, and parent faces showed bilateral activity with a right bias. Activity related to personally familiar faces was modulated by factors such as time spent with one's partner. Our findings suggest that long‐term, repeated exposure and personal experiences impact the network of areas recruited for processing faces in core visual areas as well as extended cognitive and emotional systems. Activation within common areas, such as the fusiform and cingulate gyri, is additionally modulated by face type.

REFERENCES

- Allison T,Puce A,McCarthy G (2000): Social perception from visual cues: Role of the STS region. Trends Cogn Sci 4: 267–278.

- Balas B,Cox D,Conwell E (2007): The effect of real‐world personal familiarity on the speed of face information processing. PLoS ONE 2: e1223.

- Barbeau EJ,Taylor MJ,Regis J,Marquis P,Chauvel P,Liégeois‐Chauvel C (2008): Spatio‐temporal dynamics of face recognition. Cereb Cortex 18: 997–1009.

- Bartels A,Zeki S (2000): The architecture of the colour centre in the human visual brain: New results and a review. Eur J Neurosci 12: 172–193.

- Bodamer J (1947): Die Prosopagnosie. Archives fur Psychiatrie und Nervenkrankheiten 179: 6–53.

- Brady N,Campbell M,Flaherty M (2004): My left brain and me: A dissociation in the perception of self and others. Neuropsychologia 421: 156–161.

- Breen N,Caine D,Coltheart M (2001): Mirrored‐self misidentification: Two cases of focal onset dementia. Neurocase 7: 239–254.

- Britton JC,Phan KL,Taylor SF,Welsh RC,Berridge KC,Liberzon I (2006): Neural correlates of social and nonsocial emotions: An fMRI study. Neuroimage 31: 397–409.

- Bruce V,Young A (1986): Understanding face recognition. Br J Psychol 77: 305–327.

- Bush G,Luu P,Posner MI (2000): Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn Sci 4: 215–222.

- Charcot J (1883): Un cas de suppression brusque et isolée de la vision mentale des signes et des objets formes et couleurs. Progrés Médical 11: 568.

- Christoff K,Gabrieli JDE (2000): The frontopolar cortex and human cognition: Evidence for a rostro‐caudal hierarchical organisation of the human prefrontal cortex. Psychobiol 28: 168–186.

- Cox RW (1996): AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29: 162–173.

- De Carli D,Garreffa G,Colonnese C,Giulietti G,Labruna L,Briselli E,Ken S,Macri MA,Maraviglia B (2007): Identification of activated regions during a language task. Magn Reson Imaging 25: 933–938.

- De Renzi E,Perani D,Carlesimo GA,Silveri MC,Fazio F (1994): Prosopagnosia can be associated with damage confined to the right hemisphere‐an MRI and PET study and a review of the literature. Neuropsychologia 32: 893–902.

- Denkova E,Botzung A,Manning L (2006): Neural correlates of remembering/knowing famous people: An event‐related fMRI study. Neuropsychologia 44: 2783–2791.

- Devue C,Collette F,Balteau E,Degueldre C,Luxen A,Maquet P,Bredart S (2007): Here I am: The cortical correlates of visual self‐recognition. Brain Res 1143: 169–182.

- Dubois S,Rossion B,Schiltz C,Bodart JM,Michel C,Bruyer R,Crommelinck M (1999): Effect of familiarity on the processing of human faces. Neuroimage 9: 278–289.

- Eger E,Schweinberger SR,Dolan RJ,Henson RN (2005): Familiarity enhances invariance of face representations in human ventral visual cortex: fMRI evidence. Neuroimage 26: 1128–1139.

- Eickhoff SB,Paus T,Caspers S,Grosbras MH,Evans AC,Zilles K,Amunts K (2007): Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage 36: 511–521.

- Elfgren C,van Westen D,Passant U,Larsson EM,Mannfolk P,Fransson P (2006): fMRI activity in the medial temporal lobe during famous face processing. Neuroimage 30: 609–616.

- Faillenot I,Decety J,Jeannerod M (1999): Human brain activity related to the perception of spatial features of objects. Neuroimage 10: 114–124.

- Fisher H,Aron A,Brown LL (2005): Romantic love: An fMRI study of a neural mechanism for mate choice. J Comp Neurol 493: 58–62.

- Fossati P,Hevenor SJ,Graham SJ,Grady C,Keightley ML,Craik F,Mayberg H (2003): In search of the emotional self: An FMRI study using positive and negative emotional words. Am J Psychiatry 160: 1938–1945.

- Gobbini MI,Haxby JV (2006): Neural response to the visual familiarity of faces. Brain Res Bull 71: 76–82.

- Gobbini MI,Haxby JV (2007): Neural systems for recognition of familiar faces. Neuropsychologia 45: 32–41.

- Gobbini MI,Leibenluft E,Santiago N,Haxby JV (2004): Social and emotional attachment in the neural representation of faces. Neuroimage 22: 1628–1635.

- Goldberg II,Harel M,Malach R (2006): When the brain loses its self: Prefrontal inactivation during sensorimotor processing. Neuron 50: 329–339.

- Gorno‐Tempini M,Wenman R,Price C,Rudge P,Cipolotti L (2001): Identification without naming: A functional neuroimaging study of an anomic patient. J Neurol Neurosurg Psychiatry 70: 397–400.

- Hare TA,Casey BJ (2005): The neurobiology and development of cognitive and affective control. Cognit Brain Behav IX: 273–286.

- Haxby JV,Hoffman EA,Gobbini MI (2000): The distributed human neural system for face perception. Trends Cogn Sci 4: 223–233.

- Ishai A (2008): Let's face it: It's a cortical network. Neuroimage 40: 415–419.

- Ishai A,Yago E (2006): Recognition memory of newly learned faces. Brain Res Bull 71: 167–173.

- Ishai A,Haxby JV,Ungerleider LG (2002): Visual imagery of famous faces: Effects of memory and attention revealed by fMRI. Neuroimage 17: 1729–1741.

- Ishai A,Schmidt CF,Boesiger P (2005): Face perception is mediated by a distributed cortical network. Brain Res Bull 67: 87–93.

- Joubert S,Mauries S,Barbeau E,Ceccaldi M,Poncet M (2004): The role of context in remembering familiar persons: Insights from semantic dementia. Brain Cogn 55: 254–261.

- Joubert S,Felician O,Barbeau E,Ranjeva JP,Christophe M,Didic M,Poncet M,Ceccaldi M (2006): The right temporal lobe variant of frontotemporal dementia: Cognitive and neuroanatomical profile of three patients. J Neurol 253: 1447–1458.

- Kanwisher N,McDermott J,Chun MM (1997): The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311.

- Keenan JP,McCutcheon B,Freund S,Gallup GG Jr,Sanders G,Pascual‐Leone A (1999): Left hand advantage in a self‐face recognition task. Neuropsychologia 37: 1421–1425.

- Keenan JP,Wheeler MA,Gallup GG Jr,Pascual‐Leone A (2000): Self‐recognition and the right prefrontal cortex. Trends Cogn Sci 4: 338–344.

- Keenan JP,Wheeler M,Platek SM,Lardi G,Lassonde M (2003): Self‐face processing in a callosotomy patient. Eur J Neurosci 18: 2391–2395.

- Kircher TT,Senior C,Phillips ML,Benson PJ,Bullmore ET,Brammer M,Simmons A,Williams SC,Bartels M,David AS (2000): Towards a functional neuroanatomy of self processing: Effects of faces and words. Cogn Brain Res 10: 133–144.

- Kircher TT,Senior C,Phillips ML,Rabe‐Hesketh S,Benson PJ,Bullmore ET,Brammer M,Simmons A,Bartels M,David AS (2001): Recognizing one's own face. Cognition 78: B1–B15.

- Kirchhoff BA,Wagner AD,Maril A,Stern CE (2000): Prefrontal‐temporal circuitry for episodic encoding and subsequent memory. J Neurosci 20: 6173–6180.

- Leibenluft E,Gobbini MI,Harrison T,Haxby JV (2004): Mothers' neural activation in response to pictures of their children and other children. Biol Psychiatry 56: 225–232.

- Leveroni CL,Seidenberg M,Mayer AR,Mead LA,Binder JR,Rao SM (2000): Neural systems underlying the recognition of familiar and newly learned faces. J Neurosci 20: 878–886.

- McCarthy G,Puce A,Gore JC,Allison T (1997): Face‐specific processing in the human fusiform gyrus. J Cogn Neurosci 9: 605–610.

- McCarthy G,Puce A,Belger A,Allison T (1999): Electrophysiological studies of human face perception. II. Response properties of face‐specific potentials generated in occipitotemporal cortex. Cereb Cortex 9: 431–444.

- Morita T,Itakura S,Saito DN,Nakashita S,Harada T,Kochiyama T,Sadato N (2008): The role of the right prefrontal cortex in self‐evaluation of the face: A functional magnetic resonance imaging study. J Cogn Neurosci 20: 342–355.

- Nagahama Y,Okada T,Katsumi Y,Hayashi T,Yamauchi H,Sawamoto N,Toma K,Nakamura K,Hanakawa T,Konishi J,Fukuyama H,Shibasaki H (1999): Transient neural activity in the medial superior frontal gyrus and precuneus time locked with attention shift between object features. Neuroimage 10: 193–199.

- Nitschke JB,Nelson EE,Rusch BD,Fox AS,Oakes TR,Davidson RJ (2004): Orbitofrontal cortex tracks positive mood in mothers viewing pictures of their newborn infants. Neuroimage 21: 583–592.

- Nomura M,Ohira H,Haneda K,Iidaka T,Sadoto N,Okada T,Yonekura Y (2004): Functional association of the amygdala and ventral prefrontal cortex during cognitive evaluation of facial expressions primed by masked angry faces: An event‐related fMRI study. Neuroimage 21: 352–363.

- Paller KA,Gonsalves B,Grabowecky M,Bozic VS,Yamada S (2000): Electrophysiological correlates of recollecting faces of known and unknown individuals. Neuroimage 11: 98–110.

- Passarotti A,Paul B,Bussiere J,Buxton R,Wong E,Stiles J (2003): The development of face and location processing: an fMRI study. Dev Sci 6: 100–117.

- Petrides M (1996): Lateral frontal cortical contribution to memory. Neuroscience 8: 57–63.

- Petrides M,Pandya DN (2002): Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci 16: 291–310.

- Platek SM,Keenan JP,Gallup GG Jr,Mohamed FB (2004): Where am I? The neurological correlates of self and other. Cogn Brain Res 19: 114–122.

- Platek SM,Keenan JP,Mohamed FB (2005): Sex differences in the neural correlates of child facial resemblance: An event‐related fMRI study. Neuroimage 25: 1336–1344.

- Platek SM,Loughead JW,Gur RC,Busch S,Ruparel K,Phend N,Panyavin IS,Langleben DD (2006): Neural substrates for functionally discriminating self‐face from personally familiar faces. Hum Brain Mapp 27: 91–98.

- Platek SM,Krill AL,Kemp SM (2008): The neural basis of facial resemblance. Neurosci Lett 437: 76–81.

- Pourtois G,de Gelder B,Bol A,Crommelinck M (2005): Perception of facial expressions and voices and of their combination in the human brain. Cortex 41: 49–59.

- Puce A,Allison T,Asgari M,Gore JC,McCarthy G (1996): Differential sensitivity of human visual cortex to faces, letterstrings, and textures: A functional magnetic resonance imaging study. J Neurosci 16: 5205–5215.

- Reber PJ,Wong EC,Buxton RB (2002): Comparing the brain areas supporting nondeclarative categorization and recognition memory. Cogn Brain Res 14: 245–257.

- Rombouts SA,Scheltens P,Machielson WC,Barkhof F,Hoogenraad FG,Veltman DJ,Valk J,Witter MP (1999): Parametric fMRI analysis of visual encoding in the human medial temporal lobe. Hippocampus 9: 637–643.

- Rossion B,Dricot L,Devolder A,Bodart JM,Crommelinck M,De Gelder B,Zoontjes R (2000): Hemispheric asymmetries for whole‐based and part‐based face processing in the human fusiform gyrus. J Cogn Neurosci 12: 793–802.

- Schweinberger SR,Burton AM (2003): Covert recognition and the neural system for face processing. Cortex 39: 9–30.

- Sergerie K,Chochol C,Armory JL (2008): The role of the amygdala in emotional processing: A quantitative meta‐analysis of functional neuroimaging studies. Neurosci Biobehav Rev 32: 811–830.

- Sperry RW,Zaidel E,Zaidel D (1979): Self recognition and social awareness in the deconnected minor hemisphere. Neuropsychologia 17: 153–166.

- Sugiura M,Kawashima R,Nakamura K,Okada K,Kato T,Nakamura A,Hatano K,Itoh K,Kojima S,Fukuda H (2000): Passive and active recognition of one's own face. Neuroimage 11: 36–48.

- Sugiura M,Watanabe J,Maeda Y,Matsue Y,Fukuda H,Kawashima R (2005): Cortical mechanisms of visual self‐recognition. Neuroimage 24: 143–149.

- Turak B,Louvel J,Buser P,Lamarche M (2002): Event‐related potentials recorded from the cingulate gyrus during attentional tasks: A study in patients with implanted electrodes. Neuropsychologia 40: 99–107.

- Turk DJ,Heatherton TF,Kelley WM,Funnell MG,Gazzaniga MS,Macrae CN (2002): Mike or me? Self‐recognition in a split‐brain patient. Nat Neurosci 5: 841–842.

- Turk DJ,Banfield JF,Walling BR,Heatherton TF,Grafton ST,Handy TC,Gazzaniga MS,Macrae CN (2004): From facial cue to dinner for two: The neural substrates of personal choice. Neuroimage 22: 1281–1290.

- Uddin LQ,Kaplan JT,Molnar‐Szakacs I,Zaidel E,Iacoboni M (2005): Self‐face recognition activates a frontoparietal “mirror” network in the right hemisphere: An event‐related fMRI study. Neuroimage 25: 926–935.

- Wilbrand H (1892): Ein Fall von Seelenblindheit und Heminopsie mit Sectionsbefund. Deutsch. Z. Nervenheilk. 2: 361.

- Xiong J,Gao JH,Lancaster JL,Fox PT (1995): Clustered pixels analysis for functional MRI activation studies of the human brain. Hum Brain Mapp 3: 287–301.