Abstract

Unlike previous emotional studies using functional neuroimaging that have focused on either locating discrete emotions in the brain or linking emotional response to an external behavior, this study investigated brain regions in order to validate a three‐dimensional construct – namely pleasure, arousal, and dominance (PAD) of emotion induced by marketing communication. Emotional responses to five television commercials were measured with Advertisement Self‐Assessment Manikins (AdSAM®) for PAD and with functional magnetic resonance imaging (fMRI) to identify corresponding patterns of brain activation. We found significant differences in the AdSAM scores on the pleasure and arousal rating scales among the stimuli. Using the AdSAM response as a model for the fMRI image analysis, we showed bilateral activations in the inferior frontal gyri and middle temporal gyri associated with the difference on the pleasure dimension, and activations in the right superior temporal gyrus and right middle frontal gyrus associated with the difference on the arousal dimension. These findings suggest a dimensional approach of constructing emotional changes in the brain and provide a better understanding of human behavior in response to advertising stimuli. Hum Brain Mapp, 2009. © 2008 Wiley‐Liss, Inc.

Keywords: fMRI, AdSAM, neuromarketing

INTRODUCTION

Researchers have used a variety of self‐report techniques in analyzing the emotional responses to commercials. Some have used a discrete self‐report approach that focused on specific emotions such as happiness and anger [Izard, 1977; Plutchik, 1984]. Other researchers have used a more robust three‐dimensional self‐report approach [Osgood et al., 1957; Russell and Mehrabian, 1977; Sundar and Kalyanaraman, 2004] including physiological measures to assess the emotional response. A consolidation between self‐report and physiological measurements would provide convergent validity for both methods, but currently a link between the two measures of emotion in response to marketing communications has not been fully explored. In this study, we proposed that the three‐dimensional self‐report approach to emotion is an effective methodological tool that emulates key physiological functions in the brain. Such findings could provide a promising new perspective for investigating issues that have been unexplored or vaguely defined in previous neuroimaging studies.

The search for physiological links to emotion reflects an approach that seeks fundamental or discrete emotions [Mandler, 1984]. Subcortical emotional responses have been recorded through classical conditioning of fear reactions to audio or visual stimuli [LeDoux et al., 1989]. The responses either interrupt the cognitive focus of current attention or influence the context for ongoing cognitive processes [Simon, 1982]. Pleasant and unpleasant emotional responses were found to be related to increased neural activity in the medial prefrontal cortex, thalamus, and hypothalamus, while unpleasant emotions were associated with neural activity in the occipitotemporal cortex, parahippocampal gyrus, and amygdala [Lane et al., 1997]. Additionally, facial expressions of disgust or anger were found to increase the neural activity in the left inferior frontal gyrus [Sprengelmeyer et al., 1998] and anterior insular cortex [Phillips et al., 1997]. A meta‐analysis of emotion activation studies in PET and fMRI [Phan et al., 2002] concluded that no single brain region is activated by all emotions, and no single brain region is activated by one particular emotion.

The discrete approach assumes categorical judgment of emotional stimuli. This requires connections between both hemispheres [Bowers et al., 1991] and between the anterior cingulate cortex (ACC) and the bilateral prefrontal cortices [Devinsky et al., 1995]. Hence, certain brain regions may become activated by the demand to categorize or label discrete emotions rather than by the natural emotional responses to given stimuli. Furthermore, most neuroimaging studies treat emotions as two discrete categories (pleasant and unpleasant) while ignoring the nuances along the pleasure dimension and the additional explanatory power of the arousal and dominance dimensions. For example, the intensity of fear has been associated with brain activities in the left inferior frontal gyrus [Morris et al., 1998] while anger and disgust have been associated with different degrees of intensity or arousal [Iidaka et al., 2001].

The alternative three‐dimensional approach to emotion attempts to simplify the representation of responses by identifying a set of common dimensions that can be used to distinguish specific emotions from one another. This approach includes both verbal and nonverbal measures [Lang, 1980; Osgood et al., 1957; Russell and Mehrabian, 1977], and has been largely ignored in previous research. One example of this approach is the pleasure–displeasure, arousal–calm, and dominance–submissiveness (PAD) model [Russell and Mehrabian, 1977]. The three bipolar dimensions are independent of each other, and the variance of emotional responses can be identified with their positions along these three dimensions. The dimensional approach helps differentiate emotions postulated by the discrete approach [Shaver et al., 1987] by providing a numeric level of each dimension to describe the specific emotions. Each discrete emotion can be identified by a specific combination of the dimensions. The meaning of the adjectives that name discrete emotions may differ by individual, culture, or other influences; nevertheless, the method for identifying the response is universal.

The current study was designed to assess the validity of the dimensional approach for measuring emotional responses by comparing them to the brain responses obtained through neuroimaging. The neuroimaging data was derived from blood oxygenation level‐dependent (BOLD) signals detected with fMRI. The neuroimaging analysis targeted the amygdala, prefrontal cortex (PFC), and temporal cortex for several reasons. First, the PFC has been found to have neural projections to the amygdala [McDonald et al., 1996], and the temporal cortex has been found to send stimulus information to the PFC [Hasselmo et al., 1989]. Second, the temporal cortex has been found to allow the brain to merge perceptual and semantic information, past memories and short‐term manipulation of the stimuli [Mitchell et al., 2003]. Third, both the semantic information and the dynamic nature of video clips have been found to increase neural activity in the PFC [Adolphs, 2002; Decety and Chaminade, 2003].

The self‐report data was derived from responses to the Advertisement Self‐Assessment Manikins (AdSAM®) scale [Morris et al., 2005]. This scale provides a nonverbal, cross‐cultural, visual measure of emotional response along the dimensions of pleasure, arousal, and dominance. We therefore suggest that it is a better tool than a verbal technique that requires respondents to cognitively translate their reactions into words before reporting their feelings. Thus, we postulate that this methodology, grounded in psychological literature since the 1950s, should be the basis of emotional detection in the brain.

MATERIALS AND METHODS

Subjects

Twelve healthy, right‐handed young adult participants (6 males/6 females, age range 22–28, mean age 24.8) gave written informed consent for the protocol approved by the Institutional Review Board at the University of Florida. At the time of the scan, none of the participants reported taking any psychiatric medication or having any history of neurological disorders. All of the participants reported normal or corrected‐to‐normal vision, and three of the participants used specialized, nonmagnetic corrective lenses inside the scanner. Each participant received $50.00 USD in compensation.

Experimental Protocols

The participants viewed five commercials inside the scanner in a block design paradigm created with E‐Prime (Psychology Software Tools, Pittsburgh, PA). The commercials were presented by back‐projection using a 17″ LCD screen with a resolution of 1024 × 768 pixels through the Integrated Functional Imaging System (IFIS, MRI Devices, Waukesha, WI). An MRI‐compatible auditory system (Resonance Technology) with stereo earphones and a microphone transmitted the sound of the commercials and protected participants from scanner noise while permitting verbal communication with the operator. The first two commercials lasted 30 s each and the other three commercials lasted 1 min each. Resting blocks, in which participants viewed a red cross on a black screen for 30 s, were interspersed between the commercial blocks.

The functional scan consisted of six runs with each run (except for the initial resting‐state run) being separated into three blocks: (1) a resting period, (2) a task of viewing a commercial, and (3) the AdSAM task. The initial run started with a 30 s resting block and then included two sets of the AdSAM task in response to “How do you normally feel?” and “How do you feel right now?” Following each commercial block, the participants performed the AdSAM task, which consisted of three trials of rating pleasure, arousal, and dominance. The participants were asked to convey their feelings in terms of pleasure (happy vs. sad), arousal (stimulated vs. bored), and dominance (in control vs. cared for) by selecting the most appropriate Self‐Assessment Manikin out of five possible choices immediately after viewing a commercial. The responses and reaction times were recorded with a right‐handed button response glove (IFIS, MRI Devices, Waukesha, WI). The participants were instructed to indicate how they felt after watching each commercial by rating the AdSAM scales without spending a lot of time thinking about the questions. They completed a training session on the same day before scanning, during which they were shown how best to interpret the manikin figures. During the training, which took place outside of the scanner, the participants practiced the task by answering questions about their general feelings and about their feelings after viewing a commercial for V8 vegetable juice.

The five commercials presented were all broadcast more than 10 years ago to reduce the likelihood that the participants had previously seen them on television. The first commercial was a public service announcement called Be a Hero. It shows happy‐looking children answering the question “Who is my hero?” Some name famous people, but one boy names his teachers. The commercial urges those interested to get involved in teaching and make a difference. The second commercial was from the spring water company Evian. This commercial portrays beautiful mountains, blue sky, and the sun sparkling on snow in the French Alps. The third commercial was for the soft drink Coke. It shows a young boy offering his Coke bottle outside the locker room after a football game to Mean Joe Greene of the Pittsburgh Steelers. At first Mean Joe Greene politely declines but then changes his mind, accepts the Coke bottle, and passes his jersey to the young boy as a return gift. The fourth commercial was for the sports drink Gatorade. It showcases special effects of a 23‐year‐old Michael Jordan, in a Chicago Bulls uniform, playing the modern‐day Michael Jordan in a one‐on‐one grudge match. A 1987 version of Jordan's head was digitally “placed” on the torso of an actual performance double playing against the real 39‐year‐old Jordan. The two engage in a one‐on‐one basketball game, which shows the older‐but‐wiser Jordan schooling his younger, more energetic self. The fifth and final commercial was an Anti‐Fur public service announcement. The commercial starts with scenes from a fashion show with several models showcasing fur coats. The spectators are clapping and admiring the fur coats. As one model turns around, blood drips from her coat and splatters all over the spectators, who are now terrified and disgusted. As the model leaves, there is a bloody trail behind her. It closes with an announcement: It takes up to 40 dumb animals to make a fur coat but only one to wear it.

Imaging Parameters

A 3T head‐dedicated MRI scanner (Siemens Allegra; Munich, Germany) was used. Functional images were acquired with a gradient‐echo EPI pulse sequence sensitive to the BOLD signal using the following parameters: TR = 3.0 s, TE = 30 ms, FA = 90°, matrix size = 64 × 64, FOV = 240 mm, 36 axial slices with a slice thickness of 3.8 mm without gaps. The first two functional volumes were discarded because of T1 saturation effects. For structural coregistration with the functional images, T1‐weighted 3D anatomical images were acquired with an MPRAGE sequence using the following parameters: TR = 1.5 s, TE = 4.38 ms, FA = 8°, matrix size = 256 × 256, FOV = 240 mm, 160 slices, slice thickness = 1.1 mm.
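The acquisition parameters above imply a few derived quantities that are useful when reading the later tables. The following is a minimal sketch of that arithmetic, not part of the original protocol; the function names are illustrative, and the block durations are taken from the Experimental Protocols section.

```python
# Functional (EPI) acquisition values quoted from the paragraph above.
TR_S = 3.0            # repetition time in seconds
FOV_MM = 240.0        # field of view in millimetres
EPI_MATRIX = 64       # in-plane matrix size (64 x 64)
EPI_SLICE_MM = 3.8    # slice thickness in millimetres, no gaps
EPI_SLICES = 36       # number of axial slices


def in_plane_voxel_mm(fov_mm, matrix):
    """In-plane voxel edge length: field of view divided by matrix size."""
    return fov_mm / matrix


def volumes_in_block(block_s, tr_s):
    """Number of whole volumes acquired during a block lasting block_s seconds."""
    return int(block_s // tr_s)


epi_voxel_mm = in_plane_voxel_mm(FOV_MM, EPI_MATRIX)   # 240 / 64
slab_mm = EPI_SLICES * EPI_SLICE_MM                    # axial coverage of the slice stack

print("in-plane voxel (mm):", epi_voxel_mm)
print("axial coverage (mm):", round(slab_mm, 1))
print("volumes per 30 s block:", volumes_in_block(30, TR_S))
print("volumes per 60 s block:", volumes_in_block(60, TR_S))
```

Under these values, a 30 s commercial or resting block spans 10 volumes and a 1 min commercial spans 20, which gives a sense of how many samples contribute to each block average.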

Data Analysis

BrainVoyager v. 4.9.6 (Brain Innovations, Maastricht, Holland) was used to analyze all of the imaging data. The functional images from each participant were first coregistered with the 3D anatomic images and then normalized into Talairach space. The resulting 3D functional data then underwent motion correction, linear trend removal, and spatial smoothing (5.7‐mm FWHM Gaussian filter). A general linear model (GLM) produced voxel‐wise statistical activation maps. The predictors were estimated hemodynamic responses to the tasks. Contrasts between the predictors were used to evaluate the relative contribution of each condition to the variance in the BOLD signal. A statistical threshold was set to P < 0.052 corrected with a minimum cluster size of 150 voxels when comparing the BOLD signal between the tasks. Regions of interest (ROIs) were analyzed for selected clusters of significantly activated voxels. Within each ROI, the BOLD responses for each condition were visualized using time‐locked averaging of the percentage signal change relative to the baseline resting condition.
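The time‐locked averaging of percentage signal change described above can be illustrated with a short sketch. This is not BrainVoyager's implementation; the function names are our own, and the signal values are invented placeholders chosen only to make the arithmetic easy to follow.

```python
def percent_signal_change(block, baseline_mean):
    """Express each sample of a block as % change relative to the resting baseline."""
    return [100.0 * (value - baseline_mean) / baseline_mean for value in block]


def time_locked_average(blocks):
    """Average equal-length blocks sample by sample, aligned to block onset."""
    n_blocks = len(blocks)
    return [sum(samples) / n_blocks for samples in zip(*blocks)]


# Hypothetical raw ROI signal (arbitrary units); NOT data from the study.
rest_samples = [1000.0, 1002.0, 998.0, 1000.0]
baseline = sum(rest_samples) / len(rest_samples)

# Two hypothetical repetitions of the same condition, each aligned to its onset.
task_blocks = [
    [1010.0, 1020.0, 1015.0],
    [1000.0, 1010.0, 1005.0],
]

psc_blocks = [percent_signal_change(block, baseline) for block in task_blocks]
average_response = time_locked_average(psc_blocks)
print(average_response)
```

Each point of `average_response` is the mean percentage signal change at a fixed delay from block onset, which is what the ROI time‐course plots in the figures summarize per commercial.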

We used a group split to integrate the behavioral data into our analysis of the imaging data. We set up contrasts with low‐pleasure ads on one side and high‐pleasure ads on the other, and similarly with low‐arousal ads versus high‐arousal ads. We split the stimuli based on the AdSAM behavioral scores and compared brain activation between the stimuli on each side of the split.
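One way to express such a split as a GLM contrast is to give the two sides weights that sum to zero, so that a side with fewer commercials is weighted more heavily (the Figure 2 caption describes the Anti‐Fur commercial as “×4 balanced” against the other four). The sketch below is an assumption about how these weights could be built, not the authors' code; the helper name and the single‐letter condition labels (T, E, C, G, F, as in the Table I footnote) are used for illustration.

```python
from math import gcd


def balanced_contrast(high, low, all_conditions):
    """Return zero-sum contrast weights: positive for `high`, negative for `low`.

    Conditions on neither side of the split receive a weight of 0.
    """
    if set(high) & set(low):
        raise ValueError("a condition cannot be on both sides of the split")
    divisor = gcd(len(high), len(low))
    high_weight = len(low) // divisor
    low_weight = -(len(high) // divisor)
    weights = {}
    for condition in all_conditions:
        if condition in high:
            weights[condition] = high_weight
        elif condition in low:
            weights[condition] = low_weight
        else:
            weights[condition] = 0
    return weights


CONDITIONS = ["T", "E", "C", "G", "F"]  # Teacher, Evian, Coke, Gatorade, Anti-Fur

# Pleasure split reported in the Results: four commercials versus Anti-Fur.
pleasure = balanced_contrast(high=["T", "E", "C", "G"], low=["F"], all_conditions=CONDITIONS)

# Arousal split reported in the Results: Gatorade and Anti-Fur versus Teacher and Coke.
arousal = balanced_contrast(high=["G", "F"], low=["T", "C"], all_conditions=CONDITIONS)

print(pleasure)
print(arousal)
```

Because the weights sum to zero, a contrast value differs from zero only when the mean response on one side of the split differs from the mean response on the other.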

RESULTS

Behavioral Data

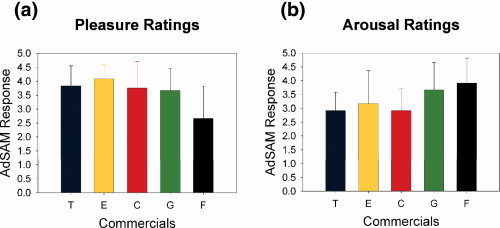

As shown in Figure 1, each of the five television commercials was rated on the five‐point AdSAM® scales for PAD, and the ratings were averaged across all twelve subjects (see Materials and Methods). The Anti‐Fur commercial received the lowest mean pleasure rating (2.7 ± 1.1, mean ± SD), which was significantly lower than the mean ratings of the other four commercials (P < 0.05; Fig. 1a). For arousal, the mean scores for the Anti‐Fur commercial (3.9 ± 0.9) and the Gatorade commercial (3.7 ± 0.9) were significantly higher than the scores for the Teacher commercial (2.9 ± 0.6) and the Coke commercial (2.9 ± 0.8) (P < 0.05; Fig. 1b). For dominance, the mean scores for the five commercials did not differ significantly from one another and are therefore not shown.

Figure 1.

In both figures, each of the five commercials is represented by a different color (Teacher = blue, Evian = yellow, Coke = red, Gatorade = green, Anti‐Fur = brown). Figure 1a illustrates that the mean Pleasure score of the Anti‐Fur commercial is significantly lower than those of the other four commercials, with P‐values of 0.023 for Teacher, 0.004 for Evian, 0.015 for Coke, and 0.032 for Gatorade. Figure 1b illustrates that the Gatorade and Anti‐Fur commercials combined are significantly higher than the Teacher and Coke commercials combined on mean Arousal scores (P‐value < 0.05).

Imaging Data

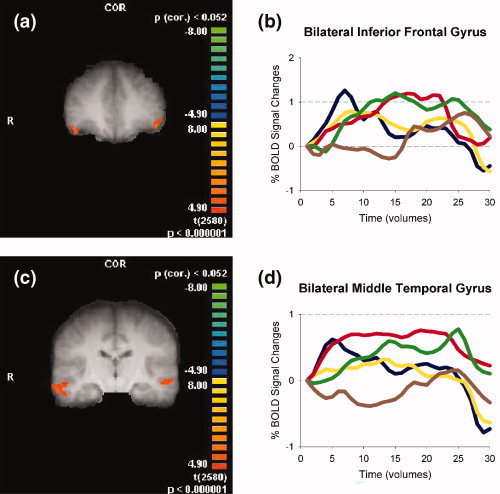

We compared changes in the BOLD signal for the combined blocks viewing the Teacher, Evian, Coke, and Gatorade commercials relative to the viewing of the Anti‐Fur commercial using a strict threshold criterion of P(corr.) < 0.052 and a minimum cluster size of 150 voxels. We observed BOLD signal increases in the bilateral inferior frontal gyri [IFG/Brodmann Area (BA) 47] and bilateral middle temporal gyri (MTG/BA 21) and BOLD signal decreases in the right superior parietal lobe (BA 7; see Table I for details).

Table I.

Localization of brain activation during commercials with a high level of pleasure

| Region | BA | Side | X | Y | Z | Size | t‐value |
|---|---|---|---|---|---|---|---|
| Inferior frontal gyrus | 47 | R | 46 | 37 | −8 | 447 | 5.64 |
| Inferior frontal gyrus | 47 | L | −43 | 35 | −4 | 289 | 5.69 |
| Middle temporal gyrus | 21 | R | 59 | −19 | −12 | 3139 | 5.81 |
| Middle temporal gyrus | 21 | L | −55 | −19 | −5 | 302 | 5.21 |
| Superior parietal lobe | 7 | R | 13 | −77 | 44 | 168 | −5.13 |

Comparison between four commercials (T, C, G, E) and commercial F. P(corr.) <0.052. Only clusters >150 voxels are shown. BA = Brodmann's Area.

Size = number of 1 mm³ voxels. X, Y, and Z refer to Talairach coordinates.

We selected regions of interest (ROIs) for the pleasure dimension from Table I in three steps. First, we estimated beta weights with the aforementioned general linear model (GLM) on the imaging data to search for significantly activated regions, which are listed in Table I. Beta weights are estimates of the fMRI hemodynamic responses to the modeled condition. Second, we compared the beta weights with the behavioral data collected through AdSAM to examine the correspondence between the two types of measures. Third, we used the time‐locked average response plots of significantly activated regions to identify ROIs where the patterns of activation also corresponded closely to the pattern of the AdSAM behavioral data for the pleasure dimension. Following those three steps, we chose to present the bilateral IFG and bilateral MTG in Figure 2 for the pleasure dimension.

Figure 2.

Contrasts of the Anti‐Fur commercial (×4 balanced) against the other four commercials for comparison of the pleasure dimension. The ROIs shown in Figure 2a and in Figure 2c include the bilateral inferior frontal gyri: right at Tal (46,37,−8) t = 5.64 and left at Tal (−43,35,−4) t = 5.69, and the bilateral middle temporal gyri: right at Tal (59,−19,−12) t = 5.81 and left at Tal (−55,−19,−5) t = 5.21. The graphs in Figure 2b,d depict an increased BOLD signal in all four commercials relative to the Anti‐Fur commercial block for the selected voxels. Each of the five commercials is represented by a different color (Teacher = blue, Evian = yellow, Coke = red, Gatorade = green, Anti‐Fur = brown).

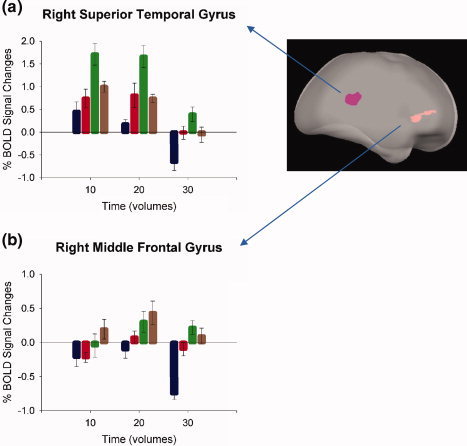

We also compared changes in BOLD signal for the combined blocks of the Anti‐Fur and Gatorade commercials relative to the viewing of the Teacher and Coke commercials using the same strict threshold criterion of P(corr.) < 0.052 and a minimum cluster size of 150 voxels. We observed several areas with BOLD signal increases in the left hemisphere, including the middle frontal gyrus (MFG/BA9), superior frontal gyrus (SFG/BA10), middle occipital gyrus (BA19), inferior temporal gyrus (ITG/BA37), and thalamus, and in the right hemisphere, including the cerebellum, middle frontal gyrus (MFG/BA10), and optic radii (Table II).

Table II.

Localization of brain activation during commercials with a high level of arousal

| Region | BA | Side | X | Y | Z | Size | t‐value |
|---|---|---|---|---|---|---|---|
| Cerebellum | – | R | 19 | −41 | −39 | 1,629 | 5.30 |
| Inferior temporal gyrus | 37 | L | −48 | −43 | −11 | 1,896 | 5.43 |
| Middle frontal gyrus | 9 | L | −36 | 28 | 30 | 477 | 5.27 |
| Middle frontal gyrus* | 10 | R | 26 | 32 | 5 | 1,113 | 5.40 |
| Middle occipital gyrus | 19 | L | −46 | −65 | 5 | 159 | 5.07 |
| Pulvinar of the thalamus | – | L | −24 | −26 | 4 | 349 | 5.04 |
| Superior frontal gyrus | 8 | M | −7 | 29 | 47 | 2,774 | 5.70 |
| Superior frontal gyrus | 10 | L | −16 | 44 | 10 | 214 | 5.17 |
| Superior temporal gyrus* | 22 | R | 64 | −37 | 14 | 808 | 5.69 |

P(corr.) <0.052. Only clusters >150 voxels are shown. BA = Brodmann's Area.

Size = number of 1 mm³ voxels. X, Y, and Z refer to Talairach coordinates.

* Appears in Figure 3.

We again used the aforementioned three steps to select ROIs for the arousal dimension and present the right MFG and right superior temporal gyrus (STG) in Figure 3. As shown in the figure, the time‐locked average responses in these ROIs corresponded well to the AdSAM behavioral data for arousal.
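The text does not state how the correspondence between ROI responses and AdSAM scores was quantified. As one possible illustration only, a Pearson correlation across commercials could be computed as sketched below. The arousal means are the four values reported in the Behavioral Data section; the ROI values are invented placeholders, not measurements from this study, and the function name is our own.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


commercials = ["Teacher", "Coke", "Gatorade", "Anti-Fur"]

# Mean AdSAM arousal ratings as reported in the Behavioral Data section.
adsam_arousal = [2.9, 2.9, 3.7, 3.9]

# HYPOTHETICAL mean % signal change in one ROI, in the same order as above.
# These numbers are placeholders for illustration only.
hypothetical_roi_psc = [0.20, 0.25, 0.60, 0.70]

r = pearson_r(adsam_arousal, hypothetical_roi_psc)
print(f"r = {r:.3f}")
```

With only four or five commercials, such a coefficient would be descriptive rather than inferential, which may be why the comparison is presented graphically in the figures.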

Figure 3.

Contrasts of the Gatorade and Anti‐Fur commercials against the Teacher and Coke commercials. The ROIs shown include the right superior temporal gyrus at Tal (64,−37,14) t = 5.69 and the right middle frontal gyrus at Tal (26,32,5) t = 5.40. The graphs in Figure 3a and in Figure 3b depict an increased BOLD signal in the blocks containing the Gatorade and Anti‐Fur commercials relative to the blocks containing the Teacher and Coke commercials for the selected voxels.

DISCUSSION

In this study, we evaluated emotional responses based upon our hypothesis that the processes involved in AdSAM and PAD ratings can be observed functioning in the brain. The empirical evidence supported the identification of regions of the brain that correspond to both the pleasure‐displeasure and arousal‐calm dimensions of the PAD model of emotions [Russell and Mehrabian, 1977]. Furthermore, the self‐report data generated through the AdSAM measure corresponded well with the fMRI data.

The AdSAM pleasure scores of four stimuli (Teacher, Evian, Coke, and Gatorade) were significantly higher than that of the Anti‐Fur commercial (see Fig. 1). The disturbing content of the Anti‐Fur commercial, which included a scene of blood being splattered, most likely contributed to this intense unpleasant emotion. When the imaging data of the first four commercials were contrasted with those of the Anti‐Fur commercial, significant differences were identified in brain regions that are known to be associated with emotional valence. These regions included the bilateral IFG and the bilateral MTG (see Fig. 2), which have been found to be associated with emotional responses [George et al., 1996; Sprengelmeyer et al., 1998]. Activation in the amygdala is often intertwined with activation of the PFC [McDonald et al., 1996]; however, in this study, no significant activity was detected in the amygdala. Previous research indicates that the activation of the amygdala can be substantially reduced by an explicit request for emotional recognition [Nomura et al., 2003; Wright and Liu, 2006]. It is possible that the activity in the amygdala was habituated in this study because the subjects were explicitly requested to report their emotional responses, leaving the stimuli unable to elicit the strong levels of fear or anxiety related to amygdala activation [LeDoux, 1995].

The AdSAM arousal scores of the Teacher and Coke commercials were significantly lower than those of the Gatorade and Anti‐Fur commercials (Fig. 1b). When these same pairs were contrasted in the imaging data, significant differences were identified in the right STG and the right MFG (see Fig. 3). The STG has been found to be a motion‐processing region [Schultz et al., 2005]. Perceiving the graphical display of motion has been found to increase arousal [Simons et al., 1999]; hence, activation attributed to the processing of motion may in fact reflect arousal.

For dominance, we found no significant differences in these data. This should not be surprising since dominance often accounts for a much smaller amount of variance of emotional responses than does pleasure or arousal [Mehrabian and Russell, 1974] and is often not a factor in vicarious experiences such as watching a television commercial. Dominance was included in this study because it is a content feature of emotional stimuli [Bradley and Lang, 2000]. We acknowledge the theoretical importance of dominance, but primarily focused on pleasure and arousal.

Arguably, the PAD model of emotion as shown in these findings appears to be a method superior to the discrete approach for identifying the dynamics of emotional response in the brain. We examined the subjects' emotional responses along the pleasure–displeasure, arousal–calm, and dominance–submissiveness dimensions and found specific brain regions associated with pleasure and arousal, respectively. By focusing on the patterns of responses as reported in the PAD model, we were able to locate specific functional regions where pleasure and arousal were distinctly and significantly different from each other as well as areas where they were different among the various stimuli.

Our findings were not intended to have an impact on the specific commercial ads used. As mentioned previously, these ads were selected mainly because they were once popular but had probably never been seen by the participants. We focused on targeting the emotions involved in processing television ads and hope that our results will contribute to further promoting the multidimensional approach in analyzing commercial ads.

This study used a relatively small but adequate sample for fMRI studies, and further research is needed to locate the responses in the dominance dimension and to calibrate the levels of activity in the brain that distinguish among responses to stimuli. These preliminary results suggest that human emotions are multidimensional and that self‐report techniques for emotional response along the pleasure and arousal dimensions correspond to a specific task but different functional regions of the brain. Moreover, our research may help examine emotional responses to television advertisements and predict consequent attitudes and behaviors towards marketing communications.

Supporting information

Additional Supporting Information may be found in the online version of this article.


Acknowledgements

This work was supported by a grant as part of the joint AAAA/ARF Project (The American Association of Advertising Agencies and the American Research Foundation) and a grant from American Beverage Medical Research Foundation.

Contributor Information

Jon D. Morris, Email: jonmorris@ufl.edu.

Yijun Liu, Email: yijunliu@ufl.edu.

REFERENCES

- Adolphs R (2002): Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behav Cogn Neurosci Rev 1: 21–62.

- Bowers D, Blonder LX, Feinberg T, Heilman KM (1991): Differential impact of right and left hemisphere lesions on facial emotion and object imagery. Brain 114: 2593–2609.

- Bradley MM, Lang PJ (2000): Measuring emotion: Behavior, feeling, and physiology. In: Lane RD, Nadel L, editors. Cognitive Neuroscience of Emotion. New York: Oxford University Press; pp 242–276.

- Decety J, Chaminade T (2003): Neural correlates of feeling sympathy. Neuropsychologia 41: 127–138.

- Devinsky O, Morrell MJ, Vogt BA (1995): Contributions of anterior cingulate cortex to behavior. Brain 118: 279–306.

- George MS, Parekh PI, Rosinsky N, Ketter TA, Kimbrell TA, Heilman KM, Herscovitch P, Post RM (1996): Understanding emotional prosody activates right hemisphere regions. Arch Neurol 53: 665–670.

- Hasselmo ME, Rolls ET, Baylis GC (1989): The role of expression and identity in the face‐selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res 32: 203–218.

- Iidaka T, Omori M, Murata T, Kosaka H, Yonekura Y, Okada T, Sadato N (2001): Neural interaction of the amygdala with the prefrontal and temporal cortices in the processing of facial expressions as revealed by fMRI. J Cogn Neurosci 13: 1035–1047.

- Izard CE (1977): The emotions in life and science. In: Izard CE, editor. Human Emotions. New York: Plenum Press; pp 1–18.

- Lane RD, Reiman EM, Bradley MM, Lang PJ, Ahern GL, Davidson RJ, Schwartz GE (1997): Neuroanatomical correlates of pleasant and unpleasant emotion. Neuropsychologia 35: 1437–1444.

- Lang PJ (1980): Behavioral treatment and bio‐behavioral assessment: Computer applications. In: Sidowski JB, Johnson JH, Williams TA, editors. Technology in Mental Health Care Delivery Systems. Norwood, NJ: Ablex; pp 119–137.

- LeDoux JE (1995): Emotion: Clues from the brain. Annu Rev Psychol 46: 209–235.

- LeDoux JE, Romanski L, Xagoraris A (1989): Indelibility of subcortical emotional memories. J Cogn Neurosci 1: 238–243.

- Mandler G (1984): Mind and Body. New York: Norton.

- McDonald AJ, Mascagni F, Guo L (1996): Projections of the medial and lateral prefrontal cortices to the amygdala: A Phaseolus vulgaris leucoagglutinin study in the rat. Neuroscience 71: 55–75.

- Mehrabian A, Russell JA (1974): An Approach to Environmental Psychology. Cambridge, MA: Oelgeschlager, Gunn and Hain.

- Mitchell RLC, Elliott R, Barry M, Cruttenden A, Woodruff PWR (2003): The neural response to emotional prosody, as revealed by functional magnetic resonance imaging. Neuropsychologia 41: 1410–1421.

- Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, Dolan RJ (1998): A neuromodulatory role for the human amygdala in processing emotional facial expression. Brain 121: 47–57.

- Morris JD, Woo C, Singh AJ (2005): Elaboration likelihood model: A missing intrinsic emotional implication. J Target Meas Anal Market 14: 79–98.

- Nomura M, Iidaka T, Kakehi K, Tsukiura T, Hasegawa T, Maeda Y, Matsue Y (2003): Frontal lobe networks for effective processing of ambiguously expressed emotions in humans. Neurosci Lett 348: 113–116.

- Osgood CE, Suci GJ, Tannenbaum PH (1957): The Measurement of Meaning. Urbana, IL: University of Illinois Press.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002): Functional neuroanatomy of emotion: A meta‐analysis of emotion activation studies in PET and fMRI. Neuroimage 16: 331–348.

- Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SCR, Gray JA, David AS (1997): A specific neural substrate for perceiving facial expressions of disgust. Nature 389: 495–498.

- Plutchik R (1984): Emotions: A general psychoevolutionary theory. In: Scherer KR, Ekman P, editors. Approaches to Emotion. Hillsdale, NJ: Lawrence Erlbaum Associates; pp 197–219.

- Russell JA, Mehrabian A (1977): Evidence for a three‐factor theory of emotions. J Res Personality 11: 273–294.

- Schultz J, Friston KJ, O'Doherty J, Wolpert DM, Frith CD (2005): Activation in posterior superior temporal sulcus parallels parameter inducing the percept of animacy. Neuron 45: 625–635.

- Shaver P, Schwartz J, Kirson D, O'Connor C (1987): Emotion knowledge: Further exploration of a prototype approach. J Personality Social Psychol 52: 1061–1086.

- Simon HA (1982): Comments. In: Clarke MS, Fiske ST, editors. Affect and Cognition: The Seventeenth Annual Carnegie Symposium on Cognition. Hillsdale, NJ: Erlbaum; pp 333–342.

- Simons RF, Detenber BH, Roedema TM, Reiss JE (1999): Emotion processing in three systems: The medium and the message. Psychophysiology 36: 619–627.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H (1998): Neural structures associated with recognition of facial expressions of basic emotions. Proc R Soc London Ser B 265: 1927–1931.

- Sundar SS, Kalyanaraman S (2004): Arousal, memory, and impression‐formation effects of animation speed in web advertising. J Advertising 33: 7–17.

- Wright P, Liu Y (2006): Neutral faces activate the amygdala during identity matching. Neuroimage 29: 628–636.
