Abstract

The strength of brain responses to others' pain has been shown to depend on the intensity of the observed pain. To investigate the temporal profile of such modulation, we recorded neuromagnetic brain responses of healthy subjects to facial expressions of pain. The subjects observed grayscale photos of the faces of genuine chronic pain patients when the patients were suffering from their ordinary pain (Chronic) and when the patients' pain was transiently intensified (Provoked). The cortical activation sequence during observation of the facial expressions of pain advanced from occipital to temporo‐occipital areas, and it differed between Provoked and Chronic pain expressions in the right middle superior temporal sulcus (STS) at 300–500 ms: the responses were about a third stronger for Provoked than Chronic pain faces. Furthermore, the responses to Provoked pain faces were about 40% stronger in the right than the left STS, and they decreased from the first to the second measurement session by one‐fourth, whereas no similar decrease in responses was found for Chronic pain faces. Thus, the STS responses to the pain expressions were modulated by the intensity of the observed pain and by stimulus repetition; the location and latency of the responses suggest close similarities between processing of pain and other affective facial expressions. Hum Brain Mapp, 2009. © 2009 Wiley‐Liss, Inc.

Keywords: face, magnetoencephalography, superior temporal sulcus, pain, emotion

INTRODUCTION

Why do we give a grimace of pain when we see other people getting injured? We cannot have the same sensory experience of pain as the person we are observing, yet we seem to have an immediate insight into what is happening, even in the absence of a verbal report. Because the observer receives no direct noxious input and no conscious efforts are needed, the insight seems to stem from the observer's own experiences that enable sharing a part of the affective experience of the person in pain. Indeed, the brain research of the past decade has demonstrated that the observer's own emotion‐ and movement‐related brain areas are activated during mere perception of actions or affective states of another person [for reviews, see Frith and Frith, 2006; Hari and Kujala, 2009; Rizzolatti et al., 2001].

Similarly, observing or imagining someone else's pain recruits the affective pain network, especially the anterior cingulate cortex (ACC) and the anterior insula (AI), in the observer's brain [Botvinick et al., 2005; Jackson et al., 2005; Morrison et al., 2004; Saarela et al., 2007; Singer et al., 2004]. This cortical circuitry relates to the unpleasant feeling one associates with pain [for a review, see Rainville, 2002]. Moreover, the functional magnetic resonance imaging (fMRI) response of the observer's AI correlates positively with the intensity of the observed pain [Saarela et al., 2007]. These results suggest that the ability to understand the internal states or “feelings” of others' pain is supported by a brain network that is activated both when people experience pain and when they observe pain in someone else. Similar brain mechanisms for shared sensory‐affective experiences in humans have been found also for touch [Avikainen et al., 2002; Keysers et al., 2004] and disgust [Krolak‐Salmon et al., 2003; Wicker et al., 2003].

Until now, the majority of brain imaging studies on witnessing others' pain have focused on measuring the relatively slow cerebral haemodynamics. However, both real‐life events and cortical processes have a characteristic temporal organization, and the corresponding rapid time courses can be tracked in the human brain with time‐sensitive electrophysiological methods. Time scales of 50–250 ms characterize many types of perceptual and cognitive phenomena, such as sensory integration, figure‐ground segregation, and object categorization and recognition. Following the time course is of particular interest when a neuron population processes different aspects of sensory information in different time windows; for example, the earliest visual responses of neurons in the monkey middle superior temporal sulcus (STS) are modified by the coarse shape of faces (related to e.g. species), but the detailed information (such as identity) affects the neurons' responses 50 ms later [Sugase et al., 1999].

Also in the human brain, various aspects of facial information are processed in different time windows. Observing a face elicits the earliest prominent responses in the occipital visual cortices around 100 ms, which are mainly related to the low‐level visual processing—although some face‐specific processing possibly takes place also at these early latencies [Liu et al., 2002]. The temporo‐occipital 170‐ms responses (peaking at 140–200 ms in different studies) are clearly stronger to faces than to other visual categories [Allison et al., 1994], and they correlate strongly with face recognition performance [Tanskanen et al., 2005]. Subsequent processes around 200–500 ms show similar correlation with face recognition [Tanskanen et al., 2007], but unlike the 170‐ms responses, they are also modulated by the subject's task [Furey et al., 2006; Lueschow et al., 2004] and the familiarity of faces [Paller et al., 2003].

The long‐latency cortical responses are also modulated by emotional expressions of faces. When subjects observe happy, disgusted, surprised or fearful faces, event‐related EEG responses peak in the occipital and temporal regions 250–750 ms after the stimulus onset [Carretie and Iglesias, 1995; Krolak‐Salmon et al., 2001]. Intracortical recordings indicate similar latencies for the reactivity of the human AI, which responds to facial expressions of disgust at 300–500 ms [Krolak‐Salmon et al., 2003], and to painful CO2‐laser stimuli already at 180–230 ms [Frot and Mauguiere, 2003].

To reveal the temporal dimensions of a shared pain experience, we recorded neuromagnetic brain responses from healthy subjects while they observed facial expressions of pain. The stimuli were photos of Provoked pain faces (chronic pain patients whose pain was transiently intensified) and Chronic pain faces (the same patients at rest); we were especially interested in the possible amplitude and hemispheric differences between responses to these pain expressions, as well as in the resilience of the responses to stimulus repetition. Furthermore, Neutral faces (gender‐matched actors) and Scrambled images (all the face photos phase‐randomized) were presented as control stimuli, with the aim to detect the general face‐sensitive brain responses. On the basis of the previous findings on pain and emotion, we hypothesized that the more intensive Provoked pain faces would recruit responses either in brain areas related to affective pain processing, or in brain areas involved in processing facial expressions of emotions.

MATERIALS AND METHODS

Subjects

Eleven healthy adults participated in the study but responses from two subjects were discarded due to excessive eye blinks. Thus, data from nine subjects (six females, three males; 26–40 years, mean ± SD 29 ± 4 years) were analyzed. All subjects were right‐handed according to the Edinburgh Handedness Inventory [Oldfield, 1971]: on the scale from −1 (left) to +1 (right), the mean ± SEM score was 0.94 ± 0.03 (range from 0.8 to 1).

Participants of the MEG experiments gave their written, informed consent prior to the experiment, and a similar consent was also obtained from the chronic pain patients and actors before they were videotaped for the stimuli. The generation of the pain face stimuli and the MEG recordings had prior approvals by the ethics committee of the Helsinki and Uusimaa Hospital district.

Stimuli

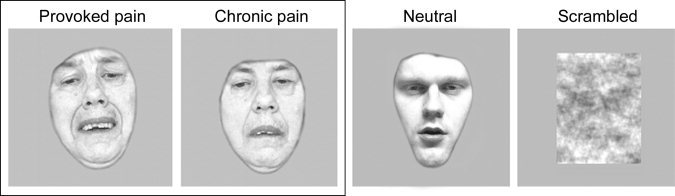

The subjects were shown intact and scrambled (phase‐randomized) grayscale still photos of faces that displayed Provoked pain, Chronic pain, and Neutral facial expressions (see Fig. 1), previously used in our fMRI study [Saarela et al., 2007]. We used real pain faces as stimuli, as did Botvinick et al. [2005], since acted facial expressions of pain can differ from genuine pain expressions [Hill and Craig, 2004]. The facial photos expressing pain were obtained from four patients (two females, two males) suffering from chronic pain: photos of Chronic pain were taken when the patients were at rest, and photos of Provoked pain when the patients' own pain was transiently intensified, for example, by gently moving or stroking the painful limb. The preparation, behavioral pretesting, and selection of the pain face stimuli are described in detail in Saarela et al. [2007].

Figure 1.

Examples of stimuli used in the experiment superimposed on mean‐intensity gray background. The photos of the pain patients in their Provoked pain and Chronic pain conditions (during pain intensification and at rest, respectively) are shown in the box on the left, and the Neutral and Scrambled faces used for detecting general face‐sensitive responses are shown on the right.

The Neutral faces were originally obtained from healthy gender‐matched actors for another study [Schürmann et al., 2005] and reused as control stimuli in our previous pain experiment [Saarela et al., 2007]. The Neutral faces depicted two males and two females not showing any explicit emotion (see example in Fig. 1). The purpose of Neutral and Scrambled faces in this study was to aid in detecting general face‐sensitive brain responses.

Ratings of Pain Intensity

During the filming of the stimuli for our former study [Saarela et al., 2007], the pain patients whose faces were shown in the current study estimated their own pain once during the Chronic state and three to five times during the Provoked state. The mean pain estimates of the four patients were 3.9 for the Chronic state (7, 3, 2, and 3.5 by patients 1, 2, 3, and 4, respectively) and 8.2 for the Provoked state (8.7, 9, 8.1, and 6.9, respectively) on a scale from 0 (minimum) to 10 (maximum).

In our original behavioral stimulus selection study [Saarela et al., 2007], 25 different photos of five pain patients were shown (each for 2.5 s with an interval of 5 s) in a random order to 30 individuals who did not participate in the fMRI study; the subjects' task was to rate the facial expressions for pain intensity. As a result, 24 stimuli from four pain patients were selected for our original fMRI recordings [Saarela et al., 2007] and reused here. This set of stimuli comprised three Chronic pain photos (with lowest ratings) and three Provoked pain photos (with highest ratings) from each patient. As was already stated in the original publication, the mean ± SEM pain rating was 3.3 ± 0.3 for the final set of Chronic pain photos and 6.7 ± 0.3 for the Provoked pain photos. Thus, the intensity ratings were slightly smaller but in good overall accordance with the ratings of the pain patients themselves.

For the current study, we reanalyzed the behavioral data to find out whether the subjects' pain intensity ratings were affected by repeated exposure to the pain expressions of the same patient. We first obtained the pain ratings of each patient's pain faces shown as the first and the last within the stimulation sequence, separately for Provoked and Chronic pain. In the subsequent repeated‐measures ANOVA, pain intensity and stimulus repetition served as within‐subjects factors, and ratings to the first and last photos of a patient were compared with planned contrasts.

Stimulus Presentation and Instructions

Stimulus presentation was controlled by the Presentation® software (http://nbs.neuro-bs.com/) running on a PC. The images were displayed on a rear projection screen by a data projector (VistaPro™, Christie Digital Systems, Cypress, CA). The experiments were run in the standard VGA mode (resolution 640 × 480 pixels, frame rate 60 Hz, 256 gray levels). The image size was 12.7 cm × 17 cm (width × height) on the screen, and the stimuli were viewed binocularly with moderate background illumination at a distance of 94 cm. The average stimulus luminance was 100 cd/m² for each stimulus category.
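The image size and viewing distance given above determine the visual angle of the stimuli, which the text does not report. The following minimal sketch derives it under the assumption that the image was centred on the line of sight; the function name `visual_angle_deg` is introduced here for illustration only.

```python
import math

def visual_angle_deg(extent_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) of an extent centred on the line of sight."""
    return math.degrees(2.0 * math.atan(extent_cm / (2.0 * distance_cm)))

# Values taken from the text.
VIEWING_DISTANCE_CM = 94.0
IMAGE_WIDTH_CM = 12.7
IMAGE_HEIGHT_CM = 17.0

width_deg = visual_angle_deg(IMAGE_WIDTH_CM, VIEWING_DISTANCE_CM)
height_deg = visual_angle_deg(IMAGE_HEIGHT_CM, VIEWING_DISTANCE_CM)
print(f"Stimulus size: {width_deg:.1f} x {height_deg:.1f} degrees")
```

Under these assumptions the face images subtended roughly 7.7° × 10.3° (width × height).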

The photos were overlaid on a gray 30.5 cm × 23 cm (width × height) display area, luminance‐matched with the mean of the face stimuli. Each stimulus was shown for 2 s, and the interstimulus interval was 2.0–2.5 s. The total number of different stimuli in the whole experiment was 12 for the Provoked pain faces, 12 for the Chronic pain faces, 24 for the Neutral faces, and 48 for the Scrambled faces (all the previous stimuli phase‐randomized). Stimuli were presented in two consecutive recording sessions in a pseudorandomized order, counterbalanced across subjects. During one recording session (duration ∼18 min), all Provoked pain, Chronic pain, and Neutral faces were presented four times, and the Scrambled faces once, resulting in 48 Provoked, Chronic, and Scrambled faces, and in 96 Neutral faces per recording session.
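The trial counts per session follow directly from the number of distinct images and their repetitions. The short sketch below reproduces that arithmetic and adds a rough session-length estimate; it assumes that the interstimulus interval runs from stimulus offset to the next onset, which the text does not state explicitly.

```python
# Distinct images per category and repetitions per session, as given in the text.
DISTINCT_IMAGES = {"Provoked": 12, "Chronic": 12, "Neutral": 24, "Scrambled": 48}
REPEATS_PER_SESSION = {"Provoked": 4, "Chronic": 4, "Neutral": 4, "Scrambled": 1}

trials_per_session = {
    category: DISTINCT_IMAGES[category] * REPEATS_PER_SESSION[category]
    for category in DISTINCT_IMAGES
}
total_trials = sum(trials_per_session.values())

# Rough duration bounds, ASSUMING the 2.0-2.5 s interval is offset-to-onset.
STIMULUS_S = 2.0
ISI_RANGE_S = (2.0, 2.5)
duration_min = tuple(total_trials * (STIMULUS_S + isi) / 60.0 for isi in ISI_RANGE_S)

print(trials_per_session, total_trials, duration_min)
```

With these numbers a session contains 240 trials and lasts about 16 to 18 min, in line with the stated session duration.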

The subjects were informed that the stimuli contained neutral facial expressions, facial expressions of pain, and scrambled images. Subjects were instructed to view all the stimuli attentively, but no extra behavioral task was given.

MEG Data Acquisition

Neuromagnetic fields were acquired with a whole‐scalp Vectorview system (Neuromag, currently Elekta Neuromag Oy, Helsinki, Finland) comprising 306 sensors: two orthogonal planar gradiometers and a magnetometer on each of the 102 triple‐sensor elements. MEG signals were band‐pass filtered to 0.1–170 Hz and digitized at 600 Hz. The responses were averaged from 200 ms before the stimulus to 1,000 ms after the stimulus onset. For data analysis and source modeling, the responses were low‐pass filtered at 40 Hz, and a prestimulus baseline of 200 ms was applied. Before averaging, trials contaminated with eye movements (detected with horizontal and vertical electro‐oculograms) or excessive MEG signals were discarded.
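The epoching steps above (rejection of contaminated trials, averaging, and baseline correction) can be illustrated for a single channel with the sketch below. It is not the acquisition software's implementation: the rejection threshold `reject_peak_to_peak` is a hypothetical parameter, since the text gives no value, the data are synthetic, and the 40‐Hz low‐pass filter is omitted.

```python
SFREQ_HZ = 600.0  # digitization rate stated in the text

def ms_to_samples(ms, sfreq=SFREQ_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * sfreq / 1000.0))

N_PRE = ms_to_samples(200)    # samples before stimulus onset
N_POST = ms_to_samples(1000)  # samples after stimulus onset

def baseline_correct(epoch, n_pre=N_PRE):
    """Subtract the mean of the prestimulus samples from the whole epoch."""
    baseline = sum(epoch[:n_pre]) / n_pre
    return [x - baseline for x in epoch]

def average_epochs(epochs, reject_peak_to_peak=None):
    """Average single-channel epochs, dropping trials with excessive amplitude.

    reject_peak_to_peak is a HYPOTHETICAL threshold; the paper reports none.
    Returns the averaged waveform and the number of accepted trials.
    """
    kept = [e for e in epochs
            if reject_peak_to_peak is None
            or max(e) - min(e) <= reject_peak_to_peak]
    if not kept:
        raise ValueError("all epochs were rejected")
    n = len(kept)
    return [sum(samples) / n for samples in zip(*kept)], n

def make_epoch(offset, step=5.0):
    """Synthetic epoch: a step response riding on a constant offset."""
    return [offset] * N_PRE + [offset + step] * N_POST

# Two clean synthetic trials and one artifact-like trial.
epochs = [make_epoch(1.0), make_epoch(-2.0), make_epoch(0.5, step=500.0)]
evoked, n_kept = average_epochs(
    [baseline_correct(e) for e in epochs], reject_peak_to_peak=100.0
)
print(n_kept, len(evoked), evoked[0], evoked[-1])
```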

Data Analysis

Sensor‐level analysis

The sensor‐level data were inspected from gradiometers, which pick up the strongest signals directly above local current sources. In each of the two recording sessions, ≥39 responses for each stimulus category were acquired (twice as many for Neutral faces).

Because individual variation in the cortical anatomy and in the head position with respect to the MEG sensors renders intersubject comparison of magnetic field pattern orientation questionable, we removed orientation information from the data by first calculating vector sums of the two orthogonal planar gradients for each sensor element and for each condition. Thereafter, we identified the channels that showed prominent responses for Provoked and Chronic pain faces. This search yielded five locations: right and left temporal cortices, occipital cortex, and right and left temporo‐occipital cortices. At each of these five regions, the signals were spatially averaged over six channel pairs (see Fig. 3 for sample responses from subject S1 and for the locations of channels from which the signals were averaged in all subjects). Comparison of these areal averages across subjects simplifies the data analysis, because the signals from a few adjacent channels are more stable than signals from single channels alone; this procedure has proven useful and robust in previous MEG analyses [e.g. Avikainen et al., 2003; Hari et al., 1997; Uusvuori et al., 2008]. A minor drawback is the decrease of the amplitude as a result of spatial averaging. Sensor‐level areal averages were subjected to statistical testing with repeated‐measures ANOVA to reveal possible effects and interactions of stimulus category, hemisphere, and measurement session. Subsequently, post hoc comparisons were applied to reveal the origins of the observed differences.
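The vector sum and areal average described above can be sketched as follows. The sketch assumes that the vector sum is the Euclidean magnitude of the two orthogonal planar gradients at each time point; the function names and the toy data are illustrative only.

```python
import math

def vector_sum(grad_x, grad_y):
    """Orientation-free amplitude of one sensor element at each time point."""
    return [math.hypot(x, y) for x, y in zip(grad_x, grad_y)]

def areal_average(channel_pairs):
    """Average the vector sums of several sensor elements, sample by sample.

    channel_pairs: list of (grad_x, grad_y) time series, one per sensor element.
    """
    magnitudes = [vector_sum(gx, gy) for gx, gy in channel_pairs]
    n = len(magnitudes)
    return [sum(samples) / n for samples in zip(*magnitudes)]

# Toy data in fT/cm: six sensor elements with three time points each.
pairs = [([3.0, 0.0, 6.0], [4.0, 0.0, 8.0]) for _ in range(6)]
region_waveform = areal_average(pairs)
print(region_waveform)
```

Because the magnitude is always non-negative, noise no longer averages toward zero, which is one reason why amplitudes of areal averages are not directly comparable to single-channel amplitudes.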

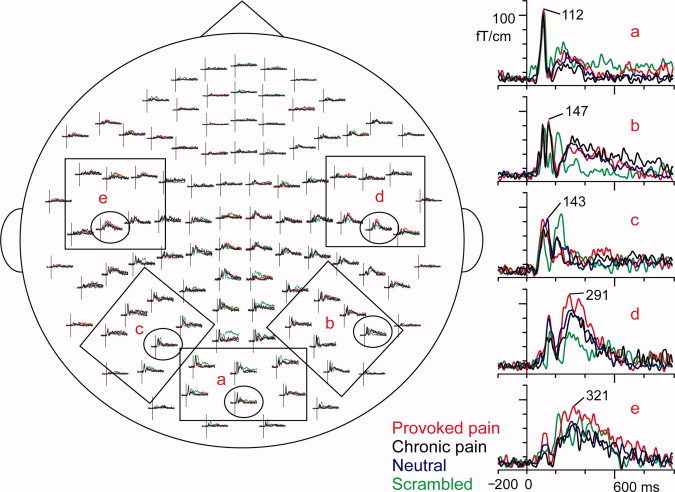

Figure 3.

Sample responses of a typical subject and the channel layout of the vector sums calculated from two orthogonal gradiometers, seen from above. On the left, the selections show the six channels of each area from which the areal averages were calculated on the basis of the maximum responses: (a) occipital cortex, (b) right temporo‐occipital cortex, (c) left temporo‐occipital cortex, (d) right temporal lobe, and (e) left temporal lobe. On the right, the representative responses, encircled in the whole‐scalp view, have been magnified. The traces are from −200 ms to 1000 ms, and the amplitudes are given in fT/cm. The latencies of the peak amplitudes are marked on the single‐channel responses. Red, Provoked pain face; black, Chronic pain face; blue, Neutral face; green, Scrambled face.

Source modeling

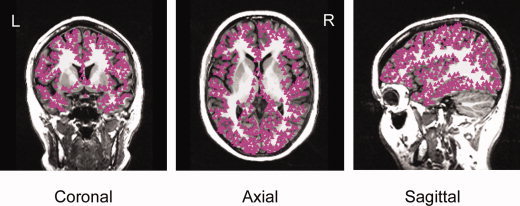

To estimate the neural generators of the evoked responses, we modeled cortical sources of the responses from the first session to the Provoked and Chronic pain faces. Cortical current sources were estimated for eight of the nine subjects; no structural magnetic resonance images were available for one subject (S9). First, the MEG data were spatially filtered by the Signal Space Separation method [Taulu et al., 2004] to suppress external magnetic interference. Second, anatomical MR images were processed with the FreeSurfer software package [Dale et al., 1999; Fischl et al., 1999] to obtain cortical surface reconstructions; the border of white and gray matter was tessellated and decimated to a 7‐mm grid of MEG source points (see Fig. 2).

Figure 2.

The 7‐mm grid of source points at the border of gray and white matter is shown with pink triangles in coronal, axial, and sagittal brain sections of one subject. The grid covered the neocortex of both hemispheres.

Thereafter, cortically constrained and noise‐normalized minimum‐norm estimates (MNEs, also referred to as dynamic Statistical Parametric Maps by Dale et al. [2000]) were computed using the “MNE Software” package (developed by Matti Hämäläinen at Massachusetts General Hospital; [Lin et al., 2006]). All 306 channels of the Vectorview MEG system were used for the analysis, and cortical current generators were modeled for the whole cortex.

Because the signals detected by MEG arise mainly from postsynaptic currents in the pyramidal neurons [see e.g. Hari, 1990; Okada et al., 1997], currents normal to the cortical surface were favored by applying a loose orientation constraint, which weights currents flowing along the cortex by a factor of 0.3 with respect to currents perpendicular to it. A single‐compartment boundary element model of the inner skull surface served as the volume conductor for cortical currents. The noise covariance estimate required by the MNE was obtained from the unaveraged baseline periods (from −200 ms to 0 ms relative to stimulus onset) and computed independently for all subjects. Subsequently, the responses were normalized relative to the noise level of the measurements, resulting in response strengths as z‐scores [Dale et al., 2000].

For a group average, individual source estimates of Provoked and Chronic categories were first temporally smoothed by a ±50‐ms moving average. The individual MNEs were then morphed to a standard atlas brain provided by the FreeSurfer package (“fsaverage,” an average of the brains of 40 healthy adults) with spatial smoothing. Subsequently, the morphed MNEs were averaged at 400 ± 50 ms across subjects, and the results were visualized on the standard atlas brain.
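The temporal smoothing step can be illustrated with a centred moving average. The sketch below assumes that the source time courses keep the 600‐Hz sampling rate, so that ±50 ms corresponds to ±30 samples, and that the window simply shrinks at the edges of the epoch; neither detail is specified in the text.

```python
SFREQ_HZ = 600.0                            # assumed sampling rate of the estimates
HALF_WIDTH = int(round(0.050 * SFREQ_HZ))   # +/-50 ms expressed in samples

def moving_average(signal, half_width):
    """Centred moving average; the window is truncated at the signal edges."""
    n = len(signal)
    smoothed = []
    for i in range(n):
        lo = max(0, i - half_width)
        hi = min(n, i + half_width + 1)
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed

# Toy input: a single impulse spreads evenly over the 61-sample window.
signal = [0.0] * 200
signal[100] = 61.0
smoothed = moving_average(signal, HALF_WIDTH)
print(HALF_WIDTH, smoothed[100], smoothed[69], smoothed[70])
```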

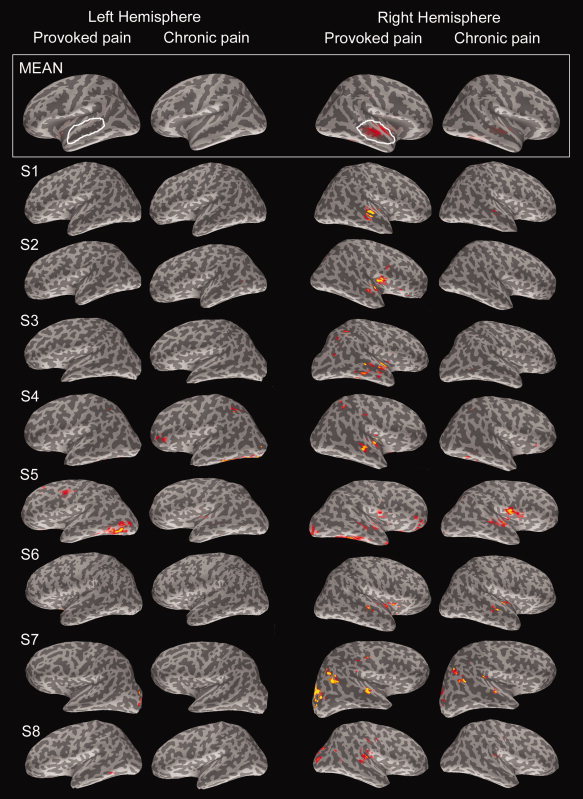

The top panel of Figure 6 shows the across‐subjects statistical z‐score maps; for closer group‐level statistical comparisons of the cortical source strengths between Provoked and Chronic conditions, ellipsoidal regions of interest (ROIs) were selected post hoc in the right and left STS of the atlas brain to cover the peak responses of the group data (see Fig. 6, top panel), and the peak amplitudes of the temporally smoothed responses within 300–500 ms were measured from the ROI signal for each subject. The normalized amplitudes (z‐scores) were compared for the effects of hemisphere and stimuli with repeated‐measures ANOVA, and paired‐samples t‐tests were used post hoc to find the origins of the differences.

Figure 6.

Estimates of the sources of the 300–500‐ms activity in the whole cortex. The average (MEAN) across all subjects is overlaid on the atlas brain, and the current estimates of subjects S1–S8 are shown on their individual brain surface maps. Estimates for Provoked and Chronic pain faces of the first measurement session are shown separately in the left and right hemisphere. For visualization purposes, the color scales are individually normalized with respect to the individual signal‐to‐noise ratio. In the grand average current estimates (MEAN) in the topmost panel, white ovals represent the ROIs for numerical statistical comparisons of the z‐score amplitudes for Provoked and Chronic pain conditions.
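Measuring the peak amplitude and latency of an ROI signal within the 300–500‐ms window amounts to a bounded maximum search. The following sketch shows one way to do this on a synthetic time course; the function name `peak_in_window` and the toy signal are assumptions made for illustration.

```python
def peak_in_window(signal, sfreq_hz, epoch_start_s, win_start_s, win_end_s):
    """Return (peak amplitude, peak latency in s) within a time window.

    signal        : samples starting at epoch_start_s (e.g. -0.2 s)
    win_start_s/_end_s : inclusive window limits relative to stimulus onset
    """
    first = int(round((win_start_s - epoch_start_s) * sfreq_hz))
    last = int(round((win_end_s - epoch_start_s) * sfreq_hz))
    peak_index = max(range(first, last + 1), key=lambda i: signal[i])
    return signal[peak_index], epoch_start_s + peak_index / sfreq_hz

# Synthetic ROI signal from -200 ms to 1000 ms at 600 Hz (721 samples):
# a large early deflection at 100 ms and a smaller late one at 400 ms.
SFREQ_HZ = 600.0
EPOCH_START_S = -0.2
roi_signal = [0.0] * 721
roi_signal[180] = 10.0  # 100 ms, outside the analysis window
roi_signal[360] = 7.0   # 400 ms, inside the analysis window

amplitude, latency_s = peak_in_window(roi_signal, SFREQ_HZ, EPOCH_START_S, 0.3, 0.5)
print(amplitude, round(latency_s * 1000))
```

The early deflection is ignored because it falls outside the window, so the search returns the 400‐ms peak.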

RESULTS

Behavioral Pain Ratings

In the reanalysis of the pain ratings by 30 individuals in our previous study [Saarela et al., 2007], repeated‐measures ANOVA revealed main effects of both pain intensity and stimulus repetition on the pain ratings (repetition × intensity; P < 0.001 for intensity and P < 0.05 for repetition). The planned contrasts showed a 9% decrease of the pain ratings for Provoked pain faces from the first to the last photo of a patient (from 7.14 ± 0.16 for the first photo to 6.48 ± 0.17 for the last photo; P < 0.01, paired‐samples t‐test). The ratings of Chronic pain faces did not change from the first to the last photo (3.36 ± 0.15 for the first photo vs. 3.10 ± 0.15 for the last photo).

Spatial Distributions, Temporal Waveforms, and Latencies of the Responses

Figure 3 shows the spatial distribution of the observed responses in a representative subject. In the occipital sensors, strong transient responses peaked at 112 ms to all stimuli, with the maximum amplitude around 110 fT/cm (Fig. 3a). Additional transient responses occurred in the right temporo‐occipital sensors for all intact face stimuli (Provoked, Chronic, and Neutral faces), peaking at 147 ms with an amplitude of about 90 fT/cm (Fig. 3b) and in the left temporo‐occipital sensors at 143 ms with amplitude of about 85 fT/cm (Fig. 3c). Furthermore, a much slower deflection peaked around 300–500 ms in the temporal‐lobe sensors, in both right (Fig. 3d) and left hemisphere (Fig. 3e). These long‐latency responses were strongest for Provoked pain faces (represented by red curves in Fig. 3), with maximum amplitudes around 120 fT/cm. Responses in the central and frontal sensors were very weak for intact face photos.

The analysis windows for data across subjects were 90–120 ms for occipital visual responses, 140–160 and 140–170 ms for the temporo‐occipital “face responses” in the right and left hemisphere, respectively, and 300–500 ms for the late temporal‐lobe responses (see Fig. 4).

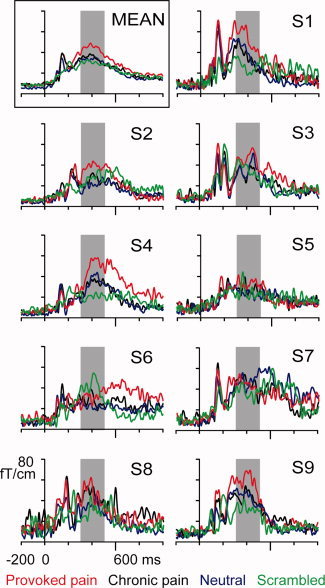

Figure 4.

Grand average (MEAN) and individual areal average responses (S1–S9) of the right temporal lobe during the first session. Red = Provoked pain face; black = Chronic pain face; blue = Neutral face; green = Scrambled face. The shadowed area indicates the 300–500‐ms time window used for response quantification.

Early Responses in Occipital and Temporo‐Occipital Regions

As expected, the response waveforms to Scrambled faces were clearly different from those to the other categories, sharing only the latency and form of the first visual responses (at 90–120 ms in the occipital cortices) with other stimulus categories (Fig. 3a). A small stimulus effect was observed in these occipital responses (stimulus × session, stimulus P < 0.05, repeated‐measures ANOVA; see amplitudes in Table I). The post hoc analysis showed that the responses to Neutral faces were 24% ± 7% stronger than the responses to Provoked or Chronic pain faces (Provoked vs. Neutral P < 0.05 and Chronic vs. Neutral P < 0.05, paired‐samples t‐tests).

Table I.

The amplitudes of the responses at five sensor locations: occipital cortex, bilateral temporo‐occipital and temporal regions

| Location and time window | Session 1: Chronic | Session 1: Provoked | Session 1: Neutral | Session 1: Scrambled | Session 2: Chronic | Session 2: Provoked | Session 2: Neutral | Session 2: Scrambled |
|---|---|---|---|---|---|---|---|---|
| Occipital 90–120 ms | 56 (9) | 53 (9) | 66 (10) | 51 (8) | 55 (9) | 52 (9) | 64 (8) | 53 (7) |
| Right temp‐occ 140–160 ms | 51 (7) | 54 (7) | 58 (7) | 31 (2) | 51 (8) | 55 (7) | 47 (7) | 32 (3) |
| Left temp‐occ 140–170 ms | 51 (8) | 49 (8) | 45 (7) | 38 (6) | 45 (8) | 48 (10) | 42 (8) | 37 (5) |
| Right temporal 300–500 ms | 35 (3) | 44 (3) | 33 (3) | 30 (2) | 31 (3) | 33 (2) | 29 (3) | 27 (1) |
| Left temporal 300–500 ms | 26 (2) | 29 (3) | 23 (2) | 24 (3) | 24 (2) | 23 (2) | 19 (2) | 23 (2) |

The mean (SEM) amplitudes across all nine subjects are given in femtoteslas per centimeter (fT/cm) for each stimulus condition, the two measurement sessions, and the left and right hemisphere (when applicable) at a given time window and sensor location.

In the right temporo‐occipital area, all intact face stimuli (Provoked pain, Chronic pain, and Neutral faces) elicited strong, transient responses at 140–160 ms, whereas Scrambled faces did not (Fig. 3b). The statistical tests confirmed the difference: the stimulus category showed a significant main effect (stimulus × session; stimulus P < 0.001; see Table I). The right‐hemisphere responses to intact faces were on average 70% ± 22% (mean ± SEM) stronger than the responses to scrambled images in the first measurement session (Scrambled vs. Chronic pain, P < 0.05; Scrambled vs. Provoked pain, P < 0.01; Scrambled vs. Neutral, P < 0.05; paired‐samples t‐tests). A similar stimulus effect was also observed for the left temporo‐occipital area at 140–170 ms (stimulus × session; stimulus P < 0.001), and post hoc tests confirmed that the difference originated from Scrambled vs. intact faces (Scrambled vs. Chronic, P < 0.01; Scrambled vs. Provoked, P < 0.05; Scrambled vs. Neutral, P < 0.01). No statistically significant differences were observed between any intact face categories (Provoked, Chronic, or Neutral face stimuli).

Effects of Stimulus, Hemisphere, and Session on Activity in Temporal Lobes

The strongest temporal‐lobe responses were elicited by Provoked pain faces. The three stimulus categories containing intact faces (Chronic pain, Provoked pain, and Neutral faces) produced long‐lasting, sustained responses peaking at 300–500 ms over the temporal lobes of both hemispheres, although stronger on the right (Fig. 3d,e). Figure 4 shows the areal mean responses of all nine subjects in the right hemisphere during the first measurement session, and the left top panel shows the across‐subjects mean response. In six of nine subjects, the responses were clearly stronger for Provoked pain (red curves) than for any other stimulus category.

In the statistical analysis (repeated‐measures ANOVA) of these temporal‐lobe responses, significant main effects were found for stimulus, session, and hemisphere (stimulus × session × hemisphere; P < 0.0001, P < 0.001, and P < 0.005, respectively; see Fig. 5 and Table I for details of response amplitudes). The strongest hemispheric difference appeared for the Provoked pain faces in the first measurement session: the sensor‐level responses in the right hemisphere were about 40% stronger than in the left (amplitudes in the right and left hemisphere were 44 ± 3 fT/cm and 29 ± 3 fT/cm, respectively). An interaction effect was also found between stimuli and hemisphere (stimulus × hemisphere, P < 0.01), indicating that the two hemispheres reacted differentially to the stimulus categories. Post hoc tests revealed that the right‐hemisphere responses were 30% ± 7% stronger for Provoked pain than Chronic pain faces (P < 0.005; see Fig. 4 and Table I). In contrast, the right‐hemisphere responses for Chronic pain versus Neutral faces did not differ (P = 0.4, n.s.).

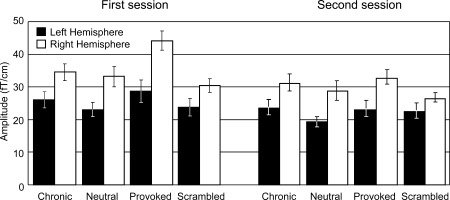

Figure 5.

Signal strengths (mean ± SEM) of temporal‐lobe responses to different stimulus categories at 300–500 ms. Left: first measurement session. Right: second measurement session.

Furthermore, the right‐hemisphere responses for Provoked pain decreased by 24% ± 4% in the second session compared with the first (P < 0.001; mean responses 44 ± 3 fT/cm in the first and 33 ± 2 fT/cm in the second session; see Fig. 5). No similar modulations were found for Chronic pain faces in the right hemisphere.

Cortical Sources of Temporal‐Lobe Responses

Figure 6 shows the statistical maps of the current estimates for the 400 ± 50‐ms time window for the whole cortex; the panels display both group‐mean data (top row) and the data for eight individuals for Provoked and Chronic pain faces, in both left and right hemisphere. These estimates suggest that the temporal‐lobe responses for Provoked pain faces arose from the right, middle STS region (Fig. 6, second column from right); no signals were systematically observed in the homologue area in the left hemisphere at the same threshold. For Chronic pain faces, some weak activity was observed in the right hemisphere and no activity in the left.

For the more detailed statistical analysis, the z‐score values of the temporally smoothed 300–500 ms responses, and their peak latencies were collected from the ROIs of the right and left STS (Fig. 6; see also details in Table II). Small main effects were found for both stimuli and hemispheres (stimulus × hemisphere; P < 0.01 for stimulus and P < 0.05 for hemisphere, repeated‐measures ANOVA). Responses to Provoked pain were 19% ± 9% stronger than responses to Chronic pain in the right STS area (P < 0.05, paired‐samples t‐test; see Table II). Similarly, responses to Provoked and Chronic pain were 68% ± 14% and 39% ± 10% stronger in the right than left hemisphere, respectively (P < 0.005 and P < 0.01).

Table II.

The individual z‐scores and peak latencies of the left and right‐hemisphere ROIs

| Subject | Left, Chronic: z‐score | Left, Chronic: latency (ms) | Left, Provoked: z‐score | Left, Provoked: latency (ms) | Right, Chronic: z‐score | Right, Chronic: latency (ms) | Right, Provoked: z‐score | Right, Provoked: latency (ms) |
|---|---|---|---|---|---|---|---|---|
| 1 | 6.77 | 303 | 6.81 | 300 | 12.64 | 327 | 15.27 | 332 |
| 2 | 5.93 | 418 | 6.78 | 486 | 6.45 | 405 | 9.34 | 398 |
| 3 | 6.63 | 347 | 4.84 | 317 | 6.52 | 317 | 10.38 | 425 |
| 4 | 6.14 | 400 | 7.33 | 355 | 8.98 | 450 | 10.98 | 428 |
| 5 | 5.38 | 451 | 4.55 | 484 | 7.53 | 479 | 6.25 | 439 |
| 6 | 4.97 | 308 | 3.86 | 342 | 7.65 | 400 | 7.70 | 383 |
| 7 | 6.98 | 372 | 6.69 | 347 | 9.10 | 415 | 9.36 | 370 |
| 8 | 3.81 | 356 | 4.78 | 321 | 5.46 | 389 | 6.58 | 403 |

Response strengths of individual subjects in the ROIs of left and right hemispheres are the z‐score values from the dynamical statistical parametric maps. The latencies are given in milliseconds.
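The group‐level percentages and the post hoc comparison reported for the STS ROIs can be approximately recomputed from the individual z‐scores in Table II. The sketch below assumes that each percentage is the mean (± SEM) of per‐subject ratios and that the post hoc test is an ordinary paired‐samples t‐test on the z‐scores; both are interpretations, not details confirmed by the text, and small deviations from the reported values can arise from rounding in the table.

```python
import math

# Z-scores copied from Table II (subjects 1-8).
LEFT_CHRONIC = [6.77, 5.93, 6.63, 6.14, 5.38, 4.97, 6.98, 3.81]
LEFT_PROVOKED = [6.81, 6.78, 4.84, 7.33, 4.55, 3.86, 6.69, 4.78]
RIGHT_CHRONIC = [12.64, 6.45, 6.52, 8.98, 7.53, 7.65, 9.10, 5.46]
RIGHT_PROVOKED = [15.27, 9.34, 10.38, 10.98, 6.25, 7.70, 9.36, 6.58]

def mean(values):
    return sum(values) / len(values)

def sem(values):
    """Standard error of the mean, using the sample (n - 1) variance."""
    m = mean(values)
    variance = sum((v - m) ** 2 for v in values) / (len(values) - 1)
    return math.sqrt(variance / len(values))

def percent_stronger(a, b):
    """Mean and SEM of the per-subject percentage by which a exceeds b.

    ASSUMPTION: the paper's percentages are means of individual ratios.
    """
    percentages = [100.0 * (x / y - 1.0) for x, y in zip(a, b)]
    return mean(percentages), sem(percentages)

def paired_t(a, b):
    """Paired-samples t statistic and its degrees of freedom."""
    differences = [x - y for x, y in zip(a, b)]
    return mean(differences) / sem(differences), len(differences) - 1

prov_vs_chron_right = percent_stronger(RIGHT_PROVOKED, RIGHT_CHRONIC)
right_vs_left_prov = percent_stronger(RIGHT_PROVOKED, LEFT_PROVOKED)
right_vs_left_chron = percent_stronger(RIGHT_CHRONIC, LEFT_CHRONIC)
t_value, dof = paired_t(RIGHT_PROVOKED, RIGHT_CHRONIC)

print(prov_vs_chron_right, right_vs_left_prov, right_vs_left_chron)
print(t_value, dof)
```

With 7 degrees of freedom, the two‐tailed 5% critical value of t is about 2.36, so a t value above that threshold agrees with a significance level of P < 0.05 for the right‐STS comparison.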

DISCUSSION

Early Visual Responses

As expected, all intact faces elicited significantly stronger responses than Scrambled faces at 140–160/170 ms in the temporo‐occipital area. We found no systematic differences at this latency between responses to the Provoked pain, Chronic pain, or Neutral faces, although some studies have suggested enhanced electric N170 responses for fearful faces [Batty and Taylor, 2003] or enhanced responses in the fusiform cortex to facial emotion expressions [e.g. Breiter et al., 1996; Ganel et al., 2005; Morris et al., 1998; Vuilleumier et al., 2001]. However, the temporo‐occipital brain regions continue to process information at longer latencies, and the fMRI results could therefore reflect these later processes [e.g. Allison et al., 1999; Furey et al., 2006].

Single‐cell recordings of human medial frontal cortex during brain surgery have indicated responses to facial expression of fear already at 120 ms [Kawasaki et al., 2001]. Moreover, a similar emotion‐sensitive response, specifically to an expression of fear, appears around 120 ms both at posterior and fronto‐central EEG scalp electrodes [Eimer and Holmes, 2002, 2007; Pourtois et al., 2005]. Early responses to sad and happy facial expressions have also been associated with activation of the dorsal medial frontal cortex [Seitz et al., 2008].

In our study, the mid‐occipital responses at 100 ms were stronger for Neutral faces than for Chronic or Provoked pain faces. Although these responses can be modulated by low‐level attributes such as luminance and spatial frequency, the mean luminance of all stimulus categories in this study was equal, and systematic differences in spatial frequency were unlikely. However, Neutral and Provoked/Chronic pain faces differed in contrast (as is evident from Fig. 1), which can explain why the 100‐ms mid‐occipital responses were strongest to the Neutral faces [see e.g. Gardner et al., 2005; Tanskanen et al., 2005]. Moreover, because the Neutral faces depicted different persons than the pain faces, we will not discuss the differences in the mid‐occipital responses further. Importantly, the higher visual areas are less sensitive to contrast [Avidan et al., 2002], and the main effects between the Chronic and Provoked pain stimuli were found at locations and latencies that are not sensitive to low‐level attributes [Rolls, 2007; Rolls et al., 1987; Wallis and Rolls, 1997].

Modulation of STS Responses by the Intensity of Pain Expression and Stimulus Repetition

Here, we demonstrated that the middle STS responds more strongly to facial expressions of Provoked than Chronic pain; the main responses peaked at 300–500 ms with right‐hemisphere dominance. Given the rather long latency of these STS responses, the observed modulation most likely reflects influences from other brain regions. Previously, the middle STS has been shown to respond to observed lip reading [Calvert et al., 1997], yawning [Schürmann et al., 2005], lip forms [Nishitani and Hari, 2002], and hand actions [e.g. Grezes et al., 1999; Nishitani and Hari, 2000; Rizzolatti et al., 1996]. Also, single‐cell recordings in monkeys [Hasselmo et al., 1989] and humans [Ojemann et al., 1992] have revealed STS neuronal populations that respond specifically to facial expressions.

Nearby regions in the human STS are activated by other social stimuli. For example, the posterior STS responds to visual and auditory biological motion, such as body movement observed from point‐light walkers [Bonda et al., 1996; Grossman et al., 2000], walking mannequins [Thompson et al., 2005], or animated walking humans [Pelphrey et al., 2003]. This area is also activated when the subject is listening to sounds of footsteps [Bidet‐Caulet et al., 2005; Saarela and Hari, 2008]. In contrast, the rostrally adjacent area along the sulcus reacts to eye gaze and mouth opening [Hoffman and Haxby, 2000; Puce et al., 1998; Wicker et al., 1998].

In fMRI recordings, a bilateral STS response has been associated with observation of video clips of acted pain [Simon et al., 2006] but not with observation of static pain expressions of true pain patients [Botvinick et al., 2005; Saarela et al., 2007]. This difference in STS reactivity might reflect sensitivity of the middle STS to mouth movements, which were visible in the video clips [Simon et al., 2006] but not in still images [Botvinick et al., 2005; Saarela et al., 2007]. Accordingly, the STS response profile during observation of static pain expressions might be better captured with time‐sensitive electrophysiological methods than with the sluggish hemodynamic measures. In fact, we showed here that the STS responses to still photos of pain expressions decrease quickly, whereas a changing (e.g. moving) stimulus may elicit more persistent STS responses [Simon et al., 2006]. This interpretation is in line with the recently proposed role of STS in analyzing temporally varying visual and auditory stimuli for their communicative value [Redcay, 2008].

In our experiment, the right‐hemisphere STS responses to Provoked pain expressions decreased from the first to the second session by a quarter, whereas no dampening was observed for Chronic pain faces. This decrease of the responses is in accordance with the long recovery cycle of cortical responses to real physical pain [Raij et al., 2003], compared with, for example, responses to innocuous touch in the primary somatosensory cortex [Hari and Forss, 1999] or responses to visual stimuli in the occipital cortices [Uusitalo et al., 1996]. Similarly, the Provoked pain expressions were rated as less intense after repeated exposure. This decrease of both subjective pain ratings and the strength of brain responses after several exposures to Provoked pain expressions agrees with the finding that healthcare professionals, who are repeatedly exposed to strong expressions of pain by strangers, attribute less pain to facial expressions of pain than do nonprofessionals [Kappesser and Williams, 2002]. Generally, the decrease of brain responses with repeated exposure to others' intense facial expressions could diminish the evoked affective load and thereby save the observer's resources; this view is supported by a recent finding that MEG responses to fearful faces at 300 ms also decrease from the first to the second presentation [Morel et al., 2009].

Similarities Between Brain Correlates for Facial Expressions of Pain and Emotion

Emotionally salient stimuli have been proposed to capture attention reflexively due to their natural value for survival [Schupp et al., 2003], and the STS responses in our study could have been affected by similar attentional processes—especially since attention to facially expressed emotion is known to enhance the fMRI response of the right STS [Narumoto et al., 2001; Pessoa et al., 2002; Winston et al., 2003].

The basic facial expressions of emotion do not include pain [Ekman et al., 1969], and the pain expression has recently been found to be more arousing and unpleasant than the emotion expressions [Simon et al., 2008]. Even so, the expression of pain also has much in common with emotions. For example, the experience of pain connects to a distinct facial expression, which communicates the internal state of the subject and is recognizable in most conspecifics [Prkachin et al., 2004; Williams, 2002]. Pain is also a highly affective experience in the same way as the emotions are. Moreover, according to our results, the expression of pain observed from another's face is processed at similar latencies and in similar cortical regions as have previously been reported for the facial expressions of emotions.

The middle STS, here found to respond most strongly to Provoked pain faces, also responds to all basic emotional facial expressions with a right‐hemisphere dominance [e.g. Engell and Haxby, 2007; Furl et al., 2007; Phillips et al., 1997; Vuilleumier et al., 2003; Winston et al., 2004]. Additionally, evoked scalp potentials have been observed at similar latencies around 300 ms for faces expressing happiness, surprise, fear, and disgust [Ashley et al., 2004; Carretie and Iglesias, 1995; Krolak‐Salmon et al., 2001]. In one of these studies [Krolak‐Salmon et al., 2001], the responses were similar for all emotions at 250–550 ms, but differed at 550–750 ms.

The middle STS responses to Provoked pain faces in our study were strongly right‐hemisphere dominant, suggesting hemispheric differentiation in processing of pain faces. Previously, right‐hemisphere dominance has been observed during self‐experienced pain [e.g. Hari et al., 1997; Hsieh et al., 1996; Ostrowsky et al., 2002; Symonds et al., 2006]. Right and left hemispheres have also been suggested to have different effects on processing of the valence of emotions [for a review, see Killgore and Yurgelun‐Todd, 2007]. Specifically, the right hemisphere has been suggested to allocate attention to the pain experience [Hsieh et al., 1996] and to have an important role in urgent and threatening situations [e.g. Adolphs et al., 1996; Davidson, 1992; Van Strien and Morpurgo, 1992]. Right‐hemisphere dominance has been more often associated with negative emotional facial expressions, such as fear [Krolak‐Salmon et al., 2001], and the facial expression of pain seems to communicate a similarly urgent situation of a conspecific as does the fear expression. In addition, facial pain expressions are perceived as more arousing and unpleasant than any emotional faces of similar intensity [Simon et al., 2008].

Differences Between Neuromagnetic and Hemodynamic Responses for Pain Expressions

The transfer of an affective experience of pain from one person to another has hitherto been studied mainly by means of fMRI measurements, in which visual or other cues of a conspecific's pain have activated the ACC and AI regions in the observer's brain, closely resembling the activation during self‐experienced pain. Thus, understanding others' pain experience seems to rely on similar experiences of the observer [for a review, see Hein and Singer, 2008]. Unfortunately, not much is yet known about the time course of brain responses to others' pain, because the hemodynamic measures have poor temporal acuity.

In our previous fMRI study, ACC and AI were more strongly activated during observation of Provoked than Chronic pain faces [Saarela et al., 2007], whereas no such signals were seen in the present study. Besides differences between the fMRI and MEG methods, slightly different tasks were given to the subjects in these two studies, which could explain a part of the differences, because the face‐sensitive cortical processes after 200 ms are known to be modulated by the subject's task [Furey et al., 2006; Lueschow et al., 2004]. In our fMRI study, the subjects were instructed to view all stimuli attentively to be able to answer questions about the stimuli afterwards, whereas here we merely instructed the subjects to view all stimuli attentively but did not ask them to memorize any aspect of the stimuli.

An even more important reason for the differences between the previous fMRI study and the current MEG study is that activity in ACC and AI is very difficult to detect in MEG recordings because (1) both sources are deep (leading to suppression of the MEG signal with respect to a more superficial source of equal strength), (2) ACC is symmetric (leading to MEG signal cancellation because of opposite currents in nearby cortical walls), and (3) some currents in the insula are radial with respect to the skull surface and thus poorly visible in MEG [Hämäläinen et al., 1993]. For example, when the same laser‐heat pain stimulus was used in both fMRI and MEG settings, the AI activation was evident in the fMRI measurements [Raij et al., 2005], but the MEG responses of the lateral cortex were adequately explained by activation of the second somatosensory cortex [Forss et al., 2005]. Indeed, according to our simulation using the anatomy of one subject, the source current in AI should be three to four times stronger than that in STS to produce an MEG response of the same magnitude.

CONCLUDING REMARKS

In line with our hypothesis that viewing Provoked pain faces would recruit brain areas related to processing of observed emotional faces or affective pain, we found that responses in the middle STS, peaking at 300–500 ms after stimulus onset, were stronger for Provoked than Chronic pain faces. These results resemble previous findings on facial expression of emotion in latency and, to some extent, location. In addition, the decrease of the responses to Provoked pain expressions from the first to the second measurement session could reflect an ecologically valid mechanism that protects the observer against a prolonged affective load.

Acknowledgements

We thank Matti Hämäläinen for providing the software for cortically constrained current estimates, Jan Kujala for Matlab scripts and assistance, and Nina Forss for comments on the manuscript. For stimulus production, we thank Eija Kalso, Yevhen Hlushchuk, Martin Schürmann, Amanda C. de C. Williams, the Helsinki Medical Students' Theatre Company, and the patients of the Department of Anesthesia and Intensive Care Medicine, Helsinki University Central Hospital.

REFERENCES

- Adolphs R, Damasio H, Tranel D, Damasio AR (1996): Cortical systems for the recognition of emotion in facial expressions. J Neurosci 16: 7678–7687.

- Allison T, Ginter H, McCarthy G, Nobre AC, Puce A, Luby M, Spencer DD (1994): Face recognition in human extrastriate cortex. J Neurophysiol 71: 821–825.

- Allison T, Puce A, Spencer DD, McCarthy G (1999): Electrophysiological studies of human face perception. I. Potentials generated in occipitotemporal cortex by face and non‐face stimuli. Cereb Cortex 9: 415–430.

- Ashley V, Vuilleumier P, Swick D (2004): Time course and specificity of event‐related potentials to emotional expressions. Neuroreport 15: 211–216.

- Avidan G, Harel M, Hendler T, Ben‐Bashat D, Zohary E, Malach R (2002): Contrast sensitivity in human visual areas and its relationship to object recognition. J Neurophysiol 87: 3102–3116.

- Avikainen S, Forss N, Hari R (2002): Modulated activation of the human SI and SII cortices during observation of hand actions. Neuroimage 15: 640–646.

- Avikainen S, Liuhanen S, Schürmann M, Hari R (2003): Enhanced extrastriate activation during observation of distorted finger postures. J Cogn Neurosci 15: 658–663.

- Batty M, Taylor MJ (2003): Early processing of the six basic facial emotional expressions. Brain Res Cogn Brain Res 17: 613–620.

- Bidet‐Caulet A, Voisin J, Bertrand O, Fonlupt P (2005): Listening to a walking human activates the temporal biological motion area. Neuroimage 28: 132–139.

- Bonda E, Petrides M, Ostry D, Evans A (1996): Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J Neurosci 16: 3737–3744.

- Botvinick M, Jha AP, Bylsma LM, Fabian SA, Solomon PE, Prkachin KM (2005): Viewing facial expressions of pain engages cortical areas involved in the direct experience of pain. Neuroimage 25: 312–319.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996): Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17: 875–887.

- Calvert GA, Bullmore ET, Brammer MJ, Campbell R, Williams SC, McGuire PK, Woodruff PW, Iversen SD, David AS (1997): Activation of auditory cortex during silent lipreading. Science 276: 593–596.

- Carretie L, Iglesias J (1995): An ERP study on the specificity of facial expression processing. Int J Psychophysiol 19: 183–192.

- Dale AM, Fischl B, Sereno MI (1999): Cortical surface‐based analysis. I. Segmentation and surface reconstruction. Neuroimage 9: 179–194.

- Dale AM, Liu AK, Fischl BR, Buckner RL, Belliveau JW, Lewine JD, Halgren E (2000): Dynamic statistical parametric mapping: Combining fMRI and MEG for high‐resolution imaging of cortical activity. Neuron 26: 55–67.

- Davidson RJ (1992): Anterior cerebral asymmetry and the nature of emotion. Brain Cogn 20: 125–151.

- Eimer M, Holmes A (2002): An ERP study on the time course of emotional face processing. Neuroreport 13: 427–431.

- Eimer M, Holmes A (2007): Event‐related brain potential correlates of emotional face processing. Neuropsychologia 45: 15–31.

- Ekman P, Sorenson ER, Friesen WV (1969): Pan‐cultural elements in facial displays of emotion. Science 164: 86–88.

- Engell AD, Haxby JV (2007): Facial expression and gaze‐direction in human superior temporal sulcus. Neuropsychologia 45: 3234–3241.

- Fischl B, Sereno MI, Dale AM (1999): Cortical surface‐based analysis. II. Inflation, flattening, and a surface‐based coordinate system. Neuroimage 9: 195–207.

- Forss N, Raij TT, Seppä M, Hari R (2005): Common cortical network for first and second pain. Neuroimage 24: 132–142.

- Frith CD, Frith U (2006): The neural basis of mentalizing. Neuron 50: 531–534.

- Frot M, Mauguiere F (2003): Dual representation of pain in the operculo‐insular cortex in humans. Brain 126 (Pt 2): 438–450.

- Furey ML, Tanskanen T, Beauchamp MS, Avikainen S, Uutela K, Hari R, Haxby JV (2006): Dissociation of face‐selective cortical responses by attention. Proc Natl Acad Sci USA 103: 1065–1070.

- Furl N, van Rijsbergen NJ, Treves A, Friston KJ, Dolan RJ (2007): Experience‐dependent coding of facial expression in superior temporal sulcus. Proc Natl Acad Sci USA 104: 13485–13489.

- Ganel T, Valyear KF, Goshen‐Gottstein Y, Goodale MA (2005): The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43: 1645–1654.

- Gardner JL, Sun P, Waggoner RA, Ueno K, Tanaka K, Cheng K (2005): Contrast adaptation and representation in human early visual cortex. Neuron 47: 607–620.

- Grezes J, Costes N, Decety J (1999): The effects of learning and intention on the neural network involved in the perception of meaningless actions. Brain 122 (Pt 10): 1875–1887.

- Grossman E, Donnelly M, Price R, Pickens D, Morgan V, Neighbor G, Blake R (2000): Brain areas involved in perception of biological motion. J Cogn Neurosci 12: 711–720.

- Hari R (1990): The neuromagnetic method in the study of the human auditory cortex. In: Grandori F, Hoke M, Romani GL, editors. Auditory Evoked Magnetic Fields and Electric Potentials. Basel: Karger; pp 222–282.

- Hari R, Forss N (1999): Magnetoencephalography in the study of human somatosensory cortical processing. Philos Trans R Soc Lond B Biol Sci 354: 1145–1154.

- Hari R, Kujala MV (2009): Brain basis of human social interaction: From concepts to brain imaging. Physiol Rev 89: 453–479.

- Hari R, Portin K, Kettenmann B, Jousmäki V, Kobal G (1997): Right‐hemisphere preponderance of responses to painful CO2 stimulation of the human nasal mucosa. Pain 72: 145–151.

- Hasselmo ME, Rolls ET, Baylis GC (1989): The role of expression and identity in the face‐selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res 32: 203–218.

- Hein G, Singer T (2008): I feel how you feel but not always: The empathic brain and its modulation. Curr Opin Neurobiol 18: 153–158.

- Hill ML, Craig KD (2004): Detecting deception in facial expressions of pain: Accuracy and training. Clin J Pain 20: 415–422.

- Hoffman EA, Haxby JV (2000): Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci 3: 80–84.

- Hsieh JC, Hannerz J, Ingvar M (1996): Right‐lateralised central processing for pain of nitroglycerin‐induced cluster headache. Pain 67: 59–68.

- Hämäläinen M, Hari R, Ilmoniemi R, Knuutila J, Lounasmaa OV (1993): Magnetoencephalography‐theory, instrumentation, and applications to noninvasive studies of the working brain. Rev Mod Phys 65: 413–497.

- Jackson PL, Meltzoff AN, Decety J (2005): How do we perceive the pain of others? A window into the neural processes involved in empathy. Neuroimage 24: 771–779.

- Kappesser J, Williams AC (2002): Pain and negative emotions in the face: Judgements by health care professionals. Pain 99: 197–206.

- Kawasaki H, Kaufman O, Damasio H, Damasio AR, Granner M, Bakken H, Hori T, Howard MA III, Adolphs R (2001): Single‐neuron responses to emotional visual stimuli recorded in human ventral prefrontal cortex. Nat Neurosci 4: 15–16.

- Keysers C, Wicker B, Gazzola V, Anton JL, Fogassi L, Gallese V (2004): A touching sight: SII/PV activation during the observation and experience of touch. Neuron 42: 335–346.

- Killgore WDS, Yurgelun‐Todd DA (2007): The right‐hemisphere and valence hypotheses: Could they both be right (and sometimes left)? SCAN 2: 240–250.

- Krolak‐Salmon P, Fischer C, Vighetto A, Mauguiere F (2001): Processing of facial emotional expression: Spatio‐temporal data as assessed by scalp event‐related potentials. Eur J Neurosci 13: 987–994.

- Krolak‐Salmon P, Henaff MA, Isnard J, Tallon‐Baudry C, Guenot M, Vighetto A, Bertrand O, Mauguiere F (2003): An attention modulated response to disgust in human ventral anterior insula. Ann Neurol 53: 446–453.

- Lin FH, Belliveau JW, Dale AM, Hämäläinen MS (2006): Distributed current estimates using cortical orientation constraints. Hum Brain Mapp 27: 1–13.

- Liu J, Harris A, Kanwisher N (2002): Stages of processing in face perception: An MEG study. Nat Neurosci 5: 910–916.

- Lueschow A, Sander T, Boehm SG, Nolte G, Trahms L, Curio G (2004): Looking for faces: Attention modulates early occipitotemporal object processing. Psychophysiology 41: 350–360.

- Morel S, Ponz A, Mercier M, Vuilleumier P, George N (2009): EEG‐MEG evidence for early differential repetition effects for fearful, happy and neutral faces. Brain Res 1254: 84–98.

- Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, Dolan RJ (1998): A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121 (Pt 1): 47–57.

- Morrison I, Lloyd D, di Pellegrino G, Roberts N (2004): Vicarious responses to pain in anterior cingulate cortex: Is empathy a multisensory issue? Cogn Affect Behav Neurosci 4: 270–278.

- Narumoto J, Okada T, Sadato N, Fukui K, Yonekura Y (2001): Attention to emotion modulates fMRI activity in human right superior temporal sulcus. Brain Res Cogn Brain Res 12: 225–231.

- Nishitani N, Hari R (2000): Temporal dynamics of cortical representation for action. Proc Natl Acad Sci USA 97: 913–918.

- Nishitani N, Hari R (2002): Viewing lip forms: Cortical dynamics. Neuron 36: 1211–1220.

- Ojemann JG, Ojemann GA, Lettich E (1992): Neuronal activity related to faces and matching in human right nondominant temporal cortex. Brain 115 (Pt 1): 1–13.

- Okada YC, Wu J, Kyuhou S (1997): Genesis of MEG signals in a mammalian CNS structure. Electroencephalogr Clin Neurophysiol 103: 474–485.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Ostrowsky K, Magnin M, Ryvlin P, Isnard J, Guenot M, Mauguiere F (2002): Representation of pain and somatic sensation in the human insula: A study of responses to direct electrical cortical stimulation. Cereb Cortex 12: 376–385.

- Paller KA, Ranganath C, Gonsalves B, LaBar KS, Parrish TB, Gitelman DR, Mesulam MM, Reber PJ (2003): Neural correlates of person recognition. Learn Mem 10: 253–260.

- Pelphrey KA, Mitchell TV, McKeown MJ, Goldstein J, Allison T, McCarthy G (2003): Brain activity evoked by the perception of human walking: Controlling for meaningful coherent motion. J Neurosci 23: 6819–6825.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG (2002): Neural processing of emotional faces requires attention. Proc Natl Acad Sci USA 99: 11458–11463.

- Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SC, Gray JA, David AS (1997): A specific neural substrate for perceiving facial expressions of disgust. Nature 389: 495–498.

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P (2005): Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: Time course and topographic evoked‐potentials mapping. Hum Brain Mapp 26: 65–79.

- Prkachin KM, Mass H, Mercer SR (2004): Effects of exposure on perception of pain expression. Pain 111: 8–12.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G (1998): Temporal cortex activation in humans viewing eye and mouth movements. J Neurosci 18: 2188–2199.

- Raij TT, Numminen J, Närvänen S, Hiltunen J, Hari R (2005): Brain correlates of subjective reality of physically and psychologically induced pain. Proc Natl Acad Sci USA 102: 2147–2151.

- Raij TT, Vartiainen NV, Jousmäki V, Hari R (2003): Effects of interstimulus interval on cortical responses to painful laser stimulation. J Clin Neurophysiol 20: 73–79.

- Rainville P (2002): Brain mechanisms of pain affect and pain modulation. Curr Opin Neurobiol 12: 195–204.

- Redcay E (2008): The superior temporal sulcus performs a common function for social and speech perception: Implications for the emergence of autism. Neurosci Biobehav Rev 32: 123–142.

- Rizzolatti G, Fadiga L, Matelli M, Bettinardi V, Paulesu E, Perani D, Fazio F (1996): Localization of grasp representations in humans by PET. I. Observation versus execution. Exp Brain Res 111: 246–252.

- Rizzolatti G, Fogassi L, Gallese V (2001): Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci 2: 661–670.

- Rolls ET (2007): The representation of information about faces in the temporal and frontal lobes. Neuropsychologia 45: 124–143.

- Rolls ET, Baylis GC, Hasselmo ME (1987): The responses of neurons in the cortex in the superior temporal sulcus of the monkey to band‐pass spatial frequency filtered faces. Vision Res 27: 311–326.

- Saarela MV, Hari R (2008): Listening to humans walking together activates the social brain circuitry. Soc Neurosci 3: 401–409.

- Saarela MV, Hlushchuk Y, Williams AC, Schürmann M, Kalso E, Hari R (2007): The compassionate brain: Humans detect intensity of pain from another's face. Cereb Cortex 17: 230–237.

- Schupp HT, Junghofer M, Weike AI, Hamm AO (2003): Emotional facilitation of sensory processing in the visual cortex. Psychol Sci 14: 7–13.

- Schürmann M, Hesse MD, Stephan KE, Saarela M, Zilles K, Hari R, Fink GR (2005): Yearning to yawn: The neural basis of contagious yawning. Neuroimage 24: 1260–1264.

- Seitz RJ, Schafer R, Scherfeld D, Friederichs S, Popp K, Wittsack HJ, Azari NP, Franz M (2008): Valuating other people's emotional face expression: A combined functional magnetic resonance imaging and electroencephalography study. Neuroscience 152: 713–722.

- Simon D, Craig KD, Miltner WH, Rainville P (2006): Brain responses to dynamic facial expressions of pain. Pain 126: 309–318.

- Simon D, Craig KD, Gosselin F, Belin P, Rainville P (2008): Recognition and discrimination of prototypical dynamic expressions of pain and emotions. Pain 135: 55–64.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD (2004): Empathy for pain involves the affective but not sensory components of pain. Science 303: 1157–1162.

- Sugase Y, Yamane S, Ueno S, Kawano K (1999): Global and fine information coded by single neurons in the temporal visual cortex. Nature 400: 869–873.

- Symonds LL, Gordon NS, Bixby JC, Mande MM (2006): Right‐lateralized pain processing in the human cortex: An FMRI study. J Neurophysiol 95: 3823–3830.

- Tanskanen T, Näsänen R, Montez T, Päällysaho J, Hari R (2005): Face recognition and cortical responses show similar sensitivity to noise spatial frequency. Cereb Cortex 15: 526–534.

- Tanskanen T, Näsänen R, Ojanpää H, Hari R (2007): Face recognition and cortical responses: Effect of stimulus duration. Neuroimage 35: 1636–1644.

- Taulu S, Kajola M, Simola J (2004): Suppression of interference and artifacts by the Signal Space Separation method. Brain Topogr 16: 269–275.

- Thompson JC, Clarke M, Stewart T, Puce A (2005): Configural processing of biological motion in human superior temporal sulcus. J Neurosci 25: 9059–9066.

- Uusitalo MA, Williamson SJ, Seppä MT (1996): Dynamical organisation of the human visual system revealed by lifetimes of activation traces. Neurosci Lett 213: 149–152.

- Uusvuori J, Parviainen T, Inkinen M, Salmelin R (2008): Spatiotemporal interaction between sound form and meaning during spoken word perception. Cereb Cortex 18: 456–466.

- Wallis G, Rolls ET (1997): Invariant face and object recognition in the visual system. Prog Neurobiol 51: 167–194.

- Van Strien JW, Morpurgo M (1992): Opposite hemispheric activations as a result of emotionally threatening and non‐threatening words. Neuropsychologia 30: 845–848.

- Wicker B, Michel F, Henaff MA, Decety J (1998): Brain regions involved in the perception of gaze: A PET study. Neuroimage 8: 221–227.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G (2003): Both of us disgusted in my insula: The common neural basis of seeing and feeling disgust. Neuron 40: 655–664.

- Williams AC (2002): Facial expression of pain: An evolutionary account. Behav Brain Sci 25: 439–455; discussion 455–488.

- Winston JS, O'Doherty J, Dolan RJ (2003): Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage 20: 84–97.

- Winston JS, Henson RN, Fine‐Goulden MR, Dolan RJ (2004): fMRI‐adaptation reveals dissociable neural representations of identity and expression in face perception. J Neurophysiol 92: 1830–1839.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2001): Effects of attention and emotion on face processing in the human brain: An event‐related fMRI study. Neuron 30: 829–841.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2003): Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci 6: 624–631.