Abstract

The present magnetoencephalography (MEG) study tested the hypothesis of a phase synchronization (functional coupling) of cortical alpha rhythms (about 6–12 Hz) within a “speech” cortical neural network comprising bilateral primary auditory cortex and Wernicke's area, during dichotic listening (DL) of consonant‐vowel (CV) syllables. Dichotic stimulation was done with the CV‐syllable pairs /da/‐/ba/ (true DL, yielded by stimuli having high spectral overlap) and /da/‐/ka/ (sham DL, obtained with stimuli having poor spectral overlap). Whole‐head MEG activity (165 sensors) was recorded from 10 healthy right‐handed non‐musicians showing right ear advantage in a speech DL task. Functional coupling of alpha rhythms was defined as the spectral coherence at the following bands: alpha 1 (about 6–8 Hz), alpha 2 (about 8–10 Hz), and alpha 3 (about 10–12 Hz), defined with respect to the peak of individual alpha frequency. Results showed an inverse pattern of functional coupling: during DL of speech sounds, spectral coherence of the high‐band alpha rhythms increased between left auditory and Wernicke's areas with respect to sham DL, whereas it decreased between left and right auditory areas. The increase of functional coupling within the left hemisphere would underlie the processing of the syllable presented to the right ear, which arrives at the left auditory cortex without the interference of the other syllable presented to the left ear. Conversely, the decrease of inter‐hemispheric coupling of the high‐band alpha might be due to the fact that the two auditory cortices do not receive the same information from the ears during DL. These results suggest that functional coupling of alpha rhythms can constitute a neural substrate for the lateralization of auditory stimuli during DL. Hum Brain Mapp 2008. © 2007 Wiley‐Liss, Inc.

Keywords: dichotic listening, consonant‐vowel (CV)‐syllables, magnetoencephalography (MEG), spectral coherence, functional coupling, primary auditory cortex, Wernicke's area

INTRODUCTION

Dichotic listening is broadly used in measuring cerebral lateralization of perceptual and cognitive functions in both clinical and experimental practice. It consists in the simultaneous presentation of two different auditory stimuli to either ear [Bryden, 1988; Hugdahl, 2000; Tervaniemi and Hugdahl, 2003]. Subjects with left‐hemispheric language lateralization are faster and more accurate in reporting dichotic verbal items presented at the right compared to left ear [Kimura, 1961; Studdert‐Kennedy and Shankweiler, 1970], while they exhibit left ear advantage for tasks involving the recognition of complex tones, music or environmental sounds [Boucher and Bryden, 1997; Brancucci and San Martini, 1999, 2003; Brancucci et al., 2005a; Kallman and Corballis, 1975]. Functional neuroimaging studies of regional cerebral blood flow have elucidated fine spatial details of brain structures involved in DL such as bilateral primary auditory areas [Hugdahl et al., 1999, 2000; Jancke and Shah, 2002; Jancke et al., 2003; Lipschutz et al., 2002], orbitofrontal and hippocampal paralimbic belts [Pollmann et al., 2004], prefrontal cortex [Hugdahl et al., 2003a; Lipschutz et al., 2002; Thomsen et al., 2004], and splenium of the corpus callosum [Plessen et al., 2007; Pollmann et al., 2002; Westerhausen et al., 2006].

It has been shown that “true” DL occurs when the stimuli constituting the dichotic pair have high overlap in spectral contents. This claim is based on both behavioral and physiological evidence. Springer et al. [1978] have shown that while report of consonant‐vowel syllables presented to the left ear during dichotic testing was at chance, report of left ear digits under the same conditions was greater than 80% in four out of five split‐brain patients. As the acoustic overlap is greater between consonant‐vowel syllables than between digits, they concluded that the availability of information from the ipsilateral auditory pathway is a function of the spectral acoustic overlap between competing dichotic stimuli. This indicates that noncompeting pairs (digits in that study) do not make up true DL stimuli. Sidtis [1981] demonstrated a nearly threefold difference in the magnitude of the laterality measure when dichotic tones with similar vs. different fundamental frequencies were employed. The musical fifth interval (low spectral overlap) yielded minimal laterality effects, whereas intervals of a second, a minor third, or an octave (higher spectral overlap) yielded maximal laterality effects. More recently using MEG during DL of non‐verbal stimuli, we demonstrated that, according to Kimura [1967], the neurophysiological interactions that allow the lateralization of the dichotic input were especially evident when the dichotic pair is composed by complex tones having similar fundamental frequency and spectral content [Brancucci et al., 2004]. The same is true when the dichotic pair was composed by consonant‐vowel syllables (CV‐syllables) having high spectral overlap [Della Penna et al., in press]. On the whole, it can be argued that when the dichotic pair is composed by stimuli having scarce spectral overlap, they reach both auditory cortices without significant loss of information. 
Conversely, when the dichotic pair is composed of stimuli having high spectral overlap, they compete to reach the cortical level, owing to the organization of the cortex, which is based on spectral cues, i.e. tonotopy [Bilecen et al., 1998; Formisano et al., 2003; Romani et al., 1982]. It can be speculated that this competition actually favors stimulus lateralization beyond the fact that the input to each ear is generally better represented in the contralateral auditory cortex [Ackermann et al., 2001; Eichele et al., 2005; Makela, 1988].

At the present stage of research, an open issue remains the investigation of the cooperation between auditory cortical areas during DL of speech sounds. In fact, a consequence of the DL paradigm—where the two auditory cortices do not receive the same information from the ears—is that cortical areas do not respond with the same features to the stimulation. It is conceivable that this different activity of left and right auditory areas is associated with a reduced coordination or functional coupling between them, possibly due to a major involvement of left (dominant for verbal sounds) compared with right auditory cortical areas. Such a functional coupling would be allowed by direct inter‐hemispherical connections between auditory cortices, as revealed by several studies in the cat, rat, monkey, and man [Arnault and Roger, 1990; Bozhko et al., 1988; Code and Winer, 1986; Diamond et al., 1968; Pandya et al., 1969], and has been observed in electroencephalography recordings during DL of complex tones (non‐speech sounds, Brancucci et al., 2005b).

The spectral band of the human encephalogram that is most directly involved in the type of processes investigated by the present study is the alpha frequency band (about 6–12 Hz). Alpha frequency contains the dominant component of the human encephalogram and is thought to represent the main element of thalamo‐cortical and cortico‐cortical connectivity [Lopes da Silva et al., 1980]. Alpha rhythms are strictly related to attentional level and cortical information processing, in that a reduction or an increase of alpha power reflects, respectively, elaboration or inhibition of sensory stimuli [Nunez, 1995; Pfurtscheller and Lopes da Silva, 1999]. Research in recent years has indicated that an in‐depth investigation of alpha rhythms requires their division into sub‐bands. Notably, low‐band (about 6–8 Hz, alpha 1 and 8–10 Hz, alpha 2) alpha rhythms reflect unspecific “alertness” and/or “expectancy” processes [Klimesch et al., 1996, 1998], whereas high‐band (about 10–12 Hz, alpha 3) alpha rhythms depend on task‐specific sensory processes [Klimesch et al., 1994, 1996]. Regarding specifically the auditory cortex, the existence of a distinct, reactive auditory rhythm around 10 Hz in the human temporal cortex, also called “tau” rhythm, has been shown [Lehtela et al., 1997]. Many studies observed a modulation of oscillations in the alpha range involving the auditory cortex during lexical decision task with words and pseudowords [Krause et al., 2006], auditory‐verbal encoding or retrieval working memory task [Ellfolk et al., 2006], and other auditory memory tasks [Fingelkurts et al., 2003; Pesonen et al., 2006].

The earliest DL findings recognized that attentional processes play an important role in this topic [Kinsbourne, 1970]. More recently, functional neuroimaging studies have shown that attentional shifts during DL are accompanied by specific modulations of neural activity possibly with a facilitating effect for auditory processing [Alho et al., 2003; Hugdahl et al., 2000; O'Leary et al., 1996]. Selective attention to the right ear leads to increased activity in the left auditory cortex whereas selective attention to the left ear increases activity in the right auditory cortex. In particular the area showing such an asymmetric pattern of activation linked to attention is the planum temporale [Jancke et al., 2001, 2003; Lipschutz et al., 2002].

We aim here at extending previous evidence [Brancucci et al., 2005b] to speech sounds, in order to elucidate whether synchronization of alpha rhythms within a network of speech auditory areas including bilateral primary auditory cortex (PAC) and Wernicke's area can be affected by DL. The hypothesis is that the different activity of left and right PAC elicited by “true” DL is associated with a reduced functional coupling between the two areas, as an outcome of the major involvement of left (dominant for speech sounds) compared with right auditory cortex. Conversely, we expect an increase of functional coupling within left hemispheric speech cortical areas (left PAC and Wernicke's area), underlying the processing of the CV‐syllable presented at the right ear, which arrives at the left PAC with reduced interference from the CV‐syllable presented dichotically to the left ear. To address this issue we used an approach based on magnetoencephalography (MEG) and analysis of spectral coherence of the alpha rhythms generated during passive DL of CV‐syllables with distributed attention (i.e. no lateral attentional shifts). The computation of spectral coherence on MEG recordings is particularly suited for the study of functional coupling thanks both to the high temporal resolution of MEG, which allows the study of neural synchronization on the millisecond scale, and to the fact that the tissues lying between the neural sources and the sensors are transparent to magnetic signals. The recorded signal is therefore not blurred, which avoids a source of artifacts in the analysis of spectral coherence. Moreover, MEG is a silent neuroimaging technique, which has been successfully used to model the activation of auditory cortical areas [Hari, 1990].

MATERIALS AND METHODS

Subjects

Ten right‐handed (Edinburgh Inventory: mean ± standard error = 67.94 ± 7.39) healthy volunteers were recruited (seven females, age range 20–31 years, average 25 years). All subjects gave their written informed consent according to the Declaration of Helsinki and could freely request an interruption of the investigation at any time. The general procedures were approved by the local Institutional Ethics Committee. None of them had auditory impairments as shown by auditory functional assessment. No differences (±5 dB) of hearing threshold at 500 and 1,000 Hz were found between left and right ears in all subjects.

Behavioral Test

In a separate session, subjects underwent a behavioral verbal DL task. The verbal DL task consisted of 60 items, which were continuously generated by a computer, with 3 s inter‐item interval. Subjects were provided with earphones, a pencil, and a grid printed on a sheet of paper, and sat comfortably in front of the computer. After 30 items the positions of the earphones were switched between left and right ears, to avoid any bias due to the output channels. Each item consisted of a dichotic pair composed of two of the CV‐syllables /ba/, /ka/, /da/, /ga/, /pa/, /ta/. The task of the subject was to indicate on the grid which CV‐syllable, among the ones listed above, he/she perceived best. Subjects were asked to pay attention to both ears simultaneously, i.e. without privileging one ear [Hugdahl et al., 2000, 2003b; Jancke et al., 2003]. Data analysis was based on the number of correctly reported syllables that were presented at the left vs. right ear. That is, when the subject reported a syllable that was actually present in the dichotic pair, a point was ascribed to the left (right) ear if the reported syllable was presented at the left (right) ear [Eichele et al., 2005]. One‐way ANOVA with Ear of input (left, right) as a factor was carried out on ear scores (dependent variable).

Stimulation for MEG Recordings

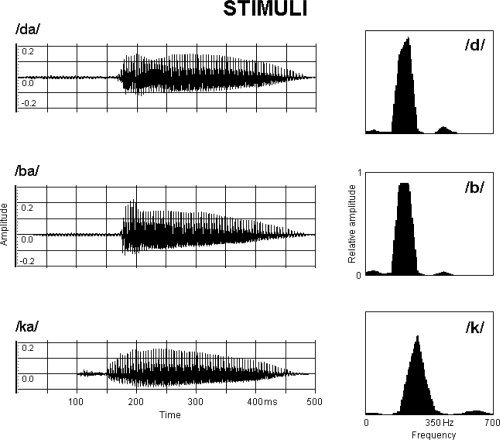

Dichotic stimuli consisted of three CV‐syllables (/da/, /ba/, /ka/) recorded from a natural female voice. The intensity of the stimuli was adjusted to 60 dBA (A‐weighted decibels). The CV‐syllables were recorded and handled with a sampling rate of 44,100 Hz and an amplitude resolution of 16 bit. Stimulus recording and handling was performed by using the software “Wave 2.0” (Voyetra Turtle Beach Systems, Yonkers, NY) for Microsoft Windows on a PC Pentium III 550 MHz with audio card Sound Blaster AWE 32. Waveforms of the CV‐syllables are plotted in Figure 1 (left panel). Verification of the actual sound intensity was done by means of a phonometer (Geass, Delta Ohm HD2110, Torino, Italy). The normalized power spectrum densities of the consonants forming the CV‐syllables are shown in Figure 1 (right panel). The spectra were computed in the time window of the consonant using 1024 points and a Hamming window. The three syllables were arranged in the following four dichotic stimuli: two “true dichotic” competing CV‐syllable pairs (/da/ at the left ear + /ba/ at the right ear and vice versa, /ba/ at the left ear + /da/ at the right ear) having high spectral overlap, and two “dichotic sham” non‐competing CV‐syllable pairs (/da/ at the left ear + /ka/ at the right ear and vice versa, /ka/ at the left ear + /da/ at the right ear) having low spectral overlap (control condition).

Figure 1.

Left: Waveforms of the CV‐syllables /da/, /ba/, and /ka/ which constituted the dichotic stimuli. Right: power spectrum densities of the consonants /d/, /b/ and /k/ forming the CV‐syllables. The spectral overlap between /d/ and /b/ was estimated to be about 93%, so that the CV‐syllables /da/ and /ba/ were named as “competing”. The overlap was reduced to 23% for /d/ and /k/, forming the noncompeting syllables /da/ and /ka/ which constituted the control stimuli in the present study (dichotic sham condition). [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

The amount of spectral overlap between the consonants of the CV‐syllables forming the dichotic stimuli was estimated by computing the Euclidean distance between consonant spectra. We obtained a spectral overlap of about 93% when comparing /d/ versus /b/ (two voiced consonants) and about 23% for /d/ versus /k/ (a voiced versus a voiceless consonant). Each of the four stimuli was presented 80 times in a pseudorandomized sequence to avoid expectancy effects that could affect the neuromagnetic measures. The interstimulus interval varied randomly between 2,500 and 3,500 ms.
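As an illustration only, the overlap estimate could be sketched as follows. The text states that overlap was derived from the Euclidean distance between consonant spectra, but it does not give the mapping from distance to percentage. The unit‐norm scaling and the `1 - d / sqrt(2)` conversion below are therefore assumptions, and the function names are hypothetical.

```python
import math


def unit_norm(spectrum):
    """Scale a non-negative power spectrum to unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in spectrum))
    if norm == 0.0:
        raise ValueError("spectrum must contain at least one non-zero bin")
    return [v / norm for v in spectrum]


def spectral_overlap_percent(spec_a, spec_b):
    """Overlap in percent from the Euclidean distance between two spectra.

    ASSUMPTION: for unit-norm, non-negative spectra the distance lies in
    [0, sqrt(2)], so it is mapped linearly onto 100% .. 0%. The paper does
    not state which mapping was actually used.
    """
    if len(spec_a) != len(spec_b):
        raise ValueError("spectra must have the same number of bins")
    a = unit_norm(spec_a)
    b = unit_norm(spec_b)
    distance = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return 100.0 * (1.0 - distance / math.sqrt(2.0))
```

Under this assumed mapping, identical spectra give 100% and spectra with no shared bins give 0%; intermediate values depend on the chosen normalization and need not reproduce the 93% and 23% reported here.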

MEG Recordings

Whole‐brain activity was recorded using the 165 channel MEG system installed at the University of Chieti inside a high‐quality magnetically shielded room [Della Penna et al., 2000]. The system consists of 153 dc SQUID integrated magnetometers arranged on a helmet surface covering the whole head and 12 reference channels. The acoustic stimulation was provided by Sensorcom plastic ear tubes connected to an artifact‐free transducer. During the magnetic recordings the subjects passively listened to the stimuli and did not perform any task. They were asked to pay attention at both ears at the same time, i.e. without privileging one side [Hugdahl et al., 2000; Jancke et al., 2003]. Simultaneously with magnetic recordings, ECG was acquired as a reference for the rejection of the heart artifact. All signals were band‐pass filtered at 0.16–250 Hz and recorded at 1 kHz sampling rate.

The position of each subject's head with respect to the sensors was determined by recording and fitting the magnetic field generated by four coils placed on the scalp before and after each recording. The coil positions on the subject's scalp were digitized by means of a 3D digitizer (Polhemus, 3Space Fastrak), together with anatomical landmarks defining a coordinate system. A set of high resolution magnetic resonance images (MRIs) of the subjects' heads was obtained with a Siemens Magnetom Vision 1.5 Tesla scanner using an MPRAGE sequence (256 × 256, FoV 256, TR = 9.7 ms, TE = 4 ms, flip angle 12°, voxel size 1 mm³).

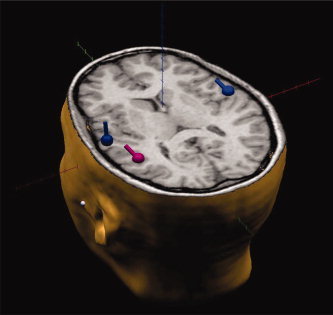

Dipole Source Analysis

We studied the coherence between sources representing the evoked activity of the bilateral PAC and of Wernicke's area (Fig. 2). Indeed, the aim of the present study was to investigate the functional coupling among the major areas involved in the perception of simple speech stimuli [Jancke et al., 2002], which are also the first cortical stations reached by auditory speech signals. It should, however, be considered that DL involves a distributed network of areas comprising, other than auditory cortex, the prefrontal cortex, the orbitofrontal and hippocampal paralimbic belts, and the splenium of the corpus callosum.

Figure 2.

Three ECDs have been considered for the analysis of functional coupling. They are localized in the left PAC, right PAC, and Wernicke's area. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

Wernicke's area and the left PAC are a few centimetres apart. For our recordings we used magnetometers, which feature a broad lead field, so that the detected signal includes the contribution of nearby sources even if that contribution is small. Therefore, to separate the activity of Wernicke's area from that of the left primary auditory area, we studied coherence between the brain sites that gave rise to the evoked fields, instead of channel coherence. To this end we reconstructed the specific source waveforms from the raw waveforms of all channels throughout all the trials, i.e. the source raw activities. Thus, the oscillations observed in the present study are those generated at the level of the corresponding sources.

First, we localized the sources based on the analysis of the auditory evoked magnetic fields (AEFs). For each dichotic pair, about 70 artifact‐free AEF trials were band‐pass filtered (1–70 Hz) and averaged in a time period of 600 ms, including a 50 ms pre‐stimulus epoch. For each channel, the baseline level was set as the mean value of the magnetic field in the time interval −10 ÷ 10 ms across the stimulus onset. Before averaging, the heart artifact was removed from all the channels by an adaptive algorithm utilizing the QRS peak recorded by the ECG channel as a trigger.

Second, we used multiple source analysis provided by the BESA software (MEGIS Software GmbH, Germany) based on the Equivalent Current Dipole (ECD) as source model and a homogeneous sphere to model the subject's head. The fitting interval was 70 ÷ 360 ms poststimulus. In this time interval, the position, orientation, and amplitude of two free ECDs were fitted to model the sources in the primary auditory cortices using the dichotic pair comprising noncompeting syllables /da/‐/ka/, since the signal to noise ratio was higher in this condition. No regional constraints were applied to the fit of the two ECDs. For the other conditions the locations of the auditory ECDs found in the noncompeting condition /da/‐/ka/ were held fixed during the fit. A third source was positioned in the Talairach coordinates (−52, −40, 8) to represent the Wernicke's area in the left hemisphere (see Fig. 2). The orientation and amplitude of this dipole source could change to fit AEFs in the time interval 150 ÷ 400 ms poststimulus. We also checked whether other sources could explain the evoked data by adding free ECDs. Since we did not obtain sets of sources fitting the residual field (after fitting the ECDs in the primary auditory cortex), which were reproducible across subjects and conditions, we considered only the two ECDs in the bilateral primary auditory cortex and the one in Wernicke's area for further analysis. For each subject, the ECD locations were checked on the MRI with the Brain‐Voyager software (Brain Innovation B.V., The Netherlands). We selected a goodness of fit of 80% as the lower threshold to accept an ECD configuration. Because of the low amplitude of the evoked signal, possibly caused by the interference between auditory pathways during DL [Brancucci et al., 2004; Della Penna et al., in press], a 20% of residual variance was assumed to be related to nonphase‐locked signals. The mean across subjects of the explained variance was 86%.

Third, the source raw activities for the two ECDs in the PAC and for the one in Wernicke's area that are the ECD amplitudes at each sampling time were obtained by means of BESA software. The ECD positions and orientations were held fixed while an inverse operator was applied to the instantaneous raw field distribution over the helmet throughout the whole recording session.

Analysis of Spectral Coherence

The source raw activities reconstructed from continuously recorded MEG data were segmented in single trials each spanning from −1000 to +1000 ms, the zerotime being the onset of auditory stimulus. ECD single trials were discarded when associated with artefacts. About 70 MEG trials were accepted for each stimulus condition and for each subject. To perform spectral coherence analysis of the artifact‐free data, we preliminarily removed phase‐locked activity (i.e., average evoked auditory source activity) with a mathematical technique based on weighted inter‐trial variance calculation. It is indeed well known that phase‐locked activity (evoked activity) can interfere with the study of power density or functional coupling of cerebral rhythms [Kalcher and Pfurtscheller, 1995]. Briefly, the procedure of phase‐locked activity removal was the following. For each source, a correction factor was calculated for each single trial by cross‐correlation between the average source AEFs and the on‐going raw source activity of that trial. This factor was used to weight the removal of source AEFs from the on‐going source activity of that trial. A similar technique has been successfully used in previous studies focused on the analysis of cerebral rhythms [Brancucci et al., 2005b; Kalcher and Pfurtscheller, 1995].
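The weighted removal of phase‐locked activity described above can be sketched roughly as follows. The exact definition of the correction factor is not given in the text. The sketch assumes a zero‐lag cross‐correlation between each trial and the average waveform, scaled by the energy of the average (a least‐squares weight), and the function names are hypothetical.

```python
def average_waveform(trials):
    """Sample-by-sample mean across trials (the evoked source waveform)."""
    n_trials = len(trials)
    return [sum(samples) / n_trials for samples in zip(*trials)]


def remove_phase_locked(trials):
    """Subtract a per-trial weighted copy of the average from each trial.

    ASSUMPTION: the weight is the zero-lag cross-correlation of the trial
    with the average, divided by the energy of the average. The paper only
    says that a cross-correlation-based factor weighted the removal.
    """
    avg = average_waveform(trials)
    energy = sum(a * a for a in avg)
    cleaned = []
    for trial in trials:
        if energy > 0.0:
            weight = sum(t * a for t, a in zip(trial, avg)) / energy
        else:
            weight = 0.0  # flat average: nothing to remove
        cleaned.append([t - weight * a for t, a in zip(trial, avg)])
    return cleaned
```

With this choice of weight, each cleaned trial is orthogonal to the average waveform, so a trial that is a scaled copy of the evoked response is removed entirely.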

Spectral coherence is a normalized measure of the coupling between two signals at any given frequency [Halliday et al., 1995; Rappelsberger and Petsche, 1988] and can be used to study the functional coupling between cortical areas [Babiloni et al., 2006; Brancucci et al., 2005b]. The coherence values were calculated for each frequency bin by:

$$
\mathrm{Coh}_{xy}(\lambda) = |R_{xy}(\lambda)|^{2} = \frac{|f_{xy}(\lambda)|^{2}}{f_{xx}(\lambda)\, f_{yy}(\lambda)}
$$

which is the extension of the Pearson's correlation coefficient R to complex number pairs. In this equation, f denotes the spectral estimate of two source raw signals x and y for a given frequency bin (λ). The numerator contains the cross‐spectrum for x and y (f_xy), while the denominator contains the respective auto‐spectra for x (f_xx) and y (f_yy). For each frequency bin (λ), the coherence value (Coh_xy) is obtained by squaring the magnitude of the complex correlation coefficient R. This procedure returns a real number between 0 (no coherence) and 1 (maximal coherence). For each subject and each source pair, a statistical threshold level for coherence was computed according to Halliday et al. [1995], taking into account the number of single valid trials used as an input for the analysis of spectral coherence. Of note, for each subject and each source pair, coherence values were all above statistical threshold posed at P < 0.05.
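A minimal sketch of this computation is given below, using only the Python standard library. It assumes that spectra are estimated by averaging over trials, and that the threshold is the 95% confidence limit for independent signals, 1 − 0.05^(1/(L−1)) with L the number of trials, as commonly derived from Halliday et al. [1995]. Function names are hypothetical and no windowing or tapering is applied.

```python
import cmath
import math


def dft_bin(segment, k):
    """Discrete Fourier coefficient of one segment at frequency bin k."""
    n = len(segment)
    return sum(v * cmath.exp(-2j * math.pi * k * t / n)
               for t, v in enumerate(segment))


def coherence(trials_x, trials_y, k):
    """Magnitude-squared coherence between two sources at bin k."""
    f_xy = 0j   # cross-spectrum, accumulated over trials
    f_xx = 0.0  # auto-spectrum of x
    f_yy = 0.0  # auto-spectrum of y
    for x, y in zip(trials_x, trials_y):
        big_x = dft_bin(x, k)
        big_y = dft_bin(y, k)
        f_xy += big_x * big_y.conjugate()
        f_xx += abs(big_x) ** 2
        f_yy += abs(big_y) ** 2
    if f_xx == 0.0 or f_yy == 0.0:
        raise ValueError("no power at this frequency bin")
    return abs(f_xy) ** 2 / (f_xx * f_yy)


def significance_threshold(n_trials, alpha=0.05):
    """Coherence level exceeded by chance with probability alpha.

    ASSUMPTION: independent, non-overlapping trials are the segments.
    """
    return 1.0 - alpha ** (1.0 / (n_trials - 1))
```

With 1,000‐sample segments at 1 kHz, bin `k` corresponds to `k` Hz. Note that coherence estimated from a single trial is always 1, which is why the threshold depends on the number of trials.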

As mentioned above, here spectral coherence was computed among time series of the activity arising from ECDs, which were located in the left and right PAC and in Wernicke's area. The between‐ECDs coherence was calculated at “baseline” period (from −1,000 ms to zerotime, defined as the auditory stimulus onset) as well as at “event” period (from zerotime to +1,000 ms). The computation of source coherence from data segments of 1,000 ms yielded a frequency resolution of 1 Hz. Frequency bands of interest were alpha 1, alpha 2, and alpha 3, which were determined individually for each subject according to a standard procedure based on the peak of individual alpha frequency (IAF) in the power density spectrum [Klimesch, 1996, 1999; Klimesch et al., 1996]. With respect to the IAF, the alpha sub‐bands were defined as follows: (i) alpha 1 as IAF − 4 Hz to IAF − 2 Hz, (ii) alpha 2 as IAF − 2 Hz to IAF, and (iii) alpha 3 as IAF to IAF + 2 Hz. Of note, mean (± standard error) IAF was 10.1 ± 0.4 Hz.
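The IAF‐based band definition amounts to a simple offset rule, sketched here with a hypothetical helper function:

```python
def alpha_sub_bands(iaf_hz):
    """Return (low, high) edges in Hz of the three IAF-anchored sub-bands."""
    return {
        "alpha1": (iaf_hz - 4.0, iaf_hz - 2.0),
        "alpha2": (iaf_hz - 2.0, iaf_hz),
        "alpha3": (iaf_hz, iaf_hz + 2.0),
    }
```

For a subject with an IAF of 10 Hz this gives 6–8, 8–10, and 10–12 Hz, matching the approximate ranges quoted in the text.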

Statistical Analysis

For the statistical analysis, maximal event‐related MEG source coherence (ErCoh) within each alpha sub‐band was used as a dependent variable. ErCoh is the mean difference between coherence at event and baseline periods. It should be stressed that the magnitude of ErCoh is usually smaller than the absolute coherence values. However, it has the advantage of taking into account the inter‐subject variability of baseline coherence.
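The dependent variable could be computed along the following lines. Two points are assumptions rather than statements from the text: the maximum is taken over the per‐bin event minus baseline differences (rather than differencing two band maxima), and each band includes its lower edge but not its upper edge. Function names are hypothetical.

```python
def ercoh_spectrum(event_coh, baseline_coh):
    """Per-frequency event-related coherence: event minus baseline.

    Both arguments map frequency in Hz to a coherence value.
    """
    return {f: event_coh[f] - baseline_coh[f] for f in event_coh}


def max_ercoh_in_band(event_coh, baseline_coh, band):
    """Largest ErCoh among bins with low <= f < high (assumed convention)."""
    low, high = band
    diffs = [d for f, d in ercoh_spectrum(event_coh, baseline_coh).items()
             if low <= f < high]
    if not diffs:
        raise ValueError("no frequency bins fall inside the band")
    return max(diffs)
```

A negative result means that coherence in that band dropped after stimulus onset relative to the prestimulus second.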

Statistical evaluation of the data was done by means of three 2 × 2 repeated measures analysis of variance (ANOVA), one for each alpha sub‐band (alpha 1, alpha 2, alpha 3). Tukey's post‐hoc comparisons were applied and Mauchly's test evaluated the sphericity assumption. Correction of the degrees of freedom was made by the Greenhouse‐Geisser procedure. ANOVA factors were (a) ECD pair, with two levels, namely coherence between ECDs in the left and right PAC and coherence between left PAC and Wernicke's area and (b) spectral overlap of the dichotic stimuli, with two levels, namely high spectral overlap (true dichotic condition) and low spectral overlap (sham dichotic condition). Of note, the level “high spectral overlap” contained averaged data from both dichotic stimuli composed by /da/ at the left ear + /ba/ at the right ear and /ba/ at the left ear + /da/ at the right ear. Similarly, the level “low spectral overlap” contained averaged data from both stimuli composed by /da/ at the left ear + /ka/ at the right ear and /ka/ at the left ear + /da/ at the right ear.
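For a 2 × 2 fully within‐subject design, the interaction F statistic equals the squared one‐sample t statistic computed on each subject's difference of differences, with (1, n − 1) degrees of freedom. The sketch below uses that identity. It is not the statistical software used in the study, the function name is hypothetical, and it returns only F and its degrees of freedom (a P value would need an F distribution from a statistics library).

```python
import math
import statistics


def interaction_f(pair1_high, pair1_low, pair2_high, pair2_low):
    """Interaction F of a 2 x 2 repeated-measures design.

    Each argument lists one ErCoh value per subject, in the same subject
    order: ECD pair 1 or 2 crossed with high or low spectral overlap.
    """
    # Difference of differences: (overlap effect in pair 1) minus
    # (overlap effect in pair 2), one score per subject.
    scores = [(a - b) - (c - d) for a, b, c, d
              in zip(pair1_high, pair1_low, pair2_high, pair2_low)]
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)
```

With ten subjects, as in the present sample, the degrees of freedom are (1, 9).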

RESULTS

Behavioral Test

All subjects showed a right ear advantage in the verbal DL task. Laterality index (LI) was computed as follows: LI = (R − L)/(R + L) × 100, where R is the number of correct reports of the right ear and L the number of correct reports of the left ear. Mean (±standard error) laterality index was 18.6 ± 2.9, the range spanned from 11.1 to 30.0. ANOVA analysis indicated a statistically significant effect in favor of the right ear (F = 37.58, P < 0.001). This result indicates that the subjects perceived preferentially the syllable presented at the right ear which, in DL conditions, sends its inputs mainly to the left hemisphere [Brancucci et al., 2004].
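The laterality index formula above translates directly into code. The function name is hypothetical and the counts in the usage note are invented for illustration, not taken from the study.

```python
def laterality_index(right_correct, left_correct):
    """LI = (R - L) / (R + L) * 100; positive values favor the right ear."""
    total = right_correct + left_correct
    if total == 0:
        raise ValueError("at least one correct report is required")
    return (right_correct - left_correct) / total * 100.0
```

For example, 35 correct right‐ear reports against 25 left‐ear reports would give an index of about 16.7, while equal scores give 0.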

Control Analysis on the Behavioral Test: Dichotic “True” vs. “Sham” Laterality Effects

To check whether dichotic pairs composed of stimuli having high spectral overlap yielded stronger laterality effects than dichotic pairs having scarce spectral overlap, we analyzed the behavioral results by dividing the trials into two categories: “true” dichotic stimuli (those composed of CV‐syllables having high spectral overlap, i.e. /ba/‐/da/, /ba/‐/pa/, /ba/‐/ta/, /da/‐/pa/, /da/‐/ta/, /ka/‐/ga/, /pa/‐/ta/, and vice versa) and “sham” dichotic stimuli (those composed of CV‐syllables having low spectral overlap, i.e. /ba/‐/ka/, /ba/‐/ga/, /da/‐/ga/, /da/‐/ka/, /ga/‐/pa/, /ga/‐/ta/, /ka/‐/ta/, /ka/‐/pa/, and vice versa). Laterality index for “true” dichotic stimuli was 26.1 ± 3.7, whereas laterality index for “sham” dichotic stimuli was 12.3 ± 3.8. One‐way ANOVA with laterality index as a dependent variable showed that the laterality effect (right ear advantage) was stronger for “true” than “sham” dichotic stimuli (F = 7.54, P = 0.03).

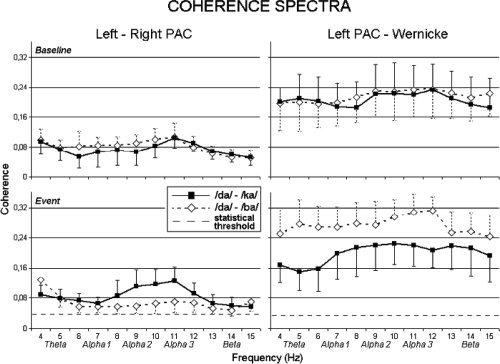

Coherence Spectra

Figure 3 illustrates baseline (upper part of the figure) and event (bottom) mean coherence spectra around the alpha frequency range between both MEG source pairs (left ↔ right PAC and left PAC ↔ Wernicke's area) for both dichotic competing (/da/‐/ba/) and dichotic noncompeting (/da/‐/ka/) CV‐syllables along with statistical threshold of coherence [Halliday et al., 1995]. Mean (±standard error) statistical threshold was 0.038 ± 0.002 for the source pair left‐right PAC and 0.037 ± 0.002 for the source pair left PAC‐Wernicke's area. Absolute coherence values were relatively low in magnitude especially for left ↔ right PAC, which are two distant sources [Thatcher et al., 1986]. However, all band values were above the corresponding statistical thresholds (P < 0.05) at both baseline and event periods in each subject. This was true for all alpha frequency bands. Comparing event with baseline coherence spectra, it can be observed that, especially evident at higher alpha bands (alpha 2 and alpha 3), MEG source coherence decreased between bilateral PAC when competing compared with noncompeting CV‐syllables were presented. Conversely, MEG source coherence increased within the left hemisphere between left PAC and Wernicke's area during dichotic listening of competing compared to noncompeting CV‐syllables.

Figure 3.

Across‐subjects mean MEG coherence spectra observed during the delivering of competing (/da/‐/ba/) and noncompeting (/da/‐/ka/) dichotic CV‐syllables. Top: baseline coherence spectra (last prestimulus second). Bottom: event coherence spectra (first poststimulus second) with statistical thresholds. Left: interhemispheric coherence spectra, between sources located in the left and right PAC. Right: intrahemispheric coherence spectra, between sources located in the left PAC and in Wernicke's area. Note that spectral bands, reported below the horizontal axis, are not fixed but vary according to individual alpha frequency.

Table I reports mean ErCoh values for the three alpha sub‐bands (alpha 1, alpha 2, and alpha 3) between left and right PAC and between left PAC and Wernicke's area in the two experimental conditions (competing and noncompeting CV‐syllables).

Table I.

Maximal ErCoh values and standard errors (in brackets) in the three alpha sub‐bands (alpha 1, alpha 2, and alpha 3) between the two ECD pairs (left PAC ↔ right PAC and left PAC ↔ Wernicke's area) for competing (true dichotic) and non‐competing (sham dichotic) syllables

| | Left PAC‐Right PAC | Left PAC‐Wernicke |
|---|---|---|
| ALPHA1 | | |
| Competing (/da/‐/ba/) | 0.027 (0.008) | 0.061 (0.01) |
| Non‐competing (/da/‐/ka/) | 0.024 (0.010) | 0.042 (0.017) |
| ALPHA2 | | |
| Competing (/da/‐/ba/) | 0.029 (0.009) | 0.093 (0.025) |
| Non‐competing (/da/‐/ka/) | 0.038 (0.007) | 0.054 (0.018) |
| ALPHA3 | | |
| Competing (/da/‐/ba/) | 0.023 (0.006) | 0.068 (0.017) |
| Non‐competing (/da/‐/ka/) | 0.050 (0.016) | 0.028 (0.018) |

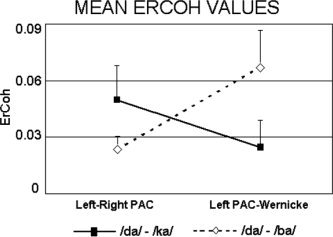

Statistical Results

Two‐way repeated measures ANOVA of ErCoh values having ECD pairs (left ↔ right PAC, left PAC ↔ Wernicke's area) and spectral overlap (high /da/+/ba/, low /da/+/ka/) as factors yielded no statistically significant effects at the two low alpha sub‐bands (i.e. alpha 1 and alpha 2) but a statistically significant interaction effect (F = 9.88, P = 0.013) at the high alpha sub‐band (i.e. the alpha 3 sub‐band). This indicated (Fig. 4) an inverse pattern of functional coupling at high‐band alpha rhythms: whereas MEG source ErCoh between left and right PAC decreased with dichotic stimuli having high spectral overlap compared with sham DL (based on stimuli with poor spectral overlap), MEG source ErCoh between left PAC and Wernicke's area increased. Tukey's post‐hoc comparisons indicated that the increase of MEG source ErCoh (left PAC ↔ Wernicke's area) during DL of verbal stimuli having high spectral overlap was statistically significant (P = 0.03).

Figure 4.

Across‐subjects mean (±standard error) event‐related coherence (ErCoh) values in the alpha 3 frequency band. Statistical results showed a significant interaction (P = 0.013) between the two factors (ECD pair and spectral overlap of CV‐syllables), indicating that, compared to sham DL, during DL (/da/‐/ba/) there was an inverse pattern of functional coupling: whereas interhemispheric high‐band alpha (alpha 3) ErCoh between left and right PAC was reduced, intrahemispheric high‐band alpha ErCoh within the left hemisphere (left PAC ↔ Wernicke's area) was increased.

Control Analysis for Possible Attentional Biases

To check whether spectral coherence in the present experiment was affected by uncontrolled variations of the attentional level during the MEG recordings, we analyzed the alpha power (in the whole alpha band, based on the IAF) of all parietal MEG channels in the 1‐s period immediately preceding the auditory stimulation. Parietal alpha power is considered a reliable indicator of the attentional level [Brancucci et al., 2005b; Klimesch et al., 1998]. Normalized mean (±standard error) alpha power preceding “true” dichotic stimuli was 0.35 ± 0.03, whereas alpha power preceding “sham” dichotic stimuli was 0.34 ± 0.02. A paired t‐test showed that the attentional level did not differ significantly (P = 0.30) between the two conditions.
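As an illustration of what an IAF‐based alpha band means in practice, the following sketch derives band edges from an individual alpha frequency and averages a power spectrum within one band. The offsets (IAF − 4 Hz to IAF + 2 Hz, in 2‐Hz steps) are an assumption chosen to match the approximate 6–8, 8–10, and 10–12 Hz ranges quoted in the abstract for an IAF near 10 Hz; the function names `alpha_bands` and `band_power`, the half‐open band limits, and the example spectrum are hypothetical and are not taken from the paper. The normalization applied by the authors is not specified and is not reproduced here.

```python
def alpha_bands(iaf_hz):
    """Return assumed alpha sub-band edges (Hz) anchored to the IAF."""
    return {
        "alpha1": (iaf_hz - 4.0, iaf_hz - 2.0),
        "alpha2": (iaf_hz - 2.0, iaf_hz),
        "alpha3": (iaf_hz, iaf_hz + 2.0),
        "whole": (iaf_hz - 4.0, iaf_hz + 2.0),
    }


def band_power(freqs_hz, power, band):
    """Mean power over frequency bins with band[0] <= f < band[1]."""
    lo, hi = band
    vals = [p for f, p in zip(freqs_hz, power) if lo <= f < hi]
    return sum(vals) / len(vals)


# Invented spectrum with 1-Hz resolution, for demonstration only.
freqs = [8.0, 9.0, 10.0, 11.0, 12.0]
spectrum = [1.0, 2.0, 3.0, 4.0, 5.0]
bands = alpha_bands(10.0)
alpha3_power = band_power(freqs, spectrum, bands["alpha3"])
```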

DISCUSSION

The present study was designed to test whether functional coupling of alpha rhythms decreases during DL of verbal sounds. To this purpose, we performed coherence analysis of MEG data recorded during the presentation of dichotic speech stimuli in subjects showing a right ear advantage in a speech DL task. The three principal areas involved in the perception of speech stimuli were taken into account, that is, left and right PAC and Wernicke's area. An increase of functional coupling within the high‐band alpha range was observed in the left hemisphere, specifically between left PAC and Wernicke's area, i.e. the two main brain areas of the left hemisphere implicated in the perception of speech [Price, 2000]. Moreover, an inverse pattern (i.e. a decrease) of functional coupling was observed between left and right PAC. No significant modulation of functional coupling in the low alpha band was observed. Of note, the attentional level between “true” and “sham” dichotic stimuli showed negligible variations, as reflected by the analysis of alpha power during the MEG recordings. Alpha power is a reliable indicator of attentional level [Brancucci et al., 2005a, b; Klimesch et al., 1998].

The present observations of a functional coupling modulation of high‐ but not low‐alpha rhythms can be interpreted considering that the alpha band of the human encephalogram reflects quite different cognitive processes. It has been shown that, depending on the specific frequency, modulation of brain rhythms within the alpha band is related to different types of cognitive activity. Unspecific alertness processes (i.e. global attention) are reflected by changes in the low alpha band, whereas specific sensory processes are reflected by changes in the high alpha band [Klimesch et al., 1998]. This interpretation is supported by the findings of a recent study, which has demonstrated that functional coupling of alpha rhythms can reflect attentional processes. In particular, focused attention to one ear increased high alpha band synchronization likelihood in the ipsilateral frontal region [Gootjes et al., 2006]. In the light of these considerations, the results of the present study showing phase synchronization effects in the high alpha band are interpretable considering the fact that the present experimental design typically involved sensory specific, rather than global attentional processes.

The present results extend previous DL evidence [Brancucci et al., 2004; Hugdahl et al., 1999; Mathiak et al., 2000; Milner et al., 1968; Pollmann et al., 2002; Sparks and Geschwind, 1968; Springer and Gazzaniga, 1975] by showing the modulation of functional coupling of auditory cortical areas implicated in speech perception. The investigation of functional coupling of cortical rhythms complements the evaluation of amplitude and latency of EEG or MEG activity evoked by dichotic stimuli. Furthermore, it confirms and complements previous behavioral evidence demonstrating that small changes in the degree of competition (i.e. spectral overlap) between the dichotic tones significantly affect the magnitude of perceptual asymmetry. We observed here that the right ear advantage was stronger for “true” than for “sham” dichotic stimuli, the former having higher spectral overlap than the latter. According to previous evidence on complex tones [Sidtis, 1981, 1988] and linguistic sounds [Springer et al., 1978], laterality effects are related to the spectral composition of the stimuli constituting the dichotic pair: the higher the spectral overlap in the dichotic pair, the stronger the ear advantage. Thus, the modulation of neuromagnetic functional coupling disclosed in the present study could have been blurred, as suggested by the behavioral results, which showed that even “sham” dichotic stimuli yield (minor) laterality effects.

In the present study, dichotic stimuli with high spectral overlap were obtained by simultaneous presentation of two CV‐syllables with voiced consonants (/da/ and /ba/), whereas dichotic stimuli with low spectral overlap were obtained by simultaneous presentation of a CV‐syllable with a voiced consonant (/da/) and a CV‐syllable with a voiceless consonant (/ka/). In this context, the present results are in accord with the outcome of a recent fMRI report, which showed that the left auditory cortex (in particular the left medial planum temporale) is highly sensitive to the phonological differences between voiceless and voiced consonants [Jancke et al., 2002].

In particular, the present findings confirm and extend evidence from a previous EEG study on functional coupling of cortical rhythms between bilateral auditory cortices during DL of harmonic complex nonverbal tones [Brancucci et al., 2005b]. In that study, it was demonstrated that functional coupling of EEG rhythms at left and right scalp sites roughly overlying the auditory cortices was significantly lower during DL of competing (i.e. having similar fundamental frequencies and high spectral overlap) than noncompeting (i.e. having dissimilar fundamental frequencies and low spectral overlap) tone pairs. Moreover, it was suggested that this decrease of functional coupling could be a possible neural substrate for the lateralization of auditory stimuli during DL. In the present study, the methodological advancements allowed us to observe the modulation of functional coupling at the level of cortical areas and to specify the individual sub‐band of alpha rhythms sensitive to that modulation. Moreover, the present study extends those findings to speech stimuli and to a network of cortical areas devoted to speech perception.

It should be stressed that the absolute coherence values were somewhat low, especially for the interhemispheric source pair (left ↔ right PAC). This might be ascribed to the large distance between the two sources of the MEG signals and to the preliminary removal of the auditory evoked magnetic fields (i.e. neural activity phase‐locked to the auditory stimulus) before the computation of coherence, which was done in order to investigate brain rhythms non‐phase‐locked to the stimulus [Pfurtscheller and Lopes da Silva, 1999]. Previous findings have shown that coherence values are inversely proportional to the distance between the recording sites [Thatcher et al., 1986]. Furthermore, the preliminary removal of evoked activity is in line with recent guidelines on the study of brain rhythmicity [Pfurtscheller and Lopes da Silva, 1999] and provides absolute and event‐related coherence values lower than those obtained by computing the coherence from event‐related potentials [Kaiser et al., 2000; Yamasaki et al., 2005]. Finally, it should also be stressed that the individual coherence values were statistically significant (P < 0.05). Indeed, the coherence values of each subject were higher than the statistical threshold computed with the procedure suggested by Halliday et al. [1995]. Event‐related coherence values are usually even smaller than absolute coherence values, as they represent a difference between coherence levels in two different time periods.
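To make these two quantities concrete, the sketch below shows a commonly used form of the confidence limit described by Halliday et al. [1995], in which coherence estimated from L disjoint segments exceeds 1 − (0.05)^(1/(L − 1)) with probability 0.05 under the hypothesis of independent signals, together with event‐related coherence taken as the difference between event and baseline coherence. It is a minimal illustration rather than the authors' pipeline: the function names, the segment count of 100, and the example coherence values are assumptions introduced here, since the number of segments per subject is not stated in this section.

```python
def coherence_threshold(n_segments, alpha=0.05):
    """Upper (1 - alpha) confidence limit for coherence under independence.

    n_segments: number of disjoint data segments averaged in the estimate.
    """
    if n_segments < 2:
        raise ValueError("at least two segments are required")
    return 1.0 - alpha ** (1.0 / (n_segments - 1))


def event_related_coherence(coh_event, coh_baseline):
    """ErCoh as event-period coherence minus baseline-period coherence."""
    return coh_event - coh_baseline


# Hypothetical numbers, for demonstration only.
threshold_100 = coherence_threshold(100)
ercoh = event_related_coherence(0.12, 0.05)
exceeds = 0.12 > threshold_100
```

Because the limit shrinks as the number of averaged segments grows, small absolute coherence values can still exceed it when many trials are available.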

On the whole, the present results agree with the “structural theory” originally proposed by Kimura [1967]. On the basis of neuropsychological results, it was suggested that the contralateral neural pathway suppresses the ipsilateral one during DL. In line with this theory, commissurotomized patients had no difficulty reporting words or consonant‐vowel syllables presented monaurally [Milner et al., 1968; Sparks and Geschwind, 1968; Springer and Gazzaniga, 1975]. In contrast, they failed to report items presented to the left ear when the same stimuli were presented dichotically. A lesion of the posterior part of the corpus callosum (splenium) prevented dichotic sounds presented to the left ear from reaching the left hemisphere via the indirect contralateral route [Pollmann et al., 2002; Westerhausen et al., 2006]. This route through the splenium would permit normal subjects to hear dichotic items in both ears, even if the ear contralateral to the dominant hemisphere is preferred. The present results on MEG source coherence extend the aforementioned “structural theory”, in that the suggested inhibition of the ipsilateral pathway may be associated with a drop in the functional coordination between the two auditory cortical areas at the dominant human encephalographic rhythm, in particular in the high alpha band.

CONCLUSION

The present study focused on functional coupling of cortical alpha rhythms within a neural network of cortical areas comprising bilateral auditory cortices and Wernicke's area during DL of speech sounds (CV‐syllables). Results showed that, during DL of speech sounds, functional coupling of high‐band alpha rhythms between left and right PAC decreased, whereas it increased within auditory speech areas of the left hemisphere. The decrease of interhemispheric functional coupling of high‐band alpha rhythms possibly arises because, during DL of speech sounds, predominant information processing is performed by the specialized left hemisphere and the level of coordination between the two hemispheres declines. Instead, the increase of intrahemispheric functional coupling of high‐band alpha rhythms within the left hemisphere would underlie the stimulus‐specific processing of the syllable presented to the right ear, which arrives at the left PAC with reduced “dichotic interference” from the other syllable, presented to the left ear. These results suggest that functional coupling of alpha rhythms might constitute a neural substrate for lateralization of auditory stimuli during DL, at least regarding the auditory cortices. Indeed, DL involves a distributed network of areas comprising, in addition to the auditory cortices, the prefrontal cortex, the orbitofrontal and hippocampal paralimbic belts, and the splenium of the corpus callosum. Specifically, it has been recently shown that frontal areas play an important role in DL [Jancke et al., 2001, 2002, 2003; Karino et al., 2006; Lipschutz et al., 2002; Thomsen et al., 2004]. Future studies should investigate functional coupling among these brain areas during DL, possibly by using localization methods based on extended sources as models, as well as the role of other spectral frequencies and the influence of attentional constraints.

REFERENCES

- Ackermann H, Hertrich I, Mathiak K, Lutzenberger W (2001): Contralaterality of cortical auditory processing at the level of the M50/M100 complex and the mismatch field: A whole‐head magnetoencephalography study. Neuroreport 12: 1683–1687.

- Alho K, Vorobyev VA, Medvedev SV, Pakhomov SV, Roudas MS, Tervaniemi M, van Zuijen T, Naatanen R (2003): Hemispheric lateralization of cerebral blood‐flow changes during selective listening to dichotically presented continuous speech. Brain Res Cogn Brain Res 17: 201–211.

- Arnault P, Roger M (1990): Ventral temporal cortex in the rat: Connections of secondary auditory areas Te2 and Te3. J Comp Neurol 302: 110–123.

- Babiloni C, Brancucci A, Vecchio F, Arendt‐Nielsen L, Chen ACN, Rossini PM (2006): Anticipation of somatosensory and motor events increases centro‐parietal functional coupling: An EEG coherence study. Clin Neurophysiol 117: 1000–1008.

- Bilecen D, Scheffler K, Schmid N, Tschopp K, Seelig J (1998): Tonotopic organization of the human auditory cortex as detected by BOLD‐FMRI. Hear Res 126: 19–27.

- Boucher R, Bryden MP (1997): Laterality effects in the processing of melody and timbre. Neuropsychologia 35: 1467–1473.

- Bozhko GT, Slepchenko AF (1988): Functional organization of the callosal connections of the cat auditory cortex. Neurosci Behav Physiol 18: 323–330.

- Brancucci A, San Martini P (1999): Laterality in the perception of temporal cues of musical timbre. Neuropsychologia 37: 1445–1451.

- Brancucci A, San Martini P (2003): Hemispheric asymmetries in the perception of rapid (timbral) and slow (nontimbral) amplitude fluctuations of complex tones. Neuropsychology 17: 451–457.

- Brancucci A, Babiloni C, Babiloni F, Galderisi S, Mucci A, Tecchio F, Zappasodi F, Pizzella V, Romani GL, Rossini PM (2004): Inhibition of auditory cortical responses to ipsilateral stimuli during dichotic listening: Evidence from magnetoencephalography. Eur J Neurosci 19: 2329–2336.

- Brancucci A, Babiloni C, Rossini PM, Romani GL (2005a): Right hemisphere specialization for intensity discrimination of musical and speech sounds. Neuropsychologia 43: 1916–1923.

- Brancucci A, Babiloni C, Vecchio F, Galderisi S, Mucci A, Tecchio F, Romani GL, Rossini PM (2005b): Decrease of functional coupling between left and right auditory cortices during dichotic listening: An electroencephalography study. Neuroscience 136: 323–332.

- Bryden MP (1988): An overview of the dichotic listening procedure and its relation to cerebral organization. In: Hugdahl K, editor. Handbook of Dichotic Listening: Theory, Methods and Research. New York: Wiley; pp 1–44.

- Code RA, Winer JA (1986): Columnar organization and reciprocity of commissural connections in cat primary auditory cortex (AI). Hear Res 23: 205–222.

- Della Penna S, Del Gratta C, Granata C, Pasquarelli A, Pizzella V, Rossi R, Russo M, Torquati K, Ernè SN (2000): Biomagnetic systems for clinical use. Philos Mag B 80: 937–948.

- Della Penna S, Brancucci A, Babiloni C, Franciotti R, Pizzella V, Rossi D, Torquati K, Rossini PM, Romani GL (2006): Lateralization of dichotic speech stimuli is based on specific auditory pathways interactions: Neuromagnetic evidence. Cereb Cortex 2006 Dec 18; [Epub ahead of print] (in press).

- Diamond IT, Jones EG, Powell TP (1968): Interhemispheric fiber connections of the auditory cortex of the cat. Brain Res 11: 177–193.

- Eichele T, Nordby H, Rimol LM, Hugdahl K (2005): Asymmetry of evoked potential latency to speech sounds predicts the ear advantage in dichotic listening. Brain Res Cogn Brain Res 24: 405–412.

- Ellfolk U, Karrasch M, Laine M, Pesonen M, Krause CM (2006): Event‐related desynchronization/synchronization during an auditory‐verbal working memory task in mild Parkinson's disease. Clin Neurophysiol 117: 1737–1745.

- Fingelkurts A, Fingelkurts A, Krause C, Kaplan A, Borisov S, Sams M (2003): Structural (operational) synchrony of EEG alpha activity during an auditory memory task. Neuroimage 20: 529–542.

- Formisano E, Kim DS, Di Salle F, van de Moortele PF, Ugurbil K, Goebel R (2003): Mirror‐symmetric tonotopic maps in human primary auditory cortex. Neuron 40: 859–869.

- Gootjes L, Bouma A, Van Strien JW, Scheltens P, Stam CJ (2006): Attention modulates hemispheric differences in functional connectivity: Evidence from MEG recordings. Neuroimage 30: 245–253.

- Halliday DM, Rosenberg JR, Amjad AM, Breeze P, Conway BA, Farmer SF (1995): A framework for the analysis of mixed time series/point process data—Theory and application to the study of physiological tremor, single motor unit discharges and electromyograms. Prog Biophys Mol Biol 64: 237–278.

- Hari R (1990): Magnetic evoked fields of the human brain: Basic principles and applications. Electroencephalogr Clin Neurophysiol Suppl 41: 3–12.

- Hugdahl K (2000): Lateralization of cognitive processes in the brain. Acta Psychol (Amst) 105: 211–235.

- Hugdahl K, Bronnick K, Kyllingsbaek S, Law I, Gade A, Paulson OB (1999): Brain activation during dichotic presentations of consonant‐vowel and musical instrument stimuli: A 15O‐PET study. Neuropsychologia 37: 431–440.

- Hugdahl K, Law I, Kyllingsbaek S, Bronnick K, Gade A, Paulson OB (2000): Effects of attention on dichotic listening: An 15O‐PET study. Hum Brain Mapp 10: 87–97.

- Hugdahl K, Bodner T, Weiss E, Benke T (2003a): Dichotic listening performance and frontal lobe function. Brain Res Cogn Brain Res 16: 58–65.

- Hugdahl K, Rund BR, Lund A, Asbjornsen A, Egeland J, Landro NI, Roness A, Stordal KI, Sundet K (2003b): Attentional and executive dysfunctions in schizophrenia and depression: Evidence from dichotic listening performance. Biol Psychiatry 53: 609–616.

- Jancke L, Shah NJ (2002): Does dichotic listening probe temporal lobe functions? Neurology 58: 736–743.

- Jancke L, Buchanan TW, Lutz K, Shah NJ (2001): Focused and nonfocused attention in verbal and emotional dichotic listening: An FMRI study. Brain Lang 78: 349–363.

- Jancke L, Wustenberg T, Scheich H, Heinze HJ (2002): Phonetic perception and the temporal cortex. Neuroimage 15: 733–746.

- Jancke L, Specht K, Shah JN, Hugdahl K (2003): Focused attention in a simple dichotic listening task: An fMRI experiment. Brain Res Cogn Brain Res 16: 257–266.

- Kaiser J, Lutzenberger W, Preissl H, Ackermann H, Birbaumer N (2000): Right‐hemisphere dominance for the processing of sound‐source lateralization. J Neurosci 20: 6631–6639.

- Kalcher J, Pfurtscheller G (1995): Discrimination between phase‐locked and non‐phase‐locked event‐related EEG activity. Electroencephalogr Clin Neurophysiol 94: 381–384.

- Kallman HJ, Corballis MC (1975): Ear asymmetry in reaction time to musical sounds. Percept Psychophys 17: 368–370.

- Karino S, Yumoto M, Itoh K, Uno A, Yamakawa K, Sekimoto S, Kaga K (2006): Neuromagnetic responses to binaural beat in human cerebral cortex. J Neurophysiol 96: 1927–1938.

- Kimura D (1961): Cerebral dominance and the perception of verbal stimuli. Can J Psychol 15: 166–171.

- Kimura D (1967): Functional asymmetry of the brain in dichotic listening. Cortex 3: 163–168.

- Kinsbourne M (1970): The cerebral basis of lateral asymmetries in attention. Acta Psychol (Amst) 33: 193–201.

- Klimesch W (1996): Memory processes, brain oscillations and EEG synchronization. Int J Psychophysiol 24: 61–100.

- Klimesch W (1999): EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res Rev 29: 169–195.

- Klimesch W, Schimke H, Schweiger J (1994): Episodic and semantic memory: An analysis in the EEG theta and alpha band. Electroencephalogr Clin Neurophysiol 91: 428–441.

- Klimesch W, Doppelmayr M, Schimke H, Pachinger T (1996): Alpha frequency, reaction time, and the speed of processing information. J Clin Neurophysiol 13: 511–518.

- Klimesch W, Doppelmayr M, Russegger H, Pachinger T, Schwaiger J (1998): Induced alpha band power changes in the human EEG and attention. Neurosci Lett 244: 73–76.

- Krause CM, Gronholm P, Leinonen A, Laine M, Sakkinen AL, Soderholm C (2006): Modality matters: The effects of stimulus modality on the 4‐ to 30‐Hz brain electric oscillations during a lexical decision task. Brain Res 1110: 182–192.

- Lehtela L, Salmelin R, Hari R (1997): Evidence for reactive magnetic 10‐Hz rhythm in the human auditory cortex. Neurosci Lett 222(2): 111–114.

- Lipschutz B, Kolinsky R, Damhaut P, Wikler D, Goldman S (2002): Attention‐dependent changes of activation and connectivity in dichotic listening. Neuroimage 17: 643–656.

- Lopes da Silva FH, Vos JE, Mooibroek J, Van Rotterdam A (1980): Relative contributions of intracortical and thalamo‐cortical processes in the generation of alpha rhythms, revealed by partial coherence analysis. Electroencephalogr Clin Neurophysiol 50: 449–456.

- Makela JP (1988): Contra‐ and ipsilateral auditory stimuli produce different activation patterns at the human auditory cortex. A neuromagnetic study. Pflugers Arch 412: 12–16.

- Mathiak K, Hertrich I, Lutzenberger W, Ackermann H (2000): Encoding of temporal speech features (formant transients) during binaural and dichotic stimulus application: A whole‐head magnetencephalography study. Brain Res Cogn Brain Res 10: 125–131.

- Milner B, Taylor L, Sperry RW (1968): Lateralized suppression of dichotically presented digits after commissural section in man. Science 161: 184–186.

- Nunez P (1995): Neocortical Dynamics and Human EEG Rhythms. New York: Oxford University Press.

- O'Leary DS, Andreason NC, Hurtig RR, Hichwa RD, Watkins GL, Ponto LL, Rogers M, Kirchner PT (1996): A positron emission tomography study of binaurally and dichotically presented stimuli: Effects of level of language and directed attention. Brain Lang 53: 20–39.

- Pandya DN, Hallett M, Mukherjee SK (1969): Intra‐ and interhemispheric connections of the neocortical auditory system in the rhesus monkey. Brain Res 14: 49–65.

- Pesonen M, Bjornberg CH, Hamalainen H, Krause CM (2006): Brain oscillatory 1–30 Hz EEG ERD/ERS responses during the different stages of an auditory memory search task. Neurosci Lett 399(1–2): 45–50.

- Pfurtscheller G, Lopes da Silva FH (1999): Event‐related EEG/MEG synchronization and desynchronization: Basic principles. Clin Neurophysiol 110: 1842–1857.

- Plessen KJ, Lundervold A, Gruner R, Hammar A, Lundervold A, Peterson BS, Hugdahl K (2007): Functional brain asymmetry, attentional modulation, and interhemispheric transfer in boys with Tourette syndrome. Neuropsychologia 45: 767–774.

- Pollmann S, Maertens M, von Cramon DY, Lepsien J, Hugdahl K (2002): Dichotic listening in patients with splenial and nonsplenial callosal lesions. Neuropsychology 16: 56–64.

- Price CJ (2000): The anatomy of language: Contributions from functional neuroimaging. J Anat 197(Part 3): 335–359.

- Rappelsberger P, Petsche H (1988): Probability mapping: Power and coherence analyses of cognitive processes. Brain Topogr 1: 46–54.

- Romani GL, Williamson SJ, Kaufman L (1982): Tonotopic organization of the human auditory cortex. Science 216: 1339–1340.

- Sidtis JJ (1981): The complex tone test: Implications for the assessment of auditory laterality effects. Neuropsychologia 19: 103–111.

- Sidtis JJ (1988): Dichotic listening after commissurotomy. In: Hugdahl K, editor. Handbook of Dichotic Listening: Theory, Methods and Research. New York: Wiley; pp 161–184.

- Sparks R, Geschwind N (1968): Dichotic listening after section of neocortical commissures. Cortex 4: 3–16.

- Springer SP, Gazzaniga MS (1975): Dichotic testing of partial and complete split‐brain subjects. Neuropsychologia 13: 341–346.

- Springer SP, Sidtis J, Wilson D, Gazzaniga MS (1978): Left ear performance in dichotic listening following commissurotomy. Neuropsychologia 16: 305–312.

- Studdert‐Kennedy M, Shankweiler D (1970): Hemispheric specialization for speech perception. J Acoust Soc Am 48: 579–594.

- Tervaniemi M, Hugdahl K (2003): Lateralization of auditory‐cortex functions. Brain Res Rev 43: 231–246.

- Thatcher RW, Krause PJ, Hrybyk M (1986): Cortico‐cortical associations and EEG coherence: A two‐compartmental model. Electroencephalogr Clin Neurophysiol 64: 123–143.

- Thomsen T, Rimol LM, Ersland L, Hugdahl K (2004): Dichotic listening reveals functional specificity in prefrontal cortex: An fMRI study. Neuroimage 21: 211–218.

- Westerhausen R, Woerner W, Kreuder F, Schweiger E, Hugdahl K, Wittling W (2006): The role of the corpus callosum in dichotic listening: A combined morphological and diffusion tensor imaging study. Neuropsychology 20: 272–279.

- Yamasaki T, Goto Y, Taniwaki T, Kinukawa N, Kira J, Tobimatsu S (2005): Left hemisphere specialization for rapid temporal processing: A study with auditory 40 Hz steady‐state responses. Clin Neurophysiol 116: 393–400.