Abstract

How humans understand the actions and intentions of others remains poorly understood. Here we report the results of a magnetoencephalography (MEG) experiment to determine the temporal dynamics and spatial distribution of brain regions activated during execution and observation of a reach to grasp motion using real world stimuli. We show that although both conditions activate similar brain areas, there are distinct differences in the timing, pattern and location of activation. Specifically, observation of motion revealed a right hemisphere dominance with activation involving a network of regions that include frontal, temporal and parietal areas. In addition, the latencies of activation showed a task specific pattern. During movement execution, the earliest activation was observed in the left premotor and somatosensory regions, followed closely by left primary motor and STG at the time of movement onset. During observation, there was a shift in the timing of activation with the earliest activity occurring in the right temporal region followed by activity in the left motor areas. Activity within these areas was also characterized by a shift to a lower frequency in comparison with action execution. These results add to the growing body of evidence indicating a complex interaction within a distributed network involving motor and nonmotor regions during observation of real actions. Hum Brain Mapp, 2010. © 2009 Wiley‐Liss, Inc.

Keywords: magnetoencephalography, grasping, reaching, mirror neurons, intention

INTRODUCTION

The ability to understand and predict the actions and intentions of others is fundamental for social cognition. In spite of this, the neural mechanisms underlying action understanding remain controversial. Simulation theory proposes that the actions of others are recognized and understood by simulating the pattern of activity within the observer's own neural networks via the mirror neuron system (MNS) [Gallese et al.,1996; Rizzolatti et al.,2001]. The discovery of mirror neurons in the premotor cortex of the macaque monkey [Gallese et al.,1996] precipitated a growing body of research suggesting the existence of a similar mechanism in humans for understanding the actions and intentions of others. A wide range of brain imaging techniques (fMRI, PET, EEG, TMS and MEG) have now been used to show strong functional and anatomical associations between the execution and observation of the same action. Two key brain regions are thought to comprise the MNS: the premotor cortex and the inferior parietal cortex [Rizzolatti and Craighero,2004]. Action recognition involves a number of processes relating to recognition and representation of the goal of the observed action, as well as the intentions behind the action. Goal representation is thought to be mediated by the anterior intraparietal sulcus [Hamilton and Grafton,2006]. A third area has also been identified [Iacoboni et al.,2001; Puce and Perrett,2003] in the superior temporal sulcus (STS), a higher order visual region that is known to be involved in the processing of biological motion [Puce and Perrett,2003; Virji‐Babul et al.,2007].

In order to isolate the processes involved in action understanding, many of the motor based tasks used in previous experiments have used stimuli of static images or video clips in which a hand or arm is presented performing a particular action such as reaching for a wooden shape [Filimon et al.,2007], manipulating a series of rings or cups [Molnar‐Szakacs et al.,2006] or simply the action of isolated finger movements [Kessler et al.,2006]. Recently, a number of studies have examined the role of context in action understanding. Iacoboni et al. [2005] reported that actions embedded in contexts were associated with greater activity in mirror neuron areas such as the inferior frontal cortex in comparison with observing actions in the absence of context. Brass et al. [2007] also reported that unusual actions were associated with activations in the superior temporal gyrus. These tasks have been invaluable in extending the function of the mirror neuron system to go beyond simply recognizing the motor aspects of the action but also the intention of the action in relation to the context.

A number of studies have examined how the presence of a live model influences the activity of this network. Nishitani and Hari [2000] reported that observing pinching movements made by an experimenter in front of the subject led to strong activation in the left inferior frontal area and the primary motor area. Järveläinen et al. [2001] examined the neuromagnetic oscillatory activity of the primary motor cortex while subjects observed either live or videotaped movements of the hand. They found that live motion was associated with stronger activation of the primary motor cortex. Shimada et al. [2006] used near‐infrared spectroscopy to compare the activity of motor areas in infants when they watched either a live model performing an action or a televised version of the same action. They reported that the infants' motor areas were significantly more activated when observing a live person in comparison with the televised action and proposed that the human brain responds differently to the real and virtual worlds.

We designed the present study with the goal of characterizing the spatial temporal dynamics of the cortical areas underlying action representation during observation of a functional task. In this task the experimenter sat beside the subject and performed a reach to grasp motion with a coffee cup, within an MEG environment. The subjects viewed both their own actions and the actions of the experimenter from the same perspective. We have previously shown that this task results in significant mu suppression in bilateral sensorimotor areas during action execution and observation [Virji‐Babul et al.,2008]. Given the previous results on the influence of context on action observation, we predicted that viewing action within a more realistic context would activate the mirror neuron network as well as the structures involved in perception of social stimuli such as the superior temporal sulcus.

METHODS

Participants

Ten healthy adults (four males, six females; age range 20–40 years) with no history of neurological dysfunction or injury participated in this study. This study was approved by both the Simon Fraser University Research Ethics Board and the Down Syndrome Research Foundation Research Ethics Committee. All participants were right handed and all gave informed consent.

Experimental Paradigm

Participants were seated in an electromagnetically shielded room (Vacuumschmelze). Data were collected under three conditions: Rest, Execution and Observation. The experimenter sat next to the subject under all three conditions. In the Rest condition participants were asked to sit quietly with hands on their lap and eyes open. Timing of events associated with the performed and observed movements was measured using two nonmagnetic response pads (Lumitouch, Burnaby, B.C.) with highly sensitive buttons (four on each pad) placed adjacent to each other on a wooden board in front of the participant. For the movement task, participants began by resting their right hand on the buttons of one pad and made self‐paced movements to reach with their dominant hand and grasp and lift a cup that was placed on the buttons of the second pad. The participants were asked to lift the cup approximately 2–3 inches off the pad, return it to the same position and finally return to their initial hand position. This provided trigger signals that were recorded along with the MEG data indicating the onset of reaching (hand motion), onset of lifting (hand/object motion), placement of the cup on the pad (termination of object motion) and time of return of the moving hand (termination of hand motion). In the Observation condition participants sat with their hands in their lap and passively observed the experimenter performing the same action in an identical way, ensuring that timing of reaching and lifting events was highly similar across the two conditions. Vision of the participant's own hands during the Observation condition was obstructed by a cloth or blanket. In addition, the experimenter was seated on the subject's dominant side so that the subject viewed the action from the same perspective as during the Execution condition.
The experimenter's hand and reaching arm were positioned on the table so that they were visible to the participant throughout the session from rest to the completion of the movement. Each reach and grasp trial lasted approximately 3 seconds, with an intertrial interval of approximately 2–3 seconds. The number of trials ranged between 80 and 100 in each condition. The Observation and Execution conditions were counterbalanced.

MEG Data Acquisition

The MEG data were acquired using a 151‐channel whole head MEG system (VSM Medtech, Coquitlam, Canada) installed within a magnetically shielded room. For both Execution and Observation conditions, 420 seconds of continuous data were initially collected at a rate of 600 samples per second with a 150‐Hz low pass filter, using third‐order gradiometer noise cancellation. The data were manually inspected for artifacts and corresponding segments were marked as bad. Approximately 80–100 reaching/grasping trials per condition were retained for each subject for subsequent processing. Each data set was then filtered to the 0.2–50 Hz band and downsampled to 200 samples per second.

Four trigger events were recorded for each reaching/grasping trial. The first event, marking the onset of the reaching motion, was used in the present study because it was preceded by a 2–3‐second interval that provided a good baseline for detection of event‐related activity.

Data Processing

The SAM beamformer spatial filtering algorithm [Robinson and Vrba,1999] was used to identify areas of action or stimuli‐related activity. For forward modeling of the source fields, a multiple‐sphere approximation of the scalp surface of each participant was obtained based on their digitized head shape. Coregistration of the functional and anatomical data was performed by matching the digitized head shape to the subject's MRI image. If the latter was missing, the best matching MRI from a database of about one hundred images was used. To accomplish this, we initially selected several candidate MRIs whose interfiducial distances closely approximated the actual measurements. The MRI that had the best fit with the subject's head shape was used for co‐registration.

For each subject a three‐dimensional spatial distribution of averaged source activity over extended time windows was computed using a spatiotemporal beamforming method [Robinson,2004]. This involved identifying active and control windows of approximately equal lengths during the grasp‐lift and rest periods. For each voxel of a three‐dimensional grid (step 0.5 cm) covering the brain, SAM beamformer weights and source waveforms were calculated using standard software supplied with the MEG system (CTF MEG System Software v. 5.4). The power of the phase‐locked evoked component was computed for each time point in the active window and results were averaged over the entire window. The phase‐locked component is characterized by stable sign and latency across the trials. To extract it, the signal at each time point in the active window was averaged over trials and baselined using the mean signal in the control window [see Robinson,2004 for details]. The mean evoked power was then normalized by the projected sensor noise to compensate for spatial distortions and to scale the data into units of noise variance, yielding a pseudo‐Z value for the voxel [Robinson and Vrba,1999]. Spatial locations with high pseudo‐Z values identify brain areas where evoked activity is consistently related to the stimulus.
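The per‐voxel computation described above can be illustrated with a minimal sketch. It is not the CTF implementation: the function name `pseudo_z`, the array layout and the assumption that the projected noise power is already available for the voxel are all hypothetical choices made for illustration.

```python
import numpy as np

def pseudo_z(trials, active, control, noise_power):
    """Evoked-power pseudo-Z for one voxel (illustrative sketch only).

    trials      : array (n_trials, n_samples), beamformer source waveform per trial
    active      : slice selecting the active window
    control     : slice selecting the control window
    noise_power : projected sensor noise power at this voxel (assumed given)
    """
    # Averaging over trials keeps only the phase-locked (evoked) component.
    evoked = trials.mean(axis=0)
    # Baseline with the mean signal in the control window.
    evoked = evoked - evoked[control].mean()
    # Power at each time point, averaged over the active window.
    evoked_power = np.mean(evoked[active] ** 2)
    # Scale into units of noise variance.
    return evoked_power / noise_power
```

Repeating this over every voxel of the grid would yield a functional image whose maxima mark locations of consistent evoked activity.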

In our study we used windows of approximately 500 ms. The time windows for each participant were selected by examining averaged MEG waveforms from sensors located close to the motor area, as illustrated in Figure 1A. Window positions relative to the marker varied slightly depending on the subject and the condition, in order to accommodate most of the task‐related activity. The active window always started at the onset of the evoked response, about −200 ± 50 ms for the Execution condition and 0 ± 50 ms for the Observation condition. Its length was determined by the first fall off of the signal (typically 500 ± 50 ms). Averaging over a time interval ensures that possible jitter and the complex, oscillatory nature of the response are accounted for (see Fig. 1). The control window preceded the active window and had the same length to avoid statistical bias. The requirement for selection of the active window was that it should cover all task‐related activity but not be too long, as the effect may be diluted by time averaging. The windows were therefore selected on an individual basis. Using an identical window for each participant would have resulted in longer window lengths due to the inter‐subject variability.

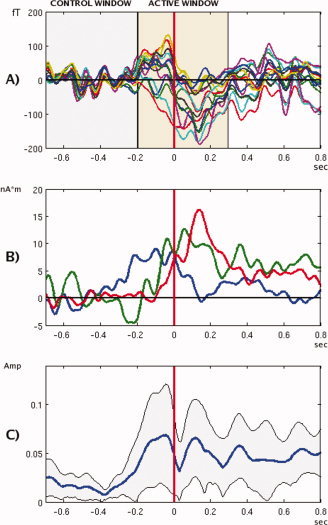

Figure 1.

Time courses of evoked fields in execution condition. (A) Output of 15 MEG sensors located over the left‐central area of the brain, for a single subject (TA) filtered to 0.2–15 Hz band. Shaded areas illustrate active and control windows selection. (B) Time courses of SAM virtual sensor at the left motor location (0.2–15 Hz), for subjects PA (blue), TA (green), CA (red). (C) Grand‐averaged amplitudes of the left motor virtual sensors outputs (0.2–8 Hz), in relative units: each waveform was normalized to a unit total power before averaging, to prevent bias caused by waveforms with larger amplitudes. Shaded area shows bounds of a 2 STD interval.

Location of Peak Activity in Beamformer Images

Functional images were spatially normalized into a common template space using the SPM99 package [Singh et al.,2002]. Each image was additionally normalized to its mean value over the entire head volume, and then a group‐averaged functional image was calculated. This additional normalization step was performed to prevent bias in the group‐averaged results that could be caused by individual data with large pseudo‐Z amplitudes.

We then examined the locations of the maxima in the group‐averaged images found using a peak detection algorithm. This resulted in two sets of maxima for Execution and Observation conditions. Statistically significant locations were used for further analysis. Only maxima that satisfied the following two conditions were retained: (a) the magnitude exceeded a statistical threshold obtained using surrogate data; and (b) a large number of participants had maxima in their individual functional images close to the group‐averaged location. Since the population distribution for the functional image data is unknown, one way to estimate statistical significance is to generate surrogate data and find the distribution from it. For this purpose, nonparametric permutation tests have been used in a number of recent studies to create surrogate functional images by randomly permuting segment labels (i.e., “active” and “control” labels) multiple times [Chau,2004; Singh,2003]. We adopted a similar approach, but instead of permuting the labels, we randomly varied the event marker positions in the dataset. Namely, one hundred sets of random event markers with 85 events per set were generated. Within each set, the markers were distributed with a uniform probability over the entire duration of the record. A pair of 0.5 second long “active” and “control” windows was attached to every such event. The same analysis was repeated for each participant and for every random set as for the real set of events. The data were then averaged over the subjects. Thus one hundred surrogate group‐averaged images for each condition were obtained. As the event timings were selected at random, these images represented nothing but statistical variability. We then found the distribution of the global maxima of these images. The 95th percentile of this distribution was used as a significance threshold for the real group‐averaged data. Note that using the largest (global) maximum as a test statistic allows strong control of the Type I statistical error in the multiple comparisons (multiple hypothesis testing) situation [Chau,2004; Shaffer,1995].
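The thresholding step can be sketched as follows, assuming the surrogate group‐averaged images have already been computed and stacked into one array. The function names and array layout are hypothetical; only the use of the global maximum and the 95th percentile comes from the description above.

```python
import numpy as np

def max_statistic_threshold(surrogate_images, alpha=0.05):
    """Significance threshold from surrogate images (illustrative sketch).

    surrogate_images : array (n_surrogates, ...) of group-averaged images
                       built from randomly placed event markers
    """
    # One global maximum per surrogate image; using the global maximum as the
    # test statistic is what controls the family-wise Type I error.
    flat = surrogate_images.reshape(len(surrogate_images), -1)
    global_maxima = flat.max(axis=1)
    # The (1 - alpha) percentile of the null distribution is the threshold.
    return np.percentile(global_maxima, 100.0 * (1.0 - alpha))

def significant_peaks(peak_values, threshold):
    """Indices of real-data peaks whose magnitude exceeds the threshold."""
    return [i for i, v in enumerate(peak_values) if v > threshold]
```

With only one hundred surrogates the upper tail of the null distribution is sampled coarsely, so the resulting threshold should be read as an estimate.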

To test whether a group‐averaged maximum represents a significant number of participants, we adopted the null hypothesis that each subject has maxima distributed randomly over the brain volume. Using simple statistical considerations, one can estimate the chance probability of finding a specified number of individual maxima within a small search distance around a group location, and thereby obtain a threshold value for this number depending on the desired significance level. In our analysis, we used 1.5 cm and 2 cm search radii and a P value of 0.05 for the group peaks selection (see further details of the procedure in Appendix).
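The Appendix is not reproduced here, so the following is only one plausible reading of the "simple statistical considerations", not the authors' exact procedure. It assumes that each subject contributes a fixed number of peaks placed uniformly and independently in the brain volume, and that subjects are independent, which gives a binomial tail probability. The brain volume and the number of peaks per subject are hypothetical inputs.

```python
from math import comb, pi

def chance_probability(n_subjects, n_hits, peaks_per_subject,
                       radius_cm, brain_volume_cm3):
    """P(at least n_hits subjects have a peak within radius_cm) under the null."""
    # Chance that a single uniformly placed peak lands in the search sphere.
    sphere = 4.0 / 3.0 * pi * radius_cm ** 3
    p_single = min(1.0, sphere / brain_volume_cm3)
    # Chance that at least one of a subject's peaks lands in the sphere.
    p_subject = 1.0 - (1.0 - p_single) ** peaks_per_subject
    # Binomial upper tail over independent subjects.
    return sum(comb(n_subjects, k) * p_subject ** k
               * (1.0 - p_subject) ** (n_subjects - k)
               for k in range(n_hits, n_subjects + 1))

def min_subjects(n_subjects, peaks_per_subject, radius_cm,
                 brain_volume_cm3, alpha=0.05):
    """Smallest subject count whose chance probability does not exceed alpha."""
    for n_hits in range(n_subjects + 1):
        p = chance_probability(n_subjects, n_hits, peaks_per_subject,
                               radius_cm, brain_volume_cm3)
        if p <= alpha:
            return n_hits
    return None  # no count reaches significance under these assumptions
```

Under this reading a larger search radius raises the chance hit rate, so more subjects are required before a group peak counts as representative.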

Finally, for each subject we constructed the SAM virtual sensors corresponding to group maxima in the subject's own brain space. The group locations (for each condition) were projected back from the template space to the subject's head coordinates. Each projection was replaced with the closest peak of the subject's functional image for the corresponding condition, provided that the peak could be found within 2 cm distance of the group maximum. If this condition could not be met, the projected group maximum itself was used. Thus we obtained two final sets of locations per subject that we labeled “Execution” and “Observation”. SAM virtual sensors were constructed in a standard way [Robinson and Vrba,1999] for both sets. The waveforms from these sensors were analyzed to obtain temporal information about the activations and to perform the time‐frequency analysis.

In subsequent processing, we used the “Execution” set for analyses in the Execution condition. In the Observation condition, we looked at both sets as the “Execution” set provided a direct comparison of two conditions at the same locations, while the “Observation” set related to activity not found in the Execution condition.

Laterality Index

The laterality index (LI) of the group‐averaged images was obtained by using a 95% significance statistical threshold (obtained by bootstrap) to choose significant voxels in the image. The values of these voxels were integrated separately for left and right hemispheres, and LI = (left − right)/(left + right) was estimated.
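A minimal sketch of this calculation is given below. It assumes the image is supplied as a flat array of voxel values with matching x coordinates in template space, with negative x denoting the left hemisphere (as in Table I); the function name and argument layout are hypothetical.

```python
import numpy as np

def laterality_index(values, x_coords, threshold):
    """LI = (left - right) / (left + right) over supra-threshold voxels."""
    values = np.asarray(values, dtype=float)
    x_coords = np.asarray(x_coords, dtype=float)
    significant = values > threshold
    # Integrate significant voxel values separately for each hemisphere.
    left = values[significant & (x_coords < 0)].sum()
    right = values[significant & (x_coords > 0)].sum()
    # Note: undefined (division by zero) if no voxel passes the threshold.
    return (left - right) / (left + right)
```

The index ranges from −1 (all significant activity on the right) to +1 (all on the left).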

Timing of Activation

Virtual sensor signals from both execution and observation sets varied significantly over the group, as illustrated by Figure 1B. This is not surprising taking into account the complexity of the motion and the fact that many different strategies may be used by the human brain to accomplish this action. Note also that for SAM beamformer the sign of reconstructed waveforms cannot be uniquely identified, thus adding to the variability. For waveforms of similar shapes, it is possible to align the signs by flipping some of the curves relative to the time axis. In our case the variability of the waveforms did not permit this process.

One way to overcome this difficulty is to use absolute values (amplitudes) of the signals (Fig. 1C). In this case, the signs will be lost but the information about the strength of the response is still preserved. Still, Figure 1C clearly illustrates that due to the complexity of this movement, and differences in individual waveforms, it is not possible to obtain consistent results from the group time courses.

Therefore, instead of relying on the averaged data, we looked at the timings of the maximal activity for individual subjects in both execution and observation conditions for both sets of locations. For each participant, the waveforms from virtual sensors were smoothed with an 8 Hz low‐pass filter, averaged and squared to obtain the time course of the power. We then selected the strongest peak in the active window and took its latency relative to the event marker to be the timing of the activation for the corresponding location for each participant. A pool of latency data obtained this way was used for statistical analyses of relative timing of activations in different locations and conditions.
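The latency extraction for a single virtual sensor might be sketched as follows. The filter type and order (a fourth‐order zero‐phase Butterworth) are assumptions, since only the 8 Hz cutoff is stated above, and the function name and arguments are hypothetical.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def peak_latency(trials, times, fs, active, cutoff_hz=8.0, order=4):
    """Latency of the strongest power peak in the active window (sketch).

    trials : array (n_trials, n_samples) of virtual sensor waveforms
    times  : array (n_samples,) of times relative to the event marker, in s
    fs     : sampling rate in Hz
    active : (start, end) of the active window, in s
    """
    # Low-pass smoothing; filtfilt applies the filter forward and backward,
    # so peaks are not shifted in time.
    b, a = butter(order, cutoff_hz, btype="low", fs=fs)
    smoothed = filtfilt(b, a, trials, axis=-1)
    # Average over trials, then square to obtain the power time course.
    power = smoothed.mean(axis=0) ** 2
    # Strongest peak inside the active window.
    in_window = (times >= active[0]) & (times <= active[1])
    return times[in_window][np.argmax(power[in_window])]
```

A zero‐phase filter is used in this sketch because a causal filter would delay every peak by an amount that depends on the filter, which would bias the latencies being compared.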

Time Frequency Distributions

The time‐frequency distributions (TFDs) of the virtual sensor signals were analyzed by computing spectrograms (that is, power spectra of the short‐time Fourier transforms [see Cohen,1989]). A 0.4‐second long Hamming window was moved in 5 ms steps in the interval [−1, 1] sec relative to the event marker. For every channel, we first calculated averaged TFDs for each subject, and then obtained a group average. Finally, the power for each frequency line was normalized to its mean in the interval [−0.6, 0] sec. The results (in decibels) were plotted using color encoding. To obtain significance thresholds, we took TFD data in the interval [−0.6, 0] sec for the Observation condition, and generated 1,000 bootstrap resamplings for each frequency line. For every sample, global minimum and maximum values were found, and then P = 0.01 thresholds for the minima and maxima were estimated from the obtained populations. Again, using global extremes as test statistics allows strong control of the Type I error. This procedure was repeated for every virtual channel. Thus we obtained statistical thresholds for minima and maxima as functions of the location (i.e., virtual channel) and frequency. The frequency dependences were smoothed using a 20 Hz running average. In the time frequency plots the points that did not reach significance levels were explicitly set to 0 dB.
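The spectrogram and baseline normalization for one subject and one virtual channel can be sketched with `scipy.signal.spectrogram`. This is an illustration under assumptions, not the original analysis code: the function name, the epoch layout and the choice to average power over trials before normalizing are hypothetical, and the bootstrap thresholding is omitted.

```python
import numpy as np
from scipy.signal import spectrogram

def baseline_normalized_tfd(trials, fs, t0, baseline=(-0.6, 0.0),
                            win_s=0.4, step_s=0.005):
    """Trial-averaged spectrogram in dB relative to a baseline interval.

    trials : array (n_trials, n_samples), epochs around the event marker
    fs     : sampling rate in Hz
    t0     : time of the first sample relative to the marker, in s
    """
    nperseg = int(round(win_s * fs))          # 0.4 s Hamming window
    step = max(1, int(round(step_s * fs)))    # 5 ms step (1 sample at 200 Hz)
    freqs, t, sxx = spectrogram(trials, fs=fs, window="hamming",
                                nperseg=nperseg, noverlap=nperseg - step,
                                axis=-1)
    tfd = sxx.mean(axis=0)                    # average power over trials
    t = t + t0                                # window centers relative to marker
    # Normalize each frequency line to its mean power in the baseline.
    in_base = (t >= baseline[0]) & (t < baseline[1])
    ref = tfd[:, in_base].mean(axis=1, keepdims=True)
    return freqs, t, 10.0 * np.log10(tfd / ref)
```

Negative values in the returned array correspond to a power decrease relative to baseline, that is, to event‐related desynchronization. Because each window is 0.4 s long, time points within 0.2 s of the epoch edges have no estimate.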

The time frequency plots typically show event‐related desynchronization (ERD) preceding and during the motion. To compare ERDs between the conditions, we averaged the spectrograms along each frequency line over the time interval [0, 0.5] sec relative to the marker. This provided an overall desynchronization as a function of frequency in the “active” period. We then looked at the minima of this dependence in four overlapping frequency bands: 4–8 Hz, 7–14 Hz, 13–26 Hz, 25–50 Hz. These bands roughly correspond to the conventional theta, alpha, beta and gamma brain rhythms. The minimum (if found) was assumed to be the desynchronization frequency for the band for a given location. Applying this procedure to every location in the “Execution” set, we thus obtained up to nine desynchronization frequencies for each band per condition. Comparison of these sets between the conditions reveals the global changes in the ERD frequencies across the locations, as shown in the “Results” section.
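The band‐wise search for desynchronization frequencies can be sketched as below. The criterion used for "if found" (a negative minimum that does not sit on the band edge) is an assumption made for illustration, as the exact rule is not stated; the function name and the `BANDS` dictionary are likewise hypothetical.

```python
import numpy as np

# Overlapping bands from the text, in Hz.
BANDS = {"theta": (4, 8), "alpha": (7, 14), "beta": (13, 26), "gamma": (25, 50)}

def desync_frequencies(freqs, t, tfd_db, window=(0.0, 0.5)):
    """Frequency of the strongest ERD in each band, or None if not found.

    freqs  : array (n_freqs,) in Hz
    t      : array (n_times,) in s relative to the marker
    tfd_db : array (n_freqs, n_times), baseline-normalized power in dB
    """
    # Average each frequency line over the active interval.
    in_win = (t >= window[0]) & (t <= window[1])
    profile = tfd_db[:, in_win].mean(axis=1)
    result = {}
    for name, (lo, hi) in BANDS.items():
        idx = np.flatnonzero((freqs >= lo) & (freqs <= hi))
        k = idx[np.argmin(profile[idx])]
        # Assumed criterion: a true power decrease located inside the band.
        found = profile[k] < 0 and k != idx[0] and k != idx[-1]
        result[name] = float(freqs[k]) if found else None
    return result
```

Collecting these values over all locations in the “Execution” set, separately for each condition, gives the samples compared between conditions.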

RESULTS

Areas of Activation for Execution and Observation

As explained in the “Methods” section, we used spatial distributions of the pseudo‐Z values (ratios of the phase‐locked signal power to projected sensor noise) to localize sources of sustained evoked activity.

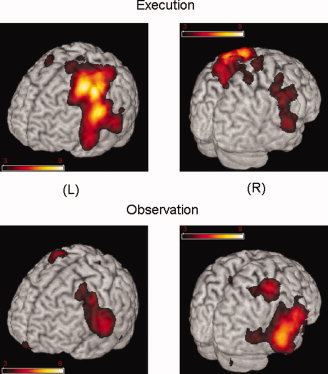

In the group‐averaged data, we found 15 virtual locations for the Execution condition and 13 locations for the Observation condition, significant at the α = 0.05 threshold for the peak magnitude. For the same significance level, we found that only nine group‐averaged maxima (out of 15) for the Execution condition and six (out of 13) for the Observation condition were represented by an adequate number of participants. Figure 2 and Table I show these average overall group activations for both Execution and Observation conditions. As expected, during Execution the strongest maxima were observed in the left motor areas; there was, however, significant activation in the right premotor and primary motor regions as well as the right superior temporal gyrus, showing that functional action sequences activate bilateral networks. Similarly, we observed bilateral activation of the frontal, parietal‐temporal and temporal regions during the Observation condition.

Figure 2.

Average overall group activations for the execution and observation conditions. Color coding represents the group averaged pseudo Z values. Images were generated using MRIcro software (http://www.mricro.com).

Table I.

Group averaged locations. Talairach coordinates are shown in mm. Execution and observation sets are shown in order of decreasing Z2. Locations were labeled using the Talairach Client [Lancaster et al.,2000].

| Peak # | X | Y | Z | # subj, r = 1.5 cm | # subj, r = 2 cm | Brain region | Brodmann area # | Range (mm) |
|---|---|---|---|---|---|---|---|---|
| Execution | | | | | | | | |
| 1 | −32.7 | −18.1 | 42.5 | 6 | 9 | L Precentral gyrus | 4 | 1 |
| 2 | −32.7 | −2 | 52.8 | 6 | 10 | L Middle frontal gyrus | 6 | 0 |
| 3 | −52.5 | −11.1 | 27.5 | 4 | 7 | L Precentral gyrus/STG | 4 | 3 |
| 4 | −32.7 | −44.5 | 58.6 | 2 | 6 | L Postcentral gyrus | 5 | 0 |
| 5 | 46.5 | −14.7 | 31.3 | 6 | 8 | R Precentral gyrus | 6 | 3 |
| 6 | 50.5 | −19.9 | 5.8 | 4 | 5 | R Superior temporal gyrus | 22 | 1 |
| 7 | 3 | −17 | 64.6 | 5 | 5 | R Medial frontal gyrus | 6 | 1 |
| 8 | 42.6 | −13.3 | 60.7 | 6 | 7 | R Precentral gyrus | 6 | 0 |
| 9 | 10.9 | −36.7 | 58.2 | 5 | 6 | R Postcentral gyrus | 4 | 3 |
| Observation | | | | | | | | |
| 10 | 58.4 | −32.1 | −4.1 | 4 | 6 | R Middle temporal gyrus | 21 | 3 |
| 11 | 58.4 | −3.9 | 16 | 8 | 10 | R Precentral gyrus | 4 | 0 |
| 12 | 42.6 | −32.8 | 58 | 6 | 6 | R Postcentral gyrus | 40 | 1 |
| 13 | −52.5 | −19.9 | 5.8 | 6 | 7 | L Superior temporal gyrus | 41 | 1 |
| 14 | −36.6 | −14.4 | 38.7 | 5 | 5 | L Precentral gyrus | 4 | 4 |
| 15 | −28.7 | −1.8 | 56.5 | 4 | 4 | L Subgyral | 6 | 1 |

Laterality Index

The results from the LI estimate provided the following values: Execution = 0.73, Observation = −0.50 indicating a clear left hemisphere dominance for the Execution condition and a right hemisphere dominance for Observation.

Latencies of Activations

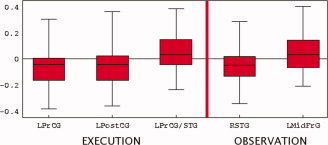

A one‐way ANOVA applied to activation times of virtual channels showed an effect for the Execution condition (P = 0.05), indicating that as a group, differences in latencies exceed statistical variability; however, the post‐hoc tests (Tukey) could not pinpoint specific channels with relative delays reaching statistical significance. For the Observation condition, ANOVA yielded no effect for either the Execution or the Observation locations, indicating that the variability was too large to reliably resolve time differences between individual pairs of virtual channels. We therefore fixed one virtual channel in the Execution set and computed latencies of all other channels relative to this reference channel. Making each channel in turn a reference, we obtained nine sets of latencies (one for each location in the “Execution” set) for each condition. The t‐tests performed on these sets showed that three channels out of nine had timings significantly different from all others in the Execution condition, and two in the Observation condition, as shown in Figure 3 (a P = 0.005 significance level was used for the t‐tests to account for multiple comparisons). On average, the left primary motor and somatosensory areas were activated 50 ms earlier than other areas, while the left temporal area was delayed relative to others by approximately the same time. In the Observation condition, we found the opposite pattern: activity in the right temporal area location was the earliest, followed by activity in the left motor areas. There were no significant time differences among the “Observation” set of locations.

Figure 3.

Latencies of activations. Precentral gyrus (PrCG), postcentral gyrus (PostCG), superior temporal gyrus (STG), mid frontal gyrus (MidFrG). [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

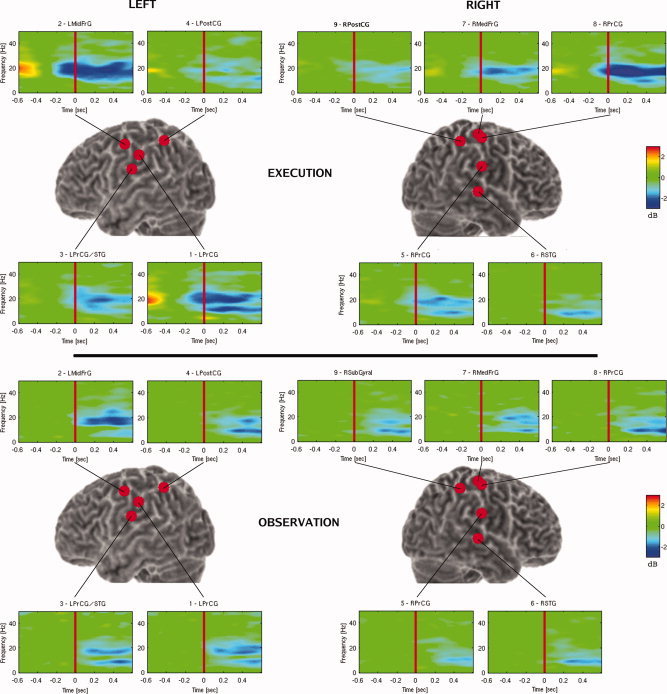

Time Frequency

Figure 4 shows the grand averaged virtual sensor time frequency plots for both conditions. The frequency plots are numbered from the channels displaying the highest pseudo‐Z values to the lowest. For any frequency line, the start of ERD was identified as the first time point where the absolute value of the change in the amplitude (in dB) exceeded the significance threshold. In Execution, ERD started approximately 150 ms before the onset of motion (vertical red line indicates movement onset) and continued to the completion of the motion. For the Execution condition, significant ERD was seen in both the alpha/μ (8–15 Hz) and the beta (15–35 Hz) frequency ranges in the left premotor and primary motor regions. ERD in both frequency bands was also observed in the right premotor and primary motor regions, although the onset of this activity occurred later. In the right STG region, ERD in the alpha band was observed after the onset of the movement.

Figure 4.

Grand averaged time frequency plots of virtual sensors. Precentral gyrus (PrCG), mid frontal gyrus (MidFrG), superior temporal gyrus (STG), postcentral gyrus (PostCG). Note that virtual sensor #3 is labeled LPrCG/STG as the location of this sensor was in between these two regions and could not be localized with greater accuracy.

In the Observation condition, significant ERD started approximately 100 msec after movement onset. Strong desynchronization in the beta band was observed in the left motor regions. Activity in the left parietal region occurred in both alpha and beta bands, approximately 200 msec following movement onset. On the right side desynchronization in the motor, parietal and STG regions was observed primarily in the alpha band.

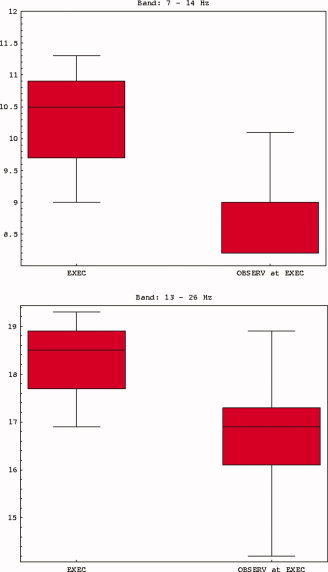

Spectral Analysis

In comparing the spectral distribution of ERD between the two conditions, we noted that the Observation condition was characterized by a downward shift in frequency. Figure 5 shows the box‐whisker plots of the desynchronization frequencies for the locations in the Execution and Observation conditions. The figure clearly demonstrates the shift by 1–2 Hz in alpha and beta bands when the subjects observed the motion. Although relatively small, this shift is statistically significant (P < 0.01). For the theta and gamma bands similar shifts were not observed.

Figure 5.

Desynchronization frequencies for the locations in the execution and observation conditions. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

DISCUSSION

The aim of this study was to determine the temporal dynamics and spatial distribution of brain regions activated during Execution and Observation of a reach to grasp motion using real world stimuli. There were three main differences between these two conditions. First, each condition was associated with specific hemisphere dominance. Although movement related to grasping the cup yielded significant activity in bilateral motor and parietal regions, there was a clear left hemisphere dominance with the strongest maxima in the left primary motor and premotor regions contralateral to the side of movement. In contrast, observation of the same motion resulted in a shift of dominance to the right hemisphere as shown by the laterality index. Second, the latencies of activation showed a task specific pattern. During movement execution, the earliest activation was observed in the left premotor and somatosensory regions, followed closely by left primary motor and STG at the time of movement onset. During Observation, there was a shift in the timing of activation with the earliest activity occurring in the right temporal region followed by activity in the left motor areas. Third, although significant ERD was seen in both conditions in the alpha/mu and beta frequency range in a network of areas that included bilateral frontal, parietal and temporal regions, there was a small but statistically significant downward shift in the overall alpha and beta band activity during Observation.

Despite well documented changes in cortical oscillatory rhythms during motor and cognitive tasks [e.g. Neuper and Pfurtscheller,2001; Schürmann and Basar,2001], the functional significance of these rhythms remains unclear. ERD is generally thought to indicate active processing [Pfurtscheller and Lopes da Silva,1999] with the suggestion that a desynchronized neural network has a larger capacity for processing inputs and for transferring information [Yamagishi et al.,2005]. Neuper and Pfurtscheller [2001] have further proposed a distinction between the lower (8–10 Hz) and upper (10–12 Hz) alpha band activity. They suggest that lower alpha desynchronization may be associated with nontask specific activity related to attention processes, whereas upper alpha activity is associated with task specific activity. During voluntary movement they showed that lower alpha activity was widely observed over primary sensorimotor, premotor and parietal regions, indicating a general readiness or pre‐setting of neural networks that are involved in the motor task but are not necessary to support the actual movement. The upper alpha band was associated with specific regions in the contralateral motor areas related to the performed movement. The shift to the lower alpha frequency that we observed in this experiment may be related to these processes. Observation of action may activate subsets of neural networks, including motor circuits, as learned events relating to an observed movement.

The binding of coherent or coupled neural assemblies may be disengaged and selectively re‐grouped with other task‐related circuits in relation to the environmental context. The MNS might therefore involve the coupling of neural networks based on previous experience, with a constant update or adaptation by disengaging and newly engaging a coupling of task‐specific relevant neuronal ensembles. Previous findings have already indicated selective thalamo‐cortical network activations during sensory processing [Llinás and Ribary,1993; Ribary,2005; Ribary et al.,1991] and during particular motor tasks [Schnitzler and Gross,2005; Volkmann et al.,1996].

The shift to the right hemisphere during Observation may also be related to these task‐related circuits. Given that our task did not require imitation, it likely precludes active preparation based on the observed motion. It is more likely that right hemisphere activation is related to attention control, processing of spatial functions, encoding the global features of the task or coding intention. It is important to highlight that mirror neurons are a subset of a population of neurons that are active during observation and execution of actions, and there is still much debate about whether this response is due to the activity of a distinct population of neurons that codes both perception and action [Dinstein et al.,2008]. Recently, Chong et al. [2008] showed for the first time that the right inferior parietal lobe (IPL) selectively responds to motor and perceptual representations of action, suggesting that the IPL may play a critical role in action understanding.

This recent finding, in combination with our results showing a right hemisphere dominance during observation and early activation in the right temporal region, may be another essential link between perception and action. It has been suggested that the temporal cortex interacts with premotor and parietal cortex, particularly during imitation, by integrating visual input with reafferent copies of the imitated action [Iacoboni et al.,2001]. Although in our study there was no requirement for imitation, we found that the strongest maximum was in the right temporal region. This area is also involved in cognitive processing related to perspective‐taking [Schulte‐Rüther et al.,2007]. The ability to distinguish the actions of the self from those of others may in fact be mediated by an interaction of the temporal‐parietal region with motor related areas. In the present experiment, the task took place within a social context with a live model and was designed from the first person perspective with the experimenter sitting next to the subject during the task. The combination of this visual perspective occurring within a real world context may have led to the activation of a network involving the STG, frontal and parietal regions.

Keysers and Perrett [2004] have recently reviewed the animal and human literature relating to the mirror neuron system and showed that while neurons in the STS, posterior parietal lobe and the premotor cortex respond to the sight and sounds of the actions of others, only the parietal and motor regions respond to the agent's own actions. Over time, the STS becomes critical for learning and discriminating self produced actions from the actions of others. They propose that the STS, posterior parietal lobe and the premotor cortex should be considered as a functional circuit with reciprocal connections that passes information in both directions to facilitate social understanding.

We should also note that in contrast to some previous fMRI studies on action observation, we did not observe significant group activations in the inferior frontal areas. Within the fMRI literature there is currently a debate on whether this area should, in fact, be considered a mirror neuron area. In a recent review, Morin and Grèzes [2008] note that activations in the inferior frontal gyrus (IFG) tend to be more “erratic” and do not appear to be sensitive to goal directed actions. In our study, while we observed the largest group activations in the more posterior regions of the frontal cortex, we did observe activations in the inferior frontal regions in some subjects; however, these were not large enough to be detected in the group average. While it is beyond the scope of this paper to discuss this debate in detail, we should point out that there are two key differences between our MEG results and previous fMRI studies that should be considered. First, the interval on which we focused our analysis was from 0 to 500 msec. This very early time interval is well suited for MEG but is not within the scope of the response time of fMRI. A second well‐known issue that must be mentioned is that in MEG the signal to noise ratio for inferior sources is less than ideal. As a result, sources in these areas may require very large activations to be detected. Whether the IFG is activated during this early time period during the reaching phase is therefore still unclear and requires further investigation.

In summary, our results show that observing behavior within a real world context involves a complex interaction within a distributed network involving activation of specific frequency bands associated with attention related processes within a motor context. Our findings confirm previous reports of regions of overlap in the neural areas involved during the execution and observation of the same action with less complex motor tasks [Caetano et al.,2007]. In our study these regions of overlap were the left and right precentral gyrus, and the left midfrontal gyrus. Activity in the motor areas is the central component of the traditional mirror neuron network. This type of activity has been reported not only during observation of human motion but also in the observation of robotic motion [Gazzola et al.,2007] and object motion [Virji‐Babul et al.,2007] suggesting that the perception of motion involves this subset of a more general network involved in linking perception with action. These data suggest that the MNS hypothesis may need to be expanded when considering movements occurring in a real environment. Further studies involving task‐specific neural network recruitments, binding and synchronization during action execution and observation will help to refine the MNS hypothesis.

Acknowledgements

This research was supported by the Down Syndrome Research Foundation, the Human Early Learning Partnership (HELP) and the Natural Sciences and Engineering Research Council of Canada (NSERC). We would like to thank Julie Unterman for assistance with data collection and all the individuals who participated in this study.

Significance Test for the Number of Subjects Showing a Local Maximum in the Vicinity of a Group Maximum

To determine if a group maximum represents a statistically significant number of participants, we adopt a null hypothesis that each subject has exactly $N_{\text{peaks}}$ peaks in their functional images, and that the peaks are independent and have a uniform random distribution over the brain volume $V_{\text{brain}}$. Let us estimate the chance probability of finding $k$ or fewer subjects that have at least one peak within a sphere of radius $r$ centered at a group maximum location.

First we look at an individual subject. If $N_{\text{peaks}} = 1$, then, ignoring edge effects, the probability of finding a peak within a certain search volume $v_{\text{search}}$ of the subject's functional image is $p_1 = v_{\text{search}}/V_{\text{brain}}$, where $v_{\text{search}} = (4/3)\pi r^3$. For $N_{\text{peaks}} > 1$ we have a standard Bernoulli trials model, because the peaks are independent. The probability $P$ of finding at least one peak within the search volume is then $P = 1 - (1 - p_1)^{N_{\text{peaks}}}$.

Assume that the total number of participants is $N_{\text{subj}}$. For each one, the probability of having one or more peaks within the search volume is $P$. As the subjects are all independent, we have a Bernoulli trials case again. Consequently, the total number of subjects having peaks within the search volume has a binomial distribution with parameters $P$ and $N_{\text{subj}}$. The probability of having $k$ or fewer subjects with at least one peak within the search volume is simply the CDF of this distribution, which is given by the formula:

$$F(k; N_{\text{subj}}, P) = \sum_{i=0}^{k} \binom{N_{\text{subj}}}{i} P^{i} (1 - P)^{N_{\text{subj}} - i}$$

Finally, setting some significance level $\alpha$, for example $\alpha = 0.05$, one can find a threshold value $k^*$ for the number of subjects such that the chance probability of obtaining $k \ge k^*$ is less than $\alpha$: $1 - F(k^* - 1; N_{\text{subj}}, P) < \alpha$.

In practice, the total number of peaks varied slightly from subject to subject. In our estimates the mean numbers of peaks were used: $N_{\text{peaks}} = 15$ for the execution and $N_{\text{peaks}} = 17$ for the observation condition, respectively. To obtain the brain volume $V_{\text{brain}}$, we chose a spherical head model with radius 8.5 cm and assumed that the brain occupies 2/3 of the sphere. With these settings, finding four (six) subjects or more with peaks within the search volume was significant at the $\alpha = 0.05$ level for a 1.5 (2) cm search distance.
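The threshold calculation described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' analysis code: the function names are hypothetical, and the number of subjects (`N_SUBJ = 10`) is an assumption made only for this example, since it is not stated in this appendix. The head radius, brain fraction, peak counts and search radii are the values given in the text.

```python
from math import comb, pi


def peak_probability(n_peaks, r_cm, head_radius_cm=8.5, brain_fraction=2 / 3):
    """Chance probability P that one subject has at least one peak within
    a search sphere of radius r_cm, under the uniform null hypothesis."""
    v_search = (4 / 3) * pi * r_cm ** 3
    v_brain = brain_fraction * (4 / 3) * pi * head_radius_cm ** 3
    p1 = v_search / v_brain                 # single-peak probability
    return 1 - (1 - p1) ** n_peaks          # at least one of n_peaks


def binom_cdf(k, n, p):
    """Binomial CDF F(k; n, p); returns 0 for k < 0 (empty sum)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))


def subject_threshold(n_subj, p, alpha=0.05):
    """Smallest k* such that the chance probability of k >= k* subjects
    is below alpha, or None if no such k* exists."""
    for k_star in range(n_subj + 1):
        tail = 1 - binom_cdf(k_star - 1, n_subj, p)   # P(k >= k*)
        if tail < alpha:
            return k_star
    return None


N_SUBJ = 10  # ASSUMPTION: illustrative value, not given in this appendix

for label, n_peaks in (("execution", 15), ("observation", 17)):
    for r in (1.5, 2.0):
        p = peak_probability(n_peaks, r)
        print(label, r, round(p, 4), subject_threshold(N_SUBJ, p))
```

Under the assumed group size of ten subjects, this sketch yields thresholds of four subjects for the 1.5 cm radius and six subjects for the 2 cm radius in both conditions, in line with the values quoted above; a different group size would give different thresholds.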

We used a pair of search radii (r = 1.5 and 2 cm) instead of just one to account for the discrete nature of the statistic in question (the number of individual peaks located within the search distance) and the relative arbitrariness of the choice of r. The problem may be illustrated by an example. Suppose four individual peaks are found within the r = 1.5 cm search distance from some group location. At the same time, five peaks are found using r = 2 cm. With all other parameters set as specified above, this group location should be retained as significant if r = 1.5 cm is used. On the contrary, it should be discarded if r = 2 cm is used. A similar problem occurs if three peaks are found with r = 1.5 cm (insignificant) and, say, six peaks with r = 2 cm (significant). Any particular choice of r is rather subjective, and we would like to be less sensitive to it. A simple way to achieve this, adopted in this study, was to use two reasonable values for r instead of one, and to retain the group location if it meets the significance criterion for either of them (or for both).
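The two-radius retention rule can be written as a short check. The sketch below is only an illustration: the function name and the dictionary layout are hypothetical, and the thresholds (four subjects at 1.5 cm, six at 2 cm) are the values quoted in this appendix.

```python
def retain_group_location(counts_by_radius, thresholds_by_radius):
    """Retain a group maximum if the subject count meets the significance
    threshold for at least one of the search radii."""
    return any(
        counts_by_radius[r] >= thresholds_by_radius[r]
        for r in thresholds_by_radius
    )


# Thresholds quoted in the text: radius in cm -> minimum number of subjects.
THRESHOLDS = {1.5: 4, 2.0: 6}

# The two examples from the text, plus one case that fails both criteria.
print(retain_group_location({1.5: 4, 2.0: 5}, THRESHOLDS))  # significant at 1.5 cm only
print(retain_group_location({1.5: 3, 2.0: 6}, THRESHOLDS))  # significant at 2 cm only
print(retain_group_location({1.5: 3, 2.0: 5}, THRESHOLDS))  # significant at neither
```

Using `any` makes the rule a logical OR over the radii, so a location is discarded only when it fails the criterion at every radius considered.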

REFERENCES

- Brass M, Schmitt RM, Spengler S, Gergely G (2007): Investigating action understanding: Inferential processes versus action simulation. Curr Biol 17: 2117–2121.

- Caetano G, Jousmäki V, Hari R (2007): Actor's and observer's primary motor cortices stabilize similarly after seen or heard motor actions. Proc Natl Acad Sci USA 104: 9058–9062.

- Chau W, McIntosh AR, Robinson SE, Schulz M, Pantev C (2004): Improving permutation test power for group analysis of spatially filtered MEG data. Neuroimage 23: 983–996.

- Chong TT, Cunnington R, Williams MA, Kanwisher N, Mattingley JB (2008): fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Curr Biol 18: 1576–1580.

- Cohen L (1989): Time‐frequency distributions - A review. Proc IEEE 77: 941–981.

- Dinstein I, Gardner JL, Jazayeri M, Heeger DJ (2008): Executed and observed movements have different distributed representations in human aIPS. J Neurosci 28: 11231–11239.

- Filimon F, Nelson JD, Hagler DJ, Sereno MI (2007): Human cortical representations for reaching: Mirror neurons for execution, observation, and imagery. Neuroimage 37: 1315–1328.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G (1996): Action recognition in the premotor cortex. Brain 119(Pt 2): 593–609.

- Gazzola V, Rizzolatti G, Wicker B, Keysers C (2007): The anthropomorphic brain: The mirror neuron system responds to human and robotic actions. Neuroimage 35: 1674–1684.

- Hamilton AF, Grafton ST (2006): Goal representation in human anterior intraparietal sulcus. J Neurosci 26: 1133–1137.

- Iacoboni M, Koski LM, Brass M, Bekkering H, Woods RP, Dubeau MC, Mazziotta JC, Rizzolatti G (2001): Reafferent copies of imitated actions in the right superior temporal cortex. Proc Natl Acad Sci USA 98: 13995–13999.

- Iacoboni M, Molnar‐Szakacs I, Gallese V, Buccino G, Mazziotta JC, Rizzolatti G (2005): Grasping the intentions of others with one's own mirror neuron system. PLoS Biol 3: e79.

- Järveläinen J, Schürmann M, Avikainen S, Hari R (2001): Stronger reactivity of the human primary motor cortex during observation of live rather than video motor acts. Neuroreport 12: 3493–3495.

- Kessler K, Biermann‐Ruben K, Jonas M, Siebner HR, Bäumer T, Münchau A, Schnitzler A (2006): Investigating the human mirror neuron system by means of cortical synchronization during the imitation of biological movements. Neuroimage 33: 227–238.

- Keysers C, Perrett DI (2004): Demystifying social cognition: A Hebbian perspective. Trends Cogn Sci 8: 501–507.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT (2000): Automated Talairach Atlas labels for functional brain mapping. Hum Brain Mapp 10: 120–131.

- Llinás R, Ribary U (1993): Coherent 40‐Hz oscillation characterizes dream state in humans. Proc Natl Acad Sci USA 90: 2078–2081.

- Molnar‐Szakacs I, Kaplan J, Greenfield PM, Iacoboni M (2006): Observing complex action sequences: The role of the fronto‐parietal mirror neuron system. Neuroimage 33: 923–935.

- Morin O, Grèzes J (2008): What is “mirror” in the premotor cortex? A review. Clinical Neurophysiology 38: 189–195.

- Nishitani N, Hari R (2000): Temporal dynamics of cortical representation for action. Proc Natl Acad Sci USA 97: 913–918.

- Neuper C, Pfurtscheller G (2001): Event‐related dynamics of cortical rhythms: Frequency‐specific features and functional correlates. Int J Psychophysiol 43: 41–58.

- Pfurtscheller G, Lopes da Silva FH (1999): Event‐related EEG/MEG synchronization and desynchronization: Basic principles. Clin Neurophysiol 110: 1842–1857.

- Puce A, Perrett D (2003): Electrophysiology and brain imaging of biological motion. Philos Trans R Soc Lond B Biol Sci 358: 435–445.

- Ribary U, Ioannides AA, Singh KD, Hasson R, Bolton JP, Lado F, Mogilner A, Llinás R (1991): Magnetic field tomography of coherent thalamocortical 40‐Hz oscillations in humans. Proc Natl Acad Sci USA 88: 11037–11041.

- Ribary U (2005): Dynamics of thalamo‐cortical network oscillations and human perception. Progr Brain Res 150: 127–142.

- Rizzolatti G, Craighero L (2004): The mirror‐neuron system. Ann Rev Neurosci 27: 169–192.

- Rizzolatti G, Fogassi L, Gallese V (2001): Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci 2: 661–670.

- Robinson SE (2004): Localization of event‐related activity by SAM(erf). Neurol Clin Neurophysiol 30: 109.

- Robinson SE, Vrba J (1999): Functional neuroimaging by synthetic aperture magnetometry (SAM). In: Yoshimoto T, Kotani M, Kuriki S, Karibe H, Nakasato N, editors. Recent Advances in Biomagnetism. Sendai: Tohoku University Press. pp 302–305.

- Schnitzler A, Gross J (2005): Normal and pathological oscillatory communication in the brain. Nat Rev Neurosci 6: 285–296.

- Schulte‐Rüther M, Markowitsch HJ, Fink GR, Piefke M (2007): Mirror neuron and theory of mind mechanisms involved in face‐to‐face interactions: A functional magnetic resonance imaging approach to empathy. J Cogn Neurosci 19: 1354–1372.

- Schürmann M, Basar E (2001): Functional aspects of alpha oscillations in the EEG. Int J Psychophysiol 39: 151–158.

- Shaffer JP (1995): Multiple hypothesis testing. Ann Rev Psych 46: 561–584.

- Shimada S, Hiraki K (2006): Infant's brain responses to live and televised action. Neuroimage 32: 930–939.

- Singh KD, Barnes GR, Hillebrand A (2003): Group imaging of task‐related changes in cortical synchronisation using nonparametric permutation testing. Neuroimage 19: 1589–1601.

- Singh KD, Barnes GR, Hillebrand A, Forde EME, Williams AL (2002): Task‐related changes in cortical synchronization are spatially coincident with the hemodynamic response. Neuroimage 16: 103–114. Available at: http://www.fil.ion.ucl.ac.uk/spm/

- Virji‐Babul N, Cheung T, Weeks D, Kerns K, Shiffrar M (2007): Neural activity involved in the perception of human and meaningful object motion. Neuroreport 18: 1125–1128.

- Virji‐Babul N, Moiseev A, Cheung T, Weeks D, Cheyne D, Ribary U (2008): Changes in mu rhythm during action observation and execution in adults with Down syndrome: Implications for action representation. Neurosci Lett 436: 177–180.

- Volkmann J, Joliot M, Mogilner A, Ioannides AA, Lado F, Fazzini E, Ribary U, Llinás R (1996): Central motor loop oscillations in parkinsonian resting tremor revealed by magnetoencephalography. Neurology 46: 1359–1370.