Abstract

To appropriately adapt to constant sensory stimulation, neurons in the auditory system are tuned to various acoustic characteristics, such as center frequencies, frequency modulations, and their combinations, particularly those combinations that carry species‐specific communicative functions. The present study asks whether such tunings extend beyond acoustic and communicative functions to auditory self‐relevance and expertise. More specifically, we examined the role of the listening biography—an individual's long term experience with a particular type of auditory input—on perceptual‐neural plasticity. Two groups of expert instrumentalists (violinists and flutists) listened to matched musical excerpts played on the two instruments (J.S. Bach Partitas for solo violin and flute) while their cerebral hemodynamic responses were measured using fMRI. Our experimental design allowed for a comprehensive investigation of the neurophysiology (cerebral hemodynamic responses as measured by fMRI) of auditory expertise (i.e., when violinists listened to violin music and when flutists listened to flute music) and nonexpertise (i.e., when subjects listened to music played on the other instrument). We found an extensive cerebral network of expertise, which implicates increased sensitivity to musical syntax (BA 44), timbre (auditory association cortex), and sound–motor interactions (precentral gyrus) when listening to music played on the instrument of expertise (the instrument for which subjects had a unique listening biography). These findings highlight auditory self‐relevance and expertise as a mechanism of perceptual‐neural plasticity, and implicate neural tuning that includes and extends beyond acoustic and communication‐relevant structures. Hum Brain Mapp 2009. © 2007 Wiley‐Liss, Inc.

Keywords: auditory cortex, music perception, auditory neuroscience, auditory expertise, plasticity, sensory neuroscience

INTRODUCTION

A fundamental question in neuroscience concerns how our nervous system adapts to the environment's constant sensory stimulation. One mechanism involves specialized neurons that are tuned to relevant aspects of sensory input, or attenuate irrelevant aspects [e.g., Gordon and O'Neil,2000; Rauschecker et al.,1995; Wong et al., 2007b]. In the auditory system, which subserves both speech and music processing, evidence suggests that low‐level neurons are tuned to basic acoustic properties such as center frequency (CF) and frequency modulation (FM) (“information‐bearing elements” or IBEs), while high‐level neurons are sensitive to relevant parts and combinations of IBEs, which carry species‐specific communicative functions [Suga,1995; Suga et al.,2000]. For example, in speech, combinations of formants (CF) and formant transitions (FM) form certain consonant‐vowel sequences, which are arguably represented in the middle and anterior portion of the superior temporal region [Binder et al.,2000; Liebenthal et al.,2005; Scott et al.,2000]. In music, cognition has been framed as the transformation of acoustic input to conscious experience via formal eliciting codes, a process parallel to language cognition in the codes' selection of communicatively relevant aspects of the acoustic stimulus [Bharucha et al.,2006]. For example, combinations of tones form chords and harmonic progressions that can be syntactically appropriate or inappropriate, a distinction registered in Broca's area [Maess et al.,2001]. The communicative relevance (or species specificity) of such neural specialization distinguishes how the auditory systems of listeners with different auditory experiences (e.g., native English speakers vs. Mandarin speakers [Wong et al.,2004a]; musicians vs. non‐musicians [Gaab and Schlaug,2003]) process the same combinations of IBEs.

Because music lacks the confound of a lexico‐semantics as invasive as that in language, it is uniquely suitable for the study of plasticity in neurological mechanisms [Zatorre,2005]. Although Koelsch et al. [2004] argue in favor of a greater role for musical semantics, there is certainly no dictionary of symbols and designates as exists for spoken language. Given a musician's special set of long‐term experiences with listening to and creating a set of sounds (their “listening biography”), we ask whether neural sensitivity reflects not only IBE combinations that are typically communication‐relevant, but also distinct IBE combinations that have become relevant across the course of this extensive history. In the terminology of Bharucha et al. [2006], do the special listening biographies of instrumentalists create special formal listening codes? To answer this question, cerebral hemodynamic responses in highly‐trained classical violinists and flutists were measured (using fMRI) while they listened to two sets of musical excerpts from the same style and genre (Bach Partitas): one for their instrument of expertise (violin or flute), and one for the other instrument. Bach Partitas contain combinations of IBEs that are relevant to musicians of the classical tradition, regardless of their instrument of expertise. However, musicians have a special listening biography associated with the timbre of their instrument of expertise. If neural sensitivity extends to IBE combinations shaped by the listening biography, we would expect that violinists listening to a Bach Partita for violin would engage a brain network similar to that engaged by flutists listening to a Bach Partita for flute.
This brain network should include the motor (precentral regions), auditory (superior temporal regions), syntactic (BA44), and executive (frontal regions) aspects uniquely associated with the instrument of expertise, including areas that are hypothesized to be essential for processing music by musicians [Ohnishi et al.,2001]. However, if neural sensitivity is confined to a broader level of IBE combinations, we would expect all classical musicians (violinists or flutists) to engage a brain network specific to classical music (no differences across groups), or a brain network specific to musical timbre (distinct responses to flute and violin music, regardless of instrument of expertise).

MATERIALS AND METHODS

Subjects

Nine highly trained violinists and seven highly trained flutists (mean age = 27.25 years, range 18–50 years) participated in the study (Table I shows subject characteristics). All subjects started playing their instrument of expertise before age 12 (mean = 8.06 years, range 3–12 years) and had played that instrument for at least 10 years. Although as a group, violinists appeared to have played their instrument of expertise for a longer time, the difference between the two groups was not statistically reliable in our samples [t (14) = 1.043, P = 0.315]. Although several subjects had experience playing additional instruments, none had extensive experience with the non‐expertise instrument being tested. All subjects except for one in each group were right‐handed as assessed by the Edinburgh Handedness Inventory [Oldfield,1971]. The remaining two subjects were ambidextrous. There was no significant difference between the groups in handedness scores. Our experimental protocol was approved by the Northwestern University Institutional Review Board.

Table I.

Subject characteristics

| Subject ID | Gender | Handedness | Instrument of expertise (Years) | Additional instrument(s) (Years) |
|---|---|---|---|---|
| V1 | F | Right | Violin (11) | None |
| V2 | F | Right | Violin (23) | None |
| V3 | F | Right | Violin (14) | None |
| V4 | F | Right | Violin (16) | Piano (2) |
| V5 | F | Right | Violin (19) | Piano (2) |
| V6 | F | Right | Violin (19) | Piano (2) |
| V7 | M | Right | Violin (10) | Viola (2) |
| V8 | F | Right | Violin (15) | Piano (1) |
| V9 | M | Ambidextrous | Violin (28) | Viola (10), Piano (2) |
| Mean | | | 17.2 | |
| F1 | F | Right | Flute (10) | None |
| F2 | F | Right | Flute (12) | None |
| F3 | F | Ambidextrous | Flute (24) | Piano (16) |
| F4 | F | Right | Flute (16) | None |
| F5 | M | Right | Flute (14) | Violin (2) |
| F6 | F | Right | Flute (11) | Piano (1), Guitar (2) |
| F7 | | Right | Flute (14) | Organ (4) |
| Mean | | | 14.4 | |

Years in the “Instrument of expertise” column indicates the number of continuous years subjects had played their instrument of expertise. Years in the “Additional instrument” column indicates number of years subjects had played an instrument other than their instrument of expertise.
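The group comparison of playing experience reported in the Subjects section can be checked directly against the years listed in Table I. The sketch below is illustrative only: it assumes a pooled‐variance (Student's) two‐sample t‐test, which the text does not name explicitly but which is consistent with the reported 14 degrees of freedom. The function name `pooled_t` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance

# Years of continuous playing on the instrument of expertise, copied from Table I.
violin_years = [11, 23, 14, 16, 19, 19, 10, 15, 28]
flute_years = [10, 12, 24, 16, 14, 11, 14]


def pooled_t(a, b):
    """Return (t, df) for a two-sample t-test with pooled variance.

    Assumption: equal-variance Student's test; the paper does not state
    which variant was used.
    """
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / standard_error, df


t_stat, df = pooled_t(violin_years, flute_years)
print(f"violin mean = {mean(violin_years):.1f}, flute mean = {mean(flute_years):.1f}")
print(f"t({df}) = {t_stat:.3f}")
```

Under this assumption the group means round to the 17.2 and 14.4 years shown in Table I, and the statistic agrees with the reported t (14) = 1.043.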

Stimuli and Procedures

Twelve‐second excerpts from the J.S. Bach Partita in A Minor for solo flute and Partita in D Minor for solo violin were used as stimuli. They were presented at a comfortable volume, comparable with that which a person would employ when listening to recorded music for pleasure. The excerpts were matched as closely as possible in syntactic and acoustic properties (except for the acoustic properties specific to the instruments): textures were matched because both pieces are monophonic; ranges were matched by the structural capabilities/constraints of the violin and flute instruments and because music from the same composer of the same genre (solo partitas) for treble instruments will naturally lie within a similar range; styles were matched because the excerpts were from the same composer and genre; tempos were matched because they were drawn from movements with matching tempo markings (e.g., the number of excerpts taken from the allemande was identical for both instruments); and dynamics were matched because excerpts were drawn from similar movements and amplitude normalized. It was advantageous to use matched excerpts written originally for flute and violin, rather than a single piece played on both instruments, so that the idiomatic qualities that distinguish writing for particular instruments would be preserved, to elicit as ecologically valid a response as possible. It is noteworthy that although stylistically matched excerpts for flute and violin preserve idiomatic qualities related to the specific instruments, they do not carry instrument‐specific syntactic distinctions; music written by the same composer in the same genre for solo flute and violin is matched in terms of the parameters considered to contribute to musical syntax [Lerdahl and Jackendoff,1983; Meyer,1989]. Stimuli were recorded from commercially available recordings onto a Pentium IV PC sampled at 44.1 kHz.
All subjects had previously played the piece in their timbre of expertise, so their special listening biography in relation to stimuli in their timbre of expertise included additional experience listening to as well as performing that music.

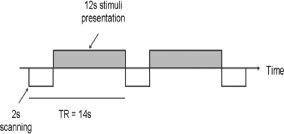

Figure 1 details the stimulus presentation and fMRI sequence. Each trial lasted 14 s: scanning occurred during the first 2 s, and a violin or flute excerpt was presented during the remaining 12 s. Subjects received specific instructions to attend to the music as closely as possible (we told the subjects that they were required to answer questions about the stimuli after scanning), paying attention in the same way they would when ordinarily listening to music in a concert hall or on recording. They were not provided with further details, so that they would not be biased to attend to syntactic properties, or expressive properties, or any other aspect of the music. Since the current study focuses on the listening biography, which encompasses a series of cognitive and sensory‐motor processes, we felt that it was important not to bias our subjects to perform a narrowly constructed task. Subjects were asked to press a button at the end of each excerpt using the index finger of their right hand; the recorded behavioral responses helped to confirm that the subjects were attending to the stimuli. Stimuli were presented binaurally via headphones that were custom made for MRI environments (http://www.Avotec.org). The stimuli were played at appropriate intervals using the E‐prime software program (Psychology Software Tools, Pittsburgh, USA).

Figure 1.

Stimulus presentation and image acquisition. Scanning occurred during the first 2 s and violin or flute excerpts were presented during the remaining 12 s. Note that scanner‐induced activations will not be present during subsequent image acquisition because of the 12‐s delay.

MRI Acquisition

MR images were acquired at the Center for Advanced MRI in the Department of Radiology at Northwestern University using a Siemens 3T Trio scanner. A high resolution, T1‐weighted 3D volume was acquired sagittally (MP‐RAGE; TR/TE = 2,100 ms/2.4 ms, flip angle = 8°, TI = 1,100 ms, matrix size = 256 × 256, FOV of 22 cm, slice thickness = 1 mm) and was used to localize the functional activation maps. T2*‐weighted functional images were acquired axially along the AC‐PC plane using a susceptibility weighted EPI pulse sequence while subjects performed the behavioral task (TE = 30 ms, TR = 14 s, flip angle = 90°, in‐plane resolution = 3.4375 mm², 38 slices with a slice thickness = 3 mm and zero gap were acquired in an interleaved measurement). A sparse sampling method was used, whereby the image acquisition occurred during the first 2 s of the 14‐s TR, thus minimizing contamination of the stimuli by scanner noise. In addition, the long TR provided sufficient time for the scanner‐noise generated hemodynamic response to decay so that its peak would not overlap with the hemodynamic response generated by the music stimuli [Belin et al.,1999; Gaab and Schlaug,2003; Hall et al.,1999; Wong et al.,2007a]. There were 75 trials of stimuli for each instrument, with order randomized across the fMRI experiment. In addition, there were 25 null trials of scanning when no stimuli were presented. In total, there were 175 (75 × 2 + 25) 14‐s TRs lasting about 41 min. These imaging procedures are similar to our published study (Wong et al., 2007a).
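The sparse‐sampling timing described above reduces to simple arithmetic, sketched here as a quick consistency check. The constants are taken from the text; the variable names are illustrative.

```python
# Sparse-sampling parameters as stated in the text.
TR_S = 14                    # repetition time in seconds
ACQUISITION_S = 2            # image acquisition occupies the start of each TR
TRIALS_PER_INSTRUMENT = 75   # violin trials and flute trials
NULL_TRIALS = 25             # volumes acquired with no stimulus

# Quiet window available for the musical excerpt within each TR.
stimulus_window_s = TR_S - ACQUISITION_S

# Total number of TRs and the resulting session length.
total_trs = 2 * TRIALS_PER_INSTRUMENT + NULL_TRIALS
total_minutes = total_trs * TR_S / 60

print(f"stimulus window: {stimulus_window_s} s")
print(f"total TRs: {total_trs}, duration: {total_minutes:.1f} min")
```

The 12‐s quiet window matches the excerpt length, and 175 TRs of 14 s come to roughly 40.8 min, in line with the "about 41 min" stated above.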

fMRI Data Analyses

The T2*‐weighted functional MR images (time series) were analyzed using BrainVoyager [Goebel,2004]. The data were preprocessed following the BrainVoyager recommended order, including scan time correction, 3D motion correction, spatial smoothing (FWHM 6 mm), linear detrending, and finally temporal filtering. Anatomical and functional images from each subject were transformed into the Talairach stereotaxic space [Talairach and Tournoux,1988]. The T2*‐weighted images were resampled to 1 × 1 × 1 mm³ after Talairach transformation. After these preprocessing procedures, the activation maps were estimated. Square waves modeling the events of interest were created as extrinsic model waveforms of the task‐related hemodynamic response. The two events of interest were Instrument of Expertise and Instrument of Nonexpertise. Note that even though the TR was 14‐s long, image acquisition only occurred during the first 2 s of the TR (see Fig. 1). Thus, the images collected reflected either a stimulus event or a null event (no stimulus presented). Imaging at specific time points relative to stimulus presentation removed the need to convolve the task‐related extrinsic waveforms with a hemodynamic response function before statistical analyses as is commonly done [Wong et al.,2004b]. The waveforms of the modeled events were used as regressors in a multiple linear regression of the voxel‐based time series. Normalized beta values signifying the fit of the regressors to the functional scanning series, voxel‐by‐voxel, for each condition were used in multisubject analyses. These analysis procedures are similar to our published studies (e.g., Wong et al.,2007a).
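To make the regression step concrete, the following minimal sketch fits unconvolved square‐wave (indicator) regressors to a single voxel's time series by ordinary least squares. It is not BrainVoyager's implementation. The six signal values are invented placeholders, not study data, and the helper `ols` and the condition labels are hypothetical names.

```python
def ols(design, signal):
    """Solve the normal equations (X'X) b = X'y by Gauss-Jordan elimination."""
    k = len(design[0])
    # Augmented matrix [X'X | X'y].
    aug = [
        [sum(row[i] * row[j] for row in design) for j in range(k)]
        + [sum(row[i] * y for row, y in zip(design, signal))]
        for i in range(k)
    ]
    for col in range(k):
        # Partial pivoting for numerical stability.
        pivot_row = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[i][k] for i in range(k)]


# One label per acquired volume (sparse sampling: one volume per trial).
labels = ["expertise", "nonexpertise", "null", "nonexpertise", "expertise", "null"]
# Placeholder voxel intensities, invented for illustration only.
signal = [103.2, 101.1, 100.1, 100.9, 102.8, 99.9]

# Square-wave regressors: 1 when the condition occurred, 0 otherwise, plus a
# constant column. No hemodynamic response function is convolved in.
design = [
    [1.0 if lab == "expertise" else 0.0,
     1.0 if lab == "nonexpertise" else 0.0,
     1.0]
    for lab in labels
]

beta_expertise, beta_nonexpertise, baseline = ols(design, signal)
print(beta_expertise, beta_nonexpertise, baseline)
```

With indicator regressors and null trials as the implicit baseline, each beta is the mean signal for that condition minus the mean of the null volumes, which is why no convolution step is needed in this design.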

RESULTS

Imaging Results

We performed two types of analyses: first a voxel‐wise random effect analysis contrasting listening for the Instrument of Expertise vs. Nonexpertise, and second, region‐of‐interest (ROI) analyses focusing more specifically on the left auditory cortex.

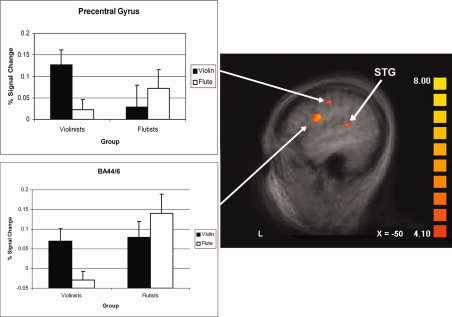

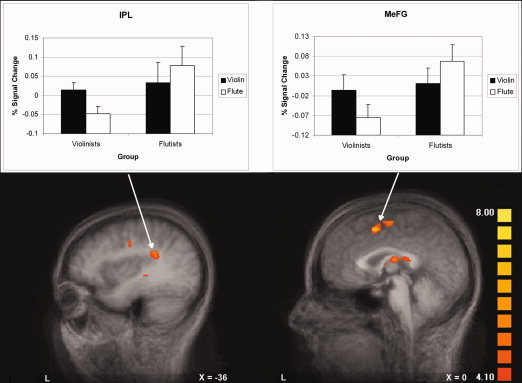

Voxel‐wise contrast (random effect analysis)

We report here a voxel‐wise random effect analysis contrasting Instrument of Expertise vs. Nonexpertise across all subjects (listening to one's own instrument vs. listening to another instrument). All clusters reported in Table II, except for the left STG cluster, exceeded a single‐voxel threshold of P < 0.001 and extended over at least 300 voxels. This activation and spatial threshold was determined by a Monte Carlo simulation procedure implemented in BrainVoyager, and corresponded to a family‐wise alpha of 0.05 for the whole brain. As shown in Table II, listening to music played on one's own instrument (i.e., violinists listening to violin excerpts and flutists listening to flute excerpts), relative to listening to music played on an untrained instrument (i.e., violinists listening to flute music and flutists listening to violin music), activated more strongly an extensive brain network in the left hemisphere including the precentral gyrus (BA 6), the inferior frontal gyrus (IFG, extending from BA 44 to BA 6) (Fig. 2), the inferior parietal lobule (IPL) (Fig. 3, left panel), and the medial frontal gyrus (MeFG) (Fig. 3, right panel). In addition, the left globus pallidus and the right middle frontal gyrus were activated.

Table II.

Regions of activation based on the Instrument of Expertise vs. Nonexpertise contrast (random effect analysis)

| Area | x | y | z | t value | Size (mm³) |
|---|---|---|---|---|---|
| R MFG | 30 | −1 | 34 | 6.3057 | 2825 |
| L MeFG | 0 | 8 | 49 | 7.057 | 1424 |
| L Globus Pallidus | −15 | 2 | −2 | 5.462 | 1124 |
| L Precentral | −33 | −10 | 37 | 5.7088 | 948 |
| L IPL | −36 | −37 | 25 | 5.776 | 718 |
| L Precentral, IFG (BA 6/BA 44) | −51 | −4 | 19 | 6.667 | 1415 |
| L STG | −50 | −37 | 11 | 5.869 | 182ᵃ |

The coordinates represent the location of the peak voxel for a cluster in Talairach space.

All the clusters except for L STG exceeded a single‐voxel P value of < 0.001 and a spatial extent of 300 voxels (corresponding to P < 0.05 after correction for multiple comparisons across the whole brain).

BA, approximate Brodmann's area; L, left; R, right; IFG, inferior frontal gyrus; MFG, middle frontal gyrus; MeFG, medial frontal gyrus; IPL, inferior parietal lobule; precentral, precentral gyrus; STG, superior temporal gyrus.

ᵃ The L STG cluster reported here is below the threshold for spatial extent.

Figure 2.

Brain activation revealed by the Instrument of Expertise vs. Nonexpertise Instrument contrast (based on a random effect analysis) showing activation in left BA 44/6, Precentral Gyrus, and STG. Bar graphs show activation for each instrument for each subject group. Error bars indicate standard error of the mean. Activation is projected onto a T1‐weighted volume averaged across all subjects; color bar indicates strength of activation in t value (also applied to Fig. 3). [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

Figure 3.

Brain activation revealed by the Instrument of Expertise vs. Nonexpertise Instrument contrast (based on a random effect analysis) showing activation in IPL (left panel) and MeFG (right panel). Bar graphs show activation for each instrument for each subject group. Error bars indicate standard error of the mean. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

To provide additional detail about the activation in the random effect analysis, Figures 2 and 3 include bar graphs showing the activation for each instrument for each subject group in the precentral gyrus and the IFG in Figure 2 and the IPL and MeFG in Figure 3. A 5 × 5 × 5‐voxel kernel was drawn around the strongest activating voxel for each region; voxels exceeding the single‐voxel P < 0.001 cutoff were averaged and shown in the graphs. For all the regions shown, a 2 × 2 repeated measures ANOVA (instrument × group) revealed a significant interaction [BA44/6: F = 42.985, P < 0.0001; Precentral Gyrus: F = 33.302, P < 0.0001; IPL: F = 32.153, P < 0.0001; MeFG: F = 42.203, P < 0.0001]. Except for the precentral gyrus, which showed a main effect of instrument only (overall responses to violin were larger, an effect exaggerated by the violinists' extremely large responses) [F = 5.388, P = 0.036], there were no main effects, suggesting that, in general, no one instrument induced a larger response and no one group of subjects responded with greater intensity.
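The kernel‐based extraction described above can be sketched as follows. This is an illustration under stated assumptions, not the software actually used: the toy 7 × 7 × 7 volume and p‐value map are synthetic, the function name `roi_mean` is hypothetical, and the kernel is assumed to lie entirely inside the volume.

```python
def roi_mean(volume, p_map, peak, half_width=2, p_cutoff=0.001):
    """Average the voxels in a cubic kernel centered on `peak`.

    half_width=2 gives a 5 x 5 x 5 kernel. If `p_cutoff` is a number, only
    voxels whose p value is below it are averaged; if it is None, every voxel
    in the kernel is used. Arrays are nested lists indexed [x][y][z].
    Assumption: the kernel does not extend past the volume edges.
    """
    px, py, pz = peak
    values = []
    for x in range(px - half_width, px + half_width + 1):
        for y in range(py - half_width, py + half_width + 1):
            for z in range(pz - half_width, pz + half_width + 1):
                if p_cutoff is None or p_map[x][y][z] < p_cutoff:
                    values.append(volume[x][y][z])
    return sum(values) / len(values) if values else None


# Synthetic 7 x 7 x 7 example: intensity equals the x index, and only voxels
# with x >= 3 pass the single-voxel threshold.
SIZE = 7
volume = [[[float(x) for _ in range(SIZE)] for _ in range(SIZE)] for x in range(SIZE)]
p_map = [[[0.0001 if x >= 3 else 0.5 for _ in range(SIZE)] for _ in range(SIZE)]
         for x in range(SIZE)]

thresholded = roi_mean(volume, p_map, peak=(3, 3, 3))
unthresholded = roi_mean(volume, p_map, peak=(3, 3, 3), p_cutoff=None)
print(thresholded, unthresholded)
```

Passing `p_cutoff=None` corresponds to the unthresholded variant of the analysis, in which all voxels in the kernel contribute to the average.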

Planned comparisons (based on two‐tailed paired t‐tests) specifically focusing on how listeners responded to their instrument of expertise and nonexpertise were conducted on each region in each subject group. A priori, we predicted that stronger activation should be observed for the instrument of expertise. Our planned comparisons confirmed this prediction, as suggested by a P value of less than 0.025 (Bonferroni correction for two t‐tests performed for each region) [BA44/6: violinists (P < 0.0002) and flutists (P < 0.025); Precentral Gyrus: violinists (P < 0.001) and flutists (P < 0.02); IPL: violinists (P < 0.001) and flutists (P < 0.025); MeFG: violinists (P < 0.002) and flutists (P < 0.001)].

It is worth mentioning that enlarging the ROI kernel to 7 mm³ and including all voxels in the kernel (i.e., applying no single‐voxel P value threshold) produced essentially identical patterns of results. Specifically, for all regions reported above, a 2 × 2 repeated measures ANOVA (instrument × group) revealed a significant interaction [BA44/6: F = 36.658, P < 0.0001; Precentral Gyrus: F = 21.798, P < 0.0001; IPL: F = 29.928, P < 0.0001; MeFG: F = 36.185, P < 0.0001].
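For readers less familiar with the instrument × group design, the interaction term in a 2 × 2 layout with one within‐subject factor (instrument) and one between‐subject factor (group) can be obtained from per‐subject difference scores: the interaction F equals the square of a pooled‐variance t statistic comparing the two groups' (violin minus flute) differences. The sketch below illustrates that identity. All response values are invented placeholders rather than study data, and the function name `interaction_f` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def interaction_f(diffs_a, diffs_b):
    """Return (F, df_error) for the instrument x group interaction.

    Computed as the squared pooled-variance t statistic on per-subject
    difference scores; the numerator has 1 degree of freedom.
    """
    na, nb = len(diffs_a), len(diffs_b)
    df_error = na + nb - 2
    pooled_var = ((na - 1) * variance(diffs_a) + (nb - 1) * variance(diffs_b)) / df_error
    t = (mean(diffs_a) - mean(diffs_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t * t, df_error


# Placeholder (violin, flute) responses per subject, invented for illustration.
violinists = [(0.9, 0.5), (1.0, 0.5), (1.1, 0.5), (0.8, 0.3), (0.9, 0.5),
              (1.2, 0.6), (1.0, 0.5), (0.7, 0.2), (1.0, 0.5)]
flutists = [(0.4, 0.7), (0.5, 0.7), (0.3, 0.7), (0.4, 0.7),
            (0.6, 0.9), (0.5, 0.7), (0.2, 0.6)]

violinist_diffs = [violin - flute for violin, flute in violinists]
flutist_diffs = [violin - flute for violin, flute in flutists]

f_value, df_error = interaction_f(violinist_diffs, flutist_diffs)
print(f"F(1, {df_error}) = {f_value:.3f}")
```

With nine violinists and seven flutists the error term has 14 degrees of freedom. A large F indicates that the direction of the violin versus flute difference depends on the listener's instrument of expertise.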

Region‐of‐interest analysis for STG

Because we hypothesized activation in the left auditory cortex to be associated with the listening biography, we performed two ROI analyses focusing on the left STG. In the first analysis, the cluster selected was one with voxels exceeding a single‐voxel P value of < 0.001 in the voxel‐wise random effect contrast discussed earlier (Instrument of Expertise vs. Nonexpertise), but with activation not extensive enough to survive a cluster size threshold according to the Monte Carlo simulation performed. This region is centered in the posterior superior temporal gyrus [−50, −37, 11] with a cluster size of 182 voxels. In a 2 × 2 repeated measures ANOVA, we found a significant instrument (violin or flute) × group (violinists or flutists) interaction [F = 35.435, P < 0.0001], suggesting that activation in this left STG region was stronger when listeners listened to their instrument of expertise. Paired t‐tests revealed that subjects responded more strongly to their instrument of expertise [violinists: P < 0.001; flutists: P < 0.02]. Figure 4 shows these STG results. This interaction remained significant when unthresholded data were used with a 5 mm³ kernel [F = 23.787, P < 0.0001] and with a 7 mm³ kernel [F = 25.558, P < 0.0001].

Figure 4.

Left STG activation demonstrating a listening biography effect. Top panel: Percent signal change in the left STG cluster (cluster defined by activation in group map) shown on Figure 2, which revealed a significant instrument × group interaction (see text for statistics). Bottom panel: Percent signal change in a 5 × 5 × 5‐mm³ kernel surrounding [−57, −36, 10] in left STG defined by Ohnishi et al. [2001]; a significant instrument × group interaction was also found. Error bars indicate standard error of the mean.

In the second analysis, a 5 × 5 × 5‐mm³ kernel was drawn around Talairach coordinates [−57, −36, 10] in the posterior STG, which was found to show stronger activation in musicians relative to nonmusicians during passive music listening similar to our present study [Ohnishi et al.,2001]. Intensity (% signal change) was extracted from all voxels in this 5 × 5 × 5‐mm³ cluster without any statistical threshold being applied, unlike the above ROI in which only voxels exceeding a particular P value were included. A 2 × 2 repeated measures ANOVA similarly showed a significant instrument × group interaction [F = 5.604, P < 0.0033] (Fig. 4) with no main effects. The same analysis focusing on the right STG [52, −23, 9] reported by Ohnishi et al. yielded neither a significant interaction nor main effects. Similar to the aforementioned results, using a 7 × 7 × 7‐mm³ kernel still resulted in a significant interaction for left but not right STG activation [left STG: F = 5.152, P < 0.04; right STG: F = 2.993, P = 0.137].

DISCUSSION

The current study shows that the auditory system is sensitive not only to IBEs such as center frequencies and frequency modulations and their combinations (e.g., vowels, consonants, pitches, and chords), but also to the long‐term listening biography of classical instrumentalists, in particular to the special experience instrumentalists have with music for their instrument of expertise. If acoustic differences between the two sets of stimuli had been the primary relevant factor, results would have shown selective responses to violin and flute music, regardless of instrument of expertise. Instead, results show selective responses to music played on the instrument of expertise (violin for violinists and flute for flutists), allowing each instrument to serve as the control for the other condition (violin for flutists and flute for violinists). Many studies have identified special processing in musicians [Gaab and Schlaug,2003; Münte et al.,2003], but they have compared musicians with non‐musicians, leaving open an explanation based on genetic predisposition rather than training. Although it is plausible that genetic predispositions might influence some people to become musicians, it is less plausible that genetic predispositions influence the selection of specific instruments. By comparing two groups of highly trained musicians differing only in their instrument of expertise, rather than comparing musicians and nonmusicians, this study contributes support to the notion that some of the special characteristics of the expert auditory system in musicians are due to training, and not to genetic predispositions. It is particularly important to point out that although Ohnishi et al.
[2001] found stronger activation in the left posterior STG in musicians relative to non‐musicians during passive music listening (similar to our study's task), we found stronger activation in the same region during listening to the instrument of expertise, suggesting that the importance of the left STG is in processing sounds that a listener (musician or not) has long‐term experience with, and is not necessarily associated with any other differences (including genetic factors) between musicians and non‐musicians. The same comparison in the right STG did not yield significant expertise‐specific results.

Self‐Relevance and the Listening Biography

It is particularly noteworthy that the relevant regions were activated selectively in response to music for the instrument of expertise, not to music in almost all ways identical, except for the different instrument. This selectivity suggests that it is not just structural or syntactic features (since those were matched) that elicit musical responses; rather, an individual's listening biography – the contexts and modalities within which prior musical experiences have occurred – shapes musical perception in important ways. For performers (subjects) in our study, the listening biography uniquely associated with the piece in the timbre of expertise included experience playing it, and additional experience listening to it. For example, the activity in the left medial frontal gyrus triggered by the instrument of expertise suggests a personal and high‐level executive response to the instrument with which the performer has an extensive history. The activated frontal regions have been implicated in the special processing associated with stimuli related to a person's notion of self [see Gillihan and Farah,2005, for a review]. This special processing of self‐relevant stimuli might yield a survival advantage and reflect our evolutionary history. The present study provides a novel example of self‐relevance in response to non‐linguistic auditory input. Music for the instrument of expertise likely raises issues of evaluation and judgment for performers, as they compare, for example, the quality of the instrumentalist with their own. Performers are liable to engage more with the implied social dynamic of the performance when they can imagine themselves performing the actions that created the sounds, and compare choices made by the actual performer with ones they would have made had they been playing the instrument. 
Although previous studies have shown that musicians exhibit particular sensitivities to timbres with which they have special long‐term auditory experience [Fujioka et al.,2006; Pantev et al.,1989,2001], they have not revealed a network this extensive. We argue that because the stimuli used in previous studies [e.g., Pantev et al.,2001] were isolated tones rather than full musical excerpts as in the present study, the materials were not sufficient to trigger the full extent of the response demonstrated in this study. It is worth noting that the neural distinction is not associated with a completely different network, but rather with a single network activated to different extents: a system arguably more efficient than developing a completely separate network.

Syntactic and Motor Involvement in Music Listening

The left inferior frontal gyrus (more specifically BA44), selectively activated by musicians listening to music for their own instrument of expertise, had been thought to be language‐specific before being additionally implicated in the syntactical processing of music [Patel and Balaban,2001]. Since performer expressivity depends on musical syntax [Palmer,1997], performers might be more invested in syntactical processing for music in their timbre of expertise. Seeking to evaluate the expressive success of a performance by comparing it to their own achievements on the instrument, subjects may have invested extra energy in the syntactic processing that forms the basis for expressive assessment. Musical syntax is often conceptualized as a robust phenomenon, determined largely by features in the acoustic signal, but the discovery of selective BA44 activity in response to music for the timbre of expertise suggests that the listening biography (the listener's special set of prior experiences with the music) can modulate syntactic processing. Another region often associated with language, the left superior temporal gyrus, was also selectively activated by subjects in response to music for their timbre of expertise. This activation likely reflects the effect of the listening biography on other aspects of auditory processing; for example, the more sophisticated analysis of subtle timbral nuances for the instrument of expertise.

It is noteworthy that activation in the inferior frontal region can be attributed to the involvement of the mirror neuron network [Lahav et al.,2007; Ramnani and Miall,2004; Rizzolatti et al.,2001]. Janata and Grafton [2003] observed that “the sensory experience of musical patterns is intimately coupled with action,” and speech sounds have been shown to selectively activate distinct motor regions in the precentral gyrus [Pulvermüller et al.,2006]. Similarly for music, musical practice has been shown to bind representations of sound and motion, so that finger motions trigger imagined sound, and heard sound triggers imagined finger motions [Bangert et al.,2006; Haueisen and Knösche,2001]. We attribute the activity shown by our subjects in the precentral gyrus (related to motor control) and the globus pallidus (related to posture control and the suppression of unwanted movements) to sound‐motor interactions in response to music in the timbre of expertise. However, we believe that the major portion of BA44 activation shown in this study is more appropriately attributed to syntactic processing. Musical training has been shown to enhance syntactical processing as reflected in behavioral measures (clearer and more robust “key profiles”) [Krumhansl,1990] and brain responses (larger P300s and P600s to syntactic anomalies) [Besson et al.,1994; Besson and Fata,1995; Granot and Donchin,2002; Koelsch et al.,2002; Krohn et al.,2007]. General musical training (lessons on any instrument) has been shown to facilitate general music‐syntactic processing, and this study suggests that specific musical training (special listening biographies associated with music for the timbre of expertise) can facilitate music‐syntactic processing for specific kinds of musical input.

A Broader Account of Auditory Expertise

This study shows that musical structure does not exclusively shape musical responses; rather, personal experiences and listening histories intervene to generate special reactions. Classically trained performers are already a small subset of the population of musical listeners. That they recruited such different areas to process highly similar music in two timbres, depending only on the instrument on which they had been trained, suggests that personal experience, training, and familiarity play an even larger role in music cognition than is usually assumed in the literature. In addition to addressing a long‐standing concern in neuroscience regarding perceptual‐neural plasticity, our findings have implications for sensory preference (e.g., why some people adore music other people cannot stand), education (e.g., how training affects experience), and communication (e.g., how different people can hear different things in the same musical piece or sequence of sensory stimuli). In terms of Bharucha et al.'s musical perception framework of a code transforming acoustic signals to cognitive representation [Bharucha et al.,2006], this study shows that different listening biographies create different codes: multimodal familiarity causes listeners to transform music into experience differently.

The experience‐based neurologic expertise system uncovered by this study establishes a framework within which deficits and achievements in the development of expertise can be studied. According to this framework, long‐term exposure and use shape responses in fundamental ways that include and extend beyond the IBE‐tuned neurons and the central “what” and “where” auditory pathways [Rauschecker,1998].

Acknowledgements

The authors thank Holly Weis, Richard Cheung, Tyler Perrachione, Geshri Gunasekera, and Nondas Leloudas for their assistance in this research.

REFERENCES

- Bangert M, Peschel T, Schlaug G, Rotte M, Drescher D, Hinrichs H, Heinze H, Altenmüller E (2006): Shared networks for auditory and motor processing in professional pianists: Evidence from fMRI conjunction. NeuroImage 30: 917–926.

- Belin P, Zatorre RJ, Hoge R, Evans AC, Pike B (1999): Event‐related fMRI of the auditory cortex. NeuroImage 10: 417–429.

- Besson M, Fata F (1995): An event‐related potential (ERP) study of musical expectancy: Comparison of musicians with nonmusicians. J Exp Psychol Hum Percept Perform 21: 1278–1296.

- Besson M, Fata F, Requin J (1994): Brain waves associated with musical incongruities differ for musicians and nonmusicians. Neurosci Lett 168: 101–105.

- Bharucha JJ, Curtis M, Paroo K (2006): Varieties of musical experience. Cognition 100: 131–172.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET (2000): Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex 10: 512–528.

- Fujioka T, Ross B, Kakigi R, Pantev C, Trainor LJ (2006): One year of musical training affects development of auditory cortical‐evoked fields in young children. Brain 129: 2593–2608.

- Gaab N, Schlaug G (2003): The effect of musicianship on pitch memory in performance matched groups. Neuroreport 14: 2291–2295.

- Gillihan SJ, Farah MJ (2005): Is self special? A critical review of evidence from experimental psychology and cognitive neuroscience. Psychol Bull 131: 76–97.

- Goebel R (2004): Brain Voyager QX. Pittsburgh: Psychology Software Tools.

- Gordon M, O'Neill WE (2000): An extralemniscal component of the mustached bat inferior colliculus selective for direction and rate of linear frequency modulations. J Comp Neurol 426: 165–181.

- Granot R, Donchin E (2002): Do Re Mi Fa Sol La Ti—Constraints, congruity, and musical training: An event‐related brain potentials study of musical expectancies. Music Percept 19: 487–528.

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Gurney EM, Bowtell RW (1999): “Sparse” temporal sampling in auditory fMRI. Hum Brain Mapp 7: 213–223.

- Haueisen J, Knösche TR (2001): Involuntary motor activity in pianists evoked by music perception. J Cogn Neurosci 13: 786–792.

- Janata P, Grafton ST (2003): Swinging in the brain: Shared neural substrates for behaviors related to sequencing and music. Nat Neurosci 6: 682–687.

- Koelsch S, Schmidt B, Kansok J (2002): Effects of musical expertise on the early anterior negativity: An event‐related brain potential study. Psychophysiology 39: 657–663.

- Koelsch S, Kasper E, Sammler D, Schulze K, Gunter T, Friederici A (2004): Music, language and meaning: Brain signatures of semantic processing. Nat Neurosci 7: 302–307.

- Krohn KI, Brattico E, Välimäki V, Tervaniemi M (2007): Neural representations of the hierarchical scale pitch structure. Music Percept 24: 281–297.

- Krumhansl CL (1990): Cognitive Foundations of Musical Pitch. New York: Oxford University Press.

- Lahav A, Saltzman E, Schlaug G (2007): Action representation of sound: Audiomotor recognition network while listening to newly acquired actions. J Neurosci 27: 308–314.

- Lerdahl F, Jackendoff R (1983): A Generative Theory of Tonal Music. Cambridge, MA: MIT Press.

- Liebenthal E, Binder JR, Spitzer SM, Possing ET, Medler DA (2005): Neural substrates of phonemic perception. Cereb Cortex 15: 1621–1631.

- Maess B, Koelsch S, Gunter TC, Friederici AD (2001): Musical syntax is processed in Broca's area: An MEG study. Nat Neurosci 4: 540–545.

- Meyer L (1989): Style and Music: Theory, History, and Ideology. Chicago: University of Chicago Press.

- Münte TF, Nager W, Beiss T, Schroeder C, Altenmüller E (2003): Specialization of the specialized: Electrophysiological investigations in professional musicians. Ann NY Acad Sci 999: 131–139.

- Ohnishi T, Matsuda H, Asada T, Aruga M, Hirakata M, Nishikawa M, Katoh A, Imabayashi E (2001): Functional anatomy of musical perception in musicians. Cereb Cortex 11: 754–760.

- Oldfield RC (1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113.

- Palmer C (1997): Music performance. Annu Rev Psychol 48: 115–138.

- Pantev C, Hoke M, Lutkenhoner B, Lehnertz K (1989): Tonotopic organization of the auditory cortex: Pitch versus frequency representation. Science 246: 486–488.

- Pantev C, Roberts LE, Schulz M, Engelien A, Ross B (2001): Timbre‐specific enhancement of auditory cortical representations in musicians. Neuroreport 12: 169–174.

- Patel AD, Balaban E (2001): Human pitch perception is reflected in the timing of stimulus‐related cortical activity. Nat Neurosci 4: 839–844.

- Pulvermüller F, Huss M, Kherif F, Moscoso del Prado Martin F, Hasuk O, Shtyrov Y (2006): Motor cortex maps articulatory features of speech sounds. Proc Natl Acad Sci USA 103: 7865–7870.

- Ramnani N, Miall RC (2004): A system in the human brain for predicting the actions of others. Nat Neurosci 7: 85–90.

- Rauschecker JP, Tian B, Hauser M (1995): Processing of complex sounds in the macaque nonprimary auditory cortex. Science 268: 111–114.

- Rauschecker JP (1998): Cortical processing of complex sounds. Curr Opin Neurobiol 8: 516–521.

- Rizzolatti G, Fogassi L, Gallese V (2001): Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci 2: 661–670.

- Scott SK, Blank CC, Rosen S, Wise RJ (2000): Identification of a pathway for intelligible speech in the left temporal lobe. Brain 123: 2400–2406.

- Suga N (1995): Sharpening of frequency tuning by inhibition in the central auditory system: Tribute to Yasuji Katsuki. Neurosci Res 21: 287–299.

- Suga N, Gao E, Zhang Y, Ma X, Olsen JF (2000): The corticofugal system for hearing: Recent progress. Proc Natl Acad Sci USA 97: 11807–11814.

- Talairach J, Tournoux P (1988): Co‐Planar Stereotactic Atlas of the Human Brain. New York: Thieme.

- Wong PCM, Parsons LM, Martinez M, Diehl RL (2004a): The role of the insular cortex in pitch pattern perception: The effect of linguistic contexts. J Neurosci 24: 9153–9160.

- Wong PCM, Nusbaum HC, Small SL (2004b): Neural bases of talker normalization. J Cogn Neurosci 16: 1173–1184.

- Wong PCM, Perrachione TK, Parrish TB (2007a): Neural characteristics of successful and less successful speech and word learning in adults. Hum Brain Mapp 28: 995–1006. DOI: 10.1002/hbm.20330.

- Wong PCM, Skoe E, Russo NM, Dees T, Kraus N (2007b): Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat Neurosci 10: 420–422.

- Zatorre RJ (2005): Music, the food of neuroscience? Nature 434: 312–315.