Abstract

One of the functions of emotional vocalizations is the regulation of social relationships, such as those between adults and children. Listening to infant vocalizations is known to engage the amygdala as well as the anterior and posterior cingulate cortices. However, the functional relationships between these structures still require clarification. Here, nonparental women and men listened to laughing and crying of preverbal infants and to vocalization‐derived control stimuli while performing a pure tone detection task during low‐noise functional magnetic resonance imaging. Infant vocalizations elicited stronger activation in the amygdala and anterior cingulate cortex (ACC) of women, whereas the alienated control stimuli elicited stronger activation in men. Independent of the listeners' gender, auditory cortex (AC) and posterior cingulate cortex (PCC) were more strongly activated by the control stimuli than by infant laughing or crying. The gender‐dependent correlates of neural activity in the amygdala and ACC may reflect neural predispositions in women for responding to preverbal infant vocalizations, whereas the gender‐independent similarity of activation patterns in PCC and AC may reflect more sensory‐based and cognitive levels of neural processing. Compared with our previous work on adult laughing and crying, the infant vocalizations elicited several‐fold higher amygdala activation. Hum Brain Mapp 2007. © 2006 Wiley‐Liss, Inc.

Keywords: human vocalizations, emotions, gender differences, valence effects, familiarity

INTRODUCTION

Emotions play a key role in the regulation of social behavior. Perceiving emotional vocalizations, for example, engages a variety of brain regions involved in processing not only their physical auditory attributes but also their social and biological relevance. The neural structures underlying sensory, cognitive, and behavioral aspects of emotional stimulus processing include regions of sensory and association cortex, as well as limbic and paralimbic structures that evaluate and mediate autonomic and expressive‐motor aspects of emotions and associate stimuli with adequate emotional responses. Brain structures such as the orbitofrontal, prefrontal, and cingulate cortices play a role in regulating emotional behavior and in representing one's own social environment and relationships [Adolphs, 2003; Gainotti, 2001].

Using functional magnetic resonance imaging (fMRI), it was previously shown that listening to nonverbal emotional vocalizations (laughing, crying, and expressions of fear) elicited activation in the human amygdala in addition to activation in auditory cortex (AC) [Phillips et al., 1998; Sander and Scheich, 2001, 2005]. Besides the amygdala, infant crying activated the anterior and posterior cingulate cortices in mothers [Lorberbaum et al., 1999, 2002], brain structures known to be relevant for caregiving behavior [MacLean, 1993; Pedersen, 2004]. Elaborating on these findings, Seifritz et al. [2003] showed that neural activation in response to infant vocalizations was modulated by the social (parental) state and the gender of the listener. While parents exhibited stronger amygdala activation in response to infant crying, nonparents did so in response to infant laughing; only women, however, showed decreases of anterior cingulate cortex (ACC) activation in response to both vocalizations, independent of parental state. Socially less salient vocalizations, such as pleasant and unpleasant words, were also found to activate the anterior and posterior cingulate cortices [Maddock et al., 2003]. Thus, a specific ensemble of brain structures processing human verbal and nonverbal vocalizations of emotional relevance comprises AC, amygdala, and cingulate cortex [Lorberbaum et al., 2002; Maddock et al., 2003; Phillips et al., 1998; Seifritz et al., 2003]. This ensemble is not specific to humans, since the same brain structures were also activated in awake rhesus monkeys listening to species‐specific calls, as recently shown with positron emission tomography (PET) [Gil‐da‐Costa et al., 2004].

Gender differences in neural activity are common in various brain structures during the processing of socially and biologically relevant information, such as human faces [Fischer et al., 2004], speech [Kansaku et al., 2000; but see Lattner et al., 2005; Shaywitz et al., 1995], and emotions. They were observed during viewing of facial expressions of emotion [Killgore and Yurgelun‐Todd, 2001; Schneider et al., 2000] and of erotic stimuli [Bradley et al., 2001; Hamann et al., 2004; Sabatinelli et al., 2004], in relation to memory for negative emotions [Cahill et al., 2004a, b], and in response to the nonverbal vocalizations laughing and crying [Sander and Scheich, 2001; Seifritz et al., 2003].

Using low‐noise fMRI, the present study aimed at further investigating the involvement of the amygdala‐cingulate‐auditory cortex ensemble in processing laughing and crying, and especially the activity relationships between these structures. Our analysis focused on amygdala, cingulate cortex, and auditory cortex because they are not only responsive to human emotional vocalizations (see above) but also anatomically interconnected [Amaral et al., 1992; Barbas et al., 1999; Vogt and Pandya, 1987; Yukie, 1995, 2002].

Since we had previously studied adult laughing and crying [Sander and Scheich, 2001, 2005], we were interested in assessing whether infant vocalizations produced obviously different brain activation. It is a common notion in biology that infant vocalizations are extremely salient for conspecifics, whether or not they are parents. Therefore, we first compared amygdala activation elicited by infant vocalizations in some of the same listeners who had previously participated in the studies on adult vocalizations.

Second, based on reported gender differences in ACC [Seifritz et al., 2003] and amygdala activation [Cahill et al., 2004b; Fischer et al., 2004; Hamann et al., 2004; Sander and Scheich, 2001; Schneider et al., 2000] during the processing of emotional stimuli, we hypothesized activation differences between men and women in these two structures in response to laughing and crying of preverbal infants. Vocalizations of preverbal infants were used because they are a primary means of communication and rather reliable elicitors of (maternal) caregiving behavior [Zeifman, 2001]. There may even be a disposition in women for processing preverbal infant vocalizations, as suggested by gender differences in opioid receptors, especially during the reproductive state of women [Zubieta et al., 1999]. This assumption is supported by animal studies demonstrating that the presence of female sex hormones is necessary for spontaneous pup retrieval by mice lacking experience with pups [Ehret and Koch, 1989]. In targeting potential gender differences in ACC and amygdala activation by infant vocalizations, we used a variety of laughing and crying stimuli from different individuals to account for influences related to characteristics of individual infants or of particular acoustic stimuli.

Third, we tested for valence effects of the stimuli (laughing vs. crying) within the different structures. Based on our findings on adult laughing and crying [Sander and Scheich, 2001, 2005], we did not expect to observe valence differences here.

Fourth, by introducing control stimuli that were acoustically derived from the infant vocalizations by fragment recombination and that sounded somewhat alienated but still like laughing and crying, we attempted to force the brain system to make finer distinctions between natural and unnatural stimuli.

METHODS

Participants

Participants were 9 women and 9 men, all right‐handed [Edinburgh Handedness Inventory, Oldfield, 1971] and with normal hearing. With the exception of one man, who was excluded from further data analysis, none of the participants had children of their own at the time of investigation. Women (n = 9; mean age 24.33 ± 3.2 years; laterality quotient 89.97 ± 15.05) and men (n = 8; mean age 27.75 ± 6.41 years; laterality quotient 94.33 ± 8.19) did not differ in age or handedness (P > 0.1 and P > 0.4, respectively). The study was performed in compliance with the Declaration of Helsinki. The experimental procedure was explained to the participants, and all of them gave written informed consent to the study, which was approved by the ethical committee of the University of Magdeburg.

Stimuli

Natural samples of continuous laughing and crying of preverbal infants (2 female, 2 male; mean age 15.4 months, range 10.8–17.3 months) were recorded by the parents in the home environment. Recordings were assessed with respect to acoustic quality and clarity of emotional expression. Two representative examples each of laughing and of crying were chosen from each infant and cut to a length of 78 s, with linear rise/fall times of 250 ms (CoolEditPro, Syntrillium Software Corporation, Phoenix).
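The cutting and ramping step can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the CoolEditPro procedure that was actually used: the signal is taken to be a mono list of floats, the excerpt is assumed to start at the first sample (the text does not say where each 78‐s excerpt began), and `cut_with_ramps` is a hypothetical helper name.

```python
def cut_with_ramps(samples, sr, duration_s=78.0, ramp_s=0.250):
    """Cut a mono signal to duration_s seconds and apply linear rise/fall ramps.

    samples: sequence of floats; sr: sampling rate in Hz.
    Assumption: the excerpt starts at the first sample of the recording.
    """
    n = int(round(duration_s * sr))
    r = int(round(ramp_s * sr))
    if len(samples) < n:
        raise ValueError("recording shorter than the requested duration")
    if 2 * r > n:
        raise ValueError("ramps longer than the excerpt")
    out = [float(x) for x in samples[:n]]
    for k in range(r):
        gain = k / r             # rises linearly from 0 towards 1
        out[k] *= gain           # fade in
        out[n - 1 - k] *= gain   # fade out (mirror image of the fade in)
    return out
```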

The decision to create fragment recombinations of laughing and crying as control stimuli (see below) was driven by two motives: (i) to preserve the spectral and temporal complexity of the originals, at least within the fragments, and (ii) to avoid the shift in stimulus category that perceptually nonemotional controls would introduce.

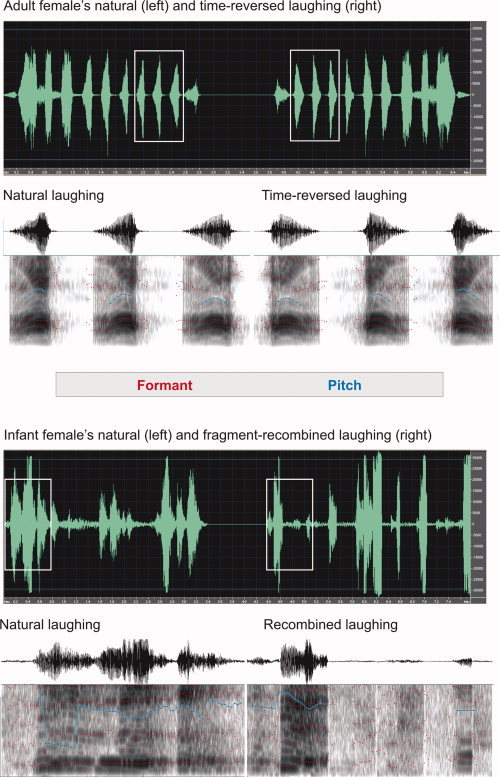

Control stimuli were generated by splitting each laughing and crying stimulus into 150 ms segments, which, after edge smoothing (linear rise/fall times of 5 ms), were randomly recombined (fragment recombination). They sounded like laughing and crying, but with a touch of “alienation”. Compared with the adult laughing and crying of our previous study [Sander and Scheich, 2005], which were produced by professional actors, these natural infant vocalizations were assumed to be more salient. Examples of acoustic features of infant and adult laughing are given in Figure 1 [Boersma and Weenink, 1992–2003].
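The fragment recombination can be sketched as follows. The sketch assumes that “randomly recombined” means a random permutation in which each 150‐ms segment is used exactly once, and that leftover samples shorter than one segment are dropped; the function names are hypothetical.

```python
import random

def _ramp(segment, ramp_n):
    """Apply linear rise/fall ramps of ramp_n samples to one segment."""
    seg = [float(x) for x in segment]
    n = len(seg)
    for k in range(min(ramp_n, n // 2)):
        gain = k / ramp_n
        seg[k] *= gain
        seg[n - 1 - k] *= gain
    return seg

def fragment_recombine(samples, sr, seg_s=0.150, ramp_s=0.005, seed=None):
    """Split into seg_s-long segments, smooth their edges, and shuffle them.

    Assumptions: 'random recombination' is read as a random permutation of
    all segments (each used exactly once); trailing samples that do not fill
    a whole segment are dropped.
    """
    seg_n = int(round(seg_s * sr))
    ramp_n = int(round(ramp_s * sr))
    n_seg = len(samples) // seg_n
    segments = [_ramp(samples[i * seg_n:(i + 1) * seg_n], ramp_n)
                for i in range(n_seg)]
    random.Random(seed).shuffle(segments)   # seeded for reproducibility
    return [x for seg in segments for x in seg]
```

At a sampling rate of 44.1 kHz (an assumed value; the recording rate is not reported), a 150‐ms segment spans 6,615 samples and a 78‐s stimulus divides into exactly 520 segments, so no samples are discarded. Passing a fixed `seed` makes a given control stimulus reproducible.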

Figure 1.

Spectrograms of excerpts from adult female laughing (top) and infant female laughing (bottom), with total durations of 2,820 ms (adult) and 3,379 ms (infant). The first row of each shows the waveforms of natural laughing (left) and of time‐reversed (adult) or fragment‐recombined (infant) laughing (right). Oscillograms and sonograms of the sound selections outlined in the first rows (rectangles) are shown in the second rows; they last 673 ms for the adult and 774 ms for the infant stimulus.

Stimulus Presentation, Task, and Procedure

Stimuli were presented in an fMRI block design, alternating with a reference condition (see below). Stimulus blocks consisted of continuous “Laughing” or “Crying” or of the acoustic controls, “unnatural Laughing” or “unnatural Crying”, named according to their origin. Stimulus presentation was counterbalanced across emotional valence (laughing, crying), gender of voice (female, male), and order of presentation mode (natural laughing and crying followed by the unnatural versions, or vice versa). No information was given about the infants themselves (e.g., gender or attractiveness) or about how many stimuli came from the same infant. The experimental session comprised 16 stimulus blocks of 78 s (four blocks of each stimulus class) and 16 “Reference” blocks of 52 s without stimulus presentation, resulting in a total duration of 34 min 40 s.
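The stated session length can be checked with a few lines of arithmetic, assuming that each stimulus block lasted as long as the 78‐s stimuli described under Stimuli and each Reference block 52 s.

```python
# Block structure of the session; the 78-s stimulus-block length is an
# assumption taken from the stimulus duration given under Stimuli.
N_STIM_BLOCKS = 16   # four blocks of each of the four stimulus classes
N_REF_BLOCKS = 16
STIM_BLOCK_S = 78
REF_BLOCK_S = 52

def session_duration(n_stim=N_STIM_BLOCKS, n_ref=N_REF_BLOCKS,
                     stim_s=STIM_BLOCK_S, ref_s=REF_BLOCK_S):
    """Return the session length as (minutes, seconds)."""
    return divmod(n_stim * stim_s + n_ref * ref_s, 60)

print(session_duration())   # (34, 40)
```

With 52‐s stimulus blocks the session would last only 27 min 44 s, so the reported total of 34 min 40 s is consistent with 78‐s stimulus blocks.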

To ensure attention to the acoustic stimuli throughout the experiment, participants had to detect short sine tones (1 kHz, 100 ms) that randomly interrupted the vocalizations. They had to count the tones and report their number after scanning. Sine tones occurred one to four times within a stimulus block (38 in total). In addition, each sine tone had to be reported by key press within 2.3 s to count as a correct response.
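Scoring of the key‐press responses can be sketched as below. The 2.3‐s window is taken from the text; measuring reaction time from tone onset, expressing times in seconds from run start, and crediting each key press to at most one tone are assumptions, and `score_detections` is a hypothetical name.

```python
RESPONSE_WINDOW_S = 2.3   # a key press later than this does not count

def score_detections(tone_onsets, key_presses, window=RESPONSE_WINDOW_S):
    """Return (detection rate, reaction times) for one run.

    Assumptions: times are in seconds from run start, reaction time is
    measured from tone onset, and each key press can be credited to at
    most one tone.
    """
    presses = sorted(key_presses)
    used = [False] * len(presses)
    rts = []
    for onset in sorted(tone_onsets):
        for j, t in enumerate(presses):
            if not used[j] and 0.0 <= t - onset <= window:
                used[j] = True          # this press is now assigned
                rts.append(t - onset)
                break
    rate = len(rts) / len(tone_onsets) if tone_onsets else float("nan")
    return rate, rts
```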

Interactions between listening to emotional vocalizations and changes in participants' mood were controlled for with mood questionnaires completed directly before and after the experiment [Mehrdimensionaler Befindlichkeitsfragebogen, Steyer et al., 1997]. Finally, participants were debriefed and asked to rate all stimulus classes for pleasantness, emotionality, intensity, naturalness, and evocation of positive feelings. Ratings were given on a seven‐point bipolar scale ranging from “1” (the least value) to “7” (the strongest value).

In the scanner, participants' heads were immobilized with a vacuum cushion with attached ear muffs containing the MRI‐compatible electrodynamic headphones [Baumgart et al., 1998]. Stimuli were presented binaurally with an individually adjusted, comfortable listening level (68–75 dBA SPL) under computer control.

Data Acquisition

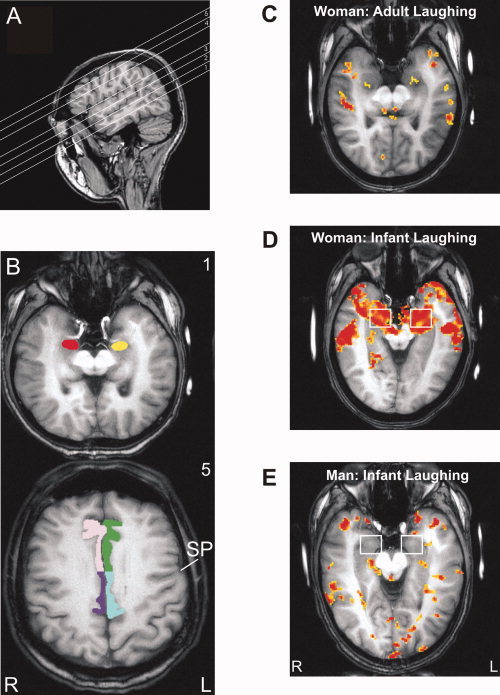

Scanning was performed on a BRUKER BIOSPEC 3T system (Ettlingen, Germany) equipped with a quadrupolar head coil and an asymmetric gradient system (30 mT/m). A volume of five axial slices, each 8 mm thick and oriented parallel to the Sylvian fissure, was collected using a modified noise‐reduced FLASH sequence for functional imaging (58 dBA SPL) [Baumgart et al., 1999] with the following parameters: TR = 214.89 ms, TE = 30.33 ms, flip angle = 15°, matrix 64 × 52, FOV 18 × 18 cm². Because scan time depends on the recorded volume, two separate slice packages were scanned to avoid tiring the participants. The ventral package comprised three contiguous slices covering the amygdala, AC, and part of the posterior cingulate cortex (PCC) (Fig. 2A, slices 1–3), and the dorsal package comprised two contiguous slices covering parts of ACC and PCC (Fig. 2A, slices 4 and 5).

Figure 2.

A: Slice orientation parallel to the Sylvian fissure as shown in sagittal view. The ventral slice package covered amygdala (slice 1), auditory cortex, and parts of posterior cingulate cortex (PCC; slices 2 and 3), and the dorsal package parts of anterior cingulate cortex (ACC) and PCC (slices 4 and 5). B: Overview of the analyzed regions of interest (ROI) in axial‐oblique view according to slice orientation. Slice numbers are the same as in (A). Slice 1 shows the amygdala ROI (left: yellow, right: red), slice 5 ACC (left: green, right: pink) and PCC ROI (left: blue, right: lilac). The border between ACC and PCC was drawn at the level of the sulcus precentralis (SP). Individual activation in response to laughing, compared with reference, is shown for a woman listening to adult laughing (C) as well as to infant laughing (D) and for a man listening to infant laughing (E). Significance level of activated voxels: yellow P < 0.05, red P < 0.00001. L, left hemisphere; R, right hemisphere.

The low‐noise FLASH sequence was used to minimize confounding effects of stressful high‐noise fMRI on neural activity, especially in the amygdala. However, this came at the expense of resolution and whole‐brain coverage [see Sander et al., 2003]. To capture the amygdala and AC together in the ventral slice package, a slice thickness of 8 mm was chosen. This resulted in a relatively large voxel size, for which we accepted the presence of partial volume effects. Thus, the reported activation may underestimate, but should not overestimate, actual activation [Jezzard and Ramsey, 2003].
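The voxel geometry implied by the acquisition parameters, and the direction of the partial volume bias, can be illustrated as follows. The sketch assumes that the 64 matrix points lie along the readout and the 52 along the phase‐encoding direction within the square 18 × 18 cm FOV, and it uses a simple linear mixing model in which inactive tissue contributes no signal change.

```python
FOV_MM = 180.0      # FOV 18 x 18 cm
MATRIX = (64, 52)   # acquisition matrix (assumed readout x phase encoding)
SLICE_MM = 8.0      # slice thickness

def voxel_dimensions(fov=FOV_MM, matrix=MATRIX, thickness=SLICE_MM):
    """Return (dx, dy, dz) in mm and the voxel volume in mm^3."""
    dx, dy = fov / matrix[0], fov / matrix[1]
    return (dx, dy, thickness), dx * dy * thickness

def diluted_change(true_change, active_fraction):
    """Signal change seen in a voxel only partly filled by active tissue.

    Simple linear mixing model (an assumption): inactive tissue contributes
    no change, so the observed value can only shrink, never grow.
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must lie in [0, 1]")
    return active_fraction * true_change
```

Under these assumptions a voxel measures about 2.8 × 3.5 × 8 mm (roughly 78 mm³), and any fraction of nonresponsive tissue within it scales the observed change down, which is why partial volume effects lead to underestimation rather than overestimation.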

The temporal resolution of the measurement was optimized by using a keyhole procedure. In image formation, echoes with high phase encoding contribute mainly to object boundaries and size, whereas low phase‐encoding steps dominate object and image contrast and its temporal change. The functional contrast is therefore carried mostly by the least phase‐encoded echoes. The keyhole scheme reduces the update rate of the highly phase‐encoded echoes; for image reconstruction, the missing phase‐encoding steps are taken from a completely phase‐encoded reference image, which is itself updated at a lower rate. The frequency with which the raw‐data matrix is completely updated defines the keyhole block size. The following specifications were used here: keyhole block size = 4, keyhole factor (number of phase‐encoding steps acquired in every image divided by the number of steps in a complete acquisition) = 23/52 = 0.442, and position of the complete acquisition within each block = 3. The position of the reference image must be chosen such that image recordings under different neuronal states are not confounded [Gao et al., 1996; van Vaals et al., 1993; Zaitsev et al., 2001].
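The keyhole scheme can be sketched as a sampling schedule that records, for every image, which image supplied each phase‐encoding line. This is an illustration under assumptions, not the scanner's reconstruction code: “complete position = 3” is read as the third image of each four‐image block being fully sampled, the 23 keyhole lines are taken as indices 14 to 36 of 52, missing outer lines are borrowed from the fully sampled image of the same block, and the run is assumed to start with a stimulus block.

```python
N_PE = 52          # phase-encoding steps in a complete image
N_KEY = 23         # central steps acquired in every image (23/52 = 0.442)
BLOCK = 4          # keyhole block size
COMPLETE_POS = 3   # 1-based position of the complete acquisition in a block

_start = (N_PE - N_KEY) // 2
CENTER = range(_start, _start + N_KEY)   # assumed keyhole lines 14..36

def line_sources(n_images):
    """For every image, the index of the image each k-space line comes from."""
    if n_images % BLOCK:
        raise ValueError("number of images must be a multiple of the block size")
    sources = []
    for i in range(n_images):
        complete = (i // BLOCK) * BLOCK + COMPLETE_POS - 1   # 0-based index
        if i == complete:
            sources.append([i] * N_PE)                       # fully sampled
        else:
            sources.append([i if ky in CENTER else complete for ky in range(N_PE)])
    return sources

def condition_labels(n_cycles=16, stim_images=12, ref_images=8):
    """Image-wise labels for alternating stimulus and reference blocks."""
    return (["stim"] * stim_images + ["ref"] * ref_images) * n_cycles

def confounded_images(labels):
    """Images that borrow k-space lines from an image of the other condition."""
    sources = line_sources(len(labels))
    return [i for i, src in enumerate(sources)
            if any(labels[j] != labels[i] for j in src)]

def mean_lines_per_image(n_images):
    """Average number of phase-encoding lines actually acquired per image."""
    per_image = [N_PE if i % BLOCK == COMPLETE_POS - 1 else N_KEY
                 for i in range(n_images)]
    return sum(per_image) / n_images
```

Because both the stimulus blocks (12 images) and the reference blocks (8 images) are multiples of the keyhole block size of 4, every keyhole block falls within a single condition and `confounded_images` returns an empty list; with, for example, 10‐image stimulus blocks, some images would borrow outer k‐space lines from the other condition. On average each image needs only (3 × 23 + 52)/4 = 30.25 of the 52 phase‐encoding lines.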

Twelve images were recorded during each stimulus block and eight images during each reference block, resulting in a total of 320 images for the measured volume. Directly after fMRI, anatomical images with high T1 contrast (MDEFT) were recorded in the same orientation as the functional images.

Data Analysis

Using the AIR package, functional data were checked for motion [Woods et al., 1998a, b]. Data were to be excluded if continuous head movements exceeded 1° in any of the three rotation angles or a translation of more than half a voxel in any direction; this did not occur. Second, functional images were checked for short movement artifacts (e.g., due to swallowing) [see Brechmann et al., 2002]. The mean gray value of all voxels in the AC region, defined in two slices, was analyzed across all repetitions, because movements can lead to large deviations from the grand average. Functional images whose mean gray value deviated by more than 2.5% from the grand average were excluded from further analysis. A minimum of 20 images had to remain for each condition to perform cross‐correlations with an alpha error of 5% and a corresponding beta error of ≤30% for an effect size of 0.8, as recommended for small sample sizes, that is, for small numbers of pairs of comparison (stimulus versus reference) [Gaschler‐Markefski et al., 1997]. Therefore, a significance level of 5% error probability was chosen. None of the data sets fell below the limit of 20 images. Thereafter, the matrix size of the functional images was increased to 128 × 128 by pixel replication, followed by spatial smoothing with a Gaussian filter (FWHM = 2.8 mm, kernel width = 7 mm) to reduce in‐plane noise. After mean intensity adjustment of each slice, data sets were corrected for in‐plane motion between successive images using the three‐parameter in‐plane rigid body model of BrainVoyager™. Functional activation was determined by correlation analysis to obtain statistical parametric maps [Bandettini et al., 1993; Gaschler‐Markefski et al., 1997]. A trapezoid function, roughly modeling the expected blood‐oxygen‐level‐dependent (BOLD) response, served as the correlation vector for the time series of gray value change of each voxel.
Because the BOLD response takes a few seconds to develop and to return to baseline, the first image of each stimulus and reference block was set to half‐maximum. The remaining images of stimulus blocks were set to the maximum value and those of reference blocks to the minimum value. Functional data analysis was carried out with the custom‐made software package KHORFu [Gaschler et al., 1996], using cluster‐pixel analysis with a cluster size of 8 pixels to exclude false single‐point activation.
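The image‐rejection and correlation steps can be sketched as below. The sketch is not the KHORFu implementation: it assumes a Pearson correlation as the correlation measure, omits the 8‐pixel cluster criterion and the spatial preprocessing, assumes the run starts with a stimulus block, and counts the half‐maximum transition images toward neither condition when checking the 20‐image minimum.

```python
import math
from statistics import fmean

STIM_N, REF_N, N_CYCLES = 12, 8, 16   # images per block and number of cycles

def trapezoid_vector(n_cycles=N_CYCLES, stim_n=STIM_N, ref_n=REF_N):
    """Reference vector: first image of each block at half-maximum."""
    one_cycle = [0.5] + [1.0] * (stim_n - 1) + [0.5] + [0.0] * (ref_n - 1)
    return one_cycle * n_cycles

def artifact_mask(roi_means, max_dev=0.025):
    """Keep images whose ROI mean gray value lies within 2.5% of the grand mean."""
    grand = fmean(roi_means)
    return [abs(m - grand) / grand <= max_dev for m in roi_means]

def pearson_r(x, y):
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def voxel_correlation(voxel_series, roi_means, min_per_condition=20):
    """Correlate one voxel's time series with the trapezoid after artifact rejection."""
    ref = trapezoid_vector()
    keep = artifact_mask(roi_means)
    x = [r for r, k in zip(ref, keep) if k]
    y = [v for v, k in zip(voxel_series, keep) if k]
    n_stim = sum(1 for r in x if r > 0.5)   # plateau images of stimulus blocks
    n_ref = sum(1 for r in x if r < 0.5)    # plateau images of reference blocks
    if min(n_stim, n_ref) < min_per_condition:
        raise ValueError("too few images left for one condition")
    return pearson_r(x, y)
```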

In each participant, regions of interest (ROIs) were defined in both hemispheres for the amygdala complex (including some periamygdaloid surroundings), ACC, and PCC (Fig. 2A,B), as well as for the four functional–anatomical territories of AC as previously described [Brechmann et al., 2002] (Fig. 6A, left). ROIs for ACC comprised parts of Brodmann areas (BA) 24, 32, and 33; those for PCC comprised parts of BA 23 and 31 and of retrosplenial cortex areas 29 and 30. The border between ACC and PCC was drawn at the level of the most lateral extension of the sulcus precentralis in the most dorsal slice (Fig. 2B, slice no. 5). Although this border might be slightly biased in favor of PCC [Vogt et al., 1995], it was a reliably identifiable anatomical landmark in our data. Functional confounding was assumed to be negligible at the present stage of investigation. To identify the different ROIs exactly in a single participant, the participant's individual 3D anatomical data set (spatial resolution 1 × 1 × 1.5 mm³) was used as reference. Significantly activated voxels (P < 0.05 over all cycles) were determined in each ROI and participant for the four experimental conditions (laughing versus reference, unnatural laughing versus reference, crying versus reference, and unnatural crying versus reference), as well as for natural laughing and crying lumped together versus reference and unnatural laughing and crying lumped together versus reference (see Results). Further analyses were based on a correlative measure of neural activity, namely intensity weighted volumes (IWVs), defined as the product of the absolute number of significantly activated voxels and their mean change in signal intensity between stimulus and reference blocks in a given ROI. To compute the signal intensity change, the first image of each stimulus and reference block was excluded from analysis to compensate for the delay of hemodynamic responses.
The correlation vector for this computation was therefore similar to a boxcar function. As with the trapezoid function used to determine significantly activated voxels, images of stimulus blocks were set to the maximum value and those of reference blocks to the minimum value, and the differences between them (percent signal change) were calculated.
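The IWV measure can be sketched as follows. Assumptions not stated in the text: percent signal change is expressed relative to the reference‐block mean, the mean change is averaged over the per‐voxel changes of the significantly activated voxels, and the run starts with a stimulus block; the function names are hypothetical.

```python
from statistics import fmean

def condition_labels(n_cycles=16, stim_n=12, ref_n=8):
    """Image-wise labels for alternating stimulus and reference blocks."""
    return (["stim"] * stim_n + ["ref"] * ref_n) * n_cycles

def percent_signal_change(series, labels):
    """Boxcar contrast: mean of stimulus images vs. mean of reference images.

    The first image of every block is skipped to allow for the hemodynamic delay.
    """
    stim, ref = [], []
    previous = None
    for value, label in zip(series, labels):
        first_of_block = label != previous
        previous = label
        if first_of_block:
            continue
        (stim if label == "stim" else ref).append(value)
    baseline = fmean(ref)
    return 100.0 * (fmean(stim) - baseline) / baseline

def intensity_weighted_volume(active_voxel_series, labels):
    """IWV = number of significantly activated voxels x their mean % signal change."""
    if not active_voxel_series:
        return 0.0
    changes = [percent_signal_change(s, labels) for s in active_voxel_series]
    return len(changes) * fmean(changes)
```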

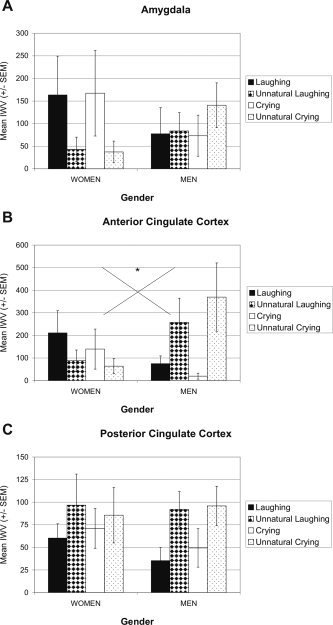

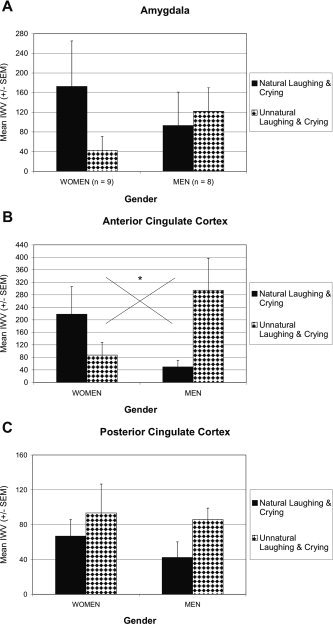

Figure 6.

Mean activation (IWV ± SEM) elicited by infant laughing and crying and by the control stimuli, unnatural laughing and unnatural crying, in amygdala (A), anterior cingulate cortex (ACC; B), and posterior cingulate cortex (PCC; C). Women exhibited stronger activation by laughing and crying in amygdala and ACC, and men by unnatural laughing and unnatural crying in ACC and PCC.

Because the amygdala region is prone to susceptibility effects (signal loss) due to the vicinity of sinus cavities and air‐filled bone structures [Merboldt et al., 2001], we calculated the signal‐to‐noise ratio (SNR) of the T2* images for the amygdala and, for comparison, also for the auditory cortex (AC) ROIs. The SNR was calculated according to the following formula:

$$\mathrm{SNR} = \frac{S_m}{N_m} \qquad (1)$$

where $S_m$ denotes the mean signal intensity of the ROI and $N_m$ the mean of the background noise. For both amygdala and AC ROIs, the SNRs were above 20:1 (range 22:1–170:1) and were generally better for the AC than for the amygdala ROI. However, we cannot exclude the presence of susceptibility effects; therefore, the observed (amygdala) activation in the present study may still be underestimated.

First, differences in neural activity between adult and infant vocalizations were analyzed by testing the influences of the factors source (adult, infant) and stimulus (natural, unnatural) on amygdala and AC activation. IWVs were comparable across the two studies, since data acquisition details such as slice thickness (8 mm) and orientation (parallel to the Sylvian fissure) were the same in the present and the previous study [Sander and Scheich, 2005].

Second, differences in neural activity elicited by the infant vocalizations were analyzed for influences of the factors stimulus (natural and unnatural, as well as laughing, unnatural laughing, crying, and unnatural crying separately) and listener gender (men, women) on amygdala, ACC, PCC, and AC activation. Tests were performed with repeated‐measures analyses of variance; post‐hoc tests were t tests. The significance level used throughout was 5% error probability, with Greenhouse‐Geisser epsilon correction or Bonferroni adjustment for multiple comparisons where necessary. Correlation analyses were performed with two‐tailed Pearson tests.
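For the repeated‐measures analyses of variance, the Greenhouse‐Geisser epsilon scales the degrees of freedom when sphericity is violated. A minimal sketch of its standard estimate, computed from the double‐centred sample covariance matrix of the k repeated measures, is given below; it illustrates the correction and is not the statistics package that was used.

```python
from statistics import fmean

def covariance_matrix(data):
    """Sample covariance of k repeated measures; data = one row per subject."""
    n, k = len(data), len(data[0])
    means = [fmean(row[j] for row in data) for j in range(k)]
    return [[sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1)
             for b in range(k)] for a in range(k)]

def greenhouse_geisser_epsilon(data):
    """Box/Greenhouse-Geisser epsilon from the double-centred covariance matrix."""
    s = covariance_matrix(data)
    k = len(s)
    row_m = [fmean(r) for r in s]
    col_m = [fmean(s[i][j] for i in range(k)) for j in range(k)]
    grand = fmean(row_m)
    # Double centring removes row and column means (equivalent to using contrasts).
    c = [[s[i][j] - row_m[i] - col_m[j] + grand for j in range(k)] for i in range(k)]
    trace = sum(c[i][i] for i in range(k))
    trace_sq = sum(c[i][j] * c[j][i] for i in range(k) for j in range(k))
    return trace ** 2 / ((k - 1) * trace_sq)

def corrected_df(df_effect, df_error, eps):
    """Scale both degrees of freedom of the F test by epsilon."""
    return df_effect * eps, df_error * eps
```

Epsilon ranges from 1/(k − 1) to 1; a value of 1 indicates that sphericity holds and leaves the degrees of freedom unchanged.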

Analyses of (i) the reported number of heard sine tones, (ii) target detection rate, (iii) reaction time, (iv) scores of the three scales of the mood questionnaire (see Results), and (v) subjective stimulus ratings were performed using t tests or repeated‐measures analyses of variance. Reported values are mean ± standard deviation unless stated otherwise.

RESULTS

Behavioral Data

The mean detection rate of the randomly presented sine tones was 85% (±19.2%); it did not differ between stimulus classes (laughing 83%, crying 87%, unnatural laughing 89%, and unnatural crying 81%; P > 0.4) and was not influenced by participants' gender (P > 0.5). The mean reaction time was 745 ms (±384 ms), with no differences between stimulus classes (laughing 718 ms, crying 749 ms, unnatural laughing 734 ms, unnatural crying 759 ms; P > 0.2) or between men and women (P > 0.2).

With respect to participants' mood, there was an interaction of mood scale by time point (F(1.582, 30) = 7.310, P = 0.006), qualifying the main effects of mood scale (F(2, 30) = 31.370, P < 0.0001) and time point (F(1, 15) = 12.814, P = 0.003). Post‐hoc tests revealed no differences before versus after the experiment for the scales “good/bad mood” and “rest/restlessness” (both comparisons P > 0.2), whereas participants felt somewhat tired afterwards (T(16) = 4.026, P = 0.001), with a mean score (11.5) close to the midpoint of the scale “awakeness/tiredness” (12). Listeners' gender did not influence the scores at any time point (P > 0.5). Apparently, a change of mood did not influence the perception of infant laughing and crying or of their unnatural versions.

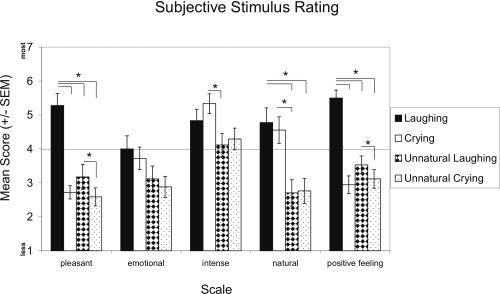

Figure 3 shows that subjective stimulus ratings of pleasantness, emotionality, intensity, naturalness, and evocation of positive feelings differed across stimulus classes. The interaction of stimulus by rating was significant (F(4.347, 168) = 6.538, P < 0.0001), qualifying the main effects of stimulus (F(1.484, 42) = 15.926, P < 0.0001) and rating (F(2.568, 56) = 7.197, P = 0.001). Post‐hoc tests revealed that infant laughing was rated as the most pleasant stimulus and as the one evoking the most positive feelings (pleasantness: T ≥ 3.427 (16/15), P ≤ 0.004; evocation of positive feelings: T ≥ 5.230 (16/15), P < 0.0001). Laughing was rated as similarly emotional, intense, and natural as crying, but as more so than the two acoustic controls (naturalness: T ≥ 4.140 (15), P ≤ 0.001). Infant crying was rated as being as unpleasant and as evocative of negative feelings as the control stimuli, but as more emotional, intense, and natural than the controls, although the difference was significant only for naturalness in comparison with unnatural laughing (T = 3.066 (15), P = 0.008). Unnatural laughing and unnatural crying were rated as similarly unpleasant, less emotional, moderately intense, unnatural, and evoking negative feelings. Stimulus ratings were also influenced by participants' gender (interaction of stimulus by gender: F(3, 42) = 4.958, P = 0.005). Ratings of laughing and crying did not differ between genders, but women rated both control stimuli as more unpleasant than men did (T ≥ −2.366 (14), P ≤ 0.033). In summary, in accordance with their emotional valence, infant laughing and crying were assessed as pleasant and unpleasant, respectively, and as evoking positive and negative feelings, respectively, but as similarly emotional, intense, and natural. Interestingly, both acoustic control stimuli were assessed as unpleasant, not emotional, of moderate intensity, unnatural, and evoking negative feelings.

Figure 3.

Mean scores (± SEM) of subjective stimulus ratings. Laughing, crying, unnatural laughing, and unnatural crying were rated on a seven‐point scale ranging from 1 (the least value) to 7 (the strongest value) with respect to pleasantness, emotionality, intensity, naturalness, and the evocation of positive feelings.

Imaging Data

Adult versus infant laughing and crying

Since our previous work analyzed activation by adult laughing and crying [Sander and Scheich, 2001, 2005], it was of great interest first to obtain a general indication of whether the infant vocalizations produced largely different activation patterns. For this purpose, a comparison was made in a subgroup of the present sample (2 men, 4 women) whose amygdala and AC activation had previously been analyzed with adult vocalizations [Sander and Scheich, 2005]. This subgroup may be regarded as representative of both studies with respect to age (23 ± 3.1 years) and to the ratings of the emotional impact of the stimuli, which did not differ between the two studies and reflected the mean rating patterns of each study; however, its gender distribution was representative only of the study using adult vocalizations.

Influences of the factors source (adult, infant) and stimulus (natural, unnatural) on activation in the amygdala and AC were assessed (for the factor hemisphere, see below). Activations were lumped together according to the subjective classification of stimulus naturalness, with laughing and crying forming the natural stimulus class and the control stimuli forming the unnatural class. The mean interval between the two studies was 4 months (range 2–10 months), and detection performance (spectral shifts, pure tones) did not differ between the two experiments (P > 0.9).

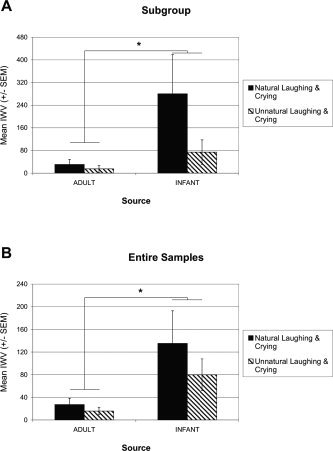

In the amygdala, the main effect of source was significant (F(1, 10) = 5.022, P = 0.049; Fig. 4A): the infant vocalizations elicited much stronger amygdala activation than the adult vocalizations, each compared with the resting condition (reference). A similar main effect of source was obtained when the complete samples of the two studies were analyzed (N = 17 for the present and N = 20 for the previous study), with stronger amygdala activation by infant than adult vocalizations (F(1, 35) = 9.482, P = 0.004; Fig. 4B). Although the natural stimuli elicited stronger amygdala activation, the main effect of stimulus was not significant (P > 0.1).

Figure 4.

Mean amygdala activation (IWV ± SEM) elicited by adult and infant vocalizations. A: A subgroup of the present sample (N = 6), which participated also in the previous study with adult vocalizations. B: The two complete groups (N = 17 for the infant vocalizations and N = 20 for the adult vocalizations). IWV (intensity weighted volume) = product of the absolute number of significantly activated voxels and their mean change in signal intensity.

A different pattern of factor influences was observed in AC (data not shown). There was no main effect of source on AC activation in the subgroup (P > 0.7), but the main effect of stimulus was significant, with stronger activation by the unnatural than by the natural stimuli (F(1, 10) = 6.041, P = 0.034). As for the amygdala, similar results were obtained when the complete samples of the two studies were analyzed: there was no difference in AC activation by the factor source (P > 0.9), a main effect of stimulus with stronger activation by unnatural than natural stimuli (F(1, 35) = 11.246, P = 0.002), and a significant interaction of source by stimulus (F(1, 35) = 7.384, P = 0.010), reflecting an activation difference between natural and unnatural stimuli for the infant but not the adult vocalizations.

To summarize, activation of the amygdala was modulated by the source of vocalizations (adult, infant), whereas activation of AC was modulated by the discriminative properties of the two stimulus classes (natural, unnatural).

Effects of infant laughing and crying on amygdala and anterior and posterior cingulate cortex

Since the left and right hemispheres responded similarly to the infant vocalizations and to the control stimuli, activations were collapsed across hemispheres. The results therefore describe effects of gender and stimulus properties on overall activity in amygdala, cingulate gyrus, and AC.

The first analysis addressed the distinction between natural and unnatural stimuli. The effect of the present acoustic control stimuli (fragment recombinations of infant laughing and crying) on emotional ratings resembles the effect of adult laughing and crying played backwards on the corresponding ratings [Sander and Scheich, 2005]. Again, the clearest differentiation between the experimental stimuli and their alienated acoustic controls was observed for ratings of stimulus naturalness. Since both control stimuli were rated as less natural than laughing and crying, we denote them as unnatural. Accordingly, in line with the subjective classification of stimulus naturalness, activations by infant (natural) laughing and crying were lumped together and contrasted with the lumped activation by unnatural laughing and crying. This grouping disregarded the difference in emotional valence between laughing and crying.

In women, amygdala and ACC were more strongly activated by the natural than unnatural stimuli (Fig. 5A,B). The reverse was observed in amygdala and ACC of men, with stronger activation by the unnatural than natural stimuli (Fig. 5B). The interaction of stimulus by gender was significant in ACC (F(1, 15) = 5.197, P = 0.038), but not in amygdala (P > 0.2). Post‐hoc comparisons were not significant (gender differences P < 0.1, stimulus differences P > 0.07).
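With one two‐level within‐subject factor (natural, unnatural) and one two‐level between‐subject factor (gender), the stimulus‐by‐gender interaction F of a mixed ANOVA equals the squared pooled‐variance t statistic that compares the two groups' difference scores, with df = (1, n − 2). The sketch below uses this standard equivalence. It is not necessarily how the authors computed their ANOVA. With 17 participants, an assumption inferred from the reported degrees of freedom, the result would carry df = (1, 15).

```python
import math
from statistics import mean, variance


def interaction_f(diffs_a, diffs_b):
    """Stimulus-by-group interaction F for a 2 (within) x 2 (between) mixed design.

    diffs_a, diffs_b: per-participant difference scores (e.g. natural minus
    unnatural activation) for each group. Returns (F, (df1, df2)).
    """
    na, nb = len(diffs_a), len(diffs_b)
    df_error = na + nb - 2
    # Pooled variance of the difference scores (sample variances use n - 1).
    pooled = ((na - 1) * variance(diffs_a) + (nb - 1) * variance(diffs_b)) / df_error
    t = (mean(diffs_a) - mean(diffs_b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t * t, (1, df_error)
```

Opposite signs of the mean difference scores in the two groups, as described above for women and men, increase the numerator and hence the interaction F.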

Figure 5.

Mean activation (IWV ± SEM) elicited by infant vocalizations in amygdala (A) and cingulate cortex (B, C). Activations for laughing and crying, as well as for the alienated control stimuli, are collapsed, in correspondence with subjective ratings of stimulus naturalness. In women, natural laughing and crying elicited stronger activation in amygdala and ACC than unnatural stimuli. In men, unnatural laughing and crying elicited stronger activation than natural stimuli in ACC and PCC, and to a lesser extent in the amygdala.

The similarity of activation patterns in amygdala and ACC was substantiated by significant positive correlations of activity between these two structures (both genders) for natural (r = 0.527, P = 0.030) and unnatural stimuli (r = 0.564, P = 0.018). Neither structure showed activation correlations with PCC or AC.
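A Pearson correlation across participants is usually tested with t = r·√(n − 2)/√(1 − r²) on n − 2 degrees of freedom. The sketch assumes n = 17 participants, inferred from the reported F(1, 15) and T(16) degrees of freedom rather than stated in this section. It compares t with 2.131, the two‐tailed 5% critical value for 15 df. The helper names are our own.

```python
import math

T_CRIT_DF15 = 2.131  # two-tailed critical t for alpha = 0.05 and 15 degrees of freedom


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


if __name__ == "__main__":
    for label, r in [("natural", 0.527), ("unnatural", 0.564)]:
        t = r_to_t(r, 17)  # n = 17 is an assumption
        print(f"{label}: r = {r}, t(15) = {t:.2f}, significant: {abs(t) > T_CRIT_DF15}")
```

Under this assumption, r = 0.527 gives t(15) ≈ 2.40, which is consistent with the reported P = 0.030.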

Men showed similar activation patterns across amygdala, ACC, and PCC, with stronger responses to the unnatural stimuli. In PCC, however, women, like men, showed stronger activation in response to the unnatural than natural stimuli (Fig. 5C), although this overall stimulus effect did not reach significance (P > 0.2). Thus, only in amygdala and ACC did men and women reflect the natural–unnatural distinction in opposite ways.

The second analysis assessed valence effects of the stimuli. Activations differed between men and women in amygdala and ACC (Figs. 2D,E, and 6A,B). Women exhibited stronger activation in response to laughing than unnatural laughing and to crying than unnatural crying. In contrast, stronger ACC activation was elicited in men by unnatural laughing and unnatural crying than laughing and crying, respectively. These gender differences were reflected by a significant interaction of stimulus by gender in ACC (F(3, 45) = 3.703, P = 0.018), but not in amygdala (P > 0.1). None of the post‐hoc comparisons reached significance (gender differences P > 0.08, stimulus differences P ≥ 0.06).

As in the analysis of the naturalness–unnaturalness distinction, significant positive correlations of activity (both genders) were observed between amygdala and ACC for laughing (r = 0.496, P = 0.043) and crying (r = 0.745, P < 0.0001), whereas those for unnatural laughing (P = 0.056) and unnatural crying (P = 0.058) failed to reach significance. Neither amygdala nor ACC showed activation correlations with PCC or AC.

In men, activations in ACC and PCC were similar in that both unnatural stimuli elicited larger activation (Fig. 6C). This pattern was also observed for women in PCC, but the stimulus effect was not significant (P > 0.1). Notably, no valence differences were observed, either between natural laughing and crying or between unnatural laughing and crying.

Effects of infant laughing and crying on auditory cortex

Like amygdala and cingulate cortex, AC was activated by infant laughing and crying and by the unnatural stimuli (see Fig. 7). Since all auditory territories exhibited similar effects, the data shown in Figure 7B,C are pooled across all four territories.

Figure 7.

Auditory cortex (AC) activation by infant vocalizations. A: AC territories TA, T1, T2, and T3 are shown for the left and right hemisphere of a single male participant (left panel). In the same participant, activation (P < 0.05) in response to infant vocalizations is shown for the contrasts laughing versus reference (middle panel) and unnatural laughing versus reference (right panel); the latter elicited stronger activation. Panels (B) and (C) show activations collapsed across the four territories. B: Mean AC activation (IWV ± SEM) elicited by natural and unnatural laughing and crying, and (C) by each of the four stimuli, laughing, unnatural laughing, crying, and unnatural crying, separately. Significance level of activated voxels: yellow P < 0.05, red P < 0.00001. L, left hemisphere; R, right hemisphere.

Interestingly, irrespective of gender, total AC activation relative to reference was significantly stronger for unnatural than natural stimuli (F(1, 15) = 11.499, P = 0.004) (Fig. 7B). This main effect of stimulus was also observed when each of the four stimuli was considered separately (F(1.285, 45) = 10.804, P = 0.002) (Fig. 7C). Post‐hoc comparisons revealed stronger activation in response to unnatural laughing than laughing and to unnatural crying than crying (T(16) ≤ −2.999, P ≤ 0.008). The activation in response to the two unnatural stimuli was larger in men than women, but the interaction of gender and stimulus failed to reach significance (P = 0.070). No valence differences were observed for natural or unnatural laughing and crying. Overall, the patterns of AC activation resembled those of PCC more than those of amygdala and ACC: in both AC and PCC, unnatural stimuli produced the strongest activation.
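The post‐hoc statistics are reported as T(16), which is consistent with paired t tests on 17 participants. The paper does not state the exact test, so treat this as an assumption. A minimal paired t sketch follows. If difference scores are taken as natural minus unnatural, stronger activation by the unnatural stimulus yields a negative t, matching the sign of the reported T(16) ≤ −2.999. No correction for multiple comparisons is applied here.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Paired-samples t statistic and degrees of freedom.

    Difference scores are first minus second, so a negative t means the
    first condition produced less activation than the second.
    """
    if len(first) != len(second):
        raise ValueError("both conditions need one value per participant")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

Called as `paired_t(laughing_iwv, unnatural_laughing_iwv)` on two hypothetical per‐participant lists of 17 values, the function would return a t statistic with 16 degrees of freedom.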

The similarity of activation patterns in AC and PCC was supported by significant positive correlations (both genders) between the activities of these two structures for the natural (r = 0.543, P = 0.024) and unnatural stimuli (r = 0.510, P = 0.037), and for crying (r = 0.661, P = 0.004) and unnatural laughing (r = 0.576, P = 0.015). Neither AC nor PCC, however, showed activation correlations with amygdala or ACC.

DISCUSSION

Activation Modulation by Adult and Infant Vocalizations

It is a tenet of ethology that the infant schema (Kindchenschema) is a particularly salient signal in intraspecies communication and elicits numerous almost reflexive behaviors. We therefore made some preliminary comparisons of amygdala activation by adult and infant laughing and crying to test the assumption that infant vocalizations elicit stronger amygdala activation than less salient adult vocalizations. Indeed, in a subgroup of our sample, infant vocalizations elicited on average 900% more amygdala activation than adult vocalizations. Unlike the amygdala, the AC did not follow the biological, emotional distinction between adult and infant vocalizations. The similar AC activation by adult and infant vocalizations, together with the natural–unnatural distinction found in AC, suggests that AC instead distinguished familiar from unfamiliar sound patterns. Moreover, this similarity suggests that the large amygdala activation difference is not due to a general difference in activation by the two stimulus classes (adult versus infant vocalizations) but is specifically related to their different emotional (biological) implications.
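Read literally, "900% more" describes a relative increase, which corresponds to tenfold activation rather than ninefold. In the equation below, A denotes mean amygdala activation (IWV) in the subgroup for each vocalization source. This notation is introduced here only for illustration.

```latex
\frac{A_{\mathrm{infant}} - A_{\mathrm{adult}}}{A_{\mathrm{adult}}} \times 100\,\% = 900\,\%
\quad\Longrightarrow\quad
A_{\mathrm{infant}} = 10\,A_{\mathrm{adult}}
```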

However, despite the impressive amygdala activation difference in favor of infant vocalizations, this finding clearly needs to be replicated, because it was obtained by comparing two distinct studies rather than within a single study, which limits its generalizability.

The question of whether amygdala activation differs with valence appears here in an interesting light: although the amygdala was dramatically activated by salient infant vocalizations, and differently depending on the listener's gender, no valence effect was observed. The amygdala is generally responsive to emotional stimuli [Zald, 2003], and when activations are small, valence effects may occur by chance. The strong activation by infant laughing and crying should allow a reliable discrimination of valence, but this was not the case here. Thus, it seems to be the social relevance of these emotional vocalizations, rather than their valence, that modulates neural activity in the human amygdala.

Amygdala Activation and Habituation

Similar to our previous studies with adult laughing and crying, we observed considerable amygdala activation by (preverbal) infant vocalizations. This might seem surprising given fMRI findings of habituation of amygdala activation elicited by emotional stimuli and in emotional learning paradigms [Breiter et al., 1996; Büchel et al., 1999; LaBar et al., 1998], but not given fMRI findings of amygdala activation that persisted even after the presentation of emotionally negative stimuli had ended, as a consequence of maintaining the previously elicited emotional response [Schaefer et al., 2002]. Most important in this context is the recent finding of long‐lasting increases in the spontaneous firing rates of basolateral amygdala neurons after emotional arousal [Pelletier et al., 2005]. The firing rates increased in two forms, one appearing with a delay of tens of minutes and the other fast, within seconds, but both returned to control levels after 100–160 min, which provides a basis for amygdala activation that does not habituate rapidly. Thus, differences in the time course of amygdala activation (habituation versus prolonged activity increases) may depend on the biological significance of the encountered (emotional) stimuli, as evidenced, for example, in the cat amygdala by relatively fast habituation of responses to repeated clicks but not to repeated meows [Sawa and Delgado, 1963]. They may also depend on the predictability of stimulus occurrence in the corresponding learning paradigms, as well as on differences in task demands.

Influence of Listener's Gender on Brain Areas of Activation

On the basis of previous reports of gender differences in amygdala or ACC activation [Sander and Scheich, 2001; Seifritz et al., 2003], we hypothesized gender‐dependent activation patterns in these structures during the perception of stimuli with high biological significance, like the laughing and crying of preverbal infants. Such vocalizations are infants' primary means of communication and fairly reliable elicitors of caregiving behavior [Zeifman, 2001]. Indeed, preverbal infant vocalizations activated the amygdala and ACC of listening men and women differently: women showed stronger activation by natural laughing and crying, whereas men showed stronger activation by the unnatural stimulus versions, especially in ACC. In PCC, however, activation patterns were similar for women and men and characterized by stronger activation in response to unnatural stimuli.

These listener‐gender‐dependent results show both similarities to and differences from the study by Seifritz et al. [2003] on parent and nonparent listeners, which also used transformed laughing and crying as a control. In both studies, laughing and crying activated amygdala, ACC, and AC of both genders. But in the Seifritz et al. study, AC in both genders was activated most strongly by the natural stimuli, whereas we found the strongest activation by the unnatural stimuli (comparisons with reference blocks). In ACC, Seifritz et al. found signal decreases in women for natural stimuli, whereas we found activation by natural stimuli in both genders, which was stronger in women. In men, Seifritz et al. found no natural–unnatural differences, whereas we found stronger activation by unnatural stimuli. In the amygdala, we also observed stronger activation by natural stimuli in women. Although this effect was not supported by a gender‐by‐stimulus interaction, amygdala activity correlated positively only with ACC and not with either of the other two structures. The reliability of this observation, however, clearly needs to be tested in further studies.

As a general conclusion, both studies showed gender differences of activation in the analyzed brain structures, but the exact relationships are hard to compare because of different control stimuli and methods of analysis. Our control stimuli still sounded like laughing and crying, even though definitely strange (according to listeners' judgments). The control in the Seifritz et al. study was an auditory construct composed of spectral components of laughing and crying together, and was thus not recognizable as either natural category. Because that study determined only direct activation contrasts between natural and control stimuli, and no activations versus rest (baseline), no information is available about the activation produced by its auditory control construct. Consequently, whether the natural stimuli produced activations or deactivations different from our results cannot be decided.

That gender differences in the results are quite sensitive to details of the experimental design is also shown by a comparison with our previous study on adult laughing and crying [Sander and Scheich, 2001]. There we found stronger activation of women's amygdala by laughing and, as a trend, stronger activation of men's amygdala by crying. As in the present study, where we did not find this gender effect, activations were determined with respect to rest (reference), so this factor cannot explain the difference. However, the previous work had no auditory control. Thus, the perceptual emphasis was on the laughing–crying distinction and not on an additional natural–unnatural contrast.

Gender differences in the structure of amygdala and ACC are controversial [Filipek et al., 1994; Franklin et al., 2000; Gur et al., 2002; Pruessner et al., 2000; Pujol et al., 2002; Tebartz van Elst et al., 2000; Yucel et al., 2001], and such differences have also been reported for primary AC volume [Rademacher et al., 2001]. But even if structural differences exist, they would not necessarily explain fMRI activation differences that depend on small changes in experimental design. PET studies have revealed, for example, gender differences in opioid receptors and differential responsiveness of opioid neurotransmission with emotions in amygdala and ACC, but not PCC [Zubieta et al., 1999, 2003]. Further studies in this direction might explain the differential responsiveness of men and women to emotional stimuli observed with fMRI.

On the other hand, emotional ratings of laughing and crying did not show strong gender dependence [nor did they in our previous study, data not reported, Sander and Scheich, 2005]. Such a divergence between gender‐independent ratings and gender‐dependent physiological measures was previously reported for parents as well as nonparents listening to infant laughing and crying [Brewster et al., 1998; but see Frodi, 1985; Seifritz et al., 2003], and also for viewing broader sets of emotional pictures [Bradley et al., 2001; Kemp et al., 2004; Wrase et al., 2003]. This suggests that men and women may activate brain structures differently not because of different emotional attitudes towards laughing and crying, but because of different behavioral dispositions triggered by hearing such stimuli. This might be particularly plausible for infant vocalizations [caregiving tendency in women, Lorberbaum et al., 2002; MacLean, 1993; Zeifman, 2001]. It is also compatible with studies on gender‐specific effects of mouse pup separation calls [Ehret and Koch, 1989].

Although we interpret our findings as reflecting (neural) differences between genders, one might ask whether the present results could also be determined by unknown mediating variables unrelated to the listener's gender. Differences in individual experience with infants may be such a variable. Ratings of the emotional impact of infant crying, laughing, or smiling differed neither between parents and nonparents [Leger et al., 1996; Seifritz et al., 2003] nor between parents and children [Frodi, 1985], whereas differences in experience with infants were reflected by differences in amygdala activation [see Introduction, Seifritz et al., 2003]. Another candidate mediating variable may be differences in the attributes ascribed to an infant. Indeed, within a learned‐helplessness paradigm, mothers performed more poorly at terminating crying when the infant was described as having a difficult rather than an easy temperament [Donovan and Leavitt, 1985]. Similarly, describing an infant as premature rather than full‐term influenced not only parents' ratings of crying but also physiological measures [Frodi, 1985]. In line with this, infants described as high rather than low in attractiveness received higher preference ratings from parents and nonparents, especially in combination with syllabic sounds [Bloom and Lo, 1990]. The latter points to a further mediating variable, namely, differences related to the infant stimuli themselves. Crying of 6‐month‐old infants was rated as more intense and was correlated with a different set of acoustic parameters than that of 1‐month‐old infants [Leger et al., 1996]. In contrast to this age dependency, cry characteristics seem to be independent of the infant's gender or cultural background [Wasz‐Höckert et al., 1968].

Taken together, with respect to our own findings, we may exclude differences in experience with infants or listeners' age as sources of the observed activation differences in the analyzed brain structures. Since we neither varied the attributes ascribed to the infants producing the laughing and crying nor informed listeners about the infants' gender, these potential mediating variables are unlikely to account for our results, although we cannot control for attributions made by the listeners themselves. However, we cannot, and do not want to, rule out that the present results are specific to the stimuli we used. As mentioned above, emotional intensity ratings of infant crying differed with the infant's age. The laughing and crying in the present study were produced by older, but still preverbal, infants (parents' report). Thus, one might assume that activation patterns vary with the faculty of speech, which is related to the vocalizer's age. The differences in amygdala activation observed here between adult and infant vocalizations may reflect this. In fact, this assumption was one of the driving motives for the present study, inasmuch as we assumed that nonverbal infant vocalizations, as infants' primary means of communication, have a higher biological significance than adult vocalizations.

According to these distinctions, amygdala and ACC might have a common functional denominator in processing the emotional aspects of emotional stimuli. In the model proposed by Vogt [2005] (see below), the PCC is involved in processing cognitive aspects of emotional stimuli. Cognitive analysis of auditory stimuli seems to be a function that also strongly influences activity distributions in AC [Brechmann and Scheich, 2005]. This might explain the similar responses of PCC and AC with respect to the distinction between natural‐ and unnatural‐sounding stimuli.

Similarity of Activation Patterns and its Implications

Activation patterns elicited by preverbal infant vocalizations seem to be similarly gender‐dependent in amygdala and ACC. This is in line with the suggestion of concordant functions of amygdala and ACC in producing emotional behavior [Vogt et al., 1992], and with the previously shown independence of amygdala activation from emotional valence [Sander and Scheich, 2005]. In contrast, activation patterns in PCC and AC were independent of listener's gender, and both were more strongly activated by the acoustical controls than natural laughing and crying. Furthermore, the similarity of activation patterns first between amygdala and ACC, and second between AC and PCC was corroborated by corresponding positive correlations of activation in response to the infant vocalizations.

Recently, Vogt [2005] proposed a four‐region model of the cingulate cortex. A summary of emotion studies in terms of this model showed that, unlike the midcingulate cortex (MCC), ACC and PCC are strongly activated by emotional stimuli. Anatomical connections between amygdala and cingulate cortex are restricted to ACC and anterior MCC [Vogt and Pandya, 1987]. Because emotional and control stimuli activated PCC to a similar extent, Vogt [2005] suggested that PCC is involved in assessing the self‐relevance of emotional events, thereby gating emotional information to the cingulate areas that process emotions. Analogous to the connections between amygdala and ACC, the reciprocal connections between PCC and AC [Yukie, 1995] may explain the similar activation patterns of these two structures observed here.

With cognitive tasks unrelated to emotion and memory, the PCC generally exhibits decreases of activation, or “deactivations” [for review see Maddock, 1999]. The PCC activations reported here may therefore reflect the reverse, namely, the change from cognition to the perception of emotional stimuli. In addition to its involvement in emotional processing and cognitive analysis, the PCC also appears to play a role in distinguishing natural from unnatural stimuli. Thus, PCC activation by emotional stimuli may also be influenced by the novelty and strangeness of these stimuli.

Although our results support the hypothesis of gender‐dependent activation patterns in amygdala and ACC, and point to a functional segregation between amygdala and ACC on the one hand and AC and PCC on the other, they have to be considered preliminary. Their generalizability has to be established by further studies that directly incorporate statistical approaches allowing the extrapolation of results to the population.

In conclusion, the present study shows that the degree of saliency or biological significance of emotional vocalizations like laughing and crying strongly modulates neural activity in the human amygdala. Furthermore, processing preverbal infant vocalizations activates amygdala and ACC in a gender‐dependent, concordant manner, presumably reflecting neural predispositions in women for preferential responses to such sounds. In contrast, the gender‐independent similarity of activation patterns in PCC and AC might reflect more sensory‐based and cognitive levels of neural processing.

Acknowledgements

We would like to cordially thank the families of the infants for the recordings of their babies' laughing and crying, and Gregor Szycik for support in data preprocessing.

Part of this study was presented at the 34th Annual Meeting of the Society for Neuroscience, October 23–27, 2004, San Diego, USA.

REFERENCES

- Adolphs R ( 2003): Cognitive neuroscience of human social behaviour. Nature Rev Neurosci 4: 165–178. [DOI] [PubMed] [Google Scholar]

- Amaral DG,Price JL,Pitkänen A,Carmichael ST ( 1992): Amygdalo‐cortical interconnections in the primate brain In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York: Wiley‐Liss; pp 1– 66. [Google Scholar]

- Bandettini PA,Jesmanowicz A,Wong EC,Hyde JS ( 1993): Processing strategies for time‐course data sets in functional MRI of the human brain. Magn Reson Med 30: 161–173. [DOI] [PubMed] [Google Scholar]

- Barbas H,Ghashghaei HT,Dombrowski SM,Rempel‐Clower NL ( 1999): Medial prefrontal cortices are unified by common connections with superior temporal cortices and distinguished by input from memory‐related areas in the rhesus monkey. J Comp Neurol 410: 343–367. [DOI] [PubMed] [Google Scholar]

- Baumgart F,Kaulisch T,Tempelmann C,Gaschler‐ Markefski B,Tegeler C,Schindler F,Stiller D,Scheich H ( 1998): Electrodynamic headphones and woofers for application in magnetic resonance imaging scanners. Med Phys 25: 2068–2070. [DOI] [PubMed] [Google Scholar]

- Baumgart F,Gaschler‐Markefski B,Woldorff MG,Heinze H‐J,Scheich H ( 1999): A movement‐sensitive area in auditory cortex. Nature 400: 724–726. [DOI] [PubMed] [Google Scholar]

- Bloom K,Lo E ( 1990): Adult perceptions of vocalizing infants. Infant Behav Dev 13: 209–219. [Google Scholar]

- Boersma P,Weenink D (1992–2003): Praat, a system for doing phonetics by computer. Available at http://www.praat.org.

- Bradley MM,Codispoti M,Sabatinelli D,Lang PJ ( 2001): Emotion and motivation. II. Sex differences in picture processing. Emotion 1: 300–319. [PubMed] [Google Scholar]

- Brechmann A,Scheich H ( 2005): Hemispheric shifts of sound representation in auditory cortex with conceptual listening. Cereb Cortex 15: 578–587. [DOI] [PubMed] [Google Scholar]

- Brechmann A,Baumgart F,Scheich H ( 2002): Sound‐level‐dependent representation of frequency modulations in human auditory cortex: A low‐noise fMRI study. J Neurophysiol 87: 423–433. [DOI] [PubMed] [Google Scholar]

- Breiter HC,Etcoff NL,Whalen PJ,Kennedy WA,Rauch SL,Buckner RL,Strauss MM,Hyman SE,Rosen BR ( 1996): Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17: 875–887. [DOI] [PubMed] [Google Scholar]

- Brewster AL,Nelson JP,McCanne TR,Lucas DR,Milner JS ( 1998): Gender differences in physiological reactivity to infant cries and smiles in military families. Child Abuse Negl 22: 775–788. [DOI] [PubMed] [Google Scholar]

- Büchel C,Dolan RJ,Armony JL,Friston KJ ( 1999): Amygdala‐hippocampal involvement in human aversive trace conditioning revealed through event‐related functional magnetic resonance imaging. J Neurosci 19: 10869–10876. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cahill L,Gorski L,Belcher A,Huynh Q ( 2004a): The influence of sex versus sex‐related traits on long‐term memory for gist and detail from an emotional story. Conscious Cogn 13: 391–400. [DOI] [PubMed] [Google Scholar]

- Cahill L,Uncapher M,Kilpatrick L,Alkire MT,Turner J ( 2004b): Sex‐related hemispheric lateralization of amygdala function in emotionally influenced memory: An FMRI investigation. Learn Mem 11: 261–266. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Donovan WL,Leavitt LA ( 1985): Simulating conditions of learned helplessness: The effects of interventions and attributions. Child Dev 56: 594–603. [PubMed] [Google Scholar]

- Ehret G,Koch M ( 1989): Ultrasound‐induced parental behaviour in house mice is controlled by female sex hormones and parental experience. Ethology 80: 81–93. [Google Scholar]

- Filipek PA,Richelme C,Kennedy DN,Caviness VSJ ( 1994): The young adult human brain: An MRI‐based morphometric analysis. Cereb Cortex 4: 344–360. [DOI] [PubMed] [Google Scholar]

- Fischer H,Sandblom J,Herlitz A,Fransson P,Wright CI,Bäckman L ( 2004): Sex‐differential brain activation during exposure to female and male faces. Neuroreport 15: 235–238. [DOI] [PubMed] [Google Scholar]

- Franklin MS,Kraemer GW,Shelton SE,Baker E,Kalin NH,Uno H ( 2000): Gender differences in brain volume and size of corpus callosum and amygdala of rhesus monkey measured from MRI images. Brain Res 852: 263–267. [DOI] [PubMed] [Google Scholar]

- Frodi A ( 1985): Variations in parental and nonparental response to early infant communication In: Reite M,Field T, editors. The Psychobiology of Attachment and Separation. Orlando: Academic Press; pp 351–367 [Google Scholar]

- Gainotti G ( 2001): Disorders of emotional behaviour. J Neurol 248: 743–749. [DOI] [PubMed] [Google Scholar]

- Gao JH,Xiong J,Lai S,Haacke EM,Woldorff MG,Li J,Fox PT ( 1996): Improving the temporal resolution of functional MR imaging using keyhole techniques. Magn Reson Med 35: 854–860. [DOI] [PubMed] [Google Scholar]

- Gaschler B,Schindler F,Scheich H ( 1996): KHORFu: A KHOROS‐based functional image post processing system. A statistical software package for functional magnetic resonance imaging and other neuroimage data sets In: Prat A,editor. COMPSTAT 1996: Proceedings in Computational Statistics. Twelth Symposium held in Barcelona, Spain, 1996. Heidelberg: Physica‐Verlag; pp 57–58. [Google Scholar]

- Gaschler‐Markefski B,Baumgart F,Tempelmann C,Schindler F,Stiller D,Heinze H‐J,Scheich H ( 1997): Statistical methods in functional magnetic resonance imaging with respect to nonstationary time‐series: Auditory cortex activity. Magn Reson Med 38: 811–820. [DOI] [PubMed] [Google Scholar]

- Gil‐da‐Costa R,Braun A,Lopes M,Hauser MD,Carson RE,Herscovitch P,Martin A ( 2004): Toward an evolutionary perspective on conceptual representation: Species‐specific calls activate visual and affective processing systems in the macaque. Proc Natl Acad Sci USA 101: 17516–17521. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gur RC,Gunning‐Dixon F,Bilker WB,Gur RE ( 2002): Sex differences in temporo‐limbic and frontal brain volumes of healthy adults. Cereb Cortex 12: 998–1003. [DOI] [PubMed] [Google Scholar]

- Hamann SB,Herman RA,Nolan CL,Wallen K ( 2004): Men and woman differ in amygdala response to visual sexual stimuli. Nat Neurosci 7: 411–416. [DOI] [PubMed] [Google Scholar]

- Jezzard P,Ramsey NF ( 2003): Functional MRI In: Tofts P, editor. Quantitative MRI of the Brain. Measuring Changes Caused by Disease. Chichester: Wiley; pp. 413–453. [Google Scholar]

- Kansaku K,Yamaura A,Kitazawa S ( 2000): Sex differences in lateralization revealed in the posterior language areas. Cereb Cortex 10: 866–872. [DOI] [PubMed] [Google Scholar]

- Kemp AH,Silberstein RB,Armstrong SM,Nathan PJ ( 2004): Gender differences in the cortical electrophysiological processing of visual emotional stimuli. Neuroimage 21: 632–646. [DOI] [PubMed] [Google Scholar]

- Killgore WDS,Yurgelun‐Todd DA ( 2001): Sex differences in amygdala activation during the perception of facial affect. Neuroreport 12: 2543–2547. [DOI] [PubMed] [Google Scholar]

- LaBar KS,Gatenby JC,Gore JC,LeDoux JE,Phelps EA ( 1998): Human amygdala activation during conditioned fear acquisition and extinction: A mixed‐trial fMRI study. Neuron 20: 937–945. [DOI] [PubMed] [Google Scholar]

- Lattner S,Meyer ME,Friederici AD ( 2005): Voice perception: Sex, pitch, and the right hemisphere. Hum Brain Mapp 24: 11–20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leger DW,Thompson RA,Merritt JA,Benz JJ ( 1996): Adult perception of emotion intensity in human infant cries: Effects of infant age and cry acoustics. Child Dev 67: 3238–3249. [DOI] [PubMed] [Google Scholar]

- Lorberbaum JP,Newman JD,Dubno JR,Horwitz AR,Nahas Z,Teneback CC,Bloomer CW,Bohning DE,Vincent D,Johnson MR,Emmanuel N,Brawman‐Mintzer O,Book SW,Lydiard RB,Ballenger JC,George MS ( 1999): Feasibility of using fMRI to study mothers responding to infant cries. Depress Anxiety 10: 99–104. [DOI] [PubMed] [Google Scholar]

- Lorberbaum JP,Newman JD,Horwitz AR,Dubno JR,Lydiard RB,Hamner MB,Bohning DE,George MS ( 2002): A potential role for thalamocingulate circuitry in human maternal behavior. Biol Psychiatry 51: 431– 445. [DOI] [PubMed] [Google Scholar]

- MacLean PD ( 1993): Perspectives on cingulate cortex in the limbic system In: Vogt BA,Gabriel M, editors. Neurobiology of Cingulatecortex and Limbic Thalamus. Boston: Birkhäuser; pp 1–15. [Google Scholar]

- Maddock RJ ( 1999): The retrosplenial cortex and emotion: New insights from functional neuroimaging of the human brain. Trends Neurosci 22: 310–316. [DOI] [PubMed] [Google Scholar]

- Maddock RJ,Garrett AS,Buonocore MH ( 2003): Posterior cingulate cortex activation by emotional words: fMRI evidence from a decision task. Hum Brain Mapp 18: 30–41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Merboldt K‐D,Fransson P,Bruhn H,Frahm J ( 2001): Functional MRI of the human amygdala? Neuroimage 14: 253–257. [DOI] [PubMed] [Google Scholar]

- Oldfield RC ( 1971): The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9: 97–113. [DOI] [PubMed] [Google Scholar]

- Pedersen CA ( 2004): Biological aspects of social bonding and the roots of human violence. Ann NY Acad Sci 1036: 106–127. [DOI] [PubMed] [Google Scholar]

- Pelletier JG,Likhtik E,Filali M,Paré D ( 2005): Lasting increases in basolateral amygdala activity after emotional arousal: Implications for facilitated consolidation of emotional memories. Learn Mem 12: 96–102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Phillips ML,Young AW,Scott SK,Calder AJ,Andrew C,Giampietro V,Williams SCR,Bullmore ET,Brammer M,Gray JA ( 1998): Neural responses to facial and vocal expressions of fear and disgust. Proc R Soc London Ser B 265: 1809–1817. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pruessner JC, Li LM, Serles W, Pruessner M, Collins DL, Kabani N, Lupien S, Evans AC (2000): Volumetry of hippocampus and amygdala with high‐resolution MRI and three‐dimensional analysis software: Minimizing the discrepancies between laboratories. Cereb Cortex 10: 433–442.

- Pujol J, Lopez A, Deus J, Cardoner N, Vallejo J, Capdevila A, Paus T (2002): Anatomical variability of the anterior cingulate gyrus and basic dimensions of human personality. Neuroimage 15: 847–855.

- Rademacher J, Morosan P, Schleicher A, Freund H‐J, Zilles K (2001): Human primary auditory cortex in women and men. Neuroreport 12: 1561–1565.

- Sabatinelli D, Flaisch T, Bradley MM, Fitzsimmons JR, Lang PJ (2004): Affective picture perception: Gender differences in visual cortex? Neuroreport 15: 1109–1112.

- Sander K, Scheich H (2001): Auditory perception of laughing and crying activates human amygdala regardless of attentional state. Brain Res Cogn Brain Res 12: 181–198.

- Sander K, Scheich H (2005): Left auditory cortex and amygdala, but right insula dominance for human laughing and crying. J Cogn Neurosci 17: 1519–1531.

- Sander K, Brechmann A, Scheich H (2003): Audition of laughing and crying leads to right amygdala activation in a low‐noise fMRI setting. Brain Res Brain Res Protoc 11: 81–91.

- Sawa M, Delgado JM (1963): Amygdala unitary activity in the unrestrained cat. Electroencephalogr Clin Neurophysiol 15: 637–650.

- Schaefer SM, Jackson DC, Davidson RJ, Aguirre GK, Kimberg DY, Thompson‐Schill SL (2002): Modulation of amygdalar activity by the conscious regulation of negative emotion. J Cogn Neurosci 14: 913–921.

- Schneider F, Habel U, Kessler C, Salloum JB, Posse S (2000): Gender differences in regional cerebral activity during sadness. Hum Brain Mapp 9: 226–238.

- Seifritz E, Esposito F, Neuhof JG, Luthi A, Mustovic H, Dammann G, von Bardeleben U, Radue E, Cirillo S, Tedeschi G, Di Salle F (2003): Differential sex‐independent amygdala response to infant crying and laughing in parents versus nonparents. Biol Psychiatry 54: 1367–1375.

- Shaywitz BA, Shaywitz SE, Pugh KR, Constable RT, Skudlarski P, Fulbright RK, Bronen RA, Fletcher JM, Shankweiler DP, Katz L, Gore JC (1995): Sex differences in the functional organization of the brain for language. Nature 373: 607–609.

- Steyer R, Schwenkmezger P, Notz P, Eid M (1997): Der Mehrdimensionale Befindlichkeitsfragebogen (MDBF) [The Multidimensional Mood State Questionnaire]. Göttingen: Hogrefe.

- Tebartz van Elst L, Woermann F, Lemieux L, Trimble MR (2000): Increased amygdala volumes in female and depressed humans. A quantitative magnetic resonance imaging study. Neurosci Lett 281: 103–106.

- van Vaals JJ, Brummer ME, Dixon WT, Tuithof HH, Engels H, Nelson RC, Gerety BM, Chezmar JL, den Boer JA (1993): “Keyhole” method for accelerating imaging of contrast agent uptake. J Magn Reson Imaging 3: 671–675.

- Vogt BA (2005): Pain and emotion interactions in subregions of the cingulate gyrus. Nat Rev Neurosci 6: 533–544.

- Vogt BA, Pandya DN (1987): Cingulate cortex of the rhesus monkey. II. Cortical afferents. J Comp Neurol 262: 271–289.

- Vogt BA, Finch DM, Olson CR (1992): Functional heterogeneity in cingulate cortex: The anterior executive and posterior evaluative regions. Cereb Cortex 2: 435–443.

- Vogt BA, Nimchinsky EA, Vogt LJ, Hof PR (1995): Human cingulate cortex: Surface features, flat maps, and cytoarchitecture. J Comp Neurol 359: 490–506.

- Wasz‐Höckert O, Lind J, Vuorenkoski V, Partanen T, Valanne E (1968): The Infant Cry: A Spectrographic and Auditory Analysis, Vol. 29. Lavenham, Suffolk: Lavenham Press.

- Woods RP, Grafton ST, Holmes CJ, Cherry SR, Mazziotta JC (1998a): Automated image registration. I. General methods and intrasubject, intramodality validation. J Comput Assist Tomogr 22: 139–152.

- Woods RP, Grafton ST, Watson JD, Sicotte NL, Mazziotta JC (1998b): Automated image registration. II. Intersubject validation of linear and nonlinear models. J Comput Assist Tomogr 22: 153–165.

- Wrase J, Klein S, Gruesser SM, Hermann D, Flor H, Mann K, Braus DF, Heinz A (2003): Gender differences in the processing of standardized emotional visual stimuli in humans: A functional magnetic resonance imaging study. Neurosci Lett 348: 41–45.

- Yucel M, Stuart GW, Maruff P, Velakoulis D, Crowe SF, Savage G, Pantelis C (2001): Hemispheric and gender‐related differences in the gross morphology of the anterior cingulate/paracingulate cortex in normal volunteers: An MRI morphometric study. Cereb Cortex 11: 17–25.

- Yukie M (1995): Neural connections of auditory association cortex with the posterior cingulate cortex in the monkey. Neurosci Res 22: 179–187.

- Yukie M (2002): Connections between the amygdala and auditory cortical areas in the macaque monkey. Neurosci Res 42: 219–229.

- Zaitsev M, Zilles K, Shah NJ (2001): Shared k‐space echo planar imaging with keyhole. Magn Reson Med 45: 109–117.

- Zald DH (2003): The human amygdala and the emotional evaluation of sensory stimuli. Brain Res Rev 41: 88–123.

- Zeifman DM (2001): An ethological analysis of human infant crying: Answering Tinbergen's four questions. Dev Psychobiol 39: 265–285.

- Zubieta J‐K, Dannals RF, Frost JJ (1999): Gender and age influences on human brain mu‐opioid receptor binding measured by PET. Am J Psychiatry 156: 842–848.

- Zubieta J‐K, Ketter TA, Bueller JA, Xu Y, Kilbourn MR, Young EA, Koeppe RA (2003): Regulation of human affective responses by anterior cingulate and limbic μ‐opioid neurotransmission. Arch Gen Psychiatry 60: 1145–1153.