Abstract

A fundamental question in multilingualism is whether the neural substrates are shared or segregated for the two or more languages spoken by polyglots. This study employs functional MRI to investigate the neural substrates underlying the perception of two sentence‐level prosodic phenomena that occur in both Mandarin Chinese (L1) and English (L2): sentence focus (sentence‐initial vs. ‐final position of contrastive stress) and sentence type (declarative vs. interrogative modality). Late‐onset, medium proficiency Chinese‐English bilinguals were asked to selectively attend to either sentence focus or sentence type in paired three‐word sentences in both L1 and L2 and make speeded‐response discrimination judgments. L1 and L2 elicited highly overlapping activations in frontal, temporal, and parietal lobes. Furthermore, region of interest analyses revealed that for both languages the sentence focus task elicited a leftward asymmetry in the supramarginal gyrus; both tasks elicited a rightward asymmetry in the mid‐portion of the middle frontal gyrus. A direct comparison between L1 and L2 did not show any difference in brain activation in the sentence type task. In the sentence focus task, however, greater activation for L2 than L1 occurred in the bilateral anterior insula and superior frontal sulcus. The sentence focus task also elicited a leftward asymmetry in the posterior middle temporal gyrus for L1 only. Differential activation patterns are attributed primarily to disparities between L1 and L2 in the phonetic manifestation of sentence focus. Such phonetic divergences lead to increased computational demands for processing L2. These findings support the view that L1 and L2 are mediated by a unitary neural system despite late age of acquisition, although additional neural resources may be required in task‐specific circumstances for unequal bilinguals. Hum. Brain Mapp, 2007. © 2006 Wiley‐Liss, Inc.

Keywords: fMRI, human auditory processing, speech perception, language, bilingualism, prosody, intonation, contrastive stress, emphatic stress, Mandarin Chinese

INTRODUCTION

It is estimated that over 50% of the world population is bilingual [Fabro,1999]. Thus, it is important to investigate how multiple languages are processed in the human brain. Two issues in particular have monopolized much of the literature on bilingualism: differential hemispheric asymmetry and differential localization within the dominant hemisphere.

Evidence from aphasia data suggests that the left hemisphere (LH) is dominant for both languages in bilinguals [Paradis,1995, 1998, 2001]. This leftward asymmetry is observed in multilingual aphasics regardless of language typology. For example, Mandarin Chinese and English are typologically diverse languages. In four left brain‐damaged Chinese‐speaking polyglots, all languages were similarly affected and recovery of the dominant language occurred first [Rapport et al.,1983]. Wada tests revealed that the LH is dominant for both languages in two Chinese‐English (C/E) and two English‐Chinese (E/C) bilingual patients [Rapport et al.,1983].

Using direct electrocortical stimulation, it has been shown that bilinguals use common as well as distinct cortical areas in temporal, parietal, and frontal lobes for different languages [FitzGerald et al.,1997; Lucas et al.,2004; Ojemann et al.,1989; Ojemann and Whitaker,1978; Roux et al.,2004]. Similar observations have been made for Chinese‐speaking polyglots. One C/E and two E/C bilinguals had naming interference sites in left temporoparietal regions that colocalized in both languages, along with other sites unique to each language [Rapport et al.,1983]. One early‐onset C/E bilingual showed naming interference in both languages at a site in the superior temporal gyrus [Walker et al.,2004]. In one French/English‐German‐Mandarin quadrilingual, French reading interference areas were common to Mandarin in the posterior part of the left middle temporal gyrus [Roux et al.,2004].

Functional neuroimaging data (functional magnetic resonance imaging, fMRI; positron emission tomography, PET) reveal that the second language (L2) is largely processed in the same brain regions as the first language (L1), but also to some degree in different brain regions from L1 [Briellmann et al.,2004; Callan et al.,2003; Hasegawa et al.,2002; Illes et al.,1999; Kim et al.,1997, 2002; Marian et al.,2003; Vingerhoets et al.,2003]. For C/E bilinguals, imaging data across a variety of tasks (e.g., word generation, sentence comprehension, rhyming, semantic relatedness) point to a unitary neural system underlying language processing in L1 (Mandarin) and L2 (English), which is also capable of recruiting additional task‐specific cortical resources for processing L2 [Chee et al.,1999a,b,2001; Klein et al.,1999; Pu et al.,2001; Tan et al.,2003; Xue et al.,2004]. This is not to say that L2 is distinctly represented in these regions, but rather that activity in these regions reflects the recruitment of additional neural processes to carry out the language task. For example, perceptual identification of a difficult phonetic contrast in L2 may induce greater activity in brain regions involved with auditory‐articulatory mapping, whether the contrast is segmental [Callan et al.,2004] or suprasegmental [Wang et al.,2003]. In the latter case, the early cortical effects of learning a tone language (Mandarin) as a second language by native speakers of English involve both expansion of areas in the left superior temporal gyrus and recruitment of additional regions in right prefrontal cortex that are implicated in pitch processing [Zatorre et al.,1992].

Other factors that interact with language performance, especially age of acquisition, amount of language exposure, and level of attained proficiency, can affect the cortical representation of languages in multilingual individuals. Different aspects of language may be selectively impacted by delays in exposure to L2. Grammatical processing is negatively impacted when L2 is learned beyond a critical or sensitive period. In adult C/E bilinguals, lexical‐semantic processing seems to be less affected by delays in L2 acquisition relative to syntax [Weber‐Fox and Neville,1996]. Both proficiency level [Perani et al.,1998] and environmental exposure [Perani et al.,2003] to L2 exert considerable influence in determining the cortical representation of L2. Greater levels of proficiency in L2 produce lexical‐semantic mental representations that more closely resemble those in L1 [see Perani and Abutalebi,2005, for review]. In late‐onset bilinguals the degree of L2 proficiency influences the neural organization of auditory comprehension [Perani et al.,1998] and morphosyntax/semantics [Wartenburger et al.,2003]. Regarding Mandarin and English, relative language proficiency in two groups of bilinguals (C/E, E/C) has been demonstrated to influence activation in the left prefrontal cortex during semantic processing [Chee et al.,2001], being more extensive and of greater magnitude when processing in the less proficient as compared to the more proficient language.

Although Mandarin, a tone language, and English, a nontone language, differ structurally in their use of prosody at the word level, both languages exploit prosody at the sentence level to distinguish sentence focus and sentence type. Using direct comparisons of brain activity elicited by the perception of lexical tone, sentence type, and sentence focus between native Mandarin (L1) speakers and monolingual English speakers (L0) who are not familiar with Mandarin, it has been argued that lower‐level aspects of speech prosody engage neural mechanisms in the right hemisphere (RH), whereas regions in the LH appear to be recruited for processing higher‐level aspects of language [Gandour et al.,2003,2004; Tong et al.,2005]. Of these LH regions, the left supramarginal gyrus (SMG) and posterior middle temporal gyrus (pMTG) showed greater activation in the Chinese group of speakers relative to the English group. Only the left pMTG exhibited a task effect (sentence focus > sentence type). These findings are interpreted to suggest that the left SMG and pMTG are involved in auditory‐articulatory mapping and auditory‐conceptual/semantic mapping, respectively [Hickok and Poeppel,2004]. Because both sentence‐level contrasts occur in Mandarin and English, it is possible to compare the processing of the same prosodic contrasts in C/E bilinguals' L1 and L2.

To date, brain mapping studies of C/E or E/C bilinguals have investigated language processing primarily in the visual modality using word generation [Chee et al.,1999b; Pu et al.,2001], word relatedness [Chee et al.,2001,2003], sentence comprehension [Chee et al.,1999a], and rhyming judgment [Tan et al.,2003; Xue et al.,2004] tasks. In the auditory modality, only one study has focused on speech prosody, using a lexical tone identification task with early learners of Mandarin as a second language [Wang et al.,2003]. Two other auditory studies employed word generation [Klein et al.,1999] and n‐back working memory [Chee et al.,2004] tasks. It has yet to be determined how the same aspects of speech prosody, or for that matter, any other aspect of the phonological system, are processed by C/E bilinguals in Mandarin (L1) and English (L2). This study represents an initial effort to fill this knowledge gap.

Accordingly, the purpose of this fMRI study is to investigate neural processes underlying the discrimination of the same two prosodic contrasts (sentence focus: initial word vs. final word; sentence type: statement vs. question) in both Mandarin (L1) and English (L2) by late C/E bilingual speakers with medium L2 proficiency and equal L1, L2 language exposure. As such, it is the first brain imaging study to directly explore differences in neural activity associated with auditory processing of the same prosodic contrast in the bilinguals' native language and second language [Tan et al.,2003]. The recruitment of similar brain regions in the processing of the second language even in late onset bilinguals would strongly suggest that the neuronal substrate is biologically determined for the processing of speech prosody. By using unequal bilinguals (C > E), we can directly examine whether perceptual processing of sentence focus (and type) in L1 and L2 is mediated by a unitary neural system or by a partially overlapping neural system that recruits additional cortical resources to meet increased computational demands caused by lower L2 proficiency. By presenting acoustically identical stimulus pairs in each of the bilinguals' two languages, we are able to separate task‐specific responses that are independent of stimulus characteristics. In particular, by holding utterance length constant, we can eliminate stimulus duration as a potential confound when interpreting hemispheric laterality effects.

PATIENTS AND METHODS

Subjects

Ten young adult students from mainland China (5 male; 5 female) were recruited from the campus of Purdue University. All subjects were strongly right‐handed (mean [M] = 97%) as measured by the Edinburgh Handedness Inventory [Oldfield,1971]. The average age of the subjects was 27 (standard deviation [SD] = 3); average number of years of education was 19 (SD = 2). All subjects had earned a minimum TOEFL score of 600 and were screened using a bilingual questionnaire [Weber‐Fox and Neville,1996] in order to create a group of late‐onset, medium proficiency Chinese‐English bilinguals. A medium level of proficiency was defined operationally as 2.5–3.0 on 5‐point self‐rating scales for speaking (M = 2.5, SD = 0.5) and comprehension (M = 2.9, SD = 0.3). To control for potentially confounding variables, this group of C/E bilinguals was homogeneous with respect to handedness, age and education at time of testing, age of second language (L2) acquisition (M = 11.6, SD = 1.7), manner of L2 acquisition (formal instruction in primary and secondary school), environment of L2 learning (China), number of years in USA (M = 2.1, SD = 0.8), and frequency of English usage in the USA (1 = use Chinese only … 7 = use English only; M = 1.8, SD = 0.5). Subjects exhibited normal hearing sensitivity (pure‐tone air conduction thresholds of 20 dB HL (hearing level) or better in both ears at frequencies of 0.5, 1, 2, and 4 kHz). All subjects gave informed consent in compliance with a protocol approved by the Institutional Review Boards of Purdue University, Indiana University Purdue University Indianapolis, and Clarian Health.

With regard to language proficiency and age of acquisition, it has been demonstrated that the degree of perceptual similarity or dissimilarity between L1 and L2 sounds has an impact on attained proficiency in L2 perception or production [Callan et al.,2003,2004; Mack,2003]. Sentence focus and type show different degrees of phonetic coincidence between Mandarin and English [Eady and Cooper,1986; Jin,1996; Liu and Xu,2004; Pell,2001; Xu,1999; Xu and Xu,2005] (see Speech Material for details). Such differences are likely to be significant since very few bilinguals, regardless of age of L2 acquisition, are able to achieve native‐like fluency at the phonetic level. That is, there may be a critical or sensitive period for certain phonetic features [see Mack,2003, for review]. By using late‐onset C/E bilinguals with medium proficiency, we increase the likelihood that any phonetic differences between L1 and L2 sentence prosody will require more cortical resources for L2 than L1 processing.

Speech Material

Sentences were designed in both Chinese and English with two sentence types (statement, question) in combination with two locations of sentence focus (initial, final). In Chinese, e.g.: statement + initial stress, **bi**4 ge1 cao3. '**Bi** mows lawns.'; statement + final stress, bi4 ge1 **cao**3. 'Bi mows **lawns**.'; question + initial stress, **bi**4 ge1 cao3? '**Bi** mows lawns?'; question + final stress, bi4 ge1 **cao**3? 'Bi mows **lawns**?'. These four sentences comprised a set.

All sentences were made up of three monosyllables and exhibited the same syntactic structure (Subject Verb Object). Subjects were proper nouns and objects common nouns in all sets for both languages. In order to keep the number of syllables constant, surnames were used for subjects in Mandarin sentences, given names in English sentences. Semantic information was matched between languages so that sentences expressed the same pragmatic content. Verbal content was neutral so that sentences were not biased to any particular emotion. Sentence focus was assigned to either the subject or object noun.

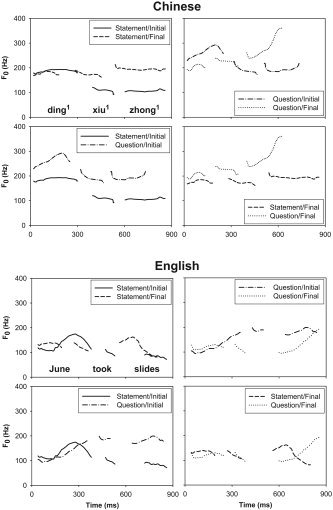

In Chinese (Fig. 1, top), the difference between statements and unmarked questions (i.e., those involving no change in word order and no addition of a question word) is manifested as a moderate rise in the pitch level, starting primarily from the focused word (lower panels). The effects of focus are manifested primarily by variations in pitch and duration (upper panels). Considerable differences in duration (upper left panel), pitch level (upper left panel), and pitch range (upper right panel) are observed between focused and unfocused words. Also shown are interaction effects between the overall shape of tonal contours and sentence focus and type (top right panel). Tone 1 is phonetically realized as high level in isolated monosyllables. In this sentence, it exhibits a high rising contour depending on the location of focus in questions only. In both statements and questions (lower left panel), the pitch range of the post‐focused words is compressed and lowered. These tokens are illustrative of our Chinese speaker's productions and, moreover, are consistent with the results of large‐scale acoustic phonetic investigations of Chinese tone, sentence focus and type [Jin,1996; Liu and Xu,2004; Shen,1990; Xu,1999,2006].

Figure 1.

Pitch contours obtained from sample sentence pairs in Mandarin Chinese (top: ding1 xiu1 zhong1 'Ding fixes clocks') and English (bottom: June took slides) that share the same sentence type (statement, upper left; question, upper right) but differ in location of focus (initial vs. final), and those pairs that share the same focus location (initial, lower left; final, lower right) but differ in sentence type (statement vs. question).

In English (Fig. 1, bottom), the difference between statements and questions is manifested as a sharp rise in the pitch contour, and the onset of pitch raising in questions is conditioned by the location of sentence‐level focus (lower panels). Thus, as in Chinese, focus location serves as a pivot at which statement and question pitch contours begin to diverge, falling and rising, respectively. As in Chinese, the effects of focus are manifested primarily by variations in pitch and duration (upper panels). Unlike in Chinese, however, pitch contours associated with sentence focus in English questions are inverted from those in statements, exhibiting low rising, instead of high falling, pitch contours as observed in their statement counterparts (lower panels). Also unlike in Chinese, the pitch range of post‐focused words is compressed and lowered in statements only (lower left panel). These tokens are illustrative of our English speaker's productions and, moreover, are consistent with the results of large‐scale acoustic phonetic investigations of English sentence focus and type [Cooper and Sorensen,1981; Eady and Cooper,1986; Eady et al.,1986; Pell,2001; Xu and Xu,2005].

Recording Procedure

One adult male speaker each of Mandarin (28 years old) and English (23 years old) was instructed to produce two lists of sentences in their native language at a normal speaking rate. For both Mandarin and English there were initially 90 sets of the stimulus material, corresponding to 360 sentences. In one list the 360 sentences were blocked in pairs by sentence focus (initial, final); in the other list the same sentences were blocked in pairs by sentence type (statement, question). In English, for example, a pragmatic cue was provided to facilitate assigning contrastive emphasis to the correct word (enclosed in square brackets): e.g., Sue bought meals. [not Dawn]; Sue bought meals. [not snacks]. Similarly, in Mandarin each sentence in the list was typed in Chinese characters and in a Roman transliteration system (pinyin). The experimenter controlled the pace of presentation by pointing to the sentences at regularly spaced intervals. This procedure enabled the talker to maintain a uniform speaking rate, thus maximizing the likelihood of producing consistent, natural‐sounding utterances. Recordings were made in a double‐walled soundproof booth using an AKG C410 headset type microphone and a Sony TCD‐D8 digital audio tape recorder. The talkers were seated and wore a custom‐made headband that maintained the microphone at a fixed distance of 12 cm from the lips. All recorded utterances were digitized at a 16 bit/44.1 kHz sampling rate.

Prescreening Identification Procedure

All Mandarin sentences were presented individually in random order for identification by five native speakers of Chinese who were naive to the purposes of the experiment. Similarly, all English sentences were presented for identification by five native speakers of English. They were asked to respond whether the sentence type was a statement or question and whether the focus location was on the first or last word. Only those sets of four sentences across the two languages that achieved a perfect (100%) recognition score for both sentence focus and sentence type, and in addition met our criterion for sentence duration (850 to 1050 ms), were retained as stimuli in the training (16 sets) and experimental sessions (32 sets).
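The retention rule described above can be sketched as a simple filter. This is a minimal illustration rather than the screening procedure actually used: the `StimulusSet` record, its field names, and the sample values are hypothetical, and it assumes the duration criterion applies to every sentence in a set.

```python
from dataclasses import dataclass

@dataclass
class StimulusSet:
    """One set of four sentences (2 sentence types x 2 focus locations)."""
    label: str
    focus_accuracy: float   # proportion of correct focus identifications (0-1)
    type_accuracy: float    # proportion of correct type identifications (0-1)
    durations_ms: list      # duration of each of the four sentences

MIN_MS, MAX_MS = 850, 1050  # duration criterion stated in the text

def is_retained(s: StimulusSet) -> bool:
    """Keep a set only if listeners were perfect on both judgments
    and every sentence falls inside the duration window."""
    perfect = s.focus_accuracy == 1.0 and s.type_accuracy == 1.0
    in_window = all(MIN_MS <= d <= MAX_MS for d in s.durations_ms)
    return perfect and in_window

# Hypothetical sets, for illustration only (not data from the study).
candidates = [
    StimulusSet("set_a", 1.0, 1.0, [900, 950, 1000, 1040]),
    StimulusSet("set_b", 1.0, 0.9, [900, 950, 1000, 1040]),  # imperfect type score
    StimulusSet("set_c", 1.0, 1.0, [800, 950, 1000, 1040]),  # one sentence too short
]
retained = [s.label for s in candidates if is_retained(s)]
print(retained)
```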

Task Procedure

The experimental paradigm consisted of four active discrimination tasks, Chinese stimuli – sentence focus (C‐SF), Chinese stimuli – sentence type (C‐ST), English stimuli – sentence focus (E‐SF), English stimuli – sentence type (E‐ST), and passive listening control tasks (CL, EL). In the discrimination tasks, subjects were required to selectively attend to either sentence type (statement, question) or focus location (initial, final), make speeded‐response same‐different judgments of pairs of Chinese or English sentences (Table I), and respond by pressing the left (“same”) or right (“different”) mouse button. The control task involved passive listening to the same sentence pairs. It was designed to capture cognitive processes inherent to automatic, perceptual analysis including early auditory processing, executive functions mediating sustained attention and arousal, and motor response formation. Subjects were instructed to listen passively and to respond by alternately pressing the left and right mouse buttons after hearing each pair. No overt judgment was required. There were 32 pairs each of Mandarin and English sentences with unique combinations of sentence type and focus location. Sentence focus or type each differed in 37.5% of the pairs. Stimulus pairs that were identical in both focus and type comprised 25% of total pairs.
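As a quick arithmetic check, the stated percentages can be converted to pair counts. The sketch below assumes the three categories are mutually exclusive, which the text implies (the percentages sum to 100%) but does not state outright; the dictionary keys are illustrative names.

```python
from fractions import Fraction

TOTAL_PAIRS = 32  # pairs per language, as stated in the text

# Exact fractions avoid floating-point rounding in the percentages.
proportions = {
    "focus_differs": Fraction(375, 1000),  # 37.5%
    "type_differs": Fraction(375, 1000),   # 37.5%
    "identical": Fraction(25, 100),        # 25%
}

counts = {name: int(p * TOTAL_PAIRS) for name, p in proportions.items()}
print(counts)
print(sum(counts.values()) == TOTAL_PAIRS)
```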

Table I.

Sample Chinese and English stimulus pairs for sentence focus vs. sentence type tasks

| Task | Chinese | | English | | Response |
|---|---|---|---|---|---|
| SF | **yu**2 pa2 shan1. | **yu**2 pa2 shan1. | **Eve** climbs hills. | **Eve** climbs hills. | Same |
| | '**Yu** climbs hills.' | '**Yu** climbs hills.' | | | |
| | **kou**4 du2 shu1. | **kou**4 du2 shu1? | **Ron** reads books. | **Ron** reads books? | Same |
| | '**Kou** reads books.' | '**Kou** reads books?' | | | |
| | **dai**4 hua4 tu2? | dai4 hua4 **tu**2? | **Dan** draws maps? | Dan draws **maps**? | Different |
| | '**Dai** draws maps?' | 'Dai draws **maps**?' | | | |
| ST | **yu**2 pa2 shan1. | **yu**2 pa2 shan1. | **Eve** climbs hills. | **Eve** climbs hills. | Same |
| | '**Yu** climbs hills.' | '**Yu** climbs hills.' | | | |
| | **kou**4 du2 shu1. | **kou**4 du2 shu1? | **Ron** reads books. | **Ron** reads books? | Different |
| | '**Kou** reads books.' | '**Kou** reads books?' | | | |
| | **dai**4 hua4 tu2? | dai4 hua4 **tu**2? | **Dan** draws maps? | Dan draws **maps**? | Same |
| | '**Dai** draws maps?' | 'Dai draws **maps**?' | | | |

Chinese sentences are displayed in pinyin, a quasi‐phonemic transcription system of Chinese characters. Tones are marked by the numeral following each syllable. Location of sentence stress is indicated in bold. Sentence types are marked conventionally for statement (.) and question (?). The English glosses for Chinese stimuli are enclosed in quotes. SF, sentence focus; ST, sentence type.
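The logic behind the Response column can be made explicit with a short sketch: the same pair can call for different answers depending on which prosodic dimension the listener attends to. The `Sentence` class and `expected_response` function are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sentence:
    focus: str  # "initial" or "final"
    kind: str   # "statement" or "question"

def expected_response(task: str, first: Sentence, second: Sentence) -> str:
    """Return the correct same/different judgment for a sentence pair.

    task is "SF" (attend to focus location) or "ST" (attend to sentence type).
    """
    if task == "SF":
        same = first.focus == second.focus
    elif task == "ST":
        same = first.kind == second.kind
    else:
        raise ValueError(f"unknown task: {task}")
    return "same" if same else "different"

# A pair that differs only in sentence type (statement vs. question).
pair = (Sentence("initial", "statement"), Sentence("initial", "question"))
print(expected_response("SF", *pair))
print(expected_response("ST", *pair))
```

The unattended dimension is simply ignored in the judgment, which is what makes the two tasks separable on acoustically identical pairs.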

There were a total of four scanning runs: C‐SF vs. CL; C‐ST vs. CL; E‐SF vs. EL; E‐ST vs. EL. A scanning run consisted of two tasks presented in blocked format (32 s) alternating with 16‐s rest periods (e.g., C‐SF and CL). There were eight blocks in a run, four blocks per task. Eight sentence pairs were presented in each block. The order of scanning runs and the trials within blocks were randomized for each subject. Before each run, subjects were informed of the language (Chinese, English) in which the stimuli were to be presented. Instructions were delivered to subjects in Chinese via headphones during rest periods immediately preceding each task: “listen” for passive listening to sentence pairs; “intonation” for discrimination judgments of sentence types; and “stress” for discrimination judgments of stress location. Average trial duration was about 4 s, including an interstimulus interval of 200 ms between sentences within each pair and a response interval of 2 s.
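The trial and block timing can be checked with a few lines of arithmetic. The sketch assumes a trial consists of exactly two sentences, the within-pair interval, and the response interval, and it takes sentence durations from the 850 to 1050 ms prescreening criterion; the constant names are illustrative.

```python
ISI_S = 0.2                          # interval between the two sentences of a pair
RESPONSE_S = 2.0                     # response interval
SENT_MIN_S, SENT_MAX_S = 0.85, 1.05  # sentence duration criterion
TRIALS_PER_BLOCK = 8
AVG_TRIAL_S = 4.0                    # "about 4 s" in the text

def trial_duration(sentence_s: float) -> float:
    """Two sentences of equal length, plus the ISI and the response interval."""
    return 2 * sentence_s + ISI_S + RESPONSE_S

shortest = trial_duration(SENT_MIN_S)
longest = trial_duration(SENT_MAX_S)
block_s = TRIALS_PER_BLOCK * AVG_TRIAL_S

print(round(shortest, 2), round(longest, 2), block_s)
```

Under these assumptions the possible trial lengths bracket the reported average of about 4 s, and eight average trials fill one 32-s block.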

All speech stimuli were digitally normalized to the same peak intensity. They were presented binaurally by means of a computer playback system (E‐Prime) through a pair of MRI‐compatible headphones (Avotec, Stuart, FL). The plastic sound conduction tubes were threaded through tightly occlusive foam eartips inside the earmuffs that attenuated the average sound pressure level of the continuous scanner noise by approximately 30 dB. Average intensity of all experimental stimuli was about 90 dB SPL (sound pressure level) against a background of scanner noise at 80 dB SPL after attenuation. Accuracy, reaction time, and subjective ratings of task difficulty were used to measure task performance. Each task was self‐rated by listeners on a 1–5‐point graded scale of difficulty (1 = easy, 3 = medium, 5 = hard) at the end of the scanning session.

Prior to scanning, subjects were trained under silent and noise‐simulated echo‐planar imaging (EPI) conditions to a high level of accuracy using stimuli different from those presented during the scanning runs: C‐SF, 100% correct; E‐SF, 95%; C‐ST, 98%; E‐ST, 100%.

Image Acquisition

Scanning was performed on a 1.5T Signa GE LX Horizon scanner (Waukesha, WI) equipped with a birdcage transmit‐receive radiofrequency headcoil. Each of the four 200‐volume EPI runs began with a rest interval consisting of 8 baseline volumes (16 s), followed by 184 volumes during which the two comparison tasks (32 s) alternated with intervening 16‐s rest intervals, and ended with a rest interval of 8 baseline volumes (16 s). Functional data were acquired using a gradient‐echo EPI pulse sequence with the following parameters: repetition time (TR) 2 s; echo time (TE) 50 ms; matrix 64 × 64; flip angle (FA) 90°; field of view (FOV) 24 × 24 cm. Fifteen 7.5‐mm–thick, contiguous axial slices were used to image the entire cerebrum. Prior to functional imaging runs, high‐resolution, anatomic images were acquired in 124 contiguous axial slices using a 3‐D Spoiled‐Grass (3D SPGR) sequence (slice thickness 1.2–1.3 mm; TR 35 ms; TE 8 ms; 1 excitation; FA 30°; matrix 256 × 256; FOV 24 × 24 cm) for purposes of anatomic localization and coregistration to a standard stereotaxic system [Talairach and Tournoux,1988]. Subjects were scanned with eyes closed and room lights dimmed. The effects of head motion were minimized by using a head‐neck pad and dental bite bar.
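The volume counts follow from the block design and the TR. The sketch below assumes that the 16-s rests occur only between consecutive task blocks (seven rests for eight blocks), with the opening and closing baselines counted separately; constant names are illustrative.

```python
TR_S = 2.0
BLOCK_S, REST_S = 32, 16
BLOCKS_PER_RUN = 8
BASELINE_VOLUMES = 8  # acquired at the start and again at the end of a run

# Eight task blocks separated by seven intervening rest periods.
task_period_s = BLOCKS_PER_RUN * BLOCK_S + (BLOCKS_PER_RUN - 1) * REST_S
task_volumes = int(task_period_s / TR_S)
total_volumes = BASELINE_VOLUMES + task_volumes + BASELINE_VOLUMES

print(task_volumes, total_volumes, total_volumes * TR_S)
```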

Image Analysis

Image analysis was conducted using the AFNI software package [Cox,1996]. First, for every subject the volumes acquired in the functional imaging runs were rigid‐body motion‐corrected to the fourth acquired volume of the first functional imaging run. Second, to remove both scanner signal drift and differences in global intensity between runs, the time series of each run was detrended by removing a fitted second‐order polynomial and then normalized to its mean intensity.
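The detrending and normalization step can be illustrated for a single voxel time series. This is a minimal pure-Python sketch, not the AFNI implementation: it assumes the quadratic is fitted by ordinary least squares, that the run mean is retained after the drift is removed, and that normalization means dividing by that mean. The function names are hypothetical.

```python
def fit_quadratic(y):
    """Least-squares fit of y[t] ~ a + b*t + c*t^2 via the normal equations."""
    n = len(y)
    t = [i / (n - 1) for i in range(n)]  # scale time to [0, 1] for stability
    powers = [[ti ** k for k in range(3)] for ti in t]
    # Build the 3x3 system (A^T A) x = A^T y.
    ata = [[sum(p[i] * p[j] for p in powers) for j in range(3)] for i in range(3)]
    aty = [sum(p[i] * yi for p, yi in zip(powers, y)) for i in range(3)]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    m = [row + [rhs] for row, rhs in zip(ata, aty)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    # Back substitution.
    coef = [0.0] * 3
    for i in reversed(range(3)):
        tail = sum(m[i][j] * coef[j] for j in range(i + 1, 3))
        coef[i] = (m[i][3] - tail) / m[i][i]
    return [sum(c * p for c, p in zip(coef, pw)) for pw in powers]

def detrend_and_normalize(y):
    """Remove the fitted quadratic drift, keep the run mean,
    then express the series relative to that mean (mean becomes 1.0)."""
    trend = fit_quadratic(y)
    mean = sum(y) / len(y)
    detrended = [yi - ti + mean for yi, ti in zip(y, trend)]
    return [v / mean for v in detrended]

# Synthetic series containing only a baseline plus quadratic drift.
n = 200
series = [100 + 3 * (i / n) + 0.5 * (i / n) ** 2 for i in range(n)]
cleaned = detrend_and_normalize(series)
print(max(abs(v - 1.0) for v in cleaned) < 1e-6)
```

Because the synthetic input contains nothing but drift, the cleaned series is flat at 1.0; task-related signal in real data would survive as deviations from that baseline.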

Each of the four functional runs was subsequently analyzed using the AFNI deconvolution method, allowing hemodynamic responses to the active and passive tasks (e.g., C‐SF vs. CL) within each run to be deconvolved from the baseline (rest) and directly compared to one another. The brain activation map of interest (e.g., C‐SF vs. CL) for each individual was constructed by computing Student's t‐statistic values for each voxel in the imaging volume based on a comparison of the voxel time‐course with the idealized hemodynamic response to the active and passive tasks within each run. After the functional datasets were transformed to 1‐mm isotropic voxels in Talairach coordinate space [Talairach and Tournoux,1988], the t‐statistic values were converted to z scores via the corresponding P values, and spatially smoothed by a 5.2 mm FWHM Gaussian filter to account for intersubject variation in brain anatomy and to enhance the signal‐to‐noise ratio. These voxel‐wise z scores were used to generate random effects maps for purposes of exploratory analysis. In the random effects analyses, task (SF, ST) and stimulus (Chinese, English) were fixed factors, and subject was the random factor. Significantly activated voxels (P < 0.005) located within a radius of 7.6 mm were grouped into clusters, with a minimum cluster size threshold corresponding to seven original resolution voxels (738 mm³). According to AFNI Monte Carlo simulation (AlphaSim), this clustering procedure yielded a false‐positive alpha level of less than 0.05.
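Two of the numbers above can be recovered from the acquisition parameters. The sketch below derives the cluster volume threshold from the original voxel dimensions (24 cm FOV, 64 × 64 matrix, 7.5 mm slices) and converts the smoothing kernel's FWHM to a Gaussian standard deviation using the standard relation FWHM = 2√(2 ln 2) σ; the constant names are illustrative.

```python
import math

FOV_MM, MATRIX, SLICE_MM = 240.0, 64, 7.5
MIN_CLUSTER_VOXELS = 7
FWHM_MM = 5.2

# Original-resolution voxel volume: in-plane size squared times slice thickness.
in_plane_mm = FOV_MM / MATRIX
voxel_mm3 = in_plane_mm ** 2 * SLICE_MM
min_cluster_mm3 = MIN_CLUSTER_VOXELS * voxel_mm3

# Standard relation between a Gaussian's FWHM and its standard deviation.
sigma_mm = FWHM_MM / (2 * math.sqrt(2 * math.log(2)))

print(in_plane_mm, round(min_cluster_mm3), round(sigma_mm, 2))
```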

A voxel‐wise conjunction analysis between Chinese (L1) and English (L2) stimuli was then carried out based on the resulting maps. For each of the two discrimination tasks (sentence focus, sentence type), the cluster maps of the Chinese and English stimuli were overlaid to construct a map that shows the overlapping activations between them.
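The overlay amounts to a voxel-wise logical AND of the two thresholded cluster maps. A minimal sketch, assuming each map has already been reduced to one boolean per voxel; the function name and labels are hypothetical.

```python
def conjunction(map_l1, map_l2):
    """Label each voxel: 'both' where the two thresholded maps overlap,
    'L1' or 'L2' where only one is active, None elsewhere."""
    labels = []
    for a, b in zip(map_l1, map_l2):
        if a and b:
            labels.append("both")
        elif a:
            labels.append("L1")
        elif b:
            labels.append("L2")
        else:
            labels.append(None)
    return labels

# Four toy voxels covering every combination (not data from the study).
chinese = [True, True, False, False]
english = [True, False, True, False]
labels = conjunction(chinese, english)
print(labels)
```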

ROI Analysis

Nine 6‐mm radius spherical regions of interest (ROIs) were chosen [Tong et al.,2005] that have been implicated in previous functional neuroimaging studies of, for example, phonological processing [Burton,2001], speech perception [Scott and Johnsrude,2003], semantic processing [Binder et al.,2000], attention [Shaywitz et al.,2001], and working memory [D'Esposito et al.,2000]. ROIs were symmetric in nonoverlapping frontal, temporal, and parietal regions of both hemispheres (Table II). All center coordinates were derived by averaging over peak location coordinates culled from previous relevant studies. Based on our random effects exploratory analysis, one additional ROI was drawn, centered about the peak activation in the anterior superior frontal sulcus (aSFS; Brodmann area [BA] 9), with the hypothesis that activation in this area would be more closely associated with increased demands of working memory in processing L2 stimuli.

Table II.

Center coordinates and extents of 6 mm radius spherical ROIs

| Region | BA | x, y, z | Description |
|---|---|---|---|
| Frontal | | | |
| mMFG | 9/46 | ±43, +32, +22 | |
| pMFG | 9/6 | ±43, +13, +31 | |
| pIFG | 44/6 | ±49, +13, +21 | Centered in pars opercularis, extending caudally into precentral gyrus |
| aINS | 13/45 | ±37, +21, +10 | |
| aSFS | 9 | ±32, +28, +33 | Centered in anterior SFS, projecting into bordering edges of MFG/SFG |
| Parietal | | | |
| IPS | 7/40 | ±35, −51, +43 | Centered in IPS, extending into both SPL and IPL |
| SMG | 40 | ±50, −36, +30 | Centered in anterior SMG, extending caudally into posterior SMG |
| Temporal | | | |
| mSTG | 22/42 | ±54, −19, +2 | Centered in the STG, extending rostrally into bordering edges of the Sylvian fissure and ventrally into STS and dorsal aspects of the MTG |
| pSTG | 22 | ±52, −39, +12 | Centered in the STG, extending rostrally into bordering edges of the Sylvian fissure and ventrally into dorsal aspects of the MTG |
| pMTG | 21 | ±56, −45, +1 | Centered in the posterior MTG, extending ventrally into the ITS |

Stereotaxic coordinates (mm) are derived from the human brain atlas of Talairach and Tournoux [1988]. Brodmann area (BA) designations are also based on this atlas.

a, anterior; m, middle; p, posterior; FO, frontal operculum; IFG, inferior frontal gyrus; INS, insula; IPS, intraparietal sulcus; IPL, inferior parietal lobule; ITS, inferior temporal sulcus; MFG, middle frontal gyrus; MTG, middle temporal gyrus; SFG, superior frontal gyrus; SFS, superior frontal sulcus; SPL, superior parietal lobule; STG, superior temporal gyrus; STS, superior temporal sulcus. Right hemisphere regions of interest (ROIs) were generated by reflecting the left hemisphere location across the midline.
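The ROI construction can be sketched geometrically: a voxel belongs to an ROI if it lies within 6 mm of the center, and the right-hemisphere ROI is the mirror image of the left. The sketch assumes the Talairach convention that negative x denotes the left hemisphere; the function names are hypothetical.

```python
import math

RADIUS_MM = 6.0

def mirror(center):
    """Reflect a coordinate across the midline (x -> -x)."""
    x, y, z = center
    return (-x, y, z)

def in_roi(voxel, center, radius=RADIUS_MM):
    """True if the voxel lies inside (or on) the sphere around the center."""
    return math.dist(voxel, center) <= radius

# SMG center from Table II; negative x is taken to be the left hemisphere.
smg_left = (-50, -36, 30)
smg_right = mirror(smg_left)

print(smg_right)
print(in_roi((-47, -36, 34), smg_left))   # 5 mm from the center
print(in_roi((-50, -36, 37), smg_left))   # 7 mm from the center
```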

For each ROI, mean z scores were calculated for each task (C‐SF, C‐ST, E‐SF, E‐ST) and hemisphere (LH, RH) for every subject. These z scores within each ROI were analyzed using repeated measures, mixed‐model analyses of variance (ANOVAs) (SAS, Cary, NC) to compare activation between tasks (SF, ST), hemispheres (LH, RH), and stimuli (Chinese, English).
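For a single within-subject factor with two levels (e.g., LH vs. RH), the repeated-measures F statistic equals the square of the paired t statistic, with degrees of freedom (1, n − 1). The sketch below illustrates only that simplified case; it is not the multi-factor mixed-model ANOVA run in SAS, and the z scores are invented for illustration.

```python
import math
from statistics import mean, stdev

def paired_f(left, right):
    """F(1, n-1) for a two-level within-subject factor (squared paired t)."""
    diffs = [l - r for l, r in zip(left, right)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)

# Hypothetical mean z scores for 10 subjects in one ROI (not data from the study).
lh = [1.9, 2.3, 1.5, 2.8, 2.0, 1.7, 2.4, 2.1, 1.6, 2.5]
rh = [1.4, 2.0, 1.6, 2.1, 1.5, 1.8, 1.9, 1.7, 1.2, 2.2]

f_value, df = paired_f(lh, rh)
print(df, round(f_value, 2))
```

With ten subjects the degrees of freedom come out as (1, 9), matching the form of the F statistics reported for single-task ROI comparisons.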

RESULTS

fMRI Random Effect Maps and ROI Analysis

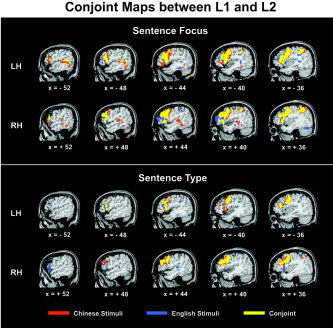

The whole‐brain cluster analysis revealed extensive overlapping activation between Chinese (L1) and English (L2) stimuli in frontal, parietal, and temporal areas (Fig. 2).

Figure 2.

Conjunction maps between Mandarin Chinese (L1) and English (L2) stimuli show the overlapping areas of activation between the two languages in sentence focus (top) and sentence type (bottom) tasks separately. Common activation is indicated by the overlap (yellow) between Chinese (red) and English (blue) stimuli in the functional activation maps. Overlapping areas of activation are observed for the two languages in frontal, parietal, and temporal areas. Significantly activated voxels (one‐tailed t = 3.25, P < 0.005) located within a radius of 7.6 mm were grouped into clusters, with a minimum cluster size threshold corresponding to 7 original resolution voxels (738 mm³).

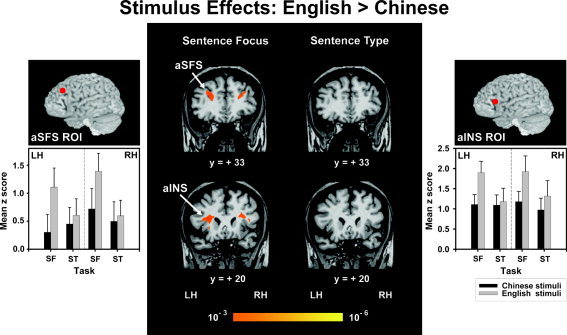

Stimuli: Chinese vs. English

In the sentence focus task, but not sentence type, C/E bilinguals exhibited significantly greater bilateral activity in the anterior insula (aINS) (F(1,9) = 12.15, P < 0.01) and aSFS ROIs (F(1,9) = 18.54, P < 0.005) when presented with English stimuli as compared to Chinese stimuli (Fig. 3). Random effects maps revealed that the aSFS region encompassed bordering edges of the middle frontal and superior frontal gyri (Fig. 3). None of the ROIs or any other region of the brain as revealed in the random effects maps showed significantly greater activation in response to the Chinese stimuli relative to the English stimuli.

Figure 3.

Random effects fMRI activation maps obtained from between‐stimulus comparisons of discrimination judgments of sentence focus (left panels) and sentence type (right panels) tasks relative to the control condition (passive listening). Coronal sections in standard stereotaxic space of Talairach and Tournoux [1988] are superimposed onto a representative brain anatomy (y = +20, +33). aINS, anterior insula; aSFS, anterior superior frontal sulcus; LH, left hemisphere; RH, right hemisphere. The orange‐yellow scale indicates those areas activated significantly more by the English stimuli relative to the Chinese stimuli. English stimuli elicited significantly greater activation in the aINS and aSFS bilaterally relative to the Chinese stimuli for the sentence focus task only. Comparisons of mean z scores between language stimuli (Chinese, English) per task (SF, ST) and hemisphere (LH, RH) are shown in bar charts for the aSFS ROI (left) and the aINS ROI (right). Above each bar chart the location of the ROI is indicated by a solid red circle projected to the lateral surface of a rendered brain. SF, sentence focus; ST, sentence type; ROI, region of interest. Error bars = ±1 SE.

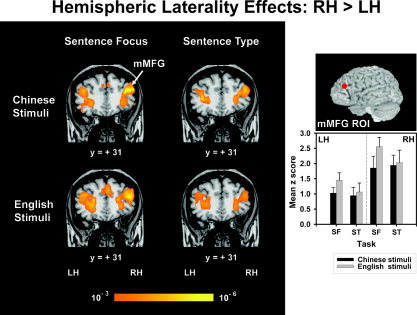

Hemispheres: LH vs. RH

When discriminating sentence focus in either Chinese or English stimuli, C/E bilinguals showed a significant leftward asymmetry in the supramarginal gyrus (SMG) ROI (F(1,9) = 5.95, P < 0.05) (Fig. 4, top). This anteroventral portion of the inferior parietal lobule is part of a continuous swath of activation that extends dorsally into the IPS and ventrally into the bordering edges of the lateral fissure. Only Chinese stimuli, however, elicited significant lateralization to the left posterior middle temporal gyrus (pMTG) ROI (F(1,9) = 5.69, P < 0.05) (Fig. 4, bottom). Random effects maps for these stimuli further showed that increased pMTG activity in the LH extends ventrally in a continuous swath into the inferior temporal and fusiform gyri and dorsally into the posterior superior temporal gyrus (Fig. 4, bottom; Chinese stimuli). In contrast, English stimuli elicited a peak focus in the left MTG about 15 mm posterior to the pMTG ROI in a temporo‐occipital area (BA 37; −52, −60, 3) (Fig. 4, bottom; English stimuli). This focus projects dorsally in a continuous swath into the posterior superior temporal gyrus. Stimuli notwithstanding, no laterality effects reached significance for the sentence type task in either the SMG or pMTG. In the frontal lobe, a rightward asymmetry was elicited in response to both Chinese and English stimuli in the middle portion of the middle frontal gyrus (mMFG) ROI (F(1,18) = 12.37, P < 0.01) across tasks (Fig. 5). Random effects maps revealed that the mMFG region is part of a continuous swath of activation extending medially to the inferior frontal gyrus, frontal operculum, and anterior insula, caudally to the precentral gyrus, and rostrally up to the bordering edges of frontopolar cortex (Figs. 4, 5). None of the other ROIs or any other regions, as revealed in the random effects maps, showed significant lateralization to the LH or RH for any other combination of stimulus (Chinese, English) and task (sentence focus, sentence type).

Figure 4.

Random effects fMRI activation maps obtained from within‐stimulus comparison of discrimination judgments of sentence focus relative to the control condition (passive listening) for the Chinese and English stimuli. Left and right sagittal sections in a standard stereotactic space are superimposed onto a representative brain anatomy (upper half: x = ±46; lower half: x = ±52). pMTG, posterior middle temporal gyrus; SMG, supramarginal gyrus. A leftward asymmetry is observed in the SMG (x = ±46) and pMTG (x = ±52). Comparisons of mean z scores between hemispheres (LH, RH) per task (SF, ST) and language stimulus (Chinese, English) are shown in bar charts for the SMG (upper right) and pMTG (lower right) ROIs. See also Figure 3 caption.

Figure 5.

Random effects fMRI activation maps obtained from within‐stimulus (Chinese, top; English, bottom) comparisons of discrimination judgments of sentence focus (left) and sentence type (right) relative to the control condition (passive listening). Coronal sections in a standard stereotactic space are superimposed onto a representative brain anatomy (y = +31). mMFG, middle portion of middle frontal gyrus. A rightward asymmetry is observed in the mMFG for both language stimuli regardless of task. A comparison of mean z scores between hemispheres (LH, RH) per tasks (SF, ST) and language stimuli (Chinese, English) is shown in a bar chart for the mMFG ROI. See also Figure 3 caption.

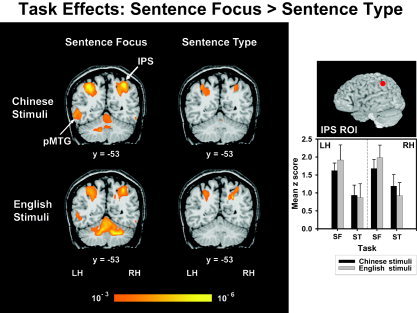

Tasks: sentence focus vs. sentence type

Four ROIs showed greater activity for the sentence focus task relative to sentence type. Regardless of stimuli, C/E bilinguals showed greater activity in the left pMTG ROI (F(1,9) = 7.29, P < 0.05) (Fig. 6; Fig. 4, bar chart) and bilaterally in the intraparietal sulcus (IPS) ROI (F(1,18) = 9.45, P < 0.05) (Fig. 6). A task effect was observed in the bilateral aINS (F(1,9) = 8.20, P < 0.05) and aSFS (F(1,9) = 5.36, P < 0.05) ROIs for English stimuli only (Fig. 3, bar charts). No other regions in the random effects maps showed a significant task effect for any other combination of language stimuli and hemisphere.

Figure 6.

Random effects fMRI activation maps obtained from within‐stimulus (Chinese, top; English, bottom) comparisons of discrimination judgments of sentence focus (left) and sentence type (right) relative to the control condition (passive listening). Coronal sections in a standard stereotactic space are superimposed onto a representative brain anatomy (y = −53). IPS, intraparietal sulcus; pMTG, posterior middle temporal gyrus. Increased activity is observed in the left pMTG and bilateral IPS in the sentence focus task relative to sentence type for both language stimuli. A comparison of mean z scores between tasks (SF, ST) per hemisphere (LH, RH) and language stimulus (Chinese, English) is shown in a bar chart for the IPS ROI. See Figure 4 for a bar chart comparing mean z scores between tasks in the pMTG per hemisphere (LH, RH) and language stimulus (Chinese, English). See also Figure 3 caption.

fMRI Task Performance

Repeated measures ANOVAs were conducted on response accuracy, reaction time, and self‐ratings of task difficulty (Table III), with stimulus (Chinese, English) as the between‐stimulus factor and task (SF, ST) as the within‐stimulus factor. When performing the sentence focus task in English as compared to Chinese, C/E bilinguals exhibited longer reaction time (t(9) = 5.27, P < 0.001), lower response accuracy (t(9) = 4.29, P < 0.005), and more subjective difficulty (t(9) = 3.31, P < 0.01). When performing the sentence type task, on the other hand, there were no significant differences in behavioral measures of task performance between the English and Chinese stimuli. With regard to the English stimuli, C/E bilinguals' performance indicated greater difficulty on the sentence focus task as compared to the sentence type task (reaction time: P < 0.05; accuracy: P < 0.01; subjective rating of task difficulty: P < 0.001).

Table III.

Behavioral data

| Stimulus and task | Accuracy, % | Reaction time, ms | Self‐rating* |
|---|---|---|---|
| Chinese | | | |
| SF | 95.8 (2.9) | 552.4 (143.3) | 2.2 (1.0) |
| ST | 93.1 (5.4) | 595.2 (100.2) | 1.7 (0.9) |
| English | | | |
| SF | 86.2 (7.1) | 670.8 (174.2) | 3.7 (1.1) |
| ST | 95.4 (4.9) | 559 (84.4) | 1.5 (0.7) |

Values are expressed as mean and standard deviation (in parentheses).

*Subjective self‐ratings of task difficulty were obtained offline after the scanning session. Scalar units are from 1 to 5 (1 = easy; 3 = medium; 5 = hard) for the sentence focus and sentence type tasks.

SF, sentence focus; ST, sentence type.
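The pairwise comparisons reported above carry 9 degrees of freedom, which is consistent with paired comparisons across ten subjects. The sketch below shows how such a paired t statistic is computed. The function name `paired_t` and the reaction times are hypothetical; they are invented for illustration and are not the study's data, so the printed value is not meant to match the reported statistics.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return the paired t statistic for a - b and its degrees of freedom."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    n = len(diffs)
    return mean(diffs) / (sd / sqrt(n)), n - 1


# Hypothetical reaction times (ms) for ten subjects, sentence focus task.
rt_english = [700, 650, 820, 610, 590, 760, 680, 640, 720, 600]
rt_chinese = [560, 540, 700, 520, 500, 610, 590, 530, 580, 470]

t_stat, df = paired_t(rt_english, rt_chinese)
print(f"t({df}) = {t_stat:.2f}")
```

With ten subjects the degrees of freedom are 9, matching the form of the statistics reported in the text.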

DISCUSSION

The major findings of this study demonstrate that even for late‐onset C/E bilinguals with medium L2 proficiency, similar brain regions are recruited in the processing of sentence‐level linguistic prosody in L2 as in L1. Conjunction maps reveal extensive swaths of overlapping activation between L1 and L2 stimuli in frontal, parietal, and temporal areas regardless of task. The neural networks in both the LH and RH are comparable to those of previous studies of Chinese speech prosody [Gandour,2006; Gandour et al.,2004; Tong et al.,2005]. When processing L2, these neural substrates underlying speech prosody perception are modulated by increasing activation of common regions to L1 and L2, or by recruiting segregated regions in which activation occurs in L2 only. In either case, differential activation of particular brain regions can be attributed to C/E bilinguals' effort to meet increased computational demands caused by lower L2 proficiency [Chee et al.,2001; Hasegawa et al.,2002; Xue et al.,2004]. In particular, their processing of the sentence focus task in L2 requires more extensive cortical resources than in L1 from task‐dependent mediational neural systems, i.e., attention and working memory, to compensate for their difficulty in processing L2 phonetic cues associated with sentence focus [Callan et al.,2004; Wang et al.,2003].

Between‐Language Stimulus Effects

As reflected in behavioral measures (Table III), discrimination of sentence focus in English (L2), but not sentence type, is more difficult than in Chinese (L1) for C/E bilinguals. Greater activation is observed bilaterally in anterior insular and anterior middle frontal regions when subjects perform the sentence focus task in English as compared to Chinese. This stimulus‐specific, task‐restricted effect in L2 processing is presumably due to their late age of acquisition, medium level of attained proficiency, and modest amount of language exposure [Chee et al.,2001]. We argue that subjects' performance is not equal between these two sentence prosody tasks because of language‐specific differences in the way focus is signaled phonetically [Xu,2006]. These subtle phonetic divergences between L1 and L2 (e.g., sentence‐initial focus) lead to increased computational demands in attention and working memory for C/E bilinguals with lower L2 proficiency when making sentence focus judgments.

Of interest to the present study, these frontal areas (aINS, aSFS) have been implicated in attention [Shaywitz et al.,2001] and working memory [Smith and Jonides,1999] processes. Both regions have been activated consistently throughout a series of studies on the perception of Chinese speech prosody [Gandour,2006, and references therein]. The anterior superior frontal sulcus is part of an anterior prefrontal cortical area that includes the middle frontal gyrus. This area has been implicated in the control of attention and maintenance of information in working memory [Corbetta and Shulman,2002; MacDonald et al.,2000; Shaywitz et al.,2001]. In this study, the speeded‐response, paired comparison discrimination paradigm makes considerable demands on working memory. Because of their lower L2 proficiency, subjects experience more difficulty in directing their attention to sequences of two noncontiguous words in a sentence, holding them in working memory to make a paired comparison with another sentence, and selecting the appropriate response.

The anterior insula has been shown to be involved in tasks engaging covert articulatory rehearsal [Paulesu et al.,1993] and decision‐making [Binder et al.,2004]. In studies using degraded speech stimuli, its increased bilateral activation has also been interpreted to reflect listeners' unsuccessful effort in extracting syntactic and lexical‐semantic information [Meyer et al.,2002,2003]. The insular region in the LH similarly shows increased activation in covert production of sentence focus [Loevenbruck et al.,2005]. Converging evidence from the lesion deficit literature shows that it is involved in coordinating complex articulatory movements [Dronkers,1996; Dronkers et al.,2004a].

Activation of the left anterior insula has been demonstrated to vary as a function of both working memory load and L2 proficiency when processing an unfamiliar foreign language (French), i.e., greater activation in equal (proficient in English and Chinese) than in unequal bilinguals (proficient in English but not Chinese) [Chee et al.,2004]. Chee et al. [2004] suggest that differential activation of the insular region may be due to a more or less efficient processing strategy that correlates with L2 proficiency. Unlike the experiment of Chee et al. [2004], we are comparing the same group of unequal bilinguals when performing the same tasks (sentence focus, sentence type) in their first (Mandarin) and second (English) languages. Both L1 and L2 are familiar to the C/E bilinguals; L2 proficiency is homogeneous across subjects. In this experiment, it appears that C/E unequal bilinguals employ similar processing strategies in L1 and L2. We suggest that their lower proficiency in L2 results in an increased working memory load for processing the sentence focus task in L2. Besides proficiency level, we must also take into account task demands in order to give a fuller account of differential activation in the insular region of bilinguals.

In bilingual processing, the left anterior insula plays an essential role in phonological processing, speech motor planning, and execution [Perani,2005]. Following Perani and Abutalebi [2005], we consider it to be part of an action‐recognition mirror system that enables perception of speech sounds. We further speculate that late‐onset unequal bilinguals might have to rely on functional differences in processes that have not been fully developed in the mirror system. This system is believed to facilitate temporal orientation and sequence organization. Both functions are inherent to the sentence focus task. Accordingly, unequal bilinguals have more difficulty processing sentence focus in L2 than L1.

In cross‐language, between‐group comparisons of perception of these same two prosodic contrasts in Chinese [Tong et al.,2005], the anterior insula and adjacent fronto‐opercular region showed stronger activation bilaterally in a group of English monolinguals who have no familiarity with Chinese as compared to a group of native Chinese speakers regardless of prosodic task. Behaviorally, both sentence focus and type were more difficult for English than for Chinese listeners. We infer that increased activation in this area is likely to reflect English listeners' difficulty with covert articulatory rehearsal of sounds from an unfamiliar language [Dronkers,1996; Dronkers et al.,2004a; Paulesu et al.,1993]. In the current study, C/E bilinguals are very familiar with English, but have yet to achieve a high level of proficiency, especially with difficult L2 phonetic contrasts. Of course, degrees of familiarity with a nonnative language may range from none to native‐like fluency. Interestingly, we observe increased insular activity in C/E bilinguals with lower L2 proficiency in the sentence focus task only, but in both tasks for English monolinguals without any knowledge of Chinese. These combined findings suggest that activity in the anterior insula is graded in response to task difficulty, and is especially sensitive to design features (e.g., phonetic) of a particular language that are at variance with the listeners' native language.

These experimental tasks minimize language interference as a potentially confounding variable. In our companion study [Tong et al.,2005], C/E bilinguals were placed in a monolingual environment as they judged sentence focus and sentence type in L1 (Mandarin) stimuli. In this study, the same C/E bilinguals are placed in a monolingual environment and asked to judge the same aspects of linguistic prosody in L2 (English) stimuli, thus enabling us to make a direct comparison of C/E bilinguals' processing of sentence focus/type in Chinese and English. The strongly monolingual environment minimizes interference from one language while processing material in the other [Rodriguez‐Fornells et al.,2005]. It is therefore unlikely that any of the observed differences between L1 and L2 processing of sentence prosody can be attributed to language interference internal to the tasks themselves.

Within‐Language Laterality Effects

Stronger activity is observed in anteroventral aspects of the left supramarginal gyrus (BA 40) in the sentence focus task for both L1 and L2 stimuli. This heightened activation in the left SMG is compatible with its putative role as an interface subsystem in an auditory‐motor integration circuit in speech perception [Hickok and Poeppel,2004]. Its role in the learning of auditory‐articulatory mappings has recently been revealed in late‐onset, low‐proficiency Japanese‐English learners of a difficult L2 segmental phonetic contrast (/r‐l/) [Callan et al.,2003,2004]. Our finding generalizes its role to the learning of suprasegmental as well as segmental phonetic contrasts. The leftward asymmetry across stimuli emphasizes its involvement in perceptual‐motor mapping regardless of whether it is the speaker's first or second language. Accordingly, if the speaker has no knowledge or experience with the language, then one does not expect to see a left laterality effect in this region when performing speech prosody tasks [Gandour et al.,2004; Tong et al.,2005]. As is true of other brain regions mediating complex cognitive functions, the inferior parietal region does not act in isolation from other areas of the neural circuitry underlying speech perception. In this study, its level of activation must necessarily be embedded within the context of task demands [Gandour et al.,2004]. Its coactivation with the left posterior middle temporal gyrus for both L1 and L2 stimuli (see Within‐Language Task Effects, below) is consistent with its putative role(s) in verbal working memory [Chein et al.,2003; Paulesu et al.,1993; Ravizza et al.,2004]. Further research is warranted to determine the precise effects of task demands on functions of this region, but there can be no doubt that it forms a crucial part of a pathway that processes the sounds of spoken language.

In the posterior middle temporal gyrus (BA 21), activity is lateralized to the LH in the sentence focus task for the Chinese stimuli only. Its enhanced activation in the LH is consistent with the view that there is a ventral processing stream that radiates posteriorly from the auditory cortex in speech perception [Binder et al.,2000; Hickok and Poeppel,2000, 2004], and that more remote areas of the temporal lobe may be recruited for higher‐order processing, especially in computing the meaning of spoken sentences [Davis and Johnsrude,2003; Rodd et al.,2005; Scott and Johnsrude,2003]. When processing Chinese stimuli, it has also been shown that native Chinese speakers exhibit greater activity in this LH region relative to monolingual English speakers for either task [Tong et al.,2005]. Because of their lack of familiarity with Chinese, no left‐sided laterality effects are observed in this region or any other region of the LH for the monolingual English group. Interestingly, English stimuli elicit a laterality effect for C/E bilinguals in a more posterior region of the left middle temporal gyrus (BA 37) that projects caudally into bordering areas of the occipital lobe. This segregated activation associated with English stimuli may be related to the processing difficulty experienced by these C/E listeners who have no control over the rate at which they must process the spoken sentences. When performing the sentence focus task in a second language with lower proficiency, it appears that they adopt visual scanning strategies to compensate for their difficulty in processing this particular phonetic contrast under a heavy memory load [Benson et al.,2001] (see Within‐Language Task Effects, below).

The rightward asymmetry in the mid‐portion of the middle frontal gyrus (BA 9/46) across tasks in response to both L1 and L2 stimuli implicates more general auditory attention and working memory processes associated with lower‐level aspects of pitch perception independent of design characteristics of a particular language. The mid‐portion of dorsolateral prefrontal cortex has been shown to be engaged in controlling attention to tasks and maintaining information in working memory [Corbetta and Shulman,2002; MacDonald et al.,2000]. Auditory selective attention tasks have been shown to elicit increased activity in right dorsolateral prefrontal cortex [Zatorre et al.,1999]. Its activation has also been lateralized to the RH regardless of the level of prosodic representation (tone/syllable, intonation/sentence) [Gandour et al.,2004]. Converging data from epilepsy patients show deficits in retention of pitch information after unilateral excisions of right prefrontal cortex [Zatorre and Samson,1991]. This area is not domain‐specific since it is similarly recruited for extraction and maintenance of pitch information in processing music [Koelsch et al.,2002; Zatorre et al.,1994].

Within‐Language Task Effects

Greater activity is observed bilaterally in the intraparietal sulcus (IPS; BA 40/7) in the sentence focus task relative to type regardless of stimuli. Activation in the IPS bilaterally appears to reflect domain‐general executive processes associated with tasks that require participants to voluntarily orient and maintain attention to a particular location or feature [Corbetta et al.,2000; Corbetta and Shulman,2002] or actively retain information in working memory without regard for its phonological significance [Ravizza et al.,2004]. Its activation is consistent with a dorsal frontoparietal executive system that is involved in attention or working memory tasks employing a wide variety of verbal, spatial, and visual stimuli [Chein et al.,2003].

In the temporal lobe, the sentence focus task elicited stronger responses than the sentence type task in the left pMTG regardless of stimuli. The left posterior middle temporal gyrus has been hypothesized to be part of an auditory‐semantic integration circuit in speech perception, a ventral processing stream that further extends to parts of the inferior temporal and fusiform gyri [Hickok and Poeppel,2004]. This region's involvement in sentence comprehension, especially at the word level, is supported by voxel‐based lesion‐symptom mapping analysis [Dronkers et al.,2004b]. It has recently been demonstrated that this region and surrounding posterior temporal cortex are involved in computing the meaning of spoken sentences [Rodd et al.,2005]. With regard to bilingualism, brain imaging studies have further shown that this region is preferentially activated by attention to semantic relations in monolinguals [McDermott et al.,2003] or multilinguals [Vingerhoets et al.,2003].

Recent hierarchical models of bilingual memory consist of one common conceptual store and two separate lexical stores, one for each language, plus weighted bidirectional links between lexical nodes and conceptual nodes [see French and Jacquet,2004, for review]. Neural mechanisms in task demands clearly impact bilingual memory [see Francis,1999, for review]. The focus task requires subjects to direct their attention explicitly to phonetic properties of the sentence‐initial and ‐final words and make a comparison judgment about which word carries the contrastive stress. The fact that the stressed word is an object of semantic focus entails a link between prosodic and semantic representations. Although there was no difference between L1 and L2 in the pMTG ROI, we do observe recruitment of additional areas in temporo‐occipital regions for L2 only (Fig. 4). This additional activation in L2 as compared to L1 suggests that the prosodic‐semantic/conceptual links may be somewhat weaker in L2. In the perception of a difficult L2 phonetic contrast, unequal bilinguals are expected to use existing and/or additional brain regions involved with auditory/conceptual‐semantic processes to a greater extent in the less proficient language [Callan et al.,2004; Hasegawa et al.,2002]. Thus, bilingual processing is dynamic and sensitive to task demands, as is reflected in listeners' ability to adapt through recruiting nearby cortical resources. Using event‐related potentials as a measure of lexical‐conceptual links, the magnitude and latency of N400 has similarly been demonstrated to vary depending on the relative dominance of the language [Alvarez et al.,2003]. The processing of a lower proficiency L2 apparently requires a higher‐order visual scanning strategy related to computational demands of working memory [Kim et al.,2002].

CONCLUSIONS

Phonetic discrimination of functionally equivalent prosodic contrasts in L1 and L2 by unequal C/E bilinguals reveals essentially a unitary neural system that can adapt to stimulus‐specific and task‐specific demands for processing a lower proficiency L2. Subtle differences in the way the same prosodic function at the sentence level is manifested phonetically in L2 can induce greater activation bilaterally in frontal areas implicated in motor aspects of speech and verbal working memory, and greater activation in left temporo‐occipital regions that are involved in computing the meaning of spoken sentences. In bilingual and monolingual brains alike, speech prosody perception and comprehension involve a dynamic interplay among widely distributed regions, not only within a single hemisphere but also between the two hemispheres [Friederici and Alter,2004].

REFERENCES

- Alvarez RP, Holcomb PJ, Grainger J (2003): Accessing word meaning in two languages: an event‐related brain potential study of beginning bilinguals. Brain Lang 87: 290–304.

- Benson R, Whalen D, Richardson M, Swainson B, Clark V, Lai S, Liberman AM (2001): Parametrically dissociating speech and nonspeech perception in the brain using fMRI. Brain Lang 78: 364–396.

- Binder J, Frost J, Hammeke T, Bellgowan P, Springer J, Kaufman J, Possing E (2000): Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex 10: 512–528.

- Binder JR, Liebenthal E, Possing ET, Medler DA, Ward BD (2004): Neural correlates of sensory and decision processes in auditory object identification. Nat Neurosci 7: 295–301.

- Briellmann RS, Saling MM, Connell AB, Waites AB, Abbott DF, Jackson GD (2004): A high‐field functional MRI study of quadri‐lingual subjects. Brain Lang 89: 531–542.

- Burton M (2001): The role of the inferior frontal cortex in phonological processing. Cogn Sci 25: 695–709.

- Callan DE, Tajima K, Callan AM, Kubo R, Masaki S, Akahane‐Yamada R (2003): Learning‐induced neural plasticity associated with improved identification performance after training of a difficult second‐language phonetic contrast. Neuroimage 19: 113–124.

- Callan DE, Jones JA, Callan AM, Akahane‐Yamada R (2004): Phonetic perceptual identification by native‐ and second‐language speakers differentially activates brain regions involved with acoustic phonetic processing and those involved with articulatory‐auditory/orosensory internal models. Neuroimage 22: 1182–1194.

- Chee MW, Caplan D, Soon CS, Sriram N, Tan EW, Thiel T, Weekes B (1999a): Processing of visually presented sentences in Mandarin and English studied with fMRI. Neuron 23: 127–137.

- Chee MW, Tan EW, Thiel T (1999b): Mandarin and English single word processing studied with functional magnetic resonance imaging. J Neurosci 19: 3050–3056.

- Chee MW, Hon N, Lee HL, Soon CS (2001): Relative language proficiency modulates BOLD signal change when bilinguals perform semantic judgments. Neuroimage 13: 1155–1163.

- Chee MW, Soon CS, Lee HL (2003): Common and segregated neuronal networks for different languages revealed using functional magnetic resonance adaptation. J Cogn Neurosci 15: 85–97.

- Chee MW, Soon CS, Lee HL, Pallier C (2004): Left insula activation: a marker for language attainment in bilinguals. Proc Natl Acad Sci U S A 101: 15265–15270.

- Chein JM, Ravizza SM, Fiez JA (2003): Using neuroimaging to evaluate models of working memory and their implications for language processing. J Neuroling 16: 315–339.

- Cooper WE, Sorensen J (1981): Fundamental Frequency in Sentence Production. New York: Springer.

- Corbetta M, Shulman GL (2002): Control of goal‐directed and stimulus‐driven attention in the brain. Nat Rev Neurosci 3: 201–215.

- Corbetta M, Kincade JM, Ollinger JM, McAvoy MP, Shulman GL (2000): Voluntary orienting is dissociated from target detection in human posterior parietal cortex. Nat Neurosci 3: 292–297.

- Cox RW (1996): AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29: 162–173.

- D'Esposito M, Postle BR, Rypma B (2000): Prefrontal cortical contributions to working memory: evidence from event‐related fMRI studies. Exp Brain Res 133: 3–11.

- Davis MH, Johnsrude IS (2003): Hierarchical processing in spoken language comprehension. J Neurosci 23: 3423–3431.

- Dronkers NF (1996): A new brain region for coordinating speech articulation. Nature 384: 159–161.

- Dronkers N, Ogar J, Willock S, Wilkins DP (2004a): Confirming the role of the insula in coordinating complex but not simple articulatory movements. Brain Lang 91: 23–24.

- Dronkers NF, Wilkins DP, Van Valin RD Jr, Redfern BB, Jaeger JJ (2004b): Lesion analysis of the brain areas involved in language comprehension. Cognition 92: 145–177.

- Eady SJ, Cooper WE (1986): Speech intonation and focus location in matched statements and questions. J Acoust Soc Am 80: 402–415.

- Eady SJ, Cooper WE, Klouda GV, Mueller PR, Lotts DW (1986): Acoustical characteristics of sentential focus: narrow vs. broad and single vs. dual focus environments. Lang Speech 29: 233–251.

- Fabbro F (1999): The Neurolinguistics of Bilingualism. Hove, UK: Psychology Press.

- FitzGerald DB, Cosgrove GR, Ronner S, Jiang H, Buchbinder BR, Belliveau JW, Rosen BR, Benson RR (1997): Location of language in the cortex: a comparison between functional MR imaging and electrocortical stimulation. Am J Neuroradiol 18: 1529–1539.

- Francis WS (1999): Cognitive integration of language and memory in bilinguals: Semantic representation. Psychol Bull 125: 193–222.

- French RM, Jacquet M (2004): Understanding bilingual memory: models and data. Trends Cogn Sci 8: 87.

- Friederici AD, Alter K (2004): Lateralization of auditory language functions: a dynamic dual pathway model. Brain Lang 89: 267–276.

- Gandour J (2006): Brain mapping of Chinese speech prosody. In: Li P, Tan LH, Bates E, Tzeng O, editors. Handbook of East Asian Psycholinguistics: Chinese. New York: Cambridge University Press.

- Gandour J, Dzemidzic M, Wong D, Lowe M, Tong Y, Hsieh L, Satthamnuwong N, Lurito J (2003): Temporal integration of speech prosody is shaped by language experience: an fMRI study. Brain Lang 84: 318–336.

- Gandour J, Tong Y, Wong D, Talavage T, Dzemidzic M, Xu Y, Li X, Lowe M (2004): Hemispheric roles in the perception of speech prosody. Neuroimage 23: 344–357.

- Hasegawa M, Carpenter PA, Just MA (2002): An fMRI study of bilingual sentence comprehension and workload. Neuroimage 15: 647–660.

- Hickok G, Poeppel D (2000): Towards a functional neuroanatomy of speech perception. Trends Cogn Sci 4: 131–138.

- Hickok G, Poeppel D (2004): Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition 92: 67–99.

- Illes J, Francis WS, Desmond JE, Gabrieli JD, Glover GH, Poldrack R, Lee CJ, Wagner AD (1999): Convergent cortical representation of semantic processing in bilinguals. Brain Lang 70: 347–363.

- Jin J (1996): An acoustic study of sentence stress in Mandarin Chinese [Dissertation]. Columbus: Ohio State University.

- Kim KH, Relkin NR, Lee KM, Hirsch J (1997): Distinct cortical areas associated with native and second languages. Nature 388: 171–174.

- Kim JJ, Kim MS, Lee JS, Lee DS, Lee MC, Kwon JS (2002): Dissociation of working memory processing associated with native and second languages: PET investigation. Neuroimage 15: 879–891.

- Klein D, Milner B, Zatorre RJ, Zhao V, Nikelski J (1999): Cerebral organization in bilinguals: a PET study of Chinese‐English verb generation. Neuroreport 10: 2841–2846.

- Koelsch S, Gunter TC, von Cramon DY, Zysset S, Lohmann G, Friederici AD (2002): Bach speaks: a cortical “language‐network” serves the processing of music. Neuroimage 17: 956–966.

- Liu F, Xu Y (2004): Asking questions with focus. J Acoust Soc Am 115: 2397.

- Loevenbruck H, Baciu M, Segebarth C, Abry C (2005): The left inferior frontal gyrus under focus: an fMRI study of the production of deixis via syntactic extraction and prosodic focus. J Neuroling 18: 237–258.

- Lucas TH 2nd, McKhann GM 2nd, Ojemann GA (2004): Functional separation of languages in the bilingual brain: a comparison of electrical stimulation language mapping in 25 bilingual patients and 117 monolingual control patients. J Neurosurg 101: 449–457.

- MacDonald AW 3rd, Cohen JD, Stenger VA, Carter CS (2000): Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science 288: 1835–1838.

- Mack M (2003): The phonetic systems of bilinguals. In: Banich MT, Mack M, editors. Mind, Brain, and Language: Multidisciplinary Perspectives. Mahwah, NJ: Lawrence Erlbaum; p 309–349.

- Marian V, Spivey M, Hirsch J (2003): Shared and separate systems in bilingual language processing: converging evidence from eyetracking and brain imaging. Brain Lang 86: 70–82.

- McDermott KB, Petersen SE, Watson JM, Ojemann JG (2003): A procedure for identifying regions preferentially activated by attention to semantic and phonological relations using functional magnetic resonance imaging. Neuropsychologia 41: 293–303.

- Meyer M, Alter K, Friederici AD, Lohmann G, von Cramon DY (2002): FMRI reveals brain regions mediating slow prosodic modulations in spoken sentences. Hum Brain Mapp 17: 73–88.

- Meyer M, Alter K, Friederici A (2003): Functional MR imaging exposes differential brain responses to syntax and prosody during auditory sentence comprehension. J Neuroling 16: 277–300.

- Ojemann GA, Whitaker HA (1978): The bilingual brain. Arch Neurol 35: 409–412.

- Ojemann GA, Ojemann JG, Lettich E, Berger MS (1989): Cortical language localization in left, dominant hemisphere: an electrical stimulation mapping investigation in 117 patients. J Neurosurg 71: 316–326.

- Oldfield RC (1971): The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9: 97–113.

- Paradis M, editor (1995): Aspects of Bilingual Aphasia. Oxford: Pergamon.

- Paradis M (1998): Acquired aphasia in bilingual speakers. In: Sarno MT, editor. Acquired Aphasia, 3rd ed. New York: Academic Press; p 531–550.

- Paradis M (2001): Bilingual and polyglot aphasia. In: Berndt RS, Boller F, editors. Handbook of Neuropsychology: Language and Aphasia, 2nd ed. Oxford: Elsevier Health Sciences; p 69–91.

- Paulesu E, Frith CD, Frackowiak RS (1993): The neural correlates of the verbal component of working memory. Nature 362: 342–345.

- Pell M (2001): Influence of emotion and focus location on prosody in matched statements and questions. J Acoust Soc Am 109: 1668–1680.

- Perani D (2005): The neural basis of language talent in bilinguals. Trends Cogn Sci 9: 211.

- Perani D, Abutalebi J (2005): The neural basis of first and second language processing. Curr Opin Neurobiol 15: 202–206.

- Perani D, Paulesu E, Galles NS, Dupoux E, Dehaene S, Bettinardi V, Cappa SF, Fazio F, Mehler J (1998): The bilingual brain. Proficiency and age of acquisition of the second language. Brain 121: 1841–1852.

- Perani D, Abutalebi J, Paulesu E, Brambati S, Scifo P, Cappa SF, Fazio F (2003): The role of age of acquisition and language usage in early, high‐proficient bilinguals: an fMRI study during verbal fluency. Hum Brain Mapp 19: 170–182.

- Pu Y, Liu HL, Spinks JA, Mahankali S, Xiong J, Feng CM, Tan LH, Fox PT, Gao JH (2001): Cerebral hemodynamic response in Chinese (first) and English (second) language processing revealed by event‐related functional MRI. Magn Reson Imaging 19: 643–647.

- Rapport RL, Tan CT, Whitaker HA (1983): Language function and dysfunction among Chinese‐ and English‐speaking polyglots: cortical stimulation, Wada testing, and clinical studies. Brain Lang 18: 342–366.

- Ravizza SM, Delgado MR, Chein JM, Becker JT, Fiez JA (2004): Functional dissociations within the inferior parietal cortex in verbal working memory. Neuroimage 22: 562–573.

- Rodd JM, Davis MH, Johnsrude IS (2005): The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cereb Cortex 15: 1261–1269.

- Rodriguez‐Fornells A, van der Lugt A, Rotte M, Britti B, Heinze H‐J, Munte TF (2005): Second language interferes with word production in fluent bilinguals: brain potential and functional imaging evidence. J Cogn Neurosci 17: 422–433.

- Roux FE, Lubrano V, Lauwers‐Cances V, Tremoulet M, Mascott CR, Demonet JF (2004): Intra‐operative mapping of cortical areas involved in reading in mono‐ and bilingual patients. Brain 127: 1796–1810.

- Scott SK, Johnsrude IS (2003): The neuroanatomical and functional organization of speech perception. Trends Neurosci 26: 100–107.

- Shaywitz BA, Shaywitz SE, Pugh KR, Fulbright RK, Skudlarski P, Mencl WE, Constable RT, Marchione KE, Fletcher JM, Klorman R (2001): The functional neural architecture of components of attention in language‐processing tasks. Neuroimage 13: 601–612.

- Shen XN (1990): The Prosody of Mandarin Chinese. Berkeley: University of California Press.

- Smith EE, Jonides J (1999): Storage and executive processes in the frontal lobes. Science 283: 1657–1661.

- Talairach J, Tournoux P (1988): Co‐planar Stereotaxic Atlas of the Human Brain: 3‐dimensional Proportional System: An Approach to Cerebral Imaging. New York: Thieme Medical.

- Tan LH, Spinks JA, Feng CM, Siok WT, Perfetti CA, Xiong J, Fox PT, Gao JH (2003): Neural systems of second language reading are shaped by native language. Hum Brain Mapp 18: 158–166.

- Tong Y, Gandour J, Talavage T, Wong D, Dzemidzic M, Xu Y, Li X, Lowe M (2005): Neural circuitry underlying the perception of sentence‐level linguistic prosody. Neuroimage 28: 417–428.

- Vingerhoets G, Van Borsel J, Tesink C, van den Noort M, Deblaere K, Seurinck R, Vandemaele P, Achten E (2003): Multilingualism: an fMRI study. Neuroimage 20: 2181–2196.

- Walker JA, Quinones‐Hinojosa A, Berger MS (2004): Intraoperative speech mapping in 17 bilingual patients undergoing resection of a mass lesion. Neurosurgery 54: 113–117; discussion 118.

- Wang Y, Sereno JA, Jongman A, Hirsch J (2003): fMRI evidence for cortical modification during learning of Mandarin lexical tone. J Cogn Neurosci 15: 1019–1027.

- Wartenburger I, Heekeren HR, Abutalebi J, Cappa SF, Villringer A, Perani D (2003): Early setting of grammatical processing in the bilingual brain. Neuron 37: 159–170.

- Weber‐Fox CM, Neville HJ (1996): Maturational constraints on functional specializations for language processing: ERP and behavioral evidence in bilingual speakers. J Cogn Neurosci 8: 231–256.

- Xu Y (1999): Effects of tone and focus on the formation and alignment of f0 contours. J Phonetics 27: 55–105.

- Xu Y (2006): Tones in connected discourse. In: Brown K, editor. Encyclopedia of Language and Linguistics, 2nd ed. Oxford: Elsevier.

- Xu Y, Xu CX (2005): Phonetic realization of focus in English declarative intonation. J Phonetics 33: 159–197.

- Xue G, Dong Q, Jin Z, Chen C (2004): Mapping of verbal working memory in nonfluent Chinese‐English bilinguals with functional MRI. Neuroimage 22: 1–10.

- Zatorre R, Samson S (1991): Role of the right temporal neocortex in retention of pitch in auditory short‐term memory. Brain 114: 2403–2417.

- Zatorre R, Evans A, Meyer E, Gjedde A (1992): Lateralization of phonetic and pitch discrimination in speech processing. Science 256: 846–849.

- Zatorre RJ, Evans AC, Meyer E (1994): Neural mechanisms underlying melodic perception and memory for pitch. J Neurosci 14: 1908–1919.

- Zatorre RJ, Mondor TA, Evans AC (1999): Auditory attention to space and frequency activates similar cerebral systems. Neuroimage 10: 544–554.