Abstract

We studied the effects of sound presentation rate and attention on auditory supratemporal cortex (STC) activation in 12 healthy adults using functional magnetic resonance imaging (fMRI) at 3 T. The sounds (200 ms in duration) were presented at steady rates of 0.5, 1, 1.5, 2.5, or 4 Hz while subjects either focused their attention on the sounds or ignored the sounds and attended to visual stimuli presented at a mean rate of 1 Hz. Consistent with previous observations, we found that both an increase in stimulation rate and attention to sounds enhanced activity in STC bilaterally. Further, we observed larger attention effects with higher stimulation rates. This interaction of attention and presentation rate has not been reported previously. In conclusion, our results show both rate‐dependent and attention‐related modulations of STC, indicating that both factors should be controlled, or at least addressed, in fMRI studies of auditory processing. Hum Brain Mapping, 2005. © 2005 Wiley‐Liss, Inc.

Keywords: auditory cortex, fMRI, attention, auditory processing, sound presentation rate

INTRODUCTION

In a pioneering functional magnetic resonance imaging (fMRI) study, Binder et al. [1994] showed that auditory supratemporal cortex (STC) activation increases monotonically when the sound presentation rate is increased from 0.17 to 2.50 Hz. Since then, this finding has been replicated several times [Dhankhar et al.,1997; Harms and Melcher,2002; Mechelli et al.,2000; Rees et al.,1997; Tanaka et al.,2000]. Typically, the effect of sound presentation rate has been studied using tasks in which the subjects are either instructed to attend to the sounds or are not required to perform any specific task during scanning. With such tasks, the possible effects of attention on STC activation cannot be separated from the rate‐dependent modulation. However, previous studies using electroencephalography (EEG) [Alho et al.,1992; Hillyard et al.,1973; Näätänen et al.,1978; Woods et al.,1991], magnetoencephalography (MEG) [Hari et al.,1989; Rif et al.,1991; Woldorff et al.,1993], positron emission tomography (PET) [Alho et al.,1999; O'Leary et al.,1996; Tzourio et al.,1997; Zatorre et al.,1999], and fMRI [Grady et al.,1997; Jäncke et al.,1999; Petkov et al.,2004; Woodruff et al.,1996] show that attention strongly modulates brain signals related to sound processing. Moreover, previous event‐related brain potential (ERP) and event‐related neuromagnetic field (ERF) recordings suggest that sound presentation rate affects both stimulus‐dependent and attention‐related brain activity [Rif et al.,1991; Teder et al.,1993]. However, the interaction of attention and sound presentation rate has not been previously studied with fMRI.

In the present study, we recorded fMRI BOLD responses to sounds presented at different rates ranging from 0.5 to 4 Hz. During the recordings, subjects either focused their attention on these sounds or ignored the sounds and attended to visual stimuli. We hypothesized that the sounds would activate STC bilaterally and that this activation would increase as a function of the presentation rate. Further, we hypothesized that attention to sounds would enhance STC activation. Finally, we examined whether the effect of attention differed as a function of sound presentation rate.

SUBJECTS AND METHODS

Subjects

Twelve right‐handed, healthy subjects (18–45 years old; seven men) with normal hearing and normal or corrected‐to‐normal visual acuity participated in the experiment. Informed written consent was obtained from each subject prior to the experiment. The study protocol was approved by the Ethics Committee for Studies in Healthy Subjects and Primary Care of the Hospital District of Helsinki and Uusimaa.

Auditory Stimuli

The sounds (duration 200 ms; rise and fall time 5 ms) were harmonic tones presented diotically with a fundamental frequency of 186 Hz (372, 558, 744, 930, and 1,116 Hz partials with equal intensity). Harmonic sounds were chosen in order to evoke robust STC activation [Hall et al.,2002]. The frequent tone had a 3% upward frequency glide starting at 150 ms from stimulus onset. The infrequent tone was otherwise similar, but its frequency glided downwards. The tones were delivered through earplugs (E.A.R., Inc., Boulder, CO) via pneumatic headphones (Avotec, Stuart, FL). The intensity of the sounds (attenuated by earplugs) at the ear drum was approximately 75 dB SPL. Scanner noise (∼102 dB SPL, A‐weighted measurement inside the head coil) was attenuated by wrapping the head coil in acoustic shielding material and by the headphones and earplugs. After attenuation, the level of the scanner noise was approximately 70 dB SPL.
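As an illustration of the tone parameters described above, the following is a minimal synthesis sketch, not the procedure actually used to generate the stimuli. Several details are assumptions that the text does not specify: the sampling rate, the inclusion of the 186‐Hz fundamental alongside the five listed partials, linear rise and fall ramps, and a linear glide that reaches the full 3% change at tone offset. The function name `synthesize_tone` is hypothetical.

```python
import math

SAMPLE_RATE = 44100      # Hz; assumed, not stated in the text
F0_HZ = 186.0            # fundamental frequency
N_COMPONENTS = 6         # assumed: fundamental plus the five listed partials
DURATION_S = 0.200       # tone duration
RAMP_S = 0.005           # rise and fall time
GLIDE_ONSET_S = 0.150    # glide starts 150 ms after tone onset
GLIDE_FRACTION = 0.03    # 3% frequency change


def synthesize_tone(direction):
    """Return one tone as a list of samples in [-1, 1].

    direction = +1 gives the upward glide (frequent tone),
    direction = -1 gives the downward glide (infrequent tone).
    """
    n = int(round(DURATION_S * SAMPLE_RATE))
    ramp_n = int(round(RAMP_S * SAMPLE_RATE))
    phases = [0.0] * N_COMPONENTS
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        # Frequency scaling: constant until glide onset, then a linear
        # change (assumed shape) reaching +/-3% at tone offset.
        if t <= GLIDE_ONSET_S:
            scale = 1.0
        else:
            progress = (t - GLIDE_ONSET_S) / (DURATION_S - GLIDE_ONSET_S)
            scale = 1.0 + direction * GLIDE_FRACTION * progress
        # Sum equal-intensity harmonics, accumulating phase so that the
        # glide does not introduce discontinuities.
        value = 0.0
        for h in range(N_COMPONENTS):
            value += math.sin(phases[h])
            phases[h] += 2.0 * math.pi * F0_HZ * (h + 1) * scale / SAMPLE_RATE
        value /= N_COMPONENTS
        # Linear onset and offset ramps (assumed shape).
        if i < ramp_n:
            gain = i / ramp_n
        elif i >= n - ramp_n:
            gain = (n - 1 - i) / ramp_n
        else:
            gain = 1.0
        samples.append(gain * value)
    return samples
```

Under these assumptions, the frequent and infrequent tones are identical for the first 150 ms and diverge only during the final 50 ms, which is why the target feature becomes available 150 ms after tone onset.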

Visual Stimuli

Colored filled circles (duration 100 ms; visual angle 3.6°) were projected to a mirror fixed to the head coil. The circles were presented on a gray (R = 190, G = 190, B = 190) background and their color changed at 50 ms from stimulus onset. The color of the frequently presented circle changed from yellow (R = 223, G = 189, B = 99) to orange (R = 221, G = 161, B = 91) while the color of the infrequent circle changed from red (R = 221, G = 125, B = 83) to orange.

Stimulus Sequences and Behavioral Task

The sounds were presented, in different blocks, with five steady presentation rates: 0.5, 1, 1.5, 2.5, or 4 Hz. The onset‐to‐onset interval of the pictures was randomly set to either 500, 1,000, or 1,500 ms so that the average presentation rate of pictures was 1 Hz (in all blocks). The onsets of visual and auditory stimuli were asynchronous. Infrequent stimuli were mixed pseudo‐randomly with the frequent stimuli so that there were 2–4 infrequent stimuli of each modality (e.g., 3 auditory and 4 visual infrequent stimuli) in each 28‐s block. To maintain the subject's attention throughout each block, one visual and one auditory infrequent stimulus was always presented at a random time point during the first and last 8 s of the block while a third infrequent stimulus could appear (visual P = 0.5; auditory P = 0.5) in the middle of the block, and a fourth one (visual P = 0.5; auditory P = 0.5) anywhere in the block. During baseline blocks, only the infrequent sounds were presented (mean rate 0.1 Hz). The visual stimuli were identical in all blocks and tasks.
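The timing described above can be sketched as follows. This is only an illustration under stated assumptions: the first stimulus of each modality is placed at block onset, and neither the asynchrony between modalities nor the placement of infrequent stimuli is modeled. The helper names `sound_onsets` and `picture_onsets` are hypothetical.

```python
import random

BLOCK_S = 28.0                            # block duration
SOUND_RATES_HZ = (0.5, 1.0, 1.5, 2.5, 4.0)
VISUAL_INTERVALS_S = (0.5, 1.0, 1.5)      # onset-to-onset intervals, mean 1 s


def sound_onsets(rate_hz, block_s=BLOCK_S):
    """Onset times (s) of sounds presented at a steady rate within one block."""
    n = int(round(rate_hz * block_s))
    return [i / rate_hz for i in range(n)]


def picture_onsets(rng, block_s=BLOCK_S):
    """Onset times (s) of pictures with randomly chosen intervals."""
    onsets = []
    t = 0.0
    while t < block_s:
        onsets.append(t)
        t += rng.choice(VISUAL_INTERVALS_S)
    return onsets


rng = random.Random(0)  # fixed seed only to make the sketch reproducible
sounds_per_block = {rate: len(sound_onsets(rate)) for rate in SOUND_RATES_HZ}
pictures = picture_onsets(rng)
```

With a 28‐s block, the five rates correspond to 14, 28, 42, 70, and 112 sounds per block, whereas the number of pictures stays close to 28 regardless of the sound rate.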

During the experiment the subjects performed either a visual or an auditory target detection task. The task was changed five times during the experiment, each time after two sets of six blocks had been presented (Fig. 1). Each set of six blocks consisted of five blocks with different sound presentation rates and one block with infrequent sounds presented without the frequent sounds (Fig. 1, bottom). These six blocks were presented in a random order. The order of tasks was counterbalanced so that half of the subjects started with an auditory and half with a visual task (Fig. 1, top). The task was indicated by simultaneous spoken and written instructions (“Attend to sounds” or “Attend to pictures”) presented through the headphones and on the screen for 2 s before the beginning of a new task.
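The block order described above can be sketched as a simple schedule generator. This is an illustrative reconstruction, not the presentation script used in the study; it assumes that the two tasks strictly alternate across the six task periods, and the function name `build_schedule` is hypothetical.

```python
import random

RATES_HZ = (0.5, 1.0, 1.5, 2.5, 4.0)
BLOCK_TYPES = RATES_HZ + ("infrequent only",)   # six block types per set
N_TASK_PERIODS = 6        # the task changes five times
SETS_PER_PERIOD = 2       # two sets of six blocks per task period


def build_schedule(first_task, rng):
    """Return a list of (task, block_type) pairs for one subject."""
    if first_task == "auditory":
        tasks = ("auditory", "visual")
    else:
        tasks = ("visual", "auditory")
    schedule = []
    for period in range(N_TASK_PERIODS):
        task = tasks[period % 2]          # assumed strict alternation
        for _ in range(SETS_PER_PERIOD):
            blocks = list(BLOCK_TYPES)
            rng.shuffle(blocks)           # random order within each set
            schedule.extend((task, block) for block in blocks)
    return schedule
```

For example, `build_schedule("auditory", random.Random(1))` yields 72 blocks in which every combination of task and block type occurs six times.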

Figure 1.

Top: In alternating conditions, the subjects' task was either to attend to pictures (Visual) or sounds (Auditory) and respond to the infrequent stimulus. The order of tasks was counterbalanced so that half of the subjects started with an auditory task and half with a visual task. An instruction was displayed on the screen and played via headphones before each condition. Bottom: The sounds were presented, in different 28‐s blocks, with five constant presentation rates: 0.5, 1.0, 1.5, 2.5, or 4.0 Hz. In addition, there were blocks in which the infrequent tones were presented at irregular intervals (mean rate 0.1 Hz) without intervening frequent tones. In all blocks, pictures were presented at a mean rate of 1 Hz.

During the experiment the subjects were required to focus on a fixation mark, which was either a letter “V” or “Λ” (Lambda), indicating a visual or an auditory task, respectively. To continuously remind the subjects about the current task, the fixation mark was presented throughout the experiment so that the subjects saw either the fixation mark alone or the fixation mark embedded in the center of the picture. The subjects were required to press a response button with their left index finger every time the target sound (auditory task) or the target picture (visual task) appeared.

fMRI Image Acquisition

Functional gradient‐echo planar (EPI) MR images (TE 32 ms, TR 2,800 ms, flip angle 90°) were acquired using a 3.0 T GE Signa (GE Medical Systems, Milwaukee, WI) system retrofitted with an Advanced NMR operating console and a quadrature birdcage coil. The in‐plane resolution in the EPI‐images was 3.4 × 3.4 mm (voxel matrix 64 × 64, FOV 22 × 22 cm). The imaged area consisted of 28 contiguous 3.4‐mm thick axial oblique slices with the lowest slice positioned approximately 2 cm caudal to the line between the anterior and posterior commissures. For each subject, a total of 720 functional volumes (60 for each rate and task) were acquired.
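The acquisition figures above can be cross‐checked with a few lines of arithmetic. The sketch below uses only values given in the text; the count of 12 block types (five rates plus the infrequent‐only block, in each of two tasks) is inferred from the design description.

```python
FOV_MM = 220.0          # field of view, 22 cm
MATRIX = 64             # voxel matrix 64 x 64
SLICE_MM = 3.4          # slice thickness
N_SLICES = 28
TR_S = 2.8              # repetition time
BLOCK_S = 28.0          # block duration
VOLUMES_PER_CONDITION = 60
N_BLOCK_TYPES = 12      # (5 rates + infrequent-only block) x 2 tasks

in_plane_mm = FOV_MM / MATRIX                   # about 3.4 mm
coverage_mm = N_SLICES * SLICE_MM               # extent covered by the slices
volumes_per_block = round(BLOCK_S / TR_S)       # volumes acquired per block
blocks_per_condition = VOLUMES_PER_CONDITION // volumes_per_block
total_volumes = N_BLOCK_TYPES * VOLUMES_PER_CONDITION
```

These values give an in‐plane voxel size of about 3.4 mm, roughly 95 mm of slice coverage, 10 volumes per block, 6 blocks per condition, and 720 volumes in total, in agreement with the numbers reported above.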

For anatomical alignment, a T1‐weighted inversion recovery spin‐echo volume was acquired using the same slice prescription as for the functional volumes but with a denser in‐plane resolution (matrix 256 × 256).

fMRI Data Analysis

Data analysis was carried out using fMRI Expert Analysis Tool (FEAT) software (v. 3.1), part of the Functional Magnetic Resonance Imaging of the Brain Centre (FMRIB) software library (FSL, http://www.fmrib.ox.ac.uk/fsl). The first five volumes were excluded from data analysis to allow the initial stabilization of the fMRI signal. The data were motion‐corrected [Jenkinson et al.,2002], spatially smoothed with a Gaussian kernel of 5 mm full‐width half maximum, and high‐pass filtered [Woolrich et al.,2001] (cutoff 676 s). Statistical analysis was carried out using the FMRIB Improved Linear Model (FILM) [Woolrich et al.,2001]. Data from each of 11 conditions (auditory or visual task × 5 rates + an auditory attention condition with the auditory targets presented without the frequent sounds) and the between‐task periods displaying the instruction about the following task were entered separately into the model. The visual attention condition with infrequent sounds presented without the frequent sounds was used as baseline. The hemodynamic response was modeled with a gamma function (mean lag 6 s, SD 3 s) and its temporal derivative. The model was temporally filtered in a fashion similar to the data. Finally, several contrasts were specified to create Z statistic images comparing each rate and task against the baseline condition.
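To illustrate the hemodynamic model, the sketch below builds a gamma‐shaped response from the stated mean lag and SD and convolves it with a 28‐s boxcar. It is a simplified stand‐in, not FEAT's implementation: it assumes the response is a gamma probability density whose mean and standard deviation equal the stated values, it omits the temporal derivative and the temporal filtering, and the names `gamma_hrf` and `block_regressor` are hypothetical.

```python
import math

MEAN_LAG_S = 6.0
SD_S = 3.0
TR_S = 2.8
BLOCK_S = 28.0

# For a gamma density, mean = shape * scale and variance = shape * scale**2.
SHAPE = (MEAN_LAG_S / SD_S) ** 2     # 4.0
SCALE = SD_S ** 2 / MEAN_LAG_S       # 1.5 s


def gamma_hrf(t):
    """Gamma probability density evaluated at time t (s); zero for t <= 0."""
    if t <= 0.0:
        return 0.0
    norm = math.gamma(SHAPE) * SCALE ** SHAPE
    return t ** (SHAPE - 1.0) * math.exp(-t / SCALE) / norm


def block_regressor(onset_s, n_volumes, dt=0.1):
    """Convolve a boxcar (one block starting at onset_s) with the gamma
    response and sample the result at the start of each volume."""
    n_steps = int(round(BLOCK_S / dt))
    values = []
    for v in range(n_volumes):
        t = v * TR_S
        total = 0.0
        for k in range(n_steps):
            tau = onset_s + (k + 0.5) * dt   # midpoint rule over the block
            total += gamma_hrf(t - tau) * dt
        values.append(total)
    return values
```

With these parameters the modeled response peaks 4.5 s after an impulse, and the predicted signal for a single block rises to its plateau within the 28‐s block and returns to baseline within a few volumes after block offset.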

For group analyses [Woolrich et al.,2004], individual level Z statistic images for all subjects were transformed into standard space (MNI152) [Jenkinson et al.,2002]. Z statistic images were thresholded with Z > 2.33 and a (corrected) cluster significance threshold of P < 0.05. The STC clusters from a comparison testing all conditions vs. baseline were slightly smoothed (with subsequent dilation and erosion in 3D) and used as a region‐of‐interest (ROI) for determining the mean percent signal change for each rate and condition.

RESULTS

Behavioral Task

The mean hit rate for all tasks and levels was 0.76 ± 0.05 (mean ± SEM). Performance was more accurate in the visual (mean 0.81 ± 0.05) than in the auditory task (0.71 ± 0.06; repeated measures ANOVA: TASK * RATE, main effect of TASK: P < 0.05). Responses were faster in the auditory than in the visual task (mean difference 62 ± 14 ms; P < 0.001; the reaction times were corrected for the 100‐ms difference in the target‐feature onset time). There were no systematic effects of the sound presentation rate.

fMRI

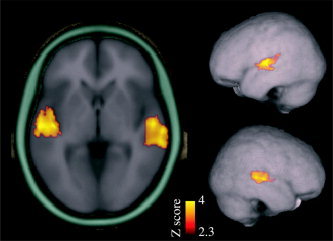

Sounds activated auditory areas in the bilateral STC (Fig. 2). As expected, this activation increased with sound presentation rate (repeated measures ANOVA: HEMISPHERE * ATTENTION * RATE, main effect of RATE: P < 0.001) and it was larger when subjects attended to the sounds than when they attended to the pictures (main effect of ATTENTION: P < 0.01; Fig. 3). There was an interaction between rate and attention effects: STC activation increased more steeply with sound presentation rate when the subjects attended to the sounds than when they attended to the pictures (ATTENTION * RATE interaction effect: P < 0.05).

Figure 2.

Sounds activated STC bilaterally (n = 12). Contrast between all rates and tasks vs. baseline (attend visual, infrequent sounds alone) is shown (threshold Z = 2.33, corrected cluster threshold P < 0.05; MNI152 coordinates of maximum voxel, left: 58, –18, 4, right: –56, –18, 4). These STC clusters were used as ROIs for determining the mean percent signal change for each rate and condition (Fig. 3).

Figure 3.

Mean percent change (± SEM) in ROIs (activation clusters of Fig. 2) for each condition, rate, and hemisphere.

DISCUSSION

The STC activation increased with sound presentation rate from 0.5 to 4 Hz (Fig. 3), in line with the results of previous fMRI studies [Binder et al.,1994; Dhankhar et al.,1997; Harms and Melcher,2002; Mechelli et al.,2000; Rees et al.,1997]. However, the activation observed in the auditory attention condition of the present experiment seemed to reach a plateau at the highest presentation rate (Fig. 3). Consistent with this, several studies suggest a decrease of STC activation at rates exceeding ∼4–6 Hz [Giraud et al.,2000; Harms and Melcher,2002; Seifritz et al.,2003; Tanaka et al.,2000]. Taken together, the present and previous fMRI studies indicate that STC activation strongly depends on sound presentation rate and, further, suggest that the monotonic increase of activation observed with slower presentation rates is followed by a plateau or even a decrease with higher rates (>4–6 Hz). The dependency of the auditory fMRI signal on presentation rate has been observed using a wide range of acoustic stimuli, from pure tones to speech sounds. Therefore, it is likely that the effect of sound presentation rate on STC activation is a general feature of the auditory fMRI signal rather than being specific to a certain type of stimulation.

The present data also demonstrated a distinct effect of attention on STC activation. Previously, the effects of attention and sound presentation rate have not been separated from each other. This is because in previous studies the subjects were either required to attend to sounds or the experimental design did not carefully control for the possibility that the subjects attended to sounds. Indeed, insofar as more frequently presented stimuli may attract more auditory attention, such conditions may confound presentation rate with attention effects.

Interestingly, we found that the effect of attention on STC activation increases with sound presentation rate: larger attention effects were observed with higher stimulation rates. There are several possible accounts for this new finding. First, previous ERP results suggest that each attended sound is associated with attention‐related activity [e.g., Hillyard et al.,1973; Näätänen et al.,1978]. This attention‐related activity might accumulate so that a stronger fMRI signal is detected with higher sound presentation rates. There is also evidence that the attention‐related ERP responses have shorter recovery periods than stimulus‐dependent responses [Woods et al.,1980; Woods,1990]. Second, attention itself may be facilitated by the more frequent occurrence of attended sounds [Alho et al.,1990; Hansen and Hillyard,1988; Näätänen,1982; Woldorff and Hillyard,1991]. Finally, both mechanisms may act simultaneously. It is also possible that the effect of attention on STC activation is different for different types of sounds. For example, the effect of attention may be enhanced for behaviorally relevant stimuli such as speech.

The present results reflect average activation over a 28‐s block, which affects the interpretation of the results in several ways. First, there are at least three different components of the fMRI signal contributing to the present results: block‐onset, block‐offset, and steady‐state responses all have different time characteristics and are modulated differently by sound presentation rate [Figure 4 in Harms and Melcher,2002]. In addition, attention‐related activation appears to have different temporal characteristics than these other components: stimulus‐dependent activation in the auditory cortex is largest immediately after the block onset, whereas attention‐dependent activity increases gradually throughout the block [Petkov et al.,2004]. Second, a sound presented in silence or at a slow presentation rate probably elicits more activation per se than a sound presented in the context of a high‐rate block: a sound presented at a slow rate (e.g., 0.5 Hz) will elicit high‐amplitude obligatory ERP components (such as P1, N1, and P2), while at higher presentation rates (e.g., 4 Hz) these components are diminished (due to stimulus‐dependent adaptation or refractoriness; Näätänen and Picton [1987]). Unfortunately, it is not possible to separate the fMRI responses to individual sounds from each other when the sounds are presented at a high rate. However, since ERPs show smaller responses to individual sounds presented at higher rates, it is probable that the dependency of fMRI activation on sound presentation rate is due to accumulation of activation to individual sounds during the block (or increase of sound energy over time) rather than due to enhanced processing of individual sounds.

In the present study, the task‐related motor activity was controlled for by fixing the number of infrequent stimuli in each condition and baseline. As a consequence, the probability of the infrequent sounds within a sound sequence decreased with increasing sound presentation rate. Thus, it could be argued that the present results might be partially explained by rate‐dependent activation to infrequent sounds. This argument could be supported by the fact that the mismatch negativity (MMN) [Näätänen,1992] component of the ERP, which is generated mainly in the auditory cortex in response to infrequent sounds (changes in a sound sequence), is enhanced when the probability of the infrequent sound is decreased [Sabri and Campbell,2001]. However, as the present results are consistent with those of previous studies using monotonous sounds [Harms and Melcher,2002; Tanaka et al.,2000], it is likely that the contribution of activation caused by infrequent sounds is negligible as compared with the activation caused by frequent sounds. (Note that even if the results were affected by the activation to infrequent sounds, the conclusion of the present study would not change.)
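The change in infrequent‐sound probability with rate can be made concrete with a short calculation. The sketch assumes that infrequent tones occupy positions in the steady sound sequence, so that the total number of sounds in a 28‐s block equals rate × 28; the function name `infrequent_probability` is hypothetical.

```python
BLOCK_S = 28.0
RATES_HZ = (0.5, 1.0, 1.5, 2.5, 4.0)
MIN_INFREQUENT = 2      # 2-4 infrequent sounds per block
MAX_INFREQUENT = 4


def infrequent_probability(rate_hz, n_infrequent):
    """Proportion of infrequent tones among all sounds in one block."""
    n_sounds = rate_hz * BLOCK_S
    return n_infrequent / n_sounds


for rate in RATES_HZ:
    low = infrequent_probability(rate, MIN_INFREQUENT)
    high = infrequent_probability(rate, MAX_INFREQUENT)
    print(f"{rate:.1f} Hz: {low:.3f} to {high:.3f}")
```

Under this assumption, the proportion of infrequent sounds falls from roughly 0.14–0.29 at 0.5 Hz to roughly 0.02–0.04 at 4 Hz.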

In conclusion, the present results support the separability of stimulus‐dependent and attention‐related activation in the auditory cortex as suggested by a recent fMRI study [Petkov et al.,2004] and, further, indicate that both attention and sound presentation rate have to be strictly controlled in fMRI studies on sensory and higher‐order auditory processing. Particularly, attention should be carefully controlled when the modulation of STC activation over a wide range of presentation rates is examined.

Acknowledgements

We thank Drs. G. Christopher Stecker and David L. Woods for helpful comments on the manuscript.

REFERENCES

- Alho K, Lavikainen J, Reinikainen K, Sams M, Näätänen R (1990): Event‐related brain potentials in selective listening to frequent and rare stimuli. Psychophysiology 27: 73–86.

- Alho K, Woods DL, Algazi A, Näätänen R (1992): Intermodal selective attention. II. Effects of attentional load on processing of auditory and visual stimuli in central space. Electroencephalogr Clin Neurophysiol 82: 356–368.

- Alho K, Medvedev SV, Pakhomov SV, Roudas MS, Tervaniemi M, Reinikainen K, Zeffiro T, Näätänen R (1999): Selective tuning of the left and right auditory cortices during spatially directed attention. Cogn Brain Res 7: 335–341.

- Binder JR, Rao SM, Hammeke TA, Frost JA, Bandettini PA, Hyde JS (1994): Effects of stimulus rate on signal response during functional magnetic resonance imaging of auditory cortex. Cogn Brain Res 2: 31–38.

- Dhankhar A, Wexler BE, Fulbright RK, Halwes T, Blamire AM, Shulman RG (1997): Functional magnetic resonance imaging assessment of the human brain auditory cortex response to increasing word presentation rates. J Neurophysiol 77: 476–483.

- Giraud AL, Lorenzi C, Ashburner J, Wable J, Johnsrude I, Frackowiak R, Kleinschmidt A (2000): Representation of the temporal envelope of sounds in the human brain. J Neurophysiol 84: 1588–1598.

- Grady CL, Van Meter JW, Maisog JM, Pietrini P, Krasuski J, Rauschecker JP (1997): Attention‐related modulation of activity in primary and secondary auditory cortex. Neuroreport 8: 2511–2516.

- Hall DA, Johnsrude IS, Haggard MP, Palmer AR, Akeroyd MA, Summerfield AQ (2002): Spectral and temporal processing in human auditory cortex. Cereb Cortex 12: 140–149.

- Hansen JC, Hillyard SA (1988): The temporal dynamics of human auditory selective attention. Psychophysiology 25: 316–329.

- Hari R, Hämäläinen M, Kaukoranta E, Mäkelä J, Joutsiniemi SL, Tiihonen J (1989): Selective listening modifies activity of the human auditory cortex. Exp Brain Res 74: 463–470.

- Harms MP, Melcher JR (2002): Sound repetition rate in the human auditory pathway: representations in the waveshape and amplitude of fMRI activation. J Neurophysiol 88: 1433–1450.

- Hillyard SA, Hink RF, Schwent VL, Picton TW (1973): Electrical signs of selective attention in the human brain. Science 182: 177–180.

- Jäncke L, Mirzazade S, Shah NJ (1999): Attention modulates activity in the primary and the secondary auditory cortex: a functional magnetic resonance imaging study in human subjects. Neurosci Lett 266: 125–128.

- Jenkinson M, Bannister P, Brady M, Smith S (2002): Improved optimization for the robust and accurate linear registration and motion correction of brain images. NeuroImage 17: 825–841.

- Mechelli A, Friston KJ, Price CJ (2000): The effects of presentation rate during word and pseudoword reading: a comparison of PET and fMRI. J Cogn Neurosci 12: 145–156.

- Näätänen R (1982): Processing negativity: an evoked‐potential reflection of selective attention. Psychol Bull 92: 605–640.

- Näätänen R (1992): Attention and brain function. Hillsdale, NJ: Lawrence Erlbaum.

- Näätänen R, Picton T (1987): The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology 24: 375–425.

- Näätänen R, Gaillard AW, Mäntysalo S (1978): Early selective‐attention effect on evoked potential reinterpreted. Acta Psychol 42: 313–329.

- O'Leary DS, Andreason NC, Hurtig RR, Hichwa RD, Watkins GL, Ponto LL, Rogers M, Kirchner PT (1996): A positron emission tomography study of binaurally and dichotically presented stimuli: effects of level of language and directed attention. Brain Lang 53: 20–39.

- Petkov CI, Kang X, Alho K, Bertrand O, Yund EW, Woods DL (2004): Attentional modulation of human auditory cortex. Nat Neurosci 7: 658–663.

- Rees G, Howseman A, Josephs O, Frith CD, Friston KJ, Frackowiak RS, Turner R (1997): Characterizing the relationship between BOLD contrast and regional cerebral blood flow measurements by varying the stimulus presentation rate. NeuroImage 6: 270–278.

- Rif J, Hari R, Hämäläinen MS, Sams M (1991): Auditory attention affects two different areas in the human supratemporal cortex. Electroencephalogr Clin Neurophysiol 79: 464–472.

- Sabri M, Campbell KB (2001): Effects of sequential and temporal probability of deviant occurrence on mismatch negativity. Cogn Brain Res 12: 171–180.

- Seifritz E, Salle FD, Esposito F, Bilecen D, Neuhoff JG, Scheffler K (2003): Sustained blood oxygenation and volume response to repetition rate‐modulated sound in human auditory cortex. NeuroImage 20: 1365–1370.

- Tanaka H, Fujita N, Watanabe Y, Hirabuki N, Takanashi M, Oshiro Y, Nakamura H (2000): Effects of stimulus rate on the auditory cortex using fMRI with 'sparse' temporal sampling. Neuroreport 11: 2045–2049.

- Teder W, Alho K, Reinikainen K, Näätänen R (1993): Interstimulus interval and the selective‐attention effect on auditory ERPs: “N1 enhancement” versus processing negativity. Psychophysiology 30: 71–81.

- Tzourio N, Massioui FE, Crivello F, Joliot M, Renault B, Mazoyer B (1997): Functional anatomy of human auditory attention studied with PET. NeuroImage 5: 63–77.

- Woldorff MG, Hillyard SA (1991): Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalogr Clin Neurophysiol 79: 170–191.

- Woldorff MG, Gallen CC, Hampson SA, Hillyard SA, Pantev C, Sobel D, Bloom FE (1993): Modulation of early sensory processing in human auditory cortex during auditory selective attention. Proc Natl Acad Sci U S A 90: 8722–8726.

- Woodruff PW, Benson RR, Bandettini PA, Kwong KK, Howard RJ, Talavage T, Belliveau J, Rosen BR (1996): Modulation of auditory and visual cortex by selective attention is modality‐dependent. Neuroreport 7: 1909–1913.

- Woods DL (1990): The physiological basis of selective attention: Implications of event‐related potential studies. In: Rohrbaugh JW, Johnson R, Parasuraman R, editors. Event‐related brain potentials: issues and interdisciplinary vantages. New York: Oxford University Press; p 178–209.

- Woods DL, Hillyard SA, Courchesne E, Galambos R (1980): Electrophysiological signs of split‐second decision‐making. Science 207: 655–657.

- Woods DL, Alho K, Algazi A (1991): Brain potential signs of feature processing during auditory selective attention. Neuroreport 2: 189–192.

- Woolrich MW, Ripley BD, Brady M, Smith SM (2001): Temporal autocorrelation in univariate linear modeling of FMRI data. NeuroImage 14: 1370–1386.

- Woolrich MW, Behrens TE, Beckmann CF, Jenkinson M, Smith SM (2004): Multi‐level linear modelling for FMRI group analysis using Bayesian inference. NeuroImage 21: 1732–1747.

- Zatorre RJ, Mondor TA, Evans AC (1999): Auditory attention to space and frequency activates similar cerebral systems. NeuroImage 10: 544–554.