Abstract

Previous research has implicated a portion of the anterior temporal cortex in sentence-level processing. This region activates more to sentences than to word lists, sentences in an unfamiliar language, and environmental sound sequences. The current study sought to identify the relative contributions of syntactic and prosodic processing to anterior temporal activation. During functional magnetic resonance imaging (fMRI), we presented auditory stimuli in which the presence of prosodic and syntactic structure was independently manipulated. Three “structural” conditions included normal sentences, sentences with scrambled word order, and lists of content words. Each class of stimuli was presented either with sentence prosody or with flat supralexical (list-like) prosody. Sentence stimuli activated a portion of the left anterior temporal cortex in the superior temporal sulcus (STS), extending into the middle temporal gyrus (MTG), independent of prosody and to a greater extent than any of the other conditions. An interaction between the structural and prosodic conditions was seen in a more dorsal region of the anterior temporal lobe, bilaterally along the superior temporal gyrus (STG). A post hoc analysis revealed that this region responded either to syntactically structured stimuli or to nonstructured stimuli with sentence-like prosody. The results suggest a parcellation of anterior temporal cortex into 1) an STG region that is sensitive to the presence of syntactic information and is also modulated by prosodic manipulations (in nonsyntactic stimuli); and 2) a more inferior left STS/MTG region that is more selective for syntactic structure. Hum Brain Mapp, 2005. © 2005 Wiley-Liss, Inc.

Keywords: anterior temporal lobe, sentence processing, syntactic processing, prosodic processing, fMRI

INTRODUCTION

Recent studies of the neural organization of auditory language perception have identified an area in the anterior temporal lobe that appears to be involved in sentence-level comprehension. For example, a neuropsychological study of aphasic patients tested on comprehension of sentences of varying morphosyntactic complexity found that the patients with deficits in understanding the most complex sentences tended to have lesions that included the left anterior temporal lobe [Dronkers et al., 1994]. A similar disruption of sentence comprehension has been found in patients with frontotemporal dementia, in whom relative cerebral perfusion in left anterior temporal regions is correlated with sentence comprehension difficulty [Grossman et al., 1998]. Event-related potentials (ERPs) recorded from patients with left anterior temporal lobe lesions lack a specific syntax-related component, the early left anterior negativity (ELAN), further suggesting that this area is involved in syntax-related sentence processing [Friederici and Kotz, 2003].

Additional evidence implicating anterior temporal cortex (ATC) in sentence-level processing comes from functional imaging studies, which suggest that this region is more responsive to sentence-level stimuli than to other types of linguistic or acoustic stimuli [see Stowe et al., 2005]. For example, sentences presented in the listener's native language produce greater ATC activation than sentences in a language unfamiliar to the listener [Mazoyer et al., 1993; Schlosser et al., 1998] or spectrally rotated sentences [Scott et al., 2000].¹ Because sentences from an unfamiliar language and spectrally rotated sentences have spectrotemporal properties similar to those of native-language, unaltered sentences, this finding suggests that spectrotemporal complexity per se is not sufficient to drive ATC activation maximally. Nor does word-level information appear to drive ATC maximally: two studies found greater activation for sentences than for lists of unrelated words [Friederici et al., 2000; Mazoyer et al., 1993]. Because word lists are matched to sentences in lexical information, this finding suggests that the ATC response to sentences is not simply a function of lexical-level phonological or semantic processes. This pattern (greater activation to sentences than to word lists) seems to hold whether normal sentences or pseudoword sentences (i.e., jabberwocky) are used, further suggesting that semantic manipulations do not modulate anterior temporal cortex activity [Friederici et al., 2000; Mazoyer et al., 1993].² ATC has also been found to show increased activation for sentences with syntactic violations [Friederici et al., 2003; Meyer et al., 2000]. On the assumption that encountering a syntactic violation increases sentence processing load, this finding provides further evidence for ATC involvement in sentence-level processing.
Finally, a study that compared sentences with other types of meaningful complex auditory signals, such as sequences of environmental sound events (e.g., tires squealing followed by a crash), found greater activation for sentences in ATC [Humphries et al., 2001], implying that this area does not respond generally to sequences of meaningfully related auditory events.

The ATC is not the only brain region implicated in sentence-level processing. Several other areas, including the left inferior frontal gyrus (IFG) and left posterior temporal and inferior parietal cortex, respond to various sentence-level manipulations, such as syntactic complexity [Caplan et al., 1998; Indefrey et al., 2004; Just et al., 1996], syntactic violations [Indefrey et al., 2001; Ni et al., 2000], and sentence-level semantic violations [Friederici et al., 2003; Newman et al., 2001; Ni et al., 2000]. Given these results, it seems likely that ATC is one part of a larger, distributed sentence-processing network.

Results from these studies support the view that ATC plays a role in sentence-level processing; however, the nature of this role is not entirely clear. One issue that has not been investigated fully is the relative contribution of syntactic vs. prosodic structure to ATC activation. Sentences contain prosodic and intonational structure at the phrasal level, a feature that distinguishes them from many of the other classes of stimuli used in studies of ATC activity. It is therefore unclear whether ATC activation is driven by syntactic structure, by prosodic/intonational structure, by both, or by some other sentence-level factor (e.g., compositional semantic processing; see Discussion). We use the term “prosody” to refer generally to phrasal-level prosodic and/or intonational structure.

A handful of findings provide preliminary evidence that ATC activation may be more closely related to syntactic structure than to prosody. For example, ATC shows greater activation for sentences than for word lists even when the stimuli are presented visually [Stowe et al., 1999; Vandenberghe et al., 2002], which presumably removes the prosodic component. However, subjects may impose some internally generated prosody on the sentence during reading, which could in turn drive ATC activation. This possibility is supported by the finding that two separate tasks, listening to delexicalized speech that preserves prosody and imposing prosodic boundaries during silent reading, produce similar prosody-related ERP components [Steinhauer and Friederici, 2001]. A second line of evidence comes from a study comparing prosodically “flattened” speech, in which prosodic information (i.e., the F0 contour) was reduced, with degraded speech, in which lexico-syntactic information was removed and prosody was preserved [Meyer et al., 2004]. In this study, activation was greater bilaterally in ATC for flattened than for degraded sentences, suggesting that this region plays a greater role in syntactic than in prosodic processing. A similar argument could be made for sentences from an unfamiliar language, which contain prosodic information but lack recognizable lexical content. Such findings support the view that ATC activity is driven by syntactic rather than prosodic information, but it is also possible that the prosodic cues in degraded speech and foreign speech are less salient, or that ATC activity is driven by the interaction of lexical and prosodic information.

We sought to address this issue in an experiment that independently manipulated syntactic and prosodic³ information in the stimuli. Three “structural” conditions included normal sentences, sentences with scrambled word order, and lists of content words. Previous studies that found a sentence/word-list difference in ATC used either lists of content words or lists combining function and content words [Friederici et al., 2000; Mazoyer et al., 1993; Stowe et al., 1999; Vandenberghe et al., 2002]. We included both types of “nonsyntactic” stimuli to explore possible differences between them. The three classes of stimuli were presented either with “sentence prosody” (phrasal-level stress patterns and pauses) or with “list prosody” (flat supralexical stress pattern). If ATC activation is driven by the presence of syntactic information, then sentence stimuli should activate this region regardless of whether they are presented with normal sentence prosody or with list prosody. Likewise, nonsyntactic stimuli (scrambled sentences and content word lists) should yield lower ATC activation than syntactic stimuli (sentences), even when the nonsyntactic stimuli are presented with sentence-like prosody.

SUBJECTS AND METHODS

Subjects

Twelve native English-speaking subjects (six male, six female; age range 22–30 years) participated in this experiment. All subjects were right-handed by self-report. Subjects gave informed consent under a protocol approved by the Institutional Review Board of the University of California, Irvine.

Materials

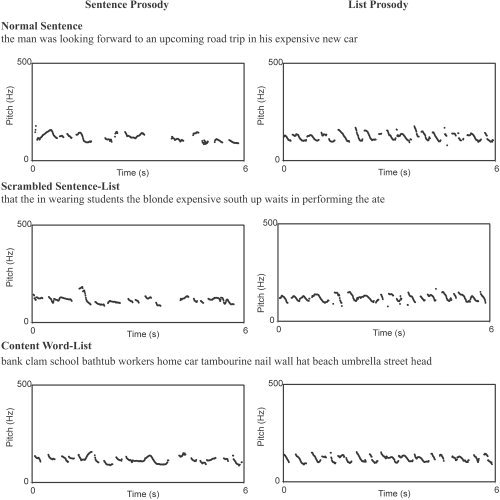

The experiment used a 3 × 2 factorial design with two independent variables. The first variable was the type of structure and had three levels: normal sentences, scrambled sentences, and content word lists. The second variable was the type of prosodic information in the stimulus and had two levels: sentence prosody and “list prosody.” This yielded six conditions (see Fig. 1 for examples of the six conditions).

Sentences with sentence prosody. Each sentence contained multiple clauses and 12–18 words (mean = 14.9). Word durations ranged from 0.27 to 0.94 s (mean = 0.57 s, SD = 0.12 s). Stimuli were recorded by reading each sentence with a natural prosodic contour.

Sentences with list prosody. The “list prosody” versions of the sentence stimuli were generated by recording each word of the sentence separately (outside the sentence context) and then concatenating the words in the order of the original sentence. The interstimulus interval (ISI) between concatenated words was made as short as possible while preserving the perceptual clarity of the offset of one word and the onset of the next, yielding ISIs of 25–100 ms. ISIs were kept short because concatenated words lack coarticulation at word boundaries, which already tends to lengthen the word string relative to a naturally produced sentence. This procedure produces a prosodic contour that is list-like in the sense that it contains no supralexical prosodic grouping. (These stimuli differ somewhat from naturally produced lists in their supralexical acoustic patterns, because speakers sometimes impose a specific intonational contour on a spoken list. We therefore use the term “list prosody” in a loose sense.) The list prosody versions were matched in overall length to their natural sentence counterparts by minor changes in rate.

Scrambled sentences with sentence prosody. Scrambled sentences were generated by pseudo-randomly selecting (without replacement) a set of words from the entire pool of words in all stimulus sentences. Each stimulus item was generated under three constraints: 1) the ratio of content to function words was typical of normal sentences; 2) the same word form did not appear twice in a row (e.g., “the the”); and 3) each item matched a designated item from the normal sentence list in number of syllables. This procedure generated pairs of normal and scrambled sentences that were matched in length (number of syllables) but not in lexical content. We elected not to match lexical content item by item to avoid the possibility that subjects would try to reconstruct a grammatical order from a set of potentially semantically related words (e.g., trip car road expensive…). Each scrambled “sentence” was then read aloud and digitized using the prosodic and intonation contour of its length-matched normal sentence: a practiced reader first listened to the normal sentence item several times, attending to its prosody and intonation, and then read the scrambled sentence using the same prosody/intonation pattern. Several exemplars of each scrambled item were recorded, and three judges selected the one that best matched the normal sentence in prosody/intonation. (An additional rating task was conducted on all stimuli with “superimposed” prosody; see below.) Because the same set of words was used in this condition and in the structured sentence conditions, lexical frequency and a range of other word-level psycholinguistic factors were controlled in comparisons between these conditions.

Scrambled sentences with list prosody. List prosody versions of the scrambled sentence stimuli were generated by recording one word at a time and concatenating the words within each stimulus “sentence” using the procedures described above.

Content word lists with sentence prosody. The content word lists were created by randomly sampling from a collection of concrete nouns. As with the scrambled sentences, each item was matched in length (number of syllables) to a normal sentence item, and that item served as the source of the sentence prosody superimposed on the content word list. The procedure for superimposing prosody was the same as described above.

Content word lists with list prosody. Procedures for generating content word lists with list prosody were identical to those used for generating scrambled sentences with list prosody.
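The three constraints on scrambled-sentence construction can be illustrated with a short rejection-sampling sketch. This is a hypothetical reconstruction, not the procedure actually used: the toy word pool, its syllable counts and content-word flags, the ±0.15 tolerance standing in for a content/function ratio “typical of normal sentences,” and the name `make_scrambled_item` are all assumptions.

```python
import random

# Hypothetical token pool: (word form, syllables, is_content_word).
# Repeated tokens (e.g., two "the") mimic a pool drawn from many sentences.
POOL = [
    ("the", 1, False), ("the", 1, False), ("a", 1, False), ("of", 1, False),
    ("and", 1, False), ("to", 1, False), ("in", 1, False), ("was", 1, False),
    ("it", 1, False), ("car", 1, True), ("road", 1, True), ("trip", 1, True),
    ("driver", 2, True), ("expensive", 3, True), ("morning", 2, True),
    ("quickly", 2, True), ("village", 2, True), ("river", 2, True),
    ("yellow", 2, True),
]


def make_scrambled_item(pool, target_syllables, target_content_ratio,
                        tolerance=0.15, max_tries=10_000, rng=None):
    """Sample tokens without replacement until the syllable target is met.

    An item is accepted only if
      1) its content-word proportion is within `tolerance` of the target,
      2) no word form occurs twice in a row, and
      3) its total syllable count equals `target_syllables`.
    Returns a list of word forms, or None if every attempt is rejected.
    """
    rng = rng or random.Random()
    for _ in range(max_tries):
        order = list(pool)
        rng.shuffle(order)  # a random permutation = sampling without replacement
        item, syllables = [], 0
        for word, n_syll, is_content in order:
            if syllables + n_syll > target_syllables:
                continue  # skip tokens that would overshoot the target
            item.append((word, is_content))
            syllables += n_syll
            if syllables == target_syllables:
                break
        if syllables != target_syllables:
            continue
        content_ratio = sum(is_c for _, is_c in item) / len(item)
        no_repeats = all(a[0] != b[0] for a, b in zip(item, item[1:]))
        if abs(content_ratio - target_content_ratio) <= tolerance and no_repeats:
            return [word for word, _ in item]
    return None


# Example: a 12-syllable item with roughly half content words.
print(make_scrambled_item(POOL, 12, 0.5, rng=random.Random(0)))
```

Rejection sampling keeps each constraint explicit and easy to audit; with a realistic pool drawn from all stimulus sentences, the syllable target would come from the designated normal-sentence item.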

Figure 1.

Examples of each condition with pitch-tracking (F0) curves calculated using the Praat software package. The vertical axis of each graph spans 0–500 Hz, and the horizontal axis spans 6 s.

All stimuli were spoken by a male speaker and digitally recorded, and the stimuli were edited so that the average duration in each condition was 6 s. Two listeners matched the perceived loudness of each stimulus to that of a standard stimulus.
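The duration bookkeeping behind the list-prosody stimuli (words concatenated with 25–100 ms gaps, then adjusted in rate to match a target duration) reduces to simple arithmetic. The sketch below is illustrative: the word durations are invented, the helper names are hypothetical, and it assumes a uniform rate change, whereas the text does not say which time-scaling method was used.

```python
GAP_MIN_S, GAP_MAX_S = 0.025, 0.100  # ISI range reported for concatenated words


def concatenated_duration(word_durations_s, gaps_s):
    """Total duration (s) of words joined with one gap between each adjacent pair."""
    if len(gaps_s) != len(word_durations_s) - 1:
        raise ValueError("need exactly one gap between each pair of words")
    if any(not GAP_MIN_S <= g <= GAP_MAX_S for g in gaps_s):
        raise ValueError("gap outside the 25-100 ms range")
    return sum(word_durations_s) + sum(gaps_s)


def rate_factor(current_s, target_s):
    """Uniform playback-rate factor mapping current_s onto target_s.

    Values above 1 mean the string must be sped up; below 1, slowed down.
    """
    return current_s / target_s


# Hypothetical example: three words and two gaps, to be fitted to 1.5 s.
total = concatenated_duration([0.50, 0.60, 0.40], [0.05, 0.10])
print(round(total, 3), round(rate_factor(total, 1.5), 3))
```

A factor close to 1 corresponds to the “minor changes in rate” described above; large factors would signal that a concatenated item departs substantially from its natural counterpart.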

To ensure that the sentence prosody “triplets” (normal sentence, scrambled sentence, content word list) in fact had similar prosodic/intonation contours, six participants listened to an item from the normal sentence condition followed by an item from either the scrambled sentence or the content word list condition and judged whether the pair matched in prosody/intonation. Several practice trials preceded testing to familiarize participants with the task. Each normal sentence item was paired on different trials with i) its scrambled sentence match; ii) its content word list match; iii) four different scrambled sentence foils; and iv) four different content word list foils. Each participant performed this task with greater than 95% accuracy.

Procedure

Stimuli were delivered in the magnet environment through electrostatic headphones (Stax; online at http://www.stax.co.jp). Subjects were told that they would hear spoken strings of words, some of which would sound normal, like sentences, and others of which would sound odd in one way or another: some would be spoken unnaturally, somewhat like a computer voice, whereas others would not make any sense. Subjects were instructed to listen passively with eyes closed while attending closely to each item. The experiment was organized into four separate runs of 24 trials each. Each trial consisted of 6 s of stimulus followed by 8.5, 9.5, 10.5, or 11.5 s of rest. The rest period was varied in order to increase statistical power [Birn et al., 2002]. Each run lasted 394 s. Across the four runs, each condition was presented on 16 trials. The presentation order was pseudorandomized such that each of the six conditions followed each individual condition an equal number of times. Run order was counterbalanced across subjects.
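The trial structure can be made concrete with a few helper functions: onset times for jittered trials, and a check for first-order counterbalancing (each condition following each condition equally often). This is an illustrative sketch with hypothetical names and toy sequences; it does not reproduce the randomization actually used.

```python
from collections import Counter

STIM_S = 6.0                      # stimulus duration per trial
REST_S = (8.5, 9.5, 10.5, 11.5)   # jittered rest periods from the text


def trial_onsets(rests_s, stim_s=STIM_S, start_s=0.0):
    """Onset time (s) of each trial, given the rest that follows each stimulus."""
    onsets, t = [], start_s
    for rest in rests_s:
        if rest not in REST_S:
            raise ValueError(f"unexpected rest duration: {rest}")
        onsets.append(t)
        t += stim_s + rest
    return onsets


def transition_counts(sequence):
    """How often condition b immediately follows condition a."""
    return Counter(zip(sequence, sequence[1:]))


def is_first_order_balanced(sequence, conditions):
    """True if every ordered pair of conditions occurs equally often."""
    counts = transition_counts(sequence)
    return len({counts[(a, b)] for a in conditions for b in conditions}) == 1


# 4 runs x 24 trials spread over 6 conditions gives 16 trials per condition.
print(4 * 24 // 6)
print(trial_onsets([8.5, 9.5, 10.5]))
print(is_first_order_balanced(list("AABBA"), "AB"))
```

Counting ordered pairs directly makes it easy to verify a candidate sequence before use; the same check applies unchanged to six condition labels.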

Imaging was performed on a 1.5 T Siemens scanner. Each subject completed a series of functional runs followed by a high-resolution anatomical scan. For the anatomical scan, images (field of view (FOV) = 256 mm, matrix = 256 × 256, in-plane voxel size = 1 × 1 mm, slice thickness = 1 mm) were collected in the sagittal plane using an MPRAGE sequence. For the functional images, 10 axial slices were collected every 2 s using an echo planar imaging (EPI) pulse sequence (FOV = 256 mm, matrix = 64 × 64, in-plane voxel size = 4 × 4 mm, slice thickness = 6 mm, gap = 0 mm, echo time (TE) = 40 ms). For each subject, slice positions were chosen to cover at least the entire temporal lobe, from the inferior tip of the temporal pole to the inferior parietal lobe.

To correct for head motion, the functional images of each subject were aligned to the first volume in the series using a six-parameter, 3D rigid-body model in the AIR 3.0 program [Woods et al., 1998]. The functional volumes were then aligned to the corresponding anatomical image. A second stage of alignment, necessary for spatial normalization and group averaging, was performed by warping the volumes to the Montreal Neurological Institute (MNI) atlas using a fifth-order polynomial model in AIR 3.0.
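The six-parameter rigid-body model combines three translations and three rotations. The sketch below only applies such a transform to a coordinate; the x-then-y-then-z rotation order, the use of millimeters and radians, and the function names are assumptions for illustration and need not match AIR's internal parameterization. Estimating the parameters (by optimizing an image-similarity cost between each volume and the reference volume) is not shown.

```python
import math


def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_matrix(rx, ry, rz):
    """Rotation about x, then y, then z (radians): R = Rz @ Ry @ Rx."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    r_x = [[1, 0, 0], [0, cx, -sx], [0, sx, cx]]
    r_y = [[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]]
    r_z = [[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]]
    return matmul3(r_z, matmul3(r_y, r_x))


def rigid_transform(point, params):
    """Apply six parameters (tx, ty, tz in mm; rx, ry, rz in radians): p' = R p + t."""
    tx, ty, tz, rx, ry, rz = params
    r = rotation_matrix(rx, ry, rz)
    x, y, z = (sum(r[i][k] * point[k] for k in range(3)) for i in range(3))
    return [x + tx, y + ty, z + tz]


# A 90-degree rotation about z followed by a 2-mm shift along x.
print(rigid_transform([1.0, 0.0, 0.0], (2.0, 0.0, 0.0, 0.0, 0.0, math.pi / 2)))
```

Because the transform is rigid, distances between points are preserved; this is what distinguishes the motion-correction step from the subsequent polynomial warp to the MNI atlas.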

The subsequent analysis was performed in Matlab (MathWorks, Natick, MA). Each image was first smoothed with a Gaussian spatial filter (full width at half maximum (FWHM) = 6 mm). A mask was then applied to exclude voxels outside the brain from the analysis. The data were then temporally filtered with a high-pass filter (13th-order Butterworth filter; cutoff 0.01 Hz) to remove low-frequency noise. A regression analysis was applied to each voxel time course in each individual subject. The design matrix consisted of six regressors representing the time course of activation for each stimulus condition. Given the long duration of the stimuli (6 s), each stimulus was represented by a series of three impulses, and the resulting time course was convolved with the statistical parametric mapping (SPM) canonical hemodynamic response function. After the regression coefficients were estimated, contrasts were computed to test differences between conditions. A random-effects analysis of the individual subject results was then used to test effects across subjects [Friston et al., 1999]. Finally, a cluster-based thresholding procedure was applied: the statistical images were first thresholded at an uncorrected P-value of 0.01, contiguous clusters were identified, and these clusters were then thresholded based on spatial extent [Worsley et al., 2002]. The resulting clusters are significant at a corrected P-value of less than 0.05. Cluster centers are reported in Talairach coordinates based on the MNI brain.
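The regressor construction described above (three impulses per 6-s stimulus, convolved with a canonical hemodynamic response) can be sketched as follows. This is an approximation under stated assumptions: the HRF is the common double-gamma form (gamma shapes 6 and 16, undershoot ratio 1/6) often used to approximate SPM's canonical HRF; the impulses are placed at 0, 2, and 4 s after onset (one per 2-s volume), which the text does not state explicitly; the function names are hypothetical; and the code works directly on the 2-s volume grid with an unnormalized kernel, both simplifications relative to SPM's own implementation.

```python
import math

TR_S = 2.0  # one volume every 2 s, as in the acquisition described above


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t):
    """Double-gamma approximation of the SPM canonical HRF (unnormalized)."""
    return gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0


def condition_regressor(onsets_s, n_vols, tr=TR_S,
                        impulse_offsets_s=(0.0, 2.0, 4.0), hrf_len_s=32.0):
    """Three impulses per 6-s stimulus, convolved with the HRF, sampled per volume."""
    impulses = [0.0] * n_vols
    for onset in onsets_s:
        for offset in impulse_offsets_s:
            idx = round((onset + offset) / tr)
            if 0 <= idx < n_vols:
                impulses[idx] += 1.0
    kernel = [double_gamma_hrf(k * tr) for k in range(int(hrf_len_s / tr) + 1)]
    # Causal discrete convolution, truncated to the run length.
    return [sum(impulses[i - k] * kernel[k] for k in range(len(kernel)) if i >= k)
            for i in range(n_vols)]


def design_matrix(onsets_by_condition, n_vols):
    """Rows = volumes, columns = conditions (one regressor per condition)."""
    columns = [condition_regressor(onsets, n_vols)
               for onsets in onsets_by_condition.values()]
    return [list(row) for row in zip(*columns)]


# Hypothetical onsets (s) for two of the six conditions in a 40-volume run.
X = design_matrix({"sentence_sent_pros": [10.0], "sentence_list_pros": [42.0]}, 40)
print(len(X), len(X[0]))
```

Spreading three impulses across the stimulus yields a broader, later-peaking regressor than a single impulse at onset, which better matches the sustained response expected for a 6-s sentence.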

RESULTS

Compared with rest (i.e., the intertrial interval between stimuli), all six conditions activated bilateral temporal lobe regions, including anterior, middle, and posterior portions of the superior temporal gyrus (STG), superior temporal sulcus (STS), and middle temporal gyrus (MTG).

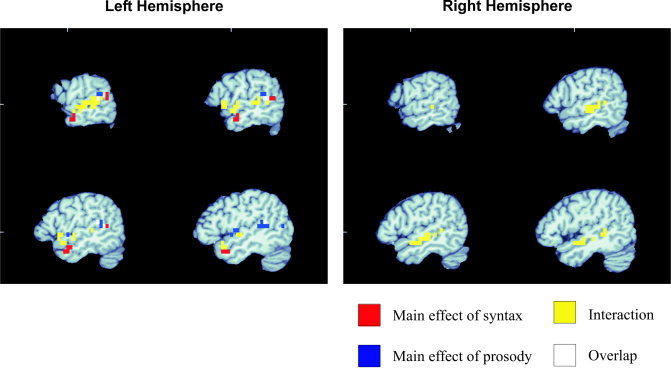

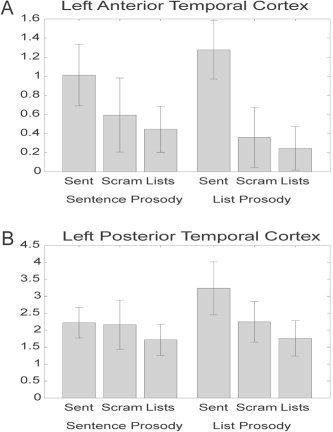

At each voxel, contrasts were computed to examine the main effects of structure type (normal sentences, scrambled sentences, and content word lists) and prosody type (sentence-like vs. list-like), as well as their interaction (see Table I). Voxels showing a main effect of structure were found in two regions of the left temporal lobe: a set of voxels in the posterior STS (center = [−54, −1, 24]) and a cluster in the anterior STS/MTG (center = [−63, −49, 7]) (Fig. 2, red). Activation in the anterior region was characterized by a significantly higher response in the two structured sentence conditions (normal sentences and sentences with list prosody) than in any of the other conditions, and the two structured sentence conditions did not differ from each other (Fig. 3A). In contrast, the main effect in the posterior region appears to be driven primarily by greater activation for sentences with list prosody than for all other stimuli (Fig. 3B). Two additional clusters showing higher activation for sentences were found bilaterally in the thalamus (left center = [−5, −19, −2]; right center = [6, −17, 0]).
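The main effects and interaction tested here can be expressed as contrast weights built from Kronecker products of per-factor contrasts. This is a generic sketch, not the authors' code: the condition ordering, the successive-difference coding of the three-level structure factor, and all names are assumptions (any coding spanning the same space yields the same F-test). The two simple-effect contrasts examined later in this section are included as single-row vectors.

```python
# Condition order assumed here: structure (major) x prosody (minor).
STRUCTURE = ("sentence", "scrambled", "content_list")
PROSODY = ("sentence_prosody", "list_prosody")
CONDITIONS = [(s, p) for s in STRUCTURE for p in PROSODY]


def kron(a, b):
    """Kronecker product of two matrices given as nested lists."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


STRUCTURE_DIFFS = [[1, -1, 0], [0, 1, -1]]  # 2 df for the 3-level factor
PROSODY_DIFF = [[1, -1]]                    # 1 df for the 2-level factor

main_structure = kron(STRUCTURE_DIFFS, [[1, 1]])   # averages over prosody
main_prosody = kron([[1, 1, 1]], PROSODY_DIFF)     # averages over structure
interaction = kron(STRUCTURE_DIFFS, PROSODY_DIFF)  # differences of differences


def simple_contrast(plus, minus):
    """One-row contrast: condition `plus` minus condition `minus`."""
    return [1 if c == plus else -1 if c == minus else 0 for c in CONDITIONS]


# Sentences vs. scrambled sentences, both with list prosody.
syntax_at_list = simple_contrast(("sentence", "list_prosody"),
                                 ("scrambled", "list_prosody"))
# Content word lists: sentence prosody vs. list prosody.
prosody_at_lists = simple_contrast(("content_list", "sentence_prosody"),
                                   ("content_list", "list_prosody"))

for name, rows in [("structure", main_structure), ("prosody", main_prosody),
                   ("interaction", interaction)]:
    print(name, rows)
print(syntax_at_list, prosody_at_lists)
```

Each row sums to zero, so every contrast is insensitive to the overall activation level shared by all six conditions.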

Table I.

Effects of interest

| Contrast | Cluster center (MNI) | Region | Cluster size (mm³) |
|---|---|---|---|
| Main effect: syntax | −54, −1, 24 | Left posterior temporal lobe | 1,728 |
| | −63, −49, 7 | Left anterior temporal lobe | 1,056 |
| | −5, −19, −2 | Left thalamus | 864 |
| | 6, −17, 0 | Right thalamus | 864 |
| Main effect: prosody | −44, −48, 9 | Left posterior temporal lobe | 6,240 |
| | −45, −5, 0 | Medial Heschl's gyrus | 1,248 |
| Interaction: prosody × syntax | 54, −23, −7 | Right STG/STS | 3,360 |
| | −59, −18, −1 | Left STG/STS | 5,760 |

Figure 2.

Overlay of the main effect of structure (red), the main effect of prosody (blue), and the interaction (yellow). Overlap between the main effect of prosody and the interaction is shown in white; no other maps overlapped. Slices are taken in the sagittal plane of the left hemisphere at positions (−62, −58, −54, −50) (MNI coordinates) and in the right hemisphere at positions (50, 54, 58, 62).

Figure 3.

Mean activation level above rest across conditions for the clusters identified in the main effect of structure in (A) left anterior temporal cortex (center = [−63, −49, 7]), and (B) left posterior temporal lobe (center = [−54, 1, −24]). Error bars represent standard error across subjects.

A main effect of prosody was seen in the left posterior temporal lobe (STG/STS) (center = [−44, −48, 9]) and in a more medial left-hemisphere region near Heschl's gyrus (center = [−45, −5, 0]) (Fig. 2, blue). These regions showed higher overall activation for list prosody than for sentence prosody. No right hemisphere region showed a main effect of prosody.

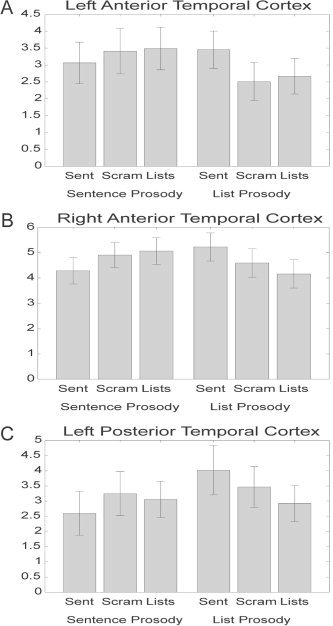

Voxels showing an interaction between prosody and syntax were found in both hemispheres, spanning many anterior and posterior superior temporal regions, including bilateral STS and STG. The left hemisphere STG activation extended further anteriorly than that in the right hemisphere (left center = [−59, −18, −1]; right center = [54, −23, −7]) (Fig. 2, yellow). The interaction in the left anterior temporal region reflected an “either/or” pattern of activation: the maximal response was produced by syntactically structured sentences, by the presence of sentence prosody, or by both. Activation was significantly lower in both unstructured conditions with list prosody (Fig. 4A). The interactions in the posterior left hemisphere and in the right hemisphere had a different character. They appear to be driven by greater activation for sentences with list prosody than with sentence prosody, combined with either slightly greater activation for the word lists with sentence prosody (right hemisphere; Fig. 4B) or similar activation for the word lists across the prosody manipulation (left posterior; Fig. 4C).

Figure 4.

Mean activation level above rest across conditions for the clusters identified in the interaction between structure and prosody in (A) left anterior temporal cortex (center = [−55, 4, −7]), (B) right anterior temporal cortex (center = [55, −16, −9]), and (C) left posterior temporal lobe (center = [−59, −38, 6]). Error bars represent standard error across subjects.

In the left anterior temporal lobe, the three activation maps (the two main effects and the interaction) did not overlap. In the left posterior temporal lobe, voxels showing the main effect of prosody overlapped slightly with voxels showing the interaction between prosody and syntax; these regions of overlap are colored white in Figure 2.

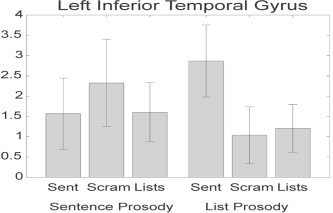

Because an interaction was observed between the prosodic and syntactic manipulations, we next ran contrasts on two simple effects of particular theoretical interest. These contrasts were computed voxel-wise over the entire EPI volume. The first contrast sought to identify regions more responsive to word strings with syntactic structure than to word strings without such structure. The most closely matched conditions in this respect were sentences with list prosody and scrambled sentences with list prosody. These two conditions used identical lexical items (in fact, the same audio files of individually recorded words), and the word strings were constructed by the same concatenation process; the conditions were therefore well controlled for lexical and sublexical factors (e.g., lexical-phonological and lexical-semantic factors), lower-level acoustic factors (e.g., loudness, spectrotemporal modulations), and overall perceived prosodic quality (flat at the supralexical level). They differed only in word order. The results of this contrast are presented in Figure 5 (blue and yellow). The areas highlighted by this contrast lie predominantly in the left hemisphere and comprise three regions: posterior superior temporal (bilateral), ATC (left only), and inferior frontal (left only). The temporal lobe regions were generally also identified in the global analysis, but the inferior frontal region was not. This finding might suggest that the frontal region is sensitive to sentence structure, but closer inspection of activation across all conditions suggests otherwise. First, activation to sentences with normal prosody was minimal; second, activation to scrambled sentences with sentence prosody was statistically indistinguishable from that to sentences with list prosody (Fig. 6).

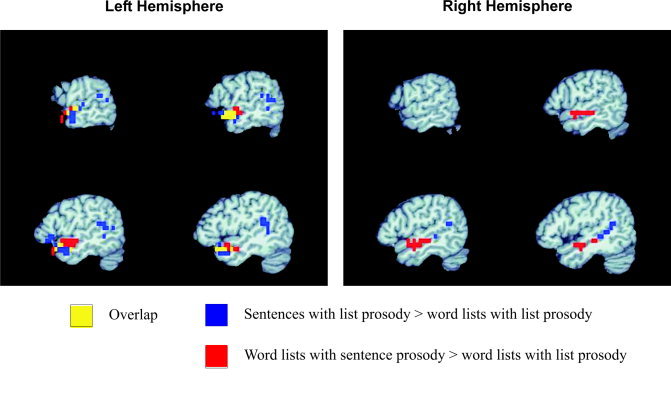

Figure 5.

Overlay of activation for sentences with list prosody over word lists with list prosody (blue), word lists with sentence prosody over word lists with list prosody (red), and the overlap between the two contrasts (yellow). Slices are taken in the sagittal plane of the left hemisphere at positions (−62, −58, −54, −50) (MNI coordinates) and in the right hemisphere at positions (50, 54, 58, 62).

Figure 6.

Mean activation level above rest across conditions for the inferior frontal lobe cluster (center = [−56, 18, −9]) identified in the contrast of sentences with list prosody over word lists with list prosody. Error bars represent standard error across subjects.

The second contrast sought to identify brain regions more responsive to supralexical (sentence-like) prosodic/intonation contours than to list-like contours. To address this question, we contrasted the sentence prosody and list prosody versions of the content word lists. We chose content word lists for this contrast because these stimuli lack any nonprosodic cues to phrasal boundaries. Activated regions (sentence prosody > list prosody) were confined to the middle and anterior portions of the STG/STS bilaterally, and the left hemisphere activation extended further anteriorly in the temporal lobe than the right hemisphere activation (Fig. 5, red and yellow).

DISCUSSION

The goal of this experiment was to explore neural activation patterns for syntactic and prosodic processing during auditory sentence comprehension. In particular, we sought to determine whether the processing of prosodic information in sentences might account for the relatively selective response to sentence stimuli in ATC. Our results demonstrate a functional partitioning of left ATC: a more dorsal region appears to be sensitive to both prosodic and syntactic manipulations, whereas a more ventral region, extending into the middle temporal gyrus, appears to be more sensitive to syntactically structured sentences than to nonstructured stimuli, independent of prosody. This sentence > nonsentence effect at the more ventral site held even in the most tightly matched contrast (sentences vs. scrambled sentences, both with list prosody), which held lexical and sublexical factors constant. We conclude that a region in left ATC responds preferentially to structured sentence stimuli and that this preference cannot be attributed to acoustic-phonetic, lexical-phonological, lexical-semantic, or prosodic factors. The preference could be driven by syntactic parsing or by the integration of syntactic and semantic information (combinatorial semantic computations). The more dorsal left ATC region showed an interaction between syntactic and prosodic responses and may be important for integrating these forms of information, although this remains speculative.

Several additional regions within our functional volume (temporal and occipital lobes, inferior frontal and parietal regions) were activated in various analyses. We discuss these in turn.

Right Anterior Temporal Cortex

Some studies have found right ATC to respond more strongly to sentence than to nonsentence stimuli [Humphries et al., 2001; Mazoyer et al., 1993], and this region has been found to respond more to stimuli with syntactic structure than to stimuli with only prosodic structure [Meyer et al., 2002, 2004]. In our study, right ATC showed an interaction between the syntactic and prosodic manipulations (Fig. 2), with greater activation for the anomalous structure-prosody pairings than for the more natural pairings (Fig. 4B). In the right hemisphere, ATC activation did not extend as far anteriorly toward the temporal pole as in the left, and the right hemisphere activation pattern differed from both the more ventral and the more dorsal left ATC regions (Figs. 3A, 4A). On the basis of these findings, it is not possible to deduce the functional role of right ATC in sentence processing. However, the data do show (1) that right ATC responds differently from left ATC, suggesting a functional distinction, and, relatedly, (2) that right ATC is not selective for syntactically structured word strings, unlike a portion of left ATC. Other studies have implicated additional parts of the right hemisphere in prosody [Meyer et al., 2004], so it remains possible that right ATC is involved in processing some aspect of prosody/intonation.

Left Posterior Superior Temporal

Previous studies have identified left posterior superior temporal regions as showing a relatively selective response to sentence over nonsentence stimuli [Vandenberghe et al., 2002]. Additionally, some studies have found left posterior temporal areas to respond more strongly to complex than to simple sentences [Just et al., 1996; Keller et al., 2001] and to syntactic violations [Friederici et al., 2003; Ni et al., 2000] (interestingly, these studies do not show differences in ATC). However, some authors have suggested that left posterior regions support lexical-level processes rather than syntactic computation [Hickok and Poeppel, 2004]. We found that left posterior regions either showed an interaction between syntactic and prosodic manipulations or responded most robustly to sentences without sentence prosody. There are several possible interpretations of this pattern. One is that activation in the posterior temporal region is related to the integration of lexical/semantic information into a sentence-level context. On this account, an interaction between prosody and syntax may have arisen because identifying and subsequently integrating words is more difficult in sentences with list prosody. Again, however, this is speculative. What we can safely conclude is that the differing response patterns of anterior and posterior regions indicate some functional distinction.

Syntactic Processing and Broca's Area

Several neuroimaging studies designed to examine sentence processing have not found ATC activation and have instead found activation in the left inferior frontal gyrus (IFG) [Caplan et al., 1998; Indefrey et al., 2001, 2004; Just et al., 1996; Ni et al., 2000]. These studies have used one of two designs. Some have varied sentence complexity, comparing sentences that are relatively hard to parse with easier ones [Caplan et al., 1998; Just et al., 1996]; in these studies, left IFG activity increases with sentence complexity. Others have compared sentences containing specific types of syntactic violations with normal sentences [Indefrey et al., 2001; Ni et al., 2000]; in these studies, left IFG activation is greater for the stimuli with syntactic violations. Our study did not yield differences between sentences and word lists in left IFG, which is inconsistent with the hypothesis that this region plays a fundamental role in basic syntactic parsing.

One possible explanation for these two patterns of activity is that the two experimental approaches (i.e., comparing sentences with word lists vs. manipulating syntactic complexity or well‐formedness) engage different components of sentence processing. Processing structured sentences presumably engages a host of basic syntactic and compositional semantic processes that are not engaged in processing word lists, so this contrast is likely to identify regions involved in a range of fundamental sentence parsing computations. Contrasting syntactically complex with syntactically simple sentences, or syntactically well‐formed with ill‐formed constructions, presumably isolates higher‐order aspects of sentence processing, because all of these stimuli would be expected to engage basic syntactic processes (all of them involve computing at least basic sentence structures). It remains an open question exactly which “higher‐order” aspects of sentence processing engage the IFG region. Some authors have suggested that it is a subcomponent of syntax, such as processing constructions with syntactic movement [Ben‐Shachar et al., 2004]. Others have argued for a more general role involving working memory, which may be required to support the increased processing load associated with such constructions [Just et al., 1996]. A further possibility, at least for the syntactic violation contrasts, is some form of structural reanalysis.

Semantic Processing in ATC

Several studies suggest that ATC may be involved in semantic processing. Part of this evidence comes from a study in which left ATC showed increased activation during a lexical priming task compared with a letter identification task [Mummery et al., 1999]. In that study, activation levels were higher when the primes were unrelated, which was interpreted as reflecting an increased semantic processing load [Mummery et al., 1999]. Another study compared sentences, scrambled sentences, sentences in which the content words were replaced by semantically unrelated words (yielding pragmatically implausible sentences), and scrambled versions of those semantically unrelated sentences [Vandenberghe et al., 2002]. In addition to a main effect of sentences over scrambled sentences in left ATC, consistent with our findings, this study found that sentences with semantically unrelated words (semantically odd sentences) elicited higher activation in left ATC than sentences with related words (normal sentences). The authors concluded that the left anterior temporal lobe plays a role in integrating the semantic information needed for sentence comprehension; because semantic integration is more difficult for semantically odd sentences, left ATC activation is higher under such conditions [Vandenberghe et al., 2002]. This interpretation is not inconsistent with our findings: as noted, our “syntactic” effect could be driven either by syntactic parsing or by the integration of syntactic structure with lexical semantics (compositional semantics) [for a review of this argument, see Stowe et al., 2005]. It should be pointed out, however, that only a subset of voxels in the Vandenberghe et al. [2002] study responded to the semantic manipulation, while other voxels in left ATC were relatively insensitive to the presence of semantically unrelated words. These semantically insensitive voxels still showed a sentence > word‐list effect, suggesting that left ATC may be functionally subdivided according to its response to syntactic structure and to sentence‐level semantic information. Thus, the Vandenberghe et al. [2002] study does not refute the hypothesis that a subregion of left ATC responds selectively to syntactic structure.

CONCLUSIONS

Our goal was to determine whether the relatively selective response of ATC to stimuli with sentence structure could be explained by differences in the prosodic/intonational organization of the stimulus sets tested to date. Our results indicate a functional partitioning of left ATC into a more dorsal region that is responsive to both syntactic and prosodic/intonational manipulations, and a more ventral region that is sensitive only to the syntactic manipulation. We conclude that prosodic/intonational factors cannot account for the relatively selective response of left ATC to sentence structure, particularly at the more ventral site. This more ventral portion of left ATC may be important for syntactic parsing, compositional semantic processes, or both. We speculate that the more dorsal left ATC location may participate in integrating prosodic/intonational cues with syntactic computations.

Footnotes

Some of these studies have found left‐dominant effects, while others have found bilateral activations, so it is not entirely clear how lateralized these findings are. For this reason, we remain agnostic on the issue of hemispheric asymmetries in ATC until the Discussion section, where the issue is taken up directly.

The evidence is equivocal as to whether semantically anomalous sentences lead to greater activation than normal sentences: Vandenberghe et al. [2002] report such an effect, but it was not observed in a study by Friederici et al. [2003]. This issue is taken up in the Discussion.

Our definition of prosody here is confined mainly to differences in supralexical intonation patterns related to linguistic processing. Prosody is, of course, also useful in sublexical linguistic processing [see Gandour et al., 2003] and in identifying nonlinguistic information such as emotion and tone.

REFERENCES

- Ben‐Shachar M, Palti D, Grodzinsky Y (2004): Neural correlates of syntactic movement: converging evidence from two fMRI experiments. Neuroimage 21: 1320–1336.

- Birn RM, Cox RW, Bandettini PA (2002): Detection versus estimation in event‐related fMRI: choosing the optimal stimulus timing. Neuroimage 15: 252–264.

- Caplan D, Alpert N, Waters G (1998): Effects of syntactic structure and propositional number on patterns of regional cerebral blood flow. J Cogn Neurosci 10: 541–552.

- Dronkers NF, Wilkins DP, Van Valin RD Jr, Redfern BB, Jaeger JJ (1994): A reconsideration of the brain areas involved in the disruption of morphosyntactic comprehension. Brain Lang 47: 461–462.

- Friederici AD, Kotz SA (2003): The brain basis of syntactic processes: functional imaging and lesion studies. Neuroimage 20: S8–S17.

- Friederici AD, Meyer M, von Cramon DY (2000): Auditory language comprehension: An event‐related fMRI study on the processing of syntactic and lexical information. Brain Lang 74: 289–300.

- Friederici AD, Rüschemeyer S‐A, Hahne A, Fiebach CJ (2003): The role of left inferior frontal and superior temporal cortex in sentence comprehension: localizing syntactic and semantic processes. Cereb Cortex 13: 170–177.

- Friston KJ, Holmes AP, Worsley KJ (1999): How many subjects constitute a study? Neuroimage 10: 1–5.

- Gandour J, Dzemidzic M, Wong D, Lowe M, Tong Y, Hsieh L, Satthamnuwong N, Lurito J (2003): Temporal integration of speech prosody is shaped by language experience: an fMRI study. Brain Lang 84: 318–336.

- Grossman M, Payer F, Onishi K, D'Esposito M, Morrison D, Sadek A, Alavi A (1998): Language comprehension and regional cerebral defects in frontotemporal degeneration and Alzheimer's disease. Neurology 50: 157–163.

- Hickok G, Poeppel D (2004): Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition 92: 67–99.

- Humphries C, Willard K, Buchsbaum B, Hickok G (2001): Role of anterior temporal cortex in auditory sentence comprehension: an fMRI study. Neuroreport 12: 1749–1752.

- Indefrey P, Hagoort P, Herzog H, Seitz RJ, Brown CM (2001): Syntactic processing in left prefrontal cortex is independent of lexical meaning. Neuroimage 14: 546–555.

- Indefrey P, Hellwig F, Herzog H, Seitz RJ, Hagoort P (2004): Neural responses to the production and comprehension of syntax in identical utterances. Brain Lang 89: 312–319.

- Just MA, Carpenter PA, Keller TA, Eddy WF, Thulborn KR (1996): Brain activation modulated by sentence comprehension. Science 274: 114–116.

- Keller TA, Carpenter PA, Just MA (2001): The neural bases of sentence comprehension: an fMRI examination of syntactic and lexical processing. Cereb Cortex 11: 223–227.

- Mazoyer B, Tzourio N, Frak V, Syrota A (1993): The cortical representation of speech. J Cogn Neurosci 5: 467–479.

- Meyer M, Friederici AD, von Cramon DY (2000): Neurocognition of auditory sentence comprehension: event related fMRI reveals sensitivity to syntactic violations and task demands. Cogn Brain Res 9: 19–33.

- Meyer M, Alter K, Friederici AD, Lohmann G, von Cramon DY (2002): FMRI reveals brain regions mediating slow prosodic modulations in spoken sentences. Hum Brain Mapp 17: 73–88.

- Meyer M, Steinhauer K, Alter K, Friederici AD, von Cramon DY (2004): Brain activity varies with modulation of dynamic pitch variance in sentence melody. Brain Lang 89: 277–289.

- Mummery CJ, Shallice T, Price CJ (1999): Dual‐process model in semantic priming: a functional imaging perspective. Neuroimage 9: 516–525.

- Newman AJ, Pancheva R, Ozawa K, Neville HJ, Ullman MT (2001): An event‐related fMRI study of syntactic and semantic violations. J Psycholinguist Res 30: 339–364.

- Ni W, Constable RT, Mencl WE, Pugh KR, Fulbright RK, Shaywitz SE, Shaywitz BA, Gore JC, Shankweiler D (2000): An event‐related neuroimaging study distinguishing form and content in sentence processing. J Cogn Neurosci 12: 120–133.

- Schlosser MJ, Aoyagi N, Fulbright RK, Gore JC, McCarthy G (1998): Functional MRI studies of auditory comprehension. Hum Brain Mapp 6: 1–13.

- Scott SK, Blank CC, Rosen S, Wise RJS (2000): Identification of a pathway for intelligible speech in the left temporal lobe. Brain 123: 2400–2406.

- Steinhauer K, Friederici AD (2001): Prosodic boundaries, common rules, and brain responses: the closure positive shift in ERPs as a universal marker for prosodic phrasing in listeners and readers. J Psycholinguist Res 30: 221–224.

- Stowe LA, Paans AMJ, Wijers AA, Zwarts F, Mulder G, Vaalburg W (1999): Sentence comprehension and word repetition: a positron emission tomography investigation. Psychophysiology 36: 786–801.

- Stowe LA, Haverkort M, Zwarts F (2005): Rethinking the neurological basis of language. Lingua 115: 997–1042.

- Vandenberghe R, Nobre AC, Price CJ (2002): The response of left temporal cortex to sentences. J Cogn Neurosci 14: 550–560.

- Woods RP, Grafton ST, Holmes CJ, Cherry SR, Mazziotta JC (1998): Automated image registration. I. General methods and intrasubject, intramodality validation. J Comput Assist Tomogr 22: 141–154.

- Worsley KJ, Liao CH, Aston J, Petre V, Duncan GH, Morales F, Evans AC (2002): A general statistical analysis for fMRI data. Neuroimage 15: 1–15.