Abstract

Event‐related functional magnetic resonance imaging was used to test the involvement of the inferior prefrontal cortex in verbal working memory. Pairs of French nouns were presented to ten native French speakers who had to make semantic or grammatical gender decisions. Verbal working memory involvement was manipulated by making the categorization of the second noun optional. Decisions could be made after processing the first noun only (RELEASE condition) or after processing the two nouns (HOLD condition). Reaction times suggested faster processing for gender than for semantic category in RELEASE. Despite the absence of anatomical difference across tasks and conditions in the wide activated network, the haemodynamic response peak latencies of the inferior prefrontal cortex were significantly delayed in HOLD versus RELEASE while no such peak delay was observed in the superior temporal gyrus. Interestingly, this pattern did not interact with language tasks. This study shows that cognitive manipulation can influence haemodynamic time‐course and suggests that the main cognitive process determining inferior prefrontal activation is verbal working memory rather than specific linguistic processes such as grammatical or semantic analysis. Hum. Brain Mapping 19:37–46, 2003. © 2003 Wiley‐Liss, Inc.

Keywords: verbal working memory, inferior prefrontal cortex, gender processing, semantic processing, event‐related fMRI, evoked haemodynamic response

INTRODUCTION

The left inferior prefrontal cortex (IPC) has been hypothesized to take part in numerous aspects of language processing. After it was baptized the “centre for motor images of words” by Paul Broca [1861], clinical neuropsychologists soon extended the involvement of the left IPC to syntactic processing because lesions in this location often caused agrammatism [e.g., Taubner et al., 1999]. Overall, neuropsychological data from patient studies did not provide a clear structure‐function interpretational framework for the organization of language in the brain, however, and some fundamental debates continue to rage [e.g., Grodzinsky, 2000].

Neuroimaging studies have even further diversified the hypothetical roles of the IPC by showing its activation when different aspects of linguistic representations are accessed. First, experiments involving phoneme detection [Binder, 1997; Démonet et al., 1992, 1994; Thierry et al., 1999], non‐word repetition [Thierry et al., 1999], and more generally phonological rehearsal tasks favoring the involvement of the phonological loop [Paulesu et al., 1993] have demonstrated selective activations in the dorsal aspects of the left IPC. Second, studies exploring neural correlates of sentence comprehension have suggested a selective involvement of the left IPC [Caplan et al., 2000; Dapretto and Bookheimer, 1999], including Broca's area (Brodmann's Area, BA 44/45) and the inner part of the frontal operculum and the anterior insula [Moro et al., 2001] in syntactic processing [Caplan et al., 1998, 2000; Caplan and Waters, 1999; Just et al., 1996]. Third, the IPC has been proposed to take part in the encoding [Demb et al., 1995] and retrieval [Dapretto and Bookheimer, 1999; Gabrieli et al., 1998] of semantic knowledge, although this has been extensively debated [see Démonet and Thierry, 2001; Thompson‐Schill et al., 1997].

In other words, the left IPC might be involved at all the different stages of language processing that have been postulated by cognitive models, i.e., phonology, syntax, and semantics [Levelt et al., 1999; Marslen‐Wilson, 1989; McClelland and Elman, 1986; Norris, 1994]. Therefore, if one region in the brain contradicts the hypotheses of Localisationism, it certainly is Broca's area.

However, an interesting hypothesis is that the involvement of the IPC is not selective to phonology, syntax, and semantics but rather relates to its central role in verbal working memory (VWM), which is the subsystem for working memory devoted to verbal information [Baddeley, 1998]. Indeed, tasks such as phonological rehearsal, syntactic structure screening, semantic selection, inner speech, etc., all involve VWM to various extents and this might explain why the IPC is very often found activated [Caplan et al., 2000; Caplan and Waters, 1999; Gabrieli et al., 1998; Paulesu et al., 1993]. Moreover, several experiments have shown selective activation of the IPC in relation to the manipulation of VWM load [e.g., Braver et al., 1997; Fiez et al., 1996].

Here, we used event‐related functional magnetic resonance imaging (ER‐fMRI) to further investigate the involvement of the IPC in VWM. On the one hand, we varied VWM demands by manipulating the number of verbal items (one or two) that needed to be categorized in order to complete the task. Participants were presented with noun‐pairs. They could either make a decision without processing the second noun (RELEASE condition) or needed to hold the second noun in VWM in order to respond (HOLD condition). On the other hand, we used two different language tasks, a gender one and a semantic one, to test whether VWM demands would interact with the linguistic process in focus. This made our design 2 × 2 factorial.

Given the extended involvement of VWM in the HOLD condition, we hypothesized that reaction times would be longer in HOLD than RELEASE, and that the IPC would be activated for a longer period of time as compared to other structures involved in perceptual processes (such as the superior temporal gyrus, STG). The latter effect should result in delayed haemodynamic peak latencies.

Language task differences could relate to the fact that the grammatical gender of nouns is an over‐learned and mandatory feature of French whereas natural vs. artifact categorization requires access to a wider and multi‐dimensional domain of knowledge. Two previous studies have reported a trend for faster reaction time in gender decision tasks as compared to semantic categorization [Miceli et al., 2002; Schmitt et al., 2001]. We, therefore, hypothesized that grammatical gender would be retrieved before lexical semantic information. Given the inconsistency of results concerning the involvement of the IPC in syntactic and semantic processing and given the fact that we used noun‐pairs as opposed to sentences, we did not make any hypotheses concerning the effects of language tasks on activation patterns.

SUBJECTS AND METHODS

Subjects

The participants were 24 French volunteers (12 men, 12 women, mean age 25 ± 3 years) in the behavioral study and 10 (5 men, 5 women, mean age 26.1 ± 1.8 years) in the fMRI experiment. They gave informed consent to participate in the experiment, which had been approved by a local ethics committee.

Stimuli

In the behavioral study, the stimuli were 280 monosyllabic singular French nouns associated in pairs; a subgroup of 120 was used in the ER‐fMRI experiment. All nouns were selected from the Brulex database [Content et al., 1990]. Half of the words corresponded to artifacts and the other half referred to natural objects. Each semantic group featured an equal number of feminine and masculine nouns. In order to avoid any interference between natural (semantic) gender and syntactic gender, animal names displaying natural gender were excluded (gender marking can then be considered as syntactic information free of semantic judgment [Hagoort and Brown, 1999]).

Lexical frequency was between 150 and 500. Eighty‐three percent of the words had only one standard meaning, 11% had one semantic variant, and 5% had two [Content et al., 1990; Robert, 1986]. Eighty‐seven percent of the nouns had a unique grammatical gender, 13% had a “dominant” gender (the homophonic noun with opposite gender was far less frequent).

Words were produced by a female speaker, digitized at 22 kHz, normalized, amplified, and digitally resampled to a duration of 500 msec. Sound files were compressed or expanded by no more than 5%. They were then pseudo‐randomly assembled in pairs lasting 1,040 msec, the two nouns in a pair being separated by 40 msec. Semantic and phonological links were avoided within a pair and all category combinations were in equal proportions (i.e., 25% feminine–feminine, 25% feminine–masculine, 25% masculine–masculine, and 25% masculine–feminine; and similarly for semantic categories).

Tasks

In each block, participants were asked to monitor the pairs in which both nouns pertained to a specific category (feminine, masculine, natural objects, or artifacts) by pressing keys in the behavioral task and lifting their fingers in the fMRI experiment, e.g., left for “yes” and right for “no.” They were explicitly instructed to respond as soon as they could (e.g., “no” immediately after identifying the first noun as incongruent with the target category).

In each trial, two different situations could arise: (1) the first noun was incongruent with the target category, in which case the participants could respond “no” immediately and there was no need to hold the second noun in VWM (RELEASE condition); or (2) the first noun was congruent, in which case they needed to hold the second noun in VWM until they could reach a decision about its congruency (HOLD condition). The categorization of the second noun in the latter case was enough to make a decision.
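The trial logic described above can be sketched in a few lines. This is only an illustration: the `Noun` class, the function names, and the example nouns are hypothetical and are not taken from the stimulus list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Noun:
    word: str
    gender: str    # "feminine" or "masculine"
    semantic: str  # "natural" or "artifact"


def is_congruent(noun, target):
    """True if the noun belongs to the target category of the block."""
    return target in (noun.gender, noun.semantic)


def classify_trial(first, second, target):
    """Return (condition, expected_response) for one noun pair."""
    if not is_congruent(first, target):
        # First noun incongruent: "no" can be given at once (RELEASE).
        return "RELEASE", "no"
    # First noun congruent: the second noun decides the response (HOLD).
    return "HOLD", "yes" if is_congruent(second, target) else "no"


# Hypothetical example nouns, for illustration only.
lune = Noun("lune", "feminine", "natural")
clou = Noun("clou", "masculine", "artifact")
fleur = Noun("fleur", "feminine", "natural")

print(classify_trial(clou, lune, "feminine"))   # RELEASE trial
print(classify_trial(lune, fleur, "feminine"))  # HOLD trial, both congruent
```

In this sketch the condition depends only on the first noun, which mirrors the design: the second noun matters for the response in HOLD trials alone.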

In a previous event‐related potential experiment using the same design, we showed that participants do abort the processing after categorizing the first noun in the RELEASE condition, as demonstrated by the absence of an N400 component for the second noun in this condition [Thierry et al., 1998].

Procedure

In the behavioral version, subjects were seated in a quiet room and presented with 8 blocks of 70 pairs of nouns. Stimulus Onset Asynchrony (SOA) was set at 2,500 msec. Before each block the experimenter gave the instruction verbally, indicating the target category and corresponding response sides.

In the fMRI version, participants were positioned in the scanner and were given the instruction prior to each block through headphones. Six runs of 20 pairs of nouns were delivered at a rate of 1 every 12 sec [Bandettini and Cox, 2000].

In both experiments, type of task (gender or semantic) and response sides were fully counter‐balanced across blocks and participants and the same pairs of words were equally used in all conditions. The rate of stimulus presentation and the overall number of stimuli were the only differences between the behavioral and the fMRI experiment.

EHR Recording and Analysis

Haemodynamic responses were acquired using a 1.5 T Siemens Magnetom Vision scanner in EPI mode using a single‐shot T2*‐weighted sequence. Acquisitions provided 6 contiguous transverse slices (FOV 220 mm, thickness 7 mm, TE = 66 msec, TR = 2,000 msec, acquisition matrix 96 × 128 interpolated to 128 × 128) in approximately 780 msec and were repeated 6 times after each stimulation. Stimulus delivery occurred every 12 sec in the 1,220‐msec silent gap between two acquisitions. Acquisition voxel size was 2.3 × 1.7 × 7.0 mm. The bottom of the lowest slice was set 7 mm below the AC–PC plane. Raw images were realigned, normalized, and smoothed (FWHM of 6 mm) using SPM99 [Wellcome Institute of Neurology; online at http://www.fil.ion.ucl.ac.uk; Friston et al., 1995, 1996]. Slice timing correction was deliberately not implemented before image realignment because subjects' movements were prominent in the [x, y] plane and signals from adjacent cortical regions of interest would have been confounded more than signals from adjacent slices.
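The acquisition figures quoted above are mutually consistent, as the following arithmetic sketch suggests. It assumes a square 220 mm field of view in both in-plane directions, which the text does not state explicitly; the constant and function names are illustrative.

```python
FOV_MM = 220.0             # assumed identical in both in-plane directions
MATRIX = (96, 128)         # acquired matrix, before interpolation
SLICE_THICKNESS_MM = 7.0
N_SLICES = 6
TR_MS = 2000
VOLUME_ACQ_MS = 780        # "approximately 780 msec" per volume
VOLUMES_PER_TRIAL = 6


def acquisition_summary():
    """Derive voxel size and timing values from the stated parameters."""
    voxel = (FOV_MM / MATRIX[0], FOV_MM / MATRIX[1], SLICE_THICKNESS_MM)
    return {
        "voxel_mm": tuple(round(v, 1) for v in voxel),
        "silent_gap_ms": TR_MS - VOLUME_ACQ_MS,
        "trial_duration_s": VOLUMES_PER_TRIAL * TR_MS / 1000,
        "slice_duration_ms": VOLUME_ACQ_MS / N_SLICES,
    }


print(acquisition_summary())
```

Under these assumptions the sketch recovers the 2.3 × 1.7 × 7.0 mm voxel, the 1,220‐msec silent gap, and the 12‐sec trial length; the per‐slice duration (130 msec) is slightly below the 150‐msec interpolation step used for the EHR analysis.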

Haemodynamic signals were temporally high‐pass filtered at 1.5 × 10⁻² Hz and modeled using a half‐sinusoidal waveform best fitting the stimulus rate (half period = 6 sec). Activations were detected in the framework of the general linear model using SPM96 at a threshold of P < 0.001 (extension correction of P < 0.05; Table I; see Fig. 2) and replicated at a threshold of P < 0.001 corrected for multiple comparisons in SPM99.
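As a rough illustration of the model described above, the sketch below samples a half sine with a 6‐sec half period at the six scan times of a trial and compares the filter cutoff with the stimulation frequency. The exact waveform used in SPM96 (onset delay, values outside the positive lobe) is not given in the text, so setting the regressor to zero after 6 sec is an assumption, and all names are illustrative.

```python
import math

HIGH_PASS_HZ = 1.5e-2   # filter cutoff stated in the text
SOA_S = 12.0            # one stimulus every 12 sec
TR_S = 2.0
HALF_PERIOD_S = 6.0


def half_sine(t, half_period=HALF_PERIOD_S):
    """Positive lobe of a sine; assumed to be zero outside [0, half_period]."""
    if 0.0 <= t <= half_period:
        return math.sin(math.pi * t / half_period)
    return 0.0


def trial_regressor(n_scans=6, tr=TR_S):
    """Sample the waveform at the scan times of one trial."""
    return [half_sine(i * tr) for i in range(n_scans)]


cutoff_period_s = 1.0 / HIGH_PASS_HZ   # about 66.7 sec
stimulus_freq_hz = 1.0 / SOA_S         # about 0.083 Hz

print([round(v, 3) for v in trial_regressor()])
print(round(cutoff_period_s, 1), round(stimulus_freq_hz, 3))
```

The stimulation frequency lies well above the cutoff, so a filter of this kind would remove slow drifts (periods longer than about 67 sec) without attenuating the event‐related signal.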

Table I.

SPM Results*

| Region | BA | Release: cluster P | Release: cluster n | Release: cluster Z | Release: voxel P | Release: voxel Z | Release: x | Release: y | Release: z | Hold: cluster P | Hold: cluster n | Hold: cluster Z | Hold: voxel P | Hold: voxel Z | Hold: x | Hold: y | Hold: z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Gender | |||||||||||||||||

| Left Hemisphere | |||||||||||||||||

| Superior temporal gyrus/transverse temporal gyrus | BA 29/41 | *** | 371 | 8.44 | *** | −8.44 | −42 | −32 | 14 | *** | 346 | 8.80 | *** | −8.80 | −40 | −32 | 14 |

| Superior temporal gyrus/inferior parietal lobule | BA 13/40 | *** | −7.81 | −48 | −44 | 21 | *** | −7.60 | −50 | −44 | 21 | ||||||

| Superior temporal gyrus | BA 22 | *** | −7.85 | −50 | −12 | 7 | *** | −7.29 | −50 | −12 | 7 | ||||||

| Thalamus | *** | 106 | 7.80 | *** | −7.80 | −10 | −22 | 7 | *** | 137 | 8.08 | *** | −8.08 | −8 | −22 | 7 | |

| Thalamus | *** | −6.71 | −12 | −32 | 0 | *** | −7.20 | −12 | −30 | 0 | |||||||

| Thalamus | *** | −5.43 | −12 | −8 | 14 | *** | −6.20 | −12 | −8 | 14 | |||||||

| Cuneus/lingual gyrus | BA 18 | *** | 326 | 6.50 | *** | −6.50 | 0 | −78 | 7 | * | 19 | 4.38 | 0.063 | −4.38 | −10 | −82 | 21 |

| Insula/prefrontal cortex | BA 13/44 | *** | −6.95 | −33 | 22 | 7 | *** | −6.95 | −39 | 14 | 7 | ||||||

| Right hemisphere | |||||||||||||||||

| Transverse temporal gyrus | BA 41 | *** | 424 | 8.38 | *** | −8.38 | 38 | −36 | 14 | *** | 444 | 8.44 | *** | −8.44 | 38 | −36 | 14 |

| Superior temporal gyrus/transverse temporal gyrus | BA 29/41 | *** | −7.62 | 48 | −26 | 14 | *** | −8.13 | 48 | −28 | 14 | ||||||

| Superior temporal gyrus/insula | BA 22/13 | *** | −7.64 | 48 | 8 | 0 | |||||||||||

| Superior temporal gyrus/insula | BA 13/40 | *** | −8.04 | 48 | −40 | 21 | |||||||||||

| Thalamus | *** | 92 | 7.15 | *** | −7.15 | 8 | −20 | 7 | *** | 463 | 7.86 | *** | −7.86 | 8 | −20 | 7 | |

| Thalamus | *** | −6.60 | 12 | −30 | 0 | *** | −6.68 | 10 | −30 | 0 | |||||||

| Cuneus/lingual gyrus | BA 17/18 | *** | 326 | 6.50 | *** | −6.48 | 8 | −68 | 7 | *** | −6.68 | 4 | −80 | 0 | |||

| Insula/prefrontal cortex | BA 13/44 | * | −4.50 | 41 | 15 | 7 | * | −4.50 | 40 | 15 | 7 | ||||||

| Semantics | |||||||||||||||||

| Left hemisphere | |||||||||||||||||

| Transverse temporal gyrus | BA 41 | *** | 189 | 8.61 | *** | −7.69 | −48 | −22 | 14 | ||||||||

| Superior temporal gyrus/transverse temporal gyrus | BA 29/41 | *** | −8.94 | −42 | −32 | 14 | *** | −8.61 | −40 | −34 | 14 | ||||||

| Superior temporal gyrus/inferior parietal lobule | BA 13/40 | −8.09 | −48 | −42 | 21 | *** | −7.16 | −48 | −44 | 21 | |||||||

| Superior temporal gyrus | BA 22 | *** | 17 | 7.33 | *** | −7.33 | −48 | −12 | 7 | *** | 24 | 7.08 | *** | −7.08 | −50 | −12 | 7 |

| Superior temporal gyrus | BA 22 | *** | −6.52 | −50 | 0 | 0 | |||||||||||

| Insula | BA 13 | ** | 26 | 4.88 | ** | −4.88 | −40 | 12 | 7 | * | 23 | 4.85 | ** | −4.85 | −38 | 14 | 7 |

| Insula | BA 13 | * | −4.57 | −30 | 18 | 7 | |||||||||||

| Thalamus | *** | 111 | 7.88 | *** | −7.88 | −10 | −22 | 7 | *** | 106 | 8.26 | *** | −8.26 | −10 | −22 | 7 | |

| Thalamus | *** | −6.05 | −12 | −10 | 14 | *** | −6.72 | −8 | −30 | 0 | |||||||

| Thalamus | *** | −5.45 | −10 | −32 | 0 | *** | −5.90 | −12 | −8 | 14 | |||||||

| Lingual gyrus | BA 19 | *** | 17 | 7.33 | *** | −5.64 | −16 | −72 | 0 | *** | −7.19 | −6 | −62 | 0 | |||

| Cuneus/lingual gyrus | BA 18 | *** | 26 | 6.32 | *** | −6.32 | −6 | −82 | 21 | ||||||||

| Insula/prefrontal cortex | BA 13/44 | *** | −6.95 | −42 | 11 | 7 | *** | −6.95 | −39 | 16 | 7 | ||||||

| Right hemisphere | |||||||||||||||||

| Transverse temporal gyrus | BA 41 | *** | 237 | 8.94 | *** | −7.86 | −48 | −22 | 14 | ||||||||

| Superior temporal gyrus/transverse temporal gyrus | BA 29/41 | *** | 347 | 8.70 | *** | −8.70 | 40 | −36 | 14 | *** | 374 | 8.65 | *** | −8.65 | 42 | −36 | 14 |

| Superior temporal gyrus/insula | BA 22/13 | *** | −7.91 | 50 | −12 | 7 | *** | −7.67 | 48 | 8 | 0 | ||||||

| Superior temporal gyrus/insula | BA 13/40 | *** | −7.65 | 46 | −20 | 7 | |||||||||||

| Insula | BA 13 | *** | −8.18 | 44 | −38 | 21 | |||||||||||

| Thalamus | *** | 83 | 7.24 | *** | −7.24 | 8 | −22 | 7 | *** | 113 | 8.03 | *** | −8.03 | 10 | −20 | 7 | |

| Thalamus | *** | −6.07 | 12 | −28 | 0 | * | −4.81 | 12 | −10 | 14 | |||||||

| Lingual gyrus | BA 19 | *** | 211 | 7.18 | *** | −7.18 | 18 | −62 | 0 | *** | 310 | 7.71 | *** | −7.71 | 16 | −62 | 0 |

| Lingual gyrus | BA 18/19 | *** | −5.94 | 6 | −80 | 0 | |||||||||||

| Cuneus/lingual gyrus | BA 17/18 | *** | −7.58 | 2 | −76 | 7 | |||||||||||

| Insula/prefrontal cortex | BA 13/44 | * | −4.50 | 39 | 18 | 7 | * | −4.50 | 43 | 10 | 7 | ||||||

Listed structures are highest probability hits in a radius of 5 mm around the activated voxel according to the Talairach and Tournoux Atlas [1988].

Significance: *** P < 0.001; ** P < 0.01; * P < 0.05.

(Cluster‐level statistics are not displayed for prefrontal activation because SPM assimilated corresponding voxels as part of the STG cluster.)

Cluster = cluster‐level statistics; n = voxel count; voxel = voxel‐level statistics (coordinates are in mm in Talairach space).

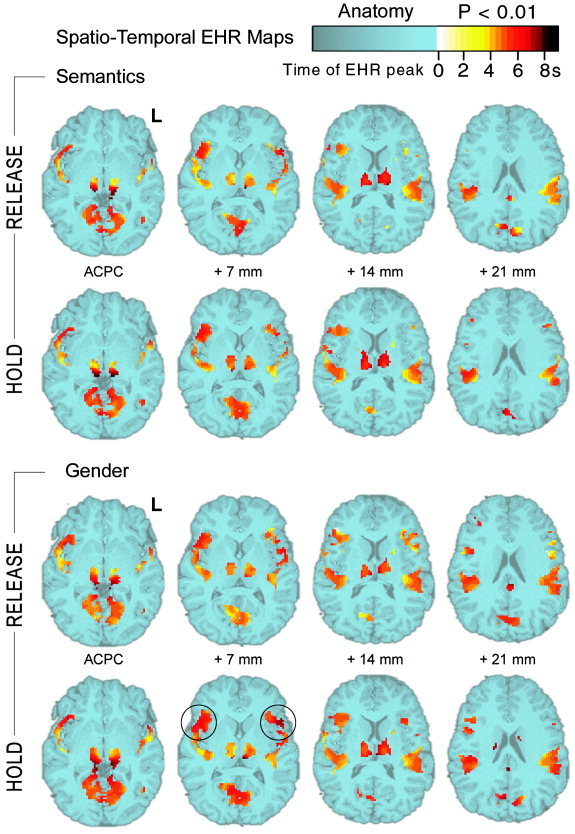

Figure 2.

Spatio‐temporal maps derived from SPM results and EHR peak analysis. Activations (P < 0.01 uncorrected) are plotted for each task and condition on the anatomy of one subject (in blue). Colors other than blue indicate the time of the EHR peak for each activated voxel. Early‐peaking EHRs (peak between 3 and 4 sec after stimulus onset) were recorded in primary auditory cortices ([−34, −33, 14] and [29, −33, 14] P < 0.0001) and dorso‐median aspects of the thalamus ([−7, −17, 7] and [4, −21, 7] P < 0.0001). EHRs peaking after 6.5 sec (i.e., the most delayed ones) were found in the pulvinar ([−10, −33, 0] and [9, −34, 0] P < 0.003), Broca's area ([−42, 12, 7] P < 0.005) and homologous right regions ([42, 12, 7] P < 0.0001). Significant differences were found in the temporal analysis of haemodynamic responses. Circles highlight the significant delay for prefrontal responses in Gender HOLD versus Gender RELEASE. Note that Wernicke's area showed no sensitivity to task or condition, as illustrated by invariant temporal clusters on the 14‐mm slices.

Individual haemodynamic responses were averaged in each subject and condition to obtain Evoked Haemodynamic Responses (EHRs [Thierry et al., 1999; Toni et al., 1999]). EHRs were calculated by averaging adjusted signals retrieved from the XA matrix generated by SPM96 using custom Matlab (MathWorks, Natick, MA) procedures. SPM96 was preferred to SPM99 for this analysis because the adjusted signals can be directly retrieved together with contrast‐specific t statistics. After slice timing correction, EHRs were spline‐interpolated to refine temporal resolution to 150 msec (i.e., not less than slice acquisition duration). EHR peak latencies were then detected for each activated voxel by searching for the maximum amplitude within the corresponding event‐related haemodynamic response and plotted onto high‐resolution anatomical slices of one of the subjects (see Fig. 2).
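The averaging, interpolation, and peak‐detection steps can be sketched as follows. The original analysis used custom Matlab procedures that are not described in detail; here a Catmull‐Rom cubic spline stands in for whatever spline was actually used, slice timing correction is ignored, and the sample values are invented for illustration.

```python
def average_trials(trials):
    """Average trial-wise responses sample by sample to obtain one EHR."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]


def catmull_rom(samples, tr, step):
    """Interpolate uniformly spaced samples on a finer time grid."""
    times, values = [], []
    last = len(samples) - 1
    n_steps = int(round(last * tr / step))
    for k in range(n_steps + 1):
        t = min(k * step, last * tr)
        i = min(int(t // tr), last - 1)   # index of the current segment
        u = (t - i * tr) / tr             # position within the segment, 0..1
        p0 = samples[max(i - 1, 0)]       # end points are duplicated
        p1, p2 = samples[i], samples[i + 1]
        p3 = samples[min(i + 2, last)]
        v = 0.5 * (2 * p1 + (p2 - p0) * u
                   + (2 * p0 - 5 * p1 + 4 * p2 - p3) * u ** 2
                   + (3 * p1 - p0 - 3 * p2 + p3) * u ** 3)
        times.append(t)
        values.append(v)
    return times, values


def peak_latency(samples, tr=2.0, step=0.15):
    """Time (sec) of the maximum of the interpolated response."""
    times, values = catmull_rom(samples, tr, step)
    return times[max(range(len(values)), key=values.__getitem__)]


# Invented single-voxel trials, six scans each (TR = 2 sec).
trials = [[0.0, 1.2, 3.1, 2.4, 1.1, 0.1],
          [0.0, 0.8, 2.9, 2.6, 0.9, -0.1]]
ehr = average_trials(trials)
print(ehr, peak_latency(ehr))
```

Interpolating before taking the maximum lets the estimated peak fall between scan times, which is what makes latency differences smaller than the 2‐sec TR detectable at all.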

Four volumes of interest, encompassing the 4 regions activated in every subject (i.e., left and right STG and left and right IPC), were defined a priori in Talairach space [Talairach and Tournoux, 1988]. The left STG was delimited by [x, y, z] coordinates in mm as follows: [−65 < x < −20], [−50 < y < −25], [−7 < z < 21]; and encompassed Brodmann's Areas (BAs) 41, 42, 22, and 21. The left inferior prefrontal region was delimited by [x, y, z] coordinates in Talairach space as follows: [−65 < x < −25], [0 < y < 20], [−7 < z < 28]; and encompassed BAs 44, 47, 13. Right homologous volumes were symmetrical in terms of x coordinates. EHRs of activated voxels were averaged in each of the four volumes for each individual and their peak latencies determined. A four‐factor ANOVA was then performed on regional peak latencies to characterize temporal differences relating to task (two levels), condition (two levels), region (two levels), and hemisphere (two levels, see Fig. 3).
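The volumes of interest defined above amount to axis‐aligned boxes in Talairach space. A minimal sketch of the membership test, using the bounds given in the text and mirroring x for the right hemisphere (the dictionary layout and function names are illustrative):

```python
# Left-hemisphere bounds in mm, as (x range, y range, z range).
LEFT_VOIS = {
    "STG": ((-65, -20), (-50, -25), (-7, 21)),
    "IPC": ((-65, -25), (0, 20), (-7, 28)),
}


def mirror(box):
    """Reflect a box across the midline (x -> -x)."""
    (x_lo, x_hi), y, z = box
    return ((-x_hi, -x_lo), y, z)


VOIS = {}
for name, box in LEFT_VOIS.items():
    VOIS[("left", name)] = box
    VOIS[("right", name)] = mirror(box)


def locate(x, y, z):
    """Return (hemisphere, region) for a voxel, or None if outside all VOIs."""
    for key, ((x0, x1), (y0, y1), (z0, z1)) in VOIS.items():
        if x0 < x < x1 and y0 < y < y1 and z0 < z < z1:
            return key
    return None


print(locate(-46, 14, 7))    # voxel reported in the pars opercularis
print(locate(39, 8, 7))      # contralateral voxel
print(locate(-10, -22, 7))   # thalamic peak, outside all four volumes
```

Because the y ranges of the STG and IPC boxes do not overlap, a voxel can belong to at most one volume.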

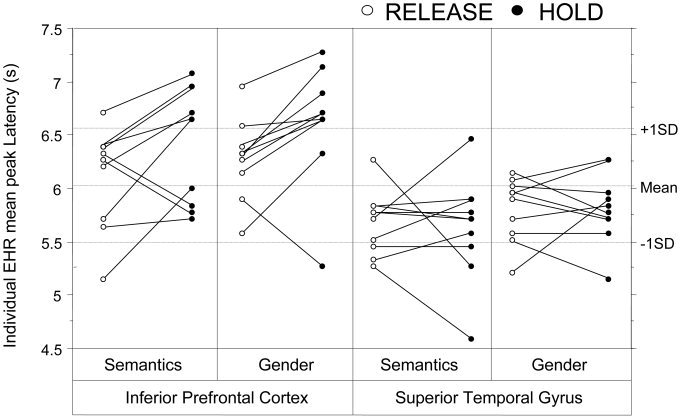

Figure 3.

Individual EHR peak latency plot for the IPC and the STG in both tasks and conditions. Individual EHRs were averaged in search volumes defined by coordinate intervals within Talairach space (see Subjects and Methods). Voxels included in the EHR analysis were activated at a threshold of P < 0.01 uncorrected. The line drawn between HOLD and RELEASE average EHR peak latencies shows the evolution from one condition to the other in each subject.

RESULTS

Behavioral results

Hit rates were significantly higher in the semantic task (mean = 93.1% ± 3.7) than the gender task (mean = 91% ± 3.4) [F(1,23) = 12.75, P = 0.0016] and significantly higher in HOLD condition (mean = 93.2% ± 3.1) than RELEASE condition (mean = 90.9% ± 2.7) [F(1,23) = 59.15, P < 0.0001]. There was a significant task * condition interaction [F(1,23) = 19.64, P = 0.0002] showing that Semantic HOLD was the condition in which participants made the fewest errors.

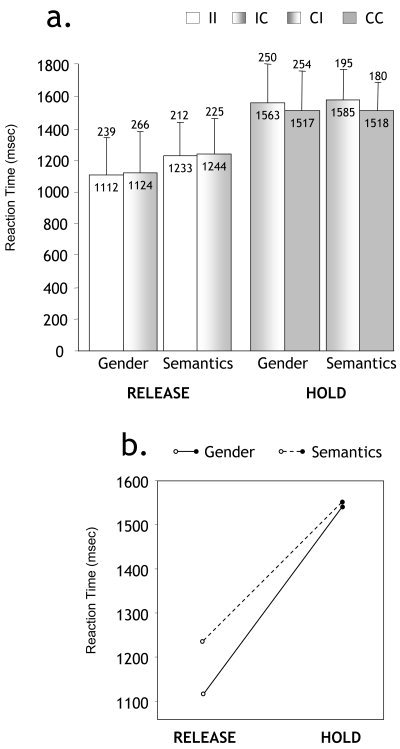

Differences in RTs across tasks (errors being dismissed) were just at significance threshold [F(1,23) = 4.24, P = 0.051]. A major effect was found for the HOLD versus RELEASE comparison [F(1,23) = 412.3, P < 0.0001; Fig. 1a] as well as a reliable task * condition interaction [F(1,23) = 30.6, P < 0.0001; Fig. 1b], indicating that Semantic RELEASE RTs were significantly longer [F(1,23) = 12.74, P = 0.0013] than Gender RELEASE RTs in the absence of a significant difference [F(1,23) = 0.71, P = 0.739] between Semantic HOLD and Gender HOLD.

Figure 1.

a: Mean Reaction Times (RTs) in all conditions. Sub‐types corresponding to different stimulus combinations in pairs are distinguished (I refers to Incongruent and C to Congruent regarding the target category). Numeric values of mean and standard deviations are displayed within and above each bar, respectively. b: Plot of the Task * Condition interaction [F(1,23) = 30.6, P < 0.0001].

Finally, in RELEASE, RTs to Incongruent‐Incongruent noun pairs were not significantly different from RTs to Incongruent‐Congruent pairs [F(1,23) = 1.25, P = 0.2746], but in HOLD, there was a significant difference between Congruent‐Incongruent and Congruent‐Congruent RTs [F(1,23) = 20.25, P = 0.0002].

Evoked haemodynamic responses

Activations were strikingly similar in all conditions and revealed a wide bilateral network including the STG, IPC, thalami, cunei, and lingual gyri (Table I, Fig. 2). Across‐task comparisons yielded no significant differences.

The ANOVA on EHR peak latencies showed (1) a significant language task main effect (F[1,9] = 8.67, P = 0.0164), EHRs being delayed in Gender compared to Semantics; (2) a near threshold condition main effect (F[1,9] = 4.803, P = 0.056), EHRs peaking later in HOLD than RELEASE; (3) a highly significant region main effect (F[1,9] = 25.24, P = 0.0007), EHRs peaking later in the insula/IPC than in the STG; and (4) a significant condition * region interaction (F[1,9] = 30.38, P = 0.0004) showing that inferior prefrontal EHRs were clearly delayed in HOLD vs. RELEASE, whereas the STG was insensitive to condition change (Table II, Fig. 3). There was no main effect of hemisphere (F[1,9] = 0.21, P = 0.657) and none of the other interactions reached significance. Most delayed peaks were found in voxel [−46, 14, 7] (Talairach coordinates) located in the pars opercularis of Broca's area and contralateral voxel [39, 8, 7].

Table II.

Results of the Four‐Way ANOVA Performed on EHR Peak Latencies

| Effect or Interaction | F | P |

|---|---|---|

| Task | 8.670 | 0.0164 |

| Condition | 4.803 | 0.0561 |

| Region | 25.237 | 0.0007 |

| Hemisphere | 0.211 | 0.6570 |

| Task * condition | 0.036 | 0.8535 |

| Task * region | 0.037 | 0.8526 |

| Task * hemisphere | 0.036 | 0.8532 |

| Condition * region | 30.381 | 0.0004 |

| Condition * hemisphere | 0.540 | 0.4810 |

| Region * hemisphere | 3.323 | 0.1017 |

| Task * condition * region | 0.010 | 0.9238 |

| Task * condition * hemisphere | 0.057 | 0.8163 |

| Task * region * hemisphere | 1.859 | 0.2059 |

| Condition * region * hemisphere | 2.002 | 0.1908 |

| Task * condition * region * hemisphere | 0.433 | 0.5271 |
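For a fully within‐subject design in which every factor has two levels, each F(1, n − 1) in Table II is the square of a one‐sample t statistic computed on a per‐subject contrast. The sketch below illustrates this for the condition * region interaction, assuming each subject's peak latencies have already been averaged over task and hemisphere. The function name is illustrative and the numbers in the example call are toy values, not the study's data.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_f(ipc_hold, ipc_release, stg_hold, stg_release):
    """F and degrees of freedom for a 2 x 2 within-subject interaction.

    Each argument holds one peak latency per subject, in the same order.
    """
    # Per-subject difference of differences: HOLD delay in IPC minus in STG.
    contrast = [(a - b) - (c - d)
                for a, b, c, d in zip(ipc_hold, ipc_release,
                                      stg_hold, stg_release)]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))
    return t * t, (1, n - 1)


# Toy values for three subjects, chosen only to make the arithmetic easy.
f_value, dof = interaction_f([1.0, 2.0, 3.0], [0.0, 0.0, 0.0],
                             [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
print(f_value, dof)
```

With ten subjects the degrees of freedom are (1, 9), as in Table II. Converting F to a P value requires the F distribution, which this sketch leaves out.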

DISCUSSION

Four main results were obtained: (1) RTs were faster in RELEASE than HOLD and in Gender RELEASE than Semantics RELEASE; (2) activation patterns were remarkably similar across tasks and conditions; (3) a significant haemodynamic peak delay between HOLD and RELEASE was identified in the inferior prefrontal regions but not in the superior temporal regions; and (4) this effect did not interact with language task.

As expected, RTs were shorter for RELEASE than HOLD and within the RELEASE condition, for Gender than Semantics. Participants tended to respond faster in the gender task but made more errors, which reflects a mild speed/accuracy trade‐off effect. Gender decision may be facilitated by the fact that gender is binary (a word is either masculine or feminine in French), hence faster reaction times in RELEASE. This is a metalinguistic task, however [Miceli et al., 2002], and as such it is likely to elicit more errors. Semantic categorization, on the other hand, is more “natural” but requires dealing with a fuzzier dichotomy. Vin (wine), for instance, designates an object that is manufactured but derived from natural components such as grapes. Semantic decisions are thus likely to be made after longer consideration than gender ones.

Activated regions evidenced by the fMRI experiment corresponded to a network of regions previously described in auditory word processing [Belin et al., 2000; Binder et al., 2000; Démonet et al., 1992, 1994; Perani et al., 1999; Price, 2000; Price et al., 1996]. The relative symmetry of the network was congruent with recent studies [Friederici et al., 2000; Moro et al., 2001; Ni et al., 2000] and conceptualizations [Hickok and Poeppel, 2000]. Activations in visual association areas (cuneus, lingual gyrus) have been reported before in language experiments with auditory input [Démonet et al., 1994; Giraud and Price, 2001; Giraud et al., 2000; Zatorre et al., 1996] and in visual language tasks involving semantic and syntactic processing [Moro et al., 2001; Perani et al., 1999]. They may reflect visual mental imagery as a complementary strategy to carry out demanding tasks, especially in the context of the noisy environment created by the fMRI procedure. However, no anatomical difference was found across tasks or conditions. We assume that cognitive operations performed by participants were too similar across tasks to allow different neural networks to be involved. In particular, access to the meaning of words was most probably involved in both tasks.

The critical result of this study was a significant delay in the peak latency of EHRs induced by the manipulation of the demand on VWM. The issue of haemodynamic response variability has been addressed extensively in the last decade [Aguirre et al., 1998; Bandettini, 1999; Bandettini and Cox, 2000; Buckner et al., 1996, 1998; D'Esposito et al., 1999; Kim et al., 1997; Lee et al., 1995; Miezin et al., 2000; Schacter et al., 1997; Thierry et al., 1999]. Haemodynamic responses have been shown to be too variable across regions in terms of timing, amplitude, and shape to allow direct comparison between different parts of the brain [Bandettini, 1999; Buckner et al., 1996, 1998; Lee et al., 1995; Schacter et al., 1997]. Such regional differences might be due to variable influences of microscopic and macroscopic blood flow, to differential vascular sampling, or to real differences in neural activity [Buckner et al., 1998; Miezin et al., 2000; Schacter et al., 1997]. Although the haemodynamic response of one region can be shifted in time by several seconds across subjects [Buckner et al., 1998; Kim et al., 1997; Miezin et al., 2000], it has been proposed that its grand‐average latency and amplitude are reliable for groups of subjects as small as n = 6, i.e., the central tendency of the EHR can be reproduced in different groups of subjects and, a fortiori, in the same group of subjects with a precision of tenths of seconds [Buckner et al., 1998].

Here, we found that the mean EHR peak was significantly delayed by condition change in one region (the IPC) but not another (the STG). According to Miezin et al. [2000], the haemodynamic response in a given region is nearly identical from one data set to another (time‐to‐peak correlation r² = 0.95 across sets); the significant difference found for the IPC can therefore only relate to the difference introduced by condition or task variations. The STG, on the other hand, showed no sensitivity to condition change. Thus, the observed pattern cannot be the result of an overall inertia effect of blood flow in the brain [Thierry et al., 1999] but is rather a region‐specific effect relating to condition‐specific requirements.

Two different sources of modulation might explain the EHR delay in the IPC: (1) gender processing as opposed to semantic processing (involving left frontal regions [Caplan and Waters, 1999] and right prefrontal regions [Friederici et al., 2000; Ni et al., 2000]); and (2) VWM demands [Caplan and Waters, 1999; Paulesu et al., 1993]. Although Gender EHRs peaked later than Semantic EHRs overall (i.e., in both conditions and all regions), the task factor did not interact with the condition * region interaction. In Figure 3, an inferior prefrontal haemodynamic delay can be observed in HOLD vs. RELEASE for 8 subjects out of 10 in Semantics and 9 subjects out of 10 in Gender while no such global trend can be observed in the STG. In the absence of an interaction involving VWM condition, brain region, and language task, it appears that the major factor influencing the time course of the IPC haemodynamic response is VWM involvement rather than the linguistic task in question.

In sum, our results are congruent with other studies by showing that VWM demands differentially involve the IPC [Caplan et al., 2000]. Moreover, this region shows this trend of sensitivity for both gender categorization [Miceli et al., 2002] and semantic processing [Dapretto and Bookheimer, 1999], possibly because semantic selection, which involves VWM, is required [Thompson‐Schill et al., 1997]. When semantic and grammatical tasks are highly comparable, there is no evidence for a separate spatial encapsulation, possibly because of the spatial resolution of 1.5 T fMRI. Finally, this study demonstrates that varying cognitive demands can selectively influence the time‐course of haemodynamic responses in circumscribed regions of the brain.

Acknowledgements

We thank Kader Boulanouar, Pierre Celsis, Bernard Doyon, Nick Ellis, Angela Friederici, Ingrid Pedersen, Neil Roberts, Serge Ruff, Margot Taylor, and Chris Whitaker for precious advice, assistance, and comments.

REFERENCES

- Aguirre, GK , Zarahn E, D'Esposito M (1998): The variability of human, BOLD hemodynamic responses. Neuroimage 8: 360–369. [DOI] [PubMed] [Google Scholar]

- Baddeley A (1998): Recent developments in working memory. Curr Opin Neurobiol 8: 234–238. [DOI] [PubMed] [Google Scholar]

- Bandettini PA (1999): The temporal resolution of MRI In Moonen CTW, Bandettini P, editors. Functional MRI. Mauer, Germany: Springer‐Verlag; p 205–220. [Google Scholar]

- Bandettini PA, Cox RW (2000): Event‐related fMRI contrast when using constant interstimulus interval: theory and experiment. Magn Reson Med 43: 540–548. [DOI] [PubMed] [Google Scholar]

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B (2000): Voice‐selective areas in human auditory cortex. Nature 403: 309–312. [DOI] [PubMed] [Google Scholar]

- Binder JR (1997): Neuroanatomy of language processing studied with functional MRI. Clin Neurosci 4: 87–94. [PubMed] [Google Scholar]

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET (2000): Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex 10: 512–528. [DOI] [PubMed] [Google Scholar]

- Braver TS, Cohen JD, Nystrom LE, Jonides J, Smith EE, Noll DC (1997): A parametric study of prefrontal cortex involvement in human working memory. Neuroimage 5: 49–62.

- Broca P (1861): Perte de la parole, ramollissement chronique et destruction partielle du lobe antérieur gauche du cerveau. Bull Soc Anthropol Paris 2: 235–238.

- Buckner RL, Bandettini PA, O'Craven KM, Savoy RL, Petersen SE, Raichle ME, Rosen BR (1996): Detection of cortical activation during averaged single trials of a cognitive task using functional magnetic resonance imaging. Proc Natl Acad Sci USA 93: 14878–14883.

- Buckner RL, Koutstaal W, Schacter DL, Dale AM, Rotte M, Rosen BR (1998): Functional‐anatomic study of episodic retrieval. II. Selective averaging of event‐related fMRI trials to test the retrieval success hypothesis. Neuroimage 7: 163–175.

- Caplan D, Waters GS (1999): Verbal working memory and sentence comprehension. Behav Brain Sci 22: 77–94.

- Caplan D, Alpert N, Waters G (1998): Effects of syntactic structure and propositional number on patterns of regional cerebral blood flow. J Cogn Neurosci 10: 541–552.

- Caplan D, Alpert N, Waters G, Olivieri A (2000): Activation of Broca's area by syntactic processing under conditions of concurrent articulation. Hum Brain Mapp 9: 65–71.

- Content A, Mousty P, Radeau M (1990): BRULEX: une base de données lexicales informatisée pour le français écrit et parlé. L'Année Psychol 90: 551.

- Dapretto M, Bookheimer SY (1999): Form and content: dissociating syntax and semantics in sentence comprehension. Neuron 24: 427–432.

- Demb JB, Desmond JE, Wagner AD, Vaidya CJ, Glover GH, Gabrieli JD (1995): Semantic encoding and retrieval in the left inferior prefrontal cortex: a functional MRI study of task difficulty and process specificity. J Neurosci 15: 5870–5878.

- Démonet JF, Thierry G (2001): Language and brain: what is up? What is coming up? J Clin Exp Neuropsychol 23: 49–73.

- Démonet JF, Chollet F, Ramsay S, Cardebat D, Nespoulous JL, Wise R, Rascol A, Frackowiak R (1992): The anatomy of phonological and semantic processing in normal subjects. Brain 115: 1753–1768.

- Démonet JF, Price C, Wise R, Frackowiak RS (1994): Differential activation of right and left posterior sylvian regions by semantic and phonological tasks: a positron‐emission tomography study in normal human subjects. Neurosci Lett 182: 25–28.

- D'Esposito M, Zarahn E, Aguirre GK, Rypma B (1999): The effect of normal aging on the coupling of neural activity to the bold hemodynamic response. Neuroimage 10: 6–14.

- Fiez JA, Raife EA, Balota DA, Schwarz JP, Raichle ME, Petersen SE (1996): A positron emission tomography study of the short‐term maintenance of verbal information. J Neurosci 16: 808–822.

- Friederici AD, Meyer M, von Cramon DY (2000): Auditory language comprehension: an event‐related fMRI study on the processing of syntactic and lexical information. Brain Lang 74: 289–300.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ (1995): Statistical parametric maps in functional imaging: A general linear approach. Hum Brain Mapp 2: 189–210.

- Friston KJ, Ashburner J, Poline JB, Frith CD, Heather JD, Frackowiak RSJ (1996): Spatial realignment and normalization of images. Hum Brain Mapp 2: 165–189.

- Gabrieli JD, Poldrack RA, Desmond JE (1998): The role of left prefrontal cortex in language and memory. Proc Natl Acad Sci USA 95: 906–913.

- Giraud AL, Price CJ (2001): The constraints functional neuroimaging places on classical models of auditory word processing. J Cogn Neurosci 13: 754–765.

- Giraud AL, Truy E, Frackowiak RS, Gregoire MC, Pujol JF, Collet L (2000): Differential recruitment of the speech processing system in healthy subjects and rehabilitated cochlear implant patients. Brain 123: 1391–1402.

- Grodzinsky Y (2000): The neurology of syntax: language use without Broca's area. Behav Brain Sci 23: 1–21.

- Hagoort P, Brown CM (1999): Gender electrified: ERP evidence on the syntactic nature of gender processing. J Psycholing Res 28: 715–728.

- Hickok G, Poeppel D (2000): Towards a functional neuroanatomy of speech perception. Trends Cogn Sci 4: 131–138.

- Just MA, Carpenter PA, Keller TA, Eddy WF, Thulborn KR (1996): Brain activation modulated by sentence comprehension. Science 274: 114–116.

- Kim SG, Richter W, Ugurbil K (1997): Limitations of temporal resolution in functional MRI. Magn Reson Med 37: 631–636.

- Lee AT, Glover GH, Meyer CH (1995): Discrimination of large venous vessels in time‐course spiral blood‐oxygen‐level‐dependent magnetic‐resonance functional neuroimaging. Magn Reson Med 33: 745–754.

- Levelt WJM, Roelofs A, Meyer AS (1999): A theory of lexical access in speech production. Behav Brain Sci 22: 1.

- Marslen‐Wilson W (1989): Access and integration: projecting sound onto meaning. In: Marslen‐Wilson W, editor. Lexical representation and process. Cambridge, MA: MIT Press; p 3–24.

- McClelland JL, Elman JL (1986): The TRACE model of speech perception. Cognit Psychol 18: 1–86.

- Miceli G, Turriziani P, Caltagirone C, Capasso R, Tomaiuolo F, Caramazza A (2002): The neural correlates of grammatical gender: an fMRI investigation. J Cogn Neurosci 14: 618–628.

- Miezin FM, Maccotta L, Ollinger JM, Petersen SE, Buckner RL (2000): Characterizing the hemodynamic response: effects of presentation rate, sampling procedure, and the possibility of ordering brain activity based on relative timing. Neuroimage 11: 735–759.

- Moro A, Tettamanti M, Perani D, Donati C, Cappa SF, Fazio F (2001): Syntax and the brain: disentangling grammar by selective anomalies. Neuroimage 13: 110–118.

- Ni W, Constable RT, Mencl WE, Pugh KR, Fulbright RK, Shaywitz SE, Shaywitz BA, Gore JC, Shankweiler D (2000): An event‐related neuroimaging study distinguishing form and content in sentence processing. J Cogn Neurosci 12: 120–133.

- Norris D (1994): Shortlist: a connexionist model of continuous speech recognition. Cognition 52: 189–234.

- Paulesu E, Frith CD, Frackowiak RS (1993): The neural correlates of the verbal component of working memory. Nature 362: 342–345.

- Perani D, Cappa SF, Schnur T, Tettamanti M, Collina S, Rosa MM, Fazio F (1999): The neural correlates of verb and noun processing. A PET study. Brain 122: 2337–2344.

- Price CJ (2000): The anatomy of language: contributions from functional neuroimaging. J Anat 197: 335–359.

- Price CJ, Wise RJ, Warburton EA, Moore CJ, Howard D, Patterson K, Frackowiak RS, Friston KJ (1996): Hearing and saying. The functional neuro‐anatomy of auditory word processing. Brain 119: 919–931.

- Robert P (1986): Micro‐Robert, dictionnaire du français primordial. Paris: Dictionnaires le Robert.

- Schacter DL, Buckner RL, Koutstaal W, Dale AM, Rosen BR (1997): Late onset of anterior prefrontal activity during true and false recognition: an event‐related fMRI study. Neuroimage 6: 259–269.

- Schmitt BM, Schiltz K, Zaake W, Kutas M, Munte TF (2001): An electrophysiological analysis of the time course of conceptual and syntactic encoding during tacit picture naming. J Cogn Neurosci 13: 510–522.

- Talairach J, Tournoux P (1988): Co‐planar stereotaxic atlas of the human brain. New York: Thieme Medical Publishers.

- Taubner RW, Raymer AM, Heilman KM (1999): Frontal‐opercular aphasia. Brain Lang 70: 240–261.

- Thierry G, Boulanouar K, Kherif F, Ranjeva JP, Demonet JF (1999): Temporal sorting of neural components underlying phonological processing. Neuroreport 10: 2599–2603.

- Thierry G, Doyon B, Demonet JF (1998): ERP mapping in phonological and lexical semantic tasks: A study complementing previous PET results. Neuroimage 8: 391–408.

- Thompson‐Schill SL, D'Esposito M, Aguirre GK, Farah MJ (1997): Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proc Natl Acad Sci USA 94: 14792–14797.

- Toni I, Schluter ND, Josephs O, Friston K, Passingham RE (1999): Signal‐, set‐ and movement‐related activity in the human brain: an event‐related fMRI study. Cereb Cortex 9: 35–49.

- Zatorre RJ, Meyer E, Gjedde A, Evans AC (1996): PET studies of phonetic processing of speech: review, replication, and reanalysis. Cereb Cortex 6: 21–30.