Abstract

The processing of changing nonverbal social signals such as facial expressions is poorly understood, and it is unknown if different pathways are activated during effortful (explicit), compared to implicit, processing of facial expressions. Thus we used fMRI to determine which brain areas subserve processing of high‐valence expressions and if distinct brain areas are activated when facial expressions are processed explicitly or implicitly. Nine healthy volunteers were scanned (1.5T GE Signa with ANMR, TE/TR 40/3,000 ms) during two similar experiments in which blocks of mixed happy and angry facial expressions (“on” condition) were alternated with blocks of neutral faces (control “off” condition). Experiment 1 examined explicit processing of expressions by requiring subjects to attend to, and judge, facial expression. Experiment 2 examined implicit processing of expressions by requiring subjects to attend to, and judge, facial gender, which was counterbalanced in both experimental conditions. Processing of facial expressions significantly increased regional blood oxygenation level‐dependent (BOLD) activity in fusiform and middle temporal gyri, hippocampus, amygdalohippocampal junction, and pulvinar nucleus. Explicit processing evoked significantly more activity in temporal lobe cortex than implicit processing, whereas implicit processing evoked significantly greater activity in amygdala region. Mixed high‐valence facial expressions are processed within temporal lobe visual cortex, thalamus, and amygdalohippocampal complex. Also, neural substrates for explicit and implicit processing of facial expressions are dissociable: explicit processing activates temporal lobe cortex, whereas implicit processing activates amygdala region. Our findings confirm a neuroanatomical dissociation between conscious and unconscious processing of emotional information. Hum. Brain Mapping 9:93–105, 2000. © 2000 Wiley‐Liss, Inc.

Keywords: amygdala, conscious perception, emotion, extrastriate cortex, fusiform, hippocampus, middle temporal gyrus

INTRODUCTION

Nonverbal signals, e.g., facial expressions, dynamically shape behaviour during social communication. Moreover, adaptive social behaviour depends on perceiving and flexibly processing changes between social signals that convey different social meanings [e.g., Rolls et al., 1994; Hornak et al., 1996]. In unusual situations, effortful explicit interpretation of the meaning of social cues may be required to guide social responses. However, in familiar situations, social signals may be processed implicitly and behaviour responsively adapted without full cognitive awareness. Thus explicit and implicit processing of facial expressions may serve different functions and may have distinct neural substrates.

Dissociation between explicit and implicit social processing is apparent in patients who have intact semantic knowledge of social behaviour, but who exhibit pervasive social impairments, e.g., patients with ventromedial prefrontal lesions [Saver and Damasio, 1991], and perhaps adults with Asperger syndrome [Bowler, 1992]. However, the neural mechanisms underlying social behaviour are poorly understood, and it is unknown if explicit and implicit processing of social cues can be dissociated neuroanatomically.

In animal studies, several interconnected brain regions are associated with processing social and motivational cues. In the monkey, neuronal populations within inferotemporal and superior temporal sulcus (STS) cortex respond selectively to socially important aspects of faces, including expressions [e.g., Perrett et al., 1985; Hasselmo et al., 1989]. Amygdala and ventral prefrontal cortex, which receive inputs from inferotemporal and STS cortex, are implicated in the control of social and motivational behaviour: Lesions of these areas result in abnormal social functioning [Weiskrantz, 1956; Butter and McDonald, 1970] and impair reward‐related learning [Jones and Mishkin, 1972; Iversen and Mishkin, 1970].

Human studies have reported that the amygdala is activated during the processing of unitary expressions of fear or happiness [Breiter et al., 1996; Morris et al., 1996] and during fear‐conditioning [Buchel et al., 1998; Morris et al., 1998b]. Moreover, experiments involving visual masking of facial expressions and fear‐conditioning of visually masked faces have suggested that the amygdala may respond to “unseen” emotive stimuli [Morris et al., 1998b, 1999; Whalen et al., 1998]. Activity in the insula has been associated with perceiving expressions of disgust [Phillips et al., 1997]. However, adaptive social behaviour requires flexible processing of multiple (not unitary) changing facial expressions: The middle temporal gyrus (MTG) has been reported as activated when viewing eye or mouth movements [Puce et al., 1998], and anterior cingulate, medial temporal lobe, and ventral prefrontal cortex are activated when matching faces by expressions [George et al., 1993]. Additionally, awareness of one's own subjective emotional experience when viewing emotive stimuli specifically activates the anterior cingulate region [Lane et al., 1997a], and animal and human studies [LaBerge and Buchsbaum, 1990; Robinson and Petersen, 1992; Morris et al., 1999] have associated activity within the thalamic pulvinar nucleus with selective attention to salient visual stimuli.

Thus discrete brain areas are associated with the processing of specific facial expressions, perceiving changes in facial features, and matching different facial expressions. However, the neuroanatomical substrate for processing changing facial cues of different social valences (as occurs naturalistically in social situations) has not been directly investigated. Moreover, despite evidence that attention to emotive stimuli or subjective emotional state may modulate activity in specific brain areas, it is unknown if different neural pathways underlie explicit and implicit processing of socially important facial expressions. Therefore, we used functional magnetic resonance imaging (fMRI) to investigate the processing of facial expressions conveying distinct social valences and to determine whether explicit (Experiment 1) and implicit (Experiment 2) processing of expressions activate different neural pathways. We did not attempt to induce mood states.

MATERIALS AND METHODS

We studied nine right‐handed, healthy young males (mean age ± SD: 27 years ± 7; mean full‐scale IQ ± SD: 116 ± 10). Subjects were screened to exclude neuropsychiatric, neurological, or extracerebral disorders that might affect brain function and gave informed consent for a protocol passed by the local Research Ethics Committee. Subjects were familiarized with the stimuli and task procedure prior to scanning.

Experimental tasks

We carried out two experiments in which 10 blocks of eight facial stimuli from a standard series [Ekman and Friesen, 1976] were presented to subjects pseudo‐randomly in alternating (“on/off”) 30‐second phases. Four male and four female identities, each displaying happy, angry, and neutral facial expressions, were used. Each face was presented for 3 seconds with an interstimulus interval of 0.75 seconds. Subjects were required to determine either the facial expression or the gender of each face, as indicated by a legend underneath each stimulus (HAPPY/ANGRY – NEUTRAL or MALE ‐ FEMALE). Subjects responded by pressing one of two buttons with the right thumb accordingly. Both experiments were carried out in the same session. The order of the experiments and the order of the stimuli were counterbalanced across subjects.

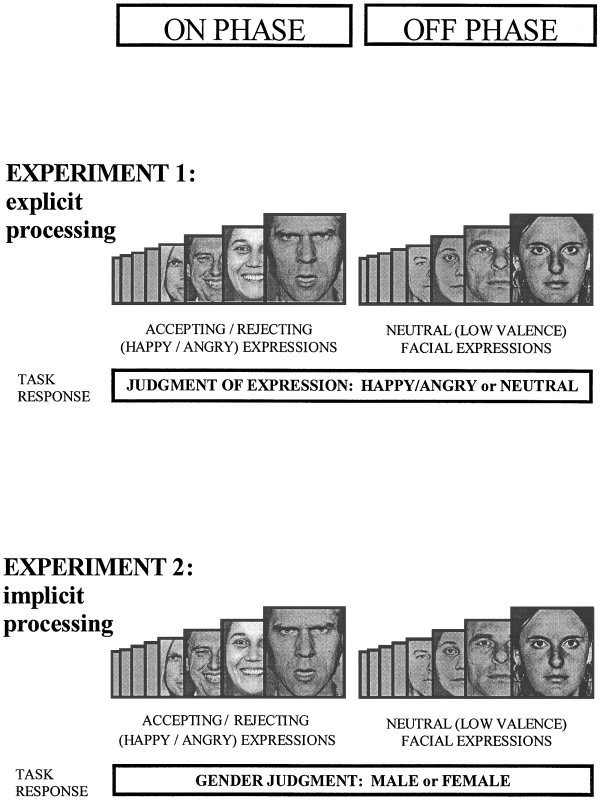

In both experiments (see Figs. 1 and 2), subjects were presented with a pseudo‐randomized mixture of happy and angry expressions in the “on”‐phase and neutral expressions in the control “off”‐phase. Happy and angry facial expressions were chosen to represent powerful signals of social acceptance or rejection, and the neutral facial expression was chosen for the control stimuli because it contains similar visual complexity, yet conveys less information of immediate social valence. Therefore, Experiments 1 and 2 investigated the processing of changing socially relevant facial expressions.

Figure 1.

Diagram of task paradigm. Two experiments employed an ON/OFF design in which eight stimuli were presented in each phase, lasting 30 sec. Stimuli were faces from the Pictures of Facial Affect [Ekman and Friesen, 1976], presented independently in a pseudorandom order for 3 sec, with an interstimulus interval of 0.75 sec. In both experiments, eight faces consisting of four male and four female with mixed happy and angry expressions were shown in the stimulation on phase, and four male and four female neutral faces were presented in the control off phase. Experiment 1 (explicit processing of facial expression): Subjects judged the emotional content of the faces by signalling whether the face was neutral or happy/angry by means of a button press. These responses were prompted by a legend beneath each of the stimuli. Experiment 2 (implicit processing of facial expressions): The stimuli consisted of happy and angry faces in the on phase and neutral faces in the control off phase, as in Experiment 1 (but in a different pseudo‐random order). Subjects were cued to respond to each of the stimuli by judging whether the faces were male or female, which was counterbalanced across conditions.

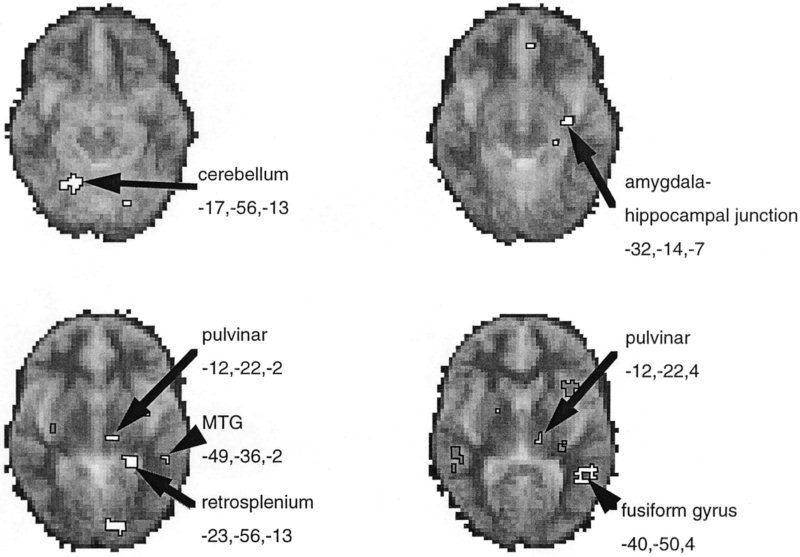

Figure 2.

Main effect of processing mixed high‐valence facial expressions vs. neutral facial expressions. Significant BOLD activations were calculated at each voxel from the fundamental power quotient (FPQ), representing the power of BOLD signal changes occurring at the on/off frequency of the block design, using the methods of Brammer et al. [1997]. Group data is presented from nine subjects performing both experiments. Areas showing significant increases in BOLD signal in‐phase with the stimulation (on) phase of the experiments (equivalent to activations) are shown in white and identified with Talairach co‐ordinates given. Signal changes out of phase with the stimulation condition are shown as darker delineated areas. Data is depicted on horizontal sections of a standard template MRI grey‐scale image (derived from 12 subjects) to illustrate and aid localization.

Experiment 1 (explicit task)

We examined the explicit processing of facial expressions by instructing the subjects to attend directly to the nature of the facial expression and signal their judgement of this in their response.

Experiment 2 (implicit task)

We investigated implicit processing of facial expressions by instructing the subjects to attend to and judge the gender of the faces. Because the subjects attended to a nonemotional quality of the faces that was balanced in both the stimulation (happy/angry expressions) and control (neutral expressions) conditions, we were able to examine the implicit processing of the facial expressions.

Data acquisition

Images were acquired using a 1.5 Tesla GE Signa System (General Electric, Milwaukee, WI) fitted with ANMR hardware and software (ANMR, Woburn, MA) at the Institute of Psychiatry, London. A quadrature birdcage headcoil was used for RF transmission and reception. For both experiments, in each of 14 noncontiguous planes parallel to the intercommissural plane, 100 T2*‐weighted images depicting blood oxygenation level dependent (BOLD) contrast [Ogawa et al., 1990] were acquired (echoplanar imaging [EPI], TE = 40 ms, TR = 3,000 ms; in‐plane resolution = 3.1 mm, slice thickness = 5 mm, slice skip = 0.5 mm). At the same session, a 43‐slice, high‐resolution inversion recovery echoplanar image of the whole brain was acquired in the intercommissural plane (TE = 73 ms, TR = 16,000 ms, in‐plane resolution = 1.5 mm, slice thickness = 3 mm) for subsequent registration of the functional data into standard space.
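The stimulus timing and the acquisition parameters can be cross-checked against each other. The sketch below uses only values stated in the text and assumes that each of the 10 blocks corresponds to one 30-second on or off phase. The text does not say which phase came first, so `first_phase` is a hypothetical parameter.

```python
# Design and acquisition parameters as stated in the text.
N_PHASES = 10            # alternating 30-second on/off phases per experiment
STIMULI_PER_PHASE = 8    # faces shown in each phase
STIMULUS_S = 3.0         # presentation time per face (seconds)
ISI_S = 0.75             # interstimulus interval (seconds)
TR_S = 3.0               # repetition time (3,000 ms)
N_VOLUMES = 100          # T2*-weighted images acquired per plane


def phase_duration_s():
    """Duration of one on or off phase implied by the stimulus timing."""
    return STIMULI_PER_PHASE * (STIMULUS_S + ISI_S)


def volume_labels(first_phase="on"):
    """Label every acquired volume as 'on' or 'off'.

    ASSUMPTION: the order of the first phase is not given in the text,
    so first_phase is a hypothetical parameter.
    """
    volumes_per_phase = int(phase_duration_s() / TR_S)
    other = "off" if first_phase == "on" else "on"
    labels = []
    for phase in range(N_PHASES):
        label = first_phase if phase % 2 == 0 else other
        labels.extend([label] * volumes_per_phase)
    return labels


labels = volume_labels()
n_cycles = N_PHASES // 2  # complete on/off cycles per experiment
print(phase_duration_s(), len(labels), n_cycles)  # 30.0 100 5
```

Under these assumptions the eight stimuli fill each 30-second phase exactly, the 10 phases span the 100 acquired volumes, and each experiment contains five complete on/off cycles, which sets the fundamental frequency used in the analysis.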

Data analysis

Subjects were secured during scanning to prevent head movement. Additionally, standard movement correction methods were employed [Friston et al., 1996]. Using Generic Brain Activation Mapping software [Brammer et al., 1997], the periodic change in T2*‐weighted signal intensity at the experimentally determined frequency of alternation between A and B stimulation blocks was analyzed by pseudo‐generalized least‐squares (PGLS) fit of a sinusoidal regression model to the movement‐corrected time series at each voxel. In order to compute generic brain activation maps, showing brain regions activated over a group of subjects, maps of observed and randomized fundamental power quotient (FPQ = fundamental power divided by its standard error) were estimated in each individual after transformation into standard space [Talairach and Tournoux, 1988] and spatial smoothing (Gaussian filter, full width at half‐maximum = 14 mm). The median value of FPQ was computed at each voxel in standard space, and its statistical significance was tested by reference to the null distribution of median FPQ computed from the identically smoothed and spatially transformed randomized data. For a one‐tailed test of size α, the critical value was the 100(1 − α)th percentile of the randomized distribution. Post hoc analysis of the time course of regional BOLD signal increases was applied to determine the relationship between regional signal changes during the on and off phases of the experiments.
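The single-voxel part of this procedure can be illustrated with a simplified sketch. It is not the Generic Brain Activation Mapping implementation. It makes three labeled assumptions: ordinary least squares replaces the PGLS fit, only the fundamental frequency is modeled, and the standard error of the power is taken as its null standard deviation under independent Gaussian coefficient estimates. Randomization is done here by shuffling time points, which is also an assumption. All function names are hypothetical.

```python
import math
import random


def fpq(ts, n_cycles):
    """Fundamental power quotient of one voxel time series (OLS sketch).

    Fits y = mean + gamma*sin(w*t) + delta*cos(w*t) at the on/off frequency.
    With an integer number of cycles the regressors are orthogonal, so the
    least-squares coefficients reduce to simple projections.
    """
    n = len(ts)
    w = 2.0 * math.pi * n_cycles / n
    mean = sum(ts) / n
    sines = [math.sin(w * t) for t in range(n)]
    cosines = [math.cos(w * t) for t in range(n)]
    gamma = 2.0 / n * sum(y * s for y, s in zip(ts, sines))
    delta = 2.0 / n * sum(y * c for y, c in zip(ts, cosines))
    resid = [y - mean - gamma * s - delta * c
             for y, s, c in zip(ts, sines, cosines)]
    sigma2 = sum(r * r for r in resid) / (n - 3)  # residual variance
    se2 = 2.0 * sigma2 / n                        # squared SE of gamma and of delta
    # ASSUMPTION: SE of the power = sqrt(2 * (SE_gamma^4 + SE_delta^4)).
    return (gamma ** 2 + delta ** 2) / math.sqrt(2.0 * (se2 ** 2 + se2 ** 2))


def randomized_fpq(ts, n_cycles, n_perm, rng):
    """FPQ values after shuffling time points (assumed randomization scheme)."""
    values = []
    for _ in range(n_perm):
        shuffled = ts[:]
        rng.shuffle(shuffled)
        values.append(fpq(shuffled, n_cycles))
    return values


def critical_value(null_values, alpha):
    """The 100(1 - alpha)th percentile of the randomized distribution."""
    ordered = sorted(null_values)
    rank = math.ceil((1.0 - alpha) * len(ordered))
    return ordered[min(max(rank, 1), len(ordered)) - 1]


# Synthetic voxel: a periodic response at 5 cycles per 100 volumes plus noise.
rng = random.Random(0)
n, n_cycles = 100, 5
w = 2.0 * math.pi * n_cycles / n
voxel = [10.0 * math.sin(w * t) + rng.gauss(0.0, 1.0) for t in range(n)]
observed = fpq(voxel, n_cycles)
null = randomized_fpq(voxel, n_cycles, 200, rng)
print(observed > critical_value(null, 0.05))
```

For the group maps described above, the same percentile rule would be applied to the median FPQ across subjects at each voxel, with the null distribution built from medians of the randomized maps.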

We performed four steps in the analyses of the group data from Experiments 1 (explicit processing of facial expressions) and 2 (implicit processing of facial expressions). (1) We investigated the common neuroanatomy subserving the processing of facial expressions by analyzing Experiments 1 and 2 together to determine the main effect of facial expression. Thus, independently of the attentional requirements of the two experiments, we identified brain regions showing significant BOLD signal increases when processing changing (mixed‐valence) facial expressions compared to neutral expressions, and vice versa. (2) We then determined which of these brain areas were also modulated by attention to facial expressions by using a repeated‐measures analysis of variance between Experiments 1 and 2 that was constrained to the generic pattern of significant BOLD activity. (3) In addition to having a common neuroanatomical substrate, explicit and implicit processing of facial expressions may be associated with activity in distinct brain regions that would not be identified in the above analyses. We therefore analyzed Experiments 1 and 2 separately to determine which brain regions showed significant BOLD signal increases in each experiment. (4) We performed a repeated‐measures analysis of variance, constrained to the combination of all the voxels that had significant BOLD activity in either Experiment 1 or Experiment 2. Thus we determined which brain regions were significantly different in activity between the two experiments, independently of the pattern of shared activity. In all the above analyses, we determined the phase‐relationship of BOLD signal changes.
Because we were interested in the processing of high‐valence facial expressions, increases in BOLD signal that occurred in‐phase with the on‐conditions (mixed happy/angry facial expressions) are referred to heuristically as activations, and BOLD signal increases occurring during the off‐conditions (control, neutral facial expressions) are referred to as deactivations.
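The activation/deactivation labeling can be sketched from the sign of the sine coefficient at the on/off frequency. This is a simplified illustration, not the authors' procedure. It assumes the series starts with an on phase, and it relies on the fact that a response delay shorter than a quarter of the on/off cycle leaves the sign of that coefficient unchanged. The function name and the toy series are hypothetical.

```python
import math


def classify_phase(ts, n_cycles):
    """Label a periodic response as in phase with the on or the off condition.

    ASSUMPTION: the series begins with an 'on' phase, so a positive sine
    coefficient means the signal is higher during on phases.
    """
    n = len(ts)
    w = 2.0 * math.pi * n_cycles / n
    gamma = 2.0 / n * sum(y * math.sin(w * t) for t, y in enumerate(ts))
    if gamma > 0:
        return "activation"
    if gamma < 0:
        return "deactivation"
    return "indeterminate"


# Toy series: 10 volumes on, 10 volumes off, response delayed by 2 volumes.
delay = 2
on_locked = [1.0 if (t - delay) % 20 < 10 else 0.0 for t in range(100)]
off_locked = [1.0 - y for y in on_locked]
print(classify_phase(on_locked, 5), classify_phase(off_locked, 5))
# activation deactivation
```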

RESULTS

Behavioral performance

Subjects were debriefed after performance of each task. The nine subjects reported no difficulties in performing the tasks, and this was reflected in performance data. Mean errors per subject were 1.7/80 trials in Experiment 1 and 0.3/80 trials in Experiment 2.

Regional activity during the processing of changing mixed‐valence facial expressions: Main effect.

Significant increases in BOLD signal (activations) occurred during processing of high‐valence facial expressions in the left inferior and lateral temporal lobe visual cortices (fusiform and middle temporal gyri), left hippocampus, amygdalohippocampal junction, and pulvinar nucleus of the thalamus (see Fig. 2). Activations also occurred in cerebellum (bilaterally) and left retrosplenium (Table I). Deactivations (increased BOLD activity in the control condition) occurred in the left insula and cerebellum and in right superior temporal gyrus.

Table I.

Brain regions showing significant BOLD signal changes when processing mixed vs. neutral facial expressions (main effect)*

| Brain region | BA | Side | Tal(x) | Tal(y) | Tal(z) | Cluster size (voxels) | P value |
|---|---|---|---|---|---|---|---|
| **On‐condition > off‐condition** | | | | | | | |
| Fusiform gyrus | 37 | L | −40 | −50 | 4 | 18 | 0.0001 |
| Cerebellum | | R | 17 | −56 | −13 | 17 | 0.0002 |
| Peristriate | 18 | L | −14 | −86 | −2 | 12 | 0.0003 |
| Retrosplenium | 30 | L | −23 | −42 | −2 | 10 | 0.0005 |
| Amygdalohippocampal junction<sup>a,c</sup> | | L | −32 | −14 | −7 | 4 | 0.003 |
| Posterior hippocampus | | L | −29 | −31 | 4 | 4 | 0.003 |
| Pulvinar (thalamus) | | L | −12 | −22 | 4 | 4 | 0.004 |
| Middle temporal gyrus<sup>a,b</sup> | 21 | L | −49 | −36 | −2 | 3 | 0.001 |
| Pulvinar (thalamus) | 27 | L | −12 | −22 | −2 | 3 | 0.005 |
| **Off‐condition > on‐condition** | | | | | | | |
| Insula | | L | −35 | 14 | 4 | 13 | 0.0002 |
| Superior temporal gyrus | 22 | R | 49 | −33 | 4 | 8 | 0.0003 |
| Cerebellum | | L | −26 | −72 | −18 | 5 | 0.0004 |

*Names of brain regions, Brodmann's areas (BA, where appropriate), Talairach co‐ordinates, size of voxel cluster, and significance levels are given.

<sup>a</sup>Areas showing a significant differential response to explicit (Experiment 1) and implicit (Experiment 2) processing (P < 0.01; repeated‐measures ANOVA, constrained to the group pattern of activity).

<sup>b</sup>Significantly greater activity during explicit compared to implicit processing.

<sup>c</sup>Significantly greater activity during implicit compared to explicit processing.

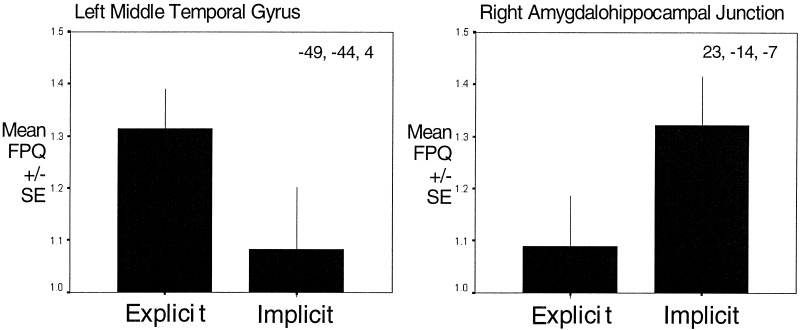

Between‐experiment differences in generic BOLD activity.

Of the brain areas identified above, the left middle temporal gyrus was significantly more active during Experiment 1 (explicit processing of expressions) than during Experiment 2 (implicit) (maximum difference at Talairach co‐ordinates, Tal [‐52, ‐42, 4], P < 0.01). In contrast, the left amygdalohippocampal junction (maximum difference at Tal [‐35, ‐11, ‐7], P < 0.00001) was more active in Experiment 2 (implicit processing of expressions) than Experiment 1 (explicit). Additionally, implicit processing was associated with greater deactivation of the left cerebellum and right middle temporal gyrus than explicit processing.

Independent analyses of Experiments 1 and 2.

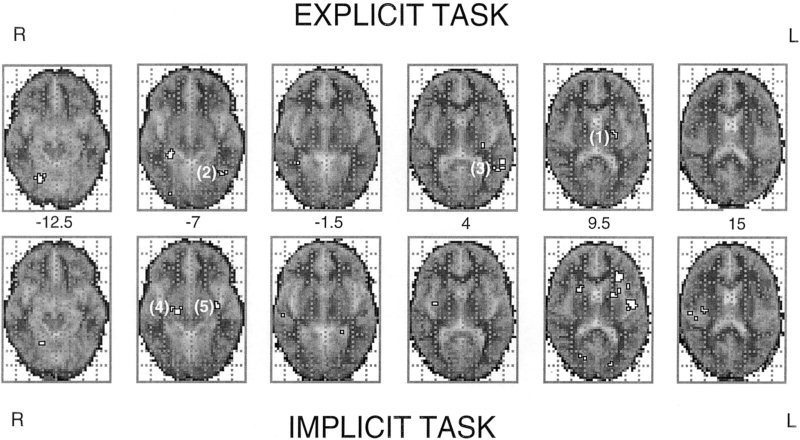

Experiments 1 and 2 were analyzed separately to identify shared and distinct neural pathways for explicit and implicit processing of changing facial expressions. In Experiment 1 (explicit processing), significant activations occurred in right posterior hippocampus, bilateral fusiform gyrus, left putamen, and middle temporal gyrus. There was also significant deactivation of the right cerebellum. In Experiment 2 (implicit processing), there was significant activation of left insula, inferior prefrontal lobe, bilateral amygdalohippocampal junction, and putamen (Fig. 3). Deactivation (greater activity in off‐condition) occurred in left inferior frontal gyrus (Broca's area), retrosplenium, cerebellum, and bilateral posterior cingulate and middle temporal gyri (Table II).

Figure 3.

Changes in regional BOLD signal during explicit and implicit processing of mixed high‐valence facial expressions (vs. neutral facial expressions): Group data are presented from nine subjects, in separate analysis of the explicit task (upper set of brain sections) and the implicit task (lower set of brain sections). Voxel clusters in which there were significant increases in BOLD signal in‐phase with the stimulation (on) phase of the experiments (equivalent to activations) are delineated in white. Data are depicted on six horizontal sections of a standard template MRI grey‐scale image (derived from 12 subjects). Right and left sides of the brain sections are inverted, as denoted. The Talairach Z‐coordinate of each section is given in the middle of the figure. Also identified are brain regions showing significant between‐task differences on analysis of variance. Activity greater in explicit task: (1) left putamen; (2) left fusiform gyrus; (3) left middle temporal gyrus. Activity greater in implicit task: (4) right amygdala‐hippocampal junction; (5) left amygdala‐hippocampal junction.

Table II.

Significant regional BOLD signal changes in independent analyses of Experiments 1 and 2*

| Brain region | BA | Side | Tal(x) | Tal(y) | Tal(z) | Cluster size (voxels) | P value |
|---|---|---|---|---|---|---|---|
| **Explicit processing of expressions (Experiment 1): On‐condition > off‐condition** | | | | | | | |
| Posterior hippocampus | | R | 29 | −36 | −7 | 9 | 0.00004 |
| Fusiform gyrus | 37 | R | 20 | −61 | −13 | 9 | 0.0002 |
| Putamen<sup>a,b</sup> | | L | −23 | −14 | 9 | 6 | 0.002 |
| Fusiform gyrus<sup>a,b</sup> | 37 | L | −38 | −56 | −7 | 5 | 0.001 |
| Fusiform gyrus | 37 | L | −40 | −50 | 4 | 5 | 0.0005 |
| Middle temporal gyrus<sup>a,b</sup> | 21 | L | −49 | −42 | 4 | 4 | 0.001 |
| **Explicit processing of expressions (Experiment 1): Off‐condition > on‐condition** | | | | | | | |
| Cerebellum | | R | 23 | −53 | −24 | 5 | 0.0007 |
| **Implicit processing of expressions (Experiment 2): On‐condition > off‐condition** | | | | | | | |
| Insula | | L | −40 | −8 | 9 | 15 | 0.00003 |
| Inferior frontal lobe | | L | −26 | 25 | 9 | 9 | 0.0002 |
| Putamen | | R | 23 | 8 | 9 | 6 | 0.001 |
| Insula | | R | 38 | −17 | 15 | 5 | 0.002 |
| Amygdalohippocampal junction<sup>a,c</sup> | | R | 20 | −17 | −7 | 5 | 0.0004 |
| Amygdalohippocampal junction<sup>a,c</sup> | | R | 23 | −14 | −7 | 3 | 0.0007 |
| Amygdalohippocampal junction<sup>a,c</sup> | | L | −35 | −14 | −7 | 5 | 0.0006 |
| Putamen | | L | −17 | 0 | 9 | 5 | 0.0002 |
| Striate cortex | 17 | L | −14 | −81 | 9 | 5 | 0.001 |
| **Implicit processing of expressions (Experiment 2): Off‐condition > on‐condition** | | | | | | | |
| Inferior frontal gyrus | 44 | L | −43 | 17 | 15 | 18 | 0.00003 |
| Posterior cingulate | 31 | L | −32 | −53 | 26 | 14 | 0.00007 |
| Posterior cingulate | 23 | R | 23 | −58 | 20 | 10 | 0.0004 |
| Precentral gyrus | 4 | R | 46 | 0 | 20 | 8 | 0.0003 |
| Middle temporal gyrus | 21 | L | −52 | −17 | −2 | 6 | 0.0002 |
| Middle temporal gyrus | 21 | R | 52 | −42 | 4 | 6 | 0.0007 |
| Retrosplenium | 30 | L | −6 | −50 | 20 | 6 | 0.002 |
| Cerebellum | | L | −23 | −69 | −18 | 6 | 0.001 |
| Peristriate cortex | 19 | L | −14 | −86 | −2 | 3 | 0.003 |

*Names of brain regions, Brodmann's areas (BA, where appropriate), Talairach co‐ordinates, size of voxel cluster, and significance levels are given.

<sup>a</sup>Areas showing a significant differential response to explicit (Experiment 1) and implicit (Experiment 2) processing (P < 0.01; repeated‐measures ANOVA, constrained to the combined pattern of activity during Experiments 1 and 2).

<sup>b</sup>Significantly greater activity during explicit compared to implicit processing.

<sup>c</sup>Significantly greater activity during implicit compared to explicit processing.

Analysis‐of‐variance between Experiments 1 and 2.

We determined which brain areas had significantly different activity between Experiments 1 and 2 (repeated‐measures analysis of variance, constrained to the combined areas showing significant BOLD activity in either Experiment 1 or Experiment 2). The left putamen (maximum difference at Tal [‐23, ‐11, 9], P < 0.05), left middle temporal gyrus (maximum difference at Tal [‐52, ‐42, 4], P < 0.01), and left fusiform gyrus (maximum difference at Tal [‐43, ‐56, ‐7], P < 0.05) were significantly more active during explicit processing of facial expressions. The right cerebellum showed significantly greater deactivation during Experiment 1. Bilateral amygdalohippocampal junctions were significantly more active during Experiment 2 (implicit processing) than Experiment 1 (explicit processing) (maximum differences at Tal [23, ‐14, ‐7], P < 0.00001 and Tal [‐35, ‐11, ‐7], P < 0.00001) (Table II, Figs. 3, 4).
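With only two within-subject conditions, a repeated-measures analysis of variance at a single voxel reduces to a paired comparison: the F statistic on (1, n − 1) degrees of freedom equals the square of the paired t statistic. The sketch below illustrates that relationship. The FPQ values are invented for illustration and are not data from this study, and the function name is hypothetical.

```python
import math
from statistics import mean, stdev


def two_condition_rm_anova(cond_a, cond_b):
    """F statistic and degrees of freedom for a two-level repeated-measures
    ANOVA at one voxel, computed as the squared paired t statistic."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)


# HYPOTHETICAL per-subject FPQ values for nine subjects (not study data).
explicit = [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
implicit = [1.0, 1.0, 2.0, 4.0, 4.0, 6.0, 6.0, 8.0, 8.0]
f_stat, dof = two_condition_rm_anova(explicit, implicit)
print(round(f_stat, 1), dof)  # 78.4 (1, 8)
```

Converting the F statistic to a P value requires the F distribution with the returned degrees of freedom, which is omitted here to keep the sketch within the standard library.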

Figure 4.

Graphical representation of relative BOLD signal changes across all nine subjects occurring during the explicit and implicit processing of facial expressions in left middle temporal gyrus and right amygdalohippocampal junction (shown in Fig. 3). These regions were chosen because they showed significant between‐task differences on ANOVA. FPQ data (power of signal change at the on/off frequency of stimulation, divided by its standard error) were taken from a 3 × 3 × 3 voxel cube centered on the Talairach co‐ordinates given in each subject. Mean and standard error across individual subjects during the explicit and implicit processing of facial expressions are given.
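The regional summary described in this caption can be sketched as follows. This is an illustration under stated assumptions, not the authors' code. FPQ maps are represented as nested lists indexed by voxel position, the conversion from Talairach millimetres to voxel indices is omitted, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def cube_mean(volume, centre, half_width=1):
    """Mean value in a cube of side 2*half_width + 1 voxels around centre.

    ASSUMPTION: volume is a nested list indexed as volume[x][y][z] and
    centre is given in voxel indices, not Talairach millimetres.
    """
    cx, cy, cz = centre
    span = range(-half_width, half_width + 1)
    values = [volume[cx + dx][cy + dy][cz + dz]
              for dx in span for dy in span for dz in span]
    return mean(values)


def mean_and_se(values):
    """Mean and standard error of per-subject regional values."""
    return mean(values), stdev(values) / math.sqrt(len(values))


# Toy 5 x 5 x 5 map whose value equals the x index of each voxel.
toy = [[[float(x) for _ in range(5)] for _ in range(5)] for x in range(5)]
print(cube_mean(toy, (2, 2, 2)))  # 2.0
```

Applying `cube_mean` to each subject's map and then `mean_and_se` to the resulting nine values would give one bar and error bar per region and task.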

DISCUSSION

Previous functional imaging studies have examined representations of unitary facial expressions [e.g., Breiter et al., 1996; Morris et al., 1996; Phillips et al., 1997; Lane et al., 1997b], matching of different expressions [George et al., 1993], conditional learning using facial stimuli [Morris et al., 1997, 1998b, 1999; Buchel et al., 1998], perception of facial movements [Puce et al., 1998], and attention to subjective emotional experience [Lane et al., 1997a]. However, these studies did not examine how social cues from facial expressions are processed naturalistically, since adaptive social behavior requires the ability to rapidly process multiple and changing expressions of different social meanings [e.g., Rolls et al., 1994; Hornak et al., 1996]. Moreover, many studies involving the processing of facial expressions have used implicit paradigms [e.g., Morris et al., 1996, 1998b, 1999; Phillips et al., 1997; Whalen et al., 1998] with the tacit assumption that emotional processes operate independently of cognitive attention, whereas other studies used explicit paradigms [e.g., George et al., 1993; Lane et al., 1997b], often with the intention of inducing changes in subjective mood [e.g., Lane et al., 1997a,b; Schneider et al., 1995]. However, no previous study has determined if there are significant differences in the functional neuroanatomy of explicit and implicit processing of facial expressions.

We identified brain regions subserving the processing of mixed facial expressions of high social valence and investigated how these brain areas are modulated by attention to facial expression. Furthermore, we demonstrated dissociation of the functional neuroanatomy subserving explicit and implicit processing of facial expressions.

Generic processing of facial expressions

We compared regional brain activity during the processing of happy and angry facial expressions with that during the processing of neutral faces to determine regional brain activity associated with the naturalistic processing of changing cues of different social valence. Therefore, we predicted activity in brain regions involved (1) in processing angry and happy faces independently and (2) in processing changes between facial expressions of different valence. However, we did not exclude the possibility that one emotional facial expression, e.g., anger, had a greater influence on the observed regional brain activity, and we did not explore this further in the study. This may be particularly relevant to activity in medial temporal lobe, where previous studies reported greater amygdala activity during processing of “negative” emotions, e.g., fear, compared to “positive” happy facial expressions [e.g., Morris et al., 1996; Breiter et al., 1996].

In our study, the processing of mixed facial expressions of high social valence was associated with activation of left fusiform and middle temporal gyri, pulvinar nucleus, and anterior and posterior regions of the hippocampus. Both the fusiform and middle temporal gyri are components of the ventral visual processing pathway, specialized in representing objects. The fusiform cortex is activated during processing of categorical visual information and contains subregions specialized in processing faces, including identity and expression [Haxby et al., 1991; Sergent et al., 1992; Puce et al., 1995, 1996; Courtney et al., 1996]. The middle temporal gyrus may represent a human homologue of superior temporal sulcus (STS) region in nonhuman primates: Intraoperative recordings [Ojemann et al., 1992], reversible lesions [Fried et al., 1982], and functional imaging studies [Puce et al., 1998] indicate that neurons in the human middle temporal gyrus respond to facial expressions and movements, in a similar manner to neurons in the STS region of monkeys, which respond to socially important aspects of faces such as expression, orientation, and eye‐gaze direction [e.g., Perrett et al., 1985; Hasselmo et al., 1989]. Together the fusiform and middle temporal gyri provide a specialized and detailed cortical representation of faces and salient facial expressions.

We also observed significant activity in two regions of the medial temporal lobe during the processing of high‐valence facial expressions: the amygdalohippocampal junction and a more posterior region of hippocampus. Both the amygdala and hippocampus have been implicated in emotional behavior [Papez, 1937; MacLean, 1952], and in humans both regions contain neurons that respond to faces [Fried et al., 1997]. The amygdala and hippocampus are strongly interconnected and receive inputs from extrastriate visual areas, including fusiform and middle temporal gyri [Amaral et al., 1992]. However, studies in both humans and animals have indicated that the role of the amygdala in emotional behavior and conditional learning is dissociable from the role of the hippocampus in episodic memory and declarative knowledge [e.g., Bechara et al., 1995; Zola‐Morgan et al., 1991]. Amygdala activation has been reported in previous functional imaging studies during the processing of facial expressions of fear [Morris et al., 1996] and during aversive conditioning of faces [Morris et al., 1997, 1998b; Buchel et al., 1998]. In our study, we interpret the activation in the amygdala region to indicate involvement of the amygdala in representing the motivational meaning (salience) of the happy and angry facial expressions. The more posterior hippocampal activation is likely to represent the further processing of this socially important information through involvement of declarative and mnemonic mechanisms. Dysfunction of this region of hippocampus has been reported in schizophrenic patients during episodic memory retrieval and may contribute to the abnormalities in social behavior associated with schizophrenic symptomatology [Heckers et al., 1998]. 
However, an interaction between mnemonic and emotional processing of faces may also account for this posterior hippocampal activation, as a result of the relatively small stimulus set and performance of the explicit and implicit experiments within the same session.

We also observed activation of the thalamus (pulvinar nucleus) in conjunction with medial and lateral temporal lobe regions during the processing of facial expressions. Previous functional imaging studies have reported thalamic activity when processing arousing stimuli [Fredrikson et al., 1993] and facial expressions [Lane et al., 1997b]. Also, activity in the pulvinar nucleus is reported during selective attention to visual stimuli during object identification [LaBerge and Buchsbaum, 1990], and the pulvinar nucleus is implicated in rapid selective attention to salient visual stimuli [Robinson and Petersen, 1992]. Anatomically, the pulvinar receives visual input directly from subcortical regions (superior colliculi) and from visual cortical areas, including the fusiform and middle temporal gyri. It is also connected with parietal regions implicated in spatial attention and with the amygdala. Functionally, the pulvinar is implicated in selective visual attention to salient stimuli, e.g., orienting to salient targets and filtering of nonsalient distractors [LaBerge and Buchsbaum, 1990; Robinson and Petersen, 1992]. Previous functional imaging studies have reported a close relationship between activity in the pulvinar nucleus and amygdala activity during the processing of fear‐conditioned faces. Also, the correlation between pulvinar and amygdala activity is reported as being significantly stronger when there is no conscious awareness of the conditioned face stimulus [Morris et al., 1998b, 1999]. Our findings indicate that the pulvinar nucleus is an important brain area, together with extrastriate visual cortex and amygdalohippocampal complex, for the representation of socially salient stimuli, but in contrast to the findings of LaBerge and Buchsbaum [1990], we did not find strong evidence for modulation of pulvinar activity by attention.

Retrosplenial cortex and cerebellum were also active during processing of high‐valence expressions. Activity within retrosplenial cortex and adjacent posterior cingulate is reported to influence conditional learning and physiological arousal [Bussey et al., 1996; Devinsky and Luciano, 1993]. Consistent with this, arousing (threatening) stimuli are reported to activate posterior cingulate [Maddock and Buonocore, 1997], and in our own study facial expressions with immediate social importance evoked greater activity in this region than neutral faces, perhaps as part of an arousal/orientating response involving the pulvinar nucleus. The role of the cerebellum in emotional behavior and in processing facial expression is poorly understood, but developmental and acquired cerebellar abnormalities have been associated with impaired social functioning [Courchesne et al., 1988; Schmahman and Sherman, 1998]. We observed regionally distinct activations and deactivations of cerebellum in our study; however, further interpretation of the contribution of the cerebellum to processing facial expressions would be speculative.

Thus extrastriate visual cortices, thalamus, and medial temporal lobe areas were significantly more active during the processing of happy and angry facial expressions (mixed high‐valence expressions) compared to neutral facial expressions. These data are consistent with previous studies reporting activity in these areas during the processing of emotive social stimuli. Moreover, many of these areas are implicated in the representation of subjective mood states. Our study was not directed at studying mood: because of experimental constraints, including the short (3 sec) stimulus duration, no physiological measures or mood ratings were made during task performance. However, mood induction studies [e.g., Pardo et al., 1993; Schneider et al., 1995; Lane et al., 1997b] and studies employing symptom provocation in obsessive‐compulsive disorder or posttraumatic stress disorder [e.g., McGuire et al., 1994; Rauch et al., 1996] reported increased activity in limbic medial temporal lobe structures, paralimbic areas, and extrastriate visual cortices during the experience of arousing emotional states. These observations, together with our own findings, suggest that salient sensory information (e.g., processing facial affect), prior emotional experience, and subjective mood may share representations within a discrete set of limbic (e.g., amygdala) and related brain regions. These shared representations may provide a common basis for integrating emotional experience with motivational behavior.

Neuroanatomical dissociation of explicit and implicit processing of facial expressions

Of the areas showing significant activation in the combined analysis of Experiments 1 and 2, the middle temporal gyrus (MTG) was significantly more active in the explicit task compared to the implicit task, whereas the amygdala region was significantly more active during implicit processing compared to explicit processing. This indicates a functional dissociation between pathways for the conscious explicit appraisal of facial expressions (involving higher visual cortices) and pathways for automatic implicit processing of salient facial expressions that involve the amygdala. We investigated this dissociation further in separate analyses of Experiments 1 and 2 (and by an ANOVA constrained to the significant activations in either task). In these separate analyses, explicit processing of facial expressions evoked significant activation of temporal lobe visual cortices (fusiform and middle temporal gyri) and posterior hippocampus, whereas implicit processing of facial expressions evoked significant activation of the amygdala region and connected regions of insula and inferior prefrontal cortex. Both experiments activated regions of the putamen, but its contribution to the processing of facial expressions is unclear. Other striatal areas, e.g., head of caudate nucleus, are implicated in emotional processing and experience: deficits in processing facial expressions of disgust have been associated with striatal degeneration [Sprengelmeyer et al., 1996], and fronto‐striatal‐thalamic circuits have been implicated in the control of social and emotional behavior [Alexander et al., 1986] and in obsessive‐compulsive symptomatology [e.g., McGuire et al., 1994]. It is likely that the observed activity within the putamen during both explicit and implicit processing of expressions reflects participation of the neostriatum in the representation of, and subsequent responses to, emotional information from faces.

In comparing the two tasks by analysis of variance, there was significantly greater activation in the fusiform and middle temporal gyri during explicit processing of expressions than during implicit processing, which again was shown to activate the amygdala region significantly more than the explicit task. This dissociation between brain areas involved in the “conscious” explicit and “automatic” implicit processing of (mixed high‐valence) facial expressions has not been directly addressed before, but it is important for understanding the mechanisms governing social behavior and for appraising the relative usefulness of different experimental paradigms for the investigation of emotional processing in general. Our findings are consistent with the proposed distinction between conscious and unconscious mechanisms of emotional functioning [LeDoux, 1995, 1998] and with evidence for parallel visual pathways within the human brain operating at different levels of conscious awareness [Weiskrantz et al., 1995; Morris et al., 1997, 1999]. Whereas conscious awareness and recall of visual stimuli are associated with processing involving higher visual cortices, the amygdala region has been implicated in automatic (and unconscious) processing of salient stimuli, particularly in paradigms involving facial expressions of fear and fear‐conditioning [Morris et al., 1996, 1997, 1999; Buchel et al., 1998; Whalen et al., 1998]. The insula and inferior prefrontal cortex were also significantly activated during the implicit task. Anatomically, the insula is closely connected with inferior prefrontal cortex and amygdala [Preuss and Goldman‐Rakic, 1989; Augustine, 1996]. Functionally, the amygdala, insula, and inferior prefrontal cortex (including inferolateral and orbital prefrontal cortex) are involved in representing motivational information, social cues, and the expression of conditioned responses: increased insula activity has been reported previously during the processing of specific facial expressions [Phillips et al., 1997] and in conjunction with amygdala activity during aversive conditioning [Buchel et al., 1998]. The orbital surface of the inferior prefrontal cortex contains neurons responsive to changes in motivational salience [Critchley and Rolls, 1996a,b; Rolls et al., 1996], and damage to this region may impair recognition of social cues [Hornak et al., 1996], result in dissocial behavior [Saver and Damasio, 1991] and, as with the amygdala, impair autonomic responses to emotive stimuli [Damasio et al., 1991; Bechara et al., 1995]. Although orbital prefrontal activity is often difficult to image using fMRI due to susceptibility‐related signal dropout, our findings suggest that adjacent and connected limbic and paralimbic areas provide a neuroanatomical substrate for implicit processing of facial expressions without conscious awareness.

Thus socially important facial expressions may be processed in a “conscious” explicit way or in an implicit automatic way. Explicit processing evokes activity in visual cortical areas specialized in representing faces and in posterior hippocampus, which is necessary for the expression of declarative knowledge and memory. Alternatively, facial expressions may be processed in an automatic, attention‐independent, implicit way, which does not rely on the detailed cortical representation of faces, but employs limbic and paralimbic areas (amygdala region, insula, and inferior prefrontal cortex) that are involved in conditional learning and representation of motivationally salient stimuli. Furthermore, our findings are consistent with the proposal that adaptive social behavior is governed in part by automatic conditioned responses to social cues [e.g., Damasio et al., 1991]. However, it is likely that these dissociable systems for processing socially salient visual stimuli interact in a dynamic manner depending on stimulus valence, subjective motivation, and the presence of competing stimuli.

Although some previous functional imaging studies of emotional processing [e.g., Rauch et al., 1996] and studies of patients with brain lesions [Kolb and Taylor, 1981; Adolphs et al., 1996] reported a right hemispheric predominance in representing emotional states or processing facial expressions, we did not observe strongly right‐lateralized activity during the processing of the high‐valence facial expressions. However, there is some evidence to suggest that positive facial expressions may be processed preferentially in the left hemisphere, in contrast to negative facial expressions in the right hemisphere [Canli et al., 1998]. Therefore, presentation of both positive and negative facial expressions within the same condition in our task may partially account for the mixed laterality we observed. However, amygdala activity lateralized to the right hemisphere is a relatively robust finding in studies of implicit processing of emotive facial expressions that have used masked presentations of face stimuli [e.g., Morris et al., 1998b, 1999; Whalen et al., 1998], whereas bilateral amygdala activity is reported in studies using unmasked (fearful) facial expressions [Morris et al., 1996; Breiter et al., 1996]. We observed bilateral activity at the amygdalohippocampal junction during implicit processing of high‐valence facial expressions, but the effect was greater on the right side, perhaps suggesting that the right amygdala region may be particularly important for the automatic processing of social cues from faces. The existence of functional laterality in brain regions involved in processing social cues and emotive stimuli remains an important issue for further study.

Summary

Our study identifies brain regions associated with processing socially important facial expressions and demonstrates a functional dissociation of the neuroanatomy underlying explicit and implicit processing of facial expressions. Facial expressions are said to be our public display of emotion [Darwin, 1872], yet have evolved to provide a dynamic nonverbal language that facilitates social interactions. Our results are consistent with the proposal that conscious and unconscious emotional mechanisms can be dissociated [LeDoux, 1995, 1998]. Future research will increase understanding of the relationship between explicit and implicit processing of facial expression and the development of adaptive social behavior.

REFERENCES

- Adolphs R, Damasio H, Tranel D, Damasio AR. 1996. Cortical systems for the recognition of emotion in facial expression. J Neurosci 16: 7678–7687.

- Alexander GE, DeLong MR, Strick PL. 1986. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Ann Rev Neurosci 9: 357–381.

- Amaral GD, Price JL, Pitaken A, Carmichael ST. 1992. In: Aggleton JP, editor. The amygdala: neurobiological aspects of emotion, memory and mental dysfunction. New York: Wiley‐Liss; p 1–66.

- Augustine JR. 1996. Circuitry and functional aspects of the insular lobe in primates including humans. Brain Res Brain Res Rev 22: 229–244.

- Bechara A, Tranel D, Damasio H, Adolphs R, Rockland C, Damasio A. 1995. Double dissociation of conditioned and declarative knowledge relative to the amygdala and hippocampus in humans. Science 267: 1115–1118.

- Bowler D. 1992. “Theory of mind” in Asperger's syndrome. J Child Psychol Psychiatr 33: 877–893.

- Brammer MJ, Bullmore ET, Simmons A, Williams SC, Grasby PM, Howard RJ, Woodruff PW, Rabe‐Hesketh S. 1997. Generic brain activation mapping in fMRI: a nonparametric approach. Magn Reson Imaging 15: 763–770.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner PL, Strauss MM, Hyman SE, Rosen BR. 1996. Response and habituation of the human amygdala during visual processing of facial expressions. Neuron 17: 875–887.

- Buchel C, Morris J, Dolan R, Friston K. 1998. Brain systems mediating aversive conditioning: an event‐related fMRI study. Neuron 20: 947–957.

- Bussey TJ, Muir JL, Everitt BJ, Robbins TW. 1996. Dissociable effects of anterior and posterior cingulate cortex lesions on the acquisition of a conditional visual discrimination: facilitation of early learning vs. impairment of late learning. Behav Brain Res 82: 42–56.

- Butter CM, McDonald JA. 1970. Effect of orbitofrontal lesions on aggressive and aversive behaviors in rhesus monkeys. J Comp Physiol Psychol 72: 132–144.

- Canli T, Desmond JE, Zhao Z, Glover G, Gabrieli JD. 1998. Hemispheric asymmetry for emotional stimuli detected with fMRI. Neuroreport 9: 3233–3239.

- Courchesne E, Yeung Courchesne R, Press GA, Hesselink JR, Jernigan TL. 1988. Hypoplasia of cerebellar vermal lobules VI and VII in autism. N Engl J Med 318: 1349–1354.

- Courtney SM, Ungerleider LG, Keil K, Haxby J. 1996. Object and spatial working memory activate separate neural systems in human cortex. Cereb Cortex 6: 39–49.

- Critchley HD, Rolls ET. 1996a. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. J Neurophysiol 75: 1673–1686.

- Critchley HD, Rolls ET. 1996b. Olfactory neuronal responses in the primate orbitofrontal cortex: analysis in an olfactory discrimination task. J Neurophysiol 75: 1659–1672.

- Damasio AR, Tranel D, Damasio HC. 1991. Somatic markers and the guidance of behavior: theory and preliminary testing. In: Levin HS, Eisenberg HM, Benton LB, editors. Frontal lobe function and dysfunction. Oxford: Oxford University Press; Chapter 11.

- Darwin C. 1872. In: Ekman P, editor. The expression of the emotions in man and animals, 3rd ed. Chicago: University of Chicago Press, 1998.

- Devinsky O, Luciano D. 1993. The contributions of cingulate cortex to human behaviour. In: Vogt B, Gabriel M, et al., editors. Neurobiology of cingulate cortex and limbic thalamus: a comprehensive handbook. Boston: Birkhauser; p 527–556.

- Ekman P, Friesen W. 1976. Pictures of facial affect. Palo Alto: Consulting Psychologists Press.

- Fredrikson M, Wik G, Greitz T, Eriksson L, Stone‐Elander S, Ericson K, Sedvall G. 1993. Regional cerebral blood flow during experimental phobic fear. Psychophysiology 30: 126–130.

- Fried I, Mateer C, Ojemann G, Wohns R, Fedio P. 1982. Organization of visuo‐spatial functions in human cortex: evidence from electrical stimulation. Brain 105: 349–371.

- Fried I, MacDonald KA, Wilson CL. 1997. Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron 18: 753–765.

- Friston KJ, Williams SCR, Howard R, Frackowiak RSJ, Turner R. 1996. Movement‐related effects in fMRI time series. Magn Reson Med 35: 346–355.

- George MS, Ketter TA, Gill DS, Haxby JV, Ungerleider LG, Herscovitch P, Post RM. 1993. Brain regions involved in recognizing facial emotion or identity: an oxygen‐15 PET study. J Neuropsychiatry Clin Neurosci 5: 384–394.

- Haxby JV, Grady CL, Horowitz B, Ungerleider LG, Mishkin M, Carson RE, Herscovitch P, Schapiro MB, Rappaport SI. 1991. Dissociation of object and spatial visual processing in human extrastriate cortex. Proc Natl Acad Sci USA 88: 1621–1625.

- Hasselmo ME, Rolls ET, Baylis GC. 1989. The role of expression and identity in the face‐selective responses of neurones in the temporal visual cortex of monkeys. Behav Brain Res 32: 203–218.

- Heckers S, Rauch SL, Goff D, Savage CR, Schacter DL, Fischman AJ, Alpert NM. 1998. Impaired recruitment of the hippocampus during conscious recollection in schizophrenia. Nature Neuroscience 1: 318–323.

- Hornak J, Rolls ET, Wade D. 1996. Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia 34: 247–261.

- Iversen S, Mishkin M. 1970. Perseverative interference in monkey following selective lesions of the inferior prefrontal convexity. Exp Brain Res 55: 376–386.

- Jones B, Mishkin M. 1972. Limbic lesions and the problem of stimulus‐reinforcement associations. Exp Neurol 36: 362–377.

- Kolb B, Taylor L. 1981. Affective behaviour in patients with localized cortical excisions: role of lesion site and side. Science 214: 89–91.

- LaBerge D, Buchsbaum MS. 1990. Positron emission tomographic measurement of pulvinar activity during an attention task. J Neurosci 10: 613–619.

- Lane RD, Fink GR, Chau PM‐L, Dolan RJ. 1997a. Neural activation during selective attention to subjective emotional responses. NeuroReport 8: 3969–3972.

- Lane RD, Reiman EM, Ahern GL, Schwartz GE, Davidson RJ. 1997b. Neuroanatomical correlates of happiness, sadness and disgust. Am J Psychiatry 154: 926–933.

- LeDoux JE. 1995. In search of an emotional system in the brain: leaping from fear to emotion and consciousness. In: Gazzaniga MS, editor. The cognitive neurosciences. Cambridge: MIT Press; p 1041–1061.

- LeDoux JE. 1998. The emotional brain: the mysterious underpinnings of emotional life. New York: Simon & Schuster.

- MacLean P. 1952. Some psychiatric implications of physiological studies on frontal‐temporal portion of the limbic system (visceral brain). Electroencephalography Clin Neurophysiol 4: 407–418.

- Maddock R, Buonocore M. 1997. Activation of left posterior cingulate gyrus by the auditory presentation of threat‐related words: an fMRI study. Psychiatry Res 75: 1–14.

- McGuire PK, Bench CJ, Frith CD, Marks IM, Frackowiak RS, Dolan RJ. 1994. Functional anatomy of obsessive‐compulsive phenomena. Br J Psychiatry 164: 459–468.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ. 1996. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383: 812–815.

- Morris JS, Friston KJ, Dolan RJ. 1998a. Neural responses to salient visual stimuli. Proc R Soc London B Biol Sci 264/1382: 769–775.

- Morris JS, Ohman A, Dolan RJ. 1998b. Conscious and unconscious emotional learning in the human amygdala. Nature 393: 467–470.

- Morris JS, Ohman A, Dolan RJ. 1999. A subcortical pathway to the right amygdala mediating “unseen” fear. Proc Natl Acad Sci USA 96: 1680–1685.

- Ogawa S, Lee TM, Kay AR, Tank DW. 1990. Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proc Natl Acad Sci USA 87: 9868–9872.

- Ojemann JG, Ojemann GA, Lettich E. 1992. Neuronal activity related to faces and matching right non‐dominant temporal cortex. Brain 115: 1–13.

- Papez J. 1937. A proposed mechanism for emotion. Arch Neurol Psychiatr 79: 217–224.

- Pardo JV, Pardo PJ, Raichle ME. 1993. Neural correlates of self‐induced dysphoria. Am J Psychiatr 150: 713–719.

- Perrett DI, Smith PAJ, Potter DD, Mistlin AJ, Head AS, Milner AD, Jeeves MA. 1985. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc Royal Soc Lond B Biol Sci 223: 293–317.

- Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SC, Gray JA, David AS. 1997. A specific neural substrate for perceiving facial expressions of disgust. Nature 389: 496–498.

- Preuss TM, Goldman‐Rakic PS. 1989. Connections of the ventral granular frontal cortex of macaques with perisylvian premotor and somatosensory areas: anatomical evidence for somatic representation in primate frontal association cortex. J Comp Neurol 282: 293–316.

- Puce A, Allison T, Gore JC, McCarthy G. 1995. Face‐sensitive regions in human extra‐striate cortex studied by functional MRI. J Neurophysiol 74: 1192–1199.

- Puce A, Allison T, Asgari M, Gore JC, McCarthy G. 1996. Differential sensitivity of human visual cortex to faces, letterstrings and textures: a functional magnetic resonance imaging study. J Neurosci 16: 5205–5215.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. 1998. Temporal cortex activation in humans viewing eye and mouth movements. J Neurosci 18: 2188–2199.

- Rauch SL, van der Kolk BA, Fisler RE, Alpert NM, Orr SP, Savage CR, Fischman AJ, Jenike MA, Pitman RK. 1996. A symptom provocation study of posttraumatic stress disorder using positron emission tomography and script‐driven imagery. Arch Gen Psychiatr 53: 380–387.

- Robinson DL, Petersen SE. 1992. The pulvinar and visual salience. TINS 15: 127–132.

- Rolls ET, Hornak J, Wade D, McGrath J. 1994. Emotion‐related learning in patients with social and emotional changes associated with frontal lobe damage. J Neurol Neurosurg Psychiatry 57: 1518–1524.

- Rolls ET, Critchley HD, Mason R, Wakeman EA. 1996. Orbitofrontal cortex neurons: role in olfactory and visual association learning. J Neurophysiol 75: 1970–1981.

- Saver J, Damasio A. 1991. Preserved access and processing of social knowledge in a patient with acquired sociopathy due to ventromedial frontal damage. Neuropsychologia 29: 1241–1249.

- Schmahman J, Sherman JC. 1998. The cerebellar cognitive affective syndrome. Brain 121: 561–579.

- Schneider F, Gur RE, Mozley LH, Smith RJ, et al. 1995. Mood effects on limbic blood flow correlate with emotional self‐rating: a PET study with oxygen‐15 labeled water. Psychiatry Res Neuroimaging 61: 265–283.

- Sergent J, Ohta S, MacDonald B. 1992. Functional neuroanatomy of face and object processing: a positron emission tomography study. Phil Trans R Soc Lond B Biol Sci 335: 55–62.

- Sprengelmeyer R, Young AW, Calder AJ, Karnat A, Lange H, Homberg V, Perrett DI, Rowland D. 1996. Loss of disgust: perception of faces and emotions in Huntington's disease. Brain 119: 1647–1665.

- Talairach J, Tournoux P. 1988. Co‐planar stereotaxic atlas of the human brain. Stuttgart: Thieme.

- Weiskrantz L. 1956. Behavioural changes associated with ablation of the amygdaloid complex in monkeys. J Comp Neurol 49: 381–391.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. 1998. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J Neurosci 18: 411–418.

- Zola‐Morgan S, Squire LR, Alvarez‐Royo P, Clower RP. 1991. Independence of memory functions and emotional behavior: separate contributions of the hippocampal formation and the amygdala. Hippocampus 1: 207–220.