Abstract

With the advancements in deep learning, deep generative models combined with graph neural networks have been successfully employed for data-driven molecular graph generation. Early methods based on the non-autoregressive approach have been effective in generating molecular graphs quickly and efficiently but have suffered from low performance. In this paper, we present an improved learning method involving a graph variational autoencoder for efficient molecular graph generation in a non-autoregressive manner. We introduce three additional learning objectives and incorporate them into the training of the model: approximate graph matching, reinforcement learning, and auxiliary property prediction. We demonstrate the effectiveness of the proposed method by evaluating it for molecular graph generation tasks using QM9 and ZINC datasets. The model generates molecular graphs with high chemical validity and diversity compared with existing non-autoregressive methods. It can also conditionally generate molecular graphs satisfying various target conditions.

Keywords: Molecular graph, Variational autoencoder, Graph neural network, Deep learning

Introduction

In recent years, machine learning has been actively adopted to accelerate the discovery of novel molecules with desired properties [1–4]. While traditional approaches, such as manual design and enumeration [5–8], depend highly on domain knowledge and intuition of human experts, machine learning approaches have allowed automated design of desired molecules in a data-driven manner. Thus, they have attracted considerable attention from academia and industry. A typical way to accomplish so is to construct a generative model by learning from data to capture the underlying distribution of the data, and use it to generate new molecules [9, 10]. The large number of known molecules that have been discovered in the past can be a valuable source for the data [11, 12]. Although this research area is currently in the incipient stage and remains challenging, studies are increasingly performed, demonstrating improved effectiveness.

The main consideration is how to represent molecules to be processed within a model and to be generated by a model. Various types of molecular representations can be considered. Among them, most existing studies have employed a simplified molecular-input line-entry system (SMILES) [13] to represent molecules. SMILES is a line notation that encodes its graph structure via depth-first traversal into a sequence of symbols with a simple vocabulary and grammar rules. There have been implemented deep generative models based on a recurrent neural network (RNN), which learn a generative distribution from data to produce a SMILES string from a latent vector. They include RNN language models [14–17], variational autoencoders (VAEs) [18–21] and generative adversarial networks (GANs) [22, 23]. To conditionally generate SMILES strings with specified target properties, additional optimization procedures have been adopted along with the generative models, including Bayesian optimization [18] and reinforcement learning [14, 15, 22, 23]. Conditional generative models, which directly sample new SMILES strings from a conditional generative distribution without any extra optimization procedure, have also been presented for targeted generation [19, 24].

SMILES representation has proven useful in learning generative models for molecular design tasks owing to its simplicity. However, several limitations hinder the generation of chemically valid molecules. This representation cannot fully represent the entire chemical space, but only a part of the chemical space can be represented as SMILES strings. It is inadequate to capture the similarity between molecules, because a small perturbation in a molecule may result in a significant change in the representation. To address such limitations, numerous research attempts have been made to generate molecular graphs directly using generative models. A molecular graph is a natural representation of a molecule, which is expressed as an undirected graph of varying size whose nodes and edges correspond to atoms and bonds, respectively. This representation provides high coverage of the chemical space, and can convey various chemical features within it.

With recent advances in graph neural networks [25–27], various methods for extending deep generative models to molecular graph generation have been proposed. They can be categorized into two main approaches: non-autoregressive and autoregressive. The non-autoregressive approach allows more principled use of generative models, generating a molecular graph directly from a latent vector using a non-autoregressive distribution without any iterative procedure. Few methods based on this approach have been presented, owing to the challenge imposed by graph isomorphism, meaning that a molecular graph is invariant to permutations of its nodes. Notably, Simonovsky and Komodakis [28] presented a non-autoregressive VAE that generates molecular graphs, named GraphVAE. Its training requires calculating the reconstruction loss for generated graphs. The problem of graph isomorphism is addressed by a graph matching procedure that entails expensive computation. De Cao and Kipf [29] sidestepped graph isomorphism by using a non-autoregressive GAN for molecular graph generation, named MolGAN. Its training however suffers from the mode-collapse problem, thus less diverse molecular graphs are generated. The non-autoregressive approach successfully generates small molecular graphs in a very fast and efficient manner but suffers from difficulty in model training and a low validity ratio when generating larger graphs. Owing to such limitations, the autoregressive approach, which aims at sequentially generating a molecular graph node by node using an autoregressive distribution, has been a main research direction [30–33]. These methods successfully generate molecular graphs with a high validity ratio, while involves an iterative procedure for each generation which makes them less efficient.

This work focuses on the non-autoregressive approach for fast and efficient generation of molecular graphs. Here we propose an efficient learning method to train a VAE that generates molecular graphs in a non-autoregressive manner. To improve the molecular graph generation, we train the VAE by incorporating three additional learning objectives: approximate graph matching, reinforcement learning, and auxiliary property prediction. The trained VAE can almost always generate chemically valid molecular graphs with improved diversity compared with existing non-autoregressive methods. The proposed method also allows constrained generation of molecular graphs with specified target properties.

Methods

Molecules as graphs

In this work, we use the molecular graph representation defined as follows. A molecule is represented by an undirected graph with up to m nodes, where and represent the set of nodes and the set of edges, respectively. The node vectors and edge vectors are associated with heavy atoms and their bonds, respectively, in the molecule. It should be noted that because we use an undirected graph. For the i-th atom, is a p-dimensional vector formed by concatenating three one-hot vectors indicating the atom type, formal charge, and number of explicit hydrogens. The dimension p depends on the dataset used. For the bond between the i-th and j-th atoms, is a q-dimensional one-hot vector associated with the bond type. We kekulize the molecule for simplicity so that the only bond types to consider are single, double, triple, and none, hence . Additionally, the properties of the molecule are represented as a property vector .

Graph variational autoencoder

We construct a conditional version of the graph VAE [34] in a non-autoregressive manner. It seeks to find the generative distribution of conditioned on a latent vector and a property vector and parameterized by , denoted as . The prior distributions of and are assumed to be and , respectively. To address the intractability of the posterior distribution , we introduce an approximate posterior distribution , which has a normal distribution and is parameterized by .

The distributions and are called the encoder and decoder of the VAE, respectively. For the encoder, we use a message passing neural network (MPNN) [27], which is a variant of a graph neural network that operates directly on graphs of different sizes and is invariant to graph isomorphism. The encoder takes and and outputs the mean vector and variance vector , from which is sampled based on reparametrization as with . The decoder is modeled as a fully-connected neural network that outputs values at once from and with node-wise and edge-wise softmax activation. The output values form a probabilistic graph composed of m nodes and edges.

The original learning objective of the VAE is given with respect the parameters and as:

| 1 |

where the first and second terms on the right-hand side are regarded as the reconstruction loss and regularization loss, respectively. Owing to graph isomorphism, the calculation of the reconstruction loss necessitates a graph matching procedure that involves comparing an input graph and its probabilistic reconstruction which is computationally expensive. For example, the max-pooling matching algorithm has computational complexity of [28].

To make the learning more efficient, we introduce an approximate graph matching procedure, which aims to alleviate the computational burden for the reconstruction loss. Additionally, we incorporate reinforcement learning and auxiliary property prediction into the training of the VAE to further improve the generation performance. Details regarding the learning objectives utilized in this work are presented in the following subsection.

Approximate graph matching

The reconstruction loss involves comparing an original input graph and its reconstruction by the VAE. In this subsection, we denote the reconstruction of as a probabilistic graph , where and . Because the reconstruction loss must be invariant to graph isomorphism, a graph matching procedure that seeks the best possible matching between the two graphs is needed. To avoid expensive computation, we propose to use approximate graph matching. The main idea is to approximate the distance between and by comparing the numbers of atom types, bond types, atom-bond pair types, and atom-bond-atom pair types.

Assuming that each edge vector is represented as a four-dimensional vector as , the reconstruction loss is approximated as follows:

| 2 |

where , , , and . When calculating the approximated reconstruction loss, we discard the non-atom and non-bond types from the vectors. The first, second, third, and fourth terms on the right-hand side correspond to the comparison of the numbers of atom types, bond types, atom-bond pair types, and atom-bond-atom pair types, respectively. They are independent of node ordering because they summate over the nodes in a graph, and are thus invariant to graph isomorphism. All the operations in the above equation are differentiable.

Reinforcement learning

We further improve the VAE via reinforcement learning with the aim of generating chemically valid molecules. We adopt a deterministic policy gradient framework, in which the decoder of the VAE is regarded as a policy network that takes the two vectors and as state inputs. It outputs a probabilistic graph as an action from the state. The reward for the action is the chemical validity of the probabilistic graph, which is evaluated using an external reward function R. The reward function returns 1 if the probabilistic graph can be decoded into a chemically valid molecular graph and 0 otherwise. In this work, the chemical validity of a molecular graph is evaluated via a sanitization check. With the reward function, the policy network learns how to generate a probabilistic graph that fulfills the expected reward of 1.

We wish to optimize the molecular graph generation of the VAE for maximizing the external reward function R. Because the reward function is non-differentiable, it cannot be incorporated directly into the learning procedure. We build a reward network r that approximates the reward function R. The reward network is modeled as an MPNN with a sigmoid output. It takes a probabilistic graph as input and predicts its actual reward value. The reward network can backpropagate the VAE. We train the VAE to generate a probabilistic graph towards maximizing the output of the reward network.

For the learning objective, we derive two additional losses and as:

| 3 |

| 4 |

The VAE is trained for minimizing , while the reward network r is trained to minimize .

It should be noted that we can impose extra domain-specific constraints regarding structures or properties on the external reward function R for constrained generation. For example, we can define a blacklist of undesired substructures and make the value of the reward function 0 when its input contains any substructure in the blacklist. This prevents generated molecules from having undesired substructures.

Auxiliary property prediction

Augmenting a generative model with side information has known to improve the quality of generated samples as well as the stability of model training [19, 35]. We incorporate auxiliary property prediction into the VAE learning to enable generating probabilistic graphs that correspond to desired properties as well as to diversify the generated outcomes. We build a predictor network as an MPNN with l linear outputs. It learns from the training dataset to predict the property vector of a given graph. Because the predictor network can backpropagate the VAE, we train the VAE to generate a probabilistic graph whose corresponding is to be reconstructed by the predictor network.

For learning with auxiliary property prediction, we derive two additional losses and as:

| 5 |

| 6 |

Only the probabilistic graphs that are deemed valid by the external reward function R are incorporated into the first loss . The VAE is trained to minimize . Simultaneously, the predictor network f is trained to minimize .

Learning from data

The proposed model is composed of four main components: the encoder network , decoder network , reward network r, and predictor network f. The full learning objective combines the vanilla objective of VAE (1) along with objectives for approximate graph matching (2), reinforcement learning (3–4), and auxiliary property prediction (5–6). The objective functions for the VAE part and the other part are and , respectively, given as:

| 7 |

| 8 |

where and are hyperparameters that control the trade-off between different learning objectives.

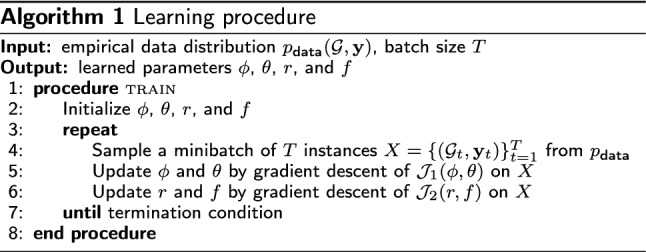

Given an empirical data distribution , we train the entire model for minimizing the two objective functions and simultaneously. For each iteration, a training batch X is sampled from the data distribution. The VAE parameters and are updated via gradient descent of on X, and the reward network r and predictor network f are updated via gradient descent of . Algorithm 1 presents the pseudocode of the learning procedure.

Molecular graph generation

Once the model is trained, we use the decoder to generate molecular graphs. For unconditional generation, is sampled from its prior distribution . To conditionally generate molecular graphs, is sampled from a conditional distribution. For example, if the target condition is given as , then . We sample from . Given and , the decoder returns a probabilistic output, which is decoded based on node-wise and edge-wise argmax to obtain a molecular graph as

| 9 |

We use a simple decoding method to discretize probabilistic outputs for deriving molecular graphs. Some studies reported that post-processing of probabilistic outputs based on such methods as maximum spanning tree [28] and beam search [36] can improve the validity of the generated molecular graphs.

Results and discussion

Datasets

We conducted experiments to investigate the effectiveness of the proposed method. In this work, we utilized two molecular datasets: QM9 and ZINC. They are publicly available and have commonly been used for the evaluation of molecular graph generation.

The QM9 dataset [37, 38] originally contains 133,885 organic molecules with at most nine heavy atoms of the following types: {C, N, O, F, none}. We sampled 100,000 unique molecular graphs from the original set. For each atom in a molecular graph, the formal charge and the number of explicit hydrogens belonged to and , respectively. Thus, the dimensions p and q were 12 and 4, respectively.

The ZINC dataset was constituted by sampling 100,000 unique molecular graphs from the drug-like part of the ZINC database [11]. Each molecular graph in the dataset contained up to 38 heavy atoms with ten atom types: {C, N, O, F, P, S, Cl, Br, I, none}. In the dataset used, the formal charge and the number of explicit hydrogens were in and , respectively. The dimensions p and q were 17 and 4, respectively.

We employed two properties that are readily calculable using the RDKit package [39]: the molecular weight (MolWt) and Wildman-Crippen partition coefficient (LogP) [40]. The property vector of each molecular graph was constituted with the three properties. Thus, the dimension l was 2. These properties can be evaluated efficiently without high costs for newly generated molecular graphs.

Experiments

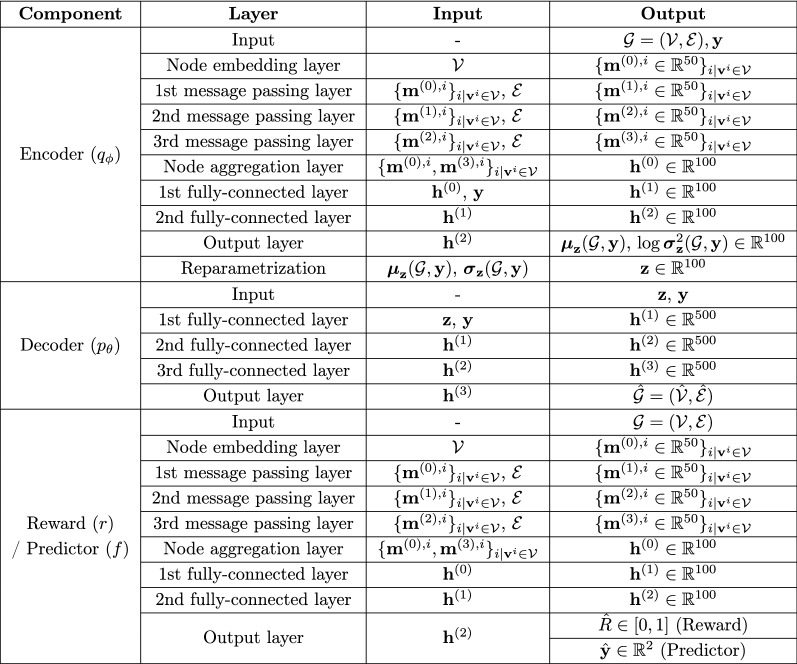

In the proposed method, the model architecture was determined as follows. For the molecular graph representation, the hyperparameter m was set as 5 plus the maximum number of heavy atoms in a molecule in the dataset used, i.e., m = 14 for QM9 and m = 43 for ZINC. The encoder, reward, and predictor networks were modeled as MPNNs. Each MPNN had three message passing layers with 50 hidden units, followed by a 100-dimensional node aggregation layer, which was then further processed by two fully-connected layers with 100 tanh hidden units. The decoder network was a fully-connected network with three fully-connected layers having dimensions of 500 with LeakyReLU activation. The dimension of was set as 100. Each property in was normalized to have a mean of 0 and a standard deviation of 1 for training. For the prior distribution , we estimated the mean vector and covariance matrix from the training dataset. For reinforcement learning, the external reward function was implemented using the RDKit package [39]. Figure 1 shows the architectural details of the model used in the experiments. For the objective functions, the hyperparameters and were both set as 1. Given a dataset, the model was trained for 50 epochs using the RMSProp optimizer with a learning rate of 0.0005 and a batch size of 20.

Fig. 1.

Model architecture used in case study

We compared the proposed method with two baseline methods that generate molecular graphs in a non-autoregressive manner: GraphVAE [28] and MolGAN [29]. We also performed an ablation study on our method to investigate the efficacy of reinforcement learning and auxiliary property prediction. We evaluated two ablation models, denoted A1 and A2. For model A1, we removed both the reward and predictor networks by setting and , which indicates that the reinforcement learning and auxiliary property prediction were excluded from the learning objective. For model A2, the predictor network was removed by setting and to discard auxiliary property prediction only.

The performance of each model for molecular graph generation was evaluated with regard to three metrics: Validity, Uniqueness, and Novelty. We sampled 10,000 molecular graphs from each model. Then, Validity was calculated as the fraction of valid molecular graphs out of 10,000 generated molecular graphs. Uniqueness was the fraction of valid graphs that were not duplicates. Novelty was the fraction of valid graphs that were not included in the training dataset. The values of each metric ranged from 0 to 1. Because the primary goal is to generate molecular graphs that are valid, unique, and novel, we calculated the geometric mean (G-mean) of the three metrics for an overall comparison among the models.

All the experiments were implemented based on GPU-accelerated TensorFlow in Python.

Results

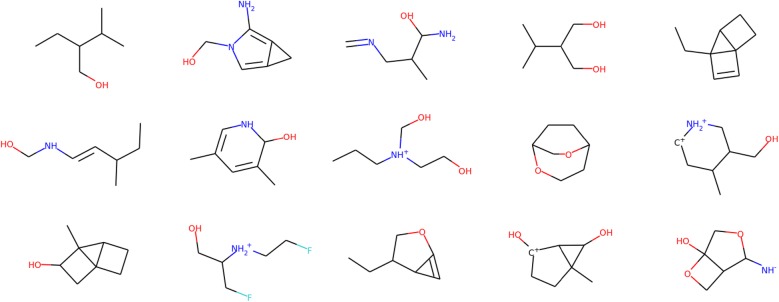

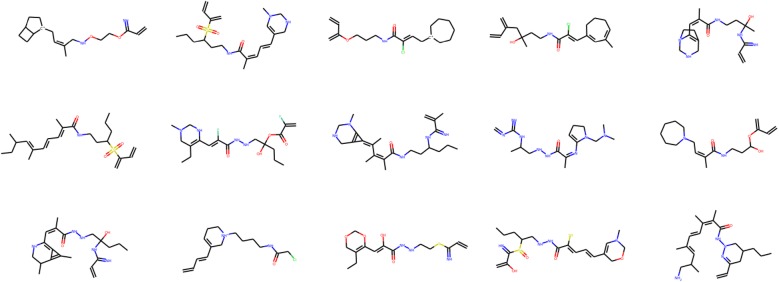

Table 1 presents the results of unconditional molecular graph generation for QM9 and ZINC. We report the validity, uniqueness, novelty, and G-mean for the five compared models. The best value in each row is highlighted in italics. Figures 2 and 3 show example molecular graphs generated randomly by the proposed models trained with QM9 and ZINC, respectively.

Table 1.

Performance comparison for unconditional molecular graph generation

| Dataset | Metric | GraphVAE [28] | MolGAN [29] | Proposed | ||

|---|---|---|---|---|---|---|

| A1 | A2 | Full | ||||

| QM9 | Validity | 0.610 | 0.981 | 0.550 | 0.965 | 0.945 |

| Uniqueness | 0.409 | 0.104 | 0.293 | 0.275 | 0.343 | |

| Novelty | 0.850 | 0.942 | 0.612 | 0.737 | 0.806 | |

| G-mean | 0.596 | 0.458 | 0.462 | 0.581 | 0.639 | |

| ZINC | Validity | 0.140 | 0.017 | 0.008 | 0.926 | 0.919 |

| Uniqueness | 0.316 | 0.201 | 0.949 | 0.614 | 0.762 | |

| Novelty | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | |

| G-mean | 0.354 | 0.151 | 0.197 | 0.828 | 0.888 | |

Best score for each metric is given in italic

Fig. 2.

Examples of newly generated molecular graphs from QM9

Fig. 3.

Examples of newly generated molecular graphs from ZINC

The proposed model exhibited the highest G-mean for both the QM9 and ZINC datasets, as the model achieved good performance for all three metrics. The proposed model exhibited a validity score of > 90% for both datasets, indicating that it almost always generated chemically valid molecules. Additionally, it generated more diverse molecules, as evident from its comparable uniqueness and novelty scores. In the case of ZINC, all the compared models yielded novelty of 1, indicating that all the generated molecules were not included in the training set. The baseline models yielded relatively good performance for QM9 but rarely generated valid molecular graphs for ZINC.

Compared with the ablated models, reinforcement learning helped the proposed model generate chemically valid molecules, as evident from the observation that model A2 was superior to model A1 in terms of validity. The auxiliary property prediction contributed to the diversification of the generated molecules, as the proposed model exhibited higher uniqueness than model A2.

Table 2 presents summary statistics for the newly generated molecules of each target condition by the proposed model. For conditional generation, the target conditions for MolWt and LogP were set as and , respectively, for QM9, and and , respectively, for ZINC. The property distributions obtained via unconditional generation were similar to those of the training set. Compared with the unconditional generation, the conditional generation results exhibited a slightly lower G-mean and a smaller number of unique molecular graphs. As shown in Table 2, when a target condition was set, the proposed model successfully generated molecular graphs whose properties were close to the target value.

Table 2.

Conditional molecular graph generation with proposed model

| Dataset | Target condition | G-mean | Unique count | MolWt | LogP |

|---|---|---|---|---|---|

| QM9 | Training set | – | 100,000 | 122.97 ± 7.61 | 0.14 ± 1.16 |

| Unconditional generation | 0.639 | 3243 | 123.01 ± 8.04 | − 0.06 ± 1.36 | |

| MolWt = 120 | 0.583 | 2316 | 121.85 ± 5.11 | 0.02 ± 1.36 | |

| MolWt = 125 | 0.543 | 1947 | 125.11 ± 4.56 | − 0.27 ± 1.22 | |

| MolWt = 130 | 0.482 | 1475 | 128.98 ± 4.27 | − 0.41 ± 1.33 | |

| LogP = − 0.4 | 0.576 | 2399 | 122.97 ± 8.26 | − 0.40 ± 0.73 | |

| LogP = 0.2 | 0.543 | 2099 | 122.53 ± 8.17 | 0.19 ± 0.75 | |

| LogP = 0.8 | 0.537 | 1989 | 122.17 ± 8.09 | 0.83 ± 0.72 | |

| ZINC | Training set | – | 100,000 | 357.94 ± 65.48 | 2.62 ± 1.36 |

| Unconditional generation | 0.888 | 7000 | 366.44 ± 51.63 | 2.49 ± 1.43 | |

| MolWt = 300 | 0.742 | 4090 | 313.12 ± 13.72 | 1.91 ± 1.50 | |

| MolWt = 350 | 0.796 | 5045 | 356.22 ± 12.66 | 2.24 ± 1.36 | |

| MolWt = 400 | 0.805 | 5212 | 400.95 ± 13.66 | 2.78 ± 1.30 | |

| LogP = 1.5 | 0.865 | 6470 | 352.33 ± 46.78 | 1.66 ± 0.94 | |

| LogP = 2.5 | 0.860 | 6356 | 366.64 ± 48.30 | 2.46 ± 0.92 | |

| LogP = 3.5 | 0.827 | 5658 | 381.96 ± 48.46 | 3.22 ± 0.85 |

GuacaMol benchmarks

We further investigated the effectiveness of the proposed method with the distribution-learning benchmarks in the GuacaMol framework [41]. The benchmarks are based on a standardized subset of the ChEMBL database [42]. As the training set for the proposed model, we used molecules with up to 50 heavy atoms from the original dataset. We trained the model for 20 epochs with the same experimental settings as before. Then, we evaluated Validity, Uniqueness, and Novelty of generated molecular graphs by the model. In addition, whether the model is able to reproduce the distribution of the training set was assessed with Kullback-Leibler Divergence (KLD) and Fréchet ChemNet Distance (FCD). For baselines, four SMILES generation models (LSTM, VAE, AAE, and ORGAN) and one molecular graph generation model (GraphMCTS) were compared, as implemented in [41].

The results for the distribution-learning benchmarks are shown in Table 3. Compared with the baseline models, the proposed model exhibited comparable or higher scores on validity, uniqueness, and novelty metrics. However, the proposed model yielded relatively lower KLD and FCD scores like GraphMCTS, which indicates that the proposed model was inferior on reproducing the underlying property distributions of the training set. Overall, the molecular graph generation models were very useful in generating chemically valid and diverse molecular graphs, while they were inferior to the SMILES generation models in distribution-learning.

Table 3.

Results of GuacaMol distribution-learning benchmarks

| Metric | SMILES-based | Graph-based | ||||

|---|---|---|---|---|---|---|

| LSTM | VAE | AAE | ORGAN | GraphMCTS | Proposed | |

| Validity | 0.959 | 0.870 | 0.822 | 0.379 | 1.000 | 0.830 |

| Uniqueness | 1.000 | 0.999 | 1.000 | 0.841 | 1.000 | 0.944 |

| Novelty | 0.912 | 0.974 | 0.998 | 0.687 | 0.994 | 1.000 |

| KLD | 0.991 | 0.982 | 0.886 | 0.267 | 0.522 | 0.554 |

| FCD | 0.913 | 0.863 | 0.529 | 0.000 | 0.015 | 0.016 |

Conclusion

While the non-autoregressive approach has the advantage of fast and computationally efficient generation of molecular graphs without any iterative procedure, the existing methods suffer from low performance. To overcome this limitation, we proposed an efficient learning method to build a graph VAE that generates molecular graphs in a non-autoregressive manner. In order to improve the generation performance, we introduced approximate graph matching, reinforcement learning, and auxiliary property prediction into the training of the model. Experimental validation using QM9 and ZINC datasets demonstrated the effectiveness of the proposed method. Compared with existing methods, the proposed method exhibited higher performance for molecular graph generation with a validity score of > 90% as well as improved diversity of the generated molecular graphs for both datasets. We also demonstrated that the proposed model can conditionally generate novel molecular graphs satisfying specified target conditions.

We believe that the proposed method can serve as an efficient tool for the discovery of new materials. Successful application of the method will allow automatic design of desired chemical structures with targeted conditions by learning implicit knowledge from data without explicit knowledge from human experts, thereby meriting further investigations. The generated molecular graphs can be examined further to obtain realistic chemical structures with desired properties.

One downside of the proposed method its high complexity with regard to space and time. Both the space and time complexity increase with the size of the graphs, owing to the use of graph neural networks. Thus, the proposed method would be impractical for learning and generating large molecular graphs, e.g., hundreds of heavy atoms in a molecule. In the future, research will be performed to reduce the complexity for generating larger molecular graphs efficiently.

Acknowledgements

The authors thank the anonymous reviewers for their valuable comments.

Authors' contributions

YK and SK designed and implemented the methodology with contributions from JY. WJS and DL performed the analysis. YSC and WJS supervised the research. YK and SK wrote the manuscript. All authors read and approved the final manuscript.

Funding

This work was supported by Samsung Advanced Institute of Technology, and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT; Ministry of Science and ICT) (Nos. NRF-2017R1C1B5075685 and NRF-2019R1A4A1024732).

Availability of data and materials

The source code and datasets used in this study are available online at http://github.com/seokhokang/graphvae_approx/. The original QM9 and ZINC data are publicly accessible from http://quantum-machine.org/datasets/ and http://zinc15.docking.org/, respectively.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Varnek A, Baskin I. Machine learning methods for property prediction in chemoinformatics: quo vadis? J Chem Inf Model. 2012;52(6):1413–1437. doi: 10.1021/ci200409x. [DOI] [PubMed] [Google Scholar]

- 2.Xu Y, Yao H, Lin K. An overview of neural networks for drug discovery and the inputs used. Expert Opin Drug Discov. 2018;13(12):1091–1102. doi: 10.1080/17460441.2018.1547278. [DOI] [PubMed] [Google Scholar]

- 3.Chen H, Engkvist O, Wang Y, Olivecrona M, Blaschke T. The rise of deep learning in drug discovery. Drug Discov Today. 2018;23(6):1241–1250. doi: 10.1016/j.drudis.2018.01.039. [DOI] [PubMed] [Google Scholar]

- 4.Goh GB, Hodas NO, Vishnu A. Deep learning for computational chemistry. J Comput Chem. 2017;38:1291–1307. doi: 10.1002/jcc.24764. [DOI] [PubMed] [Google Scholar]

- 5.Huc I, Lehn JM. Virtual combinatorial libraries: dynamic generation of molecular and supramolecular diversity by self-assembly. Proc Natl Acad Sci. 1997;94(6):2106–2110. doi: 10.1073/pnas.94.6.2106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Lehn JM. Dynamic combinatorial chemistry and virtual combinatorial libraries. Chem Eur J. 1999;5(9):2455–2463. doi: 10.1002/(SICI)1521-3765(19990903)5:9<2455::AID-CHEM2455>3.0.CO;2-H. [DOI] [Google Scholar]

- 7.Schneider G. Trends in virtual combinatorial library design. Curr Med Chem. 2002;9(23):2095–2101. doi: 10.2174/0929867023368755. [DOI] [PubMed] [Google Scholar]

- 8.Potyrailo R, Rajan K, Stoewe K, Takeuchi I, Chisholm B, Lam H. Combinatorial and high-throughput screening of materials libraries: review of state of the art. ACS Comb Sci. 2011;13(6):579–633. doi: 10.1021/co200007w. [DOI] [PubMed] [Google Scholar]

- 9.Schwalbe-Koda D, Gómez-Bombarelli R (2019) Generative models for automatic chemical design. arXiv preprint arXiv:190701632

- 10.Sanchez-Lengeling B, Aspuru-Guzik A. Inverse molecular design using machine learning: generative models for matter engineering. Science. 2018;361(6400):360–365. doi: 10.1126/science.aat2663. [DOI] [PubMed] [Google Scholar]

- 11.Sterling T, Irwin JJ. ZINC 15-ligand discovery for everyone. J Chem Inf Model. 2015;55(11):2324–2337. doi: 10.1021/acs.jcim.5b00559. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Kim S, Thiessen PA, Bolton EE, Chen J, Fu G, Gindulyte A, et al. PubChem substance and compound databases. Nucleic Acids Res. 2016;44(D1):D1202–D1213. doi: 10.1093/nar/gkv951. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Weininger D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J Chem Inf Comput Sci. 1988;28(1):31–36. doi: 10.1021/ci00057a005. [DOI] [Google Scholar]

- 14.Popova M, Isayev O, Tropsha A. Deep reinforcement learning for de-novo drug design. Sci Adv. 2018;4(7):eaap7885. doi: 10.1126/sciadv.aap7885. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Olivecrona M, Blaschke T, Engkvist O, Chen H. Molecular de-novo design through deep reinforcement learning. J Cheminformatics. 2017;9(48):1–14. doi: 10.1186/s13321-017-0235-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Segler MHS, Kogej T, Tyrchan C, Waller MP. Generating focused molecule libraries for drug discovery with recurrent neural networks. ACS Cent Sci. 2018;4:120–131. doi: 10.1021/acscentsci.7b00512. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Gupta A, Müller AT, Huisman BJH, Fuchs JA, Schneider P, Schneider G. Generative recurrent networks for de novo drug design. Mol Inf. 2018;37(1–2):1700111. doi: 10.1002/minf.201700111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Gómez-Bombarelli R, Wei JN, Duvenaud D, Hernández-Lobato JM, Sánchez-Lengeling B, Sheberla D, et al. Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent Sci. 2018;4(2):268–276. doi: 10.1021/acscentsci.7b00572. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Kang S, Cho K. Conditional molecular design with deep generative models. J Chem Inf Model. 2019;59(1):43–52. doi: 10.1021/acs.jcim.8b00263. [DOI] [PubMed] [Google Scholar]

- 20.Kusner MJ, Paige B, Hernández-Lobato JM (2017) Grammar variational autoencoder. In: Proceedings of international conference on machine learning, pp 1945–1954

- 21.Dai H, Tian Y, Dai B, Skiena S, Song L (2018) Syntax-directed variational autoencoder for structured data. In: Proceedings of international conference on learning representations

- 22.Guimaraes GL, Sanchez-Lengeling B, Farias PLC, Aspuru-Guzik A (2017) Objective-reinforced generative adversarial networks (ORGAN) for sequence generation models. arXiv preprint arXiv:170510843

- 23.Putin E, Asadulaev A, Ivanenkov Y, Aladinskiy V, Sanchez-Lengeling B, Aspuru-Guzik A, et al. Reinforced adversarial neural computer for de novo molecular design. J Chem Inf Model. 2018;58(6):1194–1204. doi: 10.1021/acs.jcim.7b00690. [DOI] [PubMed] [Google Scholar]

- 24.Lim J, Ryu S, Kim JW, Kim WY. Molecular generative model based on conditional variational autoencoder for de novo molecular design. J Cheminformatics. 2018;10(1):31. doi: 10.1186/s13321-018-0286-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Wu Z, Pan S, Chen F, Long G, Zhang C, Yu PS (2019) A comprehensive survey on graph neural networks. arXiv preprint arXiv:190100596 [DOI] [PubMed]

- 26.Battaglia PW, Hamrick JB, Bapst V, Sanchez-Gonzalez A, Zambaldi V, Malinowski M et al (2018) Relational inductive biases, deep learning, and graph networks. arXiv preprint arXiv:180601261

- 27.Gilmer J, Schoenholz SS, Riley PF, Vinyals O, Dahl GE (2017) Neural message passing for quantum chemistry. In: Proceedings of international conference on machine learning, pp 1263–1272

- 28.Simonovsky M, Komodakis N (2018) GraphVAE: towards generation of small graphs using variational autoencoders. In: Proceedings of international conference on artificial neural networks, pp 412–422

- 29.De Cao N, Kipf T (2018) MolGAN: an implicit generative model for small molecular graphs. arXiv preprint arXiv:180511973

- 30.Li Y, Vinyals O, Dyer C, Pascanu R, Battaglia P (2018) Learning deep generative models of graphs. arXiv preprint arXiv:180303324

- 31.You J, Ying R, Ren X, Hamilton W, Leskovec J (2018) GraphRNN: generating realistic graphs with deep auto-regressive models. In: Proceedings of international conference on machine learning, pp 5694–5703

- 32.Liu Q, Allamanis M, Brockschmidt M, Gaunt A (2018) Constrained graph variational autoencoders for molecule design. In: Advances in neural information processing systems, pp 7795–7804

- 33.You J, Liu B, Ying Z, Pande V, Leskovec J (2018) Graph convolutional policy network for goal-directed molecular graph generation. In: Advances in neural information processing systems, pp 6410–6421

- 34.Kingma DP, Welling M (2014) Auto-encoding variational Bayes. In: Proceedings of international conference on learning representations

- 35.Odena A, Olah C, Shlens J (2017) Conditional image synthesis with auxiliary classifier GANs. In: Proceedings of international conference on machine learning, pp 2642–2651

- 36.Bresson X, Laurent T (2019) A two-step graph convolutional decoder for molecule generation. arXiv preprint arXiv:190603412

- 37.Ruddigkeit L, Van Deursen R, Blum LC, Reymond JL. Enumeration of 166 billion organic small molecules in the chemical universe database GDB-17. J Chem Inf Model. 2012;52(11):2864–2875. doi: 10.1021/ci300415d. [DOI] [PubMed] [Google Scholar]

- 38.Ramakrishnan R, Dral PO, Rupp M, Von Lilienfeld OA. Quantum chemistry structures and properties of 134 kilo molecules. Sci Data. 2014;1(140022):1–7. doi: 10.1038/sdata.2014.22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Landrum G (2019) RDKit: open-source cheminformatics. http://www.rdkit.org. Accessed 10 June 2019

- 40.Wildman SA, Crippen GM. Prediction of physicochemical parameters by atomic contributions. J Chem Inf Comput Sci. 1999;39(5):868–873. doi: 10.1021/ci990307l. [DOI] [Google Scholar]

- 41.Brown N, Fiscato M, Segler MHS, Vaucher AC. GuacaMol: benchmarking models for de novo molecular design. J Chem Inf Model. 2019;59(3):1096–1108. doi: 10.1021/acs.jcim.8b00839. [DOI] [PubMed] [Google Scholar]

- 42.Gaulton A, Hersey A, Nowotka M, Bento AP, Chambers J, Mendez D, et al. The ChEMBL Database in 2017. Nucleic Acids Res. 2016;45(D1):D945–D954. doi: 10.1093/nar/gkw1074. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The source code and datasets used in this study are available online at http://github.com/seokhokang/graphvae_approx/. The original QM9 and ZINC data are publicly accessible from http://quantum-machine.org/datasets/ and http://zinc15.docking.org/, respectively.