Abstract

Machine learning techniques have gained prominence for the analysis of resting-state functional Magnetic Resonance Imaging (rs-fMRI) data. Here, we present an overview of various unsupervised and supervised machine learning applications to rs-fMRI. We offer a methodical taxonomy of machine learning methods in resting-state fMRI. We identify three major divisions of unsupervised learning methods with regard to their applications to rs-fMRI, based on whether they discover principal modes of variation across space, time or population. Next, we survey the algorithms and rs-fMRI feature representations that have driven the success of supervised subject-level predictions. The goal is to provide a high-level overview of the burgeoning field of rs-fMRI from the perspective of machine learning applications.

Keywords: Machine learning, resting-state, functional MRI, intrinsic networks, brain connectivity

1. Introduction

Resting-state fMRI (rs-fMRI) is a widely used neuroimaging tool that measures spontaneous fluctuations in the blood oxygen level-dependent (BOLD) signal across the whole brain, in the absence of any controlled experimental paradigm. In their seminal work, Biswal et al. [1] demonstrated temporal coherence of low-frequency spontaneous fluctuations between long-range, functionally related regions of the primary sensorimotor cortices even in the absence of an explicit task, suggesting that resting-state activity is neurologically significant. Several subsequent studies similarly reported that other collections of regions co-activated by a task (such as language, motor, attention, auditory or visual processing) show correlated fluctuations at rest [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]. These spontaneously co-fluctuating regions came to be known as resting-state networks (RSNs) or intrinsic brain networks. Henceforth, the term RSN denotes brain networks subserving shared functionality as discovered using rs-fMRI.

Rs-fMRI has enormous potential to advance our understanding of the brain’s functional organization and of how it is altered by damage or disease. A major emphasis in the field is on the analysis of resting-state functional connectivity (RSFC), which measures statistical dependence in BOLD fluctuations among spatially distributed brain regions. Disruptions in RSFC have been identified in several neurological and psychiatric disorders, such as Alzheimer’s disease [12, 13, 14], autism [15, 16, 17], depression [18, 19, 20] and schizophrenia [21, 22]. The dynamics of RSFC have also garnered considerable attention in the last few years, and a crucial challenge in rs-fMRI is the development of appropriate tools to capture the full extent of resting-state activity. Rs-fMRI captures a rich repertoire of intrinsic mental states or spontaneous thoughts and, given the necessary tools, has the potential to generate novel neuroscientific insights into the nature of brain disorders [23, 24, 25, 26, 27, 28].

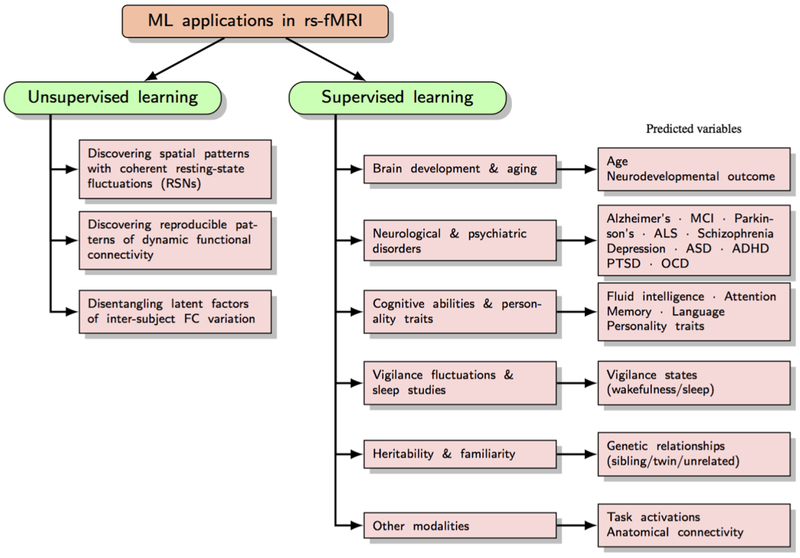

The study of rs-fMRI data is highly interdisciplinary, drawing heavily on fields such as machine learning, signal processing and graph theory. Machine learning methods provide a rich characterization of rs-fMRI, often in a data-driven manner. Unsupervised learning methods in rs-fMRI focus primarily on understanding the functional organization of the healthy brain and its dynamics. For instance, methods such as matrix decomposition or clustering can simultaneously expose multiple functional networks within the brain and also reveal the latent structure of dynamic functional connectivity.

Supervised learning techniques, on the other hand, can harness RSFC to make individual-level predictions. Substantial effort has been devoted to using rs-fMRI for classification of patients versus controls, or to predict disease prognosis and guide treatments. Another class of studies explores the extent to which individual differences in cognitive traits may be predicted by differences in RSFC, yielding promising results. Predictive approaches can also be used to address research questions of interest in neuroscience. For example, is RSFC heritable? Such questions can be formulated within a prediction framework to test novel hypotheses.

From mapping functional networks to making individual-level predictions, the applications of machine learning in rs-fMRI are far-reaching. The goal of this review is to present in a concise manner the role machine learning has played in generating pioneering insights from rs-fMRI data, and describe the evolution of machine learning applications in rs-fMRI. We will present a review of the key ideas and application areas for machine learning in rs-fMRI rather than delving into the precise technical nuances of the machine learning algorithms themselves. In light of the recent developments and burgeoning potential of the field, we discuss current challenges and promising directions for future work.

1.1. Resting-state fMRI: A Historical Perspective

Until the 2000s, task-fMRI was the predominant neuroimaging tool to explore the functions of different brain regions and how they coordinate to create diverse mental representations of cognitive functions. The discovery of correlated spontaneous fluctuations within known cortical networks by Biswal et al. [1] and a plethora of follow-up studies established rs-fMRI as a useful tool to explore the brain’s functional architecture. Studies adopting the resting-state paradigm have grown at an unprecedented scale over the last decade. Resting-state protocols are much simpler than task-based experiments, yet can provide critical insights into the functional connectivity of the healthy brain as well as its disruptions in disease. The resting-state paradigm is also attractive because it facilitates multi-site collaborations, unlike task-fMRI, which is prone to confounds induced by local experimental settings. This has enabled network analysis at an unparalleled scale.

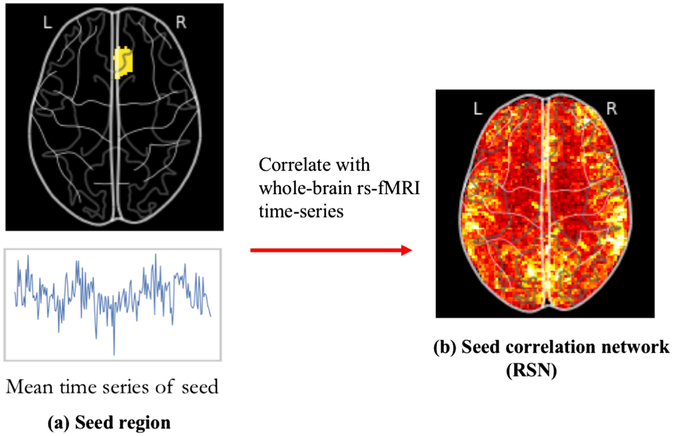

Traditionally, rs-fMRI studies have focused on identifying spatially distinct yet functionally associated brain regions through seed-based analysis (SBA). In this approach, seed voxels or regions of interest are selected a priori, and the time series from each seed is correlated with the time series of all brain voxels to generate a series of correlation maps. SBA, while simple and easily interpretable, is limited because its results depend heavily on manual seed selection and, in its simplest form, it can reveal only one functional system at a time.
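The core computation of SBA can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the data are taken to be already preprocessed and arranged as a (time × voxel) array, the seed time series is the mean signal over the seed voxels, the helper name `seed_correlation_map` is hypothetical, and the data are synthetic.

```python
import numpy as np

def seed_correlation_map(data, seed_mask):
    """Pearson correlation between a seed time series and every voxel.

    data: (T, V) array, T time points by V voxels (assumed preprocessed).
    seed_mask: boolean (V,) array marking the voxels that form the seed.
    Returns a (V,) array with one correlation value per voxel.
    """
    seed_ts = data[:, seed_mask].mean(axis=1)            # mean signal within the seed
    # z-score over time so that an averaged dot product equals Pearson's r
    z = (data - data.mean(axis=0)) / data.std(axis=0)
    s = (seed_ts - seed_ts.mean()) / seed_ts.std()
    return z.T @ s / data.shape[0]

# Synthetic example: voxels 0-9 share a common fluctuation, the rest are noise.
rng = np.random.default_rng(0)
T, V = 200, 50
shared = rng.standard_normal(T)
data = rng.standard_normal((T, V))
data[:, :10] += 2.0 * shared[:, None]
seed = np.zeros(V, dtype=bool)
seed[:3] = True                                          # hypothetical seed: voxels 0-2
r = seed_correlation_map(data, seed)
```

Voxels that share the seed's fluctuation receive high correlations, while the remaining voxels stay near zero; thresholding `r` would give a single functional connectivity map, which illustrates why SBA recovers one system per seed.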

Decomposition methods like Independent Component Analysis (ICA) emerged as a highly promising alternative to seed-based correlation analysis in the early 2000s [29, 2, 30]. This was followed by other unsupervised learning techniques such as clustering. In contrast to seed-based methods, which explore networks associated with a seed voxel (such as motor or visual functional connectivity maps), this new class of model-free methods based on decomposition or clustering explored RSNs simultaneously across the whole brain, for individual or group-level analysis. Regardless of the analysis tool, studies have largely converged in reporting multiple robust resting-state networks across the brain, such as the primary sensorimotor network, the primary visual network, fronto-parietal attention networks and the well-studied default mode network (DMN). Regions in the DMN, such as the posterior cingulate cortex, precuneus, and ventral and dorsal medial prefrontal cortex, show increased levels of activity during rest, suggesting that this network represents the baseline or default functioning of the human brain. The DMN has sparked considerable interest in the rs-fMRI community [31], and several studies have consequently explored disruptions in DMN resting-state connectivity in various neurological and psychiatric disorders, including autism, schizophrenia and Alzheimer’s disease [32, 33, 34].

Despite the widespread success and popularity of rs-fMRI, the causal origins of ongoing spontaneous fluctuations in the resting brain remain largely unknown. Several studies explored whether coherent resting-state fluctuations have a neuronal origin, or are merely manifestations of aliasing or of physiological artifacts introduced by the cardiac or respiratory cycles. Over time, evidence in support of a neuronal basis of BOLD-based resting-state functional connectivity has accumulated from multiple complementary sources. This includes (a) the observed reproducibility of RSFC patterns across independent subject cohorts [5, 4], (b) its persistence in the absence of aliasing and its distinct separability from noise components [5, 35], (c) its similarity to known functional networks [1, 2, 11], (d) its consistency with anatomy [36, 37], (e) its correlation with cortical activity studied using other modalities [38, 39, 40] and, finally, (f) its systematic alterations in disease [23, 24, 25].

1.2. Application of Machine Learning in rs-fMRI

A vast majority of the literature on machine learning for rs-fMRI is devoted to unsupervised learning approaches. Unlike task-driven studies, modelling resting-state activity is not straightforward, since there is no controlled stimulus driving these fluctuations. Hence, analysis methods used for characterizing the spatio-temporal patterns observed in task-based fMRI are generally not suited to rs-fMRI. Given the high-dimensional nature of fMRI data, it is unsurprising that early analytic approaches focused on decomposition or clustering techniques to better characterize the data in the spatial and temporal domains. Unsupervised learning approaches like ICA catalyzed the discovery of the so-called resting-state networks (RSNs). Subsequently, the field of resting-state brain mapping expanded with the primary goal of creating brain parcellations, i.e., optimal groupings of voxels (or vertices, in the case of surface representations) that describe functionally coherent spatial compartments within the brain. These parcellations aid in understanding human functional organization by providing a reference map of areas for exploring the brain’s connectivity and function. Additionally, they serve as a popular data reduction technique for statistical analysis or supervised machine learning.

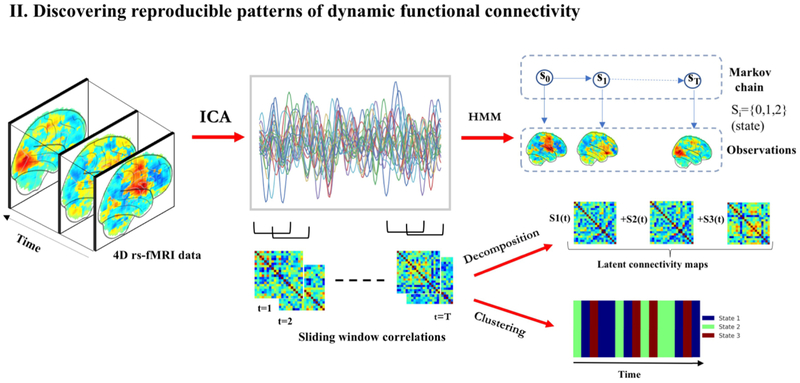

More recently, departing from the stationary representation of brain networks, studies have shown that RSFC exhibits meaningful variations during the course of a typical rs-fMRI scan [41, 42]. Because brain activity at rest is largely unconstrained, these network dynamics are of particular interest. Using unsupervised pattern discovery methods, resting-state patterns have been shown to transition between discrete, recurring functional connectivity "states" representing diverse mental processes [42, 43, 44]. In the simplest and most common approach, dynamic functional connectivity is expressed using sliding-window correlations: functional connectivity is estimated within a temporal window of fixed length, which is then shifted in fixed time steps to yield a sequence of correlation matrices. Recurring correlation patterns can then be identified from this sequence through decomposition or clustering. This dynamic nature of functional connectivity opens new avenues for understanding the flexibility of different connections within the brain as they relate to behavioral dynamics, with potential clinical utility [45].
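The sliding-window procedure described above can be sketched with NumPy as follows. This is an illustrative sketch under stated assumptions: regional time series are given as a (time × region) array, windows are rectangular (no tapering), the helper names `sliding_window_fc` and `upper_triangle` are hypothetical, and the data are synthetic with a connectivity change halfway through the scan.

```python
import numpy as np

def sliding_window_fc(ts, width, step):
    """Correlation matrices in rectangular windows of `width` samples, shifted by `step`.

    ts: (T, R) array of regional time series. Returns (n_windows, R, R).
    """
    T = ts.shape[0]
    starts = range(0, T - width + 1, step)
    return np.stack([np.corrcoef(ts[s:s + width].T) for s in starts])

def upper_triangle(mats):
    """Vectorize each symmetric matrix by its strictly upper-triangular entries."""
    rows, cols = np.triu_indices(mats.shape[1], k=1)
    return mats[:, rows, cols]

# Synthetic example: regions 0/1 are coupled in the first half, regions 2/3 in the second.
rng = np.random.default_rng(0)
T, R = 300, 4
ts = rng.standard_normal((T, R))
s = rng.standard_normal(T)
ts[:150, 0] += 2 * s[:150]
ts[:150, 1] += 2 * s[:150]
ts[150:, 2] += 2 * s[150:]
ts[150:, 3] += 2 * s[150:]
fc = sliding_window_fc(ts, width=30, step=10)   # shape (28, 4, 4)
vec = upper_triangle(fc)                        # shape (28, 6), one row per window
```

Each row of `vec` summarizes one window; running a clustering algorithm such as k-means on these rows is one way to group windows into recurring connectivity states. Window length and step are free design choices here, not values taken from the studies cited above.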

Another, perhaps clinically more promising, application of machine learning in rs-fMRI expanded in the late 2000s. This new class of applications leveraged supervised machine learning for individual-level predictions. The covariance structure of resting-state activity, popularly known as the "connectome", has garnered significant interest in neuroscience as a sensitive biomarker of disease. Studies have further shown that an individual’s connectome is unique and reliable, akin to a fingerprint [46]. Machine learning can exploit these neuroimaging-based biomarkers to build diagnostic or prognostic tools. Visualization and interpretation of these models can complement statistical analysis to provide novel insights into the dysfunction of resting-state patterns in brain disorders. Given the prominence of deep learning today, several novel neural-network-based approaches have also emerged for the analysis of rs-fMRI data, most of which target connectomic feature extraction for single-subject predictions.

In order to organize the work in this rapidly growing field, we sub-divide the machine learning approaches into different classes by methods and application focus. We first differentiate among unsupervised learning approaches based on whether their main focus is to discover (a) the underlying spatial organization that is reflected in coherent fluctuations, (b) the structure in temporal dynamics of resting-state connectivity, or (c) population-level structure for inter-subject comparisons. Next, we move on to discuss supervised learning. We organize this section by discussing the relevant rs-fMRI features employed in these models, followed by commonly used training algorithms, and finally the various application areas where rs-fMRI has shown promise in performing predictions.

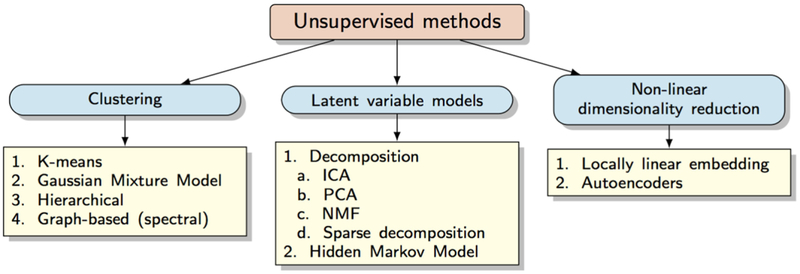

2. Unsupervised learning methods

The primary objective of unsupervised learning is to discover latent representations and disentangle the explanatory factors for variation in rich, unlabelled data. These learning methods do not receive any kind of supervision in the form of target outputs (or labels) to guide the learning process. Instead, they focus on learning structure in the data in order to extract relevant signal from noise. Below, we review some important unsupervised learning methods that have advanced rs-fMRI analysis.

2.1. Clustering

Given data points $\{X_1, \dots, X_n\}$, the goal of clustering is to partition the data into $K$ disjoint groups $\{C_1, \dots, C_K\}$. Clustering algorithms differ in their clustering objective, which typically maximizes some notion of within-cluster similarity and/or between-cluster dissimilarity.

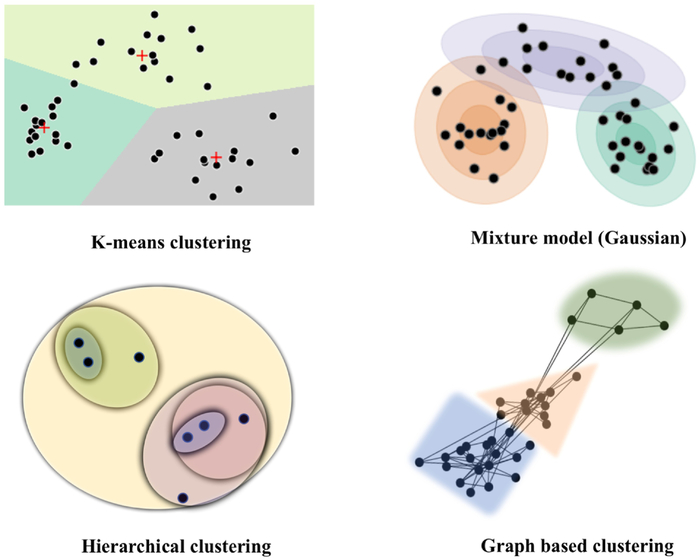

K-means.

K-means clustering is to date the most popular algorithm for partitioning data. It aims to minimize the within-cluster variance. Formally, this corresponds to the following clustering objective,

$$\min_{C_1, \dots, C_K} \sum_{j=1}^{K} \sum_{X_i \in C_j} \lVert X_i - \mu_j \rVert_2^2, \qquad \mu_j = \frac{1}{n_j} \sum_{X_i \in C_j} X_i,$$

where $n_j$ denotes the cardinality of set $C_j$. This optimization problem is typically solved using an iterative procedure known as Lloyd’s algorithm, which converges to a local minimum. The algorithm begins with initial estimates of the cluster centroids and iteratively refines them by (a) assigning each datum to its closest centroid, and (b) updating the centroids based on these new assignments.
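The two alternating steps of Lloyd’s algorithm can be sketched in NumPy as below. This is a minimal sketch, not a production implementation: the function name `kmeans` is hypothetical, and the k-means++ seeding used for the initial centroids is an added choice that the text does not prescribe (it simply makes a poor local minimum less likely on the toy data).

```python
import numpy as np

def kmeans(X, K, n_iter=100, seed=0):
    """Lloyd's algorithm. X: (n, d). Returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    # k-means++ seeding (an added choice): sample far-apart initial centroids
    centroids = X[[rng.integers(len(X))]]
    for _ in range(1, K):
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        centroids = np.vstack([centroids, X[rng.choice(len(X), p=d2 / d2.sum())]])
    centroids = centroids.astype(float)
    for _ in range(n_iter):
        # (a) assignment step: nearest centroid in squared Euclidean distance
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # (b) update step: each centroid becomes the mean of its members
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k) else centroids[k]
                        for k in range(K)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids
```

Because each step can only decrease the objective, the loop stops at a fixed point; restarting from several seeds and keeping the lowest-objective solution is a common safeguard against local minima.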

Gaussian mixture models.

Mixture models are often used to represent the probability density of complex multimodal data with hidden components. These models are constructed as mixtures of arbitrary unimodal distributions, each representing a distinct cluster. In a Gaussian mixture model (GMM), each $X_i$ is assumed to be generated by a two-step process: (a) first, a latent component $z_i \in \{1, \dots, K\}$ is sampled, $z_i \sim \mathrm{Multinomial}(\phi)$ with $\phi_k = P(z_i = k)$; then (b) $X_i$ is drawn from the multivariate Gaussian selected by $z_i$, i.e., $X_i \mid z_i = k \sim \mathcal{N}(\mu_k, \Sigma_k)$, where $\mu_k$ and $\Sigma_k$ denote the mean and covariance of the $k$-th Gaussian. Each Gaussian component thus denotes a unique cluster. The model parameters $\{\phi, \mu, \Sigma\}$ are obtained by maximizing the data log-likelihood, in which the latent variables $z_i$ are marginalized out,

$$\ell(\phi, \mu, \Sigma) = \sum_{i=1}^{n} \log \sum_{k=1}^{K} \phi_k \, \mathcal{N}(X_i \mid \mu_k, \Sigma_k).$$

Maximum likelihood estimates of GMMs are usually obtained using the Expectation-Maximization (EM) algorithm, which iteratively maximizes the expected complete-data log-likelihood.
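EM for a GMM alternates between computing responsibilities (E-step) and closed-form parameter updates (M-step). The sketch below is a minimal NumPy illustration under stated assumptions: the names `log_gaussian` and `gmm_em` are hypothetical, the farthest-point initialization of the means and the small ridge `reg` added to each covariance are illustrative choices, and the data in the usage check are synthetic.

```python
import numpy as np

def log_gaussian(X, mean, cov):
    """Log-density of N(mean, cov) at each row of X (via a Cholesky factor)."""
    d = X.shape[1]
    L = np.linalg.cholesky(cov)
    sol = np.linalg.solve(L, (X - mean).T)        # L^{-1} (x - mean) for every row
    maha = (sol ** 2).sum(axis=0)                 # squared Mahalanobis distances
    logdet = 2.0 * np.log(np.diag(L)).sum()
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)

def gmm_em(X, K, n_iter=200, tol=1e-8, reg=1e-6, seed=0):
    """EM for a Gaussian mixture. X: (n, d). Returns (phi, mu, cov)."""
    n, d = X.shape
    rng = np.random.default_rng(seed)
    # farthest-point initialization of the means (an illustrative choice)
    idx = [int(rng.integers(n))]
    for _ in range(1, K):
        dist = ((X[:, None, :] - X[idx][None, :, :]) ** 2).sum(axis=2).min(axis=1)
        idx.append(int(dist.argmax()))
    mu = X[idx].astype(float)
    phi = np.full(K, 1.0 / K)
    cov = np.stack([np.cov(X.T) + reg * np.eye(d)] * K)
    prev = -np.inf
    for _ in range(n_iter):
        # E-step: responsibilities r[i, k] = P(z_i = k | X_i), computed in log space
        logp = np.column_stack([np.log(phi[k]) + log_gaussian(X, mu[k], cov[k])
                                for k in range(K)])
        m = logp.max(axis=1, keepdims=True)
        log_norm = m + np.log(np.exp(logp - m).sum(axis=1, keepdims=True))
        r = np.exp(logp - log_norm)
        loglik = log_norm.sum()                   # data log-likelihood at current parameters
        # M-step: closed-form updates of phi, mu and Sigma
        nk = r.sum(axis=0)
        phi = nk / n
        mu = (r.T @ X) / nk[:, None]
        for k in range(K):
            diff = X - mu[k]
            cov[k] = (r[:, k, None] * diff).T @ diff / nk[k] + reg * np.eye(d)
        if loglik - prev < tol:
            break
        prev = loglik
    return phi, mu, cov
```

Unlike k-means, each point receives a soft assignment `r[i, k]`, and each cluster has its own covariance, so elongated or differently sized clusters can be modelled.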

Hierarchical clustering.

Hierarchical clustering methods group the data into a set of nested partitions. This multi-resolution structure is often represented with a cluster tree, or dendrogram. Hierarchical clustering methods are either agglomerative or divisive, depending on whether clusters are identified in a bottom-up or top-down fashion, respectively. Hierarchical agglomerative clustering (HAC), the more dominant approach, initially treats each data point as a singleton cluster and then successively merges clusters according to a pre-specified distance criterion until a single cluster containing all observations is formed. Many such criteria, referred to as linkage criteria, have been proposed in the literature to optimize different goals of hierarchical clustering. These include (a) single linkage, where the distance between clusters $C_1$ and $C_2$ is defined as the distance between their closest points, i.e., $d(C_1, C_2) = \min_{x \in C_1, y \in C_2} d(x, y)$; (b) complete linkage, where this distance is measured between the farthest points, $d(C_1, C_2) = \max_{x \in C_1, y \in C_2} d(x, y)$; and (c) average linkage, which measures the average distance between members, $d(C_1, C_2) = \frac{1}{|C_1||C_2|} \sum_{x \in C_1} \sum_{y \in C_2} d(x, y)$. Here, $d$ represents the dissimilarity between observations. Alternative merging criteria have also been proposed, the most popular being Ward’s criterion, which measures how much the within-cluster variance would increase when merging two clusters and merges the pair that minimizes this cost. A major drawback of HAC is its computational complexity, which renders it impractical for applications with large numbers of observations.
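The following naive NumPy sketch makes the three linkage criteria concrete. It is an illustration only, assuming Euclidean dissimilarity $d$; the function name `hac` is hypothetical, Ward’s criterion is not implemented, and instead of storing the full dendrogram the loop simply stops once `n_clusters` clusters remain. Its cubic cost also illustrates the scalability problem noted above.

```python
import numpy as np

def hac(X, n_clusters, linkage="average"):
    """Naive agglomerative clustering (roughly O(n^3)); returns a label per row of X."""
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))   # pairwise d(x, y)
    clusters = [[i] for i in range(len(X))]                           # start from singletons
    agg = {"single": np.min, "complete": np.max, "average": np.mean}[linkage]
    while len(clusters) > n_clusters:
        best, pair = np.inf, None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # linkage distance between clusters a and b
                dist = agg(D[np.ix_(clusters[a], clusters[b])])
                if dist < best:
                    best, pair = dist, (a, b)
        a, b = pair
        clusters[a] = clusters[a] + clusters[b]       # merge the closest pair
        del clusters[b]                               # b > a, so index a is unaffected
    labels = np.empty(len(X), dtype=int)
    for k, members in enumerate(clusters):
        labels[members] = k
    return labels
```

Swapping the `linkage` argument changes only how the distance between two clusters is aggregated from the point-wise dissimilarities, which mirrors the definitions in the paragraph above.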

Graph-based clustering.

Graph-based clustering forms another class of similarity-based partitioning methods, suited to data that can be represented as a graph. Given a weighted undirected graph $G = \{V, E\}$ with vertex set $V$ and edge set $E$, most graph-partitioning methods optimize a dissociation measure, such as the normalized cut (Ncut). The edge weights $w(i, j)$ are a function of the similarity between vertices $i$ and $j$. Ncut computes the total weight of the edges connecting two partitions and normalizes it by each partition’s total connection weight to all nodes in the graph. The two-way normalized-cut criterion divides $G$ into disjoint partitions $A$ and $B$ ($A \cup B = V$, $A \cap B = \emptyset$) by simultaneously minimizing between-cluster similarity and maximizing within-cluster similarity. This objective is expressed as

$$\mathrm{Ncut}(A, B) = \frac{\mathrm{cut}(A, B)}{\mathrm{assoc}(A, V)} + \frac{\mathrm{cut}(A, B)}{\mathrm{assoc}(B, V)}, \qquad \mathrm{cut}(A, B) = \sum_{i \in A, \, j \in B} w(i, j), \qquad \mathrm{assoc}(A, V) = \sum_{i \in A, \, j \in V} w(i, j).$$

However, minimizing this objective exactly is an NP-hard problem. Spectral clustering algorithms therefore solve a continuous relaxation of it, and the approach can be extended to obtain a K-way partitioning of the graph. Graph-based clustering is often more resilient to outliers than k-means or hierarchical clustering.
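A two-way spectral relaxation of Ncut can be sketched with NumPy as follows. This is a hedged sketch rather than a definitive implementation: it takes the eigenvector with the second-smallest eigenvalue of the symmetric normalized Laplacian, maps it back to the generalized eigenproblem $(D - W)y = \lambda D y$, and splits vertices by the sign of $y$ (thresholding at zero is one simple choice; other splitting rules exist). The function names and the Gaussian-kernel affinity in the usage check are assumptions for illustration.

```python
import numpy as np

def ncut_value(W, A):
    """Ncut(A, B) for a boolean membership vector A (B is its complement)."""
    cut = W[np.ix_(A, ~A)].sum()
    return cut / W[A].sum() + cut / W[~A].sum()

def ncut_bipartition(W):
    """Spectral relaxation of the two-way normalized cut.

    W: symmetric (n, n) non-negative affinity matrix.
    Returns a boolean array: True for vertices assigned to partition A.
    """
    deg = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    # symmetric normalized Laplacian  L = I - D^{-1/2} W D^{-1/2}
    L = np.eye(len(W)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    vals, vecs = np.linalg.eigh(L)            # eigenvalues in ascending order
    y = d_inv_sqrt * vecs[:, 1]               # solves (D - W) y = lambda D y
    return y > 0
```

The relaxed indicator `y` is real-valued, so a discretization step (here a sign split) is needed to recover a partition; `ncut_value` can be used to compare candidate splits against the objective above.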

2.2. Latent variable models

2.2.1. Decomposition

Decomposition or factorization approaches assume that the observed data can be decomposed as a product of simpler matrices, often imposing a specific structure and/or sparsity on these individual matrices. Formally, given data points $X = [x_1, \dots, x_n]$ with $x_i \in \mathbb{R}^D$, linear decomposition techniques seek a basis set $W = [w_1, \dots, w_K]$ such that the linear space spanned by $W$ closely reconstructs $X$:

$$x_i \approx \sum_{k=1}^{K} z_{ik} \, w_k = W z_i.$$

Here, each data point $x_i$ is characterized by unique coefficients $z_i \in \mathbb{R}^K$ for the basis set $W$. Typically, $K < D$, so that decomposition amounts to dimensionality reduction. In matrix notation, the goal is to find $W$ and $Z$ such that $X \approx WZ$, where $Z = [z_1, \dots, z_n]$. This ill-posed problem is generally solved by constraining the structure of $W$ and/or $Z$.

Principal component analysis (PCA).

PCA is a linear projection technique widely used for dimensionality reduction. The goal of PCA is to find an orthonormal basis $W$ that maximizes the variance captured by the projected data $Z = W^\top X$, with $X$ assumed to be mean-centered. This is equivalent to minimizing the error of reconstructing the data points from the low-dimensional representation $Z$. Mathematically, this amounts to solving the following optimization problem,

$$\min_{W \in \mathcal{O}^{D \times K}} \lVert X - W W^\top X \rVert_F^2,$$

where $\lVert \cdot \rVert_F$ denotes the Frobenius norm and $\mathcal{O}^{D \times K}$ denotes the set of $D \times K$ matrices with orthonormal columns.
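The PCA problem above has a closed-form solution: the optimal $W$ consists of the top-$K$ left singular vectors of the centered data matrix, and the minimum reconstruction error equals the sum of the discarded squared singular values. A minimal NumPy sketch, assuming the text’s convention that columns of `X` are data points (the function name `pca` is hypothetical):

```python
import numpy as np

def pca(X, K):
    """PCA of X with shape (D, n): columns are data points, as in the text.

    Returns (W, Z): an orthonormal basis W (D, K) and the projections Z = W^T Xc (K, n).
    """
    Xc = X - X.mean(axis=1, keepdims=True)            # center each feature
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = U[:, :K]                                      # top-K left singular vectors
    Z = W.T @ Xc                                      # low-dimensional representation
    return W, Z

# Synthetic data: rank-3 structure in 10 dimensions plus small noise.
rng = np.random.default_rng(5)
X = rng.standard_normal((10, 3)) @ rng.standard_normal((3, 200)) + 0.1 * rng.standard_normal((10, 200))
W, Z = pca(X, 3)
```

The rows of `Z` come out ordered by decreasing variance, which is the component ordering that ICA, discussed next, does not provide.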

Independent component analysis (ICA).

Independent Component Analysis (ICA) is a popular method for decomposing data as a linear combination of statistically independent components. In ICA terminology, $W$ is known as the mixing matrix, whereas $Z$ comprises the source signals. In the above formalism, ICA assumes that the sources, i.e., the rows of $Z$, are statistically independent. The source signals are recovered using an unmixing matrix $U = W^{-1}$: since $X = WZ$, we obtain $Z = UX$. Popular algorithms thus recover the sources by estimating $U$ such that the rows of $UX$ are as statistically independent as possible. Common ICA algorithms approximate independence by either minimizing the mutual information between sources (InfoMax) or maximizing their non-Gaussianity (FastICA). ICA usually employs a full-rank (square) factorization and is often preceded by PCA for dimensionality reduction and whitening.
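To make the non-Gaussianity route concrete, the sketch below implements a deflationary, one-unit-at-a-time variant of FastICA with the tanh nonlinearity, preceded by PCA whitening. It is a simplified illustration under stated assumptions (the function name `fast_ica`, the toy sources and the mixing matrix are hypothetical), not the algorithm used by any particular rs-fMRI toolbox.

```python
import numpy as np

def fast_ica(X, K, n_iter=200, tol=1e-6, seed=0):
    """Deflationary FastICA with the tanh nonlinearity.

    X: (D, n) observed mixtures (columns are samples).
    Returns the estimated sources (K, n) and the overall unmixing matrix (K, D).
    """
    rng = np.random.default_rng(seed)
    Xc = X - X.mean(axis=1, keepdims=True)
    # PCA whitening: keep the top-K eigenvectors and rescale to unit variance
    vals, vecs = np.linalg.eigh(np.cov(Xc))
    top = np.argsort(vals)[::-1][:K]
    whitener = (vecs[:, top] / np.sqrt(vals[top])).T     # (K, D)
    Xw = whitener @ Xc
    U = np.zeros((K, K))                                  # unmixing matrix in whitened space
    for k in range(K):
        u = rng.standard_normal(K)
        u /= np.linalg.norm(u)
        for _ in range(n_iter):
            g = np.tanh(u @ Xw)
            # fixed-point update: E[x g(u^T x)] - E[g'(u^T x)] u
            u_new = (Xw * g).mean(axis=1) - (1.0 - g ** 2).mean() * u
            u_new -= U[:k].T @ (U[:k] @ u_new)            # deflation: orthogonal to earlier rows
            u_new /= np.linalg.norm(u_new)
            done = abs(abs(u_new @ u) - 1.0) < tol
            u = u_new
            if done:
                break
        U[k] = u
    return U @ Xw, U @ whitener

rng = np.random.default_rng(6)
t = np.linspace(0, 40, 2000)
S = np.vstack([np.sin(2 * t), rng.laplace(size=2000)])   # independent, non-Gaussian sources
X = np.array([[1.0, 0.5], [0.3, 1.0]]) @ S              # hypothetical mixing matrix
S_hat, U_total = fast_ica(X, K=2)
```

The recovered sources match the true ones only up to permutation, sign and scale, an ambiguity inherent to ICA rather than to this sketch.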

Sparse dictionary learning.

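Sparse dictionary learning is formulated as a linear decomposition problem, similar to ICA/PCA, but with sparsity constraints on the components $Z$. This results in a non-convex optimization problem of the following form:

$$\min_{W, Z} \lVert X - WZ \rVert_F^2 + \lambda \sum_{i=1}^{n} \lVert z_i \rVert_0,$$

where $\lambda > 0$ controls the trade-off between reconstruction error and sparsity. In most practical applications, this optimization problem is relaxed by replacing the $L_0$-norm with the $L_1$-norm, and the norms of the dictionary atoms $w_k$ are typically constrained (e.g., $\lVert w_k \rVert_2 \le 1$) to rule out trivial rescaling.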
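With the $L_1$ relaxation, the sparse-coding step (updating $Z$ for a fixed dictionary $W$) is a lasso problem. A common solver is the iterative shrinkage-thresholding algorithm (ISTA), sketched below in NumPy. This covers only one half of dictionary learning; a full method would alternate it with a dictionary update. The helper names are hypothetical, and the objective is written with the conventional factor of 1/2 on the squared error, which only rescales $\lambda$.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink each entry towards zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def sparse_code_ista(X, W, lam, n_iter=500):
    """Minimize 0.5 * ||X - W Z||_F^2 + lam * ||Z||_1 over Z, for a fixed dictionary W."""
    step = 1.0 / np.linalg.norm(W, 2) ** 2            # 1 / Lipschitz constant of the gradient
    Z = np.zeros((W.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = W.T @ (W @ Z - X)                      # gradient of the squared-error term
        Z = soft_threshold(Z - step * grad, step * lam)
    return Z

# Synthetic check: 3-sparse codes over a random dictionary with unit-norm atoms.
rng = np.random.default_rng(7)
W = rng.standard_normal((30, 40))
W /= np.linalg.norm(W, axis=0)
Z_true = np.zeros((40, 10))
for j in range(10):
    Z_true[rng.choice(40, 3, replace=False), j] = rng.standard_normal(3)
X = W @ Z_true
Z = sparse_code_ista(X, W, lam=0.05, n_iter=2000)
```

The soft-thresholding step is what sets small coefficients exactly to zero, producing the sparse codes that distinguish this family from ICA or PCA.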

Non-negative matrix factorization (NMF).

NMF is another dimensionality reduction technique that seeks a low-rank decomposition of the data matrix $X$ with non-negativity constraints on the factors $W$ and $Z$. Typically, this corresponds to solving the following optimization,

$$\min_{W \ge 0, \; Z \ge 0} \lVert X - WZ \rVert_F^2.$$
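One classic way to solve this problem is Lee and Seung’s multiplicative update rule, which keeps both factors non-negative automatically because it only multiplies by non-negative ratios. The NumPy sketch below is illustrative: the function name `nmf`, the random initialization and the small `eps` guarding against division by zero are assumptions, and it presumes non-negative input (raw BOLD signals would first need a non-negative representation).

```python
import numpy as np

def nmf(X, K, n_iter=500, seed=0, eps=1e-10):
    """Multiplicative updates for min ||X - W Z||_F^2 subject to W >= 0, Z >= 0."""
    rng = np.random.default_rng(seed)
    D, n = X.shape
    W = rng.random((D, K)) + eps                      # non-negative random initialization
    Z = rng.random((K, n)) + eps
    for _ in range(n_iter):
        Z *= (W.T @ X) / (W.T @ W @ Z + eps)          # update coefficients
        W *= (X @ Z.T) / (W @ Z @ Z.T + eps)          # update basis
    return W, Z

# Synthetic non-negative data of rank 3.
rng = np.random.default_rng(8)
X = rng.random((20, 3)) @ rng.random((3, 30))
W, Z = nmf(X, 3, n_iter=1000)
```

Because the factors cannot cancel each other through negative values, NMF tends to yield additive, parts-based components.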

2.2.2. Hidden Markov Models

Hidden Markov Models (HMMs) are a class of unsupervised learning methods for sequential data. They model a Markov process in which the sequence of observations $\{x_1, \dots, x_T\}$ is assumed to be generated from a sequence of underlying hidden states $\{s_1, \dots, s_T\}$. In an HMM with $K$ states, each $s_t$ takes discrete values in $\{1, \dots, K\}$. The complete-data likelihood of observations and states factorizes as

$$P(x_{1:T}, s_{1:T} \mid \theta) = \prod_{t=1}^{T} P(s_t \mid s_{t-1}) \, P(x_t \mid s_t, \theta),$$

and the parameters are learned by maximizing the likelihood of the observations, $P(x_{1:T} \mid \theta) = \sum_{s_{1:T}} P(x_{1:T}, s_{1:T} \mid \theta)$.

Here, $P(s_1 \mid s_0)$ denotes the initial state distribution $\pi$. The state transition probabilities are defined by a transition matrix $T$ with elements $T_{i,j} = P(s_t = j \mid s_{t-1} = i)$. The conditionals $P(x_t \mid s_t = k, \theta)$ are captured by an emission probability table $E[k, x_t]$. The parameters $\theta$ of this probabilistic model are thus $\{\pi, T, E\}$. This maximum likelihood estimation problem is efficiently solved using a special case of the Expectation-Maximization algorithm, known as the Baum-Welch algorithm.
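The sum over all state sequences is never computed explicitly: the forward algorithm evaluates it recursively in $O(TK^2)$ time, and its quantities are reused in the E-step of Baum-Welch. Below is a minimal NumPy sketch of the scaled forward pass for discrete observations, using the $\{\pi, T, E\}$ parameterization from the text; the function name `hmm_log_likelihood` is hypothetical, and this covers only the likelihood evaluation, not full Baum-Welch training.

```python
import numpy as np

def hmm_log_likelihood(obs, pi, T, E):
    """Scaled forward algorithm: log P(x_1, ..., x_T | pi, T, E) for discrete observations.

    obs: sequence of symbol indices; pi: (K,) initial state distribution;
    T: (K, K) with T[i, j] = P(s_t = j | s_{t-1} = i); E: (K, M) emission table E[k, x].
    """
    alpha = pi * E[:, obs[0]]                 # joint of s_1 and x_1
    c = alpha.sum()
    log_lik = np.log(c)
    alpha = alpha / c                         # rescale to avoid numerical underflow
    for x in obs[1:]:
        alpha = (alpha @ T) * E[:, x]         # propagate through transitions, then emit
        c = alpha.sum()
        log_lik += np.log(c)
        alpha = alpha / c
    return log_lik
```

The accumulated log scaling constants sum to the log-likelihood, which is why this form remains stable for the long sequences typical of fMRI time series.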

2.3. Non-linear embeddings

Locally linear embeddings.

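Locally linear embedding (LLE) projects data to a low-dimensional space while preserving the local linear structure of each data point’s neighborhood. The LLE algorithm proceeds in two steps. First, each input $X_i$, $i \in \{1, \dots, n\}$, is approximated as a linear combination of its $K$ nearest neighbors. The reconstruction weights $W$ are obtained by minimizing the reconstruction error, i.e.,

$$W^{\mathrm{opt}} = \arg\min_{W} \sum_{i=1}^{n} \Big\lVert X_i - \sum_{j} W_{ij} X_j \Big\rVert_2^2 \quad \text{subject to} \quad \sum_{j} W_{ij} = 1.$$

Here, $W_{ij} = 0$ if $X_j$ is not one of the $K$ nearest neighbors of $X_i$. In the second step, the low-dimensional embeddings $Y_i$ are obtained by minimizing the embedding cost function,

$$\min_{Y} \sum_{i=1}^{n} \Big\lVert Y_i - \sum_{j} W^{\mathrm{opt}}_{ij} Y_j \Big\rVert_2^2,$$

under centering and unit-covariance constraints on $Y$ that rule out the trivial solution. In the latter optimization, $W$ is kept fixed at $W^{\mathrm{opt}}$, while the $Y_i$ are optimized.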
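Both LLE steps reduce to small linear-algebra problems: a constrained least-squares solve per point for the weights, and an eigendecomposition of $M = (I - W)^\top (I - W)$ for the embedding. The NumPy sketch below is illustrative only; the function name `lle`, the trace-scaled regularizer `reg` (needed when the number of neighbors exceeds the input dimension) and the helix example are assumptions.

```python
import numpy as np

def lle(X, n_neighbors, n_components, reg=1e-3):
    """Locally linear embedding. X: (n, D), one data point per row.

    Returns the embedding Y (n, n_components) and the weight matrix W (n, n).
    """
    n = X.shape[0]
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(d2, np.inf)                       # a point is not its own neighbor
    nbrs = np.argsort(d2, axis=1)[:, :n_neighbors]
    W = np.zeros((n, n))
    for i in range(n):
        G = X[nbrs[i]] - X[i]                          # neighbors centered on X_i
        C = G @ G.T                                    # local Gram matrix
        C += reg * np.trace(C) * np.eye(n_neighbors)   # regularize a possibly singular C
        w = np.linalg.solve(C, np.ones(n_neighbors))
        W[i, nbrs[i]] = w / w.sum()                    # enforce sum_j W_ij = 1
    I = np.eye(n)
    M = (I - W).T @ (I - W)
    vals, vecs = np.linalg.eigh(M)                     # ascending eigenvalues
    return vecs[:, 1:n_components + 1], W              # skip the constant bottom eigenvector

# A helix: a 1-D manifold embedded in 3-D.
t = np.linspace(0, 3 * np.pi, 100)
X = np.column_stack([np.cos(t), np.sin(t), 0.3 * t])
Y, W = lle(X, n_neighbors=8, n_components=1)
```

The bottom eigenvector of $M$ is constant (a consequence of the sum-to-one constraint) and is discarded; the next eigenvectors give the embedding coordinates.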

Autoencoders.

The autoencoder is an unsupervised neural-network approach for learning latent representations of high-dimensional data. It encodes the input $X$ into a lower-dimensional representation $Z = f_\theta(X)$, known as the bottleneck, which is then decoded to reconstruct the input, $\hat{X} = g_\phi(Z)$. Both the encoder $f_\theta$ and the decoder $g_\phi$ are neural networks. The autoencoder is trained to minimize the reconstruction error on a set of examples, often measured with an $L_2$ loss, i.e., $\min_{\theta, \phi} \sum_i \lVert X_i - g_\phi(f_\theta(X_i)) \rVert_2^2$. The autoencoder can thus be seen as a non-linear extension of PCA, since $f_\theta$ and $g_\phi$ are in general non-linear functions.
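A deliberately tiny autoencoder, written in plain NumPy with hand-derived gradients, shows the encode, decode and reconstruction-loss loop without depending on a deep learning framework. The architecture (a single tanh encoder layer and a linear decoder), the hyperparameters, the function name `train_autoencoder` and the synthetic data are all illustrative assumptions; practical rs-fMRI autoencoders are deeper and trained with a framework’s automatic differentiation.

```python
import numpy as np

def train_autoencoder(X, k, lr=0.02, n_epochs=3000, seed=0):
    """One-hidden-layer autoencoder trained by full-batch gradient descent.

    Encoder f: Z = tanh(X A + a); decoder g: X_hat = Z B + b.
    X: (n, D). Returns the parameters (A, a, B, b) and the loss per epoch.
    """
    rng = np.random.default_rng(seed)
    n, D = X.shape
    A, a = 0.3 * rng.standard_normal((D, k)), np.zeros(k)
    B, b = 0.3 * rng.standard_normal((k, D)), np.zeros(D)
    losses = []
    for _ in range(n_epochs):
        Z = np.tanh(X @ A + a)                        # bottleneck code
        X_hat = Z @ B + b                             # reconstruction
        R = X_hat - X
        losses.append((R ** 2).sum(axis=1).mean())    # mean squared L2 error per example
        # backpropagation of the loss above
        dX_hat = 2.0 * R / n
        dB, db = Z.T @ dX_hat, dX_hat.sum(axis=0)
        dpre = (dX_hat @ B.T) * (1.0 - Z ** 2)        # through the tanh non-linearity
        dA, da = X.T @ dpre, dpre.sum(axis=0)
        A -= lr * dA
        a -= lr * da
        B -= lr * dB
        b -= lr * db
    return (A, a, B, b), losses

# Synthetic data: 5-D observations driven by 2 latent factors.
rng = np.random.default_rng(9)
Zt = rng.standard_normal((200, 2))
X = Zt @ (0.5 * rng.standard_normal((2, 5)))
params, losses = train_autoencoder(X, k=2)
```

Replacing `np.tanh` with the identity would reduce this model to a linear autoencoder, whose optimal reconstruction spans the same subspace as PCA; the non-linearity is what allows curved structure to be captured.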

3. Applications of unsupervised learning in rs-fMRI

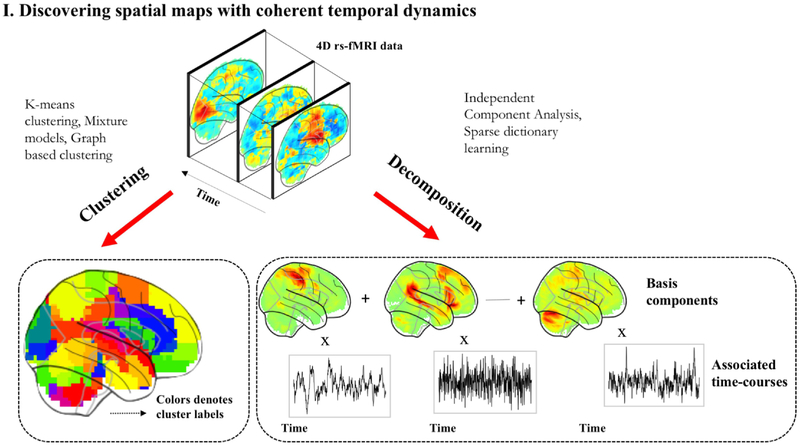

Unsupervised machine learning methods have proven promising for the analysis of high-dimensional data with complex structure, which makes them especially relevant to rs-fMRI. Many unsupervised learning approaches in rs-fMRI aim to parcellate the brain into discrete functional sub-units, akin to atlases. These segmentations are driven by functional data, unlike approaches that use cytoarchitecture, as in the Brodmann atlas, or macroscopic anatomical features, as in the Automated Anatomical Labelling (AAL) atlas [47]. A second class of applications delves into brain network dynamics: unsupervised learning has recently been applied to interrogate the dynamic functional connectome with promising results [43, 42, 44, 48, 49]. Finally, a third class of applications focuses on learning latent low-dimensional representations of RSFC to conduct analyses across a population of subjects. We discuss the methods under each of these challenging application areas below.

3.1. Discovering spatial patterns with coherent fluctuations

Mapping the boundaries of functionally distinct neuroanatomical structures, or identifying clusters of functionally coupled regions in the brain is a major objective in neuroscience. Rs-fMRI and machine learning methods provide a promising combination with which to achieve this lofty goal.

In the case of rs-fMRI, the typical approach is to use techniques like ICA to decompose the 4D fMRI data into a linear superposition of distinct spatial modes that show coherent temporal dynamics. Clustering is an alternative unsupervised approach for the analysis of rs-fMRI data. Unlike ICA or dictionary learning, clustering partitions the brain surface (or volume) into disjoint functional networks. It is important to distinguish between two slightly different applications of clustering, since they sometimes warrant different constraints: one is focused on identifying functional networks, which are often spatially distributed, whereas the other is used to parcellate the brain into regions. The latter aims to construct atlases of the local areas that constitute the functional neuroanatomy, much as standard atlases such as the Automated Anatomical Labelling (AAL) atlas [47] delineate macroscopic anatomical regions. One important design decision in the application of clustering is the distance function used to measure dissimilarity between voxels (or vertices). In rs-fMRI, this distance is computed either directly between the raw voxel time series or between the voxels’ connectivity profiles. While both distances are motivated by the same idea of functional coherence, parcellations optimized under the two criteria have been found to differ [50].
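The two kinds of dissimilarity can be contrasted in a short NumPy sketch. It assumes "1 minus Pearson correlation" as the dissimilarity in both cases and, by default, defines a voxel’s connectivity profile as its full row of the voxel-wise correlation matrix; both choices, the helper names and the synthetic data are illustrative assumptions, and published parcellation methods differ in these details.

```python
import numpy as np

def timeseries_dissimilarity(ts):
    """1 - Pearson correlation between the raw time series of every pair of voxels. ts: (T, V)."""
    return 1.0 - np.corrcoef(ts.T)

def connectivity_profile_dissimilarity(ts, targets=None):
    """1 - correlation between voxels' connectivity profiles (rows of the FC matrix).

    targets: optional indices of the target voxels/regions that define each profile;
    by default every voxel is a target (a hypothetical choice for illustration).
    """
    fc = np.corrcoef(ts.T)
    if targets is not None:
        fc = fc[:, targets]
    return 1.0 - np.corrcoef(fc)

# Synthetic data: voxels 0-9 and 10-19 follow two independent shared signals.
rng = np.random.default_rng(10)
T, V = 200, 20
s1, s2 = rng.standard_normal(T), rng.standard_normal(T)
ts = rng.standard_normal((T, V))
ts[:, :10] += s1[:, None]
ts[:, 10:] += s2[:, None]
D1 = timeseries_dissimilarity(ts)
D2 = connectivity_profile_dissimilarity(ts)
```

Either matrix can be fed to a clustering algorithm; the profile-based version compares how two voxels relate to the rest of the brain rather than whether their signals co-fluctuate directly, which is one source of the differences noted above.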

An important requirement for almost all of these methods is the a priori selection of the number of clusters/components. These are often determined through cross-validation or through statistics that reflect the quality, stability or reproducibility of decomposition/partitions at different scales.

3.1.1. ICA

ICA has been one of the earliest and most widely used analytic tools for rs-fMRI, driving several pivotal neuroscientific insights into intrinsic brain networks. When applied to rs-fMRI, brain activity is expressed as a linear superposition of distinct spatial patterns or maps, each following its own characteristic time course. These spatial maps can reflect a coherent functional system or noise, and several criteria can be used to automatically differentiate the two. This capability to isolate noise sources makes ICA particularly attractive. In the early days of rs-fMRI, several studies demonstrated a marked resemblance between ICA spatial maps and the cortical functional networks known from task-activation studies [2, 4]. While typical ICA models are noise-free and assume that the only stochasticity is in the sources themselves, several variants of ICA have been proposed to model additive noise in the observed signals. Beckmann et al. [2] introduced a probabilistic ICA (PICA) model to extract the connectivity structure of rs-fMRI data. PICA models a linear instantaneous mixing process under additive noise corruption and statistical independence between sources. De Luca et al. [5] showed that PICA can reliably distinguish RSNs from artifactual patterns. Both works showed high consistency of resting-state patterns across multiple subjects. While there is no standard criterion for validating ICA patterns, or the output of any clustering algorithm for that matter, reproducibility or reliability is often used for quantitative assessment. More recently, Salimi-Khorshidi et al. proposed an automated ICA-based denoising strategy for fMRI, known as FIX ("FMRIB’s ICA-based Xnoiseifier"). The authors trained a classifier on manual annotations to label artefactual components based on distinct spatial and temporal features. These components can represent a variety of structured noise sources and, once identified, can be either subtracted or regressed out of the data to yield clean signals.
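Once components have been labelled as noise, removing them is a least-squares regression. The NumPy sketch below shows two generic variants under stated assumptions: an "aggressive" version that regresses the noise time courses out of the data, and a "soft" version that fits all component time courses jointly and subtracts only the noise part, so that variance shared with signal components is retained. It does not reproduce FIX itself; the function names and synthetic data are hypothetical.

```python
import numpy as np

def regress_out(data, noise_tcs):
    """Aggressive cleanup: remove all variance explained by the noise time courses (OLS).

    data: (T, V) time series; noise_tcs: (T, m) time courses of components labelled as noise.
    """
    beta, *_ = np.linalg.lstsq(noise_tcs, data, rcond=None)
    return data - noise_tcs @ beta

def regress_out_soft(data, all_tcs, noise_idx):
    """Soft cleanup: fit all component time courses jointly, subtract only the noise part."""
    beta, *_ = np.linalg.lstsq(all_tcs, data, rcond=None)
    return data - all_tcs[:, noise_idx] @ beta[noise_idx]

# Synthetic data: one signal component plus two noise components.
rng = np.random.default_rng(11)
T, V = 120, 30
noise_tcs = rng.standard_normal((T, 2))
signal_tc = rng.standard_normal((T, 1))
signal = signal_tc @ rng.standard_normal((1, V))
data = signal + noise_tcs @ rng.standard_normal((2, V))
clean = regress_out(data, noise_tcs)
soft = regress_out_soft(data, np.hstack([signal_tc, noise_tcs]), noise_idx=[1, 2])
```

The aggressive variant makes the cleaned data exactly orthogonal to the noise time courses, but it can also remove signal that happens to correlate with them; the soft variant trades some residual noise for better signal preservation.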

ICA can also be extended to make group inferences in population studies. Group ICA is to date the most widely used strategy, in which multi-subject fMRI data are concatenated along the temporal dimension before applying ICA [51]. Individual-level ICA maps can then be obtained from this group decomposition by back-projecting the group mixing matrix [51], or by using a dual regression approach [52]. More recently, Du et al. [53] introduced a group-information-guided ICA that preserves the statistical independence of individual ICs, using group ICs to constrain the corresponding subject-level ICs. Varoquaux et al. [54] proposed a robust group-level ICA model to facilitate between-group comparisons of ICs. They introduce a generative framework that models two levels of variance in the ICA patterns, at the group level and at the subject level, akin to a multivariate version of mixed-effects models. The IC estimation procedure, termed Canonical ICA, employs Canonical Correlation Analysis to identify a joint subspace of IC patterns common across subjects and yields ICs that are representative of the group.
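Dual regression consists of two ordinary least-squares steps, which the NumPy sketch below spells out. It is a simplified illustration under stated assumptions: practical implementations typically add demeaning and optional variance normalization of the stage-1 time courses, which this sketch omits, and the function name `dual_regression` and the noise-free synthetic data are hypothetical.

```python
import numpy as np

def dual_regression(subject_data, group_maps):
    """Two-stage dual regression.

    subject_data: (T, V) one subject's time series; group_maps: (K, V) group ICA spatial maps.
    Stage 1: use the group maps as spatial regressors -> subject time courses (T, K).
    Stage 2: use those time courses as temporal regressors -> subject spatial maps (K, V).
    """
    tcs, *_ = np.linalg.lstsq(group_maps.T, subject_data.T, rcond=None)   # (K, T)
    tcs = tcs.T
    maps, *_ = np.linalg.lstsq(tcs, subject_data, rcond=None)             # (K, V)
    return tcs, maps

# Noise-free check: a subject whose data are generated exactly from the group maps.
rng = np.random.default_rng(12)
K, T, V = 3, 100, 500
group_maps = rng.standard_normal((K, V))
tcs_true = rng.standard_normal((T, K))
data = tcs_true @ group_maps
tcs, maps = dual_regression(data, group_maps)
```

In real data the stage-2 maps deviate from the group maps, and those subject-specific deviations are what between-subject or between-group statistics are then computed on.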

Alternatively, it is also possible to compute individual-specific ICA maps and then establish correspondences across them [53] to draw group inferences; however, this approach has seen limited use because source separations can differ considerably across subjects, for example, due to component fragmentation.

While ICA and its extensions have been used broadly by the rs-fMRI community, it is important to acknowledge its limitations. ICA models the data as a linear mixture of non-Gaussian sources. Whether a linear transformation can adequately capture the relationship between independent latent sources and the observed high-dimensional fMRI data is uncertain, and likely unrealistic. Unlike the popular Principal Component Analysis (PCA), ICA does not provide an ordering or the energies of its components, because sources are only recovered up to permutation and scale. This makes it difficult to distinguish strong from weak sources. It also complicates replicability analysis, since known sources (i.e., spatial maps) can be expressed in any arbitrary order. Extracting meaningful ICs sometimes necessitates manual selection procedures, which can be inefficient or subjective. Ideally, each individual component represents either a physiologically meaningful activation pattern or noise; however, this might be an unrealistic assumption for rs-fMRI. Additionally, since ICA assumes non-Gaussianity of sources, Gaussian physiological noise can contaminate the extracted components. Further, due to the high dimensionality of fMRI, analysis often applies PCA-based dimensionality reduction before ICA. PCA computes uncorrelated linear projections of maximal variance (thus explaining the greatest variability within the data) from the top eigenvectors of the data covariance matrix. While this step is useful for removing observation noise, it could also discard signal information that might be crucial for subsequent analysis. Although ICA optimizes for independence, it does not guarantee it. Moreover, based on studies of functional integration within the brain, the assumption of independence between functional units could itself be questioned from a neuroscientific point of view. Several papers have suggested that ICA is especially effective when spatial patterns are sparse, with little or no overlap. This hints at the possibility that the success of ICA is driven by the sparsity of the components rather than their independence. Along these lines, Daubechies and colleagues claim that fMRI representations that optimize for sparsity in spatial patterns are more effective than those that optimize for independence [55].
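To make the PCA-then-ICA pipeline and the ordering ambiguity concrete, the following is a minimal NumPy sketch of symmetric FastICA with a tanh contrast, applied after PCA whitening. It is a generic illustration on toy signals (rows are mixtures, columns are samples; for spatial ICA of fMRI the samples would be voxels), not the group-ICA procedure of any cited study, and the function name `fastica` is hypothetical. scikit-learn's `sklearn.decomposition.FastICA` provides a maintained implementation.

```python
import numpy as np

# Illustrative sketch; the function name is hypothetical.
def fastica(X, n_components, n_iter=200, seed=0):
    """Symmetric FastICA with a tanh contrast.

    X: (n_mixtures, n_samples) observed signals. Returns (n_components, n_samples)
    estimated sources with unit variance; their order and sign are arbitrary.
    """
    rng = np.random.default_rng(seed)
    Xc = X - X.mean(axis=1, keepdims=True)
    # PCA step: keep the top principal axes and whiten (decorrelated, unit variance).
    evals, evecs = np.linalg.eigh(np.cov(Xc))
    top = np.argsort(evals)[::-1][:n_components]
    K = (evecs[:, top] / np.sqrt(evals[top])).T
    Z = K @ Xc
    W = rng.standard_normal((n_components, n_components))
    for _ in range(n_iter):
        G = np.tanh(W @ Z)
        # Fixed-point update for every unmixing row: E[z g(w^T z)] - E[g'(w^T z)] w.
        W = G @ Z.T / Z.shape[1] - np.diag((1.0 - G**2).mean(axis=1)) @ W
        # Symmetric decorrelation W <- (W W^T)^(-1/2) W keeps the rows orthonormal.
        s, U = np.linalg.eigh(W @ W.T)
        W = U @ np.diag(1.0 / np.sqrt(s)) @ U.T @ W
    return W @ Z
```

Because the recovered sources come back in arbitrary order and sign, checking them against ground truth requires matching by absolute correlation, which is exactly the replicability complication noted above.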

3.1.2. Learning sparse spatial maps

Sparse dictionary learning is another popular framework for constructing succinct representations of observed data. Varoquaux et al. [56] adopt a dictionary learning framework for segmenting functional regions from resting-state fMRI time series. Their approach accounts for inter-subject variability in functional boundaries by allowing the subject-specific spatial maps to differ from the population-level atlas. Concretely, they optimize a loss function comprising a residual term that measures the approximation error between the data and its factorization, a cost term penalizing large deviations of individual subject spatial maps from group-level latent maps, and a regularization term promoting sparsity. In addition to sparsity, they impose a smoothness constraint so that the dominant patterns in each dictionary are spatially contiguous, yielding a well-defined parcellation. To avoid the blurred edges caused by the smoothness constraint, Abraham et al. [57] propose a total variation regularization within this multi-subject dictionary learning framework. This approach is shown to yield more structured parcellations that outperform competing methods like ICA and clustering in explaining test data. Similarly, Lv et al. [58] propose a strategy to learn sparse representations of whole-brain fMRI signals in individual subjects by factorizing the time series into a basis dictionary and its corresponding sparse coefficients. Here, dictionary atoms represent the co-activation patterns of functional networks, and the coefficients represent the associated spatial maps. Experiments revealed a high degree of spatial overlap among the extracted functional networks, in contrast to ICA, which in practice tends to yield spatially non-overlapping components.
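A single-subject sketch of the factorization X ≈ DA described above, assuming plain alternating minimization: ISTA (a gradient step followed by soft-thresholding) for the sparse coefficients and least squares for the dictionary. Atoms are time courses and rows of A are spatial maps. It deliberately omits the multi-subject coupling and the smoothness or total-variation penalties of [56, 57]; the function names and default parameters are hypothetical.

```python
import numpy as np

# Illustrative sketch; function names and defaults are hypothetical.
def soft_threshold(x, t):
    """Proximal operator of t*||x||_1 (element-wise shrinkage)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def dictionary_learning(X, k, lam=0.1, n_outer=30, n_inner=50, seed=0):
    """Alternating minimization of 0.5*||X - D A||_F^2 + lam*||A||_1.

    X: (T, V) time points x voxels. D: (T, k) unit-norm atoms (time courses).
    A: (k, V) sparse coefficients, one spatial map per atom.
    """
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((X.shape[0], k))
    D /= np.linalg.norm(D, axis=0)
    A = np.zeros((k, X.shape[1]))
    for _ in range(n_outer):
        # Sparse coding: ISTA iterations on A with D fixed.
        L = np.linalg.norm(D, 2) ** 2  # Lipschitz constant of the smooth term's gradient
        for _ in range(n_inner):
            A = soft_threshold(A - D.T @ (D @ A - X) / L, lam / L)
        # Dictionary update: least squares with A fixed, then rescale atoms to unit
        # norm, moving the scale into A so that the product D @ A is unchanged.
        D = X @ np.linalg.pinv(A)
        norms = np.linalg.norm(D, axis=0)
        norms[norms == 0] = 1.0
        D /= norms
        A *= norms[:, None]
    return D, A
```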

3.1.3. K-means clustering and mixture models

K-means clustering or mixture models are frequently used for spatial segmentation of fMRI data [59, 37, 60, 61]. Similarity between voxels can be defined by correlating their raw time series [59] or their connectivity profiles [61]. Euclidean distances between spectral features of the time series have also been used [37].

K-means clustering has provided several novel insights into the functional organization of the human brain. It has revealed a natural division of the cortex into two complementary systems, the internally driven “intrinsic” system and the stimulus-driven “extrinsic” system [59, 60]; provided evidence for a hierarchical organization of RSNs [60]; and exposed anatomical contributions to co-varying resting-state fluctuations [37].
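A minimal sketch of correlation-based k-means on voxel time series. It relies on the identity ‖z_a − z_b‖² = 2T(1 − r_ab) for z-scored series of length T, so Euclidean k-means on standardized rows groups voxels by correlation. The farthest-point initialization and the function names are illustrative choices, not those of the cited studies.

```python
import numpy as np

# Illustrative sketch; function names are hypothetical.
def zscore_rows(X):
    """Standardize each voxel time series (row) to zero mean and unit variance."""
    Xc = X - X.mean(axis=1, keepdims=True)
    return Xc / Xc.std(axis=1, keepdims=True)

def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's k-means with farthest-point initialization. X: (n_points, n_features)."""
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        # Next center: the point farthest from all centers chosen so far.
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d2.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)  # assign each voxel to its nearest center
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers
```

Feeding `zscore_rows(voxel_ts)` to `kmeans` clusters voxels by the correlation of their raw time series; passing connectivity profiles instead of time series gives the profile-based variant.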

Golland et al. [62] proposed a Gaussian mixture model for clustering fMRI signals. Here, the signal at each voxel is modelled as a weighted sum of N Gaussian densities, with N determining the number of hypothesized functional networks and the weights reflecting the probability of assignment to each network. Large-scale systems were explored at several resolutions, revealing an intrinsic hierarchy in functional organization. Yeo et al. [63] used rs-fMRI measurements from 1000 subjects to estimate the organization of large-scale distributed cortical networks. They employed a mixture model to identify clusters of voxels with similar corticocortical connectivity profiles. The number of clusters was chosen via stability analysis, and parcellations were identified at both a coarse resolution of 7 networks and a finer scale of 17 networks. A high degree of replicability was attained across data samples, suggesting that these networks can serve as reliable reference maps for functional characterization.
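The mixture-model idea can be sketched with expectation-maximization (EM) for a Gaussian mixture over voxel feature vectors (for example time series or connectivity profiles). The diagonal covariances, the random initialization and the function name are simplifying assumptions of this sketch; the component densities used in the cited studies differ. The returned responsibilities are the per-voxel network-assignment probabilities.

```python
import numpy as np

# Illustrative sketch; diagonal covariances and the function name are assumptions.
def gmm_em(X, k, n_iter=100, seed=0, reg=1e-6):
    """EM for a Gaussian mixture with diagonal covariances.

    X: (n, d) voxel feature vectors. Returns soft assignments (n, k), means,
    mixing weights and the log-likelihood trace (non-decreasing under EM).
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    means = X[rng.choice(n, size=k, replace=False)]
    variances = np.tile(X.var(axis=0), (k, 1)) + reg
    weights = np.full(k, 1.0 / k)
    trace = []
    for _ in range(n_iter):
        # E-step: log pi_j + log N(x_i | mu_j, diag(var_j)) for all points and components.
        log_p = np.log(weights) - 0.5 * (
            (X[:, None, :] - means) ** 2 / variances + np.log(2 * np.pi * variances)
        ).sum(axis=2)
        log_norm = np.logaddexp.reduce(log_p, axis=1)
        resp = np.exp(log_p - log_norm[:, None])  # posterior assignment probabilities
        trace.append(log_norm.sum())
        # M-step: closed-form updates of weights, means and per-dimension variances.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / n
        means = resp.T @ X / nk[:, None]
        variances = np.maximum(resp.T @ X**2 / nk[:, None] - means**2, 0.0) + reg
    return resp, means, weights, np.array(trace)
```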

3.1.4. Identifying hierarchical spatial organization

Several studies have provided evidence for a hierarchical organization of functional networks in the brain [62, 60]. Hierarchical agglomerative clustering (HAC) thus provides a natural tool to partition rs-fMRI data and explore this latent hierarchical structure. The earliest applications of clustering to rs-fMRI were based on HAC [64, 36], and these studies largely demonstrated the feasibility of clustering for extracting RSNs from rs-fMRI data. Recent applications of HAC have focused on defining whole-brain parcellations for downstream analysis [65, 66, 67]. Spatial contiguity of parcels can be enforced, for example, by considering only local neighborhoods as candidates for merging [65].

An advantage of hierarchical clustering is that, unlike k-means clustering, it does not require the number of clusters to be specified in advance, and it is completely deterministic. However, once the cluster tree is formed, the dendrogram must be cut at a level that best characterizes the “natural” clusters. This level can be determined based on a linkage inconsistency criterion [64], consistency across subjects [36], or prior empirical knowledge [68].

While a promising approach for rs-fMRI analysis, hierarchical clustering has some inherent limitations. It often relies on prior dimensionality reduction, for example by using an anatomical template [36], which can bias the resulting parcellation. It is a greedy strategy, so erroneous merges at an early stage cannot be rectified in subsequent iterations. The single-linkage criterion may not work well in practice: since it merges partitions based on the nearest-neighbor distance, it is not robust to noisy resting-state signals. Further, different linkage criteria optimize divergent attributes of clusters; for example, single-linkage clustering encourages elongated clusters, whereas complete-linkage clustering promotes compact ones. This makes the a priori choice of linkage criterion somewhat arbitrary.
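A naive sketch of spatially constrained HAC, assuming average linkage on correlation distance (1 − r) and allowing merges only between clusters that contain spatially adjacent voxels, in the spirit of the local-neighborhood constraint of [65]. Cutting the dendrogram is done here by stopping at a requested number of clusters. The function name is hypothetical, and the O(n³) loop is for illustration only; real parcellations would use an optimized library routine.

```python
import numpy as np

# Illustrative sketch; the function name is hypothetical and the loop is O(n^3).
def constrained_average_linkage(X, adjacency, n_clusters):
    """X: (n_voxels, T) time series; adjacency: boolean (n_voxels, n_voxels) spatial neighbors."""
    dist = 1.0 - np.corrcoef(X)                 # correlation distance between voxels
    clusters = [[i] for i in range(len(X))]
    while len(clusters) > n_clusters:
        best, pair = np.inf, None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Only spatially adjacent clusters may merge, which keeps parcels contiguous.
                if not adjacency[np.ix_(clusters[a], clusters[b])].any():
                    continue
                d = dist[np.ix_(clusters[a], clusters[b])].mean()   # average linkage
                if d < best:
                    best, pair = d, (a, b)
        if pair is None:
            break                               # remaining clusters are not spatially connected
        a, b = pair
        clusters[a] = clusters[a] + clusters[b]  # greedy merge: never undone later
        del clusters[b]
    labels = np.empty(len(X), dtype=int)
    for j, members in enumerate(clusters):
        labels[members] = j
    return labels
```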

3.1.5. Graph based clustering

Functional MRI data can be naturally represented as graphs. Here, nodes represent voxels and edges represent connection strength, typically measured by a correlation coefficient between voxel time series or between connectivity maps [50, 69]. Thresholding is often applied to edges to limit graph complexity. Graph segmentation approaches, such as those based on the normalized cut (Ncut) criterion, have been widely used to derive whole-brain parcellations [50, 70, 71]. Population-level parcellations are usually derived in a two-stage procedure: first, individual graphs are clustered to extract functionally linked regions; second, a group-level graph characterizing the consistency of the individual cluster maps is clustered [69, 50]. Spatial contiguity can be enforced by constraining the connectivity graph to local neighborhoods [50] or through the use of shape priors [71]. Departing from this protocol, Shen et al. [70] propose a groupwise clustering approach that jointly optimizes individual and group parcellations in a single stage and yields spatially smooth group parcellations without any explicit constraints.

A disadvantage of the Ncut criterion for fMRI is its bias towards uniformly sized clusters, whereas in reality functional regions vary widely in size. Graph construction itself involves arbitrary decisions that can affect clustering performance [72], e.g., selecting a threshold to limit graph edges, or choosing the neighborhood used to enforce spatial connectedness.
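The two-way Ncut can be sketched through its standard spectral relaxation (Shi and Malik): the sign of the second generalized eigenvector of (D − W)y = λDy splits the graph. Two assumptions are made here and should be read as illustrative: correlations are mapped to non-negative weights via (1 + r)/2, and edge thresholding is optional. If a threshold disconnects the graph, the zero eigenvalue becomes degenerate and the connected components should be used directly instead. The function name is hypothetical.

```python
import numpy as np

# Illustrative sketch; the weight mapping (1 + r) / 2 and the function name are assumptions.
def ncut_bipartition(X, threshold=None):
    """Two-way normalized cut of voxels via its spectral relaxation.

    X: (n_voxels, T) time series. Returns 0/1 labels from the sign of the
    second generalized eigenvector of (D - W) y = lambda D y.
    """
    R = np.corrcoef(X)
    W = (1.0 + R) / 2.0                 # map correlations in [-1, 1] to weights in [0, 1]
    if threshold is not None:
        W[R < threshold] = 0.0          # optional edge thresholding
    np.fill_diagonal(W, 0.0)
    deg = np.maximum(W.sum(axis=1), 1e-12)
    d_isqrt = 1.0 / np.sqrt(deg)
    # Normalized Laplacian I - D^{-1/2} W D^{-1/2}; its eigenvector v maps to the
    # generalized eigenvector y = D^{-1/2} v.
    L_sym = np.eye(len(W)) - d_isqrt[:, None] * W * d_isqrt[None, :]
    _, evecs = np.linalg.eigh(L_sym)    # eigenvalues in ascending order
    y = d_isqrt * evecs[:, 1]
    return (y > 0).astype(int)
```

Recursive application of this bipartition, or a k-way spectral embedding, yields multi-region parcellations.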

3.1.6. Comments

I. A comment on alternate connectivity-based parcellations.

Several papers distinguish between clustering/decomposition approaches and boundary-detection approaches for network segmentation. In the rs-fMRI literature, several non-learning-based parcellations have been proposed that exploit traditional image segmentation algorithms to identify functional areas based on abrupt RSFC transitions [73, 74]. Clustering algorithms do not mandate spatial contiguity, whereas boundary-based methods implicitly do. On the other hand, boundary-based approaches fail to represent long-range functional associations, and may not yield parcels that are as connectionally homogeneous as those from unsupervised learning approaches. A hybrid of these approaches can yield better models of brain network organization. This direction was recently explored by Schaefer et al. [75] with a Markov Random Field model; the resulting parcels showed superior homogeneity compared with several alternative gradient-based and learning-based schemes. Further, complementing RSFC with other modalities can yield corroborating, and perhaps complementary, information for delineating areal boundaries. Glasser et al. [74] recently approached this problem by developing a multi-modal approach for generating brain parcellations. The authors propose a semi-automated approach that combines supervised machine learning with manual annotations to parcellate regions based on their multi-modal fingerprints (architecture, function, connectivity and topography). Such an approach can be instrumental towards the goal of precise human brain functional mapping.

II. Subject versus population level parcellations.

Significant effort in the rs-fMRI literature is dedicated to identifying population-average parcellations. The underlying assumption is that functional connectivity graphs exhibit similar patterns across subjects, and that these global parcellations reflect common organizational principles. Yet, individual-level parcellations can potentially yield more sensitive connectivity features for investigating networks in health and disease. A central challenge in this effort is to match the individual-level spatial maps to a population template in order to establish correspondences across subjects. Common approaches to obtain subject-specific networks with group correspondence incorporate back-projection and dual regression [52, 51], or hierarchical priors within unsupervised learning [56, 76]. While a number of studies have developed subject-specific parcellations, the significance of this inter-subject variability for network analysis has only recently been discussed. Kong et al. [76] developed high-quality subject-specific parcellations using a multi-session hierarchical Bayesian model, and showed that subject-specific variability in functional topography can predict behavioral measures. Recently, using a novel parcellation scheme based on k-medoids clustering, Salehi et al. [77] showed that individual-level parcellations alone can predict the sex of the individual. These studies suggest the intriguing idea that subject-level network organization, i.e., voxel-to-network assignments, can capture concepts intrinsic to individuals, just like connectivity strength.

III. Is there a universal ‘gold-standard’ atlas?

When considering the family of different methods, algorithms or modalities, there exists a plethora of diverse brain parcellations at varying levels of granularity. Thus far, there is no unified framework for reasoning about these parcellations. Several taxonomic classifications can be used to describe how they are generated, such as machine learning versus boundary detection, decomposition versus clustering, or multi-modal versus unimodal. Even within the large class of clustering approaches, no single algorithm can simultaneously satisfy a small collection of simple, desirable properties of partitioning [78]. Several evaluation criteria have emerged for comparing different parcellations, exposing the inherent trade-offs at work. Arslan et al. [79] performed an extensive comparison of parcellations from diverse methods on resting-state data from the Human Connectome Project (HCP). Through independent evaluations, they concluded that no single parcellation is consistently superior across all evaluation metrics. Recently, Salehi et al. [80] showed that different functional conditions, such as task or rest, generate reproducibly distinct parcellations, thus questioning the very existence of an optimal parcellation, even at the individual level. These studies call for rethinking the final goals of brain mapping. Several studies have reflected the view that there is no optimal functional division of the brain, but rather an array of meaningful brain parcellations [65]. Perhaps brain mapping should not aim to identify functional sub-units in a universal sense, like Brodmann areas. Rather, the goal of human brain mapping should be reformulated as revealing consistent functional delineations that enable reliable and meaningful investigations into brain networks.

IV. A comparison between decomposition and clustering.

A high degree of convergence has been observed between the functionally coherent patterns extracted using decomposition and clustering. Decomposition techniques allow soft partitioning of the data, and can thus yield spatially overlapping networks. These models may be more natural representations of brain networks where, for example, highly integrated regions such as network ‘hubs’ can simultaneously subserve multiple functional systems. Although it is possible to threshold and relabel the generated maps to produce spatially contiguous brain parcellations, these techniques are not naturally designed to generate disjoint partitions. In contrast, clustering techniques directly yield hard assignments of voxels to brain networks, and spatial constraints can easily be incorporated within different clustering algorithms to yield contiguous parcels. Decomposition models can adapt to varying data distributions, whereas clustering solutions allow much less flexibility owing to rigid clustering objectives; for example, the k-means objective favors compact, roughly spherical clusters. While a thorough comparison between these approaches is still lacking, some studies have identified the trade-offs involved in choosing either technique for parcellation. Abraham et al. [57] compared clustering approaches with group-ICA and dictionary learning on two evaluation metrics: stability, as reflected by the reproducibility of voxel assignments on independent data, and data fidelity, captured by the explained variance on independent data. They observed a stability-fidelity trade-off: clustering models yield stable regions but do not explain test data as well, whereas linear decomposition models explain the test data reasonably well at the expense of reduced stability.

3.2. Discovering patterns of dynamic functional connectivity

Unsupervised learning has also been applied to study patterns of temporal organization or dynamic reconfiguration in resting-state networks. These studies are often based on one of two alternative hypotheses: (a) dynamic (windowed) functional connectivity cycles between discrete “connectivity states”, or (b) functional connectivity at any time can be expressed as a combination of latent “connectivity states”. The first hypothesis is examined using clustering-based approaches or generative models like HMMs, while the second is modelled using decomposition techniques. Once stable states are determined across the population, the former approach allows us to estimate the fraction of time each subject spends in each state. This quantity, known as the occupancy of the state (closely related to the mean dwell time, i.e., the average duration of an uninterrupted stay in a state), shows meaningful variation across individuals [43, 42, 81, 82]. Note that in all these approaches, the RSNs, i.e., the spatial patterns, are assumed to be stationary over time; only their temporal coherence changes.

3.2.1. Clustering

Several studies have discovered recurring dynamic functional connectivity patterns, known as “states”, through k-means clustering of windowed correlation matrices [42, 81, 82, 83, 84]. FC associated with these recurring states departs markedly from static FC, suggesting that network dynamics provide novel signatures of the resting brain [42]. Notable differences in the dwell times of multiple states have been observed between healthy controls and patient populations across schizophrenia, bipolar disorder and psychotic-like experience domains [81, 82, 83].

Abrol et al. [84] performed a large-scale study to characterize the replicability of brain states using standard k-means as well as a more flexible, soft k-means algorithm for state estimation. Experiments indicated that most states, as well as their summary measures such as mean dwell times and transition probabilities, were reproducible across independent population samples. While these studies establish the existence of recurring FC states, the behavioral associations of these states are still unknown. In an interesting piece of work, Wang et al. [85] used k-means clustering to identify two stable dynamic FC states that corresponded to internal states of high and low arousal, respectively. This suggests that RSFC fluctuations depend on behavioral state, and presents one explanation for the heterogeneity and dynamic nature of RSFC.
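The sliding-window pipeline above can be sketched as: vectorize the upper triangle of each windowed correlation matrix, cluster the windows with k-means, then summarize each subject's state sequence by fractional occupancy and mean dwell time. Window length, step, the deterministic initialization and all function names are illustrative assumptions, not the settings of the cited studies.

```python
import numpy as np

# Illustrative sketch; function names, window and step sizes are assumptions.
def windowed_fc(ts, window, step):
    """ts: (T, N) ROI time series -> (n_windows, N*(N-1)/2) upper-triangular correlations."""
    T, N = ts.shape
    iu = np.triu_indices(N, k=1)
    return np.array([np.corrcoef(ts[s:s + window].T)[iu]
                     for s in range(0, T - window + 1, step)])

def kmeans_states(F, k, n_iter=100):
    """Lloyd's k-means on windowed FC vectors with farthest-point initialization."""
    centers = [F[0]]
    for _ in range(1, k):
        d2 = np.min([((F - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(F[d2.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((F[:, None, :] - centers) ** 2).sum(axis=2).argmin(axis=1)
        centers = np.array([F[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                            for j in range(k)])
    return labels, centers

def fractional_occupancy(labels, k):
    """Fraction of windows assigned to each state."""
    return np.bincount(labels, minlength=k) / len(labels)

def mean_dwell_time(labels, k):
    """Average length (in windows) of uninterrupted runs of each state."""
    runs = {j: [] for j in range(k)}
    start = 0
    for t in range(1, len(labels) + 1):
        if t == len(labels) or labels[t] != labels[start]:
            runs[int(labels[start])].append(t - start)
            start = t
    return np.array([np.mean(runs[j]) if runs[j] else 0.0 for j in range(k)])
```

For example, the state sequence `[0, 0, 1, 1, 1, 0]` has occupancies `[0.5, 0.5]` but mean dwell times `[1.5, 3.0]`, which shows why the two summaries are reported separately.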

3.2.2. Markov modelling of state transition dynamics

HMMs are another valuable tool for interrogating recurring functional connectivity patterns [44, 43, 86]. The notion of states remains similar to the “FC states” described above for clustering; however, their characterization and estimation are substantially different. Unlike clustering, where sliding windows are used to compute dynamic FC patterns, HMMs model the rs-fMRI time series directly. Hence, they offer a promising way to overcome the statistical limitations of sliding windows in characterizing FC changes.

Several interesting results have emerged through the adoption of HMMs. Vidaurre et al. [43] find that relative occupancy of different states is a subject-specific measure linked with behavioral traits and heredity. Through Markov modelling, transitions between states have been revealed to occur as a non-random sequence [42, 43], that is itself hierarchically organized [43]. Recently, network dynamics modelled using HMMs were shown to distinguish MCI patients from controls [86], thereby indicating their utility in clinical domains.
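A minimal sketch of the inference step of a Gaussian HMM: the scaled forward-backward algorithm returns, for every time point, the posterior probability of each state, and averaging these posteriors gives a soft fractional occupancy. The sketch assumes the parameters (initial probabilities, transition matrix, state means and covariances) are already known; the cited studies estimate them from the data (e.g., with EM), which is not shown. Function names are hypothetical.

```python
import numpy as np

# Illustrative sketch; parameters are assumed known and function names are hypothetical.
def gaussian_logpdf(X, mean, cov):
    """Log density of N(mean, cov) at each row of X."""
    diff = X - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    return -0.5 * (maha + logdet + X.shape[1] * np.log(2 * np.pi))

def forward_backward(X, pi, A, means, covs):
    """Posterior state probabilities of a Gaussian HMM with known parameters.

    X: (T, N) ROI time series; pi: (K,) initial probabilities; A: (K, K) transitions.
    Returns gamma (T, K) with gamma[t, k] = P(state_t = k | X), and the log-likelihood.
    """
    T, K = len(X), len(pi)
    log_b = np.column_stack([gaussian_logpdf(X, means[k], covs[k]) for k in range(K)])
    shift = log_b.max(axis=1, keepdims=True)
    b = np.exp(log_b - shift)                  # emissions rescaled per time point
    alpha, beta, c = np.zeros((T, K)), np.ones((T, K)), np.zeros(T)
    alpha[0] = pi * b[0]
    c[0] = alpha[0].sum()
    alpha[0] /= c[0]
    for t in range(1, T):                      # scaled forward pass
        alpha[t] = (alpha[t - 1] @ A) * b[t]
        c[t] = alpha[t].sum()
        alpha[t] /= c[t]
    for t in range(T - 2, -1, -1):             # scaled backward pass
        beta[t] = A @ (b[t + 1] * beta[t + 1]) / c[t + 1]
    gamma = alpha * beta
    gamma /= gamma.sum(axis=1, keepdims=True)
    return gamma, np.log(c).sum() + shift.sum()
```

Given `gamma`, a subject's soft fractional occupancy is `gamma.mean(axis=0)`.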

3.2.3. Finding latent connectivity patterns across time-points

Decomposition techniques for understanding RSFC dynamics have the same flavor as those described in section 2.2.1, namely explaining data through latent factors; however, the variation of interest here is across time. Applying matrix decomposition to windowed correlation matrices exposes a basis set of FC patterns. Dynamic FC has been characterized using varied decomposition approaches, including PCA [48], Singular Value Decomposition (SVD) [49], non-negative matrix factorization [87] and sparse dictionary learning [88].

Here, decomposition approaches diverge from clustering or HMMs in that they associate each dFC matrix with multiple latent factors instead of a single state. To compare these alternatives, Leonardi et al. [49] implemented a generalized matrix decomposition, termed k-SVD, which generalizes both k-means clustering and PCA under different constraints. Reproducibility analysis in this study indicated that dFC is better characterized by multiple overlapping FC patterns.

Decomposition of dFC has revealed novel alterations in network dynamics between healthy controls and patients suffering from PTSD [88] or multiple sclerosis [48], as well as between childhood and young adulthood [87].
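A minimal PCA/SVD sketch of the decomposition view: each window's vectorized FC is written as the mean FC plus a weighted combination of several latent FC patterns, rather than being assigned to a single state. It stands in for the PCA/SVD variants cited above, not for k-SVD, NMF or sparse dictionary learning; the function name is hypothetical.

```python
import numpy as np

# Illustrative sketch; the function name is hypothetical.
def dfc_decomposition(F, n_components):
    """PCA/SVD of vectorized windowed FC.

    F: (n_windows, n_edges), one upper-triangular FC vector per window.
    Returns latent FC patterns (n_components, n_edges), per-window weights
    (n_windows, n_components), the variance fraction of each pattern, and the mean FC.
    """
    mean = F.mean(axis=0)
    U, s, Vt = np.linalg.svd(F - mean, full_matrices=False)
    weights = U[:, :n_components] * s[:n_components]  # each window mixes several patterns
    patterns = Vt[:n_components]
    explained = s**2 / np.sum(s**2)
    return patterns, weights, explained[:n_components], mean
```

Window t is approximated by `mean + weights[t] @ patterns`; keeping only the leading components gives the low-rank basis of FC patterns.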

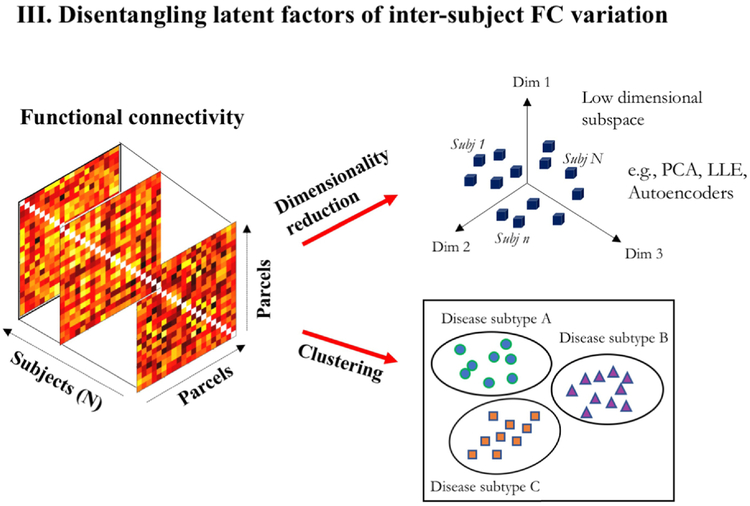

3.3. Disentangling latent factors of inter-subject FC variation

Unsupervised learning can also disentangle latent explanatory factors for FC variation across population. We find two applications here: (i) learning low dimensional embeddings of FC matrices for subsequent supervised learning and (ii) learning population groupings to differentiate phenotypes based solely on FC.

3.3.1. Dimensionality reduction

Rs-fMRI analysis is plagued by the curse of dimensionality, i.e., the phenomenon of increasing data sparsity in higher dimensions. Commonly used features, such as FC between pairs of regions, grow quadratically with the number of parcellated regions: N regions yield N(N − 1)/2 pairwise connections. Further, sample sizes in typical fMRI studies are of the order of tens or hundreds, making it harder to learn generalizable patterns from the original high-dimensional data. To overcome this, linear decomposition methods like PCA or sparse dictionary learning have been widely used for dimensionality reduction of functional connectivity data [89, 90, 91, 92].
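The arithmetic behind this growth, together with a PCA embedding of subjects' connectomes, can be sketched as follows. With 400 ROIs, each subject contributes 400 × 399 / 2 = 79,800 edge features, while a PCA fitted on n subjects can return at most n informative directions. The function names are hypothetical.

```python
import numpy as np

# Illustrative sketch; function names are hypothetical.
def connectome_features(fc):
    """fc: (n_subjects, N, N) symmetric FC matrices -> (n_subjects, N*(N-1)/2) edge vectors."""
    iu = np.triu_indices(fc.shape[1], k=1)
    return fc[:, iu[0], iu[1]]

def pca_embed(F, k):
    """Project centered subject features onto their top-k principal axes."""
    Fc = F - F.mean(axis=0)
    _, _, Vt = np.linalg.svd(Fc, full_matrices=False)
    return Fc @ Vt[:k].T
```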

Several non-linear embedding methods, like Locally Linear Embedding (LLE) or autoencoders (AEs), have also garnered attention. LLE embeddings have been employed in rs-fMRI studies, for example, to improve predictions in supervised age regression [93], or for low-dimensional clustering to distinguish schizophrenia patients from controls [94]. AEs are a neural-network-based alternative for generating reduced feature sets through nonlinear input transformations, and have been used for feature reduction of RSFC in several studies [86, 95]. AEs can also be used in a pre-training stage for supervised neural network training, in order to steer learning towards parameter spaces that support generalization [96]. This technique was shown, for example, to improve the classification of autism and schizophrenia using RSFC [97, 98].

3.3.2. Clustering heterogeneous diseases

Clustering can expose sub-groups within a population that show similar FC. Using unsupervised maximum margin clustering [99], Zeng et al. [100] demonstrated that clusters can be associated with disease category (depressed vs. control) to yield high classification accuracy. Recently, Drysdale et al. [101] discovered novel neurophysiological subtypes of depression based on RSFC. Using an agglomerative hierarchical procedure, they identified clustered patterns of dysfunctional connectivity, where clusters showed associations with distinct clinical symptom profiles despite no external supervision. Several psychiatric disorders, like depression, schizophrenia, and autism spectrum disorder, are believed to be highly heterogeneous, with widely varying clinical presentations. Instead of labelling them as unitary syndromes, differential characterization based on disease subtypes can build better diagnostic, prognostic or therapy selection systems. Unsupervised clustering could aid in the identification of these disease subtypes based on their rs-fMRI manifestations.

4. Supervised Learning

Supervised learning denotes the class of problems where the learning system is provided with input features of the data and corresponding targets (or labels). The goal is to learn the mapping between input and label, so that the system can compute predictions for previously unseen inputs. Predicting autism from rs-fMRI correlations is an example problem. Since intrinsic FC reflects interactions between cognitively associated functional networks, it is hypothesized that systematic alterations in resting-state patterns can be associated with pathology or cognitive traits. The promising diagnostic accuracy attained by supervised algorithms using rs-fMRI constitutes strong evidence for this hypothesis.

In this section, we separate the discussion of rs-fMRI feature extraction from the classification algorithms and application domains.

4.1. Deriving connectomic features

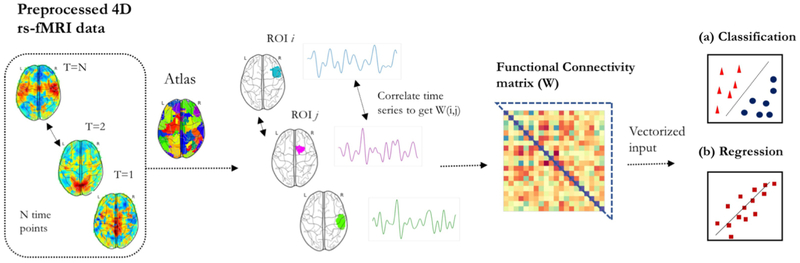

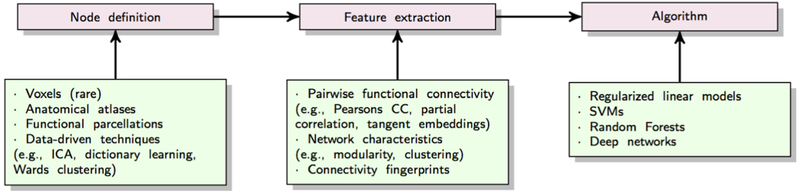

The most critical factor in rendering supervised learning effective is feature extraction. Capturing relevant neurophenotypes from rs-fMRI depends on various design choices. Almost all supervised prediction models use brain networks, or “connectomes”, extracted from rs-fMRI time series as input features for the learning algorithm. The prototypical prediction pipeline is shown in Figure 8. Here, we discuss critical aspects of common choices for brain network representations in supervised learning.

Figure 8: A common classification/regression pipeline for connectomes.

The first step in the prototypical pipeline is region definition and the corresponding time-series extraction. Dense connectomes derived from voxel-level correlations are rarely used in practice for supervised prediction due to their high dimensionality. Both functional and anatomical atlases have been extensively used for this dimensionality reduction. Atlases delineate ROIs within the brain that are often used to study RSFC at a supervoxel scale. Each ROI is represented with a distinct time course, often computed as the average signal of all voxels within the ROI. Consequently, the data are represented as an N × T matrix, where N denotes the number of ROIs and T the number of time points. A drawback of pre-defined atlases is that they may not explain the rs-fMRI dataset very well, since they are not optimized for the data at hand. Several studies instead employ data-driven techniques to define regions within the brain, using unsupervised models such as k-means clustering, Ward clustering, ICA or dictionary learning [66, 102]. Importantly, since pairs of ROIs define whole-brain RSFC, the number of features grows quadratically with the number of ROIs. Therefore, in most studies, network granularity is limited to the range of 10-400 ROIs.
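Atlas-based time-series extraction amounts to averaging voxel signals within each labelled region. A minimal sketch, assuming the image has already been flattened to a voxel-by-time array and the atlas to an integer label per voxel with 0 as background; the function name is hypothetical (neuroimaging toolboxes such as nilearn provide masker objects for this step).

```python
import numpy as np

# Illustrative sketch; the function name and the 0 = background convention are assumptions.
def roi_timeseries(voxel_ts, atlas_labels):
    """voxel_ts: (V, T); atlas_labels: (V,) ints with 0 = background.

    Returns the (N, T) matrix of ROI-average signals and the ROI label of each row.
    """
    rois = np.unique(atlas_labels[atlas_labels > 0])
    ts = np.vstack([voxel_ts[atlas_labels == r].mean(axis=0) for r in rois])
    return ts, rois
```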

The second step in this pipeline involves defining connectivity strength to extract the connectome matrix. Functional connectivity between pairs of ROIs is the most common feature representation of rs-fMRI in supervised learning. To extract the connectivity matrix, the covariance matrix first needs to be estimated. Sample covariance matrices are subject to a significant amount of estimation error due to the limited number of time points. This ill-posed problem can be partially resolved through shrinkage transformations [103]. Connectivity strength can then be estimated from the covariance matrix in multiple ways. Pearson’s correlation coefficient is a commonly used metric for estimating functional connectivity. Partial correlation is another metric that has been shown to yield better estimates of network connections in simulated rs-fMRI data [104]. It measures the normalized correlation between two time series after removing the effect of all other time series in the data. Alternatively, one can use a tangent-space reparametrization of the covariance matrix to obtain functional connectivity matrices that respect the Riemannian manifold of covariance matrices [105]. These connectivity coefficients can boost sensitivity when comparing patient and control populations [66, 105]. It is also possible to define frequency-specific connectivity strength by decomposing the original time series into multiple frequency sub-bands and correlating signals separately within each sub-band [106].
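The estimators above can be sketched in a few lines of NumPy. Two simplifications should be noted: the shrinkage weight `alpha` is fixed by hand (a Ledoit-Wolf-type estimator, such as scikit-learn's `LedoitWolf`, would choose it from the data), and the tangent-space reference point is the arithmetic mean covariance, whereas tangent-space methods commonly use a geometric (Riemannian) mean. All function names are hypothetical; nilearn's `ConnectivityMeasure` offers maintained "correlation", "partial correlation" and "tangent" options.

```python
import numpy as np

# Illustrative sketch; function names, fixed alpha and the arithmetic-mean reference are assumptions.
def shrunk_covariance(ts, alpha=0.1):
    """Sample covariance of ts (T, N) shrunk toward a scaled identity with fixed weight alpha."""
    S = np.cov(ts, rowvar=False)
    mu = np.trace(S) / S.shape[0]          # average variance, preserved by the shrinkage
    return (1.0 - alpha) * S + alpha * mu * np.eye(S.shape[0])

def cov_to_corr(C):
    """Pearson correlation matrix from a covariance matrix."""
    d = np.sqrt(np.diag(C))
    return C / np.outer(d, d)

def partial_corr(C):
    """Partial correlations from the precision matrix P = C^{-1}: -P_ij / sqrt(P_ii P_jj)."""
    P = np.linalg.inv(C)
    d = np.sqrt(np.diag(P))
    R = -P / np.outer(d, d)
    np.fill_diagonal(R, 1.0)
    return R

def _apply_to_eigvals(C, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T

def tangent_embedding(covs):
    """Map covariances (n_subjects, N, N) to log(ref^{-1/2} C ref^{-1/2}).

    Assumption: ref is the arithmetic mean covariance of the group.
    """
    ref_isqrt = _apply_to_eigvals(np.mean(covs, axis=0), lambda w: 1.0 / np.sqrt(w))
    out = []
    for C in covs:
        M = ref_isqrt @ C @ ref_isqrt
        out.append(_apply_to_eigvals((M + M.T) / 2.0, np.log))
    return np.array(out)
```

For a chain of three regions whose precision matrix has no (0, 2) entry, the marginal correlation between regions 0 and 2 is non-zero, while their partial correlation is zero: partial correlation discounts the indirect path through region 1.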

A few studies depart from this routine. In graph-theoretic analysis, it is common to represent parcellated brain regions as graph nodes and functional connectivity between nodes as edge weights. This graph-based representation of functional connectivity, the human “connectome”, has been used to infer various topological characteristics of brain networks, such as modularity, clustering and small-worldness. Some discriminative models have exploited these graph-based measures for individual-level predictions [107, 108, 13], although they are more commonly used for group comparisons. While limited in number, a few studies have also explored rs-fMRI features beyond RSFC. The amplitude of low-frequency fluctuations (ALFF) and the local synchronization of rs-fMRI signals, or Regional Homogeneity (ReHo), are two alternative measures of spontaneous brain activity that have shown discriminative ability [109, 110]. More recently, several studies have also begun to explore the predictive capacity of dynamic FC in supervised models [111, 112].
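As an example of a non-RSFC feature, ALFF can be sketched as the mean spectral amplitude of each (demeaned) time series within a low-frequency band. The 0.01-0.08 Hz band is a common choice and is an assumption here, as is the amplitude scaling (|FFT| / T, proportional to the square root of power); exact ALFF definitions and normalizations vary between toolboxes. The function name is hypothetical.

```python
import numpy as np

# Illustrative sketch; the function name, band and scaling are assumptions.
def alff(ts, tr, band=(0.01, 0.08)):
    """Mean spectral amplitude of ts within a low-frequency band.

    ts: (T,) or (T, N) time series sampled every `tr` seconds. The amplitude at
    each frequency is |FFT| / T, i.e. proportional to the square root of power.
    """
    ts = ts - ts.mean(axis=0)
    freqs = np.fft.rfftfreq(ts.shape[0], d=tr)
    amp = np.abs(np.fft.rfft(ts, axis=0)) / ts.shape[0]
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return amp[in_band].mean(axis=0)
```

With a repetition time of 2 s, a 0.05 Hz oscillation contributes to ALFF, while a 0.2 Hz oscillation of the same amplitude falls outside the band and contributes almost nothing.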

4.2. Feature selection

The goal of feature selection is to remove noisy, redundant or irrelevant features from the data while minimizing information loss. Feature selection can often be an advantageous pre-processing step for training supervised learning algorithms, especially in the low-sample-size regime. In the absence of adequate regularization, a large number of features can result in a loss of generalization power. Selecting a subset of the most relevant features can thus help build more generalizable models while reducing computational complexity.

Feature selection can be performed in a supervised or unsupervised fashion. Supervised or semi-supervised feature selection techniques choose a subset of features based on their ability to distinguish samples from different classes. These methods thus rely on class labels and can be further classified into filter, wrapper or embedded models. Filter models first rank features by their importance/relevance for the classification task based on a statistical measure (e.g., a t-test) and then select the top-ranked features. Wrapper models select feature subsets based on their predictive accuracy and thus need a pre-determined classification algorithm. Because they take prediction accuracy estimates into account during feature selection, wrapper models often perform better; however, due to the repeated learning and cross-validation, they can be computationally prohibitive. Embedded models combine the advantages of the two by integrating feature selection into the learning algorithm. Regression models such as LASSO belong to this category, as they implicitly select features by encouraging sparsity. These feature selection methods are discussed in depth in a detailed review by Tang et al. [113].
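A minimal filter-model sketch: rank features by the absolute two-sample (Welch) t-statistic and keep the top k. One practical point: the ranking should be computed on training folds only, because selecting features on the full dataset before cross-validation leaks label information and inflates accuracy estimates. The function names are hypothetical.

```python
import numpy as np

# Illustrative sketch; function names are hypothetical.
def welch_t(X, y):
    """Two-sample Welch t-statistic for every feature (column) of X; y in {0, 1}."""
    a, b = X[y == 1], X[y == 0]
    se = np.sqrt(a.var(axis=0, ddof=1) / len(a) + b.var(axis=0, ddof=1) / len(b))
    return (a.mean(axis=0) - b.mean(axis=0)) / se

def select_top_k(X_train, y_train, k):
    """Indices of the k features with the largest |t|, computed on training data only."""
    return np.argsort(-np.abs(welch_t(X_train, y_train)))[:k]
```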

An alternative to feature selection is input dimensionality reduction. Methods like PCA or LLE are unsupervised feature extraction techniques and have been used to reduce the feature set to a manageable size in several studies. However, as pointed out in [114], they are not guaranteed to improve classification performance, since they are oblivious to class labels.

Further, whether or not feature selection is necessary also depends on the downstream learning algorithm. Support vector machines, in general, deal well with high-dimensional data because margin maximization acts as a form of regularization. In the context of SVMs, Vapnik et al. [115] have shown that an upper bound on the generalization error is independent of the number of features. More generally, regularized models are capable of handling large feature sets. A drawback is that these models necessitate cross-validation to tune hyper-parameters, such as the weight of the regularization penalty. This can reduce the effective sample size available for training and/or independent testing.

In some situations, it might be beneficial to exploit domain knowledge to guide feature selection. For example, if certain anatomical regions are known to have altered functional connectivity in disease based on prior studies, it might be advantageous to use this prior knowledge for constructing a focused feature set.

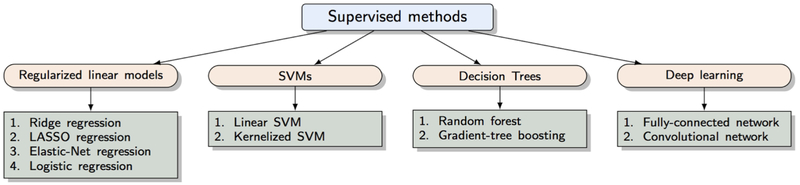

4.3. Methods

The majority of supervised learning methods applied to rs-fMRI are discriminant-based, i.e., they discriminate between classes without any prior assumptions about the generative process. The focus is on correctly estimating the boundaries between classes of interest. Learning algorithms for the same discriminant function (e.g., linear) can be based on different objective functions, giving rise to distinct models. We describe common models below.

4.3.1. Regularized linear models

A large class of supervised learning algorithms is based on regularized linear models. The goal is to predict a target variable Y given input features X. Without loss of generality and for notational convenience, we assume that the feature vector contains a constant entry equal to 1, which accounts for a bias term. These algorithms differ in their choice of likelihood model P(Y∣X, w) and/or prior P(w), where w denotes the parameters of the model. The resulting optimization problems are based on conditional maximum likelihood or maximum a posteriori (MAP) estimation.

Ridge regression.

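Ridge regression is a widely used supervised learning algorithm belonging to the class of regularized linear models. The goal is to predict a real-valued output Y given input features X. The conditional likelihood is specified as a normal distribution whose mean is modelled as a linear combination of the input features, i.e., $Y \mid X, w \sim \mathcal{N}(w^\top x, \sigma_\epsilon^2)$. Stacking the n training outputs gives the multivariate form $\mathbf{y} \mid X, w \sim \mathcal{N}(Xw, \sigma_\epsilon^2 I)$, where the rows of X are the feature vectors $x_i$. The prior on the weight parameters is often modelled as a zero-mean Gaussian with a diagonal covariance matrix, e.g., $w \sim \mathcal{N}(0, \tau^2 I)$. The optimal weight parameters w are thus estimated within a maximum a posteriori (MAP) framework according to

$$\hat{w} = \arg\max_{w} \left[ \log P(\mathbf{y} \mid X, w) + \log P(w) \right] = \arg\min_{w} \sum_{i=1}^{n} \left( y_i - w^\top x_i \right)^2 + \lambda \lVert w \rVert_2^2, \qquad \lambda = \sigma_\epsilon^2 / \tau^2.$$

The MAP estimation problem above is convex and admits a closed-form solution, $\hat{w} = (X^\top X + \lambda I)^{-1} X^\top \mathbf{y}$.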
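The closed-form MAP solution, $(X^\top X + \lambda I)^{-1} X^\top \mathbf{y}$ with λ equal to the noise variance divided by the prior variance, fits in a few lines of NumPy. This is an illustrative sketch, not a reference implementation. It assumes X already carries a column of ones for the bias, so the bias is penalized like every other weight. The names `ridge_map`, `noise_var` and `prior_var` are hypothetical.

```python
import numpy as np

def ridge_map(X, y, noise_var=1.0, prior_var=1.0):
    """MAP weights for y ~ N(Xw, noise_var * I) with prior w ~ N(0, prior_var * I).

    X is assumed to contain a column of ones for the bias, which is therefore
    penalized like every other weight.
    """
    lam = noise_var / prior_var                     # lambda = sigma_eps^2 / tau^2
    d = X.shape[1]
    # Solve (X^T X + lam I) w = X^T y instead of forming an explicit inverse
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

# Sanity check on synthetic data: the gradient of the penalized objective
# sum_i (y_i - w^T x_i)^2 + lam * ||w||^2 should vanish at the solution
rng = np.random.default_rng(0)
X = np.hstack([np.ones((50, 1)), rng.standard_normal((50, 10))])
y = X @ rng.standard_normal(11) + 0.1 * rng.standard_normal(50)
w = ridge_map(X, y, noise_var=0.5, prior_var=2.0)
grad = -2 * X.T @ (y - X @ w) + 2 * (0.5 / 2.0) * w
print(np.abs(grad).max())
```

Solving the linear system with `np.linalg.solve` is generally faster and more numerically stable than computing the matrix inverse explicitly.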

Logistic Regression.

Logistic regression employs a Bernoulli distribution to model the conditional probability of an output class $Y \in \{0, 1\}$ given the input features X, i.e., $Y \mid X \sim \mathrm{Bernoulli}(\mu)$. The mean parameter μ is specified through the logistic link function $\sigma(z) = 1/(1 + e^{-z})$ applied to a linear combination of the input features, i.e., $\mu = \sigma(w^\top x)$. Given data $\{(x_i, y_i)\}_{i=1}^{n}$, the model parameters w are optimized within a conditional maximum likelihood framework by solving the following convex optimization problem:

$$\hat{w} = \arg\min_{w} \; -\sum_{i=1}^{n} \left[ y_i \log \sigma(w^\top x_i) + (1 - y_i) \log\left(1 - \sigma(w^\top x_i)\right) \right]$$

This objective has no closed-form minimizer, so it is optimized using iterative methods such as gradient descent or Newton’s method. Regularized variants of logistic regression incorporate priors on the weight parameters (e.g., a multivariate Gaussian) and compute MAP estimates instead of conditional maximum likelihood estimates. A zero-mean Gaussian prior, for instance, adds an L2 penalty on w to the objective above.
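Because there is no closed form, this minimal sketch fits regularized logistic regression with plain gradient descent. It assumes labels in {0, 1}, a column of ones in X for the bias, and a zero-mean Gaussian prior with precision `lam`. The function names, step size and iteration count are illustrative, untuned choices. Newton's method or a library solver would typically converge in far fewer iterations.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic_map(X, y, lam=1.0, lr=0.5, n_iter=2000):
    """Gradient descent on the L2-penalized negative log-likelihood

        -sum_i [y_i log mu_i + (1 - y_i) log(1 - mu_i)] + (lam / 2) * ||w||^2,

    i.e. MAP estimation under a prior w ~ N(0, I / lam). Labels are in {0, 1};
    X is assumed to include a column of ones for the bias.
    """
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_iter):
        mu = sigmoid(X @ w)                    # predicted P(Y = 1 | x)
        grad = X.T @ (mu - y) + lam * w        # gradient of the objective above
        w -= lr * grad / n                     # divide by n so lr does not depend on sample size
    return w

# Toy example: two features, label is 1 when their sum is positive
rng = np.random.default_rng(0)
feats = rng.standard_normal((200, 2))
X = np.hstack([np.ones((200, 1)), feats])
y = (feats.sum(axis=1) > 0).astype(float)
w = fit_logistic_map(X, y)
accuracy = np.mean((sigmoid(X @ w) > 0.5) == y)
print(accuracy)
```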

4.3.2. Support Vector Machines (SVMs)

The SVM is the most widely used classification/regression algorithm in rs-fMRI studies. SVMs search for an optimal separating hyperplane between classes that maximizes the margin, i.e., the distance from the hyperplane to the closest points on either side. This results in a classifier of the form $f(x) = \operatorname{sign}(w^\top x)$. With labels $y_i \in \{-1, +1\}$, the model parameters are obtained by solving the following convex optimization problem:

$$\min_{w} \; \frac{1}{2} \lVert w_{-b} \rVert_2^2 + C \sum_{i=1}^{n} \max\left(0,\; 1 - y_i\, w^\top x_i\right)$$

Here, $\lVert w_{-b} \rVert_2$ is the L2 norm of the weight vector excluding the bias term. The hyperparameter C controls the capacity of the model by trading off the width of the margin against training errors. Tuning C can control overfitting and reduce the generalization error of the model. The resulting classifier is determined only by the subset of training instances that lie on or within the margin, known as the support vectors. SVMs can be extended to non-linear decision boundaries by adopting a so-called kernel function. The kernel function, which quantifies the similarity between pairs of points, implicitly maps the input data to a higher-dimensional feature space. Conceptually, kernel functions also allow the incorporation of domain-specific measures of similarity. For example, graph kernels, such as the Weisfeiler-Lehman subtree kernel, define a similarity measure between graph representations of functional connectivity data, enabling classification directly in graph space.
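The soft-margin objective $\frac{1}{2}\lVert w_{-b} \rVert_2^2 + C \sum_i \max(0, 1 - y_i w^\top x_i)$ can be minimized directly in its primal form with subgradient descent. This sketch shows one simple way to do so. Dedicated SVM solvers work differently; LIBSVM, for example, optimizes the dual problem. The sketch assumes labels in {-1, +1} and puts the constant bias feature in the last column of X so it can be left unpenalized. Names and step sizes are hypothetical and untuned.

```python
import numpy as np

def fit_linear_svm(X, y, C=1.0, lr=0.001, n_iter=5000):
    """Subgradient descent on the soft-margin primal objective

        0.5 * ||w_{-b}||^2 + C * sum_i max(0, 1 - y_i * w^T x_i),

    with labels y in {-1, +1}. The LAST column of X is assumed to be the constant 1,
    so the last weight is the bias and is excluded from the penalty.
    """
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_iter):
        margins = y * (X @ w)
        active = margins < 1                   # points with positive hinge loss
        grad = w.copy()
        grad[-1] = 0.0                         # no penalty on the bias term
        grad -= C * (y[active][:, None] * X[active]).sum(axis=0)
        w -= lr * grad
    return w

# Toy example: two well-separated Gaussian clouds
rng = np.random.default_rng(0)
pos = rng.normal(2.0, 1.0, size=(50, 2))
neg = rng.normal(-2.0, 1.0, size=(50, 2))
X = np.hstack([np.vstack([pos, neg]), np.ones((100, 1))])
y = np.concatenate([np.ones(50), -np.ones(50)])
w = fit_linear_svm(X, y)
accuracy = np.mean(np.sign(X @ w) == y)
# Points on or inside the (approximate) margin play the role of support vectors
support = np.flatnonzero(y * (X @ w) <= 1 + 0.05)
print(accuracy, len(support))
```

Only points with $y_i w^\top x_i < 1$ enter the data term of the subgradient. This is why the fitted hyperplane depends on the support vectors alone.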

4.3.3. Decision trees and random forests

Decision trees predict the output Y through a sequence of splits in the input feature space X. The tree is a directed acyclic graph whose internal nodes represent decision points and whose edges represent the outcomes of those decisions. Traversing the tree from the root and following the outcome of each decision yields a prediction once a node with no children (a leaf node) is reached. Decision trees are often constructed in a top-down, greedy fashion: at each step, a node is split by optimizing a metric that quantifies the consistency between predictions and ground truth. For example, in classification, a commonly used information-theoretic metric is the information gain, i.e., the reduction in the entropy of Y after observing X:

$$\mathrm{IG}(Y, X) = H(Y) - H(Y \mid X), \qquad H(Y \mid X) = \sum_{x} P(X = x)\, H(Y \mid X = x),$$

where H denotes the Shannon entropy. Under this metric, the first split uses the feature of X that yields the maximum information gain. Decision trees can offer interpretability, often at the cost of reduced accuracy. Ensembles of decision trees, such as random forests or boosted trees, are therefore a more popular choice in most applications, since they typically yield much better predictive performance.
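Information gain for a candidate split can be computed directly from label counts. Below is a minimal standard-library sketch. It assumes a discrete feature, for example a connectivity value thresholded into high/low. The labels and feature values are made up for illustration.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy H(Y), in bits, of a sequence of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def information_gain(labels, feature_values):
    """IG(Y, X) = H(Y) - H(Y | X) for a discrete feature X."""
    n = len(labels)
    cond = 0.0
    for v in set(feature_values):
        subset = [y for y, x in zip(labels, feature_values) if x == v]
        cond += (len(subset) / n) * entropy(subset)   # P(X = v) * H(Y | X = v)
    return entropy(labels) - cond

y = ["patient"] * 4 + ["control"] * 4
print(information_gain(y, [1, 1, 1, 1, 0, 0, 0, 0]))  # 1.0: the split separates the classes perfectly
print(information_gain(y, [1, 0, 1, 0, 1, 0, 1, 0]))  # 0.0: the split is uninformative
```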

4.3.4. Deep neural networks

An ideal machine learning system should be highly automated, with limited hand-crafting in feature extraction and minimal assumptions about the nature of the mapping between data and labels. The system should be able to learn patterns useful for prediction automatically from labelled data. Neural networks are highly promising methods for such automated learning, owing to their capability to approximate arbitrarily complex functions [116], provided sufficient labelled data are available.

Deep learning models, or neural networks, define a mapping $Y = f(X; \theta)$ and optimize for the parameters θ that yield the best functional approximation. The function f(·) is typically a composition of simple nonlinear functions, often referred to as layers. A widely used layer is the fully-connected layer, which linearly combines its input variables and applies a simple elementwise nonlinear function such as a sigmoid. The number of layers determines the depth of the network and controls the complexity of the model. The weights and biases of the layers are optimized via gradient-descent-based methods to minimize an objective function that quantifies the empirical risk. Traditionally, the use of neural networks has been limited because neuroimaging is a data-scarce domain, making it difficult to learn a reliable mapping between input and prediction variables. However, with data sharing and the open release of large-scale neuroimaging data repositories, neural networks have recently gained adoption in the rs-fMRI community for supervised prediction tasks. Neural networks with fully-connected dense layers have been adopted to learn arbitrary mappings from connectivity features to disease labels [97, 98]. More recently, neural network models with local receptive fields, such as convolutional neural networks (CNNs), have shown promising classification accuracy using rs-fMRI data [117]. CNNs replace the fully-connected operations with convolutions by a set of learnable filters. The success of this approach stems from its ability to exploit the full-resolution 3D spatial structure of rs-fMRI without having to learn too many model parameters, thanks to weight sharing.
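Some back-of-the-envelope arithmetic shows why weight sharing matters. The sketch counts parameters for a single fully-connected layer versus a single 3D convolutional layer, each applied to one whole-brain volume. All sizes are assumptions chosen for illustration: a 91 × 109 × 91 grid (the 2 mm MNI152 template dimensions), a single input channel, 64 hidden units or filters, and 3 × 3 × 3 kernels.

```python
def dense_params(n_inputs, n_units):
    """Fully-connected layer: one weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units

def conv3d_params(kernel_size, in_channels, n_filters):
    """3D convolution: each filter's weights are shared across all voxel positions,
    so the count does not depend on the size of the input volume."""
    kx, ky, kz = kernel_size
    return kx * ky * kz * in_channels * n_filters + n_filters

# Assumed input: one 3D map on a 91 x 109 x 91 grid
n_voxels = 91 * 109 * 91
print(n_voxels)                              # 902629
print(dense_params(n_voxels, 64))            # 57768320
print(conv3d_params((3, 3, 3), 1, 64))       # 1792
```

The convolutional count is independent of the volume size because the same filters are applied at every voxel location. The dense layer's count, by contrast, grows linearly with the number of voxels.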

4.3.5. Comments

I. Strengths/weaknesses of diverse approaches.

All algorithms have their own strengths and weaknesses, and the choice of approach should be driven by several factors such as the prediction task, sample size, and nature of the input features. The training objective in common supervised learning algorithms used for neuroimaging applications, such as regularized linear models or SVMs, is often a combination of two terms. The first is a data loss term that measures the empirical risk (training error). The second is a regularization penalty, corresponding to the prior, that helps combat over-fitting and thereby reduces generalization error. The choice of penalty norm can be critical and is often guided by prior knowledge about the data. L1 penalties encourage sparsity in the weights, whereas L2 penalties allow kernelization and thus enable non-linear decision functions. L2 penalties lead to dense solutions and are useful in learning problems where all features are expected to contribute to the predictive model. L1 penalties are useful when prior belief suggests that only a subset of features will contribute to predictions. Some regression models, e.g., the Elastic-Net, employ a linear combination of both penalties at the expense of an additional hyperparameter that tunes the trade-off between the two. The algorithmic choice is also affected by the end goal. Models like decision trees or the LASSO are often preferred when interpretability is valued over optimal performance, whereas higher-complexity models like SVMs, random forests or neural networks are often favored when the goal is to maximize performance.
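The contrast between L1 and L2 penalties shows up clearly on synthetic data where only a few features carry signal. This NumPy sketch fits an L1-penalized model by cyclic coordinate descent and an L2-penalized model in closed form. It assumes no bias term and 3 informative features out of 20, and it picks the penalty weights by hand rather than by cross-validation. All names are hypothetical.

```python
import numpy as np

def soft_threshold(rho, alpha):
    """Proximal operator of alpha * |w|: shrinks rho toward zero, exactly to zero if |rho| <= alpha."""
    return np.sign(rho) * max(abs(rho) - alpha, 0.0)

def lasso_cd(X, y, alpha, n_sweeps=200):
    """Cyclic coordinate descent for 0.5 * ||y - Xw||^2 + alpha * ||w||_1 (no bias term)."""
    n, d = X.shape
    w = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_sweeps):
        for j in range(d):
            r_j = y - X @ w + X[:, j] * w[j]   # partial residual: y minus the fit of all features except j
            w[j] = soft_threshold(X[:, j] @ r_j, alpha) / col_sq[j]
    return w

def ridge(X, y, lam):
    """Closed-form minimizer of 0.5 * ||y - Xw||^2 + 0.5 * lam * ||w||^2."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

# Synthetic data in which only the first 3 of 20 features carry signal
rng = np.random.default_rng(0)
X = rng.standard_normal((100, 20))
w_true = np.zeros(20)
w_true[:3] = [3.0, -2.0, 1.5]
y = X @ w_true + 0.5 * rng.standard_normal(100)
w_l1 = lasso_cd(X, y, alpha=30.0)
w_l2 = ridge(X, y, lam=30.0)
print("zero weights, L1:", np.sum(w_l1 == 0), " L2:", np.sum(w_l2 == 0))
```

Soft-thresholding sets a coordinate exactly to zero whenever its correlation with the partial residual falls below alpha. The L1 solution therefore typically contains many exact zeros, while the L2 solution only shrinks weights toward zero.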

II. Comments on sample sizes.

An important question arises: what is an appropriate sample size for training supervised learning models? Unsurprisingly, research has shown that the sample size needed for learning depends on the complexity of the model: powerful non-linear algorithms typically require more training examples to be effective. In general, one would also expect that the more features the data contain, the more training examples are required to characterize their distribution. Hence, the minimum sample size for training an ML algorithm is, in general, a complex function of input dimensionality, model complexity, data quality, data heterogeneity, class separability, etc.

Given the significant impact of sample size on classification performance, it is important to understand the nature of this relationship. There is significant ongoing research addressing this question using learning curves. These curves model the relationship between sample size and generalization error and can be used to predict the sample size required to train a particular classifier. Several studies have shown that learning curves are well characterized by an inverse power law, $E(n) = \alpha n^{-\beta}$, where E denotes the error, n denotes the sample size, and α, β > 0 are fitted constants [118, 119]. Besides the empirical justification, many studies have also provided theoretical motivations for the inverse power-law model. The parameters of the learning curve are fitted empirically for a given application domain based on prior classification studies. For traditional algorithms, learning curves tend to plateau, i.e., performance gains become insignificant beyond a certain sample size. One significant advantage of deep learning methods is that, given sufficient capacity, they scale remarkably well with more data. Given the recent surge of interest in single-subject predictions using rs-fMRI, estimating the learning curve for classification of rs-fMRI data could be invaluable for understanding sample size requirements in this domain.
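The inverse power law $E(n) = \alpha n^{-\beta}$ can be fitted by linear regression in log-log space, since $\log E = \log \alpha - \beta \log n$. Below is a minimal standard-library sketch using hypothetical learning-curve points. Fitting in log space is only one simple choice. Nonlinear least squares, or models with an additional asymptotic error term, are also used.

```python
from math import exp, log

def fit_power_law(ns, errors):
    """Fit E(n) = alpha * n**(-beta) by ordinary least squares on log E = log(alpha) - beta * log(n)."""
    xs = [log(n) for n in ns]
    ys = [log(e) for e in errors]
    m = len(xs)
    x_bar, y_bar = sum(xs) / m, sum(ys) / m
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    return exp(intercept), -slope          # (alpha, beta)

def required_n(alpha, beta, target_error):
    """Invert E(n) = alpha * n**(-beta) for the sample size reaching target_error."""
    return (alpha / target_error) ** (1.0 / beta)

# Hypothetical learning-curve points lying exactly on E(n) = 2 / sqrt(n)
alpha, beta = fit_power_law([25, 100, 400], [0.4, 0.2, 0.1])
print(alpha, beta, required_n(alpha, beta, 0.05))   # approximately 2.0, 0.5, 1600.0
```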

Another critical issue relates to the robustness of the estimated prediction scores. Empirical studies have shown that small sample sizes, typical in neuroimaging studies, result in large error bars on the prediction accuracy. For instance, Varoquaux et al. estimate that, with a sample size of 100, the error in the estimated prediction accuracy of a binary classification task is close to 10%; with 1000 samples, this error falls to about 3%. Such large confidence bounds can potentially invalidate the conclusions of studies based on a small number of samples.
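A simple binomial approximation gives error bars of the same magnitude. This sketch is not the analysis of Varoquaux et al. It treats each test prediction as an independent Bernoulli trial at chance-level accuracy (0.5, which gives the widest interval) and computes the half-width of a normal-approximation 95% interval. Because it ignores the extra variability introduced by cross-validation, it may understate the uncertainty in practice.

```python
from math import sqrt

def accuracy_ci_halfwidth(accuracy, n_test, z=1.96):
    """Half-width of a normal-approximation (Wald) interval for a binomial proportion.

    Treats the n_test predictions as independent Bernoulli trials; z = 1.96 gives ~95% coverage.
    """
    return z * sqrt(accuracy * (1.0 - accuracy) / n_test)

for n in (100, 1000):
    print(n, round(accuracy_ci_halfwidth(0.5, n), 3))   # 0.098 for n=100, 0.031 for n=1000
```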

One possible strategy to overcome the limitations of insufficient sample sizes is to exploit unlabelled data in a semi-supervised fashion in order to increase the effectiveness of supervised learning algorithms. Transfer learning techniques are another promising alternative for enhancing classification performance in the low-data regime. These methods exploit neural networks trained on large datasets or auxiliary tasks by fine-tuning them to a target dataset or classification task. These are relatively unexplored directions in the field of rs-fMRI analysis that hold significant potential to alleviate the sample size limitations.

III. Comments on model evaluation.

Cross-validation is a model evaluation technique used to estimate the generalization error of a predictive model. A naive cross-validation strategy is holdout: the data are randomly split into a training set and a test set, and the test score from this single run is used as an estimate of out-of-sample accuracy. Given the limited sample sizes in most neuroimaging studies, K-fold is the dominant cross-validation choice. It uses every data point for both training and validation, yielding error estimates with lower variance than a single holdout split. K-fold cross-validation first partitions the data into K non-overlapping subsets, $D = \{S_1, \ldots, S_K\}$. For each fold $i \in \{1, \ldots, K\}$, the model is trained on $D \setminus S_i$ and evaluated on $S_i$. The mean accuracy across all folds is then used to estimate the model performance. While K can in principle be any integer between 2 and the number of samples, common choices are 5 or 10. When K equals the number of samples in the dataset, the resampling procedure is known as leave-one-out cross-validation. Leave-one-out can be used with computationally inexpensive models when sample sizes are low, typically fewer than a hundred.
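The K-fold procedure can be sketched with the standard library alone. This minimal illustration assumes the samples are exchangeable, so plain random shuffling is appropriate, and that `fit`/`predict` are caller-supplied functions. The names are hypothetical. Library implementations add options such as stratification by class.

```python
import random

def kfold_splits(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) for K-fold CV over sample indices 0..n_samples-1."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # Fold sizes differ by at most one: the first n_samples % k folds get an extra sample
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = idx[start:start + size]              # S_i
        train = idx[:start] + idx[start + size:]    # D \ S_i
        yield train, test
        start += size

def cross_val_accuracy(fit, predict, X, y, k=5, seed=0):
    """Mean test accuracy over the K folds; fit and predict are caller-supplied functions."""
    scores = []
    for train, test in kfold_splits(len(y), k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        preds = predict(model, [X[i] for i in test])
        scores.append(sum(p == y[i] for p, i in zip(preds, test)) / len(test))
    return sum(scores) / len(scores)

# Example "model": always predict the most frequent training label
def fit_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)

def predict_majority(model, X_test):
    return [model] * len(X_test)

# Leave-one-out is the special case k == n_samples
loo = list(kfold_splits(7, 7))
```

Setting `k` equal to the number of samples, as in the `loo` line, gives leave-one-out cross-validation.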

4.4. Applications of supervised learning in rs-fMRI

Studies harnessing resting-state correlations for supervised prediction tasks are evolving at an unprecedented scale. We describe some interesting applications of supervised machine learning in rs-fMRI below.

4.4.1. Brain development and aging

Machine learning methods have shown promise in investigating the developing connectome. In an early influential work, Dosenbach et al. [120] demonstrated the feasibility of using RSFC to predict brain maturation as measured by chronological age, in adolescents and young adults. Using SVM, they developed a functional maturation index based on predicted brain ages. Later studies showed that brain maturity can be reasonably predicted even in diverse cohorts distributed across the human lifespan [121, 122]. These works posited rs-fMRI as a valuable tool to predict healthy neurodevelopment and exposed novel age-related dynamics of RSFC, such as major changes in FC of sensorimotor regions [122], or an increasingly distributed functional architecture with age [120]. In addition to characterizing RSFC changes accompanying natural aging, machine learning has also been used to identify atypical neurodevelopment [123].

4.4.2. Neurological and Psychiatric Disorders

Machine learning has been extensively deployed to investigate the diagnostic value of rs-fMRI data in various neurological and psychiatric conditions. ML models using functional connectivity-based biomarkers have classified several neurodegenerative diseases with promising accuracy. These include Alzheimer’s disease [24, 107, 124], its prodromal stage, mild cognitive impairment [125, 126, 127, 128], Parkinson’s disease [129], and amyotrophic lateral sclerosis (ALS) [130]. Brain atrophy patterns in neurological disorders like Alzheimer’s disease or multiple sclerosis appear well before behavioral symptoms emerge. Thus, neuroimaging-based biomarkers derived from structural or functional abnormalities are favorable for early diagnosis and subsequent intervention to slow down the degenerative process.