Abstract

Perceptual recalibration allows listeners to adapt to talker-specific pronunciations, such as atypical realizations of specific sounds. Such recalibration can facilitate robust speech recognition. However, indiscriminate recalibration following any atypically pronounced words also risks interpreting pronunciations as characteristic of a talker that are in reality due to incidental, short-lived factors (such as a speech error). We investigate whether the mechanisms underlying perceptual recalibration involve inferences about the causes for unexpected pronunciations. In five experiments, we ask whether perceptual recalibration is blocked if the atypical pronunciations of an unfamiliar talker can also be attributed to other incidental causes. We investigated three potential incidental causes for atypical pronunciations: the talker is intoxicated, the talker speaks unusually fast, or the atypical pronunciations occur only in the context of tongue twisters. In all five experiments, we find robust evidence for perceptual recalibration, but little evidence that the presence of incidental causes block perceptual recalibration. We discuss these results in light of other recent findings that incidental causes can block perceptual recalibration.

Keywords: speech perception, perceptual recalibration, causal inference, adaptation, tongue twister, intoxicated speech

Talkers differ from each other in their meaning-to-sound mappings: the same word produced in the same context will differ acoustically and phonetically depending on the talker. How listeners overcome this problem has continued to be one of the pressing questions in research on speech perception. One important part of the answer seems to be adaptive mechanisms during speech perception. Adaptation is observed when listeners are exposed to unfamiliar talkers with non-native or otherwise atypical pronunciations (e.g., Bradlow & Bent, 2008; Sidaras, Alexander & Nygaard, 2009; Xie, Theodore & Myers, 2017). While listeners might initially experience processing difficulty, some of this difficulty can be overcome within minutes of exposure (Clarke & Garrett, 2004; Xie, Weatherholtz, Bainton, Rowe, Burchill, Liu & Jaeger, 2018). The adaptive nature of the speech perception system is also evident in a phenomenon called perceptual recalibration. When exposed to an unfamiliar talker with atypical pronunciations of a sound category, listeners adapt the categorization boundary between those sound categories (e.g., Eisner & McQueen, 2006; Kraljic & Samuel, 2005; Norris, McQueen & Cutler, 2003; Reinisch & Holt, 2014; Vroomen & Baart, 2009). For example, after exposure to a talker who produces /s/ in a way that makes it sound more like an /∫/,1 listeners change the boundary along the /s/-/∫/ continuum, so that more sounds along that continuum are now categorized as /s/.

Intuitively, recalibration facilitates robust speech perception, helping listeners to overcome inter-talker variability in the sound-meaning mapping. While the existence of perceptual recalibration is now firmly established, questions remain about the nature of its underlying mechanisms (for review, see Weatherholtz & Jaeger, 2016). Here we ask whether recalibration applies indiscriminately when an unfamiliar talker with atypical pronunciation is encountered, or whether perceptual recalibration can be cancelled if there is evidence that the input is not characteristic of the talker—for example, because the pronunciation might have resulted from an incidental cause (e.g., the talker is chewing gum).

In an influential study, Kraljic, Samuel & Brennan (2008) found that perceptual recalibration to atypical pronunciations of /∫/ was blocked when the atypical pronunciations could be attributed to an incidental cause. In their experiments, atypical pronunciations were either paired with a video showing the talker producing the shifted word with a pen in their mouth or with a pen in their hand. Kraljic and colleagues identified perceptual recalibration when the shifted pronunciations were paired with videos where the talker has a pen in the hand. When the talker had a pen in the mouth while producing the atypical sound, perceptual recalibration was blocked. One explanation for this blocking is that listeners attribute the atypical pronunciations to the pen (Liu & Jaeger 2018; for related discussion, see Arnold, Kam & Tanenhaus 2007; Kraljic et al. 2008). Inferences about the causes for unexpected pronunciations would allow listeners to determine whether they should expect the same talker to sound similar on future occasions, or whether the observed deviation from expected pronunciations was incidental (though alternative explanations have been proposed, Kraljic & Samuel 2011).

As of yet, this ‘pen-in-the-mouth’ effect remains the only manipulation of incidental causes for which blocking of perceptual recalibration has been investigated. It is thus an open question as to whether other incidental causes can block (or at least reduce) recalibration, as would be expected if causal inferences underlie the pen-in-the-mouth effect. More generally, relatively little is known about the extent to which listeners take into account alternative causes when interpreting linguistic input. Some studies have found similar effects on other aspects of language understanding—in particular, alternative causes presented in explicit instructions (e.g., Arnold et al. 2007; Dix, Gardner, Lawrence, Morgan, Sullivan & Kurumada 2018; Grodner & Sedivy 2011; Kurumada, Brown, Bibyk & Tanenhaus 2018, as summarized in Rohde & Kurumada 2018). For example, listeners tend to anticipate unfamiliar objects as referents following a speech disfluency (“Click on [pause] thee uh red...”), as evidenced in anticipatory eye-movements in a visual world paradigm (Arnold et al., 2007). This effect was blocked when listeners were told that the speaker suffered from a pathology that made naming objects difficult. Results like these suggest that listeners can in principle integrate the presence of alternative causes for the linguistic input they observe during the interpretation of that input. Whether similar inferences can affect perceptual recalibration or other adaptive processes during speech perception remains an open question.

Here we investigate listeners’ perceptual recalibration when atypical pronunciations of /s/ or /∫/ are presented in the context of incidental causes. Across five experiments, we investigate the effects of three incidental causes: alleged intoxication, faster than usual speech rate, and tongue twisters. Any of these factors can cause atypical pronunciation of the /s/-/∫/ contrast, though our focus lies on tongue twisters. Anyone who grew up in an English-speaking environment is likely familiar with well-known tongue twisters like “She sells seashells by the seashore.” or “Peter Piper picked a peck of pickled peppers.” Tongue twisters are notoriously difficult for talkers to produce and often result in speech errors when produced quickly. This is precisely the property that makes tongue twisters a suitable manipulation for the present purpose. While categorical speech errors—such as full phoneme exchanges—are rare in spontaneous speech (<0.1–2%, as estimated in Garnham, Shillcock, Brown, Mill & Cutler, 1981; Levelt, 1993; Wijnen, 1992), the rate of speech errors increases drastically when speakers have to produce sequences of similar sounding words in production experiments (up to 8–17%, according to Choe & Redford, 2012; Motley & Baars, 1976) and even more so in the context of tongue twisters. Because of perceptual biases, these numbers likely underestimate the true rate of speech errors (by some estimates by a factor of three or more, Alderete & Davies 2018; Ferber 1991). We draw on this increased incidence of production errors in tongue twister contexts compared to non-tongue twister contexts. Specifically, we ask whether participants are less likely to expect all pronunciations of a talker to sound atypical when all previously observed atypical pronunciations by that talker occurred in tongue twisters, compared to when previously observed atypical pronunciations occurred in non-tongue twister contexts.

All our experiments employ graded phonetic deviations from typical pronunciations, rather than categorical speech errors. Traditionally, the study of speech errors in productions has focused on categorical errors (phoneme substitution, deletion, transposition, omission, or addition, e.g., Fromkin, 1971). However, recent analyses suggest that speech errors are often graded noncategorical deviations from the intended pronunciation (Frisch & Wright, 2002; Goldrick & Blumstein, 2006; Goldstein, Pouplier, Chen, Saltzman & Byrd, 2007; McMillan & Corley, 2010; Mowrey & MacKay, 1990; Pouplier, 2007). For example, Frisch & Wright (2002) measured the percentage of voicing, duration of frication, and the amplitude of frication for the /s/ and /z/ contrasts, produced in a tongue twister context. Frisch and Wright found that errors often exhibited phonetic characteristics that placed them along the continuum between /s/ and /z/, rather than being categorical substitution of one sound for the other. Similarly, Navas (2001, as cited in Goldrick and Blumstein, 2006) found that some fricative errors exhibited spectral characteristics that were between typical /s/ and /∫/ pronunciations (for a concise summary of related works, see Alderete & Davies, 2018, p. 27–29).

This makes tongue twisters a suitable incidental cause for the present purpose: similar (experimenter-created) gradient pronunciations are used in perceptual recalibration experiments, including the experiments we present here. Imagine you hear a talker produce the tongue twister “She sells seashells by the seashore.” The talker might pronounce the beginning of this phrase as “She shells”, shifting the /s/ in “sells” towards (but not completely) the /∫/ in “shells”. If listeners can take into account incidental causes, they should infer that this pronunciation might not be typical for the talker, and therefore not predictive of future pronunciations of /s/ by the same talker. We would thus expect that perceptual recalibration is reduced if the talker’s shifted pronunciations only ever occur in the context of tongue twisters.

Overview of experiments

Experiment 1 verifies that we are able to detect perceptual recalibration using our paradigm. After we establish that we indeed can detect perceptual recalibration to shifted sounds, we test whether perceptual recalibration is blocked when shifted sounds during exposure only occur within a tongue twister context (e.g., “passion mansion passive passion”). Blocking of perceptual recalibration is expected if listeners’ fully attribute the atypical pronunciation to the tongue twister context, and thus infer that those atypical pronunciations are not informative about how the same talker’s speech outside of tongue twister contexts.

Anticipating our results, we find robust evidence of perceptual recalibration. However, we do not find significant evidence that the tongue twister condition blocks perceptual recalibration: the perceptual recalibration effect in Experiment 1 does not differ significantly across non-tongue twister and tongue twister contexts. This leads us to conduct Experiment 2, which establishes that we can in principle detect statistically significant differences between exposure that elicits perceptual recalibration (as in Experiment 1) and exposure that does not elicit perceptual recalibration (as in Experiment 2). Experiment 3 explores whether the presentation of explicit instructions about plausible incidental causes for shifted pronunciations—e.g., that the talker is intoxicated—can block perceptual recalibration. We again find robust perceptual recalibration effects across all conditions, and no significant evidence that incidental causes can block perceptual recalibration. This leads us to assess the plausibility of our tongue twister contexts, and compare them against attested tongue twisters like “Peter Piper Pepper Peter”. Experiment 4 identifies the most convincing tongue twister contexts and assesses whether perceptual recalibration can be blocked when only those most plausible tongue twisters are used. We again observe robust perceptual recalibration after exposure to non-tongue twister contexts. And, again, we find no significant blocking of perceptual recalibration after exposure to tongue twister contexts. Finally, Experiment 5 tests whether perceptual recalibration is reduced if the shifted pronunciation occurs together with clear signs of production difficulty.

Like the planned analyses for Experiments 1-4, Experiment 5 fails to find significant evidence that listeners integrate incidental causes to explain away atypical pronunciations. These findings contrast with the robust pen-in-the-mouth effect, which has been replicated across a number experiments (Kraljic et al., 2008; Kraljic & Samuel, 2011), including in paradigms similar to the one employed here (Liu & Jaeger, 2018). There is, however, some evidence in support of causal inference during perceptual recalibration: the non-significant effects we observe go in the predicted direction (reduced perceptual recalibration in the presence of an incidental cause) in five out of six between-subject comparisons. Prompted by reviewers, we thus conducted post-hoc analyses. These analyses reveal some (albeit weak) evidence consistent with the hypothesis that incidental causes can reduce the magnitude of perceptual recalibration.

In the general discussion, we review how our results narrow down possible explanations for the effect of visually presented causes like the pen in the mouth. Broadly speaking, one possibility is that the pen-in-the-mouth effect does not originate in causal inferences, contrary to our earlier interpretation (Liu & Jaeger, 2018). This would, however, raise the need for alternative explanations of previous findings that have been attributed to causal inferences (for discussion, see Kraljic & Samuel, 2011). Another possibility is that the pen-in-the-mouth effect does originate in causal inferences but that visual information, or specifically visual information about articulation, has a special status during speech processing—for example, because of special mechanisms dedicated to the integration of audio-visual percepts (cf. Rosenblum 2008; Tuomainen, Andersen, Tiippana & Sams 2005). Finally, our results are compatible with the hypothesis that perceptual recalibration is affected by causal inferences, provided that these inferences are exquisitely sensitive to the probability of the hypothesized incidental cause resulting in the observed auditory percepts. We discuss the properties of our experiments that afford this latter interpretation, and determine future steps to distinguish between the different accounts.

Analysis and reporting approach

Following standard procedure from our lab, we report all studies conducted for this project. Three auxiliary experiments that yielded identical results to experiments we report in detail are presented in supplementary information available via OSF https://osf.io/ungba/, and summarized in the main text. Unless explicitly mentioned otherwise, analyses were planned prior to inspection of the data.

The number of participants and test items included in the analysis was held constant across all experiments, and was chosen so as to achieve sufficient power based on the effect sizes reported in similar previous research (for details, see Methods). We confirmed that we have high power by parametrically generating 10,000 data sets with an effect size estimated from previous work—specifically, half the estimate observed in Liu & Jaeger (2018). These estimates were intended, and turn out, to be conservative (the effect sizes observed in the experiments reported below are larger than those assumed in the power analyses). These simulations estimate the power of our analyses to detect perceptual recalibration (Label effect in predicted direction)—detected in all experiments reported below—at > 95%, and the power to detect blocking of perceptual recalibration (interaction of Label and Context effects in predicted direction)—detected in none of our experiments—at > 81% (for explanation of the conditions, see below). All data and analyses are available at https://osf.io/ungba/.

Aggregate demographic information about participants

Since the demographic composition of our participants did not vary significantly across experiments, we report aggregate information here. All demographic categories were based verbatim on NIH reporting requirements. Across all experiments presented here, 48% of our participants reported as “female”, and 47% report as “male”, and 5% declined to report gender. The mean age of our participants was 36.3 years, with an interquartile range of 27–42 years (SD = 19; 4% declined to report). All participants reported to be at least 18 years of age. With regard to ethnicity, 9% of the participants reported as “Hispanic”, 85% as “Non-Hispanic”, and 6% declined to report. With regard to race, 74% report as “White”, 8% as “Black or African American”, 7% as “Asian”, 4% as “More than one race”, 1% as “American Indian/Alaska Native” or “Native Hawaiian or other Pacific Islander”, 1% as other and 5% declined to report. As we have no theoretical reasons to investigate demographic effects on the outcomes reported in the present study, we refrained from doing so.

Experiment 1

We begin by verifying that we can detect perceptual recalibration to atypical pronunciations of /s/ and /∫/ in a new variant of an exposure-test paradigm that accommodates our present goals. The general structure of our experiments is summarized in Figure 1 and elaborated on below. Following previous perceptual recalibration experiments, our experiment consisted of an exposure block intended to induce perceptual recalibration, followed by a test block to assess the degree of perceptual recalibration (Eisner & McQueen, 2005; Kraljic et al., 2008; Kraljic & Samuel, 2005, 2011; Liu & Jaeger, 2018; Norris et al., 2003).

Figure 1.

Structure of experiments. During the exposure block, participants heard 24 four-word phrases and were asked to transcribe them. Exposure was manipulated between participants. During the test block, all participants categorized sounds as either /asi/ or /a∫i/.

In order to study the effects of tongue twisters, listeners in the present study heard four word phrases during exposure, some of which contained the shifted /?s∫/ sound (either an /s/ shifted towards and /∫/ or vice versa; for details, see Methods). Specifically, we used a 2 × 2 between-participant design in the exposure block (Label x Context). Participants heard a shifted sound replace the /s/ sound (S-Label condition) or the /∫/ sound (∫-Label condition), and these atypical pronunciations either occurred in a Tongue Twister Context (e.g., “passive massive pa?s∫ion passive”) or a Non-Tongue Twister Context (e.g., “holler tamper pa?s∫ion holler”). The Tongue Twister Context contained sound sequences intended to make it more difficult to produce than the Non-Tongue Twister Context. This was intended to make it seem likely to participants that any atypical pronunciation in the Tongue Twister Context was due to an incidental speech error. We employ the /s/ and /∫/ contrast because these sounds are commonly exchanged for each other in speech errors (Shattuck-Hufnagel & Klatt, 1979). This makes it more likely that atypical pronunciations of /s/ and /∫/ in a tongue twister context will be seen as a plausible speech error.

The test block did not vary across participants, and followed previous perceptual recalibration experiments (Kraljic & Samuel, 2005; Liu & Jaeger, 2018; Norris et al., 2003). During test, we assess whether exposure affected categorization along an /s/-/∫/ continuum, as expected from previous studies on perceptual recalibration. We then examine whether this perceptual recalibration effect could be blocked or reduced depending on the context in which /s/ and /∫/ appeared.

All experiments reported below use a web-based crowdsourcing paradigm. This allows us to collect data more quickly, and from a more heterogeneous participant group than lab-based paradigms. This was particularly helpful for the present studies, which include a total of 960 participants. We have employed similar web-based paradigms in previous work on speech perception (e.g., Bicknell, Bushong, Tanenhaus & Jaeger, 2019; Burchill, Liu & Jaeger, 2018; Bushong & Jaeger, 2017; Kleinschmidt, Raizada & Jaeger, 2015; Xie et al., 2018), including lexically-guided perceptual recalibration to /s/ and /∫/ (Liu & Jaeger, 2018).

Method

Participants.

173 total participants were recruited to achieve a target of 40 participants for each of the four between-participant conditions (S/∫-Label crossed with the Tongue Twister/Non-Tongue Twister Context). The same holds for all perceptual recalibration experiments presented below, in order to avoid unnecessary researchers’ degrees of freedom. The targeted number of participants is comparable to previous experiments on perceptual recalibration (e.g., ~ 48 in Kraljic and Samuel, 2005; ~ 25 participants Norris et al., 2003).

The experiment took about 10 minutes, and participants were paid $1.00 ($6/hour). Participants were instructed to participate only if they were native speakers of English, and if they would complete the experiment while wearing headphones in a quiet room.

Exclusion criteria were determined prior to conducting the experiment, closely following previous work (specifically, all applicable criteria from Liu and Jaeger, 2018). Based on these criteria, 8 participants were excluded based on their incorrect response to a catch question asking them to identify whether the exposure talker was male or female (the talker was clearly a female speaker), and 2 participants were excluded for reporting that they did not wear headphones during the experiment (7.5% total exclusion rate). Both the catch question and the headphone question were part of a post-experiment exit survey described below. 3 participants were excluded for likely confusing their response keys during the test block, as evidenced by inverted categorization boundaries (more /s/ responses at the /∫/ end of the continuum), which would not be expected under any theory of speech perception.

Materials. Exposure Block: Transcription Task.

Participants heard and transcribed 24 four-word phrases (all phrases given in Tables 1 and 2). The condition (Label x Context) that the participant was in dictated the specific set of phrases they would hear. 8 of these phrases contained a shifted pronunciation of either /s/ or /∫/, depending on the Label condition. We chose to have 8 shifted pronunciations because we had previously found perceptual recalibration to /s/ and /∫/ in similar paradigms with 6 and 10 shifted pronunciations, and little to no benefit for more than 10 shifted pronunciations (Liu & Jaeger 2018; see also Kleinschmidt & Jaeger 2011). Our power simulations were based on half the effect size found in previous work for 10 shifted pronunciations (see appendix for details).

Table 1.

Stimuli for S-Label condition (Experiment 1). The 8 shaded rows in each condition represent the critical stimuli containing a shifted sound (?s∫). The non-shaded rows in each condition are the 16 filler phrases. 8 of the filler phrases were identical across all conditions, and contained no fricative sounds. The other filler phrases were balanced between the critical phrases so that participants in the Tongue Twister Context and the Non-Tongue Twister Context of each Label condition would hear the exact same recordings. Items marked with an asterisk (*) represent the subset of items used in Experiment 4 and 5. We note that our design implies that there are 3-times as many unshifted sounds as atypical shifted critical sounds. This differs from previous work and is addressed in Experiment 2.

| Tongue Twister Context (S-Label) |

Non-Tongue Twister Context (S-Label) |

|---|---|

| passion mansion pa ?s∫ive passion * | holler tamper pa ?s∫ive holler * |

| pushing cushion ki?s∫ing pushing | kelly bigot ki ?s∫ing kelly |

| crucial glacial cla?s∫ic crucial | gecko ruby cla?s∫ic gecko |

| pension mission po ?s∫sum pension | tamer hater po?s∫sum tamer |

| cashew kosher ca ?s∫tle cashew * | layman hating ca?s∫tle hating * |

| blushing pressure blo ?s∫om blushing * | header leaning blo ?s∫om leaning * |

| ration washing ran ?s∫om ration * | yapping nodded ran ?s∫om nodded * |

| bishop gusher go ?s∫ip bishop | wacky talent go ?s∫ip talent |

| holler tamper hamper holler * | passion mansion hamper passion * |

| kelly bigot belly kelly | pushing cushion belly pushing |

| gecko ruby raking gecko | crucial glacial raking crucial |

| tamer hater hammer tamer | pension mission hammer pension |

| layman hating human hating * | cashew kosher human cashew * |

| header leaning leader leaning * | blushing pressure leader blushing * |

| yapping nodded napping nodded * | ration washing napping ration * |

| wacky talent tacky talent | bishop gusher tacky bishop |

| weary deepen dairy deepen * | weary deepen dairy deepen * |

| polly gaping goalie gaping * | polly gaping goalie gaping * |

| carry making marry making | carry making marry making |

| debit rookie rabbit rookie * | debit rookie rabbit rookie * |

| hidden berry button berry | hidden berry button berry |

| bullet happy hamlet happy * | bullet happy hamlet happy * |

| wacko tamer taco tamer | wacko tamer taco tamer |

| weary deepen dairy deepen | weary deepen dairy deepen |

Table 2.

Stimuli for ∫-Label condition (Experiment 1). For details, see caption of Table 1.

| Tongue Twister Context (∫-Label) |

Non-Tongue Twister Context (∫-Label) |

|---|---|

| passive massive pa ?s∫ion passive * | holler tamper pa ?s∫ion holler * |

| kissing missing cu?s∫ion kissing | kelly bigot cu ?s∫ion kelly |

| classic glassy cru?s∫ial classic | gecko ruby cru?s∫ial gecko |

| tossing possum pen ?s∫ion tossing | tamer hater pen ?s∫ion tamer |

| castle missile ca ?s∫ew castle * | layman hating ca ?s∫ew hating * |

| blossom pressing blu ?s∫ing blossom * | header leaning blu ?s∫ing leaning * |

| ransom wussy ra ?s∫ion ransom * | yapping nodded ra?s∫ion nodded * |

| gossip bicep bi?s∫op gossip | wacky talent bi ?s∫op talent |

| holler tamper hamper holler * | passive massive hamper passive * |

| kelly bigot belly kelly | kissing missing belly kissing |

| gecko ruby raking gecko | classic glassy raking classic |

| tamer hater hammer tamer | tossing possum hammer tossing |

| layman hating human hating * | castle missile human castle * |

| header leaning leader leaning * | blossom pressing leader blossom * |

| yapping nodded napping nodded * | ransom wussy napping ransom * |

| wacky talent tacky talent | gossip bicep tacky gossip |

| weary deepen dairy deepen * | weary deepen dairy deepen * |

| polly gaping goalie gaping * | polly gaping goalie gaping * |

| carry making marry making | carry making marry making |

| debit rookie rabbit rookie * | debit rookie rabbit rookie * |

| hidden berry button berry | hidden berry button berry |

| bullet happy hamlet happy * | bullet happy hamlet happy * |

| wacko tamer taco tamer | wacko tamer taco tamer |

| weary deepen dairy deepen | weary deepen dairy deepen |

| passion mansion pa?s∫ive passion * | holler tamper pa ?s∫ive holler * |

We refer to the phrases that contained a shifted pronunciation as critical phrases. The eight critical phrases occurred in either a Tongue Twister or Non-Tongue Twister Context, described below. The other 16 phrases were filler phrases. Critical words were always bi-syllabic, and the /s/ and /∫/ sound always occurred at the beginning of the second syllable. This was to ensure that the “critical phonemes ... [were] well-articulated and ... preceded by relatively strong lexical information” (Kraljic and Samuel, 2005, p.147). In Kraljic and Samuel’s study, critical sounds occurred at syllable onsets late in words, with most words having 3, sometimes 4, syllables. Our decision to use bi-syllabic words might have reduced the strength of the lexical information preceding the critical sounds (as our results show, this was not an issue), but allowed us to closely match the phonotactic context in critical words for /s/ and /∫/ sounds (e.g., passive—passion). For the same reason, /s/ and /∫/ sounds were always surrounded by either a vowel or nasal sound.

Following previous work, none of the other words contained any other fricative sounds (incl. /s/ and /∫/). Lists for stimuli presentation were created by Latin square design over Label and Context. One pseudo-randomized stimulus order (and its reverse) was created in which no more than two critical phrases occurred in a row. This resulted in eight lists (2 Label x 2 Context * 2 Orders = 8 Lists).

We first describe the creation of the shifted pronunciations. We then describe the structure of the critical phrases in the Tongue Twister Context, followed by the structure of the filler phrases in the Tongue Twister Context. Finally, we describe the structure of the critical and filler phrases in the Non-Tongue Twister Context. Phrases were recorded at a natural speech rate, with durations of about 2–2.5 seconds.

Creation of shifted pronunciations.

The third word in the phrase was the critical word: the /s/ or /∫/ in this word was shifted toward its fricative counterpart (i.e. /∫/ or /s/, respectively). To create these atypical productions, the talker (a 25 year old female, native talker of American English) recorded two versions of each phrase, one containing the normal pronunciation of the third word (e.g., passive) and one containing the atypical pronunciation of the third word with the fricative counterpart (e.g., pashive). The pronunciation containing the fricative counterpart never resulted in a real word, which allowed the participant to use lexical knowledge to disambiguate the identity of the shifted fricative. The /s/ and /∫/ of the two recordings were blended using FricativeMakerPro (McMurray, Rhone & Galle, 2012) to create a continuum with 31 steps for that word (e.g., ranging from passive to pashive). Following Kraljic and Samuel (2005), three native English speakers then independently listened to these words to identify the word that sounded maximally ambiguous. The average of their responses was selected as the shifted /?s∫/ word that was presented to participants. Each shifted pronunciation was then inserted back into the phrases corresponding to the Tongue Twister and Non-Tongue Twister Context, which we describe next.

Critical phrases in Tongue Twister Context.

We created 8 four-word phrases in each Label condition that contained an atypical pronunciation of either /s/ or /∫/ in the third word position (e.g., passion mansion pa?s∫ive passion). Specifically, the phrases were of the structure S1 S2 ?s∫ S1 (or ∫1 ∫2 ?s∫ ∫1), where S1 and S2 were words which contained the /s/ sound, and ?s∫was a word that contained the shifted /?s∫/ sound. These tongue twister phrases had a number of structural properties that were intended to make it plausible that they would elicit mispronunciations of /s/ as /∫/ (or more /∫/-sounding /s/ sounds) in the S-label condition (and vice versa in the ∫-label condition). For example, the /s/ and /∫/ sounds in our experiment all appeared word medially, as speech errors are more likely to affect sounds that share a word position than when they do not (Shattuck-Hufnagel, 1983; Wilshire, 1999).

Additionally, we positioned the atypical /s/ and /∫/ sounds in the third word position, preceding a word with a typical pronunciation of the counterpart fricative, because speech errors are likely to anticipate upcoming sounds (Wilshire, 1999). In other words, the third word in our tongue twister phrases were shifted towards the fricative in the first, second, and fourth word of our phrases.

Finally, as much as possible, the first and second word in the phrase shared a common vowel in the second syllable, and either the first or second word in the phrase shared a common onset with the third word. For example, consider the phrase “passive massive pa?s∫ion passive”. The first and second words (passive and massive) share the vowel in the second syllable (in fact, they share the entire syllable), and the first and third word share a common onset (passive and passion). This stimulus structure was chosen to approximate the type of tongue twisters used in speech error eliciting experiments (e.g., Sevald & Dell, 1994; Shattuck-Hufnagel & Klatt, 1979; Wilshire, 1999). For example, Wilshire (1999) employed tongue twisters consisting of four monosyllabic words, where the word-initial phoneme varied in the structure ABBA, and the word-final phoneme varied in the structure ABAB (e.g., palm neck name pack).

Filler phrases in the Tongue Twister Context.

We created 16 four-word filler phrases. In 4 of these phrases, the first word was repeated in the fourth position (e.g., holler tamper hamper holler), and in 12 of these phrases, the second word was repeated in the fourth position (e.g., weary deepen dairy deepen). When combined with the 8 critical phrases described above this resulted in each participant hearing 12 examples where the first word was repeated in the fourth position, and 12 examples where the second word was repeated in the fourth position. This was done so that participants would not be able to consistently anticipate the fourth word.

Additionally, for each four-word filler phrase, we aimed to select pairs of phonemes to use for the onsets of the first and second syllables of each word that had no (or a very low) incidence of speech errors with each other, based on the MIT confusion matrix of 1,620 single phoneme errors (Shattuck-Hufnagel & Klatt, 1979). For example, for the filler phrase “holler tamper hamper holler”, both the pairs h/t and l/p are exchanged for each other the fewest number of times in that matrix (0 occurrences).

Critical and filler phrases in the Non-Tongue Twister Context.

For each Label condition, participants in the Non-Tongue Twister Context heard exactly the same recordings of words as those in the Tongue Twister Context. To achieve this, for each of the critical phrases in the Tongue Twister Context, we spliced the third word (containing the shifted /?s∫/) into one of the filler phrases. For example, in the S-Label / Tongue Twister condition, one of the critical phrases is “passion mansion pa?s∫ive passion” and one of the filler phrases is “holler tamper hamper holler”. In the S-Label / Non-Tongue Twister condition, the critical phrase becomes “holler tamper pa?s∫ive holler” and the filler phrase becomes “passion mansion hamper passion” (see Tables 1 and 2 for full list of stimuli). Thus, in the Tongue Twister Context, the word containing a shifted /?s∫/ occurs in a phrase that was created to make speech errors seem plausible, while in the Non-Tongue Twister Context, it does not.

Test Block: Categorization Task.

Following previous work, the same talker who recorded the exposure stimuli was recorded saying the nonce words /asi/ and /a∫i/. These nonce words were blended together using FricativeMakerPro (McMurray et al., 2012) to create a continuum of 31 steps ranging from /asi/ to /a∫i/. We selected 7 of these steps to serve as test steps based on initial informal piloting: five steps were centered close to the point of maximal ambiguity, and two steps represented category endpoints. This procedure closely follows previous work, though the specific numbers of test tokens and their placement along the continuum varies somewhat across works (e.g., Kraljic & Samuel, 2006; Liu & Jaeger, 2018; Norris et al., 2003; Vroomen, van Linden, De Gelder & Bertelson, 2007). The results reported in Figure 3 below confirm that the seven test steps span the /s/-/∫/ continuum, as intended.

Figure 3.

Proportion of /∫/ responses as a function of Continuum Step (Experiment 1). Participants in the ∫-Label condition (blue triangle) shift towards /s/ and participants in the S-Label condition (red circle) shift towards /∫/ for both Context conditions. Error bars show 95% confidence intervals obtained via non-parametric bootstrap over the by-participant means. Note that our analysis follows previous work and collapses across continuum steps.

Procedure.

The experiment began with instructions, one practice trial, the exposure block, the test block, and the post-experimental survey. This general structure was identical to that employed in many previous perceptual recalibration experiments. Previous work has often employed lexical decision tasks during exposure (e.g., Kraljic & Samuel, 2005; Liu & Jaeger, 2018; Norris et al., 2003; Zhang & Samuel, 2014), though perceptual recalibration has also been found for a broad variety of different tasks during exposure (e.g., passive listening with catch trials, e.g., Bertelson, Vroomen & De Gelder 2003; Vroomen et al. 2007; ABX discrimination, Clarke-Davidson, Luce & Sawusch 2008; categorization, Clayards, Tanenhaus, Aslin & Jacobs 2008; Kleinschmidt et al. 2015; for further review and comparison of various paradigms, see Drouin & Theodore 2018). Here we employ a transcription task during exposure, a paradigm often employed in related work on accent adaptation (e.g., Bradlow & Bent, 2008; Baese-Berk, Bradlow & Wright, 2013; Tzeng, Alexander, Sidaras & Nygaard, 2016; Xie, Liu & Jaeger, 2019).

During the practice trial, participants heard a male, native American-English accented talker saying the words “grumpy kitten table pretty”. This talker was clearly different from the exposure and test talker, who was female. After the phrase, participants were asked to transcribe the words that they heard, separated by spaces. The trial was repeated until participants correctly transcribed the words. The purpose of the practice trial was to familiarize participants with the task and to allow them to adjust the volume to a comfortable listening level.

The exposure block trials followed the exact same format as the practice trial, except that participants did not receive feedback on the accuracy of their transcriptions and each trial was only played once. Participants heard 24 trials, separated by an inter-trial interval of 1000ms. With a total of 96 words (24 four-word trials), the amount of exposure was similar to our previous web-based studies in which we found perceptual recalibration for /s/-/∫/ (e.g., 60–160 trials across the three experiments reported in Liu and Jaeger, 2018).

One difference of the current study to the more common lexical decision paradigm is the relative proportion of unshifted and atypical pronunciations. In the present study, due to the necessary repetition of sounds in the Tongue Twister Context, participants heard three times as many non-shifted pronunciations (of /s/ or /∫/) as shifted pronunciations, whereas previous studies have exposed participants to equal number of unshifted and atypical pronunciations. Most accounts of perceptual recalibration would predict that the degree of boundary shift primarily depends on the number of atypical pronunciations (Experiment 2 assesses and confirms this assumption). The number of atypical pronunciation and their relative proportion out of all trials in the present study (8 critical atypical items out of 96, i.e., 8.3%) was similar compared to previous web-based studies in which we found perceptual recalibration for /s/-/∫/ (e.g., 6–16 atypical items at a rate of 10% of all items, in Liu and Jaeger, 2018).

During the test block participants, categorized seven steps on the /asi/-/a∫i/ continuum as either /asi/ or /a∫i/, five times each. The steps were played in five cycles (trial bins), each containing a random ordering of the seven steps. Participants indicated their responses using the ‘X’ and ‘M’ keys on their keyboard. Key bindings were counterbalanced across participants. This test procedure is identical to that of our previous web-based studies in which we found perceptual recalibration for /s/-/∫/, except that we halved the number of trials bins from 10 to 5. We did so because our previous work found that the perceptual recalibration effect is largest at the beginning of the test block and then steadily decreases (Liu and Jaeger, 2018; confirmed below).

Finally, participants answered a questionnaire that asked about their audio equipment, language background, technical difficulties, and attention during the experiment.

Scoring transcription accuracy during exposure.

An undergraduate research assistant compiled a list of common misspellings for each word (e.g., spelling polly as pollie or poly). Transcription accuracy was automatically scored for matches to the expected transcriptions; any word that was also on the list of common misspellings was labeled as correct. We counted a word’s transcription as correct regardless of whether the four words had been transcribed in the correct order. The same scoring approach was used for all other experiments reported below. If word order mistakes were counted, transcription accuracies would decrease by about 7.8% across all experiments (range = 5.8–12.1%). None of the results reported in this paper change if order mistakes are counted (for full information, see supplementary data information).

Results

We first summarize our analyses of transcription accuracy during the exposure block. We then turn to the critical results from the test block, comparing the Label and Context conditions. We employ mixed logistic regression (Breslow & Clayton, 1993; Jaeger, 2008) to analyze responses during both the exposure and the test phase, as both involve binary dependent variables.

Exposure Block: Transcription accuracy.

Following previous work, we analyzed two aspects of transcription accuracy during exposure. We first examined the overall accuracy in order to assess whether participants were listening to the stimuli. Then, we assessed whether participants transcribed the critical shifted words correctly. A failure to do so might suggest that participants did not recognize the words, which in turn might reduce the magnitude of perceptual recalibration. Figure 2 summarizes the overall transcription accuracy for all experiments.

Figure 2.

Transcription accuracy during exposure for all experiments and between-participant conditions. Transparent points show by-participant averages. Solid point ranges show the mean and bootstrapped 95% confidence interval over those by-participant averages.

For Experiment 1, the overall transcription accuracy averaged over by-participant means was 88.4% (SD = 7.0%). Table 3 shows accuracies by between-participant conditions. Given the challenging task of transcribing 24 sequences of four semantically unrelated words spoken at a rate of about two words/second, we take this to be adequate performance, indicating that participants were paying attention during the exposure block. To assess whether transcription accuracy differed between conditions, we conducted a mixed logit regression predicting trial-level accuracy (1 = correct, 0 = incorrect) from Label (always sum-coded:∫-Label = 1 vs. S-Label = −1), Context (sum-coded: Non-Tongue Twister = 1 vs. Tongue Twister = −1), and their interaction. The analysis included by-participant intercepts and by-item random intercepts and slopes for Context, Label, and their interaction. An item was defined as the nth row of Tables 1 and 2. For example, the first rows of Tables 1 and 2 together constitute one item.

Table 3.

Transcription accuracy by Label and Context condition with standard deviations in parentheses (Experiment 1)

| Percent of Words Correctly Transcribed | ||

|---|---|---|

| ∫-Label | S-Label | |

| Non-tongue twister | 90.7% (3.6%) | 85.9% (10.7%) |

| Tongue twister | 89.1% (6%) | 87.8% (5.9%) |

Participants in the ∫-Label condition transcribed significantly more words correctly than those in the S-Label condition = 0.27, z = 3.11, p < 0.002). This effect was small (see Table 3), and does not hold across all experiments (see Figure 2). To foreshadow the results of Experiments 2-5, Experiment 3b exhibited the same effect as Experiment 1 ( = 0.18, z = 2.21, p < 0.03), but Experiment 3a exhibited a similarly small effect in the opposite direction—lower accuracy in the ∫-Label condition = −0.20, z = −2.61, p < 0.01). No main effects of Label condition were observed in Experiments 2, 4b, and 5. More importantly given our interest in tongue twister contexts, neither the effects of Context (p > 0.92), nor its interaction between with Label was significant (p > 0.48). It is thus unlikely that any effect of Context (or lack thereof) on the categorization boundary during the test phase could be confounded by overall task engagement.

Next, we analyzed the proportion of correctly transcribed critical shifted words with the exact same analysis approach. The mean transcription accuracy of shifted words in Experiment 1 was 84.8% (SD = 16.3). Table 4 shows accuracies by between-participant conditions. The mixed logit regression found that participants in the ∫-Label condition transcribed significantly more shifted words correctly than those in the S-Label condition = 0.56, z = 4.28, p < 0.0001). This effect was larger than for overall accuracy, possibly driving the effects on overall accuracy. More importantly, neither the effects of Context (p > 0.85), nor its interaction between with Label was significant (p > 0.84). The same holds for all experiments reported below: none of our Context manipulations had a significant main effect on the overall accuracy, or the accuracy with which shifted tokens were transcribed; similarly, Context never interacted significantly with the Label condition (though there were marginally significant interactions in Experiments 3b and 5).

Table 4.

Transcription accuracy for only the eight critical shifted words (Experiment 1)

| Percent of Words Correctly Transcribed | ||

|---|---|---|

| ∫-Label | S-Label | |

| Non-tongue twister | 90.6% (10.9%) | 83.1% (22.6%) |

| Tongue twister | 83.4% (13.7%) | 82.2% (14.9%) |

In short, there is no reason to expect that differences in task engagement during exposure, or differences in participants’ ability to recognize and process the shifted words would confound the analyses of the test data reported below. Still, in order to address our own concerns and those of reviewers’, additional control analyses are reported in the supplementary information, available at https://osf.io/ungba/. Specifically, we repeated all analyses of category boundary shifts during the test block (for all experiments) while also including the participant’s transcription accuracy during exposure as a predictor, as well as all interactions of that predictor with all other variables in the analysis. All of these analyses confirmed the results we report below: while higher accuracy during exposure predicted larger perceptual recalibration effects during test in some of the experiments, this effect never changed the significance of Label or its interaction with Context. This was the case regardless of the specific accuracy measure employed.

Test Block: Changes in the categorization boundary.

We present two planned analyses. Both analyses are trial-level analyses over all data from the test block. The first analysis follows standard practice, and analyzes the average proportion of /∫/ responses ignoring continuum steps and trial order. This resembles the analyses of variance presented in the majority of studies on perceptual recalibration. The second analysis assesses the perceptual recalibration effect at the beginning of the test block. The reason for this second (planned) analysis is found in our previous work: in Liu and Jaeger (2018) we found that perceptual recalibration effect continuously reduced during the test block, perhaps due to the uniform distribution of stimuli across the /asi/-/a∫i/ continuum. This means that the standard analysis—assessing average perceptual recalibration across the entire test block—can substantially underestimate the true perceptual recalibration (as we confirm this below). Such undoing of perceptual recalibration effects during testing is expected if perceptual recalibration reflects distributional learning (as argued in, e.g., Kleinschmidt & Jaeger, 2015; Lancia & Winter, 2013).

Our second analysis directly addresses this possibility by capturing changes in perceptual recalibration during test, and providing a measure of perceptual recalibration at the beginning of the test block. As we show below, this increases our ability to detect effects on perceptual recalibration (such as the hypothesized blocking of perceptual recalibration). Following our previous work, all subsequent analyses are based on this alternative approach.

For the Non-Tongue Twister Context we predict the same type of perceptual recalibration as in previous studies with different exposure tasks (Kraljic et al., 2008; Kraljic and Samuel, 2005, 2011; Liu and Jaeger, 2018; Norris et al., 2003): participants in the J-Label should shift their category boundary towards /s/ and thus categorize more sounds as /∫/, and participants in the S-Label shift their category boundary towards /∫/ and thus should categorize more sounds as /s/.

In the Tongue Twister Context, participants heard the same shifted pronunciations as in the Non-Tongue Twister Context, but embedded in a tongue twister. If the tongue twister context provided participants with a plausible causal explanation for the atypical pronunciations, then participants may attribute these atypical pronunciations to an incidental cause, leading them to adapt less or not at all (as observed for visually provided cause in Kraljic et al., 2008; Kraljic & Samuel, 2011; Liu & Jaeger, 2018).

Average perceptual recalibration across the test block.

Figure 3 shows the categorization curve for all four conditions of Experiment 1 (averaged across all trial bins). We conducted mixed logit regression, where we predicted /∫/ responses (1 = /∫/ response, 0 = /s/ response) by Label (sum-coded: ∫-Label = 1 vs. S-Label = −1) and Context (sum-coded: Non-Tongue Twister = 1 vs. Tongue Twister = −1), and their interaction. The analysis included by-participant random intercepts, which constitutes the maximal random effect structure for our design.

This revealed that overall more /∫/ responses were observed in the ∫-Label condition than in the S-Label condition ( = 0.30, z = 5.0, p < 0.001; Figure 3). This is consistent with perceptual recalibration, and a shift in the categorization boundary based on Label. The output from the model is shown in Table 5. Critically, there was no significant difference of Context (p = 0.41) nor was there a significant interaction between Label and Context (p = 0.69). This suggests that participants who heard the atypical pronunciations in the Tongue Twister Context adapted just as strongly as those who had heard these pronunciations in the Non-Tongue Twister Context, contrary to what we had originally predicted.

Table 5.

Mixed logit regression predicting proportion of /∫/ responses from Label, Condition, and their interaction (Experiment 1). Coding: Label (sum coded: ∫-Label = 1 vs. S-Label = −1) and Condition (Non-Tongue Twister Context = 1 vs. Tongue Twister Context = −1). Rows that are critical to our analysis are highlighted in grey.

| Predictors | Parameter Estimates |

Significance Test |

||

|---|---|---|---|---|

| Coef () | Std Err | z | p | |

| (Intercept) | −0.10 | 0.06 | −1.62 | 0.10 |

| Label (J vs. S) | 0.30 | 0.06 | 5.0 | <0.001 |

| Context (NonTT vs. TT) | −0.05 | 0.06 | −0.8 | 0.41 |

| Label:Context | 0.02 | 0.06 | 0.40 | 0.69 |

Measuring perceptual recalibration at the beginning of test block.

Replicating Liu and Jaeger (2018), we find that participant responses move towards a 50/50 (empirical logit of 0) asi/-/a∫i/ baseline over the course of the test block (Figure 4). Following Liu and Jaeger (2018), we thus conducted an additional analysis to assess the perceptual recalibration effect at the very beginning of the test block. We employed mixed logit regression to predict /∫/ responses from Label (sum-coded: ∫-Label = 1 vs. S-Label = −1), Context (sum-coded: Non-Tongue Twister = 1 vs. Tongue Twister = −1), Trial Bin (coded continuously with the first trial bin as 0), and their interactions (Table 6). The estimated effect of Label thus represents the estimate of the recalibration effect across both Context conditions during the first trial bin of the test block. This and all subsequent analyses of this type included by-participant random intercepts (models with by-participant random slopes for Trial Bin did not converge or led to singular fits, except for Experiment 3a).

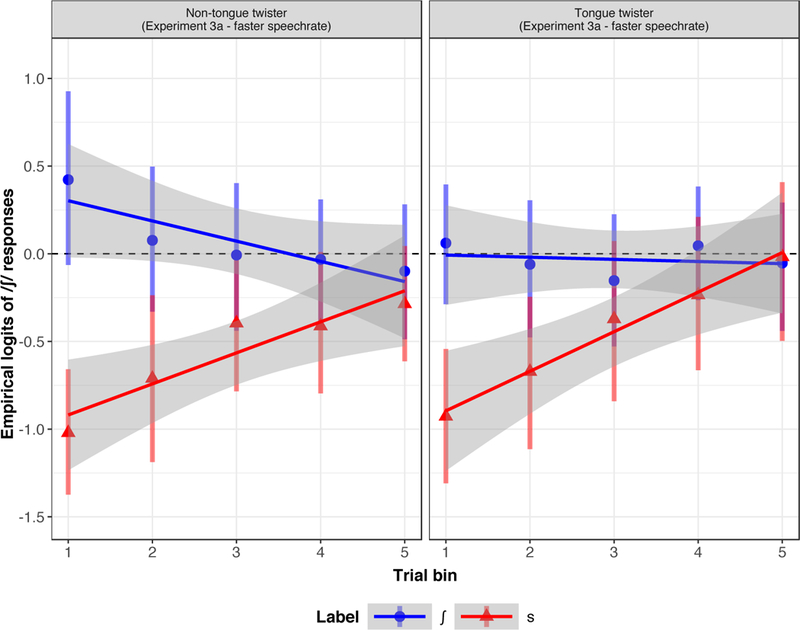

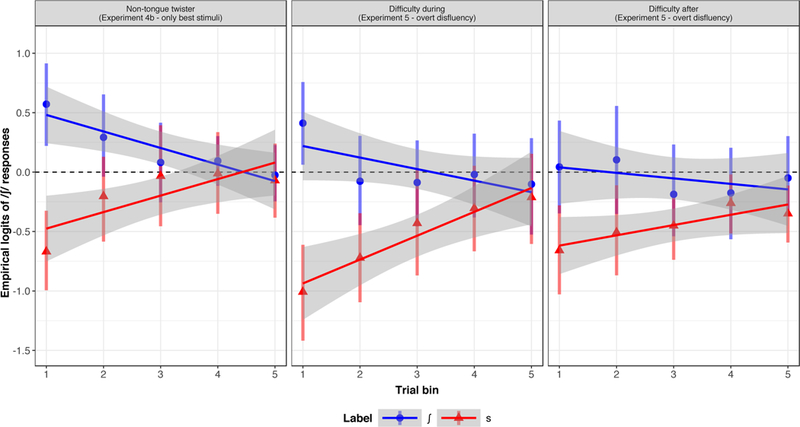

Figure 4.

Proportion of /∫/ responses as a function of exposure condition and Trial Bin (Experiment 1). Proportions of /∫/ responses were empirical logit transformed for ease of comparison with the model’s prediction. Points show empirical means for each trial bin. Solid lines show predictions of the model we use to obtain corrected estimates of the category boundary shift at the beginning of the test block. Error bars show 95% confidence intervals obtained via non-parametric bootstrap over the by-participant means. Over the course of testing, categorization responses in all exposure conditions move towards 0 empirical logits (i.e., 50/50 /s/ and /∫/ responses, dashed line).

Table 6.

Mixed logit regression predicting proportion of /∫/ responses from Label, Condition, and their interaction (Experiment 1). Coding: Label (sum coded: ∫-Label = 1 vs. S-Label = −1), Condition (Non-Tongue Twister Context = 1 vs. Tongue Twister Context = −1), Trial Bin (First bin = 0). Rows that are critical to our analysis are highlighted in grey.

| Predictors | Parameter Estimates |

Significance Test |

||

|---|---|---|---|---|

| Coef () | Std Err | z | p | |

| (Intercept) | −0.25 | 0.07 | −3.37 | <0.001 |

| Label (J vs. S) | 0.56 | 0.07 | 7.59 | <0.001 |

| Context (NonTT vs. TT) | −0.07 | 0.07 | −1.00 | 0.32 |

| Trial Bin (First bin = 0) | 0.07 | 0.02 | 3.63 | <0.001 |

| Label:Context | 0.01 | 0.07 | 0.19 | 0.85 |

| Label:TrialBin | −0.13 | 0.02 | −6.27 | <0.001 |

| Context:TrialBin | 0.01 | 0.02 | 0.56 | 0.57 |

| Label:Context:TrialBin | 0.01 | 0.02 | 0.27 | 0.79 |

We again found that participants in the J-Label condition provided more /∫/ responses than those in the S-Label condition ( = 0.56, z = 7.59, p < 0.001). That is, the true perceptual recalibration effect at the beginning of the test block (1.12 log-odds = * 2 since we used −1 vs. 1 sum-coding) is almost twice as large as the estimate one obtains from averaging across the entire test block (0.6 log-odds). This validates the need for the advanced analysis, which we continue to use throughout the remainder of the paper.

The total number of /∫/ responses tended to increase over trial bins ( = 0.07, z = 3.65, p < 0.001), and that this differed between Label conditions, in a way consistent with convergence towards 50/50: participants in the ∫-Label condition tended to provide fewer /∫/ responses in later trial bins, compared to those in the S-Label condition ( = −0.13, z = −6.27, p < 0.001). This behavior is clearly visible in Figure 4.2

Critically, the interaction between Label and Context was again non-significant (p = 0.85), suggesting that even in the first trial bin there was no evidence that the effect of perceptual recalibration differed depending on whether the shifted pronunciations were embedded in a tongue twister context or not (see Figure 4).

Discussion

In Experiment 1, we find that exposure to atypical pronunciations of /s/ or /∫/ from one talker leads participants to change how they categorize sounds on the /s/-/∫/ continuum. Specifically, participants who are exposed to words containing atypical sounds labeled as /∫/ then categorize more sounds as /∫/, leading to a shift in their categorization curve towards /s/. This replicates the results of previous perceptual recalibration studies, but in a novel multi-word phrase transcription paradigm. The size of the perceptual recalibration effect at the beginning of the test block was comparable to previous work. Specifically, Liu and Jaeger (2018) found a perceptual recalibration effect (the difference between the two Label conditions) of 1.65 log-odds for 10 critical tokens (Experiment 1) and a perceptual recalibration effect of a little under 1.0 log-odds for 6 critical tokens (Experiment 2).3 The present result of 1.12 log-odds for 8 critical tokens thus falls within the expected range. This provides initial validation of the present paradigm, as most theories of perceptual recalibration would predict the effect to increase with the number of critical tokens.

Our paradigm differs from the standard perceptual recalibration paradigm in that participants heard three times as many typical pronunciations as they heard shifted pro-nunciations (e.g. participants in the ∫-Label condition heard 24 typical /s/, and 8 shifted /∫/). This contrasts with previous experiments, where participants typically heard equal numbers of typical and shifted pronunciations.

One potential concern is that the increased number of typical pronunciations that participants heard might have affected how participants categorized sounds, and that this overrides any potential effect of causal attribution—and thus the hypothesized effect of tongue twisters on the adaptation to atypical pronunciations. For example, work on selective adaptation has found that repeated presentations of typical instances of one phoneme leads listeners to categorize fewer sounds as that same phoneme. This effect has variously been attributed to the fatigue of “linguistic feature detectors” or other phonetic assignment processes (Eimas & Corbit, 1973; Samuel, 1986), or a shrinking of the variance for the listener’s underlying distribution for that phonetic category or a change in the prior probability for a category (Kleinschmidt & Jaeger, 2016).

Two considerations ameliorate this concern. First, there are striking differences between the present paradigm and selective adaptation studies. For example, selective adaptation paradigms tend to repeat the typical stimulus many dozens of times (e.g., Samuel 1989; Vroomen et al. 2007). Second, we observe effect sizes that match what is expected under previous perceptual recalibration experiments. This would be rather unexpected, if the differences in paradigms had a large effect on our results.

Still, it is theoretically possible that the shift in categorization boundary in Experiment 1 is driven by the repeated typical sounds, rather than the shifted sounds. In that case, no effect of Context is expected (both context conditions contained equally many unshifted typical sounds, and Tongue Twister contexts are not expected to block the effect of exposure to typical pronunciations). We thus decided to conduct Experiment 2 to directly address the possibility that the shift in the categorization boundary in Experiment 1 was driven entirely by the repetition of unshifted phonemes. As we detail below, Experiment 2 also serves as a baseline to both Experiment 1 and the subsequent experiments.

Experiment 2

Experiment 2 tests whether the shift in the categorization boundary for the /s/ and /∫/ phonemes that we observed in Experiment 1 could be driven solely by the higher number of typical unshifted, compared to atypical shifted, tokens. To this end, we presented participants with only typical, unshifted instances of /s/ and /∫/ (interspersed with the same filler trials as in Experiment 1). These typical sounds were presented in the same Non-Tongue Twister Contexts used in Experiment 1, so that the only difference to Experiment 1 was that the atypical pronunciations were replaced with unshifted productions. In Experiment 2, participants in the ∫-Label group heard three times as many unshifted /s/ as unshifted /∫/, and participants in the S-Label group heard three times as many unshifted /∫/ as unshifted /s/.

If Experiment 2 finds the same shift in the categorization boundary as Experiment 1, this would constitute strong evidence that the effect observed in Experiment 1 is, in fact, not due to perceptual recalibration (since Experiment 2 does not contain any atypical pronunciations of /s/ or /∫/). However, if we fail to find a difference between the two Label conditions in Experiment 2 or if the difference is weaker than it is in Experiment 1, this would suggest that the effect from Experiment 1 is at least in part due to perceptual recalibration. This in turn would raise the question why the perceptual recalibration effect in Experiment 1 is not blocked by the presence of an incidental cause.

Method

Participants.

We recruited 86 participants on Amazon Mechanical Turk for a target of 40 participants in each of the two Label conditions after exclusions. 2 participants were excluded for not correctly identifying the speaker as female, 1 participant for not wearing headphones, and 3 for inverted categorization functions. As in Experiment 1, participants were paid $1 for this experiment, which took roughly 10 minutes ($6/hr).

Materials.

The stimuli used during the exposure block were identical to the stimuli from the Non-Tongue Twister Context of Experiment 1, except that we substituted the critical atypical pronunciation with the typical pronunciation of the same word. These typical pronunciations were the endpoints of the continuum used to create the atypical pronunciations that contained shifted /?s∫/. For example, in the Non-Tongue Twister Context condition of Experiment 1, participants would hear “holler tamper pa?s∫ive holler”, but in the current condition, participants would hear “holler tamper passive holler”. All other stimuli were identical. We thus refer to the current conditions as “Unshifted” conditions, and the Non-Tongue Twister Context conditions from Experiment 1 as “Shifted” conditions.

Procedure.

The procedure was identical to Experiment 1.

Results

We first analyze the results from the test block to assess whether participants in the S-Label condition differed in how they categorized sounds compared to participants in the ∫-Label condition. We next compare the difference between Label conditions in the current experiment (Unshifted condition) with the difference in Label conditions (perceptual recalibration effect) identified in the Non-Tongue Twister Context of Experiment 1 (Shifted condition). Taken together our results suggest that the effect in Experiment 1 is unlikely to be due solely to the higher number of typical pronunciations, and thus is likely to reflect perceptual recalibration.

The transcription accuracies for Experiment 2 and all subsequent experiments are summarized in Figure 2. With an overall accuracy of 89.8% (SD = 6.5%), transcriptions in Experiment 2 were similar to Experiment 1 (88.4%). For details, see supplementary information.

Test Block: Changes in the categorization boundary.

First, we assessed whether there was a difference in categorization between the two Label conditions. To do this, we conducted mixed logit regression, where we predicted categorization by Label condition (sum-coded: ∫-Label = 1 vs. S-Label = −1), Trial Bin (First bin = 0), and their interaction. This analysis is presented in Table 7.

Table 7.

Mixed logit regression predicting proportion of /∫/ responses from Label, Condition, and their interaction (Experiment 2). Coding: Label (sum coded: ∫-Label = 1 vs. S-Label = −1), Trial Bin (First bin = 0). Rows that are critical to our analysis are highlighted in grey.

| Predictors | Parameter Estimates |

Significance Test |

||

|---|---|---|---|---|

| Coef () | Std Err | z | p | |

| (Intercept) | 0.01 | 0.11 | 0.11 | 0.91 |

| Label (∫ vs. S) | 0.17 | 0.11 | 1.49 | 0.14 |

| TrialBin (First bin = 0) | 0.02 | 0.03 | 0.55 | 0.59 |

| Label:TrialBin | −0.05 | 0.03 | −1.7 | =0.09 |

We did not identify a significant effect of Label at the first Trial Bin ( = 0.17, z = 1.49, p = 0.14). Numerically, the effect trended in the same direction as the significant effect in Experiment 1, though it was much smaller (0.34 log-odds in Experiment 2, compared to 1.12 in log-odds Experiment 1). To more directly test whether the effect in Experiment 1 could have been caused simply by the higher proportion of typical pronunciations, we compared the Unshifted condition (Experiment 2) to the Shifted condition (Non-Tongue Twister condition of Experiment 1). These two conditions are identical with the exception that the critical words contained unshifted /s/ or /∫/ instead of the shifted /?s∫/. We conducted a mixed logit regression predicting categorization by Label condition (sum-coded: J-Label = 1 vs. S-Label = −1), Shift (sum-coded: Shifted = 1 vs. Unshifted = −1), Trial Bin (First bin = 0), and their interactions. The results are presented in Table 8 and visualized in Figure 5.

Table 8.

Mixed logit regression predicting proportion of /∫/ responses from Label, Condition, and their interaction (Experiments 1 and 2). Coding: Label (sum coded: ∫-Label = 1 vs. S-Label = −1), Shift condition (sum-coded: Shifted critical pronunciation (Experiment 1) = 1 vs. Unshifted pronunciations (Experiment 2) = −1), Trial Bin (First bin = 0). Rows that are critical to our analysis are highlighted in grey.

| Predictors | Parameter Estimates |

Significance Test |

||

|---|---|---|---|---|

| Coef () | Std Err | z | p | |

| (Intercept) | −0.16 | 0.08 | −1.98 | = 0.05 |

| Label (∫ vs. S) | 0.37 | 0.08 | 4.66 | <0.001 |

| Shift (Shifted vs. Unshifted) | −0.17 | 0.08 | −2.13 | <0.05 |

| TrialBin (First bin = 0) | 0.05 | 0.02 | 2.5 | <0.01 |

| Label:Shift | 0.21 | 0.08 | 2.59 | <0.01 |

| Label:TrialBin | −0.09 | 0.02 | −4.21 | <0.001 |

| Shift:TrialBin | 0.04 | 0.02 | 1.73 | =0.08 |

| Label:Shift:TrialBin | −0.04 | 0.02 | −1.84 | =0.07 |

Figure 5.

Empirical logits of /∫/ responses as a function of Trial Bin (Experiments 1 and 2). For further information, see caption of Figure 4. To facilitate comparison across experiments, the range of the y-axes is held constant here and in all other result plots.

Participants in the ∫-Label condition tended to categorize more sounds as /∫/ ( = 0.37, z = 4.67, p < 0.001). Critically, there was a significant interaction between Label and Shift = 0.21, z = 2.6, p < 0.01), and participants who heard shifted critical words categorized significantly fewer sounds as /∫/ than those who heard unshifted critical words ( = −0.17, z = −2.13, p < 0.05). Simple effect analysis confirmed that the Label condition had an effect in Experiment 1 ( = 0.58, z = 5.10, p < 0.0001) but not Experiment 2 ( = 0.17, z = 1.48, p > 0.14).

Discussion

The results of Experiment 2 suggest that it is unlikely that the effects of the Label condition in Experiment 1 originate solely in the larger proportion of unshifted, compared to shifted, pronunciations. This result is not unexpected given differences between the current experiment and paradigms used to study selective adaptation. Experiments on selective adaptation tend to repeat the typical pronunciation many dozens of times (e.g., Bowers, Kazanina & Andermane, 2016; Samuel, 1989, 1997; Vroomen et al., 2007). By contrast, in the current experiment, the repeated typical sounds totaled only 24 tokens. It would thus have been surprising to see large selective adaptation effects as driving the effects in Experiment 1. Experiment 2 confirmed this.

Experiment 2 also serves as a baseline for Experiment 1 and all subsequent experiments we report: in Experiment 2, participants were exposed to only typical sounds and never heard shifted sounds. A comparison of the left panel in Figure 5 (Experiment 2) to the right panel (the Non-Tongue Twister condition in Experiment 1) suggest that the perceptual recalibration effect is driven by only the S-Label condition. This was confirmed by a simple effect analysis comparing the two conditions: participants who were exposed to shifted S-Label words in Experiment 1 identified significantly fewer sounds as /∫/ than those who had been exposed to unshifted S-Label words in Experiment 2 ( = −0.38, z = −3.23, p < 0.01); in contrast, there was no difference between Experiments 1 and 2 for participants in the ∫-Label condition (p = 0.82). The same asymmetry was found when comparing Experiment 1 to an alternative baseline experiment (reported as Experiment 2b in the supplementary information). In the alternative baseline experiment participants were exposed only to filler phrases—i.e., the complete absence of any /s/ or /∫/ during exposure—and then measured category shifts during the same test phase as in Experiments 1-2. The categorization boundary observed in that experiment was identical to that observed in Experiment 2.

This asymmetry differs from previous experiments in which we identified perceptual recalibration away from the baseline for both /s/ and /∫/ (Liu and Jaeger, 2018). Similar asymmetries have, however, been observed in other work (e.g., Drouin, Theodore & Myers 2016; Zhang & Samuel 2014). Indeed, which of two sound categories elicits perceptual recalibration can differ between experiments (for review, see Samuel, 2016, p. 111), possibly due to stimulus-specific properties and, in particular, the placement of the test continuum relative to the acoustic properties of exposure tokens (for evidence and discussion, see Drouin et al., 2016).

Finally, Experiment 2 further ameliorates concerns that Experiment 1 may suffer from lack of power to detect effects of tongue twister contexts. Our power simulations (see Appendix) found more than 95% power to detect the effect of Label and more than 80% power to detect blocking of those effects. Experiment 2 shows that we can indeed detect the absence or blocking of a perceptual recalibration effect, compared to Experiment 1.

Experiments 3a and 3b

One possibility for why the tongue twister context did not block adaptation in Experiment 1 is that the tongue twister context we provided was not a sufficiently plausible cause of the atypical pronunciations for participants. If participants did not view the tongue twister context to cause production difficulties, in the way a real tongue twister would, then it is not surprising that we do not find blocking of adaptation when shifted pronunciations are presented in this context.

In the current experiment, we address this possibility in two ways. In Experiment 3a, we increase the plausibility that our tongue twisters would be viewed as tongue twisters, as intended. Production experiments have found an increased incidence of errors when speech rate is increased (MacKay, 1982). To increase the plausibility that our tongue twisters would be viewed as likely to have caused the atypical pronunciations, we increase the speech rate of our stimuli. Additionally, we provide participants in the Tongue Twister Context with explicit information stating that they will hear tongue twisters that may have been difficult for the talker to produce. Explicit instructions of this type have sometimes been found to facilitate attribution to alternative causes, such as intended here (e.g., Arnold et al., 2007, discussed below). In Experiment 3b, we provide participants with an alternative (non-tongue twister) cause for the atypical pronunciations. We inform participants that the talker is intoxicated. These experiments taken together allow us to assess whether inferences about causes during speech perception may be influenced by explicit instructions.

Experiment 3a

Method

Participants.

We recruited 177 participants on Amazon Mechanical Turk to achieve a target of 40 participants in each of four conditions (S-Label/∫-Label x Tongue Twister/Non-Tongue Twister). 7 participants were excluded for not correctly identifying the speaker as female and 10 participants for not wearing headphones (9.6% exclusion rate). Participants were paid $1 for this experiment, which took roughly 10 minutes ($6/hr).

Materials and Procedure.

For this experiment, we used the exact stimuli from Experiment 1. We increased the tempo of the stimuli by 23%, the maximum speed-up at which the stimuli still sounded natural. We used the free software Audacity (https://www.audacityteam.org/), so that the speed of the stimuli changed, but the pitch and formants remained unchanged. Since static spectral cues are highly predictive of the /s/ vs. /∫/ contrast (e.g., McMurray et al., 2012, Table 3), this procedure is unlikely to change the perceived shift of our exposure tokens. Since these cues are duration invariant, we also do not expect that the increase in speech rate during exposure affects the perception of the test stimuli (which had the same speech rate as in Experiments 1 and 2).

The procedure of Experiment 3a was identical to that of Experiment 1, except that we added an additional prompt for participants in the Tongue Twister Context condition. This prompt emphasized the tongue twister as a plausible cause for the atypical pronunciations. We did so because Experiment 1 had not found an effect of the Tongue Twister Context on blocking the perceptual recalibration effect. Participants in the Tongue Twister Context were shown the following prompt:

“A number of the phrases that the speaker was asked to say are difficult tongue twisters. You might notice that the speaker occasionally mispronounces certain words slightly because of this. Do not worry about the mispronunciations. Just transcribe the words as best as you can.”

Participants in the Non-Tongue Twister Context were not shown the prompt. The rest of the procedure was identical to that of Experiment 1. Transcription accuracy (84.8%, SD = 10.1%) was somewhat lower than in Experiment 1, likely because of the increased speech rate in Experiment 3a.

Results

Test Block: Changes in the categorization boundary.

As in Experiments 1 and 2, we used mixed logit regression to predict /∫/ responses from Label (sum-coded: ∫-Label = 1 vs. S-Label = −1), Context (sum-coded: Non-Tongue Twister = 1 vs. Tongue Twister = −1), Trial Bin (coded continuously with the first trial bin as 0), and their interactions (Table 9). For Experiment 3a, the analysis converged with by-participant intercepts and slopes for Trial Bin.

Table 9.

Mixed logit regression predicting proportion of /∫/ responses from Label, Condition, and their interaction (Experiment 3a). Coding: Label (sum coded: ∫-Label = 1 vs. S-Label = −1), Context (NonTT = 1 vs. TT = −1), Trial Bin (First bin = 0). Rows that are critical to our analysis are highlighted in grey.

| Predictors | Parameter Estimates |

Significance Test |

||

|---|---|---|---|---|

| Coef () | Std Err | z | p | |

| (Intercept) | −0.47 | 0.13 | −3.69 | <0.001 |

| Label (∫ vs. S) | 0.63 | 0.13 | 4.92 | <0.001 |

| Context (NonTT vs. TT) | 0.08 | 0.13 | 0.61 | 0.54 |

| TrialBin (First bin = 0) | 0.08 | 0.03 | 3.1 | <0.002 |

| Label:Context | 0.08 | 0.13 | 0.67 | 0.50 |

| Label:TrialBin | −0.15 | 0.03 | −5.79 | <0.001 |

| Context:TrialBin | −0.04 | 0.03 | −1.52 | =0.13 |

| Label:Context:TrialBin | −0.01 | 0.03 | −0.40 | 0.69 |

At the beginning of the test block, participants in the J-Label condition provided more /∫/ responses than those in the S-Label condition ( = 0.63, z = 4.92, p < 0.001). Furthermore, the total number of /∫/ responses tended to increase over trial bins ( = 0.08, z = 3.1, p < 0.002), and that this differed between Label conditions, in a way consistent with convergence towards 50/50: participants in the ∫-Label condition tended to provide fewer /∫/ responses in later trials bins, compared to those in the S-Label condition ( = −0.15, z = −5.79, p < 0.001). Critically, however, we did not identify a significant effect of Context (p = 0.54) or interaction between Context and Label (p = 0.50). This suggests that the categorization of stimuli during the test block was not strongly affected by whether the shifted stimuli were presented in a Tongue Twister Context or Non-Tongue Twister Context. It is worth pointing out though that the interaction is numerically in the predicted direction. This is also visible in Figure 6.

Figure 6.

Empirical logits of /∫/ responses as a function of Trial Bin (Experiment 3a). For further information, see caption of Figure 4.

Additional planned analyses reported in the supplementary information found that i) the magnitude of perceptual recalibration in Experiment 3a was identical to that of Experiment 1 and ii) Experiment 3a again only finds perceptual recalibration in the S-Label condition (compared to the unshifted baseline from Experiment 2). These results also suggest that the increased speech rate did not affect perceptual recalibration.

Experiment 3b

In Experiment 3b, we further explore whether explicitly provided information about the talker can affect adaptation. We attempt to block perceptual recalibration by providing participants with an alternate reason why the talker might sound atypical. Specifically, we test whether instructions that talker in the experiment was intoxicated during the exposure block, but not during the test block, reduce or block the perceptual recalibration effect. We chose to use this alternate cause for two reasons. First, when intoxicated, speech errors become more common (Chin & Pisoni, 1997; Cutler & Henton, 2004). Second, the specific significant shift that we used to observe perceptual recalibration (/s/ shifting towards /∫/) has been documented as one effect of intoxication on speech production (Chin & Pisoni, 1997; Heigl, 2018). Both of these factors combined make it plausible that intoxication may provide listeners with a plausible cause for the atypical pronunciation that they hear.

Method

Participants.