Abstract

Data augmentation, a technique in which a training set is expanded with class-preserving transformations, is ubiquitous in modern machine learning pipelines. In this paper, we seek to establish a theoretical framework for understanding data augmentation. We approach this from two directions: First, we provide a general model of augmentation as a Markov process, and show that kernels appear naturally with respect to this model, even when we do not employ kernel classification. Next, we analyze more directly the effect of augmentation on kernel classifiers, showing that data augmentation can be approximated by first-order feature averaging and second-order variance regularization components. These frameworks both serve to illustrate the ways in which data augmentation affects the downstream learning model, and the resulting analyses provide novel connections between prior work in invariant kernels, tangent propagation, and robust optimization. Finally, we provide several proof-of-concept applications showing that our theory can be useful for accelerating machine learning workflows, such as reducing the amount of computation needed to train using augmented data, and predicting the utility of a transformation prior to training.

1. Introduction

The process of augmenting a training dataset with synthetic examples has become a critical step in modern machine learning pipelines. The aim of data augmentation is to artificially create new training data by applying transformations, such as rotations or crops for images, to input data while preserving the class labels. This practice has many potential benefits: Data augmentation can encode prior knowledge about data or task-specific invariances, act as a regularizer to make the resulting model more robust, and provide resources to data-hungry deep learning models. As a testament to its growing importance, the technique has been used to achieve nearly all state-of-the-art results in image recognition [4, 11, 13, 33], and is becoming a staple in many other areas as well [40, 22]. Learning augmentation policies alone can also boost state-of-the-art performance in image classification tasks [31, 7].

Despite its ubiquity and importance to the learning process, data augmentation is typically performed in an ad-hoc manner with little understanding of the underlying theoretical principles. In the field of deep learning, for example, data augmentation is commonly understood to act as a regularizer by increasing the number of data points and constraining the model [12, 42]. However, even for simpler models, it is not well-understood how training on augmented data affects the learning process, the parameters, and the decision surface of the resulting model. This is exacerbated by the fact that data augmentation is performed in diverse ways in modern machine learning pipelines, for different tasks and domains, thus precluding a general model of transformation. Our results show that regularization is only part of the story.

In this paper, we aim to develop a theoretical understanding of data augmentation. First, in Section 3, we analyze data augmentation as a Markov process, in which augmentation is performed via a random sequence of transformations. This formulation closely matches how augmentation is often applied in practice. Surprisingly, we show that performing k-nearest neighbors with this model asymptotically results in a kernel classifier, where the kernel is a function of the base augmentations. These results demonstrate that kernels appear naturally with respect to data augmentation, regardless of the base model, and illustrate the effect of augmentation on the learned representation of the original data.

Motivated by the connection between data augmentation and kernels, in Section 4 we show that a kernel classifier on augmented data approximately decomposes into two components: (i) an averaged version of the transformed features, and (ii) a data-dependent variance regularization term. This suggests a more nuanced explanation of data augmentation—namely, that it improves generalization both by inducing invariance and by reducing model complexity. We validate the quality of our approximation empirically, and draw connections to other generalization-improving techniques, including recent work in invariant learning [43, 24, 30] and robust optimization [28].

Finally, in Section 5, to illustrate the utility of our theoretical understanding of augmentation, we explore promising practical applications, including: (i) developing a diagnostic to determine, prior to training, the importance of an augmentation; (ii) reducing training costs for kernel methods by allowing for augmentations to be applied directly to features—rather than the raw data—via a random Fourier features approach; and (iii) suggesting a heuristic for training neural networks to reduce computation while realizing most of the accuracy gain from augmentation.

2. Related Work

Data augmentation has long played an important role in machine learning. For many years it has been used, for example, in the form of jittering and virtual examples in the neural network and kernel methods literatures [35, 34, 8]. These methods aim to augment or modify the raw training data so that the learned model will be invariant to known transformations or perturbations. There has also been significant work in incorporating invariance directly into the model or training procedure, rather than by expanding the training set. One illustrative example is that of tangent propagation for neural networks [36, 37], which proposes a regularization penalty to enforce local invariance, and has been extended in several recent works [32, 9, 43]. However, while efforts have been made that loosely connect traditional data augmentation with these methods [21, 43], there has not been a rigorous study on how these sets of procedures relate in the context of modern models and transformations.

In this work, we make explicit the connection between augmentation and modifications to the model, and show that prior work on tangent propagation can be derived as a special case of our more general theoretical framework (Section 5). Moreover, we draw connections to recent work on invariant learning [24, 30] and robust optimization [28], illustrating that data augmentation not only affects the model by increasing invariance to specific transformations, but also by reducing the variance of the estimator. These analyses lead to an important insight into how invariance can be most effectively applied for kernel methods and deep learning architectures (Section 5), which we show can be used to reduce training computation and diagnose the effectiveness of various transformations.

Prior theory also does not capture the complex process by which data augmentation is often applied. For example, previous work [1, 3] shows that adding noise to input data has the effect of regularizing the model, but these effects have yet to be explored for more commonly applied complex transformations, and it is not well understood how the inductive bias embedded in complex transformations manifests itself in the invariance of the model (addressed here in Section 4). A common recipe for achieving state-of-the-art accuracy in image classification is to apply a sequence of more complex transformations such as crops, flips, or local affine transformations to the training data, with parameters drawn randomly from hand-tuned ranges [4, 10]. Similar strategies have also been employed in applications of classification for audio [40] and text [22]. In Section 3, we analyze a motivating model reaffirming the connection between augmentation and kernel methods, even in the setting of complex and composed transformations.

Finally, while data augmentation has been well-studied in the kernels literature [2, 34, 25], it is typically explored in the context of simple geometrical invariances with closed forms. For example, van der Wilk et al. [41] use Gaussian processes to learn these invariances from data by maximizing the marginal likelihood. Further, the connection is often approached in the opposite direction—by looking for kernels that satisfy certain invariance properties [15, 38]. We instead approach the connection directly via data augmentation, and show that even complicated augmentation procedures akin to those used in practice can be represented as a kernel method.

3. Data Augmentation as a Kernel

To begin our study of data augmentation, we propose and investigate a model of augmentation as a Markov process, inspired by the general manner in which the process is applied—via the composition of multiple different types of transformations. Surprisingly, we show that this augmentation model combined with a k-nearest neighbor (k-NN) classifier is asymptotically equivalent to a kernel classifier, where the kernel is a function of the base transformations. While the technical details of the section can be skipped on a first reading, the central message is that kernels appear naturally in relation to data augmentation, even when we do not start with a kernel classifier. This provides additional motivation to study kernel classifiers trained on augmented data, as in Section 4.

Markov Chain Augmentation Process.

In data augmentation, the aim is to perform class-preserving transformations to the original training data to improve generalization. As a concrete example, a classifier that correctly predicts an image of the number ‘1’ should be able to predict this number whether or not the image is slightly rotated, translated, or blurred. It is therefore common to pick some number of augmentations (e.g., for images: rotation, zoom, blur, flip, etc.), and to create synthetic examples by taking an original data point and applying a sequence of these augmentations. To model this process, we consider the following procedure: given a data point, we pick augmentations from a set at random, applying them one after the other. To avoid deviating too far, with some probability we discard the point and start over from a random point in the original dataset. We formalize this below.

Definition 1 (Markov chain augmentation model). Given a dataset of $n$ examples $z_i = (x_i, y_i) \in \Omega$, we augment the dataset via augmentation matrices $A_1, A_2, \ldots, A_m$, for $A_j : \Omega \to \Omega$, which are stochastic transition matrices over a finite state space $\Omega := \mathcal{X} \times \mathcal{Y}$ of possible labeled (augmented) examples. We model this via a discrete time Markov chain with the transitions:

With probability proportional to βj, a transition occurs via augmentation matrix Aj.

With probability proportional to γi, a retraction to the training set occurs, and the state resets to zi.

For example, the probability of retracting to training example z1 is γ1/(γ1 + ⋯ + γn + β1 + ⋯ + βm). The augmentation process starts from any point and follows Definition 1 for an arbitrary amount of time. The retraction steps intuitively keep the final distribution grounded closer to the original training points.

From Definition 1, by conditioning on which transition is chosen, it is evident that the entire process is equivalent to a Markov chain whose transition matrix is the weighted average of the base transitions. Note that the transition matrices Aj do not need to be materialized but are implicit from the description of the augmentation. A concrete example is given in Section B.2. Without loss of generality, we let all rates βj, γi be normalized with $\sum_{i=1}^n \gamma_i = 1$. Let $\{e_\omega\}_{\omega \in \Omega}$ be the standard basis of $\mathbb{R}^{\Omega}$, and let $e_{z_i}$ be the basis element corresponding to $z_i$. The resulting transition matrix and stationary distribution, which describes the long-run distribution of the augmented dataset, are given below; proofs and additional details are provided in Appendix A.

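Proposition 1. The described augmentation process is a Markov chain with transition matrix:

$$A = \frac{1}{1 + \sum_{j=1}^m \beta_j} \left( \mathbf{1}\rho^\top + \sum_{j=1}^m \beta_j A_j \right), \qquad \rho := \sum_{i=1}^n \gamma_i e_{z_i},$$

where $\mathbf{1}$ is the all-ones vector and $\rho$ is the original data distribution.

Lemma 1 (Stationary distribution). The stationary distribution is given by:

$$\pi^\top = \rho^\top \left( \Big(1 + \sum_{j=1}^m \beta_j\Big) I - \sum_{j=1}^m \beta_j A_j \right)^{-1}. \tag{1}$$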

Lemma 1 agrees intuitively with the augmentation process: When all βj ≈ 0 (i.e., low rate of augmentation), Lemma 1 implies that the stationary distribution π is close to ρ, the original data distribution. As βj increases, the stationary distribution becomes increasingly distorted by the augmentations.
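As a numerical sanity check of Proposition 1 and Lemma 1, the following minimal NumPy sketch builds the transition matrix for a toy state space and compares power iteration with the closed form (1). The six-state space, the random row-stochastic augmentation matrices, and the helper names (`augmentation_chain`, `stationary_closed_form`, `random_stochastic`) are hypothetical illustrations, not part of the model.

```python
import numpy as np

def augmentation_chain(rho, betas, aug_mats):
    """Transition matrix of Proposition 1, assuming the gamma_i sum to 1."""
    n_states = rho.shape[0]
    total_rate = 1.0 + sum(betas)
    A = np.outer(np.ones(n_states), rho)       # retraction: jump to z_i w.p. gamma_i
    for beta, A_j in zip(betas, aug_mats):
        A = A + beta * A_j                     # augmentation via A_j at rate beta_j
    return A / total_rate

def stationary_closed_form(rho, betas, aug_mats):
    """Lemma 1: pi^T = rho^T ((1 + sum_j beta_j) I - sum_j beta_j A_j)^{-1}."""
    n_states = rho.shape[0]
    M = (1.0 + sum(betas)) * np.eye(n_states)
    for beta, A_j in zip(betas, aug_mats):
        M = M - beta * A_j
    return np.linalg.solve(M.T, rho)           # pi^T M = rho^T  <=>  M^T pi = rho

def random_stochastic(n_states, rng):
    """Hypothetical augmentation matrix: random row-stochastic transitions."""
    P = rng.random((n_states, n_states))
    return P / P.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
n_states = 6
rho = np.zeros(n_states)
rho[[0, 3]] = [0.5, 0.5]                       # two training examples, gamma = (0.5, 0.5)
aug_mats = [random_stochastic(n_states, rng) for _ in range(2)]
betas = [0.3, 0.7]

A = augmentation_chain(rho, betas, aug_mats)
pi_power = np.full(n_states, 1.0 / n_states)
for _ in range(500):                           # power iteration: pi^T <- pi^T A
    pi_power = pi_power @ A
pi_closed = stationary_closed_form(rho, betas, aug_mats)

# With a tiny augmentation rate, pi stays close to the data distribution rho.
pi_small = stationary_closed_form(rho, [1e-6, 1e-6], aug_mats)
```

Shrinking the βj toward zero pulls π onto ρ, matching the intuition above; the retraction term also makes the power iteration a contraction, so it converges quickly.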

Classification Yields a Kernel.

Using our proposed model of augmentation, we can show that classifying an unseen example using augmented data results in a kernel classifier. In doing so, we can observe the effect that augmentation has on the learned feature representation of the original data. We discuss several additional uses and extensions of the result itself in Appendix A.1.

Theorem 1. Consider running the Markov chain augmentation process in Definition 1 and classifying an unseen example x ∈ X using an asymptotically Bayes-optimal classifier, such as k-nearest neighbors. Suppose that the Ai are time-reversible with equal stationary distributions. Then in the limit as time T → ∞ and k → ∞, this classification has the following form:

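$$\hat{y}(x) = \arg\max_{y \in \mathcal{Y}} \sum_{i=1}^n \alpha_{z_i} K_{z_i,(x,y)}, \tag{2}$$

where $\alpha \in \mathbb{R}^{\Omega}$ is supported only on the dataset z1,…,zn, and $K \in \mathbb{R}^{\Omega \times \Omega}$ is a kernel matrix (i.e., K is symmetric positive definite and non-negative) depending only on all the augmentations Aj,βj.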

Theorem 1 follows from formulating the stationary distribution (Lemma 1) as π = α⊤K for a kernel matrix K and $\alpha \in \mathbb{R}^{\Omega}$. Noting that k-NN asymptotically acts as a Bayes classifier, selecting the most probable label according to this stationary distribution, leads to (2). In Appendix A, we include a closed form for α and K along with the proof, give details and examples, and elaborate on the strength of the assumptions.
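The factorization behind Theorem 1 can be checked on a small example. The sketch below assumes label-preserving augmentations that are symmetric lazy random walks on a cycle, so they are time-reversible with a shared uniform stationary distribution μ. Writing D = diag(μ) and M = (1 + Σj βj)I − Σj βj Aj, the choice K = D M⁻¹ and α = D⁻¹ρ gives π⊤ = α⊤K with K symmetric, positive definite, and non-negative; this is our own derivation for the toy case and not necessarily the closed form given in Appendix A. The state encoding and helper names are hypothetical.

```python
import numpy as np

n_x, n_y = 8, 2                                  # toy state space Omega = X x Y (hypothetical)
N = n_x * n_y

def state(x, y):
    """Index of the labeled example (x, y) in Omega."""
    return y * n_x + x

def lazy_cycle_walk(n, step):
    """Symmetric lazy random walk on a cycle; reversible w.r.t. the uniform distribution."""
    shift = np.roll(np.eye(n), step, axis=1)
    return 0.5 * np.eye(n) + 0.25 * (shift + shift.T)

# Label-preserving augmentations: move x along the cycle, never change y.
aug_mats = [np.kron(np.eye(n_y), lazy_cycle_walk(n_x, step)) for step in (1, 2)]
betas = [0.5, 0.5]

rho = np.zeros(N)
rho[[state(0, 0), state(5, 1)]] = [0.5, 0.5]     # training set z_1 = (0, 0), z_2 = (5, 1)

M = (1.0 + sum(betas)) * np.eye(N) - sum(b * A_j for b, A_j in zip(betas, aug_mats))
pi = np.linalg.solve(M.T, rho)                   # stationary distribution (Lemma 1)

mu = np.full(N, 1.0 / N)                         # shared stationary distribution of the A_j
K = np.diag(mu) @ np.linalg.inv(M)               # our candidate kernel K = D M^{-1}
alpha = rho / mu                                 # alpha = D^{-1} rho, zero off the dataset

def classify(x):
    """Bayes rule on pi: argmax_y pi(x, y) = argmax_y (alpha^T K)_(x, y)."""
    return int(np.argmax([pi[state(x, y)] for y in range(n_y)]))
```

Positive definiteness follows because the eigenvalues of M are at least 1 in this symmetric case, and non-negativity because M⁻¹ is a convergent Neumann series of non-negative matrices.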

Takeaways.

This result has two important implications: First, kernels appear naturally in relation to complex forms of augmentation, even when we do not begin with a kernel classifier. This underscores the connection between augmentation and kernels even with complicated compositional models, and also serves as motivation for our focused study on kernel classifiers in Section 4. Second, and more generally, data augmentation—a process that produces synthetic training examples from the raw data—can be understood more directly based on its effect on downstream components in the learning process, such as the features of the original data and the resulting learned model. We make this link more explicit in Section 4, and show how to exploit it in practice in Section 5.

4. Effects of Augmentation: Invariance and Regularization

In this section we build on the connection between kernels and augmentation in Section 3, exploring directly the effect of augmentation on a kernel classifier. It is commonly understood that data augmentation can be seen as a regularizer, in that it reduces generalization error but not necessarily training error [12, 42]. We make this more precise, showing that data augmentation has two specific effects: (i) increasing the invariance by averaging the features of augmented data points, and (ii) penalizing model complexity via a regularization term based on the variance of the augmented forms. These are two approaches that have been explicitly applied to get more robust performance in machine learning, though outside of the context of data augmentation. We demonstrate connections to prior work in our derivation of the feature averaging (Section 4.1) and variance regularization (Section 4.2) terms. We also validate our theory empirically (Section 4.3), and in Section 5, show the practical utility of our analysis to both kernel and deep learning pipelines.

General Augmentation Process.

To illustrate the effects of augmentation, we explore it in conjunction with a general kernel classifier. In particular, suppose that we have an original kernel K with a finite-dimensional feature map $\phi : \mathbb{R}^d \to \mathbb{R}^D$, and we aim to minimize some smooth convex loss $l : \mathbb{R} \times \mathbb{R} \to \mathbb{R}$ with parameter $w \in \mathbb{R}^D$ over a dataset (x1,y1),…,(xn,yn). The original objective function to minimize is $f(w) = \frac{1}{n}\sum_{i=1}^n l(w^\top \phi(x_i); y_i)$, with two common losses being logistic l(ŷ;y) = log(1 + exp(−yŷ)) and quadratic l(ŷ;y) = (ŷ − y)².

Now, suppose that we first augment the dataset using an augmentation kernel T. Whereas the augmentation kernel in Section 3 had a specific form based on the stationary distribution of the proposed Markov process, here we make this more general, simply requiring that for each data point xi, T(xi) describes the distribution over data points into which xi can be transformed. The new objective function becomes:

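$$g(w) = \frac{1}{n}\sum_{i=1}^n \mathbb{E}_{t_i \sim T(x_i)}\left[ l(w^\top \phi(t_i); y_i) \right]. \tag{3}$$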

4.1. Data Augmentation as Feature Averaging

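We begin by showing that, to first order, objective (3) can be approximated by a term that computes the average augmented feature of each data point. In particular, suppose that the applied augmentations are “local” in the sense that they do not significantly modify the feature map ϕ. Using the first-order Taylor approximation, we can expand each term around any point ϕ0 that does not depend on ti:

$$\mathbb{E}_{t_i \sim T(x_i)}\left[ l(w^\top \phi(t_i); y_i) \right] \approx l(w^\top \phi_0; y_i) + l'(w^\top \phi_0; y_i)\, w^\top \left( \mathbb{E}_{t_i \sim T(x_i)}[\phi(t_i)] - \phi_0 \right).$$

Picking $\phi_0 = \mathbb{E}_{t_i \sim T(x_i)}[\phi(t_i)]$, the second term vanishes, yielding the first-order approximation:

$$g(w) \approx \hat{g}(w) := \frac{1}{n}\sum_{i=1}^n l\left( w^\top \mathbb{E}_{t_i \sim T(x_i)}[\phi(t_i)];\, y_i \right). \tag{4}$$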

This is exactly the objective of a linear model with a new feature map ψ(x) = Et~T(x) [ϕ(t)], i.e., the average feature of all the transformed versions of x. If we overload notation and use T(x,u) to denote the probability density of transforming x to u, this feature map corresponds to a new kernel:

$$\bar{K}(x, x') = \psi(x)^\top \psi(x') = \int_u \int_{u'} K(u, u')\, T(x, u)\, T(x', u')\, du\, du'.$$

That is, training a kernel linear classifier with a particular loss function plus data augmentation is equivalent, to first order, to training a linear classifier with the same loss on an augmented kernel $\bar{K}$, with feature map ψ(x) = Et~T(x) [ϕ(t)]. This feature map is exactly the embedding of the distribution of transformed points around x into the reproducing kernel Hilbert space [26, 30]. This means that the first-order effect of training on augmented data is equivalent to training a support measure machine [25], with the n input distributions corresponding to the n distributions of transformed points around x1,…,xn. The new kernel has the effect of increasing the invariance of the model, as averaging the features from transformed inputs that are not necessarily present in the original dataset makes the features less variable to transformation.
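The identity ψ(x)⊤ψ(x′) = E[K(u, u′)] behind the augmented kernel can be checked with any kernel that has an explicit finite-dimensional feature map. The sketch below assumes the quadratic polynomial kernel K(u, v) = (1 + u⊤v)² on ℝ², whose feature map is six-dimensional, and a hypothetical augmentation T that rotates a 2-D point by a uniformly random angle in [−15°, 15°]; T(x) is represented by Monte Carlo samples.

```python
import numpy as np

rng = np.random.default_rng(0)

def phi(u):
    """Explicit feature map of K(u, v) = (1 + u.v)^2 on R^2; u has shape (..., 2)."""
    u1, u2 = u[..., 0], u[..., 1]
    r2 = np.sqrt(2.0)
    return np.stack([np.ones_like(u1), r2 * u1, r2 * u2, u1**2, u2**2, r2 * u1 * u2], axis=-1)

def base_kernel(U, V):
    """Gram matrix K(u_a, v_b) for the rows of U and V."""
    return (1.0 + U @ V.T) ** 2

def sample_T(x, s):
    """s draws t ~ T(x): x rotated by an angle uniform in [-15, 15] degrees (assumed T)."""
    theta = np.deg2rad(rng.uniform(-15.0, 15.0, size=s))
    c, sn = np.cos(theta), np.sin(theta)
    return np.stack([c * x[0] - sn * x[1], sn * x[0] + c * x[1]], axis=-1)

def psi(samples):
    """Monte Carlo estimate of psi(x) = E_{t ~ T(x)}[phi(t)]."""
    return phi(samples).mean(axis=0)

x, x_prime = np.array([1.0, 0.0]), np.array([0.5, 0.5])
t_x, t_x_prime = sample_T(x, 200), sample_T(x_prime, 200)

k_bar_via_features = psi(t_x) @ psi(t_x_prime)          # psi(x)^T psi(x')
k_bar_via_kernel = base_kernel(t_x, t_x_prime).mean()   # average of K(u, u') over both sample sets
```

With the same samples on both sides the two estimates agree up to floating-point error, since the averaged Gram entry is a double average of base-kernel evaluations.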

By Jensen’s inequality, since the function l is convex, ĝ(w) ≤ g(w). In other words, if we solve the optimization problem that results from data augmentation, the resulting objective value using ĝ will be no larger. Further, if we assume that the loss function is strongly convex and strongly smooth, we can quantify how much the solution to the first-order approximation and the solution of the original problem with augmented data will differ (see Proposition 3 in the appendix). We validate the accuracy of this first-order approximation empirically in Section 4.3.
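Jensen's inequality also holds for the empirical version of the objectives, which makes it easy to check numerically. The minimal sketch below evaluates g(w) and ĝ(w) for the logistic loss, assuming y ∈ {−1, +1}; the features of the transformed points are synthetic stand-ins (base features plus small Gaussian perturbations), not features of real augmented images, and ψ(xi) is the sample mean of the s transformed features.

```python
import numpy as np

def logistic_loss(yhat, y):
    """log(1 + exp(-y * yhat)), computed stably."""
    return np.logaddexp(0.0, -y * yhat)

def augmented_objective(w, feats, y):
    """g(w): feats[i, j] = phi(t_ij) with t_ij ~ T(x_i); average over samples and points."""
    return logistic_loss(feats @ w, y[:, None]).mean()

def first_order_objective(w, feats, y):
    """hat g(w): loss evaluated at the averaged feature psi(x_i) = mean_j phi(t_ij)."""
    psi = feats.mean(axis=1)
    return logistic_loss(psi @ w, y).mean()

rng = np.random.default_rng(0)
n, s, D = 50, 32, 10
base = rng.normal(size=(n, 1, D))                 # stand-in for phi(x_i)
feats = base + 0.3 * rng.normal(size=(n, s, D))   # stand-in for phi(t_ij): local perturbations
y = rng.choice([-1.0, 1.0], size=n)
w = rng.normal(size=D)

g = augmented_objective(w, feats, y)
g_hat = first_order_objective(w, feats, y)
```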

4.2. Data Augmentation as Variance Regularization

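Next, we show that the second-order approximation of the objective on an augmented dataset is equivalent to variance regularization, making the classifier more robust. We can get an exact expression for the error by considering the second-order term in the Taylor expansion, with ζi denoting the remainder function from Taylor’s theorem:

$$g(w) - \hat{g}(w) = \frac{1}{2n}\sum_{i=1}^n \mathbb{E}_{t_i \sim T(x_i)}\left[ l''\big(\zeta_i(w^\top \phi(t_i)); y_i\big)\, \big(w^\top \Delta_{t_i,x_i}\big)^2 \right],$$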

where Δti,xi := ϕ(ti) − ψ(xi) is the difference between the features of the transformed image ti and the averaged features ψ(xi). If (as is the case for logistic and linear regression) l″ is independent of y, the error term is independent of the labels. That is, the original augmented objective g is the modified objective ĝ plus some regularization that is a function of the training examples, but not the labels. In other words, data augmentation has the effect of performing data-dependent regularization.
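The label-independence of l″ claimed above can be verified directly. For the logistic loss with y ∈ {−1, +1} (the label set implied by log(1 + exp(−yŷ))), l″(ŷ; y) = σ(ŷ)σ(−ŷ) with σ the sigmoid, and for the quadratic loss l″ = 2. The sketch below compares central finite differences for both labels against these closed forms; the step size h is an arbitrary choice.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logistic_loss(yhat, y):
    """l(yhat; y) = log(1 + exp(-y * yhat)), computed stably."""
    return np.logaddexp(0.0, -y * yhat)

def quadratic_loss(yhat, y):
    """l(yhat; y) = (yhat - y)^2."""
    return (yhat - y) ** 2

def second_derivative(loss, yhat, y, h=1e-4):
    """Central finite-difference estimate of l''(yhat; y) with respect to yhat."""
    return (loss(yhat + h, y) - 2.0 * loss(yhat, y) + loss(yhat - h, y)) / h**2

yhat = np.linspace(-3.0, 3.0, 13)
logistic_pos = second_derivative(logistic_loss, yhat, 1.0)
logistic_neg = second_derivative(logistic_loss, yhat, -1.0)
logistic_closed = sigmoid(yhat) * sigmoid(-yhat)
quadratic_pos = second_derivative(quadratic_loss, yhat, 1.0)
quadratic_neg = second_derivative(quadratic_loss, yhat, -1.0)
```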

The second-order approximation to the objective is:

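$$\tilde{g}(w) := \hat{g}(w) + \frac{1}{2n}\sum_{i=1}^n \mathbb{E}_{t_i \sim T(x_i)}\left[ l''\big(w^\top \psi(x_i); y_i\big)\, \big(w^\top \Delta_{t_i,x_i}\big)^2 \right]. \tag{5}$$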

For a fixed w, this error term is exactly the variance of the output w⊤ϕ(X), where the true data X is assumed to be sampled from the empirical data points xi and their augmented versions specified by T(xi), weighted by l″(w⊤ψ(xi)). This data-dependent regularization term favors weight vectors that produce similar outputs w⊤ϕ(x) and w⊤ϕ(x′) if x′ is a transformed version of x.
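The following sketch compares the true augmented objective g(w), the first-order approximation ĝ(w), and the second-order approximation of Equation (5) for the logistic loss. It assumes synthetic features with small perturbations (a stand-in for local augmentations) and uses l″(m) = σ(m)σ(−m), which does not depend on the label; the helper `objectives` is a hypothetical name. For such local perturbations the variance term should account for almost all of the gap g(w) − ĝ(w).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logistic_loss(yhat, y):
    return np.logaddexp(0.0, -y * yhat)

def objectives(w, feats, y):
    """Return (g, g_hat, g_tilde); feats[i, j] holds phi(t_ij) for t_ij ~ T(x_i)."""
    psi = feats.mean(axis=1)                        # psi(x_i), shape (n, D)
    delta = feats - psi[:, None, :]                 # Delta_{t_ij, x_i}
    g = logistic_loss(feats @ w, y[:, None]).mean()
    g_hat = logistic_loss(psi @ w, y).mean()
    margin = psi @ w
    curvature = sigmoid(margin) * sigmoid(-margin)  # l''(w^T psi(x_i)), label-independent
    variance = ((delta @ w) ** 2).mean(axis=1)      # E_t[(w^T Delta)^2] per data point
    g_tilde = g_hat + 0.5 * (curvature * variance).mean()
    return g, g_hat, g_tilde

rng = np.random.default_rng(1)
n, s, D = 50, 64, 10
base = 0.3 * rng.normal(size=(n, 1, D))             # stand-in for phi(x_i)
feats = base + 0.05 * rng.normal(size=(n, s, D))    # stand-in for phi(t_ij): small, local changes
y = rng.choice([-1.0, 1.0], size=n)
w = rng.normal(size=D)

g, g_hat, g_tilde = objectives(w, feats, y)
```

Because ψ(xi) is the sample mean, the first-order term cancels exactly, so the remaining error of the second-order approximation comes only from third- and higher-order terms.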

4.3. Validation of Approximation

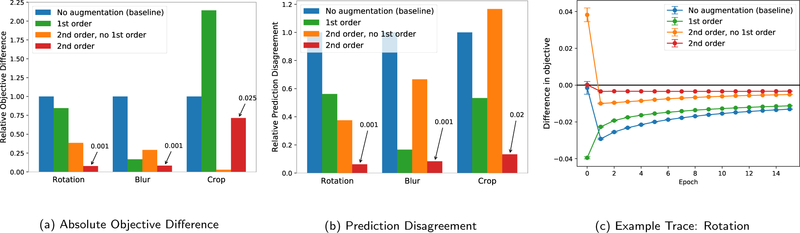

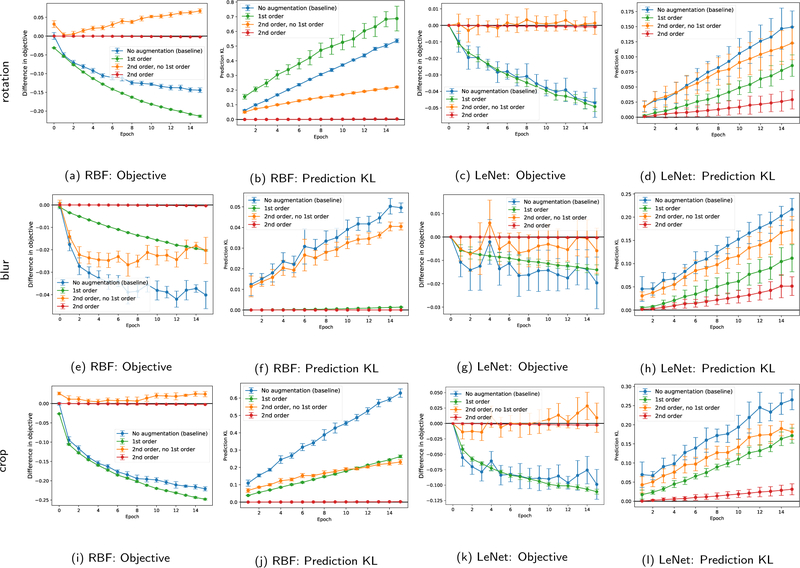

We empirically validate the first- and second-order approximations, ĝ(w) (Equation 4) and $\tilde{g}(w)$ (Equation 5), on MNIST [19] and CIFAR-10 [18] datasets, performing rotation, crop, or blur as augmentations, and using either an RBF kernel with random Fourier features [29] or LeNet (details in Appendix E.1) as a base model. Our results show that while both approximations perform reasonably well, the second-order approximation indeed results in a better approximation of the actual objective than the first-order approximation alone, validating the significance of the variance regularization component of data augmentation. In particular, in Figure 1a, we plot the difference after 10 epochs of SGD training between the actual objective function over augmented data g(w) and: (i) the first-order approximation ĝ(w), (ii) the second-order approximation $\tilde{g}(w)$, and (iii) the second-order approximation without the first-order term, i.e., the non-augmented objective plus only the variance regularization term of Equation (5). As a baseline, we plot these differences relative to the difference between the augmented objective and the non-augmented objective f(w) (i.e., the objective on the original images). In Figure 1b, to see how training on approximate objectives affects the predicted test values, we plot the prediction disagreement between the model trained on the true objective and the models trained on approximate objectives. Finally, Figure 1c shows that these approximations are relatively stable in terms of performance throughout the training process. For the CIFAR-10 dataset and the LeNet model (Appendix E), the results are quite similar, though we additionally observe that for LeNet the first-order approximation is very close to the model trained without augmentation, suggesting that the data-dependent regularization of the second-order term may be the dominating effect in models with learned feature maps.

Figure 1:

For the MNIST dataset, we validate that (a) the proposed approximate objectives ĝ(w) and $\tilde{g}(w)$ are close to the true objective g(w), and (b) training on the approximate objectives leads to similar predictions as training on the true objective. We plot the relative difference between the proposed approximations and the true augmented objective, in terms of difference in objective value (1a) and resulting test prediction disagreement (1b), using the non-augmented objective as a baseline. The 2nd-order approximation closely matches the true objective, particularly in terms of the resulting predictions. We observe that the accuracy of the approximations remains stable throughout training (1c). Full experiments are provided in Appendix E.

4.4. Connections to Prior Work

The approximations we have provided in this section unify several seemingly disparate works.

Invariant kernels.

The derived first-order approximation can capture prior work in invariant kernels as a special case, when the transformations of interest form a group and averaging features over the group induces invariance [24, 30]. The form of the averaged kernel can then be used to learn the invariances from data [41].

Robust optimization.

Our work also connects to robust optimization. For example, previous work [1, 3] shows that adding noise to input data has the effect of regularizing the model. Maurer & Pontil [23] bound the generalization error in terms of the empirical loss and the variance of the estimator. The second-order objective here adds a variance penalty term, thus targeting such a bound directly and automatically balancing bias (empirical loss) and variance with respect to the input distribution coming from the empirical data and their transformed versions (this is presumably close to the population distribution if the transforms capture the right invariance in the dataset). Though the resulting problem is generally non-convex, it can be approximated by a distributionally robust convex optimization problem, which can be efficiently solved by a stochastic procedure [28, 27].

Tangent propagation.

In Section 5.3, we show that when applied to neural networks, the described second-order objective can realize classical tangent propagation methods [36, 37, 43] as a special case. More precisely, the second-order only term (orange in Figure 1) is equivalent to the approximation described in Zhao et al. [43], proposed there in the context of regularizing CNNs. Our results indicate that considering both the first- and second-order terms, rather than just this second-order component, in fact results in a more accurate approximation of the true objective, e.g., providing a 6–9× reduction in the resulting test prediction disagreement (Figure 1b). This suggests an approach to improve classical tangent propagation methods, explored in Section 5.3.

5. Practical Connections: Accelerating Training With Data Augmentation

We now present several proof-of-concept applications to illustrate how the theoretical insights in Section 4 can be used to accelerate training with data augmentation. First, we propose a kernel similarity metric that can be used to quickly predict the utility of potential augmentations, helping to obviate the need for guess-and-check work. Next, we explore ways to reduce training computation over augmented data, including incorporating augmentation directly in the learned features with a random Fourier features approach, and applying our derived approximation at various layers of a deep network to reduce overall computation. We perform these experiments on common benchmark datasets, MNIST and CIFAR-10, as well as a real-world mammography tumor-classification dataset, DDSM.

5.1. A Fast Kernel Metric for Augmentation Selection

For new tasks and datasets, manually selecting, tuning, and composing augmentations is one of the most time-consuming processes in a machine learning pipeline, yet is critical to achieving state-of-the-art performance. Here we propose a kernel alignment metric, motivated by our theoretical framework, to quickly estimate if a transformation is likely to improve generalization performance without performing end-to-end training.

Kernel alignment metric.

Given a transformation T, and an original feature map ϕ(x), we can leverage our analysis in Section 4.1 to approximate the features for each data point x as ψ(x) = Et~T(x) [ϕ(t)]. Defining the feature kernel and the label kernel KY (y,y′) = 1{y = y′}, we can compute the kernel target alignment [6] between the feature kernel and the target kernel KY without training:

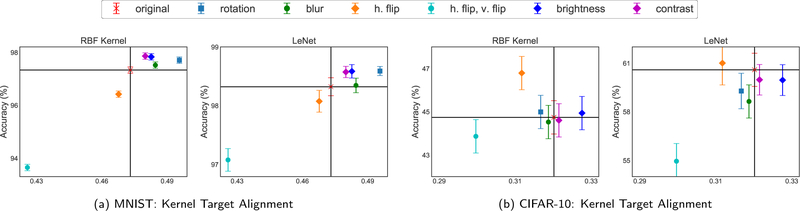

where This alignment statistic can be estimated quickly and accurately from subsamples of the data [6]. In our case, we use random Fourier features [29] as an approximate feature map ϕ(x) and sample t ~ T(x) to estimate the averaged feature ψ(x) = Et~T(x) [ϕ(t)]. The kernel target alignment measures the extent to which points in the same class have similar features. If this alignment is larger than that between the original feature kernel K(x,x′) = ϕ(x)⊤ϕ(x) and the target kernel, we postulate that the transformation T is likely to improve generalization. We validate this method on MNIST and CIFAR-10 with numerous transformations (rotation, blur, flip, brightness, and contrast). In Figure 2, we plot the accuracy of the kernel classifier and LeNet against the kernel target alignment. We see that there is indeed a correlation between kernel alignment and accuracy, as points tend to cluster in the upper right (higher alignment, higher accuracy) and lower left (lower alignment, lower accuracy) quadrants, indicating that this approach may be practically useful to detect the utility of a transformation prior to training.
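A minimal sketch of the alignment test follows, using random Fourier features for ϕ and Monte Carlo samples of T(x) for ψ. The toy task (two concentric rings, with the class given by the radius), the unit RBF bandwidth, the feature count, and the rotation range are all assumptions chosen for illustration; on real data ϕ would be computed from images and T would be the candidate augmentation.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 500
omegas = rng.normal(size=(2, D))                 # RBF kernel, unit bandwidth (assumed)
phases = rng.uniform(0.0, 2.0 * np.pi, size=D)

def rff(x):
    """Random Fourier features approximating an RBF kernel; x has shape (..., 2)."""
    return np.sqrt(2.0 / D) * np.cos(x @ omegas + phases)

def rotate(x, degrees):
    """Rotate 2-D points x of shape (..., 2) by `degrees` (broadcast against x[..., 0])."""
    theta = np.deg2rad(degrees)
    c, s = np.cos(theta), np.sin(theta)
    return np.stack([c * x[..., 0] - s * x[..., 1], s * x[..., 0] + c * x[..., 1]], axis=-1)

def alignment(features, labels):
    """Kernel target alignment between the Gram matrix of `features` and K_Y = 1{y = y'}."""
    K = features @ features.T
    K_y = (labels[:, None] == labels[None, :]).astype(float)
    return np.sum(K * K_y) / np.sqrt(np.sum(K * K) * np.sum(K_y * K_y))

# Hypothetical toy task: the class is the radius of a ring, so rotation preserves labels.
n, s = 200, 16
labels = rng.integers(0, 2, size=n)
angle = rng.uniform(0.0, 2.0 * np.pi, size=n)
x = (1.0 + labels)[:, None] * np.stack([np.cos(angle), np.sin(angle)], axis=-1)

phi_x = rff(x)                                                     # original features phi(x)
t = rotate(x[:, None, :], rng.uniform(-15.0, 15.0, size=(n, s)))   # s samples t ~ T(x) per point
psi_x = rff(t).mean(axis=1)                                        # averaged features psi(x)

align_orig, align_aug = alignment(phi_x, labels), alignment(psi_x, labels)
```

Following the criterion above, one would favor T when `align_aug` exceeds `align_orig`. Because ⟨K, K_Y⟩ is a sum of squared norms of per-class feature sums and the ratio is bounded by the Cauchy–Schwarz inequality, the alignment always lies in [0, 1].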

Figure 2:

Accuracy vs. kernel target alignment for RBF kernel and LeNet models, for MNIST (left) and CIFAR-10 (right) datasets. This alignment metric can be used to quickly select transformations (e.g., MNIST: rotation) that improve performance and avoid bad transformations (e.g., MNIST: flips).

5.2. Efficient Augmentation via Random Fourier Features

Beyond predicting the utility of an augmentation, we can also use our theory to reduce the computation required to perform augmentation on a kernel classifier—resulting, e.g., in a 4× speedup while achieving the same accuracy (MNIST, Table 1). When the transformations are affine (e.g., rotation, translation, scaling, shearing), we can perform transforms directly on the approximate kernel features, rather than the raw data points, thus gaining efficiency while maintaining accuracy.

Table 1:

Performance of augmented random Fourier features on MNIST, CIFAR-10, and DDSM

| Model | MNIST Acc. (%) | MNIST Time | CIFAR-10 Acc. (%) | CIFAR-10 Time | DDSM Acc. (%) | DDSM Time |
|---|---|---|---|---|---|---|
| No augmentation | 96.1 ± 0.1 | 34s | 39.4 ± 0.5 | 51s | 57.3 ± 6.7 | 27s |
| Traditional augmentation | 97.6 ± 0.2 | 220s | 45.3 ± 0.5 | 291s | 59.4 ± 3.2 | 61s |
| Augmented RFFs | 97.6 ± 0.1 | 54s | 45.2 ± 0.4 | 124s | 58.8 ± 5.1 | 34s |

Recall from Section 4 that the first-order approximation of the new feature map is given by ψ(x) = Et~T(x) [ϕ(t)], i.e., the average feature of all the transformed versions of x. Suppose that the transform is linear in x of the form Aαx, where the transformation is parameterized by α. For example, a rotation by angle α has the form T(x) = Rαx, where Rα is a d × d matrix that 2D-rotates the image x. Further, assume that the original kernel k(x,x′) is shift-invariant (say an RBF kernel), so that it can be approximated by random Fourier features [29]. Since ω⊤(Aαx) = (Aα⊤ω)⊤x, instead of transforming the data point x itself, we can transform the averaged feature map for x directly as:

$$\tilde{\psi}(x) = \frac{1}{s\sqrt{D}} \left[ \sum_{j=1}^{s} \exp\!\left(i\,(A_{\alpha_j}^\top \omega_1)^\top x\right),\; \ldots,\; \sum_{j=1}^{s} \exp\!\left(i\,(A_{\alpha_j}^\top \omega_D)^\top x\right) \right]^\top,$$

where ω1,…,ωD are sampled from the spectral distribution, and α1,…,αs are sampled from the distribution of the parameter α (e.g., uniformly from [−15, 15] if the transform is rotation by α degrees). This type of random feature map has been suggested by Raj et al. [30] in the context of kernels invariant to actions of a group. Our theoretical insights in Section 4 thus connect data augmentation to invariant kernels, allowing us to leverage the approximation techniques in this area. Our framework highlights additional ways to improve this procedure: if we view augmentation as a modification of the feature map, we naturally apply this feature map to test data points as well, implicitly reducing the variance in the features of different versions of the same data point. This variance regularization is the second goal of data augmentation discussed in Section 4.
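The key step is the identity ω⊤(Aαx) = (Aα⊤ω)⊤x: the transformed frequencies can be computed once and reused for every input. The sketch below uses complex exponential features for a unit-bandwidth RBF kernel and a 2-D rotation standing in for the d × d transform Aα (for images, Aα would act on pixel vectors); these choices and the helper names are assumptions. It checks that transforming the frequencies gives the same averaged feature as transforming the input.

```python
import numpy as np

rng = np.random.default_rng(0)
d, D, s = 2, 256, 16                                   # input dim, number of features, angle samples

omegas = rng.normal(size=(D, d))                       # omega_k from the RBF spectral density (assumed)
angles = np.deg2rad(rng.uniform(-15.0, 15.0, size=s))  # alpha_1, ..., alpha_s

def rotation(theta):
    """2-D rotation matrix, standing in for a general linear transform A_alpha."""
    return np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])

A = np.stack([rotation(theta) for theta in angles])    # shape (s, d, d)

# Transform the frequencies once: omegas_aug[j, k] = A_{alpha_j}^T omega_k, shape (s, D, d).
omegas_aug = np.einsum("sji,kj->ski", A, omegas)

def augmented_rff(x):
    """psi_tilde(x) = (1 / (s sqrt(D))) [sum_j exp(i (A_{alpha_j}^T omega_k)^T x)]_k."""
    return np.exp(1j * (omegas_aug @ x)).mean(axis=0) / np.sqrt(D)

def rff_of_transformed_inputs(x):
    """Reference: transform x itself, then average phi(A_{alpha_j} x)."""
    return np.exp(1j * ((A @ x) @ omegas.T)).mean(axis=0) / np.sqrt(D)

x, x_prime = rng.normal(size=d), rng.normal(size=d)
psi_x, psi_x_prime = augmented_rff(x), augmented_rff(x_prime)
k_bar_estimate = np.real(np.vdot(psi_x, psi_x_prime))  # estimate of the averaged kernel value
```

Since `omegas_aug` does not depend on the data, the same feature map can be applied to both training and test points, in line with the suggestion above of using the augmented feature map at test time as well.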

We validate this approach on standard image datasets MNIST and CIFAR-10, along with a real-world mammography tumor-classification dataset called Digital Database for Screening Mammography (DDSM) [17, 5, 20]. DDSM comprises 1506 labeled mammograms, to be classified as benign versus malignant tumors. In Table 1, we compare: (i) a baseline model trained on non-augmented data, (ii) a model trained on the true augmented objective, and (iii) a model that uses augmented random Fourier features. We augment via rotation between −15 and 15 degrees. All models are RBF kernel classifiers with 10,000 random Fourier features, and we report the mean accuracy and standard deviation over 10 trials. To make the problem more challenging, we also randomly rotate the test data points. The results show that augmented random Fourier features can retain 70–100% of the accuracy boost of data augmentation, with a 1.8–4× reduction in training time.

5.3. Intermediate-Layer Feature Averaging for Deep Learning

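Finally, while our theory does not hold exactly given the non-convexity of the objective, we show that our theoretical framework also suggests ways in which augmentation can be efficiently applied in deep learning pipelines. In particular, let the first k layers of a deep neural network define a feature map ϕ, and the remaining layers define a non-linear function f(ϕ(x)). The loss on each data point is then of the form Eti~T(xi) [l(f(ϕ(ti));yi)]. Using the second-order Taylor expansion around ψ(xi) = Eti~T(xi) [ϕ(ti)], we obtain the objective:

$$\frac{1}{n}\sum_{i=1}^n \left[ l\big(f(\psi(x_i)); y_i\big) + \frac{1}{2}\,\mathbb{E}_{t_i \sim T(x_i)}\left[ \Delta_{t_i,x_i}^\top\, \nabla^2_{\psi}\, l\big(f(\psi(x_i)); y_i\big)\, \Delta_{t_i,x_i} \right] \right],$$

where Δti,xi := ϕ(ti) − ψ(xi) as in Section 4.2, and the Hessian is taken with respect to the features.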

If f(ϕ(x)) = w⊤ϕ(x), we recover the result in Section 4 (Equation 5). Operationally, we can carry out the forward pass on all transformed versions of each data point up to layer k (i.e., computing ϕ(ti)), average the resulting features, and continue through the remaining layers with this averaged feature. This reduces computation, since the remaining layers are evaluated once per data point rather than once per transformed copy.
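A minimal NumPy sketch of this intermediate-layer averaging for a single data point, under stated assumptions. The tiny network (`phi` for the first k = 1 layer, `f` for the remaining layers), its random weights, and the small-jitter stand-in for a transformation are all hypothetical. The experiments below use LeNet with rotations.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy network: layer 1 is the feature map phi, layers 2-3 form f.
W1 = rng.normal(size=(8, 4)) / 2.0
W2 = rng.normal(size=(8, 8)) / 3.0
W3 = rng.normal(size=(3, 8)) / 3.0


def phi(x):
    """First k layers (k = 1 here)."""
    return np.maximum(x @ W1.T, 0.0)


def f(h):
    """Remaining layers, returning class logits."""
    return np.maximum(h @ W2.T, 0.0) @ W3.T


def cross_entropy(logits, y):
    """Per-row cross-entropy loss for integer label y (numerically stable)."""
    z = logits - logits.max(axis=-1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    return -log_p[..., y]


def loss_true(ts, y):
    """Augmented loss: every transformed copy goes through the whole network."""
    return cross_entropy(f(phi(ts)), y).mean()


def loss_feature_avg(ts, y):
    """Approximation: average phi over the copies, then evaluate f only once."""
    psi = phi(ts).mean(axis=0, keepdims=True)     # psi(x) = E_t[phi(t)]
    return cross_entropy(f(psi), y).mean()


x, y = rng.normal(size=4), 1
ts = x + 1e-3 * rng.normal(size=(16, 4))          # 16 hypothetical "transformed" copies of x
print(loss_true(ts, y), loss_feature_avg(ts, y))
```

In this sketch `f` runs once per data point instead of 16 times. To second order, the gap between the two losses is the second-order term of the Taylor expansion around ψ(xi).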

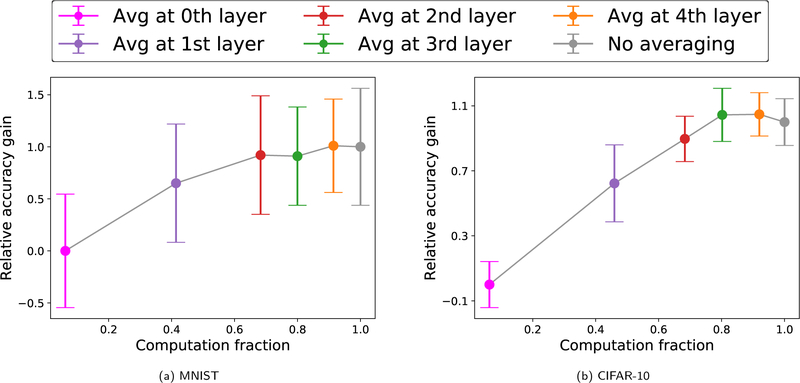

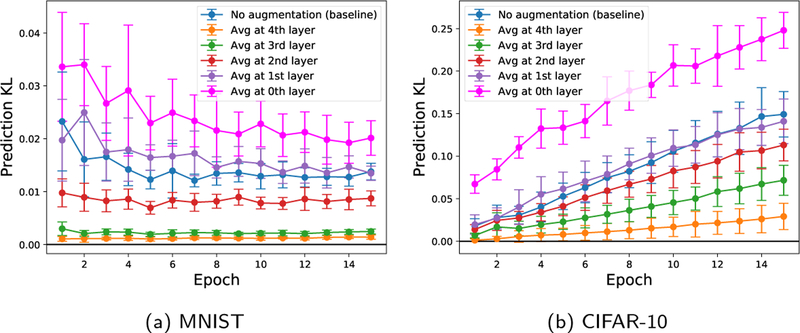

We train with this approach, applying the approximation at various layers of a LeNet network and using rotation as the augmentation. To get a rough measure of the tradeoff between model accuracy and computation, we record the fraction of time spent at each layer in the forward pass. We use these fractions to estimate the expected reduction in computation when approximating at layer k. In Figure 3, we plot the relative accuracy gain of the classifier trained on approximate objectives against the fraction of computation time. Here 0 corresponds to the accuracy (averaged over 10 trials) of training on the original data, and 1 corresponds to the accuracy of training on the true augmented objective g(w). These results indicate, e.g., that this approach can reduce computation by 30% while maintaining 92% of the accuracy gain (red, Figure 3a). In Appendix E.4, we demonstrate similar results in terms of the test prediction distribution throughout training.
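The two quantities plotted in Figure 3 can be computed as in the sketch below. The relative accuracy gain follows the normalization stated above. The computation fraction rests on our own assumption, since the exact bookkeeping is not spelled out: each data point has s transformed copies, layers 1 through k process all s copies, and the remaining layers process a single averaged feature. The numbers in the example are hypothetical.

```python
import numpy as np


def relative_accuracy_gain(acc_approx, acc_baseline, acc_true):
    """0 = accuracy without augmentation, 1 = accuracy with the true augmented objective."""
    return (acc_approx - acc_baseline) / (acc_true - acc_baseline)


def computation_fraction(layer_times, k, s):
    """Forward-pass cost of averaging after layer k, relative to full augmentation.

    layer_times: time spent in each layer for one input; k: number of layers run on
    every copy; s: number of transformed copies per data point.
    """
    t = np.asarray(layer_times, dtype=float)
    t = t / t.sum()
    return t[:k].sum() + t[k:].sum() / s


# Hypothetical example: 3 layers, 4 copies, averaging after the first layer.
print(relative_accuracy_gain(0.97, 0.95, 0.98))          # about 0.667
print(computation_fraction([0.5, 0.3, 0.2], k=1, s=4))   # about 0.625
```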

Figure 3:

Accuracy gain relative to baseline (no augmentation) when averaging at various layers of a LeNet network. Approximation at earlier layers saves computation but can reduce the fidelity of the approximation.

Connection to tangent propagation.

If we perform the described averaging before the very first layer and use the analytic form of the gradient with respect to the transformations (i.e., tangent vectors), this procedure recovers tangent propagation [36]. Zhao et al. [43] recently observed the connection between augmentation and tangent propagation in this special case. However, as Figure 3 shows, applying the approximation at the first layer (standard tangent propagation) can yield poor accuracy, similar to performing no augmentation. This shows that our more general approximation can improve on this approach in practice.

6. Conclusion

We have taken steps to establish a theoretical base for modern data augmentation. First, we analyze a general Markov process model and show that the k-nearest neighbors classifier applied to augmented data is asymptotically equivalent to a kernel classifier, illustrating the effect that augmentation has on the downstream representation. Next, we show that local transformations for data augmentation can be approximated by first-order feature averaging and second-order variance regularization components, which have the effects of inducing invariance and reducing model complexity. We use our insights to suggest ways to accelerate training for kernel and deep learning pipelines. More generally, there is a tension between incorporating domain knowledge naturally via data augmentation and incorporating it through more principled kernel approaches. We hope our work will enable easier translation between these two paths, leading to simpler and more theoretically grounded applications of data augmentation.

Acknowledgments

We thank Fred Sala and Madalina Fiterau for helpful discussion, and Avner May for providing detailed feedback on earlier versions.

We gratefully acknowledge the support of DARPA under Nos. FA87501720095 (D3M) and FA86501827865 (SDH), NIH under No. N000141712266 (Mobilize), NSF under Nos. CCF1763315 (Beyond Sparsity) and CCF1563078 (Volume to Velocity), ONR under No. N000141712266 (Unifying Weak Supervision), the Moore Foundation, NXP, Xilinx, LETI-CEA, Intel, Google, NEC, Toshiba, TSMC, ARM, Hitachi, BASF, Accenture, Ericsson, Qualcomm, Analog Devices, the Okawa Foundation, and American Family Insurance, and members of the Stanford DAWN project: Intel, Microsoft, Teradata, Facebook, Google, Ant Financial, NEC, SAP, and VMWare. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views, policies, or endorsements, either expressed or implied, of DARPA, NIH, ONR, or the U.S. Government.

A. Omitted Proofs and Results From Section 3

Here we provide additional details and proofs from Section 3. First, we prove Lemma 1 characterizing the stationary distribution of the Markov chain augmentation process.

Proof of Lemma 1. Recall that the stationary distribution satisfies πR = π.

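Under the given notation, we can express R as

$$R = \frac{1}{\beta+1}\left(A + \mathbf{1}\rho^\top\right),$$

where A = ∑j βjAj and β = ∑j βj.

Assume for now that I(β + 1) − A is invertible. Notice that

$$(I(\beta+1) - A)^{-1} A = (\beta+1)(I(\beta+1) - A)^{-1} - I.$$

Also, A1 = ∑j βj(Aj1) = β1, so we know that (I(β + 1) − A)1 = 1. So the inverse satisfies (I(β + 1) − A)⁻¹1 = 1 as well. Thus, for π = ρ⊤(I(β + 1) − A)⁻¹ we have π1 = ρ⊤1 = 1, and

$$\pi R = \frac{1}{\beta+1}\left(\pi A + (\pi\mathbf{1})\rho^\top\right) = \frac{1}{\beta+1}\left((\beta+1)\pi - \rho^\top + \rho^\top\right) = \pi.$$

It follows that π = ρ⊤(I(β + 1) − A)⁻¹ is the stationary distribution of this chain.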

Finally, we show that I(β +1)−A is invertible as follows. By the Gershgorin Circle Theorem, the eigenvalues of A lie in the union of the discs B(Aii,∑j≠i|Aij|) = B(Aii,β −Aii). In particular, the eigenvalues have real part bounded by β. Thus I(β + 1) − A has all eigenvalues with real part at least 1, hence is invertible.
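A quick numerical check of Lemma 1 on a toy finite state space. This sketch assumes that the chain moves by R = (A + 1ρ⊤)/(β + 1) with A = ∑j βjAj. In other words, each step applies Aj with probability βj/(β + 1) or resamples a training example from ρ with probability 1/(β + 1). The state-space size, rates, and random transition matrices are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
m = 6                                             # size of the toy state space Omega
betas = [0.7, 1.5]                                # hypothetical rates beta_j


def random_stochastic(m, rng):
    """Random row-stochastic matrix standing in for a base augmentation A_j."""
    P = rng.random((m, m))
    return P / P.sum(axis=1, keepdims=True)


A = sum(b * random_stochastic(m, rng) for b in betas)   # A = sum_j beta_j A_j
beta = sum(betas)
rho = np.zeros(m)
rho[:3] = 1.0 / 3.0                               # training data: first 3 states, uniform
one = np.ones(m)

R = (A + np.outer(one, rho)) / (beta + 1.0)       # assumed transition matrix of the chain
pi = rho @ np.linalg.inv((beta + 1.0) * np.eye(m) - A)   # Lemma 1: rho^T (I(beta+1) - A)^{-1}

print(np.allclose(pi @ R, pi), pi.sum())          # stationarity check and total mass
```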

For convenience, we now restate the main Theorem of Section 3.

Theorem 1. Consider running the Markov chain augmentation process in Definition 1 where the base augmentations preserve labels, and classifying an unseen example using k-nearest neighbors. Suppose that the Ai are time-reversible with equal stationary distributions. Then there are coefficients α1,…,αn and a kernel K depending only on the augmentations, such that in the limit as the number of augmented examples goes to infinity, this classification procedure is equivalent to a kernel classifier

$$\hat{y}(x) = \operatorname{sign}\left(\sum_{i=1}^{n} \alpha_i y_i K(x_i, x)\right). \qquad (6)$$

For the remainder of this section, we will refer to K alternately as a matrix indexed by Ω × Ω or as a function on Ω × Ω, with corresponding notation Ku,v and K(u, v).

Classification process.

Suppose that we receive a new example x with unknown label y. Consider running the augmentation process for time T and determining the label for x via k-nearest neighbors.⁴ Then in the limit as T → ∞, we predict

$$\hat{y}(x) = \arg\max_{y \in \{-1, 1\}} \pi(x, y). \qquad (7)$$

In other words, as the number of augmented training examples increases, k-NN approaches a Bayes classifier: it will select the class for x that is most probable in π.

We now show that under additional mild assumptions on the base augmentations Aj, applying this classification process after the Markov chain augmentation process is equivalent to a kernel classifier. In particular, suppose that the Markov chains corresponding to the Aj are all time-reversible and there is a positive distribution π0 that is stationary for all Aj. This condition is not restrictive in practice, as we discuss in Section A.1. Under these assumptions, the stationary distribution can be expressed in terms of a kernel matrix.

Lemma 2. The stationary distribution (1) can be written as π = α⊤K, where the vector α is supported only on the dataset z1,…,zn, and K is a kernel matrix (i.e., K is symmetric positive definite and entrywise non-negative) depending only on the augmentations Aj, βj.

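Proof of Lemma 2. Let Π0 = diag(π0). The stationary distribution can be written as

$$\pi = \rho^\top (I(\beta+1) - A)^{-1} = \left(\rho^\top \Pi_0^{-1}\right)\left(\Pi_0 (I(\beta+1) - A)^{-1}\right)$$

(Π0 is invertible from the assumption that π0 is supported everywhere).

Letting α = Π0⁻¹ρ and K = Π0(I(β + 1) − A)⁻¹, we have π = α⊤K. Clearly, α is supported only on the dataset z1,…,zn since ρ is. It remains to show that K is a kernel.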

The detailed balance condition of time-reversible Markov chains states that π0(u)Aj(u,v) = π0(v)Aj(v,u) for all augmentations A1,…,Am and u,v ∈ Ω. This can be rewritten as Aj(u,v)π0(v)⁻¹ = Aj(v,u)π0(u)⁻¹, or AjΠ0⁻¹ = (AjΠ0⁻¹)⊤, so that AjΠ0⁻¹ is symmetric. This implies that K⁻¹ = (I(β + 1) − A)Π0⁻¹ = (β + 1)Π0⁻¹ − ∑j βjAjΠ0⁻¹, and in turn K, are symmetric.

The positivity of K follows from the Gershgorin Circle Theorem, similar to the last part of the proof of Lemma 1. Since K is symmetric, it suffices to show positivity of K⁻¹ = (I(β + 1) − A)Π0⁻¹. Because x⊤K⁻¹x = (Π0⁻¹x)⊤Z(Π0⁻¹x) with Z = Π0((β + 1)I − A), it in turn suffices to show positivity of Z.⁵ Note that Z is symmetric, since each Π0Aj is symmetric by detailed balance. By the Gershgorin Circle Theorem, the eigenvalues of Z lie in the union of the discs Di = B((π0)i(β + 1 − Aii), (π0)i(β − Aii)).⁶ Every point of Di has real part at least (π0)i, and therefore the eigenvalues of Z are at least mini (π0)i > 0.

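Finally, we need to show that K is a nonnegative matrix. Since Π0 is diagonal with positive entries, it suffices to show this for (I(β + 1) − A)⁻¹. Note that A/β is a stochastic matrix, hence the spectral radius of A/(β + 1) is bounded by β/(β + 1) < 1. Therefore we can expand

$$(I(\beta+1) - A)^{-1} = \frac{1}{\beta+1}\sum_{k=0}^{\infty}\left(\frac{A}{\beta+1}\right)^{k}.$$

This is a sum of nonnegative matrices, so K is nonnegative.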
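A numerical check of Lemma 2 in the setting discussed in Section A.1: symmetric augmentations, so that π0 is uniform. The helper `symmetric_stochastic`, which builds a symmetric doubly stochastic matrix from random permutations, is hypothetical. The rates and training set are also hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
m = 6


def symmetric_stochastic(m, rng, n_perms=10):
    """Symmetric, doubly stochastic matrix: time-reversible with uniform stationary distribution."""
    P = np.eye(m)
    for _ in range(n_perms):
        perm = np.eye(m)[rng.permutation(m)]      # random permutation matrix
        P = P + perm + perm.T                     # keep P symmetric
    return P / (1 + 2 * n_perms)                  # every row sums to 1 + 2 * n_perms


betas = [0.5, 2.0]
A = sum(b * symmetric_stochastic(m, rng) for b in betas)
beta = sum(betas)
I = np.eye(m)
Pi0 = I / m                                       # common stationary distribution pi0 (uniform)
K = Pi0 @ np.linalg.inv((beta + 1.0) * I - A)     # K = Pi0 (I(beta+1) - A)^{-1}

rho = np.zeros(m)
rho[[0, 2]] = 0.5                                 # training set: states 0 and 2
alpha = np.linalg.solve(Pi0, rho)                 # alpha = Pi0^{-1} rho
pi = rho @ np.linalg.inv((beta + 1.0) * I - A)    # stationary distribution from Lemma 1
```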

By Lemma 2, since α is supported only on the training set z1,…,zn, the stationary probabilities can be expressed as π(z) = ∑i αi K(zi, z), where αi denotes the entry of α at zi.

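Expanding the classification rule (7) using this explicit representation yields

$$\hat{y}(x) = \arg\max_{y \in \{-1, 1\}} \sum_{i=1}^{n} \alpha_i K\big((x_i, y_i), (x, y)\big),$$

where zi = (xi, yi).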

Finally, suppose, as is common practice, that our augmentations Aj do not change the label y. In this case, we overload the notation K so that K(x1,x2) := K((x1,1),(x2,1)) = K((x1,−1),(x2,−1)),⁷ and K((x1,1),(x2,−1)) = 0. The difference between the scores of y = 1 and y = −1 in the expanded rule is then ∑i αi yi K(xi, x). The classification therefore simplifies to equation (6), the classification rule for a kernel-trick linear classifier using kernel K. Thus, k-NN with data augmentation is asymptotically equivalent to a kernel classifier. This completes the proof of Theorem 1.
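The label-preserving case can be checked numerically. The score difference π(x, 1) − π(x, −1), whose sign is the limiting augmentation-plus-k-NN prediction, should coincide with the kernel classifier score ∑i αi yi K(xi, x). This sketch makes several assumptions:

- a toy space of m inputs;
- a hypothetical symmetric label-preserving augmentation `B` acting on inputs;
- a uniform π0 over the 2m (input, label) states;
- a made-up training set.

```python
import numpy as np

rng = np.random.default_rng(2)
m = 5                                             # number of distinct inputs in the toy space


def symmetric_stochastic(m, rng, n_perms=10):
    """Symmetric, doubly stochastic matrix built from random permutations."""
    P = np.eye(m)
    for _ in range(n_perms):
        perm = np.eye(m)[rng.permutation(m)]
        P = P + perm + perm.T
    return P / (1 + 2 * n_perms)


beta = 1.5
B = beta * symmetric_stochastic(m, rng)           # label-preserving augmentation on inputs
Z = np.zeros((m, m))
# States are ordered [(x, +1) for all x] + [(x, -1) for all x]; a label-preserving
# augmentation never moves mass between the two label blocks.
A = np.block([[B, Z], [Z, B]])

train = [(0, +1), (1, +1), (3, -1)]               # hypothetical training set (input, label)


def state(x, y):
    return x if y == +1 else m + x


rho = np.zeros(2 * m)
for x, y in train:
    rho[state(x, y)] = 1.0 / len(train)

# Limit of augmentation + k-NN (rule (7)): compare pi(x, +1) with pi(x, -1).
pi = rho @ np.linalg.inv((beta + 1.0) * np.eye(2 * m) - A)
score_knn = pi[:m] - pi[m:]

# Kernel classifier (rule (6)) with uniform pi0 = 1/(2m) and K on inputs.
pi0 = 1.0 / (2 * m)
K = pi0 * np.linalg.inv((beta + 1.0) * np.eye(m) - B)
alpha = [rho[state(x, y)] / pi0 for x, y in train]            # alpha = Pi0^{-1} rho on the data
score_kernel = sum(a * y * K[x] for a, (x, y) in zip(alpha, train))

print(np.sign(score_kernel))                      # predicted labels for all m inputs
```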

Rate of Convergence.

The rate at which the augmentation plus k-NN classifier approaches the kernel classifier decomposes into two parts: the rate at which the augmentation Markov chain mixes, and the rate at which the k-NN classifier approaches the true function. The latter follows from standard generalization error bounds for the k-NN classifier. For example, suppose the kernel regression function is smooth enough (e.g., Lipschitz) on the underlying space of dimension d. Then the difference between the two classifiers' probabilities of misclassifying a new example scales as $n^{-1/(2+d)}$, where n is the number of samples (i.e., augmentation steps) [14]. Furthermore, the stationary distribution (1) can be analyzed further to yield the finite-sample distributions of the Markov chain. These are related to the power series expansion ρ⊤(I(β + 1) − A)⁻¹ = (β + 1)⁻¹ρ⊤(I + A/(β + 1) + (A/(β + 1))² + ⋯) of Equation (1). This expansion in turn determines the mixing rate of the Markov chain, which converges to its stationary distribution exponentially fast with rate β/(β + 1). More formal statements and proofs of these bounds are in Appendix A.2.

A.1. Discussion

Here we describe our modeling assumptions in more detail, as well as additional uses of our main result in Theorem 1. First, we note that the assumptions needed for Lemmas 1 and 2 hold for most transformations used in practice. For example, the condition behind Lemma 2 is satisfied by "reversible" augmentations. These are augmentations that are as likely to send a point x ∈ Ω to y as to send y to x. Such augmentations have symmetric transition matrices, which are time-reversible and have a uniform stationary distribution. This class includes all deterministic lossless transformations, such as jittering, flips, and rotations for images. Furthermore, lossy transformations can be combined with their inverse transformation (such as ZoomOut for ZoomIn), possibly adding a probability of not transitioning, to form a symmetric augmentation.⁸

Second, our use of augmentation matrices implies a finite state space Ω, which is defined as the set of base training examples and all possible augmentations. Note that augmentations typically yield finite orbits (e.g. flip, rotation, zoom – as output pixel values are a subset of input values), which is consistent with this assumption. Furthermore, finiteness is always true in actual models due to the use of finite precision (e.g. floating point numbers).

Theorem 1 serves as a motivating connection between data augmentation, a process applied to the raw input data, and kernels, which affect the downstream feature representation. Beyond this, it also points to alternative ways to understand and optimize the augmentation pipeline. In particular, Lemma 2 provides a closed-form representation of the induced kernel in terms of the base augmentation matrices and rates. We point out two ways in which this alternate classifier can be useful on top of the original augmentation process.

First, in Appendix B.1 we show that the kernel matrix can potentially be updated directly from the original kernel when the augmentations are changed, for example by tuning the rates or by adding or removing a base augmentation. This avoids re-sampling an augmented dataset and re-training the classifier.

Second, many parameters of the original process appear in the kernel directly. For example, in Appendix B.2 we show that in the simple case of a single additive Gaussian noise augmentation, the equivalent kernel is very close to a Gaussian kernel whose bandwidth is a function of the variance of the jitter. Additionally, in general the augmentation rates βj all show up in the resulting kernel in a differentiable manner. Therefore instead of treating them as hyperparameters, there is potential to optimize the underlying parameters in the base augmentations, as well as the augmentation rates, through their role in a more tractable objective.

A.2. Convergence Rate

The following proposition from Györfi et al. [14] and Tibshirani & Wasserman [39] provides generalization bounds for a k-NN classifier when k → ∞ and k/n → 0 at a suitable rate. Treating the equivalent kernel classifier as the true function, this bounds the risk between the k-NN and kernel classifiers as a function of the number of augmented samples n.

Proposition 2. Let Ĉ be the k-NN classifier. Let C0 be the asymptotically equivalent kernel classifier from Theorem 1 and assume it is L-Lipschitz.

Letting $r(C) = \Pr_{(x,y)\sim\pi}(y \neq C(x))$ be the risk of a classifier C, then

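Next we analyze the convergence of the Markov chain by computing its distribution at time n. Define

$$\pi_n = \rho^\top\left(\frac{A}{\beta+1}\right)^{n} + \frac{1}{\beta+1}\,\rho^\top\sum_{i=0}^{n-1}\left(\frac{A}{\beta+1}\right)^{i}.$$

We claim that πn is the distribution of the combined Markov augmentation process at time n.

Recall that ρ⊤ is a distribution over the original training data. We naturally suppose that the initial example is drawn from this distribution, so that π0 = ρ⊤. Note that this matches the expression for πn at n = 0. To show that πn is the distribution of the Markov chain at time n, it remains to prove that πn+1 = πnR.

From the relations ρ⊤1 = 1 and A1 = β1, we have

$$\rho^\top\left(\frac{A}{\beta+1}\right)^{i}\mathbf{1} = \left(\frac{\beta}{\beta+1}\right)^{i}$$

for all i ≥ 0, and summing the geometric series gives πn1 = 1.

Therefore

$$\pi_n R = \frac{\pi_n A + (\pi_n\mathbf{1})\rho^\top}{\beta+1} = \rho^\top\left(\frac{A}{\beta+1}\right)^{n+1} + \frac{1}{\beta+1}\,\rho^\top\sum_{i=0}^{n}\left(\frac{A}{\beta+1}\right)^{i} = \pi_{n+1}.$$

The difference from the stationary distribution π = (β + 1)⁻¹ρ⊤∑i≥0 (A/(β + 1))^i is

$$\pi_n - \pi = \rho^\top\left(\frac{A}{\beta+1}\right)^{n}\left(I - (I(\beta+1) - A)^{-1}\right).$$

The l2 norm of this can be bounded directly by noting that ∥A∥op ≤ β, which also gives ∥(I(β + 1) − A)⁻¹∥op ≤ 1:

$$\lVert \pi_n - \pi \rVert_2 \le 2\,\lVert \rho \rVert_2 \left(\frac{\beta}{\beta+1}\right)^{n}.$$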

A bound on the total variation distance instead incurs an extra dimension-dependent constant. This shows that the augmentation chain mixes exponentially fast, i.e., it takes O((β + 1) log(1/ε)) samples to come within a desired error ε of the stationary distribution.
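A numerical sketch of the mixing bound. It assumes that the chain moves by R = (A + 1ρ⊤)/(β + 1) and takes a symmetric A = βP, so that ∥A∥op ≤ β. Under these assumptions the distance to stationarity obeys ∥ρ⊤Rⁿ − π∥2 ≤ 2∥ρ∥2(β/(β + 1))ⁿ, which the code checks for the first 40 steps. The matrix size, rate, and training distribution are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
m, beta, steps = 8, 3.0, 40


def symmetric_stochastic(m, rng, n_perms=10):
    """Symmetric, doubly stochastic matrix built from random permutations."""
    P = np.eye(m)
    for _ in range(n_perms):
        perm = np.eye(m)[rng.permutation(m)]
        P = P + perm + perm.T
    return P / (1 + 2 * n_perms)


A = beta * symmetric_stochastic(m, rng)           # symmetric, so ||A||_op <= beta
rho = np.zeros(m)
rho[:2] = 0.5                                     # training data: states 0 and 1
R = (A + np.outer(np.ones(m), rho)) / (beta + 1.0)
pi = rho @ np.linalg.inv((beta + 1.0) * np.eye(m) - A)

dist, errors = rho.copy(), []
for n in range(steps):
    errors.append(np.linalg.norm(dist - pi))      # ||pi_n - pi||_2
    dist = dist @ R                               # pi_{n+1} = pi_n R
errors = np.array(errors)
bound = 2 * np.linalg.norm(rho) * (beta / (beta + 1.0)) ** np.arange(steps)

# Steps needed for the bound to fall below eps, compared with (beta + 1) log(1 / eps).
eps = 1e-6
n_needed = int(np.ceil(np.log(2 * np.linalg.norm(rho) / eps) / np.log((beta + 1.0) / beta)))
print(n_needed, (beta + 1.0) * np.log(1 / eps))
```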

B. Kernel Transformations and Special Cases

B.1. Updated Kernel for Modified Augmentations

Our analysis of the kernel classifier in Lemma 2 yields a closed form in terms of the base augmentation matrices. This allows us to modify any kernel by changing the augmentations, producing a new kernel. For example, imagine that we start with a kernel K, which has corresponding augmentation operator A such that

$$K = \Pi_0 (I(\beta+1) - A)^{-1}.$$

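Suppose that we want to add an additional augmentation operator with stochastic transition matrix A′ and rate β′. Provided A′ is also time-reversible with stationary distribution π0, Lemma 2 guarantees that the resulting kernel is non-negative. It can be computed from the known K by expanding

$$K' = \Pi_0\left(I(\beta+\beta'+1) - A - \beta' A'\right)^{-1} = \left(K^{-1} + \beta'(I - A')\Pi_0^{-1}\right)^{-1} = K\left(I + \beta'(I - A')\Pi_0^{-1}K\right)^{-1}.$$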
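The update identity K′ = K(I + β′(I − A′)Π0⁻¹K)⁻¹ can be checked numerically against recomputing the kernel from scratch. In this sketch, `A_new` and `beta_new` stand for the added augmentation A′ and its rate β′, with hypothetical values. All augmentations are symmetric, so π0 is uniform. Note that the sketch still inverts an m × m matrix. It only verifies the algebra; any computational savings would depend on additional structure, such as I − A′ being low rank.

```python
import numpy as np

rng = np.random.default_rng(4)
m = 6


def symmetric_stochastic(m, rng, n_perms=10):
    """Symmetric, doubly stochastic matrix built from random permutations."""
    P = np.eye(m)
    for _ in range(n_perms):
        perm = np.eye(m)[rng.permutation(m)]
        P = P + perm + perm.T
    return P / (1 + 2 * n_perms)


I = np.eye(m)
Pi0 = I / m                                       # uniform common stationary distribution
beta, beta_new = 2.0, 0.5
A = beta * symmetric_stochastic(m, rng)           # existing augmentations, sum_j beta_j A_j
A_new = symmetric_stochastic(m, rng)              # hypothetical additional augmentation A'
K = Pi0 @ np.linalg.inv((beta + 1.0) * I - A)     # known kernel


def updated_kernel(K, A_new, beta_new, Pi0):
    """K' = K (I + beta' (I - A') Pi0^{-1} K)^{-1}, reusing the known kernel K."""
    I = np.eye(K.shape[0])
    M = beta_new * (I - A_new) @ np.linalg.inv(Pi0)
    return K @ np.linalg.inv(I + M @ K)


K_updated = updated_kernel(K, A_new, beta_new, Pi0)
K_direct = Pi0 @ np.linalg.inv((beta + beta_new + 1.0) * I - A - beta_new * A_new)
```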

B.2. Kernel Matrix for the Jitter Augmentation

In the context of Definition 1, consider performing a single augmentation Aj corresponding to adding Gaussian noise to an input vector. Although Definition 1 uses an approximated finite sample space, for this simple case we consider the original space ℝᵈ. The transition matrix A1 is just the standard Gaussian kernel, $A_1(x,y) = (2\pi\sigma^2)^{-d/2}\exp\left(-\lVert x - y\rVert^2/(2\sigma^2)\right)$. With rate β, and up to the constant factor contributed by the uniform Π0, the kernel matrix by Lemma 2 is

$$K = \left((\beta+1)I - \beta A_1\right)^{-1},$$

where we think of I as the identity operator on functions over ℝᵈ.

We define a d-dimensional Fourier Transform satisfying

Note that this Fourier Transform is its own inverse on Gaussian densities. Therefore

To compute the inverse transform of this, consider the function

Applying the inverse Fourier Transform , it becomes

Since the value of the kernel matrix only matters up to a constant, we can scale it by the first term. We also ignore the δ(ω) term, which in the context of Theorem 1 only serves to emphasize that a test point in the training set should be classified as its known true label. Scaling by α(α/β)σd, we are left with

Finally, after plugging in the corresponding values for α and β, notice that β is proportional to (2π)−d/2, which causes the sum to be negligible.
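For intuition, here is a 1-D numerical sketch; it is our own check, not the derivation above. With A = βA1 and a uniform Π0, Lemma 2 gives K ∝ ((β + 1)I − βA1)⁻¹ = (β + 1)⁻¹∑k (β/(β + 1))^k A1^k. Since A1^k is Gaussian jitter with variance kσ², the kernel is a point mass of weight 1/(β + 1) plus a geometric mixture of Gaussians with variances kσ². The sketch discretizes a periodic grid and compares the matrix inverse with the truncated series. The grid spacing h, the domain length, and the parameter values are our choices.

```python
import numpy as np


def jitter_kernel_numeric(beta, sigma, length=40.0, h=0.05):
    """K = ((beta+1) I - beta A1)^{-1} on a periodic 1-D grid (uniform Pi0 dropped)."""
    x = np.arange(-length / 2, length / 2, h)
    diff = x[:, None] - x[None, :]
    diff = (diff + length / 2) % length - length / 2      # wrap-around distances
    A1 = np.exp(-diff**2 / (2 * sigma**2))
    A1 /= A1.sum(axis=1, keepdims=True)                   # row-stochastic Gaussian jitter
    K = np.linalg.inv((beta + 1) * np.eye(x.size) - beta * A1)
    return x, K


def jitter_kernel_series(r, beta, sigma, h, kmax=300):
    """Off-diagonal entries predicted by the geometric mixture of Gaussians."""
    k = np.arange(1, kmax + 1)
    weights = (beta / (beta + 1)) ** k / (beta + 1)
    var = k * sigma**2
    r = np.asarray(r, dtype=float)[..., None]
    dens = np.exp(-r**2 / (2 * var)) / np.sqrt(2 * np.pi * var)
    return h * (weights * dens).sum(axis=-1)              # h turns a density into grid mass


beta, sigma, h = 1.0, 0.5, 0.05
x, K = jitter_kernel_numeric(beta, sigma, h=h)
i = x.size // 2                                           # grid point at (about) 0
r = x - x[i]
near = (r != 0) & (np.abs(r) < 3.0)
print(np.max(np.abs(K[i, near] - jitter_kernel_series(r[near], beta, sigma, h))))
```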

C. Additional Propositions for Section 4

A function f is α-strongly convex if for all x and x′, f(x′) ≥ f(x) + ∇f(x)⊤(x′ − x) + (α/2)∥x′ − x∥²; the function f is β-strongly smooth if for all x and x′, f(x′) ≤ f(x) + ∇f(x)⊤(x′ − x) + (β/2)∥x′ − x∥².

If we assume that the loss is strongly convex and strongly smooth, then the difference in objective functions g(w) and ĝ(w) can be bounded in terms of the squared-norm of w, and then the minimizer of the approximate objective ĝ(w) is close to the minimizer of the true objective g(w).

Proposition 3. Assume that the loss function l(x;y) is α-strongly convex and β-strongly smooth with respect to x, and that

Letting w* = arg min g(w) and ŵ = argmin ĝ(w), then

If αc ≫ βb (that is, the covariance of ϕ(ti) is small relative to the square of its expected value), then , and so

This means that minimizing the first-order approximate objective ĝ will provide a fairly accurate parameter estimate for the objective g on the augmented dataset.

Proof of Proposition 3. By Taylor's theorem, for any random variable X over ℝ, there exists some remainder function such that

The condition of l(x;y) being α-strongly convex and β-strongly smooth means that α ≤ l″(x) ≤ β for any x. Thus

It follows that (letting our random variable X be w⊤ ϕ(ti)),

Because of the assumption that ,

We can bound the second derivative of ĝ(w):

where we have used the assumption that . Thus ĝ is (αc)-strongly convex.

We bound ĝ(w*) − ĝ(ŵ):

where we have used the fact that ĝ(w) ≤ g(w) for all w and that w* minimizes g(w). But ĝ is (αc)-strongly convex and ŵ minimizes ĝ, so ĝ(w*) − ĝ(ŵ) ≥ (αc/2)∥w* − ŵ∥². Combining these inequalities yields
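The step ĝ(w) ≤ g(w) used in the proof is Jensen's inequality for a convex loss. ĝ evaluates the loss at the averaged feature ψ(xi), while g averages the loss over transformed copies. Below is a small NumPy check with the logistic loss. That loss is convex but not strongly convex, so the check illustrates only this step, not the full proposition. The data, noise level, and dimensions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)
n, s, d = 50, 32, 10


def logistic_loss(z, y):
    """l(z; y) = log(1 + exp(-y z)) for labels in {-1, +1}, computed stably."""
    return np.logaddexp(0.0, -y * z)


base = rng.normal(size=(n, d))
phi_t = base[:, None, :] + 0.3 * rng.normal(size=(n, s, d))   # features of s copies per point
y = rng.choice([-1.0, 1.0], size=n)
psi = phi_t.mean(axis=1)                                       # psi(x_i): averaged features


def g(w):
    """True augmented objective: loss averaged over all transformed copies."""
    return logistic_loss(phi_t @ w, y[:, None]).mean()


def g_hat(w):
    """First-order objective: loss evaluated at the averaged feature."""
    return logistic_loss(psi @ w, y).mean()


ws = [rng.normal(size=d) * scale for scale in (0.1, 1.0, 5.0)]
print([(round(g_hat(w), 4), round(g(w), 4)) for w in ws])
```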

D. Variance Regularization Terms for Common Loss Functions

Here we derive the variance regularization term for common loss functions such as logistic regression and multinomial logistic regression.

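For logistic regression, with labels y ∈ {−1, 1},

$$l(x; y) = \log\left(1 + e^{-yx}\right).$$

And so

$$l'(x; y) = \frac{-y}{1 + e^{yx}}$$

and

$$l''(x; y) = \frac{y^2 e^{yx}}{(1 + e^{yx})^2} = \frac{e^{x}}{(1 + e^{x})^2},$$

since y ∈ {−1,1} and so y² = 1. Therefore, writing σ(x) = 1/(1 + e^{−x}) for the sigmoid function,

$$l''(x; y) = \sigma(x)\,\big(1 - \sigma(x)\big),$$

which does not depend on y. To second order, the augmented loss on a data point is then

$$\mathbb{E}_{t_i}\left[l(w^\top\phi(t_i); y_i)\right] \approx l(w^\top\psi(x_i); y_i) + \frac{1}{2}\,\sigma(w^\top\psi(x_i))\big(1 - \sigma(w^\top\psi(x_i))\big)\, w^\top \operatorname{Cov}_{t_i}[\phi(t_i)]\, w.$$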
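A numerical check of the second-order expansion for logistic regression at a single data point, sketched under assumptions. The features of the transformed copies are hypothetical Gaussian perturbations of a base feature vector, and the expectation and covariance are the empirical ones over s copies. The second-order approximation adds the variance term (1/2)σ(w⊤ψ)(1 − σ(w⊤ψ)) w⊤Cov[ϕ(t)]w to the first-order loss l(w⊤ψ; y). It should be much closer to the true augmented loss than the first-order loss alone.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def logistic_loss(z, y):
    """l(z; y) = log(1 + exp(-y z)), computed stably."""
    return np.logaddexp(0.0, -y * z)


rng = np.random.default_rng(6)
d, s, y = 5, 2000, 1.0
w = rng.normal(size=d)
phi_t = rng.normal(size=d) + 0.05 * rng.normal(size=(s, d))   # features of s augmented copies

psi = phi_t.mean(axis=0)                                   # empirical E_t[phi(t)]
cov = np.cov(phi_t, rowvar=False, bias=True)               # empirical Cov_t[phi(t)]
z = psi @ w

true_loss = logistic_loss(phi_t @ w, y).mean()
first_order = logistic_loss(z, y)
second_order = first_order + 0.5 * sigmoid(z) * (1 - sigmoid(z)) * (w @ cov @ w)
print(true_loss - first_order, true_loss - second_order)
```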

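For multinomial logistic regression, we use the cross entropy loss. With the softmax probability $p_k(x) = e^{x_k} / \sum_j e^{x_j}$ for a logit vector x,

$$l(x; y) = -\log p_y(x) = -x_y + \log\sum_j e^{x_j}.$$

The first derivative is:

$$\frac{\partial l}{\partial x_k} = p_k(x) - \mathbb{1}[k = y].$$

The second derivative is:

$$\frac{\partial^2 l}{\partial x_k\, \partial x_j} = p_k(x)\big(\mathbb{1}[k = j] - p_j(x)\big), \quad\text{i.e.,}\quad \nabla^2 l = \operatorname{diag}(p) - pp^\top,$$

which does not depend on y.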
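A finite-difference check that the Hessian of the cross-entropy loss with respect to the logits is diag(p) − pp⊤ for every label y. The logit vector and step size are arbitrary choices for illustration.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def cross_entropy(z, y):
    """l(z; y) = -log softmax(z)_y for a logit vector z and class index y."""
    return -np.log(softmax(z)[y])


def hessian_closed_form(z):
    p = softmax(z)
    return np.diag(p) - np.outer(p, p)            # no dependence on the label y


def hessian_finite_diff(z, y, eps=1e-4):
    """Central finite differences of the loss in every pair of logit directions."""
    k = z.size
    E = np.eye(k) * eps
    H = np.zeros((k, k))
    for a in range(k):
        for b in range(k):
            H[a, b] = (cross_entropy(z + E[a] + E[b], y) - cross_entropy(z + E[a] - E[b], y)
                       - cross_entropy(z - E[a] + E[b], y) + cross_entropy(z - E[a] - E[b], y)) / (4 * eps**2)
    return H


z = np.array([0.3, -1.2, 2.0, 0.5])
for y in range(z.size):
    print(y, np.max(np.abs(hessian_finite_diff(z, y) - hessian_closed_form(z))))
```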

E. Additional Experiment Details and Results

E.1. First- and Second-order Approximations

For all experiments, we use the MNIST and CIFAR-10 datasets and test three representative augmentations: rotations between −15 and 15 degrees, random crops of up to 64% of the image area, and Gaussian blur. We explore our approximation for kernel classifier models, using either an RBF kernel with 10000 random Fourier features [29] or a learned LeNet neural network [19] as our base feature map. The RBF kernel bandwidth is chosen by computing the kernel alignment, as in Section 5.1. We optimize the models using stochastic gradient descent, with learning rate 0.01, momentum 0.9, batch size 256, running for 15 epochs. We explicitly transform the images and add them to the dataset.

We validate two claims: (i) the approximate objectives are close to the true objective, and (ii) training on the approximate objectives gives models similar to those obtained by training on the true objective.

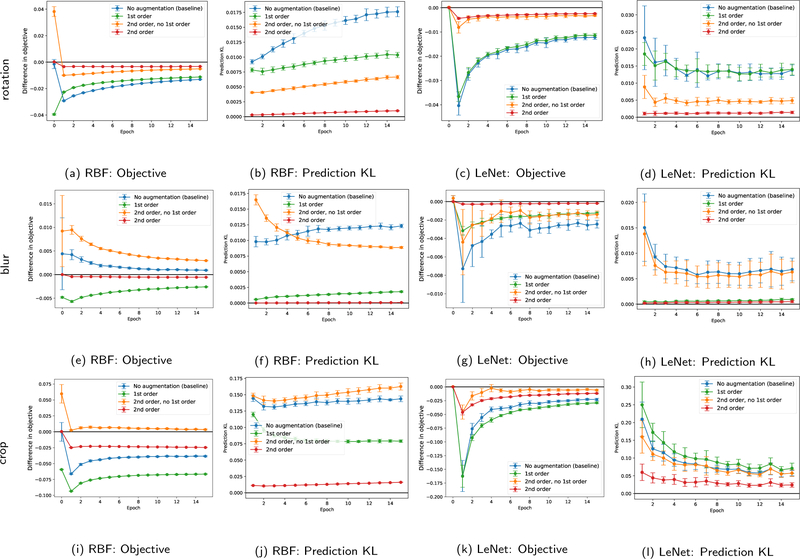

We plot the mean and standard deviation over 10 runs in Figure 4 and Figure 5. For claim (i), Figure 4 (a, c, e, g, i, k) and Figure 5 (a, c, e, g, i, k) plot the objective difference throughout the process of training a model on the true objective. An objective difference closer to 0 is better. The second-order approximation is closer to the true objective than both the first-order approximation and the second-order approximation without the first-order approximation. For claim (ii), we train 5 models, each on a different objective:

- the true objective;
- the first-order approximation;
- the second-order approximation;
- the second-order approximation without the first-order approximation;
- the no-augmentation objective.

As the models are trained, we measure the KL divergence between the predictions of each approximate model and the predictions of the model trained on the true objective. Lower KL divergence means that the prediction distribution of the approximate model is more similar to that of the true model. Figure 4 (b, d, f, h, j, l) and Figure 5 (b, d, f, h, j, l) show that the models trained on the first-order and second-order approximations yield predictions similar to those of the model trained on the true objective. The second-order approximate model is particularly close to the true model.
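The prediction-disagreement metric can be sketched as an average over test points of the KL divergence between the class-probability vectors of the true-objective model and an approximate model. The direction KL(true ‖ approximate) and the clipping constant are our assumptions; the text does not specify them.

```python
import numpy as np


def mean_kl(p_true, p_approx, eps=1e-12):
    """Mean over rows of KL(p_true || p_approx); each row is a predicted class distribution."""
    p = np.clip(np.asarray(p_true, dtype=float), eps, 1.0)
    q = np.clip(np.asarray(p_approx, dtype=float), eps, 1.0)
    return float(np.mean(np.sum(p * (np.log(p) - np.log(q)), axis=1)))


# Hypothetical predictions on two test points with two classes.
p_true = np.array([[0.5, 0.5], [0.9, 0.1]])
p_approx = np.array([[0.9, 0.1], [0.9, 0.1]])
print(mean_kl(p_true, p_approx))    # (0.5 * log(25/9) + 0) / 2, about 0.255
```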

Figure 4: MNIST Dataset.

The difference in objective value (a,e,i,c,g,k) and prediction distribution (b,f,j,d,h,l) (as measured via the KL divergence) between approximate and true objectives. In all plots, the second-order approximation tends to closely match the true objective, and to be closer than the first-order approximation or second-order component alone.

Figure 5: CIFAR-10 Dataset.

The difference in objective value (a,e,i,c,g,k) and prediction distribution (b,f,j,d,h,l) (as measured via the KL divergence) between approximate and true objectives. In all plots, the second-order approximation tends to closely match the true objective, and to be closer than the first-order approximation or second-order component alone.

E.2. Kernel Alignment

For the experiment in Section 5.1, we use the same RBF kernel with 10000 random Fourier features and LeNet, as in Section E.1. We compute the kernel target alignment by collecting statistics from mini-batches of the dataset, iterating over the dataset 50 times. For the MNIST dataset, we consider rotation (between −15 and 15 degrees), Gaussian blur, horizontal flip, horizontal & vertical flip, brightness adjustment (from 0.75 to 1.25 brightness factor), contrast adjustment (from 0.65 to 1.35 contrast factor). For the CIFAR-10 dataset, we consider rotation (between −5 and 5 degrees), Gaussian blur, horizontal flip, horizontal & vertical flip, brightness adjustment (from 0.75 to 1.25 brightness factor), contrast adjustment (from 0.65 to 1.35 contrast factor). Accuracy on the validation set is obtained from SGD training over 15 epochs, with the same hyperparameters as in Section E.1, averaged over 10 trials.
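The kernel target alignment of Cristianini et al. [6], ⟨K, yy⊤⟩F / (∥K∥F∥yy⊤∥F), can be sketched as below for binary labels. Averaging the per-batch alignment over random mini-batches is our assumption about how the mini-batch statistics are combined. The multi-class datasets would also need a target matrix other than yy⊤. `rbf_kernel` and `minibatch_alignment` are illustrative helpers.

```python
import numpy as np


def kernel_target_alignment(K, y):
    """<K, y y^T>_F / (||K||_F ||y y^T||_F) for labels y in {-1, +1}."""
    Y = np.outer(y, y)
    return float(np.sum(K * Y) / (np.linalg.norm(K) * np.linalg.norm(Y)))


def rbf_kernel(X, bandwidth):
    sq = np.sum(X**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2 * X @ X.T, 0.0)   # squared distances
    return np.exp(-d2 / (2 * bandwidth**2))


def minibatch_alignment(X, y, bandwidth, batch_size=256, n_batches=10, seed=0):
    """Average alignment over random mini-batches (the averaging scheme is an assumption)."""
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(n_batches):
        idx = rng.choice(len(y), size=min(batch_size, len(y)), replace=False)
        scores.append(kernel_target_alignment(rbf_kernel(X[idx], bandwidth), y[idx]))
    return float(np.mean(scores))


# Hypothetical two-cluster data: pick the bandwidth with the highest alignment.
rng = np.random.default_rng(0)
y = np.repeat([1.0, -1.0], 200)
X = rng.normal(size=(400, 2)) + 3.0 * y[:, None]
bandwidths = [0.1, 1.0, 10.0]
print(max(bandwidths, key=lambda b: minibatch_alignment(X, y, b)))
```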

E.3. Augmented Random Fourier Features

We use two standard image classification datasets MNIST and CIFAR-10, and a real-world mammography tumor-classification dataset called Digital Database for Screening Mammography (DDSM) [17, 5, 20]. DDSM comprises 1506 labeled mammograms, to be classified as benign versus malignant tumors. The DDSM images are gray-scale and of size 224×224, which we resize to 64×64.

We use the same RBF kernels with 10000 random Fourier features as in Section E.1. We apply rotations between −15 and 15 degrees for MNIST and CIFAR-10, and rotations between 0 and 360 degrees for DDSM, since the tumor images are rotationally invariant. We sample s = 16 angles to construct the augmented random Fourier feature map for MNIST and CIFAR-10, and s = 36 angles for DDSM.

To make the MNIST and CIFAR-10 classification tasks more challenging, we also rotate the images in the test set, between −15 and 15 degrees for MNIST and between −5 and 5 degrees for CIFAR-10.

E.4. Feature Averaging for Deep Learning

In addition to the accuracy results in Section 5.3, we also explore the effect of feature averaging on prediction disagreement throughout training. In Figure 6, we plot the difference in generalization between the approximate and true objectives for LeNet, for rotation between −15 and 15 degrees. We again observe that approximating at earlier layers saves computation but can reduce the fidelity of the approximation.

Figure 6:

Difference in generalization between approximate and true objectives for LeNet in terms of KL divergence in test predictions, for MNIST (a) and CIFAR-10 (b) datasets.

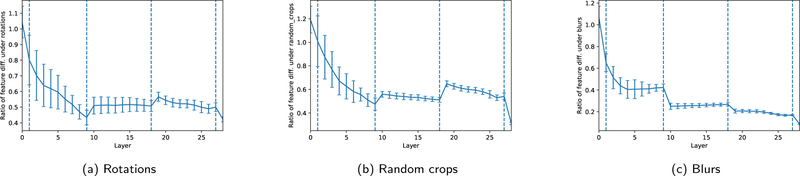

E.5. Layerwise Feature Invariance in a ResNet

Finally, to provide additional motivation for the deep learning experiments in Section 5, where our theory breaks down due to non-convexity, we explore invariance in deep neural networks. For each layer l of a deep neural network, we examine the average difference in feature values when data points x are transformed according to a certain augmentation distribution T. The features come from the layers of a model trained with data augmentation T0:

| (8) |

Specifically, in Figure 7, we examine the ratio $\Delta_{l,T,T}/\Delta_{l,T,\emptyset}$ of this measure of invariance for a model trained with data augmentation using T to that for a model trained without any data augmentation. This shows whether and how training with a specific augmentation makes the layers of the network more invariant to it. We use a standard ResNet as in He et al. [16], implemented in TensorFlow⁹, trained on CIFAR-10, and averaged over ten trials. It has three blocks of nine residual units (separated by vertical dashed lines), an initial convolution layer, and a final global average pooling layer. We see that training with an augmentation indeed makes the feature layers of the network more invariant to this augmentation. The steepest change occurs in the earlier layers (first residual block), and again in the final layer when features are pooled and averaged.
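As a rough illustration, the ratio Δl,T,T/Δl,T,∅ can be estimated as below. The exact form of (8) is not reproduced here, so the sketch assumes it is the mean Euclidean distance between a layer's features of x and of a transformed copy t ∼ T(x). The following are hypothetical stand-ins for layers of networks trained with and without augmentation:

- `layer_with_aug`, a feature function invariant to sign flips;
- `layer_no_aug`, a feature function that is not;
- `random_sign_flip`, a random sign-flip transformation.

```python
import numpy as np


def invariance_gap(features, xs, transform, n_samples=8, seed=0):
    """Assumed form of (8): mean ||features(x) - features(t)|| over x and t ~ T(x)."""
    rng = np.random.default_rng(seed)
    gaps = [np.linalg.norm(features(x) - features(transform(x, rng)))
            for x in xs for _ in range(n_samples)]
    return float(np.mean(gaps))


def random_sign_flip(x, rng):
    """Hypothetical augmentation T: flip the sign of the input with probability 1/2."""
    return -x if rng.random() < 0.5 else x


W = np.random.default_rng(1).normal(size=(4, 3))


def layer_no_aug(x):
    """Stand-in layer of a model trained without augmentation (not flip-invariant)."""
    return W @ x


def layer_with_aug(x):
    """Stand-in layer of a model trained with the flip augmentation (flip-invariant)."""
    return np.abs(W @ x)


xs = np.random.default_rng(2).normal(size=(20, 3))
ratio = invariance_gap(layer_with_aug, xs, random_sign_flip) / invariance_gap(layer_no_aug, xs, random_sign_flip)
print(ratio)   # 0.0 here: the "augmented" layer is exactly invariant
```

A ratio below 1 indicates that the layer of the augmented model is more invariant to T than the corresponding layer of the unaugmented model.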

Figure 7:

The ratio of average difference in features under an augmentation (8) when the network is trained with that augmentation, to when it is trained without any data augmentation, using a ResNet trained on CIFAR-10, averaged over ten trials. We see that in all but the first one or two layers, the network trained with the augmentation is indeed more invariant to it, with the steepest increase in invariance in the first block of layers and in the last global average pooling layer.

Footnotes

1. We use k-NN as a simple example of a nonparametric classifier, but the result holds for any asymptotically Bayes classifier.

2. We focus on finite-dimensional feature maps for ease of exposition, but the analysis still holds for infinite-dimensional feature maps.

3. Code to reproduce experiments and plots is available at https://github.com/HazyResearch/augmentation_code

4. This works for any label-preserving non-parametric model.

5. This follows from the characterization of a positive definite matrix A by x⊤Ax > 0 for all x ≠ 0.

6. Here B(x, r) is the ball centered at x of radius r.

7. The intuition is that K measures the similarity between examples in Ω in terms of how hard it is to augment one to the other, and this distance is the same whether y is 1 or −1 in the label-preserving case. Formally, a K satisfying this condition exists because the Markov chain is not irreducible, and an appropriate π0 putting equal weights on y = ±1 can be chosen in Lemma 2. Note also that K((x1, 1), (x2, −1)) = K((x1, −1), (x2, 1)) = 0 by the same intuition. Formally, A is a block matrix with A((x1, y), (x2, −y)) = 0, so K = Π0(I(β + 1) − A)⁻¹ has the same property.

8. For example, suppose a lossy transform sends a, b, c → c, so that the rows of its transition matrix for a, b, and c all place probability 1 on c. It can be symmetrized by combining it with its inverse transformation and, if needed, adding a probability of not transitioning.

References

- [1].Bishop CM Training with noise is equivalent to tikhonov regularization. Neural computation, 7(1): 108–116, 1995. [Google Scholar]

- [2].Burges CJC Geometry and invariance in kernel based methods. Advances in Kernel Methods, pp. 89–116, 1999. [Google Scholar]

- [3].Chapelle O, Weston J, Bottou L, and Vapnik V Vicinal risk minimization In Leen TK, Dietterich TG, and Tresp V (eds.), Advances in Neural Information Processing Systems 13, pp. 416–422. MIT Press, 2001. [Google Scholar]

- [4].Cireşan DC, Meier U, Gambardella LM, and Schmidhuber J Deep, big, simple neural nets for handwritten digit recognition. Neural Computation, 22(12):3207–3220, 2010. [DOI] [PubMed] [Google Scholar]

- [5].Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, Moore S, Phillips S, Maffitt D, Pringle M, et al. The cancer imaging archive (TCIA): maintaining and operating a public information repository. Journal of digital imaging, 26(6):1045–1057, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Cristianini N, Shawe-Taylor J, Elisseeff A, and Kandola JS On kernel-target alignment. In Neural Information Processing Systems, 2002. [Google Scholar]

- [7].Cubuk ED, Zoph B, Mane D, Vasudevan V, and Le QV Autoaugment: Learning augmentation policies from data. arXiv preprint arXiv:180509501, 2018. [Google Scholar]

- [8].Decoste D and Schölkopf B Training invariant support vector machines. Machine Learning, 46(1): 161–190, 2002. [Google Scholar]

- [9].Demyanov S, Bailey J, Kotagiri R, and Leckie C Invariant backpropagation: how to train a transformation-invariant neural network. arXiv:150204434, 2015. [Google Scholar]

- [10].Dosovitskiy A, Springenberg JT, Riedmiller M, and Brox T Discriminative unsupervised feature learning with convolutional neural networks. In Neural Information Processing Systems, 2014. [DOI] [PubMed] [Google Scholar]

- [11].Dosovitskiy A, Fischer P, Springenberg JT, Riedmiller M, and Brox T Discriminative unsupervised feature learning with exemplar convolutional neural networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 38(9):1734–1747, 2016. [DOI] [PubMed] [Google Scholar]

- [12].Goodfellow I, Bengio Y, and Courville A Deep Learning. MIT Press, 2016. http://www.deeplearningbook.org. [Google Scholar]

- [13].Graham B Fractional max-pooling. arXiv:14126071, 2014. [Google Scholar]

- [14].Györfi L, Kohler M, Krzyzak A, and Walk H A distribution-free theory of nonparametric regression. Springer Science & Business Media, 2006. [Google Scholar]

- [15].Haasdonk B and Burkhardt H Invariant kernel functions for pattern analysis and machine learning. Machine Learning, 68(1):35–61, 2007. [Google Scholar]

- [16].He K, Zhang X, Ren S, and Sun J Identity mappings in deep residual networks. In European Conference on Computer Vision, 2016. [Google Scholar]

- [17].Heath M, Bowyer K, Kopans D, Moore R, and Kegelmeyer P The digital database for screening mammography. Digital mammography, pp. 431–434, 2000. [Google Scholar]

- [18].Krizhevsky A and Hinton G Learning multiple layers of features from tiny images. 2009. [Google Scholar]

- [19].LeCun Y, Bottou L, Bengio Y, and Haffner P Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998. [Google Scholar]

- [20].Lee RS, Gimenez F, Hoogi A, and Rubin D Curated breast imaging subset of ddsm. The Cancer Imaging Archive, 2016. [Google Scholar]

- [21].Leen T From data distributions to regularization in invariant learning. In Neural Information Processing Systems, 1995. [Google Scholar]

- [22].Lu X, Zheng B, Velivelli A, and Zhai C Enhancing text categorization with semantic-enriched representation and training data augmentation. Journal of the American Medical Informatics Association, 13(5):526–535, 2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Maurer A and Pontil M Empirical Bernstein bounds and sample variance penalization. In Conference on Computational Learning Theory, 2009. [Google Scholar]

- [24].Mroueh Y, Voinea S, and Poggio TA Learning with group invariant features: A kernel perspective. In Neural Information Processing Systems, 2015. [Google Scholar]

- [25].Muandet K, Fukumizu K, Dinuzzo F, and Schölkopf B Learning from distributions via support measure machines In Pereira F, Burges CJC, Bottou L, and Weinberger KQ (eds.), Advances in Neural Information Processing Systems 25, pp. 10–18. Curran Associates, Inc., 2012. [Google Scholar]

- [26].Muandet K, Fukumizu K, Sriperumbudur B, Schölkopf B, et al. Kernel mean embedding of distributions: A review and beyond. Foundations and Trends R in Machine Learning, 10(1–2):1–141, 2017. [Google Scholar]

- [27].Namkoong H and Duchi J Stochastic gradient methods for distributionally robust optimization with f-divergences. In Neural Information Processing Systems, 2016.

- [28].Namkoong H and Duchi JC Variance-based regularization with convex objectives. In Neural Information Processing Systems, 2017.

- [29].Rahimi A and Recht B Random features for large-scale kernel machines. In Neural Information Processing Systems, 2007.

- [30].Raj A, Kumar A, Mroueh Y, Fletcher PT, and Schölkopf B Local group invariant representations via orbit embeddings. International Conference on Artificial Intelligence and Statistics, 2017.

- [31].Ratner AJ, Ehrenberg H, Hussain Z, Dunnmon J, and Ré C Learning to compose domain-specific transformations for data augmentation. In Neural Information Processing Systems, 2017.

- [32].Rifai S, Dauphin YN, Vincent P, Bengio Y, and Muller X The manifold tangent classifier. In Neural Information Processing Systems, 2011.

- [33].Sajjadi M, Javanmardi M, and Tasdizen T Regularization with stochastic transformations and perturbations for deep semi-supervised learning. In Neural Information Processing Systems, 2016.

- [34].Schölkopf B, Burges C, and Vapnik V Incorporating invariances in support vector learning machines. In International Conference on Artificial Neural Networks, 1996.

- [35].Sietsma J and Dow RJ Creating artificial neural networks that generalize. Neural Networks, 4(1):67–79, 1991.

- [36].Simard P, Victorri B, LeCun Y, and Denker J Tangent prop: A formalism for specifying selected invariances in an adaptive network. In Neural Information Processing Systems, 1992.

- [37].Simard P, LeCun Y, Denker J, and Victorri B Transformation invariance in pattern recognition: Tangent distance and tangent propagation. Neural Networks: Tricks of the Trade, pp. 549–550, 1998.

- [38].Teo CH, Globerson A, Roweis ST, and Smola AJ Convex learning with invariances. In Neural Information Processing Systems, 2008.

- [39].Tibshirani R and Wasserman L Nonparametric regression and classification, 2018. URL http://www.stat.cmu.edu/~larry/=sml/NonparametricPrediction.pdf.

- [40].Uhlich S, Porcu M, Giron F, Enenkl M, Kemp T, Takahashi N, and Mitsufuji Y Improving music source separation based on deep neural networks through data augmentation and network blending. In International Conference on Acoustics, Speech and Signal Processing, 2017.

- [41].van der Wilk M, Bauer M, John S, and Hensman J Learning invariances using the marginal likelihood. In Bengio S, Wallach H, Larochelle H, Grauman K, Cesa-Bianchi N, and Garnett R (eds.), Advances in Neural Information Processing Systems 31, pp. 9960–9970. Curran Associates, Inc., 2018.

- [42].Zhang C, Bengio S, Hardt M, Recht B, and Vinyals O Understanding deep learning requires rethinking generalization. In International Conference on Learning Representations, 2017.

- [43].Zhao J, Li J, Zhao F, Yan S, and Feng J Marginalized CNN: Learning deep invariant representations. In British Machine Vision Conference, 2017.