Abstract

Rationale

The impact of neuroscience-based approaches for psychiatry on pragmatic clinical decision-making has been limited. Although neuroscience has provided insights into basic mechanisms of neural function, these insights have not improved the ability to generate better assessments, prognoses, diagnoses, or treatment of psychiatric conditions.

Objectives

To integrate the emerging findings in machine learning and computational psychiatry to address the question: what measures that are not derived from the patient’s self-assessment or the assessment by a trained professional can be used to make more precise predictions about the individual’s current state, the individual’s future disease trajectory, or the probability to respond to a particular intervention?

Results

Currently, the ability to use individual differences to predict differential outcomes is very modest, possibly because the effect sizes of interventions are small. There is emerging evidence of genetic and neuroimaging-based heterogeneity of psychiatric disorders, which contributes to imprecise predictions. Although the use of machine learning tools to generate clinically actionable predictions is still in its infancy, these approaches may identify subgroups enabling more precise predictions. In addition, computational psychiatry might provide explanatory disease models based on faulty updating of internal values or beliefs.

Conclusions

There is a need for larger studies, clinical trials that use predictions from machine learning or computational psychiatry model parameters as actionable outcomes, comparisons of alternative explanatory computational models, and translational approaches that apply similar paradigms and models in humans and animals.

Keywords: Computational psychiatry, Machine learning, Prediction, Models, Reinforcement learning

The challenges in psychiatry

The search for objective markers in psychiatry has been elusive. The basic question that needs to be addressed is: what measures that are not derived from the patient’s self-assessment or the assessment by a trained professional can be used to make more precise predictions about the individual’s current state, the individual’s future disease trajectory, or the probability to respond to a particular intervention? Addressing this question can provide directions for programs of research that would make a major difference for the patient, the mental health provider, the payer, or policy makers even in the face of our limited understanding of how psychiatric disorders emerge as consequences of brain dysfunctions. Here, we aim to bring together the emerging findings in machine learning and computational psychiatry to address the question raised above. First, we highlight some of the explanatory and predictive challenges in psychiatry that make a general solution or answer difficult. Second, we frame the pragmatic aspect of research in psychiatry within the framework of risk prediction models. Third, we discuss the differences between machine learning approaches and computational modeling approaches and what these different aspects of computational psychiatry can contribute to solving the basic question. Finally, we review the novel insights from studies conducted over the past three years in the field of machine learning and computational psychiatry. These findings will be used to make recommendations for future directions.

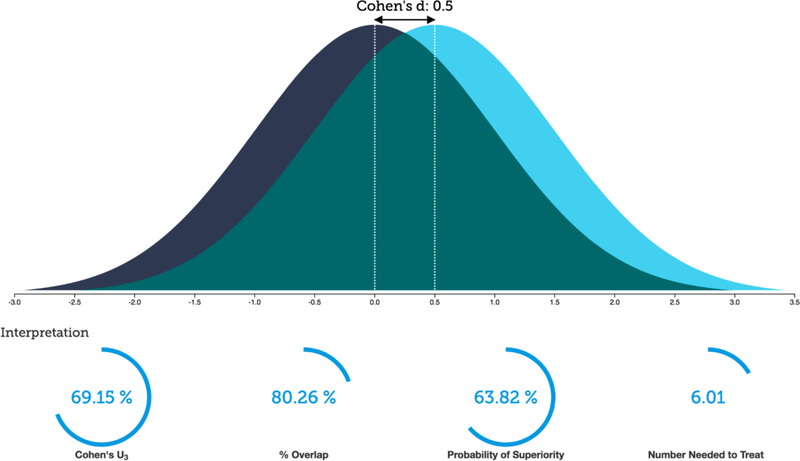

An important first step to determine how machine learning and computational psychiatry might improve our ability to make clinically meaningful predictions is to delineate the strength of associations among variables of interest or responses to intervention. In a recent investigation of associations focusing on individual difference research based on 87 meta-analyses across six journals yielding 708 meta-analytically derived correlations, the authors (Gignac and Szodorai 2016) reported a median correlation coefficient of 0.19. This means that, on average, knowledge about an individual difference variable helps to explain about 3.6% of the variance in a dependent measure that we care to make predictions about. In comparison, a large study (Bosco et al. 2015) examining 147,328 correlational effect sizes in applied psychology reported a median effect size of 0.16, i.e. one measure explaining about 2.6% of the variance of the other measure. When it comes to interventions, a meta-synthesis of 62 meta-analyses of behavioral interventions focused on health behavior change (Johnson et al. 2010) yielded Cohen’s d effect sizes between 0.08 and 0.45. In other words, for these interventions 53–67% of the treatment group would be above the mean of the control group, 97–82% of the two groups would overlap, and there would be a 52–62% chance that a person picked at random from the treatment group would have a higher score than a person picked at random from the control group. If one were to assume a 20% placebo response rate, one would need to treat 6–43 individuals to have one more favorable outcome in the treatment group compared to the control group (number needed to treat; see https://rpsychologist.com/d3/cohend/ for more details). In another meta-synthesis focused on psychiatric medications based on 61 meta-analyses including 21 psychiatric disorders, the authors reported an average medication effect size of 0.5, i.e. 
in this case 69% of the treatment group would be above the mean of the control group (Cohen’s U3), there would be an overlap of 80% of the two groups, and there would be a 64% chance that a person picked at random from the treatment group would have a higher (or better) score than a person picked at random from the control group (Probability of Superiority, see also Figure 1). In addition, one would need to treat 6 people to have one more favorable outcome in the treatment group compared to the control group. These results support three main conclusions. First, the ability to predict or relate an individual difference variable to an outcome is very modest, i.e. such a variable explains a single-digit percentage of the variance. Second, the effect sizes of interventions are such that individual level outcome differences are small. Third, the aim of machine learning and computational psychiatry needs to be to utilize individual difference measures to better separate outcome variables such that providers can make specific recommendations to patients.
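
The conversions quoted above can be reproduced with a few lines of code. The following is a minimal sketch that assumes two normal distributions with equal variance, which is the assumption behind the interactive tool referenced above; the function names are illustrative and do not come from any of the cited studies. The number needed to treat uses the conversion from Cohen’s d and a control event rate, which is our reading of how the figures in the text were obtained.

```python
from math import sqrt
from statistics import NormalDist

# Standard normal distribution (mean 0, SD 1) used for all conversions.
_STD_NORMAL = NormalDist()


def variance_explained(r: float) -> float:
    """Proportion of variance shared by two measures with correlation r."""
    return r * r


def cohens_u3(d: float) -> float:
    """Share of the treatment group scoring above the control-group mean."""
    return _STD_NORMAL.cdf(d)


def overlap(d: float) -> float:
    """Overlapping proportion of the treatment and control distributions."""
    return 2.0 * _STD_NORMAL.cdf(-abs(d) / 2.0)


def probability_of_superiority(d: float) -> float:
    """Chance that a random treated person outscores a random control."""
    return _STD_NORMAL.cdf(d / sqrt(2.0))


def number_needed_to_treat(d: float, control_event_rate: float) -> float:
    """NNT for effect size d, given the favorable-outcome rate in controls."""
    treated_rate = _STD_NORMAL.cdf(d + _STD_NORMAL.inv_cdf(control_event_rate))
    return 1.0 / (treated_rate - control_event_rate)


if __name__ == "__main__":
    print(f"r = 0.19 explains {variance_explained(0.19):.1%} of the variance")
    for d in (0.08, 0.45, 0.5):
        print(
            f"d = {d}: U3 = {cohens_u3(d):.0%}, overlap = {overlap(d):.0%}, "
            f"superiority = {probability_of_superiority(d):.0%}, "
            f"NNT at 20% control rate = {number_needed_to_treat(d, 0.20):.1f}"
        )
```

The sketch makes explicit that all four statistics are deterministic functions of the same effect size, so they convey one piece of information in different clinical vocabularies.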

Figure 1:

Typical effect size of pharmacological treatment in psychiatry.

Several explanations have been proposed for these modest base-rate effect sizes. First, there is evidence from other neurological disorders that even in the case of a single gene mutation, the phenomenology can be complex. For example, Huntington’s disease, which is caused by a CAG repeat expansion in the huntingtin gene, shows considerable clinical phenotypic heterogeneity, which has prompted a search for biomarkers to refine the prognosis, progression, and treatment response of the disorder (Ross et al. 2014). On the other hand, motor neuron diseases are an etiologically heterogeneous group of disorders that are characterized by muscle weakness and/or spastic paralysis (Dion et al. 2009). Thus, considering the relationship between clinical presentation and underlying biology, there is a one-to-many mapping in the case of Huntington’s disease and a many-to-one mapping for motor neuron diseases. Similar genetic heterogeneity has been reported for constructs of fundamental importance in psychiatry. There is growing evidence that different psychiatric disorders have overlapping genetic etiologies (Schork et al. 2019). Moreover, genetic analyses of neuroticism (Hall et al. 2018; Smith et al. 2016) have identified genome-wide significant loci that help to understand the genetic architecture of this construct. However, a more refined look at the individual items of neuroticism provided evidence for a genetically heterogeneous basis (Nagel et al. 2018) of a phenomenologically homogeneous psychological construct, which may explain the weak association between the underlying genetic architecture and psychiatric phenomenology. This heterogeneity has been extended recently to neuroimaging approaches (Wolfers et al. 2019; Wolfers et al. 2018). 
Briefly, in both bipolar disorder and schizophrenia, structural neuroimaging characteristics of individual subjects showed greater variability within diagnostic groups than among comparison subjects, which prompted the investigators to suggest that the idea of the average patient is a noninformative construct in psychiatry. These findings raise a deep conundrum in psychiatric research in general and for a program of research aimed at making individual level predictions in particular. Specifically, if the current diagnostic categories are not aggregating homogeneous subject populations and if these categories or even the dimensional extensions along a few constructs show limited utility as prior knowledge, what should be the basis for individual level predictions? Moreover, if symptom severity and other phenomenological constructs are determined by many different biological processes, what is the appropriate target prediction variable, i.e. what is pragmatically useful posterior knowledge?

Some investigators have emphasized that psychiatric disorders are “pluralistic”, involve “multiple levels”, and are “multi-causal” (Kendler 2008), which suggests that there are no simple explanatory models in psychiatry. Moreover, the need to find causal relationships for psychiatric disorders across biological, psychological and social-environmental domains challenges simplistic nosological frameworks (Kendler 2017a). Thus, it should not be surprising that there is no consensus among the scientific community when it comes to explanatory models for psychiatric disorders. On the one hand, some investigators propose to explain psychiatric disorders based on highly reduced basic neuroscience models, which are thought to provide novel targets for intervention (Casey et al. 2013). Others have suggested that explanations may not be possible because experiential signs and symptoms are emergent properties and cannot be reduced to underlying mechanistic dysfunctions (Kendler 2014). Explanatory models are based on mechanisms, which in turn are tightly linked to causation. A cause can be defined as an antecedent event, condition, or characteristic that was necessary for the occurrence of the disease at the moment it occurred, given that other conditions are fixed (Rothman and Greenland 2005). A mechanism is, roughly speaking, a set of entities and activities that are spatially, temporally, and causally organized in such a way that they exhibit the phenomenon to be explained (Menzies 2012). Kendler proposed, in alignment with Marr’s computational hierarchy (Marr 1982), that explanatory models need to address (a) the goal or purpose of the process, (b) the basic elements of the process and how an algorithm operates on them, and (c) how this process is implemented physically. 
Moreover, based on Hill’s criteria on causation, Kendler emphasizes that good explanations have to be judged based on their strength of evidence, causal confidence, generalizability, specificity, manipulability, proximity to the observed psychological phenomena, and generativity, i.e. the ability to provide a comprehensive account of the current state (Kendler 2012). Thus, an empirically based causative framework is fundamental to provide explanatory models in psychiatry.

Explanatory models are important for patients, providers and families, who want to understand how psychiatric disorders emerge, how these disorders wax and wane, and how interventions improve these disorders or provide permanent cures (Channa and Siddiqi 2008). Psychiatric disorders are grounded in mental, first-person experiences, are etiologically complex (Kendler 2005), and can be conceptualized as having a distinct course and characteristic symptoms (Kendler and Engstrom 2017). Thus, researchers need to be able to provide empirically based explanatory models of psychiatric diseases that address these features and the stakeholders’ concerns. However, it is also important not to substitute specific experiences and symptoms for the disorder but merely to take these elements as possible instantiations of an underlying latent process (Kendler 2017b). Yet, latent variable models may also have significant limitations as an appropriate conceptualization of psychiatric disorders (Zachar and Kendler 2017). A pragmatic view (Brendel 2003) is that explanatory models in psychiatry reflect what clinicians deem valuable in rendering people’s behavior intelligible and thus help guide treatment choices for mental illnesses. Taken together, there is a pragmatic need for developing evidence-based explanatory models in psychiatry that help stakeholders to better understand these illnesses and adapt their lives accordingly.

Risk Prediction Model Framework and Computational Psychiatry

A complementary approach to the elusive search for causal and explanatory models in psychiatry is to embed a program of research into a risk prediction framework. Risk prediction models use predictors (covariates) to estimate the absolute probability or risk that a certain outcome is present (diagnostic prediction model) or will occur within a specific time period (prognostic prediction model) in an individual with a particular predictor profile (Moons et al. 2012b). The components of risk prediction (Gerds et al. 2008) consist of (a) a sample of n subjects, (b) a set of k markers, which are obtained for each subject, (c) an individual subject status at some later time t, which can be a scalar or vector variable, and finally (d) a model that takes the sample and markers and assigns a probability p of the status at time t for each individual. To be useful, a prediction model must provide validated and accurate estimates of the risks, and the uptake of those estimates should improve subject (self-) management and therapeutic decision-making, and consequently, (relevant) individuals’ outcomes and cost-effectiveness of care (Moons et al. 2012a). Risk prediction models can be derived with many different statistical approaches. To compare them, measures of predictive performance are often derived from ROC methodology and from probability forecasting theory. These tools can be applied to assess single markers, multivariable regression models and complex model selection algorithms (Gerds et al. 2008). The outcome probabilities or level of risk and other characteristics of prognostic groups are the most salient statistics for review and perhaps meta-analysis. Reclassification tables, i.e. risk prediction model-based categorization of individual cases, can help determine how a prognostic test affects the classification of patients into different prognostic groups, hence their treatment (Rector et al. 2012). 
The aim of risk-prediction models is to robustly delineate predictively meaningful dimensional or categorically identified subgroups. Thus, the identification of these subgroups can be used to resolve statistically the many-to-one or one-to-many mappings mentioned above.
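
To make the two families of performance measures concrete, the sketch below computes one measure from ROC methodology (the area under the ROC curve, a measure of discrimination) and one from probability forecasting theory (the Brier score, which reflects the accuracy of the predicted probabilities). The predicted risks and observed statuses are hypothetical values chosen for illustration only, and the function names are our own.

```python
from typing import Sequence


def brier_score(risks: Sequence[float], statuses: Sequence[int]) -> float:
    """Mean squared difference between predicted risk p and observed 0/1 status."""
    return sum((p - y) ** 2 for p, y in zip(risks, statuses)) / len(risks)


def roc_auc(risks: Sequence[float], statuses: Sequence[int]) -> float:
    """Probability that a random case is assigned a higher risk than a random
    non-case, counting ties as one half."""
    cases = [p for p, y in zip(risks, statuses) if y == 1]
    non_cases = [p for p, y in zip(risks, statuses) if y == 0]
    wins = 0.0
    for case_risk in cases:
        for non_case_risk in non_cases:
            if case_risk > non_case_risk:
                wins += 1.0
            elif case_risk == non_case_risk:
                wins += 0.5
    return wins / (len(cases) * len(non_cases))


# Hypothetical model output for six subjects: predicted probability of the
# status at time t, and the status that was actually observed.
predicted_risk = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
status_at_t = [1, 1, 1, 0, 0, 0]

if __name__ == "__main__":
    print(f"AUC = {roc_auc(predicted_risk, status_at_t):.3f}")
    print(f"Brier score = {brier_score(predicted_risk, status_at_t):.3f}")
```

A model can discriminate well and still assign poorly calibrated probabilities, which is one reason to report both kinds of measure.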

Competing risk prediction models can be compared with well-operationalized statistical approaches (Cook 2007). In addition, if the overall fit for one model over another is better but general calibration and discrimination are similar, one can assess whether the fit would be better among individuals of special interest. This would help to determine how many individuals would be reclassified in clinical risk categories and whether the new risk category is more accurate for those reclassified. Finally, one can assess the utility of the risk prediction model if it is based on an invasive or expensive biomarker, by determining whether a higher or lower estimated risk would change treatment decisions for the individual subject. This general approach is similar to the one proposed by Pencina (Pencina and D’Agostino 2012), who argued that the incremental predictive value of a new marker should be based on its potential in reclassification and discrimination. In that sense, new potentially predictive (bio)markers should be assessed on their added value to existing prediction models or predictors, rather than simply being tested on their predictive ability alone (Moons et al. 2012b). The ultimate test of the effectiveness of a risk prediction tool, like any other intervention, is a randomized clinical trial in which providers are randomized to use the prediction tool in addition to usual care versus usual care alone to generate clinically actionable decisions (Scott and Greenberg 2010). In summary, the risk prediction model framework has a number of advantages over a mechanistic framework: a clear utilitarian approach, sound statistical background, a framework of iterative improvement, and the ability to ultimately connect with and coexist with a mechanistic understanding of psychiatric disease.
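
One common way to quantify reclassification is the categorical net reclassification improvement. The sketch below is a minimal illustration of that statistic; the text above does not name a specific index, so its choice here is an assumption. The risk categories (0 = low, 1 = intermediate, 2 = high) and all data values are hypothetical.

```python
from typing import Sequence


def net_reclassification_improvement(
    old_category: Sequence[int],
    new_category: Sequence[int],
    outcomes: Sequence[int],
) -> float:
    """Categorical NRI: net share of cases moved to a higher risk category
    plus net share of non-cases moved to a lower risk category."""
    up_cases = down_cases = n_cases = 0
    up_non_cases = down_non_cases = n_non_cases = 0
    for old, new, outcome in zip(old_category, new_category, outcomes):
        if outcome == 1:
            n_cases += 1
            up_cases += int(new > old)
            down_cases += int(new < old)
        else:
            n_non_cases += 1
            up_non_cases += int(new > old)
            down_non_cases += int(new < old)
    case_part = (up_cases - down_cases) / n_cases
    non_case_part = (down_non_cases - up_non_cases) / n_non_cases
    return case_part + non_case_part


# Hypothetical example: risk categories from an existing model, categories
# after adding a new marker, and the observed outcome (1 = event occurred).
existing_model = [1, 1, 2, 0, 1, 1, 0, 2]
with_new_marker = [2, 1, 1, 1, 0, 1, 0, 1]
observed = [1, 1, 1, 1, 0, 0, 0, 0]

if __name__ == "__main__":
    nri = net_reclassification_improvement(existing_model, with_new_marker, observed)
    print(f"NRI = {nri:.2f}")
```

A positive value indicates that, on balance, the new marker moves individuals into risk categories that better match their eventual outcome; whether that changes treatment decisions is a separate question.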

Computational psychiatry is a young field that uses computational approaches to advance our understanding of mental health and to develop practical applications that improve treatment outcomes for patients (Huys et al. 2016). A growing number of researchers from a wide range of disciplines related to psychiatry, including machine learning, computational neuroscience, neuroimaging, cognitive psychology and others, are attracted by the challenge of applying sophisticated mathematical tools and the relevance of using these tools to alleviate patients’ suffering. Broadly speaking, computational psychiatry includes researchers who aim to use machine learning approaches to make advances in pragmatic academic psychiatry (Paulus 2017), i.e. who want to robustly and accurately predict individual patients’ states. Alternatively, others aim to use process models (e.g. Hernaus et al. 2018; Powers et al. 2017) that are able to better and more quantitatively explain the dysfunctions observed in psychiatric patients and that provide measures that can predict patients’ future states (Stephan et al. 2017).

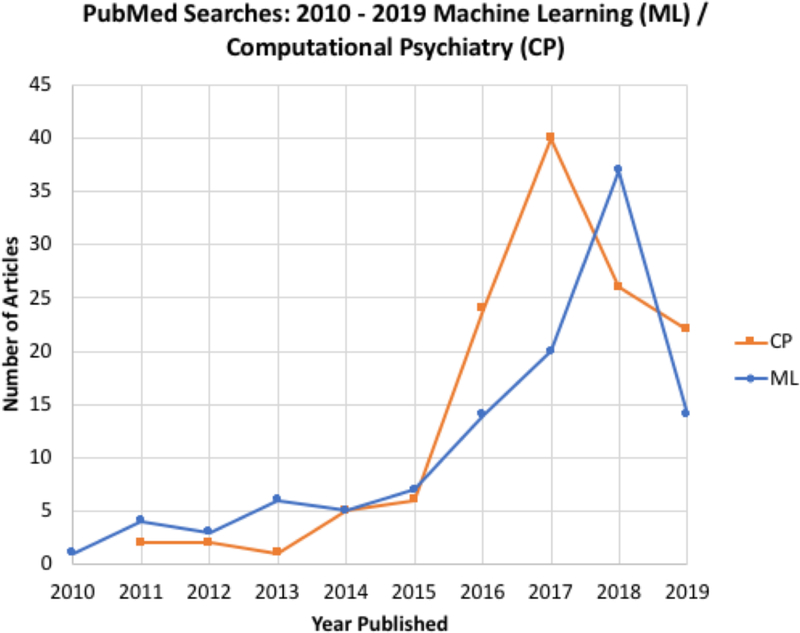

Computational Psychiatry: Machine Learning

A review of the machine learning (ML) literature in psychiatry over the past three years ((((machine learning [Title/Abstract]) AND psychiatry[Title/Abstract])) AND (“2011/1/1”[Date - Entrez] : “3000”[Date - Entrez]) accessed 4/25/2019 yielding 112 references) shows a rapid increase in the number of publications that have used both clinical and neuroimaging data to generate individual-level outcome predictions (see also Figure 2). A significant roadblock to obtaining robust prediction estimates is the heterogeneity of psychiatric disorders (Wolfers et al. 2019; Wolfers et al. 2018). Some have proposed that ML approaches may actually help to identify robust subgroups and that this could help to improve our understanding of the neurobiology of mental disorders (Schnack 2017). Recent reviews have noted that there is growing evidence for the prognostic capability of machine learning-based models using neuroimaging (Janssen et al. 2018). At the same time, there is also the recognition that because of small sample sizes and the lack of independent validation the reported accuracies may be too optimistic. In a systematic, qualitative review of risk prediction models for depression, bipolar disorder, generalized anxiety disorder, post-traumatic stress disorder, and psychotic disorders, the authors noted that this approach is feasible and is yielding prediction accuracies that approach clinical utility. However, they also pointed out that more large-scale, longitudinal studies are needed, emphasized the need to carry out external validations of risk prediction models, raised the need for testing potential selective and indicated preventive interventions, and suggested that these models needed to be evaluated in the context of risk stratification using risk prediction models (Bernardini et al. 2017). 
The need for additional validation procedures to assess the generalizability of the models, which will require large samples, has been echoed by others (Schnack and Kahn 2016). The main limitation of using neuroimaging as a predictor tool is its cost and problematic scalability. New and emerging trends in risk prediction models have included other measurement domains. For example, studies investigating blood biomarkers such as measures of inflammatory pathways and immune markers may help to predict stable treatment response (Busch and Menke 2019). Some investigators have used relatively inexpensive assessments including self-report, census variables and insurance data to predict the emergence of PTSD 3 months after trauma hospitalization with reasonable success (Papini et al. 2018). In comparison, the clinical utility of genetic risk prediction is still low and more diverse samples of genome-wide association studies have been proposed to provide better predictive measures (Martin et al. 2018). Genetic markers could be used to help an individual find out how likely he or she is to develop a particular disease (Torkamani et al. 2018) or a combination of genetic and environmental assessments could be used for early identification of individuals at risk for depression (Kohler et al. 2018). On the other hand, there have been several examples of using medical record information to make outcome predictions. However, the problem is that electronic medical records do not systematically collect information that would be necessary for large observational treatment studies (Kessler et al. 2017). Although there is optimism about the use of machine learning tools with clinical data to generate individual level predictions, none of these approaches have been sufficiently well developed to be clinically actionable. There are at least three steps that would need to be taken to move this field forward. 
First, future prediction studies should be pre-registered with explicitly defined machine learning approaches, prespecified independent and dependent variables, and clearly specified populations. Second, all models generated by these tools need to be validated in a completely independent sample, which has not been used to generate similar predictions. Third, the utility of the prediction model will need to be tested in a pre-registered randomized controlled trial in which predictions are generated for some individuals and not for others. Thus, this information needs to be tied to clinical actions and outcome variables. Finally, the emergence of ML algorithms that minimize bias from confounding variables is an encouraging development (Johansson et al. 2016; Wager and Athey 2018). Taken together, the application of ML tools to generate clinically actionable predictions is still in its infancy and much of the literature is focused on proof-of-concept studies. The utility of these approaches will depend on whether it can be shown that ML-based predictions save lives, improve patients faster and more completely, and save money.
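
The concern that accuracies are too optimistic without independent validation can be illustrated with a small simulation. The sketch below is a toy example under stated assumptions: the markers are pure noise, the labels carry no signal, and a deliberately simple nearest-centroid classifier stands in for any ML method. None of the names or settings come from the studies cited above.

```python
import random
from typing import List, Tuple

Sample = Tuple[List[List[float]], List[int]]


def make_noise_sample(n_subjects: int, n_markers: int, rng: random.Random) -> Sample:
    """Markers are random noise and labels are balanced but unrelated to the
    markers, so no classifier can truly do better than chance."""
    markers = [[rng.gauss(0.0, 1.0) for _ in range(n_markers)] for _ in range(n_subjects)]
    labels = [i % 2 for i in range(n_subjects)]
    return markers, labels


def centroid(rows: List[List[float]]) -> List[float]:
    """Column-wise mean of a list of marker vectors."""
    return [sum(column) / len(rows) for column in zip(*rows)]


def squared_distance(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit_nearest_centroid(markers: List[List[float]], labels: List[int]):
    """Return the mean marker profile of each class."""
    class0 = centroid([m for m, y in zip(markers, labels) if y == 0])
    class1 = centroid([m for m, y in zip(markers, labels) if y == 1])
    return class0, class1


def accuracy(model, markers: List[List[float]], labels: List[int]) -> float:
    """Share of subjects assigned to the class whose centroid is closer."""
    class0, class1 = model
    hits = sum(
        (squared_distance(m, class1) < squared_distance(m, class0)) == bool(y)
        for m, y in zip(markers, labels)
    )
    return hits / len(labels)


rng = random.Random(0)
train_markers, train_labels = make_noise_sample(20, 200, rng)    # small study
test_markers, test_labels = make_noise_sample(2000, 200, rng)    # independent sample
model = fit_nearest_centroid(train_markers, train_labels)
in_sample_accuracy = accuracy(model, train_markers, train_labels)
independent_accuracy = accuracy(model, test_markers, test_labels)

if __name__ == "__main__":
    print(f"accuracy in the training sample: {in_sample_accuracy:.2f}")
    print(f"accuracy in the independent sample: {independent_accuracy:.2f}")
```

With few subjects and many markers, the accuracy in the training sample is typically far above chance even though the data contain no signal, whereas accuracy in the independent sample stays close to 50%. This is the pattern that external validation is meant to expose.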

Figure 2:

Publications in machine learning and computational psychiatry.

Computational Psychiatry: Models

The application of computational models to psychiatry (Friston et al. 2014) is a growing field ((((computational psychiatry[Title/Abstract]) AND psychiatry[Title/Abstract])) AND (“2011/1/1”[Date - Entrez] : “3000”[Date - Entrez]) accessed 4/25/2019 yielded 128 references). Computational models are a heterogeneous group of mathematical approaches, which aim to explain observations based on optimizing some constraints such as a cost function. Several advances have occurred over the past decade explaining the resurgence of this field. First, various types of reinforcement learning models (Schultz et al. 1997), which quantify the degree of surprise given the expectation of a reward or punishment, have helped to clearly link specific brain structures to behavior (Daw and Doya 2006; Huys et al. 2015). Second, the availability of open source code (Stephan and Mathys 2014) to apply different modeling approaches to behavioral and neuroimaging data has facilitated the broader uptake of these techniques. Third, some recent examples of how psychopathology can be recast as a computational processing problem (Browning et al. 2015; Powers et al. 2017) provide evidence that these models can be used as explanatory frameworks to help patients understand their symptoms. Fourth, there is some evidence that a computationally informed analytic approach can improve split-half reliability and modestly improve test-retest reliability (Price et al. 2019).

Active inference is a theory based on belief propagation via selection of actions aimed to minimize the amount of surprise (Friston et al. 2017a). This approach can be used to develop a model that generates symptoms, signs, and diagnostic outcomes from latent psychopathological states. In turn, psychopathology is caused by pathophysiological processes that are perturbed by (etiological) causes such as predisposing factors, life events, and therapeutic interventions (Friston et al. 2017b). On the other hand, the dynamic evolution of systems composed of both dynamic and random effects can be studied with stochastic dynamic models. These models can be used to integrate empirical observations with models of perception and behavior (Roberts et al. 2017a). Emerging research in this field includes phenomenological models of mood fluctuations in bipolar disorder and biophysical models of functional imaging data in psychotic and affective disorders (Roberts et al. 2017b). Some investigators have highlighted the importance of the ability of data generated by candidate models to falsify models and therefore support the specific claims about cognitive function (Palminteri et al. 2017). Others have argued that by embedding semantically interpretable computational models of brain dynamics or behavior into a statistical machine learning context, insights into dysfunction beyond mere prediction and classification may be gained (Durstewitz et al. 2019).

There have been a number of experiments focused on establishing specific computational processing errors in psychiatric patient populations. These have focused principally on three different areas. First, reward learning paradigms have been used to examine prediction error processing characteristics. Dysfunction of self-assessment is an important aspect of mood disorders, a subject population that frequently shows dysfunction in ventral anterior cingulate and ventromedial prefrontal cortex. For example, using a social feedback paradigm to examine self-esteem during functional MRI, investigators found an association between social prediction errors and activity in ventral striatum as well as in the subgenual anterior cingulate cortex (Will et al. 2017). Moreover, updates in self-esteem resulting from these errors were associated with activity in ventromedial prefrontal cortex, a brain area that has been implicated in self-related value processing. Schizophrenia subjects have difficulty computing the value difference between two options, which is associated with value-based learning deficits (Hernaus et al. 2018). Although subjects with post-traumatic stress disorder do not show primary deficits in processing prediction errors, these individuals have difficulty adjusting the impact of these prediction errors based on the variability of the context, which has been interpreted as reflecting increased attention-based learning (Brown et al. 2018). Some investigators have examined state-dependent learning effects, which are particularly important for substance use disorders. For example, during cocaine deprivation, cocaine-dependent individuals show increased positive learning rates that go together with heightened neural positive prediction error (Wang et al. 2018). In moderately dependent smokers, learning from positive prediction error signals was reduced during smoking abstinence and enhanced following cigarette consumption. 
However, learning from negative prediction error signals was enhanced during smoking abstinence and reduced following cigarette consumption (Baker et al. 2018). These findings are beginning to parse behavioral dysfunctions in psychiatric disorders as possibly state-dependent computational failure modes. The field is still at an early stage and many of the results are based on small samples, which limits generalizability. However, faulty updating of internal values or beliefs, which might be context- and state-dependent, is emerging as one of the central dysfunctions in psychiatric populations. Nevertheless, these dysfunctions have yet to be translated into individually specific intervention recommendations or used in risk prediction models.
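
The notion of separate learning from positive and negative prediction errors can be sketched with a simple delta-rule update. The example below is a generic illustration, not the specific model fitted in any of the studies cited above; the parameter names `alpha_pos` and `alpha_neg` and the outcome sequence are hypothetical.

```python
from typing import List, Sequence, Tuple


def update_value(
    value: float, outcome: float, alpha_pos: float, alpha_neg: float
) -> Tuple[float, float]:
    """One delta-rule step with separate learning rates for better-than-expected
    (positive) and worse-than-expected (negative) prediction errors."""
    prediction_error = outcome - value
    learning_rate = alpha_pos if prediction_error >= 0 else alpha_neg
    return value + learning_rate * prediction_error, prediction_error


def learn(
    outcomes: Sequence[float],
    alpha_pos: float,
    alpha_neg: float,
    initial_value: float = 0.0,
) -> List[float]:
    """Return the expected value after each observed outcome."""
    value = initial_value
    trajectory = []
    for outcome in outcomes:
        value, _ = update_value(value, outcome, alpha_pos, alpha_neg)
        trajectory.append(value)
    return trajectory


# Hypothetical reward sequence (1 = reward, 0 = no reward).
outcomes = [1, 0, 1, 1, 0]

# An agent that learns mostly from positive prediction errors ...
optimistic = learn(outcomes, alpha_pos=0.5, alpha_neg=0.1)
# ... and one that learns mostly from negative prediction errors.
pessimistic = learn(outcomes, alpha_pos=0.1, alpha_neg=0.5)

if __name__ == "__main__":
    print("high positive learning rate:", [round(v, 3) for v in optimistic])
    print("high negative learning rate:", [round(v, 3) for v in pessimistic])
```

The same sequence of outcomes leads to very different value estimates depending on the balance of the two learning rates, which is how a state-dependent shift in these parameters could change behavior without any change in the environment.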

Until recently, computational models of avoidance learning have received less attention than similar models for reward-related processing. In some of these tasks, participants are exposed to primary aversive stimuli and given the opportunity to escape or avoid. These paradigms can be used to inform models of reinforcement learning and drift-diffusion, which together capture effects of approach/avoidance in choice behavior as well as variability of response latency (Millner et al. 2017) based on experienced consequences (Bach 2017). There is some indication that anxious individuals show a greater reliance on a model parameter that characterizes a prepotent bias to withhold responding during negative outcomes (Mkrtchian et al. 2017a), which might explain why anxiety promotes avoidant behavior (Mkrtchian et al. 2017b). Taken together, these models provide evidence for computational failure modes, which can serve as explanatory disease models, e.g. smokers’ motivated behavior might be driven by an excessive incentive to act to avoid an impending withdrawal state.

In the context of a Bayesian cognitive updating process, i.e. when an individual holds a certain belief and updates it based on the likelihood of the data given the belief and the prior probability of the belief, some have argued that this updating is biased due to the subjective perception of probabilities in humans (Matsumori et al. 2018). These distortions of Bayesian inference help to explain psychological decision biases and impairments in cognitive control (Matsumori et al. 2018). Thus, a disorder can be understood as the growth of maladaptive beliefs, possibly because more benign alternative schemas are discounted during belief updating (Moutoussis et al. 2018). Some have found that while the acquisition of the priors was intact in schizophrenia subjects, autistic traits were associated with weaker influence of expectations, possibly due to more precise sensory representations (Karvelis et al. 2018). Others have proposed that psychotic symptoms such as auditory hallucinations may result from increased prior expectations of sensing voices (Powers et al. 2017). A disconnect between belief updating and action selection has been observed in subjects with obsessive compulsive disorder, who seem to develop an accurate internal model of the environment but fail to use it to guide behavior (Vaghi et al. 2017).
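
The role of the prior in such accounts can be made concrete with Bayes’ rule for a binary belief. The following is a minimal sketch; the likelihood and prior values are hypothetical and are not estimates from the cited studies.

```python
def posterior_belief(
    prior: float, likelihood_if_true: float, likelihood_if_false: float
) -> float:
    """Bayes' rule for a binary belief: probability that the belief is true
    after observing data with the given likelihoods under each alternative."""
    evidence = likelihood_if_true * prior + likelihood_if_false * (1.0 - prior)
    return likelihood_if_true * prior / evidence


# Hypothetical ambiguous sensory input: only slightly more probable if a
# voice is actually present (0.6) than if it is absent (0.4).
LIKELIHOOD_VOICE = 0.6
LIKELIHOOD_NO_VOICE = 0.4

# The same input combined with a weak and with a strong prior expectation.
weak_prior_posterior = posterior_belief(0.1, LIKELIHOOD_VOICE, LIKELIHOOD_NO_VOICE)
strong_prior_posterior = posterior_belief(0.7, LIKELIHOOD_VOICE, LIKELIHOOD_NO_VOICE)

if __name__ == "__main__":
    print(f"posterior with a weak prior (0.1): {weak_prior_posterior:.2f}")
    print(f"posterior with a strong prior (0.7): {strong_prior_posterior:.2f}")
```

With identical sensory evidence, the strong prior yields a posterior well above one half while the weak prior leaves it low, which is the sense in which an increased prior expectation alone could produce a percept.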

These examples show that there is accumulating evidence of process dysfunctions that affect different aspects of behavior acquired via positive or negative reinforcement as well as Pavlovian conditioning. There are, however, still several shortcomings that need to be considered. First, these studies often involve case-control designs, which have limited explanatory depth and often cannot resolve the specificity of the finding. Second, studies rarely compare models to determine whether alternative explanations can provide a better description of the data. Thus, there is some uncertainty whether the process dysfunction as proposed by the underlying model is the best possible explanation. Third, as pointed out above, it is very likely that psychiatric disorders are highly heterogeneous and that there is no uniform dysfunction. However, most studies use diagnostic labels or domain dysfunctions (e.g. anhedonia) as a monolithic label. Much larger studies will be necessary to address these issues, which has prompted some to propose a phased approach not unlike what has been used to develop new pharmacological agents (Paulus et al. 2016). Such a pipeline could be used both to refine machine learning models and computational models and help to move the field toward precision psychiatry.
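
Comparing alternative models can be done with standard information criteria that trade goodness of fit against model complexity. The sketch below uses the Bayesian information criterion as one possible choice, which is an assumption on our part since no criterion is specified above. The log-likelihoods, parameter counts, and trial number are hypothetical placeholders rather than results from any study.

```python
from math import log
from typing import Dict, Tuple


def bic(log_likelihood: float, n_parameters: int, n_observations: int) -> float:
    """Bayesian information criterion; lower values indicate a better
    balance between fit and number of free parameters."""
    return n_parameters * log(n_observations) - 2.0 * log_likelihood


def preferred_model(fits: Dict[str, Tuple[float, int]], n_observations: int) -> str:
    """Return the name of the model with the lowest BIC.

    `fits` maps a model name to (maximized log-likelihood, parameter count).
    """
    return min(fits, key=lambda name: bic(fits[name][0], fits[name][1], n_observations))


# Hypothetical fits of two candidate accounts to the same 200 choices.
N_TRIALS = 200
candidate_fits = {
    "reinforcement_learning": (-120.0, 2),
    "belief_updating": (-117.0, 4),
}

if __name__ == "__main__":
    for name, (log_lik, n_params) in candidate_fits.items():
        print(f"{name}: BIC = {bic(log_lik, n_params, N_TRIALS):.1f}")
    print("preferred:", preferred_model(candidate_fits, N_TRIALS))
```

In this illustration the model with more parameters fits slightly better but is not preferred once complexity is penalized, which shows why a better raw fit alone does not settle which explanation is more plausible.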

Recommendations

Results from both machine learning and computational models in psychiatry point towards several recommendations for future investigations, so that study results can be used to improve individual predictions or to develop empirically guided clinical decision-making. First, there is a need for large, multi-site studies that provide sufficient data to develop robust machine learning approaches or computational models, which can then be validated on sufficiently large samples that have not been used to train or test the models. Computational psychiatry is currently fragmented and clinical samples tend to be small; a consortium of investigators may help advance the field (Paulus et al. 2016). Second, machine learning predictions are frequently evaluated based on their ability to correctly predict outcomes. However, it is rarely examined whether they contribute to better decision-making in a clinical context. For example, if an ML algorithm can predict improvement or non-improvement in a particular patient population, would applying this algorithm before individuals undergo treatment improve the number needed to treat? Currently, there are few studies that have taken this prospective approach (Kingslake et al. 2017). Third, computational models provide evidence-based explanations of behavior in disease populations; however, most investigations do not actively compare alternative accounts. Specifically, behavioral task performance could be explained by a reinforcement learning model or a belief-based updating model. These approaches provide complementary explanations of how the individual arrives at a decision. Nevertheless, these differences may be important for the development of process-specific behavioral interventions. Fourth, although many of the initial computational models were developed in animal models, there has been a surprising dearth of studies that use translational paradigms to examine computational dysfunctions in both humans and animals (for a possible exception, see Joyner et al. 2018). Such studies would be particularly helpful in the development of novel pharmacological agents aimed at correcting some of these dysfunctions. Taken together, computational psychiatry holds promise for both pragmatic and explanatory domains in psychiatry; however, the field is at an early stage, and discretion is the better part of valor when it comes to what impact the results will have on improving mental health assessment, prognosis, and treatment.
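The question raised in the second recommendation, whether a prediction algorithm would improve the number needed to treat (NNT), can be sketched as simple arithmetic. The NNT is the reciprocal of the absolute difference in response rates between treated and control groups. The response rates below are hypothetical and serve only to show how the comparison would be set up in a prospective study.

```python
def number_needed_to_treat(response_treated, response_control):
    """NNT = 1 / (absolute difference in response rates).

    Both arguments are proportions between 0 and 1. A smaller NNT means
    fewer patients must be treated for one additional responder.
    """
    benefit = response_treated - response_control
    if benefit <= 0:
        raise ValueError("treatment shows no benefit over control; NNT undefined")
    return 1.0 / benefit


if __name__ == "__main__":
    # Hypothetical response rates, not taken from any trial.
    control_rate = 0.40
    unselected_rate = 0.50   # treatment given to all patients
    selected_rate = 0.65     # treatment given only to predicted responders

    print(f"NNT without algorithm: {number_needed_to_treat(unselected_rate, control_rate):.1f}")
    print(f"NNT with algorithm:    {number_needed_to_treat(selected_rate, control_rate):.1f}")
```

A clinically useful algorithm would lower the NNT in the group it selects for treatment; predictive accuracy alone does not show that this is the case.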

Acknowledgement and Funding

This research was supported by the Laureate Institute for Brain Research and the National Institute of General Medical Sciences (P20GM121312, Paulus).

Footnotes

Conflict of Interest Statement:

On behalf of all authors, the corresponding author states that there is no conflict of interest.

References

- Bach DR (2017) The cognitive architecture of anxiety-like behavioral inhibition. Journal of experimental psychology Human perception and performance 43: 18–29.

- Baker TE, Zeighami Y, Dagher A, Holroyd CB (2018) Smoking decisions: Altered reinforcement learning signals induced by nicotine state. Nicotine & tobacco research : official journal of the Society for Research on Nicotine and Tobacco.

- Bernardini F, Attademo L, Cleary SD, Luther C, Shim RS, Quartesan R, Compton MT (2017) Risk Prediction Models in Psychiatry: Toward a New Frontier for the Prevention of Mental Illnesses. J Clin Psychiatry 78: 572–583.

- Bosco FA, Aguinis H, Singh K, Field JG, Pierce CA (2015) Correlational effect size benchmarks. The Journal of applied psychology 100: 431–49.

- Brendel DH (2003) Reductionism, eclecticism, and pragmatism in psychiatry: the dialectic of clinical explanation. J Med Philos 28: 563–80.

- Brown VM, Zhu L, Wang JM, Frueh BC, King-Casas B, Chiu PH (2018) Associability-modulated loss learning is increased in posttraumatic stress disorder. eLife 7.

- Browning M, Behrens TE, Jocham G, O’Reilly JX, Bishop SJ (2015) Anxious individuals have difficulty learning the causal statistics of aversive environments. Nat Neurosci 18: 590–6.

- Busch Y, Menke A (2019) Blood-based biomarkers predicting response to antidepressants. Journal of neural transmission (Vienna, Austria : 1996) 126: 47–63.

- Casey BJ, Craddock N, Cuthbert BN, Hyman SE, Lee FS, Ressler KJ (2013) DSM-5 and RDoC: progress in psychiatry research? Nat Rev Neurosci 14: 810–4.

- Channa R, Siddiqi M (2008) What do patients want from their psychiatrist? A cross-sectional questionnaire based exploratory study from Karachi. BMC Psychiatry 8: 14.

- Cook NR (2007) Use and misuse of the receiver operating characteristic curve in risk prediction. Circulation 115: 928–35.

- Daw ND, Doya K (2006) The computational neurobiology of learning and reward. Curr Opin Neurobiol 16: 199–204.

- Dion PA, Daoud H, Rouleau GA (2009) Genetics of motor neuron disorders: new insights into pathogenic mechanisms. Nat Rev Genet 10: 769–82.

- Durstewitz D, Koppe G, Meyer-Lindenberg A (2019) Deep neural networks in psychiatry. Mol Psychiatry.

- Friston K, FitzGerald T, Rigoli F, Schwartenbeck P, Pezzulo G (2017a) Active Inference: A Process Theory. Neural computation 29: 1–49.

- Friston KJ, Redish AD, Gordon JA (2017b) Computational Nosology and Precision Psychiatry. Computational psychiatry (Cambridge, Mass) 1: 2–23.

- Friston KJ, Stephan KE, Montague R, Dolan RJ (2014) Computational psychiatry: the brain as a phantastic organ. The lancet Psychiatry 1: 148–58.

- Gerds TA, Cai T, Schumacher M (2008) The performance of risk prediction models. Biometrical journal Biometrische Zeitschrift 50: 457–79.

- Gignac GE, Szodorai ET (2016) Effect size guidelines for individual differences researchers. Personality and Individual Differences 102: 74–78.

- Hall LS, Adams MJ, Arnau-Soler A, Clarke TK, Howard DM, Zeng Y, Davies G, Hagenaars SP, Maria Fernandez-Pujals A, Gibson J, Wigmore EM, Boutin TS, Hayward C, Scotland G, Porteous DJ, Deary IJ, Thomson PA, Haley CS, McIntosh AM (2018) Genome-wide meta-analyses of stratified depression in Generation Scotland and UK Biobank. Translational psychiatry 8: 9.

- Hernaus D, Gold JM, Waltz JA, Frank MJ (2018) Impaired Expected Value Computations Coupled With Overreliance on Stimulus-Response Learning in Schizophrenia. Biological psychiatry : cognitive neuroscience and neuroimaging 3: 916–926.

- Huys QJ, Maia TV, Frank MJ (2016) Computational psychiatry as a bridge from neuroscience to clinical applications. Nat Neurosci 19: 404–13.

- Huys QJM, Guitart-Masip M, Dolan RJ, Dayan P (2015) Decision-theoretic psychiatry. Clinical Psychological Science 3: 400–421.

- Janssen RJ, Mourao-Miranda J, Schnack HG (2018) Making Individual Prognoses in Psychiatry Using Neuroimaging and Machine Learning. Biological psychiatry : cognitive neuroscience and neuroimaging 3: 798–808.

- Johansson FD, Shalit U, Sontag D (2016) Learning Representations for Counterfactual Inference. arXiv:1605.03661.

- Johnson BT, Scott-Sheldon LAJ, Carey MP (2010) Meta-synthesis of health behavior change meta-analyses. American journal of public health 100: 2193–2198.

- Joyner MA, Gearhardt AN, Flagel SB (2018) A Translational Model to Assess Sign-Tracking and Goal-Tracking Behavior in Children. Neuropsychopharmacology 43: 228–229.

- Karvelis P, Seitz AR, Lawrie SM, Series P (2018) Autistic traits, but not schizotypy, predict increased weighting of sensory information in Bayesian visual integration. eLife 7.

- Kendler KS (2005) Toward a philosophical structure for psychiatry. Am J Psychiatry 162: 433–40.

- Kendler KS (2008) Explanatory models for psychiatric illness. Am J Psychiatry 165: 695–702.

- Kendler KS (2012) Levels of explanation in psychiatric and substance use disorders: implications for the development of an etiologically based nosology. Mol Psychiatry 17: 11–21.

- Kendler KS (2014) The structure of psychiatric science. Am J Psychiatry 171: 931–8.

- Kendler KS (2017a) David Skae and his nineteenth century etiologic psychiatric diagnostic system: looking forward by looking back. Mol Psychiatry 22: 802–807.

- Kendler KS (2017b) DSM disorders and their criteria: how should they inter-relate? Psychol Med: 1–7.

- Kendler KS, Engstrom EJ (2017) Kahlbaum, Hecker, and Kraepelin and the Transition From Psychiatric Symptom Complexes to Empirical Disease Forms. Am J Psychiatry 174: 102–109.

- Kessler RC, van Loo HM, Wardenaar KJ, Bossarte RM, Brenner LA, Ebert DD, de Jonge P, Nierenberg AA, Rosellini AJ, Sampson NA, Schoevers RA, Wilcox MA, Zaslavsky AM (2017) Using patient self-reports to study heterogeneity of treatment effects in major depressive disorder. Epidemiology and psychiatric sciences 26: 22–36.

- Kingslake J, Dias R, Dawson GR, Simon J, Goodwin GM, Harmer CJ, Morriss R, Brown S, Guo B, Dourish CT, Ruhe HG, Lever AG, Veltman DJ, van Schaik A, Deckert J, Reif A, Stablein M, Menke A, Gorwood P, Voegeli G, Perez V, Browning M (2017) The effects of using the PReDicT Test to guide the antidepressant treatment of depressed patients: study protocol for a randomised controlled trial. Trials 18: 558.

- Kohler CA, Evangelou E, Stubbs B, Solmi M, Veronese N, Belbasis L, Bortolato B, Melo MCA, Coelho CA, Fernandes BS, Olfson M, Ioannidis JPA, Carvalho AF (2018) Mapping risk factors for depression across the lifespan: An umbrella review of evidence from meta-analyses and Mendelian randomization studies. J Psychiatr Res 103: 189–207.

- Marr D (1982) Vision: A computational investigation into the human representation and processing of visual information. San Francisco: WH Freeman.

- Martin AR, Daly MJ, Robinson EB, Hyman SE, Neale BM (2018) Predicting Polygenic Risk of Psychiatric Disorders. Biol Psychiatry.

- Matsumori K, Koike Y, Matsumoto K (2018) A Biased Bayesian Inference for Decision-Making and Cognitive Control. Front Neurosci 12: 734.

- Menzies P (2012) The causal structure of mechanisms. Studies in history and philosophy of biological and biomedical sciences 43: 796–805.

- Millner AJ, Gershman SJ, Nock MK, den Ouden HEM (2017) Pavlovian Control of Escape and Avoidance. J Cogn Neurosci: 1–12.

- Mkrtchian A, Aylward J, Dayan P, Roiser JP, Robinson OJ (2017a) Modeling Avoidance in Mood and Anxiety Disorders Using Reinforcement Learning. Biol Psychiatry 82: 532–539.

- Mkrtchian A, Roiser JP, Robinson OJ (2017b) Threat of shock and aversive inhibition: Induced anxiety modulates Pavlovian-instrumental interactions. J Exp Psychol Gen 146: 1694–1704.

- Moons KG, Kengne AP, Grobbee DE, Royston P, Vergouwe Y, Altman DG, Woodward M (2012a) Risk prediction models: II. External validation, model updating, and impact assessment. Heart (British Cardiac Society) 98: 691–8.

- Moons KG, Kengne AP, Woodward M, Royston P, Vergouwe Y, Altman DG, Grobbee DE (2012b) Risk prediction models: I. Development, internal validation, and assessing the incremental value of a new (bio)marker. Heart 98: 683–90.

- Moutoussis M, Shahar N, Hauser TU, Dolan RJ (2018) Computation in Psychotherapy, or How Computational Psychiatry Can Aid Learning-Based Psychological Therapies. Computational psychiatry (Cambridge, Mass) 2: 50–73.

- Nagel M, Watanabe K, Stringer S, Posthuma D, van der Sluis S (2018) Item-level analyses reveal genetic heterogeneity in neuroticism. Nature communications 9: 905.

- Palminteri S, Wyart V, Koechlin E (2017) The Importance of Falsification in Computational Cognitive Modeling. Trends Cogn Sci 21: 425–433.

- Papini S, Pisner D, Shumake J, Powers MB, Beevers CG, Rainey EE, Smits JAJ, Warren AM (2018) Ensemble machine learning prediction of posttraumatic stress disorder screening status after emergency room hospitalization. J Anxiety Disord 60: 35–42.

- Paulus MP (2017) Evidence-Based Pragmatic Psychiatry-A Call to Action. JAMA Psychiatry.

- Paulus MP, Huys QJ, Maia TV (2016) A Roadmap for the Development of Applied Computational Psychiatry. Biological psychiatry : cognitive neuroscience and neuroimaging 1: 386–392.

- Pencina MJ, D’Agostino RB, Sr. (2012) Thoroughly modern risk prediction? Science translational medicine 4: 131fs10.

- Powers AR, Mathys C, Corlett PR (2017) Pavlovian conditioning-induced hallucinations result from overweighting of perceptual priors. Science 357: 596–600.

- Price RB, Brown V, Siegle GJ (2019) Computational Modeling Applied to the Dot-Probe Task Yields Improved Reliability and Mechanistic Insights. Biol Psychiatry 85: 606–612.

- Rector TS, Taylor BC, Wilt TJ (2012) Chapter 12: systematic review of prognostic tests. J Gen Intern Med 27 Suppl 1: S94–101.

- Roberts JA, Friston KJ, Breakspear M (2017a) Clinical Applications of Stochastic Dynamic Models of the Brain, Part I: A Primer. Biological psychiatry : cognitive neuroscience and neuroimaging 2: 216–224.

- Roberts JA, Friston KJ, Breakspear M (2017b) Clinical Applications of Stochastic Dynamic Models of the Brain, Part II: A Review. Biological psychiatry : cognitive neuroscience and neuroimaging 2: 225–234.

- Ross CA, Aylward EH, Wild EJ, Langbehn DR, Long JD, Warner JH, Scahill RI, Leavitt BR, Stout JC, Paulsen JS, Reilmann R, Unschuld PG, Wexler A, Margolis RL, Tabrizi SJ (2014) Huntington disease: natural history, biomarkers and prospects for therapeutics. Nat Rev Neurol 10: 204–16.

- Rothman KJ, Greenland S (2005) Causation and causal inference in epidemiology. Am J Public Health 95 Suppl 1: S144–50.

- Schnack HG (2017) Improving individual predictions: Machine learning approaches for detecting and attacking heterogeneity in schizophrenia (and other psychiatric diseases). Schizophrenia research.

- Schnack HG, Kahn RS (2016) Detecting Neuroimaging Biomarkers for Psychiatric Disorders: Sample Size Matters. Frontiers in psychiatry 7: 50.

- Schork AJ, Won H, Appadurai V, Nudel R, Gandal M, Delaneau O, Revsbech Christiansen M, Hougaard DM, Baekved-Hansen M, Bybjerg-Grauholm J, Giortz Pedersen M, Agerbo E, Bocker Pedersen C, Neale BM, Daly MJ, Wray NR, Nordentoft M, Mors O, Borglum AD, Bo Mortensen P, Buil A, Thompson WK, Geschwind DH, Werge T (2019) A genome-wide association study of shared risk across psychiatric disorders implicates gene regulation during fetal neurodevelopment. Nat Neurosci 22: 353–361.

- Schultz W, Dayan P, Montague PR (1997) A neural substrate of prediction and reward. Science 275: 1593–1599.

- Scott IA, Greenberg PB (2010) Cautionary tales in the interpretation of studies of tools for predicting risk and prognosis. Internal medicine journal 40: 803–12.

- Smith DJ, Escott-Price V, Davies G, Bailey ME, Colodro-Conde L, Ward J, Vedernikov A, Marioni R, Cullen B, Lyall D, Hagenaars SP, Liewald DC, Luciano M, Gale CR, Ritchie SJ, Hayward C, Nicholl B, Bulik-Sullivan B, Adams M, Couvy-Duchesne B, Graham N, Mackay D, Evans J, Smith BH, Porteous DJ, Medland SE, Martin NG, Holmans P, McIntosh AM, Pell JP, Deary IJ, O’Donovan MC (2016) Genome-wide analysis of over 106 000 individuals identifies 9 neuroticism-associated loci. Mol Psychiatry 21: 749–57.

- Stephan KE, Mathys C (2014) Computational approaches to psychiatry. Curr Opin Neurobiol 25: 85–92.

- Stephan KE, Schlagenhauf F, Huys QJM, Raman S, Aponte EA, Brodersen KH, Rigoux L, Moran RJ, Daunizeau J, Dolan RJ, Friston KJ, Heinz A (2017) Computational neuroimaging strategies for single patient predictions. Neuroimage 145: 180–199.

- Torkamani A, Wineinger NE, Topol EJ (2018) The personal and clinical utility of polygenic risk scores. Nat Rev Genet 19: 581–590.

- Vaghi MM, Luyckx F, Sule A, Fineberg NA, Robbins TW, De Martino B (2017) Compulsivity Reveals a Novel Dissociation between Action and Confidence. Neuron 96: 348–354.e4.

- Wager S, Athey S (2018) Estimation and Inference of Heterogeneous Treatment Effects using Random Forests. Journal of the American Statistical Association 113: 1228–1242.

- Wang JM, Zhu L, Brown VM, De La Garza R 2nd, Newton T, King-Casas B, Chiu PH (2018) In Cocaine Dependence, Neural Prediction Errors During Loss Avoidance Are Increased With Cocaine Deprivation and Predict Drug Use. Biological psychiatry : cognitive neuroscience and neuroimaging.

- Will GJ, Rutledge RB, Moutoussis M, Dolan RJ (2017) Neural and computational processes underlying dynamic changes in self-esteem. eLife 6.

- Wolfers T, Beckmann CF, Hoogman M, Buitelaar JK, Franke B, Marquand AF (2019) Individual differences v. the average patient: mapping the heterogeneity in ADHD using normative models. Psychol Med: 1–10.

- Wolfers T, Doan NT, Kaufmann T, Alnaes D, Moberget T, Agartz I, Buitelaar JK, Ueland T, Melle I, Franke B, Andreassen OA, Beckmann CF, Westlye LT, Marquand AF (2018) Mapping the Heterogeneous Phenotype of Schizophrenia and Bipolar Disorder Using Normative Models. JAMA psychiatry (Chicago, Ill).

- Zachar P, Kendler KS (2017) The Philosophy of Nosology. Annu Rev Clin Psychol 13: 49–71.