Abstract

Nonhuman primates have been used extensively to study eye-head coordination and eye-hand coordination, but the combination (eye-head-hand coordination) has not been studied. Our goal was to determine whether reaching influences eye-head coordination (and vice versa) in rhesus macaques. In two animals, eye and head motion were recorded with search coils, and hand motion with touch screen technology. Animals were seated in a customized “chair” that allowed unencumbered head motion and reaching in depth. In the reach condition, animals were trained to touch a central LED at waist level while maintaining central gaze and were then rewarded if they touched a target appearing at 1 of 15 locations in a 40° × 20° (visual angle) array. In other variants, initial hand or gaze position was varied in the horizontal plane. In similar control tasks, animals were rewarded for gaze accuracy in the absence of reach. In the Reach task, animals made eye-head gaze shifts toward the target followed by reaches that were accompanied by prolonged head motion toward the target. This resulted in significantly higher head velocities and amplitudes (and lower eye-in-head ranges) compared with the gaze control condition. Gaze shifts had shorter latencies and higher velocities and were more precise, despite the lack of gaze reward. Initial hand position did not influence gaze, but initial gaze position influenced reach latency. These results suggest that eye-head coordination is optimized for visually guided reach, first by quickly and accurately placing gaze at the target to guide reach transport and then by centering the eyes in the head, likely to improve depth vision as the hand approaches the target.

NEW & NOTEWORTHY Eye-head and eye-hand coordination have been studied in nonhuman primates but not the combination of all three effectors. Here we examined the timing and kinematics of eye-head-hand coordination in rhesus macaques during a simple reach-to-touch task. Our most novel finding was that (compared with hand-restrained gaze shifts) reaching produced prolonged, increased head rotation toward the target, tending to center the binocular field of view on the target/hand.

Keywords: eye-hand coordination, eye-head coordination, head-unrestrained gaze, nonhuman primates, reach, saccades

INTRODUCTION

Nonhuman primates have been used extensively as animal models for human eye-head coordination (Bizzi et al. 1971; Choi and Guitton 2006; Crawford et al. 1999; Freedman and Sparks 1997a; Quinet and Goffart 2007) and eye-hand coordination (Battaglia-Mayer et al. 2001; Dean et al. 2011; Hwang et al. 2014; Snyder et al. 2002), but in real-world circumstances all three of these effectors (eye, head, and hand) are coordinated. A relatively small number of studies have examined eye-head-hand coordination in humans (Biguer et al. 1982; Blohm and Crawford 2007; Pelz et al. 2001; Reppert et al. 2018; Tao et al. 2018; Vercher et al. 1994), but to our knowledge no one has studied eye-head-hand coordination in nonhuman primates. Animal models are particularly important for this topic, because most commonly available human neuroscience techniques (fMRI, etc.) are incompatible with head motion, whereas head-unrestrained neurophysiological recordings have become relatively common in recent decades (Choi and Guitton 2006; Freedman and Sparks 1997b; Gandhi and Katnani 2011; Roy and Cullen 1998; Sadeh et al. 2015). Thus a behavioral model of eye-head-hand coordination in monkeys could provide the basis for further neurophysiological studies of the corresponding neural mechanisms. The specific goal of the present study was to examine the influence of reach on head-unrestrained gaze kinematics, particularly the contribution of the head, and conversely the influence of gaze on reach behavior in rhesus macaques.

Behavioral studies of eye-head coordination during gaze shifts in macaques have primarily focused on 1) the relative timing of eye and head motion, 2) the relative contribution of head motion to the gaze shift, 3) amplitude-velocity relationships, and 4) three-dimensional (3D) kinematics (Crawford et al. 1999; Freedman 2008; Freedman and Sparks 1997a, 2000; Guitton 1992). Macaque gaze behavior has proven remarkably similar to human gaze behavior. The timing of eye and head motion during gaze shifts may depend on task variables (Collins and Barnes 1999; Fuller 1992; Stahl 2001), but, typically, a head-unrestrained gaze shift (i.e., rotation of the line of sight in space) begins with an eye-in-head saccade accompanied by a slower, longer-lasting head movement and stabilization of gaze after the saccade by engaging the vestibuloocular reflex (Freedman and Sparks 1997a; Guitton 1992; Roy and Cullen 1998).

Head motion is sometimes measured as contribution to the gaze shift (the amount of head motion that occurs during the saccade) versus total head movement (lasting beyond the duration of the saccade) (Chen 2006; Freedman and Sparks 1997a; Monteon et al. 2010; Tu and Keating 2000). Typically, macaques (like humans) move their head more for larger gaze shifts (Freedman and Sparks 1997a), but the amount is task dependent. For example, if macaques are expected to keep gaze near center or if the head is already turned toward the target, the contribution of the head will be less (Gandhi and Sparks 2001; Monteon et al. 2012). In contrast, if animals are cued to use a larger gaze range (Monteon et al. 2012), if the head is already turned away from the target (Freedman and Sparks 1997a; Gandhi and Sparks 2001), or if the contribution of the eye is physically limited (Constantin et al. 2004; Crawford and Guitton 1997), the head will contribute more motion for same-sized gaze shifts. Furthermore, head and gaze motion approximate a fixed amplitude-velocity relationship similar to that observed in the “main sequence” of saccades (Freedman and Sparks 1997a). Finally, during eye-head gaze shifts, macaques employ various 3D coordination strategies to maintain Listing’s law of the eye and an analogous Donders’ law of the head during interim fixations (Crawford et al. 1999; Monteon et al. 2010).

Studies of eye-hand coordination in macaques are somewhat less common than studies of either eye-head coordination in macaques or eye-hand coordination in humans. These can be grouped into studies in which gaze fixation and hand motion were confined to a common 2D plane (Dean et al. 2011; Pesaran et al. 2006; Snyder et al. 2002; Song and McPeek 2009; Vazquez et al. 2017) versus those in which animals performed a 3D reach in depth (Battaglia-Mayer et al. 2001; Ferraina et al. 1997; Hawkins et al. 2013; Marconi et al. 2001; Marzocchi et al. 2008). Macaques will generally saccade in advance to capture the reach target (Pelz and Canosa 2001; Song and McPeek 2009). Reaches tend to decrease saccade latency and increase saccade velocity in both macaques and humans (Sailer et al. 2016; Snyder et al. 2002). Although macaques can be trained to decouple gaze fixation from reach direction (Hawkins et al. 2013), accuracy and temporal coupling are higher when animals are allowed to coordinate the eye and hand toward a common goal (Vazquez et al. 2017). In general, the kinematic rules for eye-hand coordination in macaques are similar to those observed in the more frequently studied human eye-hand coordination system (Henriques et al. 1998; Prablanc et al. 1979; van Donkelaar and Staub 2000). However, it is not known how well the macaque results translate directly to head-unrestrained reach behavior.

As noted above, we are not aware of any studies of eye-head-hand coordination in monkeys, but there are several in humans. Head orientation and eye-head-body geometry are accounted for in the sensorimotor transformations for eye-hand coordination (Blohm and Crawford 2007; Henriques et al. 2003; Henriques and Crawford 2002; Ren and Crawford 2009). The typical order of recruitment (at the behavioral level) appears to be eye, then head, then arm (Biguer et al. 1982), whereas the order of termination has been reported to be gaze, then finger (on target), and, finally, head motion (Carnahan and Marteniuk 1991). Some authors have emphasized a common source model based on synchrony between eye, head, and reach commands, e.g., at the level of EMG and corrective response latencies (Biguer et al. 1982; Tao et al. 2018), whereas others have argued that the relative variability of eye, head, and hand initiation contradicts control by a single motor program (Carnahan and Marteniuk 1991).

Reaching also appears to alter patterns of eye-head coordination (Carnahan and Marteniuk 1991). That study showed that when subjects pointed quickly with the finger, the head started to move before the eyes, whereas when subjects pointed slowly and accurately, the eyes started to move first, followed by the head and finger. However, when the subjects were told to point with only the eyes and head, eye movement preceded head movement regardless of the speed-accuracy instructions. It has been suggested that hand pointing accuracy does not depend on the relative timing of eye, head, and hand movements (Vercher et al. 1994), but allowing the head to move appears to reduce pointing errors (Biguer et al. 1984). Finally, it was recently shown that during coordinated eye-head-hand reach movements reach vigor (peak hand velocity for a given amplitude) was most tightly linked with the vigor of head rotations (Reppert et al. 2018). But again, it is not known how well these various results generalize to rhesus macaques.

In the present study, we investigated the influence of reach on gaze kinematics, and conversely gaze on reach behavior, in head-unrestrained rhesus macaques. We employed a behavioral paradigm in which animals were rewarded for accurately reaching (in direction and depth) but, importantly, were allowed to make head-unrestrained gaze shifts using the coordination strategy of their choice. We then compared this with the more standard task in which animals were rewarded for making eye-head gaze shifts in the absence of a reach. The primary intent of this design was to explore the influence of reach on eye-head gaze kinematics, but we also examined the influence of reach (initial position, final position, timing) on gaze accuracy and timing and the influence of initial gaze position on reach. Overall, our results confirm several of the multijoint interactions between eye, head, and reach kinematics that have already been reported in the literature and, importantly, reveal an additional head-hand coordination strategy that may function to optimize vision for reach movements.

METHODS

Animals and Surgical Procedures

Data were collected from two female rhesus macaque monkeys (Macaca mulatta; animals W and O). These animals weighed ~6 kg and 10 kg, respectively. Before the experiment, each animal underwent surgery under general anesthesia (1.5% isoflurane following intramuscular injection of 10 mg/kg ketamine hydrochloride, 0.05 mg/kg atropine sulfate, and 0.5 mg/kg acepromazine). A stainless steel head post was implanted onto the skull with a dental acrylic head cap secured by stainless steel cortex screws. The head post was only used to stabilize the head during preparation of the experiment, i.e., while attaching the head coil and putting the reward tube in place. During the experiments, a fluid dispenser and two orthogonal search coils were mounted on the head to provide 3D recordings of head rotation relative to space. One Teflon-coated stainless steel search coil (18 mm in diameter) was implanted subconjunctivally in both animals. A scleral coil was placed in one eye for 2D recordings of gaze orientation (horizontal and vertical) in space. Animals were given 2 wk of recovery after surgery with unrestricted food and fluid intake. After this, the animals’ fluid intake was controlled on working weekdays so that they received most of their fluids as rewards during training and/or experimental tasks. The animals’ fluid and food intake, weight, and health were monitored closely by the lab care staff and the university veterinarian. All surgical and experimental protocols followed the Canadian Council on Animal Care guidelines on the use of laboratory animals and were reviewed and approved by the York University animal care committee.

Experimental Setup and Behavioral Recordings

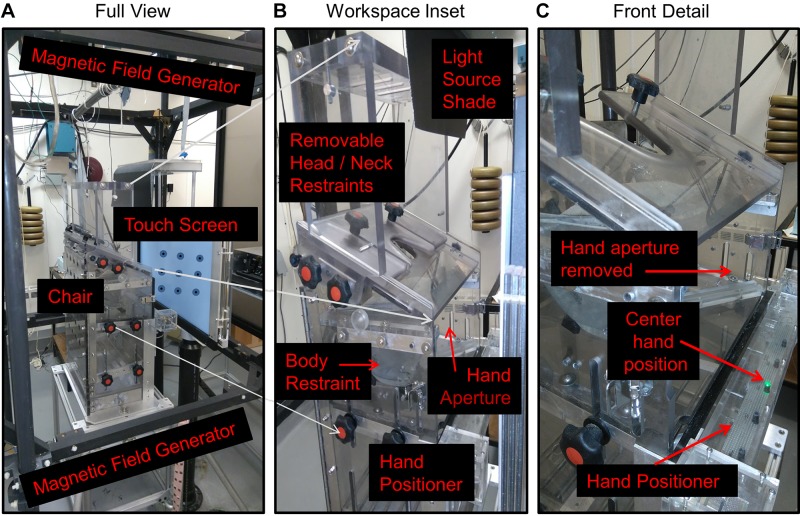

The experimental setup, including a modified primate “chair” and equipment for behavioral recordings and stimulus presentation, is shown in Fig. 1. During the experiments, animals were seated within a customized Crist primate chair (Crist Instrument, Hagerstown, MD) placed so that the head was centered within three mutually orthogonal magnetic fields of 1-m distance in all directions (Fig. 1A). The primate chair was modified to allow free motion of the head (Crawford et al. 1999) and (for the present study) to allow both control of initial hand position and unencumbered forward reaching movements. The modifications for head motion included a removable head restraint, removable top plates, and a security vest as described in our previous publications (Crawford et al. 1999; Klier et al. 2001, 2003). For this experiment, an aperture was added across the front of the chair to allow manual access to our full range of visual stimuli in both depth and direction. The width of the chair was also increased to allow free motion of the arm, and a shoulder collar was added to constrain the location of the right shoulder joint and for enhanced security. The upper body was prevented from rotating by an iron link chain that attached the primate jacket to the chair. In these experiments, only motion of the right hand was allowed; the left arm was restrained with an additional Velcro cuff on the upper arm, which was in turn attached to the chair with a thick cotton thread.

Fig. 1.

Apparatus. A: full view. A modified primate “chair” (Crist Instrument) was placed in the center of a 3-dimensional (3D) magnetic field generator in front of a touch screen. B: workspace inset. Image shows the primate chair modifications that allowed forward translation of the hand in both direction and depth and 3D rotation of the head; the hand aperture plate and head/neck restraints were removed during experiments. The hand positioner consisted of an LED bar placed at waist level just in front of the hands. Body restraint was achieved by fitting a jacket around the torso of the animal and attaching it to the inside of the chair (to prevent spinning and escaping), and other obstacles were attached to the chair as required to reduce motion of the unused hand and other body parts. The light source shade served to block reflections from the hand positioner LEDs. C: front detail. Image shows a close-up of the front of the chair and the hand positioner. The illuminated green LED is the center hand position.

Visual stimuli were presented on a vertically mounted screen (OPTIR Touch 32-in. IR touch screen; Keytec) placed 23 cm in front of the eye (Fig. 1B). This screen was surrounded by a frame equipped with vertical and horizontal infrared sensors in the plane of the gaze/reach stimuli. These sensors measured the central 2D location of manual contact with an accuracy and precision of 1° radius. Initial hand position was controlled by training the animal to rest its hand on the LED bar attached to the chair at waist level (Fig. 1C), with the center LED 16 cm ahead of and 28 cm below the right shoulder. Five LEDs were distributed horizontally along this bar at an average interval of 16 cm, at horizontal and vertical visual angles (from the eye) of (−19°, −64°), (0°, −64°), (19°, −64°), (34°, −64°), and (45°, −64°), where rightward positions are positive. This range was selected because animals were hesitant to move their right hand to the far left of their body. Correct positioning of the hand on the LED bar was verified in two ways: infrared light monitored the horizontal position of the hand, and a vertical sensor above the LED bar detected whether the light was blocked by the animal’s hand. Software for stimulus control and behavioral recordings was modified from our previous primate gaze control studies (e.g., Crawford et al. 1999; Li et al. 2017).

System Calibration

Two separate calibrations were performed before each training or experimental session to obtain precise search coil signals. First, the 3D magnetic fields (3 mutually orthogonal magnetic fields) were calibrated before each experiment by rotating an external coil through each field direction and adjusting the field strength in each direction to an equal maximum (Crawford et al. 1999; Li et al. 2017; Tweed and Vilis 1990). This was sufficient for computing quaternions from the 3D head coils. During experiments, the primate chair was positioned such that the head was at the center of the three mutually orthogonal magnetic fields, and a reference position for the head was recorded while the animal looked straight ahead. To calibrate the 2D eye coil, we then had the animals fixate each of the nine targets used in our main Centrifugal task (see Training and Behavioral Paradigm) for 1 s. The average data from two sequential runs of the paradigm were used by an online program that fit the eye coil signals to the correct physical locations of the stimuli (Li et al. 2017; Monteon et al. 2010). The touch screen apparatus was calibrated with software provided by the manufacturer (Keytec).
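The fitting model used by the online calibration program is not specified here. As a hedged illustration only, the sketch below assumes a simple affine map from the two eye-coil voltages to horizontal and vertical target angles, fit by ordinary least squares over the nine fixation targets. The names `fit_affine` and `apply_affine` are hypothetical, and real coil calibrations may need nonlinear terms.

```python
from typing import List, Sequence, Tuple


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_affine(volts: Sequence[Tuple[float, float]],
               targets_deg: Sequence[Tuple[float, float]]):
    """Hypothetical calibration: angle = a*vx + b*vy + c, one [a, b, c] per axis.

    Assumption: an affine model is adequate; fit via the normal equations.
    """
    rows = [(vx, vy, 1.0) for vx, vy in volts]  # design matrix rows
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coefs = []
    for axis in range(2):  # 0 = horizontal, 1 = vertical
        xty = [sum(r[i] * t[axis] for r, t in zip(rows, targets_deg)) for i in range(3)]
        coefs.append(_solve3(xtx, xty))
    return coefs[0], coefs[1]


def apply_affine(coef: Sequence[float], vx: float, vy: float) -> float:
    """Convert one voltage pair to an angle (deg) with fitted coefficients."""
    a, b, c = coef
    return a * vx + b * vy + c
```

With nine targets and three coefficients per axis, the fit is overdetermined, so averaging two runs (as described above) mainly reduces the influence of fixation noise on the coefficients.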

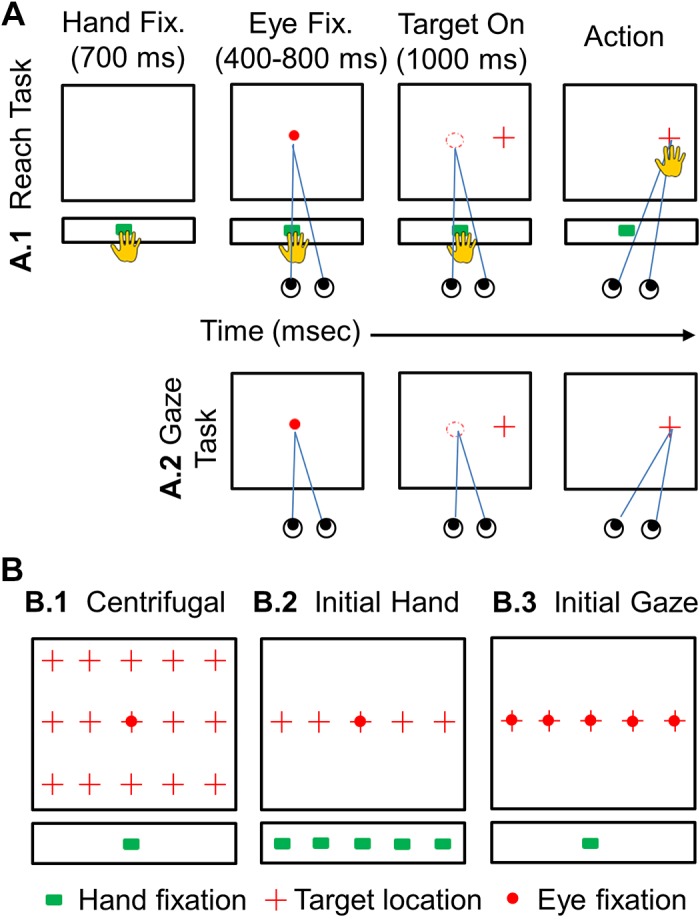

Training and Behavioral Paradigm

Training and experiments were conducted in complete darkness to minimize extraneous stimuli and allocentric landmarks. Animals were rewarded with a drop of water for completing each trial correctly. Animals were first trained to perform the Gaze task, i.e., the control task (Fig. 2A2). Training began by teaching animals to maintain gaze on the central fixation point (red disk) for 1,000 ms. They were then trained to make a saccade to the target (red cross) within 1,000 ms. Once the animals had mastered fixating the center position and making a saccade toward a peripheral target, they were trained on the full Gaze task (the control). Here the fixation dot was illuminated for 1,000 ms, and animals were required to maintain gaze within a radius of 6° of this point for 400–800 ms (chosen randomly on each trial) to avoid anticipation. At the end of the fixation period, the fixation point was extinguished, and simultaneously the target appeared at 1 of the 15 positions (chosen randomly). In the Gaze task, animals were required to make a saccade that landed within a spatial window of 6° around the target. The targets stayed on for 1,000 ms and were spaced 10° apart both horizontally and vertically.
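The Gaze-task contingencies described above can be sketched as a per-trial check. This is a hypothetical Python illustration, not the lab's control software: it assumes gaze samples in degrees at 1 kHz beginning at the start of the fixation hold, circular windows, and that a trial counts as correct as soon as gaze enters the target window while the target is on (the text does not say whether gaze had to remain there). `draw_hold_ms` and `gaze_trial_correct` are illustrative names.

```python
import math
import random
from typing import Sequence, Tuple

Point = Tuple[float, float]

FIX_WINDOW_DEG = 6.0      # fixation window radius (from the text)
TARGET_WINDOW_DEG = 6.0   # Gaze-task target window radius (from the text)
TARGET_ON_MS = 1000       # target duration, used here as the response limit


def draw_hold_ms(rng: random.Random) -> int:
    """Random fixation hold of 400-800 ms, redrawn each trial to prevent anticipation."""
    return rng.randint(400, 800)


def inside(p: Point, center: Point, radius: float) -> bool:
    """True if point p lies within a circular window around center."""
    return math.hypot(p[0] - center[0], p[1] - center[1]) <= radius


def gaze_trial_correct(gaze: Sequence[Point], fix_pt: Point,
                       target: Point, hold_ms: int) -> bool:
    """Score one hypothetical Gaze-task trial from 1-kHz gaze samples (one per ms)."""
    if len(gaze) < hold_ms:
        return False
    # Fixation must be held for the whole randomized period.
    if not all(inside(p, fix_pt, FIX_WINDOW_DEG) for p in gaze[:hold_ms]):
        return False  # broke fixation: trial aborted
    # After fixation offset, gaze must reach the target window while the target is on.
    response = gaze[hold_ms:hold_ms + TARGET_ON_MS]
    return any(inside(p, target, TARGET_WINDOW_DEG) for p in response)
```

A Reach-task version would replace the target-window test with a touch-window test (13°) and drop the saccade requirement, since gaze was not rewarded in that task.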

Fig. 2.

Schematic illustration of the experimental paradigm. A: sequence of events in 2 major tasks. Colored symbols indicate the LED-projected initial hand position (green square), the laser-projected fixation point (red circle), and the target (red cross) for each trial; the room was otherwise dark. In A1 (Reach task), animals were required to touch the LED light (green square) on the LED bar at waist level (for 700 ms) and then maintain fixation (red circle) on the touch screen in front of them for 400–800 ms. The fixation light was then extinguished, and a target (red cross) appeared simultaneously, randomly selected from one of the task locations (see B). In the Reach task (A1), the animal was required to touch the target and was free to move its head and eyes, whereas in the Gaze task (A2), the animal could move its eyes and head to the target, but its reach was restrained. B: the 3 spatial conditions that were studied. B1: the centrifugal task, which used 1 central gaze fixation point (red circle) and 1 center initial hand position (green square), with eye, head, and hand movements recorded for 15 target locations (red crosses) on the touch screen, 10° apart from each other horizontally and vertically. B2: the initial hand task, with 5 variable hand locations (green squares), 1 central gaze fixation position (red circle), and 5 targets (red crosses) 10° apart horizontally. B3: the initial gaze task, with 5 initial gaze positions (red circles), 1 central hand position (green square), and 5 targets (red crosses) 10° apart horizontally. Both reach+gaze (A1) and gaze-only (A2) blocks were performed for each spatial condition.

Moving on to the Reach task, i.e., the experimental task (Fig. 2A1), animals were first trained to place their hand on an illuminated green LED at one of the five initial positions (starting with the shoulder-aligned LED and later incorporating the more peripheral LEDs). Next, they were trained to touch a single target on the screen in front of them, chosen randomly from 15 different positions (Fig. 2B1). Once animals had mastered placing their hand on the LED bar and touching the single target, they were trained on the full Reach task. Each trial began when the animal placed its hand on the green LED for 700 ms. A central fixation point (red circle) then appeared on the screen for 1,000 ms, and the animal was required to maintain gaze within a 6° window of that point for 400–800 ms. When the fixation point disappeared, the target simultaneously appeared for 1,000 ms. Animals were required to touch the target within a spatial window of 13° vertically and horizontally (typically they chose to place their fingers over the area where they saw a stimulus). Note that in the Reach task animals were not required to make a saccade toward the target to obtain a reward. In this way, they were allowed to choose their own gaze strategy while reaching. In both tasks, head motion was unencumbered, and thus animals were free to use whatever eye-head coordination strategy they chose.

In both the Gaze task and the Reach task, a water reward was provided for each correctly completed trial. Trials were aborted when the animals either failed to make a saccade to the fixation point or target or did not touch the target. Training was considered complete when the animal could consistently complete the entire trial without mistakes. At this point, data collection commenced (with the preceding task parameters and both motor task variations) in the following three spatial condition configurations.

Centrifugal condition.

Animals were required to reach (from the shoulder-aligned, waist-height hand LED) and/or gaze (from the eye-centered fixation light) toward 1 of the 15 targets in a 40° horizontal × 20° vertical (visual angle) array, with free movement of their eyes and head (Fig. 2B1). Data were recorded for 8 wk in animal W (resulting in 25 reach trials for each target and 25 gaze trials for each target) and for 8 wk in animal O (resulting in 25 reach trials for each target and 25 gaze trials for each target). In total, we recorded 375 trials for the Reach task and 375 trials for the Gaze task in each animal, for a total across both animals of 1,500 trials collected in this condition.

Variable initial hand position condition.

This was the same as the previous condition, except that the initial hand position was varied randomly among the five horizontally arrayed green LEDs (at waist height) and only five targets (the horizontal row at eye height) were used (Fig. 2B2). Data were recorded for 15 wk in animal W and for 15 wk in animal O, resulting in each animal in 25 reach trials and 25 gaze trials for each combination of target and initial hand position. We recorded 625 trials for the Reach task and 625 trials for the Gaze task in each animal, for a total of 2,500 trials collected in this condition.

Variable initial gaze position condition.

This was similar to the previous condition, except that only the shoulder-aligned initial hand position was used, and instead the initial gaze position was varied among the five horizontal targets (Fig. 2B3). Data were recorded for 12 wk in animal W and for 15 wk in animal O, resulting in each animal in 25 reach trials and 25 gaze trials for each combination of target and initial gaze position. In total, we recorded 625 trials for the Reach task and 625 trials for the Gaze task in each animal, for a total of 2,500 trials in this condition. Thus a grand total of 6,500 trials were collected in all three tasks.
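The trial counts across the three spatial conditions follow from a simple product. This short Python check assumes, as implied by 625 = 25 × 25, that the 25 trials per task per animal in the two variable conditions are counted per combination of initial position and target; the variable names are illustrative.

```python
TRIALS_PER_CELL = 25  # trials per (initial position, target) cell, per task, per animal
N_TASKS = 2           # Reach task and Gaze task
N_ANIMALS = 2         # animals W and O

# Number of (initial position, target) cells in each spatial condition.
CELLS = {
    "centrifugal": 1 * 15,    # 1 initial hand/gaze position x 15 targets
    "variable_hand": 5 * 5,   # 5 initial hand positions x 5 targets
    "variable_gaze": 5 * 5,   # 5 initial gaze positions x 5 targets
}

per_task_per_animal = {name: cells * TRIALS_PER_CELL for name, cells in CELLS.items()}
per_condition = {name: n * N_TASKS * N_ANIMALS for name, n in per_task_per_animal.items()}
grand_total = sum(per_condition.values())

for name in CELLS:
    print(f"{name}: {per_task_per_animal[name]} per task per animal, "
          f"{per_condition[name]} in total")
print("grand total:", grand_total)
```

This reproduces the 375/1,500, 625/2,500, and 625/2,500 figures and the grand total of 6,500 trials.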

Data Analysis

Each animal’s head was positioned at the center of three mutually orthogonal magnetic fields. Coil voltages were sampled at 1,000 Hz and digitized. These data were used to determine horizontal and vertical position signals of gaze (eye relative to space), head relative to space, and eye relative to head. Gaze and head positions were expressed in degrees in a visual coordinate system, as described previously (Crawford et al. 1999). Measured positions of the initial hand position LEDs and final hand positions in touch screen coordinates were converted to the same visual coordinate system for hand analysis. The visual angle of an object is a measure of the size of the object’s image on the retina. The visual angle was calculated as follows:

$$V = 2\arctan\left(\frac{S}{2D}\right)$$

where V is the visual angle, S is the size of the object, and D is the distance between the eye and the object.
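The standard visual-angle relation V = 2·arctan(S/(2D)) and its single-point counterpart arctan(x/D) are easy to compute directly. The sketch below assumes, for illustration, a flat screen 23 cm from the eye with the eye on the perpendicular through the screen reference point, and treats one dimension at a time; the function names are hypothetical.

```python
import math

SCREEN_DISTANCE_CM = 23.0  # eye-to-screen distance stated in the Methods


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Angle subtended by an object of a given size: V = 2 * atan(S / (2 * D))."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def offset_to_angle_deg(offset_cm: float, distance_cm: float = SCREEN_DISTANCE_CM) -> float:
    """Eccentricity (deg) of a point offset_cm from the perpendicular foot on a flat screen."""
    return math.degrees(math.atan(offset_cm / distance_cm))


def angle_to_offset_cm(angle_deg: float, distance_cm: float = SCREEN_DISTANCE_CM) -> float:
    """Inverse of offset_to_angle_deg: where on the screen a given eccentricity lands."""
    return distance_cm * math.tan(math.radians(angle_deg))


for ecc in (10, 20):
    print(ecc, "deg ->", round(angle_to_offset_cm(ecc), 2), "cm")
```

Under these assumptions, a 10° target lands about 4.06 cm from the screen reference point and a 20° target about 8.37 cm away, so targets spaced evenly in degrees are not evenly spaced in centimeters on a flat screen at this short viewing distance.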

Our apparatus allowed us to record the initial and final hand positions as well as their timing. This allowed us to calculate mean hand speed as the overall length of the trajectory (i.e., the distance between the initial and final hand positions) divided by the movement duration (screen touch time minus initial hand offset time). The apparatus did not allow us to record hand positions or velocities during the reach movement.
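The mean-speed calculation can be stated as a one-line formula. In this hedged sketch, positions are assumed to be 3D coordinates in centimeters in a common frame (e.g., LED bar and screen positions measured relative to the shoulder) and times are in milliseconds on one clock; `mean_hand_speed_cm_s` is a hypothetical helper.

```python
import math
from typing import Sequence


def mean_hand_speed_cm_s(start_cm: Sequence[float], end_cm: Sequence[float],
                         hand_offset_ms: float, touch_ms: float) -> float:
    """Mean hand speed = straight-line start-to-end distance / (touch time - LED release time).

    Assumption: start_cm and end_cm are 3D positions (cm) in the same frame.
    """
    duration_s = (touch_ms - hand_offset_ms) / 1000.0
    if duration_s <= 0:
        raise ValueError("touch must occur after the hand leaves the LED")
    return math.dist(start_cm, end_cm) / duration_s
```

Because a straight line is the shortest path between the two end points, this value cannot exceed the mean speed along the true, possibly curved, hand path.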

Recorded trials were rejected if animals made a saccade or hand movement before the target stimulus appeared on the screen. Since animals were trained until they performed the task consistently, only a small number of trials (~5%) had to be eliminated. Only successful trials were included in the analysis.

Experimental data were analyzed off-line with custom scripts written in MATLAB 2016. For each rewarded trial, gaze movement onset was marked when gaze velocity exceeded 50°/s for 2 ms, and gaze movement offset when velocity fell below 30°/s for 2 ms. The gaze trajectory was determined for each trial, and its end point was calculated. Head movement onset was marked when head velocity reached 20°/s, and final head position was taken at the time the animal touched the target (or, in the Gaze task, at the mean touch time from the Reach task). “Arm onset” was marked when the animal’s hand left the LED, and “touch on the target” was taken as the end of the arm movement. Reaction time was measured from target onset to the detected onset of the respective gaze, head, or arm movement. Movement time was measured from the onset to the completion of the respective gaze, head, or arm movement.
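The velocity-threshold rules above can be sketched in Python. This is a minimal illustration, assuming 2D gaze positions in degrees sampled at 1 kHz and speed computed by forward differences (the original MATLAB scripts may filter or differentiate differently). `detect_movement` and its parameters are hypothetical names.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple


def angular_speed(h: Sequence[float], v: Sequence[float], fs_hz: float = 1000.0) -> List[float]:
    """2D angular speed (deg/s) by forward differences; speed[i] spans samples i-1 -> i."""
    speed = [0.0]  # no velocity estimate for the first sample
    for i in range(1, len(h)):
        dh = (h[i] - h[i - 1]) * fs_hz
        dv = (v[i] - v[i - 1]) * fs_hz
        speed.append(math.hypot(dh, dv))
    return speed


def first_sustained(speed: Sequence[float], start: int,
                    test: Callable[[float], bool], run: int) -> Optional[int]:
    """Index of the first sample of the first run of `run` consecutive samples passing `test`."""
    count = 0
    for i in range(start, len(speed)):
        count = count + 1 if test(speed[i]) else 0
        if count == run:
            return i - run + 1
    return None


def detect_movement(h: Sequence[float], v: Sequence[float], fs_hz: float = 1000.0,
                    on_thresh: float = 50.0, off_thresh: float = 30.0,
                    hold_ms: float = 2.0) -> Optional[Tuple[int, int]]:
    """Return (onset_index, offset_index) using speed thresholds held for hold_ms."""
    speed = angular_speed(h, v, fs_hz)
    run = max(1, round(hold_ms * fs_hz / 1000.0))  # 2 samples at 1 kHz
    onset = first_sustained(speed, 0, lambda s: s > on_thresh, run)
    if onset is None:
        return None
    offset = first_sustained(speed, onset, lambda s: s < off_thresh, run)
    if offset is None:
        return None
    return onset, offset
```

Reaction time then follows as (onset index minus target-onset index) / `fs_hz`. For head onset, one could apply the same function to head traces with `on_thresh=20.0` and keep only the onset.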

To present end-point distributions, 95% confidence ellipses were computed by averaging the orientation angles and major/minor axes of the individual subject ellipses. We also calculated the orientation angle of these ellipses to approximate the direction of greatest scatter of the reach and saccade distributions.
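How each individual ellipse was fit is not stated. A common approach, assumed here, is to take the sample covariance of the 2D end points and scale its principal axes by the 95% chi-square quantile with 2 degrees of freedom (−2 ln 0.05 ≈ 5.99). Under bivariate normality, the resulting scatter ellipse encloses about 95% of the points, and its major-axis angle gives the direction of greatest scatter. `scatter_ellipse` is a hypothetical name.

```python
import math
from typing import Sequence, Tuple

CHI2_95_2DF = -2.0 * math.log(0.05)  # 95% quantile of chi-square with 2 df (about 5.991)


def scatter_ellipse(points: Sequence[Tuple[float, float]]):
    """Return (center, semi_major, semi_minor, angle_deg, area) of a 95% covariance ellipse."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least 3 points")
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    # Eigenvalues of the 2x2 covariance matrix [[sxx, sxy], [sxy, syy]].
    half_trace = (sxx + syy) / 2.0
    root = math.sqrt(max(half_trace ** 2 - (sxx * syy - sxy ** 2), 0.0))
    lam_major, lam_minor = half_trace + root, max(half_trace - root, 0.0)
    a = math.sqrt(CHI2_95_2DF * lam_major)
    b = math.sqrt(CHI2_95_2DF * lam_minor)
    # Orientation of the major axis (direction of greatest scatter), counterclockwise from +x.
    angle_deg = 0.5 * math.degrees(math.atan2(2.0 * sxy, sxx - syy))
    return (mx, my), a, b, angle_deg, math.pi * a * b
```

The area returned here (in deg² when inputs are in degrees) is the kind of quantity compared between tasks in the Results.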

Statistical Analysis

We conducted several three-way ANOVAs (between factors: task condition and initial gaze/hand position; repeated factor: target location) to test whether the 2 task conditions (gaze shift, reach), 5 initial gaze/hand positions (10° apart horizontally), and 15 or 5 target positions (10° apart vertically and horizontally) affected reaction time, velocity, and movement time. To compare end-point scatter between the two effectors (gaze, hand) across the three conditions (centrifugal, hand variation, and gaze variation), the three-way ANOVA included effector (gaze, hand) as a repeated factor, along with initial gaze/hand position and target location. We also performed unpaired t tests and Kolmogorov–Smirnov (K-S) tests to determine whether velocity and amplitude differed significantly between the control (gaze shift) and experimental (reach) tasks. All statistical tests were conducted with the Statistical Package for the Social Sciences (SPSS) and Microsoft Excel.
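For readers reproducing the K-S comparisons outside SPSS, the two-sample statistic is D = sup|F1(x) − F2(x)|, the largest gap between the two empirical cumulative distributions. The stdlib-only Python sketch below computes D and an asymptotic p value using the effective-sample-size correction described in Numerical Recipes. This is an approximation and may differ slightly from SPSS output for small samples; the function names are hypothetical.

```python
import math
from bisect import bisect_right
from typing import Sequence, Tuple


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample K-S statistic: max |ECDF_a(x) - ECDF_b(x)| over all observed x."""
    sa, sb = sorted(a), sorted(b)
    d = 0.0
    for x in sa + sb:
        fa = bisect_right(sa, x) / len(sa)
        fb = bisect_right(sb, x) / len(sb)
        d = max(d, abs(fa - fb))
    return d


def kolmogorov_sf(lam: float, terms: int = 50) -> float:
    """P(K > lam) for the Kolmogorov distribution, using the better-converging series."""
    if lam <= 0.0:
        return 1.0
    if lam < 1.18:
        # Small lam: CDF = sqrt(2*pi)/lam * sum_j exp(-(2j-1)^2 * pi^2 / (8 lam^2))
        cdf = math.sqrt(2.0 * math.pi) / lam * sum(
            math.exp(-((2 * j - 1) ** 2) * math.pi ** 2 / (8.0 * lam * lam))
            for j in range(1, terms + 1))
        return min(max(1.0 - cdf, 0.0), 1.0)
    # Large lam: SF = 2 * sum_j (-1)^(j-1) exp(-2 j^2 lam^2)
    sf = 2.0 * sum((-1) ** (j - 1) * math.exp(-2.0 * j * j * lam * lam)
                   for j in range(1, terms + 1))
    return min(max(sf, 0.0), 1.0)


def ks_2samp(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (D, approximate p value) for a two-sided two-sample K-S test."""
    d = ks_statistic(a, b)
    ne = len(a) * len(b) / (len(a) + len(b))  # effective sample size
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    return d, kolmogorov_sf(lam)
```

Unlike a t test, the K-S test is sensitive to any difference in distribution shape, not only to a shift in means.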

RESULTS

General Observations

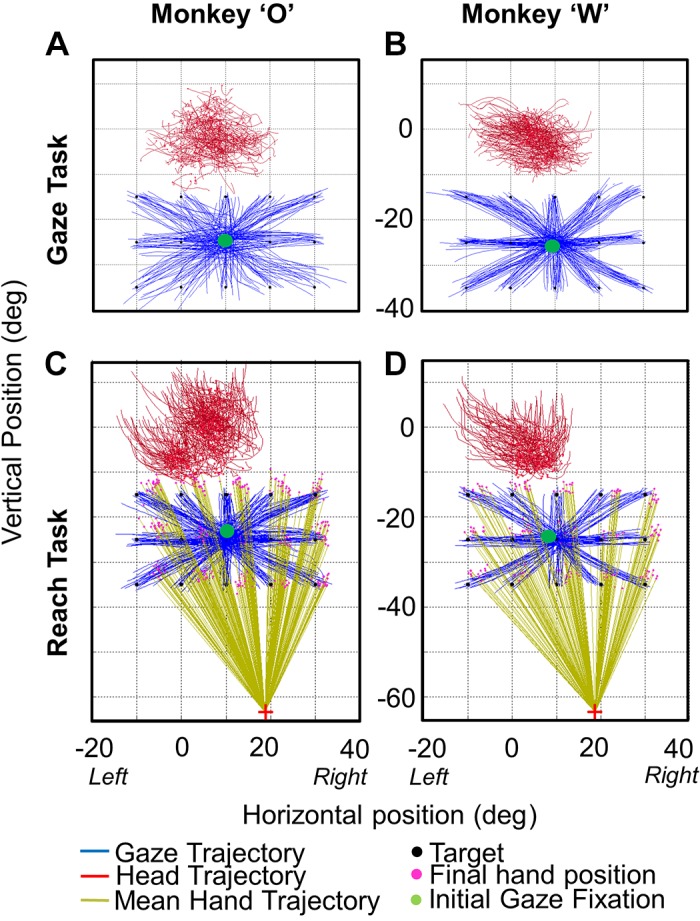

Figure 3 provides typical 2D trajectories of gaze, head position, and hand position for both animals in the Gaze task (Fig. 3, A and B) and the Reach task (Fig. 3, C and D) in the centrifugal condition configuration. Data are provided in visual angles, projected onto the coordinates of the touch screen. The initial gaze fixation point is indicated in each panel of Fig. 3, centered at 25° down and 10° right in touch screen coordinates. Reaching always took much longer than gaze shifts, so data are plotted until the time of hand contact with the screen or (in the case of the Gaze task) until the mean time of hand contact in the Reach task for each animal. This convention was used throughout our analysis to compare gaze-head kinematics in the presence or absence of reach on a similar timescale.

Fig. 3.

Representative gaze, head, and hand trajectories: example 2-dimensional gaze and head trajectories in the centrifugal Gaze task (A and B) and the centrifugal Reach task (C and D) are shown for animal O (left) and animal W (right). Trajectories are plotted from the time of initial gaze motion until the time of final hand contact on the touch screen. In the Reach task, the magenta dots show the reach end points for each target (black dots), and the initial hand position is shown by the red cross. Complete gaze and head trajectories are provided, whereas the hand trajectories are only shown as vectors joining the initial and final hand positions (light green lines). These data were taken from the centrifugal task, where the center green dot represents the initial eye fixation point for each trial. Comparing the gaze (control) task and the reach+gaze (experimental) task, one can already note that the head seems to move more during reaches, as shown in more detail in the following figures.

As expected in the control Gaze task, animals made centrifugal gaze shifts toward the targets. Since head positions corresponding to the central target were selected as reference orientations, the head range is centered approximately on zero; this is consistent with our observation that animals generally faced forward with their eyes turned down toward the target. Similarly, in the Reach task, animals chose to make gaze shifts immediately toward the targets, followed (as we shall see) by reaches toward the same target, such that gaze and hand end points landed within a similar range. In both tasks, gaze shifts were accompanied by head movements in the general direction of the gaze shift (quantified below). The first and most obvious observation was that the head appeared to move more in the Reach task, especially in the vertical dimension.

The following sections quantify these and other details of the eye-head-hand coordination patterns, starting with spatial aspects, then timing, and finally spatiotemporal aspects. Since the results from our three spatial condition configurations (centrifugal, variable hand, variable gaze) were qualitatively and statistically similar, we present data from the centrifugal condition unless explicitly mentioned otherwise.

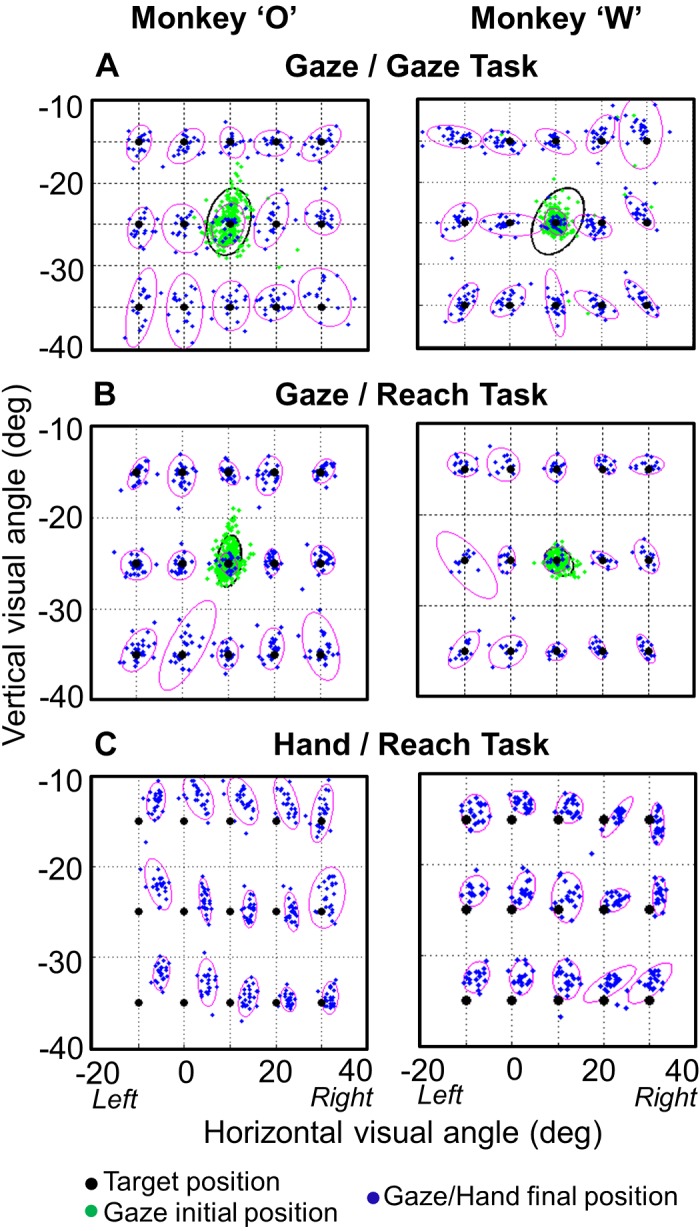

Spatial Analysis

Figure 4 shows the individual subjects’ 95% confidence ellipses for the end points of gaze (Fig. 4, A and B) and hand (Fig. 4C) in the Gaze task (Fig. 4A) and the Reach task (Fig. 4, B and C) for each target in the centrifugal condition configuration. These ellipse fits were used as an overall measure of the scatter in final gaze, head, or hand position. Even though saccades occurred before reaches (as documented in Relative Timing of Events), gaze was more precise (less variable) in the Reach task. The mean ellipse area across all 15 targets was 32.7 deg² (Gaze task) versus 23.4 deg² (Reach task) in animal O and 19.7 deg² (Gaze task) versus 15.3 deg² (Reach task) in animal W. The difference between the areas of the ellipses in the Reach task and the Gaze task was significant in both animals [P = 0.043 and P = 0.017 (ANOVA test) in animals O and W, respectively]. Thus reach planning influenced gaze precision. However, there was no significant difference in accuracy (mean errors) between the two tasks (P = 0.74, P = 0.82; K-S test). We did not find a correlation between reach and saccade end points within each target distribution [r = −0.122, r = −0.27 (Pearson correlation test) for animal O and animal W, respectively]. The scatter of reach end points was not significantly different from that of gaze end points in either animal (P = 0.689, P = 0.330; K-S test). The same observations held in our other two spatial condition configurations.
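The exact pooling behind the within-target correlation is not described. One way to compute it, assumed here, is to subtract each target's mean gaze and hand end point before correlating, so that target-to-target differences in position do not inflate r. The sketch works on one component (e.g., horizontal) at a time; `pearson_r` and `within_target_r` are hypothetical names.

```python
import math
from collections import defaultdict
from typing import Hashable, Iterable, List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def within_target_r(trials: Iterable[Tuple[Hashable, float, float]]) -> float:
    """Correlate gaze and hand end-point deviations from each target's own means.

    trials: (target_id, gaze_end_deg, hand_end_deg) for one component.
    """
    by_target = defaultdict(list)
    for target_id, gaze_end, hand_end in trials:
        by_target[target_id].append((gaze_end, hand_end))
    gaze_dev: List[float] = []
    hand_dev: List[float] = []
    for pairs in by_target.values():
        g_mean = sum(g for g, _ in pairs) / len(pairs)
        h_mean = sum(h for _, h in pairs) / len(pairs)
        for g, h in pairs:
            gaze_dev.append(g - g_mean)
            hand_dev.append(h - h_mean)
    return pearson_r(gaze_dev, hand_dev)
```

Without this per-target centering, end points pooled across targets would correlate strongly simply because gaze and hand both move toward the same target.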

Fig. 4.

95% Confidence ellipses for gaze and hand end points. A: gaze end points at the end of saccades in the centrifugal Gaze task for both animals. B: gaze end points at the end of saccades in the centrifugal Reach task for both animals. C: hand end points at the point of screen contact in the Reach task. The cluster of green dots shows the initial gaze position for each trial in both tasks. Black dots show target positions. Final gaze position was more precise in the Reach task (B) compared with the Gaze task (A) (P = 0.043, P = 0.017 for animal W and animal O, respectively).

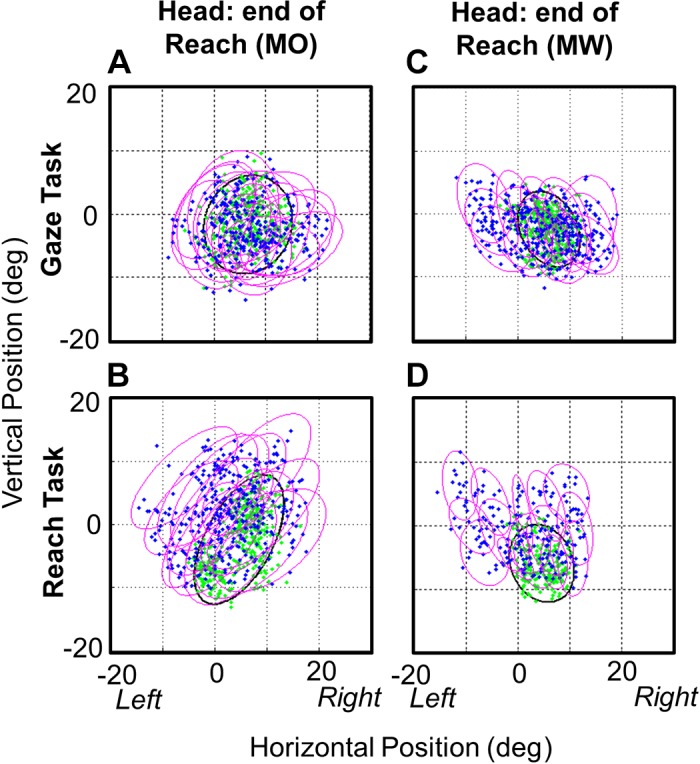

Figure 5 shows the 95% confidence ellipses for head end points (at the time of hand contact or its mean equivalent) for each target in both animals in the Gaze task (Fig. 5, A and C) and the Reach task (Fig. 5, B and D). Two observations follow. First, the scatter of final head position (i.e., ellipse size) was larger in the Reach task, especially in the vertical dimension: ellipse areas were significantly larger in the Reach task [P = 1.31E-06 and P = 0.0367 (K-S test) in animal O and animal W, respectively]. Second, the ellipses for different targets were more widely separated in the Reach task, suggesting that target position had more influence on final head position, presumably because the head moved more in the direction of the final gaze/target position.

Fig. 5.

95% Confidence ellipses for head end points. The head end points are plotted at the time when the hand touches the target in the Reach task (B and D) and at the equivalent average time in the Gaze task (A and C) for each of the 15 targets in the centrifugal condition and for both animals [monkey O (MO) and monkey W (MW)]. The areas of the ellipses fitted to the Gaze task head data were significantly smaller (across targets) than those in the Reach task (P = 1.31E-06, P = 0.0367), indicating that the head moves more in the Reach task.

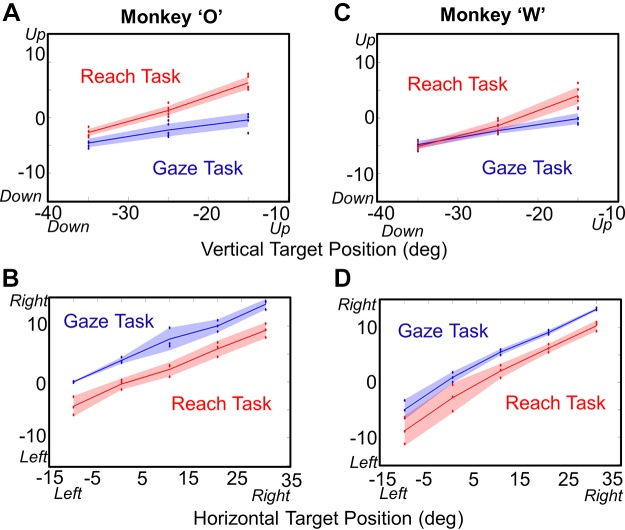

To test this assumption, we plotted final head position as a function of target position (Fig. 6), separating it into vertical (Fig. 6, A and C) and horizontal (Fig. 6, B and D) components. This plot yields several observations: 1) final head position depends on target position in both tasks and both directions; 2) in the Gaze task, the horizontal slope is steeper than the vertical slope; but 3) this is no longer true when the animal reaches. To quantify this, we performed one-way ANOVAs for each task in the vertical and horizontal directions separately. The Reach task showed a significant influence of target position in both the vertical direction [P = 9.78E-11, slope = 0.46 and P = 9.45E-12, slope = 0.45 (1-way ANOVA) in monkeys O and W, respectively] and the horizontal direction [P = 0.03, slope = 0.35 and P = 0.027, slope = 0.47 (1-way ANOVA) in monkeys O and W, respectively]. The Gaze task showed a significant influence of target position on final head position in the vertical direction [P = 8.71E-11, slope = 0.21 and P = 8.53E-11, slope = 0.23 (1-way ANOVA) in monkeys O and W, respectively] but not in the horizontal direction [P = 0.58, slope = 0.35 and P = 0.47, slope = 0.45 (1-way ANOVA) in animals O and W, respectively]. Compared with the Gaze task, the slope of the Reach relationship was significantly higher in the vertical direction [P = 0.005 and P = 0.01 (K-S test) in monkeys O and W, respectively] but not in the horizontal direction [P = 0.89, P = 0.136 (K-S test) in animals O and W, respectively]. We also noted that head positions in the Gaze data were biased to the right relative to the Reach data in both animals (or, equivalently, the Reach data were biased to the left, depending on which data set is used as the reference).
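The slopes quoted above summarize a linear fit of final head position against target position. A minimal least-squares sketch follows; the target and head values are invented for illustration, the function name is hypothetical, and the one-way ANOVA and K-S comparisons reported in the text are not reproduced here.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y on x (e.g., head vs. target, deg/deg)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("x must vary")
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return s_xy / s_xx


# Hypothetical vertical target positions (deg) and final head positions (deg)
targets = [-10.0, -10.0, 0.0, 0.0, 10.0, 10.0]
head = [-4.4, -4.8, 0.3, -0.3, 4.8, 4.4]
slope = ols_slope(targets, head)  # about 0.46 for these made-up values
```

A slope of 1 would mean the head alone carried gaze all the way to the target; values around 0.2 to 0.5, as in Fig. 6, mean the eyes still contribute a substantial eye-in-head component at the end of the trial.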

Fig. 6.

Final head position as a function of target position, plotted separately for the vertical (A and C) and horizontal (B and D) directions from the centrifugal Gaze task and the Reach task for animal O (A and B) and animal W (C and D). Each panel suggests a linear relationship between target position and final head position, where the slopes depend on both the direction of motion and the task (see text for slopes and statistics).

From these results it follows that, because the head moved more overall in the Reach task and yet gaze was equally accurate, the overall distribution of final eye-in-head positions (not shown) must have had a smaller range. This was indeed the case: the slope of eye-in-head position as a function of target position was significantly smaller in the vertical direction for both animals (P < 0.00001; K-S test).
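This inference can be made explicit with a first-order, one-dimensional approximation (an assumption that ignores the noncommutativity of 3D rotations): gaze position $G$ is the sum of eye-in-head position $E$ and head position $H$, so the slope $a_E$ of eye-in-head position against target position equals the gaze slope $a_G$ minus the head slope $a_H$. If gaze is accurate, $a_G \approx 1$. Inserting the vertical head slopes from Fig. 6 gives illustrative, not measured, eye-in-head slopes:

$$
\begin{aligned}
G &\approx E + H \;\Rightarrow\; E \approx G - H,\\
a_E &= a_G - a_H \approx 1 - a_H \qquad (a_G \approx 1),\\
\text{animal O:}\quad a_E^{\text{Reach}} &\approx 1 - 0.46 = 0.54, \qquad a_E^{\text{Gaze}} \approx 1 - 0.21 = 0.79,\\
\text{animal W:}\quad a_E^{\text{Reach}} &\approx 1 - 0.45 = 0.55, \qquad a_E^{\text{Gaze}} \approx 1 - 0.23 = 0.77.
\end{aligned}
$$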

Relative Timing of Events

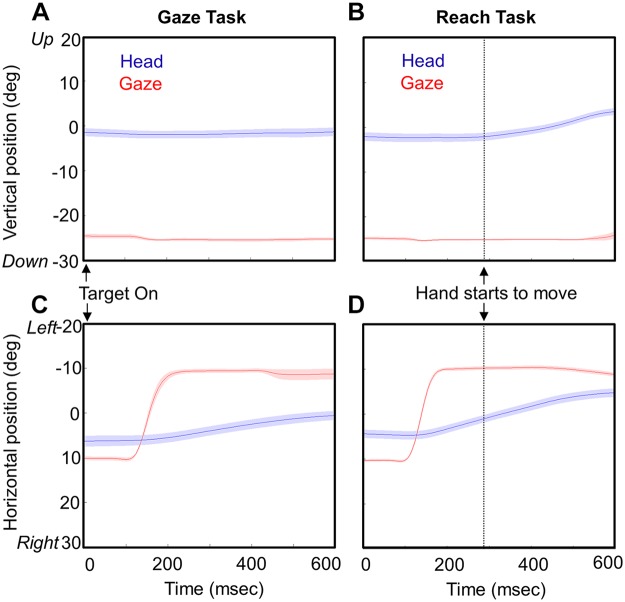

Figure 7 shows the typical time course of the gaze-head-hand coordination pattern that we observed. Vertical (Fig. 7, A and B) and horizontal (Fig. 7, C and D) components of gaze position and head position are shown for 25 trials toward the 20° leftward target in animal O, averaged across trials, from the Gaze task (Fig. 7, A and C) and the Reach task (Fig. 7, B and D). The dotted vertical line in each Reach plot marks the onset of hand motion, and traces end at the end of hand motion (or the mean equivalent time in the Gaze task). The mean duration of hand movements across all centrifugal targets was 254.90 ± 36.12 ms for animal O and 276.65 ± 42.13 ms for animal W.

Fig. 7.

Head and gaze movement trajectories as a function of time. Averaged eye (gaze) and head movement trajectories (±95% confidence intervals) are plotted in the vertical (A and B) and horizontal (C and D) dimensions in both the Gaze task (A and C) and the Reach task (B and D). Dotted vertical lines show the time when the hand starts to move in the Reach task. The end of the trajectory marks the time when the animal touches the target in the Reach task and the average equivalent time period in the Gaze task. This example is taken from the centrifugal task when the target appears 20° to the left of the fixation point. The head starts to move with gaze in the main direction of the gaze shifts (horizontal) but only starts to move with the hand in the other dimension (vertical). The variability in the head movement onset was weakly correlated with the reach onset time in vertical (r = −0.06, r = −0.51) and horizontal (r = −0.1273, r = −0.42) directions.

These movement trajectories reveal several differences in coordinated gaze-head movement between the Reach task and the Gaze task. First, as reported previously (Snyder et al. 2002), saccade reaction time was significantly shorter in the Reach task (107.27 ± 17.09 ms for animal O, 141.69 ± 39.18 ms for animal W) than in the Gaze task (133.14 ± 76.04 ms for animal O, 166.47 ± 102.75 ms for animal W) in both animals (P = 1.05E-07 for animal O, P = 0.00164 for animal W; K-S test). The mean reaction time of hand movement onset across all centrifugal targets was 312.37 ± 59.68 ms for animal O and 315.95 ± 93.05 ms for animal W. In both animals, hand reaction time correlated more closely with gaze reaction time (r = 0.4006, r = 0.6038) than with head reaction time (r = 0.153, r = 0.216; Pearson correlation test). The mean head reaction time across all centrifugal targets was 314.45 ± 138.84 ms for animal O and 324.65 ± 88.65 ms for animal W in the Reach task and 264.45 ± 158.97 ms for animal O and 311.75 ± 112.28 ms for animal W in the Gaze task. Second, gaze shifts were complete by the time the hand started moving, and gaze generally held its position until the hand made contact (meaning that the vestibuloocular reflex was engaged while the head continued to move). Third, in both tasks the head clearly starts to move around the time of the gaze shift (at least along the main component of the gaze shift), but in our task most of the head motion occurs after the gaze shift. Head velocity peaked significantly later than gaze velocity in both the Gaze task (animal O: 233.2 ± 167.7°/s, P < 0.00001; animal W: 142.52 ± 140°/s, P = 3.01E-31) and the Reach task (animal O: 300.6 ± 164.04°/s, P < 0.00001; animal W: 190.26 ± 156.3°/s, P = 2.81E-43). Fourth, this late head motion is greater in the Reach task, particularly in the vertical direction (as noted already).
Furthermore, in the vertical "off axis" component of this example, the head moves only during the reach, starting at reach onset.
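Reaction times of this kind are typically read off velocity traces. The sketch below assumes a simple criterion (the first sample whose absolute velocity exceeds a fixed threshold after target onset); the thresholds, the 1-kHz sampling interval, the traces, and the function name are all hypothetical and are not the detection criteria described in our Methods.

```python
def onset_latency_ms(positions, dt_ms, threshold_per_s, start_index=0):
    """Latency (ms) from start_index to the first supra-threshold sample.

    positions: position samples at a fixed interval dt_ms (deg for gaze/head).
    Velocity is estimated by a backward finite difference. Returns None
    if the threshold is never exceeded.
    """
    dt_s = dt_ms / 1000.0
    for i in range(start_index + 1, len(positions)):
        velocity = abs(positions[i] - positions[i - 1]) / dt_s
        if velocity > threshold_per_s:
            return (i - start_index) * dt_ms
    return None


# Hypothetical 1-kHz traces aligned to target onset (index 0)
gaze = [0.0] * 107 + [0.5 * k for k in range(1, 41)]
hand = [0.0] * 312 + [0.1 * k for k in range(1, 101)]
gaze_rt = onset_latency_ms(gaze, 1.0, 50.0)  # hypothetical 50 deg/s threshold
hand_rt = onset_latency_ms(hand, 1.0, 20.0)  # hypothetical 20 units/s threshold
lag = hand_rt - gaze_rt
```

Subtracting mean latencies in the same way matches the gaze-hand delays quoted in the Discussion: 312.37 − 107.27 = 205.10 ms for animal O and 315.95 − 141.69 = 174.26 ms for animal W, using the Reach-task saccade and hand reaction times reported above.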

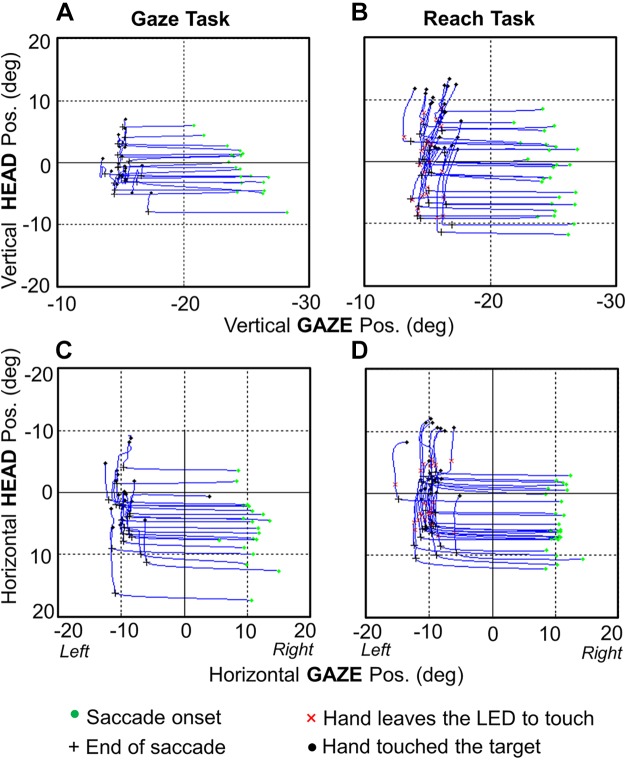

Figure 8 illustrates the relative timing of gaze-head-hand coordination with phase plots of head position as a function of gaze position. Here, the vertical (Fig. 8, A and B) and horizontal (Fig. 8, C and D) components of gaze shifts toward a single target are shown. Once again, head motion increased significantly from the Gaze task (Fig. 8, A and C) (20.74 ± 15.94° and 23.32 ± 13.84° in animals O and W, respectively) to the Reach task (Fig. 8, B and D) (36.43 ± 14.44° and 32.04 ± 13.84° in animals O and W, respectively). The main point here is that in all plots the saccade end point occurs at the inflection between gaze and head motion and, more importantly, that the period of hand motion encompasses most of the head movement. Indeed, in the vertical component (where reach had the most influence), reach onset coincided very closely with the gaze-head inflection point, more so than in the horizontal component, where the head began moving before the reach. Note that the period in which gaze is stable while the head continues to move corresponds to the vestibuloocular period.

Fig. 8.

Head trajectories as a function of gaze trajectories, coupled through time. The spatiotemporal coupling of head and gaze motion for 1 target (20° to the left of center) is shown in both the vertical dimension (A and B) and the horizontal dimension (C and D) for both the Gaze task (A and C) and the Reach task (B and D). Data are plotted from gaze onset until the hand contacts the target (or the average equivalent time in the Gaze task). Green dots indicate positions at gaze onset, black crosses indicate positions at the end of the saccade (in both tasks), red × symbols indicate the time when the hand starts moving in the Reach task, and black dots indicate the time when the target was touched on each trial. As in Fig. 7, data are from animal O, with the target 20° to the left of the fixation point. This figure shows clearly that most head motion occurred after the gaze shift and coincided with the hand movement.

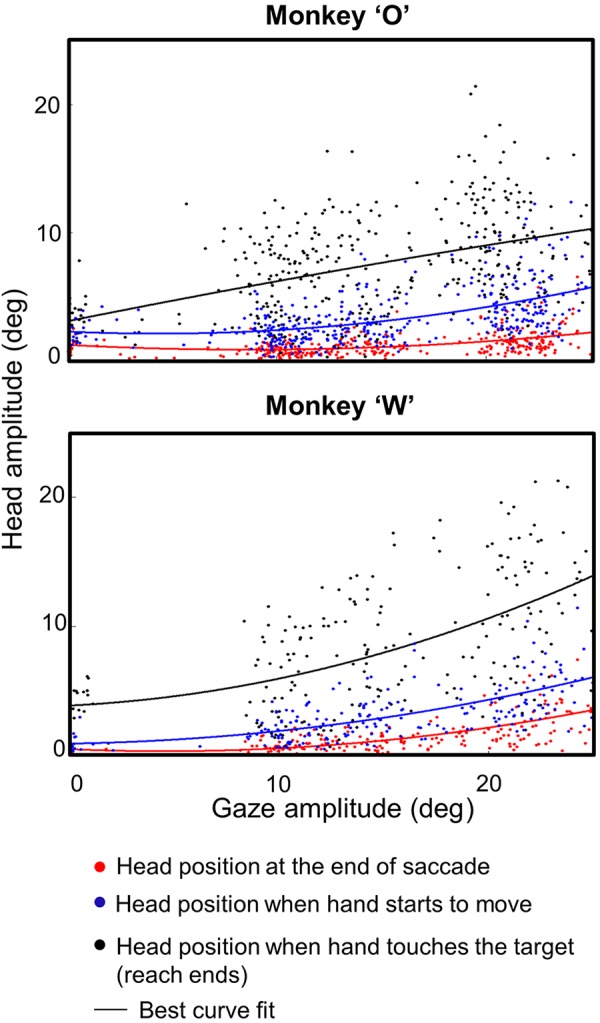

Figure 9 summarizes these observations across our entire centrifugal reach data set by plotting head amplitude as a function of gaze shift amplitude at three task intervals (end of saccade, start of reach, and end of reach). This figure points toward several observations. First, head amplitude increased as a function of gaze amplitude in the Reach task: we observed a significant positive correlation between gaze shift amplitude and head amplitude both at the end of the saccade (r = 0.4773 animal O, r = 0.6492 animal W; P < 0.0001, Pearson correlation test) and at the time of target touch (r = 0.338 animal O, r = 0.5493 animal W; P < 0.00001, Pearson correlation test). This was expected; here we were more interested in how these relationships changed over time.
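Sampling head amplitude at the three task intervals amounts to reading the head trace at three event times. A minimal sketch, assuming amplitude is the 2D Euclidean displacement of the head from its position at trial start (whether vector or component amplitude was used is an assumption here); the event names, indices, and trace values are hypothetical.

```python
import math


def head_amplitudes_at_events(head_trace, event_indices):
    """Head displacement (deg) from the first sample at each event.

    head_trace: list of (horizontal, vertical) head positions (deg).
    event_indices: mapping from a hypothetical event name to a sample index.
    """
    h0, v0 = head_trace[0]
    return {
        name: math.hypot(head_trace[i][0] - h0, head_trace[i][1] - v0)
        for name, i in event_indices.items()
    }


# Hypothetical trial: small head motion during the saccade, most during the reach
trace = [(0.0, 0.0), (0.6, 0.8), (0.9, 1.2), (3.0, 4.0)]
events = {"saccade_end": 1, "reach_start": 2, "reach_end": 3}
amps = head_amplitudes_at_events(trace, events)
```

Pooling these per-trial values against gaze amplitude, one event at a time, gives the three point clouds and fitted lines of Fig. 9.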

Fig. 9.

Head amplitude as a function of gaze amplitude. Head amplitude is shown at 3 stages of each trial for the entire centrifugal Reach data set in both monkeys. Red dots indicate the data derived at end of saccades, blue dots indicate data derived when the hand starts to move, and black dots indicate data derived when the target is touched. The line in each color indicates its best fit. The head amplitude increases as a function of gaze amplitude in both animals. A small amount of the total motion occurs during saccades (red) and between saccades and reach onset (blue), but most occurs between reach onset and offset (black).

Comparing the three task intervals in Fig. 9, one can see that most of the increased head motion occurs after the saccade, especially during the reach movement. Head amplitudes at saccade end, reach onset, and reach end differed significantly in both animals (P < 0.001; K-S test). Again, the change in head amplitude at the time of touch (or equivalent) was significantly greater in the Reach task than in controls (not shown) for both animal O (P = 0.00037; K-S test) and animal W (P = 0.000046; K-S test). The difference was more subtle at the end of the saccade but was still significant across all trials in both animals (P < 0.001; K-S test). As illustrated above (Figs. 7 and 8), however, these patterns become more extreme in some situations, e.g., in horizontal gaze shifts, where vertical head position changed only during the reach.
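Most comparisons in this section rely on the two-sample Kolmogorov-Smirnov test. The statistic itself is the largest vertical gap between the two empirical cumulative distribution functions, sketched below with hypothetical data. Only the statistic is computed; for a P value, one option is `scipy.stats.ks_2samp`, which returns both the statistic and a P value.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic D = max |F_a(x) - F_b(x)|.

    The empirical CDFs are step functions that only change at observed
    values, so evaluating at every pooled observation finds the maximum.
    """
    a = sorted(sample_a)
    b = sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    d = 0.0
    for x in a + b:
        f_a = bisect_right(a, x) / len(a)  # fraction of a that is <= x
        f_b = bisect_right(b, x) / len(b)
        d = max(d, abs(f_a - f_b))
    return d


# Hypothetical head amplitudes (deg) at saccade end vs. reach end
at_saccade_end = [0.8, 1.1, 1.4, 2.0]
at_reach_end = [5.2, 6.0, 7.5, 8.1]
d = ks_statistic(at_saccade_end, at_reach_end)  # 1.0: no overlap at all
```

Because the test compares whole distributions rather than means, it is sensitive to changes in spread and shape as well as location.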

Velocity-Amplitude Relationships

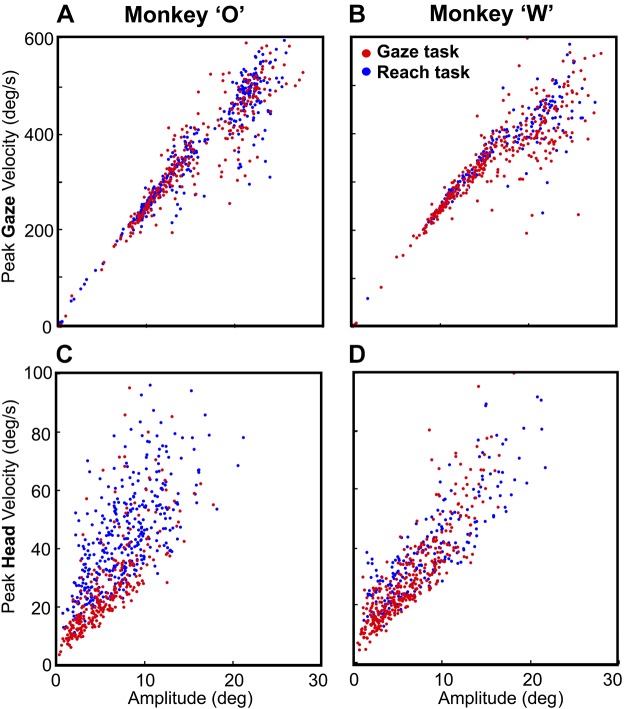

Figure 10, A and B, plot peak gaze velocity as a function of gaze amplitude for the centrifugal condition, contrasting the control gaze data and the experimental reach data for each animal. Peak gaze velocity (averaged across all 15 targets) was slightly higher in the Reach task (346.96 ± 134.22°/s, 334.69 ± 122.24°/s) than in the Gaze task (341.07 ± 141.54°/s, 325.92 ± 132.92°/s), but their velocity-amplitude distributions were not significantly different in K-S tests (P = 0.43 and P = 0.202, animals O and W, respectively).

Fig. 10.

Velocity-amplitude relationships for gaze and head motion. A and B show peak gaze velocity as a function of gaze amplitude in the Gaze task (red dots) vs. the Reach task (blue dots). These distributions were not significantly different in either animal (P = 0.43, P = 0.2; Kolmogorov–Smirnov test). C and D show peak head velocity as a function of head amplitude in the Gaze task vs. the Reach task in both animals. Head velocity was significantly higher in the Reach task for both animals (P = 2.38E-38, P = 8.21E-21; Kolmogorov–Smirnov test).

Likewise, Fig. 10, C and D, plot peak head velocity as a function of head amplitude for the centrifugal condition, contrasting the control gaze data and the experimental reach data for each animal. Averaged head velocity across all trials (combining all 15 targets) was 45.45 ± 16.64°/s and 40.65 ± 17.73°/s for the Reach task compared with 26.16 ± 16.08°/s and 25.27 ± 16.95°/s for the Gaze task for animals O and W, respectively. In this case, the peak velocity-amplitude distributions were significantly higher when gaze was accompanied by an arm movement (K-S test: P = 2.38E-38 and P = 8.21E-21 in animals O and W, respectively) compared with the gaze control task. Target position had a significant effect on head velocity in Reach and Gaze tasks for both animals (P < 0.001; 1-way ANOVA). Finally, we considered the recent study suggesting that human reach velocity correlates better with head than gaze motion (Reppert et al. 2018). Our setup was not designed to measure peak hand velocity, but consistent with Reppert et al. (2018), we found a higher correlation between mean hand velocity (total amplitude/total duration) and peak head velocity [r = 0.396 and r = 0.487 (Pearson correlation test) in animals W and O, respectively] compared with peak gaze velocity [r = 0.193 and r = 0.196 (Pearson correlation test) in animals W and O, respectively].
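The mean hand velocity defined above (total amplitude/total duration) and its Pearson correlation with peak head or peak gaze velocity can be sketched as follows. The per-trial values are hypothetical, and amplitude units are left generic because the units of hand amplitude are not stated in this section.

```python
import math


def mean_speed(amplitude, duration_ms):
    """Mean speed = total amplitude / total duration (amplitude units per s)."""
    return amplitude / (duration_ms / 1000.0)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    s_yy = sum((yi - mean_y) ** 2 for yi in y)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("both samples must vary")
    return s_xy / math.sqrt(s_xx * s_yy)


# Hypothetical per-trial (amplitude, duration in ms) and peak head velocity (deg/s)
hand_speed = [mean_speed(a, d) for a, d in [(20.0, 250.0), (25.0, 260.0), (30.0, 280.0)]]
peak_head_velocity = [30.0, 42.0, 51.0]
r_head = pearson_r(hand_speed, peak_head_velocity)
```

The same `pearson_r` call with peak gaze velocity in place of peak head velocity gives the comparison correlation.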

Influence of Initial Gaze and Hand Position

We repeated the analyses described above for our variable initial hand (Fig. 2B2) and variable initial gaze (Fig. 2B3) data sets and found the same general results (summarized statistically in Table 1). Rather than repeat those confirmatory results at length, here we report additional analyses designed specifically to test the influence of initial gaze and hand position. A statistical summary of these results is provided in Table 2. Some of the significant effects can be explained by known kinematic rules. For example, initial gaze position influenced gaze and head velocities (in the Reach task), but this is expected simply because it altered movement amplitude for a given target, because head motion correlates with gaze motion, and because overall the head moved more during reach. Likewise, initial hand position influenced hand speed by altering hand amplitude. In contrast, initial hand position had no influence on gaze or head kinematics or latencies (Table 2).

Table 1.

Statistical summary of differences in gaze parameters between Gaze task and Reach task in initial gaze position condition

| Behavioral Parameter | Animal | Gaze Task | Reach Task | Statistical Difference |
|---|---|---|---|---|
| Gaze latency, ms | W | 151.365 ± 122.547 | 129.828 ± 117.812 | **P = 0.00015** |
| | O | 118.381 ± 83.928 | 111.986 ± 43.071 | **P = 0.033** |
| Gaze velocity, °/s | W | 246.569 ± 158.906 | 270.381 ± 184.561 | P = 0.092 |
| | O | 343.533 ± 182.027 | 359.578 ± 237.177 | P = 0.641 |
| Gaze precision, °² | W | 28.791 ± 11.512 | 22.218 ± 8.956 | **P = 0.015** |
| | O | 30.191 ± 13.358 | 24.316 ± 9.452 | **P = 0.005** |
| Head amplitude at end of saccade, ° | W | 1.654 ± 2.186 | 1.851 ± 2.104 | **P = 0.0028** |
| | O | 0.967 ± 0.934 | 1.399 ± 1.653 | **P = 0.0047** |
| Head amplitude at time of target touch, ° | W | 6.561 ± 5.980 | 7.781 ± 4.862 | **P = 0.0089** |
| | O | 6.157 ± 4.542 | 8.033 ± 4.428 | **P < 0.00001** |
| Head velocity, °/s | W | 29.313 ± 21.013 | 36.269 ± 19.281 | **P = 0.00006** |
| | O | 28.115 ± 14.984 | 44.678 ± 15.991 | **P < 0.00001** |

Values are means ± SD. Analysis performed for variable initial gaze position condition in control (Gaze task) and experiment (Reach task). The results were similar to the centrifugal condition. Head amplitude at time of target touch in the Gaze task was taken at the mean time of touch calculated in the Reach task. P values arise from 1-way ANOVA tests. Significant values are bolded.

Table 2.

Statistical summary of influence of initial gaze position condition and initial hand position condition

| Condition | Animal | Gaze Reaction Time | Head Reaction Time | Hand Reaction Time | Gaze Speed | Head Speed | Hand Speed |
|---|---|---|---|---|---|---|---|
| Initial gaze position (Gaze task) | W | 0.276\*\* | 0.323\* | n/a | **<0.001**\* | 0.564\* | n/a |
| | O | 0.358\*\* | 0.939\* | n/a | **<0.001**\* | 0.231\* | n/a |
| Initial gaze position (Reach task) | W | **<0.00001**\*\* | 0.327\* | **0.003**\* | **0.0004**\* | **<0.001**\* | 0.126\* |
| | O | **<0.00001**\*\* | 0.962\* | **0.001**\* | **0.0008**\* | **<0.001**\* | 0.461\* |
| Initial hand position (Reach task) | W | 0.265\*\* | 0.711\* | 0.187\* | 0.671\* | 0.328\* | **0.0006**\* |
| | O | 0.415\*\* | 0.845\* | 0.306\* | 0.954\* | 0.136\* | **0.0015**\* |

Values are P values arising from 1-way ANOVA tests for influence of initial gaze position and initial hand position on gaze and reach parameters. Significant values are bolded.

\*Test for a main effect in a 1-way ANOVA.

\*\*Test for interaction in a 2-way ANOVA.

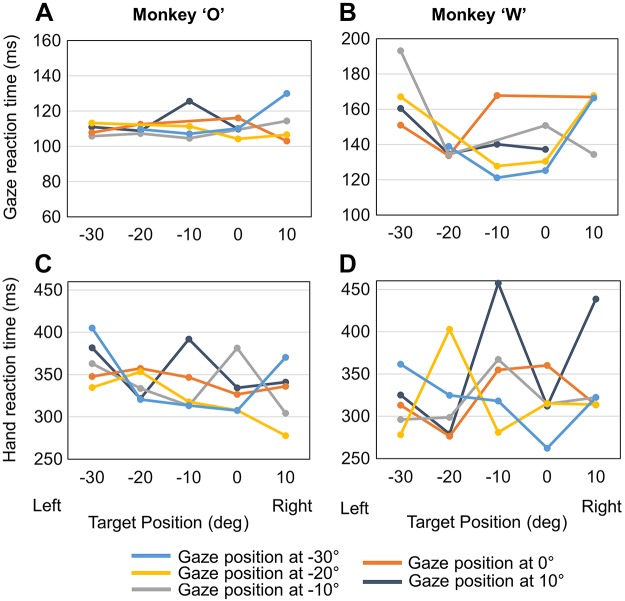

More interestingly, initial gaze position influenced reaction time for both gaze and hand motion (Fig. 11). Specifically, there was a significant interaction between initial gaze position and target position on gaze reaction time in the Reach task in both animals (Fig. 11, A and B), but not in the Gaze task. In other words, during reaches the influence of initial gaze position on gaze reaction time depended on target position. Initial gaze position also had a significant influence on hand reaction time in both animal O and animal W (Fig. 11, C and D). Taken together, these findings suggest that gaze position influences both gaze and hand reaction time during reaches. Inspection of Fig. 11 suggests a general trend (i.e., in some but not all cases) for reaction time to decrease for left fixation points/right targets, possibly reflecting a right visual field advantage during reach.

Fig. 11.

Gaze and hand reaction times in the Reach task plotted as a function of target position. Reaction times were calculated relative to fixation offset/target onset time. A and B: mean gaze reaction times for each initial gaze location (color coded) in both animals. C and D: mean hand reaction times for each initial gaze position (color coded) in both animals.

DISCUSSION

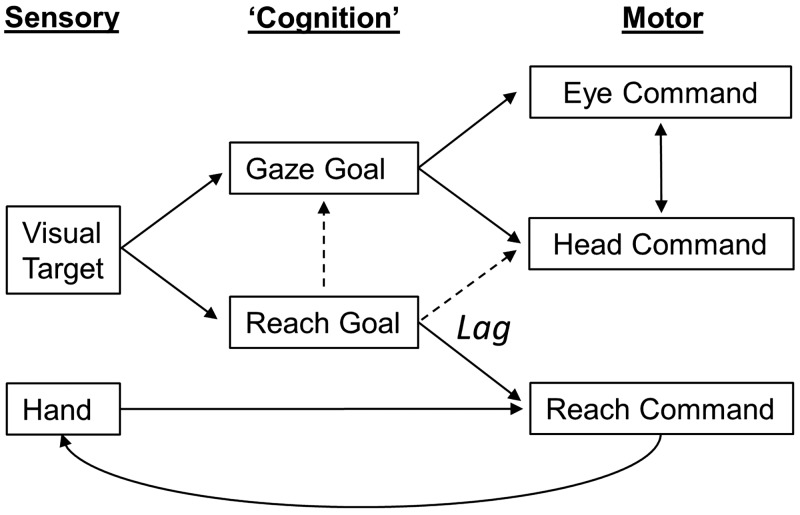

We investigated the influence of reaching on gaze and head movement in rhesus macaques and found several interrelationships between these effectors. In our reach-for-reward task, animals typically first initiated a saccade toward the target, followed by head movement in the same general direction, and finally reached out to touch the target. More importantly, we found that the head moved more in the Reach task, particularly in the vertical direction and for the duration of the reach. We also observed that saccade precision increased, saccade latency decreased, and head velocity increased (for a given amplitude) when a reach movement accompanied the gaze shift. These observations suggest a considerable degree of coupling between eye, head, and reach control systems (Fig. 12), likely, as we shall argue, for the optimization of gaze kinematics for the visual control of reach.

Fig. 12.

Conceptual model of gaze-head-hand coordination. Gaze goals in eye-centered coordinates are thought to drive both gaze and head commands, which in turn influence each other during the gaze shift (Freedman 2008; Guitton et al. 1990). Reach goals derived from the visual target are compared to hand position estimates derived from proprioception and vision of the hand to drive the reach command and in turn influence the hand position (curved line) through internal feedback and actual motion (Bédard and Sanes 2009). This transformation appears to lag the gaze-head command. The present study suggests that the reach goal also influences gaze and head commands (dashed lines), directly and/or through visual feedback.

Optimization of Gaze for Reaching

Reaching had three main influences on gaze shifts in our animals. First, as reported previously in head-restrained human and monkey studies (Sailer et al. 2016; Snyder et al. 2002), reaches decreased saccade latency. Consistent with most human studies (Biguer et al. 1982; Carnahan and Marteniuk 1991), the recruitment order of our animals' movements was gaze, head, and then hand. To some extent, this may be due to the relative excitation-contraction coupling times and inertia of these plants, but these cannot entirely account for the lag between initial gaze/head motion and reach motion. This order appears to reflect an intentional strategy to foveate the target (which remained on for the duration of the hand movement) before the hand started moving. Second, as also reported in head-unrestrained studies, we found that hand motion altered the "main sequence" of velocity-amplitude relationships for gaze shifts, i.e., providing higher velocities for the same gaze shift amplitude (Snyder et al. 2002). It has previously been reported that reaching increases saccade velocities in humans and monkeys (Snyder et al. 2002). It has also been reported that hand velocity (and latency) tends to correlate with head velocity (and latency) (Smeets et al. 1996). Finally, reaches increased gaze precision despite the absence of a gaze reward. Conversely, it has been reported that head movement increases both gaze and reach accuracy (Biguer et al. 1984). The net effect of these factors is that in our task targets were foveated more rapidly and precisely before the reach even began.

Taken together, these factors suggest that, contrary to some suggestions (Vercher et al. 1994), visual gaze "scouting ahead" is important for planning a reach movement (Bowman et al. 2009; Kurz et al. 2017; Pelz and Canosa 2001), presumably to help guide reach transport. In our simple task, gaze remained fixed on the target until the reach was complete. A simple explanation for this is the potential advantage of foveal vision for aiming and guiding the hand movement. Consistent with this, another report found that hand pointing accuracy decreased when target fixation was not stabilized before the target was extinguished (Vercher et al. 1994). Finally, reaching and pointing are even more accurate when gaze is aligned with the target in complete darkness (Bock 1986; Henriques et al. 1998; Henriques and Crawford 2000). This suggests that the internal sense of gaze direction (efference copy) can also be used to guide the hand (Bock 1986). Likewise, the influence of initial gaze position on both gaze and hand latency in our Reach task might be due both to simple visual factors (e.g., variable and distant peripheral retinal positions) and to the increased computational load of accounting for noisy gaze position signals when transforming vision into reach commands (Blohm and Crawford 2007; Gauthier et al. 1990). Alternatively, as suggested by some of the data, it could simply reflect a visual field advantage. The general trend toward a right visual field advantage observed here seems to make ecological sense for the right hand (which appears more often on the right), but the opposite has been reported in humans (Woods et al. 2015). In practice, it seems likely that all of these factors contribute to eye-hand coordination strategies, depending on visual conditions.

Eye-Head Coupling and Head Contribution

Some studies have supported the notion that the early signals for gaze control in the cortex and superior colliculus primarily take the shape of an undifferentiated, eye-centered command for 2D gaze direction, with default levels of 3D eye-head coordination determined downstream (Freedman and Sparks 1997a; Klier et al. 2001, 2003; Sadeh et al. 2015; Sajad et al. 2016). However, this story becomes more complex when one considers both the various types of interaction between the eye and head and their degree of independent control. Various studies have demonstrated both 2D interactions between eye and head position (Freedman and Sparks 1997a; Gandhi and Sparks 2001; Tweed and Vilis 1990) and 3D interactions (Crawford et al. 1999; Monteon et al. 2012; Tweed and Vilis 1990). As a result, most recent mechanistic models of eye-head coordination include signals for cross talk between eye and head control (Daemi and Crawford 2015; Daye et al. 2014; Freedman 2001; Tweed and Vilis 1990). On the other hand, the high degree of context dependence of relative eye-head contribution that has been observed in various studies suggests the potential for independent control (Constantin et al. 2004; Crawford and Guitton 1997; Freedman and Sparks 1997a; Fuller 1992; Gandhi and Sparks 2001; Kowler et al. 1992; Ron and Berthoz 1991; Stahl 2001). The present investigation is generally consistent with these previous studies of eye-head coordination in macaques but extends those results to eye-head-hand coordination, where the following new findings must also be considered.

Optimization of Head Motion for Reach

The present study suggests an additional drive for head motion in the presence of reach, in terms of peak amplitudes and velocities. This was already statistically significant during saccades (the head contribution to gaze) but was much more obvious during the prolonged head motion that accompanied subsequent reaches. This was particularly the case in the vertical dimension, but this might simply be because the head usually moves more horizontally than vertically in the absence of reach (Crawford and Guitton 1997; Goossens and Van Opstal 1997; Tweed et al. 1995). Since the head moved more horizontally than vertically in the Gaze task, the increase in vertical head motion in the Reach task thus tended to equalize the horizontal and vertical components of head motion (Fig. 6). There was also a constant bias in horizontal head position between the two tasks, but from our data it is unclear whether this was due to the hand used (right in this case) and/or simply a postural adjustment resulting from opening the hand aperture.

In our data set, the additional vertical head movement components appeared to follow the timing of the reach rather than the saccade. To our knowledge, this additional head drive has not been reported previously. Reppert et al. (2018) briefly compared gaze kinematics with and without reach but reported a decrease in head velocity during reaches, opposite to the increase we observed. This was not the focus of their report, so it is unclear whether our disparate results were due to the tasks or to the way we analyzed our data. It does not appear to be a species difference, because we have obtained similar preliminary results in a human study (Al Tahan and Crawford 2018).

The increased head drive in our study appears to be related more to the reach goal than to the reach vector, because in our data set the reach-related head movement was correlated with target position and was not modulated by initial hand position. Because the vestibuloocular reflex stabilizes gaze by counter-rotating the eye opposite to the head after saccades (Guitton 1992; Snyder and King 1992), this increased head motion does not change gaze direction. To some extent this occurred in both of our tasks, but why would it be enhanced during reaches relative to ordinary gaze shifts? One possibility is that ordinary gaze shifts, except toward very close targets, are primarily concerned with redirecting gaze. In contrast, depth information becomes very important during 3D reaches (as in our setup), especially as the hand must decelerate and contact a specific depth in space. It has been shown that changes in eye-in-head orientation complicate depth vision (Blohm et al. 2008; Schreiber et al. 2001) and that depth vision is superior in the central oculomotor range. Therefore, it may be advantageous for the 3D visuomotor transformation if increased head motion drives the eye (in head) toward the central range during reaches.

Possible Mechanisms

Figure 12 provides a simplified conceptual model for the main gaze-head-hand interactions we observed in this study. As in most recent gaze-control models, the visual stimulus is used to derive an undifferentiated gaze goal, which then drives semi-independent control systems for gaze and the head (the arrow between these in Fig. 12 indicates that they interact). However, to aim the hand in depth, the brain must compare 3D estimates of initial hand position and target position to derive the desired movement vector (Sober and Sabes 2003). To indicate coordination between these systems, we have provided additional inputs (dashed arrows in Fig. 12) from the reach goal command to both the gaze command (to explain our gaze latency, velocity, and accuracy results) and the longer-lasting head command (to explain the hand-head linkages reported here and in Reppert et al. 2018). This cross talk could take the form of relatively direct (and likely learned) neural connections, but if our visual hypothesis is correct it might also be driven indirectly through heightened visual attention to the reach target and, later, to the distance between the hand and target. We do not provide feedback from the hand to the gaze system here because we did not find an influence of hand position on gaze, but this is likely task dependent. In this visual reach task the gaze signal likely drives the hand, whereas in somatosensory-driven tasks there is a strong influence of hand position on saccade metrics (Ren et al. 2006, 2007).

Note the lag introduced somewhere between the goal and the head and arm commands (Fig. 12). This lag is added to account for the observation that in our data set most of the head (and reach) movement occurred after the gaze shift, with both ending at approximately the same time in the Reach task (Figs. 7 and 8). Although some inertial lag of the head relative to the eye is expected, it is not unusual in some gaze tasks for head motion to begin during the saccade in rapid gaze shifts (Freedman and Sparks 1997a; Guitton 1992; Roy and Cullen 1998). Likewise, mechanics alone may not account for the delay we observed between gaze motion and hand motion (205.1 ms in animal O and 174.26 ms in animal W), and even if they did, the system could choose to delay the gaze shift until the hand moves. Thus this lag seems to be part of a deliberate strategy, again 1) to allow time for gaze to reach the target first so that foveal vision can be used to aim accurate reach movements and then 2) to coordinate head and arm motion so that the head is positioned to optimize depth vision at the point of final contact (and, in most real-world tasks, during manipulation of the object).

The possible neural substrates for gaze-hand coordination have been the topic of numerous reviews (Battaglia-Mayer et al. 2001; Hwang et al. 2014; Marconi et al. 2001). In contrast, very little is known about the higher-level control mechanisms for head motion. One possibility is that the hand-head influence is implemented at the brain stem level. Although the superior colliculus is best known for its gaze signals (Freedman and Sparks 1997b; Munoz et al. 1991; Sadeh et al. 2015), it also shows head-related responses (Walton et al. 2007), and these responses become far more prominent when animals are cued to produce larger head movements (Monteon et al. 2012). Finally, the deep layers of the superior colliculus also show reach-related modulations (Stuphorn et al. 2000; Werner 1993). It has been proposed that these modulations could mediate reach-related increases in eye velocity; likewise, they might mediate the reach-related changes in head motion described here. The cerebellum, which has been proposed to play a role in eye-hand coordination (Miall et al. 2001), might also be involved.

Alternatively, these effects could be mediated by cortex. Although some studies emphasize the predominance of gaze signals in frontal gaze control structures (Knight and Fuchs 2007; Martinez-Trujillo et al. 2003; Monteon et al. 2010; Sajad et al. 2016), others have suggested the presence of at least some degree of independent head control signals in frontal cortex (Chapman et al. 2012; Knight 2012). In particular, stimulation of the frontal eye field sometimes produces head motion that centers the eye in the head (Chen 2006), similar to the head movements we observed here during reach. In theory, inputs from adjacent reach control centers such as dorsal and ventral premotor cortex could modulate these responses during reach. Other experiments show that inactivating the parietal reach region during coordinated saccade and reach movements (Hwang et al. 2014) alters the temporal coupling of these movements while also changing the reach and saccade end points. Furthermore, the gaze-hand coordination circuit could include a larger frontal-parietal network (Battaglia-Mayer et al. 2001; Marconi et al. 2001; Pesaran et al. 2006), as well as the supplementary eye fields (Mushiake et al. 1996) and the superior colliculus (Song and McPeek 2015). Taken together, these areas could implement the network illustrated schematically in Fig. 12.

Conclusions

Nonhuman primates have been used extensively as animal models for human eye-head and eye-hand coordination, but to our knowledge eye-head-hand coordination had not previously been studied in monkeys. Although we did not observe any influence of gaze parameters on reach in the present study, we did observe several instances of reach influencing gaze and eye-head coordination. First, we observed that reaches improved gaze accuracy and confirmed that they "sped up" the gaze shift in terms of both latency and velocity. Most interestingly, we observed increased head motion in the direction of the gaze/reach target, coupled to the duration of the reach. This was accompanied by an increase in head velocity for a given head amplitude. As noted above, these adaptations may bring the fovea rapidly onto the target to aim reach transport and may optimize depth vision during the final contact phase of touch (which might be even more important during grasp). In general, these observations expand on the notion that the gaze system is a "slave" to the reach system during eye-head-hand coordination (Crawford 1994), in the sense that it optimizes vision for successful reaching.

GRANTS

This study was supported by the Canadian Institutes of Health Research and a Canada Research Chair (Tier 1) held by J. D. Crawford. V. Bharmauria was supported by a Natural Sciences and Engineering Research Council CREATE-IRTG fellowship and a Vision: Science to Applications (VISTA) fellowship. H. Wang and X. Yan were supported by VISTA.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

J.D.C. conceived and designed research; H.K.A., X.Y., and H.W. performed experiments; H.K.A. and S.S. analyzed data; H.K.A. and J.D.C. interpreted results of experiments; H.K.A. prepared figures; H.K.A. and J.D.C. drafted manuscript; V.B. and J.D.C. edited and revised manuscript; H.K.A., V.B., X.Y., S.S., H.W., and J.D.C. approved final version of manuscript.

ACKNOWLEDGMENTS

The authors thank Dr. Veronica Nacher for critical comments on the manuscript.

REFERENCES

- Al-Tahan H, Crawford JD. To reach or not to reach: coordination of eye, head and hand movements during visually guided reach (Abstract). Neuroscience 2018, San Diego, CA, November 3–7, 2018, p. 399.23/LL6.

- Battaglia-Mayer A, Ferraina S, Genovesio A, Marconi B, Squatrito S, Molinari M, Lacquaniti F, Caminiti R. Eye-hand coordination during reaching. II. An analysis of the relationships between visuomanual signals in parietal cortex and parieto-frontal association projections. Cereb Cortex 11: 528–544, 2001. doi: 10.1093/cercor/11.6.528.

- Bédard P, Sanes JN. Gaze and hand position effects on finger-movement-related human brain activation. J Neurophysiol 101: 834–842, 2009. doi: 10.1152/jn.90683.2008.

- Biguer B, Jeannerod M, Prablanc C. The coordination of eye, head, and arm movements during reaching at a single visual target. Exp Brain Res 46: 301–304, 1982. doi: 10.1007/BF00237188.

- Biguer B, Prablanc C, Jeannerod M. The contribution of coordinated eye and head movements in hand pointing accuracy. Exp Brain Res 55: 462–469, 1984. doi: 10.1007/BF00235277.

- Bizzi E, Kalil RE, Tagliasco V. Eye-head coordination in monkeys: evidence for centrally patterned organization. Science 173: 452–454, 1971. doi: 10.1126/science.173.3995.452.

- Blohm G, Crawford JD. Computations for geometrically accurate visually guided reaching in 3-D space. J Vis 7: 4, 2007. doi: 10.1167/7.5.4.

- Blohm G, Khan AZ, Ren L, Schreiber KM, Crawford JD. Depth estimation from retinal disparity requires eye and head orientation signals. J Vis 8: 3, 2008. doi: 10.1167/8.16.3.

- Bock O. Contribution of retinal versus extraretinal signals towards visual localization in goal-directed movements. Exp Brain Res 64: 476–482, 1986. doi: 10.1007/BF00340484.

- Bowman MC, Johansson RS, Flanagan JR. Eye-hand coordination in a sequential target contact task. Exp Brain Res 195: 273–283, 2009. [Erratum in Exp Brain Res 208: 309, 2011.] doi: 10.1007/s00221-009-1781-x.

- Carnahan H, Marteniuk RG. The temporal organization of hand, eye, and head movements during reaching and pointing. J Mot Behav 23: 109–119, 1991. doi: 10.1080/00222895.1991.9942028.

- Chapman BB, Pace MA, Cushing SL, Corneil BD. Recruitment of a contralateral head turning synergy by stimulation of monkey supplementary eye fields. J Neurophysiol 107: 1694–1710, 2012. doi: 10.1152/jn.00487.2011.

- Chen LL. Head movements evoked by electrical stimulation in the frontal eye field of the monkey: evidence for independent eye and head control. J Neurophysiol 95: 3528–3542, 2006. doi: 10.1152/jn.01320.2005.

- Choi WY, Guitton D. Responses of collicular fixation neurons to gaze shift perturbations in head-unrestrained monkey reveal gaze feedback control. Neuron 50: 491–505, 2006. doi: 10.1016/j.neuron.2006.03.032.

- Collins CJ, Barnes GR. Independent control of head and gaze movements during head-free pursuit in humans. J Physiol 515: 299–314, 1999. doi: 10.1111/j.1469-7793.1999.299ad.x.

- Constantin AG, Wang H, Crawford JD. Role of superior colliculus in adaptive eye-head coordination during gaze shifts. J Neurophysiol 92: 2168–2184, 2004. doi: 10.1152/jn.00103.2004.

- Crawford JD. The oculomotor neural integrator uses a behavior-related coordinate system. J Neurosci 14: 6911–6923, 1994. doi: 10.1523/JNEUROSCI.14-11-06911.1994.

- Crawford JD, Ceylan MZ, Klier EM, Guitton D. Three-dimensional eye-head coordination during gaze saccades in the primate. J Neurophysiol 81: 1760–1782, 1999. doi: 10.1152/jn.1999.81.4.1760.

- Crawford JD, Guitton D. Primate head-free saccade generator implements a desired (post-VOR) eye position command by anticipating intended head motion. J Neurophysiol 78: 2811–2816, 1997. doi: 10.1152/jn.1997.78.5.2811.

- Daemi M, Crawford JD. A kinematic model for 3-D head-free gaze-shifts. Front Comput Neurosci 9: 72, 2015. doi: 10.3389/fncom.2015.00072.

- Daye PM, Optican LM, Blohm G, Lefèvre P. Hierarchical control of two-dimensional gaze saccades. J Comput Neurosci 36: 355–382, 2014. doi: 10.1007/s10827-013-0477-1.

- Dean HL, Martí D, Tsui E, Rinzel J, Pesaran B. Reaction time correlations during eye-hand coordination: behavior and modeling. J Neurosci 31: 2399–2412, 2011. doi: 10.1523/JNEUROSCI.4591-10.2011.

- Ferraina S, Johnson PB, Garasto MR, Battaglia-Mayer A, Ercolani L, Bianchi L, Lacquaniti F, Caminiti R. Combination of hand and gaze signals during reaching: activity in parietal area 7m of the monkey. J Neurophysiol 77: 1034–1038, 1997. doi: 10.1152/jn.1997.77.2.1034.

- Freedman EG. Interactions between eye and head control signals can account for movement kinematics. Biol Cybern 84: 453–462, 2001. doi: 10.1007/PL00007989.

- Freedman EG. Coordination of the eyes and head during visual orienting. Exp Brain Res 190: 369–387, 2008. doi: 10.1007/s00221-008-1504-8.

- Freedman EG, Sparks DL. Eye-head coordination during head-unrestrained gaze shifts in rhesus monkeys. J Neurophysiol 77: 2328–2348, 1997a. doi: 10.1152/jn.1997.77.5.2328.

- Freedman EG, Sparks DL. Activity of cells in the deeper layers of the superior colliculus of the rhesus monkey: evidence for a gaze displacement command. J Neurophysiol 78: 1669–1690, 1997b. doi: 10.1152/jn.1997.78.3.1669.

- Freedman EG, Sparks DL. Coordination of the eyes and head: movement kinematics. Exp Brain Res 131: 22–32, 2000. doi: 10.1007/s002219900296.

- Fuller JH. Head movement propensity. Exp Brain Res 92: 152–164, 1992. doi: 10.1007/BF00230391.

- Gandhi NJ, Katnani HA. Motor functions of the superior colliculus. Annu Rev Neurosci 34: 205–231, 2011. doi: 10.1146/annurev-neuro-061010-113728.

- Gandhi NJ, Sparks DL. Experimental control of eye and head positions prior to head-unrestrained gaze shifts in monkey. Vision Res 41: 3243–3254, 2001. doi: 10.1016/S0042-6989(01)00054-2.

- Gauthier GM, Nommay D, Vercher JL. The role of ocular muscle proprioception in visual localization of targets. Science 249: 58–61, 1990. doi: 10.1126/science.2367852.

- Goossens HH, Van Opstal AJ. Human eye-head coordination in two dimensions under different sensorimotor conditions. Exp Brain Res 114: 542–560, 1997. doi: 10.1007/PL00005663.

- Guitton D. Control of eye-head coordination during orienting gaze shifts. Trends Neurosci 15: 174–179, 1992. doi: 10.1016/0166-2236(92)90169-9.

- Guitton D, Munoz DP, Galiana HL. Gaze control in the cat: studies and modeling of the coupling between orienting eye and head movements in different behavioral tasks. J Neurophysiol 64: 509–531, 1990. doi: 10.1152/jn.1990.64.2.509.

- Hawkins KM, Sayegh P, Yan X, Crawford JD, Sergio LE. Neural activity in superior parietal cortex during rule-based visual-motor transformations. J Cogn Neurosci 25: 436–454, 2013. doi: 10.1162/jocn_a_00318.

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD. Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci 18: 1583–1594, 1998. doi: 10.1523/JNEUROSCI.18-04-01583.1998.

- Henriques DY, Crawford JD. Direction-dependent distortions of retinocentric space in the visuomotor transformation for pointing. Exp Brain Res 132: 179–194, 2000. doi: 10.1007/s002210000340.

- Henriques DY, Crawford JD. Role of eye, head, and shoulder geometry in the planning of accurate arm movements. J Neurophysiol 87: 1677–1685, 2002. doi: 10.1152/jn.00509.2001.