Abstract

Background Health systems often employ interruptive alerts through the electronic health record to improve patient care. However, concerns about “alert fatigue” have been raised, highlighting the importance of understanding the time burden and impact of these alerts on providers.

Objectives Our main objective was to determine the total time providers spent on interruptive alerts in both inpatient and outpatient settings. Our secondary objectives were to analyze dwell time for individual alerts and examine both provider and alert-related factors associated with dwell time variance.

Methods We retrospectively evaluated use of and response to the 75 most common interruptive (“popup”) alerts between June 1st, 2015 and November 1st, 2016 in a large academic health care system. Alert “dwell time” was calculated as the time between an alert appearing on a provider's screen and the alert being closed. The total number of alerts and the total dwell time per provider per month were calculated for inpatient and outpatient alerts and compared across alert types.

Results The median number of alerts seen by a provider was 12 per month (IQR 4–34). Overall, 67% of inpatient and 39% of outpatient alerts were closed in under 3 seconds. Alerts related to patient safety and those requiring more than a single click to proceed had significantly longer median dwell times of 5.2 and 6.7 seconds, respectively. The median total monthly time spent by providers viewing alerts was 49 seconds on inpatient alerts and 28 seconds on outpatient alerts.

Conclusion Most alerts were closed in under 3 seconds and a provider's total time spent on alerts was less than 1 min/mo. Alert fatigue may stem from the interruptive and noncritical nature of alerts rather than from their time burden. Monitoring alert interaction time can function as a valuable metric to assess the impact of alerts on workflow and potentially identify routinely ignored alerts.

Keywords: electronic health records, workflow, decision support systems, patient care, medical order entry systems

Background and Significance

Electronic health records (EHRs) have the capability to improve patient care and safety through forms of clinical decision support (CDS) such as smart order-sets and provider alerts. 1 One frequently utilized mechanism is the “interruptive alert” (also known as “popup alert”) that requires a response before the person may return to their previous action. While such alerts can improve safety, provider dissatisfaction with automated EHR interruptions is high. 2 3 Moreover, when alerts fire too often, they cause alert fatigue, decreasing the effectiveness of these alerts with time. 3 4 5 Nevertheless, utilization of EHR-based alerts is increasing as a means of EHR decision support. 3 6 7 8 9

Objectives

To date, studies of interruptive alerts have generally focused on the overall frequency of the alert and/or response (acceptance vs. rejection) to them. 10 11 Less is known regarding the amount of time spent viewing any particular alert (also known as “dwell time”) or the cumulative time burden of EHR-based alerts in practice. 1 3 12 To better understand how much time providers spent interacting with interruptive alerts at a health system level, we recorded when an alert fired and when it was acted upon to calculate alert dwell time. We then sought to (1) determine the total time providers spent on interruptive alerts in both inpatient and outpatient settings, (2) analyze dwell time for individual alerts, and (3) examine both provider and alert-related factors associated with dwell time variance.

Methods

Time stamps (in tenths of seconds) were created for interruptive alerts that fired for providers at the Duke University Health System, which uses the Epic Systems EHR (Verona, WI), between June 1st, 2015 and November 1st, 2016. All interruptive alerts seen by an attending physician, resident, or advanced practice provider (physician assistant or nurse practitioner) across three covered hospitals and all outpatient clinics were included if they required some interaction, such as answering a question, acknowledging the alert, or closing the interruptive box. We excluded drug–drug interaction and drug-duplicate alerts, as well as passive background alerts (which allow providers to decide when to interact with them), because accurate time data could not be calculated for either category.

The alert dwell time was calculated as the number of seconds between when an alert was presented on the screen and when an action taken allowed it to be dismissed. To exclude interactions where the provider may have left the EHR or the patient record for an alternative task, interactions with extreme dwell times (>10 minutes) were excluded.
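The dwell-time definition and the 10-minute exclusion can be illustrated with a minimal Python sketch. The function names, the `MAX_DWELL_SECONDS` constant, and the sample timestamps are assumptions made for illustration; they are not taken from the study's actual code or data.

```python
from datetime import datetime, timedelta

MAX_DWELL_SECONDS = 600.0  # 10-minute cutoff described in the text


def dwell_seconds(shown_at, closed_at):
    """Seconds between the alert appearing and being dismissed, to 0.1 s."""
    return round((closed_at - shown_at).total_seconds(), 1)


def valid_dwell_times(events):
    """Return dwell times, dropping interactions longer than the cutoff."""
    dwell = [dwell_seconds(shown, closed) for shown, closed in events]
    return [d for d in dwell if d <= MAX_DWELL_SECONDS]


# Hypothetical (shown, closed) timestamp pairs, for illustration only.
t0 = datetime(2015, 6, 1, 9, 0, 0)
events = [
    (t0, t0 + timedelta(seconds=2.4)),
    (t0, t0 + timedelta(seconds=7.1)),
    (t0, t0 + timedelta(minutes=12)),  # excluded: longer than 10 minutes
]
kept = valid_dwell_times(events)
print(kept)
```

In this sketch the 12-minute interaction is dropped, mirroring the assumption that the provider had left the EHR or the patient record for another task.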

The 75 most common alerts (representing >95% of firings for these categories) were reviewed independently by two authors (P.E. and A.M.N.), and sorted into four groups by message content: (1) prompt to fill in missing workflow data, (2) billing and documentation requirements, (3) forgotten action deemed important for clinical care, and (4) patient safety alerts requiring clinician review. No adjudication was required in the categorization process. Examples of each type of alert can be found in Appendix A.

Appendix A. Number of unique alerts and top three most common alerts by category.

| Alert category | Text display |
|---|---|
| Prompt to fill in missing workflow data | • A dosing weight has not been entered for this patient. If dosing weight is not entered, proper dosing support may not be provided in order entry. Please enter a dosing weight before proceeding to place orders. |
| | • A follow-up provider has not been designated. |
| | • Patient has an allergy review status of “unable to assess” or “in progress.” Please review and update allergies. |
| | ...(+4)... |
| Billing and documentation requirements | • Delivery summary not signed. Please return to this section to complete. |
| | • Payor requires admission order and attending cosign prior to procedure. |
| | • This patient does not currently have an order for admission. |
| | ...(+8)... |
| Forgotten action | • Allergies have not been verified during this encounter. |
| | • Patient's eyes are dilated. Please offer sunglasses. |
| | • Nurse has indicated that a provider should decide whether to give influenza vaccine to this patient. |
| | ...(+16)... |
| Patient safety | • An anticoagulant and an epidural (or existing epidural/regional access) are still in place and have both been ordered on this patient. Check with anesthesiology before proceeding. |
| | • Use of iodinated intravenous contrast is strongly discouraged in patients with significant renal impairment (Cr > 2.0). |
| | • Warning: This patient APPEARS TO NEED IRRADIATED blood products (based on age, diagnosis, or medication) but you have ordered NON-irradiated blood products. Please choose from the orders below as appropriate. |
| | ...(+56)... |

Descriptive statistics were calculated for the total number of alerts seen by alert type, provider type, and location. Because not all providers practiced for the entire 17-month observation period, we evaluated the burden of alerts per provider-month (PM), defined as any month in which a provider saw at least one patient within the health system. We then examined the total time spent on alerts for the average provider and the distribution of time spent on individual alerts. Mean time spent with an alert open was then calculated, as well as variance by action taken on an alert. To understand how time burden and interaction patterns may change as providers become accustomed to a specific alert, we analyzed variance over time when two new outpatient alerts were introduced to the health system related to unmanaged hypertension and atrial fibrillation. Differences in frequency of alerts by provider type and location were calculated using chi-square tests. Variance in time was calculated using analysis of variance and t-tests. All analyses were completed using SPSS (version 23.0, Chicago, IL) and Python (version 2.7, Beaverton, OR). The study was approved by the Duke University Institutional Review Board (IRB PRO 00069720).
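As an illustration of the provider-month aggregation described above, the following Python sketch groups alert records by provider and calendar month and then takes medians across provider-months. The row layout, identifiers, and values are hypothetical. The sketch also only sees provider-months with at least one alert, whereas the study defines a PM by patient contact, which would require encounter data not modeled here.

```python
from collections import defaultdict
from statistics import median


def summarize_provider_months(alerts):
    """Aggregate (provider_id, month, dwell_seconds) rows per provider-month."""
    counts = defaultdict(int)
    total_dwell = defaultdict(float)
    for provider_id, month, dwell in alerts:
        key = (provider_id, month)
        counts[key] += 1           # number of alerts in this provider-month
        total_dwell[key] += dwell  # cumulative dwell time in seconds
    return counts, total_dwell


# Hypothetical rows: (provider_id, "YYYY-MM", dwell time in seconds).
alerts = [
    ("p1", "2015-06", 2.0), ("p1", "2015-06", 3.0), ("p1", "2015-07", 4.0),
    ("p2", "2015-06", 1.5), ("p2", "2015-06", 2.5), ("p2", "2015-06", 6.0),
]
counts, total_dwell = summarize_provider_months(alerts)
median_alerts_per_pm = median(counts.values())
median_dwell_per_pm = median(total_dwell.values())
print(median_alerts_per_pm, median_dwell_per_pm)
```

Reporting medians across provider-months, rather than means, keeps a small number of heavily alerted providers from dominating the summary.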

Results

Over the 17-month study period, 3,796 unique providers were exposed to at least one alert. These 3,796 providers contributed a total of 35,622 PMs in the dataset. A total of 1,226,644 interruptive alerts were fired during this time period, corresponding to 72,155 alerts per month in the health system (Table 1). The majority of the alerts fired during inpatient encounters (73.6% of all alerts). The median time providers spent on individual alerts was slightly higher in the outpatient setting, but both were short (3.6 seconds vs. 2.4 seconds per alert, p < 0.001). In both the inpatient and outpatient settings, the most common types of alerts were those that notified providers of data missing for care workflow and those that prompted clinicians to complete forgotten actions. Patient safety alerts were the least prevalent type of alert but had the highest dwell times compared with all three other groups (median 5.2 vs. 2.6 seconds, p < 0.001).

Table 1. Frequency and time burden of alerts by alert type and setting.

| Alert category | Average alerts fired per month (%) | Median time per alert, s (IQR) | Total time per month (min) | Portion of dwell time (%) |
|---|---|---|---|---|
| Inpatient | | | | |
| Prompt to fill in missing workflow data | 26,126 (49.2) | 2.1 (1.4–3.2) | 1,530 | 43.4 |
| Billing and documentation requirements | 6,824 (12.9) | 2.6 (2.3–4.3) | 561 | 15.9 |
| Forgotten action | 17,812 (33.5) | 2.2 (1.3–3.5) | 1,025 | 29.1 |
| Patient safety | 2,342 (4.4) | 5.2 (2.3–11.7) | 407 | 11.6 |
| Subtotal | 53,103 (100) | 2.4 (1.4–3.8) | 3,524 | 100 |
| Outpatient | | | | |
| Prompt to fill in missing workflow data | 6,805 (35.8) | 3.3 (2.5–4.9) | 714 | 31.5 |
| Billing and documentation requirements | 1,110 (5.8) | 4.4 (2.3–7.0) | 162 | 7.1 |
| Forgotten action | 10,410 (54.6) | 3.7 (2.7–4.5) | 1,242 | 55.0 |
| Patient safety | 728 (3.8) | 5.3 (3.1–11.4) | 144 | 6.4 |
| Subtotal | 19,052 (100) | 3.6 (2.9–5.5) | 2,262 | 100 |
| Overall | 72,155 | 2.2 (2.1–3.7) | 5,786 | |

Abbreviation: IQR, interquartile range.

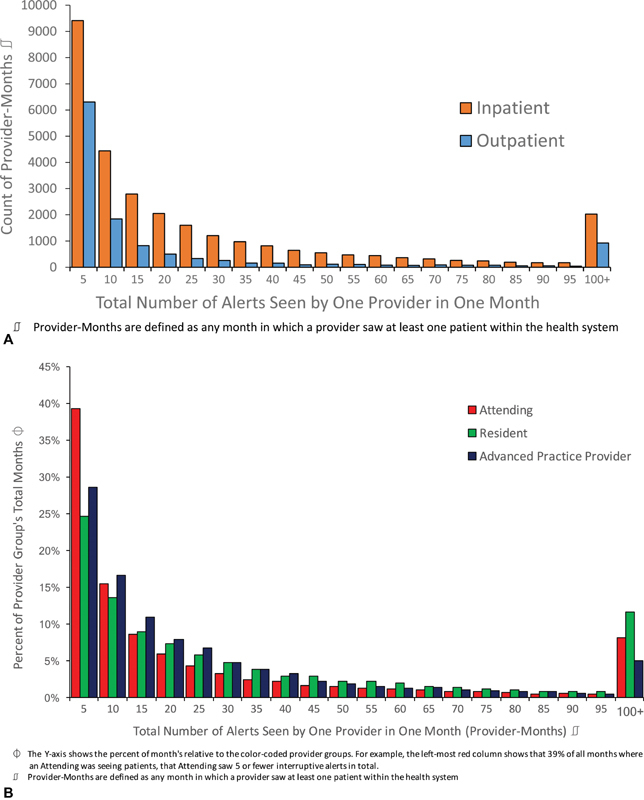

The median number of alerts per month per provider was 12 (IQR 4–34). Fig. 1 shows the number of alerts seen per provider by setting and provider type.

Fig. 1. (A) Alerts seen per unique provider-month by setting. (B) Cumulative alerts seen per month by provider type.

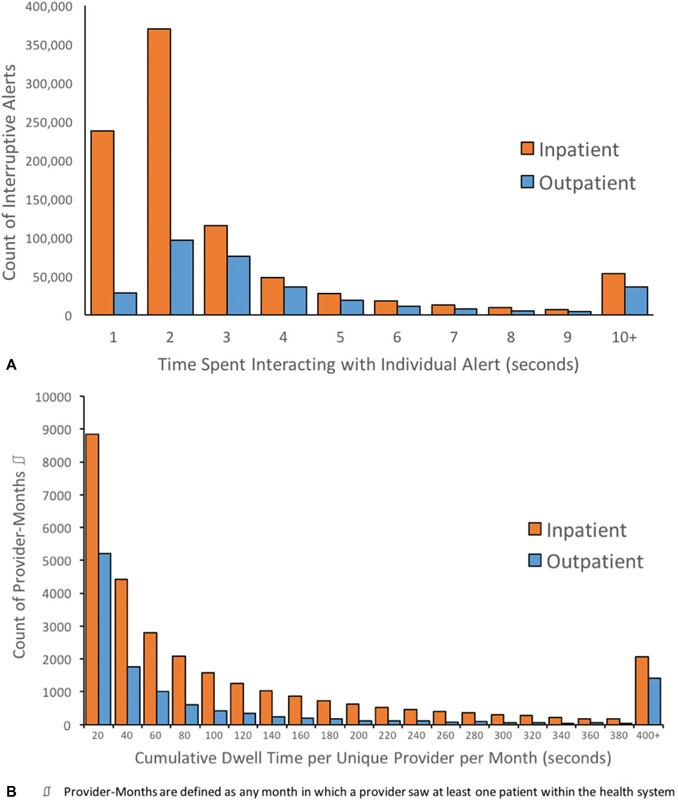

The amount of time each individual alert remained open also varied, as shown in Fig. 2A. For inpatient encounters, 26.4% of alerts were closed in under 2 seconds, 67.4% in under 3 seconds, and 80.1% in under 4 seconds. For outpatient encounters, 9.0% of alerts were closed in under 2 seconds, 38.8% in under 3 seconds, and 62.2% in under 4 seconds.
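The cumulative shares reported above are simple threshold counts over the dwell-time distribution. A minimal Python sketch, using a hypothetical sample rather than study data and an illustrative function name, shows the calculation.

```python
def share_closed_under(dwell_times, threshold_s):
    """Percentage of alerts whose dwell time is strictly below the threshold."""
    if not dwell_times:
        raise ValueError("no dwell times supplied")
    closed = sum(1 for d in dwell_times if d < threshold_s)
    return 100.0 * closed / len(dwell_times)


# Hypothetical dwell times in seconds, for illustration only.
sample = [1.2, 1.8, 2.4, 2.9, 3.5, 3.9, 4.6, 8.0, 2.2, 15.0]
shares = {t: share_closed_under(sample, t) for t in (2, 3, 4)}
print(shares)
```

The sketch reads "under" as a strict inequality; that is an assumption about how the thresholds were applied.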

Fig. 2. (A) Distribution of individual alert dwell times. (B) Cumulative dwell time spent on interruptive alerts per provider per month.

Fig. 2B shows the cumulative dwell time individual providers spent per month interacting with alerts. The median cumulative monthly dwell time for inpatient alerts was 49 seconds while for outpatient alerts it was 28 seconds. Resident physicians had the highest average cumulative dwell times per PM (174.8 seconds per PM), followed by attending physicians (174.0 seconds per PM), and advanced practice providers (130.3 seconds per PM), largely as a product of differing number of alerts rather than different dwell times.

In the health system studied, providers spent 5,786 minutes overall per month (3,524 inpatient and 2,262 outpatient) interacting with interruptive alerts (Table 1). The amount of time providers spent interacting with alerts varied substantially. The top 5% of providers (n = 189) saw 41.2% of all alerts but contributed only 8.4% of all PMs. This group comprised 49% attendings, 40% residents, and 11% advanced practice providers. Compared with all providers, residents were significantly overrepresented in the group with the highest interruptive alert burden (40 vs. 31%, p = 0.036). Among the top 5%, the average number of alerts was 169 per PM, and the average cumulative dwell time was 787.7 seconds per PM.
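The figure of 169 alerts per PM for the top 5% of providers can be checked from the shares reported in this section. The short Python sketch below is a back-of-the-envelope check, not the study's calculation. Its variable names are illustrative, and the all-provider mean it prints is derived here from the reported totals rather than stated in the source.

```python
# Totals reported in the Results section.
total_alerts = 1_226_644
total_pms = 35_622

# Shares attributed to the top 5% of providers.
share_of_alerts = 0.412
share_of_pms = 0.084

top_alerts = share_of_alerts * total_alerts
top_pms = share_of_pms * total_pms

# Mean alerts per provider-month, top 5% versus all providers.
alerts_per_pm_top = top_alerts / top_pms
alerts_per_pm_all = total_alerts / total_pms
print(round(alerts_per_pm_top), round(alerts_per_pm_all, 1))
```

The result for the top 5% rounds to 169 alerts per PM, in agreement with the reported average.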

Table 2 shows that the amount of time providers spent on an alert varied based on the response required to close the alert. In both inpatient and outpatient settings, alerts that could be cancelled, exited, or accepted/acknowledged with a single click required the least amount of time (median 2.2–2.3 seconds per inpatient alert, 3.5–3.6 seconds for outpatient alerts). The most time-intensive alerts required a provider to reply to a warning by either entering free-response text or choosing from a menu of options, with median times of 7.1 seconds for inpatient and 6.0 seconds for outpatient alerts.

Table 2. Time spent on alerts by response.

| Provider required action to close alert | Median time per alert (s) | Interquartile range (s) | Number of alerts fired |
|---|---|---|---|
| Inpatient | | | |
| Accept alert | 2.2 | 1.6–3.2 | 455,908 |
| Cancel or exit alert | 2.5 | 2.1–3.9 | 406,516 |
| Modify an order | 3.1 | 2.9–6.5 | 23,455 |
| Provide typed/dropdown response | 7.1 | 4.2–15.0 | 16,873 |
| Outpatient | | | |
| Accept alert | 3.5 | 2.2–4.5 | 175,309 |
| Cancel or exit alert | 3.1 | 2.9–5.6 | 124,464 |
| Modify an order | 3.4 | 2.8–7.1 | 11,627 |
| Provide typed/dropdown response | 6.0 | 4.7–12.3 | 12,492 |

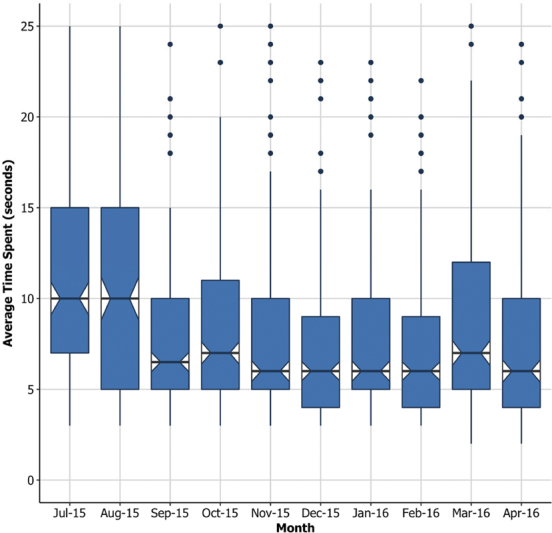

Over the course of the study period, a new alert was introduced in the clinical setting for a subset of physicians. This alert fired when patients had uncontrolled blood pressure (BP) and required that a provider acknowledge the BP value and choose among scripted options (or provide a free-text response) to explain their intended response to the value. Fig. 3 shows median provider dwell time on the alert per month after the alert was released. The median dwell time for the new alert was 10 seconds in the first 2 months after release. It fell to 6 seconds in the third month and remained there for the rest of the study period.
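A month-by-month trend like this one can be produced by grouping dwell times by the number of months since the alert's release and taking the median of each group. The Python sketch below uses hypothetical values chosen only to resemble the reported pattern; the function name and row layout are assumptions.

```python
from collections import defaultdict
from statistics import median


def monthly_median_dwell(rows):
    """Median dwell time keyed by whole months since the alert was released."""
    by_month = defaultdict(list)
    for months_since_release, dwell in rows:
        by_month[months_since_release].append(dwell)
    return {m: median(v) for m, v in sorted(by_month.items())}


# Hypothetical (month since release, dwell seconds) rows, not study data.
rows = [
    (1, 9.0), (1, 10.0), (1, 12.0),
    (2, 8.5), (2, 10.0), (2, 11.0),
    (3, 5.0), (3, 6.0), (3, 7.5),
    (4, 5.5), (4, 6.0), (4, 6.5),
]
trend = monthly_median_dwell(rows)
print(trend)
```

A health system could track the same series for any newly released alert to see when dwell times stop changing.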

Fig. 3. Average dwell time on a newly introduced outpatient alert over time.

Discussion

As EHRs become more widely used, interruptive alerts are increasingly employed for a variety of purposes, ranging from patient safety alerts to documentation and billing requirements. At this large university health system, interruptive alerts fired on average 72,155 times per month, for a total of 5,786 minutes, or nearly 100 hours, of total provider effort per month. However, when viewed across all providers, most spent only a few seconds responding to each alert and a total of less than 1 min/month interacting with interruptive alerts.
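The system-level figures follow from the totals in the Results section by simple unit conversion. The Python sketch below is only an arithmetic check with illustrative variable names. The mean seconds per alert it prints is derived here from the reported totals and is not a figure stated in the source.

```python
# Totals reported in the Results section and Table 1.
total_alerts = 1_226_644
study_months = 17
total_minutes_per_month = 5_786  # 3,524 inpatient + 2,262 outpatient

alerts_per_month = total_alerts / study_months
hours_per_month = total_minutes_per_month / 60

# Derived mean dwell time per alert (seconds); an illustration only.
mean_seconds_per_alert = total_minutes_per_month * 60 / alerts_per_month
print(round(alerts_per_month), round(hours_per_month, 1),
      round(mean_seconds_per_alert, 1))
```

The derived mean sits above the per-alert medians in Table 1, which would be consistent with a right-skewed dwell-time distribution.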

Our data provide a new framework from which to understand alert fatigue. Although some providers do spend considerable time interacting with alerts, the median time per provider per month interacting with inpatient and outpatient alerts was under 1 minute. Given this relatively small amount of time spent managing alerts, it is unlikely that the time burden of alerts is the cause of alert fatigue. Rather, alert fatigue may be more related to distraction from other tasks and the interruption from workflow. 13 14 15 16 17 Interruptions in workflow are known to be a significant driver in orders being placed on incorrect patients and other patient safety events. 18 19 20

While the cumulative time burden on providers for alerts may underestimate providers' perceived burden of alerts, assessing the time providers spend with alerts may lead to important insights about the potential effectiveness of an alert. A large number of alerts, including two-thirds of inpatient alerts, were closed in under 3 seconds. We cannot rule out that providers are recognizing these alerts, processing the information, and choosing a response in this time frame. It may be possible that as providers interact more and more with the same alerts, they are able to understand the information and dismiss them quickly. However, the rapid time to close the alerts raises the possibility that providers are closing many alerts as quickly as possible, and may not be reading the text or processing the information presented. It is likely that a large proportion of alerts closed in <2 seconds are due to habituated responses with limited conscious intention. 15 If this is the case, the frequency of interruption from these alerts, and resulting frustration by providers who are interrupted, may not be leading to any net clinical benefit.

Dwell time may correspond to the clinical importance of the alerts. Patient safety alerts had the longest dwell times in both the inpatient and outpatient settings. This suggests that providers do spend more time interacting with some clinically relevant alerts. One alternative explanation for the longer dwell times for safety alerts, however, is that these alerts were less common and may have been harder to interpret, and were therefore not quickly or automatically dismissed by providers. Only 4.3% of all alert firings fell in this category even though the vast majority of the unique alerts we evaluated, 59 of 75, belonged to this group. Additionally, these alerts were more likely to require a provider to type in an acknowledgment or change an order, which also contributed to longer average interaction times. When alerts are critically important, modifications such as these may help increase their impact or prevent automatic and habituated closing by providers.

The introduction of a new alert during our study period offered an opportunity for a natural experiment to assess how providers become accustomed to a new alert over time. As part of a hypertension improvement program, a group of outpatient providers saw an interruptive alert when their patients had uncontrolled blood pressure and were seen in the clinic. Providers were asked to check a box to explain the reason for hypertension or planned response. Interaction times with this alert decreased as providers became increasingly familiar with the alert. In this case, it took 3 months for provider dwell times to stabilize, from a median of 10 to 6 seconds.

Our study had several limitations. First, this is a single-center experience, and the results may not be generalizable to other centers. However, the EHR utilized (Epic) is representative of most academic medical centers and has medical records for over half the U.S. population across all installations. 16 Next, we were not able to analyze interruptive alerts due to medication interactions as these occurred during the ordering process and could not be time-stamped. Finally, while we evaluated the time spent on alerts, we were unable to determine the degree to which time spent was associated with alert effectiveness or change in care plan. 21

As learning health systems attempt to decrease alert fatigue and improve the quality of their CDS, understanding both the volume and time burden of interruptive alerts will be critical. Even when alerts take small amounts of time to close, the volume and frequency of interruption can still contribute to alert fatigue. Institutions should decrease the use of interruptive alerts for administrative and billing reasons as we found they currently represent the majority of alerts and should consider using interruptive alerts only when necessary for patient safety. When alerts are critical, requiring providers to have multistep interactions with the alert (rather than clicking a single box to close) may increase the time spent thinking about the patient safety question raised.

Future research should be conducted to determine the degree to which time spent with an alert corresponds to the desired patient safety outcomes. When alerts are routinely closed in under 2 seconds, health systems should consider whether they are truly benefitting care or if they ought to be turned off.
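One way a health system might act on this suggestion is to screen for alerts whose median dwell time falls below 2 seconds. The Python sketch below is a hypothetical illustration: the alert identifiers, the `min_firings` guard against judging rarely fired alerts, and the sample values are all assumptions, and a flagged alert would be a candidate for human review rather than automatic removal.

```python
from statistics import median


def flag_quickly_closed(dwell_by_alert, threshold_s=2.0, min_firings=3):
    """Return alert IDs whose median dwell time is below the threshold."""
    flagged = []
    for alert_id, dwell in dwell_by_alert.items():
        # Skip alerts with too few firings to give a stable median.
        if len(dwell) >= min_firings and median(dwell) < threshold_s:
            flagged.append(alert_id)
    return sorted(flagged)


# Hypothetical dwell times (seconds) per alert, for illustration only.
dwell_by_alert = {
    "missing_dosing_weight": [1.1, 1.4, 1.9, 2.3],
    "contrast_renal_warning": [4.8, 5.2, 11.0],
    "rare_alert": [1.0],
}
candidates = flag_quickly_closed(dwell_by_alert)
print(candidates)
```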

Conclusion

Alert interaction time can represent a valuable metric in assessing alert responses, in part by allowing determination of their productivity costs. It can also provide important information for health systems to determine the best way to utilize interruptive alerts to improve patient care while minimizing the perceived burden on providers. In addition to tracking the cumulative time spent on alerts, analyzing time spent on individual alerts can help health systems both assess the potential impact of these alerts on workflow and identify alerts that are not useful to providers.

Clinical Relevance Statement

In this retrospective study, we looked at 1.2 million interruptive alerts over 17 months and found the median number of alerts per provider per month was 12. Providers spent a median of 49 seconds viewing inpatient alerts and 28 seconds on outpatient alerts per month. The cumulative time burden of interruptive alerts on providers appears minimal. A majority of alerts were closed in under 3 seconds. These short interaction times suggest that many alerts marked as acknowledged may never be read.

Multiple Choice Questions

1. What is one way that institutions could reduce alert fatigue and increase the impact of interruptive alerts?

a. Increase frequency of alerts.

b. Reduce billing-related alerts.

c. Increase noncritical alerts.

d. Use alerts only when unrelated to patient safety.

Correct Answer: The correct answer is option b. Our study found that average dwell time and cumulative time burden associated with interruptive alerts are minimal—suggesting that alert fatigue may be attributed to the frequency of alerts, rather than the time spent on alerts, and that providers are likely dismissing alerts without fully reading them. One potential solution to this problem would be to decrease the number of noncritical, billing-related alerts—allowing providers to prioritize critical alerts.

2. The most common interruptive alerts are for which category of information?

a. Missing workflow data.

b. Billing and documentation requirements.

c. Forgotten action deemed important for clinical care.

d. Patient safety alerts triggered requiring clinician review.

Correct Answer: The correct answer is option a. Interruptive alerts are an important part of EHR systems, and thus are likely to increase in both prevalence and importance in coming years. Currently, most interruptive alerts are used for administrative tasks, such as missing workflow data and billing and documentation requirements. Decreasing the number of alerts related to administrative tasks could increase the amount of time that providers spend interacting with critical alerts, reducing alert fatigue, and increasing the impact of interruptive alerts.

Acknowledgments

The authors would like to acknowledge the help of Dr. David Bates and Ms. Kara McArthur in reviewing this article and providing thoughtful improvements throughout the manuscript process.

Conflict of Interest B.W. reports that he receives royalties from Lippincott Williams & Wilkins and McGraw-Hill for writing/editing several books on patient safety and information technology in health care; receives stock options for serving on the board of Acuity Medical Management Systems; receives a yearly stipend for serving on the board of The Doctors Company; serves on the scientific advisory boards for Amino.com, PatientSafe Solutions, Twine, and EarlySense (for which he receives stock options); consults with Commure (for which he receives a stipend and stock options); has a small royalty stake in CareWeb, a hospital communication tool developed at The University of California, San Francisco. A.M.N. reports that she has received significant research grants from Amgen, Sanofi, and Regeneron and served as a consultant or advisory board member for Amgen and Sanofi.

Protection of Human and Animal Subjects

No human and/or animal subjects were included in the project. The research was limited to a retrospective review of de-identified data, and no personally identifiable information was collected.

References

- 1. Embi P J, Leonard A C. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. J Am Med Inform Assoc. 2012;19(e1):e145–e148.

- 2. Bates D W, Leape L L, Cullen D J et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280(15):1311–1316. doi: 10.1001/jama.280.15.1311.

- 3. McDaniel R B, Burlison J D, Baker D K et al. Alert dwell time: introduction of a measure to evaluate interruptive clinical decision support alerts. J Am Med Inform Assoc. 2016;23(e1):e138–e141.

- 4. Slight S P, Seger D L, Nanji K C et al. Are we heeding the warning signs? Examining providers' overrides of computerized drug-drug interaction alerts in primary care. PLoS One. 2013;8(12):e85071. doi: 10.1371/journal.pone.0085071.

- 5. Baysari M T, Tariq A, Day R O, Westbrook J I. Alert override as a habitual behavior – a new perspective on a persistent problem. J Am Med Inform Assoc. 2017;24(02):409–412. doi: 10.1093/jamia/ocw072.

- 6. Rehr C A, Wong A, Seger D L, Bates D W. Determining inappropriate medication alerts from “inaccurate warning” overrides in the intensive care unit. Appl Clin Inform. 2018;9(02):268–274. doi: 10.1055/s-0038-1642608.

- 7. Nanji K C, Slight S P, Seger D L et al. Overrides of medication-related clinical decision support alerts in outpatients. J Am Med Inform Assoc. 2014;21(03):487–491. doi: 10.1136/amiajnl-2013-001813.

- 8. Meslin S MM, Zheng W Y, Day R O, Tay E MY, Baysari M T. Evaluation of clinical relevance of drug-drug interaction alerts prior to implementation. Appl Clin Inform. 2018;9(04):849–855. doi: 10.1055/s-0038-1676039.

- 9. Kassakian S Z, Yackel T R, Gorman P N, Dorr D A. Clinical decisions support malfunctions in a commercial electronic health record. Appl Clin Inform. 2017;8(03):910–923. doi: 10.4338/ACI-2017-01-RA-0006.

- 10. Payne T H, Hines L E, Chan R C et al. Recommendations to improve the usability of drug-drug interaction clinical decision support alerts. J Am Med Inform Assoc. 2015;22(06):1243–1250. doi: 10.1093/jamia/ocv011.

- 11. Ojeleye O, Avery A, Gupta V, Boyd M. The evidence for the effectiveness of safety alerts in electronic patient medication record systems at the point of pharmacy order entry: a systematic review. BMC Med Inform Decis Mak. 2013;13(01):69. doi: 10.1186/1472-6947-13-69.

- 12. Weingart S N, Simchowitz B, Shiman L et al. Clinicians' assessments of electronic medication safety alerts in ambulatory care. Arch Intern Med. 2009;169(17):1627–1632. doi: 10.1001/archinternmed.2009.300.

- 13. Phansalkar S, van der Sijs H, Tucker A D et al. Drug-drug interactions that should be non-interruptive in order to reduce alert fatigue in electronic health records. J Am Med Inform Assoc. 2013;20(03):489–493. doi: 10.1136/amiajnl-2012-001089.

- 14. Taylor L K, Tamblyn R. Reasons for physician non-adherence to electronic drug alerts. Stud Health Technol Inform. 2004;107(Pt 2):1101–1105.

- 15. Dexheimer J W, Kirkendall E S, Kouril M et al. The effects of medication alerts on prescriber response in a pediatric hospital. Appl Clin Inform. 2017;8(02):491–501. doi: 10.4338/ACI-2016-10-RA-0168.

- 16. Makam A N, Lanham H J, Batchelor K et al. Use and satisfaction with key functions of a common commercial electronic health record: a survey of primary care providers. BMC Med Inform Decis Mak. 2013;13(01):86. doi: 10.1186/1472-6947-13-86.

- 17. Varghese P, Wright A, Andersen J M, Yoshida E I, Bates D W. Clinical decision support: the experience at Brigham and Women's Hospital/Partners Healthcare. New York, NY: Springer Nature; 2016. pp. 227–244.

- 18. Kuperman G J, Bobb A, Payne T H et al. Medication-related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc. 2007;14(01):29–40. doi: 10.1197/jamia.M2170.

- 19. Gregory M E, Russo E, Singh H. Electronic health record alert-related workload as a predictor of burnout in primary care providers. Appl Clin Inform. 2017;8(03):686–697. doi: 10.4338/ACI-2017-01-RA-0003.

- 20. Murphy D R, Meyer A N, Russo E, Sittig D F, Wei L, Singh H. Burden of inbox notifications in commercial electronic health records. JAMA Intern Med. 2016;176(04):559–560. doi: 10.1001/jamainternmed.2016.0209.

- 21. Dombrowski W, Clay B, O'Brien J D. Electronic health records quantify previously existing phenomenon. JAMA Intern Med. 2016;176(08):1234–1235. doi: 10.1001/jamainternmed.2016.3889.