Abstract

The pleasure of music listening regulates daily behaviour and promotes rehabilitation in healthcare. Human behaviour emerges from the modulation of spontaneous, temporally coordinated neuronal networks. Too little is known about the physical properties and neurophysiological underpinnings of music to understand its perception and health benefits, or to deploy personalized or standardized music therapy. Prior studies revealed how macroscopic neuronal and music patterns scale with frequency according to a 1/f^α relationship, where α is the scaling exponent. Here, we examine how this hallmark of music and neuronal dynamics relates to pleasure. Using electroencephalography, electrocardiography and behavioural data in healthy subjects, we show that music listening decreases the scaling exponent of neuronal activity and that, in temporal areas, this change is linked to pleasure. Default-state scaling exponents of the most pleased individuals were higher and approached those found in music loudness fluctuations. Furthermore, the scaling in selective regions and timescales and the average heart rate were largely proportional to the scaling of the melody. The scaling behaviours of heartbeat and neuronal fluctuations were associated during music listening. Our results point to a 1/f resonance between brain and music and a temporal rescaling of neuronal activity in the temporal cortex as mechanisms underlying music appreciation.

Subject terms: Electrophysiology; Scale invariance; Cognitive neuroscience; Human behaviour; Statistical physics, thermodynamics and nonlinear dynamics

Introduction

Music is a cross-cultural phenomenon, and biological constraints underlie its appeal1. Music listening recruits the concerted activity of several cortical and subcortical regions2,3; its emotive power relies on extensive neuronal circuits4 and modulates neurochemistry5. Besides being often used to regulate emotions6, music listening appears valuable in, e.g., cognitive rehabilitation of post-stroke and dementia patients7,8, diagnosis of disorders of consciousness9, and visual-spatial reasoning10. Moreover, pleasant music boosts problem-solving skills11,12. However, what makes music pleasurable and why it emerges as widely beneficial in cognition remains unclear.

Music theorists argue that the enjoyment of music derives from how the music unfolds in time. A balance between regularity and variation in its composition13, together with individual innate and learned expectations14, shapes the emotions evoked. Fractal theory offers a notable way of conceptualising this balance of predictability and surprise within the music flow. Random fractals encompass irregular fluctuations that show a statistical resemblance at several timescales and are therefore called self-similar15,16. The degree of self-similarity can be quantified by a scaling exponent (α), which captures the relationship between the averaged fluctuation, F(t), and the timescale, t: F(t) ∝ t^α. When 0.5 < α < 1, persistent long-range temporal correlations are present; α = 1 (1/f noise) represents a compromise between the unpredictable randomness of white noise (α = 0.5) and the smoothness of Brownian noise (α = 1.5)17. In the frequency domain, this corresponds to a power spectrum with inverse power-law scaling, S(f) ∝ 1/f^β, where β = 2α − 1. Musical pitch and loudness18,19 and musical rhythms20,21 approximately obey a 1/f power law, and fractal properties differentiate composers and genres20,22. Compositions in which the frequency and duration of the notes follow 1/f distributions sound more pleasing than 1/f^2 or random ones23, and 1/f deviations in computer-generated beats humanise them and create more pleasant rhythms than random deviations21. Scaling behaviour is also ubiquitous in multi-level neuronal systems. In particular, neurons at earlier stages of the auditory pathway are tuned to sounds with different 1/f^β spectra, while neurons in the auditory cortex favour 1/f statistics24. Fluctuations in neuroelectric and neuromagnetic brain activity display 1/f^β scaling during rest25–28 and music29.
Self-similarity further characterises heartbeat variations30, and such dynamical organization of the nervous system is functionally relevant17,31–33. Humans can apprehend recursive fractal rules embedded in tone sequences34 and predict 1/f tempo fluctuations better than random ones35, and a preferential cortical tracking of tones occurs when their pitches display long-range temporal correlations36. Furthermore, electrophysiological evidence suggests that humans process the long-distance dependencies typical of music syntax37. Altogether, this led us to hypothesise that the scaling of music shapes the neuronal scaling behaviour during listening, and to posit that the brain’s sensitivity to music, and the pleasure derived from listening, lies in their shared dynamical complex properties38.
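The scaling relation F(t) ∝ t^α can be made concrete with detrended fluctuation analysis (DFA), the method named in the Methods. The following is a minimal Python sketch of first-order DFA under our own assumptions (NumPy only, non-overlapping windows, window sizes in samples); it is not the authors’ implementation, and the window ranges used for music or EEG are not reproduced here. It checks the reference values quoted above: white noise should give α ≈ 0.5 and its integral (Brownian noise) α ≈ 1.5.

```python
import numpy as np


def dfa_fluctuation(x, scales):
    """First-order DFA: RMS fluctuation F(t) for each window size t (samples)."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())                 # integrated, mean-removed signal
    fluct = []
    for t in scales:
        n_win = len(profile) // t                     # non-overlapping windows
        segments = profile[: n_win * t].reshape(n_win, t)
        idx = np.arange(t)
        # Least-squares line per window (each column of segments.T is one window)
        slopes, intercepts = np.polyfit(idx, segments.T, deg=1)
        trend = np.outer(slopes, idx) + intercepts[:, None]
        fluct.append(np.sqrt(np.mean((segments - trend) ** 2)))
    return np.array(fluct)


def scaling_exponent(x, scales):
    """alpha = slope of log F(t) against log t, i.e. F(t) ~ t**alpha."""
    slope, _ = np.polyfit(np.log(scales), np.log(dfa_fluctuation(x, scales)), deg=1)
    return slope


rng = np.random.default_rng(0)
white = rng.standard_normal(2 ** 14)
brown = np.cumsum(white)                               # integrated white noise
scales = np.unique(np.logspace(1, 3, 12).astype(int))  # about 10 to 1000 samples
print(f"white noise: alpha = {scaling_exponent(white, scales):.2f}")  # close to 0.5
print(f"Brownian:    alpha = {scaling_exponent(brown, scales):.2f}")  # close to 1.5
```

A window range that is too short for the slowest fluctuations of interest biases α, which is one reason the fitting range (e.g., t ∈ [3, 15] s for the music features in Fig. 1b) matters.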

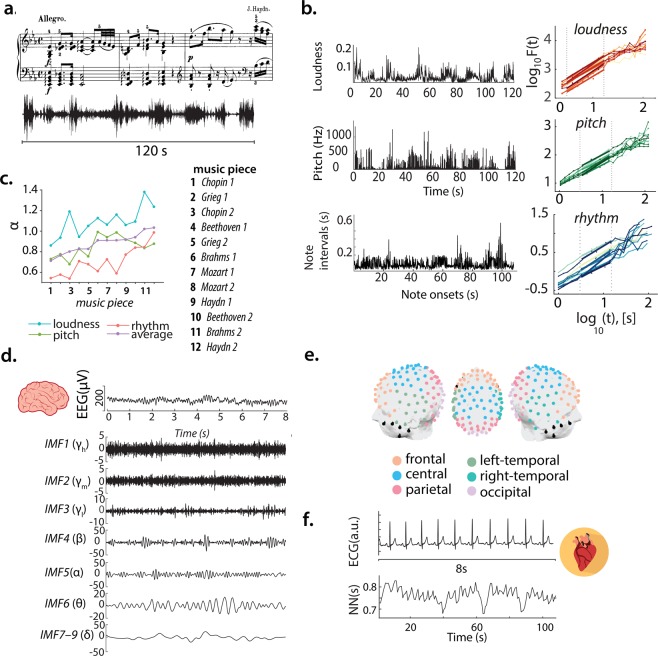

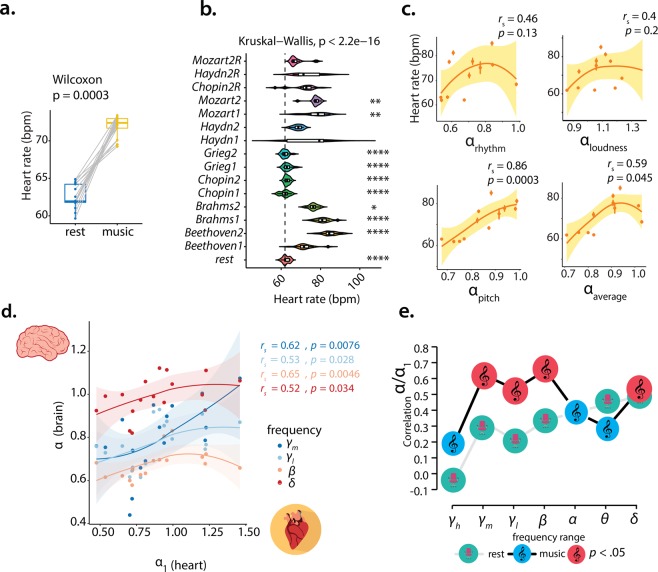

Here, we characterise the self-similarity of fluctuations in the loudness, pitch and rhythm of 12 classical pieces (Fig. 1b,c), analyse the scaling behaviour of multiscale neuronal activity from different scalp regions (Fig. 1d,e) and of cardiac interbeat intervals (Fig. 1f) in healthy individuals, both at baseline and during music listening, and relate self-reported pleasure to these measures (see also Table 1).

Figure 1.

Scheme of the investigation. (a) Stimulus example: excerpt of the sound signal from the Sonata no. 62 Allegro (Haydn) and partial score. (b) (Left) Corresponding loudness, pitch and rhythm time series, representing respectively the audio envelope, successive dominant note-frequency changes and note intervals. (Right) An approximately linear relationship between log t and the average fluctuation log F(t) for t ∈ [3, 15] s reveals a fractal scaling characteristic of how the musical features unfold in time. (c) Scaling exponents obtained for each of the music dimensions form a gradient from near randomness (0.5) to smooth and highly correlated fluctuations (>1). (d) Broadband EEG trace and the different timescales (Empirical Mode Decomposition) analysed. (e) Channels (dots) and regions (colour-coded) analysed (cf. Methods for details and Table 1 for a glossary of the main experimental variables). (f) Heartbeat and interbeat intervals (NN) obtained from ECG signals. Music sheet courtesy of Musopen. Brain and heart images in (d,f) by Sinisa Maric and Marcus Hartmann, under Pixabay license.
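As an illustration of panel (b), pitch and rhythm series can be derived from a symbolic note list, and a loudness proxy from the audio. The sketch below is a hypothetical reconstruction, not the study’s extraction code: it assumes one dominant note per onset, given as (onset time in seconds, MIDI note number), converts notes to frequency with the standard equal-temperament formula (A4 = 440 Hz), and uses frame-wise RMS amplitude with 50-ms frames (an arbitrary choice) as the loudness envelope.

```python
import numpy as np


def pitch_and_rhythm_series(onsets, midi_pitches):
    """Pitch-change and inter-onset-interval series from a note list.

    Assumption (illustrative): one dominant note per onset, given as
    (onset time in seconds, MIDI note number).
    """
    onsets = np.asarray(onsets, dtype=float)
    midi = np.asarray(midi_pitches, dtype=float)
    order = np.argsort(onsets)
    onsets, midi = onsets[order], midi[order]
    freqs = 440.0 * 2.0 ** ((midi - 69.0) / 12.0)   # MIDI note number -> Hz
    pitch_changes = np.diff(freqs)                   # successive note-frequency changes
    intervals = np.diff(onsets)                      # inter-onset intervals (s)
    return pitch_changes, intervals


def loudness_envelope(audio, sr, frame_s=0.05):
    """Frame-wise RMS amplitude as a simple loudness proxy."""
    frame = int(round(frame_s * sr))
    n = len(audio) // frame
    frames = np.asarray(audio[: n * frame], dtype=float).reshape(n, frame)
    return np.sqrt(np.mean(frames ** 2, axis=1))


# Toy example: four notes (A4, C5, E5, A4) at irregular onsets
dp, ioi = pitch_and_rhythm_series([0.0, 0.5, 0.75, 1.5], [69, 72, 76, 69])
print(ioi.tolist())   # [0.5, 0.25, 0.75]

sr = 8000
t = np.arange(sr) / sr
env = loudness_envelope(np.sin(2 * np.pi * 440 * t), sr)
print(env.shape)      # (20,)
```

Each of these series would then be passed to DFA to obtain αpitch, αrhythm and αloudness.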

Table 1.

Glossary of the main experimental variables and their (multi-)level descriptions.

| Variable | Description | Multi-level description |
|---|---|---|
| αbrain | Scaling exponent indicating the persistence or self-similarity in modulations of neuronal activity (see Fig. 1d for the components studied: αγh, αγm, αγl, αβ, αα, αθ and αδ) | Averaged across pieces for each subject; averaged across subjects for each piece |
| αmusic | Scaling exponent characterising the self-similarity of a musical feature (see Fig. 1b for the features studied: αloudness, αpitch, αrhythm, αaverage) | |
| s | Behavioural scores (ratings of pleasure, concentration and familiarity) | Averaged across pieces for each subject; averaged across subjects for each piece |
| α1 (heart) | Short-term scaling exponent of heart rate variability | |
| AVNN (heart) | Average of normal sinus interbeat intervals | |
| 1/f noise | Stochastic process whose power spectral density, S(f), has the form S(f) ∝ 1/f^β, where f is the frequency. The β exponent is related to the colour of the signal, that is, to the degree to which the signal is autocorrelated, a measure of self-similar dynamics. If β = 0, the power spectrum is flat and no autocorrelations are present (white noise); if β = 2, the signal is highly autocorrelated, corresponding to integrated white noise (i.e., Brownian or red noise). The special case β = 1 yields a signal with moderate autocorrelations, often referred to as pink noise. In this paper, 1/f refers to pink noise and 1/f^β to other generic cases of 1/f scaling. | |
| 1/f resonance | Phenomenon by which a stimulus with 1/f variability transmits maximal information to another system if the latter is close to the ideal 1/f-noise condition | |
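The glossary entry for 1/f noise, together with the relation β = 2α − 1 given in the Introduction, can be checked numerically. This Python sketch synthesises noise with S(f) ∝ 1/f^β by spectral shaping (scaling random Fourier coefficients by f^(−β/2)), a standard technique that is not part of the study’s pipeline, and recovers β from the log-log slope of the periodogram; the corresponding α is then (β + 1)/2.

```python
import numpy as np


def colored_noise(n, beta, rng):
    """Noise with power spectrum S(f) ~ 1/f**beta, via spectral shaping."""
    freqs = np.fft.rfftfreq(n)
    coeffs = rng.standard_normal(len(freqs)) + 1j * rng.standard_normal(len(freqs))
    amplitude = np.zeros_like(freqs)
    amplitude[1:] = freqs[1:] ** (-beta / 2.0)   # amplitude ~ f^(-beta/2) => power ~ f^(-beta)
    x = np.fft.irfft(coeffs * amplitude, n=n)    # DC bin is zeroed (zero-mean signal)
    return x / x.std()


def spectral_exponent(x):
    """beta = minus the slope of log power against log frequency (periodogram)."""
    freqs = np.fft.rfftfreq(len(x))[1:]
    power = np.abs(np.fft.rfft(x))[1:] ** 2
    slope, _ = np.polyfit(np.log(freqs), np.log(power), deg=1)
    return -slope


rng = np.random.default_rng(1)
for beta in (0.0, 1.0, 2.0):                     # white, pink, Brownian-like
    b_hat = spectral_exponent(colored_noise(2 ** 15, beta, rng))
    alpha = (b_hat + 1.0) / 2.0                  # beta = 2*alpha - 1
    print(f"beta = {beta:.0f}: estimated beta = {b_hat:.2f}, implied alpha = {alpha:.2f}")
```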

Results

Neuronal scaling behaviour during music listening

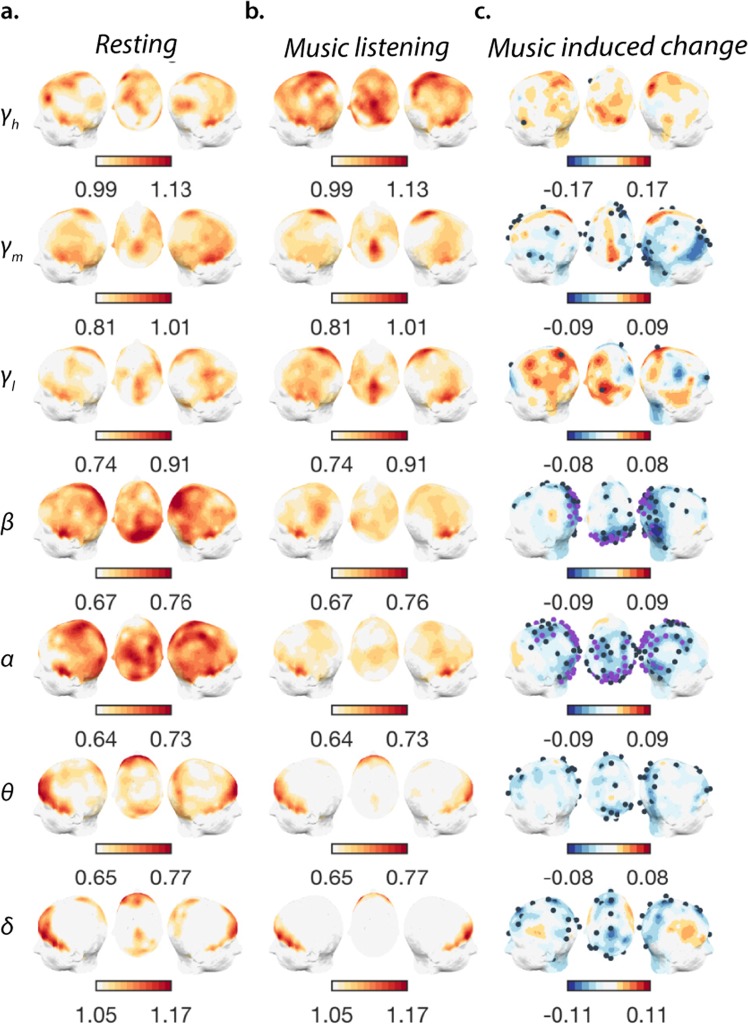

Twenty-eight participants underwent a music-listening task after a baseline period of eyes-closed rest. We quantified the degree of self-similarity (α) in seven neuronal components (Fig. 1d; Methods). Music listening decreased the α value of most frequency-dependent levels relative to the resting state (Fig. 2). These decreases were conspicuous in the parietal and occipital regions for neuronal activity corresponding to the α-component (pc < 0.005; Wilcoxon signed-rank test), the β-component (pc < 0.011) and the γm-component (p < 0.048; not significant after FDR correction). Additional significant decreases occurred in the central area, exclusively in the α-component (p < 0.048), and in the right-frontal channels within the γm-component (p < 0.043) (Fig. 2c). On a global topographic level, the median scaling of neuronal oscillations also decreased, but only in activity below γ (Supplementary Fig. 2, SI Results). The scaling behaviour characterises how the amplitude envelope of selected frequency components is modulated on different timescales; the fact that this property did not remain static across states suggests that it may capture meaningful functional processes. Of note, this music-induced change in neuronal assembly dynamics is distinct from changes in spectral power: average spectral power increased (α − β), decreased (θ) or remained unchanged (γ) (Supplementary Fig. 3).
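The scaling exponents above describe the amplitude envelope of frequency-specific components. A minimal NumPy sketch of one way to obtain such an envelope is an FFT-based analytic signal (Hilbert transform) applied to a band-limited signal. As a labelled simplification, an ideal FFT band-pass stands in for the Empirical Mode Decomposition used to define the components (Fig. 1d); the resulting envelope would then be passed to DFA.

```python
import numpy as np


def analytic_signal(x):
    """FFT-based analytic signal x + i*H[x] (discrete Hilbert transform)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    spec = np.fft.fft(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1 : n // 2] = 2.0          # double positive frequencies, drop negative ones
    else:
        h[0] = 1.0
        h[1 : (n + 1) // 2] = 2.0
    return np.fft.ifft(spec * h)


def band_envelope(x, fs, low, high):
    """Amplitude envelope of x within [low, high] Hz.

    Illustrative assumption: an ideal FFT band-pass replaces the Empirical
    Mode Decomposition used in the study.
    """
    spec = np.fft.rfft(x)
    f = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spec[(f < low) | (f > high)] = 0.0
    band = np.fft.irfft(spec, n=len(x))
    return np.abs(analytic_signal(band))


fs = 1000                                         # EEG sampling rate in this study (1 kHz)
t = np.arange(10 * fs) / fs
am = 1.0 + 0.5 * np.sin(2 * np.pi * 0.5 * t)      # slow 0.5-Hz amplitude modulation
eeg_like = am * np.sin(2 * np.pi * 10 * t)        # 10-Hz "alpha" carrier
env = band_envelope(eeg_like, fs, 8, 13)
print(np.allclose(env, am, atol=1e-6))            # True: the envelope recovers am
```

Real EEG envelopes will show edge effects and spectral leakage that this periodic toy signal avoids.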

Figure 2.

Music-induced decrease in the scaling of the envelope fluctuations in most frequency ranges relative to the resting (default) state. Head surface maps of the scaling exponent of the neuronal components (γh − δ) during rest (a), during the music listening task (b), and of the difference between the two (c). The decreases are accentuated in the parietal and occipital regions. Channels marked with dark blue dots display significant differences (p < 0.05, uncorrected); purple dots signal significant differences after FDR correction (q = 0.05; minimum p = 0.005 (α) and p = 0.011 (β)).
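The rest-versus-music comparisons per channel rely on the paired Wilcoxon signed-rank test. The sketch below computes the signed-rank statistic with its large-sample normal approximation, a two-sided p-value, and the effect size r = |z|/√n, a common convention (some authors divide by the total number of observations instead). It omits the tie-variance correction, and the data in the usage example are simulated, not the study’s.

```python
import math
import numpy as np


def rank_average(values):
    """1-based ranks; tied values receive the average of their ranks."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i : j + 1]] = (i + j) / 2.0 + 1.0   # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def wilcoxon_z(before, after):
    """Paired signed-rank test (normal approximation, no tie correction).

    Returns (z, two-sided p, effect size r = |z| / sqrt(n)).
    """
    d = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    d = d[d != 0]                                   # zero differences are dropped
    n = len(d)
    ranks = rank_average(np.abs(d))
    w_plus = ranks[d > 0].sum()                     # rank sum of positive differences
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))          # two-sided normal p-value
    return z, p, abs(z) / math.sqrt(n)


# Simulated exponents for 28 participants at one channel (hypothetical values)
rng = np.random.default_rng(3)
rest = rng.normal(0.9, 0.1, size=28)
music = rest - rng.normal(0.05, 0.05, size=28)      # simulated music-induced decrease
z, p, r = wilcoxon_z(rest, music)
print(f"z = {z:.2f}, p = {p:.3g}, r = {r:.2f}")
```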

Music-induced behaviour

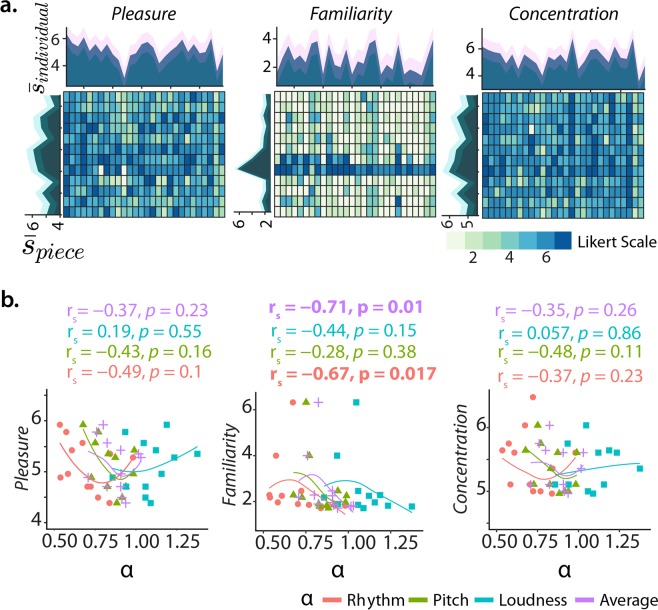

On average, individuals agreed that they experienced Pleasure during music listening and that they were focused on the listening (Concentration: 5.4 ± 0.7, mean ± SD), whereas Familiarity was most often rated with low scores (2.5 ± 0.9), with only two pieces being widely familiar (Fig. 3a). There was considerable individual variability in which pieces elicited more pleasure (Krippendorff’s α = 0.12), were more familiar (α = 0.27) or elicited more focus (α = 0.07) (see also Fig. 3a). Familiarity was inversely correlated with the scaling of the musical rhythm (αrhythm; rs = −0.71, p = 0.01) and with the averaged scaling of all features (αaverage; rs = −0.67, p = 0.02) (Fig. 3b). On average, the level of Pleasure experienced was strongly associated with the Concentration ratings across pieces (rs = −0.71, p = 4.2 × 10^−2) and across participants (rs = 0.73, p = 1.1 × 10^−5). However, while the overall Pleasure/Concentration elicited by a piece positively correlated with Familiarity (rs = 0.58, p = 0.05; Supplementary Fig. 4a), participants experiencing more pleasure had lower familiarity scores (rs = −0.36, p = 0.06) and more concentrated participants had lower familiarity scores (rs = −0.55, p = 0.0025; Supplementary Fig. 4b). Thus, for the music stimuli used in this experiment, Familiarity did not explain much of the variance in Pleasure, and lower individual Familiarity appears linked to higher concentration.
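Inter-participant agreement was summarised with Krippendorff’s α. The sketch below implements the coefficient for complete data with the interval metric, treating pieces as units and participants as coders; both the metric and this arrangement are our assumptions, since an ordinal metric could equally be argued for 7-point ratings. It computes α = 1 − D_o/D_e from the observed (within-piece) and expected (pooled) squared differences.

```python
import numpy as np


def krippendorff_alpha_interval(ratings):
    """Krippendorff's alpha (interval metric) for complete data.

    ratings: array of shape (n_units, n_coders); here, hypothetically,
    units = music pieces and coders = participants.
    """
    r = np.asarray(ratings, dtype=float)
    n_units, m = r.shape
    n = n_units * m                                  # number of pairable values
    # Observed disagreement: squared differences between coders within each unit
    within = ((r[:, :, None] - r[:, None, :]) ** 2).sum()
    d_o = within / (n * (m - 1))
    # Expected disagreement: squared differences between all pooled values
    v = r.ravel()
    d_e = ((v[:, None] - v[None, :]) ** 2).sum() / (n * (n - 1))
    return 1.0 - d_o / d_e


# Simulated 7-point ratings: a weak shared preference per piece plus individual noise
rng = np.random.default_rng(4)
piece_effect = rng.normal(0.0, 0.5, size=(12, 1))
ratings = np.clip(np.round(5 + piece_effect + rng.normal(0.0, 1.2, size=(12, 28))), 1, 7)
print(f"Krippendorff's alpha = {krippendorff_alpha_interval(ratings):.2f}")
```

Values near 0, as reported above, indicate that participants largely disagreed on which pieces they preferred.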

Figure 3.

Behaviour induced by music listening and its relationship to musical features. (a) Individual pleasure, familiarity and concentration ratings for each participant (x-axis) and piece (y-axis), with their average per individual/piece (dark colour) and SD (shade in light colours) shown in the lateral plots. (b) Relationship between the piece-averaged scores and the scaling exponent (αmusic) of each piece for all music dimensions; only the piece-averaged Familiarity shows a significant association with the dynamics of the musical dimensions of the stimuli.

Neuronal scaling behaviour links to individual musical pleasure

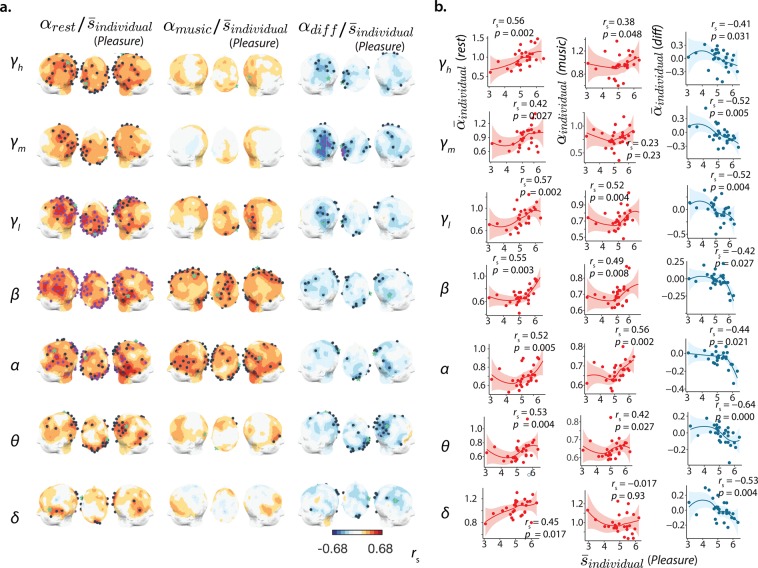

It is generally accepted that the enjoyment of music is subjective, conditioned by taste and personal history. We found that the self-similarity of the individuals’ ongoing neuronal activity correlates with the pleasure experienced during music listening (Fig. 4a). Specifically, individuals whose resting-state neuronal activity exhibited higher scaling exponents of the amplitude fluctuations, close to 1, were more likely to enjoy the music (Fig. 4b). This pattern of association was general; however, it was most significant in the α-, β- and γl-components. The scaling of amplitude modulations of the music (αloudness) was also roughly 1 in the group of music pieces used in the experiment (αloudness = 1.07 ± 0.14, Fig. 1c). The regions with the most predictive power for overall music enjoyment extended throughout the cortex, in particular the parietal (rs = 0.34 (β); 0.41 (γl)), occipital (0.40 (β); 0.33 (γl)) and temporal lobes (0.37 (β); 0.34 (γl)) (rs, Spearman coefficient; q = 0.1, FDR). During music listening, a similar coarse profile characterised this association, albeit less significantly (n.s. at q = 0.1, FDR) and with smaller magnitude, mainly in the temporal cortices (0.24 (β); 0.14 (γl)). Strikingly, individual differences in the scaling behaviour (music − rest) were inversely associated with perceived pleasure, i.e., the largest music-induced decreases in neuronal scaling exponents were likely to occur in participants who scored high on Pleasure. The greatest proportions of shared variance were, in this case, centred in the γm activity of the temporal and, less significantly, the parietal cortices, particularly in the left-temporal region (−0.42 (γm); −0.28 (γl)) as opposed to the right-temporal region (−0.24 (γm); −0.18 (γl)) (see Fig. 4a,b for statistical significance). Considering how strongly pleasure and concentration ratings were correlated (Supplementary Fig. 4), it is conceivable that the αbrain–Pleasure associations merely reflect concentration. To rule out this possibility, we also correlated the neuronal scaling exponents with the Concentration scores (Supplementary Fig. 5). Although the associations topographically resemble those found for Pleasure, their magnitude is diminished and significant associations are scarce, suggesting that the association with Pleasure is not primarily driven by a higher individual capacity to attend to the music.
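The channel-wise association between neuronal scaling exponents and behavioural scores is a mass-univariate Spearman correlation across participants. In this NumPy-only sketch, rs is computed as the Pearson correlation of tie-averaged ranks, and p-values come from a permutation test; the permutation scheme is our illustrative choice and not necessarily how the reported p-values were obtained. The toy data are simulated.

```python
import numpy as np


def average_ranks(x):
    """1-based ranks of a 1-D array; tied values receive their mean rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x))
    ranks[order] = np.arange(1, len(x) + 1)
    for value in np.unique(x):                       # average ranks within ties
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks


def spearman_per_channel(alpha_brain, scores, n_perm=2000, seed=0):
    """Spearman rs between each channel's exponent and behavioural scores.

    alpha_brain: (n_subjects, n_channels); scores: (n_subjects,).
    Two-sided p-values from a permutation test (illustrative choice).
    """
    a = np.apply_along_axis(average_ranks, 0, alpha_brain)
    s = average_ranks(scores)
    a = (a - a.mean(axis=0)) / a.std(axis=0)          # standardise ranks per channel
    s = (s - s.mean()) / s.std()
    rs = (a * s[:, None]).mean(axis=0)                # Pearson r of the ranks
    rng = np.random.default_rng(seed)
    null = np.array([(a * rng.permutation(s)[:, None]).mean(axis=0)
                     for _ in range(n_perm)])
    p = (1 + (np.abs(null) >= np.abs(rs)).sum(axis=0)) / (n_perm + 1)
    return rs, p


# Hypothetical data: 28 participants, 5 channels; channel 0 tracks pleasure
rng = np.random.default_rng(6)
pleasure = rng.uniform(3, 7, size=28)
alpha = rng.normal(0.8, 0.1, size=(28, 5))
alpha[:, 0] += 0.05 * (pleasure - pleasure.mean())
rs, p = spearman_per_channel(alpha, pleasure)
print(np.round(rs, 2), np.round(p, 3))
```

The per-channel p-values would then enter an FDR correction across channels.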

Figure 4.

The scaling behaviour of neuronal activity during baseline, and its induced change during listening, capture the individual pleasure experienced with the music. (a) Head surface maps of the associations between Pleasure and the scaling exponents of the components (γh − δ) during baseline (rest), during the music listening task, and with the induced change in scaling between the two. Channels marked in dark blue indicate a nominally significant correlation (Spearman coefficient rs, p < 0.05); channels in purple show a significant association after FDR correction (q = 0.1; minimum p-values at this FDR ranged between 2.82 × 10^−4 and 0.025). (b) Scatter plots of the highlighted channels (green star in (a)) exemplify the individual values (n = 28); a locally weighted regression line was added to aid visualisation of the relationship, and the shaded area represents the confidence interval.
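The FDR thresholds quoted in the figure legends (q = 0.05, 0.1 or 0.2) can be applied with the Benjamini–Hochberg step-up procedure. We assume this variant here, since the specific FDR method is not stated in this part of the text. The sketch returns BH-adjusted p-values and the mask of channels declared significant at level q.

```python
import numpy as np


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns (reject mask, BH-adjusted p-values), in the input order.
    """
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)                 # p_(k) * m / k
    # Cumulative minimum from the largest p-value down keeps adjusted p monotone
    adjusted_sorted = np.minimum(np.minimum.accumulate(ranked[::-1])[::-1], 1.0)
    adjusted = np.empty(m)
    adjusted[order] = adjusted_sorted
    return adjusted <= q, adjusted


reject, adj = fdr_bh([0.01, 0.04, 0.03, 0.2], q=0.1)
print(reject.tolist())   # [True, True, True, False]
```

A more lenient q (such as 0.2 in Fig. 5) admits more channels at the cost of a higher expected proportion of false discoveries.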

Interactions between neuronal, music and cardiac dynamics

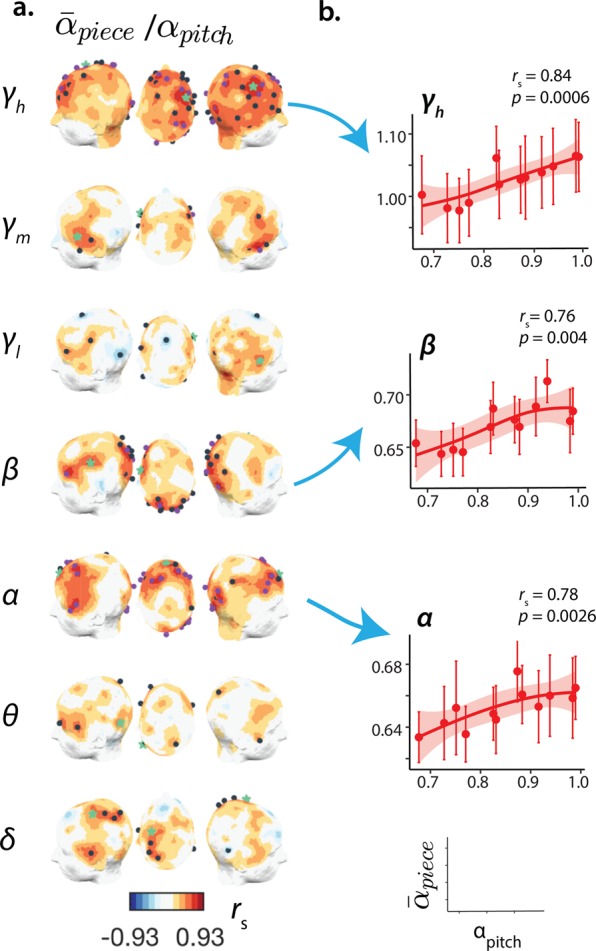

We also sought to investigate the extent to which the temporal structure of the music signals determined the scaling of neuronal activity during music. By mapping the correlation between the scaling of different music features (αloudness, αpitch, αrhythm) and the average inter-subject neuronal scaling exponent for each piece, we found a significant association between the self-similarity of pitch successions and that of the γh-, β- and α-component dynamics in the occipital region (pc < 0.017 (γh), pc < 0.006 (β) and pc < 0.017 (α); FDR, q = 0.2) (Fig. 5). Some focal frontal and right-temporal locations had strong associations (Fig. 5a,b), and some of these were robust to a false-discovery rate correction at 10 and 5%. Rhythm and loudness showed positive correlations of similar magnitude but with relative sparsity; only a few clusters of these interactions reached statistical significance after correction (Supplementary Fig. 7). We further probed the effect of music on cardiac dynamics in a subset of participants (n = 17) and found that average heart rate (AVNN) significantly increased during music (Fig. 6a), although AVNN values varied widely across pieces (Fig. 6b). Similarly to the neuronal effect, AVNN increased almost linearly with αpitch (Fig. 6c); the summary of the dimensions (αaverage) also correlated significantly with AVNN, despite non-strictly monotonic associations of AVNN with αrhythm/αloudness and no relationship with the tempi of the pieces (Supplementary Fig. 8). Although music modulated the sinus rhythm and other standard heart-rate variability measures (Supplementary Fig. 9), it neither consistently modulated the scaling of the interbeat intervals (α1) (Supplementary Fig. 10a), nor was the scaling of the music associated with α1 (Supplementary Fig. 10b). Lastly, we investigated whether a correspondence between neuronal and cardiac dynamics could mediate the experience of music listening.
To address this question, we correlated α1 with the global (channel-averaged) neuronal scaling exponents. We observed a significant positive correlation selectively within the γh − β and δ frequency ranges (Fig. 6d); this association with mid- to high-frequency neuronal dynamics was significant and stronger during music than during rest (Fig. 6e). Thus, music listening may facilitate a synergistic dynamical interplay between heart rate and neuronal activity.
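Both cardiac measures follow directly from the NN-interval series. In the sketch below, AVNN is the mean NN interval in milliseconds (Table 1); because it is an interval, a larger AVNN corresponds to a slower heart rate (60,000/AVNN beats per minute). For α1 we assume the conventional short-term DFA range of 4 to 16 beats, which is not specified in the text above, and artefact-free beats (no ectopic-beat filtering). The function names are hypothetical.

```python
import numpy as np


def nn_from_r_peaks(r_peak_times_s):
    """Successive R-peak differences (assumes artefact-free, all-normal beats)."""
    return np.diff(np.asarray(r_peak_times_s, dtype=float))


def avnn_ms(nn_s):
    """AVNN: mean of the normal-to-normal (NN) intervals, in ms."""
    return 1000.0 * float(np.mean(nn_s))


def dfa_alpha1(nn_s, scales=range(4, 17)):
    """Short-term first-order DFA exponent of the NN series (4-16 beats)."""
    x = np.asarray(nn_s, dtype=float)
    profile = np.cumsum(x - x.mean())
    scales = np.asarray(list(scales))
    fluct = []
    for n in scales:
        k = len(profile) // n
        seg = profile[: k * n].reshape(k, n)
        idx = np.arange(n)
        slopes, intercepts = np.polyfit(idx, seg.T, deg=1)   # linear trend per window
        trend = np.outer(slopes, idx) + intercepts[:, None]
        fluct.append(np.sqrt(np.mean((seg - trend) ** 2)))
    slope, _ = np.polyfit(np.log(scales), np.log(fluct), deg=1)
    return slope


# Hypothetical R-peak times (s) around 75 beats per minute
rng = np.random.default_rng(7)
beats = np.cumsum(0.8 + 0.05 * rng.standard_normal(3000))
nn = nn_from_r_peaks(beats)
print(f"AVNN = {avnn_ms(nn):.0f} ms, alpha1 = {dfa_alpha1(nn):.2f}")
```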

Figure 5.

Correlation analysis reveals a link between the scaling behaviour of the music and the scaling of neuronal activity in the α-, β- and γh-components. (a) Head plots of the correlation between the scaling exponent of the pitch series (αpitch) and the average scaling exhibited by the multiscale neuronal activity (αbrain averaged across subjects for each piece, for γh − δ) across the music pieces; dark blue dots indicate channels with a significant correlation (Spearman coefficient rs, p < 0.05), and purple dots show significant associations after FDR correction (q = 0.2; minimum p = 0.017 (γh, α) and p = 0.006 (β)). (b) Scatter plots portray the association between the scaling of pitch successions and the scaling of neuronal activity in the γh, β and α components for the channels highlighted with a green star in (a); error bars denote the standard error of the mean.

Figure 6.

Heart rate dependency on the musical dimensions of the stimuli and 1/f resonance between brain and heart dynamics. (a) Average heart rate (AVNN) during music listening shows a significant increase relative to baseline. (b) Individual AVNN depends on the music piece, as shown by violin plots with overlaid boxplots: box limits show the 25th and 75th percentiles together with the medians, whiskers extend up to 1.5 times the interquartile range (IQR), and the coloured polygons represent density estimates of AVNN. (c) Relationship between the scaling behaviour of the musical dimensions and the average AVNN for each piece; error bars indicate the standard error of the mean. (d) The self-similarity in individual heart rate variability (α1) is associated with the neuronal scaling of both high-frequency (γh, γl and β activity) and low-frequency oscillations (δ). (e) The correlation between brain and α1 is strengthened during music relative to baseline, suggesting that music may facilitate a 1/f resonance between brain and heart. Brain and heart images in (d) by Sinisa Maric and Marcus Hartmann. Clef and chair images in (e) by rawpixel and Pettycon. All under Pixabay license.

Discussion

Embodied cognition emerged as the science explaining cognitive processes through the continuous interaction of bodies, brains and environment39. Within this framework, we used a dynamical property, the scaling behaviour, to characterise how neuronal activity, cardiac activity and music unfold in time. We hypothesised that the pleasure of music depends on the interplay between the scaling behaviour of neuronal and musical features. Testing this possibility deepens our knowledge of how the brain harnesses and is shaped by the dynamics of music, sheds light on the neuronal mechanisms underlying musical pleasure and may guide the deployment of music therapy. To this end, we analysed the EEG of individuals who listened to classical pieces characterised by different 1/f scaling. We report how music reshapes neuronal activity fluctuations over many seconds. The music-induced change in the scaling behaviour of these fluctuations, as well as its baseline level, is associated with the pleasure experienced. These associations with pleasure were not explained by concentration or familiarity levels. In addition, the neuronal scaling behaviour covaries with the scaling of music, and an interdependence between the scaling of neuronal and cardiac dynamics emerges during music listening.

The decrease in the scaling exponent of neuronal activity (αbrain) found during music aligns with the emerging view that tasks reduce the self-similarity in the ongoing fluctuations of brain field potentials40. Such decreases have been reported for conditions ranging from mere eye-opening41 to greater attentional demands, such as auditory42 and visual43 tasks. Individuals who finger-tap more accurately to a fixed rhythm were also shown to have lower scaling exponents within the α-band44, which, together with our results, suggests that this temporal reorganisation is linked to tracking basic or complex rhythms. Our study consistently implicated the α, β and γ bands in music processing. Attending to auditory stimuli has long been known to modulate α oscillations in the parieto-occipital region45, and the individual- and music-specific changes observed in αbrain of the α-component converge with evidence that music modulates parieto-occipital alpha power in an individual- and stimulus-specific fashion46. Further, the β − γ modulations are consistent with these neuronal bands substantiating predictive timing mechanisms of beat processing47. The music-induced decreases of αbrain seemingly reflect a dynamical reorganisation of neuronal ensembles, which more closely resemble 1/f noise (α = 1) during rest48, to adapt to the rapid demands of stimulus processing by forming more transient but persistent spatiotemporal patterns. It may appear paradoxical that both the most pronounced music-induced decreases and the highest resting-state scaling exponents are linked to pleasure. Yet, αbrain during rest was positively correlated with pleasure in wide areas of the brain, whereas the reductions in αbrain were focal to the (particularly left) temporal cortices. Hence, the spatial topography suggests that the decreases in αbrain are a proxy of auditory processing, integrating the encoding of meaning from sounds49 and the abstract representation of music50.
The selective involvement of γm is also consistent with the role of >40 Hz activity in indexing task-specific processes during auditory perception51. This result supports a current theory linking aesthetic musical emotions to the satisfaction of a knowledge instinct52,53. In a nutshell, this theory proposes that pleasant music can overcome cognitive dissonance by allowing multiple contradictory beliefs to be reconciled, generating a synthesis of newer abstract meanings. Given that a decrease in self-similarity is arguably linked to an increased dimensional complexity54, we hypothesise that the pleasure-related decreases in αbrain found exclusively in the left-temporal area, an area thought to harbour abstract neural representations, reflect a more flexible dynamical organisation of the underlying neuronal ensembles, which may facilitate the embodiment of abstract concepts55. Conversely, the association of musical pleasure with baseline αbrain reflects intrinsic traits constraining the listening experience.

Remarkably, the baseline values of individual αbrain in subjects who experienced more overall pleasure were close to 1 in the components most significantly associated with pleasure (viz., α − γl). On average, the scaling of amplitude modulations of the music (αloudness) was also close to 1. A 1/f scaling endows a system with sensitivity to perturbations over a wide dynamic range, i.e., to information at many timescales56, and interacting systems with matching 1/f scaling are thought to display maximal information exchange38,57,58. Thus, our results are consistent with the hypothesis that music exerts a strong influence on the brain by means of a 1/f resonance mechanism. This concept is akin to entrainment but differs from the common use of the latter (rhythmic processes interacting until eventually “locking-in” to a common phase). It can be thought of as a derivation of the stochastic resonance phenomenon for the perturbation of one system by another when both have broadband dynamics with complex fractal signatures59. We have previously shown the importance of a similar phenomenon for speech comprehension60 and demonstrate here its relevance for the enjoyment of music. On another level, the relative distribution of low-frequency power has been linked to personality traits such as neuroticism and openness61, which are known to predispose to music-induced emotions62, suggesting another facet of how intrinsic 1/f scaling connects to the pleasure of music.

Moreover, the positive αpitch/αbrain correlation extends previous findings of how neuromagnetic 41.5 Hz phase tracks the statistics of artificial tone sequences36. However, in contrast to that study, in which energy modulations were independent of the dynamics of the auditory stimulus, we unveiled a functional relationship between the scaling of pitch and that of γh fluctuations (as well as α and β fluctuations) during music listening in frontal and occipitoparietal networks. The strongest correlations (0.9) were located in the right frontotemporal region, concurring with the critical role of this region in melodic processing63 and in processing non-local dependencies in musical motifs64. However, prior research also found an inverse relationship between the scaling of pitch fluctuations and the scaling of neuronal activity (α-component) in the occipital area65. A possible reason for this discrepancy is that the earlier study used artificial 1/f tone sequences, whereas the current paradigm used natural music. Notwithstanding the lack of an effect of αpitch on the scaling of heart rate (α1), AVNN increased monotonically with αpitch. This finding expands our knowledge of how the autonomic nervous system (ANS) is regulated by the temporal properties of music. Tempo has often been taken as the first proxy of musical rhythm, yet both this paradigm and previous studies66–68 have revealed a nuanced role of tempo in shaping the AVNN of the listener. Our finding suggests that the scaling of music carries predictive value for inferring ANS function that is not directly available from tempo. The selective correlation between the scaling exponents of cortical δ, β and γl/γm activity and those of cardiac dynamics suggests a pivotal role of these frequency channels in mediating communication between heart and brain systems.
Interestingly, during rest, the scaling of neuromagnetic modulations around 3 Hz (δ) and 30 Hz (β) is positively correlated with the scaling of HRV69, and β oscillations were identified as the primary hub mediating bidirectional brain–heart information transfer during sleep70. Since these studies were limited to frequencies below 30 Hz and to distinct states, it remains to be elucidated whether the observed relationship with the neuronal scaling of γ activity reflects a generalised means of interaction or is peculiar to music listening. To the best of our knowledge, no other studies have concomitantly assessed γ activity and HRV, which precludes any conclusion about the generality of this mechanism. Notably, although the interactive pattern pervades rest and music, the significance and magnitude of the interaction in β − γm were selectively enhanced during music. One possible source of this interaction is that respiratory rate changes during music listening71; α1 (heart) reflects interbeat interval fluctuations, which are dominated by the regular oscillations of respiration30, so respiration could contribute to the music-induced interactive changes. This hypothesis is further supported by recent iEEG evidence showing how the breathing cycle tracks high-frequency power (40–150 Hz) in diverse cortical and limbic areas72.

Some limitations of this experiment constrain our conclusions. First, since we optimised the scaling gradient of the stimuli by first considering αpitch/αrhythm, we cannot rule out that the prevalence of significant interactions of pitch with physiological measures stems from this choice. Second, the absence of a systematic influence of music on the scaling of cardiac dynamics could derive from the smaller sample size (17 of 28 subjects). Finally, the DFA method applied uses a detrending procedure, justifiably, to mitigate the nonstationarity of the underlying processes and estimate the scaling exponent robustly. This approach may nonetheless underestimate the contribution of nonlinearities that can be meaningful in the characterisation of music and brain signals and have been shown to be relevant for the aesthetic appreciation of music73. We expect our findings to fuel research and inform motor rehabilitation tools that deploy 1/f auditory cueing74 and brain-computer interfaces that leverage acoustic and neuronal features to predict music-induced emotion75,76. In addition, since music induces αbrain decreases that are maximal in occipitoparietal areas and related to pleasure, and a comprehensive literature suggests that this dynamical reorganisation sustains many tasks, it would be interesting to probe whether transfer effects of music (e.g., the mitigation of visual neglect in post-stroke patients by pleasant music in a visuospatial task77) are bolstered by these mechanisms.

To conclude, the pleasure of music may derive not from the fractal structure of musical dimensions per se, but from the interaction between acoustic scaling and the scaling behaviour of neuronal dynamics. A 1/f resonance between musical sounds and our brain prevails when music moves us.

Methods

Participants

A total of 31 healthy volunteers were recruited and paid to participate in this experiment. Three participants were excluded from the analysis because technical issues during recording led to incomplete data. The final sample comprised 28 subjects (12 female, 16 male; mean age 26.8 ± 4.2 years, SD; 27 right-handed, 1 left-handed). All participants gave written informed consent prior to the experiment. The study was performed in accordance with the guidelines approved by the Ethics Committee of the Faculty of Psychology and Education at the VU University Amsterdam. The participants had no history of neurological disease or psychiatric disorders, were not musicians, and only roughly half had any formal music training (see Supplementary Fig. S11). Only 17 of the 28 participants underwent the ECG recording.

Stimuli

The music excerpts (see Supplementary Table S1) were selected from a pool of piano compositions with scores available in the Humdrum Kern database78; the chosen stimuli lasted at least 2 min and exceeded 200 separate note onsets20. The main inclusion criteria relied on the scaling exponents of the rhythm and pitch of these compositions. In addition, the Sonata for Two Pianos in D Major K. 448 by Mozart10 was included. The scaling exponents (α) were computed from the scores using DFA (detrended fluctuation analysis)79 (see details below), similarly to previous studies20,80. Next, the pieces were binned by the scaling exponents of their pitch and rhythm series, and 12 compositions were selected to cover a range of values of 0.5–1, while trying to match compositions with similar pitch/rhythm exponents. Two distinct compositions from each of six different composers were chosen to integrate some stylistic diversity. Recorded performances of the selected pieces were obtained from Qobuz.com in CD quality (lossless, 16 bits, 44.1 kHz). The stimuli were created by extracting the first 110 s of music from the recordings using Audacity (2.0.6), equalizing them to a 65-dB output level (http://www.holgermitterer.eu/research.html) and adding 0.5-s fade-ins and fade-outs to prevent speaker popping, the latter two steps using Praat (http://www.praat.org/).
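The stimulus preparation steps (trimming to 110 s, level equalisation, 0.5-s fades) can be sketched as follows. This does not reproduce the Praat or external scripts used in the study: we assume linear fade ramps and an RMS normalisation to an arbitrary relative target (the `target_rms` value is hypothetical), and reaching an absolute 65-dB output level would additionally require calibration of the playback chain.

```python
import numpy as np


def prepare_excerpt(audio, sr, duration_s=110.0, fade_s=0.5, target_rms=0.05):
    """Trim, level-normalise and fade a mono excerpt (illustrative sketch).

    Assumptions: linear fade ramps and RMS normalisation to a relative
    target; absolute dB levels require calibrated playback.
    """
    x = np.asarray(audio, dtype=float)[: int(round(duration_s * sr))].copy()
    x *= target_rms / np.sqrt(np.mean(x ** 2))      # equalise RMS level
    n_fade = int(round(fade_s * sr))
    ramp = np.linspace(0.0, 1.0, n_fade)
    x[:n_fade] *= ramp                               # fade-in
    x[-n_fade:] *= ramp[::-1]                        # fade-out
    return x


sr = 44100                                           # CD-quality sampling rate
rng = np.random.default_rng(9)
recording = 0.1 * rng.standard_normal(int(112 * sr))  # hypothetical 112-s mono recording
excerpt = prepare_excerpt(recording, sr)
print(len(excerpt) / sr)   # 110.0
```

Normalising before fading keeps the level of the sustained portion at the target; the fades then only shape the first and last half-second.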

EEG/ECG paradigm and data acquisition

A 3-min eyes-closed rest period was recorded as a baseline for the music paradigm. During both the rest and music-listening periods, the subjects were instructed to keep their eyes closed and to open them only at an auditory cue. After the rest period and at the end of the experiment, the subjects filled in the Amsterdam Resting-state Questionnaire (ARSQ) (see81 for details); these questionnaire data were not analysed in the present study. During the music-listening phase, the participants were exposed to 12 music excerpts with different degrees of statistical self-similarity (different α) in how their volume, melody and rhythm unfolded over time, and they were asked to rate the level of pleasure, familiarity and concentration induced by each piece. The 12 excerpts were presented in randomised order. At the end, three of these pieces were played again; these were the same excerpts for all participants, again presented in randomised order. The participants were first familiarised with the task using a trial music piece distinct from the stimuli, and were instructed to adjust the volume to a comfortable level. Before each piece, a visual cue signalled that the piece would start and that the subject should close their eyes and concentrate on the music until they heard a beep after the piece ended. A 7-point Likert-like psychometric scale, from strongly disagree (1) to strongly agree (7), was used to rate whether the piece was pleasurable, familiar and easy to focus on. The subjects also had to indicate whether they had opened their eyes (binary response). Finally, the experiment ended with a questionnaire about the subject's overall musical taste and experience (summarised results in Supplementary Fig. S11). All visual cues, instructions and questionnaires were presented on a computer screen. Auditory cues and music stimuli were presented over KRK Rokit 8 RPG 2 studio monitors.
Stimulus presentation and acquisition of behavioural data were done using custom scripts in the OpenSesame environment (v.2.8.3)82. EEG data were recorded with the EGI Geodesic EEG system using HydroCel sensor nets of 128 Ag/AgCl electrodes and sampled at 1 kHz. Impedances were kept below 50–100 kΩ and the vertex was used as the common reference.

EEG preprocessing

From the full electrode set, ten channels were excluded from analysis because of their locations extrinsic to the brain. The continuous recordings were epoched into 16 segments: one 178-s segment of eyes-closed resting state and 15 segments of 108 s covering the music pieces, presented once or twice. The first 2 s of every segment were clipped during epoching to minimise possible amplifier saturation effects where applicable. Line noise was removed with the sinusoidal-removal method implemented in the PREP pipeline (PrepPipeline 0.55.1, see83). With this method, line noise (50 Hz) and its harmonics (up to 450 Hz) are removed without notch filtering, so that most broadband spectral energy is preserved and only deterministic components are removed. Briefly, the method applies an iterative procedure to a high-pass-filtered version of the data to reconstruct the sinusoidal noise using Slepian tapers (4 s, sliding window of 0.1 s); the reconstructed noise is then removed a posteriori from the original, unfiltered data. Such a small sliding window (the pipeline's default) was crucial for line-noise removal in our data; longer windows only attenuated the noise and caused ringing artefacts. This procedure avoids high-pass and notch filters, which have known pitfalls84, thereby minimising distortions of the long-term structure of the signals and facilitating the analysis of high-frequency EEG activity intended here. Bad channels were detected and interpolated, and the signals were robustly re-referenced to an estimate of the average reference (all details in83). To mitigate transient artefacts such as myogenic or ocular activity, each segment was split into sub-epochs of 0.25 s (a duration over which the EEG is quasi-stationary85), and sub-epochs with a high amplitude range, variance, or deviation from the epoch's mean were excluded from further analysis.
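The sub-epoch rejection step can be illustrated with a minimal single-channel Python sketch. The text does not state how "high" was defined, so the criterion here (a one-sided z-score above 3 on any of the three features, computed across the sub-epochs of one segment) and the function name `reject_subepochs` are hypothetical choices for illustration only.

```python
import numpy as np


def reject_subepochs(segment, fs, sub_s=0.25, z_thresh=3.0):
    """Split a 1-D segment into sub-epochs and flag outliers (hypothetical z-score rule).

    Returns the kept sub-epochs (2-D array) and a boolean 'keep' mask.
    """
    n = int(round(sub_s * fs))
    n_sub = len(segment) // n
    subs = np.asarray(segment, dtype=float)[: n_sub * n].reshape(n_sub, n)
    feats = np.column_stack([
        np.ptp(subs, axis=1),                     # amplitude range
        np.var(subs, axis=1),                     # variance
        np.abs(subs.mean(axis=1) - subs.mean()),  # deviation from the epoch's mean
    ])
    z = (feats - feats.mean(axis=0)) / feats.std(axis=0)
    keep = np.all(z < z_thresh, axis=1)           # one-sided: only "high" values rejected
    return subs[keep], keep
```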

Multiscale analysis

To study the neuronal dynamics at multiple scales, we applied Empirical Mode Decomposition (EMD)86, a method that separates a signal x(t) into a set of n so-called intrinsic mode functions (IMFs), ci, which represent the dynamics of the signal at different time scales:

$$x(t) = \sum_{i=1}^{n} c_i(t) + r_n(t) \qquad (1)$$

where ci(t) are the n IMFs and rn(t) is the residue (a constant or monotonic trend). Notably, the method does not separate the signal into predetermined frequency bands; rather, it performs a signal-dependent, time-variant filtering87 that is fully adaptive and therefore suited to the nonstationary and nonlinear nature of the electroencephalogram. Nevertheless, the IMFs obtained have a defined bandwidth that can be related to the classic frequency bands used in clinical practice and neuroscientific research. Each mode typically has a power spectrum that peaks within a limited range of frequencies87, with a characteristic frequency given by fs/2^(n+1), where fs is the sampling frequency and n the mode number (n = 1, 2, 3 …). Thus, to match this filtering method with the frequency ranges of the classical bands, the first six modes (IMF1–IMF6) have spectral energy peaking roughly within 8–250 Hz and the last three have activity around 1–4 Hz (Fig. 1 and Supplementary Fig. S1). Following this relationship between mode number and main frequency, we labelled IMF1 as high-gamma (γh), IMF2 as mid-gamma (γm), IMF3 as low-gamma (γl), IMF4 as β, IMF5 as α, IMF6 as θ, and the sum of IMF7, IMF8 and IMF9 as δ. The method uses a sifting process that starts by identifying the local extrema of the raw time series x(t). Next, two cubic splines are fitted, one connecting the local maxima and the other the local minima. The mean of these two envelopes, m(t), is computed and subtracted from x(t); repeating this envelope-mean subtraction on the difference for a fixed number of sifting iterations yields the first mode (IMF1). The residue (r1 = x(t) − c1) is then treated as the new signal, and the sifting process is repeated to extract further modes. The optimal number of sifting iterations is undetermined; we used 10, as this choice preserves a dyadic filtering ratio across the signals88. The code used is available online at http://perso.ens-lyon.fr/patrick.flandrin/emd.html.
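The sifting loop described above can be sketched in Python as follows, with a fixed 10 sifting iterations per mode and the dyadic characteristic frequency fs/2^(n+1). This is a simplified illustration, not the Flandrin MATLAB code used in the study: the crude boundary handling (pinning both envelopes to the first and last samples), the early-stopping rule, and the use of SciPy's `CubicSpline` and `argrelextrema` are our assumptions, and the function names are hypothetical.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def envelope_mean(h, t):
    """Mean of cubic-spline envelopes through local maxima and minima (None if too few)."""
    imax = argrelextrema(h, np.greater)[0]
    imin = argrelextrema(h, np.less)[0]
    if imax.size < 2 or imin.size < 2:
        return None
    last = h.size - 1
    # Assumed boundary handling: pin both envelopes to the end samples.
    imax = np.concatenate(([0], imax, [last]))
    imin = np.concatenate(([0], imin, [last]))
    upper = CubicSpline(t[imax], h[imax])(t)
    lower = CubicSpline(t[imin], h[imin])(t)
    return 0.5 * (upper + lower)


def emd(x, max_imfs=9, n_sift=10):
    """Decompose x into IMFs plus a residue using a fixed number of sifting iterations."""
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size, dtype=float)
    residue = x.copy()
    imfs = []
    for _ in range(max_imfs):
        if envelope_mean(residue, t) is None:   # residue is (near-)monotonic: stop
            break
        h = residue.copy()
        for _ in range(n_sift):                 # fixed sifting, no stopping criterion
            m = envelope_mean(h, t)
            if m is None:
                break
            h = h - m
        imfs.append(h)
        residue = residue - h                   # r_k = r_{k-1} - c_k
    return np.array(imfs), residue


def characteristic_frequency(n, fs):
    """Approximate centre frequency of mode n for a dyadic filter bank: fs / 2**(n + 1)."""
    return fs / 2 ** (n + 1)
```

With fs = 1000 Hz, `characteristic_frequency` gives 250 Hz for IMF1 down to about 0.98 Hz for IMF9, matching the band labels used above. By construction, the IMFs and residue sum back to the input, as in Eq. (1).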

Estimation of neuronal scaling

To assess the degree of long-range temporal correlations, or equivalently the self-similarity, present in the EEG segments, the instantaneous amplitudes of the fluctuations in the γh, γm, γl, β, α, θ and δ ranges were calculated as the magnitude of the analytic signal obtained with the Hilbert transform. Next, the fractal scaling exponents of these amplitude fluctuations were estimated using DFA79, an algorithm that quantifies long-range scale-free correlations in non-stationary signals while mitigating spurious detection of long-range dependence caused by nonstationarity or extrinsic trends89. The method is essentially a modified root-mean-square analysis of a random walk30 and was introduced for band-pass-filtered neuronal signals in25; full details are described elsewhere25,30,90. Briefly, for a given time series x, the algorithm quantifies the relationship between F(n), the root-mean-square fluctuation of the integrated and detrended time series, and the window length n. Typically, F(n) increases with n and displays the asymptotic behaviour F(n) ~ n^α. The fractal scaling exponent α was estimated as the slope of a linear least-squares regression of F(n) on a log-log plot within the scaling range n ∈ [3, 15] s. The scaling exponent α is closely related to the autocorrelation function C(τ). When α = 0.5, C(τ) = 0 for any nonzero time lag τ, and the signal is equivalent to white noise, i.e., uncorrelated randomness. A scaling exponent in the range 0.5–1 indicates persistent long-range temporal correlations and scale-free properties; the closer α is to one, the greater the self-similarity. In this regime C(τ) ~ τ^(−γ) with γ = 2 − 2α, and there is a straightforward relationship between the autocorrelation and the power spectral density, P(f): by the Wiener-Khintchine theorem, if P(f) ~ 1/f^β then β = 1 − γ, and thus β = 2α − 1.
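Written out, the chain of relations in the preceding paragraph is as follows; the worked values in the last line are plain arithmetic on these formulas for the persistent regime 0.5 ≤ α ≤ 1, not results from this study.

```latex
\begin{aligned}
F(n) &\sim n^{\alpha}, \qquad C(\tau) \sim \tau^{-\gamma}, \quad \gamma = 2 - 2\alpha,\\
P(f) &\sim \frac{1}{f^{\beta}}, \qquad \beta = 1 - \gamma = 1 - (2 - 2\alpha) = 2\alpha - 1,\\
\alpha = 0.5 &\Rightarrow \beta = 0 \ \text{(flat spectrum)}, \qquad
\alpha = 0.75 \Rightarrow \gamma = 0.5,\ \beta = 0.5, \qquad
\alpha = 1 \Rightarrow \beta = 1 \ (1/f).
\end{aligned}
```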
Above one (α > 1), the underlying signal still displays long-range temporal correlations but these are not temporally structured in a fractal manner. The same bounds of the fitting range (3 and 15 s) were used in the estimation of α from all resting state or music listening segments. Scaling behaviour analysis was based on adapted functions from the Neurophysiological Biomarker Toolbox (NBT v.0.6.5–alpha, www.nbtwiki.net)90, in MATLAB (R2015a; The MathWorks, Inc).
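A minimal Python sketch of the amplitude-envelope DFA described above is given below. It assumes linear detrending in non-overlapping windows, 15 logarithmically spaced window lengths between 3 and 15 s, and F(n) taken as the RMS residual over all windows; these choices and the names `dfa` and `envelope_dfa` are our assumptions, and the NBT functions used in the study may differ (for example in window overlap or spacing).

```python
import numpy as np
from scipy.signal import hilbert


def dfa(x, fs, fit_range_s=(3.0, 15.0), n_scales=15):
    """DFA with linear detrending in non-overlapping windows.

    Returns the exponent alpha, the window lengths (samples) and F(n).
    """
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())                          # integrated signal
    lo, hi = (int(round(s * fs)) for s in fit_range_s)
    windows = np.unique(np.round(np.logspace(np.log10(lo), np.log10(hi), n_scales)).astype(int))
    F = np.empty(windows.size)
    for i, n in enumerate(windows):
        n_seg = profile.size // n
        segs = profile[: n_seg * n].reshape(n_seg, n)
        k = np.arange(n)
        slope, intercept = np.polyfit(k, segs.T, 1)            # one linear fit per window
        trend = np.outer(slope, k) + intercept[:, None]
        F[i] = np.sqrt(np.mean((segs - trend) ** 2))           # RMS fluctuation at scale n
    alpha = np.polyfit(np.log10(windows), np.log10(F), 1)[0]   # slope in log-log space
    return alpha, windows, F


def envelope_dfa(band_signal, fs, **kwargs):
    """DFA exponent of the Hilbert amplitude envelope of a band-limited signal or IMF."""
    return dfa(np.abs(hilbert(band_signal)), fs, **kwargs)[0]
```

As a sanity check, white noise should give α close to 0.5 and its running sum (a random walk) α close to 1.5, the latter lying in the α > 1 regime mentioned above.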

Analysis of musical dimensions

Music stems from the orderly sequencing of sounds; while adding notes or increasing the volume does not produce music, the manipulation of a sound's intensity, melody (successive changes in pitch) and rhythm (successive changes in tone duration) contributes essentially to musical meaning19,80. To estimate the loudness, pitch and rhythm variation embedded in the stimuli, we used the MATLAB-based MIR toolbox (v.1.7)91. We derived these dimensions from the 110-s audio recordings of the stimuli to quantify the exact scaling properties of the excerpts the subjects heard, because performers can vary in their interpretations of music scores and a segment's scaling properties can diverge from those of the whole piece. To approximate the acoustic amplitude and frequency properties of the music pieces to the perceived loudness and pitch, we used as an auditory model the two-channel filterbank developed in92 (one channel above and the other below 1 kHz). Estimations were performed band-wise and the results were later merged. To estimate loudness, the spectrogram of the excerpts was obtained with a 0.1-s Hann window and 10% overlap; the envelope was extracted after 40-Hz high-pass filtering with the Butterworth filter implemented in the toolbox, then half-wave rectified and down-sampled to 2756.25 Hz. For pitch estimation, all notes or chords sounding at a given instant need to be detected; polyphony, which arises from notes being played simultaneously by both hands, challenges accurate pitch tracking due to masking effects93. We found that the toolbox's mirpitch() function with default settings performed nearly optimally; for the notes in each frame, a dominant pitch (fundamental frequency) was extracted. This yielded a monodic curve sampled at 100 Hz, whose first derivative gave the pitch successions.
To estimate the rhythm, a similar procedure was followed to obtain the note-onset times, using the default threshold of 0.4. The so-called novelty curve, which captures sudden changes in the music signal by means of peaks that represent onset candidates, was obtained with the "spectral flux" implementation in the mironsets() function, a widely used approach based on the short-time spectrum of the signal93. The time differences between successive note onsets (inter-onset intervals) were taken as the rhythm series.
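A simplified Python stand-in for the rhythm-series step is sketched below. It assumes a plain spectral-flux novelty curve computed from a SciPy STFT (50-ms Hann windows, 50% overlap), normalised to its maximum and peak-picked at 0.4. It is not MIRtoolbox's mironsets(), whose filtering, normalisation and peak-picking differ; the window length, the normalisation and the function name `spectral_flux_onsets` are hypothetical. Only the idea follows the text: positive spectral change gives onset candidates, and their time differences form the rhythm series.

```python
import numpy as np
from scipy.signal import stft, find_peaks


def spectral_flux_onsets(audio, fs, win_s=0.05, threshold=0.4):
    """Onset times (s) from peaks of a normalised spectral-flux novelty curve."""
    nperseg = int(round(win_s * fs))
    _, t, Z = stft(audio, fs=fs, window="hann", nperseg=nperseg, noverlap=nperseg // 2)
    mag = np.abs(Z)                                    # (frequency bins, frames)
    # Spectral flux: summed positive magnitude increase between consecutive frames.
    flux = np.maximum(np.diff(mag, axis=1), 0.0).sum(axis=0)
    if flux.max() > 0:
        flux = flux / flux.max()                       # normalise to [0, 1]
    peaks, _ = find_peaks(flux, height=threshold)      # onset candidates above threshold
    return t[1:][peaks]                                # flux[j] compares frames j and j+1


# Rhythm series: inter-onset intervals, e.g. np.diff(spectral_flux_onsets(audio, fs)).
```

Analogously, the pitch successions described above correspond to `np.diff(pitch_curve)` applied to a frame-wise pitch curve (here a hypothetical array).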

The scaling exponents of the musical dimensions were estimated as described for the neuronal activity, within the same 3–15 s range to allow direct comparison with the neuronal scaling. Across the 12 music stimuli, the median scaling exponents were 1.07 ± 0.14 (loudness), 0.88 ± 0.10 (pitch) and 0.72 ± 0.13 (rhythm). An additional estimate of the overall scaling of the music was obtained by averaging the scaling exponents of the three dimensions.

Spectral power estimation of neuronal activity

As a control for data quality, the average spectral power was also estimated in seven frequency bands, δ (1–3 Hz), θ (4–7 Hz), α (8–12 Hz), β (13–30 Hz), γl (31–45 Hz), γm (55–125 Hz) and γh (126–345 Hz), using Welch's modified periodogram method implemented in MATLAB's pwelch() function with 50%-overlapping Hann windows of 2.048 s. Band power was computed by integrating the power spectral density within each band and then taking the mean.
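The band-power control can be sketched with SciPy's `welch` in place of MATLAB's pwelch(), using the band edges and the 2.048-s, 50%-overlap Hann windows stated above. Trapezoidal integration over the frequency bins inside each band is our assumption, the later averaging step (across channels or segments) is not shown, and the names `BANDS` and `band_powers` are hypothetical.

```python
import numpy as np
from scipy.signal import welch
from scipy.integrate import trapezoid

# Band edges (Hz) as listed in the text.
BANDS = {"delta": (1, 3), "theta": (4, 7), "alpha": (8, 12), "beta": (13, 30),
         "gamma_low": (31, 45), "gamma_mid": (55, 125), "gamma_high": (126, 345)}


def band_powers(x, fs=1000.0, win_s=2.048):
    """Integrated Welch PSD per band (50%-overlapping Hann windows of win_s seconds)."""
    nperseg = int(round(win_s * fs))
    f, pxx = welch(x, fs=fs, window="hann", nperseg=nperseg, noverlap=nperseg // 2)
    powers = {}
    for name, (lo, hi) in BANDS.items():
        sel = (f >= lo) & (f <= hi)
        powers[name] = trapezoid(pxx[sel], f[sel])   # integrate the density over the band
    return powers
```

For a unit-amplitude 10-Hz sine sampled at 1 kHz, nearly all of the power (about 0.5, the signal variance) falls in the α band.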

ECG analysis

The ECG data were sampled at 1 kHz; a low-pass filter (cut-off frequency: 100 Hz) and a notch filter attenuating frequencies around 50 Hz (the acquisition system's default) were applied during recording. After acquisition, two-way constrained least-squares FIR filters, a high-pass filter (cut-off 0.5 Hz, order 1200) and a low-pass filter (cut-off 45 Hz, order 500), both with −70 dB stopband attenuation and 0.1% passband ripple, were used to eliminate baseline drift of non-cardiac origin and to minimise other artefacts such as power-line interference or electromyographic noise. To study heart rate variability (HRV), the intervals between successive sinus beats (so-called RR or interbeat intervals) were computed from the preprocessed signals of the subjects (n = 17). Ectopic beats were eliminated automatically, by excluding beats deviating by more than 20% from the previous one, and by visual inspection. In most cases, the automatic procedure kept 100% or nearly 100% of the beats; however, for a few segments this value fell to 70–90%, and we therefore opted not to linearly interpolate the excluded beats94. Following visual inspection, 9 of 272 segments had data removed due to artefacts. From the cleaned interbeat series (NN intervals), the scaling exponent, or self-similarity, of the cardiac rate variability was estimated over a range of 4–11 beats, using a procedure similar to that described for the EEG time series. Of note, this value is considered an index of short-term heart rate variability, and we designate it here as α1 (heart)30 to distinguish it from the fractal scaling often computed on longer timescales. The range of ~4–11 beats falls within the 3–15 s interval used for the neuronal and musical scaling exponents when the heart rate is close to normal baseline levels (~60 bpm), and spans slightly shorter times when the heart rate rises above that level.
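The ectopic-beat rule and the short-term exponent α1 can be sketched in Python as below. Two assumptions are ours: each interval is compared with the last accepted interval (the text says "the previous one", which could also mean the previous raw interval), and the first interval is assumed to be a normal sinus interval. The DFA uses linear detrending in non-overlapping windows of 4–11 beats; the names `remove_ectopic` and `dfa_alpha1` are hypothetical, and this is not the PhysioNet toolbox implementation.

```python
import numpy as np


def remove_ectopic(rr, max_rel_change=0.2):
    """Drop intervals deviating > max_rel_change from the last accepted interval.

    Assumes the first interval is a normal sinus interval.
    """
    rr = np.asarray(rr, dtype=float)
    kept = [rr[0]]
    for value in rr[1:]:
        if abs(value - kept[-1]) <= max_rel_change * kept[-1]:
            kept.append(value)
    return np.array(kept)


def dfa_alpha1(nn, scales=range(4, 12)):
    """Short-term DFA exponent over window sizes given in beats (default 4-11)."""
    nn = np.asarray(nn, dtype=float)
    profile = np.cumsum(nn - nn.mean())                 # integrated NN series
    scales = np.asarray(list(scales))
    F = np.empty(scales.size)
    for i, n in enumerate(scales):
        n_seg = profile.size // n
        segs = profile[: n_seg * n].reshape(n_seg, n)
        k = np.arange(n)
        slope, intercept = np.polyfit(k, segs.T, 1)     # linear trend per window
        resid = segs - (np.outer(slope, k) + intercept[:, None])
        F[i] = np.sqrt(np.mean(resid ** 2))
    return np.polyfit(np.log10(scales), np.log10(F), 1)[0]
```

Note that at such short scales DFA estimates are somewhat biased even for white noise, which is one reason α1 is treated as a distinct short-term index.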
The following standard measures of short-term HRV were also calculated from the NN intervals: AVNN (average NN interval, inversely related to the average heart rate), SDNN (standard deviation of the NN intervals), rMSSD (square root of the mean of the squared differences between adjacent NN intervals), pNN50 (percentage of successive NN-interval differences greater than 50 ms), and the frequency-domain measures VLF (spectral power of the NN interval time series between 0.003 and 0.04 Hz), LF (spectral power between 0.04 and 0.15 Hz), HF (spectral power between 0.15 and 0.4 Hz) and LF/HF (ratio of low- to high-frequency power). The frequency-domain measures were computed using the Lomb-Scargle periodogram. The analyses were performed with the PhysioNet Cardiovascular Signal Toolbox94, available at www.physionet.org 95.
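The time-domain indices and an LF/HF ratio can be sketched in Python as follows, with NN intervals in milliseconds. The Lomb-Scargle step uses SciPy's `lombscargle`, which takes angular frequencies; the frequency grid, the trapezoidal band integration and the use of the unnormalised periodogram (adequate for a ratio, but not for absolute VLF/LF/HF power) are our assumptions. The PhysioNet toolbox applies its own windowing and normalisation, and the function names are hypothetical.

```python
import numpy as np
from scipy.signal import lombscargle
from scipy.integrate import trapezoid


def hrv_time_domain(nn_ms):
    """AVNN, SDNN, rMSSD (ms) and pNN50 (%) from NN intervals in milliseconds."""
    nn = np.asarray(nn_ms, dtype=float)
    d = np.diff(nn)                                   # successive differences
    return {
        "AVNN": nn.mean(),
        "SDNN": nn.std(ddof=1),
        "rMSSD": np.sqrt(np.mean(d ** 2)),
        "pNN50": 100.0 * np.mean(np.abs(d) > 50.0),
    }


def lf_hf_ratio(nn_ms, t_s=None):
    """LF/HF ratio from a Lomb-Scargle periodogram of the (unevenly sampled) NN series."""
    nn = np.asarray(nn_ms, dtype=float)
    t = np.cumsum(nn) / 1000.0 if t_s is None else np.asarray(t_s, dtype=float)
    f = np.linspace(0.04, 0.4, 721)                   # Hz; assumed grid
    p = lombscargle(t, nn - nn.mean(), 2 * np.pi * f) # SciPy expects angular frequency
    lf = (f >= 0.04) & (f <= 0.15)
    hf = (f >= 0.15) & (f <= 0.4)
    return trapezoid(p[lf], f[lf]) / trapezoid(p[hf], f[hf])
```

For instance, intervals of 800, 900, 850 and 950 ms give AVNN = 875 ms, rMSSD = √7500 ≈ 86.6 ms and pNN50 = 66.7% (two of the three successive differences exceed 50 ms).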

Statistical testing

To study the overall effect of music on ongoing neuronal dynamics, we averaged αbrain for each timescale [γh, γm, γl …] across the 12 music pieces at their first presentation and compared these values with those during the resting state using Wilcoxon signed-rank tests (p < 0.05, two-tailed), under the null hypothesis that the scaling behaviour is identical in the two states. Spearman correlation was used to relate these changes in neuronal dynamics to the individuals' behavioural scores, because of its nonparametric character and the ordinal nature of the psychometric scores96. We also investigated the interaction between the music and the neuronal dynamics: for each piece of music, the average αbrain of each component [γh, γm, γl …] across the n = 28 subjects was plotted against the scaling exponent of each music dimension (αloudness, αpitch and αrhythm) of that same piece, and the Spearman correlation coefficient (rs) was computed. Similarly, associations between the scaling behaviour of the music and the HRV indices were computed for the subset of subjects with ECG recordings (n = 17). To correct for multiple testing across the 119 channel locations, Type I errors were minimised with a false discovery rate (FDR) correction97; the FDR threshold was set so that 5%, 10% and 20% of the supra-threshold differences or correlations are expected to be false positives, and the results were compared across these levels of statistical significance.
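The channel-wise testing can be sketched in Python as paired two-sided Wilcoxon signed-rank tests per channel followed by an FDR correction. We assume here that the FDR procedure is the Benjamini-Hochberg step-up rule; the array layout (subjects × channels) and the names `fdr_bh` and `channelwise_rest_vs_music` are hypothetical, and this is not the authors' code.

```python
import numpy as np
from scipy.stats import wilcoxon


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean 'reject' mask."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= np.arange(1, m + 1) / m * q   # sorted p vs. i/m * q
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()                 # largest rank meeting the bound
        reject[order[: k + 1]] = True                  # reject all hypotheses up to it
    return reject


def channelwise_rest_vs_music(alpha_rest, alpha_music, q=0.05):
    """Paired two-sided Wilcoxon test per channel, then FDR across channels.

    alpha_rest, alpha_music: arrays of shape (n_subjects, n_channels).
    """
    pvals = np.array([wilcoxon(alpha_rest[:, ch], alpha_music[:, ch]).pvalue
                      for ch in range(alpha_rest.shape[1])])
    return pvals, fdr_bh(pvals, q)
```

Running `fdr_bh` with q = 0.05, 0.10 and 0.20 reproduces the three significance levels compared in the text. For the piece-level associations, `scipy.stats.spearmanr` applied to the 12 per-piece values (e.g. a hypothetical `alpha_pitch` array against the subject-averaged αbrain) would give rs.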

The head surfaces displayed were created by adapting the headplot MATLAB code from EEGLAB (https://sccn.ucsd.edu/eeglab/)98. For visualisation purposes, a locally weighted regression smoothing line was added to the scatterplots using the loess fit99 implemented in R100, with various smoothing spans and a polynomial degree of 2. The loess fit serves as a nonparametric description of the monotonic associations reported; no steps were taken to ensure the monotonicity of the bivariate relationships, but it was present in most cases. Importantly, the associations seldom approached a linear relationship, justifying the use of nonparametric statistical testing and fitting.

Supplementary information

Acknowledgements

We are grateful to Simon-Shlomo Poil for assisting with data acquisition and preprocessing.

Author contributions

A.F.T.-B. conceived research; A.F.T.-B., M.I., T.B. and K.L.-H. designed the experiment; A.F.T.-B., M.I. and T.B. performed research; A.F.T.-B. analyzed data; D.J.A.S. and H.D.M. provided statistical or conceptual advice; H.D.M. and K.L.-H. oversaw the project; A.F.T.-B. wrote the paper.

Data availability

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Code availability

Any custom code used during the current study is available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information is available for this paper at 10.1038/s41598-019-54060-x.

References

- 1.McDermott J, Hauser M. The origins of music: Innateness, uniqueness, and evolution. Music. Percept. 2005;23:29–59. doi: 10.1525/mp.2005.23.1.29. [DOI] [Google Scholar]

- 2.Janata P. Brain networks that track musical structure. Ann NY Acad Sci. 2005;1060:111–124. doi: 10.1196/annals.1360.008. [DOI] [PubMed] [Google Scholar]

- 3.Levitin DJ, Tirovolas AK. Current advances in the cognitive neuroscience of music. Ann NY Acad Sci. 2009;1156:211–31. doi: 10.1111/j.1749-6632.2009.04417.x. [DOI] [PubMed] [Google Scholar]

- 4.Koelsch S. Brain correlates of music-evoked emotions. Nat Rev Neurosci. 2014;15:170–180. doi: 10.1038/nrn3666. [DOI] [PubMed] [Google Scholar]

- 5.Salimpoor VN, Benovoy M, Larcher K, Dagher A, Zatorre RJ. Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nat Neurosci. 2011;14:257–262. doi: 10.1038/nn.2726. [DOI] [PubMed] [Google Scholar]

- 6.Juslin PN, Laukka P. Expression, perception, and induction of musical emotions: A review and a questionnaire study of everyday listening. J New Music. Res. 2004;33:217–238. doi: 10.1080/0929821042000317813. [DOI] [Google Scholar]

- 7.Särkämö T, et al. Music listening enhances cognitive recovery and mood after middle cerebral artery stroke. Brain. 2008;131:866–876. doi: 10.1093/brain/awn013. [DOI] [PubMed] [Google Scholar]

- 8.Sihvonen AJ, et al. Music-based interventions in neurological rehabilitation. Lancet Neurol. 2017;16:648–660. doi: 10.1016/s1474-4422(17)30168-0. [DOI] [PubMed] [Google Scholar]

- 9.Magee WL, O’Kelly J. Music therapy with disorders of consciousness: current evidence and emergent evidence-based practice. Ann NY Acad Sci. 2017;1337:256–262. doi: 10.1111/nyas.12633. [DOI] [PubMed] [Google Scholar]

- 10.Rauscher FH, Shaw GL, Ky CN. Music and spatial task performance. Nat. 1993;365:611–611. doi: 10.1038/365611a0. [DOI] [PubMed] [Google Scholar]

- 11.Masataka N, Perlovsky L. Cognitive interference can be mitigated by consonant music and facilitated by dissonant music. Sci Rep. 2013;3:–. doi: 10.1038/srep02028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Perlovsky L, Cabanac A, Bonniot-Cabanac M-C, Cabanac M. Mozart effect, cognitive dissonance, and the pleasure of music. Behav Brain Res. 2013;244:9–14. doi: 10.1016/j.bbr.2013.01.036. [DOI] [PubMed] [Google Scholar]

- 13.Schoenberg, A. Theory of Harmony (University of California Press, Los Angeles, 1983), 2nd edn.

- 14.Meyer, L. B. Emotion and Meaning in Music (The University od Chicago Press, Chicago and London, 1956).

- 15.Voss R. Random fractals: Self-affinity in noise, music, mountains, and clouds. Phys. D. 1989;38:362–371. doi: 10.1016/0167-2789(89)90220-0. [DOI] [Google Scholar]

- 16.Mandelbrot, B. B. The Fractal Geometry of Nature (W. H. Freeman and Company, New York, 1982).

- 17.Goldberger AL, et al. Fractal dynamics in physiology: Alterations with disease and aging. Proc Natl Acad Sci USA. 2002;99:2466–2472. doi: 10.1073/pnas.012579499. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Richard F, Voss JC. 1/f noise in music and speech. Nat. 1975;258:317–318. doi: 10.1038/258317a0. [DOI] [Google Scholar]

- 19.Hsü K, Hsü A. Fractal geometry of music. Proc Natl Acad Sci USA. 1990;87:938–941. doi: 10.1073/pnas.87.3.938. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Levitin DJ, Chordia P, Menon V. Musical rhythm spectra from bach to joplin obey a 1/f power law. Proc Natl Acad Sci USA. 2012;109:3716–20. doi: 10.1073/pnas.1113828109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Hennig H, et al. The nature and perception of fluctuations in human musical rhythms. PLoS One. 2011;6:e26457. doi: 10.1371/journal.pone.0026457. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Jennings HD, Ivanov PC, Martins ADM, Silva PD, Viswanathan G. Variance fluctuations in nonstationary time series: a comparative study of music genres. Phys. A. 2004;336:585–594. doi: 10.1016/j.physa.2003.12.049. [DOI] [Google Scholar]

- 23.Voss R, Clarke J. 1/f noise in music: Music from 1/f noise. J Acoust Soc Am. 1978;63:258–263. doi: 10.1121/1.381721. [DOI] [Google Scholar]

- 24.Garcia-Lazaro JA, Ahmed B, Schnupp JW. Emergence of tuning to natural stimulus statistics along the central auditory pathway. PloS One. 2011;6:e22584. doi: 10.1371/journal.pone.0022584. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Linkenkaer-Hansen K, Nikouline V, Palva J, Ilmoniemi R. Long-range temporal correlations and scaling behavior in human brain oscillations. J Neurosci. 2001;21:1370–7. doi: 10.1523/JNEUROSCI.21-04-01370.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Pritchard WS. The brain in fractal time: 1/f-like power spectrum scaling of the human electroencephalogram. Int J Neurosci. 1992;66:119–129. doi: 10.3109/00207459208999796. [DOI] [PubMed] [Google Scholar]

- 27.Dehghani N, Bédard C, Cash SS, Halgren E, Destexhe A. Comparative power spectral analysis of simultaneous elecroencephalographic and magnetoencephalographic recordings in humans suggests non-resistive extracellular media. J Comput. Neurosci. 2010;29:405–21. doi: 10.1007/s10827-010-0263-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Freeman WJ. Characterization of state transitions in spatially distributed, chaotic, nonlinear, dynamical systems in cerebral cortex. Integr. Physiol. Behav. Sci. 1994;29:294–306. doi: 10.1007/BF02691333. [DOI] [PubMed] [Google Scholar]

- 29.Bhattacharya J, Petsche H. Universality in the brain while listening to music. Proc Royal Soc Lond B. 2001;268:2423–33. doi: 10.1098/rspb.2001.1802. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Peng C, Havlin S, Stanley H, Goldberger A. Quantification of scaling exponents and crossover phenomena in nonstationary heartbeat time series. Chaos. 1995;5:82–7. doi: 10.1063/1.166141. [DOI] [PubMed] [Google Scholar]

- 31.Werner G. Fractals in the nervous system: conceptual implications for theoretical neuroscience. Front Physiol. 2010;1:15. doi: 10.3389/fphys.2010.00015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.He BJ. Scale-free brain activity: past, present, and future. Trends Cogn Sci. 2014;18:480–487. doi: 10.1016/j.tics.2014.04.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Tognoli E, Kelso SJ. The Metastable Brain. Neuron. 2014;81:35–48. doi: 10.1016/j.neuron.2013.12.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Martins M, Gingras B, Puig-Waldmueller E, Fitch TW. Cognitive representation of musical fractals: Processing hierarchy and recursion in the auditory domain. Cogn. 2017;161:31–45. doi: 10.1016/j.cognition.2017.01.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Rankin SK, Fink PW, Large EW. Fractal structure enables temporal prediction in music. J Acoust Soc Am. 2014;136:EL256–EL262. doi: 10.1121/1.4890198. [DOI] [PubMed] [Google Scholar]

- 36.Patel A, Balaban E. Temporal patterns of human cortical activity reflect tone sequence structure. Nat. 2000;404:80–4. doi: 10.1038/35003577. [DOI] [PubMed] [Google Scholar]

- 37.Koelsch S, Rohrmeier M, Torrecuso R, Jentschke S. Processing of hierarchical syntactic structure in music. Proc Natl Acad Sci USA. 2013;110:15443–15448. doi: 10.1073/pnas.1300272110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Bianco S, et al. Brain, music, and non-poisson renewal processes. Phys Rev E. 2007;75:061911. doi: 10.1103/PhysRevE.75.061911. [DOI] [PubMed] [Google Scholar]

- 39.Schiavio A, Altenmüller E. Exploring Music-Based Rehabilitation for Parkinsonism through Embodied Cognitive Science. Front. Neurol. 2015;6:217. doi: 10.3389/fneur.2015.00217. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.He BJ, Zempel JM, Snyder AZ, Raichle ME. The temporal structures and functional significance of scale-free brain activity. Neuron. 2010;66:353–69. doi: 10.1016/j.neuron.2010.04.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Nikulin VV, Brismar T. Long-range temporal correlations in alpha and beta oscillations: effect of arousal level and test-retest reliability. Clin Neurophysiol. 2004;115:1896–908. doi: 10.1016/j.clinph.2004.03.019. [DOI] [PubMed] [Google Scholar]

- 42.Poupard L, Sartène R, &Wallet J-C. Scaling behavior in β-wave amplitude modulation and its relationship to alertness. Biol Cybern. 2001;85:19–26. doi: 10.1007/PL00007993. [DOI] [PubMed] [Google Scholar]

- 43.Irrmischer, M., Intra, F., Mansvelder, H. D., Poil, S. & Linkenkaer-Hansen, K. Strong long-range temporal correlations of beta/gamma oscillations are associated with poor sustained visual attention performance. Eur J Neurosci, 10.1111/ejn.13672 (2017). [DOI] [PMC free article] [PubMed]

- 44.Smit DJ, Linkenkaer-Hansen K, Geus EJD. Long-range temporal correlations in resting-state α oscillations predict human timing-error dynamics. J Neurosci. 2013;33:11212–20. doi: 10.1523/JNEUROSCI.2816-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Adrian ED. Brain rhythms. Nat. 1944;153:360–362. doi: 10.1038/153360a0. [DOI] [Google Scholar]

- 46.Schaefer RS, Vlek RJ, Desain P. Music perception and imagery in eeg: Alpha band effects of task and stimulus. Int J Psychophysiol. 2011;82:254–259. doi: 10.1016/j.ijpsycho.2011.09.007. [DOI] [PubMed] [Google Scholar]

- 47.Fujioka T, Trainor LJ, Large EW, Ross B. Beta and gamma rhythms in human auditory cortex during musical beat processing. Ann NY Acad Sci. 2009;1169:89–92. doi: 10.1111/j.1749-6632.2009.04779.x. [DOI] [PubMed] [Google Scholar]

- 48.Allegrini P, et al. Spontaneous brain activity as a source of ideal 1/f noise. Phys Rev E. 2009;80:061914. doi: 10.1103/physreve.80.061914. [DOI] [PubMed] [Google Scholar]

- 49.Koelsch S, Siebel WA. Towards a neural basis of music perception. Trends Cogn Sci. 2005;9:578–584. doi: 10.1016/j.tics.2005.10.001. [DOI] [PubMed] [Google Scholar]

- 50.Ruiz MH, Koelsch S, Bhattacharya J. Decrease in early right alpha band phase synchronization and late gamma band oscillations in processing syntax in music. Hum Brain Mapp. 2009;30:1207–1225. doi: 10.1002/hbm.20584. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Crone NE, Boatman D, Gordon B, Hao L. Induced electrocorticographic gamma activity during auditory perception. Clin Neurophysiol. 2001;112:565582. doi: 10.1016/s1388-2457(00)00545-9. [DOI] [PubMed] [Google Scholar]

- 52.Perlovsky, L. Music, Passion, and Cognitive Function (Academic Press, San Diego, CA, 2017).

- 53.Perlovsky, L. Cognitive Function of Music and Meaning-Making. J. Biomusical Eng. 2016, 10.4172/2090-2719.s1-004 (2016).

- 54.Shen Y, Olbrich E, Achermann P, Meier P. Dimensional complexity and spectral properties of the human sleep EEG. Electroencephalograms. Clin. neurophysiol. 2003;114:199–209. doi: 10.1016/S1388-2457(02)00338-3. [DOI] [PubMed] [Google Scholar]

- 55.Perlovsky, L. Origin of music and embodied cognition. Front. Psychol. 6, 10.3389/fpsyg.2015.00538 (2015). [DOI] [PMC free article] [PubMed]

- 56.Chialvo DR. Psychophysics: Are our senses critical? Nat Phys. 2006;2:301–302. doi: 10.1038/nphys300. [DOI] [Google Scholar]

- 57.West BJ, Geneston EL, Grigolini P. Maximizing information exchange between complex networks. Phys Rep. 2008;468:1–99. doi: 10.1016/j.physrep.2008.06.003. [DOI] [Google Scholar]

- 58.Aquino G, Bologna M, West BJ, Grigolini P. Transmission of information between complex systems: 1/f resonance. Phys Rev E. 2011;83:051130. doi: 10.1103/physreve.83.051130. [DOI] [PubMed] [Google Scholar]

- 59.Freeman WJ, Kozma R, Werbos PJ. Biocomplexity: adaptive behavior in complex stochastic dynamical systems. Biosyst. 2001;59:109–123. doi: 10.1016/s0303-2647(00)00146-5. [DOI] [PubMed] [Google Scholar]

- 60.Borges AFT, Giraud A-L, Mansvelder HD, Linkenkaer-Hansen K. Scale-free amplitude modulation of neuronal oscillations tracks comprehension of accelerated speech. J Neurosci. 2018;38:710–722. doi: 10.1523/jneurosci.1515-17.2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Kunisato Y, et al. Personality traits and the amplitude of spontaneous low-frequency oscillations during resting state. Neurosci Lett. 2011;492:109–113. doi: 10.1016/j.neulet.2011.01.067. [DOI] [PubMed] [Google Scholar]

- 62.Juslin PN, Liljeström S, Västfjäll D, Barradas G, Silva A. An experience sampling study of emotional reactions to music: Listener, music, and situation. Emot. 2008;8:668–683. doi: 10.1037/a0013505. [DOI] [PubMed] [Google Scholar]

- 63.Zatorre R, Evans A, Meyer E. Neural mechanisms underlying melodic perception and memory for pitch. J Neurosci. 1994;14:1908–1919. doi: 10.1523/jneurosci.14-04-01908.1994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Cheung VKM, Meyer L, Friederici AD, Koelsch S. The right inferior frontal gyrus processes nested non-local dependencies in music. Sci Rep. 2018;8:3822. doi: 10.1038/s41598-018-22144-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Lin, A., Maniscalco, B. & He, B. J. Scale-free neural and physiological dynamics in naturalistic stimuli processing. eNeuro3 (2016). [DOI] [PMC free article] [PubMed]

- 66.Bernardi L, et al. Dynamic interactions between musical, cardiovascular, and cerebral rhythms in humans. Circ. 2009;119:3171–3180. doi: 10.1161/circulationaha.108.806174. [DOI] [PubMed] [Google Scholar]

- 67.Krabs R, Enk R, Teich N, Koelsch S. Autonomic Effects of Music in Health and Crohn’s Disease: The Impact of Isochronicity, Emotional Valence, and Tempo. PLOS One. 2015;10:e0126224. doi: 10.1371/journal.pone.0126224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Watanabe K, Ooishi Y, Kashino M. Heart rate responses induced by acoustic tempo and its interaction with basal heart rate. Sci. Reports. 2017;7:srep43856. doi: 10.1038/srep43856. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69. Zhigalov A, Arnulfo G, Nobili L, Palva S, Palva J. Relationship of fast- and slow-timescale neuronal dynamics in human MEG and SEEG. J Neurosci. 2015;35:5385–5396. doi: 10.1523/JNEUROSCI.4880-14.2015.

- 70. Faes L, Nollo G, Jurysta F, Marinazzo D. Information dynamics of brain–heart physiological networks during sleep. New J Phys. 2014;16:105005. doi: 10.1088/1367-2630/16/10/105005.

- 71. Koelsch S, Jäncke L. Music and the heart. Eur Heart J. 2015;36:3043–3049. doi: 10.1093/eurheartj/ehv430.

- 72. Herrero JL, Khuvis S, Yeagle E, Cerf M, Mehta AD. Breathing above the brain stem: volitional control and attentional modulation in humans. J Neurophysiol. 2018;119:145–159. doi: 10.1152/jn.00551.2017.

- 73. González-Espinoza A, Larralde H, Martínez-Mekler G, Müller M. Multiple scaling behaviour and nonlinear traits in music scores. R Soc Open Sci. 2017;4:171282. doi: 10.1098/rsos.171282.

- 74. Hunt N, McGrath D, Stergiou N. The influence of auditory-motor coupling on fractal dynamics in human gait. Sci Rep. 2014;4:5879. doi: 10.1038/srep05879.

- 75. Daly I, et al. Music-induced emotions can be predicted from a combination of brain activity and acoustic features. Brain Cogn. 2015;101:1–11. doi: 10.1016/j.bandc.2015.08.003.

- 76. Lin Y-P, Yang Y-H, Jung T-P. Fusion of electroencephalographic dynamics and musical contents for estimating emotional responses in music listening. Front Neurosci. 2014;8:94. doi: 10.3389/fnins.2014.00094.

- 77. Soto D, et al. Pleasant music overcomes the loss of awareness in patients with visual neglect. Proc Natl Acad Sci USA. 2009;106:6011–6016. doi: 10.1073/pnas.0811681106.

- 78. Huron D. Humdrum kern database. 2010. Last accessed October 2014.

- 79. Peng C, et al. Mosaic organization of DNA nucleotides. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics. 1994;49:1685–1689. doi: 10.1103/physreve.49.1685.

- 80. Hsü KJ, Hsü A. Self-similarity of the "1/f noise" called music. Proc Natl Acad Sci USA. 1991;88:3507–3509. doi: 10.1073/pnas.88.8.3507.

- 81. Diaz BA, et al. The Amsterdam Resting-State Questionnaire reveals multiple phenotypes of resting-state cognition. Front Hum Neurosci. 2013;7:446. doi: 10.3389/fnhum.2013.00446.

- 82. Mathôt S, Schreij D, Theeuwes J. OpenSesame: an open-source, graphical experiment builder for the social sciences. Behav Res Methods. 2011;44:314–324. doi: 10.3758/s13428-011-0168-7.

- 83. Bigdely-Shamlo N, Mullen T, Kothe C, Su K-M, Robbins KA. The PREP pipeline: standardized preprocessing for large-scale EEG analysis. Front Neuroinform. 2015;9:16. doi: 10.3389/fninf.2015.00016.

- 84. Widmann A, Schröger E, Maess B. Digital filter design for electrophysiological data – a practical approach. J Neurosci Methods. 2015;250:34–46. doi: 10.1016/j.jneumeth.2014.08.002.

- 85. Kaplan A, Fingelkurts AA, Fingelkurts AA, Borisov SV, Darkhovsky BS. Nonstationary nature of the brain activity as revealed by EEG/MEG: methodological, practical and conceptual challenges. Signal Process. 2005;85:2190–2212. doi: 10.1016/j.sigpro.2005.07.010.

- 86. Huang N, et al. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc R Soc Lond A. 1998;454:903–995. doi: 10.1098/rspa.1998.0193.

- 87. Flandrin P, Rilling G. Empirical mode decomposition as a filter bank. IEEE Signal Process Lett. 2004;11:112–114. doi: 10.1109/lsp.2003.821662.

- 88. Wu Z, Huang N. On the filtering properties of the empirical mode decomposition. Adv Adapt Data Anal. 2010;2:397–414. doi: 10.1142/s1793536910000604.

- 89. Hu K, Ivanov P, Chen Z, Carpena P, Stanley HE. Effect of trends on detrended fluctuation analysis. Phys Rev E. 2001;64:011114. doi: 10.1103/PhysRevE.64.011114.

- 90. Hardstone R, et al. Detrended fluctuation analysis: a scale-free view on neuronal oscillations. Front Physiol. 2012;3:450. doi: 10.3389/fphys.2012.00450.

- 91. Lartillot O, Toiviainen P. MIR in Matlab (II): a toolbox for musical feature extraction from audio. In: Proc Int Conf Music Inf Retrieval. 2007. p. 237–244.

- 92. Tolonen T, Karjalainen M. A computationally efficient multipitch analysis model. IEEE Trans Speech Audio Process. 2000;8:708–716. doi: 10.1109/89.876309.

- 93. Müller M, Ellis DP, Klapuri A, Richard G. Signal processing for music analysis. IEEE J Sel Top Signal Process. 2011;5:1088–1110. doi: 10.1109/jstsp.2011.2112333.

- 94. Vest AN, et al. An open source benchmarked toolbox for cardiovascular waveform and interval analysis. Physiol Meas. 2018;39:105004. doi: 10.1088/1361-6579/aae021.

- 95. Goldberger AL, et al. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation. 2000;101:e215–e220. doi: 10.1161/01.cir.101.23.e215.

- 96. Jamieson S. Likert scales: how to (ab)use them. Med Educ. 2004;38:1217–1218. doi: 10.1111/j.1365-2929.2004.02012.x.

- 97. Benjamini Y, Krieger AM, Yekutieli D. Adaptive linear step-up procedures that control the false discovery rate. Biometrika. 2006;93:491–507. doi: 10.1093/biomet/93.3.491.

- 98. Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- 99. Jacoby WG. Loess: a nonparametric, graphical tool for depicting relationships between variables. Elect Stud. 2000;19:577–613. doi: 10.1016/s0261-3794(99)00028-1.

- 100. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2018.


Supplementary Materials

Data Availability Statement

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Any custom code used during the current study is available from the corresponding author on reasonable request.