Abstract

Visual working memory (VWM) has been implicated both in the online representation of object tokens (in the object-file framework) and in the top-down guidance of attention during visual search (implementing a feature template). It is well established that object representations in VWM are structured by location, with access to the content of VWM modulated by position consistency. In the present study, we examined whether this property generalizes to the guidance of attention. Specifically, in two experiments, we probed whether the guidance of spatial attention from features in VWM is modulated by the position of the object from which the feature was encoded. Participants remembered an object with an incidental color. Items in a subsequent search array could match either the color of the remembered object, the location, or both. Robust benefits of color match (when the matching item was the target) and costs (when the matching item was a distractor) were observed. Critically, the magnitude of neither effect was influenced by spatial correspondence. The results demonstrate that features in VWM influence attentional priority maps in a manner that does not necessarily inherit the spatial structure of the object representations in which those features are maintained.

Keywords: visual working memory, visual search

Two of Anne Treisman’s fundamental contributions to cognitive science concerned mechanisms of feature-based attentional priority in visual search (e.g., Treisman & Gelade, 1980; Treisman & Gormican, 1988) and mechanisms for the representation of individual object tokens (e.g., Kahneman, Treisman, & Gibbs, 1992). The work on feature-based priority helped generate an entire subfield of research devoted to visual search, both as a tool for understanding basic properties of visual perception and as a core behavioral ability to be understood in its own right (how humans efficiently find and identify task-relevant objects in complex scenes). In the course of this development, the construct of strategic, feature-based guidance has taken a central role in almost all theories of visual search as a means for exerting control (Bundesen, Habekost, & Kyllingsbaek, 2005; Desimone & Duncan, 1995; Duncan & Humphreys, 1989; Ehinger, Hidalgo-Sotelo, Torralba, & Oliva, 2009; Hamker, 2005; Navalpakkam & Itti, 2005; Wolfe, 1994; Zelinsky, 2008). And in many of these, feature-based guidance is implemented as an active representation of task-relevant features, maintained in visual working memory (VWM).1 Indeed, there appears to be strong overlap between information maintained in VWM and the guidance of attention in visual search (Bahle, Beck, & Hollingworth, 2018; Hollingworth & Luck, 2009; Olivers, Meijer, & Theeuwes, 2006; Soto, Heinke, Humphreys, & Blanco, 2005; Woodman, Carlisle, & Reinhart, 2013).

VWM is also a plausible substrate of the object token representations at the heart of Treisman and colleagues’ object-file framework (Kahneman et al., 1992; Treisman, 1992). Although there was only limited discussion of the representational substrate of object files in these papers, the properties ascribed to object files correspond closely to those of VWM: an online representation of a subset of visible objects that can bridge disruptions such as brief occlusion and saccades. A strongly overlapping relationship between VWM and the object-file construct was confirmed most directly in Hollingworth and Rasmussen (2010), who combined key aspects of the original Kahneman et al. object reviewing paradigm and VWM paradigms (as illustrated in Figure 1). In the object-file framework, object tokens are addressed by their location: objecthood is defined by unique location, and the contents of the file (visual features, identity, etc.) are bound within the same object representation by virtue of being associated with the same spatial index. Retrieval of the remembered features is proposed to require selection of the spatial index to which they were bound. In Hollingworth and Rasmussen, retrieval of remembered features from VWM was strongly modulated by object position: the detection of changes to colored objects was impaired if the position of the test features did not correspond to either the original positions or the expected positions (given intervening motion) of the encoded objects. Similar findings have been observed across a range of remembered feature dimensions and set sizes (Hollingworth, 2007; Hollingworth & Rasmussen, 2010; Jiang, Olson, & Chun, 2000; Olson & Marshuetz, 2005; B. Wang, Cao, Theeuwes, Olivers, & Wang, 2016). 
Thus, although it would be too strong to claim that object representations are defined solely by location (Hollingworth & Franconeri, 2009; Moore, Stephens, & Hein, 2010; Richard, Luck, & Hollingworth, 2008), there is broad evidence that access to the content of VWM is modulated by position, drawing a close link between VWM and the object-file framework.

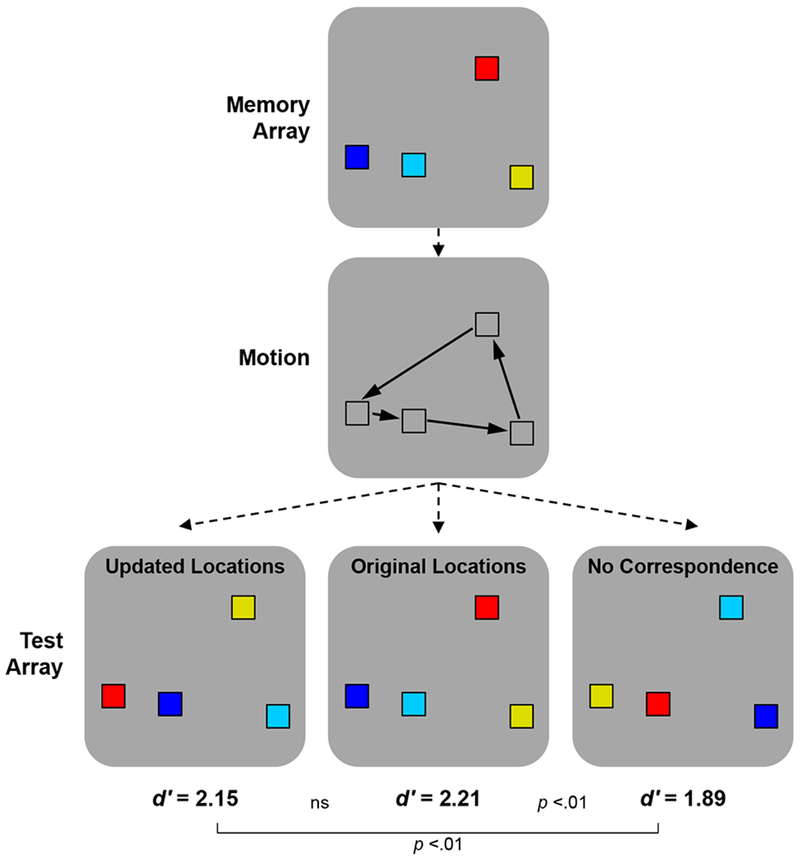

Figure 1.

Representative method and results from Hollingworth and Rasmussen (2010, Experiment 1). Participants remembered a set of colors across an intervening motion display. In the test array (all colors same or one changed), the locations of the test colors either corresponded to the expected location given motion (updated), to the original location, or to neither (no correspondence). There was a reliable decrement in memory accuracy in the no correspondence condition and a complementary increase in decision time (not depicted).

If VWM is involved both in the maintenance of online object representations and in the feature-based guidance of attention, to what extent is position modulation observed in access to objects reflected in the application of object features to guide search? Research on feature-based attention has typically concluded that feature biases are applied in a spatially homogeneous manner across the entire visual field (Liu & Mance, 2011; Martinez-Trujillo & Treue, 2004; Serences & Boynton, 2007; Treue & Martinez Trujillo, 1999; Zhang & Luck, 2009), through the modulation of competition between subpopulations of neurons coding different feature values, independently of location. Although this work provides a clear theoretical alternative, the empirical evidence does not address the present question directly, since the spatial manipulations in this literature have tended to be relatively gross (typically, hemifield), the cued features were fixed over long periods of time (likely engaging long-term mechanisms of attentional bias rather than VWM, Carlisle et al., 2011), and the cued features were not associated with discrete objects. In contrast with the standard literature on feature-based attention, Leonard, Balestreri, and Luck (2014) recently observed that capture by a distractor that matched a target-defining color was strongly graded as a function of distance from task-relevant stimuli, indicating interaction between the current locus of spatial attention and the spatial distribution of feature-based guidance. Leonard et al. used the same target feature across the entire experiment and did not associate feature cues with discrete objects. However, in the present context it is plausible that VWM for an object will lead to preferential allocation of spatial attention to the encoded location, producing graded feature-based guidance that is most pronounced at that location. 
That is, both guidance from and access to features in VWM may be modulated by position, because spatial attention is associated with both the maintenance and retrieval of VWM representations. However, it should be noted that substantial evidence indicates that VWM maintenance can be dissociated from the locus of spatial attention (Gajewski & Brockmole, 2006; Hakim, Adam, Gunseli, Awh, & Vogel, in press; Johnson, Hollingworth, & Luck, 2008; Maxcey-Richard & Hollingworth, 2013).

One study has addressed the present research question directly, albeit in a limited fashion. van Moorselaar, Theeuwes, and Olivers (2014) had participants remember a different set of colors on each trial, engaging VWM. In an intervening search task, a matching color-singleton distractor was presented either in the remembered location or in a different location. The magnitude of attention capture was not influenced by this manipulation. The result hints at a potentially important architectural distinction between access to the features of objects in VWM compared with the effects of these features on attentional guidance. However, van Moorselaar et al. only probed attention capture (there was no test of strategic processes), position modulation was a secondary aspect of their design, and they were only able to use ~25% of their trials to address it, raising the possibility that they simply had insufficient power to detect an effect of position match. Moreover, on the trials that were available for analysis, the singleton distractor was three times more likely to appear at the remembered location than at any other possible location, potentially generating a general bias to avoid attending to the remembered location (B. C. Wang & Theeuwes, 2018), which could have limited sensitivity to position-match effects.

In the present study, we examined systematically whether the guidance of spatial attention from features in VWM is applied homogeneously or whether it is modulated by the position of the object from which the feature was encoded. We developed a search task probing position-specificity in both the benefits of guidance (when the target shared the remembered feature) and the costs of guidance (when a distractor shared the remembered feature). In addition, we tested this question in the context of incidental (Experiment 1) and strategic (Experiment 2) guidance.

Experiment 1

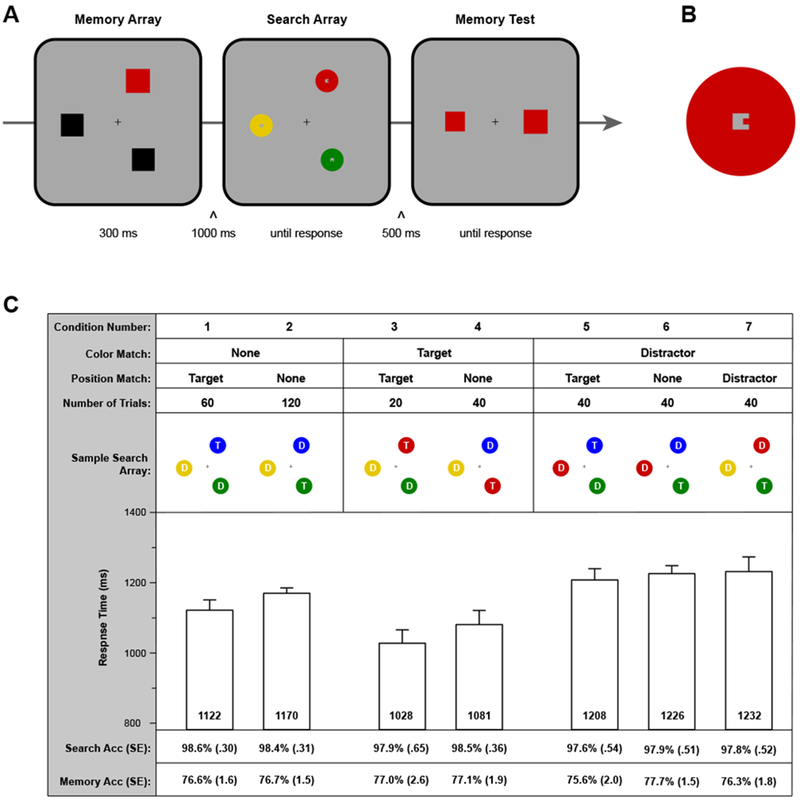

In Experiment 1, we examined the incidental guidance of attention from the content of VWM, asking whether this guidance is modulated by the position of the remembered stimulus. The basic paradigm is illustrated in Figure 2. Participants first saw an array of three squares. Two were black, and one had a color determined randomly on each trial. They remembered the size of the colored item for a memory test at the end of the trial; thus, color was an incidental property of the remembered object. During the retention interval, participants searched a three-item array for an object that had a small internal target feature. One item in the search array could match the color of the relevant memory square (color match). In addition, this item was either the target or a distractor. Moreover, the matching-color item could either appear at the same location as the relevant item from the memory display (position match) or at a different location (position mismatch). Search RT in these conditions was compared with a control condition in which none of the items matched the color of the remembered object (color mismatch). Note that the color and location of the remembered object were uncorrelated with the color and location of the search target (see Figure 2). In addition, the color and location of the remembered object were uncorrelated with the colors and locations of the distractors.

Figure 2.

A) Sequence of events in a sample trial of Experiment 1. Participants first saw a memory array with one colored item that was not black (memory item). Next, they searched for a target disk and reported a small internal feature. Finally, they completed a two-alternative forced-choice test probing memory for the size of the memory item. B) Target feature within a search disk. In the central, square region of the disk, the target had a “bump” on the left or right (pictured). Distractors had a “bump” on the top or bottom. C) Experimental design and key results. The seven conditions are illustrated, implementing manipulations of (1) the correspondence between the color of the memory item and the colors of the search disks, and (2) the correspondence between the location of the memory item and the locations of the search disks. The sample search arrays show configurations relative to the memory array in A. “T” denotes the target, and “D” a distractor. For each of the seven conditions, we report mean search RT (error bars are within-subject, condition-specific 95% confidence intervals; Morey, 2008), mean search accuracy (Search Acc), and mean memory test accuracy (Memory Acc). For the latter two reports, standard errors of the means are in parentheses.

We assessed the benefits and costs associated with the presence of a color-matching item and, critically, probed whether these were modulated by the correspondence between the location of the matching item and the location of the remembered object. If position specificity observed for access to the features of remembered objects (Hollingworth & Rasmussen, 2010; Kahneman et al., 1992) is reflected in the mechanisms by which these features interact with search priority, then both the benefits and costs of color match should be larger in the position-match condition than in the position-mismatch condition. However, if the features in VWM interact with priority in the spatially homogeneous manner suggested by the feature-based attention literature, there should be minimal or no modulation of the color-match effect by position.

Method

Participants.

Thirty-two participants completed Experiment 1. Each was recruited from the University of Iowa community, was between the ages of 18 and 30, reported normal or corrected to normal vision, completed the experiment for course credit, and was naive with respect to the hypotheses under investigation. Two participants were replaced because mean search accuracy fell below an a priori criterion of 85% correct (49.7% and 80.3%). Of the final 32 participants, 21 were female.

Stimuli.

The trial events are displayed in Figure 2A. The background was gray with a central black fixation cross (.65° × .65°). The memory array contained one colored square (red, green, blue, or yellow, randomly selected on each trial) and two black squares. The black squares subtended 1.63°. The colored square subtended a randomly selected value between 1.30° and 2.11°, in increments of 0.02°. The three squares were presented at the vertices of a virtual equilateral triangle, each at an eccentricity of 3.25° (measured to the object center). The position of the colored item was randomly selected. To vary the absolute locations from trial to trial, the triangular configuration was offset on each trial by a random angular value between 0° and 119°.
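The array geometry described above can be made concrete with a short sketch. This is an illustration only, not the original E-Prime implementation: the function names are hypothetical, and we assume integer angular offsets and a coordinate system centered on fixation with units in degrees of visual angle.

```python
import math
import random

ECCENTRICITY_DEG = 3.25  # eccentricity of each object center (from the text)
COLORS = ["red", "green", "blue", "yellow"]  # memory colors (from the text)


def triangle_positions(offset_deg, eccentricity=ECCENTRICITY_DEG):
    """Return the (x, y) centers of the three array locations.

    The locations are the vertices of an equilateral triangle centered on
    fixation, rotated by offset_deg.
    """
    positions = []
    for k in range(3):
        angle = math.radians(offset_deg + 120 * k)
        positions.append((eccentricity * math.cos(angle),
                          eccentricity * math.sin(angle)))
    return positions


def sample_memory_array(rng=random):
    """Sample one memory array (hypothetical helper).

    Assumption: the angular offset is an integer between 0 and 119 degrees.
    """
    offset = rng.randint(0, 119)
    return {
        "positions": triangle_positions(offset),
        "colored_index": rng.randrange(3),  # which location holds the colored square
        "color": rng.choice(COLORS),
    }
```

Because the triangle has threefold rotational symmetry, offsets between 0° and 119° already cover every distinct orientation of the configuration.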

The search array consisted of three colored disks (1.63° diameter), presented at the same three locations used for the memory array. Each disk had a central, gray “hole” (.24°) and a square “bump” (.08°) that protruded from one of the four edges defining the “hole” (Figure 2B). Two of the disks (distractors) had the bump on the top or bottom (randomly selected) and one (target) had the bump on the left or right (randomly selected). When there was a memory-matching color in the array, the two other colors were selected randomly without replacement from the remaining three colors. When there were no memory-matching colors, each disk was one of the three remaining colors. Note that, unlike the color-singleton method of van Moorselaar et al. (2014), the critical matching object in the present search arrays was not physically salient.

The memory test display consisted of two squares drawn in the original memory color, presented to the left and right of central fixation (3.25° eccentricity). One square was the same size as the original memory square (correct alternative), and the other (the foil) was either .33° larger or smaller than the memory square, randomly selected. The locations of the two alternatives were also selected randomly.

Apparatus.

Stimuli were presented on a 100-Hz LCD monitor at a viewing distance of 70 cm. Responses were collected with a USB game controller. The experiment was controlled by E-prime software (Schneider, Eschmann, & Zuccolotto, 2002).

Procedure.

Participants pressed a pacing button to begin each trial. There was a 300-ms delay, followed by the memory display for 300 ms, a 1000-ms delay (fixation cross only), the search display until response, a 500-ms delay, and finally the memory test display until response. Participants were instructed to maintain central fixation until the search array appeared. The experimenter monitored eye position via a large video image of the right eye and recorded trials on which participants made an eye movement, providing feedback each time. Central fixation ensured that search was not contaminated by differences in fixation position at search onset (as might occur if participants fixated the memory square and maintained fixation at that location). For half of the participants, the central fixation cross was briefly changed to white for 150 ms during the 1000-ms delay between the memory and search displays (500 ms black fixation cross, 150 ms white, 350 ms black). This abrupt luminance change was designed to direct covert attention back to central fixation after having attended the location of the memory color square.

For the search task, participants were instructed to find the “bump” that appeared on the left or right and press the corresponding gamepad button to indicate “bump” position, as quickly and as accurately as possible. Because the target feature was very small, they were also instructed that they could move their eyes from central fixation upon appearance of the search array. Finally, participants pressed either the left or right button (unspeeded) to indicate the size-matching square in the memory test display. If the participants responded incorrectly to the search array, the trial was terminated with a red, “incorrect” message displayed for 2000 ms. Similarly, an incorrect response to the memory test was followed by the same error message for 400 ms.

Note that we made the critical feature of the remembered object (color) incidental to the memory task (size discrimination). This was done to eliminate the possibility that participants would strategically attend to memory-matching items in the search array so as to improve performance on the memory task. The method has been successfully used in several studies (Hollingworth & Luck, 2009; Hollingworth, Matsukura, & Luck, 2013a, 2013b), with robust guidance of attention from a feature of the remembered object that was irrelevant to the memory task.

Participants first completed a practice session of 18 trials, followed by an experimental session of 360 trials. The key manipulations concerned 1) the presence of a color-matching item in the array and its status as target or distractor and 2) the correspondence between the location of the remembered item and the locations of the target and distractors. The full design, along with the number of trials in each condition, is illustrated in Figure 2C. Trials from the various conditions were randomly intermixed. The target was equally likely to appear at each of the three array locations. For the memory-match trials, the matching item was equally likely to appear at each of the three array locations. Thus, the memory location did not predict the location of the matching array item or the location of the target. In addition, the target was presented in the memory color on one-third of trials, and thus the memory color did not predict the target color.

Data analysis.

The two groups of participants (with and without a fixation cross luminance change between memory and search displays) produced functionally equivalent results—there were no significant differences for any of the main analyses, reported below—and were combined. Accuracy on the search task (Figure 2C) was very high (M = 98.2%) and did not differ significantly for any of the main tests. Mean accuracy on the memory task was 76.7% and did not differ significantly for any of the main tests (Figure 2C). Trials were eliminated from the search RT analysis if a saccade was detected before the onset of the search array, if the search response was incorrect, or if the RT was more than 2.5 SD from a participant’s condition mean (a total of 5.0% of the data). RT trimming did not alter the pattern of results. RT analyses were not significantly altered when limited to trials on which the memory response was correct, so the RT results, reported below, include both memory correct and incorrect trials.
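The RT trimming rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: we assume a single (non-iterative) pass, the sample standard deviation, and input trials that have already been filtered for early saccades and incorrect search responses. The function name and the tuple layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_rts(trials, criterion=2.5):
    """Drop trials whose RT is more than `criterion` SDs from the cell mean.

    trials: list of (participant, condition, rt_ms) tuples.
    A cell is one participant in one condition. Cells with fewer than two
    trials are kept unchanged because no SD can be computed for them.
    """
    cells = defaultdict(list)
    for participant, condition, rt in trials:
        cells[(participant, condition)].append(rt)

    # Compute the mean and SD once per cell, before any trial is removed.
    stats = {
        key: (mean(rts), stdev(rts))
        for key, rts in cells.items()
        if len(rts) >= 2
    }

    kept = []
    for participant, condition, rt in trials:
        key = (participant, condition)
        if key not in stats:
            kept.append((participant, condition, rt))
            continue
        cell_mean, cell_sd = stats[key]
        if abs(rt - cell_mean) <= criterion * cell_sd:
            kept.append((participant, condition, rt))
    return kept
```

Computing the criterion within each participant's condition, rather than over the pooled data, keeps the trimming from disproportionately removing trials in conditions with slower mean RTs.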

Results

Mean manual response time (RT) results are presented in Figure 2C. We first report the broad effects of color match (to probe memory-based guidance), then the effects of position match (to examine biases based on memory item position), and finally the interaction between remembered position and color match (to determine whether color-match effects were modulated by position). Note that for concision, we will refer in the following analyses to the condition numbers listed in Figure 2C.

Color match.

The data were entered into a one-way, repeated-measures ANOVA over the three color-match conditions (no match, target match, distractor match), collapsing across position match. There was a reliable effect of match to the remembered color, F(2,62) = 55.8, p < .001, η2p = .643. Consistent with a bias to attend to memory-matching items, mean RT was lower on target-match trials (1063 ms) than on no-match trials (1154 ms), F(1,31) = 36.8, p < .001, η2p = .543, and mean RT was higher on distractor-match trials (1222 ms) than on no-match trials, F(1,31) = 40.6, p < .001, η2p = .567.
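For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom with the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the three tests reported above; the function name is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported in the text.
omnibus = partial_eta_squared(55.8, 2, 62)
target_match = partial_eta_squared(36.8, 1, 31)
distractor_match = partial_eta_squared(40.6, 1, 31)
print(round(omnibus, 3), round(target_match, 3), round(distractor_match, 3))
# prints: 0.643 0.543 0.567
```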

Position match.

We next examined whether search was influenced by the correspondence between the position of the memory item and the position of the search target. The data were entered into a 3 (color match: no match, target match, distractor match) × 2 (target position: remembered location, other location) repeated-measures ANOVA. Conditions 6 and 7 (see Figure 2C) were collapsed for this analysis. There was a reliable effect of position match, F(1,31) = 6.55, p = .016, η2p = .174, with lower RT when the target appeared in the remembered position (1119 ms) than when it appeared in a different position (1160 ms). A similar effect has been observed by de Vries, van Driel, Karacaoglu, and Olivers (2018). In addition, we examined a planned contrast between target position match and mismatch for the trials on which no color-matching item was present (Conditions 1 and 2), providing the most direct test of this question. Mean RT was reliably lower when the target appeared in the position of the remembered item, F(1,31) = 10.6, p = .003, η2p = .254.

Modulation of color match by position.

The key question was whether the color match effect was modulated by position. In the first analysis, we examined whether the benefit of a color-matching search target was influenced by whether or not that item appeared in the remembered location. This involved a 2 (color match) × 2 (position match) repeated-measures ANOVA over Conditions 1-4, testing whether the effect of color match at the remembered location (Condition 1 versus Condition 3 = 94 ms, 95% CI = ± 45.9 ms) was larger than the effect of color match at a different location (Condition 2 versus Condition 4 = 90 ms, 95% CI = ± 38.6 ms). The interaction did not approach reliability, F(1,31) = .017, p = .897, η2p = .001 (upper 90% confidence limit, η2p = .041). A Bayes factor was calculated based on a paired t-test over the color match difference scores for same and different location, using the procedure outlined in Rouder, Speckman, Sun, Morey, and Iverson (2009) and the default scaling factor value (.707). The Bayes factor estimate indicated that the data were 5.28:1 in favor of the null hypothesis (i.e., 5.28 times more likely to occur under a model that did not include an effect of position match).
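To illustrate how a Bayes factor of this kind is obtained, the sketch below evaluates the JZS Bayes factor of Rouder et al. (2009) for a one-sample or paired t statistic. It is a minimal sketch and not the software used for the reported values. Two choices are ours: the integral over the prior is rewritten with the substitution g = 1/z², where z is standard normal, and it is evaluated with Simpson's rule rather than a library integrator. Because the interaction in a 2 × 2 within-subject design has one numerator degree of freedom, the corresponding t is taken as the square root of the reported F.

```python
import math


def jzs_bf01(t, n, r=0.707, upper=10.0, steps=4000):
    """JZS Bayes factor in favor of the null for a paired/one-sample t-test.

    t: t statistic; n: number of participants; r: prior scale.
    upper and steps control the numerical integration (our assumptions);
    steps must be even for Simpson's rule.
    """
    v = n - 1          # degrees of freedom
    a = n * r * r      # N * r^2, the factor multiplying g in the formula

    def integrand(z):
        # Likelihood term under the alternative, weighted by the standard
        # normal density; the factor 2 folds the negative half-line over.
        if z == 0.0:
            return 0.0  # limit of the integrand as z approaches 0
        s = 1.0 + a / (z * z)
        phi = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
        return 2.0 * s ** -0.5 * (1.0 + t * t / (s * v)) ** (-(v + 1) / 2) * phi

    # Composite Simpson's rule on [0, upper].
    h = upper / steps
    total = integrand(0.0) + integrand(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(i * h)
    marginal_alternative = total * h / 3.0

    marginal_null = (1.0 + t * t / v) ** (-(v + 1) / 2)
    return marginal_null / marginal_alternative


# Interaction test reported above: F = .017 with 32 participants.
print(jzs_bf01(math.sqrt(0.017), 32))
```

Under these assumptions the result should fall close to the reported ratio of about 5:1 in favor of the null; small discrepancies are expected because the F value is rounded in the text.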

The second test examined whether the cost of a color-matching distractor was influenced by whether that item appeared in the remembered location. This involved a simple planned contrast between condition 7 (matching color distractor in remembered location) and condition 6 (matching color distractor in a different location), controlling for the location of the target. There was no reliable difference between these conditions, F(1,31) = .091, p = .765, η2p = .003 (upper 90% confidence limit, η2p = .088). The mean difference score (same minus different location) was −6.21 ms (95% CI = ± 42.0 ms). Estimate of the Bayes factor (again based on a paired t-test) indicated that the data were 5.08 times more likely to occur under a model that did not include an effect of position match.

Discussion

There were two main effects. First, attention was reliably directed to items matching a search-irrelevant color maintained in VWM. Relative to a neutral condition, there were both benefits and costs of memory match. Second, targets were detected more rapidly when the target appeared in the same location as the memory item had appeared, indicating that attention during search was preferentially allocated to this location. Critically, these two effects did not interact. Specifically, neither the effect of a color-matching target nor the effect of a color-matching distractor was more pronounced at the remembered location than at other array locations. Thus, the data support a view in which the content of VWM introduces substantial control over the allocation of spatial attention and that this control is implemented homogeneously, without respect to the location of the item held in memory. However, the data also indicate that there was an independent bias to attend to the remembered location.

Experiment 2

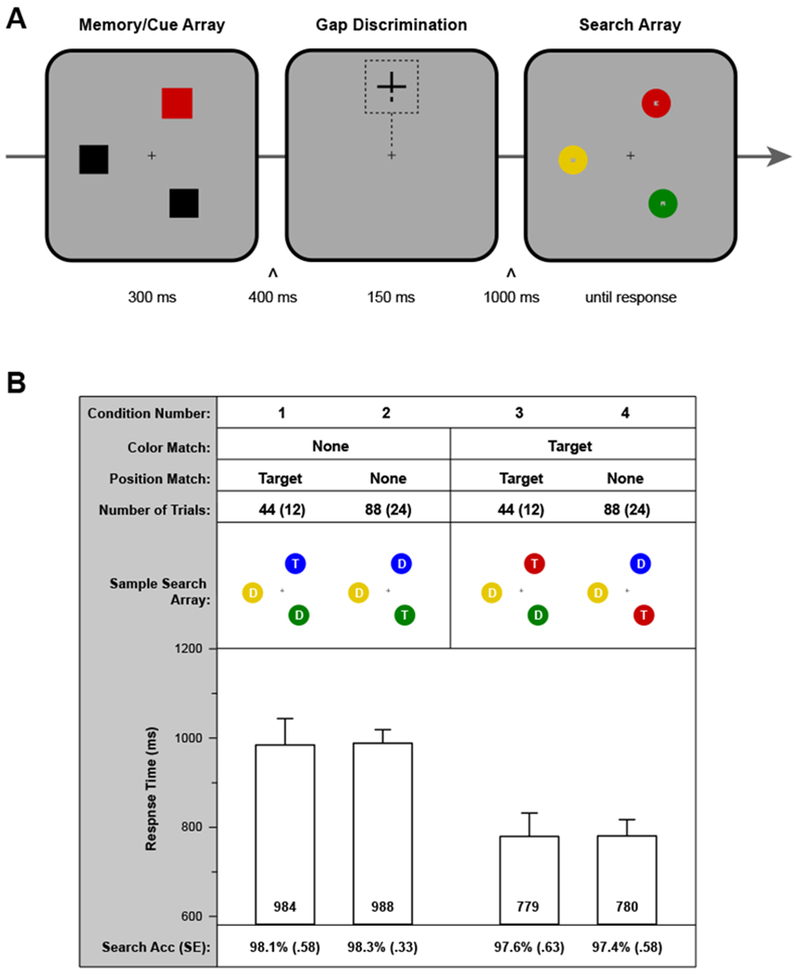

In Experiment 2, the paradigm was modified to probe the strategic guidance of attention from VWM and its potential modulation by object position, as illustrated in Figure 3. The distractor-color-match conditions were eliminated, so that when a color-matching object was present in the search array, this item was always the target. Thus, participants had strong incentive to remember the color of the square in the memory array (which, essentially, served as a cue display) and use this VWM representation to guide search.

Figure 3.

A) Sequence of events in a sample trial of Experiment 2. Participants first saw a memory array with one uniquely colored item. Next, participants monitored for the appearance of a gap in the central fixation cross that appeared in the top of the vertical section (response required) or in the bottom of the vertical section (no response). Finally, participants completed the same search task as in Experiment 1. B) Experimental design and key results. The four conditions are illustrated, implementing manipulations of (1) the correspondence between the color of the memory item and the color of the target disk, and (2) the correspondence between the location of the memory item and the location of the target disk. The sample search arrays show configurations relative to the memory array in A. “T” denotes the target, and “D” a distractor. For the number of trials, the main value corresponds to the number of trials with a bottom gap in the fixation cross and the value in parentheses to the number of trials with a top gap. Analyses were limited to the bottom-gap trials. For each of the four conditions, we report mean search RT (error bars are within-subject, condition-specific 95% confidence intervals; Morey, 2008) and mean search accuracy (Search Acc). For the latter, standard errors of the means are in parentheses.

A secondary goal was to probe in more depth the main effect of position match observed in Experiment 1. This position-match effect could be consistent with theories positing that VWM maintenance strongly overlaps with spatial attention (Chun, Golomb, & Turk-Browne, 2011; Kiyonaga & Egner, 2013); the act of memory maintenance would necessarily lead to sustained spatial attention at the original object location, producing the effect on search. However, an alternative view is that VWM maintenance and spatial attention can be dissociated (Hollingworth & Maxcey-Richard, 2013; Rerko, Souza, & Oberauer, 2014). In this view, spatial attention was directed to the remembered location because it was required for VWM encoding (e.g., Schmidt, Vogel, Woodman, & Luck, 2002) and there was simply no strong demand to withdraw attention from that location prior to search. Although the fixation cross luminance increment used for half of the subjects in Experiment 1 was designed to redirect spatial attention back to the fixation cross, such events can fail to influence attention if it is already focused at a discrete location (Yantis & Jonides, 1990).

Thus, in Experiment 2, we used a method that required participants to direct spatial attention to the fixation cross between the offset of the memory array and the onset of the search array: A small gap appeared in either the upper or lower section of the vertical portion of the fixation cross. Participants either executed a response to the gap (top gap, a small proportion of trials) or withheld response (bottom gap, a large proportion of trials).

Method

Participants.

Twenty participants (11 female) completed Experiment 2. Each was recruited from the University of Iowa community, was between the ages of 18 and 30, reported normal or corrected to normal vision, completed the experiment for course credit, and was naïve with respect to the hypotheses under investigation.

Stimuli.

In the memory display, the size of the colored square was always the same as the two black squares (1.63°). The gap in the fixation cross subtended 0.14°. It appeared at the midpoint of either the upper or lower half of the vertical section.

Procedure.

The events in a trial differed from Experiment 1 in the following respects. During the interval between the memory and search displays, the fixation cross was presented in isolation for 400 ms, followed by the appearance of the gap for 150 ms, followed by the full fixation cross again for 1000 ms (Figure 3A). The gap could appear on the upper section of the fixation cross (21.4% of trials) or on the lower section (78.6% of trials). Participants were instructed to press a button if the gap was on the top and to withhold response if the gap was on the bottom. This method allowed us to assess gap discrimination accuracy while limiting the search RT analysis to the large majority of trials (gap on the bottom) that did not require two responses. If the participant’s response to the gap location was incorrect (both errors of commission and omission), the trial was immediately terminated with the message “Error -- Press button when gap on top!” presented in red for 5000 ms before the start of the next trial.

The design was limited to conditions 1-4 (Figure 3B). That is, when there was a color-matching item in the search array, it was always the target. This provided a strong incentive to attend to color-matching items in the search array, and participants were explicitly instructed to remember the color as a cue for the search task. (Note that the position of the memory color remained unpredictive of the target position.) Given that the memory color was relevant to the search task, we eliminated the memory test at the end of the trial. Participants first completed a practice session of 12 trials in which they performed only the search task, with no gap discrimination task. Then, they completed a second practice session of 18 trials that included the gap discrimination task. Finally, they completed an experimental session of 336 trials. The distribution of trials across conditions is listed in Figure 3B.

Data analysis.

Accuracy on the gap discrimination task was very high (M = 98.2%) and did not differ for trials when the gap was on the top or bottom of the fixation cross. All further analyses were conducted over trials on which the gap was in the bottom position. (Analyses over all trials produced the same pattern of results and statistical significance.) Accuracy on the search task (Figure 3B) was high (M = 97.8%), with no significant main effects or interaction. Trials were eliminated from the RT analysis if a saccade was detected before the onset of the search array, if the gap response was incorrect, if the search response was incorrect, or if the RT was more than 2.5 SD from a participant’s condition mean (a total of 5.5% of the data). RT trimming did not alter the pattern of results.

Results and Discussion

RT results are presented in Figure 3B. The data were entered into a 2 (color match) × 2 (position match) repeated-measures ANOVA.

Color match.

There was a main effect of color match, F(1,19) = 35.1, p < .001, η2p = .649. Mean RT was lower when the target matched the memory color (779 ms) than when it did not (986 ms), consistent with the guidance of attention by VWM. Moreover, the benefit of color match was more than twice as large as that observed in Experiment 1, indicating strategic use of the remembered color to guide attention.
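For readers who wish to check the reported effect size, partial eta squared can be recovered from an F statistic and its degrees of freedom using the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The function name below is hypothetical, and the sketch is offered only as a consistency check, not as the analysis code used in the study.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # Main effect of color match: F(1,19) = 35.1
    print(round(partial_eta_squared(35.1, 1, 19), 3))  # 0.649
```

The same identity applies to any other F test with known degrees of freedom.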

Position match.

Unlike Experiment 1, there was no main effect of position match, F(1,19) = .132, p = .721, η2p = .007, with mean RT of 881 ms when the target was in the same location as the memory item and 884 ms when it was not. The Bayes factor estimate indicated that the data were 4.06 times more likely to occur under a model that did not include an effect of position match. Thus, the demand to attend to the fixation cross prior to search eliminated the main effect of position, suggesting that the reliable effect of position match in Experiment 1 was likely due to the incidental perseveration of spatial attention at the memory encoding location and was not inherent to the maintenance of the memory item in VWM (which must have been remembered reliably, as memory match had a large effect on search performance).2

Modulation of color match by position.

Finally, and replicating Experiment 1, there was no interaction between color match and position match. The benefit of color match at the remembered location (Condition 1 versus Condition 3 = 205 ms, 95% CI = ± 95.5 ms) was no larger than the benefit of color match at a different location (Condition 2 versus Condition 4 = 208 ms, 95% CI = ± 56.1 ms), F(1,19) = .010, p = .922, η2p = .001 (upper 90% confidence limit, η2p = .041). As in Experiment 1, a Bayes factor was calculated based on a paired t-test over the color match difference scores for the same and different locations. The Bayes factor estimate indicated that the data were 4.28 times more likely to occur under a model that did not include an effect of position match.
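In a 2 × 2 repeated-measures design, the interaction test is equivalent to a paired t-test on the difference scores, with F(1, n − 1) = t². The sketch below illustrates this relationship under the assumption that per-participant mean RTs are available as four lists, one per condition (Conditions 1-4 as in Figure 3B); the function name and the data layout are hypothetical. It does not compute the Bayes factor.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_t(c1, c2, c3, c4):
    """Paired t-test on color-match benefits at the same vs. a different location.

    c1..c4: per-participant mean RTs for Conditions 1-4, in the same
    participant order. Benefit at the same location = c3 - c1; benefit at a
    different location = c4 - c2. Returns (t, df); the interaction F equals t**2.
    """
    same_benefit = [no_match - match for match, no_match in zip(c1, c3)]
    diff_benefit = [no_match - match for match, no_match in zip(c2, c4)]
    contrast = [s - d for s, d in zip(same_benefit, diff_benefit)]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Hypothetical RTs (ms) for four participants, for illustration only.
    t, df = interaction_t(
        c1=[700, 720, 740, 760],
        c2=[710, 730, 750, 770],
        c3=[900, 930, 950, 960],
        c4=[915, 935, 955, 975],
    )
    print(t, df, t ** 2)
```

By this equivalence, the reported F(1,19) = .010 corresponds to |t(19)| = 0.1.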

Discussion

Complementing Experiment 1, the results of Experiment 2 indicate that the strategic guidance of attention on the basis of a feature representation in VWM is also implemented in a spatially homogeneous manner, without respect to the remembered location of the object from which that guidance is derived. In addition, the results of Experiment 2 indicate that the main effect of position match in Experiment 1 was likely to be an artifact of the allocation of attention to that location in order to encode the memory item. When the task required that attention be directed to the central fixation cross before search, no main effect of position was observed.

General Discussion

The present results are consistent with a spatially homogeneous application of features in VWM to the guidance of spatial attention. This can be accommodated naturally by models in the feature-based attention literature that posit modulation of feature-specific subpopulations of neurons in sensory cortex, independently of location (e.g., Martinez-Trujillo & Treue, 2004). The present results are also broadly consistent with models of visual search that have built on Treisman’s work on feature-based priority. For example, in Wolfe’s Guided Search model (Wolfe, 1994), top-down guidance of attention is achieved in a spatially homogeneous manner: any stimulus in the visual field that matches the current top-down set of relevant visual features receives a boost in strength in the priority map that determines attentional selection. Of course, it is possible that additional sources of guidance (e.g., knowledge of relevant spatial locations) will interact with the feature-based biases on the priority map, such that the combined effect reflects graded guidance (Leonard et al., 2014). However, the present experiments were designed to eliminate any additional sources of guidance so as to isolate feature-based effects. In particular, the location of the remembered object was uninformative with respect to the search task.
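The notion of spatially homogeneous feature-based guidance can be made concrete with a toy sketch. This is not an implementation of Guided Search or of any published model: the `Item` class, the `priority_map` function, and the additive `feature_gain` parameter are assumptions introduced purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Item:
    location: int     # index of the item's position in the search array
    color: str        # feature value of the item
    salience: float   # bottom-up activation (arbitrary units)


def priority_map(items, template_color, feature_gain=1.0):
    """Toy priority map with spatially homogeneous feature guidance.

    Every item matching the template color receives the same additive
    boost, regardless of its location.
    """
    return {
        item.location: item.salience
        + (feature_gain if item.color == template_color else 0.0)
        for item in items
    }


if __name__ == "__main__":
    array = [Item(0, "red", 0.5), Item(3, "green", 0.5), Item(5, "red", 0.5)]
    print(priority_map(array, template_color="red"))
```

In this sketch, the boost for a template-matching item is identical at every location, which parallels the absence of an interaction between color match and position match in the present data.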

How might a dissociation arise between spatially modulated access to the content of VWM and spatially homogeneous feature-based guidance from VWM? Access involves selecting the relevant object representation from among other active object representations. Clearly, an efficient means to this end is to select on the basis of unique location, as originally proposed by Kahneman et al. (1992). Although object tokens can also be defined by surface properties (Hollingworth & Franconeri, 2009; Moore et al., 2010), location can be particularly useful in cases where stimuli have similar surface features or where there is change in surface properties. Moreover, associations between objects and locations are formed automatically in the course of generating structured representations of scenes (Hollingworth, 2007; Hollingworth & Rasmussen, 2010). Such higher-level position associations are likely supported by parietal systems (Hakim et al., in press; Vogel & Machizawa, 2004) and, perhaps, medial temporal systems (Rolls & Wirth, 2018), providing the spatial index to which the content of the file is bound. Critically, and consistent with the original conceptualization of the object-file framework, the content of the file can be maintained in a manner that is largely separable from the representation of the index addressing it. Specifically, the features of objects in VWM are maintained, to a significant extent, by the sustained activation of subpopulations of neurons in relatively early visual areas (Emrich, Riggall, LaRocque, & Postle, 2013; Harrison & Tong, 2009; Serences, Ester, Vogel, & Awh, 2009), and this activation generalizes to retinotopically tuned populations coding locations other than that occupied by the encoded object (Ester, Serences, & Awh, 2009). Such spatially global activity would then implement spatially homogeneous feature-based attention, biasing the competition between subpopulations of neurons coding feature values in favor of remembered values (Desimone & Duncan, 1995; Hamker, 2005; Martinez-Trujillo & Treue, 2004; Schneegans, Spencer, Schöner, Hwang, & Hollingworth, 2014) and thus filtering sensory input to priority maps guiding attention during visual search.

Acknowledgments

This research was supported by a National Institutes of Health grant (R01 EY01735). Correspondence concerning this article should be addressed to Andrew Hollingworth, The University of Iowa, Department of Psychological and Brain Sciences, 11 Seashore Hall E, Iowa City, IA, 52242-1407. Email should be sent to andrew-hollingworth@uiowa.edu.

Footnotes

Search templates (broadly construed as the information used to guide visual search strategically) draw from multiple sources and from multiple memory systems (Anderson, Laurent, & Yantis, 2011; Carlisle, Arita, Pardo, & Woodman, 2011; Chun & Jiang, 1999; Torralba, Oliva, Castelhano, & Henderson, 2006; Wolfe, 2012). Here we focus on feature-based guidance from VWM.

It is possible that the demand to return attention to the central fixation cross generated inhibition of return at the memory item location and that this effect perfectly cancelled a second bias to attend to that location. Even if this were the case, the critical point here is that the color match effect was not modulated by location.

References

- Anderson BA, Laurent PA, & Yantis S (2011). Value-driven attentional capture. Proceedings of the National Academy of Sciences of the United States of America, 108(25), 10367–10371. doi: 10.1073/pnas.1104047108

- Bahle B, Beck VM, & Hollingworth A (2018). The architecture of interaction between visual working memory and visual attention. Journal of Experimental Psychology: Human Perception & Performance, 44(7), 992–1011. doi: 10.1037/xhp0000509

- Bundesen C, Habekost T, & Kyllingsbaek S (2005). A neural theory of visual attention: Bridging cognition and neurophysiology. Psychological Review, 112(2), 291–328. doi: 10.1037/0033-295x.112.2.291

- Carlisle NB, Arita JT, Pardo D, & Woodman GF (2011). Attentional templates in visual working memory. Journal of Neuroscience, 31(25), 9315–9322. doi: 10.1523/jneurosci.1097-11.2011

- Chun MM, Golomb JD, & Turk-Browne NB (2011). A taxonomy of external and internal attention. In Fiske ST, Schacter DL, & Taylor SE (Eds.), Annual Review of Psychology (Vol. 62, pp. 73–101). doi: 10.1146/annurev.psych.093008.100427

- Chun MM, & Jiang Y (1999). Top-down attentional guidance based on implicit learning of visual covariation. Psychological Science, 10(4), 360–365. doi: 10.1111/1467-9280.00168

- de Vries IEJ, van Driel J, Karacaoglu M, & Olivers CNL (2018). Priority switches in visual working memory are supported by frontal delta and posterior alpha interactions. Cerebral Cortex, 28(11), 4090–4104. doi: 10.1093/cercor/bhy223

- Desimone R, & Duncan J (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18, 193–222. doi: 10.1146/annurev.ne.18.030195.001205

- Duncan J, & Humphreys GW (1989). Visual search and stimulus similarity. Psychological Review, 96(3), 433–458. doi: 10.1037//0033-295X.96.3.433

- Ehinger KA, Hidalgo-Sotelo B, Torralba A, & Oliva A (2009). Modelling search for people in 900 scenes: A combined source model of eye guidance. Visual Cognition, 17(6-7), 945–978. doi: 10.1080/13506280902834720

- Emrich SM, Riggall AC, LaRocque JJ, & Postle BR (2013). Distributed patterns of activity in sensory cortex reflect the precision of multiple items maintained in visual short-term memory. Journal of Neuroscience, 33(15), 6516–6523. doi: 10.1523/jneurosci.5732-12.2013

- Ester EF, Serences JT, & Awh E (2009). Spatially global representations in human primary visual cortex during working memory maintenance. Journal of Neuroscience, 29(48), 15258–15265. doi: 10.1523/JNEUROSCI.4388-09.2009

- Gajewski DA, & Brockmole JR (2006). Feature bindings endure without attention: Evidence from an explicit recall task. Psychonomic Bulletin & Review, 13(4), 581–587. doi: 10.3758/BF03193966

- Hakim N, Adam KCS, Gunseli E, Awh E, & Vogel EK (in press). Dissecting the neural focus of attention reveals distinct processes for spatial attention and object-based storage in visual working memory. Psychological Science.

- Hamker FH (2005). The reentry hypothesis: The putative interaction of the frontal eye field, ventrolateral prefrontal cortex, and areas V4, IT for attention and eye movement. Cerebral Cortex, 15(4), 431–447. doi: 10.1093/cercor/bhh146

- Harrison SA, & Tong F (2009). Decoding reveals the contents of visual working memory in early visual areas. Nature, 458(7238), 632–635. doi: 10.1038/nature07832

- Hollingworth A (2007). Object-position binding in visual memory for natural scenes and object arrays. Journal of Experimental Psychology: Human Perception and Performance, 33(1), 31–47. doi: 10.1037/0096-1523.33.1.31

- Hollingworth A, & Franconeri SL (2009). Object correspondence across brief occlusion is established on the basis of both spatiotemporal and surface feature cues. Cognition, 113(2), 150–166. doi: 10.1016/j.cognition.2009.08.004

- Hollingworth A, & Luck SJ (2009). The role of visual working memory (VWM) in the control of gaze during visual search. Attention, Perception, & Psychophysics, 71(4), 936–949. doi: 10.3758/APP.71.4.936

- Hollingworth A, Matsukura M, & Luck SJ (2013a). Visual working memory modulates low-level saccade target selection: Evidence from rapidly generated saccades in the global effect paradigm. Journal of Vision, 13(13), 4. doi: 10.1167/13.13.4

- Hollingworth A, Matsukura M, & Luck SJ (2013b). Visual working memory modulates rapid eye movements to simple onset targets. Psychological Science, 24(5), 790–796. doi: 10.1177/0956797612459767

- Hollingworth A, & Maxcey-Richard AM (2013). Selective maintenance in visual working memory does not require sustained visual attention. Journal of Experimental Psychology: Human Perception and Performance, 39(4), 1047–1058. doi: 10.1037/A0030238

- Hollingworth A, & Rasmussen IP (2010). Binding objects to locations: The relationship between object files and visual working memory. Journal of Experimental Psychology: Human Perception and Performance, 36(3), 543–564. doi: 10.1037/a0017836

- Jiang Y, Olson IR, & Chun MM (2000). Organization of visual short-term memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26(3), 683–702. doi: 10.1037//0278-7393.26.3.683

- Johnson JS, Hollingworth A, & Luck SJ (2008). The role of attention in the maintenance of feature bindings in visual short-term memory. Journal of Experimental Psychology: Human Perception and Performance, 34(1), 41–55. doi: 10.1037/0096-1523.34.1.41

- Kahneman D, Treisman A, & Gibbs BJ (1992). The reviewing of object files: Object-specific integration of information. Cognitive Psychology, 24(2), 175–219. doi: 10.1016/0010-0285(92)90007-O

- Kiyonaga A, & Egner T (2013). Working memory as internal attention: Toward an integrative account of internal and external selection processes. Psychonomic Bulletin & Review, 20(2), 228–242. doi: 10.3758/s13423-012-0359-y

- Leonard CJ, Balestreri A, & Luck SJ (2014). Interactions between space-based and feature-based attention. Journal of Experimental Psychology: Human Perception and Performance. doi: 10.1037/xhp0000011

- Liu TS, & Mance I (2011). Constant spread of feature-based attention across the visual field. Vision Research, 51(1), 26–33. doi: 10.1016/j.visres.2010.09.023

- Martinez-Trujillo JC, & Treue S (2004). Feature-based attention increases the selectivity of population responses in primate visual cortex. Current Biology, 14(9), 744–751. doi: 10.1016/j.cub.2004.04.028

- Maxcey-Richard AM, & Hollingworth A (2013). The strategic retention of task-relevant objects in visual working memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(3), 760–772. doi: 10.1037/A0029496

- Moore CM, Stephens T, & Hein E (2010). Features, as well as space and time, guide object persistence. Psychonomic Bulletin & Review, 17(5), 731–736. doi: 10.3758/pbr.17.5.731

- Morey RD (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology, 4, 61–64.

- Navalpakkam V, & Itti L (2005). Modeling the influence of task on attention. Vision Research, 45(2), 205–231. doi: 10.1016/j.visres.2004.07.042

- Olivers CNL, Meijer F, & Theeuwes J (2006). Feature-based memory-driven attentional capture: Visual working memory content affects visual attention. Journal of Experimental Psychology: Human Perception and Performance, 32(5), 1243–1265. doi: 10.1037/0096-1523.32.5.1243

- Olson IR, & Marshuetz C (2005). Remembering “what” brings along “where” in visual working memory. Perception & Psychophysics, 67(2), 185–194. doi: 10.3758/BF03206483

- Rerko L, Souza AS, & Oberauer K (2014). Retro-cue benefits in working memory without sustained focal attention. Memory & Cognition, 42(5), 712–728. doi: 10.3758/s13421-013-0392-8

- Richard AM, Luck SJ, & Hollingworth A (2008). Establishing object correspondence across eye movements: Flexible use of spatiotemporal and surface feature information. Cognition, 109(1), 66–88. doi: 10.1016/j.cognition.2008.07.004

- Rolls ET, & Wirth S (2018). Spatial representations in the primate hippocampus, and their functions in memory and navigation. Progress in Neurobiology, 171, 90–113. doi: 10.1016/j.pneurobio.2018.09.004

- Rouder JN, Speckman PL, Sun DC, Morey RD, & Iverson G (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16(2), 225–237. doi: 10.3758/pbr.16.2.225

- Schmidt BK, Vogel EK, Woodman GF, & Luck SJ (2002). Voluntary and automatic attentional control of visual working memory. Perception & Psychophysics, 64(5), 754–763. doi: 10.3758/BF03194742

- Schneegans S, Spencer JP, Schöner G, Hwang S, & Hollingworth A (2014). Dynamic interactions between visual working memory and saccade target selection. Journal of Vision, 14(11). doi: 10.1167/14.11.9

- Schneider W, Eschmann A, & Zuccolotto A (2002). E-Prime user’s guide. Pittsburgh, PA: Psychology Software Tools, Inc.

- Serences JT, & Boynton GM (2007). Feature-based attentional modulations in the absence of direct visual stimulation. Neuron, 55(2), 301–312. doi: 10.1016/j.neuron.2007.06.015

- Serences JT, Ester EF, Vogel EK, & Awh E (2009). Stimulus-specific delay activity in human primary visual cortex. Psychological Science, 20(2), 207–214. doi: 10.1111/j.1467-9280.2009.02276.x

- Soto D, Heinke D, Humphreys GW, & Blanco MJ (2005). Early, involuntary top-down guidance of attention from working memory. Journal of Experimental Psychology: Human Perception and Performance, 31(2), 248–261. doi: 10.1037/0096-1523.31.2.248

- Torralba A, Oliva A, Castelhano MS, & Henderson JM (2006). Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychological Review, 113(4), 766–786. doi: 10.1037/0033-295X.113.4.766

- Treisman A (1992). Perceiving and re-perceiving objects. American Psychologist, 47(7), 862–875. doi: 10.1037/0003-066X.47.7.862

- Treisman A, & Gelade G (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97–136. doi: 10.1016/0010-0285(80)90005-5

- Treisman A, & Gormican S (1988). Feature analysis in early vision: Evidence from search asymmetries. Psychological Review, 95(1), 15–48. doi: 10.1037/0033-295X.95.1.15

- Treue S, & Martinez Trujillo JC (1999). Feature-based attention influences motion processing gain in macaque visual cortex. Nature, 399(6736), 575–579. doi: 10.1038/21176

- van Moorselaar D, Theeuwes J, & Olivers CN (2014). In competition for the attentional template: Can multiple items within visual working memory guide attention? Journal of Experimental Psychology: Human Perception and Performance, 40(4), 1450–1464. doi: 10.1037/a0036229

- Vogel EK, & Machizawa MG (2004). Neural activity predicts individual differences in visual working memory capacity. Nature, 428(6984), 748–751. doi: 10.1038/nature02447

- Wang B, Cao X, Theeuwes J, Olivers CN, & Wang Z (2016). Location-based effects underlie feature conjunction benefits in visual working memory. Journal of Vision, 16(11), 12. doi: 10.1167/16.11.12

- Wang BC, & Theeuwes J (2018). Statistical regularities modulate attentional capture. Journal of Experimental Psychology: Human Perception and Performance, 44(1), 13–17. doi: 10.1037/xhp0000472

- Wolfe JM (1994). Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review, 1(2), 202–238. doi: 10.3758/bf03200774

- Wolfe JM (2012). Saved by a log: How do humans perform hybrid visual and memory search? Psychological Science, 23(7), 698–703. doi: 10.1177/0956797612443968

- Woodman GF, Carlisle NB, & Reinhart RMG (2013). Where do we store the memory representations that guide attention? Journal of Vision, 13(3). doi: 10.1167/13.3.1

- Yantis S, & Jonides J (1990). Abrupt visual onsets and selective attention: Voluntary versus automatic allocation. Journal of Experimental Psychology: Human Perception and Performance, 16(1), 121–134. doi: 10.1037//0096-1523.16.1.121

- Zelinsky GJ (2008). A theory of eye movements during target acquisition. Psychological Review, 115(4), 787–835. doi: 10.1037/a0013118

- Zhang W, & Luck SJ (2009). Feature-based attention modulates feedforward visual processing. Nature Neuroscience, 12(1), 24–25. doi: 10.1038/nn.2223