Abstract

Purpose:

To train a deep learning (DL) algorithm that quantifies glaucomatous neuroretinal damage on fundus photographs using the minimum rim width relative to Bruch’s membrane opening (BMO-MRW) from spectral domain-optical coherence tomography (SDOCT).

Design:

Cross-sectional study

Methods:

9,282 pairs of optic disc photographs and SDOCT optic nerve head scans from 927 eyes of 490 subjects were randomly divided, at the subject level, into training plus validation (80%) and test (20%) sets. A DL convolutional neural network was trained to predict the SDOCT BMO-MRW global and sector values from optic disc photographs. The predictions of the DL network were compared with the actual SDOCT measurements. The area under the receiver operating characteristic curve (AUC) was used to evaluate the ability of the network to discriminate eyes with glaucomatous visual field loss from healthy eyes.

Results:

The DL predictions of global BMO-MRW from all optic disc photos in the test set (mean ± standard deviation [SD]: 228.8±63.1μm) were highly correlated with the observed values from SDOCT (mean ± SD: 226.0±73.8μm) (Pearson’s r=0.88; R²=77%; P<0.001), with a mean absolute error of 27.8μm. The AUCs for discriminating glaucomatous from healthy eyes with the DL predictions and actual SDOCT global BMO-MRW measurements were 0.945 (95% CI:0.874–0.980) and 0.933 (95% CI:0.856–0.975), respectively (P=0.587).

Conclusions:

A DL network can be trained to quantify the amount of neuroretinal damage on optic disc photographs using SDOCT BMO-MRW as a reference. This algorithm showed high accuracy for glaucoma detection and may eliminate the need for human gradings of disc photos.

INTRODUCTION

Glaucoma is the leading cause of irreversible blindness worldwide, and will affect approximately 80 million people by 2020.1 Nevertheless, several population-based surveys have suggested that the majority of patients with glaucoma are unaware that they have the disease.2, 3 The reasons for this are likely multifactorial, including poor public knowledge about glaucoma,3,4 and the fact that glaucoma symptoms may be minimal until the later stages.5 Public health interventions that can effectively and inexpensively screen populations for glaucoma are thus needed so that patients can be diagnosed and treated earlier in the disease course, before they suffer irreversible visual dysfunction.

Over the past two decades, nonmydriatic fundus photographs have been widely and effectively employed to screen for diabetic retinopathy via teleophthalmology.6, 7 However, the use of optic disc photos for glaucoma screening has been limited by the dependence on human graders. Manual review of disc photographs is not only time-consuming, but also highly subjective. When used in a screening setting, monoscopic fundus photo gradings have also shown poor sensitivity for glaucoma.8–13 Numerous studies have documented that gradings of optic disc photographs have only modest reproducibility14, 15 and fair interrater reliability8, 15, 16, even among expert graders with fellowship training in glaucoma.16

Nevertheless, interest in the potential use of fundus photographs for glaucoma screening17, 18 has been renewed in light of recent publications demonstrating the ability of deep learning algorithms to accurately classify retinal diseases on fundus photographs.18–21 Most recently, Li and colleagues17 showed that a deep learning neural network algorithm could be trained to detect optic nerves suspicious, or “referable”, for glaucoma on fundus photographs. While their work is enlightening, the true accuracy of their algorithm is limited by its reliance on subjectively graded fundus photographs as the reference standard. If a neural network is trained using subjectively graded photographs, it will learn to mimic the discrimination errors made by the human graders. For example, optic nerves with physiologically enlarged cups may be misdiagnosed as glaucomatous, whereas true glaucomatous damage on small optic discs may be underappreciated.17

When ophthalmologists in clinical practice have difficulty determining whether an optic nerve’s appearance is glaucomatous, they turn to objective metrics such as spectral domain optical coherence tomography (SDOCT) to provide quantitative structural information regarding the optic disc and surrounding tissue. We propose that such quantitative data derived from structural measurements of the optic nerve head may provide a better reference standard for development of neural networks than qualitative gradings of the optic disc on fundus photographs. The minimum rim width relative to Bruch’s membrane opening (BMO-MRW) is a relatively new parameter for evaluating the neuroretinal rim on SDOCT. The BMO-MRW is defined as the minimum distance from the internal limiting membrane (ILM) to the inner opening of the BMO, averaged over the SDOCT scans around the disc (Supplemental Figure 1, Supplemental Material at AJO.com).22, 23 Several studies have suggested that, for diagnosing glaucoma, BMO-MRW is at least as accurate24–26 as, and may be more sensitive22, 24, 27 than, peripapillary retinal nerve fiber layer (RNFL) thickness and other rim-based parameters. BMO-MRW has shown a very strong correlation with visual field loss in glaucoma.24, 25, 27, 28 Moreover, BMO-MRW may be particularly sensitive for identifying early glaucoma22, 29 and glaucoma suspects,24 making it a potentially useful metric for screening.

In the current study, we develop and validate a novel deep learning algorithm that has been trained using the BMO-MRW from SDOCT to detect glaucomatous optic neuropathy on fundus photographs and to predict the quantitative amount of neuroretinal damage.

METHODS

The data used in this study were drawn from the Duke Glaucoma Repository, a database of electronic research and medical records developed by the Duke University Vision, Imaging and Performance (VIP) Laboratory. The Duke Institutional Review Board approved this study with a waiver of informed consent due to the retrospective nature of this research. The study protocol adhered to the tenets of the Declaration of Helsinki and was conducted in accordance with the Health Insurance Portability and Accountability Act.

All included subjects were adults at least 18 years of age. Records were reviewed for diagnosis, medical history, and results from comprehensive ophthalmic examination including visual acuity, intraocular pressure, slit-lamp biomicroscopy, gonioscopy, and dilated fundus examination. In addition, stereoscopic optic disc photographs (Nidek 3DX, Nidek, Japan) and Spectralis SDOCT (Software version 5.4.7.0, Heidelberg Engineering, GmbH, Dossenheim, Germany) images and associated data were collected. Standard automated perimetry (SAP) acquired with a size III stimulus using the 24–2 test pattern and Swedish interactive threshold algorithm (Carl Zeiss Meditec, Inc., Dublin, CA) was included if the test met reliability parameters such as fewer than 33% fixation losses and less than 15% false-positive errors. Patients with other ocular or systemic diseases that could affect the optic nerve or visual field were excluded.

Eyes were categorized as glaucomatous if they had evidence of glaucomatous optic neuropathy documented on dilated fundus examination (i.e., cupping, diffuse or focal rim thinning, optic disc hemorrhage, or retinal nerve fiber layer defects) and a reproducible visual field defect on two consecutive SAP tests, defined as a pattern standard deviation (PSD) with P<5% or a glaucoma hemifield test outside normal limits. Glaucoma suspects had ocular hypertension, a positive family history, or a suspicious-appearing optic nerve on clinical examination. Normal subjects had to have a normal dilated examination with no evidence of ocular hypertension and no abnormality on SAP.

Spectral Domain Optical Coherence Tomography

Images of the optic nerve head (ONH) were acquired using Spectralis SDOCT (Software version 5.4.7.0, Heidelberg Engineering, GmbH, Heidelberg, Germany). The device combines a dual-beam SDOCT with a confocal laser-scanning ophthalmoscope, using a superluminescent diode light source with a center wavelength of 870 nm and an infrared scan to provide simultaneous images of ocular microstructures. To decrease geometrical errors, scans were acquired with reference to the fovea-BMO axis of the patient’s eye,27 and data were sectorized based on this axis. A live B-scan was used to detect the fovea and the center of the BMO. A radial scan pattern of 24 equidistant radial B-scans, each subtending 15 degrees, was centered on the BMO and used to compute the neuroretinal rim parameters. Each B-scan comprised 1,536 A-scans acquired at a scanning speed of 40,000 A-scans per second. Automated software (Glaucoma Module Premium Edition, version 6.0, Heidelberg Engineering) was used to identify the ILM and BMO. The BMO-MRW was calculated as the minimum distance from the ILM to the BMO, averaged over the 24 radial B-scans.28 It was computed automatically for the global value and for sectors defined by the Garway-Heath structure-function map.30
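The BMO-MRW definition above reduces to a nearest-point search within each radial B-scan. The sketch below is a hypothetical illustration, not the Glaucoma Module Premium Edition implementation: it assumes the ILM and BMO have already been segmented into (x, z) coordinates in micrometres, measures the distance from each BMO point to the ILM vertices only (a true point-to-curve distance would also consider the segments between vertices), and averages over all BMO points of all B-scans to give a global value. Sector values and the fovea-BMO alignment are omitted.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representation: (x, z) coordinates in micrometres within one radial B-scan.
Point = Tuple[float, float]


def min_rim_width(bmo: Point, ilm: Sequence[Point]) -> float:
    """Shortest distance from one BMO point to the segmented ILM (vertices only)."""
    return min(math.dist(bmo, p) for p in ilm)


def global_bmo_mrw(scans: Sequence[Tuple[Sequence[Point], Sequence[Point]]]) -> float:
    """Mean MRW over every BMO point of every radial B-scan.

    Each scan is (bmo_points, ilm_points). A radial B-scan through the BMO
    centre usually contains two BMO points, one on each side of the disc.
    """
    widths: List[float] = [
        min_rim_width(b, ilm) for bmo_points, ilm in scans for b in bmo_points
    ]
    return sum(widths) / len(widths)


if __name__ == "__main__":
    toy_scans = [
        ([(0.0, 0.0)], [(0.0, 200.0), (50.0, 210.0)]),
        ([(0.0, 0.0)], [(0.0, 300.0), (40.0, 320.0)]),
    ]
    print(global_bmo_mrw(toy_scans))  # 250.0
```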

During image acquisition, the device’s image registration and eye-tracking software was used to reduce the effect of eye movements and to ensure that the same location was scanned over time. Images were manually reviewed to ensure scan centration, signal strength of at least 15 dB, and high image quality without image inversion or clipping. SDOCT images with coexistent retinal pathology were excluded. In order to improve the heterogeneity of the dataset for deep learning, we used all available optic disc photographs acquired over time and matched them to the closest SDOCT ONH scan within 6 months of the photo date.
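The photo-to-scan matching rule can be sketched as a nearest-date lookup with a cutoff. This is an assumed reading of the rule: matching is done within the same eye, "6 months" is approximated as 183 days, and ties go to the earlier-listed scan; the function name and signature are hypothetical.

```python
from datetime import date
from typing import Optional, Sequence

# Assumption: "within 6 months" approximated as 183 days.
MAX_GAP_DAYS = 183


def match_scan(photo_date: date, scan_dates: Sequence[date],
               max_gap_days: int = MAX_GAP_DAYS) -> Optional[date]:
    """Return the SDOCT scan date (same eye) closest to photo_date, or None.

    None means no scan falls within max_gap_days, so the photo is not used.
    """
    if not scan_dates:
        return None
    closest = min(scan_dates, key=lambda d: abs((d - photo_date).days))
    return closest if abs((closest - photo_date).days) <= max_gap_days else None


if __name__ == "__main__":
    scans = [date(2014, 12, 1), date(2015, 2, 1)]
    print(match_scan(date(2015, 1, 10), scans))  # 2015-02-01 (22 days away)
    print(match_scan(date(2016, 1, 1), scans))   # None (more than 183 days)
```

Because each photo is matched independently in this sketch, one SDOCT scan can be paired with several photographs taken around the same visit.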

Development of the Deep Learning Algorithm

We trained a deep learning algorithm to predict the SDOCT BMO-MRW from optic disc photographs; the target value, or variable to be predicted, was the SDOCT BMO-MRW measurement. Separate models were trained for the global MRW and for each of the following sectors: superior temporal, superior nasal, temporal, nasal, inferior temporal, and inferior nasal. Each training pair consisted of an optic disc photograph and its corresponding SDOCT BMO-MRW values. The photo-OCT pairs were split into a training plus validation sample (80%) and a test sample (20%). Random sampling was performed at the patient level, so that no patient contributed data to both the training and test samples. This prevented leakage of information between samples, which would otherwise bias estimates of test performance.
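A patient-level split like the one described can be sketched with the standard library alone (scikit-learn's `GroupShuffleSplit` offers similar behaviour). The function name and the fixed seed are illustrative assumptions. Because the 20% fraction applies to patients rather than pairs, the share of pairs in the test set is only approximately 20%: the Results report 1,742 of 9,282 pairs (about 19%) from 98 of 490 subjects (20%).

```python
import random
from typing import List, Sequence, Tuple


def split_by_patient(patient_ids: Sequence[str], test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_idx, test_idx) so that each patient lands in exactly one subset.

    patient_ids[i] is the patient who contributed photo-OCT pair i.
    """
    patients = sorted(set(patient_ids))
    random.Random(seed).shuffle(patients)
    n_test = round(len(patients) * test_fraction)
    test_patients = set(patients[:n_test])
    train_idx = [i for i, p in enumerate(patient_ids) if p not in test_patients]
    test_idx = [i for i, p in enumerate(patient_ids) if p in test_patients]
    return train_idx, test_idx


if __name__ == "__main__":
    ids = ["p1", "p1", "p2", "p3", "p3", "p3", "p4", "p5"]
    train, test = split_by_patient(ids)
    # No patient appears on both sides of the split.
    assert {ids[i] for i in train}.isdisjoint({ids[i] for i in test})
    print(len(train), len(test))
```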

All optic disc stereophotographs were first preprocessed for the deep learning algorithm. Each stereoscopic photograph was split into a pair of photos, one for each stereo view. The images were then downsampled to 256 × 256 pixels, and pixel values were scaled to the range 0 to 1. Data augmentation was used to increase the heterogeneity of the photographs, which helped mitigate the risk of overfitting and encouraged the algorithm to learn the most relevant image features. Augmentation consisted of random lighting (subtle changes in image balance and contrast), random rotation (up to 10 degrees), and random horizontal flips. Vertical flips were not performed, to preserve the orientation of the superior and inferior sectors.
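The preprocessing steps can be sketched on a toy single-channel image stored as nested lists. Several details are assumptions rather than facts from the study: the stereo pair is taken to be side by side, downsampling uses nearest-neighbour sampling (a production pipeline would more likely use an image library with an interpolating resize), and random rotation and lighting changes are left out. The horizontal flip is applied with probability 0.5, and no vertical flip is ever applied.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # hypothetical: one channel, rows of pixel values


def split_stereo(img: Image) -> Tuple[Image, Image]:
    """Split a side-by-side stereo photograph into left and right views."""
    half = len(img[0]) // 2
    return [row[:half] for row in img], [row[half:2 * half] for row in img]


def resize_nearest(img: Image, size: int = 256) -> Image:
    """Nearest-neighbour resampling to size x size pixels."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def scale_unit(img: Image) -> Image:
    """Map 8-bit intensities 0..255 onto 0..1."""
    return [[v / 255.0 for v in row] for row in img]


def random_hflip(img: Image, rng: random.Random) -> Image:
    """Mirror left-right with probability 0.5; vertical flips are never applied."""
    return [row[::-1] for row in img] if rng.random() < 0.5 else img


def preprocess_stereo(img: Image, rng: random.Random, size: int = 256) -> List[Image]:
    """Split, resize, scale and augment both views of one stereo photograph."""
    return [random_hflip(scale_unit(resize_nearest(view, size)), rng)
            for view in split_stereo(img)]


if __name__ == "__main__":
    toy = [[0, 255, 10, 20], [30, 40, 50, 60]]
    views = preprocess_stereo(toy, random.Random(0), size=4)
    print(len(views), len(views[0]), len(views[0][0]))  # 2 4 4
```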

We used a residual neural network (ResNet34) architecture that had been previously trained on the ImageNet dataset.31 ResNets allow relatively rapid training of very deep convolutional neural networks.32 These networks use identity shortcut connections that skip one or more layers, which mitigates the vanishing gradient problem when training deep networks. Because the recognition task in the current investigation differs from that of ImageNet, we first performed additional training with the last 2 layers unfrozen. Next, all layers were unfrozen and training was performed using differential learning rates. The network was trained with minibatch gradient descent using a batch size of 64. The learning rate was selected using the cyclical learning rate method, together with stochastic gradient descent with restarts.
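The two learning-rate ideas named above can be sketched without a deep learning framework. The schedule is the standard cosine annealing with warm restarts (SGDR); the three layer groups, the factor of 10 between them, and the parameter names are illustrative assumptions, since the text does not give the exact values or the learning-rate range found by the cyclical search. In a framework such as PyTorch, each rate would be assigned to one parameter group of the optimizer.

```python
import math
from typing import List


def sgdr_lr(step: int, lr_max: float, cycle_len: int,
            lr_min: float = 0.0, cycle_mult: int = 1) -> float:
    """Cosine-annealed learning rate with warm restarts.

    The rate decays from lr_max towards lr_min over each cycle, then jumps back
    to lr_max. With cycle_mult > 1 each cycle is longer than the last.
    """
    t, length = step, cycle_len
    while t >= length:  # find the position within the current cycle
        t -= length
        length *= cycle_mult
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * t / length))


def differential_lrs(base_lr: float, n_groups: int = 3,
                     factor: float = 10.0) -> List[float]:
    """Learning rates per layer group, earliest (most generic) group first.

    Pretrained early layers get smaller rates; the final group gets base_lr.
    """
    return [base_lr / factor ** (n_groups - 1 - i) for i in range(n_groups)]


if __name__ == "__main__":
    print(differential_lrs(1e-2))
    print([round(sgdr_lr(s, 1e-2, cycle_len=4), 5) for s in range(8)])
```

Smaller rates for the early groups keep the generic ImageNet features largely intact while the later layers adapt to optic disc photographs.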

To assess which areas of the optic disc photographs were most important in explaining the deep learning algorithm’s predictions, we built heatmaps over the input images using gradient-weighted class activation mapping (Grad-CAM).33, 34 For this analysis, we categorized SDOCT MRW into normal and abnormal categories based on the 95% cutoff from healthy eyes. These heatmaps indicate how important each location of the image is with respect to the class under consideration, allowing one to visualize the parts of the image that contribute most to the network’s classification.
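The Grad-CAM combination step can be sketched in plain Python once the activations of a convolutional layer and the gradients of the class score with respect to them are available (obtaining those requires the trained network and is not shown). Each feature map is weighted by its spatially averaged gradient, the weighted maps are summed, negative values are clipped by a ReLU, and the result is normalised for display. The choice of layer and the final upsampling to the 256 × 256 input are assumptions left out of the sketch; here the class score would correspond to the abnormal MRW category described above.

```python
from typing import List

FeatureMap = List[List[float]]  # one H x W map


def grad_cam(activations: List[FeatureMap], gradients: List[FeatureMap]) -> FeatureMap:
    """Grad-CAM heatmap from K activation maps A^k and gradients dy/dA^k."""
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradient over the map's spatial positions.
    alphas = [sum(map(sum, g)) / (h * w) for g in gradients]
    # Weighted sum of feature maps, with ReLU keeping only positive evidence.
    cam = [[max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, activations)))
            for j in range(w)] for i in range(h)]
    peak = max(max(row) for row in cam)
    # Normalise to 0..1 for display; an all-zero map is returned unchanged.
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


if __name__ == "__main__":
    acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
    print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```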

Statistical Analyses

We evaluated the performance of the deep learning algorithm for quantifying glaucomatous damage in optic disc photographs in the test sample by comparing the predictions of the algorithm with the actual SDOCT BMO-MRW global and sector values. Generalized estimating equations (GEE) were used to account for multiple measures within each patient. We calculated the mean absolute error of the predictions as well as Pearson’s correlation coefficient.
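For orientation, the point estimates of the two agreement measures can be computed as below. This sketch treats every photo-OCT pair as independent; it does not reproduce the GEE adjustment for multiple measures within each patient, so it yields only the unadjusted estimates. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def mae_and_pearson(pred: Sequence[float], obs: Sequence[float]) -> Tuple[float, float]:
    """Mean absolute error and Pearson's r between predictions and observations."""
    n = len(pred)
    mae = sum(abs(p - o) for p, o in zip(pred, obs)) / n
    mean_p, mean_o = sum(pred) / n, sum(obs) / n
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(pred, obs))
    ss_p = sum((p - mean_p) ** 2 for p in pred)
    ss_o = sum((o - mean_o) ** 2 for o in obs)
    return mae, cov / math.sqrt(ss_p * ss_o)


if __name__ == "__main__":
    predicted = [230.0, 180.0, 305.0, 150.0]  # toy MRW values in micrometres
    observed = [250.0, 170.0, 290.0, 120.0]
    mae, r = mae_and_pearson(predicted, observed)
    print(f"MAE = {mae:.1f} μm, r = {r:.2f}, R^2 = {r * r:.0%}")
```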

We also evaluated the relationship between the predicted and observed values of sectoral BMO-MRW and the corresponding sectoral visual field sensitivities according to the Garway-Heath structure-function map.30 Receiver operating characteristic (ROC) curves were used to compare the ability of the deep learning predictions from optic disc photographs and of the actual SDOCT BMO-MRW values to discriminate eyes with glaucomatous visual field loss from healthy eyes. ROC curves plot sensitivity against 1 – specificity across all cutoffs. The area under the ROC curve (AUC) was used to assess the diagnostic accuracy of each parameter, with 1.0 representing perfect discrimination and 0.5 representing chance discrimination. Sensitivity at a fixed specificity of 95% was also calculated. A bootstrap resampling procedure, with the cluster of data from each participant as the unit of resampling, was used to derive 95% confidence intervals and P-values. This procedure is commonly employed to adjust for multiple correlated measurements within the same subject,35 and was used here to adjust standard errors because multiple images of both eyes of the same subject were included.
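The ROC summaries and the subject-level bootstrap can be sketched as follows. Assumptions: the AUC uses the Mann-Whitney formulation; because thinner rims indicate damage, MRW values (predicted or measured) are negated before being passed in as scores; sensitivity at 95% specificity uses the lowest cutoff whose specificity reaches 0.95; and confidence intervals use the simple percentile method. The sketch covers the CI for a single AUC; one way to obtain a P-value for comparing two AUCs is to bootstrap their paired difference in the same subject-level fashion, though the study's exact procedure is not specified. Function names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Mann-Whitney AUC: P(score of glaucoma eye > score of healthy eye), ties = 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def sens_at_spec(scores: Sequence[float], labels: Sequence[int],
                 spec: float = 0.95) -> float:
    """Sensitivity at the lowest cutoff t with specificity >= spec (positive if score > t)."""
    neg = sorted(s for s, y in zip(scores, labels) if y == 0)
    pos = [s for s, y in zip(scores, labels) if y == 1]
    if not pos or not neg:
        raise ValueError("need both glaucoma and healthy eyes")
    for t in neg:
        if sum(q <= t for q in neg) / len(neg) >= spec:
            return sum(p > t for p in pos) / len(pos)
    return 0.0  # reached only if spec > 1


def cluster_bootstrap_ci(records: Sequence[Tuple[str, float, int]],
                         n_boot: int = 2000, seed: int = 0,
                         level: float = 0.95) -> Tuple[float, float]:
    """Percentile CI for the AUC, resampling whole subjects with replacement.

    records holds (subject_id, score, label) for every image of every eye.
    """
    by_subject: Dict[str, List[Tuple[float, int]]] = defaultdict(list)
    for subject, score, label in records:
        by_subject[subject].append((score, label))
    subjects = list(by_subject)
    rng = random.Random(seed)
    stats: List[float] = []
    for _ in range(n_boot):
        drawn = rng.choices(subjects, k=len(subjects))
        sample = [rec for s in drawn for rec in by_subject[s]]
        labels = [y for _, y in sample]
        if 0 < sum(labels) < len(labels):  # an AUC needs both classes
            stats.append(auc([x for x, _ in sample], labels))
    if not stats:
        raise ValueError("no bootstrap sample contained both classes")
    stats.sort()
    lo = stats[int((1 - level) / 2 * len(stats))]
    hi = stats[int((1 + level) / 2 * len(stats)) - 1]
    return lo, hi


if __name__ == "__main__":
    mrw = [310.0, 295.0, 280.0, 240.0, 190.0, 160.0]  # toy global MRW, μm
    glaucoma = [0, 0, 0, 1, 1, 1]
    subjects = ["a", "a", "b", "c", "c", "d"]
    scores = [-m for m in mrw]  # thinner rim -> higher score
    print(auc(scores, glaucoma), sens_at_spec(scores, glaucoma))
    print(cluster_bootstrap_ci(list(zip(subjects, scores, glaucoma)), n_boot=500))
```

Resampling whole subjects keeps all images of both eyes of a participant together, so the correlation between them is carried into every bootstrap replicate.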

RESULTS

The study included 9,282 pairs of optic disc photos and SDOCT optic nerve head scans from 927 eyes of 490 subjects, divided into training and validation (80%) and test sample (20%). The test sample consisted of 1,742 pairs of disc photos and SDOCTs from 184 eyes of 98 subjects. Table 1 shows demographic and clinical characteristics of the subjects and eyes in the training and test samples.

Table 1.

Demographic and clinical characteristics of the eyes and patients included in the training and test samples.

| | Normal | Suspect | Glaucoma |
|---|---|---|---|
| Training sample (7,540 pairs of disc photos and SDOCT optic nerve head scans from 743 eyes of 392 subjects) | | | |
| Number of eyes | 124 | 178 | 441 |
| Number of images | 1,046 | 1,924 | 4,570 |
| Age (years) | 65.6 ± 10.9 | 66.9 ± 11.2 | 71.3 ± 10.6 |
| Gender, % female | 67.8 | 58.2 | 52.0 |
| Race, % Caucasian | 58.1 | 70.8 | 69.6 |
| Race, % African-American | 41.9 | 29.2 | 30.4 |
| SAP MD (dB) | −0.88 ± 1.68 | −0.26 ± 1.63 | −7.12 ± 7.24 |
| SAP PSD (dB) | 2.18 ± 0.91 | 1.59 ± 0.24 | 6.37 ± 4.02 |
| Global BMO-MRW (μm) | 301.0 ± 52.7 | 250.1 ± 58.3 | 205.3 ± 64.7 |
| Test sample (1,742 pairs of disc photos and SDOCT optic nerve head scans from 184 eyes of 98 subjects) | | | |
| Number of eyes | 42 | 34 | 108 |
| Number of images | 340 | 432 | 970 |
| Age (years) | 68.3 ± 9.7 | 72.6 ± 9.1 | 72.0 ± 11.0 |
| Gender, % female | 66.7 | 68.6 | 51.7 |
| Race, % Caucasian | 61.9 | 81.4 | 80.8 |
| Race, % African-American | 38.1 | 18.6 | 19.2 |
| SAP MD (dB) | −0.39 ± 1.35 | −0.04 ± 1.35 | −5.62 ± 5.9 |
| SAP PSD (dB) | 2.14 ± 1.07 | 1.62 ± 0.20 | 5.11 ± 3.33 |
| Global BMO-MRW (μm) | 318.0 ± 51.2 | 230.1 ± 49.8 | 192.0 ± 60.2 |

SDOCT = Spectral Domain-Optical Coherence Tomography; SAP = Standard Automated Perimetry; MD = Mean Deviation; PSD = Pattern Standard Deviation; BMO-MRW = Minimum rim width relative to Bruch’s membrane opening; dB = Decibels; μm = microns

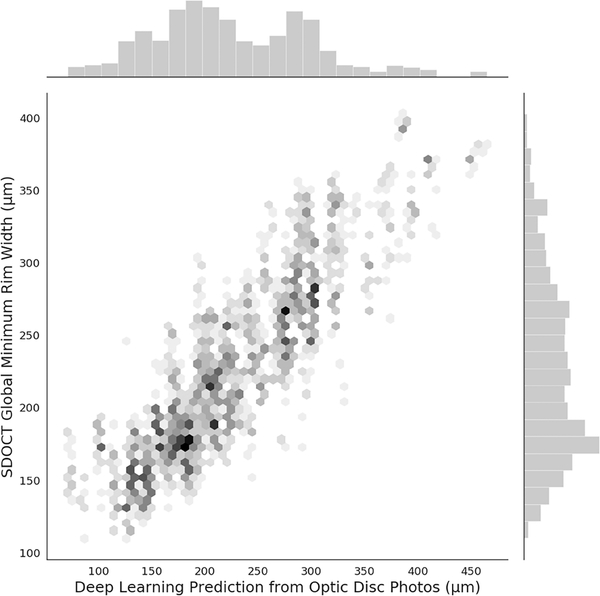

Table 2 shows the mean MRW predictions in the test set from the deep learning algorithm for global and sectoral areas, together with the corresponding actual SDOCT MRW measurements. For global MRW, the mean prediction from the deep learning algorithm was 228.8 ± 63.1 μm, whereas the mean actual SDOCT MRW was 226.0 ± 73.8 μm (P=0.415; GEE). Predictions and observed values were strongly correlated, with r=0.88 (R² = 77%; P<0.001; GEE). Figure 1 shows a scatterplot and corresponding histograms illustrating the relationship between deep learning predictions and actual SDOCT measurements. The mean absolute error was 27.8 μm, or approximately 12% of the mean observed global MRW. Pearson’s correlation coefficients ranged from 0.68 to 0.82 for the different sectors around the optic disc (all P<0.001; GEE).
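The two summary percentages in this paragraph can be checked against the global row of Table 2, assuming the relative error is taken with respect to the mean observed SDOCT value and that R² is the square of Pearson’s r:

$$
\frac{\mathrm{MAE}}{\overline{\mathrm{MRW}}_{\mathrm{SDOCT}}} = \frac{27.8\ \mu\mathrm{m}}{226.0\ \mu\mathrm{m}} \approx 0.123 \approx 12\%,
\qquad
R^2 = r^2 = 0.88^2 \approx 0.774 \approx 77\%
$$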

Table 2.

Mean global and sectoral minimum rim width relative to Bruch’s membrane opening values obtained from spectral-domain optical coherence tomography and corresponding mean values for the predictions from the deep learning algorithm in the test sample. The table also shows the Pearson’s correlation coefficient and mean absolute error between predictions and observations from the test sample.

| Region | Deep Learning Prediction from Fundus Photos, Mean ± SD (μm) | SDOCT BMO-MRW, Mean ± SD (μm) | P for difference in means | r (R²) | P for correlation | MAE (μm) |
|---|---|---|---|---|---|---|
| Global | 228.8 ± 63.1 | 226.0 ± 73.8 | 0.415 | 0.88 (77%) | <0.001 | 27.8 |
| Temporal inferior | 221.8 ± 78.9 | 228.4 ± 91.3 | 0.141 | 0.81 (66%) | <0.001 | 41.7 |
| Temporal superior | 206.8 ± 60.9 | 208.6 ± 75.7 | 0.667 | 0.78 (62%) | <0.001 | 36.8 |
| Temporal | 165.9 ± 34.8 | 163.1 ± 54.7 | 0.487 | 0.68 (46%) | <0.001 | 32.2 |
| Nasal superior | 253.3 ± 72.9 | 254.6 ± 90.4 | 0.784 | 0.82 (67%) | <0.001 | 38.8 |
| Nasal inferior | 273.6 ± 77.8 | 277.6 ± 104.3 | 0.522 | 0.78 (60%) | <0.001 | 51.9 |
| Nasal | 257.7 ± 71.2 | 253.9 ± 90.1 | 0.459 | 0.82 (68%) | <0.001 | 40.4 |

SD = Standard Deviation; MAE = Mean Absolute Error; SDOCT = Spectral Domain-Optical Coherence Tomography; BMO-MRW = Minimum rim width relative to Bruch’s membrane opening; μm = microns

Figure 1.

Scatterplot illustrating the relationship between predictions obtained by the deep learning algorithm evaluating optic disc photographs and actual global minimum rim width relative to Bruch’s membrane opening (BMO-MRW) thickness measurements from spectral domain-optical coherence tomography (SDOCT). Data is from the independent test set.

Deep learning global MRW predictions were significantly associated with SAP MD (r = 0.46; P<0.001; GEE). SDOCT global MRW showed a similar association with SAP MD (r = 0.49; P<0.001; GEE). Table 3 shows the associations between sectoral deep learning MRW predictions and the visual field sensitivities of the corresponding sectors according to the structure-function map; these associations were similar in strength to those observed for the actual SDOCT MRW measurements. Table 4 shows the ROC curve areas for discriminating glaucomatous from healthy eyes, which were also similar for deep learning predictions and actual SDOCT measurements. For global MRW, deep learning predictions had an ROC curve area of 0.945 (95% CI: 0.874 – 0.980) versus 0.933 (95% CI: 0.856 – 0.975) for SDOCT measurements.

Table 3.

Correlations with 95% confidence interval between visual field sensitivity by sectors and the corresponding minimum rim width relative to Bruch’s membrane opening deep learning predictions and actual spectral-domain optical coherence tomography values.

| Region | Deep Learning BMO-MRW Prediction (95% CI) | SDOCT BMO-MRW (95% CI) |
|---|---|---|
| Temporal inferior | 0.52 (0.42 – 0.63) | 0.59 (0.49 – 0.70) |
| Temporal superior | 0.43 (0.32 – 0.54) | 0.50 (0.39 – 0.61) |
| Temporal | 0.38 (0.22 – 0.54) | 0.41 (0.29 – 0.52) |
| Nasal superior | 0.40 (0.30 – 0.50) | 0.42 (0.31 – 0.53) |
| Nasal inferior | 0.39 (0.28 – 0.50) | 0.48 (0.37 – 0.60) |
| Nasal | 0.36 (0.23 – 0.50) | 0.38 (0.27 – 0.50) |

SDOCT = Spectral Domain-Optical Coherence Tomography; BMO-MRW = Minimum rim width relative to Bruch’s membrane opening; CI = Confidence Interval

Table 4.

Areas under the receiver operating characteristic curves to discriminate eyes with glaucoma from healthy eyes in the test sample for spectral domain optical coherence tomography minimum rim width relative to Bruch’s membrane opening and the corresponding predictions from the deep learning algorithm assessing fundus photographs.

| Region | Deep Learning BMO-MRW Prediction (95% CI) | SDOCT BMO-MRW (95% CI) | P |
|---|---|---|---|
| Global | 0.945 (0.874 – 0.980) | 0.933 (0.856 – 0.975) | 0.587 |
| Temporal inferior | 0.939 (0.885 – 0.973) | 0.927 (0.843 – 0.969) | 0.632 |
| Temporal superior | 0.935 (0.879 – 0.970) | 0.904 (0.822 – 0.969) | 0.334 |
| Temporal | 0.915 (0.829 – 0.962) | 0.843 (0.734 – 0.927) | 0.180 |
| Nasal superior | 0.949 (0.886 – 0.980) | 0.927 (0.848 – 0.974) | 0.365 |
| Nasal inferior | 0.942 (0.884 – 0.979) | 0.952 (0.894 – 0.986) | 0.689 |
| Nasal | 0.919 (0.837 – 0.973) | 0.898 (0.794 – 0.958) | 0.447 |

ROC = Receiver operating characteristic; SDOCT = Spectral Domain-Optical Coherence Tomography; BMO-MRW = minimum rim width relative to Bruch’s membrane opening

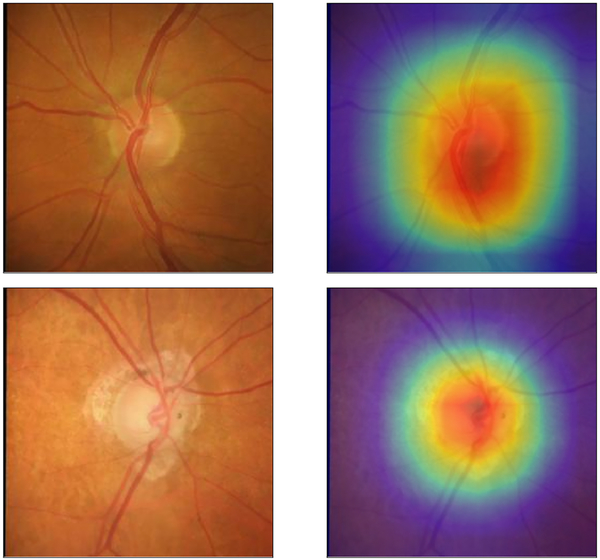

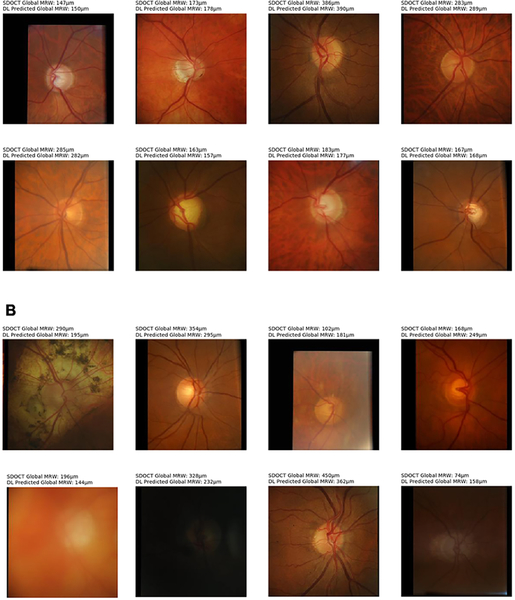

Figure 2 illustrates examples of optic disc photographs and the corresponding activation maps (heatmaps) of the deep learning network for a normal (top) and a glaucomatous (bottom) eye. The activations were strongest in the area of the optic nerve, indicating that this region was the most important for the network’s predictions. Figure 3 shows random examples of optic disc photos from the test sample along with the corresponding global MRW deep learning predictions and SDOCT measurements. For illustrative purposes, the random examples were drawn from a sample where predictions agreed closely with observations (within 20μm) and from a sample where predictions disagreed with observations (difference greater than 50μm).

Figure 2.

Activation heatmaps showing the areas of the optic disc photograph that were most important for the deep learning algorithm predictions in an example of a healthy (Top row) and glaucomatous (Bottom row) eye.

Figure 3.

Random examples of optic disc photos from the test sample along with the corresponding global minimum rim width (MRW) relative to Bruch’s membrane opening deep learning (DL) predictions and spectral domain-optical coherence tomography (SDOCT) measurements. (Top) Random examples where predictions agreed closely with observations (within 20μm). (Bottom) Random examples where predictions disagreed with observations (difference greater than 50μm).

DISCUSSION

In this study, we developed and validated a novel deep learning neural network capable of quantifying the amount of neuroretinal damage on an optic disc photograph. We accomplished this by training the algorithm to predict the SDOCT BMO-MRW when assessing photos of glaucomatous optic nerves, glaucoma suspects and normal nerves. We also demonstrated that these predicted structural values were significantly correlated with visual field loss on standard automated perimetry. To the best of our knowledge, this is the first investigation to demonstrate that a deep learning network can accurately predict the BMO-MRW when assessing an optic disc photograph.

The task of grading disc photographs is notoriously laborious and time-intensive for human graders. Deep learning algorithms may offer an alternative means of interpreting disc photos that is both expedient and accurate, and such algorithms may eventually eliminate the reliance on human graders. However, currently published machine learning algorithms developed for the detection of glaucoma on optic disc photographs were trained using photos that had been graded by humans.17, 18 The reliance on subjective gradings for a reference standard presents several dilemmas. Human gradings of optic disc photos have been shown to have poor reproducibility14, 15 and interrater reliability.8, 15, 16 Moreover, misclassification can occur: clinicians tend to underappreciate glaucomatous damage in eyes with small optic discs and myopic nerves but overdiagnose glaucoma in eyes with large physiologic cups.17 Thus, if subjective human gradings are used as the reference standard to train a neural network, the resulting deep learning algorithm will tend to reproduce similar diagnostic errors.

Using an SDOCT parameter such as BMO-MRW as a reference standard for training the classifier offers several unique advantages. First, the data is objective rather than subjective, and bypasses the need for human gradings. Second, BMO-MRW is known to have high reproducibility,36 unlike human gradings of photos.14, 15 Third, BMO-MRW is highly accurate for detecting glaucoma on SDOCT.25 Previous groups have also suggested that certain sectors of the BMO-MRW, such as the superotemporal, superonasal and nasal sectors, may perform better than regional RNFL thickness for the diagnosis of glaucoma.37 We found that the superotemporal, superonasal, inferotemporal and inferonasal sector values had high ROC curve areas for discriminating eyes with glaucomatous field loss from healthy eyes. Future studies should investigate whether neural networks trained with SDOCT BMO-MRW perform better than those trained with other parameters such as RNFL thickness.

BMO-MRW may also be advantageous when the optic nerve is difficult to grade. For example, a previous study has shown that the use of BMO-MRW may improve the structure-function correlation in eyes with high myopia.25 This is important because Li et al.’s deep learning algorithm, which was trained with subjectively graded photographs, tended to underdiagnose glaucoma in high myopia, generating false negatives.17 Similarly, eyes with physiologically enlarged cups or pathologic myopia were frequently misclassified as glaucomatous, producing false positive results in their study.17 Since our deep learning algorithm was trained using SDOCT BMO-MRW, we suspect that it may have better discriminatory ability in such challenging cases than algorithms trained on human gradings of photos. However, additional work is needed to test this hypothesis. Finally, several groups have suggested that BMO-MRW may be better able to detect mild glaucoma22, 29 and glaucoma suspects24 than other SDOCT parameters. Thus, neural networks trained using SDOCT BMO-MRW may be of particular value as a screening measure to detect preperimetric glaucoma before the onset of visual field loss.

Although SDOCT has become the nonpareil method for quantifying structural damage from glaucoma, the technology is currently too expensive and impractical to transport for use in screening in low-resource settings. In contrast, fundus photographs can be acquired through a variety of affordable and portable means, from relatively inexpensive nonmydriatic fundus cameras38 to cell phones.39 However, numerous studies have found that human interpretation of monoscopic disc photos has poor sensitivity for glaucoma, as low as 50%.8–13 Such reports have called into question the practical utility of relying on photos for glaucoma screening. Although screening with SDOCT is not currently feasible, we have shown that a deep learning algorithm trained to predict the BMO-MRW from fundus photographs shows very high correlation with the actual SDOCT values. Moreover, the correlation of the algorithm’s predictions with visual field loss was similar to that found for the actual SDOCT measurements. This provides additional validation of the algorithm by demonstrating its ability to discriminate between glaucomatous field loss and normal fields. This is particularly important since other recently published deep learning algorithms trained on subjectively graded optic disc photographs did not correlate their classifications with visual dysfunction or any other clinically relevant outcome.11, 12, 17, 18

Finally, because the algorithm we developed provides continuous predictions on the BMO-MRW scale, these values may be useful for detecting longitudinal change over time. This is a distinct advantage over classification algorithms that provide only a binary assessment of the optic disc as glaucomatous or not. Algorithms trained to produce quantitative data may make it possible to detect progression in patients followed over time with fundus photographs in low-resource settings where SDOCT is not available. Future longitudinal investigations will evaluate the feasibility of this approach.

Our study has several limitations. We did not remove low-quality fundus photographs, which likely introduced some of the unexplained variability in the algorithm’s predictions. On the other hand, photos obtained through screening programs and in a clinical setting are likely to exhibit a range of quality, and algorithms trained only on optimal photos may not be generalizable to real-world settings. We expect that our algorithm will perform well at quantifying neuroretinal damage on optic disc photographs acquired in a similar clinical setting. However, performance in a screening setting may differ, especially in racial and ethnic groups not represented in this study. External validation in different screening populations will be the subject of future studies. Variability of BMO-MRW on SDOCT may also contribute to some of the unexplained variability of the algorithm’s predictions. Future studies will compare algorithms trained by different SDOCT parameters to algorithms trained by subjectively graded fundus photos. Combining two or more parameters may also further improve the discriminatory ability of the algorithm.40

In summary, we used the BMO-MRW from SDOCT to train a deep learning algorithm to predict quantitative data about the degree of neuroretinal damage when assessing an optic disc photograph. The predicted quantitative data was correlated with the BMO-MRW value from SDOCT and showed high diagnostic discrimination for glaucomatous visual field loss. This approach overcomes substantial limitations of algorithms trained by human graders. These findings may have important implications for the diagnostic value of fundus photographs for glaucoma screening, especially in low-resource settings.

Supplementary Material

Supplemental Figure 1. Spectral domain-optical coherence tomography (SDOCT) scans from a patient with glaucoma; Bruch’s membrane opening (BMO) is outlined by red dots. Solid white lines indicate the section of each B-scan shown in detail. The minimum rim width relative to the BMO (BMO-MRW) is indicated by a green line if it is normal or a red line if it is thinner than the normative database.

ACKNOWLEDGEMENTS/DISCLOSURE

a. Funding support: Supported in part by National Institutes of Health/National Eye Institute grant EY025056 (FAM), EY021818 (FAM), and the Heed Foundation (ACT). The funding organizations had no role in the design or conduct of this research.

b. Financial Disclosures: A.A.J.: Conselho Nacional de Desenvolvimento Científico e Tecnológico (F). F.A.M.: Alcon Laboratories (C, F, R), Allergan (C, F), Bausch&Lomb (F), Carl Zeiss Meditec (C, F, R), Heidelberg Engineering (F), Merck (F), nGoggle Inc. (F), Sensimed (C), Topcon (C), Reichert (C, R). A.C.T.: none.

Footnotes

Supplemental Material available at AJO.com.

REFERENCES

1. Quigley HA, Broman AT. The number of people with glaucoma worldwide in 2010 and 2020. Br J Ophthalmol 2006;90(3):262–7.

2. Budenz DL, Barton K, Whiteside-de Vos J, et al. Prevalence of glaucoma in an urban West African population: the Tema Eye Survey. JAMA Ophthalmol 2013;131(5):651–8.

3. Hennis A, Wu SY, Nemesure B, et al. Awareness of incident open-angle glaucoma in a population study: the Barbados Eye Studies. Ophthalmology 2007;114(10):1816–21.

4. Sathyamangalam RV, Paul PG, George R, et al. Determinants of glaucoma awareness and knowledge in urban Chennai. Indian J Ophthalmol 2009;57(5):355–60.

5. Weinreb RN, Aung T, Medeiros FA. The pathophysiology and treatment of glaucoma: a review. JAMA 2014;311(18):1901–11.

6. Owsley C, McGwin G Jr, Lee DJ, et al. Diabetes eye screening in urban settings serving minority populations: detection of diabetic retinopathy and other ocular findings using telemedicine. JAMA Ophthalmol 2015;133(2):174–81.

7. Maa AY, Patel S, Chasan JE, et al. Retrospective Evaluation of a Teleretinal Screening Program in Detecting Multiple Nondiabetic Eye Diseases. Telemed J E Health 2017;23(1):41–8.

8. Chan HH, Ong DN, Kong YX, et al. Glaucomatous optic neuropathy evaluation (GONE) project: the effect of monoscopic versus stereoscopic viewing conditions on optic nerve evaluation. Am J Ophthalmol 2014;157(5):936–44.

9. Lichter PR. Variability of expert observers in evaluating the optic disc. Trans Am Ophthalmol Soc 1976;74:532–72.

10. Kumar S, Giubilato A, Morgan W, et al. Glaucoma screening: analysis of conventional and telemedicine-friendly devices. Clin Exp Ophthalmol 2007;35(3):237–43.

11. Maa AY, Evans C, DeLaune WR, et al. A novel tele-eye protocol for ocular disease detection and access to eye care services. Telemed J E Health 2014;20(4):318–23.

12. Marcus DM, Brooks SE, Ulrich LD, et al. Telemedicine diagnosis of eye disorders by direct ophthalmoscopy. A pilot study. Ophthalmology 1998;105(10):1907–14.

13. Yogesan K, Constable IJ, Barry CJ, et al. Evaluation of a portable fundus camera for use in the teleophthalmologic diagnosis of glaucoma. J Glaucoma 1999;8(5):297–301.

14. Varma R, Steinmann WC, Scott IU. Expert agreement in evaluating the optic disc for glaucoma. Ophthalmology 1992;99(2):215–21.

15. Abrams LS, Scott IU, Spaeth GL, et al. Agreement among optometrists, ophthalmologists, and residents in evaluating the optic disc for glaucoma. Ophthalmology 1994;101(10):1662–7.

16. Jampel HD, Friedman D, Quigley H, et al. Agreement among glaucoma specialists in assessing progressive disc changes from photographs in open-angle glaucoma patients. Am J Ophthalmol 2009;147(1):39–44.e1.

17. Li Z, He Y, Keel S, et al. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology 2018;125(8):1199–206.

18. Ting DSW, Cheung CY, Lim G, et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA 2017;318(22):2211–23.

19. Gulshan V, Peng L, Coram M, et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016;316(22):2402–10.

20. Raju M, Pagidimarri V, Barreto R, et al. Development of a Deep Learning Algorithm for Automatic Diagnosis of Diabetic Retinopathy. Stud Health Technol Inform 2017;245:559–63.

21. Takahashi H, Tampo H, Arai Y, et al. Applying artificial intelligence to disease staging: Deep learning for improved staging of diabetic retinopathy. PLoS One 2017;12(6):e0179790.

22. Chauhan BC, O’Leary N, AlMobarak FA, et al. Enhanced detection of open-angle glaucoma with an anatomically accurate optical coherence tomography-derived neuroretinal rim parameter. Ophthalmology 2013;120(3):535–43.

23. Reis AS, O’Leary N, Yang H, et al. Influence of clinically invisible, but optical coherence tomography detected, optic disc margin anatomy on neuroretinal rim evaluation. Invest Ophthalmol Vis Sci 2012;53(4):1852–60.

24. Pollet-Villard F, Chiquet C, Romanet JP, et al. Structure-function relationships with spectral-domain optical coherence tomography retinal nerve fiber layer and optic nerve head measurements. Invest Ophthalmol Vis Sci 2014;55(5):2953–62.

25. Reznicek L, Burzer S, Laubichler A, et al. Structure-function relationship comparison between retinal nerve fibre layer and Bruch’s membrane opening-minimum rim width in glaucoma. Int J Ophthalmol 2017;10(10):1534–8.

26. Gmeiner JM, Schrems WA, Mardin CY, et al. Comparison of Bruch’s Membrane Opening Minimum Rim Width and Peripapillary Retinal Nerve Fiber Layer Thickness in Early Glaucoma Assessment. Invest Ophthalmol Vis Sci 2016;57(9):OCT575–84.

27. Danthurebandara VM, Vianna JR, Sharpe GP, et al. Diagnostic Accuracy of Glaucoma With Sector-Based and a New Total Profile-Based Analysis of Neuroretinal Rim and Retinal Nerve Fiber Layer Thickness. Invest Ophthalmol Vis Sci 2016;57(1):181–7.

28. Gardiner SK, Ren R, Yang H, et al. A method to estimate the amount of neuroretinal rim tissue in glaucoma: comparison with current methods for measuring rim area. Am J Ophthalmol 2014;157(3):540–9.e1–2.

29. Gardiner SK, Boey PY, Yang H, et al. Structural Measurements for Monitoring Change in Glaucoma: Comparing Retinal Nerve Fiber Layer Thickness With Minimum Rim Width and Area. Invest Ophthalmol Vis Sci 2015;56(11):6886–91.

30. Garway-Heath DF, Poinoosawmy D, Fitzke FW, Hitchings RA. Mapping the visual field to the optic disc in normal tension glaucoma eyes. Ophthalmology 2000;107(10):1809–15.

31. Deng J, Dong W, Socher R, et al. ImageNet: a large-scale hierarchical image database. Paper presented at: IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2009.

32. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. ArXiv e-prints 2015. https://ui.adsabs.harvard.edu/#abs/2015arXiv151203385H. Accessed December 01, 2015.

33. Selvaraju RR, Cogswell M, Das A, et al. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. ArXiv e-prints 2016. https://ui.adsabs.harvard.edu/#abs/2016arXiv161002391S. Accessed October 01, 2016.

34. Selvaraju RR, Das A, Vedantam R, et al. Grad-CAM: Why did you say that? ArXiv e-prints 2016. https://ui.adsabs.harvard.edu/#abs/2016arXiv161107450S. Accessed November 01, 2016.

35. Medeiros FA, Sample PA, Zangwill LM, et al. A statistical approach to the evaluation of covariate effects on the receiver operating characteristic curves of diagnostic tests in glaucoma. Invest Ophthalmol Vis Sci 2006;47(6):2520–7.

36. Reis ASC, Zangalli CES, Abe RY, et al. Intra- and interobserver reproducibility of Bruch’s membrane opening minimum rim width measurements with spectral domain optical coherence tomography. Acta Ophthalmol 2017;95(7):e548–e55.

37. Kim SH, Park KH, Lee JW. Diagnostic Accuracies of Bruch Membrane Opening-minimum Rim Width and Retinal Nerve Fiber Layer Thickness in Glaucoma. J Korean Ophthalmol Soc 2017;58(7):836–45.

38. Miller SE, Thapa S, Robin AL, et al. Glaucoma Screening in Nepal: Cup-to-Disc Estimate With Standard Mydriatic Fundus Camera Compared to Portable Nonmydriatic Camera. Am J Ophthalmol 2017;182:99–106.

39. Fleming C, Whitlock EP, Beil T, et al. Screening for primary open-angle glaucoma in the primary care setting: an update for the US preventive services task force. Ann Fam Med 2005;3(2):167–70.

40. Park K, Kim J, Lee J. The Relationship Between Bruch’s Membrane Opening-Minimum Rim Width and Retinal Nerve Fiber Layer Thickness and a New Index Using a Neural Network. Transl Vis Sci Technol 2018;7(4):14.
