Abstract

The identification of discriminative features from information-rich data with the goal of clinical diagnosis is crucial in the field of biomedical science. In this context, many machine-learning techniques have been widely applied and achieved remarkable results. However, disease, especially cancer, is often caused by a group of features with complex interactions. Unlike traditional feature selection methods, which only focused on finding single discriminative features, a multilayer feature subset selection method (MLFSSM), which employs randomized search and multilayer structure to select a discriminative subset, is proposed herein. In each level of this method, many feature subsets are generated to assure the diversity of the combinations, and the weights of features are evaluated on the performances of the subsets. The weight of a feature would increase if the feature is selected into more subsets with better performances compared with other features on the current layer. In this manner, the values of feature weights are revised layer-by-layer; the precision of feature weights is constantly improved; and better subsets are repeatedly constructed by the features with higher weights. Finally, the topmost feature subset of the last layer is returned. The experimental results based on five public gene datasets showed that the subsets selected by MLFSSM were more discriminative than the results by traditional feature methods including LVW (a feature subset method used the Las Vegas method for randomized search strategy), GAANN (a feature subset selection method based genetic algorithm (GA)), and support vector machine recursive feature elimination (SVM-RFE). Furthermore, MLFSSM showed higher classification performance than some state-of-the-art methods which selected feature pairs or groups, including top scoring pair (TSP), k-top scoring pairs (K-TSP), and relative simplicity-based direct classifier (RS-DC).

1. Introduction

Identifying disease types/subtypes from biomedical data is very important to understand diseases and develop drugs, among other important functions. In this context, many machine-learning techniques, including support vector machine (SVM) [1], random forest (RF) [2], and k-nearest-neighbor (KNN) [3], have been applied in this field with remarkable performance [4, 5].

Given that biomedical data are expensive to generate and difficult to obtain, a small number of samples with thousands of features would distort the distribution of the real data. Various selection methods had been proposed to remove the insignificant features and improve the prediction performance of the models [6–9]. Depending on the way to combine the search of feature subsets with the construction of classification model, feature selection methods are divided into three categories: filter methods, wrapper methods, and embedded methods [10]. Filter methods focus on univariate or multivariate analysis and ignore the interaction with classifier. T-test, relief [11], correlation-based feature selection (CFS) [12], and fast correlation-based feature selection (FCBF) [13] are the common filter methods. Different from filter methods, wrapper methods use classification models to evaluate the selected feature subsets, including sequential search [14], genetic algorithms (GA) [15], and estimation of distribution algorithm (EDA) [16]. Embedded methods select optimal feature subsets and construct suitable classification models simultaneously. Support vector machine recursive feature elimination (SVM-RFE) is a typically famous embedded feature selection method [17].

As the number of feature subsets would increase exponentially with the number of features, it is impractical to evaluate all the subsets in wrapper or embedded methods. Search strategies had been proposed. Among these strategies, randomized and deterministic methods were the most frequently used [18–20]. Randomized methods search the subsets with some kind of randomness, including the Las Vegas wrapper (LVW) [21], genetic algorithm partial least squares (GAPLS) [22], and Monte Carlo-based uninformative variable elimination in partial least squares [23], while deterministic methods do with some heuristic search ways, including sequential forward selection (SFS) and sequential backward selection (SBS).

However, deterministic methods were often prone to local optimum while randomized search method always returned the ranking of features. Because the upper limit on the number of features in a subset was difficult to be predetermined, the complexity of randomized search methods would increase exponentially with the upper limit. In this paper, we propose a wrapper feature selection method with randomized search strategy. Unlike other randomized search methods, the goal of our method is to select a feature subset. We employ randomized search and multilayer structure to constantly adjust the weights of each feature. First, all the features are assigned same weights. Many feature subsets are generated based on the weights, and the classification models are constructed with SVM for each subset. The weight of a feature should increase if it is selected into more subsets with better performance than other features on the current layer. In this manner, the weights of features are revised layer-by-layer, precision of feature weights are constantly improved, and better subsets are repeatedly constructed by the features with higher weights. Finally, the topmost feature subset of the last layer is returned as the result. Herein, our multilevel feature subset selection method (MLFSSM) is compared with LVW, GAANN [24], SVM-RFE, and other feature selection methods using publicly available cancer datasets.

2. Methods

We assumed a dataset X (N × M), where N is the number of samples and M is the number of features. The feature set is denoted as F = {f1, f2,…, fM}, and the class label set C is denoted as C = {−1,1}.

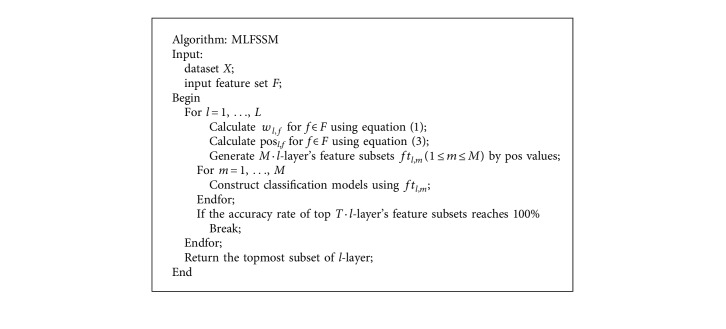

In this article, we propose a multilayer feature subset selection method named MLFSSM. First, the features are set to the same weight and picked into the subsets based on the weights. To obtain diverse feature combinations, many subsets are generated to assure the diversity of the combinations. Subsequently, classification models are constructed on each subset. According to the accuracies of the models, the weight of a feature should be increased if it is selected into more subsets with better performance than other features on the current layer. In this way, the weights of features are recalculated, and new subsets are regenerated using the weights on the following layer. The process is repeated until the terminal condition is met. The subset with the highest classification accuracy among those on the last layer is returned as the final result. Here, (1) how to calculate feature weights, (2) how to select features into the subsets, and (3) how to decide the terminal condition are the three key issues.

-

(1)Considering the issue of calculation of feature weights, the features in the subset might be more discriminative than others when a feature subset achieves a high accuracy rate, and their weights should be increased for the next subset selection. Furthermore, the weights of features calculated on the former layers are involved in the computation of the feature weights on the current layer. The weight of feature f on layer l is calculated as follows:

(1) For diversity feature subsets, the total number of subsets generated on layer l equals to the number of features M. ftl,m denotes the mth subset on the lth layer, and accuftl,m is the classification accuracy of ftl,m on layer l. w l−1,f is the weight of feature f on the former layer l − 1. And

| (2) |

Each subset ftl,m (1 ≤ m ≤ M) includes ls features (the length of the subset). On the first layer, we set each subset contains nonduplicate features, and the occurrence frequency of each feature is equal. On the following layers, M subsets were constructed by the revised feature weights, while duplicate features might be contained. We will discuss how to decide the appropriate value of ls in the experimental section.

Furthermore, apt values of α and p might prevent the program from getting stuck in a local optimum and learn enough information from the former layers at the same time. In the experimental section, we will discuss how to choose the appropriate value of the parameters in the experimental section.

(2) For the second issue, the probability of feature f being selected on layer l is

| (3) |

From equation (3), it is observed that features have equal probabilities 1/M for selection on the first layer. With the weights being revised, the features with higher weights would appear in more subsets for their larger posibility values than other features.

(3) For the terminate condition issue, although more layers might achieve higher performance, the running time will sharply increase and the performance improvement will slow down or stabilize with the number of layers. The algorithm could be terminated when the accuracy rate of the top T feature subset reaches 100% or when the number of layers reaches L. Here, we suggest T = 20 and L = 20 for tolerable running time and enough stability results.

Algorithm 1 lists the description of the MLFSSM algorithm.

Algorithm 1.

Description of the MLFSSM algorithm.

3. Experiments

To validate the effectiveness of MLFSSM, we attempt to discuss three issues:

In this method, three parameters affect the performance of MLFSSM, including weight ratio α and power number p in equation (1) and the length of every subset ls.

The performances of MLFSSM, LVW, GAANN, SVM-RFE, and other traditional feature selection methods are compared to assess whether MLFSSM is more effective than the other methods.

The performance of MLFSSM is compared with those of the three methods, including TSP, K-TSP, and RS-DC. We further validate whether the subset selected by MLFSSM is more effective than the pairs or groups by the other methods.

In this study, SVM is used for classification. The codes of SVM were downloaded from LibSVM (available at http://www.csie.ntu.edu.tw/∼cjlin/libsvm). RBF kernel was used for good bioinformatics performance, and the penalty parameter C was set to 1 in SVM-RFE and MLFSSM. All the experiments used 20 times five-fold cross validation. Five public gene datasets were used in the three experiments. Table 1 lists the details of the datasets.

Table 1.

Details of five public datasets for comparison.

3.1. Effects of Parameters

The default values and the ranges of the three parameters are listed in Table 2. To study the effects of the parameters, the value of one parameter was changed at a time, while the values of other parameters were set to the default values in the experiments.

Table 2.

Summary of the parameter setting.

| Parameters | Default values | Range |

|---|---|---|

| Weight ratio α | 0.2 | 0.1, 0.2, 0.4, 0.6, 0.8, 0.9 |

| Power number p | 32 | 1, 2, 4, 8, 16, 32, 64, 128, 256, 512 |

| Feature subset length ls | 21 | 1, 5, 11, 15, 21, 25, 31, 35, 41, 45, 51 |

3.1.1. Effects of Weight Ratio α

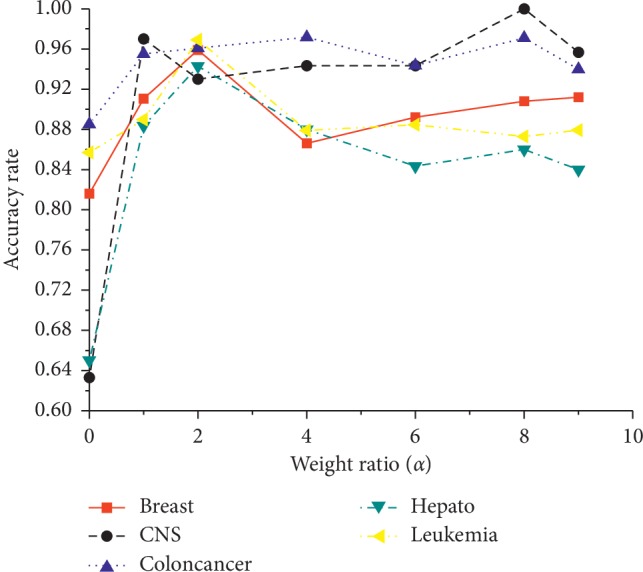

Figure 1 shows the effects of the ratios α in MLFSSM which ranges from 0.1 to 0.9. It is observed that the values are usually lower than others when α = 0.1 or 0.9, and the accuracies at α = 0.2 show well above the ones at other values in most of the datasets. The possible reason could be that α = 0.2 could get a globally optimal solution and avoid falling into local optimum in MLFSSM. So α = 0.2 is suggested as the default value.

Figure 1.

Effects of weight ratio α.

3.1.2. Effects of Power Number p

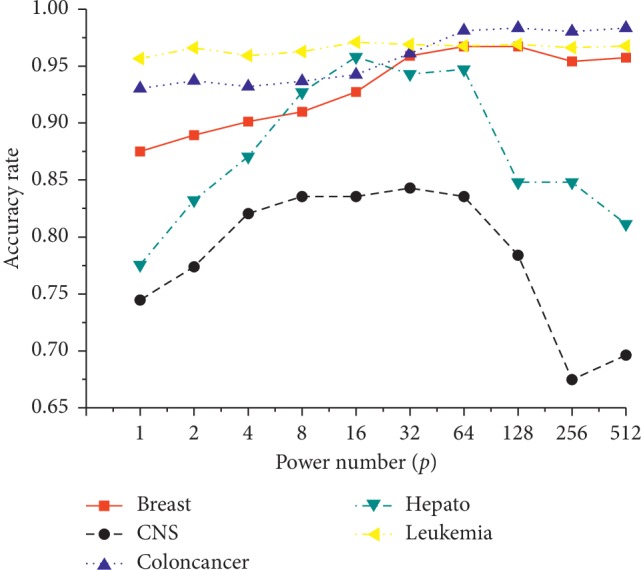

Figure 2 shows the effects of power number p, which ranges from 1 to 512. The accuracy usually increases when p ≤ 32 with the highest accuracies at p=32. Subsequently, the accuracy often decreases when p > 32. This might be because smaller or larger p values fail to find the global optimum features. Thus, p=32 is suggested as the default value.

Figure 2.

Effects of power number p.

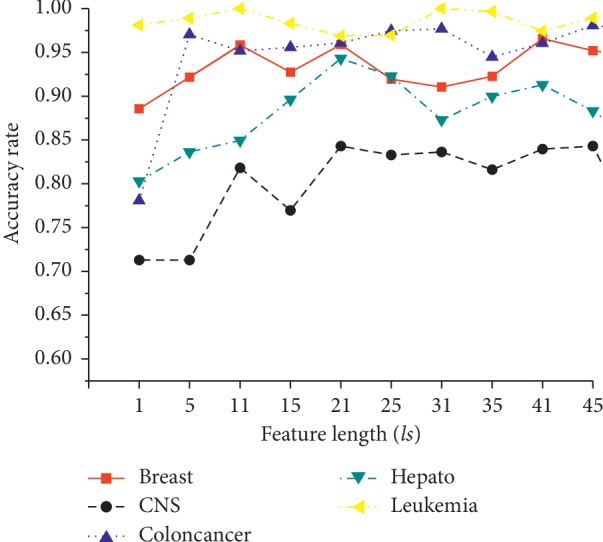

3.1.3. Effects of Feature Subset Length ls

Figure 3 shows the effects of feature subset length ls, which ranges from 1 to 51. The accuracies at ls = 21 have showed better performances than the ones at other values in all five datasets. The possible reason could be that a modest number of features not only include informative features but also exclude noise features. Therefore, ls = 21 is suggested as the default value.

Figure 3.

Effects of feature subset length ls.

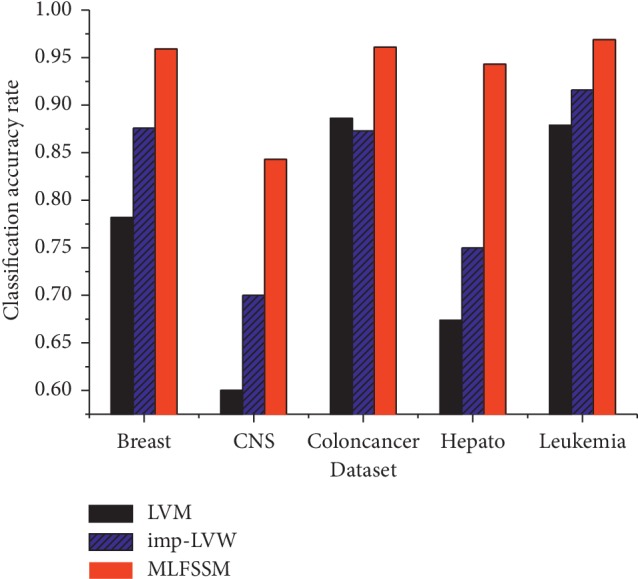

3.2. Comparison with LVW and Its Improved Method

LVW is a typical wrapper feature selection method [21]. It was proposed by Liu and Setiono which used the Las Vegas method for randomized search strategy to select feature subsets. The description of LVW is listed in Supplementary . For comparisons, we set T = M ∗ 21 as one termination condition of LVW.

Furthermore, we improved LVW with constantly revised weights in randomized search procedure of LVW named imp-LVW. In imp-LVW, feature weights were equal to each other firstly. Furthermore, the weight of a feature would increase if the current subset including the feature has better performance than the previous subsets. The description of imp-LVW is listed in Supplementary . Similar to LVW, we set T = M ∗ 21 as one termination condition of imp-LVW.

Figure 4 shows the classification accuracy rates of MLFSSM, LVW, and imp-LVW in the five public datasets. As three wrapper feature selection methods, LVW always has the lowest values in the methods, imp-LVW shows the better performance than LVW, and MLFSSM displays the best performance in the methods. The possible reason might be the constantly revised weights bring improvement in the performance. Because the weights of features stay consistent over time in LVW, the optimal subset is difficult to be found in a limited time for large dimensions of biomedical data. Imp-LVW changes the weights continuously. However, imp-LVW only focuses on the performance of the current subset which might fall into the local optimum. MLFSSM constantly adjusts the weights of each feature with the layers. A feature would be selected in the final subset based on good performance in not only the current layer but also the former layers.

Figure 4.

Comparisons in LVW, imp-LVW, and MLFSSM.

3.3. Comparison with Fuzzy_GA and GAANN

In this section, we compare MLFSSM with Fuzzy_GA [30] and GAANN [24] based on genetic algorithm. Fuzzy_GA was proposed by Carlos et al. Fuzzy_GA combined fuzzy systems and genetic algorithm to classify Wisconsin breast cancer database (WBCD) dataset involving a few simple rules. GAANN was proposed by Fadzil et al. It used genetic algorithm (GA) for feature subset selection and parameter optimization of an artificial neural network (ANN). In addition, three variations of backpropagation were applied for GAANN and GAANN with backpropagation (GAANN_RP) showing the best accuracies.

The comparison uses WBCD dataset as Ahmad et al. did [24]. Moreover, we replaced the missing values, rescaled the attributes, and used cross-validation methods in the experiments as Ahmad et al. did [24].

Table 3 shows the average accuracies of Fuzzy_GA, GAANN_RP, and MLFSSM. We could observe that MLFSSM shows the best performance in the methods. Based on GA, Fuzzy_GA and GAANN_RP generate a new population (subset) by crossover and mutation using two chromosomes in each generation. There are two important factors in MLFSSM different from them: one is a large number of feature subsets generated in each layer; the other is the method of feature evaluation based on multilayer. The two factors not only guarantee informative feature subsets selected, but also avoid premature convergence and instability results.

Table 3.

Comparison of the accuracies of GAANN_RP and MLFSSM.

| Method | Average accuracy |

|---|---|

| Fuzzy_GA | 0.9736 |

| GAANN_RP | 0.9829 |

| MLFSSM | 0.9997 |

3.4. Comparison with Traditional Feature Selection Methods

In this section, we describe the comparison of MLFSSM with some feature selection methods on the five public datasets. The comparative methods, including SVM-RFE, least square-bound (LS-Bound) [6], Bayes + KNN [7], elastic net-based logistic regression (EN-LR) [31], guided regularized random forest (GRRF) [32], and T-SS [33] had shown improved performance in biomedical data in recent years. The results of the comparison of the methods have been previously reported [33]. Table 4 shows the average accuracy rates of the methods. In the table, the bold and italic numbers indicate the largest values using the corresponding method in a dataset.

Table 4.

Comparison of the average accuracy rates of MLFSSM with four feature selection methods.

| Method | Breast | Leukemia | Colon | Hepato | CNS |

|---|---|---|---|---|---|

| SVM-RFE | 0.877 | 0.967 | 0.835 | 0.658 | 0.693 |

| LS-bound | 0.778 | 0.935 | 0.817 | 0.618 | 0.61 |

| Bayes + KNN | 0.821 | 0.92 | 0.828 | 0.628 | 0.628 |

| EN-LR | 0.854 | 0.962 | 0.837 | 0.683 | 0.664 |

| GRRF | 0.846 | 0.939 | 0.834 | 0.67 | 0.618 |

| T-SS | 0.893 | 0.97 | 0.871 | 0.693 | 0.655 |

| MLFSSM | 0.959 | 0.969 | 0.961 | 0.943 | 0.843 |

In Table 4, MLFSSM shows superiority over the four feature selection methods for the five datasets. We observe that the highest accuracy rates among these methods are 0.693 (by T-SS) and 0.693 (by SVM-RFE), respectively, for Hepato and CNS datasets, which is well below those by MLFSSM (by 0.25 and 0.15, respectively). As the compared methods are based on deterministic search strategies, the results show the effectiveness of MLFSSM with randomized search strategy.

3.5. Comparison with the Methods Selecting Pairs or Groups

In this section, we compare MLFSSM with TSP [34], K-TSP [35], and RS-DC [36] to discuss whether the subsets selected by MLFSSM are more effective than the pairs or groups selected by the other methods. TSP was proposed by Geman et al. This method focused on pairwise rank comparisons to reflect the underlying biological role and selected the top feature pair to build a classification model when the two features of the pair shifted their rank positions more dramatically in the phenotypic classes than others. Because one feature pair might not contain enough information, Tan et al. suggested selecting top K feature pairs for building K classification models and ensembling the final classification results by majority voting. The K value should not be too large; thus, it was often set from 3 to 11. Given that the length of subsets is suggested to be 21 in MLFSSM, we set K = 11 in K-TSP for comparison. Chen et al. integrated individual feature effects with pairwise joint effects between the target feature and others, proposed a novel score measure named relative simplicity (RS), and built RS-DC to select binary-discriminative genes for classification. Table 5 lists the average accuracy rates of the four compared methods using the five datasets, and the bold and italic numbers indicate the largest values using the corresponding method in a dataset.

Table 5.

Comparison of the average accuracy rates of MLFSSM with four feature selection methods based on groups.

| Method | Breast | Leukemia | Colon | Hepato | CNS |

|---|---|---|---|---|---|

| TSP | 0.783 | 0.900 | 0.891 | 0.602 | 0.496 |

| K-TSP (K = 11) | 0.870 | 0.969 | 0.962 | 0.657 | 0.517 |

| RS-DC | 0.868 | 0.944 | 0.896 | 0.604 | 0.597 |

| MLFSSM | 0.959 | 0.969 | 0.961 | 0.943 | 0.843 |

Table 5 shows that MLFSSM has obvious advantages over TSP, K-TSP, and RS-DC in Breast, Hepato, and CNS. MLFSSM showed outstanding performance in Hepato and CNS, where MLFSSM achieved 0.943 and 0.843, respectively, for accuracy, which are higher by 0.286 and 0.246 points than the maximum values by TSP, K-TSP, and RS-DC. The possible reason could be that these three methods only focused on the discriminative ability of pairs, while MLFSSM could find the informative feature subsets with more than two features.

3.6. Analysis of the Selected Top Feature Pairs

In this section, we further analyze the ten most selected genes of the final subsets in CNS. Table 6 lists the details of the features and the corresponding biological pathway using [37].

Table 6.

Details of the ten most selected genes of CNS dataset.

| No. | Gene accession number | Gene description | Official symbol | Gene ID | Biological pathway |

|---|---|---|---|---|---|

| 1 | M13149_at | HRG histidine-rich glycoprotein | HRG | 3273 | Dissolution of fibrin clot |

| 2 | S75989_at | Gamma-aminobutyric acid transporter type 3 (human, fetal brain, mRNA, 1991 nt) | — | — | — |

| 3 | HG2987-HT3136_s_at | Vasoactive intestinal peptide | VIP | 7432 | Glucagon-type ligand receptors |

| 4 | M63959_at | LRPAP1 low density lipoprotein-related protein-associated protein 1 (alpha-2-macroglobulin receptor-associated protein 1) | LRPAP1 | 4043 | Reelin signaling pathway Lissencephaly gene (LIS1) in neuronal migration and development |

| 5 | Z73677_at | Gene encoding plakophilin 1b | PKP1 | 5317 | Apoptotic cleavage of cell adhesion proteins Apoptotic cleavage of cellular proteins |

| 6 | D79986_at | KIAA0164 gene | — | — | — |

| 7 | M23575_f_at | PSG11 pregnancy-specific beta-1 glycoprotein 11 | PSG11 | 5680 | — |

| 8 | U37139_at | Beta 3-endonexin mRNA, long form and short form | — | — | — |

| 9 | D28235_s_at | Cyclooxygenase-2 (hCox-2) gene | PTGS2 | 5743 | COX reactions Prostanoid metabolism Calcium signaling in the CD4+ TCR pathway JNK signaling in the CD4+ TCR pathway Ras signaling in the CD4+ TCR pathway |

| 10 | HG2271-HT2367_at | Profilaggrin | — | — | — |

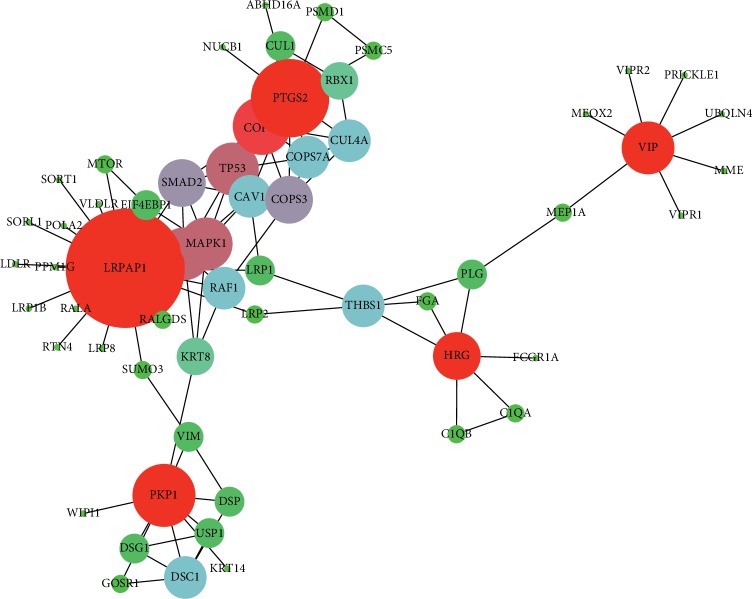

Figure 5 shows the interaction of the genes (red nodes) selected by us with the other nodes (other color nodes) identified by researchers from outside the selected dataset. We could find that the identified genes are all top 5-ranked ones in Table 6, and they have minimum 4 interactions. Especially, LRPAP1 shows the highest degree in Figure 5, which have been proved as a valuable marker in many diseases such as gallbladder cancer [38], Alzheimer disease [39], and lymphoma [40].

Figure 5.

Gene interaction diagram. Note: the size of node is related with its degree in the graph.

4. Discussion

In this paper, we focus on searching discriminative feature subsets. For this to be realized, it is crucial to have a large amount and diversity of subsets. At the first layer, we initialize features with equal weights of appearance and construct subsets whose quantity is the same as feature number. Meanwhile, the length of the subsets (ls) is long enough to provide diverse feature combinations and appropriate runtime. In the experimental section, we show ls = 21 brings better performance than other values.

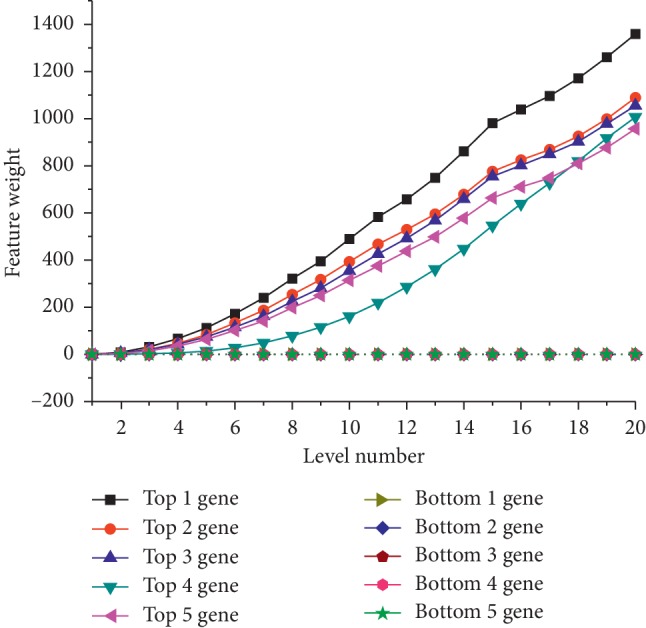

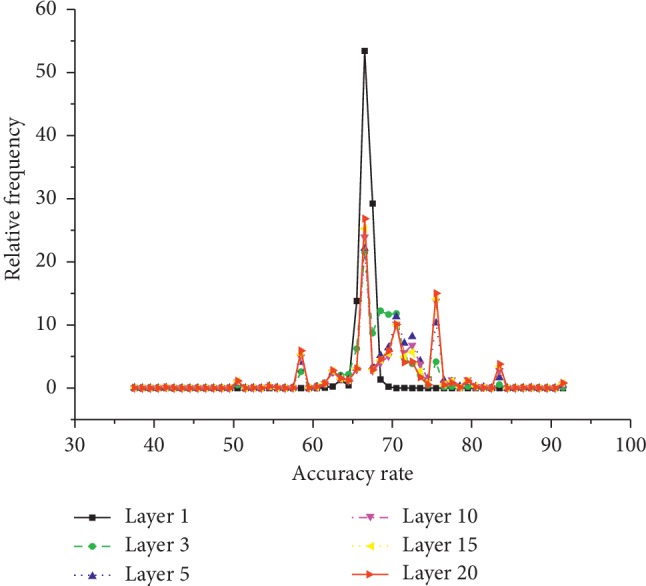

Based on the multilayer structure, we revise feature weights and find good subset gradually. Next, we will take CNS dataset as an example to further show the influence of multilayer structure on feature subset selection. MLFSSM shows the highest accuracy rate on CNS dataset among the comparative methods. We could find the average total level on CNS dataset as 18.29, which is far above the ones on other datasets. The possible reason is that MLFSSM evaluates feature weights with amounts of subsets in each layer, revises the values of features by their performances on former and current layers, and selects the highest performances of feature combinations through multilayer structure. Next, we will further analyze the classification procedures of MLFSSM on the CNS dataset with Figures 6 and 7.

Figure 6.

Weight values with increasing layer of top and bottom 5-ranked genes on CNS.

Figure 7.

The frequencies of accuracy rates of features with increasing layer on CNS.

Figure 6 shows the influence on the weights of 10 features in CNS dataset including top-ranked 5 and bottom-ranked 5 features at the last layer. It is observed that the weights of the features are equal at layer one. The top 5-ranked features are continually revised to higher weights and the bottom 5-ranked features are revised to lower weights with the increase of layer number.

Then, we further analyze the accuracy rates of features on different layers in Figure 7. If feature f ∈ ftl,m, the accuracy rate of subset ftl,m is averaged as the accuracy rate of feature f on layer l. We make a statistical analysis on the frequency of different accuracy rates with increasing layers. We could observe that the accuracy rates of 99% features are about 60% in layer 1, and then the rates of 28.37% features increase to over 70% in layer 2. With increasing layer, the rates of some features increase. Finally, the rates of 0.78% features are over 90% in the last layer. The result further shows that MLSFFM with a multilayer structure obtains more accurate feature evaluations and more effective feature subsets.

5. Conclusion

Here, we propose a wrapper feature subset method called MLFSSM, wherein based on the multilayer structure, we compute the weights of features and generate subsets by weights layer-by-layer. Ultimately, the top feature subset of the last layer is returned. Experiments on five public gene datasets showed MLFSSM to have an advantage over other similar methods in terms of classification performance. In the future, we plan to further analyze the features for biomarker detection, ascertain how to dynamically determine the parameter values on different datasets, and improve the running speed of the algorithm.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant no. 61562066).

Abbreviations

- GA:

Genetic algorithm

- SVM-RFE:

Support vector machine recursive feature elimination

- MLFSSM:

Multilevel wrapper feature subset selection method

- RF:

Random forest

- KNN:

k-nearest-neighbor

- LVW:

Las Vegas wrapper

- LS-Bound:

Least square-bound

- EN-LR:

Elastic net-based logistic regression

- GRRF:

Guided regularized random forest

- TSP:

Top scoring pair

- K-Tsp:

k-top scoring pairs

- TST:

Top scoring triplet

- TSN:

Top scoring n

- RS-DC:

Relative simplicity-based direct classifier.

Data Availability

Previously reported public datasets (including Breast, ColonCancer, CNS, Hepato, and Leukemia) are included within the supplementary information files.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Supplementary Materials

Public datasets: details of five public datasets (had been described in Table 3 in the paper) which are the important datasets for classification with our methods and other comparative methods. Supplymentary Tables 1 and 2: description of two algorithms (LVW and Imp-LVW) which were compared with our method in Section 3.2.

References

- 1.Cortes C., Vapnik V. Support-vector networks. Machine Learning. 1995;20(3):273–297. doi: 10.1023/a:1022627411411. [DOI] [Google Scholar]

- 2.Breiman L. Random forest. Machine Learning. 2001;45(1):5–32. doi: 10.1023/a:1010933404324. [DOI] [Google Scholar]

- 3.Hand D., Mannila H., Smyth P. Principles of Data Mining. Cambridge, MA, USA: The MIT Press; 2001. [Google Scholar]

- 4.Díaz-Uriarte R., Alvarez de Andrés S. Gene selection and classification of microarray data using random forest. BMC Bioinformatics. 2006;7(1):p. 3. doi: 10.1186/1471-2105-7-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Jirapech-Umpai T., Aitken S. Feature selection and classification for microarray data analysis: evolutionary methods for identifying predictive genes. BMC Bioinformatics. 2005;6(1):p. 148. doi: 10.1186/1471-2105-6-148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Zhou X., Mao K. Z. LS bound based gene selection for DNA microarray data. Bioinformatics. 2005;21(8):1559–1564. doi: 10.1093/bioinformatics/bti216. [DOI] [PubMed] [Google Scholar]

- 7.Zhang J. G., Deng H. W. Gene selection for classification of microarray data based on the Bayes error. BMC Bioinformatics. 2007;8(1):p. 370. doi: 10.1186/1471-2105-8-370. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Sun L., Zhang X.-Y., Qian Y.-H., Xu J.-C., Zhang S.-G., Tian Y. Joint neighborhood entropy-based gene selection method with fisher score for tumor classification. Applied Intelligence. 2019;49(4):1245–1259. doi: 10.1007/s10489-018-1320-1. [DOI] [Google Scholar]

- 9.Qian Y., Liang J., Pedrycz W., Dang C. Positive approximation: an accelerator for attribute reduction in rough set theory. Artificial Intelligence. 2010;174(9-10):597–618. doi: 10.1016/j.artint.2010.04.018. [DOI] [Google Scholar]

- 10.Saeys Y., Inza I., Larranaga P. A review of feature selection techniques in bioinformatics. Bioinformatics. 2007;23(19):2507–2517. doi: 10.1093/bioinformatics/btm344. [DOI] [PubMed] [Google Scholar]

- 11.Kira K., Rendell L. A. The feature selection problem: traditional methods and a new algorithm. Proceedings of the Tenth National Conference on Artificial Intelligence (AAAI’92); July 1992; San Jose, CA, USA. [Google Scholar]

- 12.Hall M. Hamilton, New Zealand: New Zeal and Department of Computer Science, Waikato University; 1999. Correlation-based feature selection for machine learning. Ph.D. thesis. [Google Scholar]

- 13.Yu L., Liu H. Efficient feature selection via analysis of relevance and redundancy. Journal of Machine Learning Research. 2004;5:1205–1224. [Google Scholar]

- 14.Xiong M., Fang X., Zhao J. Biomarker identification by feature wrappers. Genome Research. 2001;11(11):1878–1887. doi: 10.1101/gr.190001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Li L., Umbach D. M., Terry P., Taylor J. A. Application of the GA/KNN method to SELDI proteomics data. Bioinformatics. 2004;20(10):1638–1640. doi: 10.1093/bioinformatics/bth098. [DOI] [PubMed] [Google Scholar]

- 16.Inza I., Larrañaga P., Etxeberria R., Sierra B. Feature subset selection by Bayesian network-based optimization. Artificial Intelligence. 2000;123(1-2):157–184. doi: 10.1016/s0004-3702(00)00052-7. [DOI] [Google Scholar]

- 17.Guyon I., Weston J., Barnhill S., Vapnik V. Gene selection for cancer classification using support sector machines. Machine Learning. 2002;46:389–422. doi: 10.1023/a:1012487302797. [DOI] [Google Scholar]

- 18.Sun Z., Bebis G., Miller R. Object detection using feature subset selection. Pattern Recognition. 2004;37(11):2165–2176. doi: 10.1016/j.patcog.2004.03.013. [DOI] [Google Scholar]

- 19.Jain A. K., Duin P. W., Jianchang Mao J. C. Statistical pattern recognition: a review. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2000;22(1):4–37. doi: 10.1109/34.824819. [DOI] [Google Scholar]

- 20.Kudo M., Sklansky J. Comparison of algorithms that select features for pattern classifiers. Pattern Recognition. 2000;33(1):25–41. doi: 10.1016/s0031-3203(99)00041-2. [DOI] [Google Scholar]

- 21.Liu H., Setiono R. Feature selection and classification—a probabilistic wrapper approach. Proceedings of the 9th International Conference on Industrial and Engineering Applications of AI and ES; June 1996; Fukuoka, Japan. [Google Scholar]

- 22.Hasegawa K., Miyashita Y., Funatsu K. GA strategy for variable selection in QSAR studies: GA-based PLS analysis of calcium channel antagonists. Journal of Chemical Information and Computer Sciences. 1997;37(2):306–310. doi: 10.1021/ci960047x. [DOI] [PubMed] [Google Scholar]

- 23.Cai W., Li Y., Shao X. A variable selection method based on uninformative variable elimination for multivariate calibration of near-infrared spectra. Chemometrics and Intelligent Laboratory Systems. 2008;90(2):188–194. doi: 10.1016/j.chemolab.2007.10.001. [DOI] [Google Scholar]

- 24.Ahmad F., Mat Isa N. A., Hussain Z., Osman M. K., Sulaiman S. N. A GA-based feature selection and parameter optimization of an ANN in diagnosing breast cancer. Pattern Analysis and Applications. 2015;18(4):861–870. doi: 10.1007/s10044-014-0375-9. [DOI] [Google Scholar]

- 25.West M., Blanchette C., Dressman H., et al. Predicting the clinical status of human breast cancer by using gene expression profiles. Proceedings of the National Academy of Sciences. 2001;98(20):11462–11467. doi: 10.1073/pnas.201162998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Alon U., Barkai N., Notterman D. A., et al. Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proceedings of the National Academy of Sciences. 1999;96(12):6745–6750. doi: 10.1073/pnas.96.12.6745. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Pomeroy S. L., Tamayo P., Gaasenbeek M., et al. Prediction of central nervous system embryonal tumour outcome based on gene expression. Nature. 2002;415(6870):436–442. doi: 10.1038/415436a. [DOI] [PubMed] [Google Scholar]

- 28.Iizuka N., Oka M., Yamada-Okabe H., et al. Oligonucleotide microarray for prediction of early intrahepatic recurrence of hepatocellular carcinoma after curative resection. The Lancet. 2003;361(9361):923–929. doi: 10.1016/s0140-6736(03)12775-4. [DOI] [PubMed] [Google Scholar]

- 29.Golub T. R., Slonim D. K., Tamayo P., et al. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science. 1999;286(5439):531–537. doi: 10.1126/science.286.5439.531. [DOI] [PubMed] [Google Scholar]

- 30.Peña-Reyes C. A., Sipper M. A fuzzy-genetic approach to breast cancer diagnosis. Artificial Intelligence in Medicine. 1999;17(2):131–155. doi: 10.1016/s0933-3657(99)00019-6. [DOI] [PubMed] [Google Scholar]

- 31.Zou H., Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2005;67(2):301–320. doi: 10.1111/j.1467-9868.2005.00503.x. [DOI] [Google Scholar]

- 32.Deng H., Runger G. Gene selection with guided regularized random forest. Pattern Recognition. 2013;46(12):3483–3489. doi: 10.1016/j.patcog.2013.05.018. [DOI] [Google Scholar]

- 33.Mundra P. A., Rajapakse J. C. Gene and sample selection using T-score with sample selection. Journal of Biomedical Informatics. 2016;59:31–41. doi: 10.1016/j.jbi.2015.11.003. [DOI] [PubMed] [Google Scholar]

- 34.Geman D., D’Avignon C., Naiman D. Q., Winslow R. L. Classifying gene expression profiles from pairwise mRNA comparisons. Statistical Applications in Genetics and Molecular Biology. 2004;3(1):1–19. doi: 10.2202/1544-6115.1071. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Tan A. C., Naiman D. Q., Xu L., Winslow R. L., Geman D. Simple decision rules for classifying human cancers from gene expression profiles. Bioinformatics. 2005;21(20):3896–3904. doi: 10.1093/bioinformatics/bti631. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Chen Y., Wang L., Li L., Zhang H., Yuan Z. Informative gene selection and the direct classification of tumors based on relative simplicity. BMC Bioinformatics. 2016;17:1–16. doi: 10.1186/s12859-016-0893-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Shannon P., Markiel A., Ozier O., et al. Cytoscape: a software environment for integrated models of biomolecular interaction networks. Genome Research. 2003;13(11):2498–2504. doi: 10.1101/gr.1239303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Pandey S. N., Dixit M., Choudhuri G., et al. Lipoprotein receptor associated protein (LRPAP1) insertion/deletion polymorphism: association with gallbladder cancer susceptibility. International Journal of Gastrointestinal Cancer. 2006;37(4):124–128. doi: 10.1007/s12029-007-9002-y. [DOI] [PubMed] [Google Scholar]

- 39.Sánchez L., Alvarez V., González P., González I., Alvarez R., Coto E. Variation in the LRP-associated protein gene (LRPAP1) is associated with late-onset Alzheimer disease. American Journal of Medical Genetics. 2001;105(1):76–78. doi: 10.1002/1096-8628(20010108)105:1<76::aid-ajmg1066>3.3.co;2-i. [DOI] [PubMed] [Google Scholar]

- 40.Lorenz T., Sylvia H., Natalie F., et al. LRPAP1 is a frequent proliferation-inducing antigen of BCRs of mantle cell lymphomas and can be used for specific therapeutic targeting. Leukemia. 2019;33(1):148–158. doi: 10.1038/s41375-018-0182-1. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Public datasets: details of five public datasets (had been described in Table 3 in the paper) which are the important datasets for classification with our methods and other comparative methods. Supplymentary Tables 1 and 2: description of two algorithms (LVW and Imp-LVW) which were compared with our method in Section 3.2.

Data Availability Statement

Previously reported public datasets (including Breast, ColonCancer, CNS, Hepato, and Leukemia) are included within the supplementary information files.