Abstract

Accurate optic disc and optic cup segmentation plays an important role in diagnosing glaucoma. However, most existing segmentation approaches suffer from two limitations. On the one hand, imperfect imaging devices and illumination variations often cause intensity inhomogeneity in fundus images. On the other hand, spatial prior knowledge about the optic disc and optic cup, e.g., that the optic cup is always contained inside the optic disc region, is ignored. As a result, the effectiveness of these approaches is greatly reduced. Different from most previous approaches, we present a novel locally statistical active contour model with structure prior (LSACM-SP) to jointly and robustly segment the optic disc and optic cup. First, preprocessing techniques are used to automatically extract the initial contours of the objects. Then, we introduce the locally statistical active contour model (LSACM) to optic disc and optic cup segmentation in the presence of intensity inhomogeneity. Finally, taking the specific morphology of the optic disc and optic cup into consideration, a novel structure prior is proposed to guide the model toward accurate segmentation results. Experimental results on two publicly available databases, DRISHTI-GS and RIM-ONE r2, demonstrate the superiority of our approach over several well-known algorithms.

1. Introduction

Glaucoma is currently the second leading cause of blindness, and approximately 80 million people were projected to be affected by glaucoma by the year 2020 [1, 2]. Since optic nerve fibers damaged by glaucoma cannot be recovered, early detection and timely treatment are the most effective ways to slow the progression of visual damage.

Glaucoma screening is performed by ophthalmologists, who use retinal fundus images to assess damage to the optic disc. However, this process is subjective, time-consuming, and expensive, so automatic glaucoma screening would be very beneficial [3]. The optic disc contains two different regions: the central bright zone is the optic cup, and the peripheral region between the optic disc and optic cup boundaries is the neuroretinal rim (see the region of interest (ROI) depicted in Figure 1).

Figure 1.

Major structures of the optic disc. Red line: the optic disc boundary; green line: the optic cup boundary; the region between the red line and green line is the neuroretinal rim.

Several strategies, based on different characteristics of the optic disc, can be used to assess it. One effective strategy is to use image features [4]; however, selecting suitable image features and classifiers remains challenging. Apart from this feature extraction strategy, another strategy is to use clinical indicators such as the vertical cup-to-disc ratio (CDR) [5], the ISNT rule [6], and notching. Although these clinical indicators differ from each other, all of them require precise optic disc and optic cup boundaries.

To date, a series of approaches have been developed for optic disc and optic cup segmentation [7–27]. They can be roughly classified into two categories: nonmodel-based and model-based approaches [7]. Nonmodel-based approaches [3, 8–11] extract the contours of the optic disc and optic cup using techniques such as morphological operations, pixel clustering, and thresholding. However, intensity inhomogeneity, caused by imperfect imaging devices or illumination variations, frequently occurs in retinal fundus images and affects the contour extraction of the optic disc (Figure 2(a)).

Figure 2.

Intensity inhomogeneity challenges in optic disc and optic cup segmentation.

Model-based approaches can be further divided into shape-based template-matching approaches [12–15], deformable model approaches [16–29], and deep learning-based approaches [30–32]. Since the optic disc is round or slightly oval, its contour can be approximated by a circle [12–14] or an ellipse [15]. However, shape-based template-matching approaches can neither represent contours of complex topology nor handle topological changes, such as optic disc regions that are irregularly shaped because of pathological changes (e.g., peripapillary atrophy (PPA), see Figure 2(b)) or variations in view.

To address this limitation, many deformable model approaches have been presented, which can be further divided into edge-based active contour models [16–21] and region-based active contour models [22–27]. Edge-based active contour models can bridge discontinuities in the image features being located. Moreover, because they impose no global template structure, they can deform freely to the shape of the object. In [16, 17], the optic disc boundary is extracted using gradient vector flow (GVF), and the optic disc contour is then evolved by minimizing the effect of perturbations in the energy value caused by high variations at vessel locations. In [18–21], the authors used a modified level set approach with ellipse fitting to detect the optic disc and optic cup margins. Although these approaches perform well in most regular cases, they may fail to detect the entire optic disc when it is irregularly shaped and has high gradient variations. More recently, motivated by the Mumford-Shah model [28], region-based active contour models such as the Chan-Vese (C-V) model [22] and its variants [23–27] have been applied to optic disc contour extraction. Although these models handle local variations of the optic disc better, they can hardly deal with images with intensity inhomogeneity. A recent robust level set approach, the locally statistical active contour model (LSACM) [29], exploits local image region statistics in an unsupervised manner. Compared with existing region-based active contour models, LSACM performs better, especially when segmenting images with intensity inhomogeneity. However, active contour model-based approaches share one difficulty: they ignore the spatial correlation prior between the optic disc and optic cup, so this useful spatial information cannot guide and constrain the contour evolution.

Deep learning is now widely used in computer vision and pattern recognition and has achieved remarkable performance, and several optic disc and optic cup segmentation approaches based on deep networks have been proposed [30–32]. Although these approaches perform well, they still have some limitations [33, 34]. On the one hand, training a deep network requires a large number of samples with pixel-level annotations, and it is difficult for the network to achieve promising segmentation performance when labeled training samples are insufficient. On the other hand, these networks ignore prior knowledge of the objects, and the spatial information lost in the encoder through max-pooling can result in irregular segmentation.

To address all the limitations raised above, we develop a novel locally statistical active contour model with structure prior (LSACM-SP), which jointly segments the optic disc and optic cup in retinal fundus images. The major contributions are as follows. First, the unsupervised LSACM is employed to jointly segment the optic disc and optic cup across different retinal fundus images, which addresses the issue of insufficient labeled samples and copes with the large range of appearance and illumination variations in retinal fundus images. Second, since existing deep network-based approaches typically ignore spatial correlation information, their segmentation performance is reduced accordingly. To address this, we design a structure prior that enforces the topological structure of retinal images, namely, that the “cup” region is contained in the “disc” region. Finally, we develop an efficient segmentation approach that incorporates the locally statistical active contour model and the proposed structure prior to simultaneously extract the optic disc and optic cup contours. Since the contours are obtained by minimizing the proposed energy functional, no predefined geometric templates are needed to guide the segmentation. Therefore, our approach reduces performance degradation on fundus images with a large range of intensity and shape variations. An overview of the proposed approach is shown in Figure 3.

Figure 3.

The flowchart of the proposed approach.

The rest of this paper is organized as follows. Section 2 describes the preprocessing techniques. Section 3 introduces the proposed LSACM-SP. Section 4 presents the experimental results and analyses. Finally, Section 5 concludes the paper.

2. Preprocessing

To perform accurate optic disc and optic cup segmentation, some preprocessing operations are necessary before applying our approach. In this paper, preprocessing consists of optic disc localization, optic disc ROI extraction, and contour initialization. Figure 4 depicts the preprocessing pipeline.

Figure 4.

Preprocessing. (a) Original color image; (b) detected optic disc center (black “+”); (c) extracted ROI; (d) blood vessel extraction; (e) “vessels-free” image; (f) red channel of (e). Blue: the extracted optic disc initialization contour; green: the extracted optic cup initialization contour.

Given a retinal image (see Figure 4(a)), we employ our previously proposed approach, based on saliency detection and feature extraction, to locate the optic disc [1]. The detected optic disc location is marked with a black “+” (see Figure 4(b)). A 400 × 400 ROI around the optic disc is then extracted for further segmentation, as shown in Figure 4(c). Since blood vessels vary considerably in size, location, and shape across individual cases, the resulting high variance in the data affects segmentation performance (see Figure 4(c)). Hence, the influence of blood vessels must be removed before contour evolution. B-COSFIRE filters, which combine the aligned responses of difference-of-Gaussians (DoG) filters by a geometric mean, have recently proven simple and robust for blood vessel segmentation. Therefore, we first use two rotation-invariant B-COSFIRE filters given in [35] to segment blood vessels, as illustrated in Figure 4(d). After obtaining the blood vessels, the image inpainting algorithm proposed in [36] is employed to fill in the vessel areas. In our setting, vessel removal replaces the intensity of each vessel pixel by the median intensity of the non-vessel pixels in its neighborhood; based on our experience, we set the neighborhood size to 15. In this way, image information around the vessel regions is used to fill in the vessels, and the resulting “vessels-free” image is shown in Figure 4(e). Since the red channel gives good definition of both the optic disc and optic cup regions, we use the red channel of the “vessels-free” image (see Figure 4(f)) for segmentation. On this red channel, we use Canny edge detection and the circular Hough transform to estimate the positions and sizes of the optic disc and optic cup [23]. In our experiments, the threshold and sigma parameters of the Canny edge detector are set to 0.3 and 0.8, respectively. Finally, the estimated results are used as the initialization contours for our approach.
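The median-based vessel removal rule can be sketched with NumPy as follows. This is a minimal illustration, not the inpainting algorithm of [36]: the helper name `median_inpaint`, the square `size × size` window, and leaving a vessel pixel unchanged when its window contains no non-vessel pixel are all assumptions.

```python
import numpy as np


def median_inpaint(image, vessel_mask, size=15):
    """Replace each vessel pixel by the median of the non-vessel pixels
    in a size x size window centred on it (hypothetical helper).

    image       : 2-D array, e.g. one colour channel of the ROI
    vessel_mask : 2-D bool array, True where a vessel was segmented
    """
    half = size // 2
    out = image.astype(float).copy()
    rows, cols = image.shape
    for r, c in zip(*np.nonzero(vessel_mask)):
        # Clip the window at the image border.
        r0, r1 = max(0, r - half), min(rows, r + half + 1)
        c0, c1 = max(0, c - half), min(cols, c + half + 1)
        window = image[r0:r1, c0:c1]
        background = window[~vessel_mask[r0:r1, c0:c1]]
        # Medians are taken from the original image, so the result does
        # not depend on the order in which vessel pixels are visited.
        if background.size:
            out[r, c] = np.median(background)
    return out
```

Reading from the original image rather than the partially filled output keeps the operation order-independent; an iterative variant that reuses filled pixels would behave differently on wide vessels.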

3. Methods

In Section 3.1, we first briefly review the locally statistical active contour model (LSACM). The structure prior is then given in Section 3.2. Finally, in Section 3.3, we design a novel LSACM-SP model for the simultaneous segmentation of the optic disc and optic cup by combining LSACM with the structure prior. In the following sections, bold italic letters (e.g., x, y) denote vectors, lowercase letters (e.g., n) denote scalars, and capital letters (e.g., I) denote functions.

3.1. Locally Statistical Active Contour Model (LSACM)

According to [37], regions in images with severe intensity inhomogeneity exhibit sharp discontinuities in their statistics. Inspired by this observation, Zhang et al. [29] presented the locally statistical active contour model (LSACM) to deal with images with intensity inhomogeneity. In [29], the authors first modeled objects with intensity inhomogeneity as Gaussian distributions with different means and variances. They then transformed the pixels of the original image into another domain in which the intensity statistics of different objects overlap less. Finally, a maximum likelihood energy functional is used to approximate the true image. Compared with existing statistical model-based segmentation algorithms [23–28], LSACM is more robust and stable. For a more detailed description, refer to [29].

For a given input image I, its segmentation can be done by minimizing the following energy functional:

| (1) |

where

| (2) |

where n is the number of objects in I; x, y ∈ Ω ⊂ ℜ² are pixel coordinates; Ω = ∪i=1,…,n Ωi represents the image domain, in which Ωi is the domain of the i-th object and Ωi ∩ Ωj = Θ for all i ≠ j, where the symbol Θ denotes the empty set. I(y) is the pixel value at location y. B(x): Ω ⟶ ℜ is an unknown bias field, and Φ is a set of level set functions. Mi(Φ(y)) is the membership function of region Ωi: Mi(Φ(y)) = 1 if y ∈ Ωi, and Mi(Φ(y)) = 0 otherwise. Ox = {y | |y − x| ≤ ρ} denotes the neighborhood centered at x, where y is a point in the neighborhood of x and ρ is the radius of Ox. θ = {ci, σi, i = 1, 2, …, n} are the parameters to be estimated, where the constant ci is the true signal of the i-th object, and the object in region Ωi is assumed to be Gaussian distributed with standard deviation σi. B(x)ci is the spatially varying mean estimated in the local region Ωi ∩ Ox. Kρ(x, y) denotes the indicator function of this neighborhood, that is, Kρ(x, y) = 1 if |y − x| ≤ ρ and 0 otherwise.
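To make the roles of ρ, B(x), ci, and σi concrete, the sketch below evaluates a local Gaussian data term on a pixel grid. Because the body of (1) is not reproduced here, its form is an assumption: for each pixel y it sums the negative log-likelihood contributions log σi + (I(y) − B(x)ci)² / (2σi²) over all centres x with |y − x| ≤ ρ (the indicator Kρ), dropping the constant log √(2π). All function names are hypothetical.

```python
import numpy as np


def disk_offsets(rho):
    """Integer offsets (dy, dx) with dy**2 + dx**2 <= rho**2, i.e. K_rho = 1."""
    r = int(np.floor(rho))
    return [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
            if dy * dy + dx * dx <= rho * rho]


def shifted(A, dy, dx):
    """Return (S, valid) with S[y] = A[y + (dy, dx)] where that index lies inside A."""
    H, W = A.shape
    S = np.zeros((H, W))
    valid = np.zeros((H, W), dtype=bool)
    y0, y1 = max(0, -dy), min(H, H - dy)
    x0, x1 = max(0, -dx), min(W, W - dx)
    S[y0:y1, x0:x1] = A[y0 + dy:y1 + dy, x0 + dx:x1 + dx]
    valid[y0:y1, x0:x1] = True
    return S, valid


def local_data_term(I, B, c, sigma, rho):
    """d(y) = sum over x with |x - y| <= rho of
    log(sigma) + (I(y) - B(x) c)^2 / (2 sigma^2); centres outside the image are skipped."""
    d = np.zeros(I.shape)
    for dy, dx in disk_offsets(rho):
        Bx, valid = shifted(B, dy, dx)
        d += valid * (np.log(sigma) + (I - Bx * c) ** 2 / (2.0 * sigma ** 2))
    return d


def region_energy(I, B, params, memberships, rho):
    """Sum over regions i of sum_y M_i(y) d_i(y); params = [(c_i, sigma_i), ...]."""
    return sum(float(np.sum(M * local_data_term(I, B, c, s, rho)))
               for (c, s), M in zip(params, memberships))
```

With this form, assigning each pixel to the region whose local Gaussian explains it best lowers the energy, which is the behavior the level set evolution exploits.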

Since one level set function Φ represents only two regions, namely, the region inside the contour S, Ω1 = in(S) = {Φ > 0}, and the region outside it, Ω2 = out(S) = {Φ < 0}, this model is called two-phase. A single level set function cannot represent more than two regions. To handle this case, a four-phase model is needed, which uses two level set functions, Φ1 and Φ2, to represent all regions [29]; in Figure 5(b), adjacent regions of the four-phase model are shown in different colors. Figure 5(a) depicts the segmentation results obtained from the four-phase LSACM, in which the blue and green lines are the zero level contours of Φ1 and Φ2, respectively. The corresponding regions “R11” (gray), “R12” (red), “R13” (yellow), and “R14” (purple), depicted in Figure 5(b), are extracted from Figure 5(a). As Figure 5(b) shows, the optic disc and optic cup regions cannot be determined directly because of the nonobject segmentation region “R12.” The main reason is that the regions of different classes (“R11,” “R12,” “R13,” and “R14”) evolve independently, ignoring the prior that the “cup” region is contained in the “disc” region. The main issue is therefore how to control the evolution of the two level set functions so that one lies inside the other and both approximate the true optic disc and optic cup contours.
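A four-phase partition is commonly built from two level set functions with Vese-Chan style membership functions; the sketch below assumes that construction with a sharp Heaviside, rather than reproducing [29] verbatim. It illustrates the issue raised above: when the two functions evolve independently, a nonobject region such as one inside Φ2 but outside Φ1 can be non-empty. Function names are hypothetical.

```python
import numpy as np


def heaviside(phi):
    """Sharp Heaviside: 1 where phi > 0, else 0."""
    return (phi > 0).astype(float)


def four_phase_memberships(phi1, phi2):
    """Memberships M1..M4 of the four regions defined by the signs of phi1 and phi2."""
    h1, h2 = heaviside(phi1), heaviside(phi2)
    return [h1 * h2,              # inside both contours
            h1 * (1 - h2),        # inside phi1 only
            (1 - h1) * h2,        # inside phi2 only: nonobject if phi2 should be the cup
            (1 - h1) * (1 - h2)]  # outside both contours


if __name__ == "__main__":
    yy, xx = np.mgrid[:21, :21]
    phi_disc = 8.0 - np.hypot(yy - 10, xx - 10)  # disc of radius 8
    phi_cup = 4.0 - np.hypot(yy - 10, xx - 16)   # cup contour drifting outside the disc
    M1, M2, M3, M4 = four_phase_memberships(phi_disc, phi_cup)
    print("pixels inside the cup contour but outside the disc contour:", int(M3.sum()))
```

Nothing in this construction forbids the third region, which is why an explicit structure prior is needed.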

Figure 5.

Segmentation results obtained by LSACM. (a) Segmentation results by the four-phase-based LSACM and (b) the corresponding regions by four-phase LSACM. Green: optic cup result and blue: optic disc result.

3.2. Structure Prior

To address this issue, this paper proposes a structure prior that enforces the fact that the “cup” region is contained in the “disc” region. Together with the effective optimization of LSACM, the structure prior enables the proposed approach to generate robust and reliable segmentation results. The structure prior consists of hierarchical image segmentation and an attraction term. Here, “hierarchical” refers to how the two level set functions evolve: the optic disc contour evolves over the whole image region, while the evolution of the optic cup contour is constrained to the inside of the optic disc region. The symbols ϕ and φ denote the two level set functions; in this study, ϕ > 0 and φ > 0 represent the optic disc region and the optic cup region, respectively. Ω denotes the whole image region, and E denotes an energy functional.

3.2.1. Hierarchical Image Segmentation

Since the optic cup always lies inside the optic disc in retinal images, it suffices to segment the optic cup inside the optic disc. Here, we propose the hierarchical image segmentation energy ECup(c3, c4, σ3, σ4, BCup(x), ϕ, φ), which restricts the contour evolution of the optic cup to this image region. We consider

| (3) |

| (4) |

| (5) |

where EH(c3, c4, σ3, σ4, BCup(x), ϕ, φ) constrains the evolution of the optic cup to the inside of the optic disc region. Thus, the background region is excluded from (3), which reduces the influence of nonobjects. EHCSmooth(φ) makes the extracted contour smoother. BCup(x) is an unknown bias field for segmenting the optic cup, which accounts for the intensity inhomogeneity within the optic disc. α and μ are positive parameters, and the level set functions ϕ and φ are positive inside their zero level sets. H denotes the Heaviside function, and ∇ is the gradient operator. σ3 is the standard deviation associated with the object in the optic cup region, and σ4 is the standard deviation associated with the object in the neuroretinal rim region.
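A minimal sketch of the hierarchical idea, assuming (consistent with the description of “R21” to “R24” in Section 3.3) that the cup and rim memberships are confined to the disc by the factor H(ϕ): cup = H(ϕ)H(φ) and rim = H(ϕ)(1 − H(φ)). The arctan-regularized Heaviside is a common choice in level set methods and is an assumption here, as are the function names; this is not a transcription of (3)–(5).

```python
import numpy as np


def smooth_heaviside(z, eps=1.0):
    """Arctan-regularised Heaviside, approaching the sharp step as eps -> 0."""
    return 0.5 * (1.0 + (2.0 / np.pi) * np.arctan(z / eps))


def hierarchical_memberships(phi_disc, phi_cup, eps=1.0):
    """Disc/background memberships cover the whole image; cup/rim memberships
    are multiplied by H(phi_disc), so the cup cannot leave the disc."""
    hd = smooth_heaviside(phi_disc, eps)
    hc = smooth_heaviside(phi_cup, eps)
    return {
        "background": 1.0 - hd,   # R21
        "disc": hd,               # R22 = R23 + R24
        "rim": hd * (1.0 - hc),   # R23
        "cup": hd * hc,           # R24
    }
```

Even when φ is positive outside the disc, the cup membership there is scaled by H(ϕ) ≈ 0, so those pixels contribute almost nothing to the cup's data term.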

3.2.2. Attraction Term

The optic cup region {φ > 0} should lie inside the optic disc region {ϕ > 0}, so that the background is excluded from cup segmentation. A term measuring the area of the intersection of the regions {ϕ < 0} and {φ > 0} pulls the region {φ > 0} into the region {ϕ > 0}:

| (6) |

where ν is a positive parameter. Clearly, the value of (6) becomes zero when the region {φ > 0} lies entirely inside the region {ϕ > 0}.
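Assuming (6) is ν times the area of {ϕ < 0} ∩ {φ > 0}, as the text describes, its discrete value can be obtained by counting pixels. The function name and the use of a sharp (unregularized) indicator are assumptions of this sketch.

```python
import numpy as np


def attraction_term(phi_disc, phi_cup, nu=1.0):
    """nu * area({phi_disc < 0} intersected with {phi_cup > 0}), measured in pixels."""
    outside_disc = phi_disc < 0
    inside_cup = phi_cup > 0
    return nu * float(np.count_nonzero(outside_disc & inside_cup))
```

A cup contour nested inside the disc gives zero, while a cup that spills over the disc boundary is penalized in proportion to the spilled area.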

3.3. LSACM-SP

The proposed model combines the locally statistical model with the structure prior (hierarchical image segmentation and the attraction term). The proposed LSACM-SP model is given as follows:

| (7) |

where

| (8) |

| (9) |

| (10) |

where θ = {ci, σi, i = 1, 2, 3, 4}, B = {BDisc(x), BCup(x)}, and ELSACM(c1, c2, σ1, σ2, BDisc, ϕ) and EDSmooth(ϕ) are the original LSACM model and the smoothing term, respectively. BDisc(x) is an unknown bias field for segmenting the optic disc, and λ is a positive parameter. σ1 is the standard deviation associated with the object in the optic disc region, and σ2 is the standard deviation associated with the background region (outside the optic disc). The segmentation result obtained by LSACM-SP is illustrated in Figure 6(a), and the corresponding regions are shown in Figure 6(b): “R24” is the optic cup region, “R23” is the neuroretinal rim region, “R22” is the optic disc region consisting of “R23” and “R24,” and “R21” is the background region.

Figure 6.

The segmentation results by the proposed model. (a) Segmentation results by the proposed LSACM-SP and (b) the corresponding regions by the proposed LSACM-SP. White: optic disc ground truth by an expert; black: optic cup ground truth by an expert; green: optic cup result by LSACM-SP; blue: optic disc result by LSACM-SP.

In the proposed approach, we set n = 4, indicating four regions: the outer and inner regions of the optic disc (“R21” and “R22” in Figure 6(b)) and the outer and inner regions of the optic cup (“R23” and “R24” in Figure 6(b)). Specifically, the outer region of the optic cup (“R23”) is the complement of the inner optic cup region (“R24”) relative to the optic disc region (“R22”). Based on these descriptions, we rewrite (7) as the objective function

| (11) |

where

| (12) |

| (13) |

where θ = {ci, σi, i = 1, 2, 3, 4} and B = {BDisc(x), BCup(x)}; MDisc_1 and MDisc_2 denote the membership functions of the inner and outer regions of the optic disc, and MCup_3 and MCup_4 are the membership functions of the inner and outer regions of the optic cup within the optic disc. Although the membership functions in (13) resemble those of the four-phase model in LSACM, they differ in one key respect: the proposed approach incorporates the structure prior to constrain the contour evolution hierarchically. Therefore, the space of feasible segmentations is reduced, which improves segmentation accuracy.

As (7) shows, minimizing ELSACM−SP simultaneously with respect to ϕ, φ, θ = {ci, σi, i = 1, 2, 3, 4}, and B = {BDisc(x), BCup(x)} is difficult. Following [29], we minimize (7) with respect to each variable alternately, repeating the procedure until a stopping condition is satisfied. In this study, the gradient descent method is initialized with BDisc(x) = 1, BCup(x) = 1, and σi = i (i = 1, 2, 3, 4), and the region scale parameter ρ is set to 6. Accordingly, the initial values of ci (i = 1, 2, 3, 4) can be calculated by (A.2)–(A.5). The time step for level set evolution is set to Δt1 = 1, and the time step for regularization is set to Δt = 1. The level set functions are initialized as ϕ = ϕ0 and φ = φ0. For more details, refer to the Appendix.
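For intuition about the initialization: if BDisc(x) = BCup(x) = 1, as stated above, and the local-window weights are treated as uniform, the Gaussian maximum-likelihood estimate of each ci reduces to the mean intensity of its region. The sketch below computes these region means using the region roles given for σ1 to σ4 (disc, background, cup, rim). It is an approximation under those assumptions, not a transcription of (A.2)–(A.5), and the function name is hypothetical.

```python
import numpy as np


def initial_region_means(I, disc_mask, cup_mask):
    """Approximate c1..c4 as the mean intensities of the disc, background,
    cup, and rim regions (assumes B = 1 and uniform local weights).

    The cup mask is intersected with the disc mask, mirroring the
    structure prior that the cup lies inside the disc.
    """
    cup = cup_mask & disc_mask
    regions = [disc_mask, ~disc_mask, cup, disc_mask & ~cup]
    return [float(I[r].mean()) if r.any() else 0.0 for r in regions]
```

Near image borders or with a non-uniform bias field, the kernel-weighted estimates of [29] would differ from these plain means.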

4. Results

4.1. Database

In this paper, two publicly available databases, namely, DRISHTI-GS [38] and RIM-ONE r2 [39], are used to verify the effectiveness of our approach.

The DRISHTI-GS database [38] contains 101 images (31 normal and 70 glaucomatous) with a resolution of 2896 × 1944 pixels. For each image, the optic disc and optic cup are annotated by majority voting over the manual markings of four glaucoma ophthalmologists. In our experiments, we use the markings thresholded at 0.75 as the final ground truth for evaluation.
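A minimal sketch of building a consensus ground truth from several expert masks, assuming the thresholded quantity is the fraction of ophthalmologists who marked each pixel and that the comparison is "≥ 0.75" (with four experts, at least three must agree). Both the function name and the comparison direction are assumptions.

```python
import numpy as np


def consensus_mask(expert_masks, threshold=0.75):
    """Pixels marked by at least `threshold` of the experts (hypothetical helper)."""
    votes = np.mean([np.asarray(m, dtype=float) for m in expert_masks], axis=0)
    return votes >= threshold
```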

The RIM-ONE r2 database [39] contains 455 retinal fundus images: 255 normal images and 200 glaucoma images. In our experiments, all images in the database are first resized to the same dimensions, and then the preprocessing techniques described in Section 2 are applied to them. Finally, the resulting red channel of the blood vessel-removed optic disc image and the extracted initialization contours are used as the inputs to our approach.

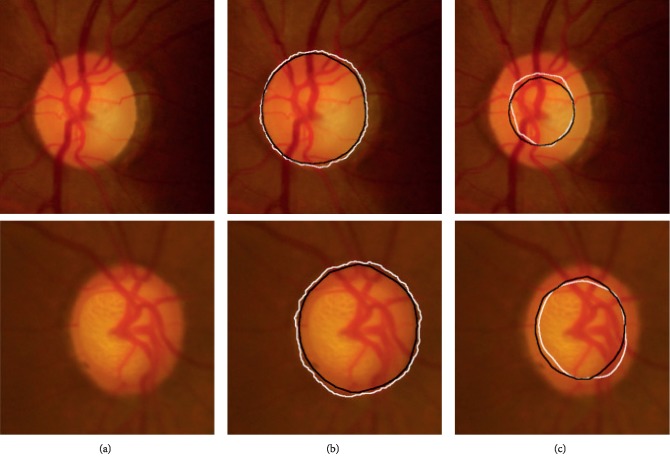

All experiments are implemented in MATLAB and run on a desktop with a 3.30 GHz CPU and 16 GB RAM. Figure 7 illustrates two examples of applying our model to optic disc and optic cup segmentation: Figure 7(a) gives the original images, Figure 7(b) shows the optic disc contours together with the corresponding ground truth, and Figure 7(c) shows the optic cup contours together with the corresponding ground truth.

Figure 7.

Optic disc and optic cup boundaries extraction. (a) Original color images, (b) original color images with contours of both optic disc and ground truth superimposed, and (c) original color images with contours of both optic cup and ground truth superimposed. Black line: ground truth marked by an expert and white line: results obtained by the proposed method.

4.2. Evaluation Measurements

In our experiments, three widely used measurements are used to evaluate the performance of different approaches: the Dice coefficient (DI), the boundary-based distance, and accuracy.

Dice coefficient (DI):

$$\mathrm{DI} = \frac{2N_{TP}}{2N_{TP} + N_{FP} + N_{FN}} \qquad (14)$$

where N_TP, N_FP, and N_FN are the numbers of true positive, false positive, and false negative pixels, respectively. Positive and negative refer to pixels belonging to the segmented region and the background, respectively, according to the ground truth segmentation. DI is a standard evaluation metric for segmentation tasks [18].
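The Dice coefficient DI = 2N_TP / (2N_TP + N_FP + N_FN) can be computed from two binary masks as sketched below. Returning 1.0 when both masks are empty is a convention assumed here, not something the text specifies.

```python
import numpy as np


def dice(seg, gt):
    """Dice coefficient between a binary segmentation and a binary ground truth."""
    seg = np.asarray(seg, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.count_nonzero(seg & gt)    # N_TP
    fp = np.count_nonzero(seg & ~gt)   # N_FP
    fn = np.count_nonzero(~seg & gt)   # N_FN
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2.0 * tp / denom
```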

Boundary-based distance:

| (15) |

where d_g^θ and d_o^θ are the distances from the centroid of the expert's curve to the points on the expert's curve Cg and on our method's curve Co, respectively, in the θ-th angular direction, and k is the number of angular samples. Following [23], we set k = 8, with angular directions 0°, 45°, 90°, 135°, 180°, 225°, 270°, and 315°. Ideally, the distance D should be close to zero. Figure 8 illustrates the boundary-based distance: Figure 8(a) shows the centroid of the expert's curve and the eight directions (OR1–OR8), and Figure 8(b) depicts the distance between Cg and Co.
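The following sketch measures the radial distances along the k directions by marching rays outward from the centroid of the expert region, then averages |d_g^θ − d_o^θ|. The exact combination in (15) follows [23]; taking the mean absolute difference, using the centroid of the expert's filled region as the curve centroid, and the 0.25-pixel ray step are assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np


def radial_extent(mask, centre, angle_deg, step=0.25):
    """Distance from centre to the last in-mask sample along one ray."""
    cy, cx = centre
    t = np.deg2rad(angle_deg)
    dy, dx = -np.sin(t), np.cos(t)  # image rows grow downward
    H, W = mask.shape
    r = 0.0
    while True:
        y = int(round(cy + (r + step) * dy))
        x = int(round(cx + (r + step) * dx))
        if not (0 <= y < H and 0 <= x < W) or not mask[y, x]:
            return r
        r += step


def boundary_distance(gt_mask, seg_mask, k=8):
    """Mean |d_g - d_o| over k equally spaced directions (0, 45, ..., 315 deg for k = 8)."""
    ys, xs = np.nonzero(gt_mask)
    centre = (ys.mean(), xs.mean())  # centroid of the expert's region
    angles = [360.0 * j / k for j in range(k)]
    d_g = np.array([radial_extent(gt_mask, centre, a) for a in angles])
    d_o = np.array([radial_extent(seg_mask, centre, a) for a in angles])
    return float(np.mean(np.abs(d_g - d_o)))
```

Ray marching assumes each region is star-shaped around the centroid, which holds for typical optic disc and cup contours but not for arbitrary masks.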

Figure 8.

The description of boundary-based distance measurement. (a) The reference point (expert's curve centroid) and eight directions (OR1-OR8); (b) the detail of the distance between the expert's curve Cg (white) and our method's curve Co (blue).

Accuracy:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (16)$$

where true positive (TP) is the number of glaucoma images correctly identified as glaucoma, false negative (FN) is the number of glaucoma images incorrectly identified as nonglaucoma, false positive (FP) is the number of nonglaucoma images incorrectly identified as glaucoma, and true negative (TN) is the number of nonglaucoma images correctly identified. Positive and negative refer to test retinal images classified as glaucoma and normal, respectively, according to the vertical CDR value. Following existing approaches [3], an image is classified as glaucomatous when its vertical CDR exceeds a threshold and as healthy otherwise; in this paper, we use the standard threshold of 0.5 for glaucoma diagnosis [40].
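A sketch of the screening pipeline described above: the vertical CDR is taken as the ratio of the cup's vertical extent to the disc's vertical extent (measured in rows of the binary masks), an image is labeled glaucomatous when the CDR exceeds 0.5, and accuracy is (TP + TN) / N. The helper names are hypothetical, and the disc mask is assumed non-empty.

```python
import numpy as np


def vertical_extent(mask):
    """Number of rows spanned by a binary mask (0 if empty)."""
    rows = np.nonzero(np.asarray(mask, dtype=bool).any(axis=1))[0]
    return 0 if rows.size == 0 else int(rows[-1] - rows[0] + 1)


def vertical_cdr(disc_mask, cup_mask):
    """Vertical cup-to-disc ratio; assumes the disc mask is non-empty."""
    return vertical_extent(cup_mask) / vertical_extent(disc_mask)


def screening_accuracy(cdrs, is_glaucoma, threshold=0.5):
    """(TP + TN) / N with 'glaucoma' predicted when CDR > threshold."""
    predicted = np.asarray(cdrs) > threshold
    truth = np.asarray(is_glaucoma, dtype=bool)
    tp = np.count_nonzero(predicted & truth)
    tn = np.count_nonzero(~predicted & ~truth)
    return (tp + tn) / truth.size
```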

4.3. Optic Disc Segmentation Results

In this section, five models are compared for optic disc segmentation: gradient vector flow (GVF) [16], the C-V model [24], the LIC model [41], superpixel [3], and LSACM [29]. Figure 9 shows several optic disc segmentation results obtained by the different approaches; the white lines are the expert annotations and the blue lines are the results of each approach. Since the images in the first two rows of Figure 9 contain PPA with high gradient variations, all approaches produce segmentation errors, although our approach, LSACM, and the superpixel approach perform better than the others. For the irregularly shaped optic disc with high gradient variations in the fourth row, the results of the GVF model are sensitive to local gradient minima. Although the C-V model can deal with local gradient variations, it is unsuitable for images with intensity inhomogeneity because it models images with piecewise constant functions. The LIC model, which is based on locally weighted K-means clustering, may fail to discriminate the intensities of an object from its background when intensity inhomogeneity is severe, mainly because it ignores the clustering variance. Although the superpixel-based approach improves segmentation performance by extracting features at the superpixel level, it tends to underestimate large optic discs and overestimate small ones when the model is trained on medium-sized optic discs. LSACM and our approach are both robust to intensity inhomogeneity (e.g., caused by PPA). However, our approach takes the spatial correlation prior into consideration, whereas LSACM does not. Therefore, our approach is more robust than the other approaches compared.

Figure 9.

Optic disc segmentation results. First column: original images; second column: GVF model results [16]; third column: C-V model results [24]; fourth column: LIC model results [41]; fifth column: superpixel [3]; sixth column: LSACM [29]; seventh column: LSACM-SP. White line: ground truth and blue line: detected result by an approach.

To quantify the performance of our approach, we compare it with the other approaches in terms of DI and boundary-based distance, as shown in Table 1. As Table 1 shows, our approach achieves the highest DI and the lowest average boundary distance among all approaches on both databases, indicating its effectiveness.

Table 1.

Optic disc segmentation results on the DRISHTI-GS and RIM-ONE r2 databases.

| Methods | DI (DRISHTI-GS) | Boundary-based distance (DRISHTI-GS) | DI (RIM-ONE r2) | Boundary-based distance (RIM-ONE r2) |
|---|---|---|---|---|
| GVF [16] | 0.867 | 39.239 | 0.735 | 42.768 |
| C-V [24] | 0.885 | 26.578 | 0.734 | 29.648 |
| LIC [41] | 0.910 | 11.900 | 0.779 | 15.764 |
| Superpixel [3] | 0.932 | 10.747 | 0.816 | 13.611 |
| LSACM [29] | 0.931 | 10.012 | 0.808 | 13.155 |
| LSACM-SP | 0.955 | 8.711 | 0.853 | 10.232 |

These comparisons show that our approach performs best. The main reason is that the proposed model takes local image region statistics into consideration, which makes it robust to noise and intensity overlapping (e.g., caused by PPA). In addition, with the structure prior, all nonobject regions outside the optic disc are treated as background (see “R21” in Figure 6(b)), so nonobject segmentation regions such as “R12” in Figure 5(b) are eliminated. Finally, an optic disc with a fuzzy boundary is depicted in the fourth row of Figure 9. Compared with the results of the existing approaches, the optic disc boundary obtained by our approach closely matches the ground truth. The reason is that our approach models the objects as Gaussian distributions with different means and variances, so different objects can be separated from each other. Overall, the proposed optic disc segmentation approach is robust to a large range of variations in retinal images.

To further verify the effectiveness of the proposed LSACM-SP, we use pairwise one-tailed t-tests. The null hypothesis is that LSACM-SP makes no difference compared with an existing optic disc segmentation approach, and the alternative hypothesis is that LSACM-SP yields an improvement. For example, to compare LSACM-SP with C-V (LSACM-SP vs. C-V), the null and alternative hypotheses are defined as H0 : MLSACM‐SP = MC‐V and H1 : MLSACM‐SP > MC‐V, respectively, where MLSACM‐SP and MC‐V are the measurement results obtained by the LSACM-SP and C-V approaches on the different datasets. The significance level is set to 0.05. As Table 2 shows, all p values are well below 0.05, so the null hypothesis is rejected in all pairwise tests. Therefore, the proposed LSACM-SP significantly outperforms the other optic disc segmentation approaches.
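One way to run such a test is sketched below, assuming the comparison is paired per image on DI and using `scipy.stats.ttest_rel` with `alternative="greater"` (available in SciPy 1.6 and later). The pairing and the helper name are assumptions; the text does not state which implementation was used.

```python
from scipy import stats


def one_tailed_paired_ttest(scores_ours, scores_other):
    """H0: mean paired difference is 0; H1: ours > other.
    Each position in the two sequences must refer to the same image."""
    result = stats.ttest_rel(scores_ours, scores_other, alternative="greater")
    return float(result.statistic), float(result.pvalue)
```

A p value below the 0.05 significance level rejects H0 in favor of H1 for that pair of methods.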

Table 2.

The p values of the pairwise one-tailed t-tests of LSACM-SP and other optic disc segmentation approaches on DI.

| Comparison | p value (DRISHTI-GS) | p value (RIM-ONE r2) |
|---|---|---|
| LSACM-SP vs. GVF | 3.43e-05 | 1.37e-06 |
| LSACM-SP vs. C-V | 3.44e-05 | 2.80e-05 |
| LSACM-SP vs. LIC | 1.35e-04 | 5.35e-05 |
| LSACM-SP vs. superpixel | 8.90e-04 | 6.32e-04 |
| LSACM-SP vs. LSACM | 4.34e-03 | 8.73e-04 |
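The testing procedure described above can be sketched as follows. We assume the "pairwise" test is a paired test over per-image scores (for example, the DI values of two methods on the same images), which the text does not state explicitly. To keep the sketch dependency-free, the Student t tail probability is computed through the regularized incomplete beta function using the standard continued-fraction expansion; all function names are hypothetical.

```python
import math
from statistics import fmean, stdev


def _betacf(a, b, x):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    max_iter, eps, tiny = 300, 3e-14, 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_bt = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
              + a * math.log(x) + b * math.log1p(-x))
    bt = math.exp(log_bt)
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def paired_one_tailed_t_test(ours, theirs):
    """Test H0: mean(ours - theirs) = 0 against H1: mean(ours - theirs) > 0.

    Returns (t statistic, one-tailed p value) with n - 1 degrees of freedom.
    """
    if len(ours) != len(theirs) or len(ours) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(ours, theirs)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0.0:
        raise ValueError("all differences are identical; t is undefined")
    t = fmean(diffs) / (sd / math.sqrt(n))
    df = n - 1
    # Upper tail of Student's t: P(T > |t|) = 0.5 * I_{df/(df+t^2)}(df/2, 1/2).
    tail = 0.5 * _betai(df / 2.0, 0.5, df / (df + t * t))
    p = tail if t >= 0 else 1.0 - tail
    return t, p
```

With SciPy 1.6 or later, `scipy.stats.ttest_rel(ours, theirs, alternative="greater")` performs the same one-tailed paired test and can be used to cross-check this sketch.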

4.4. Optic Cup Segmentation Results

For optic cup segmentation, we compare our approach with two approaches, thresholding [24] and clustering [42]. The corresponding results are shown in Figure 10.

Figure 10.

Optic cup segmentation results. (a) Original color image, (b) thresholding [24], (c) SWFCM clustering [42], and (d) LSACM-SP. Black line: ground truth; green line: boundary detected by each approach.

As Figure 10 shows, the optic cup boundary detected by our approach deviates only slightly from the ground truth on both the nasal and temporal sides. In contrast, the segmentation accuracy of the other approaches is significantly affected, especially by the dense blood vessels on the nasal side. In particular, our approach is consistently superior to the nonjoint approaches [24, 42] because it makes full use of the optic disc boundary as a prior for optic cup boundary extraction. Compared with the existing approaches, our approach has the following advantages: (1) it addresses intensity inhomogeneity, a frequently occurring phenomenon within the optic cup, and thereby enhances the discrimination between optic cup and nonoptic cup regions; (2) the proposed structure prior confines the optic cup contour evolution to an effective region, which reduces the negative effect of nonobjects and yields robust and reliable segmentation results; and (3) it requires no training process or shape constraint, which makes it robust and effective in capturing a wide range of intensity and shape variations. More detailed quantitative assessment results for optic cup segmentation, using DI and boundary-based distance criteria, are shown in Table 3. As seen from Table 3, the proposed approach outperforms the other approaches in terms of both measures.
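One simple way to encode the containment prior (the optic cup lies inside the optic disc) when each structure has its own level set is a pointwise minimum that keeps the cup level set below the disc level set. This is a hedged illustration of the idea only: the paper's structure prior is built from hierarchical image segmentation and an attraction term, which this sketch does not reproduce, and the helper names and the "positive means inside" convention are assumptions.

```python
def constrain_cup_to_disc(phi_disc, phi_cup):
    """Return a cup level set whose positive region lies inside the disc's.

    Assumed convention: a pixel belongs to a region when its level set is > 0.
    Taking the pointwise minimum keeps phi_cup <= phi_disc everywhere, so any
    pixel outside the disc (phi_disc <= 0) is forced outside the cup as well.
    """
    return [[min(c, d) for c, d in zip(row_cup, row_disc)]
            for row_cup, row_disc in zip(phi_cup, phi_disc)]


def inside(phi):
    """Set of (row, col) pixels where the level set is positive."""
    return {(i, j) for i, row in enumerate(phi)
            for j, v in enumerate(row) if v > 0}


# Hypothetical 5x5 example: the cup estimate leaks outside the disc on the left.
phi_disc = [[1.0 if 1 <= i <= 3 and 1 <= j <= 3 else -1.0 for j in range(5)]
            for i in range(5)]
phi_cup = [[0.5 if j <= 2 else -0.5 for j in range(5)] for i in range(5)]
phi_cup = constrain_cup_to_disc(phi_disc, phi_cup)
print(inside(phi_cup) <= inside(phi_disc))  # True
```

Applying such a projection after every update step removes any cup region outside the disc, which mirrors the effect described for nonobject regions, although by a hard constraint rather than an energy term.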

Table 3.

Optic cup segmentation results on the DRISHTI-GS and RIM-ONE r2 databases.
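For reference, the two kinds of measure can be sketched on binary masks stored as sets of (row, col) pixels. We assume DI denotes the Dice index 2|A ∩ B|/(|A| + |B|). Because the exact boundary criterion is not given in this section, the sketch uses the symmetric mean nearest-neighbour distance between boundary pixels (mask pixels with at least one 4-neighbour outside the mask) as one plausible boundary-based distance; all function names are hypothetical.

```python
import math


def dice_index(pred, gt):
    """Dice index 2|A & B| / (|A| + |B|) for two sets of (row, col) pixels."""
    if not pred and not gt:
        return 1.0
    return 2.0 * len(pred & gt) / (len(pred) + len(gt))


def boundary(mask):
    """Mask pixels that have at least one 4-neighbour outside the mask."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return {(r, c) for (r, c) in mask
            if any((r + dr, c + dc) not in mask for dr, dc in steps)}


def mean_boundary_distance(pred, gt):
    """Symmetric mean distance (in pixels) between the two mask boundaries."""
    bp, bg = boundary(pred), boundary(gt)
    if not bp or not bg:
        raise ValueError("both masks must be non-empty")

    def one_way(src, dst):
        # Average over src of the Euclidean distance to the nearest dst pixel.
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return 0.5 * (one_way(bp, bg) + one_way(bg, bp))
```

The nearest-pixel search is brute force, which is fine for small illustrative masks; at full image resolution a distance transform would be the usual choice.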

Furthermore, pairwise one-tailed t-tests [43] are used to verify the effectiveness of our approach for optic cup segmentation. The results are listed in Table 4. As Table 4 shows, all p values are much less than 0.05, indicating that the null hypothesis is rejected in every pairwise test. Overall, the proposed approach yields a significant improvement in optic cup segmentation.

Table 4.

p values of the pairwise one-tailed t-tests between LSACM-SP and other optic cup segmentation approaches in terms of DI.

| Comparison | p value (DRISHTI-GS) | p value (RIM-ONE r2) |
|---|---|---|
| LSACM-SP vs. thresholding | 8.12e-04 | 1.26e-05 |
| LSACM-SP vs. SWFCM clustering | 1.04e-03 | 2.21e-03 |

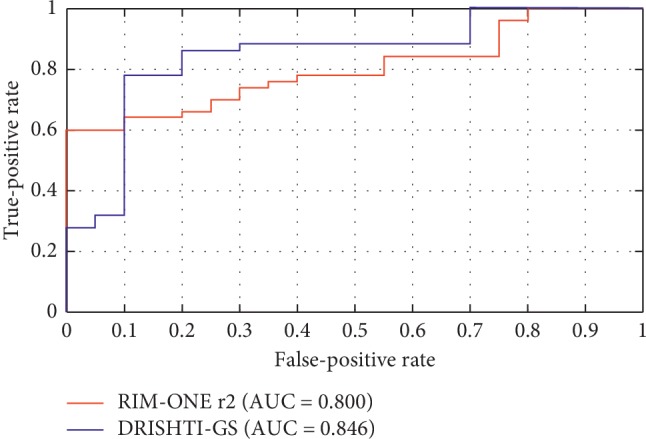

4.5. Glaucoma Assessment

In this section, we evaluate glaucoma detection based on our approach. Since the vertical cup-to-disc ratio (CDR) is one of the most important indicators for glaucoma detection, we use the segmented optic disc and optic cup to calculate the vertical CDR. The normalized CDR value of the ith image is calculated by

$$\bar{Q}_i = \frac{Q_i - Q_{\min}}{Q_{\max} - Q_{\min}}, \qquad (17)$$

where $Q_i$ is the vertical CDR of the ith image, and $Q_{\max}$ and $Q_{\min}$ are the maximum and minimum vertical CDR values. The area under the ROC curve (AUC) is used to evaluate glaucoma detection performance, as shown in Figure 11.
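A minimal sketch of the vertical CDR and its normalization follows. It assumes binary masks stored as sets of (row, col) pixels, takes the vertical diameter of each structure as the row extent of its mask, and applies min-max normalization over the image set using the maximum and minimum vertical CDR values described above. The function names are hypothetical.

```python
def vertical_diameter(mask):
    """Vertical extent (in pixels) of a non-empty set of (row, col) pixels."""
    rows = [r for r, _ in mask]
    return max(rows) - min(rows) + 1


def vertical_cdr(disc, cup):
    """Vertical cup-to-disc ratio: cup height divided by disc height."""
    return vertical_diameter(cup) / vertical_diameter(disc)


def normalize_cdr(cdrs):
    """Min-max normalize a list of vertical CDR values to [0, 1]."""
    q_min, q_max = min(cdrs), max(cdrs)
    if q_max == q_min:
        raise ValueError("all CDR values are equal; normalization is undefined")
    return [(q - q_min) / (q_max - q_min) for q in cdrs]
```

Because min-max scaling is monotonic, it does not change the ranking of images and therefore leaves the AUC unchanged; it mainly puts the scores on a common [0, 1] scale.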

Figure 11.

The ROC curves of LSACM-SP in glaucoma detection on the DRISHTI-GS and RIM-ONE r2 databases.
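The AUC summarized by such ROC curves can be computed directly from per-image scores (for example, the normalized CDR) and glaucoma labels. The sketch below uses the Mann-Whitney formulation, AUC = P(score of a glaucomatous eye > score of a normal eye), with ties counted as one half; it is an illustrative assumption, not necessarily how the reported curves were produced, and the function name is hypothetical.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparisons (Mann-Whitney U).

    labels: 1 for glaucoma, 0 for normal; higher scores should mean glaucoma.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(pos) * len(neg))
```

The double loop is quadratic in the number of images, which is acceptable at the scale of a single fundus database; sorting-based rank formulas give the same value in O(n log n).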

4.6. Comparison with the State-of-the-Art Approaches

After extracting the optic disc and optic cup boundaries, we use accuracy, a common measure, for glaucoma assessment. In this section, several state-of-the-art glaucoma diagnosis approaches are used to verify the effectiveness of the proposed approach: superpixel segmentation [3], wavelet features [44], multifeature fusion [45], deep learning [46], SDC [47], and AWLCSC [48]. Table 5 shows the classification results obtained by the different algorithms on the DRISHTI-GS and RIM-ONE r2 databases. The comparison shows that the proposed LSACM-SP achieves promising performance.

Table 5.

Comparison of the proposed approach against the state-of-the-art approaches on the DRISHTI-GS and RIM-ONE r2 databases.

We also apply pairwise one-tailed t-tests on accuracy to further verify the effectiveness of the proposed approach. The results are shown in Table 6. All p values are less than 0.05, which indicates that our approach significantly outperforms the other glaucoma diagnosis approaches at the 0.05 significance level.

Table 6.

p values of the pairwise one-tailed t-tests between LSACM-SP and other glaucoma diagnosis approaches in terms of accuracy.

| Comparison | p value (DRISHTI-GS) | p value (RIM-ONE r2) |
|---|---|---|
| LSACM-SP vs. superpixel | 9.34e-04 | 5.56e-04 |
| LSACM-SP vs. wavelet features | 5.98e-05 | 2.92e-05 |
| LSACM-SP vs. multifeature fusion | 0.0097 | 0.0014 |
| LSACM-SP vs. deep learning | 0.0110 | 0.0019 |
| LSACM-SP vs. SDC | 0.0183 | 0.0272 |
| LSACM-SP vs. AWLCSC | 0.0421 | 0.0303 |

5. Conclusions

Since glaucomatous damage is irreversible, automated glaucoma assessment is of interest for early detection and treatment. This paper presents a novel model for simultaneously segmenting the optic disc and optic cup for glaucoma diagnosis. First, LSACM is introduced to overcome the influence of intensity inhomogeneity. Then, the proposed approach is formed by combining it with a structure prior consisting of the hierarchical image segmentation and the attraction term. The model is then updated iteratively, continually adjusting both the optic disc and optic cup boundaries to approximate the true object boundaries. The proposed approach is tested and evaluated on two publicly available databases, DRISHTI-GS and RIM-ONE r2. The experimental results show that the proposed approach outperforms the state-of-the-art approaches. Although the proposed approach achieves good segmentation performance, it may fail on some normal fundus images with extremely small optic cups. In future work, we will introduce priors on the location and shape of the optic disc and optic cup to overcome this issue.

Acknowledgments

This study was supported by the National Natural Science Foundation of China (no. 61602221), the Project of Doctoral Foundation of Shenyang Aerospace University (no. 19YB01), the Scientific Research Fund Project of Liaoning Provincial Department of Education (no. JYT19040), the Natural Science Foundation of Liaoning Province Science and Technology Department (no. 2019-ZD-0234), the Science and Technology Research Project of Jiangxi Provincial Department of Education (no. GJJ160333), and the Natural Science Foundation of Jiangxi Province (no. 20171BAB212009).

Appendix

Minimizing the energy functional $E_{\text{LSACM-SP}}$ with respect to $c_1$, $c_2$, $c_3$, $c_4$, $B_{\text{Disc}}(x)$, $B_{\text{Cup}}(x)$, $\sigma_1$, $\sigma_2$, $\sigma_3$, and $\sigma_4$ yields the minimizer for each variable, as follows.

(1) Energy minimization with respect to $\{c_i, \sigma_i\}$, $B_{\text{Disc}}(x)$, and $B_{\text{Cup}}(x)$: the functionals of $E_{\text{LSACM-SP}}$ for these variables are given as

(A.1)

Each functional in (A.1) has an explicit minimizer for each variable:

(A.2)–(A.11)

where ⊗ denotes the convolution operator.

(2) Energy minimization with respect to $\phi$ and $\varphi$: minimizing $E_{\text{LSACM-SP}}$ with respect to $\phi$ and $\varphi$ can be carried out with the standard gradient descent method. After a series of calculations, the solution is obtained as follows:

(A.12)–(A.13)

where $\nabla$ denotes the gradient operator, $\mathrm{div}(\cdot)$ is the divergence operator, and $\delta(\phi)$ is the Dirac functional [29].

In the iterative update, $\phi^{l}$ and $\varphi^{l}$ are the two level set functions obtained at the $l$-th iteration, $\nabla^{2}$ is the Laplacian operator, and $\Delta t$ denotes the time step.
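Since equations (A.12) and (A.13) are not reproduced here, the following sketch only illustrates the explicit time-stepping scheme $\phi^{l+1} = \phi^{l} + \Delta t \, F(\phi^{l})$ on a single level set. It substitutes the Chan–Vese region force [22] for the LSACM-SP force terms, uses the arctangent-smoothed Heaviside and Dirac functions common in that line of work, and adds an optional $\mu \nabla^2 \phi$ smoothing term only as a stand-in for the Laplacian mentioned above. All names and parameter values are hypothetical.

```python
import math


def heaviside(z, eps=1.0):
    """Smoothed Heaviside H_eps(z) = 0.5 * (1 + (2/pi) * atan(z / eps))."""
    return 0.5 * (1.0 + (2.0 / math.pi) * math.atan(z / eps))


def dirac(z, eps=1.0):
    """Smoothed Dirac delta: the derivative of heaviside() with respect to z."""
    return (eps / math.pi) / (eps * eps + z * z)


def laplacian(phi, i, j):
    """5-point Laplacian with replicated borders (zero-flux boundary)."""
    h, w = len(phi), len(phi[0])
    return (phi[max(i - 1, 0)][j] + phi[min(i + 1, h - 1)][j]
            + phi[i][max(j - 1, 0)] + phi[i][min(j + 1, w - 1)]
            - 4.0 * phi[i][j])


def region_means(img, phi, eps):
    """Chan-Vese means c1 (inside, phi > 0) and c2 (outside), H-weighted."""
    num_in = den_in = num_out = den_out = 0.0
    for img_row, phi_row in zip(img, phi):
        for x, p in zip(img_row, phi_row):
            hv = heaviside(p, eps)
            num_in += x * hv
            den_in += hv
            num_out += x * (1.0 - hv)
            den_out += 1.0 - hv
    return num_in / den_in, num_out / den_out


def evolve(img, phi, steps=100, dt=10.0, mu=0.0, eps=1.0):
    """Explicit gradient-descent updates phi <- phi + dt * F(phi)."""
    h, w = len(phi), len(phi[0])
    phi = [row[:] for row in phi]  # do not modify the caller's array
    for _ in range(steps):
        c1, c2 = region_means(img, phi, eps)
        new_phi = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                x, p = img[i][j], phi[i][j]
                # Region force: phi grows where x is closer to c1 than to c2.
                force = dirac(p, eps) * ((x - c2) ** 2 - (x - c1) ** 2)
                new_phi[i][j] = p + dt * (force + mu * laplacian(phi, i, j))
        phi = new_phi
    return phi
```

When `mu > 0`, the explicit scheme on a unit grid is only stable for `dt * mu <= 0.25`, which is one reason implicit or semi-implicit updates are often preferred in practice.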

Contributor Information

Yugen Yi, Email: yiyg510@jxnu.edu.cn.

Yuan Gao, Email: gaoyuan@stumail.neu.edu.cn.

Data Availability

All databases used in this study are publicly available, and the data supporting the findings of this study are described in the following articles: the DRISHTI-GS database in Sivaswamy et al. [38] and the RIM-ONE r2 database in Fumero et al. [39].

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. Gao Y., Yu X., Wu C., Zhou W., Wang X., Chu H. Accurate and efficient segmentation of optic disc and optic cup in retinal images integrating multi-view information. IEEE Access. 2019;7(1):148183–148197. doi: 10.1109/access.2019.2946374.

2. Quigley H. A., Broman A. T. The number of people with glaucoma worldwide in 2010 and 2020. British Journal of Ophthalmology. 2006;90(3):262–267. doi: 10.1136/bjo.2005.081224.

3. Cheng J., Liu J., Xu Y., et al. Superpixel classification based optic disc and optic cup segmentation for glaucoma screening. IEEE Transactions on Medical Imaging. 2013;32(6):1019–1032. doi: 10.1109/tmi.2013.2247770.

4. de Sousa J. A., de Paiva A. C., Sousa de Almeida J. D., Silva A. C., Junior G. B., Gattass M. Texture based on geostatistic for glaucoma diagnosis from fundus eye image. Multimedia Tools & Applications. 2017;76(18):19173–19190. doi: 10.1007/s11042-017-4608-y.

5. Araújo J. D. L., Souza J. C., Silva Neto O. P., et al. Glaucoma diagnosis in fundus eye images using diversity indexes. Multimedia Tools & Applications. 2019;78(10):12987–13004. doi: 10.1007/s11042-018-6429-z.

6. Harizman N., Oliveira C., Chiang A., Tello C., et al. The ISNT rule and differentiation of normal from glaucomatous eyes. Archives of Ophthalmology. 2006;124(11):1579–1583. doi: 10.1001/archopht.124.11.1579.

7. Haleem M. S., Han L., Hemert J. V., et al. A novel adaptive deformable model for automated optic disc and cup segmentation to aid glaucoma diagnosis. Journal of Medical Systems. 2018;42(1):p. 20. doi: 10.1007/s10916-017-0859-4.

8. Thakur N., Juneja M. Survey on segmentation and classification approaches of optic cup and optic disc for diagnosis of glaucoma. Biomedical Signal Processing and Control. 2018;42:162–189. doi: 10.1016/j.bspc.2018.01.014.

9. Walter T., Klein J. C. Segmentation of color fundus images of the human retina: detection of the optic disc and the vascular tree using morphological techniques. Proceedings of the Second International Symposium on Medical Data Analysis; October 2001; Madrid, Spain. Springer; pp. 282–287.

10. Vishnuvarthanan A., Rajasekaran M. P., Govindaraj V., Zhang Y., Thiyagarajan A. An automated hybrid approach using clustering and nature inspired optimization technique for improved tumor and tissue segmentation in magnetic resonance brain images. Applied Soft Computing. 2017;57:399–426. doi: 10.1016/j.asoc.2017.04.023.

11. Wang S., Li Y., Shao Y., Cattani C., Zhang Y., Du S. Detection of dendritic spines using wavelet packet entropy and fuzzy support vector machine. CNS & Neurological Disorders - Drug Targets. 2017;16(2):116–121. doi: 10.2174/1871527315666161111123638.

12. Zhu X., Rangayyan R. M. Detection of the optic disc in images of the retina using the Hough transform. Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society; August 2008; Vancouver, BC, Canada. pp. 3546–3549.

13. Aquino A., Gegúndez-Arias M. E., Marín D. Detecting the optic disc boundary in digital fundus images using morphological, edge detection, and feature extraction techniques. IEEE Transactions on Medical Imaging. 2010;29(11):1860–1869. doi: 10.1109/tmi.2010.2053042.

14. Lalonde M., Beaulieu M., Gagnon L. Fast and robust optic disc detection using pyramidal decomposition and Hausdorff-based template matching. IEEE Transactions on Medical Imaging. 2001;20(11):1193–1200. doi: 10.1109/42.963823.

15. Cheng J., Liu J., Wong D. W. K., et al. Automatic optic disc segmentation with peripapillary atrophy elimination. Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society; September 2011; Boston, MA, USA. pp. 6224–6227.

16. Osareh A., Mirmehdi M., Thomas B., et al. Colour morphology and snakes for optic disc localization. Proceedings of the 6th Medical Image Understanding and Analysis Conference; July 2002; Portsmouth, UK. pp. 21–24.

17. Lowell J., Hunter A., Steel D., et al. Optic nerve head segmentation. IEEE Transactions on Medical Imaging. 2004;23(2):256–264. doi: 10.1109/tmi.2003.823261.

18. Yin F., Liu J., Wong D. W. K., et al. Automated segmentation of optic disc and optic cup in fundus images for glaucoma diagnosis. Proceedings of the International Symposium on Computer-Based Medical Systems; June 2012; Rome, Italy. pp. 1–6.

19. Gao Y., Yu X., Wu C., Zhou W., Lei X., Zhuang Y. Automatic optic disc segmentation based on modified local image fitting model with shape prior information. Journal of Healthcare Engineering. 2019;2019:10. Article ID 2745183. doi: 10.1155/2019/2745183.

20. Yin F., Liu J., Ong S. H., et al. Model-based optic nerve head segmentation on retinal fundus images. Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society; September 2011; Boston, MA, USA. pp. 2626–2629.

21. Tang L., Garvin M. K., Kwon Y. H., et al. Segmentation of optic nerve head rim in color fundus photographs by probability based active shape model. Investigative Ophthalmology & Visual Science. 2012;53(14):p. 2144. doi: 10.1167/iovs.12-9803.

22. Chan T., Vese L. Active contours without edges. IEEE Transactions on Image Processing. 2001;10(2):266–277. doi: 10.1109/83.902291.

23. Septiarini A., Harjoko A., Pulungan R., Ekantini R. Optic disc and cup segmentation by automatic thresholding with morphological operation for glaucoma evaluation. Signal, Image and Video Processing. 2017;11(5):945–952. doi: 10.1007/s11760-016-1043-x.

24. Joshi G. D., Sivaswamy J., Karan K., et al. Optic disk and cup boundary detection using regional information. Proceedings of the IEEE International Symposium on Biomedical Imaging (ISBI); April 2010; Rotterdam, Netherlands. pp. 948–951.

25. Lowell J., Hunter A., Steel D., et al. Optic nerve head segmentation. IEEE Transactions on Medical Imaging. 2004;23(2):256–264. doi: 10.1109/tmi.2003.823261.

26. Xu J., Chutatape O., Sung E., Zheng C., Chew Tec Kuan P. Optic disk feature extraction via modified deformable model technique for glaucoma analysis. Pattern Recognition. 2007;40(7):2063–2076. doi: 10.1016/j.patcog.2006.10.015.

27. Tang Y., Li X., von Freyberg A., et al. Automatic segmentation of the papilla in a fundus image based on the C-V model and a shape restraint. Proceedings of the 18th International Conference on Pattern Recognition (ICPR'06); August 2006; Hong Kong, China. pp. 183–186.

28. Mumford D. K., Shah J. Optimal approximations by piecewise smooth functions and associated variational problems. Communications on Pure & Applied Mathematics. 1989;42(5):577–685. doi: 10.1002/cpa.3160420503.

29. Zhang K., Zhang L., Lam K.-M., Zhang D. A level set approach to image segmentation with intensity inhomogeneity. IEEE Transactions on Cybernetics. 2016;46(2):546–557. doi: 10.1109/tcyb.2015.2409119.

30. Sevastopolsky A. Optic disc and cup segmentation methods for glaucoma detection with modification of U-Net convolutional neural network. Pattern Recognition and Image Analysis. 2017;27(3):618–624. doi: 10.1134/s1054661817030269.

31. Fu H., Cheng J., Xu Y., Wong D. W. K., Liu J., Cao X. Joint optic disc and cup segmentation based on multi-label deep network and polar transformation. IEEE Transactions on Medical Imaging. 2018;37(7):1597–1605. doi: 10.1109/tmi.2018.2791488.

32. Yu S., Xiao D., Frost S., Kanagasingam Y. Robust optic disc and cup segmentation with deep learning for glaucoma detection. Computerized Medical Imaging and Graphics. 2019;74:61–71. doi: 10.1016/j.compmedimag.2019.02.005.

33. Yi Y., Wang J., Zhou W., Zheng C., Kong J., Qiao S. Non-negative matrix factorization with locality constrained adaptive graph. IEEE Transactions on Circuits and Systems for Video Technology. 2019. doi: 10.1109/TCSVT.2019.2892971.

34. Yi Y., Wang J., Zhou W., Fang Y., Kong J., Lu Y. Joint graph optimization and projection learning for dimensionality reduction. Pattern Recognition. 2019;92:258–273. doi: 10.1016/j.patcog.2019.03.024.

35. Azzopardi G., Strisciuglio N., Vento M., Petkov N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Medical Image Analysis. 2015;19(1):46–57. doi: 10.1016/j.media.2014.08.002.

36. Telea A. An image inpainting technique based on the fast marching method. Journal of Graphics Tools. 2004;9(1):23–34. doi: 10.1080/10867651.2004.10487596.

37. Brox T. From pixels to regions: partial differential equations in image analysis. Ph.D. Dissertation. Saarbrücken, Germany: Department of Computer Science, Saarland University; 2005.

38. Sivaswamy J., Krishnadas S. R., Joshi G. D., et al. Drishti-GS: retinal image dataset for optic nerve head (ONH) segmentation. Proceedings of the IEEE International Symposium on Biomedical Imaging; May 2014; Beijing, China. pp. 53–56.

39. Fumero F., Alayon S., Sanchez J. L., Sigut J., Gonzalez-Hernandez M. RIM-ONE: an open retinal image database for optic nerve evaluation. Proceedings of the 24th International Symposium on Computer-Based Medical Systems (CBMS); June 2011; Bristol, UK. IEEE; pp. 1–6.

40. Ayub J., Ahmad J., Muhammad J., et al. Glaucoma detection through optic disc and cup segmentation using K-mean clustering. Proceedings of the 2016 International Conference on Computing, Electronic and Electrical Engineering (ICE Cube); April 2016; Quetta, Pakistan.

41. Zhou W., Wu C., Gao Y., Yu X. Automatic optic disc boundary extraction based on saliency object detection and modified local intensity clustering model in retinal images. IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences. 2017;E100.A(9):2069–2072. doi: 10.1587/transfun.e100.a.2069.

42. Mittapalli P. S., Kande G. B. Segmentation of optic disk and optic cup from digital fundus images for the assessment of glaucoma. Biomedical Signal Processing and Control. 2016;24(47):34–46. doi: 10.1016/j.bspc.2015.09.003.

43. Zhou W., Cheng D., Wang J., et al. Double regularized matrix factorization for image classification and clustering. EURASIP Journal on Image and Video Processing. 2018;2018(1):p. 49. doi: 10.1186/s13640-018-0287-5.

44. Dua S., Acharya U. R., Chowriappa P., Sree S. V. Wavelet-based energy features for glaucomatous image classification. IEEE Transactions on Information Technology in Biomedicine. 2012;16(1):80–87. doi: 10.1109/titb.2011.2176540.

45. Chakravarty A., Sivaswamy J. Glaucoma classification with a fusion of segmentation and image-based features. Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI); April 2016; Arlington, VA, USA. IEEE; pp. 689–692.

46. Yu S., Xiao D., Frost S., Kanagasingam Y. Robust optic disc and cup segmentation with deep learning for glaucoma detection. Computerized Medical Imaging and Graphics. 2019;74:61–71. doi: 10.1016/j.compmedimag.2019.02.005.

47. Cheng J., Yin F., Wong D. W. K., Tao D., Liu J. Sparse dissimilarity-constrained coding for glaucoma screening. IEEE Transactions on Biomedical Engineering. 2015;62(5):1395–1403. doi: 10.1109/tbme.2015.2389234.

48. Zhou W., Yi Y., Bao J., Wang W. Adaptive weighted locality-constrained sparse coding for glaucoma diagnosis. Medical & Biological Engineering & Computing. 2019;57(9):2055–2067. doi: 10.1007/s11517-019-02011-z.
