Abstract

We examined the responses of neurons in posterior parietal area 7a to passive rotational and translational self-motion stimuli, while systematically varying the speed of visually simulated (optic flow cues) or actual (vestibular cues) self-motion. Contrary to a general belief that responses in area 7a are predominantly visual, we found evidence for a vestibular dominance in self-motion processing. Only a small fraction of neurons showed multisensory convergence of visual/vestibular and linear/angular self-motion cues. These findings suggest possibly independent neuronal population codes for visual versus vestibular and linear versus angular self-motion. Neural responses scaled with self-motion magnitude (i.e., speed) but temporal dynamics were diverse across the population. Analyses of laminar recordings showed a strong distance-dependent decrease for correlations in stimulus-induced (signal correlation) and stimulus-independent (noise correlation) components of spike-count variability, supporting the notion that neurons are spatially clustered with respect to their sensory representation of motion. Single-unit and multiunit response patterns were also correlated, but no other systematic dependencies on cortical layers or columns were observed. These findings describe a likely independent multimodal neural code for linear and angular self-motion in a posterior parietal area of the macaque brain that is connected to the hippocampal formation.

Keywords: angular rotation, electrophysiology, forward translation, multisensory representation, speed tuning

Introduction

Efficient navigation demands the use of static, external sensory cues as well as dynamic cues generated by self-motion. When landmarks are unavailable, humans and animals are capable of using self-motion signals to navigate by path integration (see Etienne and Jeffery 2004 for review). A candidate cortical area that might convey self-motion information to navigation circuits is the posterior parietal cortex (PPC). PPC plays a major role in directing spatial behavior (Cohen and Andersen 2002; Save and Poucet 2008; Nitz 2009), and rats with PPC lesions exhibit impaired spatial navigation (Kolb et al. 1994; Save and Poucet 2000) and inaccurate path integration (Save et al. 2001; Parron and Save 2004). Furthermore, a growing body of evidence suggests that a large fraction of neurons in the rat PPC is modulated by the animals’ locomotor state (McNaughton et al. 1994; Nitz 2006) including movement speed (Whitlock et al. 2012; Wilber et al. 2017). In contrast, the tuning of PPC neurons to allocentric variables (e.g., route information or landmarks) is weak at best (Chen, Lin, Barnes, et al. 1994; Chen, Lin, Green, et al. 1994).

The above findings are yet to be fully corroborated in primates. The macaque PPC has a more complex parcellation and anatomical connectivity than the rodent PPC (Kaas et al. 2013). Although neuronal responses to multisensory self-motion stimuli in parietal areas such as the medial superior temporal (MSTd) and ventral intraparietal (VIP) areas are well documented (Britten and van Wezel 1998, 2002; Bremmer, Duhamel, et al. 2002; Bremmer, Klam, et al. 2002; Chen et al. 2011; Gu et al. 2006; Maciokas and Britten 2010; Zhang and Britten 2010), these are not the areas with the strongest connectivity to navigation circuits. Anatomical studies suggest that the posterior aspect of the inferior parietal lobule, between the intraparietal and superior temporal sulci (area 7a), constitutes the main region of the PPC with both direct and indirect connections to the hippocampal formation (Pandya and Seltzer 1982; Vogt and Pandya 1987; Cavada and Goldman-Rakic 1989; Andersen et al. 1990; Morris et al. 1999; Rockland and Van Hoesen 1999; Ding et al. 2000; Kobayashi and Amaral 2000, 2003; Kondo et al. 2005). The indirect pathway via the retrosplenial cortex has been proposed to play a critical role in communicating navigationally relevant information (Burgess et al. 1999; Kravitz et al. 2011).

Several studies appear to support this view. First, lesions to area 7a in cynomolgus monkeys impaired navigation of whole-body translation through a maze (Traverse and Latto 1986; Barrow and Latto 1996). Second, motion-sensitive receptive fields of area 7a neurons often exhibit a radial arrangement of preferred directions within their receptive field (Motter and Mountcastle 1981; Steinmetz et al. 1987), such that they are maximally activated by the expanding or contracting patterns of optic flow that are typically experienced when the observer moves forward or backward through the world (Siegel and Read 1997; Merchant et al. 2001, 2004; Raffi and Siegel 2007). Finally, the response gain of these neurons is also modulated by eye/head position (“gain fields”) (Andersen et al. 1985), suggesting that they may play a role in transforming sensory inputs into a navigationally useful format such as a body-centered representation (Zipser and Andersen 1988; Pouget and Sejnowski 1997). Thus, both anatomical and physiological evidence suggests that area 7a plays a role in bridging motion perception and navigation. Yet, while responsiveness to optic flow has been described (Read and Siegel 1997; Phinney and Siegel 2000; Raffi and Siegel 2007), responses of 7a neurons to vestibular stimulation have never been directly tested. Here, we recorded neuronal spikes and local-field potentials (LFPs) in area 7a during 2 stimulus paradigms: forward linear translation that is either visually simulated by optic flow or delivered by a motion platform that provides vestibular cues, and rotation about the yaw axis that is either visually simulated or delivered by platform movement.

Methods

Animal Preparation

We recorded extracellularly using linear array electrodes from area 7a of 3 adult male rhesus macaques (Macaca mulatta; 8.5–10 kg). We collected data from 9 recording sessions from the left hemisphere and 10 from the right hemisphere of monkey O, 5 sessions from the right hemisphere of monkey J, and 20 sessions from the right hemisphere of monkey Q. All animals were chronically implanted with a lightweight polyacetal ring for head restraint, a removable grid to guide electrode penetrations, and scleral coils for monitoring eye movements (CNC Engineering, Seattle WA, USA). Monkeys were trained using standard operant conditioning procedures to maintain fixation on a visual target within a 2° square window for a period up to 3 s. All surgeries and procedures were approved by the Institutional Animal Care and Use Committee at Baylor College of Medicine and were in accordance with National Institutes of Health guidelines.

Experimental Setup

Monkeys were seated, head-fixed, and secured in a primate chair that was mounted on top of a rotary motor (Kollmorgen, Radford, VA, USA). The chair and motor were both mounted on a motion platform (Moog 6DOF2000E, East Aurora, NY, USA). The platform and the rotary motor were used to generate motion during the vestibular condition, for the linear translation and angular rotation protocols, respectively (see next section for a description of these protocols). Specifically, the motion platform had 6 degrees of freedom, including 3 axes of translation (fore-aft, left-right, up-down), but only forward motion was used in the translation protocol. While the motion platform can also execute rotational movements around the 3 axes, its range of rotational motion is rather limited. Thus, the rotary motor mounted on top of the platform was used to deliver clockwise (CW) and counter-clockwise (CCW) yaw rotations in the rotation protocol.

A 3-chip DLP projector (Christie Digital Mirage 2000, Cypress, CA, USA) was mounted on top of the motion platform and rear-projected images onto a 60 × 60 cm² tangent screen that was attached to the platform in front of the chair, ~30 cm from the monkey (Fig. 1A). The projector rendered stereoscopic images generated by an OpenGL accelerator board (Nvidia Quadro FX 3000 G) at 60 Hz and was used to deliver optic flow (visual) stimuli for all protocols. During the combined visual–vestibular condition, the visual display was updated synchronously with the dynamic trajectory of the motion platform (for the translation protocol) or the rotary motor (for the rotation protocol). Custom scripts were written for behavioral control and data acquisition with TEMPO software (Reflective Computing, St. Louis, MO, USA).

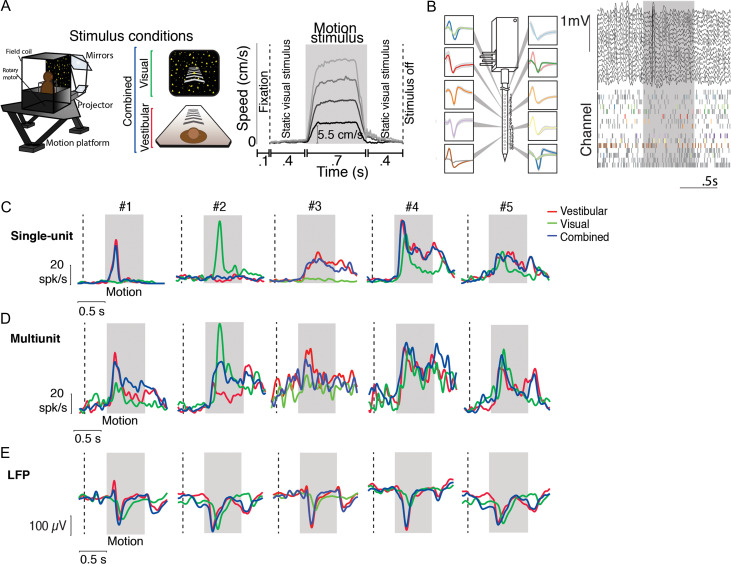

Figure 1.

Example responses to linear translation. (A) Left—stimulus conditions: Monkeys were seated on a motion platform in front of a projector screen, and experienced simulated forward translation via optic flow (visual, green), real forward translation delivered using the platform (vestibular, red) or a congruent combination of the 2 (combined, blue) while fixating on a central target. Right—trial structure: Time course of translation velocity reconstructed from accelerometer recordings during 4 representative trials under the vestibular condition. The desired temporal profile of velocity was trapezoidal for both vestibular and visual motion, and 1 of 4 possible movement speeds (5.5, 11, 16.5, 22 cm/s) was presented on each trial. During trials with visual motion, the motion period was flanked by the presentation of a static cloud of dots for 400 ms. No visual stimulus, except for the fixation target, was present during these periods under the vestibular condition. (B) Left: Recordings were carried out using a linear electrode array (U-Probe) with 16 contact sites (waveforms illustrate recorded spikes from an example session; shaded regions show ± 1 standard deviation). Right: Local field potentials (LFP, top) and spikes (bottom) from multiunits (gray) and isolated single-units (colored) during one trial. (C) Response time-course of 5 example isolated neurons, averaged across all speeds, chosen to highlight the diversity in temporal dynamics within the population. (D, E) Corresponding stimulus-evoked MUA and LFP signals (recorded from the same electrode as the single units in C) during vestibular (red), visual (green), and combined (blue) conditions. Gray shaded areas correspond to periods of movement. Vertical dashed lines show start of the static cloud of dots for the visual and combined conditions. After motion offset, the monkey maintained fixation for another 400 ms (and the static cloud of dots was maintained for visual and combined conditions).

Stimulus

Monkeys had to maintain fixation on a central target (a square that subtended 0.5° on each side) for 100 ms to initiate each trial. A self-motion stimulus that lasted 700 ms was presented during each trial. The onset of motion occurred 400 ms following trial initiation, and its dynamics followed an approximately trapezoidal profile with the speed held roughly constant for 500 ms in the middle (Fig. 1A). Depending on the experimental protocol (see below), up to 3 different kinds of trials were interleaved in which the self-motion stimulus was presented by moving the platform and/or motor on which the animal was seated (vestibular condition), by presenting visual motion of a 3D cloud of dots having a density of 0.01 dots/cm³ (visual condition), or through a congruent combination of both vestibular and visual cues (combined condition). In trials containing visual motion (visual and combined conditions), presentation of the motion stimulus was flanked on either side by a 400 ms display of a static cloud of dots to prevent on/off visual transient responses from contaminating neural responses to motion. In the vestibular condition, no visual stimulus was present during these periods, except for the fixation square. A liquid reward was delivered at the end of the trial if the monkey maintained fixation throughout the trial. Trials were aborted following fixation breaks.

Experimental Protocols

In each recording session, we employed 2 different experimental protocols—translation and rotation—that shared a trial structure identical to that described above but differed in the type of motion presented: whereas the former involved linear translation along the forward (straight-ahead) direction, the latter involved a pure yaw rotation. Vestibular presentation of translational motion was achieved by translating the motion platform, while presentation of rotational motion involved rotating the motor beneath the monkey’s chair. Note that the projection screen was attached to the motion platform and translated with the animal, whereas yaw rotations applied by the motor changed the animal’s body orientation relative to the projection screen. Whereas the translation protocol included all 3 stimulus modality conditions (visual/vestibular/combined), the rotation protocol included only the 2 unimodal conditions, as combined presentation of the rotation stimulus was technically challenging. For both the translation and rotation protocols, the visual fixation target remained head-fixed during movement, such that no eye-in-head movements were necessary for the animal to maintain fixation.

The peak speed of motion was varied across trials in each protocol. We employed 4 different speeds of linear translation between 5.5 and 22 cm/s in steps of 5.5 cm/s, and 5 different rotation speeds (15–75°/s in steps of 15°/s) for both CW and CCW directions of rotation. We collected data for at least 5 repetitions of each distinct stimulus, and both protocols included additional control trials (baseline condition) in which the monkey was required to fixate on a blank screen without any self-motion stimuli.

Recording and Data Acquisition

Area 7a was identified using structural MRI and MRI atlases, and was confirmed physiologically by the presence of large receptive fields responsive to optic flow (Siegel and Read 1997; Merchant et al. 2001). Extracellular activity was recorded using a 16-channel linear electrode array with electrodes spaced 100 μm apart (U-Probe, Plexon Inc., Dallas, TX, USA). The electrode array was advanced into the cortex through a guide-tube using a hydraulic microdrive. The spike-detection threshold was manually adjusted separately for each channel to facilitate monitoring of action potential waveforms in real-time, and recordings began once waveforms were stable. The broadband signals were amplified and digitized at 20 kHz using a multichannel data acquisition system (Plexon Inc., Dallas, TX, USA) and were stored along with the action potential waveforms for offline analysis. Additionally, for each channel, we stored low-pass filtered (−3 dB at 250 Hz) LFP signals.

Recording Reconstructions

Two MRI datasets of T1-weighted scans from monkey Q, acquired before and after ring implantation, were used to reconstruct recording sites using a frameless stereotaxic technique. The latter dataset included a plastic grid filled with betadine ointment to allow for precise electrode targeting and reconstruction of recording sites. We used an operator-assisted segmentation tool for volumetric imaging (ITK-SNAP v3.6, www.itksnap.org, last accessed 12 October 2018) to segment in 3D and render the brain structures from the MRI scans (Yushkevich et al. 2006). We aligned the MRI datasets to bony landmarks and brain structures to identify anterior–posterior zero (ear bar zero). All horizontal slices were aligned to the horizontal plane passing through the interaural line and the infraorbital ridge; all coronal slices were aligned parallel to the vertical plane passing through the interaural line (ear-bar zero). Brain structures used for alignment were the anterior and posterior commissure, as well as the genu and splenium of the corpus callosum (Dubowitz and Scadeng 2011; Frey et al. 2011). All of the anatomical landmarks derived from horizontal, coronal, and sagittal sections were then used to index to positions in standard macaque brain atlases (Paxinos et al. 2000; Saleem and Logothetis 2012) and recording sites were then reconstructed using the position on the grid in stereotaxic coordinates.

Analysis of Single-units, Multiunits, and LFP

Spike Sorting

Spikes were sorted offline using commercially available software (Offline Sorter v3.3.5, Plexon Inc., Dallas, TX, USA) to isolate single-units. Spikes that could not be distinctly clustered were grouped and labeled as multiunit activity (MUA), representing a measure of aggregate spiking of neurons in the vicinity of the electrode. All subsequent analyses were performed using Matlab (The Mathworks, Natick, MA, USA).

Temporal Response

Stimulus-evoked LFPs in Figures 1 and 5 were obtained by trial-averaging LFP traces recorded from each electrode channel, and then averaging across electrodes. Similarly, for single-units and MUA, we constructed peristimulus time histograms (PSTHs) for the complete duration of each trial (1.6 s including the fixation period) in each stimulus condition by convolving the spike trains with a 25 ms wide Gaussian function and then averaging across stimulus repetitions.
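The PSTH construction described above can be illustrated with a minimal sketch. It is not the authors' Matlab code: the function names are hypothetical, spike trains are assumed to be binned at 1 ms, and the "25 ms wide" Gaussian is assumed to mean a standard deviation of 25 ms (the text does not say whether the width refers to the SD or the full width).

```python
import math

def gaussian_kernel(sigma_ms, dt_ms=1.0, n_sigma=4):
    """Discrete Gaussian kernel, truncated at n_sigma SDs and normalised to unit sum."""
    half = int(round(n_sigma * sigma_ms / dt_ms))
    k = [math.exp(-0.5 * (i * dt_ms / sigma_ms) ** 2) for i in range(-half, half + 1)]
    total = sum(k)
    return [v / total for v in k]

def psth(trials, sigma_ms=25.0, dt_ms=1.0):
    """Smooth each binned spike train with a Gaussian and average across trials.

    trials: list of equal-length lists of spike counts per bin (bin width dt_ms).
    Returns the trial-averaged firing rate in spikes/s, one value per bin.
    Assumption: sigma_ms is the SD of the Gaussian.
    """
    kernel = gaussian_kernel(sigma_ms, dt_ms)
    half = len(kernel) // 2
    n_bins = len(trials[0])
    rate = [0.0] * n_bins
    for train in trials:
        for s, count in enumerate(train):
            if count:
                # Spread each spike over neighbouring bins according to the kernel.
                for j, w in enumerate(kernel):
                    t = s + j - half
                    if 0 <= t < n_bins:
                        rate[t] += w * count
    scale = 1000.0 / (dt_ms * len(trials))  # counts per bin -> spikes/s, averaged over trials
    return [r * scale for r in rate]

# Usage: one 1.6 s trial (1600 bins of 1 ms) with a single spike at 800 ms.
train = [0] * 1600
train[800] = 1
rate = psth([train])
```

Because the kernel is normalised, the area under the smoothed rate equals the spike count whenever spikes lie far enough from the trial edges.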

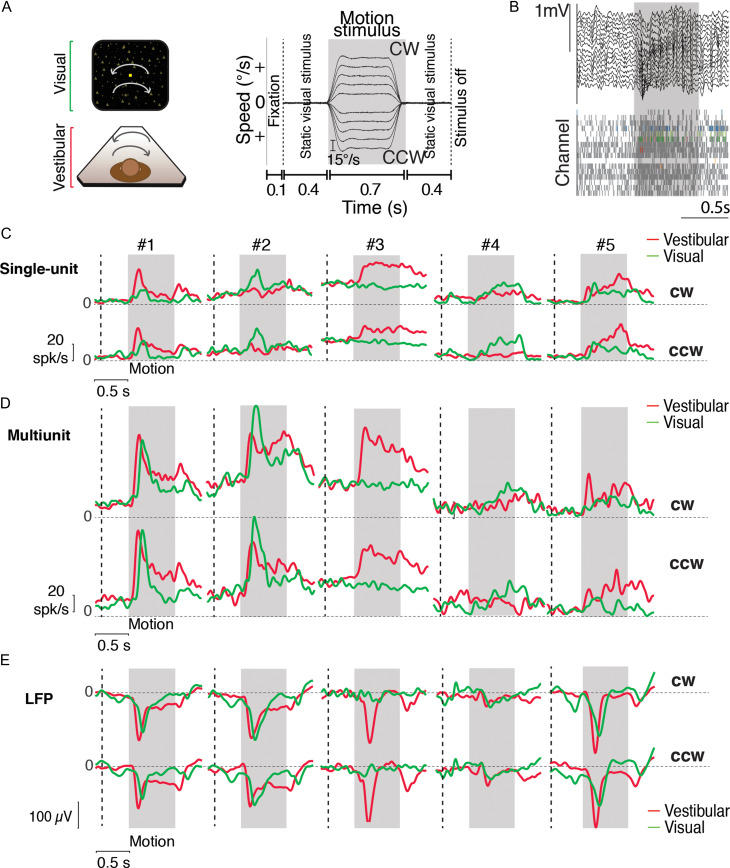

Figure 5.

Example responses to angular rotation. (A) Left—stimulus conditions: Monkeys were seated in a chair mounted on top of a rotary motor in front of a projector screen. Clockwise (CW) and counter-clockwise (CCW) rotational motion (yaw axis) was delivered visually via optic flow cues and by rotating the chair at various speeds. Right—trial structure: Time-course of rotational velocity reconstructed from accelerometer recordings obtained during 10 different angular velocities (2 directions × 5 speeds) under the vestibular condition. Similar to linear translation, the desired temporal profile of angular velocity was trapezoidal for vestibular and visual rotation. Peak rotational velocity varied across trials from among 10 possible values (±15, ±30, ±45, ±60, and ±75°/s). During trials with visual motion, the motion period was flanked by presentation of a static cloud of dots for 400 ms. The screen was blank throughout the trial under the vestibular condition. (B) Local field potentials (LFP) (top) and spikes (bottom, gray: multiunits, colored: single-units) recorded from all channels of the linear array during one example trial. (C) Time course of trial-averaged response of 5 example isolated neurons to vestibular (red) and visual (green) rotational motion for CW (top) and CCW (bottom) directions (averaged across speeds). (D, E) Corresponding stimulus-evoked MUA and LFP signals (recorded from the same electrode as the single units in C). Gray shaded areas show the motion period. Vertical dashed lines show start of the static cloud of dots for the visual condition. CW = clockwise; CCW = counter-clockwise.

Responsiveness

A neuron was classified as responsive to motion if its average firing rate during a 250 ms time-window following motion stimulus onset was significantly different from its activity during a window of the same length preceding motion onset (P ≤ 0.05; 2-sided t-test). Responsive neurons were further classified into “excitatory” and “suppressive” depending on whether motion increased or decreased neuronal activity relative to baseline.

Selectivity to Speed

We tested the significance of tuning for self-motion speed by performing a one-way ANOVA (P ≤ 0.05) to test if the mean responses to different speeds were significantly different from each other. We estimated tuning functions for speed by measuring the average firing rate during the 500 ms flat phase of the trapezoidal motion profile when speed was approximately constant. To quantify the strength of tuning to speed, we computed a speed selectivity index (SSI) which ranged from 0 to 1 (Takahashi et al. 2007):

$$\mathrm{SSI} = \frac{R_{\max} - R_{\min}}{R_{\max} - R_{\min} + 2\sqrt{\mathrm{SSE}/(N - M)}}$$

where $R_{\max}$ and $R_{\min}$ are the mean firing rates of the neuron in response to speeds that elicited maximal and minimal responses, respectively; SSE is the sum-squared error around the mean responses; $N$ is the total number of observations (trials); and $M$ is the total number of speeds (4 and 5 for the translation and rotation protocols, respectively). In contrast to the conventional modulation index, $(R_{\max} - R_{\min})/(R_{\max} + R_{\min})$, the SSI quantifies the amount of response modulation due to changes in speed relative to intrinsic variability. For rotational motion, we averaged responses to the 2 rotation directions separately for each rotation speed before computing $R_{\max}$ and $R_{\min}$.
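As an illustration, the index can be computed from single-trial firing rates grouped by speed. The sketch below assumes the SSI takes the form of the discrimination index of Takahashi et al. (2007), that is (Rmax − Rmin) / (Rmax − Rmin + 2√(SSE/(N − M))); the function name and the example numbers are hypothetical.

```python
import math

def speed_selectivity_index(responses_by_speed):
    """SSI from single-trial firing rates.

    responses_by_speed: one list of single-trial rates per speed (M lists, N trials in total).
    Assumed form: (Rmax - Rmin) / (Rmax - Rmin + 2 * sqrt(SSE / (N - M))).
    """
    means = [sum(r) / len(r) for r in responses_by_speed]
    r_max, r_min = max(means), min(means)
    # Sum-squared error of single trials around their own speed's mean response.
    sse = sum((x - m) ** 2 for r, m in zip(responses_by_speed, means) for x in r)
    n = sum(len(r) for r in responses_by_speed)
    m = len(responses_by_speed)
    denom = (r_max - r_min) + 2.0 * math.sqrt(sse / (n - m))
    return 0.0 if denom == 0 else (r_max - r_min) / denom

# Usage with made-up rates (spikes/s) for 4 speeds, 5 trials each.
rates = [
    [10, 12, 11, 9, 13],
    [14, 15, 13, 16, 12],
    [18, 20, 17, 19, 21],
    [22, 25, 23, 21, 24],
]
ssi = speed_selectivity_index(rates)
```

With no trial-to-trial variability the index equals 1, and it shrinks toward 0 as variability grows relative to the difference between the largest and smallest mean responses.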

Peak Responses and Latency

For LFPs, peak amplitude was defined as the amplitude of the largest peak or trough in the signal after motion onset. For both spikes and LFP, response latency was defined as the time after the onset of motion at which the activity deviated by 2 standard deviations from the average response during the 250 ms window prior to motion onset, for at least 4 consecutive time bins (100 ms total). This specific comparison with the time window preceding motion onset was chosen to control for responses related to the appearance of static dots at the onset of the visual stimulus. We also analyzed the temporal dynamics of single-unit activity and MUA to determine whether the temporal response was unimodal or bimodal. To do this, we determined the time points at which the firing rate within a 25 ms sliding window was at least 2 standard deviations above the response in the immediately preceding window. We identified such time points within the 250 ms window following motion onset and offset (if available) to categorize single- and multiunits into unimodal and bimodal units. Units with at least one such time point following motion onset, but none following motion offset, were classified as unimodal, whereas units with such time points following both onset and offset of motion were labeled bimodal.
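The latency criterion can be sketched as follows. This is an illustration under stated assumptions rather than the original analysis code: the response is assumed to be binned at 25 ms (so that 4 bins span 100 ms), the standard deviation is assumed to be taken across the bins of the 250 ms pre-motion window, and the function name is hypothetical.

```python
import statistics

def response_latency(rate, onset_bin, bin_ms=25.0, baseline_ms=250.0,
                     n_sd=2.0, n_consecutive=4):
    """Latency (ms after motion onset) of the first sustained deviation from baseline.

    rate: trial-averaged response, one value per bin of width bin_ms.
    onset_bin: index of the first bin after motion onset.
    Assumption: baseline mean and SD are computed across the pre-onset bins.
    Returns None if no run of n_consecutive deviating bins is found.
    """
    n_base = int(round(baseline_ms / bin_ms))
    baseline = rate[onset_bin - n_base:onset_bin]
    mu = statistics.mean(baseline)
    sd = statistics.pstdev(baseline)
    run = 0
    for i in range(onset_bin, len(rate)):
        if abs(rate[i] - mu) > n_sd * sd:  # covers excitatory and suppressive responses
            run += 1
            if run == n_consecutive:
                first = i - n_consecutive + 1
                return (first - onset_bin) * bin_ms
        else:
            run = 0
    return None

# Usage: 250 ms of baseline (10 bins), then a response starting 50 ms after onset.
rate = [9, 11] * 5 + [10, 10, 20, 20, 20, 20, 20]
latency = response_latency(rate, onset_bin=10)  # 50.0 ms
```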

Jitter Analysis

For each neuron, we defined the timing of peak response under each stimulus condition as the time (following stimulus onset) at which the trial-averaged response was maximum. We computed the jitter in the timing of the peak, $\sigma_t$ (the standard error of the mean), by bootstrapping (100 bootstrapped samples). We noticed that the amount of jitter was significantly larger in the visual condition. A large amount of jitter can lead to the amplitude of peak response being underestimated. We quantitatively analyzed the effect of this jitter on the amplitude of peak response under the visual condition by simulating the effect of the additional jitter. For each neuron, we generated a set of 1000 trial-averaged response time-courses by bootstrapping trials from the combined condition. We then shifted (jittered) each time-course by a random delay, $\delta t$, with zero mean and variance equal to the difference between the squared jitter under the visual and combined conditions (i.e., the excess jitter under the visual condition), $\sigma_{t,\mathrm{vis}}^2 - \sigma_{t,\mathrm{com}}^2$. Finally, we estimated the average of the randomly shifted time-courses. The peak amplitude of the resulting average time-course was taken as the predicted peak amplitude under the visual condition.
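The effect of excess jitter on peak amplitude can be sketched in a few lines. The sketch assumes that the random delays are Gaussian (the text specifies only their mean and variance), that time-courses are sampled at 1 ms, and that shifted traces are padded by repeating their edge values; the function name and inputs are hypothetical, and the bootstrapped time-courses are taken as given.

```python
import math
import random

def predicted_peak(time_courses, jitter_vis_ms, jitter_com_ms, dt_ms=1.0, seed=0):
    """Peak of the average of randomly shifted time-courses.

    time_courses: equal-length response time-courses (e.g., bootstrapped
    trial averages from the combined condition), sampled every dt_ms.
    Each one is shifted by a zero-mean delay whose variance is the excess jitter,
    jitter_vis_ms**2 - jitter_com_ms**2 (Gaussian delays are an assumption).
    """
    rng = random.Random(seed)
    excess_sd = math.sqrt(max(jitter_vis_ms ** 2 - jitter_com_ms ** 2, 0.0))
    n = len(time_courses[0])
    avg = [0.0] * n
    for tc in time_courses:
        shift = int(round(rng.gauss(0.0, excess_sd) / dt_ms))
        for t in range(n):
            src = min(max(t - shift, 0), n - 1)  # clamp indices at the trace edges
            avg[t] += tc[src] / len(time_courses)
    return max(avg)

# Usage: 200 identical bump-shaped responses (SD 50 ms, peak 1.0) centred at 500 ms.
bump = [math.exp(-0.5 * ((t - 500) / 50.0) ** 2) for t in range(1000)]
peak_no_excess = predicted_peak([bump] * 200, 60.0, 60.0)
peak_with_excess = predicted_peak([bump] * 200, 82.0, 61.0)
```

Averaging misaligned responses flattens the peak, so `peak_with_excess` falls below `peak_no_excess`; the size of that drop is the reduction attributable to jitter alone.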

Stimulus–Response Correlation

To compute the correlation between neural responses and motion amplitude, we computed the trial-averaged response of individual neurons to each stimulus. We then pooled the trial-averaged responses of all neurons to obtain a vector of responses to each motion amplitude, and estimated the correlation between the resulting population vectors and motion amplitude.

Pairwise Correlations

We computed temporal correlations between responses of simultaneously recorded pairs of single-units, multiunits, and LFPs during self-motion and 400 ms before and after the motion period. For single- and multiunits, we first smoothed spike trains from individual trials using a 25 ms Gaussian window before concatenating temporal responses of all trials. For LFPs, we concatenated the raw voltage signals recorded during each trial. Finally, we computed pairwise correlations between the resulting smoothed concatenated time-series.

For single- and multiunits, we additionally computed signal and noise correlations separately for vestibular and visual conditions. Signal correlation was computed as the Pearson correlation between the speed tuning curves (obtained from the average firing rate during the middle 500 ms of the trapezoidal motion) of pairs of units. For computing noise correlations, we z-scored average firing rates (during the middle 500 ms of the trapezoidal motion) separately for trials corresponding to each motion speed, and then computed pairwise correlations between pooled z-scored responses. The z-scoring essentially removes shared fluctuations induced by changes in speed (signal) but retains correlations in trial-by-trial variability (noise). We estimated pairwise correlations as a function of distance by pooling all pairs with a given electrode separation (0–1500 μm in steps of 100 μm), regardless of depth.
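The distinction between signal and noise correlations can be made concrete with a short sketch. It assumes that the two units were recorded simultaneously, so that trials are aligned across units within each speed; the function names are hypothetical, and conditions with zero response variance are mapped to zero z-scores for simplicity.

```python
import math
import statistics

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def zscore(values):
    """Z-score a list of single-trial rates (zeros if there is no variance)."""
    mu = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [0.0 if sd == 0 else (v - mu) / sd for v in values]

def signal_correlation(unit_a, unit_b):
    """Correlation between speed tuning curves (mean rate per speed)."""
    tuning_a = [statistics.fmean(r) for r in unit_a]
    tuning_b = [statistics.fmean(r) for r in unit_b]
    return pearson(tuning_a, tuning_b)

def noise_correlation(unit_a, unit_b):
    """Correlation of rates z-scored within each speed, pooled across speeds."""
    za = [z for r in unit_a for z in zscore(r)]
    zb = [z for r in unit_b for z in zscore(r)]
    return pearson(za, zb)

# Usage: rates per speed (outer list) and trial (inner list) for two units.
unit_a = [[1, 3], [5, 9], [10, 12]]
unit_b = [[4, 2], [10, 6], [13, 11]]  # same tuning, opposite trial-by-trial fluctuations
r_signal = signal_correlation(unit_a, unit_b)
r_noise = noise_correlation(unit_a, unit_b)
```

In this toy example the two units share the same tuning (signal correlation of 1) while their trial-by-trial fluctuations are perfectly anticorrelated (noise correlation of −1), which shows that the two measures are independent of one another.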

Current Source Density

Current source density (CSD) was computed as the second spatial derivative of the LFP, $V(x,t)$, according to $\mathrm{CSD}(x,t) = -\partial^2 V(x,t)/\partial x^2$, which we discretized as

$$\mathrm{CSD}(x,t) = -\frac{V(x+\Delta x,t) - 2V(x,t) + V(x-\Delta x,t)}{\Delta x^2}.$$

Here $x$ and $t$ correspond to the channel number and time, respectively, and $\Delta x$ was the inter-channel spacing between our electrode sites. CSD was computed using 3 s long LFP segments averaged across trials. Following standard conventions, sites with positive and negative densities were labeled as sources and sinks, respectively. To align CSD and multiunit responses from different recordings, we computed the centroid of the sink at the time-point where the current density was most negative. CSD and multiunit responses from different recordings were shifted across space to align the respective centroids before averaging. The top of the sink was labeled as the bottom of putative layer IV following standard convention used for labeling layers in macaque primary visual cortex (Mitzdorf and Singer 1979; Schroeder 1998; Self et al. 2013).
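A minimal sketch of the discretized second spatial derivative follows. It assumes the sign convention in which the CSD is the negative of the second difference, so that sinks come out negative and sources positive as stated above; the function name is hypothetical, and the two outermost channels are dropped because they lack a neighbor on one side.

```python
def current_source_density(lfp, dx=100.0):
    """CSD estimate from trial-averaged LFPs on a linear array.

    lfp: list over channels (ordered by depth) of lists over time samples.
    dx: inter-channel spacing (100 um for the array described here).
    Returns one CSD trace per interior channel (channels 1 .. n-2).
    Assumed convention: CSD = -(second spatial difference) / dx**2.
    """
    n_ch = len(lfp)
    n_t = len(lfp[0])
    csd = []
    for ch in range(1, n_ch - 1):
        trace = [
            -(lfp[ch + 1][t] - 2.0 * lfp[ch][t] + lfp[ch - 1][t]) / dx ** 2
            for t in range(n_t)
        ]
        csd.append(trace)
    return csd

# Usage: a negative LFP trough confined to the middle channel of 5 gives a sink there.
lfp = [[0.0], [0.0], [-1.0], [0.0], [0.0]]
csd = current_source_density(lfp, dx=1.0)
# csd[1] is the trace for channel index 2 (the middle channel)
```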

Spike Waveform Classification

Neurons were classified as putative pyramidal cells and interneurons using standard methods based on the shape of the action potential waveform (Mitchell et al. 2007). We constructed the distribution of spike waveform half-widths (defined as the average peak-trough duration). To assess unimodality of this distribution, we used Hartigan’s dip test (P < 0.05, Hartigan and Hartigan 1985). Neurons were categorized into narrow and broad spiking depending on the percentile score of their spike half-widths. Where necessary, we inverted the polarity of spike waveforms to ensure that peaks followed troughs. We used a binomial proportion test to test whether the fraction of responsive neurons differed between the 2 populations. We excluded 11 neurons from this analysis as the peaks and troughs of the action potential could not be reliably determined.

Results

Responses to Forward Translation

We recorded neural activity and LFP from 704 channels across 44 recording sessions and isolated 340 neurons from area 7a of 3 macaque monkeys while they passively fixated and experienced real and/or visually simulated forward motion at different speeds (Methods, Fig. 1A, Supplementary Fig. S1). All recordings were carried out extracellularly using laminar electrodes with 16 contact sites, spaced 100 μm apart, allowing us to sample neural activity spanning 1.5 mm across the depth of the cortex (Fig. 1B).

We encountered diverse neural responses in both single unit and multiunit activity, but more stereotyped LFPs, as illustrated by the examples in Figure 1C–E. Diversity in the dynamics of neural responses was present even among neurons recorded simultaneously at different depths along the same penetration (Supplementary Figs S2 and S3). Whereas some neurons responded with a transient change in activity following onset and/or offset of the motion stimulus, a large number of neurons exhibited responses that persisted throughout the period of motion. There was also notable diversity in the polarity of response: although the vast majority of responsive neurons exhibited an increase in firing rate following motion onset (“excitatory”), a small but significant fraction of neurons exhibited a decrease in firing rate (“suppressive,” Methods). Responsiveness of neurons was assessed by comparing the average firing rate following motion onset to a window of the same length preceding motion onset (Methods). This assessment was quite robust to the precise window length used to determine responsiveness (Supplementary Fig. S4).

A larger fraction of neurons was significantly responsive to vestibular (~39%) than visual translation (~27%, Table 1A). Although the proportion of neurons responding to the combined stimulus was relatively large (~38%; Table 1A, “Combined”), only a small subset of those neurons responded significantly to both individual conditions when presented separately (~17%; Table 1A, “vestibular and visual”). In fact, given the proportion of vestibular and visual neurons in our population, the number of bisensory neurons (n = 57) was only marginally greater than expected by chance (99% confidence interval for the number of bisensory neurons: n = [25, 51]). Therefore, although both visual and vestibular signals are present in 7a, multisensory convergence of motion information is rare at the single neuron level.
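The chance level referred to above can be checked with simple arithmetic on the counts in Table 1A. The sketch assumes that "chance" means statistical independence between vestibular and visual responsiveness; the procedure used to obtain the reported confidence interval is not described in this section, so the interval bounds are copied from the text rather than recomputed.

```python
def expected_overlap(n_a, n_b, n_total):
    """Expected number of units in both groups if membership were independent."""
    return n_a * n_b / n_total

n_total = 340        # isolated single units
n_vestibular = 133   # responsive to vestibular translation (Table 1A)
n_visual = 92        # responsive to visual translation (Table 1A)
n_bisensory = 57     # responsive to both (Table 1A)
ci_low, ci_high = 25, 51  # 99% interval as reported in the text

expected = expected_overlap(n_vestibular, n_visual, n_total)  # about 36 units
exceeds_chance = n_bisensory > ci_high
```

The expected count under independence (about 36) sits inside the reported interval, and the observed count of 57 lies just above its upper bound.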

Table 1.

Statistics of single- and multiunit responses to forward translation. (A) Single-units were classified into 2 groups based on whether at least one of the stimuli elicited responses that were significantly higher (excitatory) or lower (suppressive) than their baseline response (fixation-only trials; t-test corrected for multiple comparisons; significance level P = 0.05). Data have been grouped by stimulus type (vestibular, visual, combined), whereas the last row shows the statistics of cells that were significantly tuned to both visual and vestibular stimuli. A subset of responsive neurons was identified as “speed tuned” to linear motion if their responses were significantly different across stimulus amplitudes (one-way ANOVA; P = 0.05; Fig. 1A). Values in parentheses correspond to percentages of the total number of recorded neurons. (B) Corresponding statistics for multiunit responses.

| (A) Single-unit | | | | (B) Multiunit | | | |
|---|---|---|---|---|---|---|---|
| Stimulus condition | Excitatory | Suppressive | Total | Stimulus condition | Excitatory | Suppressive | Total |
| Vestibular | 118 (34.7%) | 15 (4.4%) | 133 (39.1%) | Vestibular | 530 (75.2%) | 23 (3.2%) | 553 (78.5%) |
| Visual | 77 (22.6%) | 15 (4.4%) | 92 (27%) | Visual | 235 (33.3%) | 66 (9.3%) | 301 (42.7%) |
| Combined | 124 (36.4%) | 6 (1.7%) | 130 (38.2%) | Combined | 520 (73.8%) | 13 (1.8%) | 533 (75.7%) |
| Vestibular and visual | 49 (14.4%) | 8 (2.3%) | 57 (16.7%) | Vestibular and visual | 198 (28.1%) | 3 (0.4%) | 201 (28.5%) |
| Speed tuned | Excitatory | | | Speed tuned | Excitatory | | |
| Vestibular | 52 (15.2%) | | | Vestibular | 215 (30.5%) | | |
| Visual | 25 (7.3%) | | | Visual | 57 (8.1%) | | |
| Combined | 51 (15%) | | | Combined | 240 (34%) | | |
| Vestibular and visual | 11 (3.2%) | | | Vestibular and visual | 43 (6.1%) | | |

Across the population of all single-units recorded in the translation protocol, the time-course of neuronal activity during combined visual–vestibular motion was much more strongly correlated with that of the vestibular response (mean pairwise Pearson’s correlation, r = 0.82 ± 0.25) than the visual response (r = 0.57 ± 0.2), suggesting that neuronal response dynamics to multisensory inputs are more strongly dictated by vestibular rather than visual inputs. This effect is shown for the subset of 57 bisensory single-units in Figure 2A. Correlations of combined responses with visual and vestibular responses had mean values of 0.37 ± 0.35 (SD) and 0.66 ± 0.24 (SD), respectively (Fig. 2A, arrows), and the difference between these mean correlation coefficients was significant (P = 2.8 × 10⁻⁶, paired t-test).

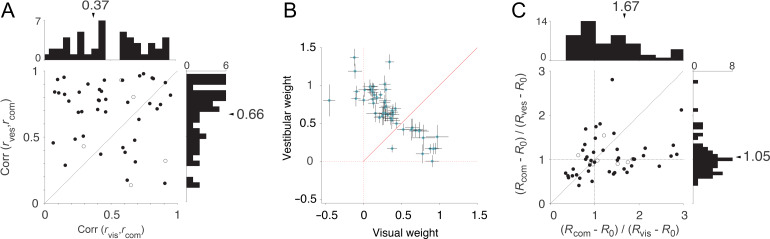

Figure 2.

Similarity of responses across sensory modalities. (A) Scatter plot of correlation of the time-course of single-unit activity during combined vs. vestibular (ordinate) or visual (abscissa) motion. Data are pooled across stimulus speeds (20 trials = 4 speeds × 5 trials per speed for each condition). Correlations were computed for a time interval starting 400 ms before stimulus onset and ending 400 ms after motion offset (Fig. 1A, right, vertical dashed lines). Thus, it included the static visual response to stimulus onset in the visual and combined conditions. (B) Vestibular and visual weights obtained using multiple linear regression analysis for the population of bisensory single neurons (n = 57). For each neuron, we used the trial-averaged response to the combined stimulus as a dependent variable while the vestibular and the visual responses served as predictors. Across the population of bisensory neurons, the vestibular weights were larger than visual weights. Error bars denote 95% CI obtained by bootstrapping. (C) Comparison of the ratio of peak driven response (peak response, R, minus baseline, R0) elicited by the combined (visual+vestibular) stimulus to peak driven response elicited by the vestibular (ordinate) or visual (abscissa) motion alone. Data come from the subset of bisensory neurons that were responsive to both visual and vestibular inputs (n = 57; filled symbols: excitatory responses; open symbols: suppressive responses).

We used multiple linear regression to regress the combined response to translation against the visual and vestibular responses for this subset of bisensory neurons (Fig. 2B). For each neuron, the average response across all trials in the combined stimulus was used as the dependent variable, and vestibular and visual responses as predictors. Across the population of bisensory neurons, the vestibular weights were larger than visual weights (vestibular = 0.67 ± 0.33; visual = 0.34 ± 0.36) and this difference was statistically significant (paired t-test, P = 3.1 × 10−5).
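The regression described above can be sketched as follows. This is a minimal illustration, assuming that each response is a trial-averaged firing-rate time course sampled on a common time grid and that a constant offset is fitted alongside the two weights. The function name `fit_modality_weights`, the offset term, and the synthetic responses are assumptions made for illustration and are not taken from the Methods.

```python
import numpy as np

def fit_modality_weights(r_ves, r_vis, r_com):
    """Least-squares fit of r_com ~ w_ves * r_ves + w_vis * r_vis + offset."""
    r_ves = np.asarray(r_ves, dtype=float)
    r_vis = np.asarray(r_vis, dtype=float)
    r_com = np.asarray(r_com, dtype=float)
    # Design matrix: one column per predictor plus an (assumed) constant offset.
    X = np.column_stack([r_ves, r_vis, np.ones_like(r_ves)])
    coef, *_ = np.linalg.lstsq(X, r_com, rcond=None)
    w_ves, w_vis, offset = coef
    return w_ves, w_vis, offset

# Synthetic (hypothetical) trial-averaged responses on a 2 s time grid.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 2.0, 200)
r_ves = np.exp(-((t - 0.5) ** 2) / 0.02) + np.exp(-((t - 1.5) ** 2) / 0.02)
r_vis = np.exp(-((t - 0.7) ** 2) / 0.05)
# Combined response built with known weights so the fit can be checked.
r_com = 0.7 * r_ves + 0.3 * r_vis + 2.0 + 0.01 * rng.standard_normal(t.size)

w_ves, w_vis, offset = fit_modality_weights(r_ves, r_vis, r_com)
```

Applied to each bisensory neuron in turn, a fit of this kind would yield one pair of weights per neuron, which could then be compared across the population with a paired test.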

In addition, the peak driven response (defined as firing rate minus baseline activity) of the subset of bisensory neurons in the combined stimulus condition was more similar to the peak vestibular response than the peak visual response (Fig. 2C; (Rcom − R0)/(Rvis − R0) = 1.67 ± 1.5, (Rcom − R0)/(Rves − R0) = 1.05 ± 0.4, paired t-test P = 0.003). Qualitatively, the timing of the peak response in the visual condition had a larger temporal variability (jitter) that could lead to the peak response being underestimated for the visual condition. To determine the temporal precision of the peak responses, we estimated the standard errors in the timing of peak response for each neuron under all stimulus conditions by bootstrapping. Across neurons, the jitter in the timing of the peak was greater for the visual condition (visual: 82 ± 9 ms; vestibular: 60 ± 5 ms; combined: 61 ± 5 ms). We verified by simulation (see Methods section on jitter analysis) that the increased jitter in the visual condition should result in an average reduction of peak response (relative to combined condition) by a factor of 1.1, whereas the reduction observed in the data was 1.67. Thus, although the ratio of peak responses is influenced by jitter, the observed difference between visual and vestibular conditions is likely dominated by the difference in the relative weighting of the 2 responses. These results reinforce the view that the neural representation of forward motion in area 7a is strongly influenced by vestibular inputs.
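A bootstrap estimate of the standard error in peak timing, as described above, might look like the following sketch. It assumes that trials are resampled with replacement, that the peak is read from the trial-averaged response, and that the standard deviation of the bootstrapped peak times serves as the jitter estimate. The function name `bootstrap_peak_time_se`, the number of resamples, and the simulated data are hypothetical, and the exact procedure in the Methods may differ.

```python
import numpy as np

def bootstrap_peak_time_se(trials, times, n_boot=1000, seed=0):
    """Bootstrap standard error of the time of the peak trial-averaged response.

    trials: (n_trials, n_timepoints) firing rates of one neuron.
    times:  (n_timepoints,) time stamps in ms.
    """
    trials = np.asarray(trials, dtype=float)
    times = np.asarray(times, dtype=float)
    rng = np.random.default_rng(seed)
    n_trials = trials.shape[0]
    peak_times = np.empty(n_boot)
    for b in range(n_boot):
        # Resample trials with replacement and locate the peak of the mean.
        idx = rng.integers(0, n_trials, size=n_trials)
        peak_times[b] = times[np.argmax(trials[idx].mean(axis=0))]
    return peak_times.std(ddof=1)

# Hypothetical data: a sharply peaked and a broadly peaked response,
# both centered at 300 ms, with additive Gaussian noise on each trial.
times = np.arange(0.0, 1000.0, 10.0)
rng = np.random.default_rng(1)

def simulate(width_ms, n_trials=20):
    profile = 10.0 * np.exp(-((times - 300.0) ** 2) / (2.0 * width_ms ** 2))
    return profile + rng.standard_normal((n_trials, times.size))

se_sharp = bootstrap_peak_time_se(simulate(20.0), times)
se_broad = bootstrap_peak_time_se(simulate(150.0), times)
```

In this toy example the broadly peaked response gives the larger timing error, which is the sense in which a less precisely timed peak can lead to an underestimate of peak amplitude after trial averaging.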

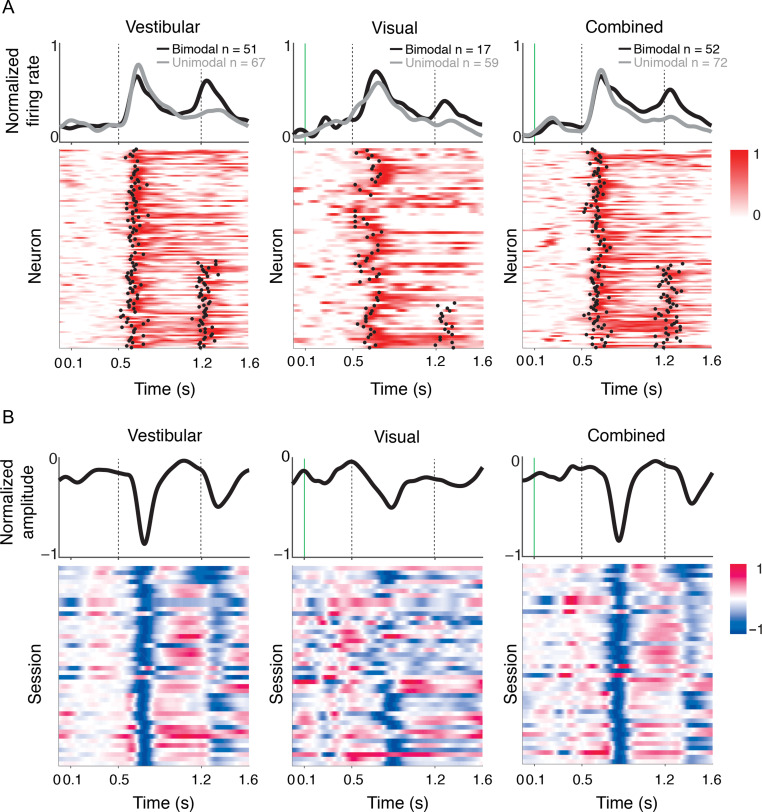

Figure 3A shows the temporal response dynamics for all single-units that exhibited a motion-induced increase (“excitatory”) in firing rate within 250 ms of motion onset. An analysis of these dynamics (Methods) revealed 2 distinct populations. During vestibular motion, the response profile of nearly half of the neurons (~43%) was bimodal, exhibiting an increase after both the onset and offset of motion (Fig. 3A, left). This fraction was not altered by the addition of visual motion cues (42%, Fig. 3A, right). In contrast, responses to purely visual motion were mostly unimodal (76%, Fig. 3A, middle). Vestibular responses had significantly shorter latencies than visual responses (vestibular: 128 ± 5 ms, visual: 140 ± 6 ms; t-test, P = 0.019). The latency of responses to the combined stimulus (134 ± 4 ms) was more strongly correlated with vestibular (r = 0.37, P = 4.4 × 10−4) than visual (r = 0.007, P = 0.96) latencies.

Figure 3.

Temporal pattern of response. (A) The rows in each panel correspond to normalized firing rates of all isolated single neurons that increased their firing (“excitatory”) to vestibular (left), visual (middle), and combined (right) motion. To normalize the responses, we subtracted the mean prestimulus activity of each neuron from its response, and then divided by its peak response. We classified the temporal responses as unimodal or bimodal depending on the number of time-points at which a sudden increase in response was detected (black dots, Methods). Both unimodal (following motion onset) and bimodal (following motion onset/offset) profiles are frequently encountered in response to vestibular and combined motion. In contrast, responses are mostly unimodal when only visual motion is present. Responses of neurons with unimodal (gray) and bimodal (black) profiles are averaged separately and shown on top of the respective panels. Vertical dotted lines show motion onset and offset. (B) Response profiles of LFPs (averaged across all channels) for all recorded sessions (n = 44), normalized in the same way as the single-unit responses. Note that LFPs were strongly negative at most sites.

Very similar results were found for MUA. Of 704 recording sites, MUA at 553 (~78%) sites was significantly responsive to vestibular input, 301 (~43%) sites were responsive to visual input, and 533 (~76%) sites were responsive to the combined stimulus (Table 1B). Similar to single-units, MUA exhibited diverse temporal dynamics, with nearly half of recordings showing an increase in firing after both the onset and offset of vestibular motion (Supplementary Fig. S5). In fact, we show later that the time course of multiunit responses was correlated strongly with the time course of single-unit responses recorded from the same electrode site, suggesting clustering of self-motion modulation, which was strongest for the vestibular condition.

We tested whether neurons responsive to translation scaled their response amplitude with speed of motion (Methods). Since the vast majority of responsive neurons were excited (rather than suppressed) by motion (Table 1A, top), we restricted this analysis to the excitatory group of neurons. We found that many neurons were tuned to stimulus amplitude (expressed as a function of peak speed in Fig. 4; vestibular: n = 52/118; visual: n = 25/77; combined: n = 51/124; ANOVA, P < 0.05; Table 1A, bottom). As illustrated in Figure 4A,B, neural responses increased on average with stimulus peak speed (vestibular: r = 0.21, P = 2 × 10−3; visual: r = 0.27, P = 5 × 10−3; combined: r = 0.30, P = 1.6 × 10−5).

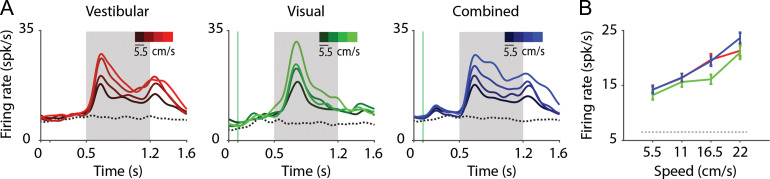

Figure 4.

Translation speed modulates neuronal responses in area 7a. (A) Time course of responses averaged across isolated single neurons that were significantly tuned to stimulus amplitude in the vestibular (left, n = 52), visual (middle, n = 25), and combined (right, n = 51) conditions. Trials are grouped and color-coded according to peak motion speed (brighter hues correspond to greater speeds). Dotted trace denotes response to the baseline condition (fixation-only trials). Gray shaded areas correspond to periods of movement. (B) Average tuning curves of single-units in (A) to translation amplitude (expressed as peak speed) under the 3 conditions (red: vestibular, green: visual, blue: combined), computed using the response during the middle 500 ms flat phase of the trapezoidal motion profile in which stimulus velocity is roughly constant.

To quantify the sensitivity of each neuron to changes in motion amplitude, we computed a speed selectivity index (SSI) based on its response during the constant speed interval (Methods). The mean SSI value for the vestibular condition (0.38 ± 0.11) was slightly but significantly greater than that for the visual condition (0.35 ± 0.10, P = 1.6 × 10−5, paired t-test), suggesting that speed selectivity is greater, overall, under the vestibular condition. Moreover, the mean SSI under the combined condition (0.39 ± 0.11) was significantly greater than that of the visual (paired t-test, P = 1.6 × 10−5) but not the vestibular (t-test, P = 0.28) condition, suggesting that speed tuning in the combined condition is more likely to be driven by vestibular inputs.

Finally, we analyzed spike waveforms to identify neuronal subtypes (Methods) and found that the distribution of spike widths was not unimodal (Hartigan’s dip-test, P = 0.044, Hartigan and Hartigan 1985), but instead showed 2 distinct populations of neurons with narrow or broad spike widths (Supplementary Fig. S6A). Past studies on visual and premotor areas have shown that pyramidal neurons and GABAergic interneurons exhibit different physiological properties (Song and McPeek 2010; Anderson et al. 2013; Thiele et al. 2016), implying that they may constitute distinct functional subnetworks within the cortical microcircuit. However, we found that the response dynamics and speed selectivity of the putative broad- and narrow-spiking neurons were similar (Supplementary Fig. S6B,C). The fraction of responsive neurons and the SSI were both statistically indistinguishable between the 2 populations (see legend of Supplementary Fig. S6), suggesting that putative pyramidal cells and interneurons may share the same basic neural coding of translational motion. This might imply that self-motion velocity is computed through nonspecific recurrent interactions between the different neuronal subtypes within area 7a, or that feedforward velocity inputs broadly activate all neurons in area 7a, regardless of cell type.

Response to Angular Rotation

We also recorded from 268 well-isolated neurons (across 36 recording sessions) using a protocol in which the motion stimulus was composed of a pure yaw rotation at one of several angular velocities (Methods, Fig. 5A,B), including both clockwise (CW) and counter-clockwise (CCW) rotations. Combined visual–vestibular rotation was not included in this protocol due to technical limitations (Methods).

Single- and multiunit responses, as well as LFPs, were similar in overall properties to those observed in response to forward translation. Specifically, whereas LFPs had a stereotypic waveform, there was a diverse pattern of single neuron and multiunit temporal responses (Fig. 5C–E, Supplementary Figs S7 and S8). Both vestibular and visual rotational motion elicited responses in a substantial fraction of neurons (Table 2A; ~31% for vestibular, ~20% for visual motion). Nevertheless, consistent with the findings from forward motion, the number of bisensory neurons (n = 29) barely exceeded that expected by chance (99th percentile CI: [10,27]), again suggesting weak bimodality at the single neuron level. The average latency of neuronal responses to vestibular rotation showed a slight tendency toward faster responses, as compared with visual rotation (vestibular: 124 ± 5 ms; visual: 143 ± 10 ms; P = 0.07, t-test). These results corroborate findings from the translation protocol and suggest that area 7a is more sensitive to vestibular cues both for translational and rotational motion.
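One way to reason about the number of bisensory neurons expected by chance is a label-shuffling null, sketched below. It assumes that vestibular and visual responsiveness are assigned independently across neurons, and it uses the single-unit counts from Table 2A (268 neurons, 84 vestibular-responsive, 53 visually responsive). The function name `chance_overlap_interval` and the shuffling procedure are assumptions made for illustration. The Methods may define the chance interval differently, so the bounds produced here need not match the interval quoted above.

```python
import numpy as np

def chance_overlap_interval(n_total, n_a, n_b, level=0.99,
                            n_shuffles=10000, seed=0):
    """Null distribution of the overlap between two independently assigned labels.

    Returns the mean overlap and the central `level` percentile interval.
    """
    rng = np.random.default_rng(seed)
    is_a = np.zeros(n_total, dtype=bool)
    is_a[:n_a] = True
    is_b = np.zeros(n_total, dtype=bool)
    is_b[:n_b] = True
    overlaps = np.empty(n_shuffles, dtype=int)
    for i in range(n_shuffles):
        # Shuffle one label vector so the two labels become independent.
        overlaps[i] = np.count_nonzero(is_a & rng.permutation(is_b))
    tail = 100.0 * (1.0 - level) / 2.0
    lo, hi = np.percentile(overlaps, [tail, 100.0 - tail])
    return overlaps.mean(), lo, hi

# Counts taken from Table 2A (single-unit responses to rotation).
mean_overlap, lo, hi = chance_overlap_interval(268, 84, 53)
```

The observed count of bisensory neurons can then be compared with the upper bound of the shuffled distribution to judge whether convergence exceeds chance under this particular null.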

Table 2.

Statistics of single- and multiunit responses to rotational motion. (A) Single-unit responses to angular rotation were classified in the same way as linear translation based on their response to the motion (excitatory, suppressive). (B) Corresponding statistics for multiunit responses to angular rotation. (C) Statistics for 169 cells that were recorded under both linear and angular speeds. Data are summarized first for both speeds separately (top) and under both protocols (bottom). Values in parentheses correspond to percentages of the total number of neurons recorded during the 2 protocols.

| (A) Single-unit | | | | (B) Multiunit | | | |
|---|---|---|---|---|---|---|---|
| Stimulus condition | Excitatory | Suppressive | Total | Stimulus condition | Excitatory | Suppressive | Total |
| Vestibular | 75 (27.9%) | 9 (3.3%) | 84 (31.3%) | Vestibular | 469 (81.4%) | 16 (2.7%) | 485 (84.2%) |
| Visual | 51 (19%) | 2 (0.7%) | 53 (19.7%) | Visual | 256 (44.4%) | 55 (9.5%) | 311 (53.9%) |
| Vestibular and visual | 28 (10.4%) | 1 (0.3%) | 29 (10.7%) | Vestibular and visual | 198 (28.1%) | 3 (0.4%) | 201 (28.5%) |
| Speed tuned | Excitatory | | | Speed tuned | Excitatory | | |
| Vestibular | 26 (9.7%) | | | Vestibular | 264 (45.8%) | | |
| Visual | 27 (10%) | | | Visual | 88 (15.2%) | | |
| Vestibular and visual | 9 (3.3%) | | | Vestibular and visual | 65 (11.2%) | | |
| (C) Neurons recorded during linear and angular motion protocols (n = 169) | | | | | | | |
| Stimulus condition | Excitatory | Suppressive | Total | | | | |
| Vestibular | 37 (21.8%) | 2 (1.1%) | 39 (23%) | | | | |
| Visual | 13 (7.6%) | 0 (0%) | 13 (7.6%) | | | | |
| Speed tuned | Excitatory | | | | | | |
| Vestibular | 4 (2.3%) | | | | | | |
| Visual | 3 (1.7%) | | | | | | |

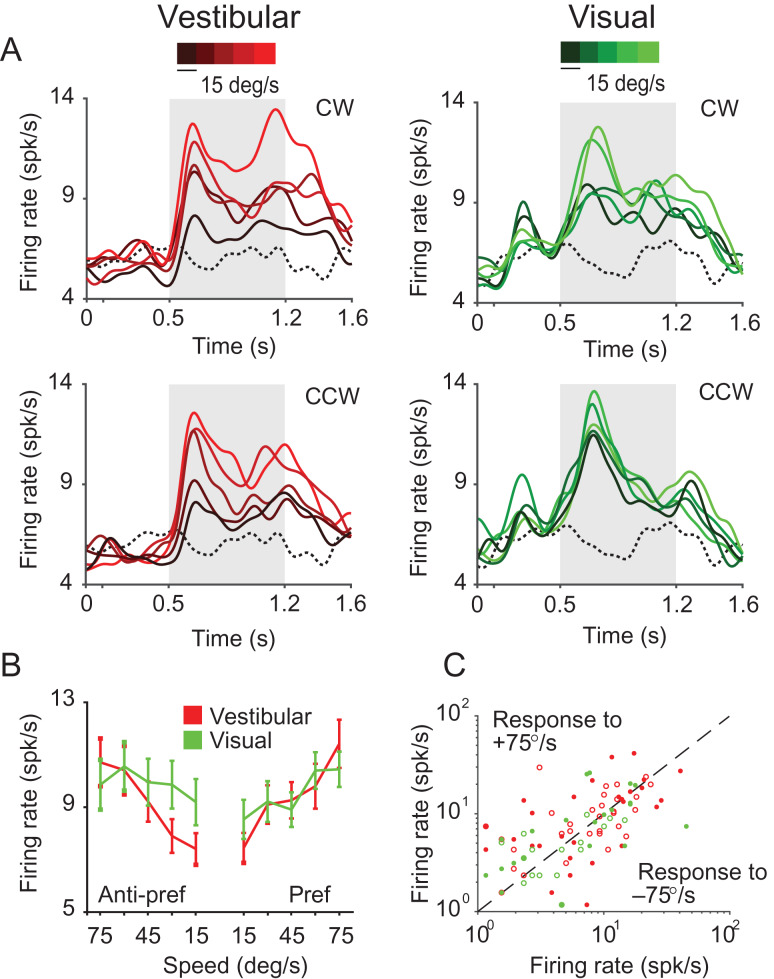

For each stimulus condition, we found that a significant proportion of neurons that were excited by rotational motion showed significant tuning (vestibular: n = 26/75; visual: n = 27/51, ANOVA, P < 0.05) for the amplitude of the stimulus (expressed as peak rotation speed; Table 2A, Fig. 6A,B). We tested the direction selectivity of individual neurons by comparing responses from trials with CW rotation against responses from trials with CCW rotation. We found that only a small fraction of neurons was significantly tuned to the direction of rotation (vestibular: n = 4/75; visual: n = 9/51; P < 0.05, t-test). Consequently, neuronal responses to the fastest speed of CW and CCW rotation were strongly correlated across the population (vestibular: r = 0.87, P = 4.57 × 10−85; visual: r = 0.77, P = 2.65 × 10−54, Fig. 6C). This indicates that 7a neurons largely signal the magnitude but not the direction of rotation.

Figure 6.

Selectivity to angular speed. (A) Time-course of responses averaged across all isolated single neurons tuned to rotation amplitude under vestibular (red, n = 26) and visual (green, n = 27) conditions. Different shades correspond to different stimulus amplitudes (brighter hues correspond to greater peak speeds). Dotted traces denote response to the baseline condition (fixation only trials). Gray shaded areas show time-periods of motion. (B) Average speed tuning curves computed during the middle 500 ms period for the “preferred” and “anti-preferred” rotation directions (see text). (C) A comparison of the response of individual neurons to clockwise (+75°/s) and counter-clockwise (−75°/s) rotation. Open—untuned, closed—tuned to stimulus amplitude under vestibular (red, n = 55) or visual (green, n = 25) conditions. CW = clockwise; CCW = counter-clockwise.

We estimated the correlation between neuronal responses and peak angular speed by pooling trial-averaged responses of all neurons to each rotation speed separately (Methods). Since neural responses were largely unaffected by rotation direction, we combined responses from CW and CCW directions before trial averaging. We observed a significant positive correlation between neuronal activity and rotation speed in the vestibular condition (Pearson’s correlation r = 0.18, P = 0.004). A similar tendency was present for the visual condition, although the correlation did not quite reach statistical significance (r = 0.07, P = 0.06).

Across the population of all single-units, the mean SSI tended to be larger in the vestibular (SSI = 0.44 ± 0.08) than visual (SSI = 0.42 ± 0.09) rotation condition (paired t-test, P = 0.036). As was the case for linear translation, the effects described above were also present, and often much stronger, in multiunit responses (Supplementary Fig. S9, Table 2B).

We wanted to know whether the same set of neurons was involved in representing both linear and angular motion cues. Of 169 single-units tested with a range of both translational and rotational stimuli, ~23% were responsive to vestibular motion and ~8% were responsive to visual motion under both protocols. However, even though several cells were temporally modulated by both linear and angular motion stimuli, only ~2% (ves: n = 4/169; vis: n = 3/169) of the units were significantly tuned to both linear and angular speeds (Table 2C, 95% CI of the number of neurons expected by chance: ves: [0,5] vis: [0,3]), suggesting a factorized representation of linear and angular speeds in this brain area.

Spatial Organization of Responses

We used our laminar recordings to study the spatial organization of responses across the depth of the electrodes (slanted 31° ± 4.3° relative to the cortical surface). We first tested whether similarities in the temporal fluctuations of LFP, single-unit, and multiunit responses depended on the spatial separation of recording sites across the linear electrode array. To test this, we concatenated temporal responses from all trials and computed pairwise correlations between the concatenated responses (this includes both signal and noise correlations). For both translational and rotational motion, this shared responsiveness, which reflects both temporal and trial-by-trial variability, decreased clearly with distance (Fig. 7A), and the decrease was well described by a power-law decay (Supplementary Fig. S10). Moreover, the correlation between LFP signals also exhibited a strong spatial periodicity with a wavelength of about 800 μm, suggesting a possible laminar organization of neural circuit processing in area 7a.
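The distance dependence described above can be computed along the following lines. This sketch assumes that responses from all trials have already been concatenated into one row per channel and that channels are evenly spaced along the array. The function name `correlation_vs_distance`, the 100 μm spacing, and the synthetic data are hypothetical and are not taken from the Methods.

```python
import numpy as np

def correlation_vs_distance(responses, spacing_um=100.0):
    """Mean pairwise Pearson correlation as a function of channel separation.

    responses: (n_channels, n_samples), each row concatenated across trials.
    spacing_um: assumed (hypothetical) distance between adjacent channels.
    """
    responses = np.asarray(responses, dtype=float)
    n_ch = responses.shape[0]
    corr = np.corrcoef(responses)  # (n_channels, n_channels) correlation matrix
    seps = np.arange(1, n_ch)
    # The k-th off-diagonal holds all channel pairs that are k contacts apart.
    mean_corr = np.array([np.diagonal(corr, offset=k).mean() for k in seps])
    return seps * spacing_um, mean_corr

# Synthetic data: each channel sums 4 neighboring latent noise sources,
# so nearby channels share inputs and distant channels do not.
rng = np.random.default_rng(0)
n_ch, n_samples, width = 16, 5000, 4
latent = rng.standard_normal((n_ch + width - 1, n_samples))
responses = np.stack([latent[c:c + width].sum(axis=0) for c in range(n_ch)])

distances_um, mean_corr = correlation_vs_distance(responses)
```

A decay model such as a power law could then be fitted to `mean_corr` as a function of `distances_um`.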

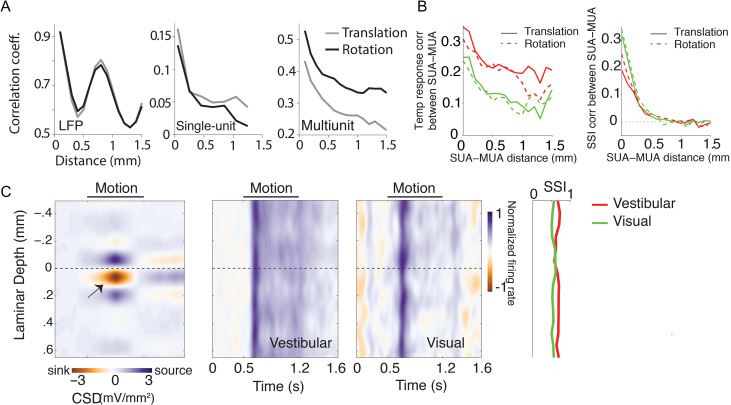

Figure 7.

Spatial profile of responses. (A) Magnitude of correlated variability in the temporal fluctuations between pairs of simultaneously recorded local field potentials (left), single-unit spikes (middle), and multiunit spikes (right), as a function of distance between the channels from which they were recorded. Note that these measures include both signal and noise correlations. (B) Correlation in temporal response profile (left) and speed selectivity indices (SSI, right) between single- and multiunit activity decreases as a function of the separation between the units. Red solid line: vestibular translation, green solid line: visual translation, red dashed line: vestibular rotation, green dashed line: visual rotation. (C) Left: Laminar profile of the current source density (CSD), averaged across recording sessions (n = 14) that exhibited a clearly identifiable source and sink (indicated by black arrow). CSDs from individual recordings were shifted to align the bottom of putative layer IV (0 mm, Methods) before averaging. Right: Average time course of the normalized multiunit response to vestibular (left) and visual motion (right) as a function of cortical depth for all recording sessions (each session includes both protocols), aligned using the CSD as reference. The spatial profile of multiunit SSIs for vestibular (red) and visual (green) motion is shown to the right. SUA = single-unit activity; MUA = multiunit activity.

For single-units and multiunits, a similar distance-dependent decrease was also found separately for correlations in stimulus-induced (signal correlation) and stimulus-independent (noise correlation) components of spike-count variability under both visual and vestibular motion conditions (Supplementary Fig. S11). These results suggest that neighboring neurons likely share similar response properties. Neurons in other regions of parietal cortex have previously been shown to be clustered according to their heading tuning (Chen et al. 2008, 2010). We asked whether there was similar clustering of responses in area 7a based on the temporal response profile and speed selectivity. We found that both the temporal response profile and the SSI values of single-units in response to both translational and rotational motion were more strongly correlated with those of multiunits recorded from the same electrode channel than those from other channels (Fig. 7B), suggesting that translation/rotation selective neurons are clustered in 7a (though not necessarily in a columnar fashion since our electrode arrays were not normal to the cortical surface).
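The distinction between signal and noise correlations can be illustrated with a short sketch. It uses common working definitions, which are assumed here rather than taken from the Methods: signal correlation is the correlation between the trial-averaged responses of two units across stimulus conditions, and noise correlation is the correlation between their trial-to-trial fluctuations after z-scoring within each condition. The function name, the Gaussian stand-in for spike counts, and the trial numbers are hypothetical.

```python
import numpy as np

def signal_and_noise_correlation(counts_a, counts_b):
    """Signal and noise correlation for two simultaneously recorded units.

    counts_*: (n_conditions, n_trials) spike counts; trials must be matched
    across the two units. Assumes nonzero variance within each condition.
    """
    a = np.asarray(counts_a, dtype=float)
    b = np.asarray(counts_b, dtype=float)
    # Signal correlation: similarity of mean responses across conditions.
    r_signal = np.corrcoef(a.mean(axis=1), b.mean(axis=1))[0, 1]
    # Noise correlation: z-score within condition, then pool all trials.
    za = (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, ddof=1, keepdims=True)
    zb = (b - b.mean(axis=1, keepdims=True)) / b.std(axis=1, ddof=1, keepdims=True)
    r_noise = np.corrcoef(za.ravel(), zb.ravel())[0, 1]
    return r_signal, r_noise

# Synthetic example: 4 speeds, 50 trials each (more trials than in the
# experiment, chosen only to make the estimates stable).
rng = np.random.default_rng(0)
n_cond, n_trials = 4, 50
mean_a = np.array([5.0, 10.0, 15.0, 20.0])[:, None]
mean_b = np.array([4.0, 8.0, 12.0, 16.0])[:, None]
shared = 2.0 * rng.standard_normal((n_cond, n_trials))  # common fluctuation
counts_a = mean_a + shared + rng.standard_normal((n_cond, n_trials))
counts_b = mean_b + shared + rng.standard_normal((n_cond, n_trials))

r_signal, r_noise = signal_and_noise_correlation(counts_a, counts_b)
```

Computing both quantities for every pair of units and binning the pairs by electrode separation would give distance profiles for signal and noise correlations.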

To test whether self-motion selectivity is confined to specific layers, we used the laminar profile of the LFP to estimate the layers spanning the electrode array by measuring the CSD (Methods). While we cannot use CSD to tell the precise identity of the different layers, the location of the current sink can be used to identify putative layer IV and thereby the relative locations of the infragranular and supragranular layers (Schroeder 1998; Maier et al. 2011; Self et al. 2013). We used this technique to align CSDs and multiunit responses across different recordings (Fig. 7C). We found no systematic differences in SSI values across layers (one-way ANOVA, vestibular: P = 0.41, visual: P = 0.79). There was also no dependence of SSI on the coordinates of recording locations along the anterior–posterior axis (translational SSI, vestibular: Pearson’s correlation coefficient, r = 0.03, P = 0.72; visual: r = 0.05, P = 0.57; rotational SSI, vestibular: r = −0.07, P = 0.61; visual: r = 0.01, P = 0.63) or the mediolateral axis (translational SSI, vestibular: r = 0.07, P = 0.49; visual: r = 0.11, P = 0.37; rotational SSI, vestibular: r = −0.06, P = 0.52; visual: r = 0.15, P = 0.51). Together, these results suggest that self-motion tuning is not restricted to specific layers or sites in area 7a (see also Supplementary Fig. S12).
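As an illustration of how a current sink can be located from laminar LFPs, the sketch below uses the standard second-spatial-difference approximation of the CSD, in which a sink appears as a negative value. The exact estimator used in the Methods (for example any spatial smoothing or the conductivity value) is not specified in this section, so the function name `csd_second_difference`, the 0.1 mm contact spacing, the unit conductivity, and the synthetic LFP are assumptions.

```python
import numpy as np

def csd_second_difference(lfp, spacing_mm=0.1, conductivity=1.0):
    """Second-difference CSD estimate for interior channels.

    lfp: (n_channels, n_timepoints), channels ordered by depth.
    Returns an (n_channels - 2, n_timepoints) array; row i corresponds to
    channel i + 1. With this sign convention, sinks are negative.
    """
    lfp = np.asarray(lfp, dtype=float)
    second_diff = lfp[2:] - 2.0 * lfp[1:-1] + lfp[:-2]
    return -conductivity * second_diff / spacing_mm ** 2

# Synthetic LFP: a negative deflection centered on channel 8 (0.8 mm depth)
# that peaks 100 ms after stimulus onset.
depth_mm = np.arange(16) * 0.1
t_ms = np.arange(0.0, 300.0, 1.0)
spatial = -np.exp(-((depth_mm - 0.8) ** 2) / (2.0 * 0.15 ** 2))
temporal = np.exp(-((t_ms - 100.0) ** 2) / (2.0 * 20.0 ** 2))
lfp = spatial[:, None] * temporal[None, :]

csd = csd_second_difference(lfp)
t_peak = int(np.argmax(temporal))
# Add 1 to convert from the interior-channel index back to the channel index.
sink_channel = 1 + int(np.argmin(csd[:, t_peak]))
```

Once the sink channel is identified in each session, depth profiles from different sessions can be shifted so that this reference depth coincides before averaging.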

Discussion

In this study, we have presented evidence for a multisensory representation of self-motion in area 7a of the macaque PPC. Our findings extend the literature on self-motion processing in this brain area in 3 ways. First, past studies on speed selectivity of neurons in area 7a used radial optic flow stimuli typically experienced during fore-aft translation (Siegel and Read 1997; Phinney and Siegel 2000), but not during angular rotation. Here we used translational (radially outward) as well as rotational optic flow patterns to investigate the representation of both linear and angular components of self-motion velocity. Second, to our knowledge, sensitivity of area 7a neurons to vestibular inputs has never been explicitly tested. A previous study by Kawano et al. (1980) reported vestibular responses from area 7. However, these recordings were in fact performed in the anterior bank of the superior temporal sulcus (area MST, Kawano et al. 1980; Komatsu and Wurtz 1988, K. Kawano, personal communication, 2016). Here, we used a motion platform with translational and rotational degrees of freedom to investigate representations of self-motion velocity derived from vestibular cues. Finally, for the first time we simultaneously used visually simulated (using optic flow) and real motion (using platform) to study multisensory convergence of self-motion cues during forward translation in area 7a.

Our experiments using visual motion stimuli confirm earlier reports of optic flow sensitive neurons in area 7a (Read and Siegel 1997; Siegel and Read 1997; Merchant et al. 2001; Raffi and Siegel 2007). For radially outward optic flow, the fraction of neurons with a statistically significant response in our data was comparable to previous studies (~30% for Siegel and Read 1997 and Merchant et al. 2001). However, the overall percentage of flow-responsive neurons in previous studies was slightly greater than reported here. This discrepancy is likely because of the greater variety of optic flow patterns that were employed in those studies. For example, Merchant et al. (2001) found that ~60% of neurons in area 7a responded significantly to at least one of their flow fields. In our recordings, we limited the optic flow conditions to those corresponding to natural navigation, that is radially outward flow (simulating forward translation) and rotational flow (simulating yaw rotation) only, which limited the fraction of neurons that could be driven effectively by our stimuli. In addition, recordings in previous studies were carried out using single electrodes, which may have allowed for greater sampling biases in selecting neurons. In contrast, we used linear electrode arrays to increase neuronal yield and we isolated all single-units offline before assessing their responsiveness, thereby reducing sampling bias. The fraction of visually responsive multiunits in our dataset was substantially greater than the responsive fraction of single-units and was more comparable to earlier work (Read and Siegel 1997; Siegel and Read 1997; Phinney and Siegel 2000; Merchant et al. 2001). Moreover, we did observe significant motion-induced (negative) evoked potentials in the LFP for the vast majority of recording sites, implying widespread activation of area 7a by optic flow.

A previous study on speed selectivity for optic flow reported heterogeneous tuning to speed, with individual 7a neurons responding exclusively to low, high, intermediate, or even multiple different speeds (Phinney and Siegel 2000). We did not observe such diversity in speed tuning—responses of most speed-selective neurons in our recordings increased monotonically with speed. Notably, earlier work on area MSTd using radial optic flow under conditions similar to ours also found mostly monotonically increasing responses to movement speed (Duffy and Wurtz 1997). The differences between our findings and those of Phinney and Siegel (2000) are likely due to some fundamental differences between the 2 studies. First, Phinney and Siegel (2000) simulated a wider range of speeds than those used here, which may have contributed to greater diversity in the observed speed tuning profiles. Extending the range of motion to higher speeds might therefore reveal more heterogeneous tuning for both linear and angular speeds. Second, they also used a wider variety of optic flow patterns. It is possible that speed tuning is nonmonotonic for some optic flow patterns. Third, and perhaps most important, their recordings were performed in behaving monkeys that were trained to detect changes in the global structure of optic flow. Sensory representations in the PPC are modulated by attentional factors and are continuously refined during learning (Bucci 2009; Robinson and Bucci 2012). Consequently, the nature of self-motion tuning may have been influenced by the task used by Phinney and Siegel (2000). Future recordings in animals trained to perform tasks such as virtual navigation should be able to examine this possibility.

The experiments using the motion platform revealed that neurons in area 7a are sensitive to both translational and rotational vestibular cues. There is previous indirect evidence for a vestibular influence on neuronal responses in 7a (Brotchie et al. 1995; Snyder et al. 1998). Specifically, head orientation was found to modulate visual responses only when the head rotated at velocities above the vestibular threshold. Here, we demonstrate a direct effect of physical movement (both translation and rotation) on the responses of individual neurons in area 7a. Critically, we find that many neurons signal the amplitude of vestibular translation and/or rotation, indicating that area 7a can use vestibular inputs to convey explicit information about self-motion speed, not just head orientation (Snyder et al. 1998). The origin of vestibular inputs to area 7a may be either from connections with thalamic nuclei that convey vestibular input to the cortex (Ventre and Faugier-Grimaud 1989), or from multimodal areas that project to 7a, such as MSTd and VIP (Pandya and Seltzer 1982; Seltzer and Pandya 1986; Van Essen et al. 1990; Rozzi et al. 2006). Additionally, it is worth noting that area 7a may project directly to the vestibular nuclei (Faugier-Grimaud and Ventre 1989).

Responses to the combined visual–vestibular stimulus tended to be dominated by vestibular, rather than visual inputs (Fig. 2). In some cases, neurons selectively responsive to visual motion were even suppressed when visual motion was paired with platform movement (Fig. 1C, #2). This dominance of vestibular influences on responses was unexpected because area 7a is thought to be largely visual and is generally considered the output of the dorsal visual hierarchy (Andersen 1989; Britten 2008). Moreover, vestibular dominance has not been reported in other multimodal parietal areas, such as MSTd and VIP (Schlack et al. 2002; Bremmer, Klam, et al. 2002; Gu et al. 2008; Chen et al. 2013). The fact that vestibular inputs more strongly dictate neuronal responses in area 7a suggests that local mechanisms may inhibit visual motion inputs when vestibular cues are available. Interestingly, a circuit mechanism involving parvalbumin-expressing interneurons in the mouse PPC has recently been found to help resolve multisensory conflicts in audiovisual tasks (Song et al. 2017). It is possible that area 7a could perform an analogous role in mediating conflicts related to self-motion processing in macaques, although this remains a speculation at this point. Such a role would be consistent with the idea that area 7a conveys self-motion information to navigation circuits, for it would be critical to resolve multisensory conflicts before using self-motion cues to navigate. We also failed to observe multisensory convergence at the level of single neurons. We have previously reported that neuronal responses in macaque areas MSTd and VIP reflect multisensory integration of self-motion signals (Gu et al. 2008; Chen et al. 2013) and we have provided a quantitative assessment of their roles in self-motion perception (Lakshminarasimhan, Pouget et al. 2018). It is therefore possible that, in the presence of multimodal cues, area 7a is limited to resolving cue conflicts, rather than integrating those cues for self-motion estimation.

In both the visual and vestibular conditions, neuronal responses often varied with stimulus amplitude, which we expressed as a function of peak motion speed. This result parallels the outcome of experiments in freely moving rodents that demonstrate representations of speed by neurons in the PPC (Whitlock et al. 2012). However, unlike in rodent PPC, neurons in area 7a did not show diverse preferences for speed. Instead, their responses were more similar to those of “speed cells” reported in the medial entorhinal cortex of rats that exhibit a speed-dependent increase in firing rate (Sargolini et al. 2006; Kropff et al. 2015; Hinman et al. 2016). Our recordings also revealed an important qualitative feature of angular velocity representation in 7a—firing rates increased with rotation speed regardless of the direction of yaw rotation. This finding is reminiscent of a previous study by Siegel and Read (1997), in which a significant fraction of 7a neurons was reportedly selective for the type of motion (rotation/translation, etc.) but not for motion direction. Thus, while the magnitude of angular speed may be decodable from this area, information about rotation direction (CW or CCW) likely has to be obtained from other brain areas.

Although a speed-dependent increase in firing rate was found for both translation and rotation, neuronal populations representing translational and rotational speeds were almost completely nonoverlapping (Table 2C). A potential computational benefit of such a spatially demultiplexed representation is that the translational and rotational components may be decoded independently by reading out the corresponding populations and integrated to generate estimates of linear and angular position respectively. Perhaps, this explains the presence of separate distance and head direction signals distributed across a network of brain regions (Valerio and Taube 2012; Barry and Burgess 2014; Weiss and Derdikman 2018). Such a segregated representation could be important for mediating flexible behaviors where the animals can choose actions based on their orientation or distance to the goal depending on the context. In parallel, circuits mediating path integration behavior such as the medial entorhinal cortex (McNaughton et al. 2006; Burak and Fiete 2009) may also build 2D spatial representations by combining both linear and angular velocity estimates.

The decrease in correlated variability with spatial separation (Fig. 7) supports the notion that neurons in area 7a are spatially clustered with respect to their sensory representation of self-motion. Moreover, the heterogeneity in responses across sites suggests that there may be no clear topographic organization for a specific modality or for the strength of speed tuning (Supplementary Fig. S12). Functional organization of speed tuned neurons has been described in macaque area MT (Liu and Newsome 2002), where penetrations normal to the surface of the cortex reveal clear clustering of neurons according to speed preference, but this organization is not columnar. Similar to our results, they observed that single and multiunit response profiles recorded from the same sites were correlated. In addition to coarse clustering of neuronal responses, there may also be a finer scale in the organization of the underlying circuit. In fact, we observed a striking periodicity in the correlation between LFPs as a function of laminar separation. We used CSD analysis to test if there was a layer-specific encoding of self-motion at the neuronal level. Unlike other areas (Self et al. 2013; Nandy et al. 2017), we found no layer-specific differences in the strength of tuning of single-unit or multiunit response latencies. This is consistent with recent findings from the rat parietal cortex showing that self-motion-tuned neurons are evenly distributed across all depths (Wilber et al. 2017).

Imaging studies in humans have identified candidate areas of the PPC involved in navigation and route planning using virtual reality tasks (Maguire 1998; Rosenbaum et al. 2004; Shelton and Gabrieli 2002; Wolbers et al. 2004; Spiers and Maguire 2006, 2007; Ciaramelli et al. 2010; Cottereau et al. 2017). Similar tasks in macaque monkeys have revealed route-selective neurons in the medial parietal region (Sato et al. 2006, 2010). Our findings complement those studies by demonstrating a potential role for the PPC in self-motion-based, rather than route-based, navigation. Although we focused on coding of self-motion information in this work, PPC could span multiple levels of a hierarchical representation (Chafee and Crowe 2012). In this general framework, single neurons in PPC may carry mixtures of signals at many different time scales, ranging from the momentary sensory inputs reported here to dynamical representations that are modulated by working memory (Constantinidis and Steinmetz 1996; Qi et al. 2010; Rawley and Constantinidis 2010), attention (Steinmetz et al. 1994; Robinson et al. 1995; Constantinidis and Steinmetz 2001a, 2001b; Quraishi et al. 2007; Oleksiak et al. 2011), or learning (Freedman and Assad 2006). The flow of information between brain areas is likely modulated by task demands, which could in turn influence precisely where neuronal responses fall along this continuum. We know relatively little about the neural mechanisms by which interactive environments can dynamically lead to the emergence of cognitive neural signals in the cortex. Future studies will combine complex, naturalistic tasks with normative models of behavior (Lakshminarasimhan, Petsalis, et al. 2018) to understand the flow of information between populations of neurons that encode different task-relevant variables, and how the PPC transforms sensory inputs into a more behaviorally relevant format.

Supplementary Material

Notes

We thank Jing Lin and Jian Chen for assistance in stimulus programming. Conflict of Interest: None declared.

Authors’ Contributions

E.A., K.J.L., G.C.D., and D.E.A. designed the experiment. E.A. and K.J.L. conducted the experiment and analyzed the data. E.A., K.J.L., G.C.D., and D.E.A. wrote the article.

Funding

Simons Collaboration on the Global Brain (#324143), BRAIN Initiative grant U01NS094368 and NIH NIDCD (004260).

References

- Andersen RA. 1989. Visual and eye movement functions of the posterior parietal cortex. Annu Rev Neurosci. 12:377–403.

- Andersen RA, Asanuma C, Essick G, Siegel RM. 1990. Corticocortical connections of anatomically and physiologically defined subdivisions within the inferior parietal lobule. J Comp Neurol. 296:65–113.

- Andersen RA, Essick GK, Siegel RM. 1985. Encoding of spatial location by posterior parietal neurons. Science. 230:456–458.

- Anderson EB, Mitchell JF, Reynolds JH. 2013. Attention-dependent reductions in burstiness and action-potential height in macaque area V4. Nat Neurosci. 16:1125–1131.

- Barrow CJ, Latto R. 1996. The role of inferior parietal cortex and fornix in route following and topographic orientation in cynomolgus monkeys. Behav Brain Res. 75:99–112.

- Barry C, Burgess N. 2014. Neural mechanisms of self-location. Curr Biol. 24:330–339.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. 2002. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 16:1554–1568.

- Bremmer F, Klam F, Duhamel J-R, Ben Hamed S, Graf W. 2002. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 16:1569–1586.

- Britten KH. 2008. Mechanisms of self-motion perception. Annu Rev Neurosci. 31:389–410.

- Britten KH, van Wezel RJA. 1998. Electrical microstimulation of cortical area MST biases heading perception in monkeys. Nat Neurosci. 1:59–63.

- Britten KH, van Wezel RJA. 2002. Area MST and heading perception in macaque monkeys. Cereb Cortex. 12:692–701.

- Brotchie PR, Andersen RA, Snyder LH, Goodman SJ. 1995. Head position signals used by parietal neurons to encode locations of visual stimuli. Nature. 375:232–235.

- Bucci DJ. 2009. Posterior parietal cortex: an interface between attention and learning? Neurobiol Learn Mem. 91:114–120.

- Burak Y, Fiete IR. 2009. Accurate path integration in continuous attractor network models of grid cells. PLoS Comput Biol. 5:e1000291.

- Burgess N, Jeffery KJ, O’Keefe J, Royal Society. 1999. The hippocampal and parietal foundations of spatial cognition. Oxford (UK): Oxford University Press.

- Cavada C, Goldman‐Rakic PS. 1989. Posterior parietal cortex in rhesus monkey: II. Evidence for segregated corticocortical networks linking sensory and limbic areas with the frontal lobe. J Comp Neurol. 287:422–445.

- Chafee MV, Crowe DA. 2012. Thinking in spatial terms: decoupling spatial representation from sensorimotor control in monkey posterior parietal areas 7a and LIP. Front Integr Neurosci. 6:112.

- Chen A, DeAngelis GC, Angelaki DE. 2010. Macaque parieto-insular vestibular cortex: responses to self-motion and optic flow. J Neurosci. 30:3022–3042.

- Chen A, DeAngelis GC, Angelaki DE. 2011. A comparison of vestibular spatiotemporal tuning in macaque parietoinsular vestibular cortex, ventral intraparietal area, and medial superior temporal area. J Neurosci. 31:3082–3094.

- Chen A, DeAngelis GC, Angelaki DE. 2013. Functional specializations of the ventral intraparietal area for multisensory heading discrimination. J Neurosci. 33:3567–3581.

- Chen A, Gu Y, Takahashi K, Angelaki DE, DeAngelis GC. 2008. Clustering of self-motion selectivity and visual response properties in macaque area MSTd. J Neurophysiol. 100:2669–2683.

- Chen LL, Lin LH, Barnes CA, McNaughton BL. 1994. Head-direction cells in the rat posterior cortex—II. Contributions of visual and ideothetic information to the directional firing. Exp Brain Res. 101:24–34.

- Chen LL, Lin LH, Green EJ, Barnes CA, McNaughton BL. 1994. Head-direction cells in the rat posterior cortex—I. Anatomical distribution and behavioral modulation. Exp Brain Res. 101:8–23.

- Ciaramelli E, Rosenbaum RS, Solcz S, Levine B, Moscovitch M. 2010. Mental space travel: damage to posterior parietal cortex prevents egocentric navigation and reexperiencing of remote spatial memories. J Exp Psychol Learn Mem Cogn. 36:619–634.

- Cohen YE, Andersen RA. 2002. A common reference frame for movement plans in the posterior parietal cortex. Nat Rev Neurosci. 3:553–562.

- Constantinidis C, Steinmetz MA. 1996. Neuronal activity in posterior parietal area 7a during the delay periods of a spatial memory task. J Neurophysiol. 76:1352–1355.

- Constantinidis C, Steinmetz MA. 2001a. Neuronal responses in area 7a to multiple-stimulus displays: I. Neurons encode the location of the salient stimulus. Cereb Cortex. 11:581–591.

- Constantinidis C, Steinmetz MA. 2001b. Neuronal responses in area 7a to multiple stimulus displays: II. Responses are suppressed at the cued location. Cereb Cortex. 11:592–597.

- Cottereau BR, Smith AT, Rima S, Fize D, Héjja-Brichard Y, Renaud L, Lejards C, Vayssière N, Trotter Y, Durand JB. 2017. Processing of egomotion-consistent optic flow in the rhesus macaque cortex. Cereb Cortex. 27:330–343.

- Ding SL, Van Hoesen G, Rockland KS. 2000. Inferior parietal lobule projections to the presubiculum and neighboring ventromedial temporal cortical areas. J Comp Neurol. 425:510–530.

- Dubowitz DJ, Scadeng M. 2011. A frameless stereotaxic MRI technique for macaque neuroscience studies. Open Neuroimag J. 5:198–205.

- Duffy CJ, Wurtz RH. 1997. Medial superior temporal area neurons respond to speed patterns in optic flow. J Neurosci. 17:2839–2851.

- Etienne AS, Jeffery KJ. 2004. Path integration in mammals. Hippocampus. 14:180–192.

- Faugier-Grimaud S, Ventre J. 1989. Anatomic connections of inferior parietal cortex (area 7) with subcortical structures related to vestibulo-ocular function in a monkey (Macaca fascicularis). J Comp Neurol. 280:1–14.

- Freedman DJ, Assad JA. 2006. Experience-dependent representation of visual categories in parietal cortex. Nature. 443:85–88.

- Frey S, Pandya DN, Chakravarty MM, Bailey L, Petrides M, Collins DL. 2011. An MRI based average macaque monkey stereotaxic atlas and space (MNI monkey space). Neuroimage. 55:1435–1442.

- Gu Y, Angelaki DE, DeAngelis GC. 2008. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 11:1201–1210.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. 2006. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 26:73–85.

- Hartigan JA, Hartigan PM. 1985. The dip test of unimodality. Ann Stat. 13:70–84.

- Hinman JR, Brandon MP, Climer JR, Chapman GW, Hasselmo ME. 2016. Multiple running speed signals in medial entorhinal cortex. Neuron. 91:666–679.

- Kaas JH, Gharbawie OA, Stepniewska I. 2013. Cortical networks for ethologically relevant behaviors in primates. Am J Primatol. 75:407–414.

- Kawano K, Sasaki M, Yamashita M. 1980. Vestibular input to visual tracking neurons in the posterior parietal association cortex of the monkey. Neurosci Lett. 17:55–60.

- Kobayashi Y, Amaral DG. 2000. Macaque monkey retrosplenial cortex: I. Three-dimensional and cytoarchitectonic organization. J Comp Neurol. 426:339–365.

- Kobayashi Y, Amaral DG. 2003. Macaque monkey retrosplenial cortex: II. Cortical afferents. J Comp Neurol. 466:48–79.

- Kolb B, Buhrmann K, McDonald R, Sutherland RJ. 1994. Dissociation of the medial prefrontal, posterior parietal, and posterior temporal cortex for spatial navigation and recognition memory in the rat. Cereb Cortex. 4:664–680.

- Komatsu H, Wurtz RH. 1988. Relation of cortical areas MT and MST to pursuit eye movements. I. Localization and visual properties of neurons. J Neurophysiol. 60:580–603.

- Kondo H, Saleem KS, Price JL. 2005. Differential connections of the perirhinal and parahippocampal cortex with the orbital and medial prefrontal networks in macaque monkeys. J Comp Neurol. 493:479–509.

- Kravitz DJ, Saleem KS, Baker CI, Mishkin M. 2011. A new neural framework for visuospatial processing. Nat Rev Neurosci. 12:217–230.

- Kropff E, Carmichael JE, Moser MB, Moser EI. 2015. Speed cells in the medial entorhinal cortex. Nature. 523:419–424.

- Lakshminarasimhan KJ, Petsalis M, Park H, DeAngelis GC, Pitkow X, Angelaki DE. 2018. A dynamic Bayesian observer model reveals origins of bias in visual path integration. Neuron. 99:1–13.

- Lakshminarasimhan KJ, Pouget A, DeAngelis GC, Angelaki DE, Pitkow X. 2018. Inferring decoding strategies for multiple correlated neural populations. PLoS Comput Biol. 14:e1006371.

- Liu J, Newsome WT. 2002. Functional organization of speed tuned neurons in visual area MT. J Neurophysiol. 89:246–256.

- Maciokas JB, Britten KH. 2010. Extrastriate area MST and parietal area VIP similarly represent forward headings. J Neurophysiol. 104:239–247.

- Maguire EA. 1998. Knowing where and getting there: a human navigation network. Science. 280:921–924.

- Maier A, Aura CJ, Leopold DA. 2011. Infragranular sources of sustained local field potential responses in macaque primary visual cortex. J Neurosci. 31:1971–1980.