Abstract

A new hybrid intelligent model was developed for estimating the compressive strength (CS) of ground granulated blast furnace slag (GGBFS) concrete, and the synergistic benefits of the hybrid algorithm over a single algorithm were verified. Using 269 data points collected from previous experimental studies, artificial neural network (ANN) models were constructed with three different learning algorithms, namely back-propagation (BP), particle swarm optimization (PSO), and a new hybrid PSO-BP algorithm. Their performance was evaluated in terms of prediction accuracy, efficiency, and stability through a threefold procedure. The PSO-BP neural network model was found to be superior to the simple ANNs trained by a single algorithm and suitable for predicting the CS of GGBFS concrete.

Keywords: ground granulated blast furnace slag concrete, artificial neural network, particle swarm optimization, back-propagation, hybrid PSO-BP

1. Introduction

Numerous researchers have attempted to enhance the sustainability of concrete by not only reducing the amount of carbon dioxide (CO2) generated by the production of Portland cement, but also increasing the durability of concrete, which benefits the environment through the conservation of resources and the reduction of waste [1]. One commonly used strategy is to use recycled aggregates and mineral admixtures, such as fly ash, ground granulated blast furnace slag (GGBFS), and silica fume, as a partial replacement for cement or aggregate in concrete [1,2,3]. The use of such industrial by-products has been found to improve the mechanical properties and durability of concrete, reduce CO2 emissions, conserve energy, and mitigate the adverse environmental effects of concrete [4].

Blast furnace slag is a by-product obtained in the production of iron in a blast furnace. When molten blast furnace slag is quenched with water and finely ground to cement particle size, it is transformed into GGBFS. GGBFS, as a latent hydraulic material, reacts with calcium hydroxide (Ca(OH)2) in the presence of water to form calcium silicate hydrate (C-S-H), which is primarily responsible for the strength of cement-based materials [5,6]. Because of this pozzolanic reaction, using GGBFS as a supplementary cementitious material may reduce the early strength, but it increases the ultimate strength and significantly improves the microstructure and durability of hardened concrete [7,8,9].

Several empirical equations and mathematical models have been developed for estimating the compressive strength (CS) and other properties of concrete in order to reduce the experimental work required for concrete mix design [10,11,12]. These equations are generally regression equations based on the results of a series of experiments. However, selecting a suitable regression form (linear, nonlinear, exponential, etc.) for each analysis requires considerable experience and multiple techniques, and the accuracy of the analysis decreases as the number of explanatory variables increases [13,14,15]. In recent years, such relationships have been modeled numerically by constructing artificial neural network (ANN) models, which can learn and generalize from examples through trial and error without any presumptions about the form of the relationship [13,16]. ANNs can not only produce correct or nearly correct solutions to incomplete tasks, but also generate useful results even when the data are poor or insufficient [17,18]. Owing to these advantages, numerous researchers have applied ANNs to predict the CS and other properties of concrete [4,19,20,21]. Bilim (2009) [21] used ANN models trained by several different back-propagation (BP) algorithms to predict the CS of GGBFS concrete based on the concrete ingredients and age. Boukhatem et al. (2011) [22] used ANNs to investigate the efficiency factor of GGBFS with respect to concrete strength.

In most studies employing ANN models to estimate concrete properties, a BP algorithm was used to train the network [19,20,21]. Nevertheless, the BP algorithm has some disadvantages: it can easily become trapped in local minima depending on the choice of initial parameters, and it may be unreliable (with low prediction accuracy), depending on the training data [23,24]. Combinations of BP with several metaheuristic algorithms have been proposed to overcome these drawbacks. Among the metaheuristic algorithms, particle swarm optimization (PSO) has often been integrated with the BP algorithm to improve the performance of predictive models because of its simplicity and wide applicability. The hybrid PSO-BP algorithm uses the global search ability of the PSO algorithm and the fast convergence of the BP algorithm, so that ANN models trained with it can converge to the true global optimum more accurately and rapidly than models trained with a single algorithm. The effectiveness and superiority of this hybrid algorithm have been demonstrated in various fields [25,26,27,28]. Bo et al. (2017) [27] proposed a hybrid PSO-BP neural network for wind power forecasting and compared its performance with that of a network trained by the conventional BP algorithm; the hybrid algorithm gave better predictions than the basic BP algorithm. Wang et al. (2015) [28] used a PSO-BP neural network to enhance the performance of an integrated navigation system and showed that neural networks trained with the hybrid PSO-BP algorithm can estimate and compensate for the navigation error more effectively than conventional neural networks. However, few studies have used hybrid algorithms to develop ANN models for predicting concrete properties.

In this study, three different ANN models using the BP, PSO, and hybrid PSO-BP algorithms were developed for predicting the CS of GGBFS concrete based on the concrete mix ingredients and curing temperature. The prediction results of these models were compared to investigate the benefits of combining the BP and PSO algorithms and to select the best intelligent system for estimating the strength of GGBFS concrete.

2. Database

Developing ANN-based models for predicting the CS of GGBFS-incorporated concrete requires a database for training and testing the prediction model. The 269 experimental data points used in this study were collected from several reports [11,29,30,31,32,33,34,35,36,37]. All of the data contained complete information on the mix proportions, curing condition, and experimental CS of GGBFS concrete. The variables were selected according to the information available in all of the data samples. The input parameters were the curing temperature (T), water to binder ratio (w/b), GGBFS to total binder ratio (GGBFS/B), water (W), fine aggregate (FA), coarse aggregate (CA), and superplasticizer (SP). The output variable was the CS at 28 days, which ranged from 17.2 to 77 MPa. Details regarding the chemical and mechanical properties of the concrete components are presented in [11,29,30,31,32,33,34,35,36,37]. Table 1 presents the minimum and maximum values of each parameter, and Appendix A presents the full database.

Table 1.

Ranges of the input and output parameters in the database.

| Parameter | Symbol | Unit | Category | Min | Max |
|---|---|---|---|---|---|
| Curing temperature | T | °C | Input | 5 | 75 |
| Water to binder ratio | w/b | % | Input | 25 | 88.9 |
| Water | W | kg/m³ | Input | 128 | 295 |
| GGBFS to total binder ratio | GGBFS/B | % | Input | 0 | 85 |
| Fine aggregate | FA | kg/m³ | Input | 395 | 947 |
| Coarse aggregate | CA | kg/m³ | Input | 723 | 1135 |
| Superplasticizer | SP | % | Input | 0 | 2.9 |
| Compressive strength | CS | MPa | Output | 17.2 | 77 |

3. Methodology

3.1. Artificial Neural Network

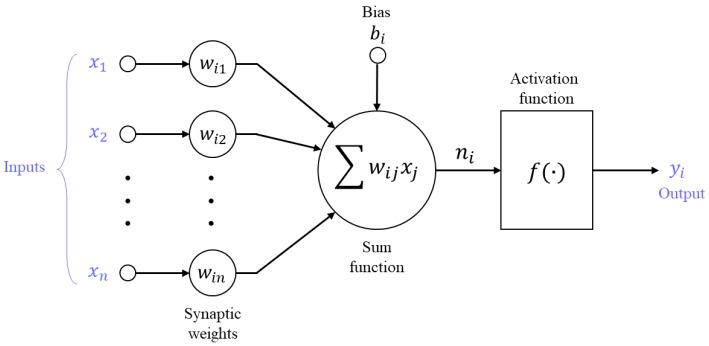

ANNs are massively parallel systems composed of simple, highly interconnected processing units, i.e., artificial neurons, that process information. ANNs are effective for engineering applications and have been widely used to solve diverse problems because of their ability to learn from examples [16,38]. ANNs can be classified into different types depending on their architecture and information flow [15]. Among them, the multilayer feedforward network, consisting of an input layer, one or more hidden layers, and an output layer, is the most commonly used; in this network, the neurons in each layer are connected only to the neurons of the successive layer, not to neurons in the same layer [15,17]. Every node in a layer is connected to the nodes in the adjacent layers with different weights. The typical elements of a neuron are shown in Figure 1: inputs, a summation function, an activation function, a bias, and an output. In every neuron except the input neurons, the signals from the previous layer ($x_i$) are multiplied by associated adaptive weights ($w_{ij}$), which indicate the strength of the connection between the neuron and a particular input, and the summation function is then applied to the weighted signals [39]. Finally, the bias of the neuron ($b_j$) is added to the aggregated signal, which forms the net input of the neuron ($n_j$). This process can be expressed mathematically as:

$$n_j = \sum_{i} w_{ij}\, x_i + b_j \qquad (1)$$

Figure 1.

Artificial neuron model.

The output ($y_j$) of the neuron is then obtained by applying an activation function ($f$) to the net input ($n_j$):

$$y_j = f\left(n_j\right) \qquad (2)$$

The activation function limits the amplitude of a neuron's output to a manageable range and introduces nonlinearity into the neuron. The hyperbolic tangent function is commonly used as the activation function in multilayer models [15].
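Equations (1) and (2) can be illustrated with a minimal Python sketch of a single neuron and of a layer of neurons. The function names `neuron_output` and `layer_output` and the list-based representation are hypothetical choices for illustration only; the models in this study were implemented in MATLAB.

```python
import math


def neuron_output(x, w, b, f=math.tanh):
    """Output y_j of one neuron j, following Eqs. (1) and (2).

    x: input signals x_i from the previous layer
    w: weights w_ij linking each input i to neuron j
    b: bias b_j of neuron j
    f: activation function (hyperbolic tangent by default)
    """
    # Eq. (1): net input n_j = sum_i w_ij * x_i + b_j
    n = sum(wi * xi for wi, xi in zip(w, x)) + b
    # Eq. (2): output y_j = f(n_j)
    return f(n)


def layer_output(x, weights, biases, f=math.tanh):
    """Outputs of a whole layer; each row of `weights` feeds one neuron."""
    return [neuron_output(x, w_row, b_j, f) for w_row, b_j in zip(weights, biases)]


# Two inputs feeding one tanh neuron: n = 0.8*0.5 + 0.2*(-1.0) + 0.1 = 0.3
y = neuron_output([0.5, -1.0], [0.8, 0.2], 0.1)
```

With a linear activation such as `f=lambda n: n`, the same function represents the linear output neuron used in the CS models of Section 4.1.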

Training an ANN is the process of updating the connection weights and biases so that the network exhibits the desired behavior. During training, the network parameters are adjusted iteratively using the given training examples to minimize an error function, often the root mean squared error (RMSE), so that the outputs equal or are close to the targets [39,40]. Instead of following a set of rules specified by experts, ANNs automatically learn the underlying rules from the given examples [41]. The procedure used to train the network is called the learning algorithm.

3.2. Back-Propagation

The BP algorithm is the most widely used algorithm for training ANNs [42]. It is a gradient-based procedure that minimizes the error between the network outputs and the desired outputs by adjusting the weights and biases by a small amount at a time [15,17]. It comprises two stages: a forward stage and a backward stage. In the forward stage, the input signals move forward through the network and the error is calculated at the output layer. Subsequently, the error is propagated backward from the output layer to the input layer, and the parameters are updated in the direction in which the performance function decreases most rapidly [40,42]. The change in the weights at each iteration is calculated as follows:

$$\Delta w_{ij}(k) = -\eta\,\frac{\partial E}{\partial w_{ij}} + \alpha\,\Delta w_{ij}(k-1) \qquad (3)$$

where $w_{ij}$ is the weight, $\Delta w_{ij}(k)$ and $\Delta w_{ij}(k-1)$ are the changes in the weight at iterations $k$ and $k-1$, $E$ is the error function, $\alpha$ is the momentum factor, and $\eta$ is the learning rate. The entire procedure is repeated until the performance of the network reaches an acceptable level.
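As a minimal sketch of the momentum update in Eq. (3), the loop below applies it to a single weight on a one-dimensional toy error function. In an actual network, the gradient would come from the backward pass; the toy function, the helper name `momentum_descent`, and the parameter values are assumptions for illustration. Note also that the BP ANNs in this study were trained with the Levenberg–Marquardt variant (Section 4.1) rather than with this plain momentum rule.

```python
def momentum_descent(grad, w0, eta=0.1, alpha=0.5, iters=200):
    """Iterate the weight-update rule of Eq. (3) for a single weight.

    grad:  function returning dE/dw at the current weight
    eta:   learning rate
    alpha: momentum factor
    """
    w = w0
    dw_prev = 0.0  # the previous change is zero before the first iteration
    for _ in range(iters):
        # Eq. (3): dw(k) = -eta * dE/dw + alpha * dw(k-1)
        dw = -eta * grad(w) + alpha * dw_prev
        w += dw
        dw_prev = dw
    return w


# Toy error E(w) = (w - 3)^2, whose gradient is 2(w - 3); the minimum is at w = 3
w_opt = momentum_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
```

The momentum term reuses part of the previous step, which smooths the trajectory and can speed up progress along shallow directions of the error surface.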

3.3. Particle Swarm Optimization

PSO is a stochastic optimization technique for finding the best solution, which is inspired by the social behavior of biological organisms to locate desirable positions in a given area through cooperation and competition [43,44,45,46]. In PSO, some entities, called particles, are scattered in the search space, and the position of each particle represents a possible solution to the optimization problem in the n-dimensional search space [46,47]. Each particle moves iteratively through the problem space to find the optimal locations, while remembering the best position it has ever visited and communicating with other particles.

The position and velocity of the particles are randomly initialized at the beginning of the process and, during every iteration, each particle accelerates toward its own personal best solution discovered so far, as well as the global best position found thus far across the whole population [48]. The velocity and position of each particle are updated via the following equations at every step t [49]:

$$v_i(t+1) = w\,v_i(t) + c_1 r_1\left[p_i - x_i(t)\right] + c_2 r_2\left[g - x_i(t)\right] \qquad (4)$$

$$x_i(t+1) = x_i(t) + v_i(t+1) \qquad (5)$$

where $v_i(t+1)$, $v_i(t)$, $x_i(t+1)$, and $x_i(t)$ represent the new velocity, current velocity, new position, and current position of particle $i$, respectively. $r_1$ and $r_2$ are random numbers uniformly distributed in the range (0, 1) [50], which give the particles good exploration ability in the search space. $c_1$ and $c_2$ are acceleration coefficients, which represent the strength of attraction toward the personal best position ($p_i$) and the global best position ($g$), respectively [50,51]. The new velocity $v_i(t+1)$ is computed from the current velocity multiplied by the inertia weight $w$ and from the distances between the current position and the personal and global best positions. The particle position $x_i(t+1)$ is then adjusted according to the newly computed velocity. Subsequently, the fitness of each updated position is evaluated, and the personal and global best positions are updated at each iteration. This process is repeated until the expected position is obtained or the termination criteria are satisfied.
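A compact Python sketch of the velocity and position updates of Eqs. (4) and (5) follows. It minimizes a generic cost function; in a PSO ANN, each particle position would be the flattened vector of network weights and biases, and the cost would be the training error. The helper name `pso_minimize`, the fixed inertia weight of 0.7 (the study drew the inertia weight randomly in (0, 1)), c1 = c2 = 1.5, the sphere test function, and the immediate (asynchronous) update of the global best are illustrative assumptions.

```python
import random


def pso_minimize(cost, dim, n_particles=30, iters=200, w=0.7, c1=1.5, c2=1.5,
                 bounds=(-5.0, 5.0), seed=0):
    """Minimize `cost` over `dim` variables using Eqs. (4) and (5)."""
    rng = random.Random(seed)
    lo, hi = bounds
    span = hi - lo
    # Random initial positions and small random initial velocities
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[rng.uniform(-0.1 * span, 0.1 * span) for _ in range(dim)]
         for _ in range(n_particles)]
    p_best = [xi[:] for xi in x]               # personal best positions p_i
    p_cost = [cost(xi) for xi in x]
    best = min(range(n_particles), key=lambda i: p_cost[i])
    g_best, g_cost = p_best[best][:], p_cost[best]  # global best position g
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Eq. (4): inertia + pull toward p_i + pull toward g
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (p_best[i][d] - x[i][d])
                           + c2 * r2 * (g_best[d] - x[i][d]))
                # Eq. (5): move the particle with its new velocity
                x[i][d] += v[i][d]
            f = cost(x[i])
            if f < p_cost[i]:                  # update personal best
                p_best[i], p_cost[i] = x[i][:], f
                if f < g_cost:                 # update global best
                    g_best, g_cost = x[i][:], f
    return g_best, g_cost


# Sphere test function with its minimum of 0 at the origin
best_pos, best_cost = pso_minimize(lambda z: sum(t * t for t in z), dim=3)
```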

3.4. Hybrid PSO-BP Algorithm

The hybrid PSO-BP algorithm proposed herein is an optimization method that combines PSO with BP. Although the BP algorithm is the most widely used training algorithm for ANNs, it can easily fall into a local optimum, and its performance depends on the initial weights of the ANN [23,27]. If the initial weights and biases are far from the values that yield the global optimum, the ANN may become stuck in a local minimum [23]. Many researchers have combined the BP algorithm with metaheuristic optimization algorithms, such as PSO, genetic algorithms, and harmony search, to overcome these shortcomings and improve model accuracy [23,45,52]. Among them, PSO has often been used to improve the BP training of ANNs because of its simplicity and wide applicability [27,52].

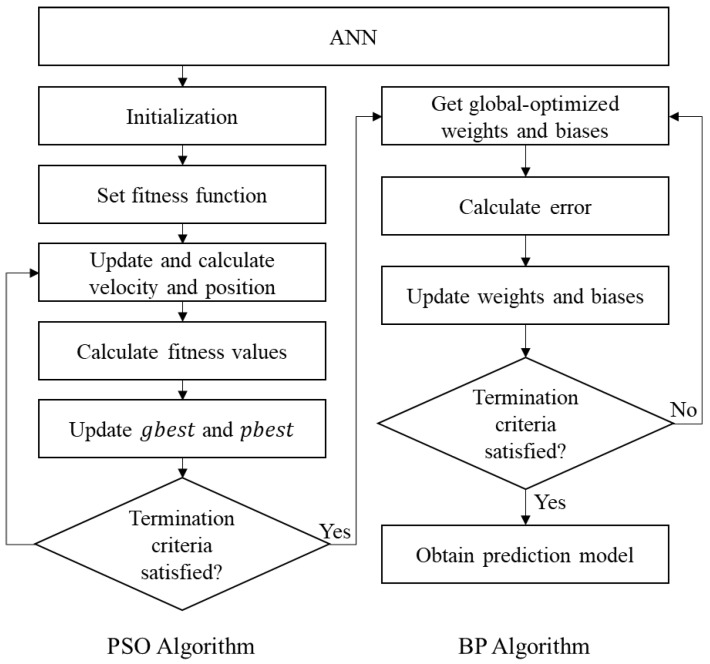

The hybrid PSO-BP algorithm employs the global search ability of the PSO algorithm to obtain initial weights and biases that can lead the network to converge to the global minimum of the error function, and it then exploits the fast convergence of the BP algorithm. The near-global-optimal initial weights and biases obtained by the PSO algorithm are used as the starting point for BP training, which searches for the true global optimum and improves the performance of the ANN. Figure 2 describes the overall calculation process of the PSO-BP algorithm used in this study. Section 4 provides details on the determination of the parameters and the modeling of the PSO-BP ANN for predicting the CS of concrete.
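The two-stage logic of the hybrid algorithm can be sketched on a deliberately small problem: a short PSO run supplies the starting point, and a gradient method refines it. Here a two-parameter linear model stands in for the ANN weights and biases, and plain gradient descent stands in for the Levenberg–Marquardt BP training used in this study; both substitutions, the helper names (`pso_stage`, `gradient_stage`), the toy data, and all parameter values are assumptions made to keep the example short.

```python
import random


def mse(params, xs, ys):
    """Mean squared error of the toy linear model y = a*x + b."""
    a, b = params
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def pso_stage(cost, dim, n_particles=10, iters=30, w=0.7, c1=1.5, c2=1.5, seed=1):
    """Stage 1: short PSO run that returns a near-optimal starting point."""
    rng = random.Random(seed)
    x = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]  # zero initial velocity for brevity
    p_best = [xi[:] for xi in x]
    p_cost = [cost(xi) for xi in x]
    g_cost = min(p_cost)
    g_best = p_best[p_cost.index(g_cost)][:]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d] + c1 * r1 * (p_best[i][d] - x[i][d])
                           + c2 * r2 * (g_best[d] - x[i][d]))
                x[i][d] += v[i][d]
            f = cost(x[i])
            if f < p_cost[i]:
                p_best[i], p_cost[i] = x[i][:], f
                if f < g_cost:
                    g_best, g_cost = x[i][:], f
    return g_best


def gradient_stage(params, xs, ys, lr=0.1, steps=2000):
    """Stage 2: gradient-descent refinement started from the PSO result."""
    a, b = params
    n = len(xs)
    for _ in range(steps):
        residuals = [a * x + b - y for x, y in zip(xs, ys)]
        grad_a = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


xs = [i / 10 for i in range(11)]
ys = [2.0 * x + 1.0 for x in xs]        # noise-free data from a = 2, b = 1
start = pso_stage(lambda p: mse(p, xs, ys), dim=2)
a_fit, b_fit = gradient_stage(start, xs, ys)
```

On this convex toy problem either stage alone would succeed; the sketch only shows how the PSO output is handed to the gradient stage as its initial point, which is where the hybrid approach pays off on non-convex ANN error surfaces.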

Figure 2.

Flowchart for the hybrid particle swarm optimization-back-propagation (PSO-BP) algorithm.

4. Development of CS Prediction Models

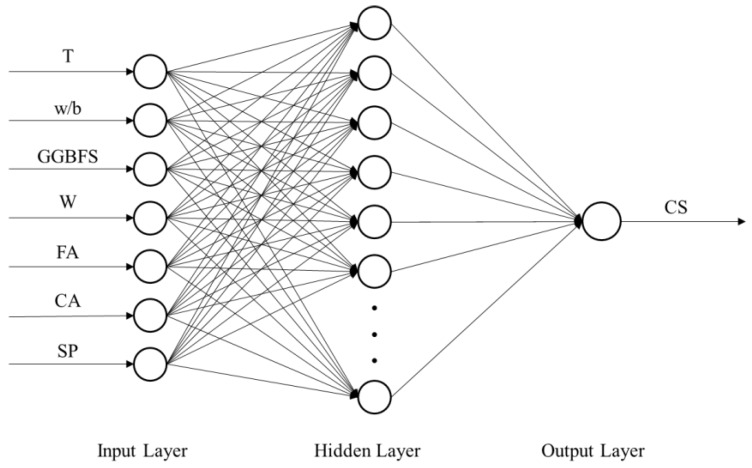

This section presents the procedures for developing the ANN models using the BP, PSO, and PSO-BP algorithms for predicting the CS of GGBFS concrete. As mentioned previously, the curing temperature (T), water to binder ratio (w/b), GGBFS to total binder ratio (GGBFS/B), water (W), fine aggregate (FA), coarse aggregate (CA), and superplasticizer (SP) were used as the input parameters of the CS prediction models. To construct and evaluate the network models, the dataset was divided into training and testing sets: 80% of the data were used for training and the remaining 20% for testing. For the BP algorithm, 10% of the training dataset was used for validation. The test set was not used in training; it was used only to evaluate the generalization performance of the developed networks. All of the models presented in this study were developed using MATLAB R2018a.
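For the 269 samples, an 80/20 split gives 215 training and 54 testing samples, and 10% of the training portion (about 21 or 22 samples) is held out for validation. The hypothetical helper `split_indices` below sketches such a split in Python; the random permutation and the rounding rule are assumptions, and MATLAB's own data-division functions may round differently.

```python
import random


def split_indices(n, train_frac=0.8, val_frac=0.1, seed=0):
    """Randomly split sample indices into training, validation, and test sets.

    val_frac is a fraction of the training portion (used only for BP training).
    """
    rng = random.Random(seed)
    idx = list(range(n))
    rng.shuffle(idx)
    n_train = round(train_frac * n)        # 80% of the data for training
    train, test = idx[:n_train], idx[n_train:]
    n_val = round(val_frac * n_train)      # 10% of the training data for validation
    return train[n_val:], train[:n_val], test


train_idx, val_idx, test_idx = split_indices(269)
```

Calling the helper with a different `seed` produces a different split, which is one way to generate the repeated runs with different training and testing data described in Section 5.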

4.1. BP ANN

Several BP algorithms can be used to train ANNs, such as the Powell–Beale conjugate gradient, BFGS quasi-Newton, and Bayesian regularization algorithms. Among these, the Levenberg–Marquardt algorithm, which is the most commonly used owing to its high speed and robustness, was adopted in this study [53,54]. It has been used to develop predictive models for concrete properties, and its effectiveness compared with other BP algorithms has been demonstrated [14,15,21].

Before training, all input and target values were normalized to the range [−1, 1] using the following equation:

$$x_n = \frac{2\left(x - x_{\min}\right)}{x_{\max} - x_{\min}} - 1 \qquad (6)$$

where $x$ and $x_n$ represent the raw and normalized values, respectively, and $x_{\max}$ and $x_{\min}$ indicate the largest and smallest values of $x$, respectively. Normalizing the data can improve the efficiency of learning and simplify the design procedure [39].
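A minimal Python sketch of Eq. (6) and its inverse follows; the inverse is needed to convert normalized network outputs back to MPa. The function names and the example temperatures (chosen to span the range in Table 1) are illustrative.

```python
def normalize(values):
    """Map raw values linearly onto [-1, 1] following Eq. (6)."""
    x_min, x_max = min(values), max(values)
    scaled = [2.0 * (x - x_min) / (x_max - x_min) - 1.0 for x in values]
    return scaled, x_min, x_max


def denormalize(scaled, x_min, x_max):
    """Invert Eq. (6) to recover values in their original units."""
    return [(s + 1.0) * (x_max - x_min) / 2.0 + x_min for s in scaled]


# Curing temperatures spanning the range in Table 1 (5 to 75 °C)
t_scaled, t_min, t_max = normalize([5.0, 20.0, 40.0, 75.0])
```

Here 5 °C maps to −1, 75 °C maps to 1, and the midpoint of 40 °C maps to 0.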

The performance of ANNs depends strongly on the network architecture and parameters, including the number of hidden layers, number of neurons in each hidden layer, and activation functions. According to various researchers, ANNs with only one hidden layer can solve almost all engineering problems [55,56,57] and generally produce excellent results [53]. Therefore, all of the ANN-based predictive models that were constructed in this study had a single hidden layer. Figure 3 shows the architecture of the CS prediction ANN models. The hyperbolic tangent function and linear function were used as the activation functions of the hidden and output neurons, respectively.

Figure 3.

Architecture of the compressive strength (CS) prediction neural network model.

As highlighted by several researchers, determining the number of neurons in the hidden layer (Nh) is a critical task because this number significantly affects the performance of ANNs. However, there is no theoretical rule for selecting the proper value of Nh, so it was determined by trial and error in this study. Several ANNs were constructed with Nh values within a reasonable range based on previously proposed empirical equations [55,58,59,60,61,62], and their performance was evaluated using the coefficient of determination (R2) to obtain the optimal value. Table 2 presents the equations used to determine the Nh range for the CS model. Based on these equations, Nh values from 2 to 21 were considered for the CS prediction BP ANN. Each model with a different Nh value was run 10 times, and the average R2 values for the training and testing sets were computed to determine the optimal number of hidden neurons. The BP model with 15 hidden neurons exhibited the best performance; thus, a 7-15-1 architecture was adopted for the BP ANN models in this study. Further details regarding the specifications of the best BP ANN model for predicting the CS are presented later.

Table 2.

Empirical equations for the number of hidden neurons (Nh).

| Empirical Equation | Author (Year) | Reference |
|---|---|---|
| 0.75Ni | Neville (1986) | [58] |
| 2Ni + 1 | Hecht-Nielsen (1987) | [55] |
| 3Ni | Hush (1989) | [59] |
| 2Ni | Gallant (1993) | [60] |
| Ni + 1 | Tamura (1997) | [61] |
| (4Ni² + 3)/(Ni² − 8) | Sheela (2013) | [62] |

Ni is the number of input neurons.
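To see how the rules in Table 2 translate into a search range for the seven inputs used here (Ni = 7), they can be evaluated directly. The helper `hidden_neuron_estimates` below is a hypothetical illustration. For Ni = 7, the rules give values between about 4.85 (Sheela) and 21 (Hush), so the range of 2 to 21 used for the BP ANN covers this span.

```python
def hidden_neuron_estimates(ni):
    """Evaluate the empirical rules of Table 2 for ni input neurons."""
    return {
        "Neville (1986)": 0.75 * ni,
        "Hecht-Nielsen (1987)": 2 * ni + 1,
        "Hush (1989)": 3 * ni,
        "Gallant (1993)": 2 * ni,
        "Tamura (1997)": ni + 1,
        "Sheela (2013)": (4 * ni**2 + 3) / (ni**2 - 8),
    }


# Seven inputs: T, w/b, GGBFS/B, W, FA, CA, SP
estimates = hidden_neuron_estimates(7)
low, high = min(estimates.values()), max(estimates.values())
```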

4.2. PSO ANN

The PSO ANN is the ANN model trained by the PSO algorithm, in which the position of each particle represents the weights and biases of the ANN. The parameters associated with PSO and the ANN must be selected properly to achieve the best performance of the PSO ANN. However, the parameters that minimize the cost function differ from case to case, and there is no theoretical approach for identifying the optimal values. In this study, to construct a robust and accurate predictive model, the ANN parameter (i.e., the network architecture) and the PSO parameters, including the number of particles in the swarm (Nop) and the acceleration coefficients (c1, c2), were determined through parametric analyses. The inertia weight (w), another PSO parameter, was taken as a random number within the range (0, 1) [25,63]. Various values suggested in previous studies were considered to find the optimal parameters, as shown in Table 3.

Table 3.

Values of the PSO parameters considered.

| Acceleration Coefficients (c1, c2) | Acceleration Coefficients (c1, c2) | Swarm Size (Nop) | Number of Hidden Neurons (Nh) |
|---|---|---|---|
| c1 = 0.8, c2 = 3.2 | c1 = 3.2, c2 = 0.8 | 10 | 2–21 |
| c1 = 1.333, c2 = 2.667 | c1 = 2, c2 = 1.5 | 20 | |
| c1 = 1.714, c2 = 2.286 | c1 = 2, c2 = 1 | 30 | |
| c1 = 2, c2 = 2 | c1 = 1, c2 = 2 | 40 | |
| c1 = 2.286, c2 = 1.714 | c1 = 1.5, c2 = 2 | 50 | |
| c1 = 1.333, c2 = 2.667 | c1 = 1.5, c2 = 1.5 | 100 | |

Each time a network was trained, training was stopped when either termination criterion was satisfied, i.e., the number of iterations reached the limit of 2000 or the improvement in the cost function was less than $10^{-8}$ over 100 successive iterations [25]. Each model with a different parameter set was trained five times, and R2 was calculated as the performance measure for the training and testing data in every run. The best model was selected according to the average R2 values, using the same method described in the previous section. The best result was obtained when the number of hidden neurons was 15 (as in the case of the BP ANN), the swarm size was 30, and c1 and c2 were 1.5 and 2.5, respectively.
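The two termination criteria can be written as a small check evaluated after each iteration. This Python sketch assumes that "improvement over 100 successive iterations" means the total decrease of the best cost across the last 100 iterations; the function name `should_stop` is hypothetical.

```python
def should_stop(cost_history, max_iter=2000, tol=1e-8, patience=100):
    """Return True when either termination criterion is met.

    cost_history: best cost recorded after each completed iteration
    """
    # Criterion 1: iteration limit reached
    if len(cost_history) >= max_iter:
        return True
    # Criterion 2 (assumed reading): total improvement over the last
    # `patience` iterations is smaller than `tol`
    if len(cost_history) > patience:
        improvement = cost_history[-patience - 1] - cost_history[-1]
        return improvement < tol
    return False
```

A stalled run such as `should_stop([1.0] * 101)` stops, whereas a run whose best cost is still falling, such as `[1.0 / (i + 1) for i in range(150)]`, continues.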

4.3. PSO-BP ANN

Hybrid algorithms combining PSO and BP have been used in ANNs to solve several engineering problems owing to their fast convergence and global optimization capability. In the PSO-BP network model, the PSO algorithm searches for near-global-optimal initial weights and biases, which replace random initial weights in the BP training of the ANN. The parameters associated with both algorithms were set to the values determined in Section 4.1 and Section 4.2.

5. Evaluation of CS Prediction Models

The CS prediction ANN models trained by the BP, PSO, and PSO-BP algorithms were evaluated and compared. Each model was run 15 times with different training and testing data, and the results were evaluated with regard to the prediction accuracy, efficiency, and stability through a threefold procedure.

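Four statistical indices were employed to evaluate the performance and prediction accuracy of each CS prediction model: the RMSE, mean absolute error (MAE), mean absolute percentage error (MAPE), and coefficient of determination (R2). These indices are defined as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2} \qquad (7)$$

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right| \qquad (8)$$

$$\mathrm{MAPE} = \frac{1}{N}\sum_{i=1}^{N}\frac{\left|y_i - \hat{y}_i\right|}{y_i} \qquad (9)$$

$$R^2 = \frac{\left[\sum_{i=1}^{N}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)\right]^2}{\sum_{i=1}^{N}\left(y_i - \bar{y}\right)^2\,\sum_{i=1}^{N}\left(\hat{y}_i - \bar{\hat{y}}\right)^2} \qquad (10)$$

where $\hat{y}_i$ is the predicted value of the compressive strength, $y_i$ is the experimental value, $N$ is the total number of data, $\bar{\hat{y}}$ is the mean value of the predicted strength, and $\bar{y}$ is the mean value of the experimental strength. Lower values of the MAE, RMSE, and MAPE and higher values of R2 indicate better predictive performance.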
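The four indices can be computed directly from paired experimental and predicted values, as in the following Python sketch. MAPE is returned as a fraction (not multiplied by 100), which matches the scale of the MAPE values reported in Table 4, and R2 is computed as the squared correlation between experimental and predicted values, using both means as in the definitions above. The function name and example values are made up for illustration.

```python
import math


def metrics(y, y_hat):
    """RMSE, MAE, MAPE (as a fraction), and R2 for experimental y and predicted y_hat."""
    n = len(y)
    err = [a - p for a, p in zip(y, y_hat)]
    rmse = math.sqrt(sum(e * e for e in err) / n)           # Eq. (7)
    mae = sum(abs(e) for e in err) / n                      # Eq. (8)
    mape = sum(abs(e) / a for e, a in zip(err, y)) / n      # Eq. (9)
    y_bar = sum(y) / n                                      # mean experimental value
    p_bar = sum(y_hat) / n                                  # mean predicted value
    cov = sum((a - y_bar) * (p - p_bar) for a, p in zip(y, y_hat))
    ss_y = sum((a - y_bar) ** 2 for a in y)
    ss_p = sum((p - p_bar) ** 2 for p in y_hat)
    r2 = cov ** 2 / (ss_y * ss_p)                           # Eq. (10)
    return {"RMSE": rmse, "MAE": mae, "MAPE": mape, "R2": r2}


# Illustrative strengths in MPa (not data from this study)
m = metrics([40.0, 50.0, 60.0], [42.0, 49.0, 57.0])
```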

Table 4 presents the performance indices of the best BP ANN, PSO ANN, and PSO-BP ANN models. Among the developed models, the model trained by the hybrid algorithm had the lowest MAE, RMSE, and MAPE and the highest R2 for both the training and testing datasets, indicating that this model predicted the CS with the highest accuracy. Furthermore, the differences between the training and testing values of the RMSE and R2 were the smallest for the hybrid model, which indicates that the PSO-BP network model has better generalization performance than the other models.

Table 4.

Obtained statistical performance values for the developed models.

| Statistical Index | BP (TR) | BP (TS) | PSO (TR) | PSO (TS) | PSO-BP (TR) | PSO-BP (TS) |
|---|---|---|---|---|---|---|
| MAE (MPa) | 2.446 | 3.325 | 3.196 | 4.663 | 1.581 | 2.689 |
| RMSE (MPa) | 3.123 | 5.045 | 4.400 | 5.822 | 2.253 | 3.332 |
| MAPE | 0.0595 | 0.0778 | 0.0821 | 0.114 | 0.0392 | 0.0644 |
| R2 | 0.943 | 0.906 | 0.884 | 0.861 | 0.971 | 0.961 |

TR and TS represent the training and testing datasets, respectively.

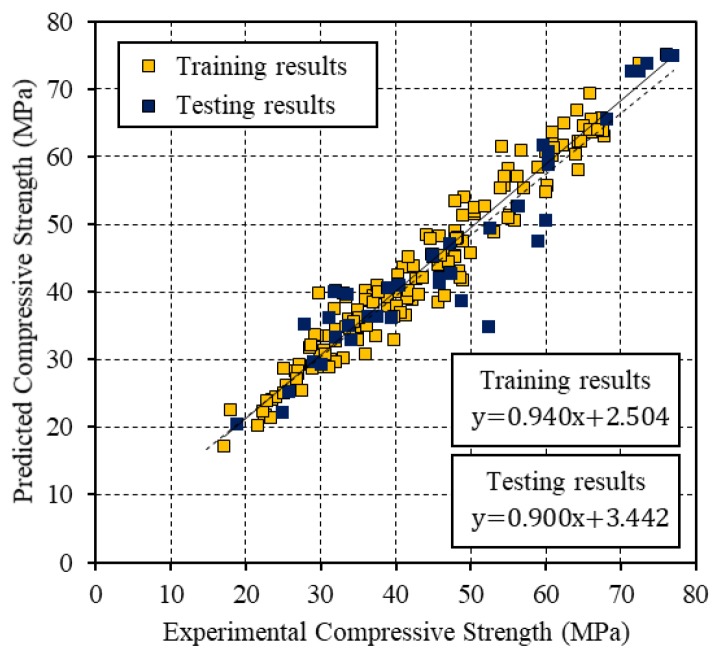

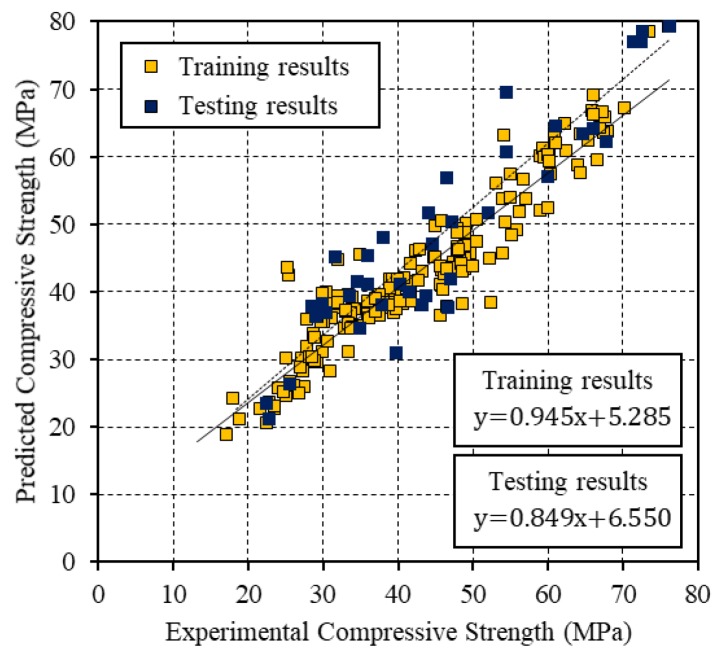

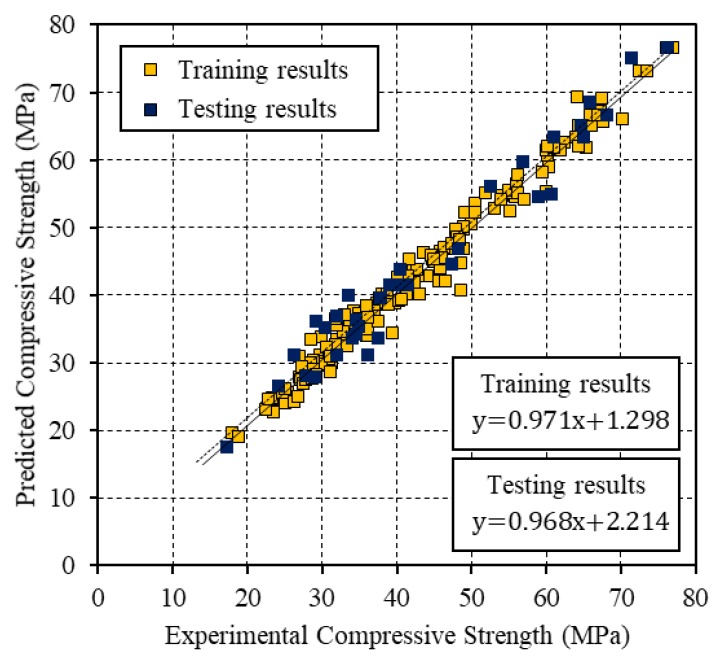

Figures 4, 5, and 6 present the relationships between the experimental CS and the values predicted by the BP, PSO, and PSO-BP networks, respectively. The BP and PSO-BP models exhibited R2 values greater than 0.9 for both the training and testing datasets, indicating that these models can provide reliable outputs that fit the actual values closely. Thus, they are suitable for predicting the CS of GGBFS concrete based on the mixture constituents and curing temperature. The higher R2 values of the proposed PSO-BP model suggest that it can estimate strength more accurately than the other models.

Figure 4.

Comparison between the experimental CS and that predicted by the BP neural network.

Figure 5.

Comparison between the experimental CS and that predicted by the PSO neural network.

Figure 6.

Comparison between the experimental CS and that predicted by the PSO-BP neural network.

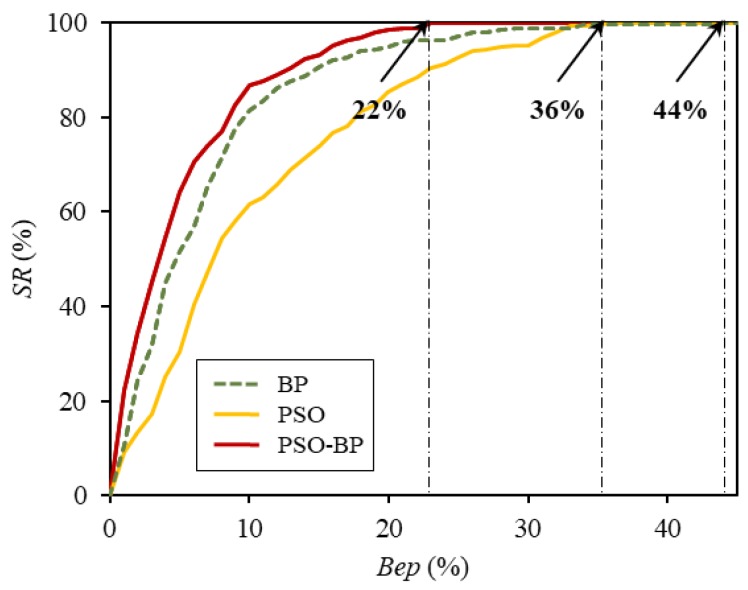

For a more detailed assessment, the efficiency of each model was evaluated using the SR, i.e., the percentage of data whose relative error is equal to or smaller than a specified error criterion [64]. It is given by the following equations:

$$e_i = \frac{\left|y_i - \hat{y}_i\right|}{y_i} \times 100\%, \qquad \mathrm{SR} = \frac{N_e}{N} \times 100\% \qquad (11)$$

where $e_i$ is the relative error, and $y_i$ and $\hat{y}_i$ are the measured and predicted values, respectively, of the $i$th data entry in the dataset. $N_e$ is the number of data entries whose relative error is equal to or smaller than the restrained error bound $B_{ep}$ (i.e., the number of entries with $e_i \le B_{ep}$), and $N$ is the total number of data in the considered set. The SR has been used to estimate the numerical efficiency and validity of developed models in several studies [64]. The SR of each model was computed while varying the restrained error from 0 to 100%. Figure 7 and Table 5 show the results. When $B_{ep}$ was 5%, the SR was 64.1% for the PSO-BP ANN model, compared with 49.2% and 30.2% for the conventional BP ANN and the PSO ANN, respectively. That is, 64.1% of the data were predicted by the hybrid model with a relative error of 5% or less. As shown in Figure 7, the SR of the PSO-BP network model was at least as high as those of the other models for every value of $B_{ep}$ and was higher for the smaller error bounds, including 5%. Additionally, for the PSO-BP ANN, the relative error did not exceed 22% for any data entry; that is, the SR reached 100% at a restrained error of 22%. In comparison, the prediction errors of all data for the ANNs trained by the BP algorithm alone and the PSO algorithm alone were equal to or smaller than 43% and 35%, respectively. These results indicate that the hybrid prediction model has better validity and efficiency than the other models for predicting the CS of GGBFS concrete.
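The SR in Eq. (11) can be sketched as a short Python function that counts the entries whose relative error stays within the bound. The function name `sr_percent` and the example values are illustrative assumptions, not data from this study.

```python
def sr_percent(measured, predicted, bound_pct):
    """SR of Eq. (11): share (%) of entries whose relative error is <= bound_pct."""
    rel_err = [abs(y - p) / y * 100.0 for y, p in zip(measured, predicted)]
    n_within = sum(1 for e in rel_err if e <= bound_pct)
    return 100.0 * n_within / len(measured)


measured = [40.0, 50.0, 60.0]
predicted = [44.0, 49.0, 57.0]     # relative errors of 10%, 2%, and 5%
curve = {b: sr_percent(measured, predicted, b) for b in (1, 6, 12)}
```

Sweeping the bound from 0 to 100% in this way produces a cumulative curve of the kind plotted in Figure 7; the smallest bound at which the curve reaches 100% corresponds to the model's maximum relative error.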

Figure 7.

Percentage of data that have equal or smaller relative error than the specified error criterion (SR) for the developed models.

Table 5.

Values of the SR for the developed models.

| Learning Algorithm | SR (%) at Bep = 5% | SR (%) at Bep = 10% | SR (%) at Bep = 20% | SR (%) at Bep = 30% | SR (%) at Bep = 40% | Bep (%) at SR = 100% |
|---|---|---|---|---|---|---|
| BP | 49.2 | 81.4 | 94.0 | 98.8 | 99.6 | 44 |
| PSO | 30.2 | 61.7 | 85.5 | 95.1 | 100 | 36 |
| PSO-BP | 64.9 | 89.5 | 99.2 | 100 | 100 | 22 |

Bep represents the restrained error.

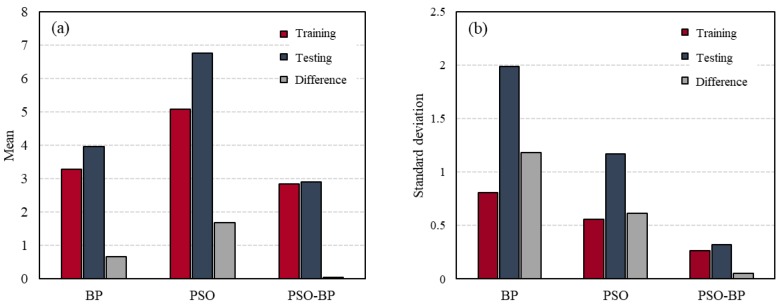

Finally, to evaluate the stability of the developed models, the standard deviations of the RMSE for models trained with 15 randomly selected training samples were calculated and compared. An ANN-based predictive model can give different outputs and performance for the same inputs, depending on the initial weights and biases or the data-splitting method [24]. This property can cause significant problems in practical applications [53,65]. Therefore, the stability of an ANN model must be validated before use [65,66]. In this study, as mentioned previously, each model was trained 15 times using different combinations of training and testing sets, and the standard deviation ($\sigma$) of the RMSE was then computed using Equation (12) to evaluate the stability of the developed models. The standard deviation indicates how sensitive the prediction performance of a model is to the data used to train it; a model with a higher standard deviation depends more strongly on the training observations.

$$\sigma = \sqrt{\frac{1}{K}\sum_{k=1}^{K}\left(\mathrm{RMSE}_k - \overline{\mathrm{RMSE}}\right)^2} \qquad (12)$$

Here, $K$ is the total number of training sets (15 in this study), $\mathrm{RMSE}_k$ is the RMSE for the $k$th training set, and $\overline{\mathrm{RMSE}}$ denotes the mean RMSE of the models trained by a specific algorithm with the 15 randomly selected training samples. Figure 8 and Table 6 show the standard deviations and mean values for the BP, PSO, and PSO-BP ANN models based on the training and testing datasets. The BP ANN model had lower means but higher standard deviations of the RMSE than the PSO ANN model, indicating that the ANN models trained by the BP algorithm have better prediction accuracy but lower stability than the PSO ANN models. The standard deviations and means of the PSO-BP ANN model for both the training and testing data were smaller than those of the other models, which indicates that the model based on the hybrid algorithm was the least influenced by data splitting. Moreover, the difference between the standard deviations for the two datasets was the smallest for the PSO-BP model. It can therefore be concluded that the PSO-BP neural network model is the most stable and accurate of the three models for estimating the CS of GGBFS concrete.
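The stability measure can be sketched in a few lines of Python. This assumes that Eq. (12) divides by the number of runs K (a population standard deviation); dividing by K − 1 instead would give the sample standard deviation. The function name and the input values are illustrative; the per-run RMSE values of this study are summarized only as means and standard deviations in Table 6.

```python
import math


def rmse_mean_std(rmse_values):
    """Mean and standard deviation of the RMSE over K training runs."""
    k = len(rmse_values)
    mean = sum(rmse_values) / k
    # Population standard deviation (divide by K), as assumed for Eq. (12)
    std = math.sqrt(sum((r - mean) ** 2 for r in rmse_values) / k)
    return mean, std


# Illustrative RMSE values from two hypothetical runs
mean_rmse, std_rmse = rmse_mean_std([2.0, 4.0])
```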

Figure 8.

(a) Mean and (b) standard deviation of the root mean squared error (RMSE) for the developed models.

Table 6.

Mean and standard deviation of the RMSE for the developed models.

| Learning Algorithm | TR Mean | TR Standard Deviation | TS Mean | TS Standard Deviation |
|---|---|---|---|---|
| BP | 3.185 | 0.828 | 3.959 | 1.989 |
| PSO | 5.079 | 0.557 | 6.767 | 1.170 |
| PSO-BP | 2.630 | 0.271 | 2.905 | 0.319 |

TR and TS represent the training and testing datasets, respectively.

6. Conclusions

ANN models were constructed to predict the CS of GGBFS concrete based on the concrete mix proportions and curing temperature using three different learning algorithms: BP, PSO, and PSO-BP. The parameters associated with each algorithm or neural network were determined by trial and error, and the proposed models were developed using 269 data points divided into training and testing sets. The developed PSO-BP neural network model was compared with ANN models trained by either BP or PSO to verify its accuracy, efficiency, and stability in prediction and to demonstrate the synergistic benefits of using the hybrid algorithm.

The PSO-BP neural network model had the lowest RMSE, MAE, and MAPE values and the highest R2 values for both the training and testing data, and the differences between the training and testing values of the RMSE and R2 were the smallest for the PSO-BP network. These results indicate that the proposed hybrid model fits best not only the training data, but also unseen data.

As shown in Table 5 and Figure 7, the hybrid model also had an SR at least as high as those of the other models at every specified error limit, and its maximum relative error was smaller than those of the other two models. Additionally, when the models were trained with 15 randomly selected training samples, the PSO-BP network model exhibited the lowest standard deviation and mean of the RMSE, which demonstrates that its prediction performance was the least affected by data division.

Taken together, these performance analyses indicate that the PSO-BP ANN model predicts the CS of GGBFS concrete more accurately, reliably, and stably than the other models; that is, it has the best predictability and generalization performance among the models developed in this study. The results therefore suggest that the hybrid algorithm provides synergistic benefits for the performance of ANN models and that the proposed hybrid PSO-BP ANN model is reliable for estimating the CS of GGBFS concrete.

Appendix A

Table A1.

The dataset used in this research.

| No | T (°C) | w/b (%) | GGBFS/B (%) | W (kg/m³) | FA (kg/m³) | CA (kg/m³) | SP (%) | CS (MPa) | No | T (°C) | w/b (%) | GGBFS/B (%) | W (kg/m³) | FA (kg/m³) | CA (kg/m³) | SP (%) | CS (MPa) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 60 | 25 | 0 | 163 | 682 | 882 | 0.8 | 66.2 | 20 | 20 | 41.7 | 30 | 156 | 848 | 933 | 0.95 | 39.01 |
| 2 | 60 | 25 | 40 | 163 | 647 | 897 | 0.65 | 65.91 | 21 | 20 | 44.1 | 50 | 165 | 835 | 918 | 0.6 | 38.11 |
| 3 | 60 | 25 | 50 | 163 | 641 | 899 | 0.7 | 64.13 | 22 | 20 | 43 | 50 | 161 | 840 | 924 | 0.7 | 41.51 |
| 4 | 60 | 25 | 60 | 163 | 634 | 901 | 0.75 | 70.32 | 23 | 20 | 42 | 50 | 157 | 845 | 929 | 0.8 | 40.36 |
| 5 | 60 | 25 | 70 | 163 | 628 | 903 | 0.7 | 62.27 | 24 | 20 | 40.9 | 50 | 153 | 850 | 934 | 0.95 | 43.69 |
| 6 | 60 | 27.5 | 0 | 163 | 703 | 909 | 0.77 | 60.34 | 25 | 20 | 44.1 | 70 | 165 | 832 | 915 | 0.5 | 45.79 |
| 7 | 60 | 27.5 | 40 | 163 | 685 | 911 | 0.6 | 62.48 | 26 | 20 | 42.8 | 70 | 160 | 838 | 922 | 0.6 | 47.27 |
| 8 | 60 | 27.5 | 50 | 163 | 678 | 913 | 0.63 | 67.78 | 27 | 20 | 41.4 | 70 | 155 | 845 | 929 | 0.75 | 45.76 |
| 9 | 60 | 27.5 | 60 | 163 | 671 | 916 | 0.7 | 65.39 | 28 | 20 | 40.1 | 70 | 150 | 851 | 936 | 0.9 | 41.76 |
| 10 | 60 | 27.5 | 70 | 163 | 665 | 918 | 0.68 | 54.5 | 29 | 20 | 44.1 | 0 | 165 | 850 | 916 | 0.65 | 43.14 |
| 11 | 60 | 30 | 0 | 163 | 721 | 932 | 0.8 | 60.68 | 30 | 20 | 43.3 | 70 | 162 | 850 | 916 | 0.65 | 45.74 |
| 12 | 60 | 30 | 40 | 163 | 719 | 919 | 0.63 | 56.85 | 31 | 20 | 42.5 | 50 | 159 | 851 | 918 | 0.7 | 46.63 |
| 13 | 60 | 30 | 50 | 163 | 712 | 921 | 0.6 | 64.07 | 32 | 20 | 41.7 | 30 | 156 | 852 | 919 | 0.7 | 48.72 |
| 14 | 60 | 30 | 60 | 163 | 706 | 924 | 0.68 | 60.24 | 33 | 20 | 43.1 | 0 | 168 | 857 | 888 | 0.608 | 37.83 |
| 15 | 60 | 30 | 70 | 163 | 699 | 927 | 0.7 | 50.6 | 34 | 20 | 42.8 | 20 | 167 | 847 | 895 | 0.607 | 38.55 |
| 16 | 20 | 44.1 | 0 | 165 | 841 | 925 | 0.65 | 41.67 | 35 | 20 | 42.6 | 30 | 166 | 841 | 900 | 0.607 | 38.74 |
| 17 | 20 | 44.1 | 30 | 165 | 837 | 921 | 0.65 | 33.03 | 36 | 20 | 42.3 | 50 | 165 | 828 | 911 | 0.607 | 40.18 |
| 18 | 20 | 43.3 | 30 | 162 | 841 | 925 | 0.8 | 36.01 | 37 | 20 | 41.8 | 70 | 163 | 811 | 928 | 0.508 | 38.04 |
| 19 | 20 | 42.5 | 30 | 159 | 845 | 929 | 0.85 | 37.68 | 38 | 20 | 28.6 | 0 | 166 | 757 | 879 | 1.309 | 67.38 |
| 39 | 20 | 28.4 | 20 | 165 | 742 | 884 | 1.208 | 68.05 | 59 | 20 | 49.2 | 30 | 160 | 863 | 949 | 0.1 | 29.9 |
| 40 | 20 | 28.3 | 30 | 164 | 725 | 899 | 1.158 | 67.53 | 60 | 20 | 49.2 | 30 | 146 | 863 | 949 | 0.1 | 30.3 |
| 41 | 20 | 27.9 | 50 | 162 | 707 | 914 | 0.857 | 67.62 | 61 | 20 | 49.2 | 30 | 128 | 863 | 949 | 0.1 | 30.1 |
| 42 | 20 | 27.6 | 70 | 160 | 690 | 929 | 0.656 | 66.18 | 62 | 20 | 49.2 | 40 | 160 | 863 | 949 | 0.1 | 32 |
| 43 | 10 | 43.1 | 0 | 168 | 857 | 888 | 0.608 | 33.05 | 63 | 20 | 49.2 | 40 | 146 | 863 | 949 | 0.1 | 30.4 |
| 44 | 10 | 42.8 | 20 | 167 | 847 | 895 | 0.607 | 36.03 | 64 | 20 | 44.5 | 30 | 160 | 775 | 923 | 0.1 | 55.3 |
| 45 | 10 | 42.3 | 50 | 165 | 828 | 911 | 0.607 | 36.13 | 65 | 20 | 44.5 | 30 | 137 | 775 | 923 | 0.1 | 49.1 |
| 46 | 10 | 28.6 | 0 | 166 | 757 | 879 | 1.309 | 59.42 | 67 | 20 | 44.5 | 40 | 160 | 775 | 923 | 0.1 | 55.9 |
| 47 | 10 | 28.4 | 20 | 165 | 742 | 884 | 1.208 | 59.55 | 68 | 20 | 44.5 | 40 | 137 | 775 | 923 | 0.1 | 54.4 |
| 48 | 10 | 27.9 | 50 | 162 | 707 | 914 | 0.857 | 54.23 | 69 | 20 | 44.5 | 50 | 160 | 775 | 923 | 0.15 | 52.6 |
| 49 | 15 | 43.1 | 0 | 168 | 857 | 888 | 0.608 | 34.32 | 70 | 20 | 44.5 | 50 | 137 | 775 | 923 | 0.15 | 50.5 |
| 50 | 15 | 42.8 | 20 | 167 | 847 | 895 | 0.607 | 33.78 | 71 | 20 | 50 | 30 | 163 | 836 | 965 | 1.63 | 39.8 |
| 51 | 15 | 42.3 | 50 | 165 | 828 | 911 | 0.607 | 33.44 | 72 | 20 | 50 | 40 | 163 | 834 | 964 | 1.63 | 37.4 |
| 52 | 15 | 28.6 | 0 | 166 | 757 | 879 | 1.309 | 65.03 | 73 | 20 | 50 | 50 | 163 | 834 | 963 | 1.63 | 35 |
| 53 | 15 | 28.4 | 20 | 165 | 742 | 884 | 1.208 | 61.07 | 74 | 20 | 40 | 30 | 163 | 764 | 968 | 2.04 | 48.2 |
| 54 | 15 | 27.9 | 50 | 162 | 707 | 914 | 0.857 | 61 | 75 | 20 | 40 | 40 | 163 | 763 | 966 | 2.04 | 45.9 |
| 55 | 20 | 47.4 | 0 | 173 | 873 | 949 | 0.5 | 52.4 | 76 | 20 | 40 | 50 | 163 | 761 | 964 | 2.04 | 44.1 |
| 56 | 20 | 47.4 | 50 | 173 | 873 | 949 | 0.5 | 29.7 | 77 | 45 | 55 | 0 | 175 | 853 | 1000 | 0.75 | 55 |
| 57 | 20 | 49.2 | 20 | 160 | 863 | 949 | 0.15 | 30.4 | 78 | 45 | 55 | 30 | 175 | 822 | 1014 | 0.6 | 60 |
| 58 | 20 | 49.2 | 20 | 146 | 863 | 949 | 0.15 | 31.3 | 79 | 45 | 55 | 50 | 175 | 801 | 1029 | 0.6 | 59 |
| 80 | 45 | 55 | 70 | 175 | 799 | 1026 | 0.6 | 50 | 100 | 60 | 35 | 70 | 175 | 694 | 969 | 0.65 | 29 |
| 81 | 45 | 45 | 0 | 175 | 817 | 968 | 0.7 | 49 | 101 | 75 | 55 | 0 | 175 | 853 | 1000 | 0.75 | 61 |
| 82 | 45 | 45 | 30 | 175 | 795 | 980 | 0.6 | 49 | 102 | 75 | 55 | 30 | 175 | 822 | 1014 | 0.6 | 59 |
| 83 | 45 | 45 | 50 | 175 | 774 | 995 | 0.55 | 48 | 103 | 75 | 55 | 50 | 175 | 801 | 1029 | 0.6 | 56 |
| 84 | 45 | 45 | 70 | 175 | 771 | 991 | 0.55 | 41 | 104 | 75 | 55 | 70 | 175 | 799 | 1026 | 0.6 | 48 |
| 85 | 45 | 35 | 0 | 175 | 741 | 952 | 0.8 | 39 | 105 | 75 | 45 | 0 | 175 | 817 | 968 | 0.7 | 48 |
| 86 | 45 | 35 | 30 | 175 | 718 | 962 | 0.7 | 36 | 106 | 75 | 45 | 30 | 175 | 795 | 980 | 0.6 | 45 |
| 87 | 45 | 35 | 50 | 175 | 698 | 974 | 0.65 | 35 | 107 | 75 | 45 | 50 | 175 | 774 | 995 | 0.55 | 49 |
| 88 | 45 | 35 | 70 | 175 | 694 | 969 | 0.65 | 32 | 108 | 75 | 45 | 70 | 175 | 771 | 991 | 0.55 | 37 |
| 89 | 60 | 55 | 0 | 175 | 853 | 1000 | 0.75 | 61 | 109 | 75 | 35 | 0 | 175 | 741 | 952 | 0.8 | 36 |
| 90 | 60 | 55 | 30 | 175 | 822 | 1014 | 0.6 | 62 | 110 | 75 | 35 | 30 | 175 | 718 | 962 | 0.7 | 37 |
| 91 | 60 | 55 | 50 | 175 | 801 | 1029 | 0.6 | 55 | 111 | 75 | 35 | 50 | 175 | 698 | 974 | 0.65 | 36 |
| 92 | 60 | 55 | 70 | 175 | 799 | 1026 | 0.6 | 43 | 112 | 75 | 35 | 70 | 175 | 694 | 969 | 0.65 | 31 |
| 93 | 60 | 45 | 0 | 175 | 817 | 968 | 0.7 | 49 | 113 | 20 | 42 | 0 | 168 | 783 | 972 | 0.715 | 46.1 |
| 94 | 60 | 45 | 30 | 175 | 795 | 980 | 0.6 | 48 | 114 | 20 | 42 | 30 | 168 | 780 | 968 | 0.463 | 44.9 |
| 95 | 60 | 45 | 50 | 175 | 774 | 995 | 0.55 | 48 | 115 | 20 | 42 | 50 | 168 | 778 | 966 | 0.413 | 44.6 |
| 96 | 60 | 45 | 70 | 175 | 771 | 991 | 0.55 | 36 | 116 | 20 | 37 | 0 | 168 | 729 | 981 | 0.817 | 50.5 |
| 97 | 60 | 35 | 0 | 175 | 741 | 952 | 0.8 | 40 | 117 | 20 | 37 | 30 | 168 | 726 | 977 | 0.515 | 56.3 |
| 98 | 60 | 35 | 30 | 175 | 718 | 962 | 0.7 | 39 | 118 | 20 | 37 | 50 | 168 | 724 | 974 | 0.455 | 57.1 |
| 99 | 60 | 35 | 50 | 175 | 698 | 974 | 0.65 | 32 | 119 | 20 | 38 | 0 | 168 | 672 | 981 | 1.018 | 60 |
| 120 | 20 | 38 | 30 | 168 | 668 | 976 | 0.868 | 64.3 | 140 | 20 | 85.8 | 17.6 | 219 | 729 | 1106 | 0 | 23.6 |
| 121 | 20 | 38 | 50 | 168 | 665 | 972 | 0.768 | 66.7 | 141 | 20 | 74.6 | 30 | 224 | 707 | 1072 | 0 | 30 |
| 122 | 20 | 27 | 0 | 168 | 608 | 965 | 1.52 | 71.4 | 142 | 20 | 64.1 | 41.6 | 231 | 677 | 1027 | 0 | 34 |
| 123 | 20 | 27 | 30 | 168 | 604 | 959 | 1.37 | 72.6 | 143 | 20 | 57.1 | 50 | 240 | 645 | 979 | 0 | 34.9 |
| 124 | 20 | 27 | 50 | 168 | 601 | 954 | 1.27 | 76.2 | 144 | 20 | 52.2 | 56.2 | 251 | 611 | 927 | 0 | 34.5 |
| 125 | 20 | 41 | 0 | 170 | 697 | 1035 | 2.9 | 48.3 | 145 | 20 | 48.3 | 61 | 261 | 578 | 877 | 0 | 31.8 |
| 126 | 20 | 41 | 10 | 170 | 697 | 1035 | 2.9 | 48.1 | 146 | 20 | 66.2 | 0 | 232 | 684 | 1037 | 0 | 35 |
| 127 | 20 | 41 | 30 | 170 | 697 | 1035 | 2.9 | 47.1 | 147 | 20 | 88.9 | 0 | 218 | 735 | 1114 | 0 | 22.6 |
| 128 | 20 | 41 | 50 | 170 | 697 | 1035 | 2.9 | 46.4 | 148 | 20 | 75.6 | 17.6 | 225 | 708 | 1073 | 0 | 29 |
| 129 | 20 | 56 | 0 | 168 | 750 | 1080 | 0 | 53.1 | 149 | 20 | 65.7 | 30 | 230 | 683 | 1036 | 0 | 36.1 |
| 130 | 20 | 56 | 55 | 168 | 750 | 1080 | 0 | 42.5 | 150 | 20 | 56.9 | 41.6 | 239 | 647 | 982 | 0 | 41.4 |
| 131 | 20 | 56 | 85 | 168 | 750 | 1080 | 0 | 33.5 | 151 | 20 | 51 | 50 | 250 | 609 | 924 | 0 | 42.3 |
| 132 | 20 | 87.6 | 0 | 219 | 732 | 1111 | 0 | 22.7 | 152 | 20 | 46.9 | 56.2 | 263 | 569 | 864 | 0 | 41.5 |
| 133 | 20 | 87.6 | 17.6 | 215 | 748 | 1135 | 0 | 18.1 | 153 | 20 | 44.2 | 61 | 279 | 526 | 799 | 0 | 37.5 |
| 134 | 20 | 87.6 | 30 | 218 | 731 | 1109 | 0 | 23.5 | 154 | 20 | 59.7 | 0 | 239 | 659 | 999 | 0 | 40.4 |
| 135 | 20 | 74.3 | 41.6 | 223 | 707 | 1073 | 0 | 27 | 155 | 20 | 80 | 0 | 224 | 716 | 1087 | 0 | 27.5 |
| 136 | 20 | 65.7 | 50 | 230 | 681 | 1033 | 0 | 27.8 | 156 | 20 | 67.9 | 17.6 | 231 | 686 | 1041 | 0 | 33.7 |
| 137 | 20 | 59.5 | 56.2 | 238 | 654 | 991 | 0 | 27.2 | 157 | 20 | 59 | 30 | 236 | 659 | 999 | 0 | 41.8 |
| 138 | 20 | 55.1 | 61 | 248 | 624 | 948 | 0 | 25.1 | 158 | 20 | 51.4 | 41.6 | 247 | 617 | 936 | 0 | 47.5 |
| 139 | 20 | 75 | 0 | 225 | 708 | 1075 | 0 | 28.9 | 159 | 20 | 46.9 | 50 | 263 | 570 | 866 | 0 | 48.4 |
| 160 | 20 | 43.4 | 56.2 | 278 | 525 | 796 | 0 | 47 | 180 | 20 | 41.4 | 70 | 155 | 845 | 929 | 0.75 | 45.76 |
| 161 | 20 | 40.9 | 61.1 | 295 | 477 | 723 | 0 | 42.7 | 181 | 20 | 40.1 | 70 | 150 | 851 | 936 | 0.9 | 41.76 |
| 162 | 20 | 50 | 0 | 185 | 850 | 952 | 0.5 | 41.5 | 182 | 20 | 44.1 | 0 | 165 | 850 | 916 | 0.65 | 43.14 |
| 163 | 20 | 50 | 10 | 185 | 850 | 952 | 0.5 | 40.1 | 183 | 20 | 43.3 | 30 | 162 | 850 | 916 | 0.65 | 45.74 |
| 164 | 20 | 50 | 20 | 185 | 850 | 952 | 0.5 | 40.6 | 184 | 20 | 42.5 | 50 | 159 | 851 | 918 | 0.7 | 46.63 |
| 165 | 20 | 50 | 30 | 185 | 850 | 952 | 0.5 | 39.8 | 185 | 20 | 41.7 | 70 | 156 | 852 | 919 | 0.7 | 48.72 |
| 166 | 20 | 50 | 40 | 185 | 850 | 952 | 0.5 | 39.4 | 186 | 20 | 47.9 | 0 | 163 | 947 | 870 | 0.7 | 25.5 |
| 167 | 20 | 50 | 50 | 185 | 850 | 952 | 0.5 | 37.5 | 187 | 20 | 47.9 | 60 | 163 | 939 | 863 | 0.5 | 23.3 |
| 168 | 20 | 50 | 60 | 185 | 850 | 952 | 0.42 | 35.2 | 188 | 20 | 45.3 | 0 | 163 | 920 | 880 | 0.7 | 28.2 |
| 169 | 20 | 44.1 | 0 | 165 | 841 | 925 | 0.65 | 41.67 | 189 | 20 | 45.3 | 60 | 163 | 913 | 873 | 0.55 | 26.6 |
| 170 | 20 | 44.1 | 30 | 165 | 837 | 921 | 0.65 | 33.03 | 190 | 20 | 42.9 | 0 | 163 | 894 | 890 | 0.7 | 32.9 |
| 171 | 20 | 43.3 | 30 | 162 | 841 | 925 | 0.8 | 36.01 | 191 | 20 | 42.9 | 60 | 163 | 886 | 882 | 0.5 | 29.2 |
| 172 | 20 | 42.5 | 30 | 159 | 845 | 929 | 0.85 | 37.68 | 192 | 20 | 45.3 | 0 | 163 | 920 | 880 | 0.7 | 28.5 |
| 173 | 20 | 41.7 | 30 | 156 | 848 | 933 | 0.95 | 39.01 | 193 | 20 | 45.3 | 30 | 163 | 916 | 876 | 0.6 | 26.2 |
| 174 | 20 | 44.1 | 50 | 165 | 835 | 918 | 0.6 | 38.11 | 194 | 20 | 45.3 | 60 | 163 | 913 | 873 | 0.55 | 27.1 |
| 175 | 20 | 43.0 | 50 | 161 | 840 | 984 | 0.7 | 41.51 | 195 | 20 | 42 | 0 | 168 | 783 | 972 | 0.715 | 46.1 |
| 176 | 20 | 42.0 | 50 | 157 | 845 | 929 | 0.8 | 40.36 | 196 | 20 | 42 | 30 | 168 | 780 | 968 | 0.463 | 44.9 |
| 177 | 20 | 40.9 | 50 | 153 | 850 | 934 | 0.95 | 43.69 | 197 | 20 | 42 | 50 | 168 | 778 | 966 | 0.413 | 44.6 |
| 178 | 20 | 44.1 | 70 | 165 | 832 | 915 | 0.5 | 45.79 | 198 | 20 | 37 | 0 | 168 | 729 | 981 | 0.817 | 50.5 |
| 179 | 20 | 42.8 | 70 | 160 | 838 | 922 | 0.6 | 47.27 | 199 | 20 | 37 | 30 | 168 | 726 | 977 | 0.515 | 56.3 |
| 200 | 20 | 37.0 | 50 | 168 | 724 | 974 | 0.465 | 57.1 | 220 | 23 | 50 | 30 | 168 | 790 | 995 | 1.05 | 31.1 |
| 201 | 20 | 32.0 | 0 | 168 | 672 | 981 | 1.018 | 60 | 221 | 23 | 50 | 40 | 168 | 789 | 994 | 1.05 | 32 |
| 202 | 20 | 32.0 | 30 | 168 | 668 | 976 | 0.868 | 64.3 | 222 | 23 | 50 | 50 | 168 | 788 | 993 | 1.16 | 33.5 |
| 203 | 20 | 32.0 | 50 | 168 | 665 | 972 | 0.767 | 66 | 223 | 23 | 45.0 | 30 | 158 | 760 | 1039 | 1.46 | 33.9 |
| 204 | 20 | 27.0 | 0 | 168 | 608 | 965 | 1.52 | 71.4 | 224 | 23 | 45.0 | 40 | 158 | 759 | 1038 | 1.47 | 34.5 |
| 205 | 20 | 27.0 | 30 | 168 | 604 | 959 | 1.37 | 72.6 | 225 | 23 | 45.0 | 50 | 158 | 758 | 1037 | 1.48 | 36.2 |
| 206 | 20 | 27 | 50 | 168 | 601 | 954 | 1.27 | 76.2 | 226 | 20 | 42 | 0 | 168 | 783 | 972 | 0.715 | 46 |
| 207 | 20 | 50 | 0 | 175 | 800 | 965 | 0.645 | 32 | 227 | 20 | 42 | 30 | 168 | 780 | 968 | 0.463 | 45 |
| 208 | 20 | 50 | 10 | 175 | 799 | 963 | 0.605 | 31.8 | 228 | 20 | 42 | 50 | 168 | 778 | 966 | 0.413 | 44.7 |
| 209 | 20 | 50 | 30 | 175 | 797 | 960 | 0.585 | 29.8 | 229 | 20 | 37 | 0 | 168 | 729 | 981 | 0.817 | 50.5 |
| 210 | 20 | 50 | 50 | 175 | 794 | 957 | 0.545 | 28.5 | 230 | 20 | 37.0 | 30 | 168 | 726 | 977 | 0.515 | 52 |
| 211 | 20 | 30 | 0 | 165 | 717 | 924 | 0.65 | 56.3 | 231 | 20 | 37.0 | 50 | 168 | 724 | 974 | 0.465 | 54 |
| 212 | 20 | 30 | 65 | 165 | 705 | 909 | 0.3 | 54.5 | 232 | 20 | 32.0 | 0 | 168 | 672 | 981 | 1.018 | 60.3 |
| 213 | 20 | 27 | 65 | 163 | 689 | 887 | 0.3 | 61.1 | 233 | 20 | 32.0 | 30 | 168 | 668 | 976 | 0.868 | 64.7 |
| 214 | 23 | 55 | 0 | 179 | 819 | 952 | 0.73 | 27.8 | 234 | 20 | 32.0 | 50 | 168 | 665 | 972 | 0.767 | 67 |
| 215 | 23 | 50 | 0 | 168 | 793 | 999 | 1.04 | 28.7 | 235 | 20 | 27.0 | 0 | 168 | 608 | 965 | 1.52 | 72.4 |
| 216 | 23 | 45 | 0 | 158 | 764 | 1043 | 1.46 | 30.7 | 236 | 20 | 27.0 | 30 | 168 | 604 | 959 | 1.37 | 73.5 |
| 217 | 23 | 55 | 30 | 179 | 817 | 949 | 0.84 | 28.9 | 237 | 20 | 27.0 | 50 | 168 | 601 | 954 | 1.27 | 77 |
| 218 | 23 | 55 | 40 | 179 | 815 | 947 | 1.05 | 29.3 | 238 | 5 | 60.0 | 0 | 217.2 | 399 | 912 | 0.65 | 24.8 |
| 219 | 23 | 55 | 50 | 179 | 814 | 946 | 1.05 | 31.2 | 239 | 5 | 60.0 | 10 | 217.2 | 397 | 910 | 0.65 | 22.4 |
| 240 | 5 | 60.0 | 30 | 217.2 | 396 | 908 | 0.65 | 18.8 | 260 | 20 | 60.0 | 20 | 172 | 877 | 996 | 0.5 | 52.2 |
| 241 | 5 | 60.0 | 50 | 217.2 | 395 | 906 | 0.65 | 17.2 | 261 | 20 | 57.0 | 20 | 175 | 843 | 997 | 0.55 | 46.3 |
| 242 | 20 | 60.0 | 0 | 217.2 | 399 | 912 | 0.65 | 26.4 | 262 | 20 | 50.0 | 20 | 176 | 811 | 998 | 0.5 | 39 |
| 243 | 20 | 60.0 | 10 | 217.2 | 397 | 910 | 0.65 | 24.1 | 263 | 20 | 45.0 | 20 | 181 | 769 | 985 | 0.5 | 37.2 |
| 244 | 20 | 60.0 | 30 | 217.2 | 396 | 908 | 0.65 | 22.9 | 264 | 20 | 40.0 | 20 | 187 | 721 | 962 | 0.5 | 31.6 |
| 245 | 20 | 60.0 | 50 | 217.2 | 395 | 906 | 0.65 | 21.7 | 265 | 20 | 60.0 | 30 | 172 | 877 | 996 | 0.5 | 49 |
| 246 | 35 | 60.0 | 0 | 217.2 | 399 | 912 | 0.65 | 27.4 | 266 | 20 | 55.0 | 30 | 175 | 843 | 997 | 0.5 | 43.4 |
| 247 | 35 | 60.0 | 10 | 217.2 | 397 | 910 | 0.65 | 25.7 | 267 | 20 | 50.0 | 30 | 176 | 811 | 998 | 0.5 | 40.8 |
| 248 | 35 | 60.0 | 30 | 217.2 | 396 | 908 | 0.65 | 26.8 | 268 | 20 | 45.0 | 30 | 181 | 769 | 985 | 0.5 | 39.3 |
| 249 | 35 | 60.0 | 50 | 217.2 | 395 | 906 | 0.65 | 25.1 | 269 | 20 | 40.0 | 30 | 187 | 721 | 962 | 0.5 | 32.1 |
| 250 | 20 | 60.0 | 0 | 172 | 877 | 996 | 0.5 | 49.7 | | | | | | | | | |
| 251 | 20 | 55.0 | 0 | 175 | 843 | 997 | 0.5 | 43.3 | | | | | | | | | |
| 252 | 20 | 50.0 | 0 | 176 | 811 | 998 | 0.5 | 39.7 | | | | | | | | | |
| 253 | 20 | 45.0 | 0 | 181 | 769 | 985 | 0.5 | 37.9 | | | | | | | | | |
| 254 | 20 | 40.0 | 0 | 187 | 721 | 962 | 0.48 | 25.5 | | | | | | | | | |
| 255 | 20 | 60.0 | 10 | 172 | 877 | 996 | 0.5 | 54 | | | | | | | | | |
| 256 | 20 | 55.0 | 10 | 175 | 843 | 997 | 0.5 | 46.5 | | | | | | | | | |
| 257 | 20 | 60.0 | 10 | 176 | 811 | 998 | 0.5 | 43.3 | | | | | | | | | |
| 258 | 20 | 45.0 | 10 | 181 | 769 | 985 | 0.5 | 33.5 | | | | | | | | | |
| 259 | 20 | 40.0 | 10 | 187 | 721 | 962 | 0.5 | 25.2 | | | | | | | | | |
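A minimal Python sketch, assuming the Markdown layout of Table A1 above, for turning its side-by-side panels into one record per mixture and for recovering the binder and GGBFS contents implied by the mix columns. It takes w/b as the water-to-binder mass ratio in percent and GGBFS/B as the GGBFS share of the binder in percent, as the column headers indicate; `parse_table_a1_row` and `binder_contents` are hypothetical helpers, not part of the original study.

```python
COLUMNS = ["No", "T", "w/b", "GGBFS/B", "W", "FA", "CA", "SP", "CS"]


def parse_table_a1_row(line):
    """Split one two-panel row of Table A1 into one or two records (dicts)."""
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    records = []
    for start in (0, len(COLUMNS)):
        half = cells[start:start + len(COLUMNS)]
        if len(half) == len(COLUMNS) and all(half):  # skip empty right-hand panels
            rec = dict(zip(COLUMNS, (float(v) for v in half)))
            rec["No"] = int(rec["No"])
            records.append(rec)
    return records


def binder_contents(rec):
    """Binder and GGBFS contents (kg/m3) implied by W, w/b (%) and GGBFS/B (%)."""
    binder = rec["W"] / (rec["w/b"] / 100.0)
    return binder, binder * rec["GGBFS/B"] / 100.0
```

For mixture No. 1 (W = 163 kg/m³, w/b = 25%, no GGBFS), this gives a binder content of 652 kg/m³ and a GGBFS content of 0 kg/m³.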

Author Contributions

Conceptualization, I.-J.H., Y.-S.Y. and J.-H.K.; investigation, I.-J.H., T.-F.Y. and J.-Y.L.; writing—original draft preparation, I.-J.H.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2019R1A2C2087646).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Monteiro P.J., Miller S.A., Horvath A. Towards sustainable concrete. Nat. Mater. 2017;16:698–699. doi: 10.1038/nmat4930.

2. Tang Y., Li L., Feng W., Liu F., Zhu M. Study of seismic behavior of recycled aggregate concrete-filled steel tubular columns. J. Constr. Steel Res. 2018;148:1–15. doi: 10.1016/j.jcsr.2018.04.031.

3. Tang Y., Li L., Wang C., Chen M., Feng W., Zou X., Huang K. Real-time detection of surface deformation and strain in recycled aggregate concrete-filled steel tubular columns via four-ocular vision. Robot. Comput. Integr. Manuf. 2019;59:36–46. doi: 10.1016/j.rcim.2019.03.001.

4. Behnood A., Golafshani E.M. Predicting the compressive strength of silica fume concrete using hybrid artificial neural network with multi-objective grey wolves. J. Clean. Prod. 2018;202:54–64. doi: 10.1016/j.jclepro.2018.08.065.

5. Bentz D.P. Influence of water-to-cement ratio on hydration kinetics: Simple models based on spatial considerations. Cement Concrete Res. 2006;36:238–244. doi: 10.1016/j.cemconres.2005.04.014.

6. Chidiac S., Panesar D. Evolution of mechanical properties of concrete containing ground granulated blast furnace slag and effects on the scaling resistance test at 28 days. Cement Concrete Comp. 2008;30:63–71. doi: 10.1016/j.cemconcomp.2007.09.003.

7. Cheng A., Huang R., Wu J.-K., Chen C.-H. Influence of GGBS on durability and corrosion behavior of reinforced concrete. Mater. Chem. Phys. 2005;93:404–411. doi: 10.1016/j.matchemphys.2005.03.043.

8. Özbay E., Erdemir M., Durmuş H.İ. Utilization and efficiency of ground granulated blast furnace slag on concrete properties—A review. Constr. Build. Mater. 2016;105:423–434. doi: 10.1016/j.conbuildmat.2015.12.153.

9. Song H.-W., Saraswathy V. Studies on the corrosion resistance of reinforced steel in concrete with ground granulated blast-furnace slag—An overview. J. Hazard. Mater. 2006;138:226–233. doi: 10.1016/j.jhazmat.2006.07.022.

10. Neville A.M. Properties of Concrete. Pearson Education; Bengaluru, India: 1963.

11. Oner A., Akyuz S. An experimental study on optimum usage of GGBS for the compressive strength of concrete. Cement Concrete Comp. 2007;29:505–514. doi: 10.1016/j.cemconcomp.2007.01.001.

12. Papadakis V., Tsimas S. Supplementary cementing materials in concrete: Part I: Efficiency and design. Cement Concrete Res. 2002;32:1525–1532. doi: 10.1016/S0008-8846(02)00827-X.

13. Alshihri M.M., Azmy A.M., El-Bisy M.S. Neural networks for predicting compressive strength of structural light weight concrete. Constr. Build. Mater. 2009;23:2214–2219. doi: 10.1016/j.conbuildmat.2008.12.003.

14. Chithra S., Kumar S.R.R.S., Chinnaraju K., Alfin Ashmita F. A comparative study on the compressive strength prediction models for High Performance Concrete containing nano silica and copper slag using regression analysis and Artificial Neural Networks. Constr. Build. Mater. 2016;114:528–535. doi: 10.1016/j.conbuildmat.2016.03.214.

15. Dantas A.T.A., Leite M.B., de Jesus Nagahama K. Prediction of compressive strength of concrete containing construction and demolition waste using artificial neural networks. Constr. Build. Mater. 2013;38:717–722. doi: 10.1016/j.conbuildmat.2012.09.026.

16. Beşikçi E.B., Arslan O., Turan O., Ölçer A.I. An artificial neural network based decision support system for energy efficient ship operations. Comput. Oper. Res. 2016;66:393–401. doi: 10.1016/j.cor.2015.04.004.

17. Sarıdemir M. Prediction of compressive strength of concretes containing metakaolin and silica fume by artificial neural networks. Adv. Eng. Softw. 2009;40:350–355. doi: 10.1016/j.advengsoft.2008.05.002.

18. Ince R. Prediction of fracture parameters of concrete by artificial neural networks. Eng. Fract. Mech. 2004;71:2143–2159. doi: 10.1016/j.engfracmech.2003.12.004.

19. Naderpour H., Rafiean A.H., Fakharian P. Compressive strength prediction of environmentally friendly concrete using artificial neural networks. J. Build. Eng. 2018;16:213–219. doi: 10.1016/j.jobe.2018.01.007.

20. Uddin M.T., Mahmood A.H., Kamal M.R.I., Yashin S.M., Zihan Z.U.A. Effects of maximum size of brick aggregate on properties of concrete. Constr. Build. Mater. 2017;134:713–726. doi: 10.1016/j.conbuildmat.2016.12.164.

21. Bilim C., Atiş C.D., Tanyildizi H., Karahan O. Predicting the compressive strength of ground granulated blast furnace slag concrete using artificial neural network. Adv. Eng. Softw. 2009;40:334–340. doi: 10.1016/j.advengsoft.2008.05.005.

22. Boukhatem B., Ghrici M., Kenai S., Tagnit-Hamou A. Prediction of Efficiency Factor of Ground-Granulated Blast-Furnace Slag of Concrete Using Artificial Neural Network. ACI Mater. J. 2011;108:56–63.

23. Lee A., Geem Z., Suh K.-D. Determination of optimal initial weights of an artificial neural network by using the harmony search algorithm: Application to breakwater armor stones. Appl. Sci. 2016;6:164. doi: 10.3390/app6060164.

24. Liou S.-W., Wang C.-M., Huang Y.-F. Integrative discovery of multifaceted sequence patterns by frame-relayed search and hybrid PSO-ANN. J. UCS. 2009;15:742–764.

25. Rukhaiyar S., Alam M., Samadhiya N. A PSO-ANN hybrid model for predicting factor of safety of slope. Int. J. Geotech. Eng. 2018;12:556–566. doi: 10.1080/19386362.2017.1305652.

26. Gordan B., Armaghani D.J., Hajihassani M., Monjezi M. Prediction of seismic slope stability through combination of particle swarm optimization and neural network. Eng. Comput. 2016;32:85–97. doi: 10.1007/s00366-015-0400-7.

27. Bo G., Hejuan H., Hui H., Yan R. Hybrid PSO-BP neural network approach for wind power forecasting. Int. Energy J. 2017;17:211–222.

28. Wang Q., Li Y., Diao M., Gao W., Qi Z. Performance enhancement of INS/CNS integration navigation system based on particle swarm optimization back propagation neural network. Ocean Eng. 2015;108:33–45. doi: 10.1016/j.oceaneng.2015.07.062.

29. Park I. Master’s Thesis. Kyungpook National University; Daegu, Korea: 2005. Study on the Properties of Concrete with the Ratio of Ground Granulated Blast-Furnace Slag Replacement.

30. Jin J. Master’s Thesis. Chonnam National University; Gwangju, Korea: 2015. Fresh and Hardened Properties of Concrete According to Slag Replacement Ratio.

31. Choi W. Master’s Thesis. Hanyang University; Seoul, Korea: 1999. Study on the Mix Design Method of Concrete Using Finely Blast-Furnace Slag.

32. Moon H., Shin H. Utilization of ready mixed concrete sludge for improving the strength of concrete with GGBF slag. J. Korean Soc. Civ. Eng. 2002;22:315–326.

33. Yoon J., Lee M. A study on the compressive strength development of concrete using ground granulated blast-furnace slag of different fineness. J. Archit. Inst. Korea Struct. Constr. 2000;16:63–70.

34. Lee K., Kwon K., Lee H., Lee S., Kim G. Characteristics of autogenous shrinkage for concrete containing blast-furnace slag. J. Korea Concr. Inst. 2004;16:621–626. doi: 10.4334/JKCI.2004.16.5.621.

35. Lee K.M., Lee H.K., Lee S.H., Kim G.Y. Autogenous shrinkage of concrete containing granulated blast-furnace slag. Cement Concrete Res. 2006;36:1279–1285. doi: 10.1016/j.cemconres.2006.01.005.

36. Wainwright P., Rey N. The influence of ground granulated blast furnace slag (GGBS) additions and time delay on the bleeding of concrete. Cement Concrete Comp. 2000;22:253–257. doi: 10.1016/S0958-9465(00)00024-X.

37. Li Q., Li Z., Yuan G. Effects of elevated temperatures on properties of concrete containing ground granulated blast furnace slag as cementitious material. Constr. Build. Mater. 2012;35:687–692. doi: 10.1016/j.conbuildmat.2012.04.103.

38. Rafiq M., Bugmann G., Easterbrook D. Neural network design for engineering applications. Comput. Struct. 2001;79:1541–1552. doi: 10.1016/S0045-7949(01)00039-6.

39. Armaghani D.J., Mohamad E.T., Narayanasamy M.S., Narita N., Yagiz S. Development of hybrid intelligent models for predicting TBM penetration rate in hard rock condition. Tunn. Undergr. Sp. Tech. 2017;63:29–43. doi: 10.1016/j.tust.2016.12.009.

40. Šipoš T.K., Miličević I., Siddique R. Model for mix design of brick aggregate concrete based on neural network modelling. Constr. Build. Mater. 2017;148:757–769. doi: 10.1016/j.conbuildmat.2017.05.111.

41. Ballal T.M.A. Ph.D. Thesis. Loughborough University; Loughborough, UK: 1999. The Use of Artificial Neural Networks for Modelling Buildability in Preliminary Structural Design.

42. Bingöl A.F., Tortum A., Gül R. Neural networks analysis of compressive strength of lightweight concrete after high temperatures. Mater. Des. 2013;52:258–264. doi: 10.1016/j.matdes.2013.05.022.

43. Shi Y., Eberhart R.C. Parameter selection in particle swarm optimization; Proceedings of the 7th International Conference on Evolutionary Programming; San Diego, CA, USA. 25–27 March 1998; Berlin/Heidelberg, Germany: Springer; 1998.

44. Bratton D., Kennedy J. Defining a standard for particle swarm optimization; Proceedings of the 2007 IEEE Swarm Intelligence Symposium; Honolulu, HI, USA. 1–5 April 2007.

45. Zhang J.-R., Zhang J., Lok T.-M., Lyu M.R. A hybrid particle swarm optimization–back-propagation algorithm for feedforward neural network training. Appl. Math. Comput. 2007;185:1026–1037. doi: 10.1016/j.amc.2006.07.025.

46. Kaur A., Kaur M. A review of parameters for improving the performance of particle swarm optimization. Int. J. Hybrid Inf. Technol. 2015;8:7–14. doi: 10.14257/ijhit.2015.8.4.02.

47. Van den Bergh F., Engelbrecht A.P. A study of particle swarm optimization particle trajectories. Inf. Sci. 2006;176:937–971. doi: 10.1016/j.ins.2005.02.003.

48. Parsopoulos K.E., Vrahatis M.N. Recent approaches to global optimization problems through particle swarm optimization. Nat. Comput. 2002;1:235–306. doi: 10.1023/A:1016568309421.

49. Poli R., Kennedy J., Blackwell T. Particle swarm optimization. Swarm Intell. 2007;1:33–57. doi: 10.1007/s11721-007-0002-0.

50. Trelea I.C. The particle swarm optimization algorithm: Convergence analysis and parameter selection. Inf. Process. Lett. 2003;85:317–325. doi: 10.1016/S0020-0190(02)00447-7.

51. Shi Y., Eberhart R.C. Particle swarm optimization: Developments, applications and resources; Proceedings of the 2001 Congress on Evolutionary Computation; Seoul, Korea. 27–30 May 2001.

52. Chang Y.-T., Lin J., Shieh J.-S., Abbod M.F. Optimization the initial weights of artificial neural networks via genetic algorithm applied to hip bone fracture prediction. Adv. Fuzzy Syst. 2012;2012:951247. doi: 10.1155/2012/951247.

53. Beale M.H., Hagan M.T., Demuth H.B. Neural Network Toolbox™ User’s Guide. The MathWorks Inc.; Natick, MA, USA: 2017.

54. Pala M., Özbay E., Öztaş A., Yuce M. Appraisal of long-term effects of fly ash and silica fume on compressive strength of concrete by neural networks. Constr. Build. Mater. 2007;21:384–394. doi: 10.1016/j.conbuildmat.2005.08.009.

55. Hecht-Nielsen R. Kolmogorov’s mapping neural network existence theorem; Proceedings of the IEEE International Conference on Neural Networks III; San Diego, CA, USA. 21–24 June 1987; Piscataway, NJ, USA: IEEE Press; 1987.

56. Cybenko G. Approximation by superpositions of a sigmoidal function. Math. Control Signal. 1989;2:303–314. doi: 10.1007/BF02551274.

57. Hornik K., Stinchcombe M., White H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989;2:359–366. doi: 10.1016/0893-6080(89)90020-8.

58. Ozturan M., Kutlu B., Ozturan T. Comparison of concrete strength prediction techniques with artificial neural network approach. Build. Res. J. 2008;56:23–36.

59. Hush D.R. Classification with neural networks: A performance analysis; Proceedings of the IEEE International Conference on Systems Engineering; Fairborn, OH, USA. 24–26 August 1989.

60. Gallant S.I. Neural Network Learning and Expert Systems. MIT Press; Cambridge, MA, USA: 1993.

61. Tamura S.I., Tateishi M. Capabilities of a four-layered feedforward neural network: Four layers versus three. IEEE Trans. Neural Netw. 1997;8:251–255. doi: 10.1109/72.557662.

62. Sheela K.G., Deepa S.N. Review on methods to fix number of hidden neurons in neural networks. Math. Probl. Eng. 2013;2013:425740. doi: 10.1155/2013/425740.

63. Alam M.N., Das B., Pant V. A comparative study of metaheuristic optimization approaches for directional overcurrent relays coordination. Electr. Power Syst. Res. 2015;128:39–52. doi: 10.1016/j.epsr.2015.06.018.

64. Waszczyszyn Z., Ziemiański L. Parameter Identification of Materials and Structures. Springer; Berlin/Heidelberg, Germany: 2005. Neural networks in the identification analysis of structural mechanics problems; pp. 265–340.

65. Sayyad H., Manshad A.K., Rostami H. Application of hybrid neural particle swarm optimization algorithm for prediction of MMP. Fuel. 2014;116:625–633. doi: 10.1016/j.fuel.2013.08.076.

66. Twomey J.M., Smith A.E. Bias and variance of validation methods for function approximation neural networks under conditions of sparse data. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 1998;28:417–430. doi: 10.1109/5326.704579.