Abstract

Purpose:

To develop and test deep learning classifiers that detect gonioscopic angle closure and primary angle closure disease (PACD) based on fully automated analysis of anterior segment OCT (AS-OCT) images.

Methods:

Subjects were recruited as part of the Chinese American Eye Study (CHES), a population-based study of Chinese Americans in Los Angeles, CA. Each subject underwent a complete ocular examination including gonioscopy and AS-OCT imaging in each quadrant of the anterior chamber angle (ACA). Deep learning methods were used to develop three competing multi-class convolutional neural network (CNN) classifiers for modified Shaffer grades 0, 1, 2, 3, and 4. Binary probabilities for closed (grades 0 and 1) and open (grades 2, 3, and 4) angles were calculated by summing over the corresponding grades. Classifier performance was evaluated by five-fold cross-validation and on an independent test dataset. Outcome measures included area under the receiver operating characteristic curve (AUC) for detecting gonioscopic angle closure and PACD, defined as either two or three quadrants of gonioscopic angle closure per eye.

Results:

4036 AS-OCT images with corresponding gonioscopy grades (1943 open, 2093 closed) were obtained from 791 CHES subjects. Three competing CNN classifiers were developed with a cross-validation dataset of 3396 images (1632 open, 1764 closed) from 664 subjects. The remaining 640 images (311 open, 329 closed) from 127 subjects were segregated into a test dataset. The best-performing classifier was developed by applying transfer learning to the ResNet-18 architecture. For detecting gonioscopic angle closure, this classifier achieved an AUC of 0.933 (95% confidence interval, 0.925-0.941) on the cross-validation dataset and 0.928 on the test dataset. For detecting PACD based on two- and three-quadrant definitions, the ResNet-18 classifier achieved AUCs of 0.964 and 0.952, respectively, on the test dataset.

Conclusion:

Deep learning classifiers effectively detect gonioscopic angle closure and PACD based on automated analysis of AS-OCT images. These methods could be used to automate clinical evaluations of the ACA and improve access to eyecare in high-risk populations.

Introduction

Primary angle closure glaucoma (PACG), the most severe form of primary angle closure disease (PACD), is a leading cause of permanent vision loss worldwide.1 The primary risk factor for developing PACG is closure of the anterior chamber angle (ACA), which leads to impaired aqueous humor outflow and elevations of intraocular pressure (IOP). PACG is preceded by primary angle closure suspect (PACS) and primary angle closure (PAC), which comprise the majority of patients with PACD.2,3 Interventions such as laser peripheral iridotomy (LPI) and lens extraction can alleviate angle closure and lower the risk of progression to PACG and glaucoma-related vision loss.4–6 However, PACD must first be detected before eyecare providers can assess its severity and administer the appropriate interventions.

Gonioscopy is the current clinical standard for evaluating the ACA and detecting angle closure and PACD. The epidemiology, natural history, and clinical management of gonioscopic angle closure have been extensively studied.2–8 However, gonioscopy has several shortcomings that limit its utility in clinical examinations. Gonioscopy is qualitative and dependent on the examiner’s expertise in identifying specific anatomical landmarks. It is also limited by inter-observer variability, even among experienced glaucoma specialists.9 Gonioscopy requires contact with the patient’s eye, which can be uncomfortable or deform the ACA. Finally, a thorough gonioscopic examination can be time-intensive and must be performed prior to dilation, thereby decreasing clinical efficiency.

Anterior segment optical coherence tomography (AS-OCT) is an in vivo imaging method that acquires cross-sectional images of the anterior segment by measuring its optical reflections.10 AS-OCT has several advantages over gonioscopy. It can produce quantitative measurements of biometric parameters, such as lens vault (LV) and iris thickness (IT), that are risk factors for gonioscopic angle closure.11–14 AS-OCT devices also have excellent intra-device, intra-user, and inter-user reproducibility.9,15–21 Finally, AS-OCT is non-contact, which makes it easier for patients to tolerate. However, one major limitation of AS-OCT is that image analysis is only semi-automated; a trained grader must manually identify specific anatomical structures in each image before it can be related to gonioscopic angle closure.22–24

In this study, we apply deep learning methods to population-based gonioscopy and AS-OCT data to develop and test fully automated classifiers capable of detecting eyes with gonioscopic angle closure and PACD.

Methods

Subjects were recruited as part of the Chinese American Eye Study (CHES), a population-based, cross-sectional study that included 4,572 Chinese participants aged 50 years and older residing in the city of Monterey Park, California. Ethics committee approval was previously obtained from the University of Southern California Medical Center Institutional Review Board. All study procedures adhered to the recommendations of the Declaration of Helsinki. All study participants provided informed consent.

Inclusion criteria for the study included CHES subjects who received gonioscopy and AS-OCT imaging. Exclusion criteria included history of prior eye surgery (e.g., cataract extraction, incisional glaucoma surgery), penetrating eye injury, or media opacities that precluded visualization of ACA structures. Subjects with history of prior LPI were not excluded. Both eyes from a single subject could be recruited so long as they fulfilled the inclusion and exclusion criteria.

Clinical Assessment

Gonioscopy in CHES was performed in the seated position under dark ambient lighting (0.1 cd/m²) with a 1-mm light beam and a Posner-type 4-mirror lens (Model ODPSG; Ocular Instruments, Inc., Bellevue, WA, USA) by one of two trained ophthalmologists (D.W., C.L.G.) masked to other examination findings. One ophthalmologist (D.W.) performed the majority (over 90%) of gonioscopic examinations. Care was taken to avoid light falling on the pupil and inadvertent indentation of the globe. The gonioscopy lens could be tilted to gain a view of the angle over the convexity of the iris. The angle was graded in each quadrant (inferior, superior, nasal, and temporal) according to the modified Shaffer classification system based on identification of anatomical landmarks: grade 0, no structures visible; grade 1, non-pigmented trabecular meshwork (TM) visible; grade 2, pigmented TM visible; grade 3, scleral spur visible; grade 4, ciliary body visible. Gonioscopic angle closure was defined as an angle quadrant in which pigmented TM could not be visualized (grade 0 or 1). PACD was defined as an eye with more than two or three quadrants (greater than 180 or 270 degrees) of gonioscopic angle closure in the absence of potential causes of secondary angle closure, such as inflammation or neovascularization.25

AS-OCT Imaging and Data Preparation

AS-OCT imaging in CHES was performed in the seated position under dark ambient lighting (0.1 cd/m²) after gonioscopy and prior to pupillary dilation by a single trained ophthalmologist (D.W.) with the Tomey CASIA SS-1000 swept-source Fourier-domain device (Tomey Corporation, Nagoya, Japan). 128 two-dimensional cross-sectional AS-OCT images were acquired per eye. During imaging, the eyelids were gently retracted, taking care to avoid inadvertent pressure on the globe.

Raw image data was imported into the SS-OCT Viewer software (version 3.0, Tomey Corporation, Nagoya, Japan). Two images were exported in JPEG format per eye: one oriented along the horizontal (temporal-nasal) meridian and the other along the vertical (superior-inferior) meridian. Images were divided in two along the vertical midline, and right-sided images were flipped about the vertical axis to standardize images with the ACA to the left and corneal apex to the right. No adjustments were made to image brightness or contrast. One observer (A.A.P.) masked to the identities and examination results of the subjects inspected each image; eyes with corrupt images or images with significant lid artifacts precluding visualization of the ACA were excluded from the analysis. Image manipulations were performed in MATLAB (Mathworks, Natick, MA).

The total dataset had relatively equal numbers of images with open and closed angles to minimize training biases during classifier development. The total number of open angle images was limited to match the number of angle closure images. Prior to classifier training, 85% of images were segregated into a cross-validation dataset. 80% and 20% of the cross-validation dataset were used for training and validation, respectively. The remaining 15% of images were segregated into an independent test dataset. In order to prevent data leakage (e.g. inter- and intra-eye correlations) between cross-validation and test datasets, multiple images acquired from a single subject appeared together either in the cross-validation or test dataset and were not split across both datasets. The test dataset was constructed so that it had the same distribution of gonioscopy grades as the cross-validation dataset. Data manipulations were performed in the Python programming language.
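The subject-level segregation described above can be illustrated with a minimal sketch. It is not the study's code: the record layout (`subject_id`, `image_id`), the function name `split_by_subject`, and the fixed random seed are assumptions for illustration. The sketch splits by subject count rather than image count and does not reproduce the matching of gonioscopy grade distributions between datasets.

```python
import random
from collections import defaultdict


def split_by_subject(records, test_fraction=0.15, seed=0):
    """Split (subject_id, image_id) records so each subject is in one partition only.

    The record layout and the default fraction are illustrative assumptions.
    """
    by_subject = defaultdict(list)
    for subject_id, image_id in records:
        by_subject[subject_id].append(image_id)

    # Shuffle subjects (not images) so all images from one subject move together.
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)

    n_test = max(1, round(test_fraction * len(subjects)))
    test_ids, cv_ids = subjects[:n_test], subjects[n_test:]

    cross_val = [(s, i) for s in cv_ids for i in by_subject[s]]
    test = [(s, i) for s in test_ids for i in by_subject[s]]
    return cross_val, test


# Toy data: 20 hypothetical subjects with four quadrant images each.
records = [(f"S{n:03d}", f"S{n:03d}_q{q}") for n in range(20) for q in range(4)]
cross_val, test = split_by_subject(records)
```

Shuffling subjects rather than images is what prevents images from the same eye or subject from appearing on both sides of the split.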

Deep Learning Classifier Development

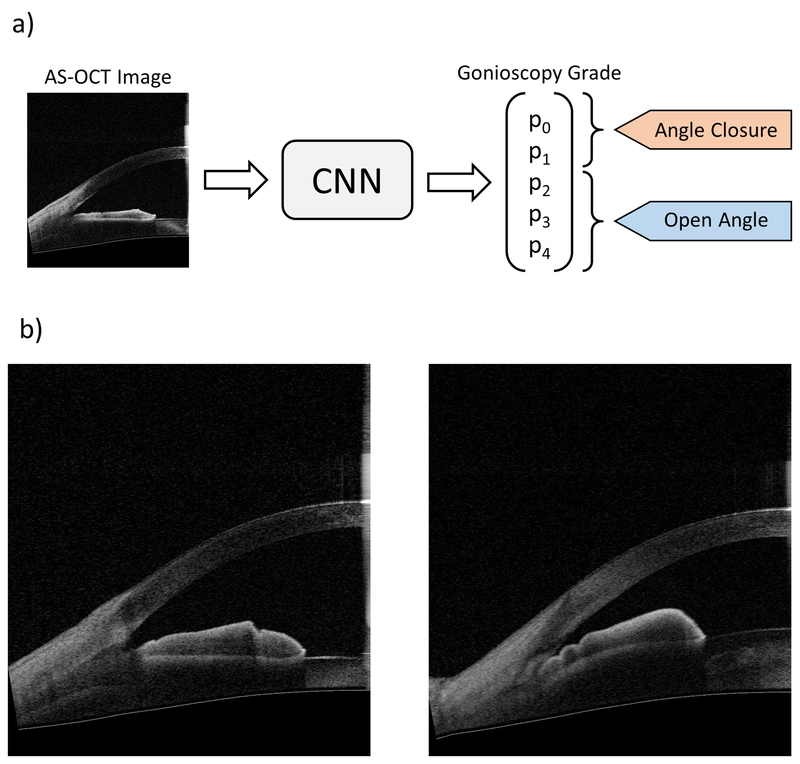

Three competing convolutional neural network (CNN) classifiers were developed to classify the ACA in individual AS-OCT images as either open or closed (Figure 1). All three were multi-class classifiers for Shaffer grades 0, 1, 2, 3, and 4. Given an input image, the classifiers produced a normalized probability distribution over Shaffer grades p = [p0, p1, p2, p3, p4]. Binary probabilities for closed angle (grades 0 and 1) and open angle (grades 2 to 4) eyes were generated by summing probabilities over the corresponding grades, i.e., pclosed = p0 + p1 and popen = p2 + p3 + p4. The classifiers acted as detectors for gonioscopic angle closure, i.e., a positive detection event was defined as classification to either grade 0 or 1.

Figure 1:

a) Schematic of binary classification process (top). Unmarked AS-OCT images were used as inputs to the convolutional neural network (CNN) classifiers. Gonioscopy grade probabilities (p0 to p4) were summed to make the binary prediction of angle status: angle closure = grades 0 and 1, open angle = grades 2, 3, and 4. b) Representative AS-OCT images corresponding to open (bottom left, grade 4) and closed (bottom right; grade 0) angles based on gonioscopic examination.
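The summation of grade probabilities into binary probabilities can be written out as a short sketch. The function name and the example probabilities are hypothetical and are not taken from the study.

```python
def binary_angle_probabilities(grade_probs):
    """Collapse a five-grade probability vector [p0..p4] into (p_closed, p_open)."""
    if len(grade_probs) != 5:
        raise ValueError("expected probabilities for Shaffer grades 0 to 4")
    p_closed = grade_probs[0] + grade_probs[1]                 # grades 0 and 1
    p_open = grade_probs[2] + grade_probs[3] + grade_probs[4]  # grades 2 to 4
    return p_closed, p_open


# Hypothetical classifier output for one image.
example = [0.5, 0.25, 0.125, 0.0625, 0.0625]
p_closed, p_open = binary_angle_probabilities(example)
```

Because the five grade probabilities are normalized, p_closed and p_open sum to one, so a single threshold on p_closed determines the binary prediction.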

Each classifier was based on a unique CNN architecture and developed with the common goal of optimizing performance for detecting gonioscopic angle closure. Images were resized to 350 by 350 pixels to reduce hardware demands during classifier training. Grayscale input images were preprocessed by normalizing RGB channels to have a mean of [0.485, 0.456, 0.406] and a standard deviation of [0.229, 0.224, 0.225]. Images were augmented through random rotation between 0 and 10 degrees, random translation between 0 and 20 pixels, and random perturbations to balance and contrast.
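A minimal sketch of the channel normalization step follows. It assumes the common convention for ImageNet-pretrained networks: grayscale values are scaled to [0, 1], replicated across three channels, and then each channel has the listed mean subtracted and is divided by the listed standard deviation. Whether the study followed exactly this sequence is an assumption, and the function name is hypothetical.

```python
# Channel statistics quoted in the text (the usual ImageNet values).
CHANNEL_MEAN = (0.485, 0.456, 0.406)
CHANNEL_STD = (0.229, 0.224, 0.225)


def normalize_grayscale_image(image):
    """Turn a 2D list of 8-bit grayscale pixels into three normalized channel planes."""
    channels = []
    for mean, std in zip(CHANNEL_MEAN, CHANNEL_STD):
        # Replicate the grayscale plane, scale to [0, 1], then standardize.
        plane = [[(pixel / 255.0 - mean) / std for pixel in row] for row in image]
        channels.append(plane)
    return channels


# Tiny 1 x 2 image holding a black pixel and a white pixel.
normalized = normalize_grayscale_image([[0, 255]])
```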

The first classifier was a modified ResNet-18 CNN pre-trained on the ImageNet Challenge dataset.26 The average pooling layer was replaced by an adaptive pooling layer where bin size is proportional to input image size; this enables the CNN to be applied to input images of arbitrary sizes.27 The final fully connected layer of the ResNet-18 architecture was changed to have five nodes. Softmax-regression was used to calculate the multinomial probability of the five grades with a cross-entropy loss used during training. Transfer learning was applied to train the final layer of the CNN.28 All layers of the CNN were fine-tuned using backpropagation; optimization was performed using stochastic gradient descent with warm restarts.29 Test-time augmentation was performed by applying the same augmentations at test time and averaging predictions over augmentation variants.
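The softmax output, cross-entropy loss, and averaging of predictions over augmentation variants can be sketched in plain Python. The logits below are invented for illustration, and the helper names are hypothetical. A real implementation would operate on batched tensors in a deep learning framework.

```python
import math


def softmax(logits):
    """Convert raw scores for the five grades into a normalized probability vector."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, true_grade):
    """Negative log-probability assigned to the examiner-assigned grade."""
    return -math.log(softmax(logits)[true_grade])


def average_predictions(prob_vectors):
    """Test-time augmentation: average grade probabilities across image variants."""
    n = len(prob_vectors)
    return [sum(column) / n for column in zip(*prob_vectors)]


# Invented logits for two augmented variants of the same image.
variant_logits = [[2.0, 1.0, 0.0, -1.0, -2.0], [1.5, 1.5, 0.0, -1.0, -2.0]]
tta_probs = average_predictions([softmax(z) for z in variant_logits])
loss = cross_entropy(variant_logits[0], true_grade=0)
```

Averaging normalized probability vectors yields another normalized vector, so the summation into closed and open probabilities applies unchanged to the averaged output.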

The second classifier was a CNN with a custom 14-layer architecture (Supplementary Figure 1). Layers, kernel size, number of filters, stride, and number of dense layers were varied between training sessions until optimal performance was achieved. The learning rate was held constant at 0.0001.

The third classifier was developed using a combination of deep learning and logistic regression algorithms. An Inception-v3 model pre-trained on the ImageNet dataset was used for feature extraction.30 The final layer of the Inception-v3 model was removed and replaced by a logistic regression classifier trained on the extracted features. In order to perform multi-class classification, the one-vs-rest (OvR) scheme was used along with logistic regression to assign each image a Shaffer grade from 0 to 4.

The performance of each classifier was evaluated through five-fold cross-validation. Mean area under the receiver operating characteristic curve (AUC) metrics were used to determine the best-performing classifier. ROC curves were generated by varying the threshold probabilities for open and closed angle classification (i.e., pclosed and popen). Predictive accuracy of the best-performing classifier for gonioscopic angle closure was calculated for all images corresponding to each examiner-assigned Shaffer grade. Accuracy was defined as (true positive + true negative) / all cases. PACD classification was made by aggregating angle closure predictions across all four quadrants based on either the two- or three-quadrant definition. The ROC curve for PACD detection was generated by varying the threshold for angle closure detection across all quadrants.
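One way to read the evaluation above is sketched below, under stated assumptions. AUC is computed as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. For PACD, an eye is called positive at threshold t when at least k quadrants have pclosed at or above t, which is equivalent to thresholding the k-th largest quadrant probability. The parameter `min_closed_quadrants` (k) is hypothetical and left adjustable because the exact quadrant count required by each definition is an assumption here. The scores are invented.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def pacd_score(quadrant_p_closed, min_closed_quadrants):
    """Eye-level score: the k-th largest p_closed among the quadrants of one eye."""
    ranked = sorted(quadrant_p_closed, reverse=True)
    return ranked[min_closed_quadrants - 1]


# Invented p_closed values for four eyes (four quadrants each) and PACD labels.
eyes = [
    [0.9, 0.8, 0.7, 0.2],
    [0.6, 0.3, 0.2, 0.1],
    [0.95, 0.9, 0.85, 0.8],
    [0.4, 0.4, 0.1, 0.1],
]
pacd_labels = [1, 0, 1, 0]
eye_scores = [pacd_score(eye, min_closed_quadrants=2) for eye in eyes]
eye_auc = roc_auc(eye_scores, pacd_labels)
```

Sweeping a threshold over `eye_scores` traces the same ROC curve as sweeping the quadrant-level threshold and then counting closed quadrants per eye.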

In order to evaluate the effect of dataset size on classifier performance, the best-performing classifier was retrained on different-sized random subsets of the cross-validation dataset. AUC for each classifier was calculated on the test dataset.

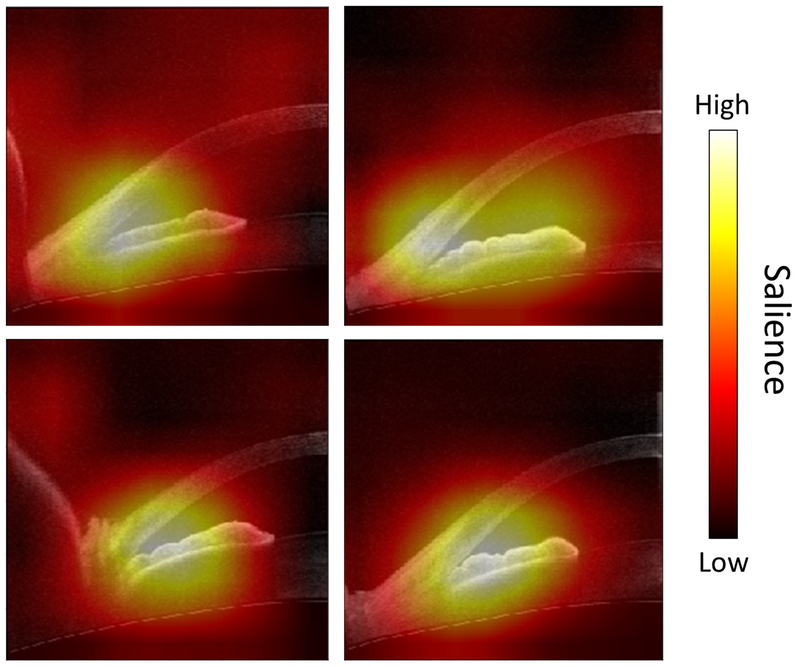

Saliency maps were generated in order to visualize the pixels of an image that most contribute to a CNN prediction. The final global average pooling layer of the best-performing classifier was modified to generate a class activation map.31 The network was then retrained on the entire cross-validation dataset before saliency maps were generated on the test dataset.
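The class activation map computation can be sketched as a weighted sum of the final convolutional feature maps, using the weights that connect each globally pooled feature to the class of interest. This is a generic illustration of the technique, not the study's code. The feature maps, weights, and function names are invented, and upsampling of the map to the input image size is omitted.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum over K feature maps (each H x W) for one target class."""
    if len(feature_maps) != len(class_weights):
        raise ValueError("one weight is required per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


def rescale_to_unit(cam):
    """Rescale the map to [0, 1] so it can be rendered with a colormap."""
    values = [v for row in cam for v in row]
    low, high = min(values), max(values)
    span = high - low
    if span == 0:
        return [[0.0 for _ in row] for row in cam]
    return [[(v - low) / span for v in row] for row in cam]


# Two invented 2 x 2 feature maps and their weights for the class of interest.
feature_maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
class_weights = [0.5, 1.0]
cam = class_activation_map(feature_maps, class_weights)
heat = rescale_to_unit(cam)
```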

Results

4280 images with corresponding gonioscopy grades from 1070 eyes of 852 eligible subjects (335 consecutive with PACD, 517 consecutive with open angles) were obtained from CHES after excluding eyes with history of intraocular surgery (N = 25) (Supplementary Figure 2). The mean age of the subjects was 61.1 ± 8.1 years (range 50-91). 251 (31.7%) subjects were male and 540 (68.3%) were female. 244 images from 61 eyes with either corrupt images or images affected by eyelid artifact were excluded from the analysis.

The final dataset consisted of 4036 angle images with corresponding gonioscopy grades from 1009 eyes of 791 subjects. There was a relatively balanced number of images with open (N = 1943) and closed (N = 2093) angles, although individual grades were not balanced: grade 0 (N = 957), grade 1 (N = 1136), grade 2 (N = 425), grade 3 (N = 1073), grade 4 (N = 445). The cross-validation dataset consisted of 3396 images (1632 open, 1764 closed; 84.1% of total images) from 664 subjects. The test dataset consisted of the remaining 640 images (311 open, 329 closed; 15.9%) from 127 subjects.

Classifier Performance for Detecting Gonioscopic Angle Closure and PACD

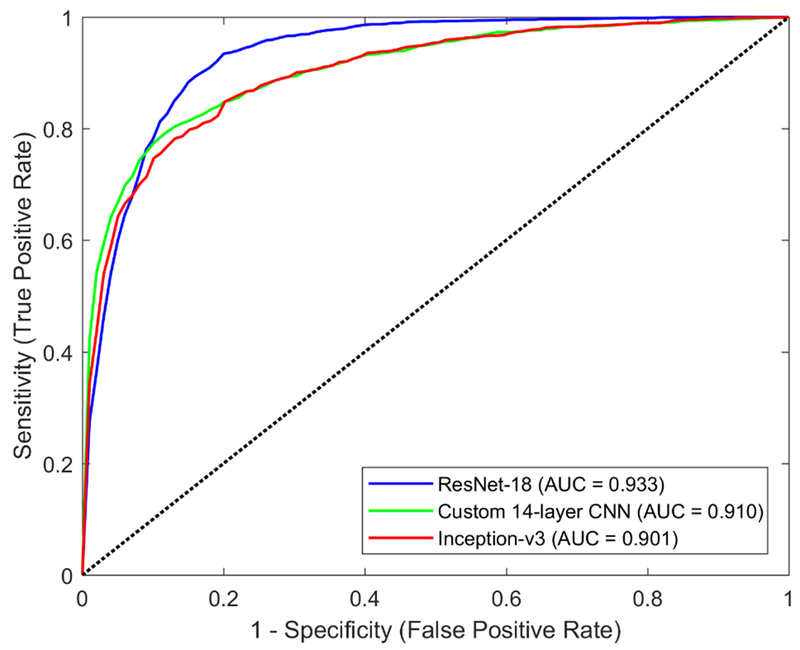

For detecting gonioscopic angle closure in the cross-validation dataset, the ResNet-18 classifier achieved the best performance, with an AUC of 0.933 (95% confidence interval, 0.925-0.941) (Figure 2). The Inception-v3 and custom CNN classifiers had AUCs of 0.901 (95% confidence interval, 0.892-0.910) and 0.910 (95% confidence interval, 0.902-0.918), respectively (Figure 2). The predictive accuracy of the ResNet-18 classifier for gonioscopic angle closure among angle quadrants with examiner-assigned Shaffer grades 0 and 1 was 98.4% (95% confidence interval, 97.9-98.9%) and 89.1% (95% confidence interval, 85.4-92.8%), respectively. Its predictive accuracy for gonioscopic open angle among angle quadrants with examiner-assigned Shaffer grades 2, 3, and 4 was 40.0% (95% confidence interval, 33.9-46.1%), 87.4% (95% confidence interval, 84.5-90.3%), and 98.9% (95% confidence interval, 97.9-99.9%), respectively.

Figure 2:

ROC curves of three competing classifiers for detecting gonioscopic angle closure developed using different deep learning architectures: ResNet-18 (blue, AUC = 0.933), custom 14-layer CNN (green, AUC = 0.910), Inception-v3 (red, AUC = 0.901). Performance was evaluated on the cross-validation dataset.

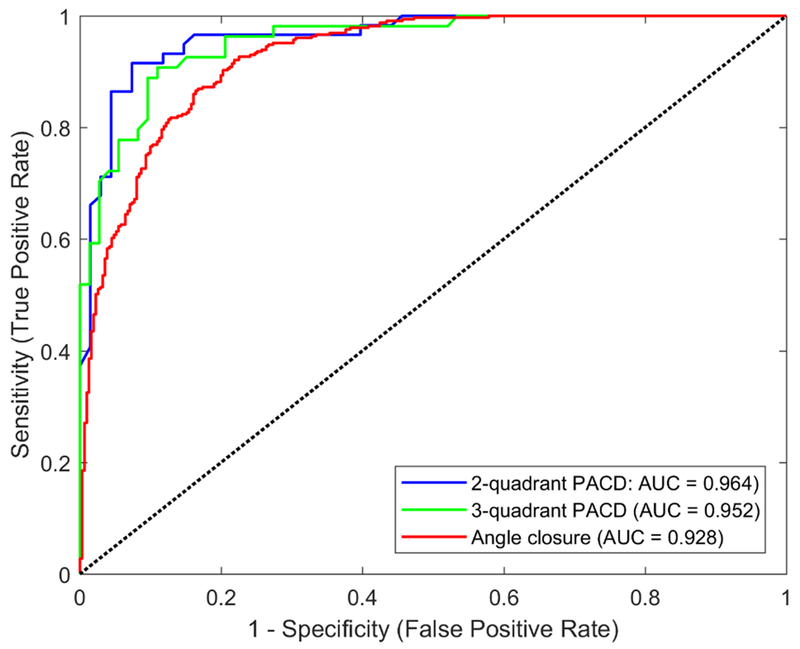

For detecting gonioscopic angle closure in the test dataset, the ResNet-18 classifier had an AUC of 0.928 (Figure 3). For detecting PACD based on the two- and three-quadrant definitions, the ResNet-18 classifier achieved an AUC of 0.964 and 0.952, respectively, on the test dataset (Figure 3).

Figure 3:

ROC curves of the ResNet-18 classifier for detecting gonioscopic angle closure (red, AUC = 0.928) and PACD based on either the two- (blue, AUC = 0.964) or three-quadrant (green, AUC = 0.952) definition. Performance was evaluated on the test dataset.

The AUCs of the ResNet-18 classifier increased rapidly and then plateaued when it was re-trained with progressively larger subsets of the cross-validation dataset (Supplementary Figure 3). AUCs saturated at approximately 25% of the total cross-validation dataset.

Figure 4:

Representative saliency maps highlight the pixels that are most discriminative in the prediction of angle closure status by the ResNet-18 classifier. Colormap indicates colors in descending order of salience: white, yellow, red, black.

Saliency maps indicated that the ResNet-18 classifier focused primarily on the ACA when detecting images with gonioscopic angle closure (Figure 4).

Discussion

In this study, we developed and tested deep learning classifiers that detect gonioscopic angle closure and PACD based on automated analysis of AS-OCT images. The best-performing ResNet-18 classifier achieved excellent performance, especially in eyes with the highest or lowest degrees of gonioscopic angle closure. Saliency maps demonstrate that the ResNet-18 classifier focuses on portions of images that contain salient biometric features. To our knowledge, these are the first fully automated deep learning classifiers for detecting gonioscopic angle closure. We believe these findings have important implications for modernizing clinical evaluations of the ACA and reducing barriers to eyecare in populations at high risk for PACD.

Gonioscopy, the current clinical standard for assessing the ACA, relies on an examiner’s ability to identify specific anatomical structures. Deep learning classifiers developed in this study performed “automated gonioscopy” based on AS-OCT images and achieved performance approximating that of a highly experienced ophthalmologist (D.W.) who performed nearly 4000 gonioscopic examinations during CHES alone. The development of these classifiers is significant for several reasons. First, gonioscopy is highly dependent on examiner expertise whereas AS-OCT imaging is less skill dependent and can be performed by a trained technician. An automated OCT-based ACA assessment method could facilitate community-based screening for PACD, which is an urgent need since the majority of PACD occurs in regions of the world with limited access to eyecare.1 Once PACD has been detected by automated methods, eyecare providers can evaluate patients for advanced clinical features, such as peripheral anterior synechiae (PAS), elevated IOP, or glaucomatous optic neuropathy, and administer necessary interventions. Second, gonioscopy is time-consuming and underperformed by eyecare providers, leading to misdiagnosis and mismanagement of ocular hypertension and glaucoma.32,33 An automated ACA assessment method could increase examination compliance and clinical efficiency by alleviating the burden of manual gonioscopy in the majority of patients. Third, a standardized computational approach could eliminate inter-examiner variability associated with gonioscopy. Finally, an automated interpretation method could validate widespread clinical adoption of AS-OCT, which is currently limited due to time and expertise needed for manual image analysis.

Prior efforts have utilized other automated methods to detect gonioscopic angle closure and PACD. Two semi-automated machine learning classifiers applied logistic regression algorithms to detect gonioscopic angle closure based on AS-OCT measurements of biometric risk factors.23,24 These classifiers, which required manual annotation of the scleral spur, achieved AUCs of 0.94 and 0.957 based on the two-quadrant definition of PACD. Classifiers utilizing feature-based learning methods to automatically localize and analyze the ACA on AS-OCT images achieved mixed performance, with AUCs ranging from 0.821 to 0.921.34 The fact that a deep learning classifier with no instruction on the saliency of anatomical structures or features performed better than classifiers based on known biometric risk factors further supports the role of deep learning methods for detecting clinical disease.

The performance of deep learning classifiers is limited by the reproducibility of “ground truth” clinical labels and inherent uncertainty of predicted diagnoses. There are limited studies on inter- and intra-examiner reproducibility of gonioscopic angle closure diagnosis, and these metrics could not be calculated for the CHES examiners based on available data.9 However, we were able to estimate the contribution of clinical uncertainty to classifier performance by calculating prediction accuracy segregated by examiner-assigned Shaffer grade. The highest accuracy of predicting gonioscopic angle closure was among images corresponding to grades 0 and 4 followed by grades 1 and 3, which intuitively correlates with degree of examiner certainty. The accuracy of predicting images corresponding to grade 2 as open was close to chance, likely because examiner certainty is low and detection of pigmented TM is highly dependent on dynamic examination techniques (e.g. lens tilting, indentation) that are difficult to model. However, this distribution of performance is reassuring since images with the highest degree of angle closure are also classified with the highest degree of accuracy.

The ResNet-18 classifier was developed to identify gonioscopic angle closure in individual angle quadrants and then adapted to detect PACD. While this differs from previous machine learning classifiers that detected PACD based on AS-OCT measurements, there are several reasons for this approach.23,24 Classifiers for individual angle quadrants replicate the clinical experience of performing gonioscopy quadrant by quadrant. In addition, the clinical significance of current definitions of PACD, originally conceived for epidemiological studies, is incompletely understood.25 Single-quadrant classifiers provide increased flexibility; the definition of PACD could be refined in the future as the predictive values of current definitions are revealed by longitudinal studies.35

One challenge in practical applications of deep learning is identifying the features that classifiers evaluate to make their predictions. We computed saliency maps to visualize which pixels were utilized by the ResNet-18 classifier to make its predictions. The maps support previous studies that identified features related to the iridocorneal junction as highly discriminative for gonioscopic angle closure.36 Interestingly, the maps appear to ignore LV, an important risk factor for gonioscopic angle closure, likely because LV cannot be deduced based on half of a cross-sectional AS-OCT image.11,12 In addition, the maps appear to incorporate variable portions of the iris, which supports iris thickness or curvature as important discriminative features in some eyes.13,14 However, further work is needed to identify the precise features that enable image-based classifiers to out-perform measurement-based classifiers.24

Our approach to classifier development included measures to optimize performance and limit biases associated with deep learning methodology. First, we developed three competing classifiers using different CNN architectures. The ResNet-18 architecture was ultimately superior to the other two approaches, likely because transfer learning allowed ResNet-18 to take advantage of pre-trained weights compared to the custom 14-layer architecture, while its relatively shallow depth may have reduced overfitting compared to the Inception-v3 architecture. Second, recent studies applying deep learning methods to detect eye disease used hundreds of thousands of images as inputs.37–39 The size of our image database was limited to CHES subjects and was relatively small, which could have limited classifier performance. However, the benefit of using additional images to train the ResNet-18 classifier was minimal above 25% of the cross-validation dataset, suggesting that relatively few images are needed to train this type of classifier. Third, we minimized the likelihood of classifier bias toward closed or open angles by balancing the number of images in the two groups. Finally, we accounted for the effects of inter- and intra-eye correlations by segregating subjects into either the cross-validation or test dataset, which did not significantly affect classifier performance on the test dataset.

Our study has some limitations. Gonioscopy in CHES was performed primarily by one expert examiner (D.W.), which could limit classifier generalizability due to inherent inter-examiner differences in gonioscopy grading. In addition, all subjects in CHES were self-identified as Chinese-American, which could limit classifier performance among patients of other ethnicities. However, the excellent performance demonstrated by the ResNet-18 CNN architecture strongly suggests that the same method could be effectively applied to clinical labels from gonioscopy performed by a panel of experts on a multi-ethnic cohort. While there are logistical challenges to this approach, such as patient discomfort and alterations of ocular biometry associated with multiple consecutive gonioscopic examinations, it would likely alleviate issues related to classifier generalizability. Finally, our classifiers were trained using only one AS-OCT image per quadrant, similar to previous classifiers based on AS-OCT measurements.23,24 There are eyes in which the ACA is open in one portion of a quadrant but closed in another. While the ResNet-18 classifier demonstrated excellent performance, it is possible that a more complex classifier developed using multiple images per quadrant could achieve even greater performance.

In this study, we developed fully automated deep learning classifiers for detecting eyes with gonioscopic angle closure and PACD that achieve favorable performance compared to previous manual and semi-automated methods. Recent work demonstrated that the vast majority of eyes with early PACD do not progress even in the absence of treatment with LPI.40 Therefore, future directions of research should include efforts to develop automated methods that identify the subset of patients with PACD who are at high risk for elevated IOP and glaucomatous optic neuropathy.41 We hope this study prompts further development of automated clinical methods that improve and modernize the detection and management of PACD.

Supplementary Material

Supplementary Figure 1: Schematic of custom 14-layer CNN architecture.

Supplementary Figure 2: Flow diagram of CHES subjects, eyes, and images recruited to and excluded from the study. Each image was paired with its corresponding gonioscopy grade.

Supplementary Figure 3: Effect of training dataset size on classifier performance. The ResNet-18 classifier was trained using different-sized subsets of the training dataset. Performance was evaluated on the test dataset.

Acknowledgements

This work was supported by grants U10 EY017337, K23 EY029763, and P30 EY029220 from the National Eye Institute, National Institutes of Health, Bethesda, Maryland, and an unrestricted grant to the Department of Ophthalmology from Research to Prevent Blindness, New York, NY. Rohit Varma is a Research to Prevent Blindness Sybil B. Harrington Scholar.

We would like to thank Drs. Dandan Wang (D.W.) and Carlos L. Gonzalez (C.L.G.) for performing eye examinations, including gonioscopy and AS-OCT imaging, during CHES.

Disclosures

Benjamin Y. Xu, Michael Chiang, Shreyasi Chaudhary, Shraddha Kulkarni, Anmol A. Pardeshi have no financial disclosures. Rohit Varma is a consultant for Allegro Inc., Allergan, and Bausch Health Companies Inc.

References

- 1.Tham YC, Li X, Wong TY, Quigley HA, Aung T, Cheng CY. Global prevalence of glaucoma and projections of glaucoma burden through 2040: A systematic review and meta-analysis. Ophthalmology. 2014;121(11):2081–2090. [DOI] [PubMed] [Google Scholar]

- 2.Liang Y, Friedman DS, Zhou Q, et al. Prevalence and characteristics of primary angle-closure diseases in a rural adult Chinese population: The Handan eye study. Investig Ophthalmol Vis Sci. 2011;52(12):8672–8679. [DOI] [PubMed] [Google Scholar]

- 3.Sawaguchi S, Sakai H, Iwase A, et al. Prevalence of primary angle closure and primary angle-closure glaucoma in a southwestern rural population of Japan: The Kumejima study. Ophthalmology. 2012;119(6): 1134–1142. [DOI] [PubMed] [Google Scholar]

- 4.He M, Friedman DS, Ge J, et al. Laser Peripheral Iridotomy in Primary Angle-Closure Suspects: Biometric and Gonioscopic Outcomes. The Liwan Eye Study. Ophthalmology. 2007;114(3):494–500. [DOI] [PubMed] [Google Scholar]

- 5.Azuara-Blanco A, Burr J, Ramsay C, et al. Effectiveness of early lens extraction for the treatment of primary angle-closure glaucoma (EAGLE): a randomised controlled trial. Lancet. 2016;388(10052): 1389–1397. [DOI] [PubMed] [Google Scholar]

- 6.Radhakrishnan S, Chen PP, Junk AK, Nouri-Mahdavi K, Chen TC. Laser Peripheral Iridotomy in Primary Angle Closure: A Report by the American Academy of Ophthalmology. Ophthalmology. 2018; 125(7): 1110–1120. [DOI] [PubMed] [Google Scholar]

- 7.Thomas R Five year risk of progression of primary angle closure suspects to primary angle closure: a population based study. Br J Ophthalmol. 2003;87(4):450–454. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Thomas R, Parikh R, Muliyil J, Kumar RS. Five-year risk of progression of primary angle closure to primary angle closure glaucoma: A population-based study. Acta Ophthalmol Scand. 2003;81(5):480–485. [DOI] [PubMed] [Google Scholar]

- 9.Rigi M, Bell NP, Lee DA, et al. Agreement between Gonioscopic Examination and Swept Source Fourier Domain Anterior Segment Optical Coherence Tomography Imaging. J Ophthalmol. 2016;2016:1727039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Izatt JA, Hee MR, Swanson EA, et al. Micrometer-Scale Resolution Imaging of the Anterior Eye In Vivo With Optical Coherence Tomography. Arch Ophthalmol. 1994; 112(12): 1584–1589. [DOI] [PubMed] [Google Scholar]

- 11.Nongpiur ME, He M, Amerasinghe N, et al. Lens vault, thickness, and position in chinese subjects with angle closure. Ophthalmology. 2011;118(3):474–479. [DOI] [PubMed] [Google Scholar]

- 12.Ozaki M, Nongpiur ME, Aung T, He M, Mizoguchi T. Increased lens vault as a risk factor for angle closure: Confirmation in a Japanese population. Graefe’s Arch Clin Exp Ophthalmol. 2012;250(12):1863–1868.

- 13.Wang B, Sakata LM, Friedman DS, et al. Quantitative Iris Parameters and Association with Narrow Angles. Ophthalmology. 2010;117(1):11–17.

- 14.Wang BS, Narayanaswamy A, Amerasinghe N, et al. Increased iris thickness and association with primary angle closure glaucoma. Br J Ophthalmol. 2011;95(1):46–50.

- 15.Maram J, Pan X, Sadda S, Francis B, Marion K, Chopra V. Reproducibility of angle metrics using the time-domain anterior segment optical coherence tomography: Intra-observer and inter-observer variability. Curr Eye Res. 2015;40(5):496–500.

- 16.Cumba RJ, Radhakrishnan S, Bell NP, et al. Reproducibility of scleral spur identification and angle measurements using Fourier domain anterior segment optical coherence tomography. J Ophthalmol. 2012;2012:1–14.

- 17.Liu S, Yu M, Ye C, Lam DSC, Leung CKS. Anterior chamber angle imaging with swept-source optical coherence tomography: An investigation on variability of angle measurement. Investig Ophthalmol Vis Sci. 2011;52(12):8598–8603.

- 18.Sakata LM, Lavanya R, Friedman DS, et al. Comparison of Gonioscopy and Anterior Segment Ocular Coherence Tomography in Detecting Angle Closure in Different Quadrants of the Anterior Chamber Angle. Ophthalmology. 2008;115(5):769–774.

- 19.Sharma R, Sharma A, Arora T, et al. Application of anterior segment optical coherence tomography in glaucoma. Surv Ophthalmol. 2014;59(3):311–327.

- 20.McKee H, Ye C, Yu M, Liu S, Lam DSC, Leung CKS. Anterior chamber angle imaging with swept-source optical coherence tomography: Detecting the scleral spur, Schwalbe’s line, and Schlemm’s canal. J Glaucoma. 2013;22(6):468–472.

- 21.Pan X, Marion K, Maram J, et al. Reproducibility of Anterior Segment Angle Metrics Measurements Derived From Cirrus Spectral Domain Optical Coherence Tomography. J Glaucoma. 2015;24(5):e47–e51.

- 22.Console JW, Sakata LM, Aung T, Friedman DS, He M. Quantitative analysis of anterior segment optical coherence tomography images: the Zhongshan Angle Assessment Program. Br J Ophthalmol. 2008;92(12):1612–1616.

- 23.Nongpiur ME, Haaland BA, Friedman DS, et al. Classification algorithms based on anterior segment optical coherence tomography measurements for detection of angle closure. Ophthalmology. 2013;120(1):48–54.

- 24.Nongpiur ME, Haaland BA, Perera SA, et al. Development of a score and probability estimate for detecting angle closure based on anterior segment optical coherence tomography. Am J Ophthalmol. 2014;157(1):32–38.e1.

- 25.Foster PJ, Buhrmann R, Quigley HA, Johnson GJ. The definition and classification of glaucoma in prevalence surveys. Br J Ophthalmol. 2002;86(2):238–242.

- 26.Khosla A, Krause J, Fei-Fei L, et al. ImageNet Large Scale Visual Recognition Challenge. Int J Comput Vis. 2015;115(3):211–252.

- 27.He K, Zhang X, Ren S, Sun J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans Pattern Anal Mach Intell. 2015;37(9):1904–1916.

- 28.Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng. 2010;22(10):1345–1359.

- 29.Loshchilov I, Hutter F. SGDR: Stochastic Gradient Descent with Warm Restarts. August 2016.

- 30.Abidin AZ, Deng B, DSouza AM, Nagarajan MB, Coan P, Wismüller A. Deep transfer learning for characterizing chondrocyte patterns in phase contrast X-Ray computed tomography images of the human patellar cartilage. Comput Biol Med. 2018;95:24–33.

- 31.Karabulut N. Inaccurate citations in biomedical journalism: Effect on the impact factor of the American Journal of Roentgenology. Am J Roentgenol. 2017;208(3):472–474.

- 32.Coleman AL, Yu F, Evans SJ. Use of Gonioscopy in Medicare Beneficiaries Before Glaucoma Surgery. J Glaucoma. 2006;15(6):486–493.

- 33.Varma DK, Simpson SM, Rai AS, Ahmed IIK. Undetected angle closure in patients with a diagnosis of open-angle glaucoma. Can J Ophthalmol. 2017;52(4):373–378.

- 34.Xu Y, Liu J, Cheng J, et al. Automated anterior chamber angle localization and glaucoma type classification in OCT images. In: 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE; 2013:7380–7383.

- 35.Jiang Y, Friedman DS, He M, Huang S, Kong X, Foster PJ. Design and methodology of a randomized controlled trial of laser iridotomy for the prevention of angle closure in Southern China: The Zhongshan angle closure prevention trial. Ophthalmic Epidemiol. 2010;17(5):321–332.

- 36.Narayanaswamy A, Sakata LM, He MG, et al. Diagnostic performance of anterior chamber angle measurements for detecting eyes with narrow angles: An anterior segment OCT study. Arch Ophthalmol. 2010;128(10):1321–1327.

- 37.Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–2410.

- 38.Ting DSW, Cheung CYL, Lim G, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318(22):2211–2223.

- 39.Baxter SL, Duan Y, Zhang CL, et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell. 2018;172(5):1122–1131.e9.

- 40.He M, Jiang Y, Huang S, et al. Laser peripheral iridotomy for the prevention of angle closure: a single-centre, randomised controlled trial. Lancet. 2019;0(0).

- 41.Xu BY, Burkemper B, Lewinger JP, et al. Correlation Between Intraocular Pressure and Angle Configuration Measured by Optical Coherence Tomography: The Chinese American Eye Study. Ophthalmol Glaucoma. 2018;1:158–166.

Supplementary Materials

Supplementary Figure 1: Schematic of custom 14-layer CNN architecture.

Supplementary Figure 2: Flow diagram of CHES subjects, eyes, and images recruited to and excluded from the study. Each image was paired with its corresponding gonioscopy grade.

Supplementary Figure 3: Effect of training dataset size on classifier performance. The ResNet-18 classifier was trained using different-sized subsets of the training dataset. Performance was evaluated on the test dataset.