Abstract

Adequate tumor detection is critical for complete transurethral resection of bladder tumor (TURBT) to reduce cancer recurrence, but up to 20% of bladder tumors are missed by standard white light cystoscopy. Deep learning–augmented cystoscopy may improve tumor localization, intraoperative navigation, and surgical resection of bladder cancer. We aimed to develop a deep learning algorithm for augmented cystoscopic detection of bladder cancer. Patients undergoing cystoscopy/TURBT were recruited, and white light videos were recorded. Video frames containing histologically confirmed papillary urothelial carcinoma were selected and manually annotated. We constructed CystoNet, an image analysis platform based on convolutional neural networks, for automated bladder tumor detection, using a development dataset of 95 patients for algorithm training and five patients for testing. Diagnostic performance of CystoNet was validated prospectively in an additional 54 patients. In the validation dataset, per-frame sensitivity and specificity were 90.9% (95% confidence interval [CI], 90.3–91.6%) and 98.6% (95% CI, 98.5–98.8%), respectively. Per-tumor sensitivity was 95.5% (95% CI, 84.5–99.4%). CystoNet detected 39 of 41 papillary and three of three flat bladder cancers. With high sensitivity and specificity, CystoNet may improve the diagnostic yield of cystoscopy and the efficacy of TURBT.

Patient summary:

Conventional cystoscopy has recognized shortcomings in bladder cancer detection, with implications for recurrence. Cystoscopy augmented with artificial intelligence may improve cancer detection and resection.

Keywords: Bladder cancer, Computer-assisted image analysis, Cystoscopy, Deep learning, Diagnostic imaging

Bladder cancer is the ninth most common malignancy globally, with an estimated 430 000 new diagnoses annually [1]. Standard diagnosis and surveillance of bladder cancer rely on white light cystoscopy (WLC), and over 2 million cystoscopies are performed annually in the USA and Europe [2]. Suspicious findings prompt transurethral resection of bladder tumor (TURBT) in the operating room for diagnosis and staging. Non–muscle-invasive bladder cancer accounts for 75% of new diagnoses and is typically managed endoscopically [3]. High recurrence rates necessitate frequent surveillance and intervention.

Identification and complete resection of non–muscle-invasive bladder cancer reduce recurrence and progression, yet up to 40% of patients with multifocal disease have an incomplete initial resection [4]. A substantial proportion of papillary tumors and flat lesions that are difficult to discern on WLC are visible with blue light cystoscopy [5]. While blue light cystoscopy improves tumor detection and reduces recurrence, it requires preoperative intravesical instillation of hexaminolevulinate and specialized fluorescence cystoscopes [6]. Despite its demonstrated benefit, adoption of blue light cystoscopy remains modest. Low-cost, noninvasive, easily adoptable adjunct imaging technologies are needed to address the diagnostic shortcomings of WLC.

Recent advances in deep learning–based automated image processing may help address the limitations of cystoscopy and TURBT. Convolutional neural networks, which can learn complex relationships in image data and incorporate existing knowledge into an inference model, are particularly promising. We used convolutional neural networks to develop CystoNet, a deep learning algorithm for augmented bladder cancer detection under WLC.

With institutional review board approval and informed participant consent, white light videos were collected between 2016 and 2019 from patients undergoing clinic flexible cystoscopy and TURBT. Cystoscopies without suspicious lesions, or with biopsies showing benign histology, were classified as normal. The algorithm development dataset consisted of 141 videos from 100 patients who underwent TURBT. Video frames containing pathologically confirmed papillary urothelial carcinoma were selected, and tumors were outlined using LabelMe [7]. Flat lesions were excluded because their margins could not be annotated accurately. The bladder neck, ureteral orifices, and air bubbles were also labeled so that the algorithm could learn to exclude them (exclusion learning).
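The paper does not describe how the LabelMe outlines were converted into training targets. One common approach, sketched here as an assumption rather than the authors' pipeline, is to rasterize each annotated polygon into a per-pixel class mask, giving the tumor and the exclusion structures (bladder neck, ureteral orifices, air bubbles) their own class indices. The label strings, the `CLASS_IDS` encoding, and the function names are all hypothetical, and parsing of LabelMe's annotation files is deliberately left out: polygons are taken as plain lists of (x, y) vertices.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical class indices: the paper names these structures but not any encoding.
CLASS_IDS: Dict[str, int] = {
    "tumor": 1,
    "bladder_neck": 2,
    "ureteral_orifice": 2,
    "air_bubble": 2,
}


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Even-odd rule: cast a ray toward +x and count how many edges it crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge spans the ray's y coordinate (so y1 != y2)
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(shapes: Sequence[Tuple[str, Sequence[Point]]],
              width: int, height: int) -> List[List[int]]:
    """Build a height x width class mask; later shapes overwrite earlier ones."""
    mask = [[0] * width for _ in range(height)]
    for label, polygon in shapes:
        class_id = CLASS_IDS.get(label, 0)  # unknown labels are painted as background (0)
        for row in range(height):
            for col in range(width):
                # Sample each pixel at its center.
                if point_in_polygon(col + 0.5, row + 0.5, polygon):
                    mask[row][col] = class_id
    return mask


# Hypothetical 8 x 8 frame with a square tumor outline.
tumor_outline = [(2, 2), (6, 2), (6, 6), (2, 6)]
mask = rasterize([("tumor", tumor_outline)], width=8, height=8)
print(sum(map(sum, mask)))  # 16 tumor pixels
```

The pure-Python double loop is chosen for readability; real video frames would call for a vectorized rasterizer.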

Our image analysis platform, CystoNet, was trained and tested using the annotated development dataset (Supplementary Fig. 1). The training set contained 2335 frames of normal/benign bladder mucosa and 417 labeled frames containing histologically confirmed papillary urothelial carcinoma. Model performance in the test set was evaluated across a range of probability thresholds, and a threshold of 0.98 was selected to denote cancer presence (Supplementary Fig. 2). In the development test set, per-frame sensitivity for tumor detection was 88.2% (95% confidence interval [CI], 83.0–92.2%) and per-frame specificity was 99.0% (95% CI, 98.2–99.5%); nine of 10 tumors were correctly identified (Table 1).
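The per-frame figures above reduce to counting true and false positives and negatives at a fixed probability threshold. The sketch below is illustrative only. It assumes a single probability score per frame compared against the 0.98 threshold with `>=`, although the paper does not say whether the comparison is inclusive. It also ignores that CystoNet's true-positive definition additionally requires the alert box to contain the lesion. Finally, the paper does not state which confidence interval method was used, so the exact Clopper-Pearson interval is assumed here. All function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), summing terms in log space to avoid overflow."""
    if k < 0:
        return 0.0
    if k >= n or p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for i in range(k + 1):
        log_term = (log_n_fact - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                    + i * log_p + (n - i) * log_q)
        total += math.exp(log_term)
    return min(total, 1.0)


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact two-sided (1 - alpha) Clopper-Pearson interval for k successes in n trials."""

    def boundary(predicate) -> float:
        # Bisection for the p in [0, 1] at which a monotone predicate turns True.
        lo, hi = 0.0, 1.0
        for _ in range(60):
            mid = (lo + hi) / 2
            if predicate(mid):
                hi = mid
            else:
                lo = mid
        return (lo + hi) / 2

    # Lower bound solves P(X >= k | p) = alpha/2; this upper tail grows with p.
    lower = 0.0 if k == 0 else boundary(lambda p: 1.0 - binom_cdf(k - 1, n, p) >= alpha / 2)
    # Upper bound solves P(X <= k | p) = alpha/2; this lower tail shrinks with p.
    upper = 1.0 if k == n else boundary(lambda p: binom_cdf(k, n, p) <= alpha / 2)
    return lower, upper


def confusion_counts(scores: Sequence[float], is_tumor: Sequence[bool],
                     threshold: float = 0.98) -> Tuple[int, int, int, int]:
    """Frame-level (TP, FN, TN, FP); an alert is assumed to fire when score >= threshold."""
    tp = fn = tn = fp = 0
    for score, tumor in zip(scores, is_tumor):
        alert = score >= threshold
        if tumor and alert:
            tp += 1
        elif tumor:
            fn += 1
        elif alert:
            fp += 1
        else:
            tn += 1
    return tp, fn, tn, fp


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int,
                            alpha: float = 0.05) -> Dict[str, Tuple[float, Tuple[float, float]]]:
    """Point estimates with exact CIs: sensitivity = TP/(TP+FN), specificity = TN/(TN+FP)."""
    return {
        "sensitivity": (tp / (tp + fn), clopper_pearson(tp, tp + fn, alpha)),
        "specificity": (tn / (tn + fp), clopper_pearson(tn, tn + fp, alpha)),
    }


# Development test-set counts from Table 1.
metrics = sensitivity_specificity(tp=186, fn=25, tn=992, fp=10)
for name, (estimate, (lo, hi)) in metrics.items():
    print(f"{name}: {estimate:.1%} (95% CI, {lo:.1%} to {hi:.1%})")
```

Only the standard library is used. With SciPy available, the same Clopper-Pearson bounds come in closed form from `scipy.stats.beta.ppf(alpha / 2, k, n - k + 1)` (lower) and `scipy.stats.beta.ppf(1 - alpha / 2, k + 1, n - k)` (upper). Sweeping `threshold` in `confusion_counts` over a grid of values gives the sensitivity/specificity trade-off from which a cutoff such as 0.98 would be chosen.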

Table 1 – Patient demographics and tumor characteristics for development and prospective datasetsᵃ

| Characteristic | Development dataset: training set | Development dataset: test set | Validation dataset: normal cohort | Validation dataset: tumor cohort |
|---|---|---|---|---|
| Data acquisition | 2016–2018 | 2016–2018 | 2018–2019 | 2018–2019 |
| Source | TURBT | TURBT | Clinic cystoscopy | Clinic cystoscopy + TURBT |
| Patients | 95 | 5 | 31 | 23 |
| Tumor histology (number of tumors) | 142 (42 LG Ta, 54 HG Ta, 15 HG T1, 9 HG T2) | 10 (1 LG Ta, 7 HG Ta, 2 HG T1) | – | 44 (13 LG Ta, 15 HG Ta, 9 HG T1, 3 HG T2, 3 CIS, 1 inverted papilloma) |
| Videos | 136 | 5 | 31 | 26ᵇ |
| Normal frames | 2335 | 1002 | 20 643 | 31 330 |
| Tumor frames | 417 | 211 | – | 7542 |
| True positivesᶜ | – | 186 | – | 6857 |
| False negativesᵈ | – | 25 | – | 685 |
| True negativesᵉ | – | 992 | 20 359 | 23 382 |
| False positivesᶠ | – | 10 | 284 | 406 |
| Per-frame sensitivity | – | 88.2% (95% CI, 83.0–92.2%) | – | 90.9% (95% CI, 90.3–91.6%) |
| Per-tumor sensitivityᵍ | – | – | – | 95.5% (95% CI, 84.5–99.4%) |
| Per-frame specificity | – | 99.0% (95% CI, 98.2–99.5%) | 98.6% (95% CI, 98.5–98.8%) | – |

CI = confidence interval; CIS = carcinoma in situ; HG = high grade; LG = low grade; TURBT = transurethral resection of bladder tumor.

ᵃ Bladder cancer staging: Ta, T1, T2.

ᵇ Three patients underwent clinic flexible cystoscopy for diagnosis followed by transurethral resection of bladder tumor for treatment.

ᶜ True positives were defined as lesions contained within the algorithm-generated alert box that were histologically confirmed bladder cancers.

ᵈ False negatives were defined as frames containing histologically confirmed bladder cancers where the algorithm did not generate an alert.

ᵉ True negatives were defined as frames containing normal bladder mucosa (either biopsy proven benign or deemed normal by the practicing urologist and not biopsied) where the algorithm did not generate an alert.

ᶠ False positives were defined as frames containing normal bladder mucosa (either biopsy proven benign or deemed normal by the practicing urologist and not biopsied) where the algorithm generated an alert.

ᵍ Per-tumor sensitivity is defined as algorithm sensitivity for the detection of a histologically confirmed bladder cancer in at least one frame.
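Table 1 defines per-tumor sensitivity as detection of a histologically confirmed cancer in at least one frame. The sketch below shows how frame-level hits collapse into a per-tumor tally. Its input format, one hypothetical (tumor_id, hit) pair per frame in which a tumor is visible, is an assumption. It also does not reproduce how a hit was judged; Table 1 requires the lesion to lie within the alert box.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_tumor_sensitivity(frame_hits: Iterable[Tuple[str, bool]]) -> Tuple[int, int, float]:
    """Return (tumors detected, tumors total, sensitivity).

    `frame_hits` holds one (tumor_id, hit) pair per frame in which a
    histologically confirmed tumor is visible; `hit` is True when an alert
    box contained that tumor in that frame. A tumor counts as detected if
    it is hit in at least one frame.
    """
    detected: Dict[str, bool] = defaultdict(bool)
    for tumor_id, hit in frame_hits:
        # Reading the key registers the tumor even if it is never hit.
        detected[tumor_id] = detected[tumor_id] or hit
    n_total = len(detected)
    if n_total == 0:
        raise ValueError("no tumor frames supplied")
    n_detected = sum(detected.values())
    return n_detected, n_total, n_detected / n_total


# Hypothetical toy input: tumor "a" is missed in one frame but caught in the next.
frames = [("a", False), ("a", True), ("b", False), ("b", False), ("c", True)]
print(per_tumor_sensitivity(frames))  # 2 of 3 tumors detected
```

Because a single hit anywhere in the video suffices, per-tumor sensitivity is expected to be at least as high as per-frame sensitivity when tumors are seen in many frames.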

For validation, videos from an additional 54 patients undergoing either TURBT or clinic flexible cystoscopy were analyzed. All patients undergoing cystoscopy or TURBT for bladder cancer evaluation were eligible for recruitment, including patients with nonpapillary tumors. Full-length cystoscopy videos were evaluated using CystoNet, and sensitivity and specificity were determined after correlation with final histopathology.

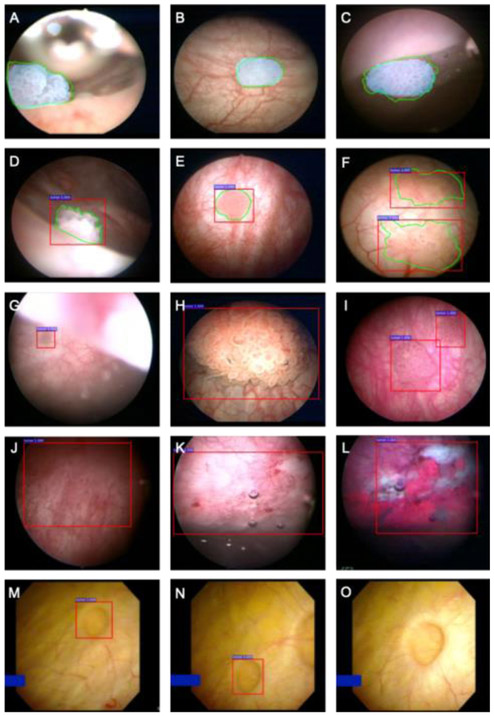

In the validation dataset, 31 patients had normal/benign bladder mucosa on cystoscopy (normal cohort). In the other 23 patients (tumor cohort), 26 videos were recorded and 44 tumors were identified (41 papillary urothelial carcinomas, three carcinoma in situ [CIS]). Representative examples of CystoNet overlays from clinic-based flexible cystoscopy and TURBT frames are shown in Figure 1 and Supplementary Videos 1 and 2. There was no significant difference in false alerts generated per cystoscopy between normal and tumor-containing cystoscopies (difference 0.1%; 95% CI, –0.9% to 1.4%; p = 0.856). Significantly more alerts were generated during cystoscopies with tumors than during normal cystoscopies (difference 12.5%; 95% CI, 10.3–14.6%; p < 0.001; Supplementary Table 1). Overall, CystoNet identified bladder cancer in the validation dataset with per-frame sensitivity and specificity of 90.9% (95% CI, 90.3–91.6%) and 98.6% (95% CI, 98.5–98.8%), respectively, and a per-tumor sensitivity of 95.5% (95% CI, 84.5–99.4%; Table 1).
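The validation point estimates can be checked directly against the frame counts in Table 1 and the abstract's tally of 39 of 41 papillary and three of three flat cancers detected; specificity here uses the normal cohort:

```latex
\begin{aligned}
\text{Per-frame sensitivity} &= \frac{TP}{TP + FN} = \frac{6857}{6857 + 685} = \frac{6857}{7542} \approx 90.9\% \\
\text{Per-frame specificity} &= \frac{TN}{TN + FP} = \frac{20\,359}{20\,359 + 284} = \frac{20\,359}{20\,643} \approx 98.6\% \\
\text{Per-tumor sensitivity} &= \frac{39 + 3}{41 + 3} = \frac{42}{44} \approx 95.5\%
\end{aligned}
```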

Fig. 1.

Representative bladder cancer detection using CystoNet. Green outlines (A–F) represent manual tumor annotation, blue shading (A–C) indicates algorithm-driven automated tumor segmentation, and red boxes (D–N) indicate alerts generated by CystoNet. CystoNet tumor segmentation (blue) and manual outline (green) of small papillary tumors located at the (A) bladder dome, (B) posterior wall, and (C) anterior wall. CystoNet alerts (red) and corresponding manual annotations (green) are shown for (D and E) small solitary tumors and (F) larger, multifocal tumors. In the validation cohort, automated detection of (G) a small tumor at the dome as seen from the bladder neck, (H) a large papillary tumor, and (I) a multifocal papillary tumor with limited background contrast. (J) CystoNet detection under WLC of a flat lesion pathologically confirmed to be carcinoma in situ; (K) another example of carcinoma in situ, with (L) the corresponding photodynamic diagnosis under blue light cystoscopy. (M and N) False-positive CystoNet alert on a small bladder diverticulum; (O) as the cystoscope is moved closer to inspect the diverticulum, the alert box disappears, suggesting a benign lesion.

WLC = white light cystoscopy.

For development and validation of CystoNet, the cystoscopy videos analyzed were representative of clinical practice: low- and high-grade cancers, tumor sizes ranging from a few millimeters to over 5 cm, solitary and multifocal tumors, and varying degrees of cystoscopic visibility. Although CystoNet was trained only on papillary urothelial tumors, it correctly identified all three cases of CIS in the prospective cohort, suggesting that papillary and flat bladder cancers may share detectable features. Further work is needed to determine algorithm performance across a variety of flat lesions.

Prior artificial intelligence–assisted WLC has focused on analysis of static bladder images. One approach used a color segmentation system with good sensitivity for tumor identification but a false-positive rate of 50% [8]. Another achieved high sensitivity and specificity for cystoscopic image classification using convolutional neural networks, but training and validation relied on a highly curated, previously published image atlas, limiting clinical translation [9]. Because CystoNet was developed from cystoscopy videos rather than static images, real-time integration during cystoscopy and TURBT is possible. Dynamic overlays of regions of interest hold promise for improving the diagnostic yield of cystoscopy and the thoroughness of bladder tumor resection.

There are several limitations to our work. While bladder tumors were defined histopathologically, “normal” was based on cystoscopic interpretation without tissue diagnosis. For CystoNet to detect bladder cancer, the tumor must be within the visual field of the cystoscope. Although the number of patients in the training set was small, cystoscopy videos from 95 patients provided 2752 frames for algorithm development, which was sufficient to distinguish cancer from benign lesions with high accuracy, likely owing to the relative homogeneity of gross tumor structure. However, a larger training set is needed to subclassify benign and malignant lesions. Despite these limitations, this study represents a critical step toward computer-augmented cystoscopy and TURBT.

In conclusion, we have created a deep learning algorithm that accurately detects bladder cancer. Because cystoscopic tumor detection is affected by clinician experience, clarity of the visual field, and tumor characteristics including size, morphology, and location, CystoNet-augmented cystoscopy has the potential to aid training and diagnostic decision-making and to standardize performance across providers, noninvasively and without costly specialized equipment. Further, as demand rises with an aging population, deep learning algorithms such as CystoNet may improve the quality and availability of cystoscopy globally by enabling providers with limited experience to perform high-quality cystoscopy and by facilitating a streamlined quality control process [10].

Supplementary Material

Acknowledgments:

We thank E. So, J. Crotty, T. Metzner, and B. Robinson for assistance with data collection.

Funding/Support and role of the sponsor: Lei Xing received funding support from NIH R01 CA227713. Joseph C. Liao received support from Stanford University Department of Urology.

Footnotes

Financial disclosures: Joseph C. Liao certifies that all conflicts of interest, including specific financial interests and relationships and affiliations relevant to the subject matter or materials discussed in the manuscript (eg, employment/affiliation, grants or funding, consultancies, honoraria, stock ownership or options, expert testimony, royalties, or patents filed, received, or pending), are the following: None.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- [1] Santoni G, Morelli MB, Amantini C, Battelli N. Urinary markers in bladder cancer: an update. Front Oncol 2018;8:1–9.

- [2] Svatek RS, Hollenbeck BK, Holmäng S, et al. The economics of bladder cancer: costs and considerations of caring for this disease. Eur Urol 2014;66:253–62.

- [3] Chang TC, Marcq G, Kiss B, Trivedi DR, Mach KE, Liao JC. Image-guided transurethral resection of bladder tumors – current practice and future outlooks. Bladder Cancer 2017;3:149–59.

- [4] Witjes JA, Redorta JP, Jacqmin D, et al. Hexaminolevulinate-guided fluorescence cystoscopy in the diagnosis and follow-up of patients with non-muscle-invasive bladder cancer: review of the evidence and recommendations. Eur Urol 2010;57:607–14.

- [5] Burger M, Grossman HB, Droller M, et al. Photodynamic diagnosis of non-muscle-invasive bladder cancer with hexaminolevulinate cystoscopy: a meta-analysis of detection and recurrence based on raw data. Eur Urol 2013;64:846–54.

- [6] Greco F, Cadeddu JA, Gill IS, et al. Current perspectives in the use of molecular imaging to target surgical treatments for genitourinary cancers. Eur Urol 2014;65:947–64.

- [7] Russell BC, Torralba A, Murphy KP, Freeman WT. LabelMe: a database and web-based tool for image annotation. Int J Comput Vision 2008;77:157–73.

- [8] Gosnell ME, Polikarpov DM, Res M, et al. Computer-assisted cystoscopy diagnosis of bladder cancer. Urol Oncol Semin Orig Investig 2018;36:8.e9–15.

- [9] Eminaga O, Eminaga N, Semjonow A, Bred B. Diagnostic classification of cystoscopic images using deep convolutional neural networks. JCO Clin Cancer Informatics 2018;2:1–8.

- [10] McKibben MJ, Kirby EW, Langston J, et al. Projecting the urology workforce over the next 20 years. Urology 2016;98:21–6.
