Keywords: attention, cortical state, decoding behavior, motor planning, somatosensory cortex, vibrissae

Abstract

A central function of the brain is to plan, predict, and imagine the effect of movement in a dynamically changing environment. Here we show that in mice head-fixed in a plus-maze, floating on air, and trained to pick lanes based on visual stimuli, the asymmetric movement and position of whiskers on the two sides of the face signal whether the animal is moving, turning, expecting reward, or licking. We show that (1) whisking asymmetry is coordinated with behavioral state, and that behavioral state can be decoded and predicted based on asymmetry, (2) even in the absence of tactile input, whisker positioning and asymmetry nevertheless relate to behavioral state, and (3) movement of the nose correlates with asymmetry, indicating that facial expression of the mouse is itself correlated with behavioral state. These results indicate that the movement of whiskers, a behavior that is not instructed or necessary in the task, can inform an observer about what a mouse is doing in the maze. Thus, the position of these mobile tactile sensors reflects a behavioral and movement-preparation state of the mouse.

SIGNIFICANCE STATEMENT Behavior is a sequence of movements, where each movement can be related to or can trigger a set of other actions. Here we show that, in mice, the movement of whiskers (tactile sensors used to extract information about texture and location of objects) is coordinated with and predicts the behavioral state of mice: that is, what mice are doing, where they are in space, and where they are in the sequence of behaviors.

Introduction

One of the principal functions of the brain is to control movement (Wolpert and Ghahramani, 2000; Llinas, 2015; Wolpert and Landy, 2012). According to one view, brains may even have evolved for the sole purpose of guiding and predicting the effect of movement (Llinas, 2015). Whether or not the brain evolved for motor control, it is clear that the activity of many brain circuits is intimately linked to movement (Fetz, 1994) and that movement can involve many sensory-motor modalities. For example, the simple act of reaching to touch an object requires postural adjustments, and motion of the head, eye, and limbs, with the eyes often moving first (Barnes, 1979; Anastasopoulos et al., 2009).

The rodent whisker system is a multimodal sensory-motor system. While it is often called a model sensory system (van der Loos and Woolsey, 1973), where each whisker is associated with 1000s of neurons in the trigeminal somatosensory pathways, this system is indeed also a model motor system, with single muscles associated with each whisker (Dörfl, 1982; Grinevich et al., 2005; Haidarliu et al., 2013). Not only do mice have the potential to control the motion of these tactile sensors individually, but the motion of the whiskers is often coordinated with motion of the head (Sachdev et al., 2002; Towal and Hartmann, 2006; Mitchinson et al., 2011; Grant et al., 2012b; Mitchinson and Prescott, 2013; Schroeder and Ritt, 2016). Additionally, whisking can be triggered by sniffing, chewing, licking, and walking (Welker, 1964; Deschênes et al., 2012; Grant et al., 2012a; Arkley et al., 2014; Sofroniew et al., 2014). Whisking can also be used to detect the location of objects, it can be used in social contexts, and it can predict direction of movement of the freely moving and head-fixed animal (Krupa et al., 2004; Sellien et al., 2005; Knutsen et al., 2006; Godde et al., 2010; Prescott et al., 2011; Cao et al., 2012; Grant et al., 2012b; Arkley et al., 2014; Reimer et al., 2014; Sofroniew et al., 2014; Lenschow and Brecht, 2015; Saraf-Sinik et al., 2015; Voigts et al., 2015). Together, this earlier work indicates that rodents move their whiskers in a variety of contexts. Specifically, they move their whiskers while navigating through and exploring their environment, during social interactions, and when they change their facial expression.

The sensory motor circuits dedicated to whiskers are often studied in the context of active sensation (i.e., when the whiskers are moved to touch and detect objects or to discriminate between objects). Even though a lot is known about whisker use, most earlier studies in head-fixed rodents have limited their observations to simple preparations. They have avoided studying whisker use in complex environments that have walls, contours, and textures (but see Sofroniew et al., 2014). While the development of virtual reality systems has increased the complexity of behaviors used in head-fixed rodents, virtual systems are predominantly geared toward creating virtual visual worlds around animals (Hölscher et al., 2005; Harvey et al., 2009). Here we use an alternative platform that floats on air, one in which head-fixed mice are trained to search for a dark lane. As they navigate the environment, they can touch, manipulate, and experience it (Nashaat et al., 2016; Voigts and Harnett, 2018). Here we began by looking for stereotypy in whisking during different behavioral epochs that occur in the course of the task. Once stereotypy became evident, we tested the obvious hypothesis that asymmetric whisking reflected tactile input from whiskers. Our work reveals that, instead of serving tactile and exploratory functions during navigation in the maze, the asymmetric movement of whiskers predicts the behavioral state of the animal.

Materials and Methods

We performed all procedures in accordance with protocols approved by the Charité Universitätsmedizin Berlin and the Berlin Landesamt für Gesundheit und Soziales for the care and use of laboratory animals.

Surgery.

Five adult male mice on a C57BL/6 background (weighing 25–32 g) were used in these experiments. Animals were anesthetized with ketamine/xylazine (90 mg/kg ketamine and 10 mg/kg xylazine) to prepare them for head fixation. A lightweight aluminum headpost was attached using a mixture of Rely-X cement and Jet acrylic black cement. Animals were monitored during recovery and were given antibiotics (enrofloxacin) and analgesics (buprenorphine and carprofen).

Air-Track plus maze.

The details of the custom-made plus maze, air-table, and monitoring system for detecting the location and position of the plus maze have been published previously (Nashaat et al., 2016). Here we used a clear Plexiglas air table mounted on aluminum legs, on which we placed a 3D printed circular platform 30 cm in diameter, that was shaped into a plus maze, where each lane of the maze was 10 cm long, 4 cm wide, and 3 cm high, and the center of the maze, the “rotation area,” was 10 cm in diameter (see Fig. 1A). The walls of the lanes had different textures. Some lanes had smooth walls; others had walls with vertical evenly spaced raised indentations. Mice were not trained to discriminate between the different textures of the walls; they were simply exposed to them.

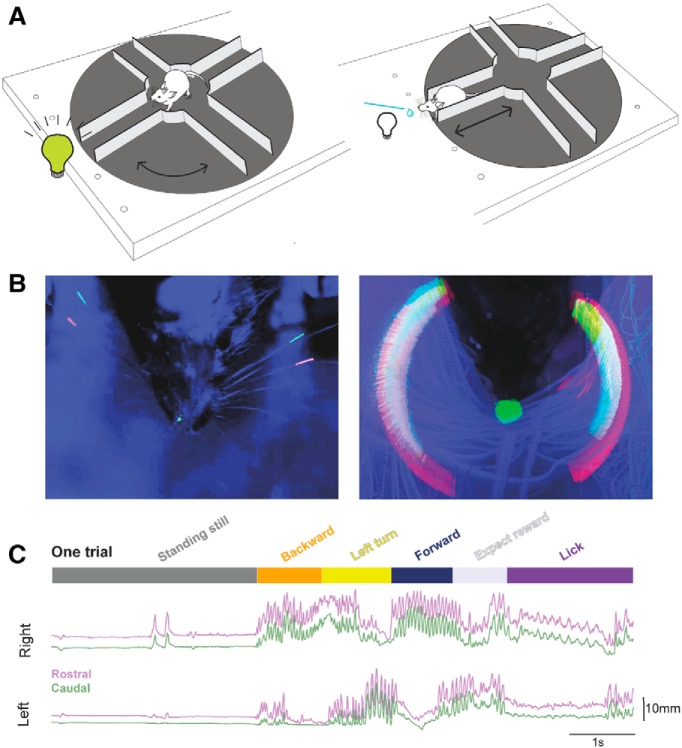

Figure 1.

A trial in an “Air-Track” plus maze with whisker tracking. A, Mice in a plus maze. A schematic of the Air-Track plus maze used for this study is shown here with a head-fixed mouse, navigating the maze. Mice were trained to rotate the maze away from a lane that had an LED light and kept rotating the maze around themselves to find the dark lane, which they entered, and traversed to the end, to obtain a milk reward. The mouse, the LED, and the location of the reward remained fixed in place; all that moved was the maze. B, Maximum intensity projection of a mouse, its painted whiskers, and nose. Left, The painted whiskers on each side of the face and a spot painted on the nose are shown in a single frame. Some of the adjacent, untrimmed whiskers are clearly visible. Right, Maximum intensity projection of 30 trials. The movement of the whiskers around the face of the mouse forms a halo composed of the two colors painted on the whiskers and reflects all the positions the two painted whiskers occupied in the course of 30 trials in the maze. Furthermore, the small spot painted on the nose transforms into a large spot over the course of the 30 trials, indicating that the nose moves a lot as the animal traverses the maze. C, A single decomposed trial of behavior and bilateral whisker motion. We tracked the motion of two whiskers (rostral one painted red, caudal one painted green) bilaterally for the duration of each trial. Once a trial ended, the lick tube was retracted and the LED light turned on, indicating that the mouse was in the wrong lane. At this point, mice often waited at the end of the lane without moving much (top, dark gray bar, standing still) for seconds to minutes, before they exited the lane by going backward (orange). Then the mouse turned and rotated the maze around itself (yellow), until the LED at the end turned off. Mice had to enter the dark lane and move forward into it (dark blue). At the end of the lane, they waited for the lick tube to descend (light gray, expect reward), and licked for the reward (purple). There was a delay between the end of the forward motion and the time for the lick tube to descend to the animal (reward expectation). Two whiskers on each side of the face, one painted red (rostral whisker) and the other painted green (caudal whisker), could be tracked for the extent of the trial. The position of the whiskers over the course of the trial was related to the behavioral epochs. Whisker motion was related to the animal's motion. Whisking was apparent when the animal was moving backward or rotating the maze or going forward. In contrast, when the animal was standing still, whiskers did not move much. And when the animal was licking the reward, the pattern of whisker motion was distinct (low amplitude, rhythmic, bilaterally symmetric) compared with epochs where the mouse was moving the maze.

A pixy camera/Arduino interface tracked the Air-floating maze position with 35 fps resolution. This interface was used to trigger an actuator that moved the reward spout toward the animal (Movie 1), when the animal entered the correct lane. This interface was also used to trigger reward delivery.

A wide-angle view of a mouse performing a single trial in the maze. In this trial, the mouse backs out of a lane, and partially enters a lane where the LED light is still on, then backs out and enters a dark lane, and gets rewarded for correct performance. The nose and two whiskers were painted bilaterally.

Training.

A week after surgical preparation, animals were gradually habituated to handling and head fixation on the plus maze. Mice were kept under water restriction and were monitored daily to ensure a stable body weight not <85% of their initial starting weight. Habituation consisted of head fixing mice in the plus maze while manually giving condensed milk as a reward. Subsequently, over the next few days, animals were guided manually with experimenter nudging and moving the maze under the mouse. Mice learned to rotate the maze, go forward and backward, collecting a reward at the end of a specific lane. The rewarded lane was indicated by an LED (placed at the end of the lane) turning off once the animals were facing the correct lane (see Fig. 1A).

A single complete trial started when the LED turned on. The animal then walked backward to the center of the maze and rotated the maze, orienting itself to the correct lane (indicated by the LED turning off). Then the animal moved forward to the end of this lane, waited for the lick tube to descend, and licked the tube for a reward (Movies 1, 2). A trial could last indefinitely; there was no requirement for the animal to move the maze quickly, or even to keep the maze moving. Thus, individual trials could vary widely in their duration.

A close-up view of a mouse performing a single trial. In this trial, the mouse backs out of a lane, and enters an incorrect lane and waits there, then backs out and enters a dark lane, and gets rewarded for correct performance.

Data acquisition began once animals performed ∼50 trials in a day. Each day, the same two whiskers on each side of the face and a small spot on the nose were painted red or green using UV Glow 95 body paint (see Fig. 1B, left). The tips of the tracked whiskers were trimmed to ensure that they stayed within the 4 cm width of the lane. All other whiskers were left intact.

High-speed video was acquired at 190 Hz, with a Basler camera while the setup was illuminated with two dark lamps. Data were acquired in dark light conditions, where the UV glow colors on whiskers were most clearly visible and distinguishable from the background (see Fig. 1B).

Data selection and image analysis.

We used data from 5 well-trained animals. These mice performed 50–100 trials in a single hour-long session. Data used here were from animals that could move the maze smoothly. Trials were selected for analysis if the whiskers were visible, the paint was glowing uniformly, and if, in the course of the trial, the view of the whiskers was not obstructed by the motion of the animal (Nashaat et al., 2017).

Behavioral states were annotated manually by marking the frames when state transitions occurred. The time point of entry into or exit from the lane was determined by using the position of the eyes, in relationship to the edges of the lanes. The frame on which the animal started moving continuously in one direction was defined as the onset of forward or backward movement.

Data were acquired as Matrox-format video files, each file covering a single trial. These files were converted into H.264-format using ZR view (custom software made by Robert Zollner, Eichenau, Germany). Maximum intensity projections of painted whiskers and nose position within one session were created using ImageJ. From the maximum intensity projection, three individual rectangular regions of interest (ROIs) were selected using the rectangular selection tool in ImageJ. Two ROIs included whiskers, one on each side of the face, and a third one was set around the nose (see Fig. 1B, right). The ROI dimensions were calculated using the Measurement tools in ImageJ.

Tracking.

Whisker and nose position were tracked for each session, and for each animal separately. A custom-made ImageJ plugin (https://github.com/gwappa/Pixylator, version 0.5) or an equivalent Python code (https://github.com/gwappa/python-videobatch, version 1.0) was used to track the pixels inside the ROI selected with ImageJ. For each frame, the pixels that belonged to a particular hue value (red or green) were collected, and the center of mass for the pixels was computed using the brightness/intensity of the pixel. If tracking failed in some frames (i.e., the algorithm failed to detect any matched pixels), because of shadows, movement, or the whisker getting bent under the animal or against a wall, these frames were dropped and linear interpolation was used to ascribe position values in the missing frames. From this analysis, we created masks for each whisker (Movie 3), which tracked the whisker position for the entire session.
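The per-frame tracking step described above can be illustrated with a minimal plain-Python sketch. It is not the Pixylator or python-videobatch code: the function names `weighted_centroid` and `fill_missing` are hypothetical, the hue matching is assumed to have already produced a Boolean mask, and the handling of gaps at the start or end of a session (nearest valid value) is an assumption, since the text does not specify it.

```python
def weighted_centroid(mask, intensity):
    """Return the intensity-weighted (row, col) center of mass of matched pixels.

    mask: 2D list of booleans, True where the pixel hue matched (assumed given).
    intensity: 2D list of brightness values with the same shape as mask.
    Returns None when no pixel matched, i.e., tracking failed for this frame.
    """
    total = row_sum = col_sum = 0.0
    for r, (mask_row, int_row) in enumerate(zip(mask, intensity)):
        for c, (hit, value) in enumerate(zip(mask_row, int_row)):
            if hit:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0:
        return None
    return (row_sum / total, col_sum / total)


def fill_missing(values):
    """Linearly interpolate None entries (dropped frames) in a position trace.

    Gaps at either edge take the nearest valid value (an assumption).
    """
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("no valid samples to interpolate from")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            frac = (i - left) / (right - left)
            filled[i] = values[left] + frac * (values[right] - values[left])
    return filled
```

In this sketch, a frame for which `weighted_centroid` returns `None` would be stored as `None` in the position trace and later filled by `fill_missing`.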

The masks used for each whisker and nose in a single trial.

Experimental design and statistical analysis.

We used data from 5 control animals. Once control data had been collected, 2 animals had their whiskers trimmed, and data were collected from these animals. Data from 10 sessions were used, which included 8 control, and 2 trimmed sessions. We used data from 3 left turning and 2 right-turning animals. The trimmed data are exclusively from left turning animals.

We observed the same sequences of behavior on every trial, in every mouse regardless of whether mice turned right or left, whether whiskers were trimmed or intact. This made it possible to combine data from all animals into a single dataset.

All statistical tests used here were nonparametric. We used Python libraries for Wilcoxon Sign Rank test (available in the scipy library) and for Kruskal–Wallis test (https://gist.github.com/alimuldal/fbb19b73fa25423f02e8). The p values for each comparison are provided in the figure legends.

Analysis.

The following analytical procedures were performed using Python (https://www.python.org/, version 3.7.2), along with several standard modules for scientific data analysis (NumPy, https://www.numpy.org/, version 1.15.4; Scipy, https://www.scipy.org/, version 1.2.0; matplotlib, https://matplotlib.org/, 3.0.2; pandas, https://pandas.pydata.org/, 0.23.4; and scikit-learn, https://scikit-learn.org/stable/, version 0.20.2). Asymmetry of whisker position was assessed on a trial-by-trial basis, using values of normalized positions, which made it possible to compare side-to-side differences in position. In each trial, the most retracted and the most protracted positions became 0 and 1, respectively. The “ΔR-L” values were computed by subtracting the left whisker value from its right counterpart on each time point. Thus, the ΔR-L value ranged from −1 to 1: a value of 1 meant that the right whisker protracted maximally while the left whisker retracted maximally (i.e., the whiskers orient fully leftward). A value of 0 indicated that the left and the right whiskers protract/retract to the same extent. To test asymmetry, a Wilcoxon Sign Rank test was used to test for the left versus right normalized positions.
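As an illustration of the normalization and the ΔR-L computation, the following plain-Python sketch rescales each side within a trial and subtracts left from right. The function names are hypothetical, and the sketch assumes that larger raw position values correspond to more protracted whiskers; the published analysis used NumPy and may differ in detail.

```python
def normalize_trial(positions):
    """Rescale one trial so the most retracted position is 0 and the most protracted is 1.

    Assumes larger raw values mean more protraction.
    """
    lo, hi = min(positions), max(positions)
    if hi == lo:
        raise ValueError("whisker position is constant in this trial")
    return [(p - lo) / (hi - lo) for p in positions]


def delta_right_left(right, left):
    """Return the ΔR-L trace: normalized right position minus normalized left position."""
    right_n = normalize_trial(right)
    left_n = normalize_trial(left)
    return [r - l for r, l in zip(right_n, left_n)]


# Toy trial with two time points: the whiskers swap extremes.
print(delta_right_left([0, 10], [10, 0]))  # [-1.0, 1.0]
```

The paired left and right normalized positions could then be passed to a signed-rank test such as `scipy.stats.wilcoxon`, as described in the statistics section.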

The nose position was normalized in the same manner as whisker position, with the exception that, for the nose, the rightmost and leftmost positions become −1 and 1, respectively.

Normalization of duration for each behavioral state was performed by resampling. For each epoch, we fitted the (normalized) time base from −0.2 to 1.2 with steps being 0.01, where the epoch starts at time 0 and ends at time 1. The data points were resampled from the original time base (i.e., frames) to the normalized time base, using interpolation. The normalized data could then be used for calculating averages and standard error of the mean (SEM) of whisker position in the course of an epoch. We used a Wilcoxon Sign Rank test to assess whether whiskers were asymmetrically positioned in different behavioral states. We computed the R² values and used the Kruskal–Wallis test to assess whether the position of whiskers on each side of the face, or the asymmetry of whisker position best captured the variance of side-to-side movement of the nose.
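The resampling onto a normalized time base can be sketched as follows. This is a minimal plain-Python illustration, not the original code: `resample_epoch` is a hypothetical name, linear interpolation is assumed (the text says only "interpolation"), and normalized times that fall outside the recorded frames are clamped to the first or last frame, which is also an assumption.

```python
def resample_epoch(trace, start, end, t_min=-0.2, t_max=1.2, step=0.01):
    """Resample a per-frame trace onto a normalized epoch time base.

    trace: list with one value per video frame (at least two frames).
    start, end: frame indices at which the epoch begins (time 0) and ends (time 1).
    Returns one value per normalized time point from t_min to t_max.
    """
    n_points = round((t_max - t_min) / step) + 1  # 141 points for the defaults
    resampled = []
    for k in range(n_points):
        t = t_min + k * step
        frame = start + t * (end - start)
        # Clamp to the recorded range (assumption for times outside the video).
        frame = min(max(frame, 0.0), len(trace) - 1.0)
        i = min(int(frame), len(trace) - 2)
        frac = frame - i
        resampled.append(trace[i] + frac * (trace[i + 1] - trace[i]))
    return resampled
```

Because every epoch ends up with the same number of samples, traces from epochs of different durations can be averaged point by point to obtain the mean and SEM.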

Calculation of whisking parameters.

Three whisking parameters were calculated from the whisker position traces: set point, amplitude of whisking, and frequency. Set points of whisker position and amplitude of whisking were computed directly from whisker position traces, using a 200 ms sliding window around each time point. Within this window, the “set point” was defined as the minimum (the most retracted) value while the “amplitude” was defined as the difference between the maximum (the most protracted) and the minimum values (the set point). The sliding-window algorithm was based on the Bottleneck python module (https://github.com/kwgoodman/bottleneck, version 1.2.1).
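A minimal sketch of the sliding-window definitions of set point and amplitude is given below. It is written in plain Python as a stand-in for the Bottleneck-based implementation; the function name is hypothetical, the window is assumed to be centered on each time point, and the window is simply truncated at the edges of the trace, which may differ from the original handling.

```python
def set_point_and_amplitude(trace, fs=190.0, window_s=0.2):
    """Compute sliding-window set point and whisking amplitude.

    trace: whisker position per frame (larger values assumed more protracted).
    fs: frame rate in Hz (190 Hz in the acquisition described above).
    window_s: window length in seconds (200 ms).
    Returns (set_points, amplitudes), each with one value per frame.
    """
    half = int(round(window_s * fs / 2))  # 19 frames on each side at 190 Hz
    set_points, amplitudes = [], []
    for i in range(len(trace)):
        window = trace[max(0, i - half): i + half + 1]  # truncated at the edges
        lo, hi = min(window), max(window)
        set_points.append(lo)        # most retracted value within the window
        amplitudes.append(hi - lo)   # most protracted minus most retracted
    return set_points, amplitudes
```

For long recordings, a dedicated moving-window routine (as used in the original analysis) would be considerably faster than this explicit loop.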

The frequency components were estimated from time-varying power spectra obtained through wavelet transformation (Morlet wavelet where the frequency constant was set at 6 for which we used the “wavelets” python module: https://github.com/aaren/wavelets, commit a213d7c3). The power spectra data between 5 and 35 Hz derived from each animal were pooled (movement data from both sides were used). Three frequency components were estimated using a non-negative matrix factorization. Here the “decomposition.NMF” class of the scikit-learn module without L1 regularization was used. The modal values of the low-, medium-, and high-frequency components for each animal were ∼5–8, 8–15, and 15–25 Hz, respectively. The power of each frequency component was defined as the coefficient of the component of the power spectrum at a given time point, multiplied by the power of the component.

Classification analysis.

For classification and decoding, we used 5 motion parameters taken from both the left and the right whiskers (10 parameters in total): the left and right whisking set points, the left and right whisking amplitudes, and the power of the 3 frequency components for left and the right whiskers (for methods used to extract these parameters, see previous sections). Before inputting these parameters to the classifier, the right and left whisker parameters were mixed and transformed into “offset” and “asymmetry” parameters. The offset and asymmetry parameters were computed for each of the five whisking parameters (referred to in general as variable X here). The values for each side XLeft and XRight were computed for each time point, and these values in turn were used to derive the offset value Xoffset and an asymmetry value XAsymmetry, using the following equations (in case of the left-turning animals): Xoffset = (XLeft + XRight)/2, and XAsymmetry = (XLeft − XRight)/2. For right-turning animals, the sign of XAsymmetry was inverted. A total of 10 parameters were generated and used for analysis: the offset and the asymmetry of whisking set point, the offset and asymmetry of whisking amplitude, and the offset and asymmetry of the three frequency components of whisking. We computed these parameters based on each of the individual high-speed videos, acquired at 190 Hz.
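The transformation into offset and asymmetry parameters can be written as a short sketch. The function name and the `turn_direction` argument are hypothetical; the arithmetic follows the two equations given above, including the sign inversion for right-turning animals.

```python
def offset_and_asymmetry(x_left, x_right, turn_direction="left"):
    """Convert left/right traces of one whisking parameter into offset and asymmetry.

    x_left, x_right: per-frame values of the same parameter on each side.
    turn_direction: "left" or "right"; the asymmetry sign is inverted for
    right-turning animals.
    """
    if turn_direction not in ("left", "right"):
        raise ValueError("turn_direction must be 'left' or 'right'")
    sign = 1.0 if turn_direction == "left" else -1.0
    offset = [(l + r) / 2 for l, r in zip(x_left, x_right)]
    asymmetry = [sign * (l - r) / 2 for l, r in zip(x_left, x_right)]
    return offset, asymmetry


offset, asymmetry = offset_and_asymmetry([1.0, 0.5], [0.0, 0.5])
print(offset, asymmetry)  # [0.5, 0.5] [0.5, 0.0]
```

Applying this to each of the five whisking parameters yields the 10 values per frame that are passed to the classifier.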

We used naive-Bayes classifiers with Gaussian priors that predicted the animal's behavioral state based on the 10 parameters of whisker motion described above. The “naive_bayes.GaussianNB” class of scikit-learn was used with default parameter settings. Because the labeling of whiskers varied across sessions, a classifier was trained to predict the behavior of each animal during each daily session. For training the classifier, the 10 parameters were first pooled for all trials during a session. To avoid selecting periods where the animal was transitioning from one epoch to another, we pooled time points from the midpoint of an epoch and included just the middle 80% of each behavioral epoch. Classifiers were trained with 500 randomly chosen video frames for each epoch. In most cases, single time points (i.e., video frames) were not resampled during the selection process; the only exception was if the total number of time points for the epoch was <500. This was an issue in two sessions: one for a Forward epoch and another for an Expect-reward epoch.

The accuracy of the classifier was tested by comparing predictions against manual annotation. First, we let the naive-Bayes classifier predict the behavioral epoch of randomly chosen video frames based on the 10 parameters of whisker motion. For this analysis, 200 frames were randomly chosen from each behavioral epoch. These sets of frames could overlap with those used for training the classifier. Then the prediction of the classifier was tested against the manual annotation. Finally, the probability for each behavioral state was computed using the “predict_proba” method of the “GaussianNB” class. We used 20 iterations of training and testing a classifier for each session (i.e., randomly picking up 500 and 200 video frames per epoch for training and testing, respectively). The scores of the 20 classifiers built to classify one session were averaged.

One caveat in performing this classification was that the whisker motion changed dramatically in the course of each behavioral epoch; it was different in the first and second halves of many behavioral epochs. To avoid underfitting, the classifier was trained to predict “half-epochs” (e.g., Standing-still first half, Standing-still second half, Backward first half, etc.). The output from the classifier was then merged into full-epochs: a classifier that returned “Turn first half” as an output was said to have categorized the input parameter set to be in the Turn state. The probability of being in the Turn state was computed by summing the probabilities, for the “Turn first half” and the “Turn second half” half-epochs.
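The merging of half-epoch outputs into full epochs can be sketched as below. The label strings follow the examples in the text ("Turn first half", "Turn second half"), but the exact label format, the function names, and the dictionary representation of the classifier output are assumptions made for illustration.

```python
SUFFIXES = (" first half", " second half")


def full_state(half_label):
    """Map a half-epoch label such as 'Turn first half' to its full epoch, 'Turn'."""
    for suffix in SUFFIXES:
        if half_label.endswith(suffix):
            return half_label[: -len(suffix)]
    raise ValueError("not a half-epoch label: " + half_label)


def merge_half_epochs(half_probs):
    """Sum the probabilities of the two half-epochs belonging to each full epoch."""
    merged = {}
    for label, p in half_probs.items():
        state = full_state(label)
        merged[state] = merged.get(state, 0.0) + p
    return merged


def predicted_state(half_probs):
    """Return the full epoch of the most probable half-epoch (the classifier output)."""
    return full_state(max(half_probs, key=half_probs.get))


probs = {
    "Turn first half": 0.30, "Turn second half": 0.30,
    "Lick first half": 0.35, "Lick second half": 0.05,
}
print(predicted_state(probs))    # Lick (the single most probable half-epoch)
print(merge_half_epochs(probs))  # Turn is about 0.6, Lick about 0.4
```

The toy example shows that the categorical prediction (taken from the most probable half-epoch) and the summed state probability are separate quantities and need not agree.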

As an estimate of accuracy of a classifier, we used the fraction of successful predictions, denoted as R, against the 200 video frames used for testing. Thus, the successful predictions of a classifier trained with the actual dataset, Rdata, could be computed as follows: Rdata = Ncorrect/(Nincorrect + Ncorrect), where Ncorrect and Nincorrect denote the number of successfully and erroneously predicted video frames, and Ncorrect + Nincorrect = 200. To estimate the performance of a classifier (i.e., how well it behaved above the chance level), we trained another classifier based on a randomized dataset where the manual annotation of each video frame was shuffled in place. The success rate of the resulting classifier without having the true behavioral annotation, R*annotation, was used as an estimate of the chance level. The contribution of all the 10 parameters of whisker motion was defined as the difference Rdata − R*annotation.

Similarly, to estimate the contribution of an aspect of whisker motion, we trained classifiers with a dataset where the corresponding parameter(s) was shuffled, and computed the success rate, R*param. The contribution of the parameter was then defined as the difference Rdata − R*param. For example, to estimate the contribution of whisking set points, we trained classifiers with a dataset where the offset and the asymmetry values of set points were shuffled separately, to obtain the success rate without having the true set point values, R*set point. Then the contribution of set points to accuracy of classifiers was computed as Rdata − R*set point. Likewise, to estimate the contribution of offset (i.e., the offset of whisking set points, whisking amplitudes, and the frequency components), we computed the success rate R*offset based on a dataset where all of the 5 offset values were shuffled separately, and calculated the difference Rdata − R*offset.
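The shuffle-based estimates of chance level and parameter contribution can be illustrated with a self-contained sketch on synthetic data. Several things here are assumptions rather than the original method: a tiny hand-written Gaussian naive-Bayes model stands in for scikit-learn's `GaussianNB`, the two-feature dataset is invented, training and test frames are kept disjoint for simplicity (the original allowed overlap), and shuffling is applied to the whole dataset before frames are drawn.

```python
import math
import random


def fit_gnb(X, y):
    """Minimal Gaussian naive Bayes: per-class log prior, feature means, variances."""
    model = {}
    for label in sorted(set(y)):
        rows = [x for x, t in zip(X, y) if t == label]
        cols = list(zip(*rows))
        means = [sum(c) / len(c) for c in cols]
        variances = [sum((v - m) ** 2 for v in c) / len(c) + 1e-9
                     for c, m in zip(cols, means)]
        model[label] = (math.log(len(rows) / len(y)), means, variances)
    return model


def predict_gnb(model, x):
    """Return the label with the highest log posterior for feature vector x."""
    def log_posterior(label):
        log_prior, means, variances = model[label]
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances))
    return max(model, key=log_posterior)


def success_rate(X, y, rng, n_test=200):
    """R = N_correct / (N_correct + N_incorrect) on n_test randomly chosen frames."""
    idx = list(range(len(y)))
    rng.shuffle(idx)
    test, train = idx[:n_test], idx[n_test:]
    model = fit_gnb([X[i] for i in train], [y[i] for i in train])
    n_correct = sum(predict_gnb(model, X[i]) == y[i] for i in test)
    return n_correct / n_test


def shuffle_columns(X, columns, rng):
    """Copy X with each listed column permuted independently across frames."""
    X = [list(row) for row in X]
    for c in columns:
        col = [row[c] for row in X]
        rng.shuffle(col)
        for row, v in zip(X, col):
            row[c] = v
    return X


rng = random.Random(0)
# Synthetic stand-in data: feature 0 separates the two states, feature 1 is noise.
y = ["Turn"] * 400 + ["Lick"] * 400
X = [[rng.gauss(4.0 if t == "Turn" else 0.0, 1.0), rng.gauss(0.0, 1.0)] for t in y]

r_data = success_rate(X, y, rng)
y_shuffled = y[:]
rng.shuffle(y_shuffled)
r_annotation = success_rate(X, y_shuffled, rng)  # chance-level estimate
r_param0 = success_rate(shuffle_columns(X, [0], rng), y, rng)
r_param1 = success_rate(shuffle_columns(X, [1], rng), y, rng)

print("contribution of all parameters:", r_data - r_annotation)
print("contribution of feature 0:", r_data - r_param0)
print("contribution of feature 1:", r_data - r_param1)
```

In this toy setting, shuffling the informative feature should reduce the success rate to roughly the chance level, whereas shuffling the noise feature should leave it nearly unchanged, which is the logic behind the contribution measure.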

Results

A single trial in the Air-Track plus maze

The behavior in the maze was simple, self-initiated, and had no time constraints. Mice decided when to begin a trial and how long to spend on a trial (Fig. 1C; Movies 1, 2). At the beginning of a trial, when mice were at the end of a lane, just after they had obtained a reward, the LED was turned on. Mice then had to move backward out of the lane to reach the center of the maze. When they reached the center of the maze, they turned it around themselves to find a dark lane, a lane in which the LED light was off. Mice then entered this lane: moved forward in it, all the way to the end, where they waited for the reward tube to descend down toward them and then they licked the tube for the milk reward. When mice entered incorrect lanes (i.e., the lane where the LED light was on), they were not rewarded and had to move out of the incorrect lane, rotate the maze, and find the dark lane (Movies 1, 2). Over the course of training, mice learned to steer the maze and to select the correct lane quickly in each trial. During navigation, the behavior of mice could be divided into distinct states, consistent enough to be classified into epochs (Fig. 1C): (1) the “Standing still” epoch that marked the beginning of a new trial, where the mouse stood still at the end of a lane, typically after previous reward delivery; (2) the “Backward” epoch, where the animal moved backward out of the lane; (3) the “Turn” epoch, where the animal entered the center of the maze, and rotated the maze left or right around itself until it chose a new lane; (4) the “Forward” epoch, where the mouse moved forward into the new lane; (5) the “Expect reward” epoch, when the mouse waited at the end of a lane for the reward tube to descend; and (6) the “Lick” epoch, where, if the chosen lane was correct, the reward tube descended toward the animal. This epoch lasted until the tube was retracted up and away from the animal.

Stereotypical whisking in each behavioral epoch

We tracked the movement of C1 and gamma whiskers as mice (n = 5 animals) navigated the plus maze (Movies 1, 2, 3). Whiskers on one side of the face in general moved to the same extent, and they were at similar set points with respect to each other in all behavioral epochs over the course of a trial. Therefore, for the rest of our analysis, we compare only the motion of a single whisker, the rostral C1 whisker that was painted red on each side of the face (Fig. 1B).

In general, whisker motion showed four stereotypical characteristics in different behavioral stages (Movie 4): (1) When mice moved in the maze, large-amplitude (high-frequency) whisking with a high degree of asymmetry between the sides of the face was evident (i.e., in backward, forward, or turning epochs) (Figs. 1C, 2). (2) In contrast, when mice were simply standing still in the maze, whisker movement was negligible and the set point of whiskers was retracted in this epoch (Standing still, Figs. 1C, 2). (3) When mice were standing still but were licking the reward tube, whisking was regular, and occurred at lower frequency than when mice were moving. When mice were standing still, but were licking, the set point for whiskers was also protracted compared with times when mice were at the end of the lane and standing still (Fig. 2). (4) Finally, during reward expectation, whiskers were protracted and showed high-amplitude and high-frequency whisking.

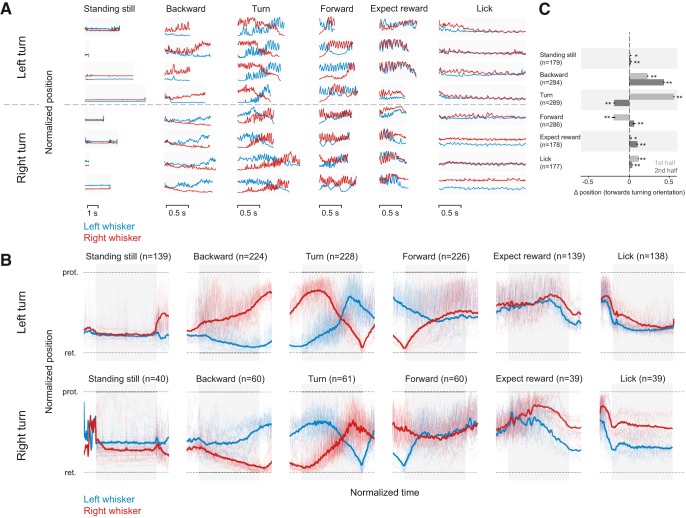

Figure 2.

Patterns of whisker motion during different behavioral epochs. A, Normalized whisker position in single trials and behavioral epochs. The position of whiskers on each side of the face (red, right side; blue, left side) in the course of each behavioral epoch (without averaging or smoothing), taken on different days are shown from 3 left turning animals (top, above the dashed line) and 2 right turning animals (below the dashed line). A trial was decomposed into behavioral epochs selected for analysis. B, Normalized average whisker position in different behavioral epochs. Whisker asymmetry in different behavioral epochs averaged during behavior for left turning (top) and right turning (bottom) mice. Normalized whisker position on left and right side, for left-turning (n = 3) and right-turning (n = 2) animals. While whiskers were retracted almost equally on both sides of the face, before the trial began, the average normalized data reveal that, as mice begin to go backward, whisker positioning becomes asymmetric and the asymmetry was related to the direction of turn mice were about to impose on the maze. When mice moved the maze to the right (bottom), the positioning of whiskers was asymmetric, and a mirror image of how whiskers were positioned when mice turned the platform to the left (top). Blurred red and blue traces in the background represent whisker positions in every single trial. The epochs used for analysis are defined by the gray bars behind the average whisker position traces. C, Average whisker position in the first and second half of behavioral epoch. The average side-to-side position of whiskers changed significantly in all behavioral epochs combined for right and left turning trials. Wilcoxon Sign Rank test: *p < 0.05; **p < 0.01. The normalized mean asymmetry for the first half of each epoch was 0.02 (SEM 0.01) for standing still, 0.23 (SEM 0.01) for backward, 0.56 (SEM 0.01) for turn, −0.2 (SEM 0.02) for forward, 0.023 (SEM 0.01) for expect reward, and 0.12 (SEM 0.01) for lick. The normalized mean asymmetry for the second half of each epoch was 0.02 (SEM 0.01) for standing still, 0.432 (SEM 0.01) for backward, −0.19 (SEM 0.01) for turn, 0.06 (SEM 0.02) for forward, 0.1 (SEM 0.01) for expect reward, and 0.04 (SEM 0.06) for lick. In the right turning animals (data are not shown), the mean values were −0.65 (SEM 0.02) for standing still, −0.14 (SEM 0.02) for backward, −0.46 (SEM 0.01) for turn, 0.08 (SEM 0.02) for forward, 0.08 (SEM 0.02) for expect reward, and 0.27 (SEM 0.03) for lick in the first half, and −0.09 (SEM 0.02) for standing still, −0.31 (SEM 0.02) for backward, 0.119 (SEM 0.02) for turn, −0.03 (SEM 0.03) for forward, 0.28 (SEM 0.03) for expect reward, and 0.26 (SEM 0.04) for lick in the second half. Light red and blue represent the single-trial data that were used to compute the average whisker positions on two sides of the face. Gray bar represents the epochs used for analysis.

Top view of whisker positions in each distinct behavioral epoch.

Whisking was so stereotyped that it served as a “signature” of each behavioral epoch (Fig. 2). Even though the duration of a behavior (e.g., standing still or moving backward) varied from trial to trial, the bilateral positioning of whiskers reflected the behavior.

The whisker position traces from each trial were averaged after normalizing for time and bilateral extent of whisker movement (to compare whisker position on the two sides of the face) (Fig. 2B). Averaging smoothed out the rhythmic whisking motion (i.e., averaging removed the fast components of whisking). These averages confirmed what was evident in the raw data: each behavioral epoch had its own whisking signature, and this was independent of animals, sessions, and trials.
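The normalization and averaging described above can be illustrated with a minimal sketch. It assumes that each epoch's whisker trace is a list of angles sampled at a fixed rate. The function names, the 100 resampled points, the linear interpolation, and the shared bilateral range are illustrative assumptions, not the analysis code used in this study.

```python
def resample(trace, n_points=100):
    """Linearly interpolate a trace onto n_points evenly spaced samples
    (time normalization, so epochs of different duration can be averaged)."""
    if n_points < 2:
        raise ValueError("n_points must be at least 2")
    if len(trace) == 1:
        return [float(trace[0])] * n_points
    out = []
    for i in range(n_points):
        pos = i * (len(trace) - 1) / (n_points - 1)
        lo = int(pos)
        hi = min(lo + 1, len(trace) - 1)
        frac = pos - lo
        out.append(trace[lo] * (1 - frac) + trace[hi] * frac)
    return out


def normalize_bilateral(left, right):
    """Scale both sides by their shared range (assumption):
    0 = most retracted position, 1 = most protracted position."""
    lo = min(min(left), min(right))
    hi = max(max(left), max(right))
    span = (hi - lo) or 1.0  # avoid division by zero for flat traces
    return [(v - lo) / span for v in left], [(v - lo) / span for v in right]


def average_traces(traces):
    """Point-by-point mean across equally long, time-normalized traces."""
    return [sum(vals) / len(vals) for vals in zip(*traces)]
```

Because every resampled trace has the same length, averaging across trials cancels the fast rhythmic component, whose phase varies from trial to trial, and leaves the slower positional signature of each epoch.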

Bilateral asymmetry signals turn direction

Mice had a strong rotation preference; they rotated the maze left or right, and rarely turned the maze in both directions. The mouse's decision to turn right or left was reflected in the asymmetric positioning of whiskers throughout a trial, which was evident very early during the backward movement epochs (n = 284; 224 left turning epochs + 60 right turning). The asymmetry increased as the animal reached the center of the maze. In all trials, when mice reached the center of the maze, animals that turned left retracted their whiskers on the left side to their full extent while simultaneously protracting whiskers on the right side (Fig. 2A,B, top; Movies 1, 2). In contrast, animals that turned right retracted their right whiskers, and protracted their left ones, as they reached the center of the maze (Fig. 2A, bottom). Whiskers on the protracted side of the face showed high-amplitude whisking motion, whereas whiskers on the other side of the face were retracted and displayed almost no rhythmic whisking motion (Fig. 2; Movies 1, 2). The asymmetry that began at or just before the onset of backward movement increased while the animal was approaching the center of the maze and flipped during the turn (Fig. 2). There was an inversion in the whisker position as the animals moved forward into a lane: whiskers that were completely protracted during backward movement were retracted, and vice versa. This inversion in whisker position was stereotypic, occurring automatically in every turn epoch (n = 289; 228 left turning epochs + 61 right turning). Mice maintained this asymmetric position as they moved forward into the lane, primarily as a result of whisker contact with the wall on one side of their face. The asymmetry gradually diminished as mice moved further forward into the lane (n = 286 forward; 226 left + 60 right).

To quantify these changes in asymmetry in the course of each behavioral epoch, we divided each behavioral epoch in two and compared the normalized right whisker position with the normalized left whisker position (ΔR-L) in the first and second half of each behavioral epoch. In all behavioral epochs, in both right and left turning animals, there was significant whisker asymmetry (p < 0.05, Wilcoxon Sign Rank test, n = 139–228 epochs for left turning animals, and n = 40–61 epochs for right turning animals; Fig. 2C), and asymmetric positioning changed significantly (p < 0.01, Wilcoxon Sign Rank test) when whisker asymmetry in the first and second half of each behavioral epoch was compared (Fig. 2C; data for right and left turning animals are binned together). There is a caveat to note here: the small difference in side-to-side positioning of whiskers during reward expectation and licking arose in part from the direction of descent of the lick tube (Movies 1, 2). Independently of whether mice propelled the maze right or left, the reward tube descended toward the left side of their face; consequently, during licking and reward expectation, the side-to-side asymmetry in all animals shows a similar pattern (compare the whisker position for the left and right turning mice during the reward expectation and lick epochs in Fig. 2B). By the time the animal finished licking the reward tube, and decided to move backward in the lane, the lick tube-related asymmetry was no longer evident; instead, a small but consistent and significant (p < 0.05, Wilcoxon Sign Rank test) difference in side-to-side position of whiskers was evident (compare the left and right turning data, in standing still epochs in Fig. 2A,B). Together, our results suggest that whisker asymmetry is a constant feature, and whiskers are actively repositioned as mice move through the maze.
Whisker position at the beginning of a trial can predict decisions mice make in imposing a movement direction on the maze, and the extent and direction of the asymmetry can effectively map the position of the animal in the maze.
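The half-epoch comparison can be sketched as follows. This is a minimal illustration, assuming normalized left and right traces of equal length for a single epoch. The function name and the treatment of odd-length epochs (the extra sample goes to the second half) are assumptions.

```python
def half_epoch_asymmetry(left, right):
    """Return the mean right-minus-left position (delta R-L) for the
    first and the second half of one behavioral epoch."""
    if len(left) != len(right) or len(left) < 2:
        raise ValueError("need two equally long traces with at least 2 samples")
    diff = [r - l for l, r in zip(left, right)]
    mid = len(diff) // 2
    first, second = diff[:mid], diff[mid:]
    return sum(first) / len(first), sum(second) / len(second)


# Hypothetical epoch in which the right side protracts progressively
first_half, second_half = half_epoch_asymmetry(
    left=[0.0, 0.0, 0.0, 0.0],
    right=[0.2, 0.4, 0.6, 0.8],
)
```

Collecting the paired first-half and second-half values across epochs gives the samples for a paired signed-rank test; one readily available implementation is `scipy.stats.wilcoxon`, although the software used for the statistics here is not specified in this section.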

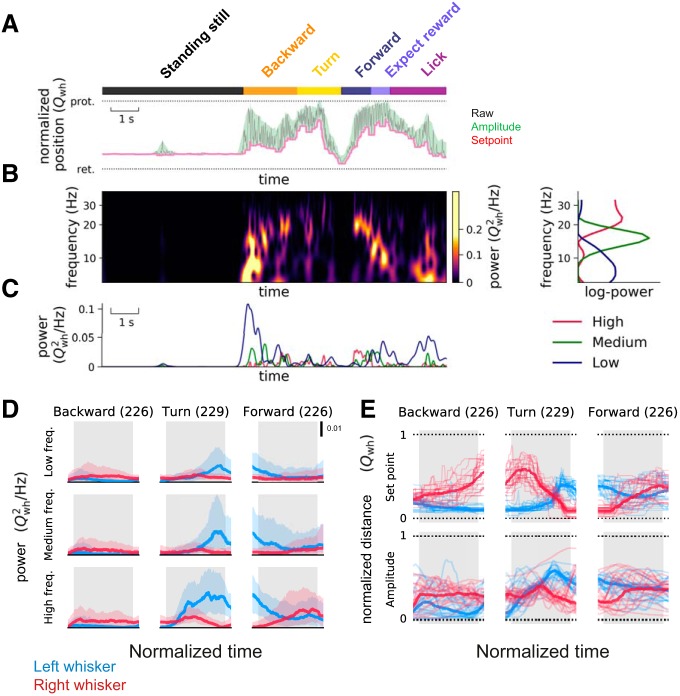

In principle, whisker position asymmetry could arise from changes in amplitude or frequency of whisking, or from changes in set point (Fig. 3). To examine these possibilities, the time-varying power spectra of whisking, the amplitude of whisking, and the set point of whisker position were calculated. The time-varying power spectra revealed multiple frequency bands: one band that spanned 0–8 Hz, another that spanned 12–30 Hz, and an intermediate band from 8 to 12 Hz (Fig. 3A–D). When mice were standing still, they did not whisk much; as they began moving backward, whisking frequency was low (Fig. 3C); but as they continued moving, the frequency components in whisking increased.
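As a rough illustration of how power in the three frequency bands could be extracted from one window of a whisker trace, the sketch below uses a plain discrete Fourier transform. The band edges follow the text; the half-open intervals, the function name, and the mean subtraction are assumptions, and the sampling rate `fs` must exceed 60 Hz to resolve the upper band.

```python
import cmath
import math

# Band edges in Hz, taken from the text; half-open intervals are an assumption.
BANDS = {"low": (0.0, 8.0), "medium": (8.0, 12.0), "high": (12.0, 30.0)}


def band_power(window, fs):
    """Sum the DFT power of one window of a whisker trace within each band."""
    n = len(window)
    mean = sum(window) / n
    centered = [v - mean for v in window]  # remove the constant (DC) component
    power = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                power[name] += abs(coeff) ** 2
    return power
```

Applying `band_power` to successive overlapping windows yields a time-varying estimate for each band, and evaluating it separately for the left and right traces gives the side-to-side difference in power.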

Figure 3.

Behavioral state dependency and whisking frequency, set point, and amplitude. A, Extraction of set point and amplitude of whisking. The behavioral epochs, standing still (black rectangle), backward (yellow rectangle), etc., were annotated in the same way as in Figure 1. A 200 ms sliding window was applied to the whisking trace (gray) to detect the set points (minima in the window; pink line) and amplitudes (difference between maxima and minima; green) of whisking. The normalized whisker positions (Qwh) were plotted with the most retracted (ret) position set to 0 and the most protracted (prot) whisker position set to 1. B, Extraction of time-varying power spectra of whisker motion. The time-varying power spectra of whisker motion were calculated (left), and 3 frequency bands evident in the power spectra, high-frequency (the modes typically located at 12–30 Hz, red), medium-frequency (8–12 Hz modes, green), and the low-frequency (0–8 Hz modes, blue) components, were plotted on the right. C, Behavioral state and time-varying frequency components. The three time-varying frequency components plotted over the course of a trial show that, for a single trial plotted here, the high-frequency components were more common as mice finished turning, and the low frequencies were common as the mouse began to move backward. D, Whisking frequency on the right and left side during different behavioral states. The side-to-side difference in power in the 3 frequency components of whisker motion was highest as animals turned the maze, and as they entered a new lane. Shaded regions represent the 25th and 75th percentiles of the values across all sessions from different animals (n = 5 sessions from 3 left-turning animals; numbers in parentheses indicate total number of epochs analyzed). The epochs used for analysis are defined by the gray bars in the background. Data shown here are from left-turning animals. 
E, Set point of whiskers and amplitude of whisker motion during different behavioral states. When mice were moving backward and/or turning, asymmetry in set points and amplitude developed differently over time (compare middle and bottom plots; the whisker position plots in the top row, taken from Fig. 2, are shown for reference). Thick lines indicate median values. Thin lines indicate the values from 20 representative epochs across all sessions from different animals (n = 5 sessions from 3 left-turning animals; numbers in parentheses indicate total number of epochs analyzed). In these behavioral epochs, the asymmetry in whisker positions can be attributed to asymmetry in set points. The normalized mean asymmetry for set point of whisker position in the first half of each epoch was 0.17 (SEM 0.006) for backward, 0.47 (SEM 0.007) for turn, and −0.13 (SEM 0.01) for forward motion. The asymmetry for the set point in the second half of each epoch was 0.31 (SEM 0.007), −0.03 (SEM 0.006), and 0.05 (SEM 0.01) for backward, turn, and forward motion, respectively. Similarly, asymmetry for the amplitude of whisking in the first half of each epoch was 0.12 (SEM 0.008), 0.01 (SEM 0.007), and −0.12 (SEM 0.02) for backward, turn, and forward motion, respectively. Asymmetry in amplitude of whisking for the second half of each epoch was 0.22 (SEM 0.007), −0.24 (SEM 0.006), and −0.035 (SEM 0.01) for backward, turn, and forward motion, respectively.

The amplitude of whisking (Fig. 3A, green) and the set point (Fig. 3A, pink) both reflected this asymmetry. In the course of the backward movement (n = 226 left turning epochs) and the turn (n = 229), the set point and amplitude traces showed distinct patterns, suggesting that these aspects of whisking change independently during movement in the maze (Fig. 3E). When mice moved backward, the asymmetry in set point continued to increase (i.e., mice protracted one side more). When mice turned, the set point and amplitude of whisking changed at different times in the behavior (Fig. 3E). When mice moved forward (n = 226) into a lane, both the set point and amplitude of whisking changed at the same time and in the same direction (Fig. 3E, right). Together, these results suggest that the bilateral amplitude of whisking and the set point of whisker position are actively and independently controlled, and independently contribute to asymmetry.
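The sliding-window extraction of set point and amplitude described in the Figure 3A legend (200 ms window; set point = window minimum; amplitude = maximum minus minimum) can be sketched as below. The centered window, the edge handling, and the function name are assumptions rather than details taken from the study.

```python
def setpoint_and_amplitude(trace, fs, window_s=0.2):
    """Sliding-window set point (window minimum) and whisking amplitude
    (window maximum minus minimum) for a normalized whisker trace.

    trace    : whisker positions (0 = most retracted, 1 = most protracted)
    fs       : sampling rate in Hz
    window_s : window length in seconds (200 ms, as in the Figure 3A legend)
    """
    half = max(1, int(round(window_s * fs / 2)))  # half-width in samples
    setpoints, amplitudes = [], []
    for i in range(len(trace)):
        # Centered window (assumption), truncated at the ends of the trace
        win = trace[max(0, i - half): i + half + 1]
        lo, hi = min(win), max(win)
        setpoints.append(lo)
        amplitudes.append(hi - lo)
    return setpoints, amplitudes
```

Running the function separately on the left and right traces and subtracting the outputs (right minus left) gives the set-point asymmetry and amplitude asymmetry, which can then be followed independently over the course of an epoch.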

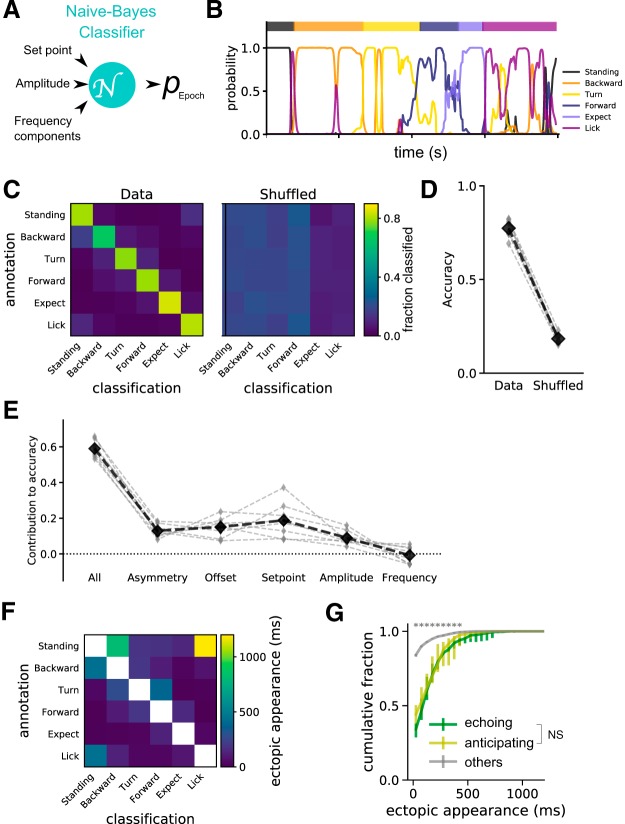

Decoding behavioral state from whisking parameters

The behaviors in the plus maze are complex: mice can move rapidly or slowly, and behavioral epochs can be interrupted by pauses when the mouse takes a time out from the behavior. The time taken to complete a trial can vary widely. Nevertheless, because whisking in each epoch is so stereotyped (every trial shows the same dynamics of whisker movement), it is possible to use Bayesian analysis to decode the behavior of the mouse from the whisking parameters (i.e., frequency, amplitude, and set point). To examine whether behavioral state could be predicted from one or more whisking parameters, we used a naive-Bayes classifier, where each feature of whisking (set point, amplitude, and frequency) was examined independently. Each parameter's contribution to the correct classification of the behavioral state was assessed (Fig. 4A). For a single trial, the probability of correctly classifying each behavioral state from whisking parameters was high (Fig. 4B). But behaviors overlap with each other: when the mouse is “Licking,” it is also “Standing still”; when the mouse is “Turning,” it is also going “Forward” or “Backward.” When behaviors overlap, whisking parameters also overlap, which makes it harder to correctly classify behavior from the whisking parameters. Classifiers trained on the actual dataset correctly classified behavioral states 80% of the time (Fig. 4C, left matrix), whereas classifiers trained on shuffled annotations were correct only 20% of the time (Fig. 4C, right matrix), which corresponds to the chance level (Fig. 4D). These data suggest that whisking parameters can predict where the mouse is and what the mouse is doing in a maze.
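To make the decoding approach concrete, the following is a minimal sketch of a naive-Bayes classifier with Gaussian feature distributions. It is not the classifier used in this study: the class name, the variance floor, and the toy feature values and epoch labels in the usage lines are all illustrative assumptions.

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Minimal Gaussian naive-Bayes classifier; each feature (e.g., set point,
    amplitude, frequency) is treated as independent given the behavioral epoch."""

    def fit(self, X, y):
        by_class = defaultdict(list)
        for row, label in zip(X, y):
            by_class[label].append(row)
        self.stats = {}
        for label, rows in by_class.items():
            prior = len(rows) / len(X)
            params = []
            for col in zip(*rows):
                mean = sum(col) / len(col)
                # Small floor keeps the variance strictly positive (assumption)
                var = sum((v - mean) ** 2 for v in col) / len(col) + 1e-6
                params.append((mean, var))
            self.stats[label] = (prior, params)
        return self

    def predict_proba(self, row):
        """Posterior probability of each epoch for one time point."""
        log_post = {}
        for label, (prior, params) in self.stats.items():
            ll = math.log(prior)
            for v, (mean, var) in zip(row, params):
                ll += -0.5 * math.log(2 * math.pi * var) - (v - mean) ** 2 / (2 * var)
            log_post[label] = ll
        top = max(log_post.values())  # subtract the maximum for numerical stability
        unnorm = {k: math.exp(v - top) for k, v in log_post.items()}
        total = sum(unnorm.values())
        return {k: v / total for k, v in unnorm.items()}

    def predict(self, row):
        proba = self.predict_proba(row)
        return max(proba, key=proba.get)


# Hypothetical time points: [set-point asymmetry, amplitude asymmetry]
X = [[0.0, 0.1], [0.1, 0.0], [0.9, 1.0], [1.0, 0.9]]
y = ["standing still", "standing still", "turn", "turn"]
clf = GaussianNaiveBayes().fit(X, y)
```

Calling `clf.predict_proba(row)` for every time point of a trial gives one probability trace per behavioral epoch. Refitting the same classifier on shuffled labels provides the chance-level baseline against which decoding accuracy is compared.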

Figure 4.

Classification, decoding, and prediction of the relationship between behavior state and whisking. A, A naive-Bayes classifier with Gaussian priors was used to output the probability of a specific behavioral state (pEpoch) based on set point, amplitude, and frequency parameters of whisker motion. In total, 20 distinct classifiers were trained for each session. B, The output of probabilities in a representative trial. Individual colored lines indicate the probability output generated by the classifier for each behavioral epoch. The ground-truth behavioral epochs were annotated manually and are shown on the top of the graph. C, Confusion matrices for the classifiers. Classifiers trained with the actual dataset (left matrix) correctly classified behavioral states 80% of the time; those trained with shuffled annotations (right matrix) generated chance-level (20%) accuracy. Rows represent the categories of the manually annotated behavioral epochs used for testing the classifiers. Columns represent the categorical output from the classifier. Colors of each square represent the fraction of time points belonging to each manually annotated behavioral state. D, Accuracy of the classifiers. Gray marks indicate the scores for each session (n = 8 sessions from 5 animals). Black marks indicate the averages across sessions. p = 0.0143 (Wilcoxon Sign Rank test). E, Contribution of individual parameters to accuracy of classifiers. Asymmetry, set point, and amplitude contributed significantly to the ability of classifiers to decode the behavioral state of the animal, whereas whisking frequency generated no additional effect. Each parameter set was randomized individually, and reduction in accuracy was computed for each session. Small gray diamonds represent the changes in accuracy in individual sessions. Black diamonds represent the averages. 
A Kruskal–Wallis test, performed for each combination of parameters, revealed significant effects for asymmetry versus frequency (*p = 0.0363), offset versus frequency (p = 0.0012), and set point versus frequency (***p < 0.0001). p > 0.1 for all other combinations (with the omnibus probability being ***p < 0.0001). F, Misclassifications. The durations (time points) during which the probability was higher than the chance level were plotted. Behaviorally neighboring epochs tended to be misclassified (i.e., there was “ectopic appearance” of whisking patterns related to certain other behavioral epochs). G, Smooth transitions between behavioral epochs. The duration of above-chance probability for neighboring epochs was computed and plotted as cumulative histograms. The effects of “echoing” and “anticipating,” the effects of preceding and following behavioral epochs, respectively, were found to be significantly smaller in bins >500 ms compared with all the other types of ectopic epoch appearances. *p < 0.05 (Kruskal–Wallis test). n = 8 sessions from 5 animals. No significant difference was found between echoing and anticipating epoch appearances (p > 0.05 for all the time bins).

Next, we examined whether asymmetry, set point, amplitude of whisking, or frequency contributed significantly to the ability of classifiers to decode the behavioral state (Fig. 4E). Five parameters of bilateral whisking were examined: the amplitude, set point, frequency, asymmetry, and offset. Asymmetry was defined as the difference in position of whiskers on the two sides of the face, offset was defined as the average position of whiskers on the two sides of the face, the set point was the most retracted position of the whiskers, and the amplitude was the difference between the most retracted and most protracted positions of the whiskers. Our analysis indicated that set point, amplitude, and offset of whisking significantly contributed to the accuracy of decoding behavioral state. Frequency of whisking had a negligible additional effect on decoding behavioral state: it added very little once asymmetry of whisker position, the amplitude of whisking, and set point had been taken into account (Fig. 4E).
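The definitions of asymmetry and offset, and the idea of randomizing one parameter at a time to measure its contribution to decoding accuracy (Fig. 4E), can be sketched as follows. The helper names, the generic `predict` callable, and the fixed random seed are assumptions. Any classifier that maps one feature row to a label could be passed in.

```python
import random


def asymmetry(right, left):
    """Difference in whisker position between the two sides (R - L)."""
    return right - left


def offset(right, left):
    """Average whisker position across the two sides of the face."""
    return (right + left) / 2


def accuracy(predict, X, y):
    """Fraction of time points whose predicted label matches the annotation."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def shuffle_contribution(predict, X, y, feature_index, seed=0):
    """Reduction in accuracy after shuffling one feature across time points.

    X is a list of feature rows (lists); only the chosen column is shuffled,
    so the drop in accuracy estimates how much that parameter contributes.
    """
    rng = random.Random(seed)
    column = [row[feature_index] for row in X]
    rng.shuffle(column)
    X_shuffled = [
        row[:feature_index] + [v] + row[feature_index + 1:]
        for row, v in zip(X, column)
    ]
    return accuracy(predict, X, y) - accuracy(predict, X_shuffled, y)
```

A parameter that carries no additional information, as reported here for whisking frequency, would produce a reduction in accuracy close to zero under this procedure.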

Finally, we looked for ectopic appearances (i.e., unusual occurrences) in the classification by comparing the manually annotated behavioral state with the predicted behavioral state. Decoding of behavior relies on whisking parameters. But if the behaviors mix with each other (i.e., the animal stands still during licking, stops licking during a licking epoch, or stands still during forward or backward motion), then decoding cannot be perfect. When the animal was Standing still, it could also be licking; when it was in the Licking state, it could just be Standing still; and when it was Turning, it could also be moving Forward into a lane (Fig. 4F).

Another issue in decoding arises from the incomplete separation between behavioral epochs. Transitions between behaviors can be incomplete, and there can be an echoing effect (i.e., earlier behavioral epochs affect subsequent ones) or an anticipation effect (i.e., the following epoch affects the one before it) (Fig. 4G). These effects of transitions between states could be minimized if the behavioral epoch lasted at least 500 ms (Fig. 4G).

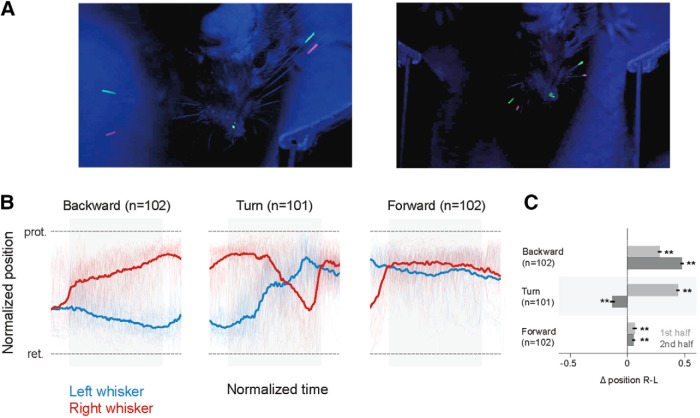

Sensory input and asymmetry

Whiskers are the primary tactile organs of rodents; and in principle, whisker asymmetry could arise from tactile input to the whiskers, especially in the Air-Track system where whisker contact with the walls is a constant factor. To examine whether tactile input from whiskers drives the side-to-side asymmetry, we trimmed all whiskers off bilaterally and painted the remaining whisker stubs (Fig. 5A, right). Mice were able to perform the task without their whiskers (Fig. 5B). Furthermore, in these mice, whisker asymmetry still predicted the direction that the animal would move in, and whisker position still varied in a behavioral state-dependent manner. There were still significant differences in direction of asymmetry during backward motion (n = 102 left turning epochs), turning (n = 101 epochs), and forward motion (n = 102 left turning epochs) (p < 0.01 Wilcoxon Sign Rank test, n = 101; Fig. 5C). Even here, as the animal rotates left, in the first half of the turn, the right whisker is protracted; and as mice complete the turn, the left whisker protracts and the right whisker retracts. In the course of the turn, whiskers on the two sides of the face flip their positions. This is similar to what happens when the whiskers are intact (Fig. 2B,C). Trimming abolished side-to-side asymmetry during standing still, licking, and expecting reward. These effects of trimming during licking and reward expectation are in part related to the positioning of the lick tube as it descended toward the left side of the animal. That trimming abolished asymmetry during the standing still epoch was probably related to the small initial asymmetry in this epoch. These results suggest that sensory input is not the sole proximal cause of stereotypical whisker positioning. However, these data cannot rule out the effect of long-term practice with tactile feedback. Mice experience the environment, and are trained, with the full complement of their whiskers, and may learn to position their whiskers in a stereotypical manner.

Figure 5.

Whisker asymmetry was not related to tactile input. A, Single frames of whisker position in normal (left) and trimmed (right) conditions. In left turning mice, when whiskers were trimmed, the whisker stubs could still be tracked; side-to-side asymmetry of whisker position was still evident. B, Normalized average whisker position after trimming in different behavioral epochs. The asymmetric positioning of whiskers was evident when mice began to move backward, and persisted through the trial, as mice moved further backward and made a left turn. C, Mean asymmetry after trimming. Even after trimming, there was significant asymmetry (**Wilcoxon Sign Rank test, p < 0.01, based on n = 102 for backward motion, n = 101 turn, n = 102 forward epochs). The normalized mean asymmetry of whisker position in the first half of each epoch was 0.28 (SEM 0.02) for backward, 0.44 (SEM 0.013) for turn, and 0.06 (SEM 0.02) for forward motion. The normalized mean asymmetry in the second half of each epoch was 0.48 (SEM 0.01) for backward, −0.1 (SEM 0.02) for turn, and 0.06 (SEM 0.01) for forward motion. Light red and blue represent the single-trial data that were used to compute the average whisker positions on two sides of the face. Gray bars in the background of the whisker position traces represent the epochs used for analysis.

Whisker asymmetry in freely moving animals

Earlier work has shown that whisker asymmetry arises and is related to movement of the head (Towal and Hartmann, 2006; Grant et al., 2009; Schroeder and Ritt, 2016). These earlier studies were all in freely moving animals. To examine whether the results we obtained in head-fixed mice were an artifact of head fixation, we tracked whisker motion in 2 freely moving animals (Movie 5). These animals had been previously trained on the plus maze while they were head-fixed. Whisker tracking in freely moving animals was constrained to just the central portion of the maze: that is, just as the mouse backed out of a lane, turned, and entered another lane. In freely moving mice, head movement made tracking of whisker position difficult. Not only did the head move up and down, but mice also often rotated their head from side to side. Nevertheless, in a limited set of anecdotal observations, when accounting for head angle, whisker position was asymmetric in the same direction, at the same places, in freely moving animals as in head-fixed mice. Whiskers on the animal's left side were retracted, and those on the right side protracted, as the mouse exited the lane and began to turn left. Once the mouse was in the center of the maze and turning, the whisker position flipped and the mouse protracted whiskers on the left side and retracted them on the right. These observations are consistent with the previous work and suggest that head fixation alone does not cause the asymmetry.

Freely moving mice. Whiskers were tracked in mice that had been trained in the maze while head-fixed. Whisker positions can be seen as mice move forward and backward. The top of the maze is covered with clear Plexiglas.

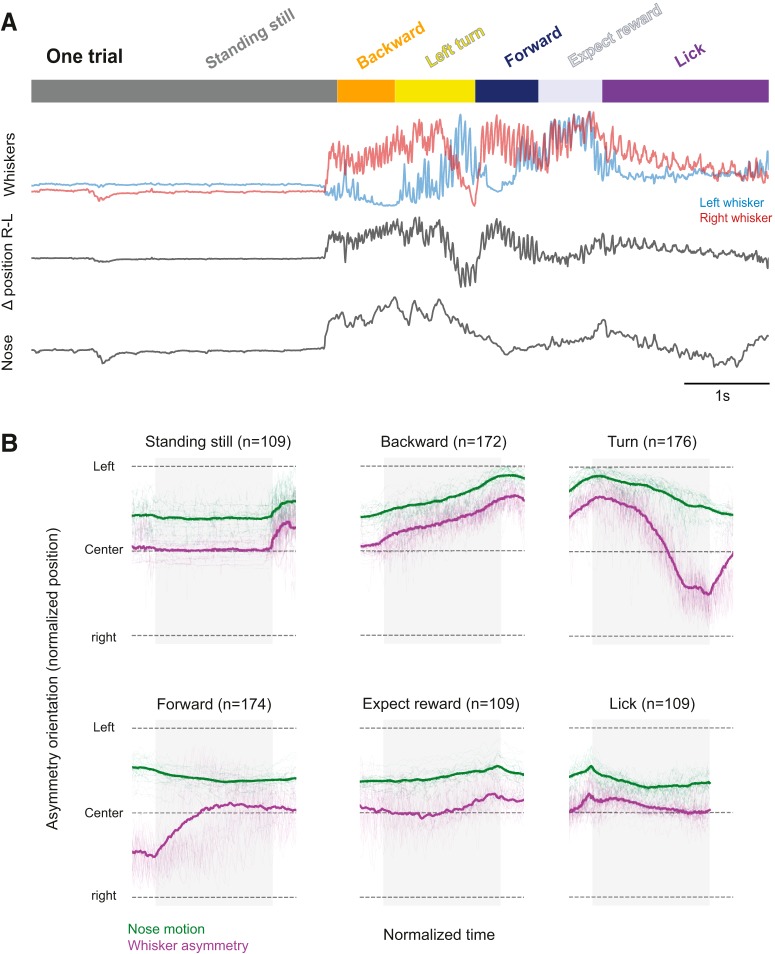

Nose movement: relationship to whisker asymmetry

The facial muscles that move whisker pad and whiskers are controlled by a central pattern generator, which controls breathing and sniffing (Moore et al., 2013, 2014). Some of these muscles also control the motion of the nose (Haidarliu et al., 2012, 2015); indeed, the nose and whiskers move in a coordinated fashion (Kurnikova et al., 2017; McElvain et al., 2018). Here we examined whether the nose moved in a behavioral state-dependent manner, and whether nose movement was correlated with whisker motion. As the maximum intensity projection of nose movement over 30 trials shows, the nose moved considerably in the course of Air-Track behaviors (Fig. 1B) and moved differently in each behavioral epoch (Fig. 6A, bottom). Side-to-side nose movement (i.e., left to right) was best related to the asymmetry of whisker position on the two sides of the face (Fig. 6A, middle); it was less well related to the motion of whiskers on either side of the face alone (Fig. 6A, top; p < 0.001 Kruskal–Wallis test). This becomes especially evident when whisker asymmetry and nose movement are plotted together: bilateral whisker movement (Δposition = R-L, purple) and nose movement (green) changed together (Fig. 6B). The end of the Turn epoch and the beginning of the Forward epoch were uniformly associated with whisker contact to the wall, which was likely to have interfered with the relation between whisker asymmetry and nose movement. These data show that, just as whisker asymmetry was stereotyped in the behavioral epochs, nose movement was also stereotyped. We conclude that the facial expression governed by the contraction of the various muscles in the face changes in a stereotyped fashion in coordination with behavioral sequences mice express in the maze.
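One simple way to compare how well nose movement tracks whisker asymmetry versus the whiskers on each side is sketched below. The correlation measure used in this study is not specified in this section, so the Pearson coefficient, the function names, and the toy traces are assumptions for illustration only.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long traces."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant trace carries no correlation information
    return cov / (sx * sy)


def nose_whisker_correlations(nose_x, left, right):
    """Correlate side-to-side nose position with whisker asymmetry (R - L)
    and with the whisker position on each side separately."""
    asym = [r - l for l, r in zip(left, right)]
    return {
        "asymmetry": pearson_r(nose_x, asym),
        "left": pearson_r(nose_x, left),
        "right": pearson_r(nose_x, right),
    }


# Hypothetical traces: the nose follows the right-minus-left whisker position
result = nose_whisker_correlations(
    nose_x=[-0.8, -0.2, 0.2, 0.8],
    left=[0.9, 0.6, 0.4, 0.1],
    right=[0.1, 0.4, 0.6, 0.9],
)
```

Computing these three coefficients per trial or per session would give the distributions that a nonparametric test, such as the Kruskal–Wallis test reported here, could then compare.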

Figure 6.

Whisker asymmetry and nose movement. A, The nose moves when mice move. Whenever the mouse moved its whiskers (top, red and blue), whisker movement was asymmetric (middle), and the mouse moved its nose (third trace from top). The right-left movement of the nose was significantly and best correlated with the complex motion of whiskers (second trace) on both sides of the face (Kruskal–Wallis test p < 0.001), not with the motion of whiskers on either side of the face alone. B, Average nose movement related to whisker asymmetry in the different behavioral epochs. When mice are standing still, licking, or expecting reward, the nose (green) does not move, and asymmetry in whisker position (purple) is minimal; but when mice are moving the maze (going backward, turning, and going forward), the nose also moves, and this movement is related to the asymmetric positioning of the whiskers.

Discussion

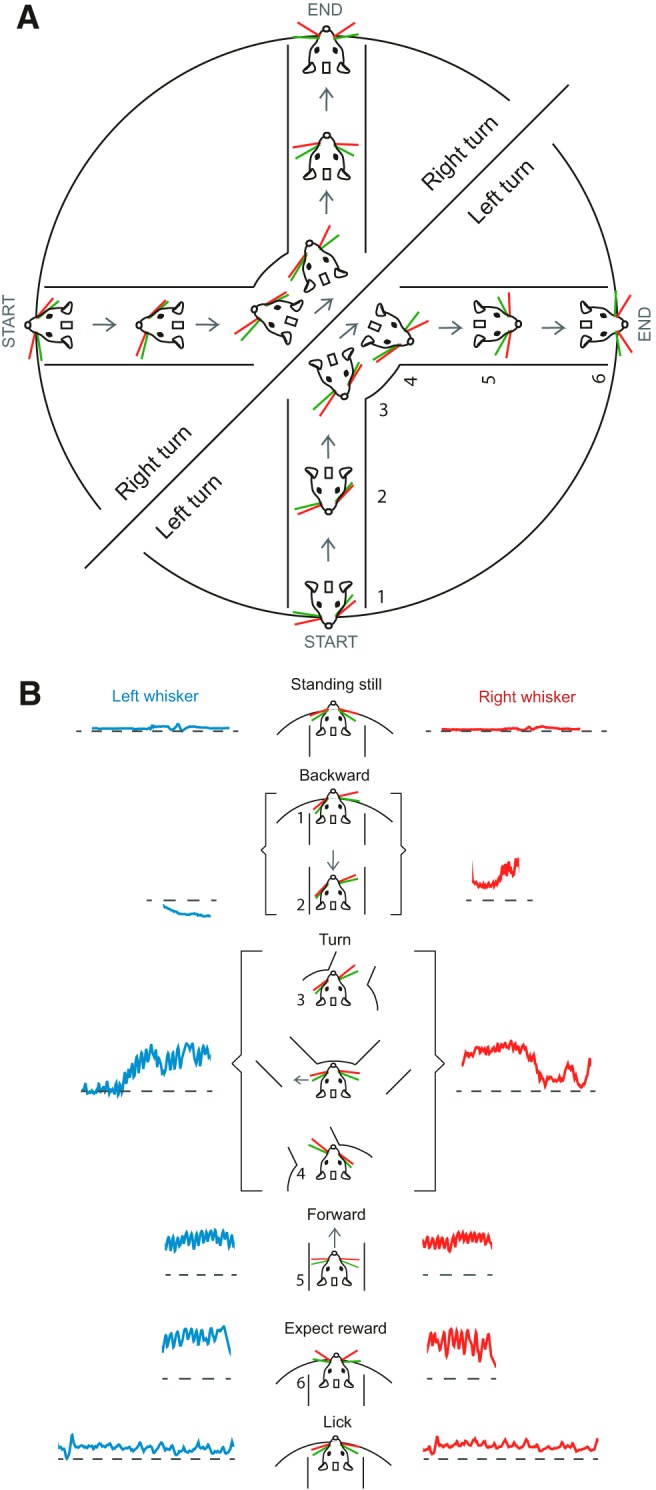

Here we show that features of whisking correlate with the behavioral state of the mouse and can be used to decode it reliably. Each behavioral epoch has its signature whisking features, which make it possible for whisker asymmetry to predict whether the mouse was moving forward or backward, turning, licking, or expecting reward. Even though the Air-Track platform we use here is rich in tactile features, such as walls and surfaces, and even though whiskers are tactile organs, this relationship between whisking and behavioral state had little to do with ongoing tactile input to the mouse. Instead, the position of whiskers was related to motor preparation, postural adjustment, and active whisking during navigation. Even when mice moved backward in a straight line, the extent and direction of side-to-side asymmetry in whisker position correlated with the turn that the animal was about to initiate (Fig. 2). The signature whisking features that made it possible to relate each behavioral epoch to the positioning of the whiskers (Fig. 7) serve as a map of “what the mouse was doing” and “where the mouse was” in the maze. An astonishing aspect of the relationship between whisking and behavior of the mouse is that whisking is not instructed and is not necessary for task performance, yet it occurs spontaneously in the course of the search for the correct lane.

Figure 7.

Whisking is a signature of motor plan and behavioral state. A, Right and left turning mice plan their movement in the plus maze, and this plan is reflected in how they position their whiskers. Left turning mice protract their whiskers on the right side while moving backward, and right turning mice protract their whiskers on the left side while moving backward (mice in the semicircles show whisker position for right and left turning mice). The numbers next to the mice indicate the different behavioral epochs. At the onset of backward movement (1), whiskers are already asymmetric. As the animal continues moving backward toward the middle of the maze (2), the asymmetry in whisker position increases until the mouse exits the lane and is in the middle of the maze (3). As the mouse moves forward into a new lane, the asymmetric whisker positioning flips (4); and then as the mouse proceeds into the lane, the asymmetry diminishes (5). The trial ends when the animal is licking (6). B, Schematic of whisker position as a signature of behavioral state and movement preparation. Asymmetry in whisker position and whisker set point both reflect the distinct behavioral state of the animal. The numbers in the schematic are the behavioral states (related to the numbers used in A).

A priori, we expected that a portion of the whisker asymmetry would arise from tactile input from the whiskers. After all, each whisker is associated with thousands of neurons in the rodent somatosensory cortex (van der Loos and Woolsey, 1973; Oberlaender et al., 2012), and stimuli to individual whiskers evoke a cortical response (Simons, 1978; Armstrong-James et al., 1992; Petersen et al., 2003; Hasenstaub et al., 2007). Furthermore, whiskers are known as tactile elements, used by rodents in social settings (Lenschow and Brecht, 2015), for detecting the location and presence of objects (Hutson and Masterton, 1986), for discriminating between fine textures (Carvell and Simons, 1995; Chen et al., 2013; Kerekes et al., 2017), and for guiding mice as they navigate their environment (Hutson and Masterton, 1986; Carvell and Simons, 1990; Brecht et al., 1997; Mitchinson et al., 2011; Grant et al., 2012b; Voigts et al., 2015). In the plus maze used here, the floor and walls of the maze, their texture, and the edges of the lane openings can all provide tactile input. Whiskers contact the wall as the animal navigates the maze, and this contact might result in the asymmetry. But our work shows that, while whiskers do invariably contact the walls (in left turn and forward motion; Fig. 1C; Movies 1, 2, 3), contact is not the main reason for side-to-side asymmetry; the side-to-side asymmetry persists, even after whiskers have been trimmed to stubs that provide little tactile input. This suggests that the asymmetry arises as a postural adjustment or as a preparation for the actions that can follow hundreds of milliseconds after the initial asymmetric positioning of whiskers.
That the level of asymmetry, and the set point/amplitude of whisking on two sides of the face, increases and decreases in a behaviorally relevant fashion (i.e., it increases as the animal approaches a turn), and the protracted and retracted side flip as the animal makes the turn, suggest that this asymmetry could be learned, and be related to active positioning of the whiskers (Fig. 7).

The persistence of a correlation between asymmetric positioning and motion of whiskers and behavioral state, even after trimming of the whiskers, implies that tactile input from the walls does not drive the asymmetries, and tactile input does not drive the relationship to behavioral states. Whisking in rodents has been synonymous with exploration and navigation, and the way whiskers spread around the face during active tactile exploration has been described as forming a canopy of mobile sensors (Welker, 1964; Wineski, 1985; Carvell and Simons, 1990). Whisking has also been linked to the expression of overt attention to points in space around the face and head (Mitchinson and Prescott, 2013), and whisking strategy has been linked to the novelty of the environment (Arkley et al., 2014).

The prevalence of whisking asymmetry in the Air-Track is striking: it is evident just as mice begin to move the maze, and it is evident throughout their behavior in the maze. Previous work in head-fixed mice has shown that whisking asymmetry relates to the direction of movement of the animal on an air ball (Sofroniew et al., 2014). In freely moving mice, on the other hand, asymmetry can relate to multiple factors, including anticipation of future head movements (Towal and Hartmann, 2006), anticipation of contact (Grant et al., 2009; Mitchinson et al., 2011; Voigts et al., 2015), and/or the actual contact between whiskers and objects (Mitchinson et al., 2007, 2013; Schroeder and Ritt, 2016). Our results suggest that, while learning and long-term practice could play a role in anticipatory asymmetry (Landers and Zeigler, 2006; Grant et al., 2012b), anticipatory asymmetry is not solely constrained by, or exclusively linked to, head movement. It is also not constrained by real-time whisker contact in the course of the task; it is related to the direction of movement of the maze and potentially related to the direction of the movement of the mouse. One possibility that we cannot rule out is that mice learn to navigate the maze using tactile input, and it is this learned behavior that they express, even when they no longer need tactile input.

It is possible that motor preparation involves changes in breathing triggered by anticipation of the additional effort that walking involves, and this change in breathing triggers nose movement, and whisking that co-occurs with each bout of movement the animal makes (Kurnikova et al., 2017). But asymmetry in whisker set point probably does not arise from breathing or sniffing, which are fast events and occur simultaneously in both nares.

In conclusion, our work suggests that it is possible to discern the internal behavioral state of the animal from the whisker position (Fig. 7) and that brain circuits that control the animal's motion, the animal's whisking, and the motion of its nose are engaged together but distinctly during each behavioral epoch. The changes in whisking reflect changes in the state of the animal, with the set point and whisking amplitude independently reflecting internal brain states. In human beings, facial movements are used for communication of emotion: distress, pleasure, and anger. Whether facial expressions in rodents reflect emotions, attention, or another aspect of behavior is only beginning to be explored (Langford et al., 2010; Finlayson et al., 2016). The degree of correlation between whisker position and the animal's behavioral state reveals how whisking is interwoven with a number of other behaviors, including breathing, sniffing, and head movement. While the asymmetry expressed in each moment may be learned during training in the task, it is not instructed, and is probably related to activity in widespread areas of the brain (Musall et al., 2019; Stringer et al., 2019). It is therefore even more remarkable that the internal state of the animal is predicted by the positioning and motion of these tactile sensory organs.
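To make the idea of reading out behavioral state from whisker position concrete, the following is a minimal sketch and not the analysis used in this study. It assumes that whisker angles (in degrees) are available for each side of the face over a short time window, takes the mean angle as a proxy for set point and the peak-to-peak excursion as a proxy for whisking amplitude, and substitutes a simple nearest-centroid rule for the decoder. All function names, state labels, and numerical values are hypothetical and synthetic; the mapping between the sign of the asymmetry and a given state is arbitrary here.

```python
from statistics import mean


def set_point(angles):
    """Mean whisker angle (degrees) in a window: a simple proxy for set point."""
    return mean(angles)


def amplitude(angles):
    """Peak-to-peak excursion (degrees) in a window: a simple proxy for amplitude."""
    return max(angles) - min(angles)


def asymmetry(left_angles, right_angles):
    """Left-minus-right difference in set point (degrees)."""
    return set_point(left_angles) - set_point(right_angles)


def fit_centroids(samples):
    """Average the asymmetry values observed for each labeled state.

    samples: iterable of (asymmetry_value, state_label) pairs.
    Returns a dict mapping each state label to its mean asymmetry.
    """
    by_state = {}
    for value, label in samples:
        by_state.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_state.items()}


def decode(value, centroids):
    """Return the state whose mean asymmetry is closest to the observed value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


# Synthetic training data (made up for illustration only).
training = [
    (12.0, "turning_left"), (10.0, "turning_left"),
    (-11.0, "turning_right"), (-9.0, "turning_right"),
    (1.0, "moving_forward"), (-1.0, "moving_forward"),
]
centroids = fit_centroids(training)

# Synthetic whisker angles for one window on each side of the face.
left = [70.0, 80.0, 75.0, 85.0]
right = [60.0, 70.0, 65.0, 75.0]

print(asymmetry(left, right), amplitude(left), decode(asymmetry(left, right), centroids))
```

Keeping set point and amplitude as separate functions mirrors the distinction drawn above between the two measures; a real decoder would use more features, held-out data, and a properly validated classifier.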

Footnotes

This work was supported by Deutsche Forschungsgemeinschaft Grant 2112280105 to M.E.L., and Grants LA 3442/3–1 and LA 3442/5–1 to M.E.L.; Deutsche Forschungsgemeinschaft Project 327654276-SFB 1315 to M.E.L.; European Union's Horizon 2020 research and innovation program and Euratom research and training program 2014–2018 (under Grant 670118) to M.E.L.; H2020 Research and Innovation Programme 720270/HBP SGA1, 785907/HBP SGA2 and 670118/ERC ActiveCortex (M.E.L.); and Einstein Stiftung. We thank the Charité Workshop for technical assistance, especially Alexander Schill, Jan-Erik Ode, and Daniel Deblitz; and members of the M.E.L. laboratory, especially Naoya Takahashi, Jaan Aru, and Malinda Tantirigama, for useful discussions about earlier versions of the manuscript.

The authors declare no competing financial interests.

References

- Anastasopoulos D, Ziavra N, Hollands M, Bronstein A (2009) Gaze displacement and inter-segmental coordination during large whole body voluntary rotations. Exp Brain Res 193:323–336. 10.1007/s00221-008-1627-y

- Arkley K, Grant RA, Mitchinson B, Prescott TJ (2014) Strategy change in vibrissal active sensing during rat locomotion. Curr Biol 24:1507–1512. 10.1016/j.cub.2014.05.036

- Armstrong-James M, Fox K, Das-Gupta A (1992) Flow of excitation within rat barrel cortex on striking a single vibrissa. J Neurophysiol 68:1345–1358. 10.1152/jn.1992.68.4.1345

- Barnes GR. (1979) Vestibulo-ocular function during co-ordinated head and eye movements to acquire visual targets. J Physiol 287:127–147. 10.1113/jphysiol.1979.sp012650

- Brecht M, Preilowski B, Merzenich MM (1997) Functional architecture of the mystacial vibrissae. Behav Brain Res 84:81–97. 10.1016/S0166-4328(97)83328-1

- Cao Y, Roy S, Sachdev RN, Heck DH (2012) Dynamic correlation between whisking and breathing rhythms in mice. J Neurosci 32:1653–1659. 10.1523/JNEUROSCI.4395-11.2012

- Carvell GE, Simons DJ (1990) Biometric analyses of vibrissal tactile discrimination in the rat. J Neurosci 10:2638–2648. 10.1523/JNEUROSCI.10-08-02638.1990

- Carvell GE, Simons DJ (1995) Task and subject-related differences in sensorimotor behavior during active touch. Somatosens Mot Res 12:1–9. 10.3109/08990229509063138

- Chen JL, Carta S, Soldado-Magraner J, Schneider BL, Helmchen F (2013) Behaviour-dependent recruitment of long-range projection neurons in somatosensory cortex. Nature 499:336–340. 10.1038/nature12236

- Deschênes M, Moore J, Kleinfeld D (2012) Sniffing and whisking in rodents. Curr Opin Neurobiol 22:243–250. 10.1016/j.conb.2011.11.013

- Dörfl J. (1982) The musculature of the mystacial vibrissae of the white mouse. J Anat 135:147–154.

- Fetz EE. (1994) Are movement parameters recognizably coded in the activity of single neurons? In: Movement control (Cordo P, Harnad S, eds), pp 77–88. Cambridge, UK: Cambridge UP.

- Finlayson K, Lampe JF, Hintze S, Würbel H, Melotti L (2016) Facial indicators of positive emotions in rats. PLoS One 11:e0166446. 10.1371/journal.pone.0166446

- Godde B, Diamond ME, Braun C (2010) Feeling for space or for time: task-dependent modulation of the cortical representation of identical vibrotactile stimuli. Neurosci Lett 480:143–147. 10.1016/j.neulet.2010.06.027

- Grant RA, Mitchinson B, Fox CW, Prescott TJ (2009) Active touch sensing in the rat: anticipatory and regulatory control of whisker movements during surface exploration. J Neurophysiol 101:862–874. 10.1152/jn.90783.2008

- Grant RA, Mitchinson B, Prescott TJ (2012a) The development of whisker control in rats in relation to locomotion. Dev Psychobiol 54:151–168. 10.1002/dev.20591

- Grant RA, Sperber AL, Prescott TJ (2012b) The role of orienting in vibrissal touch sensing. Front Behav Neurosci 6:39. 10.3389/fnbeh.2012.00039

- Grinevich V, Brecht M, Osten P (2005) Monosynaptic pathway from rat vibrissa motor cortex to facial motor neurons revealed by lentivirus-based axonal tracing. J Neurosci 25:8250–8258. 10.1523/JNEUROSCI.2235-05.2005

- Haidarliu S, Golomb D, Kleinfeld D, Ahissar E (2012) Dorsorostral snout muscles in the rat subserve coordinated movement for whisking and sniffing. Anat Rec (Hoboken) 295:1181–1191. 10.1002/ar.22501

- Haidarliu S, Kleinfeld D, Ahissar E (2013) Mediation of muscular control of rhinarial motility in rats by the nasal cartilaginous skeleton. Anat Rec (Hoboken) 296:1821–1832. 10.1002/ar.22822

- Haidarliu S, Kleinfeld D, Deschênes M, Ahissar E (2015) The musculature that drives active touch by vibrissae and nose in mice. Anat Rec (Hoboken) 298:1347–1358. 10.1002/ar.23102

- Harvey CD, Collman F, Dombeck DA, Tank DW (2009) Intracellular dynamics of hippocampal place cells during virtual navigation. Nature 461:941–946. 10.1038/nature08499

- Hasenstaub A, Sachdev RN, McCormick DA (2007) State changes rapidly modulate cortical neuronal responsiveness. J Neurosci 27:9607–9622. 10.1523/JNEUROSCI.2184-07.2007

- Hölscher C, Schnee A, Dahmen H, Setia L, Mallot HA (2005) Rats are able to navigate in virtual environments. J Exp Biol 208:561–569. 10.1242/jeb.01371

- Hutson KA, Masterton RB (1986) The sensory contribution of a single vibrissa's cortical barrel. J Neurophysiol 56:1196–1223. 10.1152/jn.1986.56.4.1196

- Kerekes P, Daret A, Shulz DE, Ego-Stengel V (2017) Bilateral discrimination of tactile patterns without whisking in freely running rats. J Neurosci 37:7567–7579. 10.1523/JNEUROSCI.0528-17.2017

- Knutsen PM, Pietr M, Ahissar E (2006) Haptic object localization in the vibrissal system: behavior and performance. J Neurosci 26:8451–8464. 10.1523/JNEUROSCI.1516-06.2006

- Krupa DJ, Wiest MC, Shuler MG, Laubach M, Nicolelis MA (2004) Layer-specific somatosensory cortical activation during active tactile discrimination. Science 304:1989–1992. 10.1126/science.1093318

- Kurnikova A, Moore JD, Liao SM, Deschênes M, Kleinfeld D (2017) Coordination of orofacial motor actions into exploratory behavior by rat. Curr Biol 27:688–696. 10.1016/j.cub.2017.01.013

- Landers M, Zeigler HP (2006) Development of rodent whisking: trigeminal input and central pattern generation. Somatosens Mot Res 23:1–10. 10.1080/08990220600700768

- Langford DJ, Bailey AL, Chanda ML, Clarke SE, Drummond TE, Echols S, Glick S, Ingrao J, Klassen-Ross T, Lacroix-Fralish ML, Matsumiya L, Sorge RE, Sotocinal SG, Tabaka JM, Wong D, van den Maagdenberg AM, Ferrari MD, Craig KD, Mogil JS (2010) Coding of facial expressions of pain in the laboratory mouse. Nat Methods 7:447–449.

- Lenschow C, Brecht M (2015) Barrel cortex membrane potential dynamics in social touch. Neuron 85:718–725. 10.1016/j.neuron.2014.12.059

- Llinas R. (2015) I of the vortex: from neurons to self. Cambridge, MA: Massachusetts Institute of Technology.

- McElvain LE, Friedman B, Karten HJ, Svoboda K, Wang F, Deschênes M, Kleinfeld D (2018) Circuits in the rodent brainstem that control whisking in concert with other orofacial motor actions. Neuroscience 368:152–170. 10.1016/j.neuroscience.2017.08.034

- Mitchinson B, Martin CJ, Grant RA, Prescott TJ (2007) Feedback control in active sensing: rat exploratory whisking is modulated by environmental contact. Proc Biol Sci 274:1035–1041. 10.1098/rspb.2006.0347

- Mitchinson B, Grant RA, Arkley K, Rankov V, Perkon I, Prescott TJ (2011) Active vibrissal sensing in rodents and marsupials. Philos Trans R Soc Lond B Biol Sci 366:3037–3048. 10.1098/rstb.2011.0156

- Mitchinson B, Prescott TJ (2013) Whisker movements reveal spatial attention: a unified computational model of active sensing control in the rat. PLoS Comput Biol 9:e1003236. 10.1371/journal.pcbi.1003236

- Moore JD, Deschênes M, Furuta T, Huber D, Smear MC, Demers M, Kleinfeld D (2013) Hierarchy of orofacial rhythms revealed through whisking and breathing. Nature 497:205–210. 10.1038/nature12076

- Moore JD, Kleinfeld D, Wang F (2014) How the brainstem controls orofacial behaviors comprised of rhythmic actions. Trends Neurosci 37:370–380. 10.1016/j.tins.2014.05.001

- Musall S, Kaufman MT, Juavinett AL, Gluf S, Churchland AK (2019) Single-trial neural dynamics are dominated by richly varied movements. Nat Neurosci 22:1677–1686. 10.1038/s41593-019-0502-4

- Nashaat MA, Oraby H, Sachdev RN, Winter Y, Larkum ME (2016) Air-Track: a real-world floating environment for active sensing in head-fixed mice. J Neurophysiol 116:1542–1553. 10.1152/jn.00088.2016

- Nashaat MA, Oraby H, Peña LB, Dominiak S, Larkum ME, Sachdev RN (2017) Pixying behavior: a versatile real-time and post hoc automated optical tracking method for freely moving and head-fixed animals. eNeuro 4:ENEURO.0245–16.2017. 10.1523/ENEURO.0245-16.2017

- Oberlaender M, de Kock CP, Bruno RM, Ramirez A, Meyer HS, Dercksen VJ, Helmstaedter M, Sakmann B (2012) Cell type-specific three-dimensional structure of thalamocortical circuits in a column of rat vibrissal cortex. Cereb Cortex 22:2375–2391. 10.1093/cercor/bhr317

- Petersen CC, Grinvald A, Sakmann B (2003) Spatiotemporal dynamics of sensory responses in layer 2/3 of rat barrel cortex measured in vivo by voltage-sensitive dye imaging combined with whole-cell voltage recordings and neuron reconstructions. J Neurosci 23:1298–1309. 10.1523/JNEUROSCI.23-04-01298.2003

- Prescott TJ, Diamond ME, Wing AM (2011) Active touch sensing. Philos Trans R Soc Lond B Biol Sci 366:2989–2995. 10.1098/rstb.2011.0167

- Reimer J, Froudarakis E, Cadwell CR, Yatsenko D, Denfield GH, Tolias AS (2014) Pupil fluctuations track fast switching of cortical states during quiet wakefulness. Neuron 84:355–362. 10.1016/j.neuron.2014.09.033

- Sachdev RN, Sato T, Ebner FF (2002) Divergent movement of adjacent whiskers. J Neurophysiol 87:1440–1448. 10.1152/jn.00539.2001

- Saraf-Sinik I, Assa E, Ahissar E (2015) Motion makes sense: an adaptive motor-sensory strategy underlies the perception of object location in rats. J Neurosci 35:8777–8789. 10.1523/JNEUROSCI.4149-14.2015

- Schroeder JB, Ritt JT (2016) Selection of head and whisker coordination strategies during goal-oriented active touch. J Neurophysiol 115:1797–1809. 10.1152/jn.00465.2015

- Sellien H, Eshenroder DS, Ebner FF (2005) Comparison of bilateral whisker movement in freely exploring and head-fixed adult rats. Somatosens Mot Res 22:97–114. 10.1080/08990220400015375

- Simons DJ. (1978) Response properties of vibrissa units in rat SI somatosensory neocortex. J Neurophysiol 41:798–820. 10.1152/jn.1978.41.3.798

- Sofroniew NJ, Cohen JD, Lee AK, Svoboda K (2014) Natural whisker-guided behavior by head-fixed mice in tactile virtual reality. J Neurosci 34:9537–9550. 10.1523/JNEUROSCI.0712-14.2014

- Stringer C, Pachitariu M, Steinmetz N, Reddy CB, Carandini M, Harris KD (2019) Spontaneous behaviors drive multidimensional brain wide activity. Science 364:255. 10.1126/science.aav7893

- Towal RB, Hartmann MJ (2006) Right-left asymmetries in the whisking behavior of rats anticipate head movements. J Neurosci 26:8838–8846. 10.1523/JNEUROSCI.0581-06.2006