Abstract

Background and objectives

Skilled clinical reasoning is a critical tool for physicians. Educators agree that this skill should be formally taught and assessed. Objectives related to the mastery of clinical reasoning skills appear in the documentation of most medical schools and licensing bodies. We conducted this study to compare the clinical reasoning skills of medical students after paper-based instruction and after computer-based simulated instruction.

Materials and methods

A total of 52 sixth-semester medical students of the Dow University of Health Sciences were included in this study. A tutorial on clinical reasoning and its importance in clinical practice was delivered to all students. Students were randomly divided into two groups: group A received paper-based instructions, while group B received computer-based instructions (Flash-based scenarios developed with Articulate Storyline software [https://articulate.com/p/storyline-3]) focused on clinical reasoning skills in history-taking for acute and chronic upper abdominal pain. After one week, both groups were tested at two objective structured clinical examination (OSCE) stations that assessed acute and chronic pain history-taking skills in relation to clinical reasoning.

Results

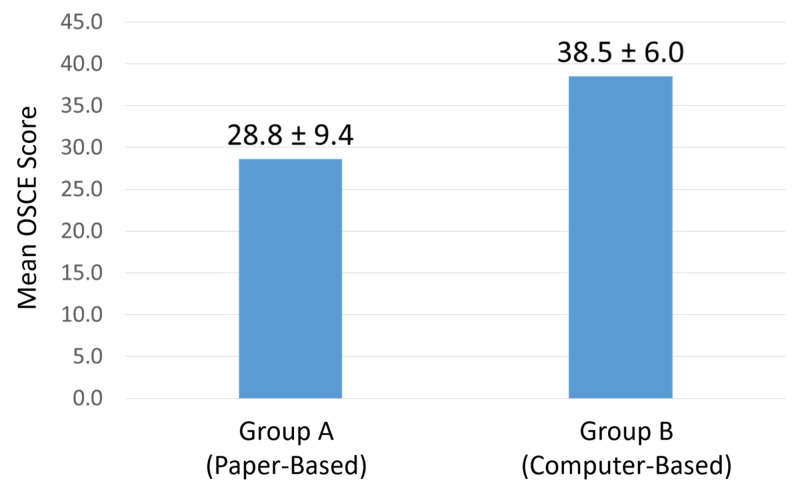

There were 27 students in group A and 25 students in group B. The mean OSCE score for group A (paper-based) was 28.6 ± 9.4 and that for group B (computer-based) was 38.5 ± 6.0. Group B’s mean score was statistically significantly greater (p < 0.001) than group A’s mean score for clinical reasoning skills.

Conclusion

A computer simulation program can enhance clinical reasoning skills. This technology could be used to acquaint students with real-life experiences and identify potential areas for more training before facing real patients.

Keywords: clinical reasoning, computer-based learning, simulation, blended learning

Introduction

Clinical reasoning is a process by which clinicians “collect cues, process the information, come to an understanding of a patient problem or situation, plan and implement interventions, evaluate outcomes, and reflect on and learn from the process” [1-2]. Clinical reasoning is an essential element of medical practice. Sound clinical reasoning by a physician has a positive impact on patient outcomes, whereas physicians with poor clinical reasoning skills often fail to achieve good outcomes and, consequently, compromise patient safety.

Current medical curricula do not adequately facilitate the development of an essential level of clinical reasoning skills [3]. According to Wilson et al., cognitive failure is at the root of 57% of poor clinical outcomes [4]. Objectives related to the mastery of clinical reasoning skills appear in the documentation of most medical schools and licensing bodies, but these objectives are often not put into practice.

Teaching and learning opportunities for developing the skills needed to diagnose and manage a deteriorating patient, and to communicate effectively in real health care settings, should begin at the undergraduate level [5]. Because current clinical practice is dynamic, medical graduates must be prepared during their undergraduate education for multiple roles that demand clinical reasoning.

Educators agree that this skill should be formally taught and assessed, but teachers and clinicians face systemic hurdles in fulfilling this requirement. These hurdles include the increasingly demanding and unpredictable nature of the current health care environment and the lack of time for clinical educators and teachers to think through clinical problems with students [6]. One approach to overcoming these hurdles is simulation-based teaching of clinical reasoning skills, which is becoming more widely available owing to advances in technology [7]. Many studies have explored the use of simulation in the education of health care professionals, but comprehensive analyses remain difficult. Most of these studies did not focus on clinical reasoning skill development at the undergraduate level [8], and data on clinical reasoning assessment through simulation pertain most often to nursing students [9]. Therefore, additional studies are required to establish the usefulness of computer-based simulation as an educational tool for developing the clinical reasoning skills of undergraduate medical students.

We conducted this study to compare the clinical reasoning skills of medical students taught through paper-based instruction with those of students taught through computer-based simulation.

Materials and methods

This randomized controlled trial included undergraduate students at the Dow University of Health Sciences. This study was approved by the university's Institutional Review Board (number IRB-408/DUHS). A total of 52 sixth-semester medical students were included in this study. A clinical reasoning tutorial was delivered to all students by one of the authors (Masood Jawaid). Study participants were assigned to two groups using the Random Allocation Software, version 1.0 (http://mahmoodsaghaei.tripod.com/Softwares/randalloc.html). Students in group A (n = 27) received paper-based instructions from a recommended book for the clinical practice of surgery [10]. Students in group B (n = 25) received computer-based instructions from Flash-based scenarios developed with Articulate Storyline software by Articulate Inc. (https://articulate.com/p/storyline-3), which focused on clinical reasoning skills in history-taking for acute and chronic upper abdominal pain. This authoring tool was used to build screens covering different skills, and the links between screens guided students toward the final diagnosis. After one week, both groups were tested at two objective structured clinical examination (OSCE) stations on history-taking skills for acute and chronic pain in relation to clinical reasoning.
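The allocation step can be illustrated with a short shuffle-and-split sketch. This is only an assumption-laden illustration: the study used Random Allocation Software 1.0, whose internal algorithm is not described here, and the participant codes (`S01` to `S52`), the function name `allocate`, and the seed value are hypothetical. The group sizes are fixed at 27 and 25 only to mirror the reported group sizes.

```python
import random


def allocate(participant_ids, size_a, seed=None):
    """Shuffle participants and split them into two groups (hypothetical helper).

    Illustrative only: not the algorithm of Random Allocation Software 1.0.
    """
    if not 0 <= size_a <= len(participant_ids):
        raise ValueError("size_a must be between 0 and the number of participants")
    rng = random.Random(seed)          # seeded generator so the allocation is reproducible
    shuffled = list(participant_ids)   # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    group_a = sorted(shuffled[:size_a])  # group A: paper-based instruction
    group_b = sorted(shuffled[size_a:])  # group B: computer-based instruction
    return group_a, group_b


# Hypothetical participant codes S01..S52 for the 52 students
students = [f"S{i:02d}" for i in range(1, 53)]
group_a, group_b = allocate(students, size_a=27, seed=2015)
print(len(group_a), len(group_b))  # 27 25
```

Recording the seed alongside the allocation list is one simple way to make the assignment auditable after the fact.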

IBM SPSS Statistics for Windows, Version 20.0 (IBM Corp., Armonk, NY) was used to analyze data. The independent-samples t-test was used to analyze the score difference between groups.
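As an independent arithmetic check, the group comparison can be recomputed from the reported summary statistics (group A: 28.6 ± 9.4, n = 27; group B: 38.5 ± 6.0, n = 25). This sketch assumes that the ± values are sample standard deviations and that the equal-variance (Student's) form of the test was used; the paper does not state either. It is not the authors' SPSS analysis, and the helper names are hypothetical. The p-value is obtained by numerically integrating the t density with Simpson's rule so that only the standard library is needed.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: 1 - 2 * (area under the t density from 0 to |t|)."""
    a, b = 0.0, abs(t)
    h = (b - a) / steps  # Simpson's rule needs an even number of steps
    total = t_pdf(a, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(a + i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def pooled_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t-test (equal variances) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    t = (mean2 - mean1) / se
    return t, df, two_sided_p(t, df)


# Reported OSCE summary statistics: group A (paper-based), group B (computer-based)
t, df, p = pooled_t_test(28.6, 9.4, 27, 38.5, 6.0, 25)
print(f"t({df}) = {t:.2f}, p = {p:.1e}")
```

Under these assumptions the mean difference of 9.9 points gives t(50) ≈ 4.49, consistent with the reported p < 0.001. Without the equal-variance assumption (Welch's test), the standard error becomes √(s₁²/n₁ + s₂²/n₂) and t is about 4.56; SciPy's `scipy.stats.ttest_ind_from_stats` performs both variants from summary statistics.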

Results

The mean OSCE score of students in group A (paper-based instruction) was 28.6 ± 9.4. The mean OSCE score for students in group B (computer-based instruction) was 38.5 ± 6.0, which was statistically significantly higher than the mean group A score (p < 0.001) in clinical reasoning skills (Figure 1).

Figure 1. Mean OSCE Score.

OSCE: Objective Structured Clinical Examination.

Discussion

The significantly higher mean score of students in the computer-based simulation group compared with the paper-based group suggests that computer-based instruction can enhance students' clinical reasoning skills. Our findings align with those of a study by Puri et al., whose results showed a mean post-test score of 6.65 ± 0.16 in the intervention group and 6.28 ± 0.29 in the control group (p < 0.05) [11]. Raidl et al. showed that computer-assisted simulations improved the clinical reasoning skills of dietetics students [12]. Similarly, in the study by Gilbart et al., 92% of students indicated that a simulation-based program should be part of their clinical clerkship [13].

Most clinical faculty members have very busy schedules that limit opportunities to properly develop their students' clinical reasoning skills. Adopting computer-based instruction and simulations can at least partially close this systemic gap [6].

Our study was limited by its relatively small number of participants, although the extent to which sample size affected the results remains unclear. Another limitation was that the modules covered only a narrow range of clinical scenarios and patients; we believe students would have become more skilled with modules covering a wider range.

Additional research is needed to determine which type of simulation-based education is most appropriate for students in specific health professions [14-15]. Blended learning courses that combine computer-based simulation with traditional methods are highly useful for augmenting clinical learning in medical students, and medical educators should consider blended learning to enhance their students' clinical reasoning skills [16-17].

Conclusions

A computer simulation program can enhance clinical reasoning skills. This technology could be used to acquaint students with real-life experiences and identify potential areas for more training before facing real patients. It may also help clinical educators who use computer-based simulation to teach clinical reasoning and, ultimately, benefit the patients who receive care.

Acknowledgments

The study was presented at the 2015 AEME (Association of Excellence in Medical Education) Annual Conference in Islamabad, Pakistan.

The content published in Cureus is the result of clinical experience and/or research by independent individuals or organizations. Cureus is not responsible for the scientific accuracy or reliability of data or conclusions published herein. All content published within Cureus is intended only for educational, research and reference purposes. Additionally, articles published within Cureus should not be deemed a suitable substitute for the advice of a qualified health care professional. Do not disregard or avoid professional medical advice due to content published within Cureus.

The authors have declared that no competing interests exist.

Human Ethics

Consent was obtained from all participants in this study.

Animal Ethics

Animal subjects: All authors have confirmed that this study did not involve animal subjects or tissue.

References

- 1. Alfaro-LeFevre R. Critical Thinking and Clinical Judgement: A Practical Approach to Outcome-Focused Thinking. St. Louis: Elsevier; 2009.

- 2. Hoffmann R, O’Donnell J, Kim Y. The effects of human patient simulators on basic knowledge in critical care nursing with undergraduate senior baccalaureate nursing students. Simul Healthc. 2007;2:110–114. doi: 10.1097/SIH.0b013e318033abb5.

- 3. Lapkin S, Levett-Jones T, Bellchambers H, Fernandez R. Effectiveness of patient simulation manikins in teaching clinical reasoning skills to undergraduate nursing students: a systematic review. Clin Simul Nurs. 2010;6:207–222. doi: 10.11124/01938924-201008160-00001.

- 4. Wilson RM, Runciman WB, Gibberd RW, Harrison BT, Hamilton JD. Quality in Australian health care study. Med J Aust. 1996;164:754. doi: 10.5694/j.1326-5377.1996.tb122287.x. https://www.ncbi.nlm.nih.gov/pubmed/8668084.

- 5. Bright D, Walker W, Bion J. Outreach - a strategy for improving the care of the acutely ill hospitalized patient. Crit Care. 2004;8:33–40. doi: 10.1186/cc2377.

- 6. Rhodes ML, Curran C. Use of the human patient simulator to teach clinical judgment skills in a baccalaureate nursing program. Comput Inform Nurs. 2005;23:256–262. doi: 10.1097/00024665-200509000-00009.

- 7. Feingold CE, Calaluce M, Kallen MA. Computerized patient model and simulated clinical experiences: evaluation with baccalaureate nursing students. J Nurs Educ. 2004;43:156–163. doi: 10.3928/01484834-20040401-03. https://www.ncbi.nlm.nih.gov/pubmed/15098909.

- 8. Laschinger S, Medves J, Pulling C. Effectiveness of simulation on health profession students’ knowledge, skills, confidence and satisfaction. Int J Evid Based Healthc. 2008;6:278–302. doi: 10.1111/j.1744-1609.2008.00108.x.

- 9. Brannan J, White A, Bezanson J. Simulator effects on cognitive skills and confidence levels. J Nurs Educ. 2008;47:495–500. doi: 10.3928/01484834-20081101-01.

- 10. Browse NL, Black B, Burnand KG, Thomas WEG. Browse's Introduction to the Symptoms and Signs of Surgical Disease. London: CRC Press; 2005.

- 11. Puri R, Bell C, Evers WD. Dietetics students' ability to choose appropriate communication and counseling methods is improved by teaching behavior-change strategies in computer-assisted instruction. J Am Diet Assoc. 2010;110:892–897. doi: 10.1016/j.jada.2010.03.022.

- 12. Raidl MA, Wood OB, Lehman JD, Evers WD. Computer-assisted instruction improves clinical reasoning skills of dietetics students. J Am Diet Assoc. 1995;95:868–873. doi: 10.1016/S0002-8223(95)00241-3.

- 13. Gilbart MK, Hutchison CR, Cusimano MD, Regehr G. A computer-based trauma simulator for teaching trauma management skills. Am J Surg. 2000;179:223–228. doi: 10.1016/s0002-9610(00)00302-0.

- 14. Ryall T, Judd BK, Gordon CJ. Simulation-based assessments in health professional education: a systematic review. J Multidiscip Healthc. 2016;9:69–82. doi: 10.2147/JMDH.S92695.

- 15. Lewin LO, Singh M, Bateman BL, Glover PB. Improving education in primary care: development of an online curriculum using the blended learning model. BMC Med Educ. 2009;9:33. doi: 10.1186/1472-6920-9-33.

- 16. Padilha JM, Machado PP, Ribeiro A, Ramos J, Costa P. Clinical virtual simulation in nursing education: randomized controlled trial. J Med Internet Res. 2019;21. doi: 10.2196/11529.

- 17. Middeke A, Anders S, Schuelper M, Raupach T, Schuelper N. Training of clinical reasoning with a Serious Game versus small-group problem-based learning: a prospective study. PLoS ONE. 2018;13. doi: 10.1371/journal.pone.0203851.