Abstract

Purpose

Phenotype information is crucial for the interpretation of genomic variants. So far it has only been accessible for bioinformatics workflows after encoding into clinical terms by expert dysmorphologists.

Methods

Here, we introduce an approach driven by artificial intelligence that uses portrait photographs for the interpretation of clinical exome data. We measured the value added by computer-assisted image analysis to the diagnostic yield on a cohort consisting of 679 individuals with 105 different monogenic disorders. For each case in the cohort we compiled frontal photos, clinical features, and the disease-causing variants, and simulated multiple exomes of different ethnic backgrounds.

Results

The additional use of similarity scores from computer-assisted analysis of frontal photos improved the top 1 accuracy rate by more than 20–89% and the top 10 accuracy rate by more than 5–99% for the disease-causing gene.

Conclusion

Image analysis by deep-learning algorithms can be used to quantify the phenotypic similarity (PP4 criterion of the American College of Medical Genetics and Genomics guidelines) and to advance the performance of bioinformatics pipelines for exome analysis.

Keywords: deep learning, computer vision, dysmorphology, variant prioritization, exome diagnostics

INTRODUCTION

Worldwide, more than half a million children born per year have a rare genetic disorder that is suitable for diagnostic evaluation by exome sequencing. This test’s unprecedented diagnostic yield is contrasted by the time requirement for variant interpretation. Making phenotypic information—the observable, clinical presentation—computer-readable is key to solving this problem and important for providing clinicians with a much-needed tool for diagnosing genetic syndromes.1

To date, the most advanced exome prioritization algorithms combine deleteriousness scores for variants with semantic similarity searches of the clinical description of a patient.2 The Human Phenotype Ontology (HPO) has become the lingua franca for this purpose.3 However, a facial gestalt for which no term exists and that is simply described as "characteristic" for a certain disease is not suitable for these computational approaches.

Beyond language, capturing indicative patterns through deep-learning approaches has recently gained attention in assessing facial dysmorphism.4,5 Artificial neural networks measure the similarities of patient photos to hundreds of disease entities. We hypothesized that results of this next-generation phenotyping tool could be used similarly to deleteriousness scores on the molecular level. This would enable us to transition from the dichotomous PP4 criterion “matching phenotype” in the American College of Medical Genetics and Genomics (ACMG) guidelines for variant interpretation to a quantifiable one.6,7

We therefore developed an approach to interpret sequence variants integrating results from the next-generation phenotyping tool DeepGestalt. By this means the clinical presentation of an individual is not only assessed by a human expert clinician, but also by using an artificial intelligence approach on the basis of frontal photographs. In short, we call this approach prioritization of exome data by image analysis (PEDIA).

MATERIALS AND METHODS

We compiled a cohort comprising 679 individuals with frontal facial photographs and clinical features documented in HPO terminology.3 The diagnoses of all individuals have previously been confirmed molecularly and are suitable for analysis by exome sequencing. In total, the cohort covers 105 different monogenic syndromes linked to 181 different genes. Of the individuals in this cohort, 446 were published and 233 have not been previously reported (see PMID column in Supplementary Table 1).

The study was approved by the ethics committees of the Charité–Universitätsmedizin Berlin and of the University Hospital Bonn. Written informed consent was given by the patients or their guardians, including permission to publish photographs. Easy to understand, transparent information with both text and illustrations about the pattern recognition in our algorithm that processes personal data in the form of 2D portrait photographs can be found at https://www.pedia-study.org/documents. Through technical and organizational measures (privacy by design), we process the photos and the data obtained from them in the least identifiable manner necessary for achieving the purpose. This respects the data minimization principle of data being adequate, relevant, and limited.

In addition to the PEDIA data set, we analyzed a subset of the DeepGestalt study. By removing disorders that are confirmed by tests other than exome sequencing, such as Down syndrome (Supplementary Table 2), we ended up with 260 of 329 cases from the DeepGestalt set.5

The facial images were analyzed with DeepGestalt, a deep convolutional neural network trained on more than 17,000 patient images.5 The results of this analysis are gestalt scores that quantify the similarity to 216 different rare phenotypes per individual. These vectors can also be used to identify duplicates in the DeepGestalt training set and test set without the need to access the original photos. To avoid overfitting, we excluded all cases of the PEDIA cohort from a DeepGestalt model that we used for benchmarking. It is noteworthy that the version of DeepGestalt available at Face2Gene will not yield the same results when photos of the PEDIA cohort are reanalyzed because it is built as a framework that aims to learn from every solved case.

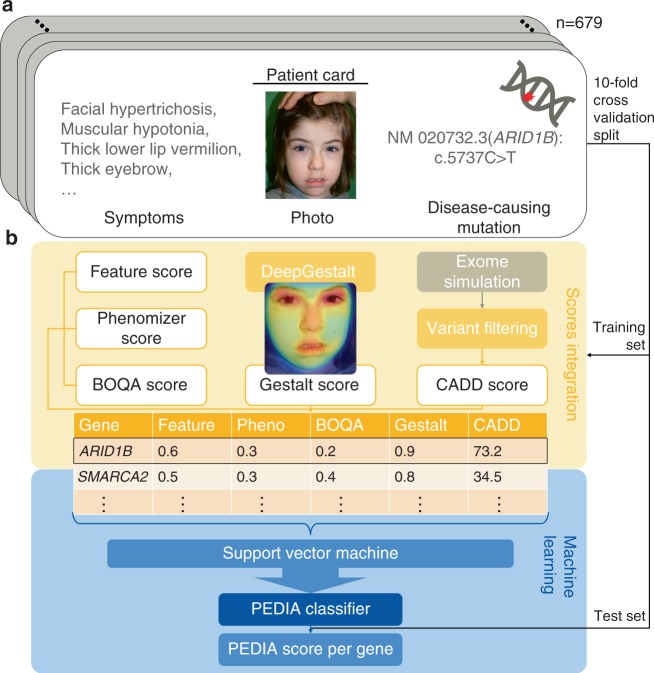

In addition to the image analysis, we performed semantic similarity searches with the annotated HPO terms by three different tools: Feature Match (FDNA), Phenomizer, and Bayesian Ontology Querying for Accurate Comparisons (BOQA).8,9 HPO terms for all published cases as well as the clinical notes in the electronic health records were independently extracted by two data curators. All terms that did not occur in both lists were revisited by a third curator (see Fig. 1a and Supplementary Table 1). The similarity scores from image analysis as well as semantic similarity searches were mapped to genes by mim2gene and morbidmap from OMIM.10 If there were several syndromes linked to a gene, the highest gestalt and feature scores were selected for this gene.

Fig. 1.

Prioritization of exome data by image analysis (PEDIA): cohort and classification approach. (a) Clinical features, facial photograph, and pathogenic variant of one individual of the PEDIA cohort. In total the cohort consists of 679 cases with monogenic disorders that are suitable for a diagnostic workup by exome sequencing. (b) Clinical features, images, and exome variants were evaluated separately and integrated to a single score by a machine learning approach. The disease-causing gene is shown at the top of the list.

Exome sequencing data was not available for the vast majority of cases. Therefore, we spiked in the disease-causing variant of each case into randomly selected exomes of healthy individuals of different ethnicities from the 1000 Genomes Project.11 All sequence variants were then filtered as described by Wright et al. and scored for deleteriousness with CADD.12,13 Per gene, the variant with the highest CADD score was used, regardless of the genotype. This heuristic was chosen to maximize the sensitivity also for compound heterozygous cases where the second hit in a recessive disease gene achieves only a relatively low CADD score.

For each case this procedure resulted in a table with rows for genes and the five different scores in the columns (Fig. 1b). All five scores per line as well as the Boolean label disease gene “true” or “false” (i.e., the vector) were used to train a classifier that yields a single value per gene, the PEDIA score, that can be used for prioritization (Fig. 1b). A detailed description of preprocessing and filtering, as well as all the annotated data, can be found in our code repository.

We used a support vector machine (SVM) to prioritize the genes based on the five scores for each case. To benchmark our approach, we performed tenfold cross-validation. First, we split the PEDIA cohort into ten groups, ensuring that a certain disease gene was included only in one of ten groups. By this means, we avoided overfitting, in case the same disease-causing variant occurred in two different individuals (Supplementary Fig. 1). We used a linear kernel on the five scores to train the SVM and selected the hyperparameter C in the range from 2−6 to 212 by performing internal fivefold cross-validation on the training set. The C with the highest top 1 accuracy was selected for training a linear SVM. We further benchmarked the performance of each case in the test set with this model. The distance of each gene to the hyperplane—defined as the PEDIA score—was used to rank the genes for the case. If the disease-causing gene was at the first position, we called it a top 1 match, or if it was among the first ten genes, we considered it a top 10 match.

For the 260 cases from the DeepGestalt publication test set, where exome diagnostics would be applicable, we randomly selected cases from the PEDIA cohort with the same diagnosis and added the CADD and the feature scores per case (see column C in Supplemental Table 1). The cases in the PEDIA cohort with the same pathogenic variant as already assigned to the DeepGestalt test set were removed from the training set. Then we trained the classifier on the PEDIA cohort and tested it on the DeepGestalt publication test set. The experiment was repeated ten times with random selection. By this means we studied how the publicly available portraits of the DeepGestalt test set would improve the performance when used in exome analysis with the PEDIA approach. However, it has to be emphasized that both approaches solve different multiclass classification problems (MCPs), the first tool operating on phenotypes and the second on genes. The difficulty of the task is not only characterized by the number of classes and the distinguishability of the different entities but also by the information available for the classification. For both MCPs the maximum number of classes can be estimated from OMIM by querying with the HPO term “abnormal facial shape”, yielding around 700 disorders and genes with disease-causing variants. As there is additional and nonredundant information available from the molecular level for PEDIA, it achieves better top 1 and top 10 accuracies.

Code availability

All training data as well as the classifier are available at https://github.com/PEDIA-Charite/PEDIA-workflow. The trained PEDIA model is provided as a service that is ready to use at https://pedia-study.org.

RESULTS

The performance of a prioritization tool can be assessed by the proportion of cases for which the correct diagnosis or disease gene is placed at the first position or among the first ten suggestions (top 1 and top 10 accuracy). The composition of the test set has an influence on the accuracy because some disease phenotypes are easier to recognize, and some gene variants are more readily identified as deleterious. The setup of the PEDIA cohort, which is comprehensively documented in the Supplementary Appendix, therefore aims at emulating the whole spectrum of cases that could be analyzed with DeepGestalt and diagnosed by exome sequencing.

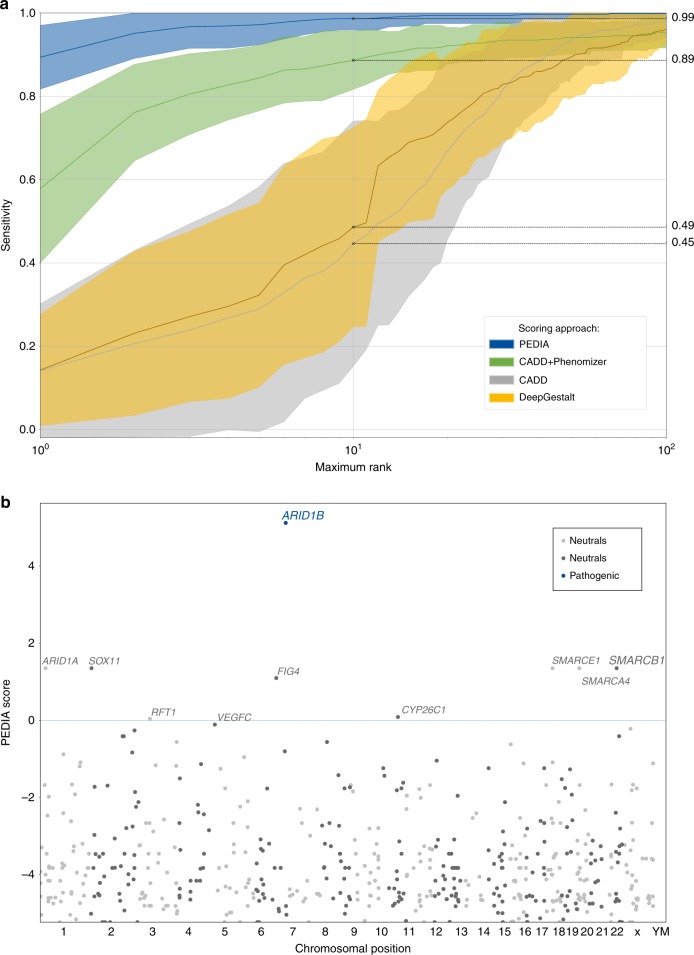

When only CADD scores are used for variant ranking, the disease-causing gene is in the top 10 in less than 45% of all tested cases. The top 10 accuracy increases up to 63–94%, when different semantic similarity scores based on HPO feature annotations are included (Supplementary Table 3).

The additional information from frontal photos of cases pushes the correct disease gene to the top 10 in 99% of all PEDIA cases (Fig. 2a). Particularly striking is the performance gain for the top 1 accuracy rate from 36–74% without DeepGestalt scores to 86–89% including the scores from image analysis (Supplementary Table 3).

Fig. 2.

Performance readout and visualization of test results for a representative prioritization of exome data by image analysis (PEDIA) case. (a) For each case the exome variants are ordered according to four different scoring approaches, solely by a molecular deleteriousness score (CADD), by a score from image analysis (DeepGestalt), by a combination of a molecular deleteriousness score and a clinical feature–based semantic similarity score (CADD+Phenomizer), or the PEDIA score that includes all three levels of evidence. The sensitivity of the prioritization approach depends on the number of genes that are considered in an ordered list. The top 1 and top 10 accuracy rates correspond to the intersection of the curves at maximum rank 1 and 10. Note that for benchmarking DeepGestalt on the gene level, syndrome similarity scores first have to be mapped to the gene level, resulting in a lower performance compared with the readout on a phenotype level, due to heterogeneity. The area under the curve is largest for PEDIA scoring. (b) The disease-causing gene of the case depicted in Fig. 1 achieves the highest PEDIA score and molecularly confirms the diagnosis of Coffin–Siris syndrome. Other genes associated with similar phenotypes, such as Nicolaides–Baraitser syndrome, also achieved high scores for gestalt but not for variant deleteriousness.

The distribution of the PEDIA scores does not differ using exomes with different ethnic backgrounds (Supplementary Fig. 2).

Although the top 10 accuracies of DeepGestalt scoring on the phenotype level and PEDIA scoring on the gene level cannot be compared directly, both approaches operate on a similar number of classes (Fig. 2). Adding suitable molecular information to 260 cases from the DeepGestalt publication test set confirms our results in the PEDIA cohort by achieving a top 10 accuracy rate of 99% (Supplementary Table 2).

The value of a frontal photograph is demonstrated by a case with Coffin–Siris syndrome (shown in Fig. 1): the characteristic facial features are relatively mild, so the correct diagnosis is only listed as the third suggestion by DeepGestalt. Among all the variants encountered in the exome, the disease-causing gene ARID1B would only achieve rank 27, if scored by the molecular information alone. However, combined with the phenotypic information, the PEDIA approach lists this gene as the first candidate (Fig. 2b).

Although the diagnosis of the illustrated case could be molecularly confirmed by a directed single-gene test in other instances where the facial gestalt is more indicative, syndromic disorders often puzzle clinicians due to their high phenotypic variability. In the Deciphering Developmental Disorders (DDD) project many syndromes were diagnosed only after exome sequencing.14 Still, the top 10 accuracy rate of 49% that DeepGestalt can achieve for phenotypes linked to genes is impressive (Fig. 2a). The contribution from the different sources of evidence to the PEDIA score is also reflected by the relative weight of the deleteriousness of the pathogenic variant (0.44), all feature-based scores combined (0.25), and the results from image analysis by DeepGestalt (0.31) that can be derived from a linear SVM model. The information contained in a frontal photograph of a patient therefore goes beyond what clinical terms can capture. The top 1 and top 10 accuracies are reported for all combinations of scores in the Supplementary Table 3.

DISCUSSION

The guidelines for variant classification in the laboratory follow a qualitative heuristic that combines distinct types of evidence (functional, population, phenotype, etc.). Interestingly, it is also compatible with Bayesian statistics7 and the advantage of such a framework is that continuous evidence types can be integrated into the classification system. While in silico predictions about a variant’s pathogenicity have a relatively long history in bioinformatics and machine learning, the quantification of phenotypic raw data such as facial images with artificial intelligence systems has just begun: the PEDIA approach uses scores from DeepGestalt for gene prioritization in combination with quantitative scores from the molecular level in Mendelian disorders identifiable by exome sequencing.

Interestingly, the ethnicity, which affects the number of variant calls or the deleterious variant load, had minor influence on the performance of PEDIA. Although the total number of variants detected by reference-guided sequencing in individuals of African descent is considerably higher than in individuals of European or Asian descent, the distribution of the CADD scores for rare variants is comparable (Supplementary Figs. 3, 4). That means the rank that a gene achieves due to the molecular score and the corresponding scores from the phenotypic information is hardly affected by the background population (Supplementary Fig. 2).

With regard to the routine use in the laboratory we have learned three important lessons from specific subgroups or cases achieving lower PEDIA ranks:

1. Although DeepGestalt, the convolutional neural network used for image analysis, has been pretrained on real-world uncontrolled 2D images, patient photographs that were not frontal, of low resolution, had poor lightening and contrast, or contained artifacts such as glasses, yielded lower gestalt scores for the searched disorder. In one use case envisioned for PEDIA, the human expert in the lab will only receive the similarity scores from DeepGestalt, but not the original photograph. In this setting it is not clear whether low scores originate from a low-quality photograph or whether there is little dysmorphic signal indicative of a syndromic disorder. This potential problem could be addressed by providing gestalt scores from additional photographs.

2. Particularly rare diseases or recently described disorders, for which the classifier’s representation is based on a smaller training set, show a lower performance, even if experienced dysmorphologists would consider them highly distinguishable. In a recent publication by Duddin-Byth et al. the machine learning approach showed the lowest accuracy for the disorder with the smallest number of training cases; however, so did humans.15

3. Disease-causing variants in genes that interact in a molecular pathway often result in highly similar phenotypes that are organized as series in OMIM and modeled as a single entity by DeepGestalt. Often there are subtle gene-specific differences in the gestalt and modeling the entire phenotypic series by a single class is not the theoretical optimum achievable with more cases.16,17 This will especially diminish the performance of genes less frequently mutated in a molecular pathway. This is exemplified in the PEDIA cohort by Hyperphosphatasia with Mental Retardation Syndrome (HPMRS), where the least frequently mutated gene, PGAP2, shows the lowest performance. Likewise, this applies to microdeletion syndromes that can also be caused by pathogenic variants in single genes, such as Smith–Magenis syndrome, or an atypical clinical presentation with Kabuki syndrome (see e.g., case IDs 246245 and 204233 in Supplementary Table 1).18

It is noteworthy that these shortcomings are mainly due to the limited training data for these particular genes and that they will most likely be overcome by more molecularly confirmed cases. DeepGestalt and PEDIA are therefore built as frameworks that will be improved continuously with additional data. In general, the use of artificial intelligence in medical sciences raises new or exacerbates existing ethical and legal issues as repositories of combined genotype and phenotype data become crucial for the machine learning community.19,20 Sharing portrait photos of individuals with rare diseases can be accomplished within the scope of even the most elaborate data privacy laws, such as the European Union General Data Protection Regulation 2016/679 (GDPR). The GDPR not only ensures the protection of individuals, but also the free movement of personal data, inter alia, for scientific research purposes.21

The interpretation of genetic variants is greatly facilitated by sequencing additional family members. Analogously, we hypothesize that the signal-to-noise ratio of next-generation phenotyping technologies can further be improved by including unaffected siblings or parents in the analysis.

We include and strive to include a wide variety of ethnicities, but European backgrounds are currently best represented, leading to best performance for this population. As the data set expands further, the algorithm will improve for currently underrepresented ethnicities.

Assistance with diagnosis of rare genetic disorders is highly valuable to clinicians, and by extension to the patients themselves and their families. Especially in inconclusive cases with findings of unknown clinical significance, additional evidence from computer-assisted analysis of medical imaging data could be a decisive factor.13

In conclusion, the PEDIA study documents that exome variant interpretation benefits from computer-assisted image analysis of facial photographs. By including similarity scores from DeepGestalt, we improved the top 10 accuracy rate significantly compared with state-of-the-art algorithms. Artificial intelligence–driven pattern recognition of frontal facial patient photographs is therefore an example of next-generation phenotyping technology that has proven its clinical value for the interpretation of next-generation sequencing data.22

Supplementary information

Acknowledgements

This work was funded by the Deutsche Forschungsgemeinschaft (KR 3985/7-3, KR 3985/6-1). M.A.M. is participant in the BIH Charité Junior Clinician Scientist Program funded by the Charité–Universitätsmedizin Berlin and the Berlin Institute of Health.

Disclosure

P.M.K. and K.W.G. receive compensation as consultants for FDNA Inc. H.D.E., Y.H., G.N., O. Bar, O.S., Y.G., N.F. are employees of FDNA; T.K. is an employee of GeneTalk GmbH. The other authors declare no conflicts of interest.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Tzung-Chien Hsieh, Martin A. Mensah

Supplementary information

The online version of this article (10.1038/s41436-019-0566-2) contains supplementary material, which is available to authorized users.

References

- 1.Biesecker LG, Green RC. Diagnostic clinical genome and exome sequencing. N Engl J Med. 2014;371:1170. doi: 10.1056/NEJMc1409040. [DOI] [PubMed] [Google Scholar]

- 2.Pengelly RJ, et al. Evaluating phenotype-driven approaches for genetic diagnoses from exomes in a clinical setting. Sci Rep. 2017;7:13509. doi: 10.1038/s41598-017-13841-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Robinson PN, et al. The Human Phenotype Ontology: a tool for annotating and analyzing human hereditary disease. Am J Hum Genet. 2008;83:610–615. doi: 10.1016/j.ajhg.2008.09.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Ferry Q, et al. Diagnostically relevant facial gestalt information from ordinary photos. eLife. 2014;3:e02020. doi: 10.7554/eLife.02020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Gurovich Y, et al. DeepGestalt—identifying rare genetic syndromes using deep learning. Nat Med. 2019;25:60–64. doi: 10.1038/s41591-018-0279-0. [DOI] [PubMed] [Google Scholar]

- 6.Richards S, et al. Standards and guidelines for the interpretation of sequence variants: a joint consensus recommendation of the American College of Medical Genetics and Genomics and the Association for Molecular Pathology. Genet Med. 2015;17:405–424. doi: 10.1038/gim.2015.30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Tavtigian SV, et al. Modeling the ACMG/AMP variant classification guidelines as a Bayesian classification framework. Genet Med. 2018;20:1054–1060. doi: 10.1038/gim.2017.210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Köhler S, et al. Clinical diagnostics in human genetics with semantic similarity searches in ontologies. Am J Hum Genet. 2009;85:457–464. doi: 10.1016/j.ajhg.2009.09.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bauer S, Köhler S, Schulz MH, Robinson PN. Bayesian ontology querying for accurate and noise-tolerant semantic searches. Bioinformatics. 2012;28:2502–2508. doi: 10.1093/bioinformatics/bts471. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Online Mendelian Inheritance in Man (OMIM). Baltimore, MD: McKusick-Nathans Institute of Genetic Medicine, Johns Hopkins Universrity (Baltimore, MD); 2019. https://omim.org/.

- 11.The 1000 Genomes Project Consortium. A global reference for human genetic variation. Nature. 2015;526:68. doi: 10.1038/nature15393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Kircher M, et al. A general framework for estimating the relative pathogenicity of human genetic variants. Nat Genet. 2014;46:310–315. doi: 10.1038/ng.2892. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Wright CF, et al. Making new genetic diagnoses with old data: iterative reanalysis and reporting from genome-wide data in 1,133 families with developmental disorders. Genet Med. 2018;20:1216–1223. doi: 10.1038/gim.2017.246. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Deciphering Developmental Disorders Study. Prevalence and architecture of de novo mutations in developmental disorders. Nature. 2017;542:433–438. doi: 10.1038/nature21062. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Dudding-Byth T, et al. Computer face-matching technology using two-dimensional photographs accurately matches the facial gestalt of unrelated individuals with the same syndromic form of intellectual disability. BMC Biotechnol. 2017;17:90. doi: 10.1186/s12896-017-0410-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Pantel JT, et al. Advances in computer-assisted syndrome recognition by the example of inborn errors of metabolism. J Inherit Metab Dis. 2018;41:533–539. doi: 10.1007/s10545-018-0174-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Knaus A, et al. Characterization of glycosylphosphatidylinositol biosynthesis defects by clinical features, flow cytometry, and automated image analysis. Genome Med. 2018;10:3. doi: 10.1186/s13073-017-0510-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Badalato L, et al. KMT2D p.Gln3575His segregating in a family with autosomal dominant choanal atresia strengthens the Kabuki/CHARGE connection. Am J Med Genet A. 2017;173:183–189. doi: 10.1002/ajmg.a.38010. [DOI] [PubMed] [Google Scholar]

- 19.Hallowell DPH, et al. Big data phenotyping in rare diseases: some ethical issues. Genet Med. 2019;21:272–274. doi: 10.1038/s41436-018-0067-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Mascalzoni D, et al. Are requirements to deposit data in research repositories compatible with the European Union’s General Data Protection Regulation? Ann Intern Med. 2019;170:332–334. doi: 10.7326/M18-2854. [DOI] [PubMed] [Google Scholar]

- 21.Bentzen HB, Høstmælingen N. Balancing protection and free movement of personal data: the new European Union General Data Protection Regulation. Ann Intern Med. 2019;170:335–337. doi: 10.7326/M18-2782. [DOI] [PubMed] [Google Scholar]

- 22.Hennekam R, Biesecker LG. Next-generation sequencing demands next-generation phenotyping. Hum Mutat. 2012;33:884–886. doi: 10.1002/humu.22048. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.