Summary

Adaptive decision-making in dynamic environments requires multiple reinforcement-learning steps that may be implemented by dissociable neural circuits. Here we used a novel directionally-specific viral ablation approach to investigate the function of several anatomically-defined orbitofrontal cortex (OFC) circuits during adaptive, flexible decision-making in rats trained on a probabilistic reversal learning task. Ablation of OFC neurons projecting to the nucleus accumbens selectively disrupted performance following a reversal, by disrupting the use of negative outcomes to guide subsequent choices. Ablation of amygdala neurons projecting to the OFC also impaired reversal performance, but due to disruptions in the use of positive outcomes to guide subsequent choices. Ablation of OFC neurons projecting to the amygdala, by contrast, enhanced reversal performance by destabilizing action values. Our data are inconsistent with a unitary function of the OFC in decision making. Rather, distinct OFC-amygdala-striatal circuits mediate distinct components of the action-value updating and maintenance necessary for decision making.

Keywords: decision-making, reinforcement learning, orbitofrontal cortex, amygdala, nucleus accumbens

eTOC Blurb

The orbitofrontal cortex (OFC) plays a critical role in guiding decisions in dynamic environments. In this issue of Neuron, Groman et al. (2019) used a directionally-specific viral ablation approach to demonstrate that OFC circuits encode separable reinforcement-learning processes that guide decisions.

Introduction

Action values that guide choices are estimated by the aggregation of multiple computational steps. For example, action values might be updated differently depending on whether that action was performed or not (Lee et al., 2005; Ito and Doya, 2009; Abe and Lee, 2011), and whether the outcome of a chosen action was appetitive or aversive (Donahue et al., 2013; Donahue and Lee, 2015). Reinforcement-learning algorithms can quantify the degree to which these individual computational steps influence choice (Dayan and Daw, 2008; Rangel et al., 2008; Lee, 2013). Previous studies have linked neural activity in the orbitofrontal cortex (OFC) to reward learning (Rolls et al., 1996; Tremblay and Schultz, 1999; O’Doherty et al., 2001; Wallis and Miller, 2003) and to specific reinforcement-learning parameters (Kennerley and Wallis, 2009; Sul et al., 2010; Abe and Lee, 2011; Stalnaker et al., 2014; Nogueira et al., 2017; Saez et al., 2017; Massi et al., 2018), suggesting that the OFC may contribute to multiple aspects of reinforcement learning (Wallis, 2007; Lee et al., 2012). This multiplicity might reflect the diverse reciprocal connections that exist between the OFC and other cortical and subcortical regions (Haber et al., 1995; Cavada et al., 2000). Distinct OFC circuits may implement different computational steps in reinforcement learning that are integrated to generate appropriate motor responses (Frank and Claus, 2006). Nevertheless, the contribution of individual OFC circuits to reinforcement learning has been difficult to analyze rigorously.

To address this question, we assessed the ability of rats to acquire and reverse preferences for probabilistically reinforced spatial locations before and after directionally-specific ablation of OFC circuits. We focused on three key OFC circuits: OFC neurons projecting to the nucleus accumbens (OFC→NAcc), OFC neurons projecting to the amygdala (OFC→Amygdala) and amygdala neurons projecting to the OFC (Amygdala→OFC). Ablation of the OFC→NAcc and Amygdala→OFC circuits disrupted the ability of rats to adjust their performance following a reversal. Further analysis revealed that OFC→NAcc circuit ablation disrupted the ability of rats to use negative outcomes effectively in guiding choices, while Amygdala→OFC circuit ablation disrupted the ability of rats to use positive outcomes. In contrast, ablation of the OFC→Amygdala circuit improved reversal performance, by facilitating the decay of values for unchosen options. These data demonstrate that anatomically defined OFC circuits encode distinct processes that are integrated during reinforcement learning to implement appropriate computations for value-based decision making.

Results

OFC circuit manipulations alter decision making

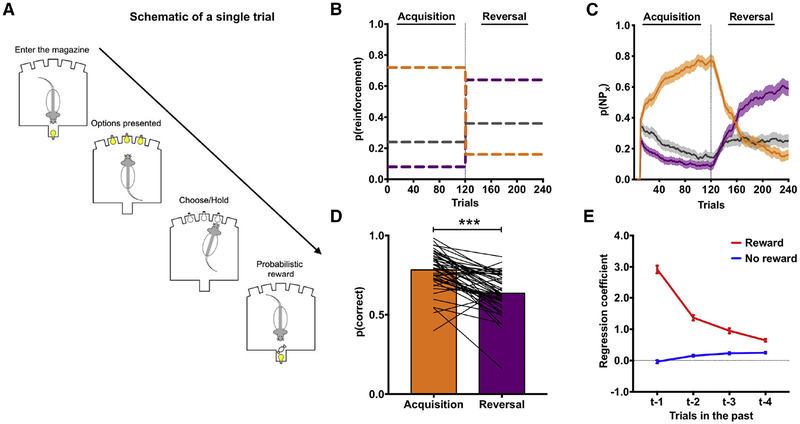

Decision making was assessed in adult, male rats (N=60) on a ‘three-armed bandit’ probabilistic reversal-learning (PRL) task using stochastic reward schedules (Groman et al., 2016; Figure 1A). On each trial, three noseport apertures were illuminated, with one of the apertures being associated with a higher probability of delivering reward (72%) than the other two apertures (24% and 8%). Reinforcement probabilities were pseudo-randomly assigned to the noseports at the start of each session for individual rats. Rats could make a single choice on each trial by making a nosepoke into one of the ports. Through trial-and-error, rats learned which of the ports was most often rewarded. After completing 120 trials (referred to as the acquisition phase), the probability of reward delivery changed (72%→16%; 8%→64%; 24%→36%), and rats completed 120 additional trials under this new reward schedule (referred to as the reversal phase; Figure 1B). Rats were able to track these dynamic reinforcement probabilities (Figure 1C) and, as expected, chose the most frequently reinforced option significantly less often following a change in reward probabilities compared to their performance in the acquisition phase (Figure 1D). Moreover, choice behavior of rats was influenced by previous choices and outcomes (Figure 1E).
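The reward schedule described above can be illustrated with a minimal simulation sketch. The port labels (`A`, `B`, `C`), the function names, and the win-stay/lose-shift policy are hypothetical and serve only to exercise the schedule; they are not the task software or a model of rat behavior. Only the probabilities and the 120-trial phase length come from the text.

```python
import random

PORTS = ("A", "B", "C")  # arbitrary labels; the real assignment was pseudo-random
# Reward probabilities from the text: 72%, 24% and 8% during acquisition.
ACQUISITION = {"A": 0.72, "B": 0.24, "C": 0.08}
# After 120 trials: 72%->16%, 24%->36%, 8%->64%.
REVERSAL = {"A": 0.16, "B": 0.36, "C": 0.64}
TRIALS_PER_PHASE = 120


def reward_probability(trial_index, port):
    """Programmed reward probability for `port` on a 0-based trial index."""
    schedule = ACQUISITION if trial_index < TRIALS_PER_PHASE else REVERSAL
    return schedule[port]


def run_session(choose, rng):
    """Simulate acquisition plus reversal; `choose` maps the history to a port."""
    history = []  # list of (port, rewarded) tuples
    for t in range(2 * TRIALS_PER_PHASE):
        port = choose(history)
        rewarded = rng.random() < reward_probability(t, port)
        history.append((port, rewarded))
    return history


def make_win_stay_lose_shift(rng):
    """Hypothetical placeholder policy: repeat after reward, otherwise switch."""
    def choose(history):
        if not history:
            return rng.choice(PORTS)
        last_port, last_rewarded = history[-1]
        if last_rewarded:
            return last_port
        return rng.choice([p for p in PORTS if p != last_port])
    return choose


if __name__ == "__main__":
    rng = random.Random(0)
    session = run_session(make_win_stay_lose_shift(rng), rng)
    best = max(REVERSAL, key=REVERSAL.get)
    late = session[TRIALS_PER_PHASE:]
    print(sum(port == best for port, _ in late) / len(late))
```

Note that the port that was worst during acquisition (8%) becomes the best option after the reversal (64%), so a correct reversal requires choosing a previously unattractive port.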

Figure 1:

The probabilistic reversal learning (PRL) task. (A) A diagram outlining the events of a single trial in the PRL. (B) The probability of reinforcement for each port during acquisition and reversal phases. (C) The choice probability of rats in the PRL, plotted as a 10-trial moving average. Rats were able to acquire (orange line) and reverse (purple line) their choices. Line colors denote the reinforcement probabilities of three noseports shown in (B). (D) The probability of choosing the most frequently reinforced option was significantly lower following the reversal (χ2=48.41; p<0.001). (E) Regression coefficients from a logistic regression model predicting current trial choice based on previous trial choice and whether that choice was rewarded (red line) or unrewarded (blue line).

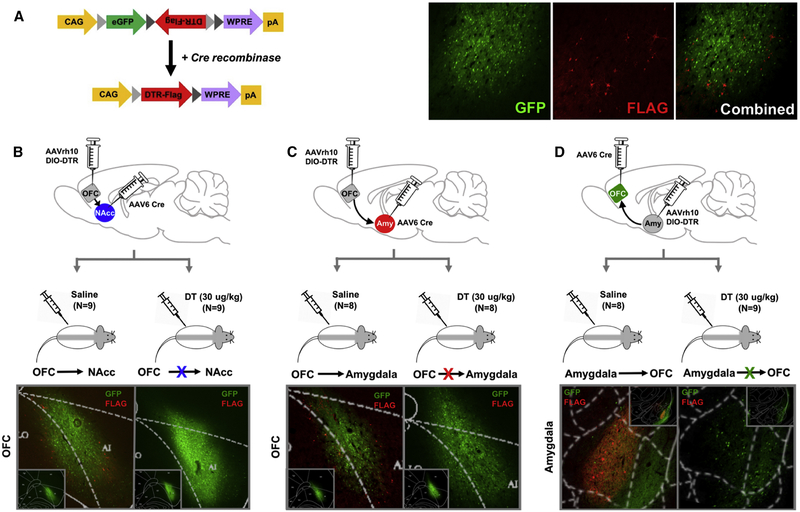

We combined a floxed diphtheria toxin receptor (DTR) construct with a retrogradely transported AAV6.2-Cre virus to express DTR and ultimately to ablate defined neuronal circuits (Figure 2A). The AAVrh10-EGFP.DTR.FLAG virus was injected into the OFC (N=40), targeting the dorso-lateral portion of the OFC that extends posteriorly to the agranular insula, or into the amygdala (N=20) and the AAV6.2-Cre into the OFC (N=20), NAcc (N=20), or amygdala (N=20), as shown in Supplemental Figure 1–2. This resulted in three groups: 1) rats in which expression of DTR was confined to OFC neurons projecting to NAcc (N=18; OFC→NAcc; Figure 2B), 2) rats in which expression of DTR was confined to OFC neurons projecting to the amygdala (N=17; OFC→Amygdala; Figure 2C) and 3) rats in which expression of DTR was confined to amygdala neurons projecting to the OFC (N=17; Amygdala→OFC; Figure 2D). Since rodents do not endogenously express DTR, only the targeted circuit is ablated following systemic injection of diphtheria toxin (DT; Xu et al., 2015). All rats expressed DTR in anatomically defined circuits; half of the rats in each group received an injection of DT (30 µg/kg; i.p.; N=26), while the other half received saline (i.p.; N=25) and served as the comparison group. Rats remained undisturbed for 72 h following toxin administration to allow for neuronal apoptosis (Keistler et al., 2017). Ablation was confirmed using immunohistochemistry and the number of DTR-positive neurons was quantified (see Supplemental Figure 2D).

Figure 2:

Targeted ablation of directionally specific OFC circuits. (A) Map of the AAVrh10-EGFP.DTR.FLAG (AAVrh10 DIO-DTR) vector. CAG, CMV early enhancer/chicken β-actin promoter; eGFP, enhanced green fluorescent protein; DTR, diphtheria toxin receptor; WPRE, woodchuck hepatitis virus posttranscriptional regulatory element. Representative micrographs of GFP (green) and FLAG (red) expression, as well as the combined image. The AAVrh10 DIO-DTR virus was injected into the OFC (Panels B and C) or amygdala (Panel D) and AAV6.2-Cre was injected into the NAcc (Panel B), amygdala (Panel C) or OFC (Panel D). Following 4–6 weeks for viral transfection, rats received either saline (N=9) or diphtheria toxin (DT; N=9) and remained undisturbed for 72 h. Tissue was collected upon completion of the behavioral assessments and stained for FLAG (red), GFP (green) and Cre (data shown in Figure S1) using immunohistochemistry. Representative micrographs from each experimental group are presented in the bottom panels and overlaid by a rat brain atlas. Inset panels display the AAVrh10 viral placement at a macrolevel in the OFC (B,C) and amygdala (D). Related to Supplemental Figures 1 and 2.

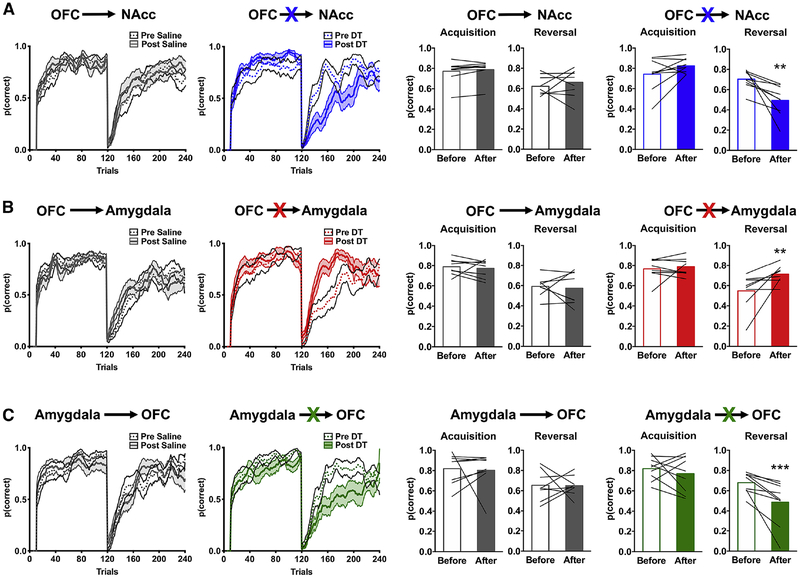

Decision making was re-assessed on the PRL task and the number of trials in which rats chose the most frequently reinforced option during the acquisition and reversal phase was quantified (Figure 3; see also Supplemental Figure 3). Logistic regression within a generalized estimating equation framework revealed a significant four-way interaction [task phase (acquisition vs. reversal) × brain circuit (Amygdala→OFC, OFC→Amygdala or OFC→NAcc) × toxin (DT vs. saline) × time (before vs. after toxin): χ2=17.46; p=0.001]. Although the number of correct choices rats made in the acquisition phase was not differentially altered following toxin administration (brain circuit × time × toxin: χ2=0.21; p=0.65), the number of correct choices rats made in the reversal phase was (brain circuit × time × toxin: χ2=15.59; p<0.001; Figure 3; Supplemental Figure 3).

Figure 3:

Ablation of directionally specific OFC circuits disrupts reversal learning performance. The probability of choosing correct noseports, p(correct), before (solid line) and after (dotted line) administration of saline (left) or DT (right) is shown as a 10-trial moving average for rats in which the OFC→NAcc (A), OFC→Amygdala (B), or Amygdala→OFC (C) circuit was targeted. The right bar graphs show the average p(correct) for the acquisition and reversal phase before (open bars) and after (closed bars) administration of saline (gray bars) or DT (colored bars). Values plotted are thin lines representing individual rats and averages ± SEM. ** p<0.01; *** p<0.001 denotes that values after DT administration were different from values before DT administration. Related to Supplemental Figures 3 and 4.

Compared to the performance of the control animals, ablation of OFC neurons that project to the NAcc decreased the number of correct choices after reversal (toxin × time: χ2=10.22; p=0.001; DT: time: χ2=14.09; p<0.001; Saline: time: χ2=0.43; p=0.51; Figure 3A). An analysis of error type for choices following the reversal revealed that the number of trials in which rats chose the port previously associated with the highest probability of reinforcement in the acquisition phase (i.e., perseverative choice) and the port that was associated with the intermediate probability of reinforcement (i.e., intermediate choice) was increased following administration of DT (Supplemental Figure 4).
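The error-type analysis can be sketched as a simple classification of reversal-phase choices. The function names and port labels below are hypothetical; the category definitions follow the text (a perseverative choice is the port that was best during acquisition, an intermediate choice is the port with the intermediate reinforcement probability).

```python
from collections import Counter


def classify_reversal_choice(port, best_acquisition_port, best_reversal_port):
    """Label a single reversal-phase choice as correct or as one of two error types."""
    if port == best_reversal_port:
        return "correct"
    if port == best_acquisition_port:
        return "perseverative"  # previously the most frequently rewarded port
    return "intermediate"       # the remaining, intermediate-probability port


def error_counts(reversal_choices, best_acquisition_port, best_reversal_port):
    """Count each choice category across a sequence of reversal-phase choices."""
    return Counter(
        classify_reversal_choice(p, best_acquisition_port, best_reversal_port)
        for p in reversal_choices
    )


if __name__ == "__main__":
    print(error_counts(["C", "A", "B", "A", "C"], "A", "C"))
```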

By contrast, ablation of OFC neurons that project to the amygdala increased the number of correct choices after reversal (toxin × time: χ2=5.78; p=0.02; DT: time: χ2=8.39; p=0.004; Saline: time: χ2=0.12; p=0.73; Figure 3B) and decreased the number of perseverative choices (Supplemental Figure 4), without significantly altering the number of intermediate choices. Similar to the effect of ablating OFC→NAcc circuit, ablation of amygdala neurons that project to the OFC decreased the number of correct choices after reversal (toxin × time: χ2=4.39; p=0.03; DT: time: χ2=13.49; p<0.001; Saline: time: χ2=0.007; p=0.93; Figure 3C) and increased the number of intermediate choices (Supplemental Figure 4) but did not significantly alter the number of perseverative choices.

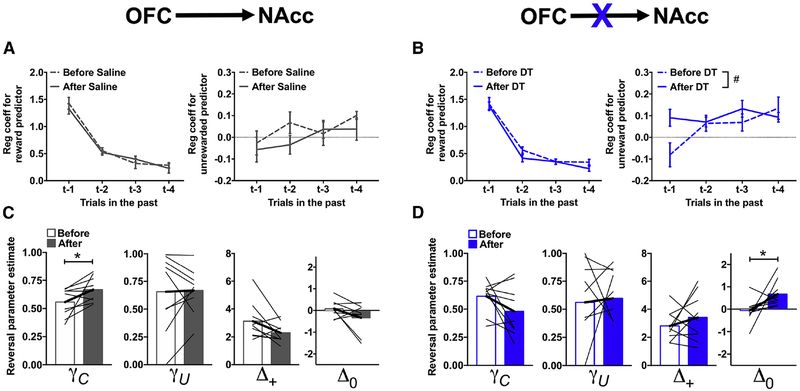

Ablation of the OFC→NAcc circuit mutes negative feedback

To characterize the decision-making processes disrupted in rats following ablation of the OFC→NAcc circuit in more detail, choice behavior was analyzed using a logistic regression. In order to obtain a sufficient number of trials, choice behavior in the acquisition and reversal phase was analyzed collectively. This model quantifies the degree to which choices and outcomes (rewarded or unrewarded) on the previous four trials influence current choice (Groman et al., 2018). Regression coefficients estimate the change in the likelihood of repeating the same choice relative to an arbitrary baseline. Before toxin or saline administration, rats were more likely to stay when a previous choice was rewarded compared to an unrewarded choice and the influence of outcomes on current choice decreased across trial-lag (p<0.002), as shown in Figure 4A–B. We then examined the impact of DT/saline administration on decision making. Although the four-way interaction between toxin × time × outcome-type × trial-lag was not significant (χ2=1.54; p=0.67), the three-way interaction between toxin × time × outcome-type was (χ2=5.36; p=0.02). Administration of DT did not alter the likelihood that rats would repeat the same previously rewarded choice (χ2=0.93; p=0.34) but altered the likelihood that rats would switch after an unrewarded choice (χ2=10.12; p=0.001; Figure 4B). Post-hoc analyses suggested that there might be an increase in the likelihood that rats would persist with the same unrewarded choice following administration of DT (main effect of time: χ2=3.44; p=0.06), suggesting that ablation of the OFC→NAcc circuit disrupted the ability of rats to use negative outcomes appropriately in guiding their decisions (Figure 4B, right).
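One way to set up the lagged predictors for such a regression is sketched below. The coding scheme is an assumption made for illustration (one indicator per lag for "this port was chosen and rewarded" and one for "this port was chosen and unrewarded"); the exact specification is given in Groman et al. (2018) and is not reproduced here. The function name `lagged_predictors` is hypothetical, and the sketch only builds the design rows without fitting the model.

```python
def lagged_predictors(history, port, n_lags=4):
    """Build (outcome, rewarded_lags, unrewarded_lags) rows for one port.

    `history` is a list of (chosen_port, rewarded) tuples in trial order.
    For each trial with at least `n_lags` predecessors, the outcome is 1 if
    `port` was chosen. Entry k of each predictor list refers to lag k+1.
    """
    rows = []
    for t in range(n_lags, len(history)):
        chose_port = int(history[t][0] == port)
        rewarded, unrewarded = [], []
        for lag in range(1, n_lags + 1):
            prev_port, prev_rewarded = history[t - lag]
            same_port = prev_port == port
            rewarded.append(int(same_port and prev_rewarded))
            unrewarded.append(int(same_port and not prev_rewarded))
        rows.append((chose_port, rewarded, unrewarded))
    return rows


if __name__ == "__main__":
    toy = [("A", True), ("A", False), ("B", True), ("A", True), ("A", False)]
    for row in lagged_predictors(toy, "A"):
        print(row)
```

Under this coding, a positive coefficient on a rewarded-lag predictor means that a rewarded choice of a port at that lag raises the odds of choosing the same port now, which matches the "likelihood of repeating the same choice" interpretation in the text.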

Figure 4:

Ablation of the OFC→NAcc circuit disrupts action-value updating following unrewarded outcomes. (A-B) Regression coefficients from the logistic regression model examining the likelihood of persisting with the same choice based on whether the same choice in the last four trials was rewarded (left) or unrewarded (right) in rats where the OFC→NAcc pathway was targeted before (solid line) and after (dashed line) administration of saline (A) or DT (B). (C-D) Reinforcement learning parameters for the reversal phase in rats where the OFC→NAcc pathway was targeted before (open bars) and after (closed bars) administration of saline (C) or DT (D). Values plotted are thin lines representing individual rats and averages ± SEM. # p<0.07, * p<0.05 denotes that values after DT/saline administration were different from those before DT/saline administration. Related to Supplemental Figure 6.

Reinforcement-learning models predict choices based on action values that are adjusted incrementally according to outcomes over multiple trials, and can capture more subtle changes in the effect of previous outcomes on decision making. To determine if ablation of the OFC→NAcc circuit impacted specific aspects of reinforcement learning following a reversal, choice data were fit with a differential forgetting reinforcement-learning (RL) model (Barraclough et al., 2004; Ito and Doya, 2009; STAR methods). This RL model contained four free parameters: a decay rate for the action values of chosen options (γC), a decay rate for the action values of unchosen options (γU), a parameter indexing the appetitive strength of rewarded outcomes (Δ+) and a parameter indexing the aversive strength of unrewarded outcomes (Δ0). Specifically, γ values closer to 0 indicate that the retention of action values for chosen (γC) and unchosen (γU) options is low (e.g., action values are reset on each trial). Lower Δ+ values indicate that rats are less likely to repeat the same rewarded choice, whereas lower Δ0 values indicate that rats are less likely to repeat the same unrewarded choice. In this model, these two parameters can also quantify the tendency to repeat the same choice when they become positive. This model was fit separately to the choice data during the acquisition and reversal phase to provide task-phase specific parameter estimates. We found that this RL model was a better fit to the choice data of rats both before and after DT/Saline administration when compared to other commonly used RL models (see Supplemental methods and Supplemental Figure 5).
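The four-parameter model can be illustrated with a minimal sketch. The update rule below follows the general form of differential forgetting models (Ito and Doya, 2009): the chosen option's value is multiplied by γC and shifted by Δ+ or Δ0, and unchosen values are multiplied by γU. The softmax choice rule without a separate inverse temperature, the zero initial values, and all function names are assumptions made for illustration; the exact formulation is in the STAR methods.

```python
import math


def softmax(values):
    """Convert action values into choice probabilities."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def update_values(q, chosen, rewarded, gamma_c, gamma_u, delta_pos, delta_zero):
    """Apply one trial of the differential forgetting update.

    Chosen option:   Q <- gamma_c * Q + (delta_pos if rewarded else delta_zero)
    Unchosen option: Q <- gamma_u * Q
    """
    updated = []
    for index, value in enumerate(q):
        if index == chosen:
            outcome_term = delta_pos if rewarded else delta_zero
            updated.append(gamma_c * value + outcome_term)
        else:
            updated.append(gamma_u * value)
    return updated


def negative_log_likelihood(trials, params, n_options=3):
    """Sum of -log p(observed choice) over (chosen_index, rewarded) trials."""
    gamma_c, gamma_u, delta_pos, delta_zero = params
    q = [0.0] * n_options  # assumed starting values
    total = 0.0
    for chosen, rewarded in trials:
        total -= math.log(softmax(q)[chosen])
        q = update_values(q, chosen, rewarded,
                          gamma_c, gamma_u, delta_pos, delta_zero)
    return total


if __name__ == "__main__":
    trials = [(0, True), (0, False), (2, True)]
    print(negative_log_likelihood(trials, (0.5, 0.8, 2.0, -1.0)))
```

In this form, a γ near 0 wipes out the carried-over value on every trial, and a more negative Δ0 pushes the chosen value down after an unrewarded choice, making a repeat less likely. Parameter estimates would be obtained by minimizing the negative log-likelihood with a numerical optimizer.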

The RL parameters derived from choice behavior in the acquisition phase were examined first. There was a significant time × toxin × RL parameter interaction (χ2=7.93; p=0.04), but none of the post-hoc comparisons were statistically significant (Supplemental Figure 6). RL parameters derived from choice behavior in the reversal phase also showed a significant time × toxin × RL parameter interaction (χ2=10.19; p=0.02), which was specific to the γC (time × toxin: χ2=8.17; p=0.004) and Δ0 parameters (time × toxin: χ2=9.05; p=0.003). Consistent with the results from the logistic regression analysis, post-hoc analyses revealed that administration of DT significantly increased the Δ0 parameter but did not alter the γC parameter (Figure 4D; Δ0: χ2=5.55; p=0.02; γC parameter: χ2=3.39; p=0.07). Administration of saline did not alter the Δ0 parameter but increased the γC parameter (Figure 4C; Δ0 parameter: χ2=1.61; p=0.20; γC parameter: χ2=6.06; p=0.01). These results provide convergent evidence that ablation of the OFC→NAcc circuit alters the normal updating of action values following a negative outcome, i.e., the Δ0 parameter. Moreover, the lack of a corresponding change in the Δ+ parameter indicates that ablation of the OFC→NAcc circuit increased the likelihood of repeating an unrewarded choice rather than producing a generalized increase in choice repetition.

Disruptions in the ability of rats to update choices appropriately following a negative outcome should manifest as an increase in the probability of repeating an incorrect, unrewarded choice. Indeed, we found that ablation of the OFC→NAcc circuit increased the probability that rats would persist with an incorrect, unrewarded choice (χ2=7.87; p=0.005). Moreover, the probability that rats would persist with a rewarded, incorrect choice was not altered (χ2=0.09; p=0.77) indicating that the increase in incorrect choice repetition following OFC→NAcc circuit ablation was selective to the integration of negative outcomes into choice.
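Conditional stay probabilities of this kind can be computed directly from the trial sequence. The sketch below is illustrative: the function name and arguments are hypothetical, and it assumes the history passed in comes from a single task phase so that one port counts as correct throughout.

```python
import math


def stay_probability(history, correct_port, after_rewarded, after_correct):
    """Probability of repeating a choice, conditioned on the previous trial.

    `history` is a list of (chosen_port, rewarded) tuples from one phase.
    Only transitions whose first trial matches both conditions are counted.
    Returns NaN when no transition qualifies.
    """
    eligible = 0
    stays = 0
    for (prev_port, prev_rewarded), (next_port, _) in zip(history, history[1:]):
        if prev_rewarded != after_rewarded:
            continue
        if (prev_port == correct_port) != after_correct:
            continue
        eligible += 1
        stays += int(next_port == prev_port)
    return stays / eligible if eligible else math.nan


if __name__ == "__main__":
    toy = [("A", False), ("A", False), ("B", True), ("B", False), ("C", True)]
    # Persisting with an incorrect, unrewarded choice (port C is correct).
    print(stay_probability(toy, "C", after_rewarded=False, after_correct=False))
```

Calling the same function with `after_rewarded=True, after_correct=True` gives the probability of persisting with a correct, rewarded choice.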

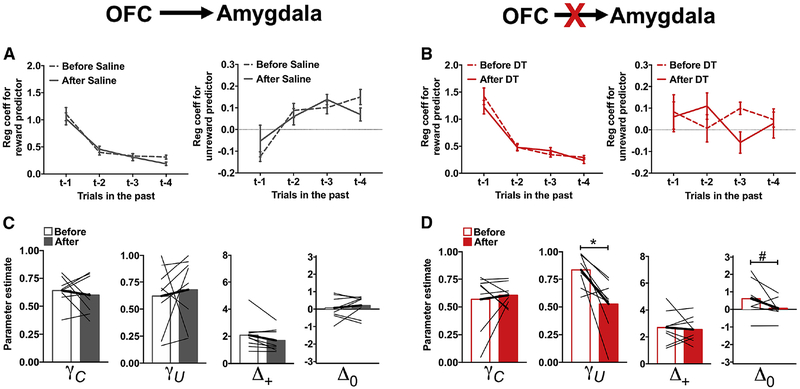

Ablation of the OFC→Amygdala circuit destabilizes action-values

Ablation of OFC→Amygdala neurons improved performance of rats in the reversal phase (Figure 3B). Prior to toxin administration, rats were more likely to stay when a previous choice was rewarded compared to an unrewarded choice, and the influence of previous trial outcomes on current choice decreased with trial-lag (p<0.001; Figure 5A and 5B). Post-hoc analyses of the significant time × toxin × outcome-type × trial-lag interaction (χ2=24.77; p<0.001) revealed a significant time × toxin × trial-lag interaction for the unrewarded predictor (χ2=10.84; p=0.01) and a trend-level three-way interaction for the rewarded predictor (χ2=7.22; p=0.06). Administration of DT (Figure 5B, right), but not saline (Figure 5A, right), significantly altered the likelihood that rats would repeat an unrewarded choice (time × trial-lag: χ2=10.08; p=0.02). The presence of a significant time × trial-lag interaction in the analysis of logistic regression coefficients, combined with a small effect of time following ablation of the OFC→Amygdala circuit, suggested to us that the OFC→Amygdala circuit might not be involved in the outcome-specific action-value updating that we had observed following ablation of the OFC→NAcc circuit. Instead, if OFC neurons projecting to the amygdala are involved in maintaining action values across trials/delays (Wallis, 2007), then ablation of the OFC→Amygdala circuit might reduce the influence of previous trial events on current choice and, consequently, improve performance following a reversal. Specifically, a disruption in the retention of action values could reduce response bias and enable a rapid switch in choice preference following a change in reinforcement contingencies.

Figure 5:

Ablation of the OFC→Amygdala circuit disrupts retention of action-values. Same format as in Figure 4 in rats where the OFC→Amygdala pathway was targeted before (open bars) and after (closed bars) administration of saline (A,C) or DT (B,D). Values plotted are thin lines representing individual rats and averages ± SEM. * p<0.05, # p<0.07 denotes that values after DT administration were different from those before DT administration. Related to Supplemental Figure 7.

To explore this possibility, choice data were fit with the RL model described above, and parameter estimates compared between the experimental groups before and after toxin administration. The parameter estimates for acquisition phase were not differentially affected between the experimental groups (Supplemental Figure 7), but there was a significant time × toxin × RL parameter interaction for the parameters derived from the reversal phase choices (time × toxin × RL parameter: χ2=10.99; p=0.01). This effect was specific to the γU (time × toxin: χ2=4.12; p=0.04) and Δ0 (time × toxin: χ2=6.26; p=0.01) parameters. Administration of DT decreased both γU and Δ0 (Figure 5D; γU parameter: χ2=11.88; p=0.001; Δ0 parameter: χ2=3.77; p=0.05), while administration of saline did not alter either of these parameters (Figure 5C; χ2<0.6; p>0.45). The reduction in the γU parameter following administration of DT confirms our hypothesis that ablation of the OFC→Amygdala circuit decreased the retention of action-values for unchosen options.

A decrease in the retention of action values for unchosen options would be expected to manifest behaviorally as a decrease in the probability that rats would choose the option with the smallest reinforcement probability (previously the best option) following a rewarded trial. Indeed, we found that the probability of choosing the worst option after a reward was reduced following DT administration (χ2=7.54; p=0.006), as was the probability of choosing the worst option following an unrewarded trial (χ2=6.79; p=0.009). These data, collectively, demonstrate that ablation of the OFC→Amygdala circuit improved the ability of animals to discriminate between options by reducing the retention of action values for unchosen options.

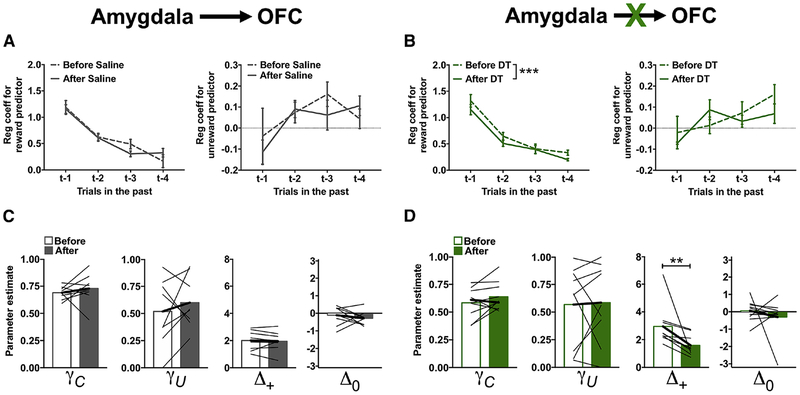

Ablation of the Amygdala→OFC circuit mutes positive-feedback

Ablation of the Amygdala→OFC circuit impaired reversal in a manner that was qualitatively similar in both magnitude and direction to that observed following ablation of the OFC→NAcc circuit (Figure 3C). To determine if this behavioral impairment was also due to disruptions in the ability of rats to update action values following unrewarded outcomes, choice data were analyzed for ablation of the Amygdala→OFC circuit using the logistic regression analyses described above. Prior to toxin administration, rats were more likely to stay when a previous choice was rewarded compared to an unrewarded choice, and the influence of previous reward on current choice decreased across trial-lag (p<0.001; Figure 6A and 6B). Post-hoc analyses of the significant time × toxin × outcome-type × trial-lag interaction (χ2=13.47; p=0.004) revealed a significant time × toxin × trial-lag interaction for the rewarded predictor (χ2=8.19; p=0.04), but not for the unrewarded predictor (χ2=1.48; p=0.69). The likelihood that rats would repeat a previous rewarded choice was reduced following administration of DT (time × trial-lag: χ2=7.81, p=0.05; Figure 6B, left), but was not altered following administration of saline (time × trial-lag: χ2=2.97, p=0.40; Figure 6A, left). These results indicate that ablation of the Amygdala→OFC circuit disrupted the ability of rats to use positive outcomes appropriately in guiding their decisions, but had no effect on the ability of rats to use negative outcomes appropriately.

Figure 6:

Ablation of the Amygdala→OFC circuit disrupts action-value updating following rewarded outcomes. Same format as in Figure 4 in rats where the Amygdala→OFC pathway was targeted before (open bars) and after (closed bars) administration of saline (A,C) or DT (B,D). Values plotted are thin lines representing individual rats and averages ± SEM. ** p<0.01; *** p<0.001 denotes that values after DT administration were different from those before DT administration. Related to Supplemental Figure 8.

To test this further, choice data were fit with the same RL model and parameter estimates compared between the experimental groups before and after toxin administration. RL parameters for acquisition phase were not differentially affected by toxin administration (Supplemental Figure 8), but the parameters during reversal phase were (time × toxin × RL parameter: χ2=22.64; p<0.001). Post-hoc analyses only detected a significant time × toxin interaction for the Δ+ parameter (χ2=6.19; p=0.01). The Δ+ parameter was reduced following administration of DT (Figure 6D; χ2=7.04; p=0.008), but was not altered following administration of saline (Figure 6C; χ2=0.16; p=0.69), indicating that ablation of the Amygdala→OFC circuit disrupted action-value updating following a positive outcome.

Disruptions in the ability of rats to update choices appropriately following a positive outcome should manifest as a decrease in the probability that rats would repeat a rewarded, correct choice. Indeed, we found that ablation of the Amygdala→OFC circuit decreased the probability that rats would persist with a correct, rewarded choice (χ2=57.38; p<0.001). Moreover, the probability that rats would persist with a correct, unrewarded choice was not altered by the ablation (χ2=1.74; p=0.19) indicating that the decrease in correct choice repetition following Amygdala→OFC circuit ablation was selective to the integration of positive outcomes into choice.

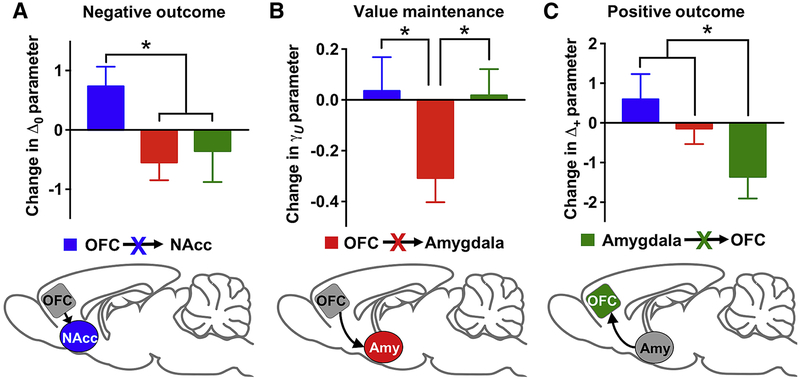

Dissociation of RL processes within OFC circuits

Ablation of OFC→NAcc and Amygdala→OFC pathways produced qualitatively and quantitatively similar deficits in reversal learning performance. Nevertheless, the learning mechanisms underlying these reversal impairments might be different. To confirm this, we compared the reversal RL parameters between the OFC→NAcc and Amygdala→OFC rats following administration of DT. There was a significant interaction between brain circuit [OFC→NAcc vs. Amygdala→OFC] and reversal RL parameters (χ2=10.49; p=0.02). Post-hoc comparisons between brain circuits indicated that the Δ+ and Δ0 parameters were higher in the OFC→NAcc rats compared to the Amygdala→OFC rats (Δ+: χ2=9.73; p=0.002; Δ0: χ2=6.15; p=0.01), but neither γC nor γU was significantly different between these groups (Figure 7). These data confirm that the similar reductions in reversal-learning accuracy following ablation of the OFC→NAcc and Amygdala→OFC circuits were in fact due to disruptions in distinct action-value updating mechanisms.

Figure 7:

OFC circuits make distinct contributions to reinforcement-learning processes that underlie flexible decision-making. Blue: ablation of OFC→NAcc; Red: ablation of OFC→Amygdala; Green: ablation of Amygdala→OFC. * p<0.05.

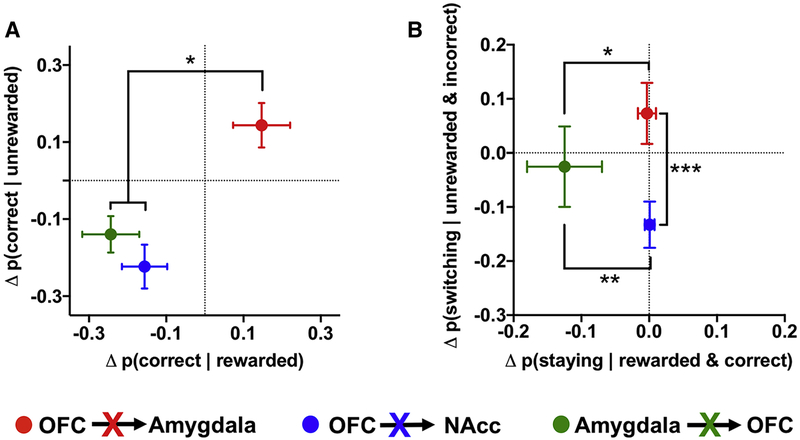

Our results, collectively, indicate that OFC circuits make distinct contributions to RL mechanisms that underlie decision making (Figure 7). To provide additional evidence of this triple dissociation, we compared differences in the patterns of choices after reversal between the three circuits. First, we examined the probability that rats would make a correct choice based on the outcome of the previous trial. Ablation of the OFC→Amygdala circuit increased the probability of choosing the correct noseport after either a rewarded or unrewarded trial, whereas ablation of the OFC→NAcc or Amygdala→OFC circuit reduced the probability of making a correct choice (Figure 8A). This increase in the OFC→Amygdala ablation was significantly different from the decrease in the OFC→NAcc and Amygdala→OFC ablation (p<0.05), but the result was similar for the OFC→NAcc and Amygdala→OFC groups (p=0.51).

Figure 8:

Behavioral dissociation of OFC circuit contributions to reinforcement learning. (A) The change in the probability of making a correct choice following a rewarded trial (x axis) plotted against the change in the probability of making a correct choice following an unrewarded trial (y axis) following ablation of the OFC→NAcc (blue), OFC→Amygdala (red) or Amygdala→OFC (green) circuit. (B) The change in the probability of staying with a rewarded and correct choice (x axis) plotted against the change in the probability of switching from an unrewarded and incorrect choice (y axis) following ablation of the OFC→NAcc, OFC→Amygdala or Amygdala→OFC circuit. Difference scores were calculated by subtracting the post-DT probabilities from the pre-DT probabilities and compared using Mann-Whitney tests. * p<0.05; ** p<0.01; *** p<0.001.
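The difference-score comparison described in this caption can be sketched as follows. The sign convention (pre-DT minus post-DT) is taken from the caption as written. The function names are hypothetical, and only the Mann-Whitney U statistic is computed here; a p-value would come from a statistics library or from exact tables.

```python
def difference_scores(pre, post):
    """Per-rat difference scores, computed as pre-DT minus post-DT."""
    return [before - after for before, after in zip(pre, post)]


def mann_whitney_u(x, y):
    """U statistic for sample x versus sample y.

    Counts the pairs (a, b) with a > b, scoring ties as one half.
    """
    u = 0.0
    for a in x:
        for b in y:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


if __name__ == "__main__":
    # Made-up numbers for two hypothetical groups, for illustration only.
    group_1 = difference_scores([0.60, 0.55, 0.70], [0.40, 0.50, 0.45])
    group_2 = difference_scores([0.50, 0.52], [0.62, 0.60])
    print(mann_whitney_u(group_1, group_2))
```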

Results from RL models revealed that the decrease in the likelihood of making a correct choice might manifest differently between the OFC→NAcc (e.g., deficits in incorporating negative outcomes into action values) and Amygdala→OFC (e.g., deficits in incorporating positive outcomes into action values) groups. Therefore, we examined whether there were differences in the probability that rats would switch their choice after an unrewarded and incorrect response compared to the probability that rats would stay after a rewarded and correct response following circuit ablation (Figure 8B). As predicted, ablation of the OFC→NAcc circuit decreased the probability that rats would switch their choices following an unrewarded and incorrect response. In contrast, ablation of the Amygdala→OFC circuit decreased the probability that rats would persist with a rewarded and correct choice. This behavioral partition across OFC circuits further supports our conclusion that individual OFC circuits make distinct contributions to reinforcement-learning mechanisms of decision making.

Discussion

In the present study, we found that anatomically distinct OFC circuits contribute to unique aspects of reinforcement learning that guide decision-making processes. Using a novel viral approach in rats, we showed that ablation of OFC neurons projecting to the NAcc impairs the ability of rats to adjust their choices following a change in reinforcement probabilities by disrupting their ability to use negative outcomes appropriately. Ablation of OFC neurons projecting to the amygdala enhanced the ability of rats to adjust their choices in the reversal phase by increasing the lability of action values selectively for unchosen options. Finally, ablation of amygdala neurons projecting to the OFC disrupted the ability of rats to adjust their choices in the reversal phase by impairing their ability to use positive outcomes appropriately. These results provide novel insights into how circuit-level integration of reinforcement-learning processes might occur within the OFC.

OFC→NAcc circuit: updating action values in response to an aversive outcome

Difficulty adjusting behavior following a change in contingencies is a hallmark of damage to the OFC (McEnaney and Butter, 1969; Schoenbaum et al., 2003; Walton et al., 2010; Rudebeck et al., 2017), but the functional network of the OFC has only begun to be examined (Gremel and Costa, 2013; Lichtenberg et al., 2017). Here, we report that ablation of OFC neurons projecting to the NAcc resulted in inflexible behavior, qualitatively similar to that observed following excitotoxic lesions of the lateral OFC (Rudebeck et al., 2017) and ventral striatum (Costa et al., 2016; Taswell et al., 2018). We found that this deficit was due to a selective decrement in the ability of rats to use negative outcomes appropriately: rats were more likely to repeat an unrewarded action following ablation of the OFC→NAcc circuit. Although the majority of studies have implicated the NAcc in learning from positive outcomes (Kelley, 2004; Ambroggi et al., 2008), our findings are consistent with previous evidence demonstrating that lesions to NAcc disrupt learning from negative outcomes (Schoenbaum and Setlow, 2003; Singh et al., 2010).

One prevailing hypothesis about the role of the OFC in decision making is that the OFC generates representations of expected outcomes that can be subsequently conveyed to and evaluated by downstream brain regions (Baxter and Browning, 2007; Stalnaker et al., 2018). Neurons in the NAcc fire differentially to cues that predict different outcomes (Roitman et al., 2005), but a large proportion of these neurons (74%) fire selectively to cues predictive of an aversive outcome (quinine solution: Setlow et al., 2003; Roitman et al., 2005). Our results suggest that OFC neurons may synapse selectively onto NAcc neurons that encode aversive or unrewarded outcomes. Therefore, loss of the OFC-generated representation of the expected outcome to the NAcc could subsequently lead to disruptions in prediction error signals following negative feedback (Rodriguez et al., 2006) and the maladaptive decision-making reported here. Indeed, optogenetic stimulation of the lateral OFC-striatal pathway reduces maladaptive grooming behavior in a genetic mouse model of obsessive-compulsive disorder (Burguiere et al., 2013) that is known to have reversal learning deficits (Manning et al., 2018).

Amygdala→OFC circuit: updating action values in response to appetitive outcomes

The involvement of the amygdala in decision making is less well established than that of the OFC, in part because of the inconsistency in results regarding the impact of amygdala lesions on reversal-learning and other decision-making tasks (Izquierdo and Murray, 2007; Izquierdo et al., 2013; Costa et al., 2016). Nevertheless, amygdala neurons rapidly modulate their activity following a change in stimulus value (Paton et al., 2006), and emerging evidence indicates that separable neuronal populations encode positive or negative stimulus values (Paton et al., 2006; Morrison et al., 2011; Beyeler et al., 2018). Updating of neurons in the amygdala following a reversal occurs on distinct time courses depending on the preference of the neuron for encoding positive or negative events (Morrison et al., 2011), which may reflect the diversity of networks within the amygdala. The amygdala, therefore, is well positioned to modulate complex reinforcement-learning processes. Ablation of amygdala neurons projecting to the OFC impaired the performance of rats in the reversal phase in a manner that was similar to that observed following ablation of the OFC→NAcc circuit. Computational analyses, however, revealed that the decision-making impairment was specific to a reduction in the ability of rats to use positive, but not negative, outcomes appropriately: rats were more likely to switch their choice after receiving a positive outcome following ablation of the Amygdala→OFC circuit. These results are consistent with the impairments in learning from positive feedback that have been observed in monkeys with amygdala lesions (Costa et al., 2016) and in humans with focal bilateral amygdala lesions (Hampton et al., 2007). Therefore, the Amygdala→OFC circuit may be important for updating the representation of expected outcomes after positive feedback (Zeeb and Winstanley, 2013; Moorman and Aston-Jones, 2014), particularly during early stages of reversal learning when the amygdala disproportionately influences the OFC (Morrison et al., 2011). Moreover, recent work using fMRI has demonstrated that amygdala-OFC coupling is modulated by the relevance of reward information across multiple timescales (Chau et al., 2015).

Valence-encoding neurons are distributed throughout the amygdala (Morrison et al., 2011; Beyeler et al., 2018), but divergent amygdala circuits may preferentially encode positive or negative valence (Beyeler et al., 2016). For example, lateral amygdala neurons that project to the NAcc are more responsive to cues predictive of appetitive outcomes, whereas lateral amygdala neurons projecting to the central nucleus of the amygdala are more responsive to cues predictive of aversive outcomes (Beyeler et al., 2016). Whether valence-encoding amygdala neurons also project to the OFC is not known, but such a projection is likely similar to the Amygdala→NAcc circuit observed in the medial prefrontal cortex (Beyeler et al., 2016).

OFC→Amygdala circuit: maintenance of action values

In addition to generating representations of expected outcomes for actions, the OFC may also be involved in updating action values (Sul et al., 2010). Neural signals in the OFC appear to encode previous trial choices and outcomes across multiple trials (Sul et al., 2010), and recent theories have posited a role of the OFC in reward-guided memory retrieval (Young and Shapiro, 2011) and encoding state space (Wilson et al., 2014). Ablation of OFC neurons projecting to the amygdala decreased the decay parameter for unchosen action values (γU parameter), so that these values decayed more quickly across trials, which enabled rats to more rapidly modify their choices following a reversal. This alteration was specific to the maintenance of unchosen options, as the decay rate of the chosen action value was not affected (γC parameter), suggesting that ablation of the OFC→Amygdala circuit might not disrupt global memory function.

We hypothesize, therefore, that OFC neurons projecting to the amygdala are involved in the maintenance of action values in working memory across short delays (Frank and Claus, 1996; Wallis, 2007). Indeed, delay-dependent activity has been observed in both the OFC and amygdala during working memory tasks (LoPresti et al., 2008). Ablation of the OFC→Amygdala circuit disrupted the retention of action values, which reduced response bias and thus enabled a rapid switch in choice preference following a change in reinforcement contingencies. Although rapid decay of action values improved decision-making in the dynamic environment used here, it is likely that ablation of the OFC→Amygdala circuit would have detrimental effects in stable environments (Behrens et al., 2007; Massi et al., 2018) or when action values for unchosen options need to be maintained across multiple task states, such as in multistage decision-making tasks (Daw et al., 2011).

Implications for OFC function

Understanding the role of the OFC in decision making is an area of active investigation. Several new theories have been proposed within the past decade that diverge from the traditional viewpoint that the primary function of the OFC is one of response inhibition (Dias et al., 1996; Ridderinkhof et al., 2004). One such theory has suggested that the OFC plays a causal role in the assignment of credit to choices (Walton et al., 2010): aspiration of the OFC, which impaired reversal performance of monkeys, produced a pattern of choices that no longer reflected the history of previous choices and outcomes. Our data recapitulate some of these results and suggest that contingent learning of specific outcomes may be encoded within anatomically divergent OFC circuits. Aspiration OFC lesions that disrupt all OFC circuits, including those studied here, would be expected to disrupt multiple aspects of action-value updating that rely on different circuit elements and could produce the robust contingent-learning deficits observed previously (Walton et al., 2010). Moreover, the high rates of switching behavior in monkeys with OFC aspiration lesions that were observed by Walton et al. (2010) may be the behavioral culmination of disrupting multiple RL mechanisms that we believe are encoded within OFC circuits. Therefore, our data provide new insights into how separable OFC circuits represent choices and outcomes that may be involved in outcome-specific contingent learning.

Because the OFC appears to be crucial for outcome-specific contingent learning, one might expect that performance during the acquisition phase would be disrupted by ablation of OFC circuits. None of the circuit ablations here, however, altered the ability of rats to learn which port was associated with the highest probability of reward during initial learning. In fact, even global OFC lesions in monkeys leave contingency learning intact during initial acquisition, though monkeys with OFC lesions show deficits following subsequent reversal (Walton et al., 2010). This pattern has been interpreted as implicating the OFC in contingency learning when action-outcome relationships are fluctuating or ambiguous, which is the case following a reversal or when the expected values of multiple options are similar. Our schedule of reinforcement used expected values that were far apart both before and after reversal; consequently, we observed alterations in reversal learning following circuit ablations, but we did not probe the effects of ambiguous stimuli close to one another in value.

Although the homology between the OFC sub-region targeted here and those targeted in non-human primates is unclear (Walton et al., 2010; Rudebeck et al., 2013; Chau et al., 2015) and remains an area of active investigation (Laubach et al., 2018), this rat OFC sub-region may be homologous in function to the ventral lateral prefrontal cortex in non-human primates. Recent work has suggested that the reversal-learning impairments observed following OFC aspirations (Walton et al., 2010) may not be specific to the loss of OFC neurons (Walker Area 11, 13, and 14), but rather due to disruptions of fibers of passage located near the OFC (Rudebeck et al., 2013) that are en route to other cortical regions. Indeed, excitotoxic lesions of the OFC do not alter reversal learning in non-human primates, but excitotoxic lesions of the ventral lateral prefrontal cortex (Walker Area 12, 45, and 46) produce the same reversal-specific and contingent learning deficits (Rudebeck et al., 2013, 2017) previously observed following OFC aspirations (Walton et al., 2010). We hypothesize, therefore, that the rodent dorsolateral OFC may be functionally homologous to the primate ventral lateral prefrontal cortex. Nevertheless, reversal learning in rats is disrupted following lesions of OFC sub-regions outside the dorsolateral OFC (McAlonan and Brown, 2003; Kim and Ragozzino, 2005), so future studies using our circuit-specific ablation approach could provide insights into the functional mapping of the OFC and neural networks across species.

An alternate theory is that the OFC processes a model of task structure, and OFC lesions impair reversal by disrupting these models (Wilson et al., 2014). OFC lesions have been reported to disrupt model-based representations in sensory preconditioning and multi-stage decision-making tasks (McDannald et al., 2011; Miller et al., 2017). It is possible that the selective disruptions in reversal performance found here following specific OFC circuit ablations may reflect compromised model-based, rather than model-free, processes. Contributions of model-free and model-based representations to decision-making may be illuminated by using multi-stage decision-making tasks, which enable simultaneous quantification of model-free and model-based reinforcement-learning mechanisms (Daw et al., 2011). We have developed a new rodent multi-stage decision-making task (Groman et al., 2019a), and are investigating whether the reversal-specific deficits observed here are in fact caused by disruptions in model-based learning.

Implications for mental illness

Rigid, inflexible decision making is highly prevalent in individuals diagnosed with mental disorders, such as schizophrenia, addiction, and attention-deficit/hyperactivity disorder (Itami and Uno, 2002; Fillmore and Rush, 2006; Waltz and Gold, 2007; Ghahremani et al., 2011). However, the latent behavioral factors contributing to maladaptive decision-making may differ between disorders; if so, this could provide insights into the pathological mechanisms that underlie these conditions. The current study demonstrates how disruptions in distinct latent behavioral processes, as exemplified by the parameters of a reinforcement learning model, can manifest as qualitatively and quantitatively similar behavioral disruptions. These findings, therefore, provide direct evidence for the utility of computational models to dissect the circuit-level disruptions in mental illness (Lee, 2013).

Moreover, the current study has important implications for delineating the neural circuits involved in the emergence and persistence of disorders in which decision making is perturbed, such as addiction. We have found that individual differences in the ability of rats to use rewarded outcomes to guide their choice behavior (e.g., Δ+ parameter) predict subsequent drug-taking behaviors, but that chronic drug self-administration disrupts the ability of rats to use unrewarded outcomes to guide their decision-making (e.g., Δ0 parameter) (Groman et al., 2019b). Based on the results of the current study, we suggest that trait differences in the Amygdala→OFC circuit, which contributes to the Δ+ parameter, may mediate inter-individual differences in addiction vulnerability (Peters et al., 2016). In contrast, drug-induced disruptions in the OFC→NAcc circuit may contribute to the decision-making deficits observed in individuals with chronic substance abuse (Paulus et al., 2003; Parvaz et al., 2015) and in animals chronically exposed to drugs of abuse (Groman et al., 2018). Further studies integrating our viral approach with animal models of addiction are ongoing and should yield critical insights into the neural mechanisms of anatomically defined brain circuits that mediate specific aspects of addiction vulnerability and pathology.

Conclusions

Our data demonstrate that anatomically distinct amygdala-cortico-striatal circuits encode separable computations underpinning value-based decision-making in rodents. The use of an experimental approach that combines a systems level analysis with a translationally analogous computational model provides evidence for a framework that can be used to identify key neurobiological mechanisms of brain valuation processes disrupted in neuropsychiatric disorders. Theoretically, it should be possible to use this translational bridge towards predictive computational psychiatry to define both vulnerability and disease-specific biomarkers that could be targeted therapeutically to ameliorate inflexible decision making in psychiatric disorders.

STAR methods

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Stephanie Groman (stephanie.groman@yale.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Adult, male Long Evans rats (N=60) were obtained from Charles River (Raleigh, NC facility) at approximately 6 weeks of age. Rats were pair-housed in a climate-controlled room and maintained on a 12-h light/dark cycle (lights on at 7am; lights off at 7pm) with access to water ad libitum. Rats were given four days to acclimate to the vivarium and then underwent dietary restriction to 90% of their free-feeding weight. Food was provided to rats after they completed their daily behavioral testing. All experimental procedures were performed as approved by the Institutional Animal Care and Use Committee at Yale University and according to NIH and institutional guidelines and the Public Health Service Policy on Humane Care and Use of Laboratory Animals.

METHOD DETAILS

Surgical procedures

Rats were anesthetized with gas anesthesia (isoflurane 1–2%) and fixed into a stereotaxic frame. For the rats in the OFC→NAcc and OFC→Amygdala ablation groups, AAV6.2.CMV.PI.Cre.rBG (UPenn Vector Core; Lot CS0164-TC) was infused bilaterally into either the NAcc (AP: +1.5; ML: ±3.1; DV: −7.2 at a 16° angle) or amygdala (AP: −2.8; ML: ±5.0; DV: −8.7; −8.4 [half volume at each site]) at a volume of 0.5 µL over a 10 min period followed by a 10 min diffusion period. In addition, AAVrh10.CAG.EGFP.FLEX.DTRFLAG.WPRE.pA was infused bilaterally into the OFC (AP: +3.2; ML: ±3.5; DV: −5.1) at a volume of 0.25 µL over a 5 min period followed by a 10 min diffusion period. The lateral portions of the OFC have been established in rats as critical for reversal learning (Schoenbaum et al., 1999, 2003; Boulougouris et al., 2007) and we targeted the dorsolateral portion of the OFC as it has been shown to be exclusively involved in reversal learning, whereas other OFC sub-regions appear to be involved in both reversal learning and other related executive functions (Izquierdo, 2017). For the Amygdala→OFC group, AAV6-Cre was infused into the OFC (same volume and rates as described above) and AAVrh10-DIO-DTR was infused into the amygdala. All viral infusions were performed using a 25-gauge Hamilton syringe.

Immunohistochemistry

Upon completion of the experiment, rats were anesthetized with euthasol and perfused transcardially with ice-cold PBS followed by 10% formalin. Brains were post-fixed for 24 h then transferred to 30% w/v sucrose in PBS before slicing. Sections (35 µm) were washed 3 × 5 min in PBS, blocked in 3% normal donkey serum (NDS)/0.3% Triton-X/PBS for 60 min, then incubated overnight in blocking solution containing primary monoclonal antibodies directed toward FLAG (1:1000; Cell Signaling 2368S; RRID: AB_2217020), GFP (1:10,000; Abcam ab13970; RRID: AB_300798), or Cre (1:500; Millipore MAB3120; RRID: AB_2085748). Slices were then washed 3 × 5 min in PBS and incubated for 2 h in species-appropriate AlexaFluor secondary antibodies (Abcam; 1:500). Slices were washed 3 × 5 min in PBS, mounted on slides, and coverslipped with Vectashield hard set mounting medium (VectorLabs). The numbers of FLAG-positive and GFP-positive cells were quantified and compared between rats that received DT or saline, and infusion sites were verified (Supplemental Figure 2).

Behavioral procedures

Operant behavior was assessed in standard aluminum and Plexiglas operant conditioning chambers (Groman et al., 2016). Chambers were equipped with a photocell pellet-delivery magazine and a curved panel with five photocell-equipped ports on the opposite side (Med Associates) and housed inside of sound-attenuating cubicles, with background white noise being broadcast and a house light illuminating the environment. Rats were trained as previously described (Groman et al., 2018) and completed 238.5 ± 0.80 trials in each session. On each trial, rats had to make an entry into the magazine in order to illuminate the three port apertures in the center of the opposite panel. Rats could then respond to any of the ports in order to receive probabilistically delivered rewards (Figure 1; BioServ 45 mg sugar pellet). The three reinforcement probabilities (72%, 24%, and 8%) were pseudo-randomly assigned to the noseports by the computer program at the start of each session. These reinforcement probabilities remained stable for the first 120 trials, after which the probabilities assigned to the ports changed (72%→16%, 24%→36%, and 8%→64%) and then remained stable for an additional 120 trials. Sessions terminated when rats completed 240 trials or 76 min had elapsed, whichever occurred first. We refer to the port with the highest reinforcement probability as correct, although this would not be known to the animal with certainty. The data presented here are from the two sessions rats completed before and after administration of either DT or saline.
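The reinforcement schedule described above can be illustrated with a short simulation. This is a minimal Python sketch, not the task-control program used in the study: the function names (`make_session`, `run_session`, `win_stay_lose_shift`) and the win-stay/lose-shift agent are hypothetical, and the 76 min time limit is not modeled.

```python
import random

# Acquisition probabilities and their post-reversal values, as given in the text.
ACQ_PROBS = (0.72, 0.24, 0.08)
REVERSAL_MAP = {0.72: 0.16, 0.24: 0.36, 0.08: 0.64}


def make_session(rng, n_trials=240, reversal_at=120):
    """Return the reward probability of each of the three ports on every trial."""
    acq = list(ACQ_PROBS)
    rng.shuffle(acq)  # pseudo-random assignment of probabilities to ports
    rev = [REVERSAL_MAP[p] for p in acq]  # same ports, new probabilities
    return [acq if t < reversal_at else rev for t in range(n_trials)]


def win_stay_lose_shift(choices, rewards, rng):
    """Hypothetical agent: repeat a rewarded choice, otherwise pick another port."""
    if choices and rewards[-1] == 1:
        return choices[-1]
    options = [p for p in range(3) if not choices or p != choices[-1]]
    return rng.choice(options)


def run_session(choose, rng, **kwargs):
    """Simulate one session; returns the schedule, choices, and outcomes (1/0)."""
    schedule = make_session(rng, **kwargs)
    choices, rewards = [], []
    for probs in schedule:
        port = choose(choices, rewards, rng)
        reward = 1 if rng.random() < probs[port] else 0
        choices.append(port)
        rewards.append(reward)
    return schedule, choices, rewards


rng = random.Random(0)
schedule, choices, rewards = run_session(win_stay_lose_shift, rng)
```

The resulting `choices` and `rewards` lists have the same trial-by-trial form as the behavioral data analyzed in the following sections.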

Logistic regression

Impairments in outcome-based learning could be due to disruptions in how rats used rewarded or unrewarded outcomes to guide their choices. Choice behavior of rats in the PRL was analyzed using a logistic regression model that estimated the likelihood of repeating the same choice according to previous trial outcomes (t-1 through t-4; Parker et al., 2016; Groman et al., 2018). The predictors included in this model were as follows.

| Predictor | Value |
|---|---|
| Intercept | +1 for all trials. |
| Rewarded(t-k) | +1 if the previous trial (t-k) was rewarded and the rat chose the same port as the current trial (t) |
| | −1 if the previous trial (t-k) was rewarded and the rat chose a port different from the current trial (t) |
| | 0 if the previous trial (t-k) was unrewarded. |
| Unrewarded(t-k) | +1 if the previous trial (t-k) was unrewarded and the rat chose the same port as the current trial (t) |
| | −1 if the previous trial (t-k) was unrewarded and the rat chose a port different from the current trial (t) |
| | 0 if the previous trial (t-k) was rewarded. |

Positive coefficients for the rewarded and unrewarded predictors indicate that rats are more likely to persist with the same choice, whereas negative regression coefficients indicate that rats are more likely to switch their choice.
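As an illustration of how the predictors defined above could be coded from trial-by-trial data, the following Python sketch builds one predictor row per trial for lags 1 through 4. The function name `build_predictors` and the column ordering are assumptions made for this example; the outcome variable and the regression fit itself are not shown.

```python
def build_predictors(choices, rewards, max_lag=4):
    """Build one row per trial t: [intercept, Rewarded(t-1..t-max_lag),
    Unrewarded(t-1..t-max_lag)], following the coding in the table above.

    choices: port chosen on each trial; rewards: 1 (rewarded) or 0 (unrewarded).
    Trials without a full history of max_lag previous trials are skipped.
    """
    rows = []
    for t in range(max_lag, len(choices)):
        rewarded, unrewarded = [], []
        for k in range(1, max_lag + 1):
            # +1 if trial t-k used the same port as trial t, otherwise -1
            same = 1 if choices[t - k] == choices[t] else -1
            if rewards[t - k] == 1:
                rewarded.append(same)
                unrewarded.append(0)
            else:
                rewarded.append(0)
                unrewarded.append(same)
        rows.append([1] + rewarded + unrewarded)
    return rows


# Toy example (made-up data): five trials give a single row for trial t = 4.
example_rows = build_predictors([0, 0, 1, 0, 0], [1, 0, 1, 0, 1])
```

For the toy data, the single row is `[1, 0, -1, 0, 1, 1, 0, 1, 0]`: the intercept, then the four Rewarded predictors, then the four Unrewarded predictors.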

Reinforcement-learning models

Reinforcement-learning (RL) models predict that choices are based on outcomes from different actions that incrementally accrue over many trials. To determine if circuit ablation influenced longer-term dependencies than those captured with the logistic regression, choice data were fit with five different RL models: (1) differential forgetting RL model (DF RL model), (2) forgetting RL model (F RL model), (3) standard Q-learning model, (4) forgetting Q-learning model (F Q-learning model), and (5) differential-forgetting Q-learning model (DF Q-learning model). Trials were split into the acquisition and reversal blocks and each model was fit separately using 100 different initial parameter values. Starting action values for each acquisition block were set to 0 for models 1 and 2, and 0.33 for models 3–5. Starting values for the reversal block corresponded to the end values of the acquisition block. The fminsearch function in Matlab (Mathworks, Inc) was used to maximize the log likelihood of the data given the parameters (by minimizing the negative log likelihood).

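The value updates for each model are as follows, where a denotes the action chosen on trial t:

Differential forgetting RL model:

$$
Q_i(t+1) = \begin{cases} \gamma_C\, Q_i(t) + \Delta(t) & \text{if } a = i \\ \gamma_U\, Q_i(t) & \text{if } a \neq i \end{cases}
$$

Forgetting RL model:

$$
Q_i(t+1) = \begin{cases} \gamma\, Q_i(t) + \Delta(t) & \text{if } a = i \\ \gamma\, Q_i(t) & \text{if } a \neq i \end{cases}
$$

Q-learning model:

$$
Q_i(t+1) = \begin{cases} Q_i(t) + \left(r(t) - Q_i(t)\right) & \text{if } a = i \\ Q_i(t) & \text{if } a \neq i \end{cases}
$$

Forgetting Q-learning model:

$$
Q_i(t+1) = \begin{cases} \gamma\, Q_i(t) + \left(r(t) - Q_i(t)\right) & \text{if } a = i \\ \gamma\, Q_i(t) & \text{if } a \neq i \end{cases}
$$

Differential forgetting Q-learning model:

$$
Q_i(t+1) = \begin{cases} \gamma_C\, Q_i(t) + \left(r(t) - Q_i(t)\right) & \text{if } a = i \\ \gamma_U\, Q_i(t) & \text{if } a \neq i \end{cases}
$$

where Δ(t) = Δ+ and r(t) = 1 for rewarded trials, whereas Δ(t) = Δ0 and r(t) = 0 for unrewarded trials.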

The AIC for each model was calculated for the acquisition and reversal blocks both before and after DT/saline administration and the AIC for each model summed across animals. These results are presented in Supplemental Figure 5A–B. The AIC for the DF RL model was lower compared to all other models, indicating that this model best fit the rat choice data. We conducted a two-fold cross validation procedure (Ito and Doya, 2009) to compare the DF RL model to the F RL model that we have previously used (Barraclough et al., 2004; Groman et al., 2016, 2018). The negative log-likelihood for the DF RL model was lower than that for the F RL model (Supplemental Figure 5C), indicating that the DF RL model was more accurate in predicting the choice behavior of rats compared to the F RL model.
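For reference, the model comparison relies on the standard definition of the AIC, 2k − 2 ln L, where k is the number of free parameters and L is the maximized likelihood. The Python sketch below shows how per-animal values could be summed for two models; the function names and the negative log-likelihood values are made up for illustration and are not data from this study.

```python
def aic(neg_log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln L, written with -ln L as input."""
    return 2 * n_params + 2 * neg_log_likelihood


def summed_aic(neg_log_likelihoods, n_params):
    """Sum the AIC of one model across animals (one -ln L value per animal)."""
    return sum(aic(nll, n_params) for nll in neg_log_likelihoods)


# Hypothetical -ln L values for two animals under two models.
# The DF RL model has four free parameters; the F RL model, as written above,
# has three (a single decay rate plus the two outcome strengths).
df_rl_total = summed_aic([100.0, 90.0], n_params=4)
f_rl_total = summed_aic([105.0, 96.0], n_params=3)
```

A lower summed AIC favors a model after penalizing its extra parameters, which is the sense in which the DF RL model is described as the best fit.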

The DF RL model contained four free parameters that estimated the decay rate for chosen options, the decay rate for unchosen options, and the strength of appetitive and aversive outcomes. Specifically, the value function for the chosen option (i) was updated according to the following:

$$
Q_i(t+1) = \gamma_C\, Q_i(t) + \Delta(t)
$$

where the decay rate γC determines how quickly the chosen action value decays and Δ(t) indicates the change in the action value that depends on the outcome in trial t. If the outcome of the trial was a reward, then the value function of the chosen port was updated by Δ(t) = Δ+, the reinforcing strength of reward. If the outcome of the trial was not rewarded, then the value function of the chosen port was updated by Δ(t) = Δ0, the aversive strength of no reward. The value for unchosen actions was updated according to the following:

$$
Q_i(t+1) = \gamma_U\, Q_i(t)
$$

where the decay rate γU determines how quickly the unchosen action value decays. Choice probability was calculated according to a softmax function, and trial-by-trial choice data were fit with these four parameters (γC, γU, Δ+, and Δ0), selected to maximize the likelihood of each rat’s sequence of choices in each session and phase.
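To make the fitting objective concrete, the following Python sketch computes the negative log likelihood of a choice sequence under the DF RL update rules. It is an illustration, not the authors' Matlab code: the function names are hypothetical, and the softmax is assumed to be applied directly to the action values with no separate inverse-temperature parameter, since the text lists only four free parameters.

```python
import math


def softmax(values):
    """Convert action values into choice probabilities."""
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def df_rl_neg_log_likelihood(params, choices, rewards, n_ports=3, q0=None):
    """Negative log likelihood of the choices under the DF RL model.

    params: (gamma_c, gamma_u, delta_pos, delta_zero)
    choices: chosen port index on each trial; rewards: 1 or 0 per trial.
    q0: starting action values (defaults to 0 for every port).
    Returns (negative log likelihood, final action values).
    """
    gamma_c, gamma_u, delta_pos, delta_zero = params
    q = list(q0) if q0 is not None else [0.0] * n_ports
    nll = 0.0
    for a, r in zip(choices, rewards):
        p = softmax(q)
        nll -= math.log(p[a])
        delta = delta_pos if r == 1 else delta_zero
        # Chosen value decays by gamma_c and gains delta; unchosen decay by gamma_u.
        q = [gamma_c * q[i] + delta if i == a else gamma_u * q[i]
             for i in range(n_ports)]
    return nll, q


# Toy example with made-up parameters and data.
nll, q_end = df_rl_neg_log_likelihood((0.5, 0.8, 1.0, -0.5), [0, 0], [1, 0])
```

A simplex minimizer (such as fminsearch) could be applied to the first returned value, and the returned final action values could serve as `q0` for the reversal block, mirroring the statement that reversal-block starting values were the end values of the acquisition block.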

QUANTIFICATION AND STATISTICAL ANALYSIS

Data are expressed as mean ± SEM. All analyses were conducted in SPSS (v25; IBM) using generalized estimating equations (GEE), an extension of generalized linear models for longitudinal/clustered data analysis (Liang and Zeger, 1986). GEE is a population-level approach based on the quasi-likelihood function that provides a population-averaged estimate of parameters. GEE permits the specification of a working correlation matrix to account for within-subject correlation of responses on dependent variables of different distributions, including normal, binomial, and Poisson, that yields unbiased regression parameters relative to ordinary least squares regression (Ballinger, 2004). Compared to repeated measures ANOVA, GEE is able to achieve higher power with smaller sample sizes and fewer repeated measurements (Ma et al., 2012). Data were entered into a GEE model as repeated measures using a probability distribution based on the known properties of the data. The working correlation matrix for each model was determined by comparing the quasi-likelihood criterion (Pan, 2001). Factors in the model could include time (before and after drug administration), brain circuit (OFC→NAcc, OFC→Amygdala, Amygdala→OFC), toxin (diphtheria toxin or saline), task phase (acquisition or reversal), trial-lag (1 to 4), or RL parameter (γC, γU, Δ+, or Δ0). Statistical significance of explanatory factors included in the model was assessed with the Wald χ2 test. Post-hoc tests of significant interactions consisted of computing lower-order comparisons (e.g., 3-way interactions, 2-way interactions) between experimental groups (saline vs. DT). Nonparametric comparisons were conducted using the Mann-Whitney test.

Supplementary Material

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Antibodies | | |
| Rabbit anti-FLAG | Cell Signaling | Catalogue Number: 2368S; RRID:AB_2217020 |
| Chicken anti-GFP | Abcam | Catalogue Number: AB13970; RRID:AB_300798 |
| Mouse anti-Cre | Millipore | Catalogue Number: MAB3120; RRID:AB_2085748 |
| Bacterial and Virus Strains | | |
| pENN.AAV.CMVs.Pl.Cre.rBG | James Wilson Lab | Addgene AAV6; 105537-AAV6.2 |
| AAVrh10-DIO-EGFP-DTR-FLAG | Xu et al., 2015 | N/A |
| Experimental Models: Organisms/Strains | | |
| Long Evans rats | Charles River Laboratories | RRID:RGD_2308852 |
| Software and Algorithms | | |
| Matlab 2018a | Mathworks | https://www.mathworks.com/products/matlab.html; RRID:SCR_001622 |
| SPSS version 25 | IBM | https://www.ibm.com/products/spss-statistics; RRID:SCR_002865 |

Highlights

Distinct OFC circuits make unique contributions to flexible decision making

OFC-nucleus accumbens circuit incorporates negative outcomes into values

OFC-amygdala circuit stabilizes action values

Amygdala-OFC circuit incorporates positive outcomes into values

Acknowledgements:

This research was supported by Public Health Service grants from the National Institute on Drug Abuse (DA041480, DA043443), the National Institute of Mental Health (MH091861), a NARSAD Young Investigator Award from the Brain and Behavior Research Foundation, and the State of Connecticut through its support of the Ribicoff Research Facilities.

Footnotes

The authors declare no competing financial interests.

References:

- Abe H, Lee D (2011) Distributed Coding of Actual and Hypothetical Outcomes in the Orbital and Dorsolateral Prefrontal Cortex. Neuron 70:731–741.

- Ambroggi F, Ishikawa A, Fields HL, Nicola SM (2008) Basolateral Amygdala Neurons Facilitate Reward-Seeking Behavior by Exciting Nucleus Accumbens Neurons. Neuron 59:648–661.

- Ballinger GA (2004) Using Generalized Estimating Equations for Longitudinal Data Analysis.

- Barraclough DJ, Conroy ML, Lee D (2004) Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci 7:404–410.

- Baxter MG, Browning PGF (2007) Two Wrongs Make a Right: Deficits in Reversal Learning after Orbitofrontal Damage Are Improved by Amygdala Ablation. Neuron 54:1–3.

- Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS (2007) Learning the value of information in an uncertain world. Nat Neurosci 10:1214–1221.

- Beyeler A, Chang C-J, Silvestre M, Lévêque C, Namburi P, Wildes CP, Tye KM (2018) Organization of Valence-Encoding and Projection-Defined Neurons in the Basolateral Amygdala. Cell Rep 22:905–918.

- Beyeler A, Namburi P, Glober GF, Luck R, Wildes CP, Tye KM (2016) Divergent Routing of Positive and Negative Information from the Amygdala during Memory Retrieval. Neuron 90:348–361.

- Boulougouris V, Dalley JW, Robbins TW (2007) Effects of orbitofrontal, infralimbic and prelimbic cortical lesions on serial spatial reversal learning in the rat. Behav Brain Res 179:219–228.

- Burguiere E, Monteiro P, Feng G, Graybiel AM (2013) Optogenetic Stimulation of Lateral Orbitofronto-Striatal Pathway Suppresses Compulsive Behaviors. Science 340:1243–1246.

- Cavada C, Compañy T, Tejedor J, Cruz-Rizzolo RJ, Reinoso-Suárez F (2000) The Anatomical Connections of the Macaque Monkey Orbitofrontal Cortex. A Review. Cereb Cortex 10:220–242. [DOI] [PubMed] [Google Scholar]

- Chau BKH, Sallet J, Papageorgiou GK, Noonan MP, Bell AH, Walton ME, Rushworth MFS (2015) Contrasting Roles for Orbitofrontal Cortex and Amygdala in Credit Assignment and Learning in Macaques. Neuron 87:1106–1118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Costa VD, Dal Monte O, Lucas DR, Murray EA, Averbeck BB (2016) Amygdala and Ventral Striatum Make Distinct Contributions to Reinforcement Learning. Neuron 92:505–517. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ (2011) Model-based influences on humans’ choices and striatal prediction errors. Neuron 69:1204–1215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dayan P, Daw ND (2008) Decision theory, reinforcement learning, and the brain. Cogn Affect Behav Neurosci 8:429–453. [DOI] [PubMed] [Google Scholar]

- Dias R, Robbins TW, Roberts AC (1996) Primate analogue of the Wisconsin Card Sorting Test: effects of excitotoxic lesions of the prefrontal cortex in the marmoset. Behav Neurosci 110:872–886. [DOI] [PubMed] [Google Scholar]

- Donahue CH, Lee D (2015) Dynamic routing of task-relevant signals for decision making in dorsolateral prefrontal cortex. Nat Neurosci 18:295–301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Donahue CH, Seo H, Lee D (2013) Cortical signals for rewarded actions and strategic exploration. Neuron 80:223–234. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fillmore MT, Rush CR (2006) Polydrug abusers display impaired discrimination-reversal learning in a model of behavioural control. J Psychopharmacol 20:24–32. [DOI] [PubMed] [Google Scholar]

- Frank MJ, Claus ED (2006) Anatomy of a decision: Striato-orbitofrontal interactions in reinforcement learning, decision making, and reversal. Psychol Rev 113:300–326.

- Ghahremani DG, Tabibnia G, Monterosso J, Hellemann G, Poldrack RA, London ED (2011) Effect of modafinil on learning and task-related brain activity in methamphetamine-dependent and healthy individuals. Neuropsychopharmacology 36:950–959.

- Gremel CM, Costa RM (2013) Orbitofrontal and striatal circuits dynamically encode the shift between goal-directed and habitual actions. Nat Commun 4:2264.

- Groman SM, Massi B, Mathias S, Curry D, Lee D, Taylor JR (2019a) Neurochemical and behavioral dissections of decision-making in a rodent multi-stage task. J Neurosci 39:295–306.

- Groman SM, Massi B, Mathias SR, Lee D, Taylor JR (2019b) Model-free and model-based influences in addiction-related behaviors. Biol Psychiatry 85:936–945.

- Groman SM, Rich KM, Smith NJ, Lee D, Taylor JR (2018) Chronic Exposure to Methamphetamine Disrupts Reinforcement-Based Decision Making in Rats. Neuropsychopharmacology 43:770–780.

- Groman SM, Smith NJ, Petrulli JR, Massi B, Chen L, Ropchan J, Huang Y, Lee D, Morris ED, Taylor JR (2016) Dopamine D3 receptor availability is associated with inflexible decision making. J Neurosci 36:6732–6741.

- Haber SN, Kunishio K, Mizobuchi M, Lynd-Balta E (1995) The orbital and medial prefrontal circuit through the primate basal ganglia. J Neurosci 15:4851–4867.

- Hampton AN, Adolphs R, Tyszka JM, O’Doherty JP (2007) Contributions of the Amygdala to Reward Expectancy and Choice Signals in Human Prefrontal Cortex. Neuron 55:545–555.

- Hu Y, Salmeron BJ, Gu H, Stein EA, Yang Y (2015) Impaired Functional Connectivity Within and Between Frontostriatal Circuits and Its Association With Compulsive Drug Use and Trait Impulsivity in Cocaine Addiction. JAMA Psychiatry 72:584.

- Itami S, Uno H (2002) Orbitofrontal cortex dysfunction in attention-deficit hyperactivity disorder revealed by reversal and extinction tasks. Neuroreport 13:2453–2457.

- Ito M, Doya K (2009) Validation of decision-making models and analysis of decision variables in the rat basal ganglia. J Neurosci 29:9861–9874.

- Izquierdo A (2017) Functional Heterogeneity within Rat Orbitofrontal Cortex in Reward Learning and Decision Making. J Neurosci 37:10529–10540.

- Izquierdo A, Darling C, Manos N, Pozos H, Kim C, Ostrander S, Cazares V, Stepp H, Rudebeck PH (2013) Basolateral amygdala lesions facilitate reward choices after negative feedback in rats. J Neurosci 33:4105–4109.

- Izquierdo A, Murray EA (2007) Selective bilateral amygdala lesions in rhesus monkeys fail to disrupt object reversal learning. J Neurosci 27:1054–1062.

- Keistler CR, Hammarlund E, Barker JM, Bond CW, DiLeone RJ, Pittenger C, Taylor JR (2017) Regulation of Alcohol Extinction and Cue-Induced Reinstatement by Specific Projections among Medial Prefrontal Cortex, Nucleus Accumbens, and Basolateral Amygdala. J Neurosci 37:4462–4471.

- Kelley AE (2004) Ventral striatal control of appetitive motivation: role in ingestive behavior and reward-related learning. Neurosci Biobehav Rev 27:765–776.

- Kennerley SW, Wallis JD (2009) Encoding of reward and space during a working memory task in the orbitofrontal cortex and anterior cingulate sulcus. J Neurophysiol 102:3352–3364.

- Kim J, Ragozzino ME (2005) The involvement of the orbitofrontal cortex in learning under changing task contingencies. Neurobiol Learn Mem 83:125–133.

- Laubach M, Amarante LM, Swanson K, White SR (2018) What, If Anything, Is Rodent Prefrontal Cortex? eNeuro 5:ENEURO.0315-18.2018.

- Lee D (2013) Decision making: from neuroscience to psychiatry. Neuron 78:233–248.

- Lee D, McGreevy BP, Barraclough DJ (2005) Learning and decision making in monkeys during a rock–paper–scissors game. Cogn Brain Res 25:416–430.

- Lee D, Seo H, Jung MW (2012) Neural Basis of Reinforcement Learning and Decision Making. Annu Rev Neurosci 35:287–308.

- Liang K-Y, Zeger SL (1986) Longitudinal Data Analysis Using Generalized Linear Models. Biometrika 73:13–22.

- Lichtenberg NT, Pennington ZT, Holley SM, Greenfield VY, Cepeda C, Levine MS, Wassum KM (2017) Basolateral amygdala to orbitofrontal cortex projections enable cue-triggered reward expectations. J Neurosci 37:8374–8384.

- LoPresti ML, Schon K, Tricarico MD, Swisher JD, Celone KA, Stern CE (2008) Working memory for social cues recruits orbitofrontal cortex and amygdala: a functional magnetic resonance imaging study of delayed matching to sample for emotional expressions. J Neurosci 28:3718–3728.

- Ma Y, Mazumdar M, Memtsoudis SG (2012) Beyond Repeated-Measures Analysis of Variance. Reg Anesth Pain Med 37:99–105.

- Manning EE, Dombrovski AY, Torregrossa MM, Ahmari SE (2018) Impaired instrumental reversal learning is associated with increased medial prefrontal cortex activity in Sapap3 knockout mouse model of compulsive behavior. Neuropsychopharmacology:1.

- Massi B, Donahue CH, Lee D (2018) Volatility Facilitates Value Updating in the Prefrontal Cortex. Neuron 99:598–608.e4.

- McAlonan K, Brown VJ (2003) Orbital prefrontal cortex mediates reversal learning and not attentional set shifting in the rat. Behav Brain Res 146:97–103.

- McDannald MA, Lucantonio F, Burke KA, Niv Y, Schoenbaum G (2011) Ventral striatum and orbitofrontal cortex are both required for model-based, but not model-free, reinforcement learning. J Neurosci 31:2700–2705.

- McEnaney KW, Butter CM (1969) Perseveration of responding and nonresponding in monkeys with orbital frontal ablations. J Comp Physiol Psychol 68:558–561.

- Miller KJ, Botvinick MM, Brody CD (2017) Dorsal hippocampus contributes to model-based planning. Nat Neurosci 20:1269–1276.

- Moorman DE, Aston-Jones G (2014) Orbitofrontal Cortical Neurons Encode Expectation-Driven Initiation of Reward-Seeking. J Neurosci 34:10234–10246.

- Morrison SE, Saez A, Lau B, Salzman CD (2011) Different Time Courses for Learning-Related Changes in Amygdala and Orbitofrontal Cortex. Neuron 71:1127–1140.

- Nogueira R, Abolafia JM, Drugowitsch J, Balaguer-Ballester E, Sanchez-Vives MV, Moreno-Bote R (2017) Lateral orbitofrontal cortex anticipates choices and integrates prior with current information. Nat Commun 8:14823.

- O’Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C (2001) Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci 4:95–102.

- Pan W (2001) Akaike’s information criterion in generalized estimating equations. Biometrics 57:120–125.

- Parker NF, Cameron CM, Taliaferro JP, Lee J, Choi JY, Davidson TJ, Daw ND, Witten IB (2016) Reward and choice encoding in terminals of midbrain dopamine neurons depends on striatal target. Nat Neurosci 19:845–854.

- Parvaz MA, Konova AB, Proudfit GH, Dunning JP, Malaker P, Moeller SJ, Maloney T, Alia-Klein N, Goldstein RZ (2015) Impaired Neural Response to Negative Prediction Errors in Cocaine Addiction. J Neurosci 35:1872–1879.

- Paton JJ, Belova MA, Morrison SE, Salzman CD (2006) The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 439:865–870.

- Paulus MP, Hozack N, Frank L, Brown GG, Schuckit MA (2003) Decision making by methamphetamine-dependent subjects is associated with error-rate-independent decrease in prefrontal and parietal activation. Biol Psychiatry 53:65–74.

- Peters S, Peper JS, Van Duijvenvoorde ACK, Braams BR, Crone EA (2016) Amygdala-orbitofrontal connectivity predicts alcohol use two years later: a longitudinal neuroimaging study on alcohol use in adolescence.

- Rangel A, Camerer C, Montague PR (2008) A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci 9:545–556.

- Ridderinkhof KR, van den Wildenberg WPM, Segalowitz SJ, Carter CS (2004) Neurocognitive mechanisms of cognitive control: The role of prefrontal cortex in action selection, response inhibition, performance monitoring, and reward-based learning. Brain Cogn 56:129–140.

- Rodriguez PF, Aron AR, Poldrack RA (2006) Ventral–striatal/nucleus–accumbens sensitivity to prediction errors during classification learning. Hum Brain Mapp 27:306–313.

- Roitman MF, Wheeler RA, Carelli RM (2005) Nucleus Accumbens Neurons Are Innately Tuned for Rewarding and Aversive Taste Stimuli, Encode Their Predictors, and Are Linked to Motor Output. Neuron 45:587–597.

- Rolls ET, Critchley HD, Mason R, Wakeman EA (1996) Orbitofrontal cortex neurons: role in olfactory and visual association learning. J Neurophysiol 75:1970–1981.

- Rudebeck PH, Saunders RC, Lundgren DA, Murray EA (2017) Specialized Representations of Value in the Orbital and Ventrolateral Prefrontal Cortex: Desirability versus Availability of Outcomes. Neuron 95:1208–1220.

- Rudebeck PH, Saunders RC, Prescott AT, Chau LS, Murray EA (2013) Prefrontal mechanisms of behavioral flexibility, emotion regulation and value updating. Nat Neurosci 16:1140–1145.

- Saez RA, Saez A, Paton JJ, Lau B, Salzman CD (2017) Distinct Roles for the Amygdala and Orbitofrontal Cortex in Representing the Relative Amount of Expected Reward. Neuron 95:70–77.

- Schoenbaum G, Chiba AA, Gallagher M (1999) Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci 19:1876–1884.

- Schoenbaum G, Setlow B (2003) Lesions of nucleus accumbens disrupt learning about aversive outcomes. J Neurosci 23:9833–9841.

- Schoenbaum G, Setlow B, Nugent SL, Saddoris MP, Gallagher M (2003) Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learn Mem 10:129–140.

- Setlow B, Schoenbaum G, Gallagher M (2003) Neural Encoding in Ventral Striatum during Olfactory Discrimination Learning. Neuron 38:625–636.

- Singh T, McDannald M, Haney R, Cerri D, Schoenbaum G (2010) Nucleus accumbens core and shell are necessary for reinforcer devaluation effects on Pavlovian conditioned responding. Front Integr Neurosci 4:126.

- Stalnaker TA, Cooch NK, McDannald MA, Liu T-L, Wied H, Schoenbaum G (2014) Orbitofrontal neurons infer the value and identity of predicted outcomes. Nat Commun 5:3926.

- Stalnaker TA, Liu T-L, Takahashi YK, Schoenbaum G (2018) Orbitofrontal neurons signal reward predictions, not reward prediction errors. Neurobiol Learn Mem 153:137–143.

- Sul JH, Kim H, Huh N, Lee D, Jung MW (2010) Distinct roles of rodent orbitofrontal and medial prefrontal cortex in decision making. Neuron 66:449–460.

- Taswell CA, Costa VD, Murray EA, Averbeck BB (2018) Ventral striatum’s role in learning from gains and losses. Proc Natl Acad Sci 115:E12398–E12406.

- Thompson PM, Hayashi KM, Simon SL, Geaga JA, Hong MS, Sui Y, Lee JY, Toga AW, Ling W, London ED (2004) Structural abnormalities in the brains of human subjects who use methamphetamine. J Neurosci 24:6028–6036.

- Tremblay L, Schultz W (1999) Relative reward preference in primate orbitofrontal cortex. Nature 398:704–708.

- Volkow ND, Fowler JS, Wolf AP, Hitzemann R, Dewey S, Bendriem B, Alpert R, Hoff A (1991) Changes in brain glucose metabolism in cocaine dependence and withdrawal. Am J Psychiatry 148:621–626.

- Wallis JD (2007) Orbitofrontal Cortex and Its Contribution to Decision-Making. Annu Rev Neurosci 30:31–56.