Abstract

Network analysis has been applied to various correlation matrix data. Thresholding on the value of the pairwise correlation is probably the most straightforward and common method to create a network from a correlation matrix. However, there have been criticisms on this thresholding approach such as an inability to filter out spurious correlations, which have led to proposals of alternative methods to overcome some of the problems. We propose a method to create networks from correlation matrices based on optimization with regularization, where we lay an edge between each pair of nodes if and only if the edge is unexpected from a null model. The proposed algorithm is advantageous in that it can be combined with different types of null models. Moreover, the algorithm can select the most plausible null model from a set of candidate null models using a model selection criterion. For three economic datasets, we find that the configuration model for correlation matrices is often preferred to standard null models. For country-level product export data, the present method better predicts main products exported from countries than sample correlation matrices do.

Keywords: network inference, principle of maximum entropy, Lasso, thresholding, sparsity

1. Introduction

Many networks have been constructed from correlation matrices. For instance, asset graphs are networks in which a node represents a stock of a company and an edge between a pair of nodes indicates strong correlations between two stock prices [1,2]. A variety of tools for network analysis, such as centralities, network motifs and community structure, can be used for studying properties of correlation networks [3]. Network analysis may provide information that is not revealed by other analysis methods for correlation matrices such as principal component analysis and factor analysis. Network analysis on correlation data is commonly accepted across various domains [1,4–8].

The present paper proposes a new method for constructing networks from correlation matrices. There exist various methods for generating sparse networks from correlation matrices such as those based on the minimum spanning tree [9] and its variant [10]. Perhaps the most widely used technique is thresholding, i.e. retaining pairs of variables as edges if and only if the correlation or its absolute value is larger than a threshold value [1,4–8]. Because the structure of the generated networks may be sensitive to the threshold value, a variety of criteria for choosing the threshold value have been proposed [1,4–6,11]. An alternative is to analyse a collection of networks generated with different threshold values [6].

The thresholding method is problematic when large correlations do not imply dyadic relationships between nodes [12,13]. For example, in a time series of stock prices, global economic trends (e.g. recession and inflation) simultaneously affect different stock prices, which can lead to a large correlation between various pairs of stocks [14,15]. Additionally, the correlation between two nodes may be accounted for by other nodes. A major instance of this phenomenon is that, if node v1 is strongly correlated with nodes v2 and v3, then v2 and v3 would be correlated even if there is no direct relationship between them [16–18]. For example, the murder rate (corresponding to node v2) is positively correlated with the ice cream sales (node v3), which is accounted for by the fluctuations in the temperature (node v1), i.e. people are more likely to interact with others and purchase ice creams when it is hot [13].

The graphical lasso [19–23], which estimates precision matrices, and the sparse covariance estimation [24] were previously proposed for filtering out spurious correlations. These methods consider the correlation between nodes to be spurious if the correlation is accounted for by random fluctuations under a white noise null model, which assumes that observations at all nodes are independent of each other. However, nodes tend to be correlated with each other in empirical data owing to trivial factors (e.g. global trend), which calls for different null models that emulate different types of spurious correlations [15,25–28]. To incorporate such null models into network inference, we present an algorithm named the Scola, standing for Sparse network construction from COrrelational data with LAsso. The Scola places edges between node pairs if the correlations are not accounted for by a null model of choice. A main advantage of the Scola is that it leaves the choice of null models to users, enabling them to filter out different types of trivial relationships between nodes. Furthermore, the Scola can select the most plausible null model for the given data among a set of null models using a model selection framework. A Python code for the Scola is available at [29].

2. Methods

(a). Construction of networks from correlation matrices

Consider N variables, which we refer to as nodes. We aim to construct a network on N nodes, in which edges indicate the correlations that are not attributed to some trivial properties of the system. Let C = (Cij) be the N × N correlation matrix, where Cij is the correlation between nodes i and j, i.e. −1 ≤ Cij ≤ 1 and Cii = 1 for 1 ≤ i ≤ N. We write C as

| 2.1 |

Matrix Cnull = (Cnullii) is an N × N correlation matrix, where Cnullii is the mean value of the correlation between nodes i and j under a null model. For example, if every node is independent of each other under the null model, then one sets Cnull = I, where I is the N × N identity matrix. We introduce three null models in §2b. Matrix W = (Wij) is an N × N matrix representing the deviation from the null model. We place an edge between nodes i and j (i.e. Wij≠0) if and only if the correlation Cij is sufficiently different from that for the null model. We note that edges are undirected and may have positive or negative weights. The network is assumed not to have self-loops (i.e. Wii = 0) because the diagonal elements of Cnull and Csample are always equal to one.

What is the statistical assumption underlying equation (2.1)? Consider a set of N random variables, denoted by x1, …, xN, for which we compute the correlation matrix C. We write

| 2.2 |

for each i where xnulli is the random variable for node i under the null model whose covariance matrix is Cnull, and Δxi is the deviation from the null model. If we assume that xnulli and Δxi are statistically independent of each other, we obtain equation (2.1).

We estimate W from data as follows. Assume that we have L samples of data observed at the N nodes, based on which the correlation matrix is calculated. Denote by X = (xℓi) the L × N matrix, in which xℓi is the value observed at node i in the ℓth sample. Let xℓ = [xℓ1, xℓ2, …, xℓN] be the ℓth sample. We assume that each sample xℓ is independently and identically distributed according to an N-dimensional multivariate Gaussian distribution with mean zero. For mathematical convenience, we assume that X is preprocessed such that the average and the variance of xℓi over the L samples are zero and one respectively, i.e. and for 1 ≤ i ≤ N.

Our goal is to find W that maximizes the likelihood of X, i.e.

| 2.3 |

where → p is the transposition. It should be noted that C may not be equal to the sample Pearson correlation matrix, denoted by Csample≡X → pX/L [30], if L is finite. The log likelihood is given by

| 2.4 |

Using , one obtains

| 2.5 |

Substitution of equation (2.1) into equation (2.5) leads to the log likelihood of W as follows:

| 2.6 |

The log-likelihood is a concave function with respect to W. Therefore, one obtains the maximizer of , denoted by WMLE, by solving , i.e.

| 2.7 |

In practice, WMLE overfits the given data, leading to a network with many spurious edges. This is because the number of samples, L, is often smaller than the number of elements in WMLE, i.e. L < N(N − 1)/2, as is the case for the estimation of correlation matrices and precision matrices [19,24]. To prevent overfitting, we impose penalties on the number of non-zero elements in W using the Lasso [31]. The Lasso is commonly used for regression analysis to obtain a model with a small number of non-zero regression coefficients. The Lasso is also used for estimating sparse covariance matrices (i.e. C) [24] and sparse precision matrices (i.e. C−1) [19] with a small number of samples. (We discuss these methods in §4.) Here, we apply the Lasso to obtain a sparse W. Specifically, we maximize penalized likelihood function

| 2.8 |

where λij≥0 is the Lasso penalty for Wij. Large values of λij yield sparse W. Because is not concave with respect to W, one cannot analytically find the maximum of . Therefore, we numerically maximize using an extension of a previous algorithm [24], which is described in §2d.

The penalized likelihood contains N(N − 1)/2 Lasso penalty parameters, λij. A simple choice is to use the same value for all λij. However, this method is problematic [32,33]. If one imposes the same penalty to all node pairs, one tends to obtain either a sparse network with small edge weights or a dense network with large edge weights. However, sparse networks with large edge weights or dense networks with small edge weights may yield a larger likelihood. A remedy for this problem is the adaptive Lasso [33], which sets

| 2.9 |

where and γ > 0 are hyperparameters. With the adaptive Lasso, a small penalty is imposed on a pair of nodes i and j if WMLEij is far from zero, allowing the edge to have a large weight. If one has sufficiently many samples, the adaptive Lasso correctly identifies zero and non-zero regression coefficients (i.e. Wij in our case) given an appropriate value and any positive γ value [33]. The estimated Wij values did not much depend on γ in our numerical experiments (electronic supplementary material). Therefore, we set γ = 2, which is a typical value [21,33,34]. Hyperparameter controls the number of edges in the network (i.e. the number of non-zero elements in W). A large yields sparse networks. We describe how to choose in §2c.

(b). Null models for correlation matrices

The Scola accepts various null correlation matrices, i.e. Cnull. Nevertheless, we mainly focus on the configuration model for correlation matrices [28]. Although we also examined two other null models in numerical experiments, the configuration model was mostly chosen in the model selection (§3b).

Our configuration model is based on the principle of maximum entropy. With this method, one determines the probability distribution of data, denoted by , by maximizing the following Shannon entropy given by

| 2.10 |

with respect to under constraints, where is an L × N matrix such that is the value at node i in the ℓth sample. Let be the sample covariance matrix for . In the configuration model, we impose that each node has the same expected variance as that for the original data, i.e.

| 2.11 |

We also impose that the row sum (equivalently, the column sum) of Csample is preserved, i.e.

| 2.12 |

Equation (2.12) is analogous to the case of the configuration model for networks, which by definition preserves the row sum of the adjacency matrix of a network, or equivalently the degree of each node. We note that the ith row sum of Csample is proportional to the correlation between the observation at the ith node and the average of the observations over all nodes [15].

Denote by Ccon the expectation of . We compute Ccon, which we use as the null correlation matrix (i.e. Cnull), as follows. In [28], we showed that is a multivariate Gaussian distribution with mean zero. Under , Ccon is equal to the variance parameter of [28,30,35]. By substituting equation (2.3) into equations (2.10), (2.11) and (2.12), we rewrite the maximization problem as

| 2.13 |

subject to

| 2.14 |

Equation (2.13) is concave with respect to Ccon. Moreover, the feasible region defined by equation (2.14) is a convex set. Therefore, the maximization problem is a convex problem such that one can efficiently find the global optimum. We compute Ccon using an in-house Python program available at [36].

In addition to the configuration model, we consider two other null models. The first model is the white noise model, in which the signal at each node is independent of each other and has the same variance with that in the original data. The null correlation matrix for the white noise model, denoted by CWN, is given by CWNij = 0 for i≠j and CWNii = 1 for 1 ≤ i ≤ N. The white noise model is often used in the analysis of correlation networks [15,37].

Another null model is the Hirschberger-Qi-Steuer (HQS) model [27], in which each node pair is assumed to be correlated to the same extent as expectation. As is the case for the configuration model for correlation matrices, the original HQS model provides a probability distribution of covariance matrices. The HQS model preserves the variance of the signal at each node averaged over all the nodes as expectation. Moreover, the HQS model preserves the average and variance of the correlation values over different pairs of nodes in the original correlation matrix as expectation. The HQS model is analogous to the Erdős-Rényi random graph for networks, in which each pair of nodes is adjacent with the same probability [28]. We use the expectation of the correlation matrix generated by the HQS model, denoted by CHQS. We obtain for i≠j and CHQSii = 1 [27].

(c). Model selection

We determine the value of based on a model selection criterion. Commonly used criteria, Akaike information criterion (AIC) and Bayesian information criterion (BIC), favour an excessively rich model if the model has many parameters relative to the number of samples [38]. As discussed in §2a, this is often the case for the estimation of correlation matrices [19,22,24].

We use the extended BIC (EBIC) to circumvent this problem [22,38]. Let be the estimated correlation matrix at , i.e. , where is the network one estimates by maximizing . We adopt that minimizes

| 2.15 |

with respect to . In equation (2.15), M is the number of edges in the network (i.e. the half of the number of non-zero elements in W), K is the number of parameters of the null model and β is a parameter. The white noise model introduced in §2b does not have parameters. Therefore, K = 0. The HQS model has K = 1 parameter, i.e. the average of the off-diagonal elements. The configuration model yields K = N, i.e. the row sum of each node. It should be noted that we compute the number of parameters by exploiting the fact that Csample is a correlation matrix, i.e. the diagonal entries of Csample are always equal to one.

Parameter β∈[0, 1] determines the prior distribution for a Bayesian inference. The prior distribution affects the sparsity of networks; a large β value would yield sparse networks. Nevertheless, the effect of the prior distribution on the EBIC value diminishes when the number of samples (i.e. L) increases. We adopt a typical value, i.e. β = 0.5, which provided reasonable results for linear regressions and the estimation of precision matrices [22,23,38]. We adopt the golden-section search method to find the value that yields the minimum EBIC value [39] (appendix A).

In addition to selecting the value, the EBIC can be used for selecting a null model among different types of null models. In this case, we compute W for each null model with the value determined by the golden-section search method. Then, we select the pair of Cnull and W that minimizes the EBIC value.

(d). Maximizing the penalized likelihood

To maximize in terms of W, we use the minorize-maximize (MM) algorithm [24]. Although the MM algorithm may not find the global maximum, it converges to a local maximum. The MM algorithm starts from initial guess W = 0 and iterates rounds of the following minorization step and the maximization step.

In the minorization step, we approximate around the current estimate by [24]

| 2.16 |

It should be noted that is a minorizer of satisfying and for all W provided that Cnull + W is positive definite [24]. This property of ensures that maximizing yields a value larger than or equal to .

In the maximization step, we seek the maximizer of . Function is a concave function, which allows us to find the global maximum with a standard gradient descent rule for the Lasso. Specifically, starting from , we iterate the following update rule until convergence:

| 2.17 |

where is the tentative solution at the kth iteration, ϵ is the learning rate, and is the element-wise soft threshold operator given by

| 2.18 |

If , where kfinal is the iteration number after a sufficient convergence, we set . Then, we perform another round of a minorization step and a maximization step. Otherwise, the algorithm finishes.

The learning rate ϵ mainly affects the speed of the convergence of the iterations in the maximization step. We set ϵ using the ADAptive Moment estimation (ADAM), a gradient descent algorithm used in various machine learning algorithms [40]. ADAM adapts the learning rate at each update (equation (2.17)) based on the current and past gradients.

The MM algorithm requires computational time of because equation (2.17) involves the inversion of N × N matrices. In our numerical experiments (§3), the entirety of the Scola consumed approximately three hours on average for a network with N = 1930 nodes with 16 threads running on the Intel 2.6 GHz Sandy Bridge processors with 4GB memory.

3. Numerical results

(a). Prediction of country-level product exports

The product space is a network of products, where a pair of products is defined to be adjacent if both of them have a large share in the export volume of the same country [41,42]. Here, we construct the product space with the Scola and use it for predicting product exports from countries.

We use the dataset provided by the Observatory of Economic Complexity [43], which contains annual export volumes of Np = 988 products from Nc = 249 countries between 1962 and 2014. The dataset also contains the Standard International Trade Classification (SITC) code for each product, which indicates the product type. We quantify the level of sophistication of the product types using the PRODY index [44]. We compute the PRODY index in 1991 for each product, where a product with a large PRODY index is considered to be sophisticated. Then, we average the PRODY index over the products of the same type. The product types in descending order of the PRODY index are as follows: ‘Machinery & transport equipment’, ‘Chemicals’, ‘Miscellaneous manufactured articles’, ‘Manufactured goods by material’, ‘Miscellaneous’, ‘Mineral fuels, lubricants & related materials’, ‘Beverages & tobacco’, ‘Animal & vegetable oils, fats & waxes’, ‘Food & live animals’ and ‘Raw materials’.

In previous studies, the product space was constructed as follows. Two products p and p′ are defined to be adjacent in the product space if and only if they simultaneously constitute a large share of the total export volume of the same country relative to their shares in the world market. Specifically, one computes the so-called revealed comparative advantage (RCA) given by

| 3.1 |

where V(t)cp is the total export volume of product p from country c in year t. The numerator of the right-hand side of equation (3.1) is the share of product p in the total export volume of country c. The denominator is the analogous quantity for the world market, i.e. the share of product p in the sum of the export volume over all countries. Therefore, R(t)cp > 1 indicates that product p constitutes a large share in country c relative to the share in the world market. In the product space, two products p and p′ are adjacent if and only if R(t)cp > 1 and R(t)cp′ > 1 for at least one country c [41].

In contrast to this approach, we construct the product space as follows. First, countries may export different products in different years. To mitigate the effect of temporal changes, we split the data into two halves, i.e. those between Ts = 1972 and Tf = 1991, and those between Ts = 1992 and Tf = 2011.

Second, for each time window, we apply the Box-Cox transformation [45] to each R(t)cp to make the distribution closer to a standard normal distribution (appendix B). This preprocessing is crucial because the Scola assumes that the given data, X, is distributed according to a multivariate Gaussian distribution. We note that the sample average and variance of the transformed RCA values based on the Np products are equal to zero and one, respectively.

Third, we define a sample x(c,t) for each combination of country c and year t by

| 3.2 |

where is the transformed value of R(t)cp.

Fourth, we compute a sample Pearson correlation matrix for concatenated samples [x(c,t), x(c,t+10)]. Specifically, for each of the two time windows, we compute Csample by

| 3.3 |

In equation (3.3), Csample0,0 and Csample+10,+ 10 are the correlation matrices for the products within the same year. The off-diagonal block Csample0,+ 10 contains the correlations between the products with a time lag of ten years.

Fifth, we generate networks by applying either the thresholding method or the Scola to Csample. For the Scola, we adopt the configuration model as the null model. For the thresholding method, we set the threshold value such that the number of edges in the network is equal to that in the network generated by the Scola. We set the weight of each edge to one for the network generated by the thresholding method.

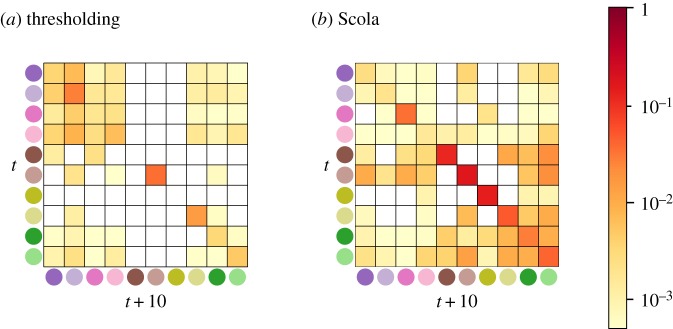

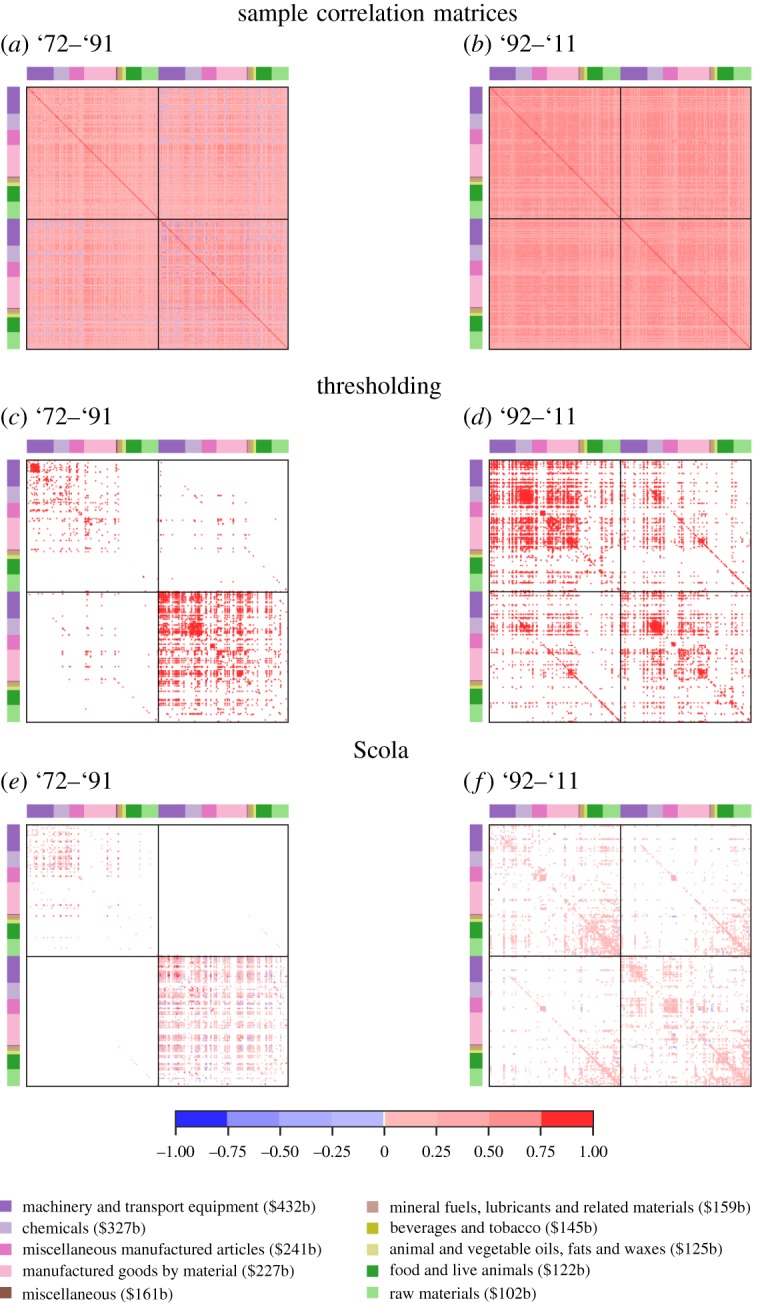

The sample correlation matrices are shown in figure 1a,b. The thresholding method places a majority of edges in the on-diagonal blocks for both time windows (figure 1c,d), suggesting strong correlations between the products exported within the same year when compared with those between different years. This is also true for the networks generated by the Scola (figure 1e,f ).

Figure 1.

Sample correlation matrices and networks for the product space data. The solid lines inside the matrices indicate the boundary between year t (first half) and year t + 10 (second half). The node colour indicates the product type. The value of the PRODY index averaged over the nodes in the same type is shown in the parentheses. (Online version in colour.)

The two methods place few edges (less than 5%) within the off-diagonal blocks for '72–'91. For '92–'11, more than 22% of edges are placed within the off-diagonal blocks by both methods. Although both methods place a similar number of edges within the off-diagonal blocks, the distribution of edges is considerably different. To see this, we compute the fraction of edges between product types for '92–'11 (figure 2). We do not show the result for '72–'91 owing to a small number of edges within the off-diagonal blocks. We find that, within the off-diagonal blocks, the thresholding method places relatively many edges between two nodes that correspond to sophisticated products in terms of the PRODY index (figure 2a). Examples include ‘Machinery & transport equipment’, ‘Chemicals’ and ‘Manufactured goods by material’. This result suggests that sophisticated products are strongly correlated with the same or other sophisticated products ten years apart. By contrast, the Scola finds many edges between nodes that correspond to less sophisticated products such as ‘Raw materials’, ‘Foods & live animals’ and ‘Animal & vegetable oils, fats & waxes’ (figure 2b). We find a similar result for the on-diagonal blocks for '92–'11 (figure 1f ).

Figure 2.

Fraction of edges between product types for time window '92–'11. (Online version in colour.)

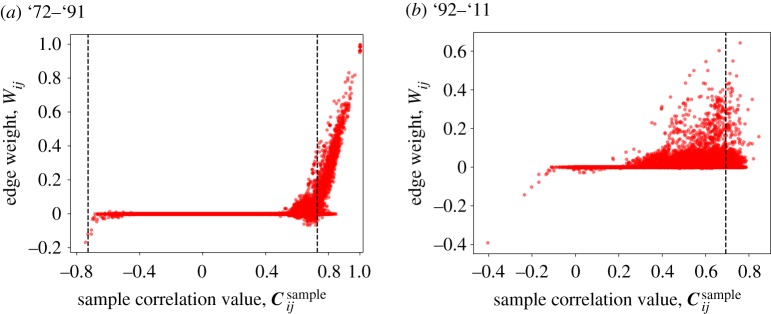

Highly correlated nodes may not be adjacent in the network generated by the Scola. To examine this issue, we plot the weight of edges estimated by the Scola against the correlation value of the corresponding node pair in figure 3. For time window '72–'91, there are 3021 node pairs with a correlation above the threshold in magnitude, of which 1700 (56%) node pairs are not adjacent in the network generated by the Scola. We find qualitatively the same result for time window '92–'11; there are 6834 node pairs with a correlation above the threshold in magnitude, of which 6343 node pairs (93%) are not adjacent in the network generated by the Scola. The weights of edges are correlated strongly with the original correlation values for time window '72–'91 but weakly for '92–'11 (Spearman correlation coefficients are 0.87 and 0.21, respectively).

Figure 3.

Correlation values in the sample correlation matrix and the weight of edges in the networks generated by the Scola shown in figure 1e,f . The dashed lines indicate the threshold value adopted by the thresholding method. (Online version in colour.)

We further demonstrate the use of the generated networks for predictions. We aim to predict RCA values in year t + 10 given those in year t. We make predictions using vector autoregression [46], which consists in computing conditional probability distribution P(x(c,t+10) | x(c,t)) = P(x(c,t), x(c,t+10))/P(x(c,t)). Note that the joint probability distribution P(x(c,t), x(c,t+10)) is given by equation (2.3). We make a prediction by the conditional expected value of x(c,t+10) under P(x(c,t+10) | x(c,t)), which is given in [47]

| 3.4 |

where C0,0 and C0,+10 are the blocks of C defined analogously to equation (3.3).

This prediction method requires C to be a covariance matrix. We set C to either the sample correlation matrix (C = Csample) or that provided by the Scola (i.e. C = Cnull + W).

We carry out fivefold cross-validation, where we split sample indices {1, …, L} into five subsets of almost equal sizes. We estimate C using the training set, which is the union of four out of the five subsets. Then, we perform predictions for the test set, which is the remaining subset. We carry out this procedure five times such that each of the subsets is used once as the test set.

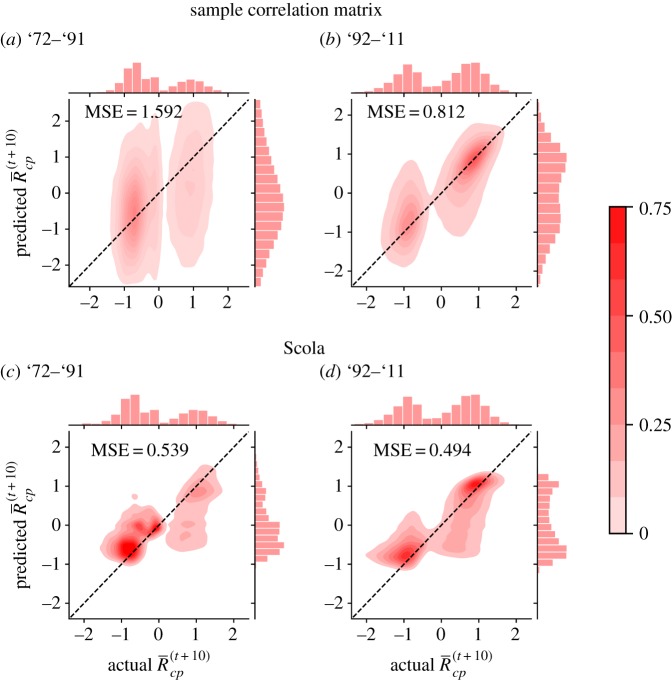

The joint distribution of the actual and predicted values, where each tuple (c, p, t) is regarded as an individual data point, is shown in figure 4. (The joint distributions for other null models are shown in the electronic supplementary material.) The perfect prediction would yield all points on the diagonal line. Between '72–'91, the Scola realizes better predictions than the sample correlation matrix does (figure 4a,c). In fact, the mean squared error (MSE) is approximately three times smaller for the Scola than for the sample correlation matrix. Between '92–'11, the MSE for the Scola is approximately 1.5 times smaller than that for the sample correlation matrix (figure 4b,d). A probable reason for the poor prediction performance for the sample correlation matrices is overfitting. Matrix Csample contains more than 1.9 × 106 elements, whereas there are only L = 1400 samples on average. The Scola represents the correlation matrix with a considerably smaller number of parameters (i.e. M + K = 8185 on average), which mitigates overfitting.

Figure 4.

Prediction of product exports. Joint distributions of the actual and predicted RCA values are shown. The dashed lines represent the diagonal. The MSE represents the mean square error. The marginal distributions are shown to the top and right of each panel. (Online version in colour.)

We have not used the thresholding method because it does not provide covariance matrices; the prediction method is only applicable to covariance matrices. Nevertheless, we implemented the prediction algorithm given by equation (3.4) for the thresholding method. We did so by substituting the network generated by the thresholding into C in equation (3.4). Note that matrix C is then not a covariance matrix. Between '72–'91 and '92–'11, the MSE for the thresholding method was equal to 0.918 and 1.745, respectively. Therefore, the thresholding method realizes a better prediction accuracy than the sample correlation matrix for '72–'91 but not for '92–'11 in terms of the MSE. For both time windows, the thresholding method was worse than the Scola combined with the configuration model in terms of the MSE.

(b). Model selection

What are appropriate null models for correlation networks? To address this question, we carry out model selection based on the EBIC to compare the Scola with different null models. We examine the three null models, i.e. the white noise model, the HQS model and the configuration model. We also compare the performance of the Scola with estimators of sparse precision matrices with different null models.

To construct a network from a precision matrix, we use a variant of the Scola (appendix C). We adopt the inverse of the correlation matrices for the white noise model, the HQS model and the configuration model as the null models for precision matrices. Because the white noise model is the identity matrix, its inverse is also the identity matrix, providing the white noise model for precision matrices. It should be noted that the variant is equivalent to the graphical Lasso [19,20] when one uses the white noise model as the null model.

In addition to the country-level export data used in §3a, we use the time series of stock prices in the US and Japanese markets. The US data comprise the time series of the daily closing prices of N = 1023 companies in the list of the Standard & Poor's 500 (S&P 500) index on 4174 days between 1 January 2000 and 29 December 2015. We compute the logarithmic return of the daily closing price by xti≡log[zi(t + 1)/zi(t)], where zi(t) is the closing price of stock i on day t. We split the time series of xti into two halves of eight years. Then, for each half, we exclude the stocks that have at least one missing value. (The stock prices are missing at the time points at which companies are not included in S&P 500.) We compute the correlation matrix for the logarithmic returns of the remaining stocks for each half.

The Japanese data comprise the time series of the daily closing prices of 264 stocks in the first section of the Tokyo Stock Exchange. We retrieve the stock prices for 5324 days between 12 March 1996 and 29 February 2016 using Nikkei NEED [48], where we exclude the stocks that do not have transactions on at least one day during the period. As is the case for the US data, we compute the correlation matrix for the logarithmic returns. We used the same correlation matrix for the Japanese data in our previous study [28]. We refer to the US and Japanese stock data as S&P 500 and Nikkei, respectively.

The EBIC values for the networks generated by the Scola and its variant for precision matrices combined with the three null models are shown in table 1. For the product space and S&P 500, the EBIC value for the configuration model is the smallest. For Nikkei, the EBIC value for the HQS model for precision matrices is the smallest. This result suggests that the Scola does not always outperform its variant for precision matrices. It should be noted that the graphical Lasso, which is equivalent to the variant of the Scola for precision matrices combined with the white noise model, is among the poorest across the different datasets.

Table 1.

Normalized EBIC values for correlation matrices and precision matrices combined with the different null models. We divide the EBIC value for each null model by that for the sample correlation matrix. Matrices CWN, CHQS and Ccon indicate the white noise model, HQS model and configuration model, respectively, as null models. For null precision matrices, we adopt the inverse of the three null correlation matrices and construct networks using a variant of the Scola (appendix C).

| data | null model |

|||||

|---|---|---|---|---|---|---|

| correlation matrix |

precision matrix |

|||||

| CWN | CHQS | Ccon | CWN | CHQS | Ccon | |

| product space | ||||||

| '72–'91 | 0.226 | 0.202 | 0.187 | 0.232 | 0.190 | 0.208 |

| '92–'11 | 0.300 | 0.301 | 0.226 | 0.304 | 0.238 | 0.256 |

| S&P 500 | ||||||

| '00–'07 | 0.600 | 0.562 | 0.553 | 0.640 | 0.557 | 0.587 |

| '08–'15 | 0.664 | 0.558 | 0.524 | 0.596 | 0.539 | 0.607 |

| Nikkei | 0.991 | 0.874 | 0.861 | 1.001 | 0.837 | 0.882 |

4. Discussion

We presented the Scola to construct networks from correlation matrices. We defined two nodes to be adjacent if the correlation between them is considerably different from that expected for a null model. The Scola yielded insights that were not revealed by the thresholding method such as a positive correlation between less sophisticated products across a decade. The generated networks also better predicted country-level product exports after 10 years than the mere sample correlation matrices.

Null models that have to be fed to the Scola are not limited to the three models introduced in §2b. Another major family of null models for correlation matrices is those based on random matrix theory, which preserves a part of spectral properties of given correlation matrices [15,49,50]. The Scola cannot employ this family of null models because it requires the null correlation matrix to be invertible. Null matrices based on random matrix theory are often not invertible because they leave out some of the eigenmodes. A remedy to this problem is to use a pseudo inverse.

The Scola is equivalent to the previous algorithm [24] if one adopts the white noise model as the null model, i.e. Cnull = CWN. Another method closely related to the Scola is the graphical Lasso [19–23], which provides sparse precision matrices (i.e. C−1). In contrast to correlation matrices, precision matrices indicate correlations between nodes with the effect of other nodes being removed. We focused on correlation matrices because null models for correlation matrices are relatively well studied [15,27,28,49,50], while studies on null models for precision matrices are still absent to the best of our knowledge.

The inverse of the correlation matrices provided by the HQS model and the configuration model may be reasonable null models for precision matrices. To explore this direction, we developed a variant of the Scola for the case of precision matrices, which is equivalent to the graphical Lasso if the white noise model is the null model. The variant of the Scola generates networks better than the original Scola for the Nikkei data in terms of the EBIC (§3b). We do not claim that the Scola is generally a strong performer. More comprehensive comparisons of the Scola and competitors warrant future work.

Scola simultaneously determines the presence or the absence of edges for all node pairs by maximizing the penalized likelihood. Alternatively, one may independently test whether or not to place an edge between each node pair, and then correct for the significance level to avoid the multiple comparison problem. However, this approach tends to find too few edges. In fact, many correction techniques including the Bonferroni correction and its variants suffer from excessive false negatives (i.e. significant correlations judged as insignificant) when the outcomes of the tests are dependent [51], which holds true in our case. For example, the correlation value between nodes v1 and v2 correlates with that between nodes v1 and v3 in general because both correlation values are computed using node v1.

Although the thresholding method has been widely employed [1,4–8], the overfitting problem inherent in this method has received much less attention than it deserves. In many cases, the number of observations based on which one computes the correlation matrix is of the same order of the number of nodes, which is much smaller than the number of the entries in the correlation matrix [19,24]. In this overfitting situation, if one removes or adds a small number of observations, one may obtain a substantially different correlation matrix and the resulting network.

We have assumed that the input data obey a multivariate Gaussian distribution. However, this assumption may not hold true for empirical data. A remedy commonly used in machine learning is to transform data using an exponential function, which is referred to as power transformations. The Box-Cox transformation, which we used in the analysis of the product space, is a standard power transformation. Other transformation techniques include the Fisher transformation [37] and the inverse hyperbolic transformation [52]. Alternatively, one may assume other probability distributions for input data, which is the case for a graphical Lasso for binary data [23].

Although we illustrated the Scola on economic data, the method can be applied to correlation data in various fields including neuroimaging [4–7,17], psychology [23], climate [53], metabolomics [8] and genomics [54]. For example, in neuroscience, the correlation data are often used to construct functional brain networks, analysis of which is expected to provide insights into how brains operate and cognition occurs. Applications of the Scola with the aim of finding insights that have not been obtained by thresholding methods, which has conventionally been used for these data, warrant future work.

Supplementary Material

Acknowledgements

The Standard & Poor's 500 data were provided by CheckRisk LLP in the UK. We thank Yukie Sano for providing the Japanese stock data used in the present paper.

Appendix A. Golden-section search

We adopt the golden-section search to find the value that minimizes the EBIC [39]. Let be the value yielding the minimum EBIC value. Let be the EBIC value at . In most cases, when one increases from 0, the EBIC monotonically decreases for , reaches the minimum at and monotonically increases for . The golden-section search method exploits this property to find . Specifically, suppose that one knows a lower bound and an upper bound of , i.e. . Then, consider and , where . If , it indicates , yielding a tighter bound . By contrast, indicates , yielding .

Based on this observation, the golden-section search method iterates the following rounds. In round k = 0, we set the initial lower and upper bounds following a previous study on regression analysis with Lasso [55]. Specifically, we set and to the minimum value of that satisfies at , i.e.

| A 1 |

In round k≥1, one sets and , where and is the golden ratio. Then, one updates the bound, i.e. if and if . If , we adopt either bound pair with an equal probability. If h(k+1) < h(1)/100, we stop the rounds of iteration and take the smaller of or as the output. Otherwise, we carry out the (k + 1)th round.

Appendix B. Preprocessing revealed comparative advantage values

The distribution of RCA may be considerably skewed. To make the distribution closer to a normal distribution, we perform a Box-Cox transformation [45], i.e. log(R(t)cp + 10−8). Then, for each product, we compute the z-score for the transformed values, generating normalized samples that have average zero and variance one for each product.

For the prediction task, we transform the RCA values separately for each time window as follows. First, we perform the Box-Cox transformation. Then, we split the transformed RCA values into a training set and test set. We compute the z-score for the training samples, generating normalized samples. For the test samples, we compute z-score for each product using the average and variance for the training samples because we should assume that statistics of the test samples are unknown when predicting the values of the test samples.

Appendix C. Constructing networks from precision matrices

The precision matrices are the inverse of the correlation matrix and contain the correlation between nodes with the effect of other nodes being removed. The precision matrix is sensitive to noise in data, which calls for robust estimators such as the graphical Lasso [19–23]. The graphical Lasso implicitly assumes a null model, where every node is conditionally independent of each other, as is the case for the white noise model for correlation matrices. Other null models for precision matrices have not been proposed to the best of our knowledge. Nevertheless, one may use the inverse of the null models for correlation matrices as the null models for precision matrices.

Therefore, we develop a variant of the Scola for precision matrices as follows. Denote by Θ the precision matrix (i.e. Θ = C−1). We write the precision matrix as

| C 1 |

where Θnull is the null precision matrix. Note that we have redefined W by the deviation of the precision matrix from the null precision matrix Θnull. The penalized log-likelihood function (equation (2.8)) is rewritten as

| C 2 |

We note that is a concave function with respect to W, which is different from the case of correlation matrices. By exploiting the concavity, we maximize using a gradient descent algorithm instead of the MM algorithm. Specifically, starting from an initial solution , we update tentative solution until convergence. The update equation is given by

| C 3 |

Each update requires the inversion of an N × N matrix, resulting in time complexity of the entire algorithm of . One may be able to use conventional efficient optimization algorithms for the graphical Lasso to save time [20]. However, we do not explore this direction.

Data accessibility

This article has no additional data.

Authors' contributions

N.M. conceived and designed the research; S.K. and N.M. proposed the algorithm; S.K. performed the computational experiment; S.K. and N.M. wrote the paper.

Competing interests

We declare we have no competing interests.

Funding

N.M. acknowledges the support provided through JST, CREST, grant no. JPMJCR1304.

References

- 1.Onnela J-P, Kaski K, Kertész J. 2004. Clustering and information in correlation based financial networks. Eur. Phys. J. B 38, 353–362. ( 10.1140/epjb/e2004-00128-7) [DOI] [Google Scholar]

- 2.Tse CK, Liu J, Lau FCM. 2010. A network perspective of the stock market. J. Emp. Fin. 17, 659–667. ( 10.1016/j.jempfin.2010.04.008) [DOI] [Google Scholar]

- 3.Newman MEJ. 2018. Networks, 2nd edn Oxford, UK: Oxford University Press. [Google Scholar]

- 4.Rubinov M, Sporns O. 2010. Complex network measures of brain connectivity: uses and interpretations. NeuroImage 52, 1059–1069. ( 10.1016/j.neuroimage.2009.10.003) [DOI] [PubMed] [Google Scholar]

- 5.van Wijk BCM, Stam CJ, Daffertshofer A. 2010. Comparing brain networks of different size and connectivity density using graph theory. PLoS ONE 5, e13701 ( 10.1371/journal.pone.0013701) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Zanin M, Sousa P, Papo D, Bajo R, García-Prieto J, del Pozo F, Menasalvas E, Boccaletti S. 2012. Optimizing functional network representation of multivariate time series. Sci. Rep. 2, 630 ( 10.1038/srep00630) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.De Vico Fallani F, Richiardi J, Chavez M, Achard S. 2014. Graph analysis of functional brain networks: practical issues in translational neuroscience. Phil. Trans. R. Soc. B 369, 20130521 ( 10.1098/rstb.2013.0521) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Kose F, Weckwerth W, Linke T, Fiehn O. 2001. Visualizing plant metabolomic correlation networks using clique-metabolite matrices. Bioinformatics 17, 1198–1208. ( 10.1093/bioinformatics/17.12.1198) [DOI] [PubMed] [Google Scholar]

- 9.Mantegna RN. 1999. Hierarchical structure in financial markets. Eur. Phys. J. B 11, 193–197. ( 10.1007/s100510050929) [DOI] [Google Scholar]

- 10.Tumminello M, Aste T, Di Matteo T, Mantegna RN. 2005. A tool for filtering information in complex systems. Proc. Natl Acad. Sci. USA 102, 10 421–10 426. ( 10.1073/pnas.0500298102) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.De Vico Fallani V, Latora Fand, Chavez M. 2017. A topological criterion for filtering information in complex brain networks. PLoS Comput. Bio. 13, e1005305 ( 10.1371/journal.pcbi.1005305) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Jackson DA, Somers KM. 1991. The spectre of ‘spurious’ correlations. Oecologia 86, 147–151. ( 10.1007/BF00317404) [DOI] [PubMed] [Google Scholar]

- 13.Vigen T. 2015. Spurious correlations. Paris: Hachette books. [Google Scholar]

- 14.Bouchaud JP, Potters M. 2003. Theory of financial risk derivative pricing, 2nd edn Cambridge, UK: Cambridge University Press. [Google Scholar]

- 15.MacMahon M, Garlaschelli D. 2015. Community detection for correlation matrices. Phys. Rev. X 5, 021006 ( 10.1103/PhysRevX.5.021006) [DOI] [Google Scholar]

- 16.Marrelec G, Krainik A, Duffau H, Pélégrini-Issac M, Lehéricy S, Doyon J, Benali H. 2006. Partial correlation for functional brain interactivity investigation in functional MRI. NeuroImage 32, 228–237. ( 10.1016/j.neuroimage.2005.12.057) [DOI] [PubMed] [Google Scholar]

- 17.Zalesky A, Fornito A, Bullmore E. 2012. On the use of correlation as a measure of network connectivity. NeuroImage 60, 2096–2106. ( 10.1016/j.neuroimage.2012.02.001) [DOI] [PubMed] [Google Scholar]

- 18.Masuda N, Sakaki M, Ezaki T, Watanabe T. 2018. Clustering coefficients for correlation networks. Front. Neuroinform. 12, 7 ( 10.3389/fninf.2018.00007) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Banerjee O, El Ghaoui L, d'Aspremont A. 2008. Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data. J. Mach. Learn. Res. 9, 485–516. ( 10.1145/1390681.1390696) [DOI] [Google Scholar]

- 20.Friedman J, Hastie T, Tibshirani R. 2008. Sparse inverse covariance estimation with the graphical lasso. Biostatistics 9, 432–441. ( 10.1093/biostatistics/kxm045) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Fan J, Feng Y, Wu Y. 2009. Network exploration via the adaptive LASSO and SCAD penalties. Ann. Appl. Stat. 3, 521–541. ( 10.1214/08-AOAS215) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Foygel R, Drton M. 2010. Extended Bayesian information criteria for Gaussian graphical models. In Proc. 23th Int. Conf. on Neural Information Processing Systems, NIPS'10, Vancouver, BC, 6–9 December, pp. 604–612. Curran Associates, Inc.

- 23.van Borkulo CD, Borsboom D, Epskamp S, Blanken TF, Boschloo L, Schoevers RA, Waldorp LJ. 2014. A new method for constructing networks from binary data. Sci. Rep. 4, 5918 ( 10.1038/srep05918) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Bien J, Tibshirani RJ. 2011. Sparse estimation of a covariance matrix. Biometrika 98, 807–820. ( 10.1093/biomet/asr054) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Laloux L, Cizeau P, Bouchaud J-P, Potters M. 1999. Noise dressing of financial correlation matrices. Phys. Rev. Lett. 83, 1467–1470. ( 10.1103/PhysRevLett.83.1467) [DOI] [Google Scholar]

- 26.Plerou V, Gopikrishnan P, Rosenow B, Amaral LAN, Stanley HE. 1999. Universal and nonuniversal properties of cross correlations in financial time series. Phys. Rev. Lett. 83, 1471–1474. ( 10.1103/PhysRevLett.83.1471) [DOI] [Google Scholar]

- 27.Hirschberger M, Qi Y, Steuer RE. 2007. Randomly generating portfolio-selection covariance matrices with specified distributional characteristics. Eur. J. Oper. Res. 177, 1610–1625. ( 10.1016/j.ejor.2005.10.014) [DOI] [Google Scholar]

- 28.Masuda N, Kojaku S, Sano Y. 2018. Configuration model for correlation matrices preserving the node strength. Phys. Rev. E 98, 012312 ( 10.1103/PhysRevE.98.012312) [DOI] [PubMed] [Google Scholar]

- 29.Kojaku S. Python code for the Scola algorithm. See https://github.com/skojaku/scola.

- 30.Kollo T, von Rosen D. 2005. Advanced multivariate statistics with matrices. New York, NY: Springer. [Google Scholar]

- 31.Tibshirani R. 1996. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. B (Methodol.) 58, 267–288. ( 10.1111/j.2517-6161.1996.tb02080.x) [DOI] [Google Scholar]

- 32.Fan J, Li R. 2001. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96, 1348–1360. ( 10.1198/016214501753382273) [DOI] [Google Scholar]

- 33.Zou H. 2006. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 101, 1418–1429. ( 10.1198/016214506000000735) [DOI] [Google Scholar]

- 34.Hui FKC, Warton DI, Foster SD. 2015. Tuning parameter selection for the adaptive lasso using ERIC. J. Am. Stat. Assoc. 110, 262–269. ( 10.1080/01621459.2014.951444) [DOI] [Google Scholar]

- 35.Gupta AK, Nagar DK. 2000. Matrix variate distributions. London, UK: Chapman and Hall. [Google Scholar]

- 36.Masuda N. Python code for the configuration model for correlation/covariance matrices. See https://github.com/naokimas/config_corr/ (accessed 11 December 2018).

- 37.Fisher RA. 1915. Frequency distribution of the values of the correlation coefficient in samples from an indefinitely large population. Biometrika 10, 507–521. ( 10.2307/2331838) [DOI] [Google Scholar]

- 38.Chen J, Chen Z. 2008. Extended Bayesian information criteria for model selection with large model spaces. Biometrika 95, 759–771. ( 10.1093/biomet/asn034) [DOI] [Google Scholar]

- 39.Press WH, Teukolsky SA, Vettering WT, Flannery BP. 2007. Numerical recipes: the art of scientific computing, 3rd edn. New York, NY: Cambridge University Press. [Google Scholar]

- 40.Kingma DP, Ba J. 2014. Adam: a method for stochastic optimization. In Proc. Third Int. Conf. on Learning Representations (ICLR). ICLT'15, San Diego, CA, 7–9 May 2015, vol. 5, pp. 365–380. Ithaca, NY.

- 41.Hidalgo CA, Klinger B, Barabási A-L, Hausmann R. 2007. The product space conditions the development of nations. Science 317, 482–487. ( 10.1126/science.1144581) [DOI] [PubMed] [Google Scholar]

- 42.Hartmann D, Guevara MR, Jara-Figueroa C, Aristarán M, Hidalgo CA. 2017. Linking economic complexity, institutions, and income inequality. World Dev. 93, 75–93. ( 10.1016/j.worlddev.2016.12.020) [DOI] [Google Scholar]

- 43.Simoes A, Landry D, Hidalgo CA. The observatory of economic complexity. See https://atlas.media.mit.edu/en/resources/about/ (accessed 11 December 2018).

- 44.Hausmann R, Hwang J, Rodrik D. 2007. What you export matters. J. Econ. Growth 12, 1–25. ( 10.1007/s10887-006-9009-4) [DOI] [Google Scholar]

- 45.Box GEP, Cox DR. 1964. An analysis of transformations. J. R. Stat. Soc. B (Methodol.) 26, 211–252. ( 10.1111/j.2517-6161.1964.tb00553.x) [DOI] [Google Scholar]

- 46.Kilian L. 2006. New introduction to multiple time series analysis. Econ. Theory 22, 764 ( 10.1017/S0266466606000442) [DOI] [Google Scholar]

- 47.Bishop CM. 2006. Pattern recognition and machine learning. Berlin, Heidelberg, Germany: Springer. [Google Scholar]

- 48.Nikkei economic electric databank system. See http://www.nikkei.co.jp/needs/ (accessed 12 March 2019).

- 49.Plerou V, Gopikrishnan P, Rosenow B, Amaral LAN, Guhr T, Stanley HE. 2002. Random matrix approach to cross correlations in financial data. Phys. Rev. E 65, 066126 ( 10.1103/PhysRevE.65.066126) [DOI] [PubMed] [Google Scholar]

- 50.Utsugi A, Ino K, Oshikawa M. 2004. Random matrix theory analysis of cross correlations in financial markets. Phys. Rev. E 70, 026110 ( 10.1103/PhysRevE.70.026110) [DOI] [PubMed] [Google Scholar]

- 51.Shaffer JP. 1995. Multiple hypothesis testing. Annu. Rev. Psyc. 46, 561–584. ( 10.1146/annurev.ps.46.020195.003021) [DOI] [Google Scholar]

- 52.Burbidge JB, Magee L, Robb AL. 1988. Alternative transformations to handle extreme values of the dependent variable. J. Am. Stat. Assoc. 83, 123–127. ( 10.1080/01621459.1988.10478575) [DOI] [Google Scholar]

- 53.Tsonis AA, Swanson KL, Roebber PJ. 2006. What do networks have to do with climate? Bull. Am. Meter. Soc. 87, 585–596. ( 10.1175/BAMS-87-5-585) [DOI] [Google Scholar]

- 54.de la Fuente A, Brazhnik P, Mendes P. 2002. Linking the genes: inferring quantitative gene networks from microarray data. Trends Genet. 18, 395–398. ( 10.1016/S0168-9525(02)02692-6) [DOI] [PubMed] [Google Scholar]

- 55.Friedman J, Hastie T, Tibshirani R. 2010. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33, 1–22. ( 10.18637/jss.v033.i01) [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

This article has no additional data.