Abstract

Transmitted light microscopy can readily visualize the morphology of living cells. Here, we introduce artificial-intelligence-powered transmitted light microscopy (AIM) for subcellular structure identification and labeling-free functional analysis of live cells. AIM provides accurate images of subcellular organelles; allows identification of cellular and functional characteristics (cell type, viability, and maturation stage); and facilitates live cell tracking and multimodality analysis of immune cells in their native form without labeling.

Subject terms: Microscopy, Software, Cancer imaging, Cell migration, Imaging the immune system

Introduction

Microscopy imaging experiments are essential assays in cell biology research1. They typically serve three aims: (1) subcellular structure segmentation2, (2) cell status determination3, and (3) analysis of live cell dynamics4. Subcellular structures are usually visualized by fluorescence labeling. Cell status characteristics such as viability, type, and activity are classified by staining representative biomarkers and evaluating their expression levels. However, performing such analyses on live cells is challenging. Expressing exogenous proteins through transfection would allow specific fluorescent tags to be targeted to certain subcellular structures and/or biomarkers in live cells. This approach has several problems, however: exogenous protein expression can have unexpected side effects, and some cells, especially immune and primary cells, are not readily transfectable5,6. Moreover, these approaches share all the limitations of fluorescence labeling, including the restricted choice of fluorophores and wavelengths7,8.

Digital image processing greatly extends the capabilities of optical microscopy. Algorithms such as detection and segmentation enable measurements and quantification from microscopic images3. However, such algorithms may fail on many biological samples because of their innate heterogeneity and complexity9. Recent advances in image processing with artificial intelligence (AI) have overcome such limitations. In particular, deep neural networks (DNNs) classify and segment microscopy images with high performance10. For example, a DNN can interpret tissue section images and classify diseases at the level of trained experts11. DNNs have also been used to segment cells in microscopic images12,13, and in-silico staining approaches14–16 have been developed in which DNNs predict fluorescent labels from images of unstained cells. DNN-based tracking of microscopic objects has also been demonstrated17.

Here, we introduce AI-powered transmitted light microscopy (AIM, Fig. 1a), an AI-based software package that performs a complete analysis of live cell microscopy data. Using a set of AI modalities, namely a hierarchical k-means clustering algorithm (unsupervised machine learning), convolutional neural networks (deep learning)18,19, and a complementary learner that solves regression problems (machine learning)20, AIM addresses all three common aims using transmitted light microscopy (TL) images alone. In this work, we demonstrate that AIM can (1) produce subcellular structure images of cell nuclei, mitochondria, and cytoskeleton fibers; (2) obtain cellular and functional status information, such as cell viability, cell type, and immune cell maturation stage; and (3) perform accurate live cell tracking with subsequent analysis of the multimodal functions described above, enabling completely label-free, multiplexed live cell imaging.

Figure 1.

Artificial-intelligence-powered transmitted light microscopy (AIM) with three functional interfaces finds subcellular structures, cellular & functional status information, and cell trajectories from transmitted light microscopy images. (a) AIM workflow. Transmitted light images are fed into (b) CellNet for subcellular structure segmentation and (c) ClassNet for cellular and functional classification, which identifies cell type, viability, conditions, etc. This is extended to live cell tracking and analysis using (d) TrackNet (see Methods for details).

Results

The AIM package combines a hierarchical k-means clustering algorithm (unsupervised machine learning), convolutional neural networks (deep learning), and a complementary learner that solves regression problems (machine learning) into three functional networks: CellNet, ClassNet, and TrackNet (see Methods and Supplementary Notes 1–4). CellNet is engineered to generate images of subcellular structures from TL images using both unsupervised and supervised machine learning (Fig. 1b and Supplementary Note 2). Intensity clusters are first created from fluorescence microscopy (FL) images using a hierarchical k-means clustering algorithm21. The intensity clusters and the corresponding TL images are then fed to a fully convolutional network (FCN) that performs pixel-wise classification (see Methods and Supplementary Note 2)22; this trains CellNet to create FL-like images from TL images (Fig. 1a). ClassNet is designed for cell localization and status classification (Fig. 1a) and is implemented with two convolutional neural networks (CNNs): a region-proposal CNN for cell searching and a second CNN for cell classification (Fig. 1c and Supplementary Note 3)23,24. Finally, TrackNet performs automated cell tracking using an ensemble of a correlation filter and a pixel-wise probability (Fig. 1d and Supplementary Note 4)20. Along each track, cellular and functional information is obtained through subsequent analysis with CellNet and ClassNet (Fig. 1d).

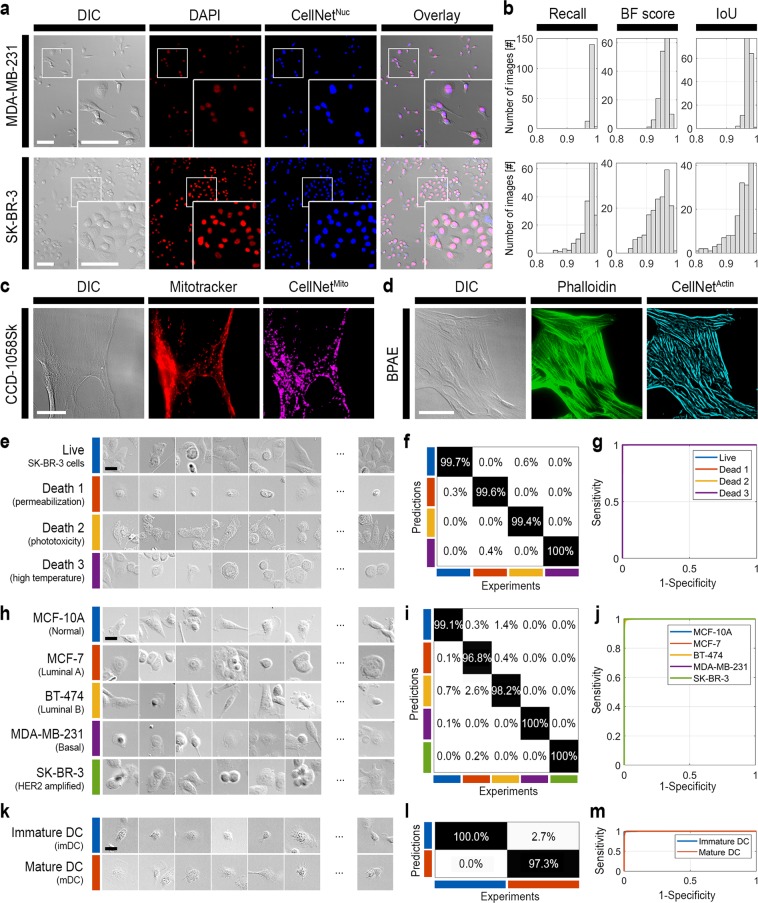

CellNet synthesizes FL images from TL images. In this study, this DNN was used to predict subcellular structures (nuclei, mitochondria, and actin fibers) (Fig. 2a–d). From differential interference contrast (DIC) microscopy images of MDA-MB-231 and SK-BR-3 cells, CellNetNuc generated cell nucleus images comparable to 4′,6-diamidino-2-phenylindole (DAPI)-stained FL images (Fig. 2a). We noticed a few apparent false positives, i.e., cells without visible DAPI staining that CellNet identified as nuclei. On closer inspection, most of these cases were in fact true positives with very weak DAPI staining (see Supplementary Fig. 1). CellNetNuc performance was evaluated according to three criteria: recall, the contour matching score (BF score), and the intersection-over-union (IoU) score (Fig. 2b; see Methods). CellNetNuc identified MDA-MB-231 and SK-BR-3 cell nuclei with 98.36% and 96.93% recall and 97.27% and 94.85% IoU, respectively, and located the corresponding nucleus boundaries with BF scores of 95.77% and 93.85%. Similarly, CellNetMito and CellNetActin were constructed to find mitochondria and actin fibers, respectively, from TL images (Fig. 2c,d and Supplementary Note 2). These networks generated FL-comparable images with 96.88% and 90.12% recall for mitochondria and actin fibers, respectively (Supplementary Fig. 2).

Figure 2.

Label-free subcellular structure identification and cellular and functional classification using AIM. (a) CellNetNuc identified cell nuclei from DIC images of MDA-MB-231 and SK-BR-3 cells. (b) The CellNetNuc performance was evaluated based on recall, BF score, and IoU. Demonstrations of (c) CellNetMito and (d) CellNetActin for mitochondrial and actin fiber identification from DIC images, respectively. ClassNet identified the (e) cell type, (h) cell viability, and (k) maturation stage of dendritic cells. The ClassNet classification performance was evaluated using (f,i,l) confusion matrices and (g,j,m) receiver operating characteristic (ROC) curves for cell type (f,g), cell viability (i,j), and maturation stage identification for dendritic cells (l,m). Scale bar: (a,c,d) 100 µm, (e,h,k) 20 µm.

ClassNet was designed to extract cell status information, such as cell viability, cell type, and dendritic cell maturation stage, from TL images alone (Fig. 2e–m). We performed viability tests on SK-BR-3 cells subjected to three different causes of death (Fig. 2e; see Methods): cell permeabilization (Death 1), phototoxicity (Death 2), and high temperature (Death 3). A control (live sample) was also included. From the cell images, ClassNetViability not only identified cell viability with 99.78% classification accuracy (Supplementary Fig. 3a) but also distinguished each death condition with 99.69% classification accuracy (Fig. 2f). The receiver operating characteristic (ROC) curves for ClassNetViability indicate near-perfect classification performance (Fig. 2g and Supplementary Fig. 3b).

In addition, cancer cell classification was demonstrated using ClassNetCancer (Fig. 2h–j). Five cell lines from a breast cancer cell panel, which differ in their molecular signatures (Supplementary Table 1), were used. ClassNetCancer distinguished breast cancer cells from normal breast cells with 99.47% classification accuracy (Supplementary Fig. 3c) and differentiated the five breast cell subtypes with more than 98.80% classification accuracy (Fig. 2i). The ROC curves for both the cancer and subtype classifications indicate near-perfect classification performance of ClassNetCancer (Fig. 2j and Supplementary Fig. 3d).

Dendritic cells (DCs) play a crucial role in adaptive immunity against pathogens and cancer cells through their maturation process25. DC maturation involves upregulation of the MHC class II, CD40, CD80, and CD86 molecules26. Using ClassNetDC, we distinguished immature DCs (imDCs) from mature DCs (mDCs) (Fig. 2k–m), again from DIC images obtained without fluorescent labeling. ClassNetDC classified imDCs and mDCs with 98.63% accuracy, and the results correlated well with CD86 and CD40 expression levels (Fig. 2l and Supplementary Fig. 4). The corresponding ROC curve confirms the good classification performance of ClassNetDC (Fig. 2m).

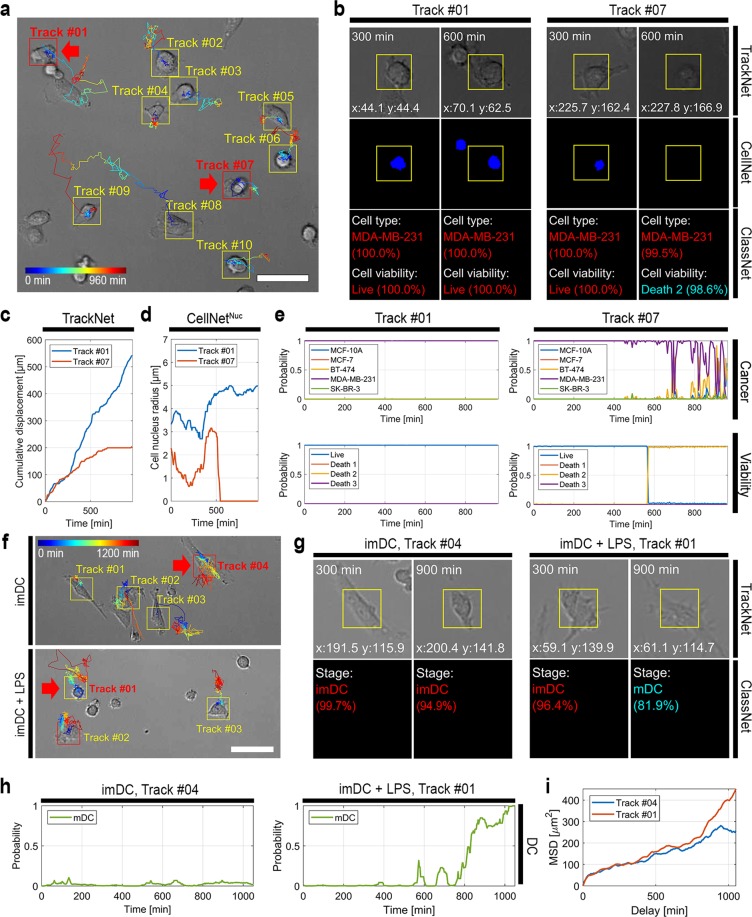

Label-free, multiplexed live cell tracking and analysis were achieved by combining CellNet, ClassNet, and TrackNet. MDA-MB-231 breast cancer cells were imaged with TL for 16 h and analyzed using TrackNet (Fig. 3a and Supplementary Videos 1–3). Cell images along each trajectory were evaluated by CellNet and ClassNet, which identified the cell nucleus, cell type, and viability over time (Fig. 3b). Here, we report two cases: Tracks #01 and #07 in Fig. 3a. The cumulative displacements along these trajectories were plotted over time (Fig. 3c). The Track #01 cell moved continuously until the end of the track, whereas the motility of the Track #07 cell decreased. The cell nucleus area was computed along each trajectory (Fig. 3d); no cell nucleus was found after 500 min for Track #07. The cell type (ClassNetCancer) and viability (ClassNetViability) classification probabilities were plotted over time (Fig. 3e). The Track #07 cell was classified as dead after 500 min, presumably because of phototoxicity, which was consistent with its reduced motility and the loss of a detectable nucleus.

Figure 3.

AIM for live cell analysis. (a) Example of live cancer cell tracking using TrackNet. (b) Label-free, multiplexed live cell analysis performed by combining all three networks: CellNet, ClassNet, and TrackNet. Tracks #01 and #07 of (a) were taken as representative examples. (c–e) Cumulative trajectory displacements, cell nucleus areas detected using CellNetNuc, and classification probabilities of ClassNetCancer and ClassNetViability, respectively, for Tracks #01 and #07 plotted over time. (f) Live cell tracking and analysis of immature dendritic cells (imDC) for 20 h. The imDCs were in a cell culture medium (imDC; top) or a cell culture medium containing lipopolysaccharides (LPS) (imDC + LPS; bottom). (g) Live cell trajectories were obtained for imDC and imDC + LPS using TrackNet (Tracks #04 and #01, respectively) and the maturation stages were identified using ClassNetDC. (h) The classification probabilities of the DC maturation stage were plotted for Tracks #04 and #01 of (g). (i) The mean squared displacements (MSDs) were plotted for Tracks #04 and #01 from (g). Scale bar: (a,f) 100 µm.

We also applied AIM to immune cells. Live imDCs were collected and imaged with TL for 20 h (Fig. 3f–i and Supplementary Videos 4–7). To stimulate DC maturation, lipopolysaccharide (LPS) was added (denoted "imDC + LPS"). DC trajectories were found using TrackNet, and maturation stages were evaluated using ClassNetDC. Two trajectories, Tracks #04 (imDC) and #01 (imDC + LPS), are discussed here as examples (Fig. 3g). ClassNetDC found that the cell in Track #01 became an mDC after 830 min (Fig. 3g,h; see Supplementary Note 5). The diffusion rate of Track #01 was higher than that of Track #04 (Fig. 3i). In particular, the mean squared displacement (MSD) of Track #01 increased dramatically after an 830-min delay, implying that DC diffusive behavior changed after maturation (Fig. 3i).

Discussion

AIM is an AI toolkit for live cell microscopy based on TL images. Its three functional networks, CellNet, ClassNet, and TrackNet, accurately perform in-silico cell staining, cell classification, and cell tracking, respectively (Fig. 1). Using CellNet, we produced subcellular structure images of cell nuclei, mitochondria, and cytoskeleton fibers (Fig. 2a–d). ClassNet identified cell viability, cell type, and immune cell maturation stage with classification accuracies of 98.6% or higher (Fig. 2e–m). Combining TrackNet with CellNet and ClassNet enables live cell tracking and analysis (Fig. 3 and Supplementary Videos 1–7). AIM can be readily incorporated into cell biology experiments that use existing conventional microscopy setups, and its modular architecture provides flexibility in experimental design and allows each component to be reused (Supplementary Notes 1–4). AIM is an example of applying existing AI technologies to a scientific problem: SegNet22, GoogLeNet24, and Staple20, originally developed for autonomous driving, image classification, and object tracking, respectively, were adapted in AIM for in-silico cell staining, cell and disease classification, and live cell tracking. AIM performance is limited by the information available in TL images; however, it could be improved by applying state-of-the-art AI technologies. For instance, the spatial resolution of CellNet may be improved using other semantic segmentation techniques27 (Supplementary Note 2). In addition, experiment-specific DNNs could be constructed for ClassNet instead of networks optimized for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) classification task28 (Supplementary Note 3 and Supplementary Figs. 5 and 6). Similarly, TrackNet could be improved by using other supervised or unsupervised techniques17,29 (Supplementary Note 4). The AIM package developed in this work introduces a new dimension to microscopy and live cell imaging.

Methods

AI-powered transmitted light microscopy (AIM)

The artificial-intelligence-powered transmitted light microscopy (AIM) package is constructed from three functional networks: CellNet, ClassNet, and TrackNet (Fig. 1). CellNet is based on SegNet22 and performs semantic segmentation of subcellular structures. ClassNet is a convolutional neural network (CNN)23,24 for cellular and functional classification. TrackNet is based on an ensemble of a correlation filter and an intensity histogram approach20 and is designed for live cell tracking (see Supplementary Notes 1–4 for details).

CellNet consists of an unsupervised and a supervised machine learning algorithm. Desired features are first identified from fluorescence microscopy images using the hierarchical k-means clustering algorithm (HK-means)21,30,31, an unsupervised machine learning approach30,31 that defines intensity classes in fluorescence microscopy images. The results of this unsupervised step are then used to supervise CellNet, which uses the convolutional encoder-decoder architecture of SegNet22 (Fig. 1b and Supplementary Fig. 7). This architecture recovers a fine-resolution classification map from a low-resolution encoder feature map. CellNet must be tuned according to the imaging conditions, e.g., the effective pixel size, the dimensions of the target structure, and the input image size (Supplementary Fig. 8)22. See Supplementary Note 2 for details on data preprocessing and optimization conditions.
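The unsupervised step can be illustrated with a minimal Python/NumPy sketch. The exact HK-means variant used in AIM is described in Supplementary Note 2 and is not reproduced here; as an assumption, we read "hierarchical k-means" as recursive k-means on pixel intensities, in which each cluster found at one level is split again at the next, giving up to k^depth intensity classes ordered from dark to bright. The function names `kmeans_1d` and `hk_means` and the parameters `k` and `depth` are hypothetical.

```python
import numpy as np


def kmeans_1d(x, k, n_iter=100):
    """Plain k-means on a 1-D array of intensities.

    Returns labels ranked so that label 0 is the darkest cluster,
    together with the sorted cluster centres.
    """
    # Deterministic initialisation at evenly spaced quantiles.
    centers = np.quantile(x, (np.arange(k) + 0.5) / k)
    for _ in range(n_iter):
        labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
        new = np.array([x[labels == j].mean() if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    # Final assignment with the converged centres, then rank clusters by brightness.
    labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
    order = np.argsort(centers)
    rank = np.empty(k, dtype=int)
    rank[order] = np.arange(k)
    return rank[labels], centers[order]


def hk_means(image, k=2, depth=2):
    """Hierarchical (recursive) k-means on pixel intensities.

    Each cluster found at one level is split into k sub-clusters at the next,
    so the output holds up to k**depth classes whose indices increase with
    intensity (1-D k-means clusters are contiguous intensity intervals).
    """
    x = np.asarray(image, dtype=float).ravel()
    labels = np.zeros(x.size, dtype=int)
    for _ in range(depth):
        new_labels = np.empty_like(labels)
        for c in np.unique(labels):
            idx = labels == c
            sub, _ = kmeans_1d(x[idx], k)
            new_labels[idx] = c * k + sub
        labels = new_labels
    return labels.reshape(np.shape(image))
```

In a CellNet-style workflow, the resulting class map, rather than the raw fluorescence intensities, would serve as the pixel-wise training target for the segmentation network.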

ClassNet uses CNNs for cell classification (Fig. 1c and Supplementary Note 3). Two CNN approaches are employed in two steps: cells are first located using a region-based CNN (R-CNN), and their status is then classified using experiment-specific CNNs. A Faster R-CNN23 is used for the cell search. Cell classification is performed by training existing CNNs such as AlexNet18, GoogLeNet24, Inception-V332, and Inception-ResNet-V233 (Supplementary Note 3 and Supplementary Fig. 5). Note that the relative performance of these networks depends on the input classes (Supplementary Fig. 6). GoogLeNet24 was used for the examples presented in the main text (Fig. 2e–m). The networks were modified according to the input image size and the number of output classes.

TrackNet performs live cell tracking and, together with CellNet and ClassNet, live cell analysis (Fig. 1d). Cells are first detected using the R-CNN approach used in ClassNet (Supplementary Fig. 9). Each detected cell is then tracked by computing the image correlation and intensity histogram in subsequent frames20. For each trajectory, the cell images are extracted and analyzed using the pretrained CellNet and ClassNet (Fig. 3, Supplementary Notes 2–4, and Supplementary Videos 1–7).
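The idea of merging a correlation response with a pixel-wise histogram probability, which TrackNet inherits from Staple20, can be illustrated with a single-frame Python/NumPy sketch. This is not the Staple or TrackNet implementation: here the correlation term is a plain FFT cross-correlation with the previous-frame patch (Staple learns a regularized correlation filter on image features), and `track_step`, `patch_size`, `target_size`, `alpha`, and `n_bins` are hypothetical names and assumed values chosen for illustration.

```python
import numpy as np


def _box_mean(img, h, w):
    """Mean of img over an h x w window centred on every pixel (integral image)."""
    ph, pw = h // 2, w // 2
    padded = np.pad(img, ((ph, h - 1 - ph), (pw, w - 1 - pw)), mode="edge")
    ii = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    s = ii[h:, w:] - ii[:-h, w:] - ii[h:, :-w] + ii[:-h, :-w]
    return s / (h * w)


def _minmax(a):
    """Rescale a response map to [0, 1] so that the two terms are comparable."""
    return (a - a.min()) / (a.max() - a.min() + 1e-12)


def _patch(frame, center, size):
    """size x size patch centred on (row, col); edge padding handles borders."""
    padded = np.pad(frame, size // 2, mode="edge")
    return padded[center[0]:center[0] + size, center[1]:center[1] + size].astype(float)


def track_step(prev_frame, frame, center, patch_size=32, target_size=9,
               alpha=0.3, n_bins=16):
    """Return the estimated (row, col) of the tracked cell in `frame`."""
    half = patch_size // 2
    tmpl = _patch(prev_frame, center, patch_size)  # appearance in the previous frame
    search = _patch(frame, center, patch_size)     # search window in the current frame

    # (1) Correlation term: circular cross-correlation of zero-mean patches.
    t, s = tmpl - tmpl.mean(), search - search.mean()
    cf = np.real(np.fft.ifft2(np.conj(np.fft.fft2(t)) * np.fft.fft2(s)))
    cf = np.fft.fftshift(cf)  # zero displacement now sits at index `half`

    # (2) Histogram term: per-pixel foreground probability from intensity
    #     histograms of the target box (foreground) and the rest (background).
    edges = np.linspace(tmpl.min(), tmpl.max(), n_bins + 1)[1:-1]
    bins_t, bins_s = np.digitize(tmpl, edges), np.digitize(search, edges)
    fg = np.zeros(tmpl.shape, dtype=bool)
    lo = half - target_size // 2
    fg[lo:lo + target_size, lo:lo + target_size] = True
    h_fg = np.bincount(bins_t[fg], minlength=n_bins) / fg.sum()
    h_bg = np.bincount(bins_t[~fg], minlength=n_bins) / (~fg).sum()
    p_fg = (h_fg / (h_fg + h_bg + 1e-12))[bins_s]
    hist = _box_mean(p_fg, target_size, target_size)  # score of a target-sized box

    # (3) Linear merge of the two normalised responses; the peak gives the shift.
    merged = (1 - alpha) * _minmax(cf) + alpha * _minmax(hist)
    dr, dc = np.unravel_index(np.argmax(merged), merged.shape)
    return int(center[0] + dr - half), int(center[1] + dc - half)
```

Calling `track_step` once per frame and feeding each returned position back in as `center` would yield a trajectory; the linear merge lets the histogram term compensate when the correlation peak is weakened by shape changes, which is the motivation given for Staple's complementary learners.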

All computations reported in this paper were performed using MATLAB (MathWorks, USA) on a personal computer with an Intel Core i7-7700 central processing unit and a single NVIDIA GeForce GTX 1080 graphics processing unit (GPU); most computations ran on the GPU. Full details of the network structures used in this study are listed in Supplementary Data 1–6.

Performance evaluation

To evaluate CellNet performance, the following scores were examined: recall, BF score, and IoU (see Fig. 2b,d)34. Recall is the fraction of ground-truth positive pixels that are correctly labeled:

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{1}$$

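where TP and FN are the numbers of true positive and false negative pixels, respectively35. The boundary F1 contour matching score (BF score) measures how closely the predicted boundary matches the ground-truth boundary within a distance tolerance $\theta$, defined as a fraction of the image diagonal. The boundary precision $P$ and recall $R$ are defined as follows:

$$P = \frac{1}{|B_p|}\sum_{z \in B_p} \mathbf{1}\left[d(z, B_g) < \theta\right], \qquad R = \frac{1}{|B_g|}\sum_{z \in B_g} \mathbf{1}\left[d(z, B_p) < \theta\right] \tag{2}$$

where $B_p$ is the CellNet-image contour map, $B_g$ is the ground-truth contour map, $d(z, B)$ denotes the Euclidean distance from point $z$ to the nearest point of $B$, $|A|$ denotes the number of elements in $A$, and $\mathbf{1}[\cdot]$ equals 1 if its argument is true and 0 otherwise. The BF score is the harmonic mean of $P$ and $R$:

$$\mathrm{BF} = \frac{2PR}{P + R} \tag{3}$$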

Further, the intersection over union (IoU, or Jaccard similarity coefficient) is scored as

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{4}$$

where FP is the number of false positive pixels.

In this work, ClassNet performance was evaluated by calculating the confusion matrix36 and the receiver operating characteristic (ROC) curve37. Classification accuracy is defined as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

where TP, TN, FP, and FN are the numbers of true positive, true negative, false positive, and false negative ClassNet predictions, respectively. Confusion matrices with absolute data counts are available in Supplementary Table 2. The ROC curves were obtained using the one-versus-rest approach38. All performance evaluations were conducted using experimentally independent data sets; see Supplementary Note 6 for our comments on this evaluation approach.

Transmitted light and fluorescence microscopy

Transmitted light and fluorescence microscopy imaging was performed using an inverted microscope (Eclipse-Ti; Nikon, Japan) equipped with 20x and 40x dry objective lenses (Plan Apo 20x/0.75 NA and 40x/0.95 NA, respectively; Nikon, Japan). Transmitted light microscopy was conducted using a differential interference contrast (DIC) setup (Nikon, Japan) with white-light light-emitting-diode (LED) illumination (pE-100; CoolLED, UK). For fluorescence microscopy, the sample was illuminated using colored LED light sources (pE-4000; CoolLED, UK), and TRI, RFP, or Cy5 filters (Nikon, Japan) were used depending on the fluorescence label. Images were recorded using an electron-multiplying charge-coupled device (EMCCD) camera (iXon Ultra; Andor, UK). A focus stabilization system (PFS; Nikon, Japan) was used for all imaging experiments, and all data were acquired with the focus set on fiducial markers immediately above the coverslip. The microscope system was controlled using MetaMorph software (Molecular Devices, USA).

Data acquisition and preprocessing for AIM

A motorized stage (Ludl Electronic Products, USA) with automated sample scanning and a multi-position imaging system (MetaMorph; Molecular Devices, USA) were configured for data acquisition. DIC images and nucleus-stained images (4′,6-diamidino-2-phenylindole (DAPI) or Hoechst 33342) were acquired in all fixed cell imaging experiments; images in other fluorescence channels were acquired according to the experimental conditions. The imaging area was set to 16 mm × 16 mm per sample. The cell nucleus images were segmented using the HK-means algorithm, and regions of interest (ROIs) were defined centered on each cell nucleus. Each ROI measured 101 × 101 pixels (equivalent to 65 μm × 65 μm at 20x magnification or 32.5 μm × 32.5 μm at 40x magnification), and 10,000 to 15,000 ROIs were identified per sample. Three samples were prepared per condition: 20,000 to 30,000 ROIs from two samples were used as training data, and 10,000 to 15,000 ROIs from the remaining sample were used as validation data. The numbers of training, validation, and test images used in this study are listed in Supplementary Table 3.
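The ROI arithmetic and extraction can be sketched as follows (Python/NumPy). The pixel sizes are back-calculated from the stated ROI sizes (101 pixels spanning 65 µm at 20x and 32.5 µm at 40x). Nucleus centers are taken as given, for example as centroids of the HK-means nucleus mask, and ROIs that would extend past the image border are dropped; the text does not say how border cells were handled, so this is an assumption. `extract_rois` and `UM_PER_PX` are hypothetical names.

```python
import numpy as np

ROI_PX = 101  # ROI side length in pixels (odd, so the nucleus sits on the centre pixel)
# Pixel sizes implied by the stated ROI dimensions: ~0.644 and ~0.322 µm/pixel.
UM_PER_PX = {"20x": 65.0 / ROI_PX, "40x": 32.5 / ROI_PX}


def extract_rois(image, centers, size=ROI_PX):
    """Return a (n, size, size) stack of ROIs centred on (row, col) nucleus centres.

    `size` is assumed odd. ROIs that would cross the image border are skipped
    rather than padded.
    """
    half = size // 2
    rows, cols = image.shape[:2]
    rois = []
    for r, c in centers:
        r, c = int(round(r)), int(round(c))
        if r - half < 0 or c - half < 0 or r + half >= rows or c + half >= cols:
            continue
        rois.append(image[r - half:r + half + 1, c - half:c + half + 1])
    return np.stack(rois) if rois else np.empty((0, size, size), image.dtype)
```

Following the protocol above, ROIs from two samples per condition would form the training set and ROIs from the third sample the validation set, so that training and validation data come from independent samples.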

Cell lines and reagents

MCF-10A, MCF-7, BT-474, MDA-MB-231, SK-BR-3, and CCD-1058Sk breast cell lines were obtained from the American Type Culture Collection (ATCC) and maintained according to ATCC protocols (Supplementary Table 1). DAPI (Sigma-Aldrich, USA) or Hoechst 33342 (ThermoFisher, USA) was used for cell nucleus staining according to the manufacturer's protocol. A FluoCells™ prepared slide #1 (ThermoFisher, USA) was used for the mitochondrial and actin fiber imaging experiments (Fig. 2c). The dendritic cells were fluorescently labeled using an anti-CD86 fluorescein isothiocyanate (FITC)-conjugated antibody and an anti-CD40 phycoerythrin (PE)-conjugated antibody (ThermoFisher, USA). Cells were fixed using 3.4% paraformaldehyde (PFA; Sigma-Aldrich, USA) in phosphate-buffered saline (1xPBS; Sigma-Aldrich, USA).

Cell viability assay

SK-BR-3 cells were plated on glass-bottom dishes (SPL Life Science, South Korea). Three cell death conditions, permeabilization (Death 1), phototoxicity (Death 2), and high temperature (Death 3) (Fig. 2e), were induced as follows. For Death 1, SK-BR-3 cells were treated with 0.1% saponin (Sigma-Aldrich, USA) for 10 min at room temperature. For Death 2, SK-BR-3 cells were irradiated with ultraviolet light at 27.9 mW/cm² for 1 h (UVO cleaner; Jelight Company, USA). For Death 3, SK-BR-3 cells were heat-shocked for 10 min at 45 °C in a water bath. The cells were then stained using a live/dead viability/cytotoxicity kit (ThermoFisher, USA) according to the manufacturer's protocol, fixed using 3.4% PFA in 1xPBS for 10 min at room temperature, stained with DAPI, and imaged as described above.
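For reference, assuming the stated irradiance was constant over the full exposure, the nominal UV dose (radiant exposure $H$) delivered for Death 2 works out as:

$$H = E\,t = 27.9\ \mathrm{mW\,cm^{-2}} \times 3600\ \mathrm{s} = 1.0044 \times 10^{5}\ \mathrm{mJ\,cm^{-2}} \approx 100\ \mathrm{J\,cm^{-2}}$$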

Cell line classification assay

MCF-10A, MCF-7, BT-474, MDA-MB-231, and SK-BR-3 cells were plated on glass-bottom dishes and incubated for one day. The cells were then fixed using 3.4% PFA in 1xPBS for 10 min at room temperature, stained with DAPI, and imaged as described above.

Animals

All animal experiments were conducted under protocols approved by the Institutional Animal Care and Use Committee of Ulsan National Institute of Science and Technology (UNISTIACUC-16-13). All animal experiments were conducted in accordance with the National Institutes of Health “Guide for the Care and Use of Laboratory Animals” (The National Academies Press, 8th Ed., 2011). The personnel who performed the experiment had completed the animal research and ethics courses of the Collaborative Institutional Training Initiative (CITI) Program (USA).

Dendritic cell preparation

Dendritic cells were isolated as described previously39. Briefly, bone marrow was harvested from the tibias and femurs of female BALB/c mice (8–12 weeks of age). To isolate bone-marrow-derived dendritic cells (BMDCs), red blood cells were lysed using ammonium-chloride-potassium (ACK) lysis buffer (Gibco, USA). Bone marrow cells were plated on 24-well cell culture plates (1 × 10⁶ cells/ml) and incubated in culture medium consisting of RPMI 1640 supplemented with 5% fetal bovine serum, 1% 100x antibiotic-antimycotic solution, 1% 100x 4-(2-hydroxyethyl)-1-piperazineethanesulfonic acid (HEPES) buffer, 0.1% 1000x 2-mercaptoethanol, 1% L-glutamine (all from Gibco, USA), and 20 ng/ml recombinant mouse granulocyte-macrophage colony-stimulating factor (GM-CSF; Peprotech, USA). The culture medium was replaced on days two, four, and six. Non-adherent and loosely adherent cells were gently collected by pipetting and transferred to Petri dishes. Immature BMDCs, which appeared as floating cells, were collected after one day. The BMDCs were validated by flow cytometry (Supplementary Note 7 and Supplementary Figs. 10 and 11).

Dendritic cell imaging experiment

Glass-bottom dishes were plasma-treated using a plasma cleaner (CUTE-1MPR; Femto Science, South Korea) for 90 s at 100 W and then coated with 10 µg/ml fibronectin for 1 h at room temperature. BMDCs were plated on the fibronectin-coated dishes and incubated for one day. To stimulate BMDC maturation, immature BMDCs were treated with 100 ng/ml lipopolysaccharide (LPS; Sigma, USA) for 18 h. The cells were fixed using 3.4% PFA in 1xPBS for 10 min at room temperature, fluorescently stained, and imaged as described above.

Live cell imaging

The microscope was installed in a cage incubator system (Chamlide HK; Live Cell Instrument, South Korea) that maintained the microscope stage enclosure at 37 °C, 95% humidity, and 5% CO₂ during the experiments. The cancer cells were imaged for 16 h, with DIC images acquired every 5 min (Fig. 3a–e). The dendritic cells were imaged for 20 h, with DIC images acquired every 3 min (Fig. 3f–i).

Live cell tracking and analysis

Live cell trajectories were obtained from sets of DIC images using TrackNet (Fig. 3a,f). Along each trajectory, a set of regions of interest (ROIs) centered on the track coordinates was created and analyzed by CellNet and/or ClassNet (Fig. 3b,g). The cumulative displacements (Fig. 3c) and mean squared displacements (MSDs; Fig. 3i)40 were calculated from the live cell trajectories provided by TrackNet. Cell nucleus images were obtained from the ROIs using CellNet (Fig. 3b), and the cell nucleus size was measured from the segmented nucleus nearest to the track coordinates (Fig. 3d). The classification probabilities were estimated with ClassNet and plotted over time (Fig. 3e,h). See Supplementary Note 8 for pseudocode of the live cell analysis.
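The two trajectory metrics can be sketched in Python/NumPy as below. This sketch assumes that a trajectory is an (n, 2) array of positions sampled at a fixed frame interval (5 min for the cancer cells and 3 min for the DCs in this study), that "cumulative displacement" means the running sum of frame-to-frame step lengths, and that the MSD at each lag is averaged over all overlapping time origins. The function names are hypothetical.

```python
import numpy as np


def cumulative_displacement(track):
    """Running sum of frame-to-frame step lengths; the first entry is 0."""
    track = np.asarray(track, dtype=float)
    steps = np.linalg.norm(np.diff(track, axis=0), axis=1)
    return np.r_[0.0, np.cumsum(steps)]


def msd(track, max_lag=None):
    """MSD(tau) = <|r(t + tau) - r(t)|^2>_t for lags tau = 1 .. max_lag frames."""
    track = np.asarray(track, dtype=float)
    max_lag = len(track) - 1 if max_lag is None else max_lag
    return np.array([np.mean(np.sum((track[lag:] - track[:-lag]) ** 2, axis=1))
                     for lag in range(1, max_lag + 1)])
```

With a frame interval Δt, lag index τ corresponds to a time lag of τΔt (lag 1 is 5 min for the cancer cell data). For purely diffusive motion in two dimensions the MSD grows linearly with lag (MSD = 4Dτ), whereas directed motion makes it grow quadratically, which is why a change in the MSD curve can signal a change in migratory behavior.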

Supplementary information

Supplementary Video 1. Cancer cell tracking demonstration

Supplementary Video 2. Cancer cell tracking demonstration (Track #01)

Supplementary Video 3. Cancer cell tracking demonstration (Track #07)

Supplementary Video 4. Immature dendritic cell tracking demonstration (without lipopolysaccharide (LPS))

Supplementary Video 5. Immature dendritic cell tracking demonstration (without lipopolysaccharide (LPS), Track #04)

Supplementary Video 6. Immature dendritic cell tracking demonstration (with lipopolysaccharide (LPS))

Supplementary Video 7. Immature dendritic cell tracking demonstration (with lipopolysaccharide (LPS), Track #01)

Acknowledgements

We thank all members of the Institute for Basic Science Center for Soft and Living Matter for their feedback. This work was supported by IBS-R020-D1 from the Korean Government.

Author contributions

D.K. and Y.K.C. designed the research; D.K. and Y.M. performed the research; D.K., Y.M., J.M.O. and Y.K.C. wrote the manuscript.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

All software will be made available on GitHub upon publication of the manuscript. The software used for live cell tracking was adapted from Staple20.

Competing interests

D.K., Y.M., and Y.K.C. are inventors on patents filed in Korea (10-2019-0001740(KR) and 10-2019-0001741(KR)). J.M.O. declares no financial or non-financial competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information is available for this paper at 10.1038/s41598-019-54961-x.

References

- 1.Lichtman JW, Conchello J-A. Fluorescence microscopy. Nat. Methods. 2005;2:910–919. doi: 10.1038/nmeth817. [DOI] [PubMed] [Google Scholar]

- 2.Dragunow M. High-content analysis in neuroscience. Nat. Rev. Neurosci. 2008;9:779–788. doi: 10.1038/nrn2492. [DOI] [PubMed] [Google Scholar]

- 3.Caicedo JC, et al. Data-analysis strategies for image-based cell profiling. Nat. Methods. 2017;14:849–863. doi: 10.1038/nmeth.4397. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Dunn GA, Jones GE. Cell motility under the microscope: vorsprung durch Technik. Nat. Rev. Mol. Cell Biol. 2004;5:667–672. doi: 10.1038/nrm1439. [DOI] [PubMed] [Google Scholar]

- 5.Hamm A, Krott N, Breibach I, Blindt R, Bosserhoff AK. Efficient transfection method for primary cells. Tissue Eng. 2002;8:235–245. doi: 10.1089/107632702753725003. [DOI] [PubMed] [Google Scholar]

- 6.Banchereau J, Steinman RM. Dendritic cells and the control of immunity. Nature. 1998;392:245–252. doi: 10.1038/32588. [DOI] [PubMed] [Google Scholar]

- 7.Pearson H. The good, the bad and the ugly. Nature. 2007;447:138–140. doi: 10.1038/447138a. [DOI] [PubMed] [Google Scholar]

- 8.Stewart MP, et al. In vitro and ex vivo strategies for intracellular delivery. Nature. 2016;538:183–192. doi: 10.1038/nature19764. [DOI] [PubMed] [Google Scholar]

- 9.Altschuler SJ, Wu LF. Cellular heterogeneity: do differences make a difference? Cell. 2010;141:559–563. doi: 10.1016/j.cell.2010.04.033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Moen, E. et al. Deep learning for cellular image analysis. Nat. Methods (2019). [DOI] [PMC free article] [PubMed]

- 11.Mobadersany P, et al. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc. Natl. Acad. Sci. 2018;115:E2970–E2979. doi: 10.1073/pnas.1717139115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Sadanandan SK, Ranefall P, Le Guyader S, Wählby C. Automated training of deep convolutional neural networks for cell segmentation. Sci. Rep. 2017;7:7860. doi: 10.1038/s41598-017-07599-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Van Valen DA, et al. Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments. Plos Comput. Biol. 2016;12:e1005177. doi: 10.1371/journal.pcbi.1005177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Christiansen EM, et al. In silico labeling: predicting fluorescent labels in unlabeled images. Cell. 2018;173:792–803.e19. doi: 10.1016/j.cell.2018.03.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Ounkomol C, Seshamani S, Maleckar MM, Collman F, Johnson GR. Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nat. Methods. 2018;15:917–920.

- 16.De Fauw J, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 2018;24:1342–1350. doi: 10.1038/s41591-018-0107-6.

- 17.Ulman V, et al. An objective comparison of cell-tracking algorithms. Nat. Methods. 2017;14:1141–1152. doi: 10.1038/nmeth.4473.

- 18.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012:1–9.

- 19.Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 20.Bertinetto L, Valmadre J, Golodetz S, Miksik O, Torr PHS. Staple: complementary learners for real-time tracking. CVPR. 2016:1401–1409.

- 21.Eisen MB, Spellman PT, Brown PO, Botstein D. Cluster analysis and display of genome-wide expression patterns. Proc. Natl. Acad. Sci. USA. 1998;95:14863–14868. doi: 10.1073/pnas.95.25.14863.

- 22.Badrinarayanan V, Kendall A, Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 23.Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:1137–1149. doi: 10.1109/TPAMI.2016.2577031.

- 24.Szegedy C, et al. Going deeper with convolutions. CVPR. 2015;2015:1–9.

- 25.Lipscomb MF, Masten BJ. Dendritic cells: immune regulators in health and disease. Physiol. Rev. 2002;82:97–130. doi: 10.1152/physrev.00023.2001.

- 26.Hellman P, Eriksson H. Early activation markers of human peripheral dendritic cells. Hum. Immunol. 2007;68:324–333. doi: 10.1016/j.humimm.2007.01.018.

- 27.Everingham M, Van Gool L, Williams CKI, Winn J, Zisserman A. The PASCAL visual object classes challenge 2012 (VOC2012) results. PASCAL VOC. 2012.

- 28.Russakovsky O, et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015;115:211–252. doi: 10.1007/s11263-015-0816-y.

- 29.Kristan M, et al. The sixth visual object tracking VOT2018 challenge results. In: Leal-Taixé L, Roth S, editors. Computer Vision, ECCV 2018 Workshops. 2019. pp. 3–53.

- 30.Steinhaus H. Sur la division des corps matériels en parties. Bull. Acad. Pol. Sci., Cl. III. 1957;4:801–804.

- 31.Tucker AB. Computer science handbook. Taylor & Francis; 2004.

- 32.Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. CVPR. 2016;2016:2818–2826.

- 33.Szegedy C, Ioffe S, Vanhoucke V, Alemi A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. Pattern Recognit. Lett. 2016;42:11–24.

- 34.Csurka G, Larlus D, Perronnin F. What is a good evaluation measure for semantic segmentation? Proc. BMVC. 2013:32.1–32.11.

- 35.MathWorks. evaluateSemanticSegmentation. https://www.mathworks.com/help/vision/ref/evaluatesemanticsegmentation.html (accessed 1 August 2019).

- 36.Stehman SV. Selecting and interpreting measures of thematic classification accuracy. Remote Sens. Environ. 1997;62:77–89. doi: 10.1016/S0034-4257(97)00083-7.

- 37.Fawcett T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006;27:861–874. doi: 10.1016/j.patrec.2005.10.010.

- 38.Hand DJ, Till RJ. A simple generalisation of the area under the ROC Curve for multiple class classification problems. Mach. Learn. 2001;45:171–186. doi: 10.1023/A:1010920819831.

- 39.Lutz MB, et al. An advanced culture method for generating large quantities of highly pure dendritic cells from mouse bone marrow. J. Immunol. Methods. 1999;223:77–92. doi: 10.1016/S0022-1759(98)00204-X.

- 40.Tarantino N, et al. TNF and IL-1 exhibit distinct ubiquitin requirements for inducing NEMO–IKK supramolecular structures. J. Cell Biol. 2014;204:231–245. doi: 10.1083/jcb.201307172.

Supplementary Materials

Supplementary Video 1. Cancer cell tracking demonstration

Supplementary Video 2. Cancer cell tracking demonstration (Track #01)

Supplementary Video 3. Cancer cell tracking demonstration (Track #07)

Supplementary Video 4. Immature dendritic cell tracking demonstration (without lipopolysaccharide (LPS))

Supplementary Video 5. Immature dendritic cell tracking demonstration (without LPS, Track #04)

Supplementary Video 6. Immature dendritic cell tracking demonstration (with LPS)

Supplementary Video 7. Immature dendritic cell tracking demonstration (with LPS, Track #01)

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

All software will be made available on GitHub once the manuscript is published. The software used for live cell tracking is based on Staple20.