Abstract

Objective: Accurate diagnosis and prognosis are essential for selecting and planning lung cancer treatment. With the rapid advance of medical imaging technology, whole slide imaging (WSI) in pathology is becoming a routine clinical procedure. This creates both a need for and a challenge to computer-aided diagnosis systems that can analyze pathology images accurately and efficiently. Recently, artificial intelligence, especially deep learning, has shown great potential in pathology image analysis tasks such as tumor region identification, prognosis prediction, tumor microenvironment characterization, and metastasis detection. Materials and Methods: In this review, we aim to provide an overview of current and potential applications of AI methods in pathology image analysis, with an emphasis on lung cancer. Results: We outlined the current challenges and opportunities in lung cancer pathology image analysis, discussed recent deep learning developments that could impact digital pathology in lung cancer, and summarized existing applications of deep learning algorithms in lung cancer diagnosis and prognosis. Discussion and Conclusion: As the technology advances, digital pathology could have a substantial impact on lung cancer patient care. We point out several promising future directions for lung cancer pathology image analysis, including multi-task learning, transfer learning, and model interpretation.

Keywords: lung cancer, deep learning, pathology image, computer-aided diagnosis, digital pathology, whole-slide imaging

1. Introduction

Lung cancer is the leading cause of death from cancer in the United States and around the world [1,2,3]. Disease progression and response to treatment in lung cancer vary widely among patients. Therefore, accurate diagnosis is crucial in treatment selection and planning for each lung cancer patient. The microscopic examination of tissue slides remains an essential step in cancer diagnosis. It requires the pathologist to recognize subtle histopathological patterns in highly complex tissue images. This process is time-consuming and subjective, and it is subject to considerable inter- and intra-observer variation [4,5]. Hematoxylin and eosin (H&E) staining is the most widely used tissue staining method [6]; with the advance of technology, whole slide imaging (WSI) of H&E-stained tissue slides is becoming a routine clinical procedure and has generated a massive number of pathology images that capture histological details at high resolution. Currently, the limited capacity of pathology image analysis algorithms is creating a bottleneck in digital pathology, constraining the ability of this technology to have greater clinical impact.

Recent developments in pathology image analysis [7,8] have led to new algorithms and software tools for clinical diagnosis and research into disease mechanisms. In 2011, the seminal works by Beck et al. [9] and Yuan et al. [10] applied pathology image analysis to breast cancer prognosis. Since then, computer algorithms for pathology image analysis have been developed to facilitate cancer diagnosis [11,12,13,14,15], grading [16,17,18,19,20], and prognosis [21,22]. In 2017, two studies [21,22] demonstrated the feasibility of utilizing morphological features in pathology slides for lung cancer prognosis, which opened up an exciting new direction for refining lung cancer prognosis using computer-aided image analysis.

Recently, artificial intelligence (AI) has demonstrated remarkable success in medical image analysis owing to the rapid progress of “deep learning” algorithms, which have shown increasing power to solve complex, real-world problems in computer vision and image analysis [23,24,25]. The development of advanced deep learning algorithms could empower pathology image analysis and assist pathologists in challenging diagnostic tasks such as identifying neoplasia, detecting tumor metastasis, and quantifying mitoses and inflammation.

In this review, we aimed to provide an overview of current and potential applications for AI methods in lung cancer pathology image analysis. We first outlined the current challenges and opportunities in lung cancer pathology image analysis. Next, we discussed the recent deep learning developments that could potentially impact digital pathology in lung cancer. Finally, we summarized the existing applications of deep learning algorithms in lung cancer diagnosis and prognosis.

2. Current Challenges and Opportunities in Lung Cancer Pathology Image Analysis

2.1. Diagnosis: Tumor Detection and Classification

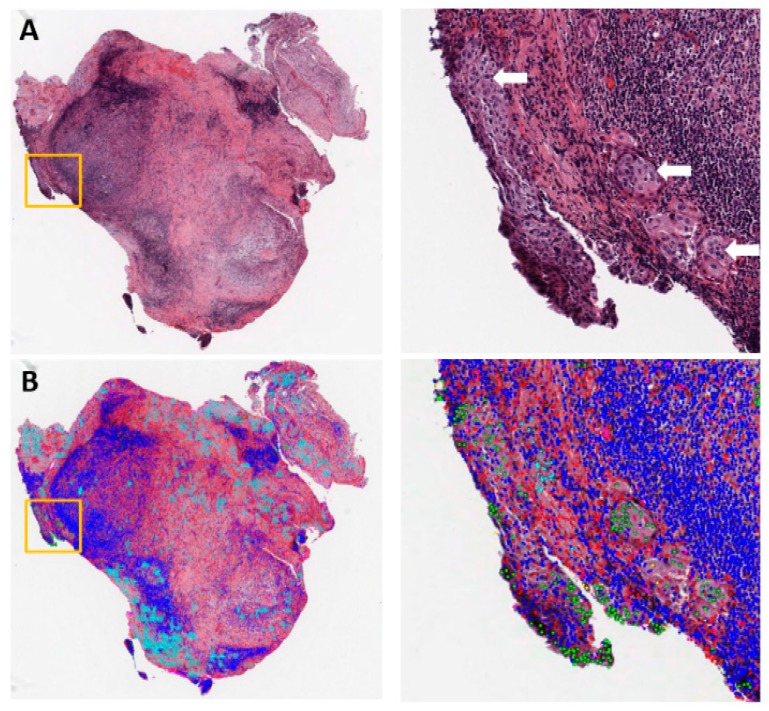

Pathology inspection of tissue slides is an important step in lung cancer diagnosis. For example, in tumor, node, and metastasis (TNM) staging [26], the node (N) stage (regional lymph node involvement) is determined by examining pathology slides for tumor invasion of the lymph nodes. Identifying tumor cells in dissected lymph nodes requires highly skilled pathologists and is laborious, especially when many lymph nodes are dissected or the metastatic region(s) are small (Figure 1A). Computer-aided automatic detection of tumor cells in lymph nodes could substantially reduce the false negative rate, which would allow for better early detection and treatment of lung cancer, improve the accuracy of TNM staging, speed up the examination process, and reduce the workload for pathologists (Figure 1B).

Figure 1.

Example of metastasis detection in the lymph node of a lung adenocarcinoma patient. (A) Left: Hematoxylin and eosin (H&E)-stained lymph node pathology slide (40×). Data were collected by the National Lung Screening Trial (NLST). In the orange box, tumor cells have begun to invade the capsule. Right: Region of interest in the orange box on the left, with white arrows pointing to tumor cells. (B) Cell classification result overlaid on the H&E image. Green: Tumor nuclei; blue: Lymphocytes; red: Stromal nuclei; cyan: Necrosis.

Histological classification of tumor subtypes is another application of pathology image analysis in lung cancer diagnosis. According to each tumor’s histopathological features, lung cancer can be divided into non-small cell lung cancer (NSCLC) and small cell lung cancer (SCLC). NSCLC accounts for 85% of lung cancer cases and can be further separated into lung adenocarcinoma (ADC), squamous cell carcinoma (SCC), and large cell carcinoma. Each subtype of lung cancer has a distinct biological origin and mechanism(s). Lung ADC accounts for 50% of lung malignancies, with remarkable heterogeneity in clinical, radiological, molecular, and pathological features [27]. According to growth pattern and morphology, ADC can be further divided into subgroups: lepidic, papillary, acinar, micropapillary, and solid patterns [28]. These five morphological subtypes of lung ADC have different prognostic outcomes [29,30] and treatment responses [31]. Although extensive research has been conducted into ADC subtyping, classifying these subtypes remains challenging for two main reasons: (1) poor consistency even among expert pathologists, and (2) the co-existence of multiple morphological subtypes across one or more slides from the same patient. As a result, accurate diagnosis requires quantifying the proportion of each subtype, which can be a very challenging and time-consuming task for pathologists. Currently, common practice is to use the dominant subtype to represent the patient, which discards information about the other subtypes. Even to determine the dominant subtype of ADC, pathologists usually need to review multiple tissue slides. Other tumor classification tasks for NSCLC, such as differential status classification [32] and ADC/SCC classification [22], are subject to similar technical challenges.
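To make the difference between reporting subtype proportions and reporting only the dominant subtype concrete, the sketch below aggregates patch-level subtype calls for one patient. It assumes a hypothetical upstream classifier has already assigned each tumor patch to one of the five ADC patterns; the function names and labels are illustrative, not taken from any cited study.

```python
from collections import Counter

# The five ADC growth patterns; labels are illustrative strings.
SUBTYPES = ("lepidic", "papillary", "acinar", "micropapillary", "solid")


def subtype_proportions(patch_labels):
    """Return the fraction of tumor patches assigned to each ADC subtype.

    patch_labels: subtype calls for every tumor patch of one patient, e.g. from
    a (hypothetical) patch classifier run over all of that patient's slides.
    """
    counts = Counter(patch_labels)
    unknown = set(counts) - set(SUBTYPES)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no tumor patches")
    return {s: counts.get(s, 0) / total for s in SUBTYPES}


def dominant_subtype(proportions):
    """Common practice: summarize the patient by the most frequent subtype."""
    return max(proportions, key=proportions.get)


labels = ["acinar"] * 6 + ["solid"] * 3 + ["lepidic"]
props = subtype_proportions(labels)
print(props)
print(dominant_subtype(props))
```

Here the dominant-subtype summary reports "acinar", while the proportion vector also retains the 30% solid component that the single label discards.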

In addition, tumor spread through air spaces (STAS) has been shown to be a significant clinical factor associated with tumor recurrence and poor patient survival [33]. Identifying and quantifying STAS requires detailed inspection of whole tissue slides by experienced pathologists. Therefore, pathology image analysis tools for fast and accurate identification of STAS would be useful to pathologists.

2.2. Tumor Microenvironment (TME) Characterization Based on Substructure Segmentation

In addition to tumor grade and subtype [34], pathology images can provide insights into the TME. An essential step in the quantitative characterization of the TME is segmenting different types of tissue substructures and cells in pathology images. Such segmentation serves as the basis for various image analysis tasks, such as quantifying cell composition, spatial organization, and substructure-specific morphological properties. The lung cancer TME is mainly formed by tumor cells, lymphocytes, stromal cells, macrophages, blood vessels, and other components. Studies in lung cancer have shown tumor-infiltrating lymphocytes (TIL) to be positive prognostic factors [35] and angiogenesis to be negatively associated with survival outcome [36], while stromal cells have complex prognostic effects [37,38]. Traditional image processing methods involve feature definition, feature extraction, and/or segmentation. Although these methods have been applied to segment lymphocytes and to analyze the spatial organization of TIL [39] and stromal cells [9] in the TME, quantitative characterization of the lung cancer TME remains challenging for the following reasons: (1) The composition of the lung cancer TME is complex and heterogeneous. In addition to the aforementioned cell types, other structures, including the bronchus, cartilage, and pleura, are often found in lung pathology slides, and this complexity and heterogeneity make segmentation and traditional feature definition difficult. (2) Cell spatial organization (such as the spatial distributions of, and interactions among, different types of cells), despite its important role in the TME, is much more challenging to characterize than the numbers or proportions of different cell types. Current studies mainly focus on the proportions of different cell types while ignoring the complex spatial organization of cells, which could lead to limited and contradictory findings about the roles of different cell types in the TME. (3) In H&E-stained slides, color may vary substantially depending on staining conditions and the time lapse between slide preparation and scanning. Traditional image processing methods based on handcrafted feature extraction cannot easily overcome these impediments; robust methods that adapt to diverse tissue structures and color conditions are required.

2.3. Prognosis and Precision Medicine

One of the most important potential applications of digital pathology in cancer is to predict patient prognosis and response to treatment based on histopathological or morphological phenotyping, in order to facilitate precision medicine. While specific pathological features, such as the aforementioned tumor grade and subtype, have been reported as significant prognostic factors, directly linking pathology images with survival outcomes remains largely unexplored. Similar opportunities exist in fully utilizing pathology images to predict treatment response. In particular, immunotherapy has been shown to be effective for some NSCLC patients [40]. Although several molecular biomarkers, such as PD-L1 expression [40] and neoantigen load [41], have been identified as predictive factors, predicting immunotherapy response remains a major challenge. The spatial organization of immune cells and the spatial distribution of TIL may be important factors in predicting immunotherapy response. However, automatically recognizing different types of cells and characterizing cell spatial organization are currently challenging tasks for pathology image analysis and require the development of new algorithms.

2.4. Association and Integration with Patient Genomic and Genetic Profiles

Another important emerging research field focuses on the relationship between patient genetic/genomic profiles and pathological/morphological phenotypes, which is essential for understanding the biological mechanisms underpinning cancer development and for selecting targeted therapy. Researchers have found an association between morphological features and tumor genetic mutations such as EGFR [42] and KRAS mutations [43]. The challenge in this area mainly lies in the facts that (1) both imaging and genomic/genetic data are extremely high-dimensional, and (2) the interaction between imaging and genomic features is largely unknown. Furthermore, molecular profiling data generate deep characterizations of the genetic, transcriptional, and proteomic events in tumors, while pathology images capture the tumor histology, growth pattern, and interactions with the surrounding microenvironment. Therefore, integrating imaging and molecular features could provide a more comprehensive view of individual tumors and potentially improve prediction of patient outcomes. However, how to construct and train a model that is capable of tackling such sophisticated data remains a difficult problem.

In summary, pathology image analysis is anticipated to become an important tool to facilitate precision medicine and basic research in cancer.

3. Advantages of Deep Learning Methods

To overcome the aforementioned challenges, various image processing and machine learning methods have been proposed and have made considerable progress. Nevertheless, deep learning methods offer important advantages over non-deep-learning methods (also called shallow-learning methods).

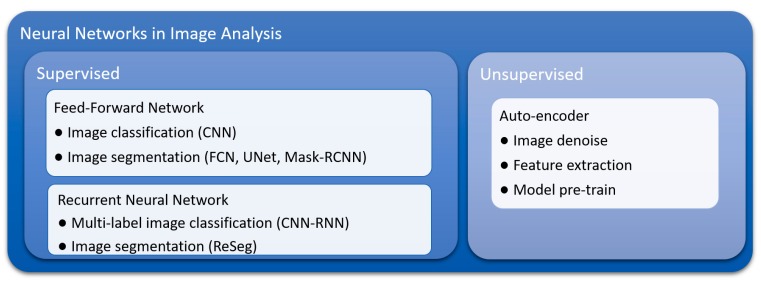

Currently, convolutional neural networks (CNNs) are the most frequently used deep learning models for image classification, including tumor detection in pathology images of breast cancer [44,45], renal cell carcinoma [46], prostate cancer [47], and head and neck cancer [48]. Several types of neural networks for image segmentation [49] have been derived from CNNs, including fully convolutional networks (FCNs) [50] and mask-regional convolutional neural networks (Mask-RCNNs) [51]. Recurrent neural networks (RNNs), which are well known for modeling sequential data in tasks such as speech recognition, have also been explored for multi-label image classification [52] and image segmentation [53]. In addition to the aforementioned supervised deep learning models, the autoencoder, an unsupervised deep learning model, has shown promise in pathology image analysis for model pre-training [39], cell detection [54], and image feature extraction [55]. The taxonomy of common neural networks used in image analysis is summarized in Figure 2.

Figure 2.

Taxonomy of common neural networks for image analysis. CNN: Convolutional neural network; FCN: Fully convolutional neural network; Mask-RCNN: Mask-regional convolutional neural network.

3.1. Inherent Characteristics and Advantages of Convolutional Neural Networks (CNNs)

Inspired by the working mechanisms of the brain, deep neural networks (the models used in “deep learning”) have one or more “hidden” layers between the input and output layers. Each layer contains many neurons; in a convolutional layer these are called kernels (or filters). Each kernel is a mathematical function that takes inputs and computes an output. In a CNN model, a convolution kernel computes a feature from a local region of the input, called its “receptive field”. The term “convolutional” refers to sliding the kernel across the input so that it is applied at every location, producing a “feature map” as the output of the convolution layer. This operation was inspired by the functional mechanism of the visual cortex, and it makes CNNs well suited to many image analysis tasks.
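The sliding-kernel operation described above can be sketched in a few lines of NumPy. This is a minimal illustration assuming a single-channel image, stride 1, and no padding; the edge-detecting kernel is hand-set for clarity, whereas in a CNN the kernel weights are learned during training.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding); return the feature map.

    Like most deep learning frameworks, this computes cross-correlation
    (the kernel is not flipped), which the CNN literature calls "convolution".
    """
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Receptive field: the kh x kw input window currently under the kernel.
            field = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(field * kernel)
    return out


# Toy 5x5 grayscale image with a vertical edge between columns 1 and 2.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-set vertical-edge kernel (illustrative; a CNN would learn its weights).
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
fmap = conv2d_valid(image, kernel)
print(fmap)
```

The feature map responds strongly (value 3) wherever the receptive field straddles the edge and is zero over the uniform region, which is the sense in which a kernel "computes a feature" at each location.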

A deep learning model has two important characteristics: (1) it can construct and extract flexible feature representations from the input data, and (2) its multiple layers and many kernels enable it to approximate virtually any complex function of the extracted features. Taken together, deep neural networks can automatically extract features and solve highly complex prediction problems. In contrast, traditional machine learning methods involve two major steps: (1) defining the features, and (2) constructing models using these handcrafted features. Compared with traditional methods, deep learning models have the following advantages:

First, deep learning models greatly simplify or remove the task of manually defining features. Manual feature extraction is very challenging and time consuming, especially in the following two scenarios: (1) the prediction problem is complex, and/or (2) there is limited prior knowledge about the relationship between input data and the outcomes to be predicted. Both scenarios are true of pathology image analysis, as the prediction problems (such as using pathology images to predict patient outcomes or recognizing various tissue structures and cells from H&E-stained images) are very complex, and despite the accumulated knowledge from pathologists, little is known about which quantitative image features predict the outcomes. As a result, the advance of pathology image analysis had been slow and limited until the recent development of deep learning.

Second, deep learning computations are highly parallelizable. As a result, deep learning can fully leverage the parallel computing power of recent GPU (graphics processing unit) hardware. With GPU-aided computation, processing (classifying or segmenting) a 1000 × 1000 pixel image usually takes less than one second for a deep learning model, much faster than traditional feature extraction steps [56,57] and non-deep-learning-based image segmentation methods [58]. Furthermore, because deep learning does not require handcrafted features, it can handle much more complex prediction problems and can recognize multiple objects simultaneously. For example, CNNs have shown great power in distinguishing as many as 1000 object categories [24].

Other advantages of deep learning methods include the following: (1) deep learning models make full use of image data, as every pixel can contribute to the prediction; (2) CNN models are largely insensitive to object position in the image, because convolution is translation-equivariant (a shifted input produces a correspondingly shifted feature map) and pooling layers add a degree of translation invariance; and (3) as discussed in the next section, extensive data augmentation during training makes CNN models robust to different staining conditions in pathology image analysis.
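The position-insensitivity point can be checked numerically. The minimal sketch below, assuming a single random kernel and an object placed away from the image borders, shows that moving an object moves its feature-map response by the same offset without changing it. Exact equivariance holds only for stride-1 convolution away from the borders; strided layers and padding make a full CNN only approximately shift-insensitive.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def feature_map(image, kernel):
    """Valid cross-correlation: each output is the kernel dotted with one window."""
    windows = sliding_window_view(image, kernel.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, kernel)


rng = np.random.default_rng(0)
kernel = rng.normal(size=(3, 3))

# A small random "object" (e.g. a nucleus-like blob) on an empty background.
blob = rng.random((4, 4))
image = np.zeros((20, 20))
image[5:9, 5:9] = blob

# The same object moved 6 pixels down and 3 pixels right.
shifted = np.zeros((20, 20))
shifted[11:15, 8:12] = blob

fm = feature_map(image, kernel)
fm_shifted = feature_map(shifted, kernel)

# The response pattern is identical, just displaced by the same (6, 3) offset.
print(np.allclose(fm[3:9, 3:9], fm_shifted[9:15, 6:12]))
```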

3.2. Flexibility of Training and Model Construction Strategies of Deep Learning Methods

The training process of a deep learning model optimizes the kernel weights to minimize the difference between predictions and ground truth on the training set. Traditional image processing methods often have difficulty adapting to varying staining and lighting conditions [59], whereas deep learning models typically overcome this problem through data augmentation [24,60]. In pathology image analysis, data augmentation means the in silico creation of new training images by modifying the color and shape of existing images. Compared with traditional image processing methods, deep-learning-based image recognition and segmentation models have shown higher stability on immunohistochemical (IHC) images [61] and, in our experience, on H&E images. Notably, networks with multiple backbones offer a way to handle pathology images captured at different resolutions [44]. Moreover, the appropriate image resolution depends on the research goal; for example, lymphocytes and necrosis are best recognized at different scales [39].
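A minimal NumPy sketch of the augmentation idea follows, assuming square RGB patches scaled to [0, 1]. The specific transforms (random flips, 90-degree rotations, and an independent per-channel scale and offset as a crude stand-in for stain variation) are illustrative choices, not the augmentation scheme of any cited study; stain-specific methods exist but are not shown here.

```python
import numpy as np


def augment_patch(patch, rng, max_jitter=0.1):
    """Return a randomly flipped, rotated and color-jittered copy of an RGB patch.

    patch: float array of shape (H, H, 3) with values in [0, 1]. A square patch
    is assumed so that 90-degree rotations preserve the shape.
    """
    out = patch
    # Geometric augmentation: tissue has no canonical orientation, so flips
    # and 90-degree rotations yield plausible new training images.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    out = np.rot90(out, k=int(rng.integers(4)), axes=(0, 1))
    # Color augmentation: crudely mimic staining/scanner variation by
    # perturbing each channel's intensity scale and offset.
    scale = 1.0 + rng.uniform(-max_jitter, max_jitter, size=3)
    shift = rng.uniform(-max_jitter, max_jitter, size=3)
    out = out * scale + shift
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(42)
patch = rng.random((64, 64, 3))
# Eight distinct training variants generated from one original patch.
batch = [augment_patch(patch, rng) for _ in range(8)]
print(batch[0].shape)
```

In practice such transforms are applied on the fly each epoch, so the model rarely sees exactly the same colors twice.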

Another advantage of deep learning methods is the flexibility of neural network construction, which involves selecting a loss function and designing the network structure. Popular deep learning frameworks, including Keras, TensorFlow, and PyTorch, have modularized the model construction process, which greatly reduces the effort required to modify and adapt an existing network. A neural network is trained by minimizing a loss function, a measure of the difference between ground truth and prediction. Although the most common loss function for CNN classification tasks (e.g., differentiating tumor vs. non-malignant tissues) is cross-entropy, a wide range of loss functions can be selected to meet the needs of other problems. For example, Mobadersany et al. designed a negative partial likelihood loss function for survival outcomes in glioma and glioblastoma patients [62].
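The two kinds of loss mentioned above can be written down directly. The sketch below shows a binary cross-entropy for classification and a negative Cox partial log-likelihood for censored survival data (Breslow handling of tied times, averaged over observed events). It is a generic formulation written for illustration; Mobadersany et al.'s exact implementation may differ in normalization and tie handling.

```python
import numpy as np


def binary_cross_entropy(p, y, eps=1e-12):
    """Mean cross-entropy between predicted probabilities p and 0/1 labels y."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)
    y = np.asarray(y, dtype=float)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def cox_neg_partial_log_likelihood(risk, time, event):
    """Negative Cox partial log-likelihood (Breslow ties), averaged over events.

    risk:  predicted log-risk score per patient (e.g. the network output)
    time:  follow-up time per patient
    event: 1 if the event (e.g. death or recurrence) was observed, 0 if censored
    """
    risk = np.asarray(risk, dtype=float)
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=int)
    loss = 0.0
    for i in np.flatnonzero(event):
        at_risk = time >= time[i]  # patients still under observation at time[i]
        # log of the summed hazards over the risk set, computed stably
        loss -= risk[i] - np.logaddexp.reduce(risk[at_risk])
    return float(loss / max(event.sum(), 1))


time = [1.0, 2.0, 3.0, 4.0]
event = [1, 1, 0, 1]  # the third patient is censored
# Risk scores that rank early events highest should give a lower loss.
good = cox_neg_partial_log_likelihood([3.0, 2.0, 1.0, 0.0], time, event)
bad = cox_neg_partial_log_likelihood([0.0, 1.0, 2.0, 3.0], time, event)
print(good < bad)
```

Censored patients contribute only through the risk sets of earlier events, which is what lets this loss use survival data that a plain classification loss could not.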

Flexibility in model architecture makes it relatively easy for deep learning models to integrate different sources and formats of information, such as modeling interactions between imaging and genomic features in pathology image analysis. In Mobadersany et al.’s research, the final feature layer generated from pathology images was concatenated with genomic features; this integrated model outperformed the model that used image data alone in predicting patient prognosis [62]. Notably, recent developments in neural networks are making deep learning models even more powerful through increasingly complex architectures. By combining a CNN with long short-term memory (LSTM) [63], a type of recurrent neural network popular in natural language processing, Zhang et al. designed a medical diagnostic model [64] that generated pathology reports and simultaneously visualized the model’s attention (i.e., which part of the input image contributed to a given word in the report). This model has great potential to facilitate pathologists’ daily practice.
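The concatenation step can be sketched as follows. This is a minimal illustration, assuming per-patient image embeddings (standing in for a CNN's final feature layer) and binary genomic markers are already available; here both are random stand-ins, and a single linear layer stands in for whatever layers follow the concatenation in the published model.

```python
import numpy as np

rng = np.random.default_rng(0)


def fuse_and_score(image_features, genomic_features, weights, bias=0.0):
    """Concatenate per-patient image and genomic features, then apply a linear
    layer to produce one risk score per patient.

    image_features:   (n_patients, d_img), e.g. a CNN's final feature layer
    genomic_features: (n_patients, d_gen), e.g. mutation indicators
    weights:          (d_img + d_gen,), learned jointly in a real model
    """
    fused = np.concatenate([image_features, genomic_features], axis=1)
    return fused @ weights + bias


n, d_img, d_gen = 4, 8, 2
img = rng.normal(size=(n, d_img))          # stand-in for CNN embeddings
gen = rng.integers(0, 2, size=(n, d_gen))  # stand-in for binary genomic markers
w = rng.normal(size=d_img + d_gen)
risk = fuse_and_score(img, gen, w)
print(risk.shape)
```

Because the fused vector feeds trainable layers, gradients from the outcome flow back into both the image branch and the genomic weights, so the two modalities are fitted jointly rather than in separate models.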

3.3. Suitability for Transfer Learning

For traditional machine learning methods, applying knowledge derived from one problem to a similar problem often requires complex mathematical derivation [65], and adapting a model developed on one dataset to a similar dataset also requires special procedures [66]. In contrast, transfer learning in deep learning makes it relatively straightforward to adapt models developed on one dataset or problem to other similar datasets or prediction problems. Transfer learning therefore enables us to solve some prediction problems that are difficult because of a lack of training data by leveraging existing datasets from similar or related tasks. This is especially important for biomedical applications such as pathology image analysis, where labeled training sets are still limited. For example, the Cancer Genome Atlas (TCGA), one of the largest publicly available pathology image datasets, contains only hundreds of pathology images for each cancer type [67], while the ImageNet dataset contains 14 million labeled images for image recognition tasks. A good strategy for developing deep learning models for pathology image analysis is to start from a pre-trained model (e.g., a model initialized with parameters learned on the ImageNet dataset) and adapt the learned feature extractors to the pathology image dataset. In this way, large existing image datasets such as ImageNet can be leveraged for pathology image analysis. Studies have shown that this strategy leads to faster convergence and higher accuracy than training from scratch [68]. In recent years, transfer learning has been widely used in deep learning studies of pathology image analysis.
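The simplest form of this strategy, keeping a pre-trained feature extractor frozen and training only a new classification head on the small target dataset, is sketched below in NumPy. The "pre-trained" backbone is a hypothetical stand-in (a fixed random projection plus ReLU), and the target labels are synthetic; a real pipeline would load an ImageNet-trained CNN from a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a backbone pre-trained on a large dataset such as
# ImageNet: a fixed random projection followed by ReLU.
W_backbone = rng.normal(size=(100, 32)) / np.sqrt(100)


def extract_features(x):
    """Frozen feature extractor: its weights are never updated below."""
    return np.maximum(x @ W_backbone, 0.0)


def train_head(features, labels, lr=0.1, epochs=300):
    """Fit only a new logistic-regression head on top of the frozen features."""
    w = np.zeros(features.shape[1])
    b = 0.0
    losses = []
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        p_safe = np.clip(p, 1e-12, 1 - 1e-12)
        losses.append(-np.mean(labels * np.log(p_safe)
                               + (1 - labels) * np.log(1 - p_safe)))
        grad = p - labels  # derivative of cross-entropy w.r.t. the logits
        w -= lr * features.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b, losses


# Small synthetic target dataset: 60 flattened "patches" with binary labels
# constructed (hypothetically) to depend on the frozen features.
x = rng.normal(size=(60, 100))
feats = extract_features(x)
score = feats @ rng.normal(size=32)
y = (score > np.median(score)).astype(float)
w, b, losses = train_head(feats, y)
print(losses[0], losses[-1])
```

Full fine-tuning goes one step further and also updates the backbone weights, usually with a smaller learning rate; freezing is the cheaper option when labeled pathology data are very scarce.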

4. Applications of Deep Learning in Lung Cancer Pathology Image Analysis

To summarize current progress in applying deep learning to lung cancer pathology image analysis, a literature survey was performed using the keywords “deep learning”, “pathology image”, and “lung cancer”. All eligible deep learning models published before October 2019 were categorized, sorted by publication date, and summarized in Table 1.

Table 1.

Summary of deep learning models for lung cancer pathology image analysis.

| Topic | Lung Cancer Subtype | Task | Model | Prognostic Value Reported? | Year | Ref. |
|---|---|---|---|---|---|---|
| Lung cancer detection | ADC | Malignant vs. non-malignant classification | CNN | Yes | 2018 | [69] |
| Lung cancer detection | NSCLC and SCLC | Malignant vs. non-malignant classification | CNN | No | 2018 | [70] |
| Lung cancer detection | ADC and SCC | Malignant vs. non-malignant classification | CNN | No | 2019 | [71] |
| Lung cancer detection | ADC | Malignant vs. non-malignant classification | CNN | No | 2019 | [72] |
| Lung cancer detection | SCC | Malignant vs. non-malignant classification | CNN | No | 2019 | [72] |
| Lung cancer detection | Not specified | Malignant vs. non-malignant classification | CNN | No | 2019 | [73] |
| Lung cancer classification | ADC and SCC | ADC vs. SCC vs. non-malignant classification | CNN | No | 2018 | [74] |
| Lung cancer classification | ADC and SCC | Mutation status prediction | CNN | No | 2018 | [74] |
| Lung cancer classification | ADC | Histological subtype classification | CNN | No | 2019 | [75] |
| Lung cancer classification | NSCLC | PD-L1 status prediction | FCN | No | 2019 | [76] |
| Lung cancer classification | ADC and SCC | ADC vs. SCC classification | CNN | No | 2019 | [71] |
| Lung cancer classification | ADC and SCC | ADC vs. SCC classification | CNN | No | 2019 | [72] |
| Lung cancer classification | ADC and SCC | Transcriptome subtype classification | CNN | No | 2019 | [72] |
| Lung cancer classification | ADC and SCC | ADC vs. SCC vs. non-malignant classification | CNN | No | 2019 | [77] |
| Lung cancer classification | ADC | Histological subtype classification | CNN | No | 2019 | [78] |
| Microenvironment analysis | ADC and SCC | TIL positive vs. negative classification | CNN | Yes | 2018 | [39] |
| Microenvironment analysis | ADC and SCC | Necrosis positive vs. negative classification | CNN | Yes | 2018 | [39] |
| Microenvironment analysis | ADC | Tumor vs. stromal cell vs. lymphocyte classification | CNN | Yes | 2018 | [79] |
| Microenvironment analysis | ADC | Microvessel segmentation | FCN | Yes | 2018 | [80] |
| Microenvironment analysis | ADC | Computational staining of 6 different nuclei types | Mask-RCNN | Yes | 2019 | [81] |
| Microenvironment analysis | ADC and SCC | TIL positive vs. negative classification | CNN | No | 2019 | [82] |
| Other | Early-stage NSCLC | Nucleus boundary segmentation | CNN | Yes | 2017 | [83] |
| Other | Not specified | Nucleus segmentation | U-Net + CRF | No | 2019 | [84] |

ADC: Adenocarcinoma; CNN: Convolutional neural network; CRF: Conditional random field; FCN: Fully convolutional neural network; Mask-RCNN: Mask-regional convolutional neural network; NSCLC: Non-small cell lung cancer; SCC: Squamous cell carcinoma; SCLC: Small cell lung cancer; TIL: Tumor-infiltrating lymphocytes.

4.1. Lung Cancer Diagnosis

Several deep learning models for lung cancer diagnosis have been proposed to facilitate the work of pathologists and researchers. Because a full-size WSI is typically at the gigapixel level, much smaller image patches (around 300 × 300 pixels) extracted from the WSI are often used as model inputs. For example, Wang et al. trained a CNN model to classify each 300 × 300 pixel image patch from H&E-stained lung ADC WSIs as malignant or non-malignant, achieving an overall classification accuracy of 89.8% in the testing set [69]. By applying this classification model over a sliding window across a WSI, a heatmap of the probability of malignancy for each patch could be generated to facilitate tumor detection and to study tumor spatial distribution, shape, and boundary features [69]. This approach enables fast tumor detection even when the tumor region is small, which could greatly assist pathologists in future clinical diagnosis. Similarly, Li et al. trained several CNNs with different architectures to classify 256 × 256 pixel image patches as malignant vs. non-malignant and compared their performance [70]. In addition to malignancy detection, deep learning models have also been developed to distinguish lung cancer subtypes. Coudray et al. trained a CNN to classify lung cancer image patches as non-malignant, ADC, or SCC [74]. Coudray et al. also trained a CNN to predict the mutation status of six frequently mutated genes in lung ADC patients from 512 × 512 pixel pathology image patches [74]; in the validation dataset, the areas under the receiver operating characteristic (ROC) curve (AUCs) for classifying mutated vs. non-mutated status ranged from 0.733 to 0.856 across these six genes.
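The sliding-window heatmap procedure can be sketched as follows. This minimal version assumes the image fits in memory as an array and that `classify_patch` is a hypothetical trained patch classifier returning a malignancy probability; the toy classifier below simply scores darker patches higher and is purely illustrative. For real gigapixel slides, regions would be read tile by tile from the slide file rather than held in memory.

```python
import numpy as np


def patch_heatmap(wsi, classify_patch, patch=300, stride=300):
    """Tile an image into patch x patch windows and record one probability each.

    wsi:            (H, W, 3) array (a small in-memory stand-in for a WSI)
    classify_patch: callable mapping a (patch, patch, 3) array to a value in
                    [0, 1], e.g. a trained CNN (hypothetical here)
    stride:         step between windows; stride < patch gives overlapping tiles
    """
    h, w = wsi.shape[:2]
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    heat = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            y, x = r * stride, c * stride
            heat[r, c] = classify_patch(wsi[y:y + patch, x:x + patch])
    return heat


def toy_classifier(p):
    """Illustrative stand-in: darker patches get a higher 'malignancy' score."""
    return float(1.0 - p.mean())


img = np.ones((900, 1200, 3))
img[300:600, 600:900] = 0.2  # one dark region
heat = patch_heatmap(img, toy_classifier)
print(heat.shape)
```

Each heatmap cell corresponds to one patch, so thresholding the heatmap yields a coarse tumor mask from which region shape and boundary features can be computed.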

4.2. Lung Cancer Microenvironment Analysis

As the TME is increasingly acknowledged as an important factor affecting tumor progression and immunotherapy response, several deep learning studies have characterized the lung cancer TME. For example, Saltz et al. developed a CNN model to distinguish lymphocytes from necrosis or other tissues at the image-patch level across multiple cancer types, including lung ADC and SCC [39]. By quantifying the spatial organization of the detected lymphocyte patches in each WSI, Saltz et al. reported relationships among TIL distribution patterns, prognosis, and lymphocyte fractions. Wang et al. developed a CNN model to differentiate tumor cells, stromal cells, and lymphocytes at the single-cell level in lung ADC pathology images [79]. In Wang et al.’s study, conventional image processing methods were used to extract small image patches centered on cell nuclei, and these patches were then classified into cell types using a CNN. A prognostic model based on image features characterizing the proportion and distribution of the detected cells was then developed in the training set, and its prognostic performance was validated in two independent datasets. Another important application in characterizing the lung cancer TME is angiogenesis quantification through automatic microvessel segmentation; angiogenesis has been reported to be an important prognostic factor. Yi et al. developed a microvessel segmentation model for lung ADC pathology slides using an FCN model [80]. The model also showed generalizability to breast cancer and kidney cancer slides. Whereas manual segmentation of microvessels is laborious and error-prone, deep-learning-based segmentation is fast and can quantify the area and spatial distribution of microvessels.
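Once cells have been detected and classified, composition and spatial features can be computed from their centroids. The sketch below computes cell-type proportions and one example spatial feature, the mean distance from each tumor cell to its nearest lymphocyte. These specific features are hypothetical illustrations, not the feature set used by Wang et al. or Saltz et al.

```python
import numpy as np


def tme_features(coords, cell_types):
    """Simple composition and spatial features from detected cells.

    coords:     (n, 2) nucleus centroid positions (pixels or microns)
    cell_types: length-n labels such as "tumor", "stroma", "lymphocyte"
    Returns cell-type proportions plus the mean tumor-to-nearest-lymphocyte
    distance (a hypothetical proxy for lymphocyte infiltration).
    """
    coords = np.asarray(coords, dtype=float)
    cell_types = np.asarray(cell_types)
    types, counts = np.unique(cell_types, return_counts=True)
    features = {f"prop_{t}": c / len(cell_types) for t, c in zip(types, counts)}

    tumor = coords[cell_types == "tumor"]
    lymph = coords[cell_types == "lymphocyte"]
    if len(tumor) and len(lymph):
        # All pairwise tumor-lymphocyte distances via broadcasting: (n_t, n_l).
        d = np.linalg.norm(tumor[:, None, :] - lymph[None, :, :], axis=-1)
        features["mean_nn_dist_tumor_to_lymph"] = float(d.min(axis=1).mean())
    return features


coords = [[0, 0], [0, 10], [5, 0], [30, 30]]
types = ["tumor", "tumor", "lymphocyte", "stroma"]
print(tme_features(coords, types))
```

The pairwise-distance matrix is fine for small examples; for slides with hundreds of thousands of cells, a spatial index such as `scipy.spatial.cKDTree` would be the more practical choice for nearest-neighbor queries.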

4.3. Lung Cancer Prognosis

Predicting tumor recurrence and survival for lung cancer patients is important for treatment planning. Because of the high heterogeneity and complexity of lung cancer pathology images, predicting patient outcomes directly from lung cancer WSIs is still very challenging and will require large amounts of data for model development. As a result, most current studies of lung cancer prognosis using pathology images develop outcome prediction models based on image features extracted through deep-learning-aided classification or segmentation. Wang et al. used a CNN model to segment nucleus boundaries in H&E images by classifying each pixel as nucleus centroid, nucleus boundary, or non-nucleus [83]. Nuclear morphological and textural features were then extracted and used as predictors in a recurrence prediction model, which was validated in two independent datasets; these features were reported as independent prognostic factors for early-stage NSCLC recurrence after adjusting for sex and tumor stage [83]. The spatial distribution of different cell types in the TME has also been reported as a prognostic factor in lung cancer. As summarized in Section 4.2, Saltz et al. [39] and Wang et al. [79] developed CNN models to classify H&E image patches as lymphocytes and other cell types in pan-cancer and lung ADC patients, respectively, and the distribution patterns of different cells were reported to be prognostic for both overall survival and recurrence [39,79]. In addition to nuclear- and cellular-level features, Wang et al. applied CNNs to detect tumor regions across whole-slide images and extracted tumor boundary and shape features, which were reported as prognostic for overall survival in lung ADC [69].
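After segmentation, per-nucleus features are computed from each nucleus mask. The sketch below derives a few common morphological and intensity features (area, boundary-pixel perimeter, eccentricity from second moments, mean intensity) from a binary mask. These definitions are simple illustrative choices and not necessarily the features used in the cited study; the eccentricity assumes the mask contains more than one pixel.

```python
import numpy as np


def nucleus_features(mask, intensity):
    """Morphological and intensity features for one segmented nucleus.

    mask:      2D boolean array, True inside the nucleus (more than one pixel)
    intensity: 2D grayscale image with the same shape
    """
    mask = np.asarray(mask, dtype=bool)
    area = int(mask.sum())
    # Boundary pixels: inside pixels with at least one 4-neighbor outside.
    padded = np.pad(mask, 1, constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    perimeter = int((mask & ~interior).sum())
    # Eccentricity from the covariance of pixel coordinates: for an ellipse the
    # eigenvalue ratio equals (minor axis / major axis) squared.
    ys, xs = np.nonzero(mask)
    cov = np.cov(np.stack([ys, xs]).astype(float))
    minor, major = np.sort(np.linalg.eigvalsh(cov))
    eccentricity = float(np.sqrt(1.0 - minor / major))
    return {"area": area, "perimeter": perimeter,
            "eccentricity": eccentricity,
            "mean_intensity": float(intensity[mask].mean())}


yy, xx = np.mgrid[:41, :41]
disk = (yy - 20) ** 2 + (xx - 20) ** 2 <= 100                   # round nucleus
ellipse = (yy - 20) ** 2 / 100 + (xx - 20) ** 2 / 16 <= 1       # elongated nucleus
gray = np.ones((41, 41))
f_disk = nucleus_features(disk, gray)
f_ell = nucleus_features(ellipse, gray)
print(f_disk["eccentricity"], f_ell["eccentricity"])
```

Patient-level predictors are then typically summary statistics (e.g., means or variances) of such per-nucleus features across all nuclei in the tumor region.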

5. Future Directions

5.1. Comprehensive Lung Cancer Diagnosis and Prognosis through Multi-Task Learning

As discussed in Section 2, although lung cancer histopathological subtypes and genetic alterations have been rigorously identified and investigated, there is a lack of objective, comprehensive deep learning models that can assist pathologists in lung cancer diagnosis and prognosis. Moreover, current studies tend to develop separate models for distinct but related tasks, such as tumor detection and ADC/SCC classification. Training these models independently duplicates effort, because the tasks and datasets share much of the same image information. Thus, a comprehensive deep learning model that tackles a series of lung cancer diagnosis and prognosis tasks jointly would be of great value.
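A common way to realize such a model is hard parameter sharing: one shared feature extractor feeds several task-specific heads, and training minimizes a weighted sum of the task losses. The NumPy sketch below shows only the forward pass and joint loss for two hypothetical tasks (tumor detection and ADC/SCC classification), with a label mask so that patches lacking a subtype label do not contribute to the subtype loss. Layer sizes, names, and equal task weights are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce(p, y):
    """Per-sample binary cross-entropy."""
    p = np.clip(p, 1e-12, 1 - 1e-12)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))


def multitask_loss(x, params, y_tumor, y_subtype, subtype_mask, weights=(1.0, 1.0)):
    """Joint loss for two tasks that share one feature extractor.

    x:            (n, d) input patch features
    y_tumor:      (n,) 1 = tumor, 0 = non-malignant
    y_subtype:    (n,) 1 = SCC, 0 = ADC (ignored where subtype_mask is 0)
    subtype_mask: (n,) 1 where a subtype label exists (e.g. tumor patches only)
    """
    shared = np.maximum(x @ params["W_shared"], 0.0)  # shared representation
    p_tumor = sigmoid(shared @ params["w_tumor"])      # head for task 1
    p_sub = sigmoid(shared @ params["w_subtype"])      # head for task 2
    loss_tumor = bce(p_tumor, y_tumor).mean()
    # Average the subtype loss only over samples that carry a subtype label.
    loss_sub = (bce(p_sub, y_subtype) * subtype_mask).sum() / max(subtype_mask.sum(), 1)
    total = weights[0] * loss_tumor + weights[1] * loss_sub
    return total, loss_tumor, loss_sub


rng = np.random.default_rng(0)
n, d, h = 6, 5, 4
params = {"W_shared": rng.normal(size=(d, h)),
          "w_tumor": rng.normal(size=h),
          "w_subtype": rng.normal(size=h)}
x = rng.normal(size=(n, d))
y_tumor = np.array([1, 1, 1, 0, 0, 0])
y_subtype = np.array([0, 1, 0, 0, 0, 0])
mask = y_tumor.astype(float)  # subtype labels only exist for tumor patches
total, l_tumor, l_sub = multitask_loss(x, params, y_tumor, y_subtype, mask)
print(total, l_tumor, l_sub)
```

Because gradients from both losses update `W_shared`, image information learned for one task is reused by the other, which is the redundancy the text argues separate models waste.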

5.2. Interpreting Deep Learning Models and Mining Knowledge from Trained Neural Networks

Although neural networks excel in many complex tasks, they are often regarded as “black boxes”. It is challenging to interpret a well-trained model, which usually has millions of parameters, and to retrieve biological information or gain meaningful insights from it. Because neural networks have proven powerful in problem solving, efforts should be made to derive biological and physiological insights from successful models.
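Occlusion sensitivity is one simple, model-agnostic way to ask which regions of an image patch a trained network relies on: mask part of the input and measure how much the predicted score drops. In the sketch below, `score_fn` stands in for a trained CNN's class probability. The toy scoring function, patch size, stride, and fill value are assumptions chosen only so the behavior is easy to check.

```python
import numpy as np


def occlusion_map(image, score_fn, patch=8, stride=8, fill=0.0):
    """Slide a constant-valued square over the image and record the score drop.

    Larger values mark regions whose removal hurts the prediction most.
    """
    h, w = image.shape[:2]
    base = score_fn(image)
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    heat = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            occluded = image.copy()
            y, x = i * stride, j * stride
            occluded[y:y + patch, x:x + patch] = fill  # covers all color channels
            heat[i, j] = base - score_fn(occluded)
    return heat


def toy_score(img):
    """Hypothetical 'model' whose output depends only on one 8x8 region."""
    return img[16:24, 16:24].mean()


image = np.ones((32, 32, 3))  # hypothetical 32x32 RGB patch
heat = occlusion_map(image, toy_score, patch=8, stride=8)
print(heat)
```

Here only the block covering rows and columns 16 to 23 changes the score, so the heat map is zero everywhere except at position (2, 2). With a real CNN, such maps can be overlaid on the H&E patch for a pathologist to review.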

5.3. Integrating Knowledge Accumulated from Clinical and Biological Studies into Deep Learning Methods

Another important direction is to incorporate well-accepted knowledge into deep learning models. For example, when gene expression data are used to predict clinical outcomes for lung cancer patients, incorporating current knowledge of biological pathways and gene regulatory networks into prediction models will likely improve model performance and interpretability. Currently, neural networks are typically trained only on input data and outcomes. Although the concept of “transfer learning”, which refers to borrowing knowledge learned from one dataset for use on another [65], has been proposed and widely implemented, it is not straightforward to incorporate prior knowledge into deep learning models. How to incorporate such knowledge into a neural network is a challenging yet active subject in pathology image analysis, as well as in the broader AI field.
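One way to build pathway knowledge into a network, assuming a curated table that assigns genes to pathways, is to mask a layer's weights so that each hidden unit receives input only from the genes of one pathway. Each hidden unit then corresponds to a named pathway, which also aids interpretation. The sketch below is illustrative only: the gene and pathway names are placeholders, not real annotations, and the layer is shown without training.

```python
import numpy as np


def membership_mask(genes, pathways):
    """Binary (n_genes, n_pathways) matrix: 1 where a gene belongs to a pathway."""
    index = {g: k for k, g in enumerate(genes)}
    mask = np.zeros((len(genes), len(pathways)))
    for j, members in enumerate(pathways.values()):
        for g in members:
            mask[index[g], j] = 1.0
    return mask


class PathwayLayer:
    """Dense layer whose connections are restricted by a prior-knowledge mask."""

    def __init__(self, mask, rng):
        self.mask = mask
        self.W = rng.normal(0.0, 0.1, mask.shape) * mask

    def forward(self, x):
        # Re-applying the mask keeps non-member connections at zero even if
        # a training update has changed those entries of W.
        return np.tanh(x @ (self.W * self.mask))


genes = ["gene_1", "gene_2", "gene_3", "gene_4", "gene_5"]  # placeholder names
pathways = {
    "pathway_A": ["gene_1", "gene_2"],
    "pathway_B": ["gene_3", "gene_4", "gene_5"],
}
layer = PathwayLayer(membership_mask(genes, pathways), np.random.default_rng(0))
expr = np.array([[1.0, 2.0, 0.0, 0.0, 0.0]])  # one hypothetical expression profile
print(layer.forward(expr))  # one activation per pathway
```

Because `pathway_A`'s unit is disconnected from genes 3 to 5, changing their expression leaves that unit's activation unchanged.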

5.4. Utilizing and Integrating Multiple Medical Imaging Methods

Since human eyes sense color mostly in three bands (red, green, and blue), traditional cameras used to capture pathology images have separate sensors for red, green, and blue light. However, other wavelength bands may contain additional valuable information. Imaging at multiple bands (typically 3–10 bands, known as “multispectral imaging”) or at narrower bands (10–20 nm wide, known as “hyperspectral imaging”) has therefore been used in pathology image analysis and reported to improve algorithm performance in image recognition [85,86]. It is anticipated that deep learning will be able to fully utilize such spectral information and perform well in multispectral image recognition.
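When a CNN pretrained on RGB images is adapted to multispectral input, only the first convolution needs to change, because it is the only layer whose weight shape depends on the number of input channels. One heuristic, assumed here rather than taken from the cited studies, initializes every new band with the average of the pretrained RGB filters, rescaled so that a uniform input produces the same response as before. The sketch assumes a channels-first weight layout `(out_channels, in_channels, k, k)`. The function name and the 8-band camera are hypothetical.

```python
import numpy as np


def inflate_first_layer(w_rgb: np.ndarray, n_bands: int) -> np.ndarray:
    """Adapt first-layer conv weights from (out, 3, k, k) to (out, n_bands, k, k).

    Every band gets the mean of the RGB filters scaled by 3 / n_bands, so the
    sum over input channels (and hence the response to a uniform image) is
    unchanged.
    """
    if w_rgb.ndim != 4 or w_rgb.shape[1] != 3:
        raise ValueError("expected weights of shape (out, 3, k, k)")
    mean_filter = w_rgb.mean(axis=1, keepdims=True)  # (out, 1, k, k)
    return np.repeat(mean_filter, n_bands, axis=1) * (3.0 / n_bands)


rng = np.random.default_rng(0)
w_rgb = rng.normal(size=(64, 3, 7, 7))  # stand-in for pretrained first-layer filters
w_ms = inflate_first_layer(w_rgb, n_bands=8)
print(w_ms.shape)  # (64, 8, 7, 7)
```

All deeper layers can keep their pretrained weights, and fine-tuning then lets the first layer learn band-specific filters.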

Another important direction is the integration of pathology image analysis and radiomics. Routine radiology imaging modalities, such as computed tomography (CT), positron emission tomography (PET), and magnetic resonance imaging (MRI), are crucial for cancer screening and monitoring. Rooted in radiology, radiomics focuses on extracting quantitative features by deep mining of clinical images [87,88,89]. Compared with pathology, cancer imaging in radiology is much less invasive and often captures organ- or system-level physiological and pathological features (e.g., hemodynamics based on functional MRI [90] and glucose uptake based on PET [91]). Therefore, pathological and radiological images provide complementary information for the characterization of a tumor. In addition, radiomics also benefits from the availability of high-temporal-resolution data (e.g., dynamic-contrast-enhanced CT and MRI [92]) and long-term follow-up data. AI techniques have led to promising applications in radiology, from signal processing to clinical decision assistance [93,94,95,96]. Integration of AI-powered pathology image analysis and radiomics can leverage the most significant clinical, pathological, and molecular features to ultimately improve diagnosis and prognosis [97,98]. It is noteworthy that feature extraction and dimensionality reduction are important in analyzing radiomics and genomics [99,100,101,102,103,104]. A comprehensive framework for managing and harmonizing image data and features generated from different modalities, protocols, instruments, and analysis pipelines is also anticipated to play an important role in validating and applying this integrated analytics approach [98].
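A minimal sketch of early (feature-level) fusion follows. It assumes that pathology-image and radiomic features have already been extracted for the same patients and stored as matrices whose rows are aligned by patient. Each modality is standardized and reduced with principal component analysis before concatenation, so the modality with more raw features does not dominate. PCA is only one of many possible dimensionality-reduction choices, and the feature counts and random data are placeholders.

```python
import numpy as np


def pca_reduce(X, k):
    """Standardize columns of X, then return scores on the top-k principal components."""
    Xs = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-8)
    # Rows of Vt are principal axes, ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(Xs, full_matrices=False)
    return Xs @ Vt[:k].T


def fuse_modalities(pathology, radiomics, k_path=5, k_rad=5):
    """Concatenate per-modality PCA scores into one feature matrix per patient."""
    if pathology.shape[0] != radiomics.shape[0]:
        raise ValueError("both matrices need one row per patient, in the same order")
    return np.hstack([pca_reduce(pathology, k_path), pca_reduce(radiomics, k_rad)])


rng = np.random.default_rng(0)
pathology_feats = rng.normal(size=(50, 200))  # 50 patients x 200 image features
radiomic_feats = rng.normal(size=(50, 100))   # 50 patients x 100 radiomic features
fused = fuse_modalities(pathology_feats, radiomic_feats)
print(fused.shape)  # (50, 10)
```

The fused matrix can then feed any downstream classifier or survival model. In practice, the PCA should be fitted on training patients only, to avoid information leakage.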

6. Conclusions

Compared with shallow learning methods, deep learning has multiple advantages in analyzing pathology images, including simplified feature definition, power in recognizing complex objects, time savings through parallel computation, and suitability for transfer learning. As expected, the number of studies applying deep learning methods to lung cancer pathology image analysis has increased rapidly. Results for relatively simple tasks, such as tumor detection and histological subtype classification, are generally satisfactory, with AUCs around 0.9, whereas results for more challenging tasks, including mutation and transcription status prediction, are less satisfactory, with AUCs ranging from 0.6 to 0.8. Larger datasets, appropriate neural network architectures, and modern imaging methods are anticipated to help improve performance. Overall, combining pathological imaging with deep learning methods could have a great impact on lung cancer patient care.
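To make the reported figures concrete: the AUC equals the probability that a randomly chosen positive case (for example, a tumor slide) receives a higher model score than a randomly chosen negative case. A value of 0.5 therefore corresponds to chance and 1.0 to perfect ranking. The short sketch below computes the AUC directly from that definition, which is the normalized Mann–Whitney U statistic. The function name and toy scores are illustrative, and libraries such as scikit-learn provide optimized implementations.

```python
def roc_auc(labels, scores):
    """AUC as P(score of random positive > score of random negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical slide-level labels (1 = tumor) and model scores
print(roc_auc([1, 1, 0, 0], [0.9, 0.2, 0.3, 0.1]))  # 0.75
```

The pairwise loop is quadratic in the number of cases. That is fine for illustration, but rank-based formulas scale better to large cohorts.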

Acknowledgments

The authors thank Jessie Norris for helping us to edit this manuscript.

Funding

This work was partially supported by the National Institutes of Health [5R01CA152301, P50CA70907, 1R01GM115473, and 1R01CA172211], and the Cancer Prevention and Research Institute of Texas [RP190107 and RP180805].

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Dela Cruz C.S., Tanoue L.T., Matthay R.A. Lung cancer: Epidemiology, etiology, and prevention. Clin. Chest Med. 2011;32:605–644. doi: 10.1016/j.ccm.2011.09.001.

- 2. de Groot P.M., Wu C.C., Carter B.W., Munden R.F. The epidemiology of lung cancer. Transl. Lung Cancer Res. 2018;7:220–233. doi: 10.21037/tlcr.2018.05.06.

- 3. Barta J.A., Powell C.A., Wisnivesky J.P. Global Epidemiology of Lung Cancer. Ann. Glob. Health. 2019;85:e8. doi: 10.5334/aogh.2419.

- 4. van den Bent M.J. Interobserver variation of the histopathological diagnosis in clinical trials on glioma: A clinician’s perspective. Acta Neuropathol. 2010;120:297–304. doi: 10.1007/s00401-010-0725-7.

- 5. Cooper L.A., Kong J., Gutman D.A., Dunn W.D., Nalisnik M., Brat D.J. Novel genotype-phenotype associations in human cancers enabled by advanced molecular platforms and computational analysis of whole slide images. Lab. Investig. A J. Tech. Methods Pathol. 2015;95:366–376. doi: 10.1038/labinvest.2014.153.

- 6. Alturkistani H.A., Tashkandi F.M., Mohammedsaleh Z.M. Histological Stains: A Literature Review and Case Study. Glob. J. Health Sci. 2015;8:72–79. doi: 10.5539/gjhs.v8n3p72.

- 7. Jara-Lazaro A.R., Thamboo T.P., Teh M., Tan P.H. Digital pathology: Exploring its applications in diagnostic surgical pathology practice. Pathology. 2010;42:512–518. doi: 10.3109/00313025.2010.508787.

- 8. Webster J.D., Dunstan R.W. Whole-Slide Imaging and Automated Image Analysis: Considerations and Opportunities in the Practice of Pathology. Vet. Pathol. 2014;51:211–223. doi: 10.1177/0300985813503570.

- 9. Beck A.H., Sangoi A.R., Leung S., Marinelli R.J., Nielsen T.O., van de Vijver M.J., West R.B., van de Rijn M., Koller D. Systematic analysis of breast cancer morphology uncovers stromal features associated with survival. Sci. Transl. Med. 2011;3:108ra113. doi: 10.1126/scitranslmed.3002564.

- 10. Yuan Y., Failmezger H., Rueda O.M., Ali H.R., Graf S., Chin S.F., Schwarz R.F., Curtis C., Dunning M.J., Bardwell H., et al. Quantitative image analysis of cellular heterogeneity in breast tumors complements genomic profiling. Sci. Transl. Med. 2012;4:157ra143. doi: 10.1126/scitranslmed.3004330.

- 11. Williams B.J., Hanby A., Millican-Slater R., Nijhawan A., Verghese E., Treanor D. Digital pathology for the primary diagnosis of breast histopathological specimens: An innovative validation and concordance study on digital pathology validation and training. Histopathology. 2018;72:662–671. doi: 10.1111/his.13403.

- 12. Bauer T.W., Slaw R.J., McKenney J.K., Patil D.T. Validation of whole slide imaging for frozen section diagnosis in surgical pathology. J. Pathol. Inform. 2015;6:e49. doi: 10.4103/2153-3539.163988.

- 13. Snead D.R., Tsang Y.W., Meskiri A., Kimani P.K., Crossman R., Rajpoot N.M., Blessing E., Chen K., Gopalakrishnan K., Matthews P., et al. Validation of digital pathology imaging for primary histopathological diagnosis. Histopathology. 2016;68:1063–1072. doi: 10.1111/his.12879.

- 14. Buck T.P., Dilorio R., Havrilla L., O’Neill D.G. Validation of a whole slide imaging system for primary diagnosis in surgical pathology: A community hospital experience. J. Pathol. Inform. 2014;5:e43. doi: 10.4103/2153-3539.145731.

- 15. Ordi J., Castillo P., Saco A., Del Pino M., Ordi O., Rodriguez-Carunchio L., Ramirez J. Validation of whole slide imaging in the primary diagnosis of gynaecological pathology in a University Hospital. J. Clin. Pathol. 2015;68:33–39. doi: 10.1136/jclinpath-2014-202524.

- 16. Paul A., Mukherjee D.P. Mitosis Detection for Invasive Breast Cancer Grading in Histopathological Images. IEEE Trans. Image Process. A Publ. IEEE Signal. Process. Soc. 2015;24:4041–4054. doi: 10.1109/TIP.2015.2460455.

- 17. Rathore S., Hussain M., Aksam Iftikhar M., Jalil A. Novel structural descriptors for automated colon cancer detection and grading. Comput. Methods Programs Biomed. 2015;121:92–108. doi: 10.1016/j.cmpb.2015.05.008.

- 18. Nguyen K., Sarkar A., Jain A.K. Prostate cancer grading: Use of graph cut and spatial arrangement of nuclei. IEEE Trans. Med. Imaging. 2014;33:2254–2270. doi: 10.1109/TMI.2014.2336883.

- 19. Waliszewski P., Wagenlehner F., Gattenlohner S., Weidner W. Fractal geometry in the objective grading of prostate carcinoma. Der Urol. Ausg. A. 2014;53:1186–1194. doi: 10.1007/s00120-014-3472-x.

- 20. Atupelage C., Nagahashi H., Yamaguchi M., Abe T., Hashiguchi A., Sakamoto M. Computational grading of hepatocellular carcinoma using multifractal feature description. Comput. Med. Imaging Graph. 2013;37:61–71. doi: 10.1016/j.compmedimag.2012.10.001.

- 21. Luo X., Zang X., Yang L., Huang J., Liang F., Rodriguez-Canales J., Wistuba I.I., Gazdar A., Xie Y., Xiao G. Comprehensive Computational Pathological Image Analysis Predicts Lung Cancer Prognosis. J. Thorac. Oncol. 2017;12:501–509. doi: 10.1016/j.jtho.2016.10.017.

- 22. Yu K.H., Zhang C., Berry G.J., Altman R.B., Re C., Rubin D.L., Snyder M. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016;7:e12474. doi: 10.1038/ncomms12474.

- 23. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 24. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 25. Goodfellow I., Bengio Y., Courville A. Deep Learning. MIT Press; Cambridge, MA, USA: 2016.

- 26. Goldstraw P., Chansky K., Crowley J., Rami-Porta R., Asamura H., Eberhardt W.E., Nicholson A.G., Groome P., Mitchell A., Bolejack V., et al. The IASLC Lung Cancer Staging Project: Proposals for Revision of the TNM Stage Groupings in the Forthcoming (Eighth) Edition of the TNM Classification for Lung Cancer. J. Thorac. Oncol. 2016;11:39–51. doi: 10.1016/j.jtho.2015.09.009.

- 27. Song S.H., Park H., Lee G., Lee H.Y., Sohn I., Kim H.S., Lee S.H., Jeong J.Y., Kim J., Lee K.S. Imaging phenotyping using radiomics to predict micropapillary pattern within lung adenocarcinoma. J. Thorac. Oncol. 2017;12:624–632. doi: 10.1016/j.jtho.2016.11.2230.

- 28. Travis W.D., Brambilla E., Noguchi M., Nicholson A.G., Geisinger K.R., Yatabe Y., Beer D.G., Powell C.A., Riely G.J., Van Schil P.E., et al. International Association for the Study of Lung Cancer/American Thoracic Society/European Respiratory Society International Multidisciplinary Classification of Lung Adenocarcinoma. J. Thorac. Oncol. 2011;6:244–285. doi: 10.1097/JTO.0b013e318206a221.

- 29. Gu J., Lu C., Guo J., Chen L., Chu Y., Ji Y., Ge D. Prognostic significance of the IASLC/ATS/ERS classification in Chinese patients-A single institution retrospective study of 292 lung adenocarcinoma. J. Surg. Oncol. 2013;107:474–480. doi: 10.1002/jso.23259.

- 30. Hung J.J., Yeh Y.C., Jeng W.J., Wu K.J., Huang B.S., Wu Y.C., Chou T.Y., Hsu W.H. Predictive value of the international association for the study of lung cancer/American Thoracic Society/European Respiratory Society classification of lung adenocarcinoma in tumor recurrence and patient survival. J. Clin. Oncol. 2014;32:2357–2364. doi: 10.1200/JCO.2013.50.1049.

- 31. Tsao M.S., Marguet S., Le Teuff G., Lantuejoul S., Shepherd F.A., Seymour L., Kratzke R., Graziano S.L., Popper H.H., Rosell R., et al. Subtype Classification of Lung Adenocarcinoma Predicts Benefit From Adjuvant Chemotherapy in Patients Undergoing Complete Resection. J. Clin. Oncol. 2015;33:3439–3446. doi: 10.1200/JCO.2014.58.8335.

- 32. Ichinose Y., Yano T., Asoh H., Yokoyama H., Yoshino I., Katsuda Y. Prognostic factors obtained by a pathologic examination in completely resected non-small-cell lung cancer: An analysis in each pathologic stage. J. Thorac. Cardiovasc. Surg. 1995;110:601–605. doi: 10.1016/S0022-5223(95)70090-0.

- 33. Kadota K., Nitadori J., Sima C.S., Ujiie H., Rizk N.P., Jones D.R., Adusumilli P.S., Travis W.D. Tumor Spread through Air Spaces is an Important Pattern of Invasion and Impacts the Frequency and Location of Recurrences after Limited Resection for Small Stage I Lung Adenocarcinomas. J. Thorac. Oncol. 2015;10:806–814. doi: 10.1097/JTO.0000000000000486.

- 34. Travis W.D., Brambilla E., Nicholson A.G., Yatabe Y., Austin J.H.M., Beasley M.B., Chirieac L.R., Dacic S., Duhig E., Flieder D.B., et al. The 2015 World Health Organization Classification of Lung Tumors: Impact of Genetic, Clinical and Radiologic Advances Since the 2004 Classification. J. Thorac. Oncol. 2015;10:1243–1260. doi: 10.1097/JTO.0000000000000630.

- 35. Gooden M.J., de Bock G.H., Leffers N., Daemen T., Nijman H.W. The prognostic influence of tumour-infiltrating lymphocytes in cancer: A systematic review with meta-analysis. Br. J. Cancer. 2011;105:93–103. doi: 10.1038/bjc.2011.189.

- 36. Han H., Silverman J.F., Santucci T.S., Macherey R.S., dAmato T.A., Tung M.Y., Weyant R.J., Landreneau R.J. Vascular endothelial growth factor expression in stage I non-small cell lung cancer correlates with neoangiogenesis and a poor prognosis. Ann. Surg. Oncol. 2001;8:72–79. doi: 10.1007/s10434-001-0072-y.

- 37. Bremnes R.M., Donnem T., Al-Saad S., Al-Shibli K., Andersen S., Sirera R., Camps C., Marinez I., Busund L.T. The role of tumor stroma in cancer progression and prognosis: Emphasis on carcinoma-associated fibroblasts and non-small cell lung cancer. J. Thorac. Oncol. 2011;6:209–217. doi: 10.1097/JTO.0b013e3181f8a1bd.

- 38. Ichikawa T., Aokage K., Sugano M., Miyoshi T., Kojima M., Fujii S., Kuwata T., Ochiai A., Suzuki K., Tsuboi M., et al. The ratio of cancer cells to stroma within the invasive area is a histologic prognostic parameter of lung adenocarcinoma. Lung Cancer. 2018;118:30–35. doi: 10.1016/j.lungcan.2018.01.023.

- 39. Saltz J., Gupta R., Hou L., Kurc T., Singh P., Nguyen V., Samaras D., Shroyer K.R., Zhao T.H., Batiste R., et al. Spatial Organization and Molecular Correlation of Tumor-Infiltrating Lymphocytes Using Deep Learning on Pathology Images. Cell Rep. 2018;23:181–193.e7. doi: 10.1016/j.celrep.2018.03.086.

- 40. Garon E.B., Rizvi N.A., Hui R.N., Leighl N., Balmanoukian A.S., Eder J.P., Patnaik A., Aggarwal C., Gubens M., Horn L., et al. Pembrolizumab for the Treatment of Non-Small-Cell Lung Cancer. N. Engl. J. Med. 2015;372:2018–2028. doi: 10.1056/NEJMoa1501824.

- 41. Schumacher T.N., Schreiber R.D. Neoantigens in cancer immunotherapy. Science. 2015;348:69–74. doi: 10.1126/science.aaa4971.

- 42. Ninomiya H., Hiramatsu M., Inamuraa K., Nomura K., Okui M., Miyoshi T., Okumura S., Satoh Y., Nakagawa K., Nishio M., et al. Correlation between morphology and EGFR mutations in lung adenocarcinomas: Significance of the micropapillary pattern and the hobnail cell type. Lung Cancer. 2009;63:235–240. doi: 10.1016/j.lungcan.2008.04.017.

- 43. Tam I.Y.S., Chung L.P., Suen W.S., Wang E., Wong M.C.M., Ho K.K., Lam W.K., Chiu S.W., Girard L., Minna J.D., et al. Distinct epidermal growth factor receptor and KRAS mutation patterns in non-small cell lung cancer patients with different tobacco exposure and clinicopathologic features. Clin. Cancer Res. 2006;12:1647–1653. doi: 10.1158/1078-0432.CCR-05-1981.

- 44. Detecting Cancer Metastases on Gigapixel Pathology Images. Available online: https://arxiv.org/abs/1703.02442 (accessed on 23 September 2019).

- 45. Deep Learning for Identifying Metastatic Breast Cancer. Available online: https://arxiv.org/abs/1606.05718 (accessed on 23 September 2019).

- 46. Tabibu S., Vinod P.K., Jawahar C.V. Pan-Renal Cell Carcinoma classification and survival prediction from histopathology images using deep learning. Sci. Rep. 2019;9:e10509. doi: 10.1038/s41598-019-46718-3.

- 47. Arvaniti E., Fricker K.S., Moret M., Rupp N., Hermanns T., Fankhauser C., Wey N., Wild P.J., Ruschoff J.H., Claassen M. Automated Gleason grading of prostate cancer tissue microarrays via deep learning. Sci. Rep. 2018;8:e12054. doi: 10.1038/s41598-018-30535-1.

- 48. Folmsbee J., Liu X.L., Brandwein-Weber M., Doyle S. Active Deep Learning: Improved Training Efficiency of Convolutional Neural Networks for Tissue Classification in Oral Cavity Cancer; Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018); Washington, DC, USA. 4–7 April 2018; pp. 770–773.

- 49. Wang S.D., Yang D.H., Rang R.C., Zhan X.W., Xiao G.H. Pathology Image Analysis Using Segmentation Deep Learning Algorithms. Am. J. Pathol. 2019;189:1686–1698. doi: 10.1016/j.ajpath.2019.05.007.

- 50. Shelhamer E., Long J., Darrell T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:640–651. doi: 10.1109/TPAMI.2016.2572683.

- 51. He K.M., Gkioxari G., Dollar P., Girshick R. Mask R-CNN. IEEE Int. Conf. Comp. Vis. 2017:2961–2969. doi: 10.1109/TPAMI.2018.2844175.

- 52. Wang J., Yang Y., Mao J.H., Huang Z.H., Huang C., Xu W. CNN-RNN: A Unified Framework for Multi-label Image Classification; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 26 June–1 July 2016; pp. 2285–2294.

- 53. Visin F., Romero A., Cho K., Matteucci M., Ciccone M., Kastner K., Bengio Y., Courville A. ReSeg: A Recurrent Neural Network-based Model for Semantic Segmentation; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); Las Vegas, NV, USA. 26 June–1 July 2016; pp. 426–433.

- 54. Su H., Xing F.Y., Kong X.F., Xie Y.P., Zhang S.T., Yang L. Robust Cell Detection and Segmentation in Histopathological Images Using Sparse Reconstruction and Stacked Denoising Autoencoders. Adv. Comput. Vis. Patt. 2017:257–278. doi: 10.1007/978-3-319-42999-1_15.

- 55. Cheng J., Mo X., Wang X., Parwani A., Feng Q., Huang K. Identification of topological features in renal tumor microenvironment associated with patient survival. Bioinformatics. 2018;34:1024–1030. doi: 10.1093/bioinformatics/btx723.

- 56. Huang F.C., Huang S.Y., Ker J.W., Chen Y.C. High-Performance SIFT Hardware Accelerator for Real-Time Image Feature Extraction. IEEE Trans. Circ. Syst. Vid. Technol. 2012;22:340–351. doi: 10.1109/TCSVT.2011.2162760.

- 57. Dessauer M.P., Hitchens J., Dua S. GPU-enabled High Performance Feature Modeling for ATR Applications; Proceedings of the IEEE 2010 National Aerospace & Electronics Conference; Fairborn, OH, USA. 14–16 July 2010; pp. 92–98.

- 58. Cai W.L., Chen S.C., Zhang D.Q. Fast and robust fuzzy c-means clustering algorithms incorporating local information for image segmentation. Pattern Recogn. 2007;40:825–838. doi: 10.1016/j.patcog.2006.07.011.

- 59. Alsubaie N., Trahearn N., Raza S.E.A., Snead D., Rajpoot N.M. Stain Deconvolution Using Statistical Analysis of Multi-Resolution Stain Colour Representation. PLoS ONE. 2017;12:e0169875. doi: 10.1371/journal.pone.0169875.

- 60. Very Deep Convolutional Networks for Large-Scale Image Recognition. Available online: https://arxiv.org/abs/1409.1556 (accessed on 23 September 2019).

- 61. Pham B., Gaonkar B., Whitehead W., Moran S., Dai Q., Macyszyn L., Edgerton V.R. Cell Counting and Segmentation of Immunohistochemical Images in the Spinal Cord: Comparing Deep Learning and Traditional Approaches. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2018;2018:842–845. doi: 10.1109/EMBC.2018.8512442.

- 62. Mobadersany P., Yousefi S., Amgad M., Gutman D.A., Barnholtz-Sloan J.S., Vega J.E.V., Brat D.J., Cooper L.A.D. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc. Natl. Acad. Sci. USA. 2018;115:2970–2979. doi: 10.1073/pnas.1717139115.

- 63. Sundermeyer M., Schluter R., Ney H. LSTM Neural Networks for Language Modeling; Proceedings of the 13th Annual Conference of the International Speech Communication Association 2012 (Interspeech 2012); Portland, OR, USA. 9–13 September 2012; pp. 194–197.

- 64. Zhang Z.Z., Xie Y.P., Xing F.Y., McGough M., Yang L. MDNet: A Semantically and Visually Interpretable Medical Image Diagnosis Network; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017); Honolulu, HI, USA. 21–26 July 2017; pp. 3549–3557.

- 65. Pan S.J., Yang Q.A. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010;22:1345–1359. doi: 10.1109/TKDE.2009.191.

- 66. Xiao G., Ma S., Minna J., Xie Y. Adaptive prediction model in prospective molecular signature-based clinical studies. Clin. Cancer Res. 2014;20:531–539. doi: 10.1158/1078-0432.CCR-13-2127.

- 67. Tomczak K., Czerwinska P., Wiznerowicz M. The Cancer Genome Atlas (TCGA): An immeasurable source of knowledge. Contemp. Oncol. (Pozn.) 2015;19:68–77. doi: 10.5114/wo.2014.47136.

- 68. Shin H.C., Roth H.R., Gao M.C., Lu L., Xu Z.Y., Nogues I., Yao J.H., Mollura D., Summers R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging. 2016;35:1285–1298. doi: 10.1109/TMI.2016.2528162.

- 69. Wang S.D., Chen A., Yang L., Cai L., Xie Y., Fujimoto J., Gazdar A., Xiao G.H. Comprehensive analysis of lung cancer pathology images to discover tumor shape and boundary features that predict survival outcome. Sci. Rep. 2018;8:e10393. doi: 10.1038/s41598-018-27707-4.

- 70. Computer-Aided Diagnosis of Lung Carcinoma Using Deep Learning-a Pilot Study. Available online: https://arxiv.org/abs/1803.05471 (accessed on 23 September 2019).

- 71. Pan-Cancer Classifications of Tumor Histological Images Using Deep Learning. Available online: https://www.biorxiv.org/content/10.1101/715656v1.abstract (accessed on 23 September 2019).

- 72. Yu K.-H., Wang F., Berry G.J., Re C., Altman R.B., Snyder M., Kohane I.S. Classifying Non-Small Cell Lung Cancer Histopathology Types and Transcriptomic Subtypes using Convolutional Neural Networks. bioRxiv. 2019; bioRxiv:530360. doi: 10.1093/jamia/ocz230.

- 73. Šarić M., Russo M., Stella M., Sikora M. CNN-based Method for Lung Cancer Detection in Whole Slide Histopathology Images; Proceedings of the 2019 4th International Conference on Smart and Sustainable Technologies (SpliTech); Split, Croatia. 18–21 June 2019; pp. 1–4.

- 74. Coudray N., Ocampo P.S., Sakellaropoulos T., Narula N., Snuderl M., Fenyo D., Moreira A.L., Razavian N., Tsirigos A. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat. Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5.

- 75. Wei J.W., Tafe L.J., Linnik Y.A., Vaickus L.J., Tomita N., Hassanpour S. Pathologist-level classification of histologic patterns on resected lung adenocarcinoma slides with deep neural networks. Sci. Rep. 2019;9:e3358. doi: 10.1038/s41598-019-40041-7.

- 76. Sha L., Osinski B.L., Ho I.Y., Tan T.L., Willis C., Weiss H., Beaubier N., Mahon B.M., Taxter T.J., Yip S.S.F. Multi-Field-of-View Deep Learning Model Predicts Nonsmall Cell Lung Cancer Programmed Death-Ligand 1 Status from Whole-Slide Hematoxylin and Eosin Images. J. Pathol. Inf. 2019;10:e24. doi: 10.4103/jpi.jpi_24_19.

- 77. Bilaloglu S., Wu J., Fierro E., Sanchez R.D., Ocampo P.S., Razavian N., Coudray N., Tsirigos A. Efficient pan-cancer whole-slide image classification and outlier detection using convolutional neural networks. bioRxiv. 2019; bioRxiv:633123.

- 78. Gertych A., Swiderska-Chadaj Z., Ma Z.X., Ing N., Markiewicz T., Cierniak S., Salemi H., Guzman S., Walts A.E., Knudsen B.S. Convolutional neural networks can accurately distinguish four histologic growth patterns of lung adenocarcinoma in digital slides. Sci. Rep. 2019;9:e1483. doi: 10.1038/s41598-018-37638-9.

- 79. Wang S., Wang T., Yang L., Yi F., Luo X., Yang Y., Gazdar A., Fujimoto J., Wistuba I.I., Yao B. ConvPath: A Software Tool for Lung Adenocarcinoma Digital Pathological Image Analysis Aided by Convolutional Neural Network. arXiv. 2018; arXiv:1809.10240. doi: 10.1016/j.ebiom.2019.10.033.

- 80. Yi F.L., Yang L., Wang S.D., Guo L., Huang C.L., Xie Y., Xiao G.H. Microvessel prediction in H&E Stained Pathology Images using fully convolutional neural networks. BMC Bioinform. 2018;19:e64. doi: 10.1186/s12859-018-2055-z.

- 81. Computational Staining of Pathology Images to Study Tumor Microenvironment in Lung Cancer. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3391381 (accessed on 23 September 2019).

- 82. Abousamra S., Hou L., Gupta R., Chen C., Samaras D., Kurc T., Batiste R., Zhao T., Kenneth S., Saltz J. Learning from Thresholds: Fully Automated Classification of Tumor Infiltrating Lymphocytes for Multiple Cancer Types. arXiv. 2019; arXiv:1907.03960.

- 83. Wang X.X., Janowczyk A., Zhou Y., Thawani R., Fu P.F., Schalper K., Velcheti V., Madabhushi A. Prediction of recurrence in early stage non-small cell lung cancer using computer extracted nuclear features from digital H&E images. Sci. Rep. 2017;7:e13543. doi: 10.1038/s41598-017-13773-7.

- 84. Qu H., Wu P., Huang Q., Yi J., Riedlinger G.M., De S., Metaxas D.N. Weakly Supervised Deep Nuclei Segmentation using Points Annotation in Histopathology Images; Proceedings of the International Conference on Medical Imaging with Deep Learning (MIDL 2019); London, UK. 8–10 July 2019; pp. 390–400.

- 85. Akbari H., Halig L.V., Zhang H.Z., Wang D.S., Chen Z.G., Fei B.W. Detection of Cancer Metastasis Using a Novel Macroscopic Hyperspectral Method. Proc. SPIE. 2012;8317:e831711. doi: 10.1117/12.912026.

- 86. Boucheron L.E., Bi Z.Q., Harvey N.R., Manjunath B.S., Rimm D.L. Utility of multispectral imaging for nuclear classification of routine clinical histopathology imagery. BMC Cell Biol. 2007;8(Suppl. 1):e8. doi: 10.1186/1471-2121-8-S1-S8.

- 87. Lambin P., Leijenaar R.T.H., Deist T.M., Peerlings J., de Jong E.E.C., van Timmeren J., Sanduleanu S., Larue R.T.H.M., Even A.J.G., Jochems A., et al. Radiomics: The bridge between medical imaging and personalized medicine. Nat. Rev. Clin. Oncol. 2017;14:749–762. doi: 10.1038/nrclinonc.2017.141.

- 88. Gillies R.J., Kinahan P.E., Hricak H. Radiomics: Images Are More than Pictures, They Are Data. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.

- 89. Rizzo S., Botta F., Raimondi S., Origgi D., Fanciullo C., Morganti A.G., Bellomi M. Radiomics: The facts and the challenges of image analysis. Eur. Radiol. Exp. 2018;2:e36. doi: 10.1186/s41747-018-0068-z.

- 90. Guimaraes M.D., Schuch A., Hochhegger B., Gross J.L., Chojniak R., Marchiori E. Functional magnetic resonance imaging in oncology: State of the art. Radiol. Bras. 2014;47:101–111. doi: 10.1590/S0100-39842014000200013.

- 91.Croteau E., Renaud J.M., Richard M.A., Ruddy T.D., Benard F., deKemp R.A. PET Metabolic Biomarkers for Cancer. Biomark. Cancer. 2016;8:61–69. doi: 10.4137/BIC.S27483. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.O’Connor J.P., Tofts P.S., Miles K.A., Parkes L.M., Thompson G., Jackson A. Dynamic contrast-enhanced imaging techniques: CT and MRI. Br. J. Radiol. 2011;84:112–120. doi: 10.1259/bjr/55166688. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Hosny A., Parmar C., Quackenbush J., Schwartz L.H., Aerts H. Artificial intelligence in radiology. Nat. Rev. Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Erickson B.J., Korfiatis P., Akkus Z., Kline T.L. Machine Learning for Medical Imaging(1) Radiographics. 2017;37:505–515. doi: 10.1148/rg.2017160130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Wang G., Ye J.C., Mueller K., Fessler J.A. Image Reconstruction Is a New Frontier of Machine Learning. IEEE Trans. Med. Imaging. 2018;37:1289–1296. doi: 10.1109/TMI.2018.2833635. [DOI] [PubMed] [Google Scholar]

- 96. Integrating Deep and Radiomics Features in Cancer Bioimaging. Available online: https://www.biorxiv.org/content/10.1101/568170v1.abstract (accessed on 23 September 2019).

- 97. Aeffner F., Zarella M.D., Buchbinder N., Bui M.M., Goodman M.R., Hartman D.J., Lujan G.M., Molani M.A., Parwani A.V., Lillard K., et al. Introduction to Digital Image Analysis in Whole-slide Imaging: A White Paper from the Digital Pathology Association. J. Pathol. Inf. 2019;10:e9. doi: 10.4103/jpi.jpi_82_18.

- 98. Saltz J., Almeida J., Gao Y., Sharma A., Bremer E., DiPrima T., Saltz M., Kalpathy-Cramer J., Kurc T. Towards Generation, Management, and Exploration of Combined Radiomics and Pathomics Datasets for Cancer Research. AMIA Jt. Summits Transl. Sci. Proc. 2017;2017:85–94.

- 99. Haghighi B., Choi S., Choi J., Hoffman E.A., Comellas A.P., Newell J.D., Barr R.G., Bleecker E., Cooper C.B., Couper D., et al. Imaging-based clusters in current smokers of the COPD cohort associate with clinical characteristics: The SubPopulations and Intermediate Outcome Measures in COPD Study (SPIROMICS). Resp. Res. 2018;19:e178. doi: 10.1186/s12931-018-0888-7.

- 100. Haghighi B., Choi S., Choi J., Hoffman E.A., Comellas A.P., Newell J.D., Lee C.H., Barr R.G., Bleecker E., Cooper C.B., et al. Imaging-based clusters in former smokers of the COPD cohort associate with clinical characteristics: The SubPopulations and intermediate outcome measures in COPD study (SPIROMICS). Resp. Res. 2019;20:e153. doi: 10.1186/s12931-019-1121-z.

- 101. Gang P., Zhen W., Zeng W., Gordienko Y., Kochura Y., Alienin O., Rokovyi O., Stirenko S. Dimensionality Reduction in Deep Learning for Chest X-Ray Analysis of Lung Cancer; Proceedings of the 2018 Tenth International Conference on Advanced Computational Intelligence (ICACI); Xiamen, China. 29–31 March 2018; pp. 878–883.

- 102. Luyapan J., Ji X.M., Zhu D.K., MacKenzie T.A., Amos C.I., Gui J. An Efficient Survival Multifactor Dimensionality Reduction Method for Detecting Gene-Gene Interactions of Lung Cancer Onset; Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM); Madrid, Spain. 3–6 December 2018; pp. 2779–2781.

- 103. Yousefi B., Jahani N., LaRiviere M.J., Cohen E., Hsieh M.-K., Luna J.M., Chitalia R.D., Thompson J.C., Carpenter E.L., Katz S.I. Correlative hierarchical clustering-based low-rank dimensionality reduction of radiomics-driven phenotype in non-small cell lung cancer; Proceedings of the Medical Imaging 2019: Imaging Informatics for Healthcare, Research, and Applications; San Diego, CA, USA. 17–18 February 2019; p. 1095416.

- 104. Haghighi B., Ellingwood N., Yin Y.B., Hoffman E.A., Lin C.L. A GPU-based symmetric non-rigid image registration method in human lung. Med. Biol. Eng. Comput. 2018;56:355–371. doi: 10.1007/s11517-017-1690-2.