Abstract

Background and purpose:

Manual contouring is labor intensive, and subject to variations in operator knowledge, experience and technique. This work aims to develop an automated computed tomography (CT) multi-organ segmentation method for prostate cancer treatment planning.

Methods and materials:

The proposed method exploits the superior soft-tissue information provided by synthetic MRI (sMRI) to aid the multi-organ segmentation on pelvic CT images. A cycle generative adversarial network (CycleGAN) was used to estimate sMRIs from CT images. A deep attention U-Net (DAUnet) was trained on sMRI and corresponding multi-organ contours for auto-segmentation. The deep attention strategy was introduced to retrieve the most relevant features to identify organ boundaries. Deep supervision was incorporated into the DAUnet to enhance the features’ discriminative ability. Segmented contours of a patient were obtained by feeding the CT image into the trained CycleGAN to generate sMRI, which was then fed to the trained DAUnet to generate the organ contours. We trained and evaluated our model with 140 datasets from prostate patients.

Results:

The Dice similarity coefficient and mean surface distance between our segmented contours and the manual contours were 0.95±0.03 and 0.52±0.22 mm for the bladder, 0.87±0.04 and 0.93±0.51 mm for the prostate, and 0.89±0.04 and 0.92±1.03 mm for the rectum.

Conclusion:

We proposed an sMRI-aided multi-organ automatic segmentation method for pelvic CT images. By integrating deep attention and deep supervision strategies, the proposed network provides accurate and consistent prostate, bladder and rectum segmentation, and has the potential to facilitate routine prostate-cancer radiotherapy treatment planning.

Keywords: multi-organ segmentation, deep learning, synthetic MRI

1. INTRODUCTION

Target and organ-at-risk (OAR) segmentation is a prerequisite of radiotherapy treatment planning. Radiotherapy plan evaluation is based on radiation dose coverage of the target volume and dose sparing of highly radiosensitive surrounding OARs. Segmentation accuracy of OARs directly impacts the quality of radiotherapy plans and subsequent clinical outcomes. Current routine clinical practice involves manual contouring of the target and OARs by clinicians on computed tomography (CT) images (1). However, because of the low soft-tissue contrast of CT imaging, the accuracy of manual contouring is compromised by inter- and intra-observer variability (2–6). This issue poses even greater challenges when the target and OARs abut one another, as the prostate, bladder and rectum do. Magnetic resonance imaging (MRI) offers superior soft-tissue contrast, which helps to reduce inter-observer variability and improve organ delineation accuracy (7, 8). However, MRI is not always available because of access or insurance limitations. Moreover, MRI lacks the electron density information required for accurate radiotherapy dose calculation, and contours delineated on MRI must be registered to CT images for use in treatment planning, a process prone to CT-MRI registration errors.

Given the observer variability, time and expertise required for manual organ delineation, automatic CT segmentation methods are of great clinical value and have the potential to provide highly consistent, rapid and accurate organ contouring (1). However, atlas-based (9–11) and model-based automatic segmentation methods (12–14) usually generalize poorly to cases in which there is little similarity between reference and test images or in which large variability exists (1).

The rapid growth of deep learning provides vast opportunities for developing automatic segmentation techniques that offer accurate and consistent delineation (15–19). This data-driven approach learns features from large amounts of data, and tends to provide a better solution to problems involving large variation. Kazemifar et al. segmented prostate, rectum and bladder on pelvic CT images with U-Net (20). Balagopal et al. integrated a 2D localization network and a 3D segmentation network for volumetric multi-organ segmentation on male pelvic CT images (21). Liu et al. employed deep neural networks on a large cohort of patients for prostate segmentation (22).

Unlike other deep-learning-based CT segmentation methods, which apply the segmentation models directly to CT images, we integrate the superior soft-tissue information provided by synthetic MRI (sMRI) into the CT-based segmentation network for prostate, bladder and rectum delineation. The proposed method is structured with two networks: the first synthesizes MRI from CT images to obtain better contrast between organs of interest, and the second employs a deep-attention learning method to perform multi-organ segmentation. We performed a retrospective study with data from 140 prostate patients to evaluate the performance of the proposed method.

2. MATERIALS AND METHODS

Figure 1 outlines the schematic flow chart of the proposed method. First, a cycle generative adversarial network (CycleGAN) was used to estimate sMRIs from CT images. The inverse transformation in CycleGAN pushes the mapping between CT and MRI towards a one-to-one mapping. Second, a deep attention U-Net (DAUnet) was trained separately on the sMRIs and their corresponding multi-organ contours. A deep attention strategy was introduced to retrieve the most relevant features to identify organ boundaries. Deep supervision was incorporated into this DAUnet to enhance the features’ discriminative ability. Finally, the segmented contours for a new patient were obtained by first feeding the CT image into the trained CycleGAN to generate sMRI, which was then fed to the trained segmentation DAUnet for multi-organ segmentation.

Fig. 1.

The schematic flow diagram of the proposed method which contains the training stage (upper) and the segmentation stage (lower).

2.A. Synthetic MRI generation

CT and MRI are imaging modalities with drastically different physical properties, so training a CT-to-MRI transformation with conventional deep learning models is difficult. To address this issue, we employed a 3D CycleGAN architecture to learn this transformation. CycleGAN contains both the targeted transformation (CT-to-MRI, in this study) and the inverse transformation (MRI-to-CT), and has demonstrated great capability to mimic the target data distribution (23). Patient anatomy can vary significantly among individuals. To accurately predict each voxel in the anatomic region, we introduced several dense blocks to capture multi-scale information (including low-frequency and high-frequency) by extracting features from previous and following hidden layers (23).
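The role of the inverse transformation can be illustrated with a minimal, framework-free sketch. It is not the authors' implementation: the two "generators" below are hypothetical toy functions on flat intensity lists, and the only thing shown is how a round-trip mean absolute error penalizes a mapping that cannot be inverted.

```python
def mean_absolute_error(a, b):
    """Mean absolute difference between two equally sized intensity lists."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(ct, mri, ct_to_mri, mri_to_ct):
    """MAE after a full round trip in both directions (CT->MRI->CT and MRI->CT->MRI)."""
    ct_round_trip = mri_to_ct(ct_to_mri(ct))
    mri_round_trip = ct_to_mri(mri_to_ct(mri))
    return (mean_absolute_error(ct, ct_round_trip)
            + mean_absolute_error(mri, mri_round_trip))


# Hypothetical toy stand-ins for the two generators (not trained networks).
def toy_ct_to_mri(voxels):
    return [2.0 * v + 1.0 for v in voxels]


def toy_mri_to_ct(voxels):
    return [(v - 1.0) / 2.0 for v in voxels]


def lossy_mri_to_ct(voxels):
    # Collapses every input to zero, so the CT-to-MRI mapping cannot be undone.
    return [0.0 for _ in voxels]


ct = [0.0, 1.0, 2.0]
mri = [1.0, 3.0, 5.0]
invertible = cycle_consistency_loss(ct, mri, toy_ct_to_mri, toy_mri_to_ct)
collapsed = cycle_consistency_loss(ct, mri, toy_ct_to_mri, lossy_mri_to_ct)
```

In this toy setting the invertible pair incurs no round-trip penalty, while the collapsing inverse is penalized in both directions, which is the pressure towards a one-to-one mapping.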

Figure 2 shows the detailed architecture of generator and discriminator of CycleGAN. The generator structure follows an encoder-decoder design. Two convolutional layers with stride size of 2×2×2 are followed by 9 dense blocks, 2 deconvolution layers and a tanh layer, outputting feature maps with sizes equal to inputs. The dense block is implemented by 6 convolutional layers. The discriminator is a typical classification-based fully convolutional network (FCN), which consists of 4 convolutional layers, 3 of which were followed by a pooling layer, and the last followed by a sigmoid operation.

Fig. 2.

Generator and discriminator architecture of CycleGAN for MRI synthesis. ‘Conv’ is convolution operation. ‘Deconv’ is deconvolution operation. PReLU is Parametric ReLU operation, and BN is batch normalization.

At the training stage, the MRI and CT images were fed into the CycleGAN network in 64×64×64 patches, with an overlap of 48×48×48 between any two neighboring patches. This overlap ensures that a continuous whole-image output can be obtained and increases the amount of training data for the network. The learning rate for the Adam optimizer was set to 2e-4, and the CycleGAN model was trained and tested on an NVIDIA Tesla V100 with 32 GB of memory per GPU. With a batch size of 4, each batch optimization used 10 GB of CPU memory and 28 GB of GPU memory. Training took about 12 minutes per 2000 iterations and was stopped after 150000 iterations, for a total training time of about 15 hours. Mean absolute error (MAE) and gradient difference (GD) loss between the synthetic image and the original image were used as loss functions to supervise the CycleGAN. For testing, sMRI patches were generated by feeding CT patches into the trained CycleGAN model. Voxel values were averaged wherever patches overlapped. The sMRI generation for one patient takes around 2–5 minutes, depending on the image size.
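A patch size of 64 with an overlap of 48 implies a stride of 64 - 48 = 16 voxels along each axis. The sketch below shows, for a single axis, how patch start positions could be laid out and how overlapping predictions could be fused by averaging. The function names and the handling of the image border (a final patch placed flush with the edge) are assumptions made for illustration; the source does not describe these details.

```python
def patch_starts(length, patch=64, overlap=48):
    """Start indices along one axis so that the patches cover the whole axis."""
    if not 0 <= overlap < patch:
        raise ValueError("overlap must be smaller than the patch size")
    if length < patch:
        raise ValueError("axis shorter than the patch; pad the image first")
    stride = patch - overlap
    starts = list(range(0, length - patch + 1, stride))
    if starts[-1] != length - patch:
        starts.append(length - patch)  # assumed: last patch sits flush with the border
    return starts


def average_overlaps(length, patches, starts, patch=64):
    """Fuse 1D patch predictions by averaging wherever patches overlap."""
    total = [0.0] * length
    count = [0] * length
    for start, values in zip(starts, patches):
        for offset in range(patch):
            total[start + offset] += values[offset]
            count[start + offset] += 1
    return [t / c for t, c in zip(total, count)]


# Small worked example: two patches of length 4 that overlap by 2 voxels.
starts = patch_starts(6, patch=4, overlap=2)
fused = average_overlaps(6, [[0.0] * 4, [4.0] * 4], starts, patch=4)
```

For a 3D volume the same start positions would be computed independently for each axis and combined, with the running sum and count kept per voxel.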

2.B. Deep attention U-Net

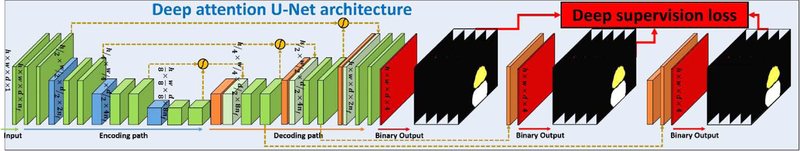

Manual prostate, bladder and rectum contours were used as the learning-based targets to supervise the segmentation model. Those contours were delineated on MRI by physicians, deformably registered to CT for treatment planning, and then approved by physicians. A 3D deep attention U-Net (DAUnet) was used to perform an end-to-end segmentation. DAUnet integrates both attention gates (AGs) (24) and deep supervision (25–27) into a conventional U-Net architecture to capture the most relevant semantic contextual information without enlarging the receptive field (19, 28). The feature maps extracted from the coarse scale were used in gating to disambiguate irrelevant and noisy responses in long skip connections (24). This was performed immediately prior to the concatenation operation to merge only relevant activations. Additionally, AGs filter the neuron activations during both the forward pass and the backward pass. We also used deep-supervision to force the intermediate feature maps to be semantically discriminative at each image scale. This helps to ensure that AGs, at different scales, have the ability to influence the responses to a large range of prostate content. Figure 3 shows the architecture of DAUnet for multi-organ segmentation.

Fig. 3.

DAUnet architecture for multi-organ segmentation.

The input patch size of our proposed DAUnet was set to 512×512×5, Dice loss was used as the loss function, and the learning rate for the Adam optimizer was set to 1e-3. The training was stopped after 200 epochs. For each epoch, the batch size was set to 20. Training the DAUnet model takes about 1.7 hours; after training, a single prostate segmentation can be completed in 1 to 2 seconds. Contour refinement, an averaging method that fuses the generated probability maps on overlapped slices, was applied to build the whole volume.
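The source states only that Dice loss was used. As a hedged illustration, the sketch below implements the commonly used soft Dice loss on flattened probability maps; the smoothing constant `eps` and the unweighted averaging across organs are assumptions, not details taken from the paper.

```python
def soft_dice_loss(probabilities, target, eps=1e-6):
    """1 - soft Dice between predicted probabilities and a binary target mask."""
    if len(probabilities) != len(target):
        raise ValueError("prediction and target must have the same length")
    intersection = sum(p * t for p, t in zip(probabilities, target))
    denominator = sum(probabilities) + sum(target)
    # eps keeps the ratio defined when both prediction and target are empty.
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def multi_organ_dice_loss(prob_maps, target_masks):
    """Unweighted mean of the per-organ soft Dice losses (assumed combination rule)."""
    losses = [soft_dice_loss(prob_maps[organ], target_masks[organ])
              for organ in target_masks]
    return sum(losses) / len(losses)


perfect = soft_dice_loss([1.0, 0.0, 1.0, 0.0], [1, 0, 1, 0])
disjoint = soft_dice_loss([1.0, 0.0], [0, 1])
uncertain = soft_dice_loss([0.5, 0.5], [1, 0])
combined = multi_organ_dice_loss(
    {"bladder": [1.0, 0.0], "prostate": [0.0, 1.0]},
    {"bladder": [1, 0], "prostate": [0, 1]},
)
```

The loss is close to 0 for a perfect prediction and close to 1 when prediction and target do not overlap, so minimizing it directly maximizes the overlap measure that is later used for evaluation.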

2.C. Data acquisition

In this retrospective study, we reviewed 140 patients with prostate malignancies treated with external beam radiation therapy in our clinic. All 140 patients underwent CT simulation and diagnostic MRI. MRIs were fused with planning CT images to aid prostate delineation. The median prostate volume was 30.37 cc (range 8.39–130.02 cc), based on 140 patients. Institutional review board approval was obtained, and this HIPAA-compliant retrospective analysis did not require informed consent.

The CT images were acquired by a Siemens SOMATOM Definition AS CT scanner at 120 kVp and 220 mAs with a voxel size of 0.586 × 0.586 × 0.6 mm³. The MRIs were acquired using a Siemens standard T2-weighted MRI scanner with a 3D T2-SPACE sequence and 1.0 × 1.0 × 2.0 mm³ voxel size (TR/TE: 1000/123 ms, flip angle: 95°). The training MR and CT images were deformably registered using Velocity AI 3.2.1 (Varian Medical Systems, Palo Alto, CA).

2.D. Validation and evaluation

Dice similarity coefficient (DSC), sensitivity and specificity were calculated to quantify the volume overlap between manual and auto-segmented contours. We also calculated Hausdorff distance (HD), mean surface distance (MSD) and residual mean square distance (RMSD) to measure the surface distances between the two contours. Center of mass distance (CMD) and percentage volume difference (PVD) were calculated to quantify the distance between the two contours’ centers and the difference between their volumes.
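The source does not spell out the formulas for these metrics, so the following sketch uses commonly adopted definitions as an assumption: each contour is a set of integer voxel coordinates, surface distances are computed symmetrically between boundary voxels by brute force, and PVD is the absolute volume difference relative to the manual volume. Specificity is omitted because its exact definition here is not stated.

```python
import math


def dice(auto, manual):
    """DSC = 2|A ∩ M| / (|A| + |M|) for two sets of voxel coordinates."""
    return 2.0 * len(auto & manual) / (len(auto) + len(manual))


def sensitivity(auto, manual):
    """Fraction of the manual contour that the automatic contour recovers."""
    return len(auto & manual) / len(manual)


def percentage_volume_difference(auto, manual):
    """Absolute volume difference relative to the manual volume (assumed definition)."""
    return abs(len(auto) - len(manual)) / len(manual)


def center_of_mass(voxels, spacing):
    n = len(voxels)
    return tuple(s * sum(v[i] for v in voxels) / n for i, s in enumerate(spacing))


def center_of_mass_distance(auto, manual, spacing=(1.0, 1.0, 1.0)):
    return math.dist(center_of_mass(auto, spacing), center_of_mass(manual, spacing))


def surface(voxels):
    """Voxels with at least one of their six face neighbours outside the set."""
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    return {v for v in voxels
            if any((v[0] + dx, v[1] + dy, v[2] + dz) not in voxels
                   for dx, dy, dz in offsets)}


def directed_distances(source, destination, spacing):
    """Distance in mm from each source point to its nearest destination point."""
    def to_mm(v):
        return tuple(c * s for c, s in zip(v, spacing))
    destination_mm = [to_mm(v) for v in destination]
    return [min(math.dist(to_mm(v), d) for d in destination_mm) for v in source]


def surface_metrics(auto, manual, spacing=(1.0, 1.0, 1.0)):
    """Symmetric HD, MSD and RMSD between the two contour surfaces."""
    a, m = surface(auto), surface(manual)
    d = directed_distances(a, m, spacing) + directed_distances(m, a, spacing)
    return {
        "HD": max(d),
        "MSD": sum(d) / len(d),
        "RMSD": math.sqrt(sum(x * x for x in d) / len(d)),
    }


# Toy example: a 4x4x4 cube and the same cube shifted by one voxel along x.
manual = {(x, y, z) for x in range(4) for y in range(4) for z in range(4)}
auto = {(x + 1, y, z) for (x, y, z) in manual}
overlap = dice(auto, manual)
distances = surface_metrics(auto, manual)
```

The brute-force nearest-neighbour search is quadratic in the number of surface voxels; for clinical volumes a distance transform or a spatial index would normally replace it.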

Five-fold cross-validation was used to train and validate the proposed segmentation algorithm on 140 patients’ data. This method splits the data into 5 groups, with 28 datasets in each group. One of the groups was used as test set and the rest were used as training set. The process was repeated 5 times, and all 140 datasets were tested once. To illustrate the merits of deep attention and sMRI-aided strategy, we tested a model without deep attention as well as DAUnet segmentation model on CT instead of sMRI to compare their performances with the proposed model.
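The split described above can be sketched as follows. The random shuffling and the fixed seed are assumptions, since the source does not say how patients were assigned to the five groups.

```python
import random


def five_fold_splits(case_ids, n_folds=5, seed=0):
    """Yield (train, test) lists so that every case is tested exactly once."""
    ids = list(case_ids)
    if len(ids) % n_folds != 0:
        raise ValueError("number of cases must be divisible by the number of folds")
    random.Random(seed).shuffle(ids)  # assumed: random assignment to groups
    fold_size = len(ids) // n_folds
    folds = [ids[i * fold_size:(i + 1) * fold_size] for i in range(n_folds)]
    for k, test in enumerate(folds):
        train = [case for j, fold in enumerate(folds) if j != k for case in fold]
        yield train, test


splits = list(five_fold_splits(range(140)))
```

With 140 cases, each of the five rounds trains on 112 datasets and tests on the remaining 28.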

3. RESULTS

Figure 4 compares the models with and without deep attention, i.e., DAUnet and a deeply supervised U-Net (DSUnet). Both algorithms were trained on sMRI. Figures 4(b1–b4) and (c1–c4) show 3D scatter plots of the first 3 principal components of patch samples in the feature maps extracted from the last convolution layer of 3 deconvolution operators and from final convolution layers. We randomly selected 1000 samples from the posterior boundary of bladder, the anterior boundary of rectum, and the margin in-between, as shown in subfigure (a4). These samples are located on voxels where the organs of interest abut, so differentiation is particularly difficult. As shown in subfigures (b1–b4), the samples generated with DSUnet show substantial overlap. In subfigures (c1–c4), by integrating deep attention, DAUnet presents a better separation between samples for the organs.

Fig. 4.

An illustrative example of the benefit of our DAUnet compared with DSUnet, which does not use AGs. (a1) shows the original CT image in the transverse plane, (a2) shows the corresponding prostate (white) and rectum (grey) contours, (a3) shows the generated sMR image, and (a4) shows the sample patches’ central positions drawn from sMRI, where the samples belonging to the prostate are highlighted by green circles, the samples belonging to the rectum are highlighted by red asterisks, and the samples between the prostate and rectum regions are highlighted by blue circles. (b1–b4) show the scatter plots of the first 3 principal components of the corresponding patch samples in the feature maps extracted by DSUnet. (c1–c4) show the scatter plots of the first 3 principal components of the corresponding patch samples in the feature maps extracted by DAUnet. The position of the viewer in (b1–b4) and (c1–c4) is azimuth = 10° and elevation = 30°.

The numerical comparison of these 2 methods is shown in Table 1. DSUnet had an average DSC of 0.93, 0.82 and 0.88 on bladder, prostate and rectum, respectively. By including deep attention, DAUnet improved the DSC by 0.02, 0.05 and 0.01 on the three organs. DAUnet also obtained higher specificity and smaller HD, MSD, RMSD, CMD and PVD values on all three organs, and higher sensitivity on prostate and rectum; the one exception was bladder sensitivity (0.95 versus 0.96 for DSUnet).

Table 1.

Numerical comparison of using DAUnet and DSUnet on sMRI data.

| Organ | Method | DSC | Sensitivity | Specificity | HD (mm) | MSD (mm) | RMSD (mm) | CMD (mm) | PVD |
|---|---|---|---|---|---|---|---|---|---|
| Bladder | DSUnet | 0.93±0.05 | 0.96±0.04 | 0.90±0.07 | 12.68±14.93 | 0.67±0.28 | 1.11±0.56 | 1.28±1.08 | 4.8%±7.9% |
| Bladder | DAUnet | 0.95±0.03 | 0.95±0.04 | 0.94±0.04 | 6.81±9.25 | 0.52±0.22 | 0.89±0.59 | 0.95±0.63 | 4.0%±4.1% |
| Bladder | p-value | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | 0.004 | <0.001 | 0.124 |
| Prostate | DSUnet | 0.82±0.05 | 0.83±0.09 | 0.83±0.09 | 7.79±4.32 | 1.21±0.63 | 1.72±1.05 | 2.81±1.57 | 16.0%±15.0% |
| Prostate | DAUnet | 0.87±0.04 | 0.86±0.08 | 0.88±0.08 | 6.35±3.11 | 0.93±0.51 | 1.38±0.85 | 1.90±1.32 | 14.7%±14.9% |
| Prostate | p-value | <0.001 | 0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | 0.116 |
| Rectum | DSUnet | 0.88±0.04 | 0.89±0.05 | 0.88±0.07 | 13.16±17.16 | 1.00±1.06 | 1.78±2.40 | 3.51±3.27 | 7.9%±6.9% |
| Rectum | DAUnet | 0.89±0.04 | 0.90±0.05 | 0.89±0.06 | 10.84±15.59 | 0.92±1.03 | 1.39±1.04 | 2.81±2.52 | 7.3%±7.2% |
| Rectum | p-value | <0.001 | <0.001 | 0.069 | 0.091 | 0.003 | 0.004 | <0.001 | 0.129 |

The improvement of soft-tissue contrast on sMRI over CT is illustrated in Figure 5. We plotted the profiles transecting bladder, prostate and rectum on the two images in subfigures (4). To provide better illustration, we scaled voxel intensities on the dashed line to [0, 1]. As shown in Figure 5 (a4), (b4) and (c4), the contrast improvement with sMRI over CT can be clearly visualized on all three organs.
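The scaling of a line profile to [0, 1] can be sketched as a min-max normalization. This is an assumption about how the scaling was done, and the function name and sample values are illustrative only.

```python
def rescale_to_unit_range(profile):
    """Min-max scale a list of voxel intensities to the interval [0, 1]."""
    lo, hi = min(profile), max(profile)
    if hi == lo:
        # A flat profile has no contrast; map it to zeros to avoid division by zero.
        return [0.0 for _ in profile]
    return [(v - lo) / (hi - lo) for v in profile]


# Hypothetical intensities sampled along a line crossing an organ boundary.
scaled = rescale_to_unit_range([-100, 0, 100])
```

Because each profile is scaled by its own range, CT and sMRI profiles with very different intensity units can be overlaid on one axis, at the cost of discarding absolute intensity differences.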

Fig. 5.

Visual results of generated sMRI and its enhancement on soft-tissue contrast. (1) and (2) show the original CT image and the generated sMRI, (3) shows the deformed manual contour of (a) bladder, (b) prostate and (c) rectum. (4) shows the plot profiles of CT and sMRI along the blue dashed line in (1).

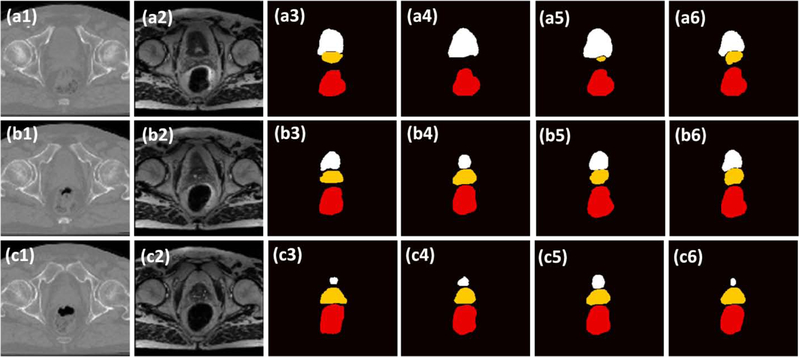

Given the enhanced soft-tissue contrast with sMRI, we then evaluated its impact on organ segmentation by comparing the segmentation results with those of the same model trained on CT. The qualitative results on three patients are illustrated in Figure 6, where the sMRI-generated segmentations show better resemblance to the manual contours. The quantitative comparison also illustrates the improvement of segmentation accuracy with sMRI. As shown in Table 2, CT DAUnet obtained average DSC and MSD of 0.93 and 0.55 mm, 0.82 and 1.15 mm, and 0.87 and 1.26 mm for bladder, prostate and rectum, respectively. With the help of sMRI, the same segmentation model increased DSC and reduced MSD, and the average DSC and MSD were 0.95 and 0.52 mm, 0.87 and 0.93 mm, and 0.89 and 0.92 mm on the three organs. Compared with those generated with CT DAUnet, contours generated with sMRI DAUnet show better volume overlap with the reference (higher DSC on all three organs, and higher sensitivity and specificity on prostate and rectum), better surface matching (smaller HD, MSD and RMSD), better center matching (smaller CMD) and better volume matching (smaller PVD). For the bladder, sensitivity and specificity were essentially unchanged, and only the PVD difference was statistically significant.

Fig. 6.

The comparison of the proposed method with two competing methods on three additional patients. (1) shows the CT image, (2) shows the sMRI, and (3) shows the manual contours of bladder (white), prostate (yellow), and rectum (red). (4) shows the segmented contours of DSUnet, (5) shows the segmented contours of DAUnet trained on CT data, and (6) shows the segmented contours of DAUnet trained on sMRI data. (a), (b) and (c) are the segmentation results on three patients.

Table 2.

Numerical comparison by using DAUnet on CT and sMRI data.

| Organ | Method | DSC | Sensitivity | Specificity | HD (mm) | MSD (mm) | RMSD (mm) | CMD (mm) | PVD |
|---|---|---|---|---|---|---|---|---|---|
| Bladder | CT | 0.93±0.03 | 0.95±0.03 | 0.95±0.04 | 10.29±10.56 | 0.55±0.32 | 0.97±0.71 | 1.23±0.82 | 7.7%±8.9% |
| Bladder | sMRI | 0.95±0.03 | 0.95±0.04 | 0.94±0.04 | 6.81±9.25 | 0.52±0.22 | 0.89±0.59 | 0.95±0.63 | 4.0%±4.1% |
| Bladder | p-value | 0.148 | 0.092 | 0.711 | 0.543 | 0.166 | 0.178 | 0.654 | <0.001 |
| Prostate | CT | 0.82±0.09 | 0.83±0.07 | 0.86±0.08 | 8.52±1.09 | 1.15±0.66 | 1.60±1.14 | 2.27±1.37 | 18.1%±20.9% |
| Prostate | sMRI | 0.87±0.04 | 0.86±0.08 | 0.88±0.08 | 6.35±3.11 | 0.93±0.51 | 1.38±0.85 | 1.90±1.32 | 14.7%±14.9% |
| Prostate | p-value | <0.001 | <0.001 | 0.299 | <0.001 | <0.001 | 0.001 | 0.003 | 0.001 |
| Rectum | CT | 0.87±0.05 | 0.86±0.05 | 0.88±0.08 | 16.08±19.63 | 1.26±1.18 | 2.42±3.20 | 3.45±3.32 | 9.4%±8.0% |
| Rectum | sMRI | 0.89±0.04 | 0.90±0.05 | 0.89±0.06 | 10.84±15.59 | 0.92±1.03 | 1.39±1.04 | 2.81±2.52 | 7.3%±7.2% |
| Rectum | p-value | <0.001 | <0.001 | 0.011 | 0.001 | <0.001 | <0.001 | <0.001 | <0.001 |

4. DISCUSSION

Prostate, bladder and rectum delineation is a foundational component for prostate cancer radiotherapy treatment planning. Highly modulated planning methods, such as intensity modulated radiotherapy (IMRT) and stereotactic body radiotherapy (SBRT) are optimized based on the dose constraints on those organs, and the plan quality is subject to organ segmentation accuracy. While manual contouring is labor intensive, and subject to variations in operator knowledge, experience and technique, automatic segmentation methods provide a consistent and accurate multi-organ delineation approach that is observer-independent. Different from conventional automatic CT segmentation methods where segmentation models are built directly on CT images, the proposed method makes use of the superior soft-tissue contrast provided by sMRI to obtain accurate multi-organ segmentation. The integration of deep attention strategy aided the segmentation model to precisely locate organ boundaries that are prone to inter- and intra-observer variability. The proposed model demonstrates excellent segmentation accuracy with great potential to facilitate radiotherapy treatment planning.

Compared to the CT model, the sMRI model improved the average DSC by 0.02, 0.05 and 0.02 for bladder, prostate and rectum volumes. These quantitative results demonstrate the benefits of sMRI, and the resultant improvement of segmentation accuracy with this sMRI-aided strategy. By synthesizing MRIs from CT images, the proposed method obtained additional soft-tissue information without additional imaging acquisition procedures. This not only resolves the issue of MRI availability, but also eliminates potential segmentation errors resulting from MRI-CT co-registration inaccuracy.

In this study, we used DSC and MSD to quantify the agreement of our results with reference contours. Overall, our method demonstrates statistically significant improvement in DSC and MSD on prostate over competing methods. Considering the variance in the statistical results, a few patients would benefit more than average. Such improvement in these quantification metrics directly helps the accuracy of treatment planning. For example, the 0.05 improvement in prostate DSC with our method corresponds to roughly 5% more coverage of the true prostate, assuming a plan with 100% coverage of the prostate contour. Future studies will include a dosimetric evaluation to quantitatively demonstrate the advantage of our method in the context of treatment planning.

Despite excellent multi-organ segmentation results, the proposed method has a number of limitations. Manual contours were employed as the training target of the segmentation model. However, these contours are not perfect because of inter-observer variability. DSCs of 0.91–0.94 on bladder, 0.74–0.79 on prostate and 0.74–0.85 on rectum resulting from inter-observer variability have been reported in the literature (29–31). Since the segmentation model was trained with these manual contours, the model is also subject to the impact of inter-observer variability. The automatic segmentation model tries to find the ‘average’ among manual contours delineated by different physicians. By including a large amount of training data, the model is expected to alleviate the issue of inter-observer variability. However, when testing the model, the automatically segmented contours were compared against those imperfect manual contours. The errors resulting from intra-observer variability were propagated to the final evaluation results. Therefore, the segmentation errors of the proposed method reported in Tables 1 and 2 represent both the intrinsic inaccuracy of the segmentation model and the errors in the reference manual contours. This issue could be alleviated by enrolling multiple observers to obtain accurate contours for model training and testing. Besides inter-observer variability, the accuracy of manual contours is also subject to registration errors. Manual contours were delineated on MRIs that were registered to CT images, so MRI-CT registration errors can propagate to the manual contours. Similarly, this source of inaccuracy could be alleviated by including multiple observers for optimal contouring.

5. CONCLUSIONS

We developed a multi-organ segmentation method for pelvic CT images. By integrating deep attention and sMRI-aided strategy, the proposed network provides accurate and consistent prostate, bladder and rectum segmentation, and has the potential to facilitate routine radiotherapy treatment planning.

Highlights:

We made use of superior soft-tissue information provided by synthetic MRI to generate accurate and consistent multi-organ delineation on male pelvic CT images.

The integration of a deep attention strategy helped the segmentation model to precisely locate organ boundaries that are prone to inter- and intra-observer variability.

The proposed model was trained and evaluated with 140 sets of patient data, and demonstrates excellent segmentation accuracy with great potential to facilitate radiotherapy treatment planning.

ACKNOWLEDGEMENTS

This research is supported in part by the National Cancer Institute of the National Institutes of Health under Award Number R01CA215718 (XY), the Department of Defense (DoD) Prostate Cancer Research Program (PCRP) Award W81XWH-17-1-0438 (TL) and W81XWH-17-1-0439 (AJ) and Dunwoody Golf Club Prostate Cancer Research Award (XY), a philanthropic award provided by the Winship Cancer Institute of Emory University.

Footnotes

Disclosure

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

REFERENCES

- 1. Sharp G, Fritscher KD, Pekar V, et al. Vision 20/20: perspectives on automated image segmentation for radiotherapy. Med Phys. 2014;41:050902.

- 2. Rasch C, Steenbakkers R, van Herk M. Target definition in prostate, head, and neck. Semin Radiat Oncol. 2005;15:136–145.

- 3. Hurkmans CW, Borger JH, Pieters BR, Russell NS, Jansen EP, Mijnheer BJ. Variability in target volume delineation on CT scans of the breast. Int J Radiat Oncol Biol Phys. 2001;50:1366–1372.

- 4. Vinod SK, Jameson MG, Min M, Holloway LC. Uncertainties in volume delineation in radiation oncology: A systematic review and recommendations for future studies. Radiother Oncol. 2016;121:169–179.

- 5. Breunig J, Hernandez S, Lin J, et al. A system for continual quality improvement of normal tissue delineation for radiation therapy treatment planning. Int J Radiat Oncol Biol Phys. 2012;83:e703–708.

- 6. Nelms BE, Tome WA, Robinson G, Wheeler J. Variations in the contouring of organs at risk: test case from a patient with oropharyngeal cancer. Int J Radiat Oncol Biol Phys. 2012;82:368–378.

- 7. Villeirs GM, Vaerenbergh KV, Vakaet L, et al. Interobserver delineation variation using CT versus combined CT + MRI in intensity-modulated radiotherapy for prostate cancer. Strahlenther Onkol. 2005;181:424–430.

- 8. Smith W, Lewis C, Bauman G, et al. Prostate volume contouring: a 3D analysis of segmentation using 3DTRUS, CT, and MR. Int J Radiat Oncol Biol Phys. 2007;67:1238–1247.

- 9. Isgum I, Staring M, Rutten A, Prokop M, Viergever MA, van Ginneken B. Multi-atlas-based segmentation with local decision fusion--application to cardiac and aortic segmentation in CT scans. IEEE Trans Med Imaging. 2009;28:1000–1010.

- 10. Aljabar P, Heckemann RA, Hammers A, Hajnal JV, Rueckert D. Multi-atlas based segmentation of brain images: atlas selection and its effect on accuracy. Neuroimage. 2009;46:726–738.

- 11. Iglesias JE, Sabuncu MR. Multi-atlas segmentation of biomedical images: A survey. Med Image Anal. 2015;24:205–219.

- 12. Ecabert O, Peters J, Schramm H, et al. Automatic model-based segmentation of the heart in CT images. IEEE Trans Med Imaging. 2008;27:1189–1201.

- 13. Qazi AA, Pekar V, Kim J, Xie J, Breen SL, Jaffray DA. Auto-segmentation of normal and target structures in head and neck CT images: a feature-driven model-based approach. Med Phys. 2011;38:6160–6170.

- 14. Sun S, Bauer C, Beichel R. Automated 3-D segmentation of lungs with lung cancer in CT data using a novel robust active shape model approach. IEEE Trans Med Imaging. 2012;31:449–460.

- 15. Wang T, Lei Y, Tang H, et al. A learning-based automatic segmentation and quantification method on left ventricle in gated myocardial perfusion SPECT imaging: A feasibility study. Journal of Nuclear Cardiology. 2019:1–12.

- 16. Yang X, Huang J, Lei Y, et al. Med-A-Nets: Segmentation of Multiple Organs in Chest CT Image with Deep Adversarial Networks. Paper presented at: MEDICAL PHYSICS2018.

- 17. Yang X, Lei Y, Wang T, et al. 3D Prostate Segmentation in MR Image Using 3D Deeply Supervised Convolutional Neural Networks. Medical physics. 2018;45:E582–E583.

- 18. Wang B, Lei Y, Tian S, et al. Deeply Supervised 3D FCN with Group Dilated Convolution for Automatic MRI Prostate Segmentation. Medical physics. 2019.

- 19. Dong X, Lei Y, Wang T, et al. Automatic multiorgan segmentation in thorax CT images using U-net-GAN. Med Phys. 2019;46:2157–2168.

- 20. Kazemifar S, Balagopal A, Nguyen D, et al. Segmentation of the prostate and organs at risk in male pelvic CT images using deep learning. Biomedical Physics & Engineering Express. 2018;4.

- 21. Kazemifar AB Samaneh, Nguyen Dan, McGuire Sarah, Hannan Raquibul, Steve Jiang, Owrangi Amir. Segmentation of the prostate and organs at risk in male pelvic CT images using deep learning. Biomedical Physics & Engineering Express. 2018;4.

- 22. Liu C, Gardner SJ, Wen N, et al. Automatic Segmentation of the Prostate on CT Images Using Deep Neural Networks (DNN). Int J Radiat Oncol Biol Phys. 2019.

- 23. Lei Y, Harms J, Wang T, et al. MRI-Only Based Synthetic CT Generation Using Dense Cycle Consistent Generative Adversarial Networks. Med Phys. 2019.

- 24. Mishra D, Chaudhury S, Sarkar M, Soin AS. Ultrasound Image Segmentation: A Deeply Supervised Network with Attention to Boundaries. IEEE Trans Biomed Eng. 2018;In press.

- 25. Wang B, Lei Y, Tian S, et al. Deeply supervised 3D fully convolutional networks with group dilated convolution for automatic MRI prostate segmentation. Med Phys. 2019;46:1707–1718.

- 26. Lei Y, Tian S, He X, et al. Ultrasound Prostate Segmentation Based on Multi-Directional Deeply Supervised V-Net. Med Phys. 2019;In press.

- 27. Wang T, Lei Y, Tian S, et al. Learning-based Automatic Segmentation of Arteriovenous Malformations on Contrast CT Images in Brain Stereotactic Radiosurgery. Med Phys. 2019;In press.

- 28. Balagopal A, Kazemifar S, Nguyen D, et al. Fully automated organ segmentation in male pelvic CT images. Phys Med Biol. 2018;63.

- 29. Huyskens DP, Maingon P, Vanuytsel L, et al. A qualitative and a quantitative analysis of an auto-segmentation module for prostate cancer. Radiother Oncol. 2009;90:337–345.

- 30. Geraghty JP, Grogan G, Ebert MA. Automatic segmentation of male pelvic anatomy on computed tomography images: a comparison with multiple observers in the context of a multicentre clinical trial. Radiat Oncol. 2013;8:106.

- 31. Simmat I, Georg P, Georg D, Birkfellner W, Goldner G, Stock M. Assessment of accuracy and efficiency of atlas-based autosegmentation for prostate radiotherapy in a variety of clinical conditions. Strahlenther Onkol. 2012;188:807–815.