Abstract

Background

There is growing interest in, and provision of, cadaveric simulation courses for surgical trainees. This is being driven by the need to modernize surgical training and improve its efficiency within the current challenging training climate. The objective of this systematic review is to describe and evaluate the evidence for cadaveric simulation in postgraduate surgical training.

Methods

A PRISMA‐compliant systematic literature review of studies that prospectively evaluated a cadaveric simulation training intervention for surgical trainees was undertaken. All relevant databases and trial registries were searched to January 2019. Methodological rigour was assessed using the widely validated Medical Education Research Study Quality Instrument (MERSQI).

Results

A total of 51 studies were included, involving 2002 surgical trainees across 69 cadaveric training interventions. Of these, 22 assessed the impact of the cadaveric training intervention using only subjective measures, five measured impact by change in learner knowledge, and 23 used objective tools to assess change in learner behaviour after training. Only one study assessed patient outcome and demonstrated transfer of skill from the simulated environment to the workplace. Of the included studies, 67 per cent had weak methodology (MERSQI score less than 10·7).

Conclusion

There is an abundance of relatively low‐quality evidence showing that cadaveric simulation induces short‐term skill acquisition as measured by objective means. There is currently a lack of evidence of skill retention, and of transfer of skills following training into the live operating theatre.

This systematic review of cadaveric simulation for surgical training showed that there is low‐quality evidence of short‐term skill acquisition after training, as measured by objective means. There is a lack of evidence of skill retention longitudinally.

Making a comeback?

Background

There is growing interest in cadaveric simulation courses for the training of surgical residents, and in increasing the availability of such courses. This is driven by the need to modernize training and improve its efficiency within the current challenging climate of surgical training. The aim of this systematic review was to describe and evaluate the evidence for the use of cadaveric simulation in postgraduate surgical training.

Methods

In accordance with the PRISMA guidelines, a systematic literature review was conducted of studies that prospectively evaluated surgical training using cadaveric simulation. All relevant databases and clinical trial registries were searched up to January 2019. Methodological rigour was assessed using the widely validated Medical Education Research Study Quality Instrument (MERSQI).

Results

A total of 51 studies were included, involving 2002 surgical residents and 69 cadaveric simulation training interventions. Of these studies, 22 assessed the impact of the cadaveric training using only subjective measures, five measured impact by change in learner knowledge, and 23 used objective tools to assess change in learner behaviour after training. Only one study assessed patient outcome, demonstrating transfer of skills from the simulated environment to the workplace. Of the included studies, 67 per cent had weak methodology (MERSQI score less than 10·7).

Conclusion

There is abundant, but low‐quality, evidence on cadaveric simulation in surgical training, showing that this popular tool may be useful for short‐term skill acquisition, as indicated by results derived from objective measures. There is currently a lack of evidence from longitudinal studies on skill retention and on how skills transfer to the real operating theatre after training. Future high‐quality randomized trials are needed to address this issue.

Introduction

There is growing interest in the use of cadaveric simulation in postgraduate surgical training1. The move to incorporate simulation into surgical training is driven by a need to improve training efficiency in the current climate of reduced working hours2, 3, financial constraint and emphasis on patient safety4. Cadaveric simulation is of particular interest, as it provides ultra‐high‐fidelity representation of surgical anatomy as encountered in vivo 1, 5, authentic tissue handling6 and complex three‐dimensional neurovascular relationships, which are difficult to appreciate in textbooks and almost impossible to replicate in synthetic models7. Cadaveric simulation offers the opportunity to practise an operation in its entirety with high environmental, equipment and psychological fidelity, thereby enabling the rapid acquisition of procedural skills5 and attainment of competence in a setting remote from patient care8. With the current increase in availability of cadaveric training courses for surgical trainees9, a systematic evaluation of the evidence for their use is both timely and necessary.

The purpose of this review was to describe and evaluate the evidence for the use of cadaveric simulation in postgraduate surgical training.

Methods

This systematic review was conducted in accordance with the PRISMA guidelines10, 11; the review protocol was registered with PROSPERO (an international prospective register of systematic reviews)12.

Search strategy and data sources

A literature search was conducted in January 2019 using MEDLINE (Ovid) (1946 to the present), CINAHL (EBSCO) (Cumulative Index of Nursing and Allied Health Literature), Centre for Reviews and Dissemination Database, ISRCTN Registry, Cochrane Central Register of Controlled Trials, NHS Evidence, PubMed (1950 to the present), Embase (Ovid) (1947 to the present), Scopus, Australian Clinical Trials Registry and Google Scholar. Medical Subject Headings (MeSH) terms and text words from the MEDLINE search strategy (Table S1, supporting information) were adapted for other databases according to the required syntax.

Search results were limited to human subjects and the English language. Duplicates were removed, and retrieved titles and abstracts were screened for initial eligibility. Reference lists of included studies and previous reviews were hand‐searched to ensure literature saturation.

Selection criteria and data extraction

The initial eligibility screening criteria were: study participants were postgraduate doctors in training; there was exposure to human cadaveric simulation training; and there was an attempt at measuring the educational impact.

Studies were excluded at screening if they used animal cadaveric models, involved veterinary trainees, or were purely descriptive feasibility studies describing a cadaveric technique, with no assessment of the educational impact.

Abstracts that passed eligibility screening were retrieved in full text. Reference lists of full‐text articles were examined for relevant studies, and those found by hand‐searching were subject to the same eligibility screening process.

Data were extracted from the full‐text articles independently by two reviewers, using piloted data extraction forms. Data items collected included: participant characteristics (number, stage of training and specialty); study characteristics (single‐centre versus multicentre, eligibility criteria defined, loss to follow‐up); cadaveric training (intervention, cadaveric model used, skills taught, comparator group (where applicable)); assessment of educational impact (primary outcome measure, evidence of instrument validation, results summary (objective and subjective), post‐test assessment and evidence of skill transfer (if applicable)).

Data analysis, quality assessment and evidence synthesis

Included studies were assigned a level of evidence score using a modified version of the Oxford Centre for Evidence‐Based Medicine (OCEBM) classification, which has been adapted by the European Association of Endoscopic Surgery and is used widely in educational systematic reviews13, 14. Methodological rigour of included studies was scored using the Medical Education Research Study Quality Instrument (MERSQI), a previously validated assessment tool15, 16, 17 for quantitative appraisal of medical education research across six domains: study design, sampling, type of data, instrument validity, data analysis and outcome. The maximum score is 18 points. For each included study, the mean of the two independent assessors' MERSQI scores is reported.
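As a minimal sketch of how a reported per‐study score is assembled, the Python below sums one assessor's six domain scores and averages the two assessors' totals. It assumes each domain is capped at 3 points (consistent with the stated maximum of 18) and applies only a simple 0 to 3 range check; the function names and the example domain scores are hypothetical, and the instrument's item‐level scoring rules are not modelled.

```python
from statistics import mean

# The six MERSQI domains named in the text. Assumption: each domain is capped
# at 3 points, giving the stated maximum of 18.
DOMAINS = (
    "study_design",
    "sampling",
    "type_of_data",
    "instrument_validity",
    "data_analysis",
    "outcome",
)
DOMAIN_MAX = 3.0


def mersqi_total(domain_scores: dict[str, float]) -> float:
    """Sum one assessor's domain scores, checking each lies within 0 to 3."""
    total = 0.0
    for domain in DOMAINS:
        score = domain_scores[domain]  # KeyError if a domain is missing
        if not 0.0 <= score <= DOMAIN_MAX:
            raise ValueError(f"{domain} score {score} outside 0-{DOMAIN_MAX}")
        total += score
    return total


def reported_score(assessor_a: dict[str, float], assessor_b: dict[str, float]) -> float:
    """Mean of the two independent assessors' totals for one study."""
    return mean([mersqi_total(assessor_a), mersqi_total(assessor_b)])


# Hypothetical domain scores from two assessors for one study (illustration only).
assessor_a = {"study_design": 1, "sampling": 1.5, "type_of_data": 3,
              "instrument_validity": 1, "data_analysis": 2, "outcome": 1.5}
assessor_b = {"study_design": 1, "sampling": 1.5, "type_of_data": 3,
              "instrument_validity": 0, "data_analysis": 2, "outcome": 1.5}
print(reported_score(assessor_a, assessor_b))
```

Here the two assessors disagree only on instrument validity, so the reported score falls halfway between their totals of 10 and 9.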

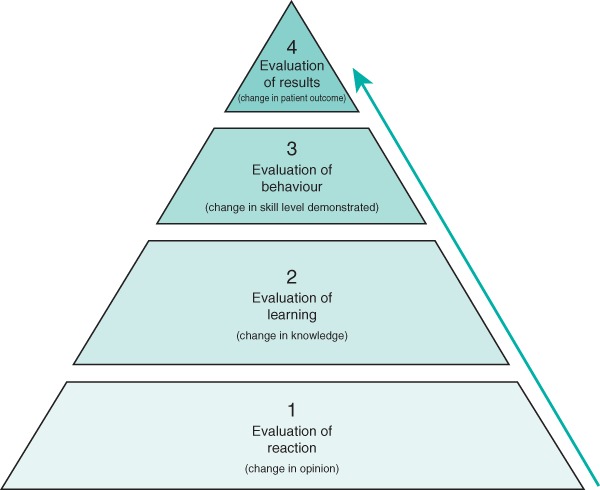

A qualitative, narrative synthesis of evidence was undertaken, structured around an adapted Kirkpatrick's hierarchy for assessing the educational impact of a teaching intervention (Fig. 1).

Figure 1.

Adapted Kirkpatrick hierarchy for assessing educational impact

Results

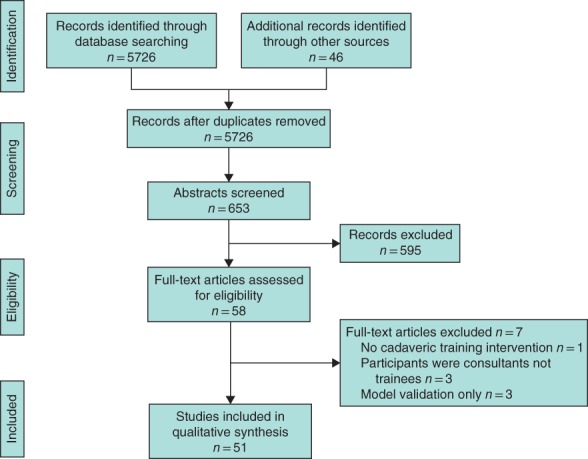

The initial search generated 5726 results, of which 5073 were clearly ineligible and rejected at title review (Fig. 2). A total of 653 abstracts were screened, 595 of which did not pass eligibility screening and were excluded. Fifty‐eight articles were accessed in full text and reviewed carefully; one study was rejected at this stage as there was no cadaveric simulation training intervention, three were rejected as the study participants were consultants not trainees, and three studies were rejected as there was cadaveric model validation only with no assessment of educational impact. Fifty‐one studies were included in the review, of which 47 were full‐text original research articles and four were conference posters. The main characteristics of studies, including OCEBM and mean MERSQI scores, are shown in Tables S2–S5 (supporting information)18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68.
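The screening counts above can be checked for internal consistency with a few lines of Python. The numbers are those reported in the text; the variable names are illustrative only.

```python
# Counts reported in the text for each stage of the PRISMA flow.
identified = 5726
excluded_at_title = 5073
excluded_at_abstract = 595
excluded_at_full_text = {
    "no cadaveric simulation training intervention": 1,
    "participants were consultants, not trainees": 3,
    "cadaveric model validation only": 3,
}

# Each stage is the previous stage minus the records excluded at it.
abstracts_screened = identified - excluded_at_title
full_texts_assessed = abstracts_screened - excluded_at_abstract
included = full_texts_assessed - sum(excluded_at_full_text.values())

print(abstracts_screened, full_texts_assessed, included)  # 653 58 51
```

The final figure also matches the split of included studies into 47 full‐text articles and four conference posters.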

Figure 2.

PRISMA diagram of included studies

Study design and setting

Eight studies were RCTs, six were comparative cohort studies, and 37 were non‐comparative cohort studies.

The majority of studies were from the USA (35 studies) and the UK (8), with the remainder from Canada (4), Australia (2) and one each from Germany and Japan. All studies except one33 were delivered at a single centre.

Participants

The number of participants in the included studies ranged from three to 390, totalling 2002 individual participants across 69 cadaveric training interventions and spanning the full range of surgical training grades.

Surgical specialty

In total, 12 surgical specialties and subspecialties were included (Tables S2–S5). Most studies were within general surgery (14), trauma and orthopaedic surgery (9) and neurosurgery (7). All studies were single‐specialty.

Study quality

The mean MERSQI score was 9·4 (range 5–14). In terms of level of evidence, only two of 51 studies were OCEBM level 1b (RCT of good quality and adequate sample size with a power calculation), six studies were OCEBM 2a (RCT of reasonable quality and/or of inadequate sample size), six were OCEBM 2b (parallel cohort study), and 37 were OCEBM level 3 (non‐randomized, non‐comparative trials, descriptive research).

A linear relationship was observed between Kirkpatrick level and mean MERSQI score: studies that measured higher levels of educational impact tended to have more robust methodology.

Measurement of educational impact

The educational impact of the training intervention was assessed using objective measures in 29 of the 51 included studies, and using subjective measures only in the other 22 studies. Sixteen of the 29 studies that used objective outcome measures attempted to measure skill transfer after training.

Level 1: Reaction

Twenty‐two of the 51 studies measured the educational impact of a cadaveric training intervention using subjective measures of learner reaction/opinion. One18 of these studies used a comparative cohort design, comparing cadaveric‐trained with virtual reality‐trained participants (OCEBM level 2b), and the remaining 21 studies19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39 were descriptive research studies using non‐randomized, non‐comparative methods (OCEBM level 3). Two19, 29 of the Kirkpatrick level 1 studies attempted to measure skill transfer following the cadaveric training intervention. All 22 studies used participant questionnaires to assess learner reaction; most of these were purpose‐designed38 and had not been formally validated. The outcome measures included learner reaction with respect to simulation fidelity, learner opinion on the usefulness of the training, and change in operative confidence and self‐perceived competency after the training. All level 1 studies reported a positive effect of the cadaveric simulation training as measured by learner reaction/opinion.

Level 2: Learning

Five studies assessed the educational impact of the cadaveric training intervention by measuring change in learner knowledge. One40 of these studies was an RCT comparing cadaveric simulation training with a low‐fidelity bench‐top simulator, one41 was a cohort study comparing learning in cadaveric‐trained participants with those who received didactic teaching materials only, and three42, 43, 44 were non‐comparative cohort studies. Three studies40, 43, 44 used procedural knowledge scores as the primary outcome measure, and two41, 42 used viva voce and oral checklist examinations.

Cadaveric simulation training made no difference to the postintervention test scores in the study of AlJamal and colleagues40, but a significant improvement in overall examination scores was seen in the cadaveric‐trained group by Sharma and co‐workers41. Significant improvement in post‐test knowledge scores was reported in the three non‐comparative studies42, 43, 44 following cadaveric training.

Level 3: Behaviour

Twenty‐three studies assessed the educational impact of a cadaveric training intervention by attempting to measure a change in learner behaviour. Objective assessment methods of learner behaviour were highly variable, and included operational metrics (such as procedure time, error rate, hand motion analysis, path length) and final product analysis. Various score‐based methods were also used, including procedure scores, global rating scale (GRS), OSATS (Objective Structured Assessment of Technical Skills in Surgery) and the GOALS (Global Operative Assessment of Laparoscopic Skills) scale. Seven45, 46, 47, 48, 49, 50, 51 of the 23 studies were RCTs and the other 16 were cohort studies52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67. Of the seven RCTs, three45, 46, 47 compared cadaveric simulation with no simulation training, and four48, 49, 50, 51 compared cadaveric simulation with low‐fidelity simulation.

Compared with no simulation training, cadaveric simulation‐trained learners showed significant improvement in most of the tested skill domains45, 46, 47. When comparing behaviour change after training in low‐fidelity simulation‐trained and cadaveric simulation‐trained learners, the results were mixed. Camp et al.51 reported that cadaveric training was superior to virtual reality (VR) when teaching knee arthroscopy, with greater improvement in procedural rating scores and reduced procedure time seen in the cadaveric‐trained compared with the VR‐trained group. Sidhu and colleagues49 reported that cadaveric training was superior to a bench‐top simulator for teaching graft‐to‐arterial anastomosis, as measured by a task‐based checklist (TBC), GRS and final product analysis (FPA). A greater benefit of the cadaveric training was seen in the more junior study participants.

Conversely, Anastakis and co‐workers48 compared behaviour change in cadaveric‐trained, low‐fidelity bench model‐trained and written materials only‐trained groups of learners performing basic general surgical skills, measured by procedural checklist scores and GRS. They found that the bench‐ and cadaveric‐trained groups performed better than the written materials only group, and that performances of the cadaveric‐ and bench‐trained groups were equivalent. Gottschalk et al.50 compared the performance of cadaveric‐trained, low‐fidelity bench model‐trained and ‘no training’ groups at cervical lateral mass screw placement using FPA. They found that, although both the cadaveric‐ and bench‐trained groups outperformed the no training group, the bench‐trained group had greater improvement in performance.

Of the 16 cohort studies measuring change in learner behaviour, five were comparative in design. Three studies53, 54, 55 compared inexperienced versus experienced performance, one52 compared behaviour change in cadaveric simulation‐trained versus low‐fidelity simulation‐trained cohorts, and one56 compared within‐subject performance change after cadaveric simulation training. Eleven57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67 were non‐comparative descriptive studies.

The primary objective of the three studies comparing inexperienced and experienced performance was construct validation of the simulator and/or assessment tools used in the studies. Zirkle and colleagues53 found that, when performing cortical mastoidectomy in a cadaveric simulation setting, FPA did not correlate with trainee experience, but GRS and TBC scores did. Mednick and co‐workers55 also found that, when performing corneal rust ring removal, FPA did not correlate with trainee experience, although procedure time did. Mackenzie et al.54 compared the scores of cadaveric‐trained, inexperienced learners before the intervention, shortly after it (within 4 weeks) and at a delayed time point (12–18 months) with experienced ‘expert’ performance when undertaking lower‐extremity vascular exposure, repair and fasciotomy. The outcome measures were TBC, GRS, error frequency and procedure time. The results showed that experienced performance was significantly better at all time points, that performance amongst the inexperienced group was highly variable, and that evidence of skill retention was seen at 18 months postintervention.

When comparing cadaveric simulation‐trained and low‐fidelity (VR) simulation‐trained cohorts performing laparoscopic sigmoid colectomy, LeBlanc et al.52 reported that technical skills scores were better in the low‐fidelity group.

Of the 11 non‐comparative descriptive studies, all reported improvement in trainee performance after cadaveric simulation training, using a variety of outcome measures such as FPA, GRS and operational metrics.

Level 4: Objective measurement of educational impact by change in patient outcome

Only one68 of the 51 studies included in this review assessed the impact of the cadaveric training intervention on real‐world patient outcomes. Martin and colleagues68 measured the impact of cadaveric training on the real‐world performance of ‘core’ invasive skills (endotracheal tube insertion, chest tube insertion and venous cut‐down) by eight surgical trainees during the first 3 months of a trauma rotation. The complication rate for all skills decreased significantly immediately and at 3 weeks after instruction (P < 0·02). Initial trauma resuscitation time after training decreased from approximately 25 to 10 min in 80 patients treated by the participants68. The authors concluded that trainees' skills improve rapidly with competency‐based instruction (CBI), skills learnt through CBI in the laboratory can be translated to and sustained in the clinical setting, and CBI yields competent trainees who perform skills rapidly and with minimal complications.

Cadaveric models used for simulation training

A wide variety of cadaveric models were used in the included studies. Three studies26, 35, 67 used innovative techniques to perfuse or reconstitute cadaveric material for training purposes, to improve the fidelity of the simulation. These studies were all in the field of neurosurgery, and involved cannulation of the great vessels of the neck to allow pulsatile perfusion of cadaveric heads with an artificial blood substitute. All reported very high learner satisfaction with the models. They also recognized that live reconstitution offers an opportunity to overcome a common criticism35, 67 of conventional, non‐perfused cadaveric material: because it does not bleed, its simulation fidelity is limited when teaching procedures in which bleeding is a potential major consequence.

Fresh cadavers were used in 12 of the reviewed studies20, 23, 28, 33, 37, 43, 48, 52, 54, 55, 65, 68. These offer the most authentic tissue‐handling fidelity69, but have the significant disadvantage of rapid deterioration, and therefore a short time‐window for their potential use. Use of fresh cadavers for simulation training relies on a regular, local system of body donation bequests, as they are typically used within 48 h of the donor's death, and certainly no more than 7 days later, which places logistical and infrastructure challenges on training providers.

Fresh‐frozen cadavers, used in 12 of the studies18, 21, 27, 30, 31, 32, 39, 42, 45, 46, 56, 57, have gained popularity due to their versatility. The cadavers are non‐exsanguinated, washed with antiseptic soap, and frozen to −20°C within 1 week of procurement70. Typically, around 3 days before use they are gradually thawed at room temperature, retaining the realistic tissue‐handling characteristics that are important for high‐fidelity simulation. Fresh‐frozen cadavers have the great advantage of being able to be refrozen and thawed at a later date, permitting reuse across multiple training interventions and thus maximizing potential use and cost‐efficiency70.

Soft‐fix Thiel embalming techniques were used in two studies34, 58. This preservation method aims to retain realistic tissue‐handling characteristics and allow prolonged use of each specimen, while avoiding the occupational and environmental health risks associated with exposure to formaldehyde71. Organs and tissues retain their flexibility, and the colour of the tissue remains similar to that seen in vivo.

Only one study41 used traditional formalin‐fixed cadavers. Formalin has the advantages of being relatively inexpensive and widely available, with a long history of use in preserving cadavers for the purposes of teaching anatomy71. It does, however, lead to changes in the colour, strength and tissue‐handling characteristics of the cadaveric material72, which may limit its usefulness in surgical training. The study by Sharma et al.41 did not evaluate the fidelity of the simulation or discuss the rationale or impact on the educational value of the training as a result of using formalin‐fixed material. Twenty studies provided no information on the type of cadaveric material used in the training intervention.

Almost half of the included studies (22 of 51, 43 per cent) assessed the impact of the cadaveric training using subjective measures only, representing the lowest level of impact in educational research (Kirkpatrick level 1). The second most prevalent category was studies measuring the impact of the training intervention by learner behaviour change (23 of 51, 45 per cent) (Kirkpatrick level 3). Of these, seven were RCTs and 16 were cohort studies. Of the 23 studies assessing behaviour change following cadaveric training, only one65 measured behaviour change in the workplace; the rest measured behaviour change in the simulation laboratory. Only one68 of the 51 studies actually measured a change in patient outcome as a result of the cadaveric training intervention; this is the highest level of impact assessment in educational research (Kirkpatrick level 4).
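The distribution of studies across the adapted Kirkpatrick levels, and the percentages quoted above, can be reproduced with a short tally. The counts are those reported in the Results; the dictionary labels are illustrative only.

```python
# Number of included studies at each adapted Kirkpatrick level, as reported in the Results.
studies_by_level = {
    "1: reaction (subjective only)": 22,
    "2: learning (knowledge)": 5,
    "3: behaviour": 23,
    "4: patient outcome": 1,
}

total = sum(studies_by_level.values())
assert total == 51  # should equal the number of included studies

for level, n in studies_by_level.items():
    # Percentages rounded to whole numbers, as in the text.
    print(f"Level {level}: {n}/{total} = {100 * n / total:.0f} per cent")
```

This prints 43, 10, 45 and 2 per cent for levels 1 to 4 respectively, matching the 43 and 45 per cent figures given for levels 1 and 3.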

Discussion

The objective of this systematic review was to describe and evaluate evidence for the current use of cadaveric simulation in postgraduate surgical training. Fifty‐one studies involving 2002 surgical trainees across 69 cadaveric training interventions were included. Although there was research activity encompassing the breadth of surgical specialties, most studies were within general surgery (14), trauma and orthopaedic surgery (9) and neurosurgery (7). The majority were conducted in the USA (35) and UK (8). A wide range of methodology was used. Eight of 51 studies were RCTs (OCEBM level 1b and 2a), six were parallel cohort studies (OCEBM level 2b) and 37 were non‐comparative descriptive studies (OCEBM level 3).

There is evidence from three RCTs45, 46, 47 that cadaveric simulation training is superior to no training, yet the important question remains whether it is superior to low‐fidelity simulation training. This is of interest because cadaveric simulation training is expensive, and therefore needs to have demonstrable superiority over less expensive alternatives. The four RCTs48, 49, 50, 51 in this area revealed a mixed picture: two49, 51 showed superiority of cadaveric simulation training, one48 showed equivalence with low‐fidelity bench‐model training, and one50 showed that cadaveric simulation training was inferior to the bench‐model alternative.

The mean overall MERSQI score correlated well with the Kirkpatrick level: the higher the level of impact measured, the better the study methodology. Previous predictive validity work17 has shown that a MERSQI score of 10·7 or above indicates a methodologically strong study that is likely to be accepted for publication. Some 67 per cent of the studies in this review had a MERSQI score below 10·7, so the majority of the included studies could be considered to have weak methodology. Methodological problems noted amongst the included studies were: predominance of single‐site studies, lack of randomization, lack of a comparator group, small sample sizes and underpowering, inadequate or absent reporting of descriptive statistics, overreliance on single‐group designs, and predominance of pretest/post‐test assessment strategies to determine the impact of the training intervention, which can overestimate the observed effect size73.
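The 10·7 cut‐off can be expressed as a simple classification rule. The sketch below is illustrative only: the threshold is the one cited above, but the function names and the per‐study scores are hypothetical values invented for the example, not data from this review.

```python
WEAK_THRESHOLD = 10.7  # scores below this cut-off are treated as methodologically weak


def is_weak(score: float) -> bool:
    """Classify a single study's mean MERSQI score against the cut-off."""
    return score < WEAK_THRESHOLD


def proportion_weak(scores: list[float]) -> float:
    """Fraction of studies whose score falls below the cut-off."""
    if not scores:
        raise ValueError("no scores supplied")
    return sum(is_weak(s) for s in scores) / len(scores)


# Hypothetical per-study scores, for illustration only (not data from this review).
example_scores = [9.4, 10.7, 8.0, 12.5, 10.65]
print(f"{100 * proportion_weak(example_scores):.0f} per cent weak")
```

A score of exactly 10·7 counts as strong under this rule, matching the "10·7 or above" wording. For the 51 studies in this review, a proportion of 67 per cent corresponds to roughly 34 studies (34/51 ≈ 66·7 per cent).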

Several studies reported mixed‐modality training interventions, making assessment of the cadaveric component of the training in isolation impossible. There was also inadequate or absent description of the cadaveric model used in more than one‐third of the studies, which renders results pertaining to the face and content validity of training impossible to assess.

Half of the studies were published in the last 3 years, reflecting the recent explosion in popularity of cadaveric simulation training. Despite the clear attraction of cadaveric simulation training as measured by subjective means, there remains a dearth of evidence that skills learnt in the cadaveric laboratory are retained and transferred to the operating theatre. The major ongoing challenge within educational research is demonstrating effective, sustained changes in learner behaviour, and improved patient outcome following a training intervention73. These challenges are particularly acute in the field of cadaveric simulation because of the cost and infrastructure demands, and there is presently little evidence that surgical educators are rising to meet this challenge.

This review has shown that there is an abundance of relatively low‐quality evidence indicating that cadaveric simulation may induce short‐term skill acquisition as measured by objective means. Adequately powered studies are needed to show whether skills are retained and transferable into the operating theatre before major investment in cadaveric simulation for surgical training can be recommended.

Disclosure

The authors declare no conflict of interest.

Supporting information

Table S1 MEDLINE search strategy

Table S2 Kirkpatrick Level 1: Studies that subjectively measure the impact of the training intervention by learner opinion

Table S3 Kirkpatrick Level 2: Studies that objectively measure the impact of the training intervention by learner knowledge

Table S4 Kirkpatrick Level 3: Studies that objectively measure the impact of the training intervention by change in learner behaviour

Table S5 Kirkpatrick Level 4: Studies that objectively measure the impact of the training intervention by change in patient outcome

References

- 1. Gilbody J, Prasthofer AW, Ho K, Costa ML. The use and effectiveness of cadaveric workshops in higher surgical training: a systematic review. Ann R Coll Surg Engl 2011; 93: 347–352.

- 2. Pickersgill T. The European working time directive for doctors in training. BMJ 2001; 323: 1266.

- 3. House J. Calling time on doctors' working hours. Lancet 2009; 373: 2011–2012.

- 4. UK Shape of Training Steering Group (UKSTSG). Shape of Training: Report from the UK Shape of Training Steering Group (UKSTSG); 2017. https://www.shapeoftraining.co.uk/static/documents/content/Shape_of_Training_Final_SCT0417353814.pdf [accessed 1 December 2018].

- 5. Reznick RK, MacRae H. Teaching surgical skills – changes in the wind. N Engl J Med 2006; 355: 2664–2669.

- 6. Carey JN, Minneti M, Leland HA, Demetriades D, Talving P. Perfused fresh cadavers: method for application to surgical simulation. Am J Surg 2015; 210: 179–187.

- 7. Sugand K, Abrahams P, Khurana A. The anatomy of anatomy: a review for its modernisation. Anat Sci Educ 2010; 3: 83–93.

- 8. Curran I. A Framework for Technology Enhanced Learning. Department of Health: London, 2011.

- 9. Hughes JL, Katsogridakis E, Junaid‐Siddiqi A, Pollard JS, Bedford JD, Coe PO et al. The development of cadaveric simulation for core surgical trainees. Bull Roy Coll Surg Engl 2018; 101: 38–43.

- 10. Shamseer L, Moher D, Clarke M, Ghersi D, Liberati A, Petticrew M et al.; PRISMA‐P Group. Preferred reporting items for systematic review and meta‐analysis protocols (PRISMA‐P) 2015: elaboration and explanation. BMJ 2015; 350: g7647.

- 11. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP et al. The PRISMA statement for reporting systematic reviews and meta‐analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med 2009; 151: W‐65–W‐94.

- 12. National Institute for Health Research. PROSPERO: International Prospective Register of Systematic Reviews. https://www.crd.york.ac.uk/prospero/ [accessed 14 December 2018].

- 13. Carter FJ, Schijven MP, Aggarwal R, Grantcharov T, Francis NK, Hanna GB et al. Consensus guidelines for validation of virtual reality surgical simulators. Surg Endosc 2005; 19: 1523–1532.

- 14. Morgan M, Aydin A, Salih A, Robati S, Ahmed K. Current status of simulation‐based training tools in orthopedic surgery: a systematic review. J Surg Educ 2017; 74: 698–716. [DOI] [PubMed] [Google Scholar]

- 15. Cook DA, Reed DA. Appraising the quality of medical education research methods: the medical education research study quality instrument and the Newcastle–Ottawa Scale – Education. Acad Med 2015; 90: 1067–1076. [DOI] [PubMed] [Google Scholar]

- 16. Smith RP, Learman LA. A plea for MERSQI: the medical education research study quality instrument. Obstet Gynecol 2017; 130: 686–690. [DOI] [PubMed] [Google Scholar]

- 17. Reed DA, Beckman TJ, Wright SM, Levine RB, Kern DE, Cook DA. Predictive validity evidence for medical education research study quality instrument scores: quality of submissions to JGIM's medical education special issue. J Gen Intern Med 2008; 23: 903–907. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Sharma M, Horgan A. Comparison of fresh‐frozen cadaver and high‐fidelity virtual reality simulator as methods of laparoscopic training. World J Surg 2012; 36: 1732–1737. [DOI] [PubMed] [Google Scholar]

- 19. Dunnington GL. A model for teaching sentinel lymph node mapping and excision and axillary lymph node dissection. J Am Coll Surg 2003; 197: 119–121.

- 20. Gunst M, O'Keeffe T, Hollett L, Hamill M, Gentilello LM, Frankel H et al. Trauma operative skills in the era of nonoperative management: the trauma exposure course (TEC). J Trauma 2009; 67: 1091–1096.

- 21. Reed AB, Crafton C, Giglia JS, Hutto JD. Back to basics: use of fresh cadavers in vascular surgery training. Surgery 2009; 146: 757–763.

- 22. Lewis CE, Peacock WJ, Tillou A, Hines OJ, Hiatt JR. A novel cadaver‐based educational program in general surgery training. J Surg Educ 2013; 69: 693–698.

- 23. Sheckter CC, Kane JT, Minneti M, Garner W, Sullivan M, Talving P et al. Incorporation of fresh tissue surgical simulation into plastic surgery education: maximizing extraclinical surgical experience. J Surg Educ 2013; 70: 466–474.

- 24. Gasco J, Holbrook TJ, Patel A, Smith A, Paulson D, Muns A et al. Neurosurgery simulation in residency training: feasibility, cost, and educational benefit. Neurosurgery 2013; 73: 39–45.

- 25. Jansen S, Cowie M, Linehan J, Hamdorf JM. Fresh frozen cadaver workshops for advanced vascular surgical training. ANZ J Surg 2014; 84: 877–880.

- 26. Pham M, Kale A, Marquez Y, Winer J, Lee B, Harris B et al. A perfusion‐based human cadaveric model for management of carotid artery injury during endoscopic endonasal skull base surgery. J Neurol Surg B Skull Base 2014; 75: 309–313.

- 27. Ahmed K, Aydin A, Dasgupta P, Khan MS, McCabe JE. A novel cadaveric simulation program in urology. J Surg Educ 2015; 72: 556–565.

- 28. Aydin A, Ahmed K, Khan MS, Dasgupta P, McCabe J. The role of human cadaveric procedural simulation in urology training. J Urol 2015; 193: e273.

- 29. Liu JK, Kshettry VR, Recinos PF, Kamian K, Schlenk RP, Benzel EC. Establishing a surgical skills laboratory and dissection curriculum for neurosurgical residency training. J Neurosurg 2016; 123: 1331–1338.

- 30. Clark MA, Yoo SS, Kundu RV. Nail surgery techniques: a single center survey study on the effect of a cadaveric hand practicum in dermatology resident education. Dermatol Surg 2016; 42: 696–698.

- 31. Hanu‐Cernat L, Thanabalan N, Alali S, Virdi M. Effectiveness of cadaveric surgical simulation as perceived by participating OMFS trainees. Br J Oral Maxillofac Surg 2016; 54: e126.

- 32. Calio BP, Kepler CK, Koerner JD, Rihn JA, Millhouse P, Radcliff KE. Outcome of a resident spine surgical skills training program. Clin Spine Surg 2017; 30: E1126–E1129.

- 33. Lorenz R, Stechemesser B, Reinpold W, Fortelny R, Mayer F, Schroder W et al. Development of a standardized curriculum concept for continuing training in hernia surgery: German Hernia School. Hernia 2017; 21: 153–162.

- 34. Nobuoka D, Yagi T, Kondo Y, Umeda Y, Yoshida R, Kuise T et al. The utility of cadaver‐based surgical training in hepatobiliary and pancreatic surgery. J Hepatobiliary Pancreat Sci 2017; 24: A338.

- 35. Pacca P, Jhawar SS, Seclen DV, Wang E, Snyderman C, Gardner PA et al. ‘Live cadaver’ model for internal carotid artery injury simulation in endoscopic endonasal skull base surgery. Oper Neurosurg (Hagerstown) 2017; 13: 732–738.

- 36. Takayesu JK, Peak D, Stearns D. Cadaver‐based training is superior to simulation training for cricothyrotomy and tube thoracostomy. Intern Emerg Med 2017; 12: 99–102.

- 37. Weber EL, Leland HA, Azadgoli B, Minneti M, Carey JN. Preoperative surgical rehearsal using cadaveric fresh tissue surgical simulation increases resident operative confidence. Ann Transl Med 2017; 5: 302.

- 38. Bertolo R, Garisto J, Dagenais J, Sagalovich D, Kaouk JH. Single session of robotic human cadaver training: the immediate impact on urology residents in a teaching hospital. J Laparoendosc Adv Surg Tech A 2018; 28: 1157–1162.

- 39. Chouari TAM, Lindsay K, Bradshaw E, Parson S, Watson L, Ahmed J et al. An enhanced fresh cadaveric model for reconstructive microsurgery training. Eur J Plast Surg 2018; 41: 439–446.

- 40. AlJamal Y, Buckarma E, Ruparel R, Allen S, Farley D. Cadaveric dissection vs homemade model: what is the best way to teach endoscopic totally extraperitoneal inguinal hernia repair? J Surg Educ 2018; 75: 787–791.

- 41. Sharma G, Aycart MA, Najjar PA, van Houten T, Smink DS, Askari R et al. A cadaveric procedural anatomy course enhances operative competence. J Surg Res 2016; 201: 22–28.

- 42. Mitchell EL, Sevdalis N, Arora S, Azarbal AF, Liem TK, Landry GJ et al. A fresh cadaver laboratory to conceptualize troublesome anatomic relationships in vascular surgery. J Vasc Surg 2012; 55: 1187–1194.

- 43. Robinson WP, Doucet DR, Simons JP, Wyman A, Aiello FA, Arous E et al. An intensive vascular surgical skills and simulation course for vascular trainees improves procedural knowledge and self‐rated procedural competence. J Vasc Surg 2017; 65: 907–915.e3.

- 44. Hazan E, Torbeck R, Connolly D, Wang JV, Griffin T, Keller M et al. Cadaveric simulation for improving surgical training in dermatology. Dermatol Online J 2018; 24: 15.

- 45. Sharma M, Macafee D, Horgan AF. Basic laparoscopic skills training using fresh frozen cadaver: a randomized controlled trial. Am J Surg 2013; 206: 23–31.

- 46. Sundar SJ, Healy AT, Kshettry VR, Mroz TE, Schlenk R, Benzel EC. A pilot study of the utility of a laboratory‐based spinal fixation training program for neurosurgical residents. J Neurosurg Spine 2016; 24: 850–856.

- 47. Chong W, Downing K, Leegant A, Banks E, Fridman D, Downie S. Resident knowledge, surgical skill, and confidence in transobturator vaginal tape placement: the value of a cadaver laboratory. Female Pelvic Med Reconstr Surg 2017; 23: 392–400.

- 48. Anastakis DJ, Regehr G, Reznick RK, Cusimano M, Murnaghan J, Brown M et al. Assessment of technical skills transfer from the bench training model to the human model. Am J Surg 1999; 177: 167–170.

- 49. Sidhu RS, Park J, Brydges R, MacRae HM, Dubrowski A. Laboratory‐based vascular anastomosis training: a randomized controlled trial evaluating the effects of bench model fidelity and level of training on skill acquisition. J Vasc Surg 1999; 45: 343–349.

- 50. Gottschalk MB, Yoon ST, Park DK, Rhee JM, Mitchell PM. Surgical training using three‐dimensional simulation in placement of cervical lateral mass screws: a blinded randomized control trial. Spine J 2015; 15: 168–175.

- 51. Camp CL, Krych AJ, Stuart MJ, Regnier TD, Mills KM, Turner NS. Improving resident performance in knee arthroscopy: a prospective value assessment of simulators and cadaveric skills laboratories. J Bone Joint Surg Am 2016; 98: 220–225.

- 52. LeBlanc F, Champagne BJ, Augestad KM, Neary PC, Senagore AJ, Ellis CN et al. A comparison of human cadaver and augmented reality simulator models for straight laparoscopic colorectal skills acquisition training. J Am Coll Surg 2010; 211: 250–255.

- 53. Zirkle M, Taplin MA, Anthony R, Dubrowski A. Objective assessment of temporal bone drilling skills. Ann Otol Rhinol Laryngol 2007; 116: 793–798.

- 54. Mackenzie CF, Garofalo E, Puche A, Chen H, Pugh K, Shackelford S et al. Performance of vascular exposure and fasciotomy among surgical residents before and after training compared with experts. JAMA Surg 2017; 152: 1–8.

- 55. Mednick Z, Tabanfar R, Alexander A, Simpson S, Baxter S. Creation and validation of a simulator for corneal rust ring removal. Can J Ophthalmol 2017; 52: 447–452.

- 56. Wong K, Stewart F. Competency‐based training of basic trainees using human cadavers. ANZ J Surg 2004; 74: 639–642.

- 57. Rowland EB, Kleinert JM. Endoscopic carpal‐tunnel release in cadavera. An investigation of the results of twelve surgeons with this training model. J Bone Joint Surg Am 1994; 76: 266–268.

- 58. Levine RL, Kives S, Cathey G, Blinchevsky A, Acland R, Thompson C et al. The use of lightly embalmed (fresh tissue) cadavers for resident laparoscopic training. J Minim Invasive Gynecol 2006; 13: 451–456.

- 59. Bergeson RK, Schwend RM, DeLucia T, Silva SR, Smith JE, Avilucea FR. How accurately do novice surgeons place thoracic pedicle screws with the free hand technique? Spine (Phila Pa 1976) 2008; 33: E501–E507.

- 60. Tortolani PJ, Moatz BW, Parks BG, Cunningham BW, Sefter J, Kretzer RM. Cadaver training module for teaching thoracic pedicle screw placement to residents. Orthopedics 2013; 36: e1128–e1133.

- 61. Mowry SE, Hansen MR. Resident participation in cadaveric temporal bone dissection correlates with improved performance on a standardized skill assessment instrument. Otol Neurotol 2014; 35: 77–83.

- 62. Awad Z, Tornari C, Ahmed S, Tolley NS. Construct validity of cadaveric temporal bones for training and assessment in mastoidectomy. Laryngoscope 2015; 125: 2376–2381.

- 63. Egle JP, Malladi SV, Gopinath N, Mittal VK. Simulation training improves resident performance in hand‐sewn vascular and bowel anastomoses. J Surg Educ 2015; 72: 291–296.

- 64. Nicandri GT, Cosgarea AJ, Hutchinson MR, Elkousy HA. Assessing improvement in diagnostic knee arthroscopic skill during the AAOS fundamentals of knee and shoulder arthroscopy course for orthopaedic residents. Orthop J Sports Med 2015; 3: 2325967115S0006.

- 65. Kim SC, Fisher JG, Delman KA, Hinman JM, Srinivasan JK. Cadaver‐based simulation increases resident confidence, initial exposure to fundamental techniques, and may augment operative autonomy. J Surg Educ 2016; 73: e33–e41.

- 66. Martin KD, Patterson DP, Cameron KL. Arthroscopic training courses improve trainee arthroscopy skills: a simulation‐based prospective trial. Arthroscopy 2016; 32: 2228–2232.

- 67. Ciporen JN, Lucke‐Wold B, Mendez G, Cameron WE, McCartney S. Endoscopic management of cavernous carotid surgical complications: evaluation of a simulated perfusion model. World Neurosurg 2017; 98: 388–396.

- 68. Martin M, Vashisht B, Frezza E, Ferone T, Lopez B, Pahuja M et al. Competency‐based instruction in critical invasive skills improves both resident performance and patient safety. Surgery 1998; 124: 313–317.

- 69. Benkhadra M, Faust A, Ladoire S, Trost O, Trouilloud P, Girard C et al. Comparison of fresh and Thiel's embalmed cadavers according to the suitability for ultrasound‐guided regional anesthesia of the cervical region. Surg Radiol Anat 2009; 31: 531–535.

- 70. Lloyd GM, Maxwell‐Armstrong C, Acheson AG. Fresh frozen cadavers: an under‐utilized resource in laparoscopic colorectal training in the United Kingdom. Colorectal Dis 2011; 13: e303–e304.

- 71. Eisma R, Lamb C, Soames RW. From formalin to Thiel embalming: what changes? One anatomy department's experiences. Clin Anat 2013; 26: 564–571.

- 72. Giger U, Frésard I, Häfliger A, Bergmann M, Krähenbühl L. Laparoscopic training on Thiel human cadavers: a model to teach advanced laparoscopic procedures. Surg Endosc 2008; 22: 901–906.

- 73. Sullivan GM. Deconstructing quality in education research. J Grad Med Educ 2011; 3: 121–124.

Supplementary Materials

Table S1 MEDLINE search strategy

Table S2 Kirkpatrick Level 1: Studies that subjectively measure the impact of the training intervention by learner opinion

Table S3 Kirkpatrick Level 2: Studies that objectively measure the impact of the training intervention by learner knowledge

Table S4 Kirkpatrick Level 3: Studies that objectively measure the impact of the training intervention by change in learner behaviour

Table S5 Kirkpatrick Level 4: Studies that objectively measure the impact of the training intervention by change in patient outcome