Abstract

The field of basal cognition seeks to understand how adaptive, context-specific behavior occurs in non-neural biological systems. Embryogenesis and regeneration require plasticity in many tissue types to achieve structural and functional goals in diverse circumstances. Thus, advances in both evolutionary cell biology and regenerative medicine require an understanding of how non-neural tissues could process information. Neurons evolved from ancient cell types that used bioelectric signaling to perform computation. However, it has not been shown whether or how non-neural bioelectric cell networks can support computation. We generalize connectionist methods to non-neural tissue architectures, showing that a minimal non-neural Bio-Electric Network (BEN) model that utilizes the general principles of bioelectricity (electrodiffusion and gating) can compute. We characterize BEN behaviors ranging from elementary logic gates to pattern detectors, using both fixed and transient inputs to recapitulate various biological scenarios. We characterize the mechanisms of such networks using dynamical-systems and information-theory tools, demonstrating that logic can manifest in bidirectional, continuous, and relatively slow bioelectrical systems, complementing conventional neural-centric architectures. Our results reveal a variety of non-neural decision-making processes as manifestations of general cellular biophysical mechanisms and suggest novel bioengineering approaches to construct functional tissues for regenerative medicine and synthetic biology as well as new machine learning architectures.

Subject terms: Cognitive neuroscience, Computational science

Introduction

Biological systems have long served as an inspiration and a design challenge for the engineering of artificial intelligence and machine learning1,2, with a special focus on the brain3. However, many biological phenomena, ranging from maze solving by cells and slime molds to complex regulative morphogenesis and regeneration, can be viewed as processes involving information-processing and decision-making4, in the absence of a brain4–12. Memory, anticipation, and problem solving have been demonstrated in sperm, amoebae, yeast, and plants8–12, and these capabilities scaled with the emergence of the Metazoa. For example, when craniofacial anatomical features of tadpoles are scrambled into abnormal configurations, they normalize their aberrant positions over time to regain a correct frog face morphology and then cease remodeling13. Thus, cells and tissues functionally ascertain the difference between the current, incorrect craniofacial morphology and the frog’s native craniofacial morphology and undertake corrective movements to reduce the error. Similarly, the regeneration of entire limbs in animals such as salamanders can be understood as being driven by a ‘test-operate-text-exist’ model7, in which cells act to implement an invariant anatomical outcome from diverse starting conditions, and stop once this target morphology has been achieved7,14. This theme is reinforced by classical observations such as the fact that tails grafted onto the side of salamanders slowly remodel into limbs15, demonstrating the ability of tissue to ascertain its position within the whole, compare its organ-level anatomy with that dictated by the target morphology, and remodel toward that correct anatomical setpoint16.

Importantly, unicellular life forms and somatic cells of multicellular organisms were making flexible decisions based on inputs in their microenvironment long before neurons appeared11,17. Nerves may have speed-optimized ancient bioelectric processes that, since the time of bacterial biofilms18, were already exploited by evolution to implement memory, long-range coordination, and decision-making utilized for maintenance and construction of anatomical structures19. In multicellular organisms, these same functions are used to control large-scale patterning20–23. For example, bioelectric signals mediate important aspects of the long-range coordination that keep cells harnessed towards maintenance of a body-plan and away from tumorigenesis5. Stable bioelectric circuits also maintain the information needed for fragments of planaria to regenerate the correct number and distribution of heads and tails24,25, and spatial distributions of resting potentials that are critical for the correct formation of hearts26,27, eyes28, and brains29. Bioelectric signaling is involved at many scales, from decisions of stem cells as to when and how to differentiate30, to the control of size31 and regeneration of entire organs32,33. Transient inputs that shift the endogenous bioelectric network states into alternate stable modes can permanently change regenerative and developmental morphology34; because of this, mutations in ion channel genes are an important cause of human birth defects35,36, while modulation of bioelectric circuit state has been used to repair brain structure37. Thus, a number of important fields converge on the need to understand how tissues process information via bioelectric processes: evolutionary developmental biology (plasticity in cell behavior contributes to evolvability of body plans), regenerative medicine (induction of complex repair by modulating the patterns to which cells build), and synthetic bioengineering (the pursuit of novel synthetic ‘living machines’).

Decision-making often exploits some form of logic. The need to understand tissue functions as computation mediated by ancient biophysical mechanisms led us to seek a quantitative model of how non-neural networks could possess logical abilities like those commonly studied in brains. The simplest realizations of logic are known as “logic gates”, which are tiny circuits that perform elementary logic operations like AND, OR, NOT, etc. that comprise any modern digital computer. A unique feature of logic gates is that, although they are typically realized as electronic circuits, the underlying mathematical formulations have been used as coarse-grained models of neurons38,39, genes40,41, and even physiology12. Logic networks can be assembled to compute any computable function42, and thus can be valuable building blocks of intelligent information-processing systems.

Here, we show that non-neural bioelectric networks can compute logic functions, suggesting one way in which evolution can exploit biophysics for decision-making in cellular systems. This provides a new connection between non-neural physiology and a common kind of computational task, thus expanding the known capabilities of developmental bioelectricity. While defining necessary and sufficient conditions for “computation” is a profound question debated elsewhere43–46, logic gates are widely accepted as a non-controversial example of computation. Thus, we extend established connectionist approaches to a more general physiological setting and analyze them to reveal how ionic dynamics in non-neuronal cells could implement both simple and complex logic gates.

A New Bioelectric Network Model

To facilitate the analysis of dynamic, context-dependent biological processes (e.g., morphogenesis and remodeling) as cognitive tasks4, where networks of non-neural cells collectively make decisions, we constructed a minimal BioElectric Network (BEN) that comprises the simplest components and processes of bioelectrical signaling. BEN is inspired by a sophisticated and realistic model known as the Bioelectric Tissue Simulation Engine47 (BETSE), which has been used to show how bioelectric patterns can be created and sustained21,48–50 and how such a system might interact with genetic networks to give rise to morphological patterns51. Our goal was to define a generic, minimal biophysical system using realistic yet simple signaling dynamics, and study its computational properties in the absence of the specialized, highly-derived specifications that define neural networks. Thus, BEN is a simplified version of BETSE that is minimal enough to aid the investigation of the computational capabilities of a bioelectric system that have not yet been understood. The inputs and outputs of this network, representing the logic values, are represented by the bioelectric states of the cells (resting potential or Vmem).

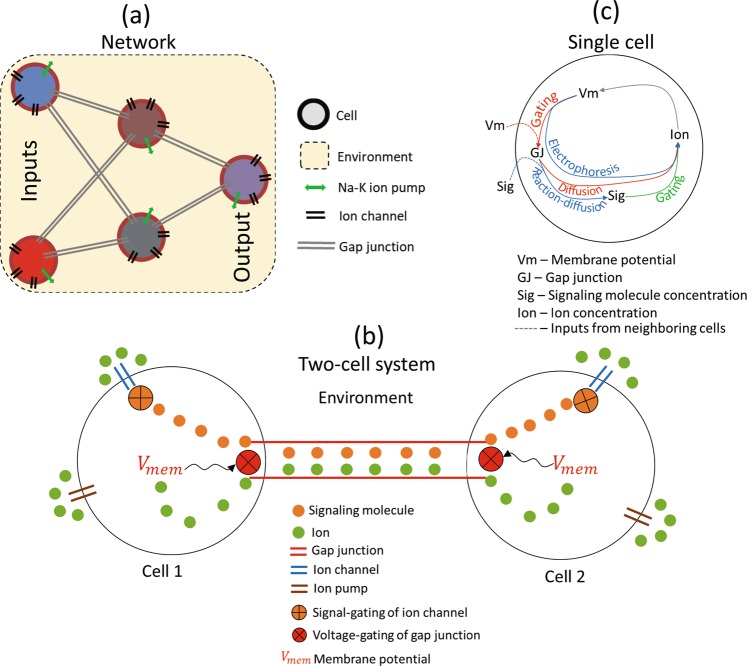

BEN is defined by a set of bioelectric components and processes that dictate their production, transport, and decay. BEN consists of two types of regions: an environment and a network of cells. A single cell in a BEN network consists of different types of proteins: ion channels and ion pumps. There are two type of molecules: ions (charged) and signaling molecules (generic uncharged molecules that may represent a ligand, a neurotransmitter, a secondary messenger, etc.). BEN contains three types of ions: sodium (Na+), potassium (K+), and chloride (Cl−). Furthermore, there are two types of ion channels (IC) that each selectively allow the passage of Na+ and K+ ions respectively between the cell and the environment; no such channel exists for Cl−. There is a single sodium-potassium ion pump that actively strives to maintain a non-zero membrane potential (Vmem). Two cells in a BEN network may be connected by an electrical synapse known as a “gap junction”52 (GJ), represented as an undirected edge in the network, which allows the passage of molecules between the cells. The process that governs the passage of ions through either the GJ or IC is called “electrodiffusion”, a combination of electrophoresis and regular diffusion, where the flux is driven by voltage gradients in the former and concentration gradients in the latter. Following the convention adopted in BETSE, we modeled electrodiffusion through GJs using the Nernst-Planck equation, and the transmembrane flux of ions through ion channels using the Goldman-Hodgkin-Katz flux equation47. However, since BEN is a networked system, where the processes occur at discrete points in space (the cells in Fig. 1a), we used simple discretized versions of the same equations. The flux of the signaling molecules in BEN is governed by a generic nonlinear reaction-diffusion process consisting of two layers of sigmoidal transformations. In the first transformation layer, the flux across each GJ is calculated using a sigmoidal transformation of the concentration difference of the signaling molecule. In the second layer, the transformed flux across each GJ is collectively transformed using a second sigmoid, constituting the net concentration change of the signaling molecule. These two layers minimally represent the multi-layered complexity prevalent in cell signaling networks53,54. For example, calcium (Ca2+) transport within a cell is mediated by clusters of inositol 1,4,5-triphosphate (IP3) receptors distributed inside the cell in a multi-layered fashion55–57. Moreover, such a two-layer transformation is also found in neuronal cells, where the first stage involves nonlinear transformations of local dendritic potentials, and a second stage involving a linear summation of the outputs from the first stage to determine the overall cellular response58–60. Thus, the two-layer signal transformation architecture of BEN is representative of the multi-layered signaling complexity characteristic of multiple cell types. Moreover, the dynamics of the signal may be viewed as a reaction-diffusion system; see the ‘Methods’ section for more details. Finally, the extracellular environment simulated in BEN includes the same three types of ions as the cells (Na+, K+, and Cl−), but initialized with different concentrations. We assume that the environment is effectively infinite in size, hence the ion concentrations there remain constant.

Figure 1.

Schematics of the BEN architecture and its constituents. (a) The general architecture of a BEN network; (b) A BEN network consisting of two cells; (c) The network of components (black) related by processes (blue, red and green) within a single cell, some of which receive inputs from adjacent cells. For example, the Vmem of a cell together with that of the adjacent cell gates the gap junction which in turn affects the concentrations of the ion and the signaling molecule, and the ion concentration ultimately determines the Vmem, closing the loop. This highlights the recurrent nature of the dynamics in BEN.

There are two types of gating mechanisms in BEN: chemical-gating of ion channels and voltage-gating of gap junctions. Chemical-gating of IC is a mechanism by which the permeability of an IC is modulated by the binding of a gating molecule (typically a ‘ligand’) to the channel, as a function of the concentration of the molecule51,61(Ch.31). Rather than the more conventional Hill function to model it51, we used a simpler sigmoid function that maps the concentration of the signaling molecule to the proportion of the maximum permeability of the IC. In BEN, a higher concentration of the signal tends to depolarize the cell, and lower concentrations hyperpolarize it. Voltage-gating of GJ is a mechanism by which the permeability of a GJ is modulated by the Vmem of the connected cells and also the difference between them (known as the “transjunctional voltage”)51,62,63. We adopted a sigmoidal transformation that maps the individual Vmem of the connected cells that are then averaged over to compute the net GJ permeability, in contrast to the more complex but similar Hill function based model adopted in BETSE51. In BEN, positive Vmem levels tend to make the GJ more permeable, and negative Vmem make them less permeable. The mathematical details of BEN are described in the ‘Methods’ section.

There are two types of learnable parameters in BEN: weight and bias, following the convention of artificial neural networks64 (ANN). The weight is associated with a GJ, and it helps modulate the flux of the signal as well as the voltage-gating of GJ. Negative weights tend to lower the signal concentration in a cell, and positive weights tend to increase it. With regards to voltage-gating, smaller weights diminish the effect of Vmem, and larger weights tend to magnify it. The bias is associated with a single cell that helps modulate signal flux: it specifies the threshold at which the signal concentration switches direction of change. By linking these parameters to the core features of BEN, we have assumed that they are not special to ANNs.

BEN networks have a layered architecture (Fig. 1a), a scheme that is widely adopted for ANNs64 but which also reflects the tissue layering of many different types of body structures. In this scheme, a network consists of multiple layers of cells, with different choices for the inter-layer and intra-layer connectivity. Some layers have clearly designated roles—for example, the first layer is the “input” and the last layer is the “output”. This follows the scheme of various biological systems with specific roles assigned to the layers. For example, the retina is the “sensory” layer that directly receives signals from the environment and sends it to the upstream inter-neuron layers that integrates the input65. The layered architecture is inspired by biological counterparts as diverse as the mammalian visual cortex1,2,65 and cellular signaling networks66, whose benefits include better organization and efficiency of information processing64 and prediction67.

A simulation of BEN proceeds as follows. The input layer cells are set to specific Vmem levels at the beginning, while all other cells are set at Vmem = 0. From that point onward, the Vmem levels of the network will dynamically change according to the network parameters, until the output settles at some Vmem. The input to the network is either fixed (clamped throughout a simulation) or transient (set at only the beginning of the simulation and then allowed to change). These two conditions represent the nature of biological information processing where the outcome may depend on the duration of the stimulus. For example, the induction of certain proteins in cellular signaling networks require long-duration input signals68,69; while a brief stimulus (progesterone) is sufficient to induce long-term regenerative response in Xenopus limb70, a brief exposure to a gap-junction blocker is sufficient to cause stochastic phenotypic shifts in planaria24, a transient invasion into a bacterial ecosystem can dramatically switch its character71 and transient alterations to Vmem cause permanent alterations to gene expression and phenotypic patterning in planaria72.

Results

The ability of somatic tissues outside the brain to compute is still controversial, in part because there is not a quantitative model available that demonstrates how basic operations of cognition could be implemented by generic cells. We demonstrate the logic-capabilities of BEN networks by constructing (1) small elementary logic gates; (2) larger “tissue-level” elementary logic gates; (3) compound logic gates composed from elementary gates; and (4) a pattern detector (showing how a complex regenerative response function can be implemented with these components) using standard machine learning methods. Below we show that BEN networks can indeed function as logic gates. We then analyze the behavior of a successfully trained BEN logic gate using the tools of information theory.

BEN networks can implement small elementary logic gates: AND, XOR

A logic gate is a circuit that computes a binary-valued output from binary-valued inputs, according to a set of rules. For example, the AND gate outputs a “HIGH” signal only if both inputs are “HIGH”, otherwise it outputs a “LOW”. The OR gate, on the other hand, outputs a “HIGH” signal if at least one of the inputs has a “HIGH” signal. Thus, a logic gate is defined by a “rule table” that maps an input configuration to a unique output. Many biological systems appear to implement logic functions to facilitate, for example, integration of the various inputs they receive from the environment with the required specificity54,73.

Here we demonstrate that BEN networks can function as logic gates. We used standard machine learning methods to identify the parameters of BEN networks that can perform a desired logical function. This discovery phase in the biological world can be implemented either by evolution (which tweaks the parameters on a phylogenetic time scale) or within the lifetime of a single animal by the dynamic adjustment of ion channel and gap junctional open states as a function of experience (plasticity)74.

To provide a proof-of-principle that somatic bioelectric networks can perform logical operations, we sought to identify BEN networks with specific behaviors. Since it is difficult to find the requisite parameters manually, we used machine learning to automatically discover suitable parameter values. We used “backpropagation” (BP) for that purpose. BP is a training method, often used in machine learning, that starts with a random network (defined with a random set of parameters) and gradually tweaks the parameters using gradient descent until the network performs the desired function. Since BEN networks are recurrent by definition (edges are bidirectional), we used “backpropagation through time” (BPTT) to train them; see ‘Methods’ section for more details. We do not claim that BP or BPTT is the method that organisms use to learn in the real world; they are just convenient tools that we used to discover parameters that illustrate the power of BEN networks (see Supplementary 3 for alternative training methods). Below, we show examples of BEN networks implementing the AND gate, and the more difficult XOR gate. First, we show the results of training for a set of 100 training runs for each gate. Then, we describe the behavior of the best gate in each set using time series and dynamical phase space illustrations.

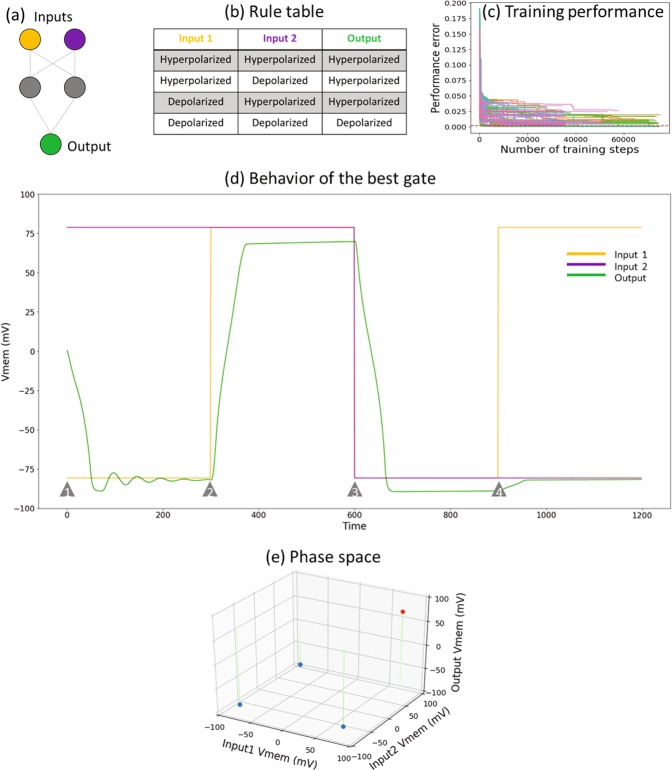

We first sought to determine if bioelectric networks can implement an AND gate, by training a small five-cell BEN network (Fig. 2a) to follow the AND rules that specifies the expected output for a given pair of inputs (Fig. 2b). We trained 100 instances of this network, each starting from different initial conditions of the parameters, discovering that some were indeed able to attain good performance, illustrating that BEN networks can perform the AND function (Fig. 2c). Out of the hundred networks we trained, seven achieved an error of 0.3 mV or less, while a total of 33 networks achieved an error of 1 mV or less. The behavior of the best AND gate we identified is illustrated below by way of time-series plots and phase space diagrams (Figs. 2d, 2e) by considering various input-output conditions.

Figure 2.

The AND gate. (a) A schematic of the AND logic gate and its rule table. The orange and purple cells are the inputs, and the green cell is the output; the grey cells are intermediate cells offering computational support. (b) Specification of the output Vmem for various combinations of input Vmem levels. This is known as the “truth table” in the Boolean logic literature, where the “hyperpolarized” state (around −80 mV) is indicated as “0”, “False” or “OFF” and the “depolarized” state (around +80 mV) as “1”, “True” or “ON”. This rule table essentially summarizes the mechanism of the BEN-based AND gate: the output is depolarized only when both of its inputs are depolarized; in Boolean logic terms the output is ON only when both inputs are ON. (c) Pareto front of training errors over time (one unit is equal to a single training epoch) for the AND gate. This plot depicts the “front” with the best errors achieved over time. This figure demonstrates that the training does indeed result in learning. (d) The behavior of the best AND gate shown in the form of time series of the input and output nodes of the gate, shown for all four input-output conditions generated in a random sequence. The red and blue lines represent the states of the two input nodes, and green represents the output. The grey triangles mark the time points at which the inputs are switched to a different state. For example, both inputs are hyperpolarized at time point (1), while at time point (2) the red input is depolarized. (e) The dynamical phase of the logic gate:a depiction of a set of trajectories in the input-output space, illustrated in a time-lapse style. This dynamical system has two attractors in the output space, highlighted in filled red (depolarized state) and blue (hyperpolarized state) circles. The trajectories look straight because the inputs are fixed, and only the output changes.

We next discovered a small five-cell BEN network that can function as an XOR gate by following the same training procedure as for the AND gate above (we report the parameter details in Supplementary 1). The rule table, training performances, behavior of the best XOR gate and the phase space diagram are shown below (Fig. 3). Of the fifty networks we trained, only one network achieved an error of 1 mV. Compared to the AND gate, clearly training the XOR gate is more difficult. This is consistent with the known difficulty of training any dynamical network to behave as an XOR function64, since it is a nonlinear function compared to AND, which is linear.

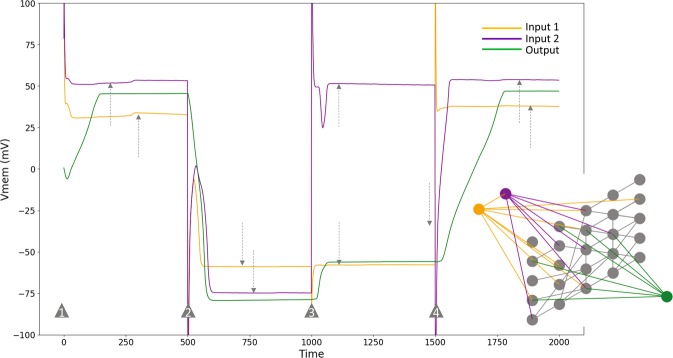

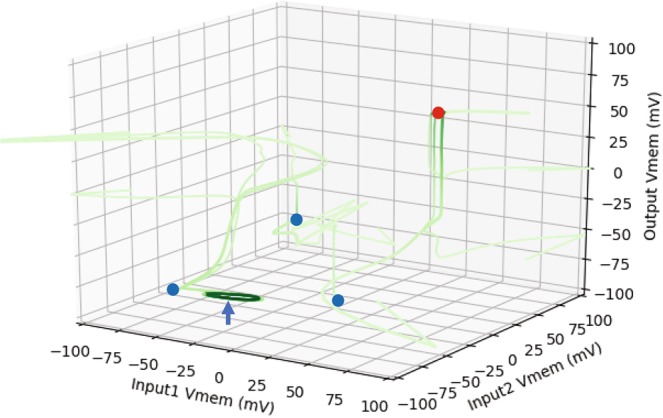

Figure 5.

The dynamical phase space of the best tissue-level AND gate. A depiction of a set of trajectories in the input-output space, illustrated in a time-lapse style. This dynamical system has two attractors in the output space, highlighted in filled blue (hyperpolarized state) and red (depolarized state) circles. As can be seen, there are three standard hyperpolarized attractors (fixed-point-like), and a fourth cyclic hyperpolarized attractor (marked with a blue arrow). Even though the cyclic attractor is in the appropriate region of the phase space, it was not actually required for during the training—it accidentally emerged. Furthermore, as opposed to the straight trajectories of the previous phase space diagrams, the trajectories maneuver freely here, and occasionally spill over the usual Vmem range which is about [−100,100] mV. This is because the inputs are transient and not fixed, hence they change states as well over time.

BEN networks can implement larger “tissue-level” elementary logic gates

We next asked whether larger networks, which contain more cells than strictly necessary, could still perform the logic functions. These larger networks are like tissues and organs, which can contain a range of diverse cells that communicate. Thus, we next describe a “tissue-level” AND gate. First, we show the results of training for a set of 100 training runs for each gate. Then, we describe the behavior of the best gate in each set, using time series and dynamical phase space illustrations.

We used a combination of genetic algorithm (GA) and BP for identifying this kind of circuit; the GA discovers the network structure (example structure in Fig. 4 inset) and BP finds the parameters of the network we sought. The best network was obtained at the end of 233 generations, which achieved an average error of about 0.6 mV. More details of the training are described in the ‘Methods’ section.

We conclude from the above results that it is indeed possible for large BEN circuits to compute simple logic functions, suggesting that this type of signaling can be carried out by developmental compartments with varying cell numbers.

BEN can implement a complex “tissue-level” logic gate: a pattern detector

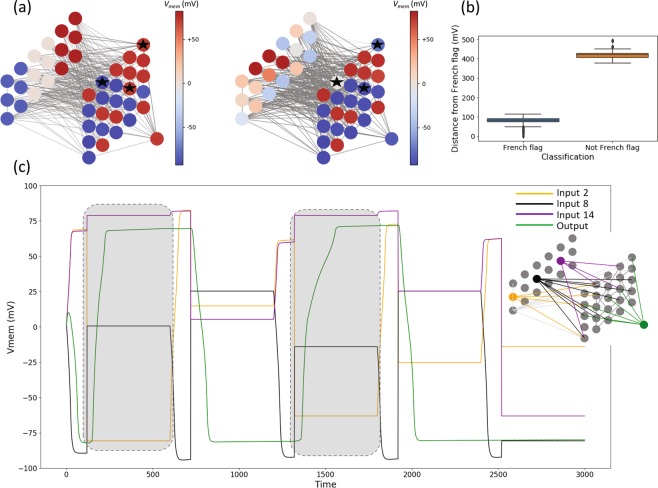

One of the most impressive computational tasks that tissues undertake is the set of decisions that enable large-scale regeneration of organs and appendages in some species19,75. Specifically, to repair after (unanticipated) injury and stop growth and remodeling when the anatomy has been corrected, cells need to make decisions about the physiological and geometric state of other tissues. Cells need to ascertain whether a large-scale morphology is correct or not, in order to regulate regenerative pathways or cease further change (errors in achieving morphostasis can manifest as cancer). Much work has gone into characterizing the physiological events that signal the binary event of “injury”, but it is now clear that even in the absence of trauma, some complex organ systems such as the craniofacial structures13,76 can begin drastic remodeling when the configuration is incorrect. Despite the advances in molecular biology that have identified genes necessary for this to occur, it is entirely unclear how cells recognize correct vs. incorrect patterns on a spatial scale much larger than themselves. Thus, we next sought to demonstrate that realistic biophysical mechanisms can implement computations which are sufficient to enable this crucial capability of living systems.

A pattern detector can be thought of as a complex (more than two inputs, thus non-elementary) AND gate, since its output is ON only when each of the inputs is at a specific desired state—in other words, only when all input conditions are satisfied. This is a more realistic setting that the tissue-level AND described in the previous section, since it has many more than two inputs (a tissue typically consists of many more than two cells). We identified, as proof-of-principle, a relatively large (44 cells) non-elementary logic gate that recognizes French-flag-like patterns; we used the French-flag as the pattern of interest due to its prominence in developmental biology as a simple morphogen gradient77. We define a French-flag pattern as a particular configuration of Vmem levels in the input layer (3 × 6): the leftmost two columns (band) are blue (hyperpolarized Vmem), the middle band is grey (intermediate Vmem), and the rightmost band is red (depolarized Vmem). This network recognizes the French-flag pattern and slightly noisy versions of it by expressing a depolarized Vmem in the output cell, while expressing a hyperpolarized Vmem in the output as a response to all other patterns.

The pattern detector is set up similar to the tissue-level AND gate. The main differences between the two are: (1) inputs are fixed in the pattern detector, as was the case for the small logic gates described in Section 4.1; (2) inputs are set after an initial time period during which the network is allowed to settle at its intrinsic “baseline” state (this captures the essence of biological systems that have intrinsic activity even in the absence of external stimuli); and (3) input patterns are generated randomly where two sets are generated, one consisting of the French-flag and noisy variants of it, and the other consisting fully randomized versions of the French-flag (details in the ‘Methods’ section). We used the combined GA-BP search-train method, as before, to find this network; see more details in the ‘Methods’ section. The best evolved pattern detector is the individual with the highest fitness score in the last generation of the GA, which was discovered relatively quickly at the end of 11 generations (three other networks achieved similar errors). Even though the pattern detector is larger than the tissue-level AND described above, it was easier to train than the latter. This is due to the advantage of the inputs being fixed in the case of the pattern detector but transient in the case of the tissue-level AND gate: the fixed inputs provide a constant supply of information that the network does not have to remember unlike the tissue-level AND gate.

The above search successfully discovered a successful French-flag detector. The behavior of this detector when it sees a French-flag pattern and a random pattern in its input layer is depicted in Fig. 6a: in the first case the output is depolarized (red), while in the second case it is hyperpolarized (blue), both as expected. Furthermore, this detector recognizes slightly distorted variants of the French-flag where the distance of a pattern from the French-flag is the Euclidean distance (Fig. 6b). We observe that input patterns up to a distance of about 150 mV from the French-flag are recognized as French-flag, while those at a distance of about 350 mV and above are classed as not French-flag. Finally, the detector also responds correctly to a sequence of randomly chosen input patterns (Fig. 6c), showing that the behavior of the network only depends on the state of the inputs and not on the rest of the network.

Figure 6.

The French-flag detector. (a) Snapshots of the equilibrium (final) network states (Vmem levels) in two detection scenarios. Left: input pattern is the French flag, and the output is depolarized (representing “correct” pattern). Right: input pattern is a highly distorted version of the French flag, and the output is hyperpolarized (representing “incorrect” pattern). Colors represent polarity levels of the cells in units of mV. Notice that the only three intermediate cells that differ in their states between the two cases are those marked with a black star, suggesting that the detector makes minimal use of information to distinguish between patterns. Also notice that the edges are thicker in the right than in the left, suggesting that the voltage-gating dynamics of the edges (representing gap junctions) play a crucial role. (b) The overall performance of the best evolved pattern detector, with data collected from a set of 1000 simulations: 100 parallel sets each with a random sequence of 10 simulations. As expected, the pattern detector classifies patterns similar to the French flag (a total of 500 sample inputs) as “French flag”, and those that are dissimilar (500 inputs) as “not French flag”. This suggests that the pattern detector is robust to noise to a sensible extent. The width of the classification boundary was set implicitly due to the way sample input patterns from the two classes were generated (details in the ‘Methods’ section). (c) The behavior of the best French-flag detector shown for a set of four representative cells (three inputs out of a total of eighteen and one output) for a random sequence of five patterns. Inset highlights the four nodes whose colors correspond with those in the time series. Highlighted in grey squares are the cases where a French-flag-like pattern is input for which the output is depolarized, as expected; for all other cases where a random pattern is shown, the output is hyperpolarized.

We conclude that BEN has in principle the ability to distinguish between patterns. The biological implication is that somatic tissues can in principle have the same ability, and thus be a part of much larger pattern regulation mechanisms.

BEN can implement compound logic gates

Biological processes are complex by nature. The rampant complexity is partly managed by nature by way of modularity78, in gene regulation79 and the brain80,81, for example, at multiple scales. Modularity is beneficial because the modules can be independently tinkered with for the purpose of large-scale outcomes79. Thus, it is important to understand whether bioelectric circuits can implement logic functions that are too complex to be described by a single gate but are actually combinations of elementary logic primitives.

Compound logic gates are compositions of modules of logic gates, and their biological equivalents underlie several processes including sporulation in B. subtilis and the neuronal dynamics of C. elegans82,83. They can also have pharmaceutical applications—for example, the control of bacterial invasion of tumor cells and diagnosis of cellular environments and automatic release of drugs82.

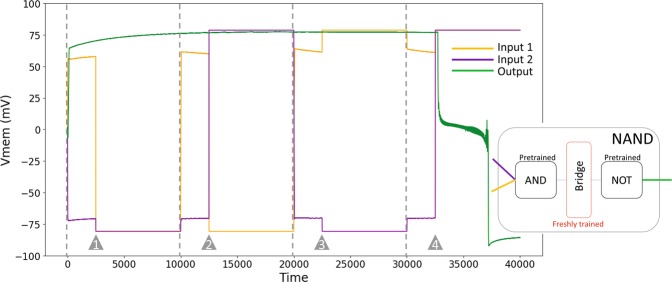

One way to design compound networks, in general, is to compose them from appropriate pre-designed modules. For example, a “NAND” gate can be constructed by combining the AND and NOT gates. Compound genetic circuits have been constructed in this fashion84,85, as well as designed de novo from scratch82. Here, we show how to construct compound logic gates in physiological networks by composing pre-trained BEN logic gate modules. Unlike genetic networks and neural networks, which are directed, BEN networks are bidirectional, due to the symmetric nature of gap junctions. Thus, unlike genetic networks, compound logic gates in BEN cannot be constructed simply by connecting the modules. Put more precisely, if the modules were simply connected together, then the output of one module will also receive signals from the input of the connected module. This could potentially result in the upstream module outputting the wrong state, and thus the downstream module receiving and outputting the wrong states as well. To mitigate this, we included a “bridge” layer that interfaces the modules and trained it so that the compound gate as a whole behaves as desired: the downstream module outputs the correct state for every set of inputs received by the upstream module. For more details on the architecture and training of the bridge, see Supplementary 4. Figure 7 shows an example of a successfully trained NAND gate by composing pre-trained AND and NOT gates.

Figure 7.

The behavior of a successfully trained NAND gate for a random sequence of four inputs. Orange and purple lines represent the activities of the inputs, while green represents the output. The vertical dashed lines mark the beginning of each trial in the sequence, however the inputs are applied, after an initial transient, at the time points marked by the grey triangles. Inset is a schematic of the architecture of the gate: pretrained AND and NOT gates are connected by a “bridge”, a single layer of cells, that is alone trained.

We conclude that it is possible to construct compound logic gates by composing pre-trained modules and training only an interface bridge connecting the modules. This method can in principle be extended to more complex circuits.

Discussion

Various forms of natural and artificial computing systems with unconventional mechanisms have been proposed86. Even the modern von Neumann computer architecture was inspired by the mechanisms of DNA transcription and translation87. Other examples include “liquid computers” that involve interactions of fluid streams and droplet flows to perform logic operations88; “chemical computers” that utilize the dynamics and equilibrium-behavior of chemical reactions89–93 for logic operations and learning94,95; DNA-based computers that utilize various forms of catalytic reactions such as ‘seesawing’ and ‘strand-displacement’ for pattern recognition96, protein-DNA interactions to implement logic functions97 and even for general-purpose computing98; gene regulatory networks that take advantage of the multistability of gene activity for learning99, memory100–102 and logic84,85,101,102; “bacterial computers” that use enzyme-based computing to implement logic gates103; electric current flow making use of Kirchhoff’s law for solving mazes104; and even “sandstone computers” that utilize natural erosion processes to implement logic gates105. BEN is an unconventional computing system, since it is a non-neural system like the examples above.

Our work does not require a commitment to the view that every biological question must be dealt with via a computational perspective, despite the fact that there is a robust body of new experimental work driven by the hypothesis that some tissues are indeed implementing processes we would recognize as memory, comparison, or measurement53,106–108. The key question we answer here is: are pre-neural physiological networks able, in principle, to perform simple computations such as logical operations? Prior to these results, it was not known, and many developmental biologists’ intuitions are that such networks cannot perform such functions - they are almost never considered for model-building. Our data provide the first proof-of-existence showing that the class of biological systems where a computational approach could be useful, such as evolved or synthetic regulative morphogenesis, needs to be expanded to include somatic bioelectric networks.

BEN is also a type of patterning system. Patterning is the ability of a system to generate, sustain, and recognize patterns in the states, whether physical, chemical, or biological, of the system. It is a widespread phenomenon reported in biological systems, including pattern recognition of pathogen molecules by cellular receptors109, axial patterning in planaria110, spatial patterning during neural patterning111, cell polarity112, genetic patterning in bacterial populations113 and voltage patterning in neural tubes49. Here we focus on bioelectric non-neural networks of patterning. Various non-neural network models of patterning have been proposed. Some examples include the “packet-routing” system that uses an internet-like intercellular messaging system114, auto-associative dynamical systems115, and cellular automata116 based systems that combine local and global communication to model pattern regeneration. Our laboratory has produced sophisticated non-neural bioelectric network models of tissue patterning, like the Bioelectric Tissue Simulation Engine (BETSE) that incorporates detailed biophysical mechanisms of ion channels and transport processes47,51,117, as well as relatively simpler models that incorporate only the high-level functionality of the bioelectric components48,118,119. These models have been used to show that networks of ordinary tissues can give rise to gradient-patterns47,51, Turing patterns21,119, as well as oscillatory patterns118,120 that are thought to be essential for long-distance communication. BEN differs from these models on two fronts: (1) it is biophysically simpler than BETSE, serving as a minimal model of dynamics sufficient for computation; and (2) it is more realistic than the model of Reference118, which uses “equivalent circuits” where the equilibrium Vmem is explicitly specified in the equations, whereas in BEN the equilibrium Vmem emerges from the ion channel parameters.

We have shown here that BEN networks can implement elementary logic gates, more complex tissue-level logic gates, pattern detectors and compound logic gates. Furthermore, we have demonstrated that logic can be implemented in circuits with bidirectional connections that is typical of non-neural tissues, in contrast to the conventional directed circuits like neural networks and digital electronic circuits. This implies that even though non-neural tissues may find it harder to implement formal logic (due to lower control over information flow; see Supplementary Fig. S2b, for example, where information flow is recurrent even in a small network, making it hard to control), they can achieve it. Not only can BEN networks compute, but they can also be robust to damage. One of the most important, and heretofore unexplained, properties of biological control circuits is that they continue to function computationally under significant perturbation (as seen by work on metamorphosis, stability of memory and behavioral programs during drastic tissue remodeling, and invariance of morphogenesis to changes in cell number76,121,122). BEN networks can exhibit a similar behavior, retaining function even after the removal of cells. We found that BEN achieves this by distributing information in the network (Supplementary 2).

The particular methods by which BEN networks were trained is not of central importance in this work and do not impact the main result – that BENs can be readily found which implement logic functions. We used BPTT simply as a way to discover the parameters of (train) BEN networks that perform specific logic functions or recognize patterns. In the biological world, training (learning) may happen in other ways that may be broadly classified as offline and online. Offline learning happens outside of the lifetime of an individual and at a population level, while online learning happens during the lifetime of an individual. Evolution is an example of the former, while Hebbian learning is an example of the latter. BPTT may be classified as a form of online learning, but it has been claimed to be not biologically realistic123,124. More biologically realistic forms of online learning have been proposed, for example, forward propagation of eligibility traces123, extended Hebbian learning125, dynamic self-organizing maps126 and instantaneously trained neural networks127. Such methods could potentially advance biologically realistic training of BEN networks, which we will develop in future research, but they are not necessary to find examples demonstrating that BEN networks can compute.

BEN models the bioelectric principles of a generic bioelectric network, and not all the physiological details, as these can vary widely across tissues. Individual cell types can be modeled in future effort simply by altering the ion channel parameters while retaining the basic form of the equations. Although BEN is a model of a non-neural bioelectric network, it has certain features that resemble those of a neural network, as described above (the weight and bias parameters, and the two-level nonlinear signal transformation). This may suggest that neural-like features are necessary for computation even in a non-neural network. On the other hand, it also suggests that non-neural features may support neural computation. Indeed, this is the subject matter of ongoing debates in neuroscience about how much glia contribute to neural computation128,129.

Our work contributes to the field of basal cognition by describing how a simple network of aneural cells may perform both basic and complex logic operations. However, our goal is not to validate basal cognition as a field – it is just one context (besides others, such as developmental neurobiology, evolutionary cognitive science, and synthetic bioengineering) which our findings impact. Even if some computational views of specific biological systems turn out to be wrong, our results provide value in showing how physiological circuits can be made with specific (and useful) behaviors, both for synthetic biology applications and to understand functional aspects of developmental physiology, the evolution of brain circuits, etc. In prior work, a computational perspective of cell behavior14,16,130 allowed our lab and other groups to formulate (1) ways of experimentally controlling large-scale anatomical outcomes13, and (2) formulate and solve the “inverse problem”14 of how the cells might actually achieve specific anatomical configurations, given cellular constraints and a range of starting conditions76,131. Our current work addresses (2), by demonstrating a possible approach that cells could use to implement logic functions under realistic cellular constraints.

Overall, BEN has the potential to shed light on as-yet-unexplained non-neural bioelectric information-processing. For example, when certain planarian species (highly regenerative animals) are cut they sometimes develop two heads, instead of a head and a tail, as a response to a temporary exposure to 1-octanol, a gap junction blocker. Without the gap junction blocker, they always develop a wild type morphology consisting of a single head and a tail. It has been shown that this decision to switch morphologies is bioelectrically controlled and non-neural in nature24,132. We hypothesize that the planarian bioelectric system encodes a pattern that either consists of a record of the gap junction blocker or not, and then maps it into different morphological decisions (wild-type or double-head) in a systematic way. We currently have a prototype BEN model that reproduces this phenomenon in a qualitative way, which we plan to report in the future. Finally, we have demonstrated in this work that the slower (reason described in ‘Methods’), continuous mode of non-neural bioelectric signaling, compared to the faster, pulsating mode of neural signaling, is sufficient to perform logical computations usually associated with the brain. This firmly supports the possibility of somatic computation in real biological systems. How it compares to the timescales of genetic signaling and how it could facilitate bioelectricity to be upstream of genetics53,103 is an open question that our future investigations will answer.

Our work paves the foundation for future research in training actual biological tissues for somatic computation. Ongoing research in our laboratory has identified ways by which gap junctions can be manipulated and controlled, further opening up the possibilities on this front. Overall, our research provides the conceptual and modeling foundations to understand and manipulate development and regeneration, and to construct computational synthetic living machines.

Methods

Mathematical details of BEN

The equations that define a BEN model are shown in Fig. 8 using an example 2-cell system. The equations are shown for cell-2 only; the same equations with appropriate change in subscripts apply to cell-1 and likewise to any other cell in larger networks. The processes that the equations represent, like electrodiffusion, reaction-diffusion and gating, are marked appropriately in the figure, thus self-explanatory. The following remarks serve to better illustrate the model. As described in the introductory section, electrodiffusion utilizes two types of gradients, namely voltage and concentration, to drive the flux. Those gradients are represented by the terms and respectively in the electrodiffusion equations. The ion-pump equations are approximate versions of the full mechanism modeled in equations (23–29) of BETSE51. BEN makes use of just the terms involving the ion concentrations in equation (27) of BETSE51, as it lacks the other biochemical components like ATP, and by choosing appropriate term coefficients to compensate for the approximation it effectively preserves the relationship between ion concentrations and the pump flux.

Figure 8.

Mathematical details of the BEN model. (Top) The equations that describe the dynamics in a 2-cell system illustrated in the figure on the left. (Bottom) A glossary of variables used in the equations, the constants and initial conditions.

The dynamics of the signaling molecule is viewed as a nonlinear reaction-diffusion process as it can be abstractly represented as: , where is the concentration gradient, represents the 1st layer of sigmoid approximating a nonlinear diffusion, and represents the 2nd layer of sigmoid approximating a nonlinear reaction. Moreover, the nested form of departs from the conventional form of reaction-diffusion which is , for example, . Nevertheless, it qualifies as reaction-diffusion since it clearly involves diffusion ()and a nonlinear component (the sigmoid) that alters the net mass of c, representing production or decay depending on the sign of . Another important point to note is that even though sigmoid functions, which define the signal flux, also constitute the activation functions of neurons in neural network models38,64, there is a crucial difference: while neuron models are input-activated since neuronal synaptic junctions are directional, a cell in BEN is gradient-activated since gap-junctions are bidirectional. This is also partly the reason why non-neuronal cells tend to be slower than neural cells—diffusion is a slower process than directed currents.

There’s no set range of Vmem levels that a given BEN network will not exceed (as there are no explicit bounds on the ion concentration levels), but there are typically set to lie within [−80 mV, +80 mV]; see for example Figs. 2d, 3d, 4, 6 and 7. The concentration of the signaling molecule, on the other hand, is forced to lie with [0, 1], that is, within the generic minimum and maximum possible values of the actual concentration which is not explicitly considered in BEN.

Figure 3.

The XOR gate. (a) Schematic of the XOR logic gate; the orange and purple cells are the inputs, and the green cell is the output; the grey cells are intermediate cells offering computational support. (b) The XOR rule table: output is depolarized only when one of its inputs, but not both, is depolarized. Table specifies the output Vmem for various combinations of input Vmem levels; this is known as the “truth table” in the Boolean logic literature, where the “hyperpolarized” state (around −80 mV) is indicated as “0”, “False” or “OFF” and the “depolarized” state (around +80 mV) as “1”, “True” or “ON”. (c) Pareto front of training errors over time (one unit is equal to a single training epoch) for the XOR gate. This plot depicts the “front” with the best errors achieved over time. This figure demonstrates that the training does indeed result in learning, but fewer networks are successful compared to AND (Fig. 2). (d) Behavior of the best XOR gate. Shown here are the time series of the input and output nodes of the gate, shown for all four input-output conditions generated in a random sequence. The red and blue lines represent the states of the two input nodes, and green represents the output. The grey triangles mark the time points at which the inputs are switched to a different state. (e) The dynamical phase space of the gate: a depiction of a set of trajectories in the input-output space, illustrated in a time-lapse style. This dynamical system has two attractors in the output space, highlighted in filled red (depolarized state) and blue (hyperpolarized state) circles. The trajectories look straight because the inputs are fixed, and only the output changes.

Figure 4.

The structure and behavior of the best evolved tissue-level AND gate. Following the convention of the color-coding, orange and purple represent input nodes, while green represents the output node. Behavior correctly follows the AND rule (Fig. 2b). Inputs are transient: they are set (externally) to their respective states at the time steps marked by the grey triangles and then removed. As can be seen, they slightly oscillate for a small period of time after they are set initially, before dynamically fixing themselves (marked by grey dashed arrows) during every simulation. Thus, the network has evolved the capacity for memory: it remembers the inputs (at least their qualitative levels) even after they are removed. Inset shows the structure of the network.

The software to simulate, train and evolve BEN networks is available at: https://gitlab.com/smanicka/BEN.

Details of the simulation

We used the standard Euler integration method for integration the equations. We used a time step of 0.01 for the bioelectric dynamics and 0.02 for the signaling molecule dynamics. Each simulation was set up to run for a number of steps ranging from 300 to 600; smaller networks were run for fewer steps. Thus, the total simulation ranged between 300 * 0.01 = 3 simulation seconds to 600 * 0.01 = 6 simulation seconds.

The backpropagation method for training elementary logic gates

Regardless of the gate, a training run was set up as follows. A single BEN network was instantiated with randomized parameters. The initial weight parameters were chosen in the range [−1, 1], and the biases in the range [0, 1]. It was then fed with a batch of four inputs and was simulated for about 300 time-steps, with the inputs being held fixed throughout the simulation. An “input” constitutes a specific Vmem pattern of the input cells. For example, input cell-1 may be set to a hyperpolarized Vmem and cell-2 to a depolarized Vmem. The input cells were then randomly set to a different state, drawn from the training batch, without disturbing the state of the rest of the network (a method referred to as “continuous computation”101) and the simulation was continued for another 300 time-steps; this was repeated for ten times. At the end of each of 10 simulations, the observed states of the output cell during the last 10 time-steps were matched against the desired output corresponding to the input in the training batch (see Figs. 2b and 3b for examples). An error was then calculated based on the difference for each of the 10 simulations for each input sequence in the batch and averaged over. The derivatives of the final average error (gradient) with respect to all the parameters were then calculated. Due to the recurrent nature of the dynamics (Fig. 1), the simulation takes many more steps than the number of layers in the network before the output reaches an equilibrium. Thus, the gradients would in principle have to computed over all those steps—a method known as “backpropagation through time” (BPTT). However, due to computational constraints and known problems like “vanishing gradient”, the gradients are typically computed over fewer time steps—this version of backpropagation is known as “truncated” BPTT. In this work, the gradients were computed over only the last 100 steps of a simulation. The quantum of changes for all the parameters were then calculated from the gradients after scaling it with a “learning rate” that set the pace of learning. The weights and bias were assigned separate “dynamic” learning rates, where the rates were fixed in such a way that the maximum change of a weight parameter was 0.1 and the maximum change of a bias parameter was 0.01. We adopted this strategy mainly to mitigate the vanishing gradient problem, and the assumption that small changes in the parameters should cause a smooth change in behavior. We also employed a “momentum” factor, a method often used in backpropagation, that determines the extent to which the previous parameter-change vector contributed to the following vector. We used a momentum of 0.5 for the first 100 training iterations, and 0.9 for the rest; the allowed range is [0, 1]. Finally, the parameter-updates were applied (thus error was backpropagated), and the whole process was repeated for about 600,000 “epochs”. The weights were allowed to change infinitely in both directions, whereas the biases were limited within the range [0, 1]. We deemed a network as successfully trained if it achieved an average performance error of 0.0002 or below at some point during the training.

We used the Python software package called Pytorch for computing derivatives during backpropagation. This package uses a method called “automatic differentiation” to compute derivatives based on “computational graphs”—a graph that keeps track of every computation performed during a simulation, which is then swept backwards for computing the derivatives, essentially constituting an algorithm for the “chain rule” of differential calculus.

The combined backpropagation-genetic-algorithm method for training tissue-level logic gate and the pattern detector

A population of 50 BEN “genomes” was instantiated. A genome consists of a combination of the network’s parameters, namely the weight matrix and the bias vector, that define a network. Henceforth, by “genome” we refer to a BEN network, for clarity. Each network consists of the following structure: an input layer consisting of 2 nodes, an intermediate “tissue” layer consisting of 25 nodes, and the output layer consisting of a single node. Initially the tissue layer was designed to be a two-dimensional lattice. Next, the tissue layer of each individual in the population was randomly rewired with a rewiring probability chosen uniformly from the range . Thus, the population consisted of the full spectrum of tissue layer structures ranging from lattice to random. Next, the input layer and the output layers of each individual in the population were respectively connected to approximately 50% of the nodes in the tissue layer; this gave the GA a chance to explore both denser and sparser inter-layer connectivity. Every individual in the population was then assigned randomized weight and bias parameters chosen in the same range as backpropagation. They were each then backpropagated for 80 iterations in the same fashion as described above for the elementary gates, with an important difference: the inputs for these gates were transient, as they are set for the first time-step only. The final average error of each individual became its fitness score. The GA then goes through the conventional steps of selection, mutation, creating a new generation of individuals.

We used a simple flavor of GA known as the “microbial genetic algorithm”133 that capitalizes on the notion of horizontal gene transfer observed in microbes. It is essentially a form of tournament selection where two individuals in a population are picked and pitted against each other (their fitness scores are compared). We used a “geographical selection” method where individuals are placed on a 1D ring, and only geographically close individuals are picked for the tournament. The size of selection-neighborhood is known as the “deme size”, which was set to 10% in this work. The genome of the winner is transmitted to the loser at a certain “recombination rate”, which was set to 0.1. The genome of the loser is then mutated at a certain “mutation rate” (set to 0.05) and reinserted into the population. This process is repeated for 1000 generations. The individual with the best fitness score in the final generation was deemed as the best individual—the most successfully trained tissue-level logic gate.

Generation of the input patterns during the training of the pattern detector

The set of input patterns used for training the pattern detector consists of two categories: (1) French-flag (FF) and (2) Not-French-flag (NFF). The subset FF consists of the French-flag pattern itself and its slightly noisy variants. The latter is generated by perturbing each pixel of the French-flag approximately within the range [−24 mV, +24 mV]. Any perturbation that takes a pixel below the bounds of [−80 mV, +80 mV] are reset to the closest boundary values. The subset NFF consists of the more distorted variants of the French-flag. It is generated in the same way as FF except the perturbation range is [−40 mV, +40 mV]. A total of 20 samples in each set were generated during each iteration of the GA-BP search.

Supplementary information

Acknowledgements

We thank Alexis Pietak and many members of the Levin lab for many helpful discussions, as well as Nima Dehghani and Joshua Finkelstein for helpful comments on a draft of the manuscript. This research was supported by the Allen Discovery Center program through The Paul G. Allen Frontiers Group (12171), and M. L. gratefully acknowledges support from the Templeton World Charity Foundation (TWCF0089/AB55), the Barton Family Foundation, and the National Science Foundation (DBI-1152279 and Emergent Behaviors of Integrated Cellular Systems subaward CBET-0939511). This research was also sponsored by the Defense Advanced Research Projects Agency (DARPA) under Cooperative Agreement Number HR0011-18-2-0022, the Lifelong Learning Machines program from DARPA/MTO. The content of the information does not necessarily reflect the position or the policy of the Government, and no official endorsement should be inferred. Approved for public release; distribution is unlimited.

Author contributions

M.L. and S.M. conceptualized the project together. S.M. wrote all the code and performed the computational experiments and data collection. M.L. and S.M. analyzed the data and wrote the paper.

Data availability

All parameters used for the networks in this manuscript, as well as the source code, can be downloaded at https://gitlab.com/smanicka/BEN.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

is available for this paper at 10.1038/s41598-019-54859-8.

References

- 1.Wiener, N. & Schadé, J. P. Cybernetics of the nervous system. (Elsevier Pub. C., 1965).

- 2.Wiener, N. & Schadé, J. P. Nerve, brain, and memory models. (Elsevier Pub. Co., 1963).

- 3.Simon HA. Studying human intelligence by creating artificial intelligence. Am Sci. 1981;69:300–309. [PubMed] [Google Scholar]

- 4.Manicka S, Levin M. The Cognitive Lens: a primer on conceptual tools for analysing information processing in developmental and regenerative morphogenesis. Philos Trans R Soc Lond B Biol Sci. 2019;374:20180369. doi: 10.1098/rstb.2018.0369. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Moore D, Walker SI, Levin M. Cancer as a disorder of patterning information: computational and biophysical perspectives on the cancer problem. Convergent Science Physical. Oncology. 2017;3:043001. [Google Scholar]

- 6.Levin Michael, Martyniuk Christopher J. The bioelectric code: An ancient computational medium for dynamic control of growth and form. Biosystems. 2018;164:76–93. doi: 10.1016/j.biosystems.2017.08.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Pezzulo Giovanni, Levin Michael. Top-down models in biology: explanation and control of complex living systems above the molecular level. Journal of The Royal Society Interface. 2016;13(124):20160555. doi: 10.1098/rsif.2016.0555. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Baluška F, Levin M. On Having No Head: Cognition throughout Biological Systems. Front Psychol. 2016;7:902. doi: 10.3389/fpsyg.2016.00902. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Tang SKY, Marshall WF. Cell learning. Curr Biol. 2018;28:R1180–R1184. doi: 10.1016/j.cub.2018.09.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Mitchell A, Lim W. Cellular perception and misperception: Internal models for decision-making shaped by evolutionary experience. Bioessays. 2016;38:845–849. doi: 10.1002/bies.201600090. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Lyon P. The cognitive cell: bacterial behavior reconsidered. Front Microbiol. 2015;6:264. doi: 10.3389/fmicb.2015.00264. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Silva-Rocha R, Tamames J, dos Santos VM, de Lorenzo V. The logicome of environmental bacteria: merging catabolic and regulatory events with Boolean formalisms. Environ Microbiol. 2011;13:2389–2402. doi: 10.1111/j.1462-2920.2011.02455.x. [DOI] [PubMed] [Google Scholar]

- 13.Vandenberg LN, Adams DS, Levin M. Normalized shape and location of perturbed craniofacial structures in the Xenopus tadpole reveal an innate ability to achieve correct morphology. Dev Dyn. 2012;241:863–878. doi: 10.1002/dvdy.23770. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Pezzulo G, Levin M. Re-membering the body: applications of computational neuroscience to the top-down control of regeneration of limbs and other complex organs. Integr Biol (Camb) 2015;7:1487–1517. doi: 10.1039/c5ib00221d. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Farinella-Ferruzza N. The transformation of a tail into a limb after xenoplastic transformation. Experientia. 1956;15:304–305. doi: 10.1007/BF02159624. [DOI] [Google Scholar]

- 16.Friston Karl, Levin Michael, Sengupta Biswa, Pezzulo Giovanni. Knowing one's place: a free-energy approach to pattern regulation. Journal of The Royal Society Interface. 2015;12(105):20141383. doi: 10.1098/rsif.2014.1383. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Lyon P. The biogenic approach to cognition. Cogn Process. 2006;7:11–29. doi: 10.1007/s10339-005-0016-8. [DOI] [PubMed] [Google Scholar]

- 18.Prindle Arthur, Liu Jintao, Asally Munehiro, Ly San, Garcia-Ojalvo Jordi, Süel Gürol M. Ion channels enable electrical communication in bacterial communities. Nature. 2015;527(7576):59–63. doi: 10.1038/nature15709. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Levin M. Morphogenetic fields in embryogenesis, regeneration, and cancer: non-local control of complex patterning. Bio Systems. 2012;109:243–261. doi: 10.1016/j.biosystems.2012.04.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Bates E. Ion Channels in Development and Cancer. Annu Rev Cell Dev Biol. 2015;31:231–247. doi: 10.1146/annurev-cellbio-100814-125338. [DOI] [PubMed] [Google Scholar]

- 21.Pietak A, Levin M. Bioelectrical control of positional information in development and regeneration: A review of conceptual and computational advances. Prog Biophys Mol Biol. 2018;137:52–68. doi: 10.1016/j.pbiomolbio.2018.03.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.McLaughlin KA, Levin M. Bioelectric signaling in regeneration: Mechanisms of ionic controls of growth and form. Dev Biol. 2018;433:177–189. doi: 10.1016/j.ydbio.2017.08.032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Mathews J, Levin M. The body electric 2.0: recent advances in developmental bioelectricity for regenerative and synthetic bioengineering. Curr Opin Biotechnol. 2018;52:134–144. doi: 10.1016/j.copbio.2018.03.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Durant F, et al. Long-Term, Stochastic Editing of Regenerative Anatomy via Targeting Endogenous Bioelectric Gradients. Biophys J. 2017;112:2231–2243. doi: 10.1016/j.bpj.2017.04.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Beane WS, Morokuma J, Adams DS, Levin M. A Chemical genetics approach reveals H,K-ATPase-mediated membrane voltage is required for planarian head regeneration. Chemistry &. Biology. 2011;18:77–89. doi: 10.1016/j.chembiol.2010.11.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Pitcairn E, et al. Coordinating heart morphogenesis: A novel role for Hyperpolarization-activated cyclic nucleotide-gated (HCN) channels during cardiogenesis in Xenopus laevis. Communicative & Integrative Biology. 2017;10:e1309488. doi: 10.1080/19420889.2017.1309488. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Pai VP, et al. HCN4 ion channel function is required for early events that regulate anatomical left-right patterning in a nodal and lefty asymmetric gene expression-independent manner. Biology Open. 2017;6:1445–1457. doi: 10.1242/bio.025957. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Pai VP, Aw S, Shomrat T, Lemire JM, Levin M. Transmembrane voltage potential controls embryonic eye patterning in Xenopus laevis. Development. 2012;139:313–323. doi: 10.1242/dev.073759. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Pai VP, et al. Endogenous Gradients of Resting Potential Instructively Pattern Embryonic Neural Tissue via Notch Signaling and Regulation of Proliferation. The Journal of Neuroscience. 2015;35:4366–4385. doi: 10.1523/JNEUROSCI.1877-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Sundelacruz S, Levin M, Kaplan DL. Membrane potential controls adipogenic and osteogenic differentiation of mesenchymal stem cells. PLoS One. 2008;3:e3737. doi: 10.1371/journal.pone.0003737. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Perathoner S, et al. Bioelectric signaling regulates size in zebrafish fins. PLoS genetics. 2014;10:e1004080. doi: 10.1371/journal.pgen.1004080. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Tseng A.-S., Beane W. S., Lemire J. M., Masi A., Levin M. Induction of Vertebrate Regeneration by a Transient Sodium Current. Journal of Neuroscience. 2010;30(39):13192–13200. doi: 10.1523/JNEUROSCI.3315-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Adams DS, Masi A, Levin M. H+ pump-dependent changes in membrane voltage are an early mechanism necessary and sufficient to induce Xenopus tail regeneration. Development. 2007;134:1323–1335. doi: 10.1242/dev.02812. [DOI] [PubMed] [Google Scholar]

- 34.Oviedo Néstor J., Morokuma Junji, Walentek Peter, Kema Ido P., Gu Man Bock, Ahn Joo-Myung, Hwang Jung Shan, Gojobori Takashi, Levin Michael. Long-range neural and gap junction protein-mediated cues control polarity during planarian regeneration. Developmental Biology. 2010;339(1):188–199. doi: 10.1016/j.ydbio.2009.12.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Adams DS, et al. Bioelectric signalling via potassium channels: a mechanism for craniofacial dysmorphogenesis in KCNJ2-associated Andersen-Tawil Syndrome. J Physiol. 2016;594:3245–3270. doi: 10.1113/JP271930. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Masotti A, et al. Keppen-Lubinsky Syndrome Is Caused by Mutations in the Inwardly Rectifying K(+) Channel Encoded by KCNJ6. Am J Hum Genet. 2015;96:295–300. doi: 10.1016/j.ajhg.2014.12.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Pai, V. P. et al. HCN2 Rescues brain defects by enforcing endogenous voltage pre-patterns. NatureCommunications9, 10.1038/s41467-018-03334-5 (2018). [DOI] [PMC free article] [PubMed]

- 38.McCulloch, W. S. & Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bulletin of Mathematical Biophysics5 (1943). [PubMed]

- 39.Goldental A, Guberman S, Vardi R, Kanter I. A computational paradigm for dynamic logic-gates in neuronal activity. Front Comput Neurosci. 2014;8:52. doi: 10.3389/fncom.2014.00052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Albert, R. E. In Complex Networks. Lecture Notes in Physics Vol. 650 (ed Frauenfelder H. Ben-Naim E., Toroczkai Z.) (Springer, 2004).

- 41.Albert R, Thakar J. Boolean modeling: a logic-based dynamic approach for understanding signaling and regulatory networks and for making useful predictions. Wiley Interdisciplinary Reviews: Systems Biology and Medicine. 2014;6:353–369. doi: 10.1002/wsbm.1273. [DOI] [PubMed] [Google Scholar]

- 42.Crama, Y. & Peter L. Hammer. Boolean functions: Theory, algorithms, and applications. (Cambridge University Press, 2011).

- 43.Copeland BJ. What is computation. Synthese. 1996;108:335–359. doi: 10.1007/BF00413693. [DOI] [Google Scholar]

- 44.Horsman C, Stepney S, Wagner RC, Kendon V. When does a physical system compute? Proc Math Phys Eng Sci. 2014;470:20140182. doi: 10.1098/rspa.2014.0182. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Nicholson DJ. Is the cell really a machine? J Theor Biol. 2019;477:108–126. doi: 10.1016/j.jtbi.2019.06.002. [DOI] [PubMed] [Google Scholar]

- 46.Harvey, I. Cognition is Not Computation; Evolution is Not Optimisation (1996).

- 47.Pietak A, Levin M. Exploring Instructive Physiological Signaling with the Bioelectric Tissue Simulation Engine. Front Bioeng Biotechnol. 2016;4:55. doi: 10.3389/fbioe.2016.00055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Cervera Javier, Pietak Alexis, Levin Michael, Mafe Salvador. Bioelectrical coupling in multicellular domains regulated by gap junctions: A conceptual approach. Bioelectrochemistry. 2018;123:45–61. doi: 10.1016/j.bioelechem.2018.04.013. [DOI] [PubMed] [Google Scholar]

- 49.Pai VP, et al. HCN2 Rescues brain defects by enforcing endogenous voltage pre-patterns. Nat Commun. 2018;9:998. doi: 10.1038/s41467-018-03334-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Levin Michael, Pietak Alexis M., Bischof Johanna. Planarian regeneration as a model of anatomical homeostasis: Recent progress in biophysical and computational approaches. Seminars in Cell & Developmental Biology. 2019;87:125–144. doi: 10.1016/j.semcdb.2018.04.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Pietak Alexis, Levin Michael. Bioelectric gene and reaction networks: computational modelling of genetic, biochemical and bioelectrical dynamics in pattern regulation. Journal of The Royal Society Interface. 2017;14(134):20170425. doi: 10.1098/rsif.2017.0425. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Mathews J, Levin M. Gap junctional signaling in pattern regulation: Physiological network connectivity instructs growth and form. Developmental neurobiology. 2017;77:643–673. doi: 10.1002/dneu.22405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Koseska A, Bastiaens PI. Cell signaling as a cognitive process. EMBO J. 2017;36:568–582. doi: 10.15252/embj.201695383. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Barnard A, Wolfe A, Busby S. Regulation at complex bacterial promoters: how bacteria use different promoter organizations to produce different regulatory outcomes. Curr Opin Microbiol. 2004;7:102–108. doi: 10.1016/j.mib.2004.02.011. [DOI] [PubMed] [Google Scholar]

- 55.Brodskiy PA, Zartman JJ. Calcium as a signal integrator in developing epithelial tissues. Physical biology. 2018;15:051001. doi: 10.1088/1478-3975/aabb18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Solovey Guillermo, Dawson Silvina Ponce. Intra-Cluster Percolation of Calcium Signals. PLoS ONE. 2010;5(2):e8997. doi: 10.1371/journal.pone.0008997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Lopez L, Piegari E, Sigaut L, Ponce Dawson S. Intracellular calcium signals display an avalanche-like behavior over multiple lengthscales. Front Physiol. 2012;3:350. doi: 10.3389/fphys.2012.00350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Polsky A, Mel BW, Schiller J. Computational subunits in thin dendrites of pyramidal cells. Nat Neurosci. 2004;7:621–627. doi: 10.1038/nn1253. [DOI] [PubMed] [Google Scholar]

- 59.Panayiota Poirazi TB, Bartlett W. Mel. Pyramidal neuron as two-layer neural network. Neuron. 2003;37:989–999. doi: 10.1016/S0896-6273(03)00149-1. [DOI] [PubMed] [Google Scholar]

- 60.Antic Srdjan D., Hines Michael, Lytton William W. Embedded ensemble encoding hypothesis: The role of the “Prepared” cell. Journal of Neuroscience Research. 2018;96(9):1543–1559. doi: 10.1002/jnr.24240. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Sperelakis, N. Cell physiology source book: essentials of membrane biophysics. (Elsevier, 2012).

- 62.Bukauskas FF, Verselis VK. Gap junction channel gating. Biochim Biophys Acta. 2004;1662:42–60. doi: 10.1016/j.bbamem.2004.01.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Brink P. Gap Junction Voltage Dependence - a clear picture emerges. J. Gen. Physiol. 2000;116:11–12. doi: 10.1085/jgp.116.1.11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Nielsen, M. A. Neural networks and deep learning. Vol. 25 (Determination press, 2015).

- 65.Brodmann, K. Brodmann’s: Localisation in the cerebral cortex. (Springer Science & Business Media, 2007).

- 66.Hinczewski, M. & Thirumalai, D. Cellular signaling networks function as generalized Wiener-Kolmogorov filters to suppress noise. Physical Review X4 (2014).

- 67.Becker NB, Mugler A, Ten Wolde PR. Optimal Prediction by Cellular Signaling Networks. Phys Rev Lett. 2015;115:258103. doi: 10.1103/PhysRevLett.115.258103. [DOI] [PubMed] [Google Scholar]

- 68.Sagner Andreas, Briscoe James. Morphogen interpretation: concentration, time, competence, and signaling dynamics. Wiley Interdisciplinary Reviews: Developmental Biology. 2017;6(4):e271. doi: 10.1002/wdev.271. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Ebisuya Miki, Briscoe James. What does time mean in development? Development. 2018;145(12):dev164368. doi: 10.1242/dev.164368. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Herrera-Rincon C, et al. Brief Local Application of Progesterone via a Wearable Bioreactor Induces Long-Term Regenerative Response in Adult Xenopus Hindlimb. Cell Rep. 2018;25:1593–1609 e1597. doi: 10.1016/j.celrep.2018.10.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Amor, D. R., Ratzke, C. & Gore, J. Transient invaders can induce shifts between alternative stable states of microbial communities. BioRxiv, 10.1101/659052 (2019). [DOI] [PMC free article] [PubMed]

- 72.Durant Fallon, Bischof Johanna, Fields Chris, Morokuma Junji, LaPalme Joshua, Hoi Alison, Levin Michael. The Role of Early Bioelectric Signals in the Regeneration of Planarian Anterior/Posterior Polarity. Biophysical Journal. 2019;116(5):948–961. doi: 10.1016/j.bpj.2019.01.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Davidson SIAEH. Logic functions of the genomic cis-regulatory code. PNAS. 2004;102:4954–4959. doi: 10.1073/pnas.0409624102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Palacios-Prado N., Bukauskas F. F. Heterotypic gap junction channels as voltage-sensitive valves for intercellular signaling. Proceedings of the National Academy of Sciences. 2009;106(35):14855–14860. doi: 10.1073/pnas.0901923106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Birnbaum KD, Alvarado AS. Slicing across kingdoms: regeneration in plants and animals. Cell. 2008;132:697–710. doi: 10.1016/j.cell.2008.01.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Pinet Kaylinnette, McLaughlin Kelly A. Mechanisms of physiological tissue remodeling in animals: Manipulating tissue, organ, and organism morphology. Developmental Biology. 2019;451(2):134–145. doi: 10.1016/j.ydbio.2019.04.001. [DOI] [PubMed] [Google Scholar]

- 77.Wolpert L. Positional information and the spatial pattern of cellular differentiation. J. Theor. Biol. 1969;25:1–47. doi: 10.1016/S0022-5193(69)80016-0. [DOI] [PubMed] [Google Scholar]

- 78.Dawkins, R. The blind watchmaker: Why the evidence of evolution reveals a universe without design. (WW Norton & Company, 1996).

- 79.Espinosa-Soto Carlos. On the role of sparseness in the evolution of modularity in gene regulatory networks. PLOS Computational Biology. 2018;14(5):e1006172. doi: 10.1371/journal.pcbi.1006172. [DOI] [PMC free article] [PubMed] [Google Scholar]