Abstract

An enduring focus in the science of emotion is the question of which psychological states are signaled in expressive behavior. Based on empirical findings from previous studies, we created photographs of facial-bodily expressions of 18 states and presented these to participants in nine cultures. In a well-validated recognition paradigm, participants matched stories of causal antecedents to one of four expressions of the same valence. All 18 facial-bodily expressions were recognized at well above chance levels. We conclude by discussing the methodological shortcomings of our study and the conceptual implications of its findings.

Keywords: emotion, culture, expression

Within basic emotion theory, emotions are assumed to be brief psychological states accompanied by specific patterns of subjective response, expressive behavior, physiology, and cognition that enable the individual to respond effectively to threats and challenges in the social and physical environment (Ekman, 1992; Ekman & Cordaro, 2011; Keltner & Cordaro, 2016; Keltner & Haidt, 1999; Lench, Flores, & Bench, 2011; Matsumoto et al., 2008; Shariff & Tracy, 2011). A central hypothesis within the broader framework of basic emotion theory is that emotional states are signaled in distinct patterns of expressive behavior that are recognizable across cultures (Ekman, 1992; Ekman & Cordaro, 2011; Keltner, Tracy, Sauter, & Cowen, in press; Matsumoto et al., 2008).

Evidence of emotion-specific patterns of expressive behavior has spurred advances in the science of emotion (Hess & Thibault, 2009; Keltner, Tracy, Sauter, Cordaro, & McNeil, 2016; Matsumoto et al., 2008; Shariff & Tracy, 2011). Such evidence has informed evolutionary theorizing about how human emotional expression resembles that of other mammals (e.g., Keltner & Buswell, 1997; Snowdon, 2003). Social functional theorizing about emotion, which extends the tenets of basic emotion theory, posits that emotions coordinate social interactions through the informative, evocative, and incentive functions that emotion-specific expressions serve (e.g., Keltner & Kring, 1998; Niedenthal, Rychlowska, & Wood, 2017; van Kleef, 2016). Evidence establishing which psychological states are signaled in expressive behavior (and which states are not) informs taxonomic claims about which states might be considered emotions and the boundaries between them (e.g., Cowen & Keltner, 2018; Ekman, 1992, 2016; Keltner et al., in press). From theoretical claims about the forms of emotion to an understanding of the varying functions of fleeting emotional states, studies of emotion-related expressive behavior have been seminal to theoretical efforts in the field.

Two methods lie at the heart of the empirical literature on emotional expression. Encoding studies characterize systematic patterns of behavior that are observed when a participant is experiencing an emotion; is in an emotionally evocative context, such as winning or losing a competition; or is instructed to express an emotion to others (e.g., Cordaro et al., 2018; Elfenbein, Beaupré, Lévesque, & Hess, 2007; Sauter & Fischer, 2018). In decoding studies, participants are presented with images, videos, or audio recordings of static or dynamic expressions and asked to label the expressions, most typically with emotion words, situations, or in free response format (Ekman, 1993; Haidt & Keltner, 1999; Russell, 1994; Tracy & Robins, 2004).

One line of decoding studies has focused on what might be called the “Basic 6”—anger, disgust, fear, happiness, sadness, and surprise (Ekman, Sorenson, & Friesen, 1969). Decoding evidence related to these six emotions was a focus of the most well-cited meta-analysis establishing the degree to which these states are recognized across cultures (Elfenbein & Ambady, 2002), as well as critiques of claims about the universal recognition of emotion (Crivelli, Jarillo, Russell, & Fernández-Dols, 2016; Gendron, Roberson, van der Vyver, & Barrett, 2014; Russell, 1994). These six emotions have figured prominently in arguments about how emotion recognition engages different regions of the brain (Spunt, Ellsworth, & Adolphs, 2017), changes across development (Widen & Russell, 2013), and is shaped by cultural, contextual, and individual difference factors (Hess & Hareli, 2017).

A second line of empirical inquiry has sought to broaden the understanding of what psychological states are reliably signaled in expressive behavior. This work has precedent in the theorizing of Izard (1972), who posited 10 emotions with distinct signals—the six we have considered thus far, as well as contempt, shame, guilt, and interest. Continuing within this tradition, encoding studies have characterized posed and spontaneous expressions of positive states, including amusement, contentment/serenity, coyness, desire, interest, and sympathy (e.g., Campos, Shiota, Keltner, Gonzaga, & Goetz, 2013; Cordaro et al., 2018; Elfenbein et al., 2007); self-conscious and hierarchy-related states, including embarrassment, shame, and pride (e.g., Elfenbein et al., 2007; Keltner, 1995; Tracy & Robins, 2004); and what one might call more purely cognitive states, such as boredom and confusion (Clore & Ortony, 1988; Rozin et al., 2003). Complementing these encoding studies, decoding studies have explored whether observers can identify amusement, contempt, serenity, shame, and sympathy from static photos (e.g., Elfenbein et al., 2007; Haidt & Keltner, 1999) and relief, sensory pleasure, and triumph from videotaped portrayals of dynamic expressions (Sauter & Fischer, 2018).

The present investigation builds upon these efforts to broaden the field’s understanding of which psychological states might have patterns of expressive behavior recognized in different cultures. We created photos of facial-bodily expressions of 18 psychological states, derived from empirically based descriptions in past encoding studies (see online supplemental Table S1). These 18 states included anger, disgust, fear, happiness, sadness, and surprise, the central focus in the field thus far. We also developed photographs of the expressions of amusement, contentment, desire, embarrassment, interest, pain, pride, shame, and sympathy, states for which there is theoretical argument, select empirical evidence, and emerging discussion about whether they may be thought of as emotions within the field (e.g., Cordaro, Keltner, Tshering, Wangchuk, & Flynn, 2016; Ekman, 2016; Goetz, Keltner, & Simon-Thomas, 2010; Izard, 1972; Tracy & Robins, 2004). Finally, we created photos of boredom, confusion, and coyness. Boredom and confusion have been classified as cognitive states, in that they do not necessarily involve appraisals of valence or peripheral physiological response so central to emotion (e.g., Clore & Ortony, 1988). Coyness likely signals a complex mixture of emotions, including desire, interest, and fear, and is observed during flirtatious interactions (Grammer, Kruck, & Magnusson, 1998).

Guided by one empirical approach to universality—where the aim is “the generalization of psychological findings across disparate populations having different ecologies, languages, belief systems, and social practices” (Norenzayan & Heine, 2005)—we presented these photos to participants from China, Germany, India, Japan, Pakistan, Poland, South Korea, Turkey, and the United States, countries that vary profoundly on cultural dimensions, values, and self-construal (see online supplemental materials). Guided by recent analyses (Brosch, Pourtois, & Sander, 2010), we had participants match photos to situations portrayed in stories of causal antecedents. Given recent concerns about processes that inflate recognition accuracy, participants matched a situation to one of four photos portraying expressions of the same valence (Gendron et al., 2014). We hypothesized that the 18 facial-bodily expressions would be recognized across the nine cultures.

Method

Participants

Participants (49% female, 33% male, 18% did not respond; Mage = 24.26, SD = 5.18) were college students from China (n = 54), Germany (n = 54), India (n = 44), Japan (n = 55), Pakistan (n = 46), Poland (n = 64), South Korea (n = 50), Turkey (n = 61), and the United States (n = 55).¹ Of note, these sample sizes are comparable to or larger than roughly 70% of the 168 samples that contributed to the meta-analysis by Elfenbein and Ambady (2002) and to those in more recent decoding studies (e.g., Elfenbein et al., 2007; Haidt & Keltner, 1999). In the online supplemental materials, we offer a power analysis showing that, given the average recognition accuracy rate of .58 observed by Elfenbein and Ambady (2002), our sample sizes suffice, although they are underpowered to detect less reliably identified expressions, perhaps of states such as sympathy (e.g., Haidt & Keltner, 1999). We selected participants who were between the ages of 18 and 30; had minimal experience living in other cultures (maximum of 1-month self-reported lifetime travel experience); had no prior knowledge of the scientific study of universal expressions, which was ascertained through self-report; and did not have a significant visual impairment, as evident in self-report and a demonstrated ability to recognize demonstration images before the test began.
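To make the power argument concrete, the following is a minimal sketch of an exact power calculation for a one-sided binomial test against chance. It is not the simulation reported in the online supplemental materials. The function names, the significance level of .05, and the simplification of one judgment per participant are all assumptions made for illustration.

```python
from math import comb


def binomial_tail(k: int, n: int, p: float) -> float:
    """Exact P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def critical_count(n: int, p_chance: float, alpha: float) -> int:
    """Smallest number of correct answers whose tail probability under chance is <= alpha."""
    for k in range(n + 2):  # k = n + 1 gives an empty tail (probability 0)
        if binomial_tail(k, n, p_chance) <= alpha:
            return k
    return n + 1


def power(n: int, p_true: float, p_chance: float = 0.25, alpha: float = 0.05) -> float:
    """Probability of rejecting chance-level guessing when true accuracy is p_true."""
    return binomial_tail(critical_count(n, p_chance, alpha), n, p_true)


# Smallest (India, n = 44) and largest (Poland, n = 64) samples in the study.
# 0.58 is the meta-analytic accuracy rate; 0.35 is a hypothetical weaker signal.
for n in (44, 64):
    print(n, round(power(n, 0.58), 3), round(power(n, 0.35), 3))
```

Under these assumptions, power is high when true accuracy is near .58 and drops noticeably for expressions recognized only modestly above the 25% chance level, which is the pattern the paragraph above describes.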

Procedure

During a period of 6 months in 2012, participants were notified through e-mail, in person, or through online social networks that an online test was available that would test their understanding of emotional expression. Participants accessed the experiment on their personal computers through a link from http://ucbpsych.qualtrics.com. For those who did not have a personal computer, one was provided to them at the universities with which we collaborated.

Participants first gave informed consent and agreed to participate. Then, participants completed the facial-bodily expression task, in which they were presented with causal antecedent stories designed to capture 18 psychological states and asked to select a photo of a facial-bodily expression from a sample of four photos of the same valence to correspond to the story. Next, participants completed a vocal burst recognition task that has been previously reported (Cordaro et al., 2016). After that, they completed demographics and then were thanked and debriefed. This procedure was approved by the authors’ institutional review board.

Measures

Photographs of facial-bodily expressions.

We developed photographs of facial-bodily expressions of anger, disgust, fear, happiness, sadness, and surprise from descriptions in the field (Ekman, Friesen, & Ellsworth, 1972). For 12 other states, we translated empirically based descriptions of expressive behavior to still photos (see online supplemental Table S1). To produce these photographs, two researchers certified in the Facial Action Coding System (FACS; Ekman & Friesen, 1978) guided eight paid posers, all citizens of the United States, in muscle-by-muscle instructions to configure the states according to the anatomical movements documented in independent research. The gender and ethnic composition of the posers were male (one Asian American, one African American, two European Americans) and female (one Asian American, one African American, two European Americans). In Table 1, for purposes of replication, we present examples of these photographs, action units involved in the expression, and a physical description. The boredom and confusion photographs were produced according to the same instructions but were filmed in slightly different lighting conditions and, as a result, had a darker background. We consider this potential problem in the discussion.

Table 1.

Facial-Bodily Expression Examples, FACS Action Units, and Physical Descriptions for Each Expression

| State | Example photo | Action units | Physical description |
|---|---|---|---|
| Amusement |  | 6 + 7 + 12 + 25 + 26 + 53 | Head back, Duchenne smile, lips separated, jaw dropped |
| Anger |  | 4 + 5 + 17 + 23 + 24 | Brows furrowed, eyes wide, lips tightened and pressed together |
| Boredom |  | 43 + 55 | Eyelids drooping, head tilted (not scorable with FACS: slouched posture, head resting on hand) |
| Confusion |  | 4 + 7 + 56 | Brows furrowed, eyelids narrowed, head tilted |
| Contentment |  | 12 + 43 | Smile, eyelids drooping |
| Coyness |  | 6 + 7 + 12 + 25 + 26 + 52 + 54 + 61 | Duchenne smile, lips separated, head turned and down, eyes turned opposite to head turn |
| Desire |  | 19 + 25 + 26 + 43 | Tongue show, lips parted, jaw dropped, eyelids drooping |
| Disgust |  | 7 + 9 + 19 + 25 + 26 | Eyes narrowed, nose wrinkled, lips parted, jaw dropped, tongue show |
| Embarrassment |  | 7 + 12 + 15 + 52 + 54 + 64 | Eyelids narrowed, controlled smile, head turned and down (not scorable with FACS: hand touches face) |
| Fear |  | 1 + 2 + 4 + 5 + 7 + 20 + 25 | Eyebrows raised and pulled together, upper eyelid raised, lower eyelid tense, lips parted and stretched |
| Happiness |  | 6 + 7 + 12 + 25 + 26 | Duchenne smile |
| Interest |  | 1 + 2 + 12 | Eyebrows raised, slight smile |
| Pain |  | 4 + 6 + 7 + 9 + 17 + 18 + 23 + 24 | Eyes tightly closed, nose wrinkled, brows furrowed, lips tight, pressed together, and slightly puckered |
| Pride |  | 53 + 64 | Head up, eyes down |
| Sadness |  | 1 + 4 + 6 + 15 + 17 | Brows knitted, eyes slightly tightened, lip corners depressed, lower lip raised |
| Shame |  | 54 + 64 | Head down, eyes down |
| Surprise |  | 1 + 2 + 5 + 25 + 26 | Eyebrows raised, upper eyelid raised, lips parted, jaw dropped |
| Sympathy |  | 1 + 17 + 24 + 57 | Inner eyebrow raised, lower lip raised, lips pressed together, head slightly forward |

Note. FACS = Facial Action Coding System. These images are published online at http://www.neuroslam.com/emotionwise. Copyright by Lenny Kristal. Reprinted with permission. See the online article for the color version of this table.

Causal antecedent stories.

In different languages, the words that refer to psychological states, including emotions, vary in terms of number, denotation, and connotation, introducing ambiguities into recognition paradigms in which participants match single words to facial-bodily expressions (Russell, 1994). For these reasons, participants in the present investigation matched photographs of facial-bodily expressions to a brief description of a causal antecedent, a method used in past investigations (Camras & Allison, 1985; Dashiell, 1927; Ekman & Cordaro, 2011; Ekman et al., 1969; Gendron et al., 2014; Sauter, Eisner, Ekman, & Scott, 2010; Scott & Sauter, 2006; Simon-Thomas, Keltner, Sauter, Sinicropi-Yao, & Abramson, 2009). Stories add critical information about the situation within which a subjective experience is arising, allowing for more precise communication than what a single word conveys (Izard, 1994; Russell, 1991). We derived our stories from recent recognition studies of vocal bursts (Sauter et al., 2010; Simon-Thomas et al., 2009). In these studies, one-sentence stories that focused on an elicitor and a descriptor of the subjective experience were used in response formats in which participants selected from a set of vocal bursts the one that corresponded best to the story. This method can be traced back to the cross-cultural work of Ekman and colleagues (1969), who crafted simple, one-sentence stories, each of which matched one of the six emotions under investigation. More recent researchers have derived their stories from these originals (Gendron et al., 2014; Sauter et al., 2010; Simon-Thomas et al., 2009). For the present investigation, we used the Sauter et al. (2010) and Simon-Thomas et al. (2009) stories with minimal or no modifications, as suggested by our cultural informants, and created stories of similar length and structure for boredom, contentment, confusion, coyness, and pain. 
For desire, we wrote two stories, one for sex and one for food, the latter being included because of our cultural informants’ recommendations. The story translation procedure was similar to that of previous studies and is described below (e.g., Sauter et al., 2010).

Translations.

We iteratively revised the 19 stories in collaboration with 27 cultural informants (three native speakers from each of the nine countries in the present investigation) until consensus was reached that we had achieved 19 simple stories that could be readily translated into each language (see online supplemental Table S2). A double back-translation method was used for all surveys and causal antecedent stories. The three translators from each culture were chosen for their fluency in both English and the target language. Translator 1 converted all text from English to the target language. Translators 2 and 3 then each separately back-translated the target-language document to English. The experimenters then compared the two back-translations for consistency and correctness with respect to the original. Any discrepancies were discussed with all three translators, and edits were made accordingly. The translators were also instructed to make the stories sound colloquial and not like a direct translation from English.

Recognition task.

For each of the 18 psychological states, participants were presented with a causal antecedent story and photographs of four facial-bodily expressions, which included the target expression. Participants were asked to choose “the expression that best fits the story” or “none of the above” to guard against inflated recognition rates through forced-choice guessing (Russell, 1994). Each antecedent story was presented twice, one time with facial-bodily expressions of female posers and a second time with expressions of male posers. For each psychological state, we included the target expression and three other expressions that were of the same valence (Gendron et al., 2014). Furthermore, one of the alternative choices was the most anatomically similar of the “Basic 6” (see online supplemental Table S3).
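The response-set construction described above can be sketched as follows. This is an illustrative sketch only: the valence grouping, the `SIMILAR_BASIC` mapping, and the function name `build_trial` are hypothetical, and the actual response sets are those listed in online supplemental Table S3.

```python
import random

# Hypothetical positive-valence library, for illustration only.
# Surprise is included because it was treated as neutral and placed in both groups.
POSITIVE = ["amusement", "contentment", "desire", "happiness",
            "interest", "pride", "surprise"]

# Hypothetical mapping from a target to its most anatomically similar "Basic 6" foil.
SIMILAR_BASIC = {"amusement": "happiness", "contentment": "happiness",
                 "desire": "happiness", "pride": "happiness"}


def build_trial(target, valence_group, rng):
    """Return four shuffled photo options: the target plus three same-valence foils."""
    foils = []
    similar = SIMILAR_BASIC.get(target)
    if similar is not None:
        foils.append(similar)  # guarantee the most similar basic expression appears
    remaining = [s for s in valence_group if s != target and s not in foils]
    foils += rng.sample(remaining, 3 - len(foils))
    options = [target] + foils
    rng.shuffle(options)  # randomize on-screen position of the target
    return options


rng = random.Random(2012)  # fixed seed so the sketch is reproducible
options = build_trial("amusement", POSITIVE, rng)
print(options + ["none of the above"])
```

Keeping "none of the above" outside the four photo options mirrors the task description, in which it is an additional response rather than one of the expressions.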

Results

Analysis

For each psychological state, we calculated the percentages of respondents who chose the target response and the other alternatives. The gender of the participant marginally influenced accuracy ratings, such that females (M = 74%, SD = 14%) did slightly better than males (M = 71%, SD = 15%) in overall recognition across eight cultures, t(288.67) = 1.99, p = .047.² The gender of the poser also had a small influence on overall accuracy ratings, such that people across nine cultures were better at recognizing the expressions of female posers (M = 73%, SD = 15%) than those of male posers (M = 71%, SD = 16%), t(961.98) = 2.40, p = .017. Nonparametric binomial tests determined whether participants chose the target expression at higher rates than chance, with chance guessing set at 25%, given that each judgment had four response options. Confusion matrices were not produced because answer choices were drawn at random from a library of same-valence alternatives rather than from the fixed list of responses that a confusion matrix requires. Participants also had the opportunity to choose “I can’t see some of the images,” which was counted as a nonresponse. Surprise, a neutrally valenced state, was included in both valence groups.
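As an illustration of the above-chance comparison, here is a minimal sketch of an exact one-sided binomial test against the 25% chance level. The counts are hypothetical and the function names are assumptions; the sketch is not the authors' analysis script.

```python
from math import comb

CHANCE = 0.25  # four photo options per judgment


def binomial_tail(k: int, n: int, p: float) -> float:
    """Exact P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def above_chance_p_value(n_correct: int, n_judgments: int) -> float:
    """One-sided p value for observing at least n_correct target choices under guessing."""
    return binomial_tail(n_correct, n_judgments, CHANCE)


# Hypothetical example: 30 of 50 judgments matched the target expression.
p_value = above_chance_p_value(n_correct=30, n_judgments=50)
print(f"accuracy = {30 / 50:.0%}, one-sided p = {p_value:.2e}")
```

A one-sided test is used here because the hypothesis is directional: recognition should exceed chance, not merely differ from it.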

Recognizing Facial-Bodily Expressions

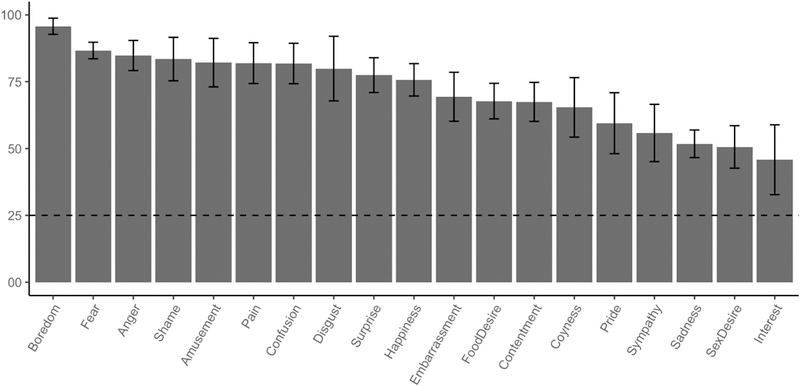

In Figure 1, we present the weighted mean levels of accuracy in recognizing the facial-bodily expressions of 18 psychological states. Across nine cultures, facial-bodily expressions of all 18 states were recognized at above chance levels, and the expressions of 15 states were recognized at rates equivalent to or greater than the 58% accuracy rate observed in the meta-analysis of emotion recognition studies (Elfenbein & Ambady, 2002).

Figure 1.

Recognition rates in identifying 18 facial-bodily expressions across nine cultures. This figure shows the weighted means with bars indicating the standard errors. Dashed lines indicate chance levels (25%).
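The weighted means shown in Figure 1 can be illustrated with a short sketch. The sample sizes are those reported in the Participants section. The per-culture accuracy rates are hypothetical, and both the weighting by sample size and the binomial standard error are assumptions, because the text does not specify how the weights or error bars were computed.

```python
from math import sqrt

# Sample sizes reported in the Participants section.
SAMPLE_SIZES = {"China": 54, "Germany": 54, "India": 44, "Japan": 55,
                "Pakistan": 46, "Poland": 64, "South Korea": 50,
                "Turkey": 61, "United States": 55}


def weighted_mean(values, weights):
    """Mean of values, each weighted by the corresponding weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def binomial_standard_error(p, n):
    """Standard error of a proportion p estimated from n independent judgments."""
    return sqrt(p * (1 - p) / n)


# Hypothetical accuracy rates for one expression in each culture.
accuracy = {"China": 0.70, "Germany": 0.78, "India": 0.61, "Japan": 0.66,
            "Pakistan": 0.59, "Poland": 0.75, "South Korea": 0.68,
            "Turkey": 0.72, "United States": 0.80}

cultures = list(SAMPLE_SIZES)
mean_acc = weighted_mean([accuracy[c] for c in cultures],
                         [SAMPLE_SIZES[c] for c in cultures])
se = binomial_standard_error(mean_acc, sum(SAMPLE_SIZES.values()))
print(f"weighted mean = {mean_acc:.3f}, SE = {se:.3f}")
```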

Beyond the “Basic 6,” the 12 additional expressions were also recognized at above-chance levels across the nine countries taken together. None of these expressions was reliably confused with the most similar “basic” emotion choice (e.g., amusement, contentment, desire, and pride were readily distinguished from happiness). Expressions representing interest and sympathy, however, were not reliably recognized in at least one culture (see online supplemental Table S4 for accuracy rates for each emotion in each of the nine cultures).

Discussion

Drawing upon recent encoding and decoding studies of expressive behavior, we created still photos of facial-bodily expressions of 18 psychological states and presented these photos to participants from nine cultures diverse in their geographical origin, wealth, political context, religion, values, and self-construal. Participants matched a causal antecedent story to one of four photos portraying states of the same valence. Facial-bodily expressions of all 18 psychological states were reliably identified at well above chance levels, and 16 states were reliably recognized in all nine cultures. We note that of the two states not reliably identified in all nine countries—interest and sympathy—both have clear vocal signals, suggesting perhaps that these states are more reliably communicated with the voice (e.g., Cordaro et al., 2016). More generally, the results observed here converge with recent studies of the recognition of psychological states from the voice (Cordaro et al., 2016; Laukka, Neiberg, & Elfenbein, 2014), dynamic expressions in videos (Sauter & Fischer, 2018), voluntarily produced expressions (Cordaro et al., 2018), and open-ended methods of expression generation (Jack, Garrod, & Schyns, 2014) to suggest that upward of 20 psychological states have distinct expressions.

Critical limitations of the methods of this investigation warrant discussion. Perhaps the most critical set of concerns relates to our photographs of facial-bodily expressions. The expressions were produced by only eight posers (as a counterpoint, recent work by Elfenbein and colleagues involved 60 encoders; Elfenbein et al., 2007). Further, our posers were all from the United States, and we only involved single posers of Asian American and African American identity. The limited number of posers and the narrow range of their ethnic backgrounds raise serious questions about the generalizability of our results and whether the 18 psychological states studied here can be recognized in the expressions of people from different cultural backgrounds. Our use of only one Asian American and one African American poser of each gender, furthermore, precluded any possibility of examining within-group and between-group differences in recognition accuracy (Elfenbein & Ambady, 2002).

Importantly, our photographs portrayed prototypical expressions, derived from the findings of previous studies. People, though, express different states, including emotions, in varying and often more subtle or complex ways (e.g., Ekman, 1993; Keltner et al., in press). More prototypical expressions, such as those studied here, tend to be recognized with greater reliability (e.g., Sauter & Fischer, 2018; Simon-Thomas et al., 2009). It is very likely that the levels of recognition accuracy observed in the present investigation were elevated due to the prototypical nature of the expressions; studies of more varying expressions of these states would likely yield lower levels of accuracy. Given these methodological shortcomings, what is clearly needed are studies involving a much wider range of encoders of different ethnic backgrounds who are allowed to express the psychological states in unconstrained, more naturalistic fashion (e.g., see Elfenbein et al., 2007).

It is also important to note the relatively small size of our samples. Although they are comparable in size to most samples in the literature, and our power analysis simulation (see online supplemental Figure S1) suggested they were of sufficient size to detect significant effects given the level of accuracy observed in the Elfenbein and Ambady (2002) meta-analysis (and only 23.3% of the recognition accuracy rates observed in our study were lower than 0.58 across nine countries for 18 expressions), our samples were underpowered in terms of detecting reliable effects of less well-identified expressions. Clearly, it will be important to replicate these results with larger samples. Additionally, it is important to note that our samples involved college students, who tend to demonstrate higher levels of recognition accuracy (Russell, 1994). Within the recognition paradigm, we note that the causal antecedent stories were stereotypical and free of culturally varying content. It will be important for future work to study noncollege students, people in remote cultures, and more naturalistic expressions, as well as gather free-response data (Russell, 1994). It is also important to call the reader’s attention to another limitation of the present investigation: The background of two of our photos—of boredom and confusion—was grayer, which may have provided semantic cues to the interpretation of those expressions.

The present results raise intriguing questions for future studies. What levels of reliable judgment would be observed if the present paradigm were reversed and participants matched single facial expressions to multiple causal antecedent stories? With other kinds of data, might the facial-bodily expressions of the 18 states focused on here reduce to simpler clusters of psychological states, as documented by Jack, Garrod, Yu, Caldara, and Schyns (2012)? How might the brain represent this richer palette of states conveyed in the 18 expressions studied here? Are children as adept at differentiating positive states in facial-bodily expression as they are in differentiating vocalizations of positive states, which begins at age 2 (Wu, Muentener, & Schulz, 2017)? How do members of different cultures vary in the inferences they draw from such expressions? These and other questions await empirical attention.

Acknowledgments

This research was supported in part by a grant from the John Templeton Foundation (88210).

Footnotes

Supplemental materials: http://dx.doi.org/10.1037/emo0000576.supp

¹ Due to a survey error, students in India did not report upon their gender.

² This analysis includes the data from eight cultures but not India.

Contributor Information

Daniel T. Cordaro, Department of Psychology, Yale University.

Rui Sun, Department of Psychology, Peking University.

Shanmukh Kamble, Department of Psychology, Karnatak University of Dharwad.

Niranjan Hodder, Department of Psychology, Karnatak University of Dharwad.

Maria Monroy, Department of Psychology, University of California, Berkeley.

Alan Cowen, Department of Psychology, University of California, Berkeley.

Yang Bai, Department of Psychology, University of California, Berkeley.

Dacher Keltner, Department of Psychology, University of California, Berkeley.

References

- Brosch T, Pourtois G, & Sander D (2010). The perception and categorization of emotional stimuli: A review. Cognition and Emotion, 24, 377–400. 10.1080/02699930902975754

- Campos B, Shiota MN, Keltner D, Gonzaga GC, & Goetz JL (2013). What is shared, what is different? Core relational themes and expressive displays of eight positive emotions. Cognition and Emotion, 27, 37–52. 10.1080/02699931.2012.683852

- Camras LA, & Allison K (1985). Children’s understanding of emotional facial expressions and verbal labels. Journal of Nonverbal Behavior, 9, 84–94. 10.1007/BF00987140

- Clore GL, & Ortony A (1988). Semantic analyses of the affective lexicon. In Hamilton V, Bower G, & Frijda N (Eds.), Cognitive science perspectives on emotion and motivation (pp. 367–397). Amsterdam, the Netherlands: Martinus Nijhoff. 10.1007/978-94-009-2792-6_15

- Cordaro DT, Keltner D, Tshering S, Wangchuk D, & Flynn LM (2016). The voice conveys emotion in ten globalized cultures and one remote village in Bhutan. Emotion, 16, 117–128. 10.1037/emo0000100

- Cordaro DT, Sun R, Keltner D, Kamble S, Huddar N, & McNeil G (2018). Universals and cultural variations in 22 emotional expressions across five cultures. Emotion, 18, 75–93. 10.1037/emo0000302

- Cowen AS, & Keltner D (2018). Clarifying the conceptualization, dimensionality, and structure of emotion: Response to Barrett and Colleagues. Trends in Cognitive Sciences, 22, 274–276. 10.1016/j.tics.2018.02.003

- Crivelli C, Jarillo S, Russell JA, & Fernández-Dols JM (2016). Reading emotions from faces in two indigenous societies. Journal of Experimental Psychology: General, 145, 830–843. 10.1037/xge0000172

- Dashiell JF (1927). A new method of measuring reactions to facial expression of emotion. Psychological Bulletin, 24, 174–175.

- Eisenberg N, Schaller M, Fabes RA, Bustamante D, Mathy RM, Shell R, & Rhodes K (1988). Differentiation of personal distress and sympathy in children and adults. Developmental Psychology, 24, 766–775. 10.1037/0012-1649.24.6.766

- Ekman P (1992). An argument for basic emotions. Cognition and Emotion, 6, 169–200. 10.1080/02699939208411068

- Ekman P (1993). Facial expression and emotion. American Psychologist, 48, 384–392. 10.1037/0003-066X.48.4.384

- Ekman P (2016). What scientists who study emotion agree about. Perspectives on Psychological Science, 11, 31–34. 10.1177/1745691615596992

- Ekman P, & Cordaro D (2011). What is meant by calling emotions basic. Emotion Review, 3, 364–370. 10.1177/1754073911410740

- Ekman P, & Friesen WV (1978). Facial action coding system: A technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press.

- Ekman P, Friesen WV, & Ellsworth P (1972). Emotion in the human face: Guidelines for research and an integration of findings. New York, NY: Pergamon Press.

- Ekman P, Sorenson ER, & Friesen WV (1969). Pan-cultural elements in facial displays of emotion. Science, 164, 86–88. 10.1126/science.164.3875.86

- Elfenbein HA, & Ambady N (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128, 203–235. 10.1037/0033-2909.128.2.203

- Elfenbein HA, Beaupré M, Lévesque M, & Hess U (2007). Toward a dialect theory: Cultural differences in the expression and recognition of posed facial expressions. Emotion, 7, 131–146. 10.1037/1528-3542.7.1.131

- Gendron M, Roberson D, van der Vyver JM, & Barrett LF (2014). Perceptions of emotion from facial expressions are not culturally universal: Evidence from a remote culture. Emotion, 14, 251–262. 10.1037/a0036052

- Goetz JL, Keltner D, & Simon-Thomas E (2010). Compassion: An evolutionary analysis and empirical review. Psychological Bulletin, 136, 351–374. 10.1037/a0018807

- Gonzaga GC, Keltner D, Londahl EA, & Smith MD (2001). Love and the commitment problem in romantic relations and friendship. Journal of Personality and Social Psychology, 81, 247–262. 10.1037/0022-3514.81.2.247 [DOI] [PubMed] [Google Scholar]

- Grammer K, Kruck KB, & Magnusson MS (1998). The courtship dance: Patterns of nonverbal synchronization in opposite-sex encounters. Journal of Nonverbal Behavior, 22, 3–29. 10.1023/A:1022986608835 [DOI] [Google Scholar]

- Grunau RV, & Craig KD (1987). Pain expression in neonates: Facial action and cry. Pain, 28, 395–410. 10.1016/0304-3959(87)90073-X [DOI] [PubMed] [Google Scholar]

- Haidt J, & Keltner D (1999). Culture and facial expression: Open-ended methods find more faces and a gradient of recognition. Cognition and Emotion, 13, 225–266. 10.1080/026999399379267 [DOI] [Google Scholar]

- Hess U, & Hareli S (2017). The social signal value of emotions: The role of contextual factors in social inferences drawn from emotion displays. In Fernández-Dols J & Russell JA (Eds.), The science of facial expression (pp. 375–393). New York, NY: Oxford University Press. [Google Scholar]

- Hess U, & Thibault P (2009). Darwin and emotion expression. American Psychologist, 64, 120–128. 10.1037/a0013386 [DOI] [PubMed] [Google Scholar]

- Hofstede G, Hofstede GJ, & Minkov M (2010). Cultures and organizations: Software of the mind: Revised and expanded (3rd ed.). New York, NY: McGraw-Hill. [Google Scholar]

- Izard CE (1972). Patterns of emotion. New York, NY: Academic Press. [Google Scholar]

- Izard CE (1994). Innate and universal facial expressions: Evidence from developmental and cross-cultural research. Psychological Bulletin, 115, 288–299. 10.1037/0033-2909.115.2.288 [DOI] [PubMed] [Google Scholar]

- Jack RE, Garrod OGB, & Schyns PG (2014). Dynamic facial expressions of emotion transmit an evolving hierarchy of signals over time. Current Biology, 24, 187–192. 10.1016/j.cub.2013.11.064 [DOI] [PubMed] [Google Scholar]

- Jack RE, Garrod OGB, Yu H, Caldara R, & Schyns PG (2012). Facial expressions of emotion are not culturally universal. Proceedings of the National Academy of Sciences of the United States of America, 109, 7241–7244. 10.1073/pnas.1200155109 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keltner D (1995). Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. Journal of Personality and Social Psychology, 68, 441–454. 10.1037/0022-3514.68.3.441 [DOI] [Google Scholar]

- Keltner D, & Bonanno GA (1997). A study of laughter and dissociation: Distinct correlates of laughter and smiling during bereavement. Journal of Personality and Social Psychology, 73, 687–702. 10.1037/0022-3514.73.4.687 [DOI] [PubMed] [Google Scholar]

- Keltner D, & Buswell BN (1997). Embarrassment: Its distinct form and appeasement functions. Psychological Bulletin, 122, 250–270. 10.1037/0033-2909.122.3.250 [DOI] [PubMed] [Google Scholar]

- Keltner D, & Cordaro DT (2016). Understanding multimodal emotional expressions: Recent advances in basic emotion theory. Retrieved from http://emotionresearcher.com/ [Google Scholar]

- Keltner D, & Haidt J (1999). Social functions of emotions at four levels of analysis. Cognition and Emotion, 13, 505–521. 10.1080/026999399379168 [DOI] [Google Scholar]

- Keltner D, & Kring AM (1998). Emotion, social function, and psychopathology. Review of General Psychology, 2, 320–342. 10.1037/1089-2680.2.3.320 [DOI] [Google Scholar]

- Keltner D, Tracy J, Sauter DA, Cordaro DC, & McNeil G (2016). Expression of emotion. In Barrett LF & Lewis M (Eds.), Handbook of emotions (pp. 467–482). New York, NY: Guilford Press. [Google Scholar]

- Keltner D, Tracy JL, Sauter D, & Cowen A (in press). What basic emotion theory really says for the 21st century study of emotion. Journal of Nonverbal Behavior. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laukka P, Neiberg D, & Elfenbein HA (2014). Evidence for cultural dialects in vocal emotion expression: Acoustic classification within and across five nations. Emotion, 14, 445–449. 10.1037/a0036048 [DOI] [PubMed] [Google Scholar]

- Lench HC, Flores SA, & Bench SW (2011). Discrete emotions predict changes in cognition, judgment, experience, behavior, and physiology: A meta-analysis of experimental emotion elicitations. Psychological Bulletin, 137, 834–855. 10.1037/a0024244 [DOI] [PubMed] [Google Scholar]

- Matsumoto D, Yoo SH, Fontaine J, Anguas-Wong AM, Arriola M, Ataca B, … Grossi E (2008). Mapping expressive differences around the world: The relationship between emotional display rules and individualism versus collectivism. Journal of Cross-Cultural Psychology, 39, 55–74. 10.1177/0022022107311854 [DOI] [Google Scholar]

- Niedenthal PM, Rychlowska M, & Wood A (2017). Feelings and contexts: Socioecological influences on the nonverbal expression of emotion. Current Opinion in Psychology, 17, 170–175. 10.1016/j.copsyc.2017.07.025 [DOI] [PubMed] [Google Scholar]

- Norenzayan A, & Heine SJ (2005). Psychological universals: What are they and how can we know? Psychological Bulletin, 131, 763–784. 10.1037/0033-2909.131.5.763 [DOI] [PubMed] [Google Scholar]

- Reddy V (2000). Coyness in early infancy. Developmental Science, 3, 186–192. 10.1111/1467-7687.00112 [DOI] [Google Scholar]

- Reeve J (1993). The face of interest. Motivation and Emotion, 17, 353–375. 10.1007/BF00992325 [DOI] [Google Scholar]

- Rozin P, & Cohen AB (2003). High frequency of facial expressions corresponding to confusion, concentration, and worry in an analysis of naturally occurring facial expressions of Americans. Emotion, 3, 68–75. 10.1037/1528-3542.3.1.68 [DOI] [PubMed] [Google Scholar]

- Ruch W (1997). State and trait cheerfulness and the induction of exhilaration: A FACS study. European Psychologist, 2, 328–341. 10.1027/1016-9040.2.4.328 [DOI] [Google Scholar]

- Russell JA (1991). Culture and the categorization of emotions. Psychological Bulletin, 110, 426–450. 10.1037/0033-2909.110.3.426 [DOI] [PubMed] [Google Scholar]

- Russell JA (1994). Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychological Bulletin, 115, 102–141. 10.1037/0033-2909.115.1.102 [DOI] [PubMed] [Google Scholar]

- Sauter DA, Eisner F, Ekman P, & Scott SK (2010). Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proceedings of the National Academy of Sciences of the United States of America, 107, 2408–2412. 10.1073/pnas.0908239106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sauter DA, & Fischer AH (2018). Can perceivers recognise emotions from spontaneous expressions? Cognition and Emotion, 32, 504–515. 10.1080/02699931.2017.1320978 [DOI] [PubMed] [Google Scholar]

- Scherer KR, & Ellgring H (2007). Multimodal expression of emotion: Affect programs or componential appraisal patterns? Emotion, 7, 158–171. 10.1037/1528-3542.7.1.158 [DOI] [PubMed] [Google Scholar]

- Schwartz S (2008). The 7 Schwartz cultural value orientation scores for 80 countries. Retrieved from https://www.researchgate.net/publication/304715744_The_7_Schwartz_cultural_value_orientation_scores_for_80_countries [Google Scholar]

- Scott S, & Sauter D (2006). Non-verbal expressions of emotion acoustics, valence and cross cultural factors. Speech Communication, 40, 99–116. [Google Scholar]

- Shariff AF, & Tracy JL (2011). What are emotion expressions for? Current Directions in Psychological Science, 20, 395–399. 10.1177/0963721411424739 [DOI] [Google Scholar]

- Simon-Thomas ER, Keltner DJ, Sauter D, Sinicropi-Yao L, & Abramson A (2009). The voice conveys specific emotions: Evidence from vocal burst displays. Emotion, 9, 838–846. 10.1037/a0017810 [DOI] [PubMed] [Google Scholar]

- Snowdon CT (2003). Expression of emotion in nonhuman animals. In Davidson RJ, Scherer KR, & Goldsmith HH (Eds.), Handbook of affective sciences (pp. 457–534). New York, NY: Oxford University Press. [Google Scholar]

- Spunt RP, Ellsworth E, & Adolphs R (2017). The neural basis of understanding the expression of the emotions in man and animals. Social Cognitive and Affective Neuroscience, 12, 95–105. 10.1093/scan/nsw161 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tracy JL, & Matsumoto D (2008). The spontaneous expression of pride and shame: Evidence for biologically innate nonverbal displays. Proceedings of the National Academy of Sciences of the United States of America, 105, 11655–11660. 10.1073/pnas.0802686105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tracy JL, & Robins RW (2004). Show your pride: Evidence for a discrete emotion expression. Psychological Science, 15, 194–197. 10.1111/j.0956-7976.2004.01503008.x [DOI] [PubMed] [Google Scholar]

- van Kleef GA (2016). The interpersonal dynamics of emotion: Toward an integrative theory of emotions as social information. New York, NY: Cambridge University Press. 10.1017/CBO9781107261396 [DOI] [Google Scholar]

- Widen SC, & Russell JA (2013). Children’s recognition of disgust in others. Psychological Bulletin, 139, 271–299. 10.1037/a0031640 [DOI] [PubMed] [Google Scholar]

- Wu Y, Muentener P, & Schulz LE (2017). One- to four-year-olds connect diverse positive emotional vocalizations to their probable causes. Proceedings of the National Academy of Sciences of the United States of America, 114, 11896–11901. 10.1073/pnas.1707715114 [DOI] [PMC free article] [PubMed] [Google Scholar]
