Abstract

Background

According to the September 2015 Institute of Medicine report, Improving Diagnosis in Health Care, each of us is likely to experience at least one diagnostic error in our lifetime, often with devastating consequences. Traditionally, diagnostic decision making has been the sole responsibility of an individual clinician. However, diagnosis involves interaction among interprofessional team members with different training, skills, cultures, knowledge, and backgrounds. Moreover, diagnostic error is prevalent in interruption-prone environments such as the emergency department, where the loss of information may hinder a correct diagnosis.

Objective

The overall purpose of this protocol is to improve team-based diagnostic decision making by focusing on data analytics and informatics tools that improve collective information management.

Methods

To achieve this goal, we will identify the factors contributing to failures in team-based diagnostic decision making (aim 1), understand the barriers of using current health information technology tools for team collaboration (aim 2), and develop and evaluate a collaborative decision-making prototype that can improve team-based diagnostic decision making (aim 3).

Results

We are collecting data for this study from 2019 to 2020. The results are expected to be published between 2020 and 2021.

Conclusions

The results from this study can shed light on how to improve diagnostic decision making by incorporating the diagnostic rationale of all team members. We believe that involving all team members and using informatics is a promising direction for reducing diagnostic errors.

International Registered Report Identifier (IRRID)

DERR1-10.2196/16047

Keywords: informatics; health care team; data science; decision support techniques; decision-making, computer-assisted; data display; diagnosis, computer-assisted

Introduction

Background

Most Americans will experience at least one diagnostic error in their lifetime, sometimes with devastating consequences (Institute of Medicine [IOM] report 2015). Lack of timely attention to diagnostic error can have dire implications for public health, as exemplified by the widely reported diagnostic error regarding Ebola virus infection in a Dallas hospital emergency department (ED) [1]. Diagnostic error is likely to be one of the most common types of error in ED settings [2]. The high-paced, high-volume, low-certainty, multiagent, dynamic, and complex ED environment may lead to diagnostic errors and adverse events [3-6]. In such an interruption-prone environment, vital patient information and cues needed to make a diagnosis are often lost as physicians, residents, nurses, and other health care providers collect and integrate information.

The team-based diagnostic approach has the potential to reduce errors. Although the diagnostic process is currently often the responsibility of an individual clinician, ideally it involves collaboration among multiple health care professionals [7]. To manage increasing complexity, clinicians will need to collaborate effectively and draw on the knowledge and expertise of other health care professionals. Collaborative problem solving has been found to improve diagnostic performance by allowing team members to combine, sort, and filter new information [8-11]. However, current diagnostic decision support tools do not support team-based decision making: they generate diagnostic hypotheses only from information already entered into the electronic health record (EHR) [12-15], whereas information loss in the ED is more common during team communication among health care professionals [16,17]. Therefore, the recent IOM report calls for research into how, where, when, and by whom vital information is entered into the system, in order to understand the etiology of failures in the team-based diagnostic decision-making process [18].

Research has shown that technology can positively affect provider interactions and coordination, improving group dynamics and efficiency [19]. Various computer-supported cooperative work studies in health care have shown that clinicians use working records or provisional information to facilitate team collaboration, mostly on paper during case discussions, to exchange key information [20-22]. These working records are essentially summaries of the patient's situation or important information cues that providers write down during patient interviews or the information-gathering stage. Currently, the information documented on these working records is not transferred to the EHR and is often discarded after knowledge-sharing sessions. Moreover, the decision support tools in the EHR do not support such computerized transitional documentation [23]. For example, ED nurses record patients' medical histories in transitional documents, and clinicians write down diagnosis-related patient interview information on paper or sticky notes [23]. Yet this informal information, if shared with the team, can help the team achieve shared situation awareness and reach the correct diagnosis [24]. Research on collaborative environments has shown that sharing a physical workspace to communicate information can improve activity awareness and coordination [25-28]. For example, Defense Collaboration Services (developed by the US Department of Defense) provides shared Web-based platforms that different team members can access to raise information needs and enter vital information cues related to mission planning [29,30]. Similar real-time platforms in health care can provide an overview of the patient's situation from different information-gathering agents (eg, nurses, residents, students, and physicians) to help the team reach the correct diagnosis.

Objective

Sharing informal information in a real-time workspace can help the team communicate and interpret vital information, which can improve team-based diagnostic decision making in the ED by reducing information loss. The objective of our study is to develop a collaborative prototype for improving team diagnostic decision making using an informatics approach.

Methods

Overview

We will focus on all types of diagnoses for adult patients who present to the ED in both the trauma and medicine units, so that the future prototype can be generalized to all ED patients. The overall methodology is described in the following 3 aims.

Aim 1: identify factors contributing to failures in team-based diagnostic decision making

Aim 2: understand the barriers in using health information technology (IT) tools for team collaboration

Aim 3: design and evaluate a collaborative decision-making prototype

Aim 1: Identify Factors Contributing to Failures in Team-Based Diagnostic Decision Making

The research questions are as follows: (1) What are the specific diagnostic workflow processes that are vulnerable to failures in information gathering, integrating, interpreting, and establishing an explanation of the correct diagnosis? and (2) What specific information cues do teams share with each other to reach a diagnosis collaboratively?

Aim 1 Methods Overview

We will use a combination of direct observation, hierarchical task analysis (HTA), and health care failure mode and effect analysis (HFMEA) to analyze team tasks in the diagnosis process [31-33]. HTA describes the task being analyzed by breaking it down into a hierarchy of goals, subgoals, operations, and plans [31]. The HFMEA technique will help us detect possible failure modes of each subprocess and identify potential causes, effects, and solutions for failures in the team diagnostic process [32]. A research assistant with a background in qualitative coding will analyze the data. The steps involved in this method are as follows:

Step 1: observe scenarios in ED settings and transcribe the scenarios from audio recordings

Step 2: use data from the transcription to create HTA process maps

Step 3: conduct HFMEA to identify failures and improvement strategies

Step 1: Observe Scenarios

Observation will start once the patient is admitted to the ED. A total of 2 research assistants will simultaneously observe the ED nurse and the attending physician. The observations will be nonintrusive, and researchers will turn on audio recorders only when team members are discussing the patient case or communicating with each other about it [34-40]. We will take notes and audio record the conversations, interactions, and case discussions among ED team members. We will transcribe the audio recordings and collect the transitional information that the nurse and the attending physician record on paper.

Step 2: Construct Hierarchical Task Analysis Process Maps

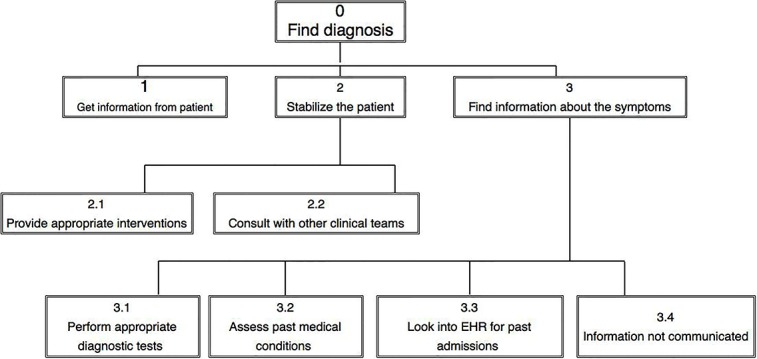

The research team will independently analyze the observation transcripts and construct an HTA process map for each case, continuing until no further tasks related to reaching the diagnosis remain. We will merge the physician's and the nurse's goals and tasks to construct the process maps. For example, if the highest goal is finding the diagnosis, we will merge the nursing goal of finding the patient's home medication history as a subgoal under finding the diagnosis. We will focus on the main goals associated with finding the correct diagnosis and represent the task steps needed to accomplish those goals in a hierarchical decision tree (Figure 1) [31,41,42]. After we have developed HTA process maps for all 40 patient cases, we will validate them with 2 ED physicians [41,43]. Finally, we will map the failure-prone task steps in the decision tree to the list of failures across the diagnostic process developed by the IOM committee, as described in Table 1 [44]. For example, if consultation with another clinical team was not possible (Figure 1, subtask 2.2), we will code it as information integration 4 (information from other teams not available); if past medical conditions were missed (Figure 1, subtask 3.2), we will code it as information interpretation 1 (inaccurate interpretation of history). After mapping the failure-prone subtasks, we will start the HFMEA process.

Figure 1.

Hierarchical task analysis diagram: tasks and subtasks are designated by numbers. EHR: electronic health record.
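An HTA process map is a tree of goals, subgoals, and operations with attached plans. As a rough illustration of how a merged physician-nurse map and its dotted numbering (as in Figure 1) might be represented for analysis, the Python sketch below models each node as a small dataclass. The `Task` class, the `number_tasks` helper, and every task name are hypothetical; the names were chosen only so that subtasks 2.2, 3.2, and 3.3 line up with the examples in the text, and real maps would be built from the observation transcripts.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical node in an HTA process map: a goal, subgoal, or operation."""
    name: str
    performer: str                                # e.g. "physician" or "nurse"
    plan: str = ""                                # HTA plan: order/conditions for subtasks
    subtasks: list = field(default_factory=list)


def number_tasks(task, prefix="0"):
    """Depth-first walk returning (number, name, performer) rows.

    The overall goal is numbered 0, its children 1, 2, 3, ..., and deeper
    levels get dotted numbers such as 2.2 or 3.3, as in Figure 1.
    """
    rows = [(prefix, task.name, task.performer)]
    for i, sub in enumerate(task.subtasks, start=1):
        child = str(i) if prefix == "0" else f"{prefix}.{i}"
        rows.extend(number_tasks(sub, child))
    return rows


# Illustrative physician goal tree; task names are placeholders, not observed data.
find_diagnosis = Task(
    "Find diagnosis", "physician",
    plan="Do 1, then 2 and 3; repeat 2 when new cues arrive",
    subtasks=[
        Task("Interview patient", "physician"),
        Task("Gather information", "physician", subtasks=[
            Task("Order tests", "physician"),
            Task("Consult with clinical teams", "physician"),
        ]),
        Task("Interpret information", "physician", subtasks=[
            Task("Review test results", "physician"),
            Task("Review past medical conditions", "physician"),
            Task("Review EHR for past admissions", "physician"),
        ]),
    ],
)

# Merge the nurse's goal as a subgoal under the shared top-level goal.
find_diagnosis.subtasks.append(Task("Find home medication history", "nurse"))

for number, name, performer in number_tasks(find_diagnosis):
    print(f"{number:<4} {name} ({performer})")
```

Keeping the performer on every node means the merged map still records which team member owns each step, which is what the later failure coding and HFMEA discussion need.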

Table 1.

Nature of failures and description derived from the Institute of Medicine’s report.

| Nature of failure | Failure description |
|---|---|
| Information gathering 1 | Unable to elicit key information |
| Information gathering 2 | Unable to get key history |
| Information gathering 3 | Missed key physical findings |
| Information gathering 4 | Failed to order or perform needed tests |
| Information gathering 5 | Inappropriate review of test results |
| Information gathering 6 | Wrong tests ordered |
| Information gathering 7 | Tests ordered in wrong sequence |
| Information gathering 8 | Technical errors in handling, labeling, and processing of tests |
| Information integration 1 | Wrong hypothesis generation |
| Information integration 2 | Inaccurate suboptimal weighing and prioritization |
| Information integration 3 | Unable to recognize or weigh urgency |
| Information integration 4 | Information from other teams not available |
| Information interpretation 1 | Inaccurate interpretation of history |
| Information interpretation 2 | Inaccurate interpretation of physical findings |
| Information interpretation 3 | Inaccurate interpretation of test results |
| Establish explanation of diagnosis 1 | Delay in considering diagnosis |
| Establish explanation of diagnosis 2 | Patient develops infections or other complications |
| Establish explanation of diagnosis 3 | Information missed to form hypothesis because of health information technology |
| Establish explanation of diagnosis 4 | Signs and symptoms not recognized for specific disease |
| Establish explanation of diagnosis 5 | Delay or missed follow-up |
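Coding failure-prone subtasks against Table 1 amounts to a lookup from HTA subtask numbers to failure codes. A minimal Python sketch, assuming a simple in-memory coding sheet (the `code_failure` and `failures_by_stage` helpers and the dictionary layout are hypothetical, not tools named in the protocol), applies the two examples given in the text for subtasks 2.2 and 3.2 and rejects any code that is not in Table 1.

```python
from collections import Counter

# Failure codes and descriptions transcribed from Table 1.
IOM_FAILURES = {
    "Information gathering 1": "Unable to elicit key information",
    "Information gathering 2": "Unable to get key history",
    "Information gathering 3": "Missed key physical findings",
    "Information gathering 4": "Failed to order or perform needed tests",
    "Information gathering 5": "Inappropriate review of test results",
    "Information gathering 6": "Wrong tests ordered",
    "Information gathering 7": "Tests ordered in wrong sequence",
    "Information gathering 8": "Technical errors in handling, labeling, and processing of tests",
    "Information integration 1": "Wrong hypothesis generation",
    "Information integration 2": "Inaccurate suboptimal weighing and prioritization",
    "Information integration 3": "Unable to recognize or weigh urgency",
    "Information integration 4": "Information from other teams not available",
    "Information interpretation 1": "Inaccurate interpretation of history",
    "Information interpretation 2": "Inaccurate interpretation of physical findings",
    "Information interpretation 3": "Inaccurate interpretation of test results",
    "Establish explanation of diagnosis 1": "Delay in considering diagnosis",
    "Establish explanation of diagnosis 2": "Patient develops infections or other complications",
    "Establish explanation of diagnosis 3": "Information missed to form hypothesis because of health information technology",
    "Establish explanation of diagnosis 4": "Signs and symptoms not recognized for specific disease",
    "Establish explanation of diagnosis 5": "Delay or missed follow-up",
}


def code_failure(coding_sheet, subtask, code):
    """Record a Table 1 failure code against an HTA subtask number (hypothetical helper).

    Unknown codes raise KeyError so that typos are caught rather than
    silently creating new categories.
    """
    if code not in IOM_FAILURES:
        raise KeyError(f"not a Table 1 failure code: {code!r}")
    coding_sheet.setdefault(subtask, []).append(code)


def failures_by_stage(coding_sheet):
    """Tally coded failures by diagnostic stage (the code minus its trailing number)."""
    return Counter(code.rsplit(" ", 1)[0]
                   for codes in coding_sheet.values() for code in codes)


sheet = {}
# The two examples given in the text:
code_failure(sheet, "2.2", "Information integration 4")    # consultation not possible
code_failure(sheet, "3.2", "Information interpretation 1")  # past medical conditions missed

for subtask, codes in sheet.items():
    for code in codes:
        print(subtask, code, "->", IOM_FAILURES[code])
print(failures_by_stage(sheet))
```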

Step 3: Conduct Health Care Failure Mode and Effect Analysis

We will form a multidisciplinary ED team consisting of 1 ED physician, 1 ED resident, and 1 ED nurse. The team will hold a brainstorming session on each HTA process map and discuss the vulnerable junctions (task steps) related to patient safety, information loss, misinterpretation, group conflict, and poor communication. The team will also discuss the failure-prone task steps identified in step 2 to find potential solutions. The team will rate the severity of each failure-prone task step on a scale of 1 to 4: minor (score 1), moderate (score 2), major (score 3), or catastrophic (score 4). The team will then rate the probability of occurrence of such incidents on a scale of 1 to 4: remote (score 1: happening rarely in 2 years), uncommon (score 2: once a year), occasional (score 3: every 3-6 months), or frequent (score 4: every month). We will combine the severity and probability scores to obtain a hazard score and will focus only on subtasks with a hazard score of 5 or greater when identifying potential solutions. Finally, the team will be asked to find potential solutions, including health IT interventions, that can improve team communication and the team diagnostic decision-making process. The final results for each of the 40 patient cases will be presented in the format of Table 2.
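The scoring and filtering rule can be sketched in a few lines of Python. The protocol says only that severity and probability are "combined". The VA National Center for Patient Safety's HFMEA scoring matrix multiplies the two ratings, but the example hazard scores in Table 2 (5 and 7) cannot be products of two 1 to 4 ratings, so this sketch assumes the ratings are summed (range 2 to 8). The ratings below are hypothetical, not the ratings behind Table 2.

```python
SEVERITY = {"minor": 1, "moderate": 2, "major": 3, "catastrophic": 4}
PROBABILITY = {"remote": 1, "uncommon": 2, "occasional": 3, "frequent": 4}
THRESHOLD = 5  # protocol: focus on subtasks with a hazard score of 5 or greater


def hazard_score(severity, probability):
    """ASSUMPTION: ratings are summed (range 2-8), consistent with Table 2's scores."""
    return SEVERITY[severity] + PROBABILITY[probability]


def prioritize(ratings):
    """Return (subtask, score) pairs at or above THRESHOLD, highest score first."""
    scored = [(subtask, hazard_score(sev, prob)) for subtask, sev, prob in ratings]
    kept = [row for row in scored if row[1] >= THRESHOLD]
    return sorted(kept, key=lambda row: row[1], reverse=True)


# Hypothetical ratings from one brainstorming session (not study data).
ratings = [
    ("2.2 Consult with clinical teams", "moderate", "occasional"),  # 2 + 3 = 5
    ("3.3 Review EHR for past admissions", "major", "frequent"),    # 3 + 4 = 7
    ("1 Interview patient", "minor", "uncommon"),                   # 1 + 2 = 3, dropped
]
print(prioritize(ratings))
```

If the team instead adopts the multiplicative matrix, only `hazard_score` changes, although the threshold of 5 would then need to be revisited because products skip from 4 to 6.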

Table 2.

Factors contributing to failure in team-based diagnostic decision-making process.

| Hazard score | Subtask | Failure mode | Failure description | Causes | Effects | Remedial strategy |
|---|---|---|---|---|---|---|
| 5 | Subtask 2.2: consult with clinical teams | Information integration 4 | Information from other teams not available | Radiology is overwhelmed with tasks | Delay in patient diagnosis | Update radiology team to send urgent patient results first |
| 7 | Subtask 3.3: information overlooked in EHR<sup>a</sup> for past admissions | Establish explanation of diagnosis 3 | Information missed to form hypothesis because of health information technology | Information lost because of interruption | Wrong diagnosis | Actively engage different team members to focus on multiple data sources in EHR |

<sup>a</sup>EHR: electronic health record.

Brainstorming will take place over multiple sessions, each limited to 50 min and audio recorded and transcribed. The principal investigator will conduct the final analysis of the transcripts to identify the most failure-prone task steps and possible solutions.

Study Subjects and Recruitment Methods

We will recruit 4 ED physicians and 4 ED nurses for the observation study to increase provider diversity. For the HFMEA part of the study, we will recruit 2 ED physicians, 2 ED nurses, and 2 ED residents. A total of 14 providers will be recruited from 3 hospital sites by email and telephone, and each will receive a US $50 gift card for participation.

Sample Size Justification

On the basis of our pilot study sample size, we will observe 40 patient cases. We will include only adults (aged >18 years) for selecting cases. We will observe each scenario until the team reaches a consensus about the diagnosis. Previous studies have observed 32 to 50 cases for reaching data saturation [43,45-47].

Team Members Makeup

For this aim, we will consider the core ED team to be the attending physician and the attending nurse. However, we will also include senior and junior residents, radiology physicians, other nursing staff, pharmacists, and support staff, depending on the makeup of the team on that particular shift.

Limitations

The HTA and HFMEA methods are time consuming, particularly observation, construction of the HTA, and data analysis. However, we consider a 3-year timeline sufficient for this work. In addition, step 2 (HTA process maps) may not identify enough failure-prone steps. However, the group brainstorming sessions in step 3 (HFMEA) will identify additional failure-prone steps as well as discuss those found in the HTA process maps, so the 2 steps will complement each other.

Aim 2: Understand the Barriers in Using Health Information Technology Tools for Team Collaboration

The research questions are as follows: (1) What are the barriers to sharing information using current health IT tools? and (2) What are the leverage points (ie, critical pieces of information that lead to a useful decision path [48]) for the team during complex diagnostic decision-making tasks and negotiating conflict [49]?

Aim 2 Methods Overview

We will conduct Critical Incident Technique (CIT)–based team Cognitive Task Analysis (CTA) interviews [50-53]. CTA is a process for understanding cognition during the performance of complex tasks; it provides a mechanism for eliciting and representing general and specific knowledge [54-56]. Team CTA extends CTA by treating a team as a single cognitive entity (ie, more than a collection of individuals) [57,58]. CIT comprises a set of procedures for gathering facts about human behavior in a recent complex situation. In this study, team CTA will help us identify the barriers that ED team members face when gathering, integrating, and interpreting information and forming hypotheses about the diagnosis using current health IT tools. Effective teamwork includes motivating members of each discipline and gathering information from them, regardless of interdisciplinary conflicts [59].

Procedure

We will ask the team members to describe a recent complex case that was challenging to solve as a team for an admitted patient. Experiences related to critical incidents in interprofessional teamwork will be evoked by asking open-ended questions: “Are there any difficulties or challenges involved in working together using the current health IT tools?” followed by “Can you describe a situation that you remember in detail when you experienced such a difficulty?” Once the situation is established with time-specific detail, follow-up questions and probes will be asked to elicit the team’s dynamic decision-making strategies to negotiate conflicts, the specific actions by each team member, and the process by which the problem was solved. We will focus on how team members prioritize and rank patient information to negotiate conflict to reach consensus.

Study Subjects and Recruitment Methods

We will recruit 5 ED teams by email and telephone. Each team will consist of 4 clinicians: 1 attending ED physician, 1 ED nurse, 1 ED resident, and 1 ED pharmacist. Inclusion criteria will be at least 1 year of experience as a team member and recent experience (within the last 3 months) working in the ED. Each clinician will receive a US $50 gift card for participation.

Data Collection

We will use the transcripts from the audio recordings of the interviews for data analysis. All patient identifiers will be removed.

Study Measures

The study measures are as follows: (1) cues and patterns of the team members’ preferences for using current health IT tools, (2) leverage points (cues related to shared and complementary cognition), (3) common sources of conflict and resolution strategies [60], and (4) complementary knowledge and skills to synthesize task elements.

Data Analysis

A total of 2 investigators will independently code the transcripts from the team CTA interviews and merge the individual codes into subthemes and later into broader themes through a process of negotiated consensus. We will code based on a qualitative content analysis process [61-63]. We will use ATLAS.ti software for data analysis.

Sample Size Justification

We will interview 20 providers for the team CTA interviews. Previous studies used a range of 6 to 30 providers for successfully conducting similar team CTA interviews [64-67].

Limitations

CTA studies rely on participants' memories. It can be difficult to explore past events, as key pieces of information may not have been stored accurately in memory [68]. Therefore, we conducted a pilot study to develop questions that can evoke the responses needed for data analysis [55].

Aim 3: Design and Evaluate a Collaborative Decision-Making Prototype

Aim 3 Methods Overview

We will develop complex case vignettes, design the prototype, and conduct the usability study.

Complex Team-Based Diagnostic Case Vignette Design

We will design 8 complex clinical vignettes based on the team-based diagnostic problems identified in aims 1 and 2 [69]. We will validate the complexity of the cases with 3 ED physicians. The cases will be presented to participants in a mock EHR.

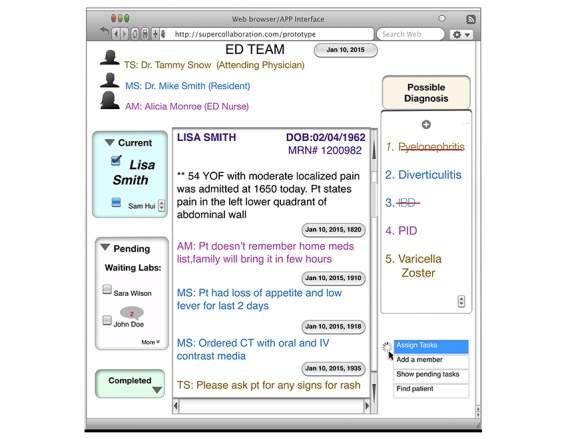

Prototype Design: Preliminary Design Concept

The purpose of this prototype is to gather and integrate vital patient information from different team members so that the team can rank and filter information and make informed diagnostic decisions collaboratively. The results from aim 1 will inform the design by making failure-prone task steps the main focus of the interface (eg, if being unable to get key history emerges as a major failure-prone task, a separate tab for pending patient information should be created in the interface). The results from aim 2 will provide specific design requirements, such as knowledge characteristics (eg, the team should be able to see updates for all patients on 1 screen) or expertise process requirements (eg, comments from each team member should be grouped by medical expertise to improve trust in the information). For example, in this shared platform (Figure 2), all team members can enter relevant information about the patient (color coded as mocha for ED physicians, blue for residents, and magenta for nurses). Everyone can also add hypotheses about the diagnosis in the possible diagnosis tab, but only the ED physician can delete a diagnosis (shown as a red strike-through in the diagnosis tab). Physicians and other team members can also assign tasks and group patients as awaiting laboratory results or completed (left side of the interface). This is an initial version only; the design will be refined based on aim 1, aim 2, and the iterative design in aim 3 to ensure patient safety.

Figure 2.

Screenshot of the mock-up user interface for the collaborative decision-making prototype.
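The role rules described for the mock-up (color-coded entries by role, diagnostic hypotheses open to everyone, deletion reserved for the ED physician, task assignment, and patient grouping) can be expressed as a small data model. The following Python sketch is one hypothetical way to encode them; the class names, role keys, and patient identifier are illustrative assumptions, not the prototype's actual implementation.

```python
from dataclasses import dataclass, field

# Colors follow the Figure 2 description; the role keys are hypothetical.
ROLE_COLORS = {"ed_physician": "mocha", "resident": "blue", "nurse": "magenta"}


@dataclass
class Entry:
    role: str
    text: str

    @property
    def color(self):
        return ROLE_COLORS[self.role]


@dataclass
class Hypothesis:
    diagnosis: str
    proposed_by: str
    struck_out: bool = False  # rendered as a red strike-through when True


@dataclass
class PatientBoard:
    patient_id: str
    group: str = "awaiting labs"                # or "completed"
    entries: list = field(default_factory=list)
    hypotheses: list = field(default_factory=list)
    tasks: list = field(default_factory=list)   # (assigned_by, assigned_to, description)

    def _check_role(self, role):
        if role not in ROLE_COLORS:
            raise ValueError(f"unknown role: {role}")

    def add_entry(self, role, text):
        self._check_role(role)
        self.entries.append(Entry(role, text))

    def propose(self, role, diagnosis):
        # Any team member may add a hypothesis to the possible diagnosis tab.
        self._check_role(role)
        self.hypotheses.append(Hypothesis(diagnosis, role))

    def strike(self, role, diagnosis):
        # Only the ED physician may delete a diagnosis; it stays visible but struck out.
        if role != "ed_physician":
            raise PermissionError("only the ED physician can delete a diagnosis")
        for hypothesis in self.hypotheses:
            if hypothesis.diagnosis == diagnosis:
                hypothesis.struck_out = True

    def assign(self, by_role, to_role, description):
        self._check_role(by_role)
        self._check_role(to_role)
        self.tasks.append((by_role, to_role, description))

    def active_differential(self):
        return [h.diagnosis for h in self.hypotheses if not h.struck_out]


board = PatientBoard("demo-001")  # hypothetical patient
board.add_entry("nurse", "Home medications include an anticoagulant")
board.propose("resident", "Pulmonary embolism")
board.propose("nurse", "Pneumonia")
board.assign("ed_physician", "resident", "Follow up on chest imaging")
board.strike("ed_physician", "Pneumonia")
try:
    board.strike("nurse", "Pulmonary embolism")
except PermissionError as err:
    print("blocked:", err)
print(board.active_differential())
```

Striking a hypothesis rather than removing it keeps the team's discarded reasoning visible, matching the red strike-through shown in the mock-up.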

Iterative Design

To facilitate rapid development, initial low-fidelity mock-ups and storyboards illustrating the design and functionality of the tool will be created iteratively and loaded onto a laptop. We will use the usability inquiry approach for the iterative design to understand users' likes, dislikes, and needs [70]. The interprofessional research team (9 clinicians with diverse clinical backgrounds and 6 researchers) will then iteratively review and revise the mock-up based on written and verbal feedback related to usability (think-aloud methods), efficiency, and ease of use for 3 months or until no further revisions are identified. Think-aloud methods will provide rich verbal data about specific changes and functionalities of the initial mock-up [71-73]. We will audio record and screen record (using Camtasia Studio) the sessions to analyze verbal feedback and measure mouse movements. We will analyze the think-aloud data and screen recordings to identify design issues and revise the interface functionalities accordingly.

Usability Testing of the Prototype

We will conduct the study in the Emanate Health System. We will provide initial training to each provider about the scope of the research, the prototype tool, and the 3 steps of usability testing that will reveal the prototype’s ease of use, familiarity, effectiveness, and user satisfaction. Each session will last less than 60 min. We will conduct the usability testing of the prototype in the following 3 steps:

Step 1: evaluate ease of use and familiarity

Step 2: test prototype effectiveness

Step 3: conduct prototype evaluation

Step 1: Evaluate Ease of Use and Familiarity (10-12 Min)

We will use the cognitive walkthrough evaluation method to understand the user's background and level of mental effort [74-76]. First, we will ask each provider about his or her initial perception of each interface component (eg, buttons and checkboxes) and what action it is expected to perform when used. Then we will ask each provider to complete a sequence of tasks and subtasks with the prototype, providing assistance when asked. Examples of potential tasks are "Please use the interface to add a potential diagnosis" and "Please assign a task to your colleague." Providers will then be given 5 min to use the tool on their own to gain familiarity, and any questions will be answered. Finally, we will ask the providers to perform similar tasks without assistance to assess familiarity and ease of use. The number of times assistance is needed will be captured in the audio recordings and will serve as a descriptive measure of ease of use. The ability of providers to accomplish tasks without assistance will serve as a marker of high ease of use. The principal investigator will conduct the final analysis of the audio transcripts to determine the number of times assistance was required before and after the demonstration.
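Counting assistance requests before and after the demonstration becomes a simple tally once the audio transcripts are coded. The Python sketch below assumes a hypothetical coded event log of (provider, phase, label) tuples; the labels and provider IDs are placeholders, not a format defined by the protocol.

```python
from collections import Counter


def assistance_summary(events, providers):
    """Return {provider: (requests_before, requests_after)} from coded events.

    Each event is a hypothetical (provider, phase, label) tuple, where phase is
    "before" or "after" the demonstration and the label "assist" marks a
    request for help identified in the transcript.
    """
    counts = Counter((provider, phase)
                     for provider, phase, label in events if label == "assist")
    return {p: (counts[(p, "before")], counts[(p, "after")]) for p in providers}


# Illustrative coded events, not study data.
events = [
    ("P1", "before", "assist"), ("P1", "before", "assist"), ("P1", "after", "task_done"),
    ("P2", "before", "assist"), ("P2", "after", "assist"), ("P2", "after", "task_done"),
]
summary = assistance_summary(events, ["P1", "P2"])
# Providers needing no help after the demonstration: the marker of high ease of use.
unassisted = [p for p, (before, after) in summary.items() if after == 0]
print(summary)
print(unassisted)
```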

Step 2: Test Prototype Effectiveness (36 Min)

To measure the effectiveness of decision making with the prototype, we will use a 2 (prototype present vs absent; between subjects, randomized) × 2 (expertise: ED vs non-ED; between subjects) × 2 (time pressure: high vs low; within subjects) factorial design. Each team will receive 8 vignettes presented in random order. The main effect of the presence or absence of the prototype tests the experimental question. This design also allows us to test the interaction between the impact of the interface and domain expertise under time pressure. For example, the interface could change the interaction between time pressure and domain expertise (a 3-way interaction), eliminate the influence of time pressure for both ED expert and non-ED expert teams (a 2-way interaction), or improve decision quality for everyone in all conditions (a main effect).
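One way to generate the assignments for this 2 × 2 × 2 design is sketched below in Python. Beyond what the protocol specifies, it assumes that prototype assignment is randomized separately within the ED and non-ED groups (so each of the 4 between-subjects cells receives 3 of the 12 teams) and that the 4 vignettes each team completes under high time pressure are also chosen at random; the function name, team labels, and seed are hypothetical.

```python
import random


def assign_conditions(ed_teams, non_ed_teams, vignettes, seed=2019):
    """Hypothetical assignment for the 2 x 2 x 2 factorial design.

    Assumptions not stated in the protocol: the prototype condition is
    randomized within each expertise group (half of each group gets it), and
    which vignettes run under high versus low time pressure is randomized
    separately for each team.
    """
    rng = random.Random(seed)  # arbitrary fixed seed so the schedule is reproducible
    schedule = {}
    for expertise, teams in (("ED", ed_teams), ("non-ED", non_ed_teams)):
        shuffled = list(teams)
        rng.shuffle(shuffled)
        for i, team in enumerate(shuffled):
            order = list(vignettes)
            rng.shuffle(order)                       # vignettes in random order
            half = len(order) // 2
            pressure = ["high"] * half + ["low"] * (len(order) - half)
            rng.shuffle(pressure)                    # within-team factor: 4 high, 4 low
            schedule[team] = {
                "expertise": expertise,
                "prototype": i < len(shuffled) // 2,  # between-team factor
                "trials": list(zip(order, pressure)),
            }
    return schedule


ed = [f"ED-{k}" for k in range(1, 7)]
non_ed = [f"nonED-{k}" for k in range(1, 7)]
vignettes = [f"V{k}" for k in range(1, 9)]
schedule = assign_conditions(ed, non_ed, vignettes)
for team, plan in schedule.items():
    print(team, plan["expertise"], "prototype" if plan["prototype"] else "no prototype")
```

Stratifying the prototype assignment by expertise keeps the between-subjects cells balanced, which a simple coin flip per team would not guarantee with only 12 teams.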

Step 3: Conduct Prototype Evaluation (10-12 Min)

We will use a team satisfaction survey to assess team members' satisfaction and the System Usability Scale to assess the prototype's ease of use. First, we will ask each provider to complete a Web-based user satisfaction survey measuring individual team members' satisfaction with the prototype [77]. This teamwork process–specific survey focuses on organizational context, team task design, information sharing, and team processes [78]. Research has shown that team-specific activities are difficult to capture with commonly used instruments such as the National Aeronautics and Space Administration Task Load Index [29]. This survey (6-point Likert scale) has been used to understand team dynamics in other fields when evaluating group decision support tools [79-85]. Data analysis will include factor analysis, scale reliability analysis, aggregation analysis, and path analysis [77]. Finally, the ease of use of the prototype will be evaluated with the System Usability Scale, a quickly administered 10-item questionnaire scored from 0 to 100 that assesses a user's subjective perception of usability [86]. Data analysis will include calculating total scores from the participants' answers.
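The System Usability Scale has a standard scoring rule: each of the 10 items is answered on a 1 to 5 scale, odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0 to 100 score. A minimal Python sketch of that rule follows. The responses are invented, and averaging members' scores into a team score is an assumption, not an analysis the protocol specifies.

```python
def sus_score(responses):
    """Score one System Usability Scale questionnaire (10 items, each rated 1-5).

    Odd-numbered items contribute (response - 1), even-numbered items
    contribute (5 - response), and the sum is multiplied by 2.5 (range 0-100).
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = sum(r - 1 if item % 2 == 1 else 5 - r
                for item, r in enumerate(responses, start=1))
    return total * 2.5


# Invented responses from the 3 members of one team, for illustration only.
team = {
    "physician": [4, 2, 4, 2, 5, 1, 4, 2, 4, 2],
    "resident": [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    "nurse": [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
}
scores = {member: sus_score(r) for member, r in team.items()}
print(scores)                              # individual SUS scores
print(sum(scores.values()) / len(scores))  # simple team mean (assumption)
```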

Dependent Variables

We will have 2 dependent variables: diagnostic accuracy and overall team diagnostic decision quality. Diagnostic accuracy will be a dichotomous (yes or no) variable indicating whether the correct diagnosis appears among the top 3 items of the team's diagnostic differential. For example, if the correct diagnosis for a vignette is ranked second among the team's top 3 diagnoses, it will be counted as yes. Overall team diagnostic decision quality will be an aggregate score combining (1) the correct diagnosis, (2) the team's confidence rating for the final diagnosis (on a scale of 0-3, with 0 being the lowest confidence), and (3) correctly ordered diagnostic tests. The overall score will range from 0 to 10: the correct diagnosis contributes up to 4 points, and the confidence rating and correctly ordered tests contribute up to 3 points each.
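Both dependent variables can be computed per vignette as sketched below in Python. The protocol fixes the point ranges (4 + 3 + 3 = 10) but not every scoring detail, so two assumptions are labeled in the code: the 4 diagnosis points are awarded all-or-nothing using the same top-3 rule as diagnostic accuracy (set `k=1` to credit only the final consensus diagnosis), and the 0 to 3 test score is assumed to have been rated by the investigators beforehand. The function names and the example differential are hypothetical.

```python
def top_k_correct(differential, correct_dx, k=3):
    """Diagnostic accuracy: True if the correct diagnosis is among the first k items."""
    return correct_dx in differential[:k]


def decision_quality(differential, correct_dx, confidence, test_points, k=3):
    """Aggregate team decision quality on a 0-10 scale (hypothetical scoring).

    - correct diagnosis: 4 points or 0 (ASSUMPTION: same top-k rule as accuracy)
    - confidence in the final diagnosis: 0-3, as rated by the team
    - correctly ordered diagnostic tests: 0-3 (ASSUMPTION: already rated by investigators)
    """
    if not 0 <= confidence <= 3 or not 0 <= test_points <= 3:
        raise ValueError("confidence and test_points must be between 0 and 3")
    diagnosis_points = 4 if top_k_correct(differential, correct_dx, k) else 0
    return diagnosis_points + confidence + test_points


# Hypothetical vignette result, not study data.
differential = ["Pneumonia", "Pulmonary embolism", "Heart failure", "Sepsis"]
print(top_k_correct(differential, "Pulmonary embolism"))           # True
print(decision_quality(differential, "Pulmonary embolism", 2, 3))  # 9
print(decision_quality(differential, "Sepsis", 3, 1))              # 4 (ranked 4th)
```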

Independent Variables

In addition to the presence or absence of the prototype and team expertise, time pressure is an independent variable with 2 levels: high time pressure (less than 3 min per case) and low time pressure (less than 6 min per case).

Procedures

We will explain the procedure and ask participants to complete 4 cases under high time pressure (<3 min) and 4 cases under low time pressure (<6 min). Initially, all team members (the nurse, the resident, and the physician) will be physically separated and will review the case independently. They will use the decision-making prototype (loaded on laptops) to share information with each other and establish an explanation for the diagnosis. The final 1 min of high time pressure cases and the final 2 min of low time pressure cases will be reserved for group discussion to reach consensus about the correct diagnosis. We will ask each team to rate its confidence in the diagnosis. We will also note which team members voice concerns about each complex patient case.

Data Analysis

We will use the chi-square test to evaluate the association between the independent variables and decision quality. We will use analysis of variance (ANOVA) to compare mean decision quality within and between ED expert and non-ED expert teams. The combined within- and between-group design will provide a sample size adequate for ANOVA. The proportion of decisions with the correct diagnosis and the overall decision quality will be reported as percentages and compared using ANOVA. If the distribution is not normal, we will use the General Linear Model for the data analysis [87].
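For the chi-square analysis, the simplest case is a 2 × 2 table of prototype condition against the dichotomous diagnostic accuracy variable. The dependency-free Python sketch below computes the Pearson statistic and its p-value for 1 degree of freedom. The counts are hypothetical, and the sketch treats every vignette decision as independent, which repeated decisions by the same team are not, so it illustrates the arithmetic rather than the protocol's final model. SciPy's `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2 × 2 tables by default, so its result would differ slightly from this uncorrected version.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2 x 2 table, no continuity correction.

    Returns (statistic, p_value). With 1 degree of freedom the chi-square
    survival function equals erfc(sqrt(x / 2)), so no SciPy is needed.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Hypothetical counts over 96 vignette decisions (12 teams x 8 vignettes).
# Rows: prototype present / absent; columns: correct diagnosis in top 3 yes / no.
table = [[30, 18],
         [20, 28]]
stat, p = chi_square_2x2(table)
print(f"chi2 = {stat:.2f}, p = {p:.3f}")  # chi2 = 4.17, p = 0.041
```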

Overall Study Measures

The overall study measures are as follows: (1) providers’ comments about the initial design, (2) number of times assistance was required before and after demonstration, (3) scores for team decision quality, and (4) survey responses.

Study Subjects and Recruitment Methods

We will recruit 12 teams (6 ED expert teams and 6 non-ED expert teams) by email and telephone, each comprising a physician, a resident, and a nurse. Each of the 36 providers will receive a US $50 gift card. The inclusion criterion for ED teams is at least 6 months of experience working in the ED; non-ED teams will include providers with expertise in other clinical domains.

Sample Size Justifications and Power Calculation

Previous studies successfully enrolled 7 to 36 providers for similar usability studies [74,88-93]. The 12 teams and 8 case vignettes in this within- and between-group design with a 2-tailed alpha of .05 and a moderate effect size give a power of 0.83.

Limitations

Reasonable efforts will be made to ensure that the prototype realistically simulates a shared workspace for team collaboration. Nevertheless, a simulated setting cannot fully reproduce real ED work, so this assessment is an initial step in understanding team diagnostic decision quality and will serve as a foundation for future studies in real-world settings.

Results

We are collecting preliminary data for this study from 2019 to 2020. The results are expected to be published between 2020 and 2021.

Discussion

Collaborative Decision Support Design

Studies have shown that uneven distribution of information can result from excluding team members from messages or from team members' failure to share uniquely held information [94-96]. Studies also show that task conflict can arise when some team members operate with incomplete information, suggesting that agreement can be reached quickly once that information is provided [97-99]. Collaborative decision support tools have proven effective in other fields for resolving conflict by providing a platform to coordinate team tasks.

This protocol addresses the problem of diagnostic error through innovative approaches for reducing the loss of vital patient information and effectively sharing key information so that the team can reach the correct diagnosis. The methods used in this protocol have been applied robustly in other fields: observation, HTA (aim 1), and team CTA (aim 2) have been used in military operations, naval warfare, aviation, air traffic control, emergency services, and railway maintenance [100-105]. In the long term, this Web-based prototype could be integrated with the EHR or installed as an app on mobile devices so that providers can capture transitional information and share it with team members to reach the correct diagnosis. For this protocol, we are exploring the prototype only as a front end; it will not be integrated or installed into any system or EHR. The protocol is planned over a period of 5 years, and the experienced research team plans to complete the project ahead of this timeline.

Conclusions

The results from this study can shed light on how to improve diagnostic decision making by incorporating the diagnostic rationale of all team members. We believe that involving all team members and using informatics is a promising way forward in reducing diagnostic errors.

Acknowledgments

The authors thank the Baylor College of Medicine team for their help and support in developing the ideas in this protocol. The authors also acknowledge internal funding for this research from the Western University of Health Sciences, College of Pharmacy, and Chapman University, School of Pharmacy.

Abbreviations

- ANOVA: analysis of variance
- CIT: Critical Incident Technique
- CTA: cognitive task analysis
- ED: emergency department
- EHR: electronic health record
- HFMEA: health care failure mode and effect analysis
- HTA: hierarchical task analysis
- IOM: Institute of Medicine
- IT: information technology

Footnotes

Conflicts of Interest: None declared.

References

- 1. Upadhyay DK, Sittig DF, Singh H. Ebola US Patient Zero: lessons on misdiagnosis and effective use of electronic health records. Diagnosis (Berl). 2014;1(4):283–7. doi: 10.1515/dx-2014-0064. http://europepmc.org/abstract/MED/26705511.

- 2. Okafor N, Payne VL, Chathampally Y, Miller S, Doshi P, Singh H. Using voluntary reports from physicians to learn from diagnostic errors in emergency medicine. Emerg Med J. 2016 Apr;33(4):245–52. doi: 10.1136/emermed-2014-204604.

- 3. Werner NE, Holden RJ. Interruptions in the wild: development of a sociotechnical systems model of interruptions in the emergency department through a systematic review. Appl Ergon. 2015 Nov;51:244–54. doi: 10.1016/j.apergo.2015.05.010.

- 4. Popovici I, Morita PP, Doran D, Lapinsky S, Morra D, Shier A, Wu R, Cafazzo JA. Technological aspects of hospital communication challenges: an observational study. Int J Qual Health Care. 2015 Jun;27(3):183–8. doi: 10.1093/intqhc/mzv016.

- 5. Monteiro SD, Sherbino JD, Ilgen JS, Dore KL, Wood TJ, Young ME, Bandiera G, Blouin D, Gaissmaier W, Norman GR, Howey E. Disrupting diagnostic reasoning: do interruptions, instructions, and experience affect the diagnostic accuracy and response time of residents and emergency physicians? Acad Med. 2015 Apr;90(4):511–7. doi: 10.1097/ACM.0000000000000614.

- 6. Berg LM, Källberg AS, Göransson KE, Östergren J, Florin J, Ehrenberg A. Interruptions in emergency department work: an observational and interview study. BMJ Qual Saf. 2013 Aug;22(8):656–63. doi: 10.1136/bmjqs-2013-001967.

- 7. Weaver SJ, Dy SM, Rosen MA. Team-training in healthcare: a narrative synthesis of the literature. BMJ Qual Saf. 2014 May;23(5):359–72. doi: 10.1136/bmjqs-2013-001848. http://qualitysafety.bmj.com/cgi/pmidlookup?view=long&pmid=24501181.

- 8. Moreira A, Vieira V, del Arco JC. Sanar: A Collaborative Environment to Support Knowledge Sharing with Medical Artifacts. Proceedings of the 2012 Brazilian Symposium on Collaborative Systems; SBSC'12; October 15-18, 2012; Sao Paulo, Brazil. 2012.

- 9. Zwaan L, Schiff GD, Singh H. Advancing the research agenda for diagnostic error reduction. BMJ Qual Saf. 2013 Oct;22(Suppl 2):ii52–7. doi: 10.1136/bmjqs-2012-001624. http://qualitysafety.bmj.com/cgi/pmidlookup?view=long&pmid=23942182.

- 10. Kachalia A, Gandhi TK, Puopolo AL, Yoon C, Thomas EJ, Griffey R, Brennan TA, Studdert DM. Missed and delayed diagnoses in the emergency department: a study of closed malpractice claims from 4 liability insurers. Ann Emerg Med. 2007 Feb;49(2):196–205. doi: 10.1016/j.annemergmed.2006.06.035.

- 11. Singh H. Editorial: helping health care organizations to define diagnostic errors as missed opportunities in diagnosis. Jt Comm J Qual Patient Saf. 2014 Mar;40(3):99–101. doi: 10.1016/S1553-7250(14)40012-6.

- 12. Bond WF, Schwartz LM, Weaver KR, Levick D, Giuliano M, Graber ML. Differential diagnosis generators: an evaluation of currently available computer programs. J Gen Intern Med. 2012 Feb;27(2):213–9. doi: 10.1007/s11606-011-1804-8. http://europepmc.org/abstract/MED/21789717.

- 13. El-Kareh R, Hasan O, Schiff GD. Use of health information technology to reduce diagnostic errors. BMJ Qual Saf. 2013 Oct;22(Suppl 2):ii40–ii51. doi: 10.1136/bmjqs-2013-001884. http://qualitysafety.bmj.com/cgi/pmidlookup?view=long&pmid=23852973.

- 14. Ramnarayan P, Kapoor RR, Coren M, Nanduri V, Tomlinson AL, Taylor PM, Wyatt JC, Britto JF. Measuring the impact of diagnostic decision support on the quality of clinical decision making: development of a reliable and valid composite score. J Am Med Inform Assoc. 2003;10(6):563–72. doi: 10.1197/jamia.M1338. http://europepmc.org/abstract/MED/12925549.

- 15. Dupuis EA, White HF, Newman D, Sobieraj JE, Gokhale M, Freund KM. Tracking abnormal cervical cancer screening: evaluation of an EMR-based intervention. J Gen Intern Med. 2010 Jun;25(6):575–80. doi: 10.1007/s11606-010-1287-z. http://europepmc.org/abstract/MED/20204536.

- 16. Carter AJ, Davis KA, Evans LV, Cone DC. Information loss in emergency medical services handover of trauma patients. Prehosp Emerg Care. 2009;13(3):280–5. doi: 10.1080/10903120802706260.

- 17. Laxmisan A, Hakimzada F, Sayan OR, Green RA, Zhang J, Patel VL. The multitasking clinician: decision-making and cognitive demand during and after team handoffs in emergency care. Int J Med Inform. 2007;76(11-12):801–11. doi: 10.1016/j.ijmedinf.2006.09.019.

- 18. Balogh E, Miller BT, Ball JR. Improving Diagnosis in Health Care. Washington DC: The National Academies Press; 2015.

- 19. Nembhard IM, Singer SJ, Shortell SM, Rittenhouse D, Casalino LP. The cultural complexity of medical groups. Health Care Manage Rev. 2012;37(3):200–13. doi: 10.1097/HMR.0b013e31822f54cd.

- 20. Fitzpatrick G. Integrated care and the working record. Health Informatics J. 2016 Jul 25;10(4):291–302. doi: 10.1177/1460458204048507.

- 21. Hardey M, Payne S, Coleman P. 'Scraps': hidden nursing information and its influence on the delivery of care. J Adv Nurs. 2000 Jul;32(1):208–14. doi: 10.1046/j.1365-2648.2000.01443.x.

- 22. Hardstone G, Hartswood M, Procter R, Slack R, Voss A, Rees G. Supporting Informality: Team Working and Integrated Care Records. Proceedings of the 2004 ACM conference on Computer supported cooperative work; CSCW'04; November 6-10, 2004; Chicago, Illinois, USA. 2004. pp. 142–51.

- 23. Chen Y. Documenting Transitional Information in EMR. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; CHI'10; April 10-15, 2010; Atlanta, Georgia, USA. 2010. pp. 1787–96. https://dl.acm.org/citation.cfm?id=1753326.1753594.

- 24. Kelly KM, Andrews JE, Case DO, Allard SL, Johnson JD. Information seeking and intentions to have genetic testing for hereditary cancers in rural and Appalachian Kentuckians. J Rural Health. 2007;23(2):166–72. doi: 10.1111/j.1748-0361.2007.00085.x.

- 25. Tang JC. Findings from observational studies of collaborative work. Int J Man Mach Stud. 1991;34(2):143–60. doi: 10.1016/0020-7373(91)90039-A.

- 26. Ha V, Inkpen K, Mandryk RL, Whalen T. Direct Intentions: The Effects of Input Devices on Collaboration Around a Tabletop Display. Proceedings of the First IEEE International Workshop on Horizontal Interactive Human-Computer Systems; TABLETOP'06; January 5-7, 2006; Adelaide, SA, Australia. 2006. pp. 177–84. https://ieeexplore.ieee.org/abstract/document/1579210.

- 27. Gutwin C, Greenberg S, Roseman M. Workspace awareness in real-time distributed groupware: framework, widgets, and evaluation. In: Sasse A, Cunningham J, Winder R, editors. People and Computers XI: Proceedings of the HCI'96 Conference. Boston, Massachusetts, USA: Springer; 1996. pp. 281–98.

- 28. Poltrock S, Grudin J. Computer Supported Cooperative Work and Groupware. Conference Companion on Human Factors in Computing Systems; CHI'94; April 24-28, 1994; Boston, Massachusetts, USA. 1994. pp. 355–6. https://dl.acm.org/citation.cfm?id=259963.260448.

- 29. Gregory JF, Allen DW, James MH, Brent TM, Sheldon MR, Benjamin AK, Scott G. Techniques for Cyber Attack Attribution. 2012. [2019-10-08]. Evaluation of Domain-Specific Collaboration Interfaces for Team Command and Control Tasks https://apps.dtic.mil/docs/citations/ADA562675.

- 30. Zheng X, Ke G, Zeng DD, Ram S, Lu H. Next-generation team-science platform for scientific collaboration. IEEE Intell Syst. 2011;26(6):72–6. doi: 10.1109/mis.2011.104. https://ieeexplore.ieee.org/document/6096577.

- 31. Annett J. Hierarchical task analysis. In: Hollnagel E, editor. Handbook of Cognitive Task Design (Human Factors and Ergonomics). York, United Kingdom: CRC Press; 2003. pp. 17–35.

- 32. DeRosier J, Stalhandske E, Bagian JP, Nudell T. Using health care Failure Mode and Effect Analysis: the VA National Center for Patient Safety's prospective risk analysis system. Jt Comm J Qual Improv. 2002 May;28(5):248–67, 209. doi: 10.1016/S1070-3241(02)28025-6.

- 33. Patton MQ. Wiley Online Library. 2005. [2019-10-08]. Qualitative Research https://onlinelibrary.wiley.com/doi/abs/10.1002/0470013192.bsa514.

- 34. Stahl K, Palileo A, Schulman CI, Wilson K, Augenstein J, Kiffin C, McKenney M. Enhancing patient safety in the trauma/surgical intensive care unit. J Trauma. 2009 Sep;67(3):430–3; discussion 433. doi: 10.1097/TA.0b013e3181acbe75.

- 35. Chisholm CD, Weaver CS, Whenmouth L, Giles B. A task analysis of emergency physician activities in academic and community settings. Ann Emerg Med. 2011 Aug;58(2):117–22. doi: 10.1016/j.annemergmed.2010.11.026.

- 36. Kannampallil TG, Franklin A, Mishra R, Almoosa KF, Cohen T, Patel VL. Understanding the nature of information seeking behavior in critical care: implications for the design of health information technology. Artif Intell Med. 2013 Jan;57(1):21–9. doi: 10.1016/j.artmed.2012.10.002.

- 37. Gilardi S, Guglielmetti C, Pravettoni G. Interprofessional team dynamics and information flow management in emergency departments. J Adv Nurs. 2014 Jun;70(6):1299–309. doi: 10.1111/jan.12284.

- 38. Singh H, Meyer AND, Thomas EJ. The frequency of diagnostic errors in outpatient care: estimations from three large observational studies involving US adult populations. BMJ Qual Saf. 2014 Sep;23(9):727–31. doi: 10.1136/bmjqs-2013-002627. http://qualitysafety.bmj.com/cgi/pmidlookup?view=long&pmid=24742777.

- 39. Wheelock A, Suliman A, Wharton R, Babu ED, Hull L, Vincent C, Sevdalis N, Arora S. The impact of operating room distractions on stress, workload, and teamwork. Ann Surg. 2015 Jun;261(6):1079–84. doi: 10.1097/SLA.0000000000001051.

- 40. Islam R, Mayer J, Clutter J. Supporting novice clinicians cognitive strategies: system design perspective. IEEE EMBS Int Conf Biomed Health Inform. 2016 Feb;2016:509–12. doi: 10.1109/BHI.2016.7455946. http://europepmc.org/abstract/MED/27275020.

- 41. Fairbanks RJ, Guarrera TK, Bisantz AB, Venturino M, Westesson PL. Opportunities in IT support of workflow & information flow in the Emergency Department Digital Imaging process. Proc Hum Factors Ergon Soc Annu Meet. 2010 Sep 1;54(4):359–63. doi: 10.1177/154193121005400419. http://europepmc.org/abstract/MED/22398841.

- 42. Stanton NA. Hierarchical task analysis: developments, applications, and extensions. Appl Ergon. 2006 Jan;37(1):55–79. doi: 10.1016/j.apergo.2005.06.003.

- 43. Unertl KM, Weinger MB, Johnson KB, Lorenzi NM. Describing and modeling workflow and information flow in chronic disease care. J Am Med Inform Assoc. 2009;16(6):826–36. doi: 10.1197/jamia.M3000. http://europepmc.org/abstract/MED/19717802.

- 44. Institute of Medicine. Balogh EP, Miller BT, Ball JR. Improving diagnosis in health care. Committee on Diagnostic Error in Health Care; Board on Health Care Services; Institute of Medicine; The National Academies of Sciences, Engineering, and Medicine. 2015. doi: 10.17226/21794. https://www.ncbi.nlm.nih.gov/pubmed/26803862.

- 45. Huang Y, Gramopadhye AK. Systematic engineering tools for describing and improving medication administration processes at rural healthcare facilities. Appl Ergon. 2014 Nov;45(6):1712–24. doi: 10.1016/j.apergo.2014.06.003.

- 46. Raduma-Tomàs MA, Flin R, Yule S, Close S. The importance of preparation for doctors' handovers in an acute medical assessment unit: a hierarchical task analysis. BMJ Qual Saf. 2012 Mar;21(3):211–7. doi: 10.1136/bmjqs-2011-000220.

- 47. Yadav K, Chamberlain JM, Lewis VR, Abts N, Chawla S, Hernandez A, Johnson J, Tuveson G, Burd RS. Designing real-time decision support for trauma resuscitations. Acad Emerg Med. 2015 Sep;22(9):1076–84. doi: 10.1111/acem.12747.

- 48. Pirolli P, Card S. The Sensemaking Process and Leverage Points for Analyst Technology as Identified Through Cognitive Task Analysis. Proceedings of International Conference on Intelligence Analysis; IA'05; 2005; McLean, VA, USA. 2005. https://pdfs.semanticscholar.org/f6b5/4379043d0ee28bea45555f481af1a693c16c.pdf.

- 49. Ellington L, Kelly KM, Reblin M, Latimer S, Roter D. Communication in genetic counseling: cognitive and emotional processing. Health Commun. 2011 Oct;26(7):667–75. doi: 10.1080/10410236.2011.561921.

- 50. Klein G. Cognitive task analysis of teams. In: Schraagen JM, Chipman SF, Shalin VL, editors. Cognitive Task Analysis. York, United Kingdom: CRC Press; 2000.

- 51. Flanagan JC. The critical incident technique. Psychol Bull. 1954 Jul;51(4):327–58. doi: 10.1037/h0061470.

- 52. Islam R, Weir C, Del Fiol G. Heuristics in managing complex clinical decision tasks in experts' decision making. IEEE Int Conf Healthc Inform. 2014 Sep;2014:186–93. doi: 10.1109/ICHI.2014.32. http://europepmc.org/abstract/MED/27275019.

- 53. Islam R, Weir CR, Jones M, Del Fiol G, Samore MH. Understanding complex clinical reasoning in infectious diseases for improving clinical decision support design. BMC Med Inform Decis Mak. 2015 Nov 30;15:101. doi: 10.1186/s12911-015-0221-z. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-015-0221-z.

- 54. Schraagen JM, Chipman SF, Shalin VL. Cognitive Task Analysis. London, England: Psychology Press; 2000.

- 55. Crandall B, Klein GA, Hoffman RR. Working Minds: A Practitioner's Guide to Cognitive Task Analysis. Cambridge, MA: The MIT Press; 2006.

- 56. Hoffman RR. Human factors contributions to knowledge elicitation. Hum Factors. 2008 Jun;50(3):481–8. doi: 10.1518/001872008X288475.

- 57. O'Hare D, Wiggins M, Williams A, Wong W. Cognitive task analyses for decision centred design and training. Ergonomics. 1998 Nov;41(11):1698–718. doi: 10.1080/001401398186144.

- 58. Klein C, DiazGranados D, Salas E, Le H, Burke CS, Lyons R, Goodwin GF. Does team building work? Small Group Res. 2009;40(2):181–222. doi: 10.1177/1046496408328821. https://journals.sagepub.com/doi/abs/10.1177/1046496408328821.

- 59. Santana C, Curry LA, Nembhard IM, Berg DN, Bradley EH. Behaviors of successful interdisciplinary hospital quality improvement teams. J Hosp Med. 2011 Nov;6(9):501–6. doi: 10.1002/jhm.927. http://europepmc.org/abstract/MED/22042750.

- 60. Morreim EH. Search eLibrary: SSRN. 2014. [2019-10-08]. Conflict Resolution in Healthcare https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2458744.

- 61. Neuendorf KA. The Content Analysis Guidebook. Thousand Oaks, California: SAGE Publications Inc; 2002.

- 62. Stemler S. An overview of content analysis. Pract Assess Res Eval. 2001;7(17):137–46. https://pareonline.net/getvn.asp?v=7&n=17.

- 63. Roosan D, Weir C, Samore M, Jones M, Rahman M, Stoddard GJ, Del Fiol G. Identifying complexity in infectious diseases inpatient settings: an observation study. J Biomed Inform. 2017 Jul;71S:S13–21. doi: 10.1016/j.jbi.2016.10.018. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(16)30155-1.

- 64. Arthur W, Glaze RM, Bhupatkar A, Villado AJ, Bennett W, Rowe LJ. Team task analysis: differentiating between tasks using team relatedness and team workflow as metrics of team task interdependence. Hum Factors. 2012 Apr;54(2):277–95. doi: 10.1177/0018720811435234.

- 65. Dominguez C, Long WG, Miller TE, Wiggins SL. Design Directions for Support of Submarine Commanding Officer Decision Making. Proceedings of 2006 Undersea HSI Symposium: Research, Acquisition and the Warrior; 2006.

- 66. Dominguez C, Uhlig P, Brown J, Gurevich O, Shumar W, Stahl G, Zemel A, Zipperer L. Studying and supporting collaborative care processes. Proc Hum Factors Ergon Soc Annu Meet. 2005;49(11). doi: 10.1177/154193120504901116.

- 67. Kvarnström S. Difficulties in collaboration: a critical incident study of interprofessional healthcare teamwork. J Interprof Care. 2008 Mar;22(2):191–203. doi: 10.1080/13561820701760600.

- 68. Markman KD, Klein WM, Suhr JA. Handbook of Imagination and Mental Simulation. London, England: Psychology Press; 2012.

- 69. Islam R, Weir C, Del Fiol G. Clinical complexity in medicine: a measurement model of task and patient complexity. Methods Inf Med. 2016;55(1):14–22. doi: 10.3414/ME15-01-0031. http://www.thieme-connect.com/DOI/DOI?10.3414/ME15-01-0031.

- 70. Raven ME, Flanders A. Using contextual inquiry to learn about your audiences. SIGDOC Asterisk J Comput Doc. 1996;20(1):1–13. doi: 10.1145/227614.227615. https://dl.acm.org/citation.cfm?id=227615.

- 71. Someren MW, Barnard YF, Sandberg JA. The Think Aloud Method: A Practical Guide to Modelling Cognitive Processes. London: Academic Press; 1994.

- 72. Roosan D, Del Fiol G, Butler J, Livnat Y, Mayer J, Samore M, Jones M, Weir C. Feasibility of population health analytics and data visualization for decision support in the infectious diseases domain: a pilot study. Appl Clin Inform. 2016;7(2):604–23. doi: 10.4338/ACI-2015-12-RA-0182. http://europepmc.org/abstract/MED/27437065.

- 73. Roosan D, Samore M, Jones M, Livnat Y, Clutter J. Big-Data Based Decision-Support Systems to Improve Clinicians' Cognition. Proceedings of the 2016 IEEE International Conference on Healthcare Informatics; ICHI'16; October 4-7, 2016; Chicago, IL, USA. 2016.

- 74. Karahoca A, Bayraktar E, Tatoglu E, Karahoca D. Information system design for a hospital emergency department: a usability analysis of software prototypes. J Biomed Inform. 2010 Apr;43(2):224–32. doi: 10.1016/j.jbi.2009.09.002. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(09)00119-1.

- 75. Lewis C. Testing a Walkthrough Methodology for Theory-based Design of Walk-Up-And-Use Interfaces. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; CHI'90; April 1-5, 1990; Seattle, Washington, USA. 1990. pp. 235–42.

- 76. Polson P, Lewis C, Rieman J, Wharton C. Cognitive walkthroughs: a method for theory-based evaluation of user interfaces. Int J Man Mach Stud. 1992;36(5):741–73. doi: 10.1016/0020-7373(92)90039-N.

- 77. Doolen T, Hacker M, van Aken E. The impact of organizational context on work team effectiveness: a study of production team. IEEE Trans Eng Manage. 2003;50(3):285–96. doi: 10.1109/TEM.2003.817296. https://ieeexplore.ieee.org/abstract/document/1236004.

- 78. Valentine MA, Nembhard IM, Edmondson AC. Measuring teamwork in health care settings: a review of survey instruments. Med Care. 2015 Apr;53(4):e16–30. doi: 10.1097/MLR.0b013e31827feef6.

- 79. Rama J, Bishop J. A Survey and Comparison of CSCW Groupware Applications. Proceedings of the 2006 Annual Research Conference of the South African Institute of Computer Scientists and Information Technologists on IT Research in Developing Countries; SAICSIT'06; October 9-11, 2006; Somerset West, South Africa. 2006. https://dl.acm.org/citation.cfm?id=1216284.

- 80. Urnes T, Nejabi R. Semantic Scholar. 1994. [2019-10-08]. Tools for Implementing Groupware: Survey and Evaluation https://pdfs.semanticscholar.org/daff/3a231ff8a9da690c250f6a179e27dee58379.pdf.

- 81. Herskovic V, Pino JA, Ochoa SF, Antunes P. Evaluation Methods for Groupware Systems. Proceedings of the 13th International Conference on Groupware: Design, Implementation, and Use; CRIWG'07; September 16-20, 2007; Bariloche, Argentina. 2007. pp. 328–36.

- 82. Pinelle D, Gutwin C. A Review of Groupware Evaluations. Proceedings of the 9th IEEE International Workshops on Enabling Technologies: Infrastructure for Collaborative Enterprises; WETICE'00; June 14-16, 2000; Gaithersburg, MD, USA. 2000. pp. 86–91.

- 83. Glover W, Farris JA, van Aken EM, Doolen T. Critical success factors for the sustainability of Kaizen event human resource outcomes: an empirical study. Int J Prod Econ. 2011;132(2):197–213. doi: 10.1016/j.ijpe.2011.04.005.

- 84. Demirbag M, Sahadev S, Kaynak E, Akgul A. Modeling quality commitment in service organizations: an empirical study. Euro J Market. 2012;46(6):790–810. doi: 10.1108/03090561211214609. https://www.emerald.com/insight/content/doi/10.1108/03090561211214609/full/html.

- 85. Frederick CV. Education Resources Information Center. 2009. [2019-10-08]. Analyzing Learner Characteristics, Undergraduate Experience and Individual Teamwork Knowledge, Skills and Abilities: Toward Identifying Themes to Promote Higher Workforce Readiness https://eric.ed.gov/?id=ED532494.

- 86. Brooke J. SUS: a quick and dirty usability scale. In: Jordan PW, Thomas B, McClelland IL, Weerdmeester B, editors. Usability Evaluation in Industry. London: Taylor & Francis; 1996.

- 87. Altman DG. Practical Statistics for Medical Research. London: Chapman and Hall; 1990.

- 88. Kastner M, Lottridge D, Marquez C, Newton D, Straus SE. Usability evaluation of a clinical decision support tool for osteoporosis disease management. Implement Sci. 2010 Dec 10;5:96. doi: 10.1186/1748-5908-5-96. https://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-5-96.

- 89. Abidi SR, Stewart S, Shepherd M, Abidi SS. Usability evaluation of family physicians' interaction with the Comorbidity Ontological Modeling and ExecuTion System (COMET). Stud Health Technol Inform. 2013;192:447–51.

- 90. Neri PM, Pollard SE, Volk LA, Newmark LP, Varugheese M, Baxter S, Aronson SJ, Rehm HL, Bates DW. Usability of a novel clinician interface for genetic results. J Biomed Inform. 2012 Oct;45(5):950–7. doi: 10.1016/j.jbi.2012.03.007. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(12)00052-4.

- 91. Salman YB, Cheng H, Patterson PE. Icon and user interface design for emergency medical information systems: a case study. Int J Med Inform. 2012 Jan;81(1):29–35. doi: 10.1016/j.ijmedinf.2011.08.005.

- 92. Bury J, Hurt C, Roy A, Cheesman L, Bradburn M, Cross S, Fox J, Saha V. LISA: a web-based decision-support system for trial management of childhood acute lymphoblastic leukaemia. Br J Haematol. 2005 Jun;129(6):746–54. doi: 10.1111/j.1365-2141.2005.05541.x.

- 93. Li AC, Kannry JL, Kushniruk A, Chrimes D, McGinn TG, Edonyabo D, Mann DM. Integrating usability testing and think-aloud protocol analysis with 'near-live' clinical simulations in evaluating clinical decision support. Int J Med Inform. 2012 Nov;81(11):761–72. doi: 10.1016/j.ijmedinf.2012.02.009.

- 94. Cramton CD. Attribution in distributed work groups. In: Hinds PJ, Kiesler S, editors. Distributed Work. Cambridge, MA: The MIT Press; 2002. pp. 191–212.

- 95. Mortensen M, Hinds PJ. Conflict and shared identity in geographically distributed teams. Int J Confl Manag. 2001;12(3):212–38. doi: 10.1108/eb022856.

- 96. Purdy J, Nye P, Balakrishnan P. The impact of communication media on negotiation outcomes. Int J Confl Manag. 2000;11(2):162–87. doi: 10.1108/eb022839. https://www.emerald.com/insight/content/doi/10.1108/eb022839/full/html.

- 97. Weisband S. Maintaining awareness in distributed team collaboration: implications for leadership and performance. In: Hinds PJ, Kiesler S, editors. Distributed Work. Cambridge, MA: The MIT Press; 2002. pp. 311–33.

- 98. Mannix EA, Griffith TL, Neale MA. The phenomenology of conflict in distributed work teams. In: Hinds PJ, Kiesler S, editors. Distributed Work. Cambridge, MA: The MIT Press; 2002. pp. 213–33.

- 99. Simons TL, Peterson RS. Task conflict and relationship conflict in top management teams: the pivotal role of intragroup trust. J Appl Psychol. 2000 Feb;85(1):102–11. doi: 10.1037/0021-9010.85.1.102.

- 100. Stanton NA, Stewart R, Harris D, Houghton RJ, Baber C, McMaster R, Salmon P, Hoyle G, Walker G, Young MS, Linsell M, Dymott R, Green D. Distributed situation awareness in dynamic systems: theoretical development and application of an ergonomics methodology. Ergonomics. 2006;49(12-13):1288–311. doi: 10.1080/00140130600612762.

- 101. Stewart R, Stanton NA, Harris D, Baber C, Salmon P, Mock M, Tatlock K, Wells L, Kay A. Distributed situation awareness in an Airborne Warning and Control System: application of novel ergonomics methodology. Cogn Tech Work. 2008;10(3):221–9. doi: 10.1007/s10111-007-0094-8.

- 102. Walker G, Stanton NA, Baber C, Wells L, Gibson H, Salmon P, Jenkins D. From ethnography to the EAST method: a tractable approach for representing distributed cognition in Air Traffic Control. Ergonomics. 2010 Feb;53(2):184–97. doi: 10.1080/00140130903171672.

- 103. Houghton RJ, Baber C, McMaster R, Stanton NA, Salmon P, Stewart R, Walker G. Command and control in emergency services operations: a social network analysis. Ergonomics. 2006;49(12-13):1204–25. doi: 10.1080/00140130600619528.

- 104. Salmon PM, Stanton NA, Walker GH, Jenkins D, Baber C, McMaster R. Representing situation awareness in collaborative systems: a case study in the energy distribution domain. Ergonomics. 2008 Mar;51(3):367–84. doi: 10.1080/00140130701636512.

- 105. Walker GH, Gibson H, Stanton NA, Baber C, Salmon P, Green D. Event Analysis of Systemic Teamwork (EAST): a novel integration of ergonomics methods to analyse C4i activity. Ergonomics. 2006;49(12-13):1345–69. doi: 10.1080/00140130600612846.