Abstract

Background

Assessment and rating of Parkinson’s Disease (PD) are commonly based on the medical observation of several clinical manifestations, including the analysis of motor activities. In particular, medical specialists refer to the MDS-UPDRS (Movement Disorder Society – sponsored revision of Unified Parkinson’s Disease Rating Scale), which is the most widely used clinical scale for PD rating. However, clinical scales rely on the observation of subtle motor phenomena that are either difficult to capture with the human eye or could be misclassified. This limitation has motivated several researchers to develop intelligent systems based on machine learning algorithms that automatically recognize PD. Nevertheless, most previous studies investigated the classification between healthy subjects and PD patients without considering the automatic rating of different levels of severity.

Methods

In this context, we implemented a simple and low-cost clinical tool that can extract postural and kinematic features with the Microsoft Kinect v2 sensor in order to classify and rate PD. Thirty participants were enrolled for the purpose of the present study: sixteen PD patients rated according to MDS-UPDRS and fourteen healthy paired subjects. In order to investigate the motor abilities of the upper and lower body, we acquired and analyzed three main motor tasks: (1) gait, (2) finger tapping, and (3) foot tapping. After preliminary feature selection, different classifiers based on Support Vector Machine (SVM) and Artificial Neural Networks (ANN) were trained and evaluated for the best solution.

Results

Concerning the gait analysis, results showed that the ANN classifier performed the best, reaching 89.4% accuracy with only nine features in diagnosing PD and 95.0% accuracy with only six features in rating PD severity. Regarding the finger and foot tapping analysis, an SVM using the extracted features classified healthy subjects versus PD patients with 87.1% accuracy. The results of the classification between mild and moderate PD patients indicated that the foot tapping features were the most discriminative (81.0% accuracy).

Conclusions

The results of this study show that a low-cost vision-based system can automatically detect subtle motor phenomena characterizing PD. Our findings suggest that the proposed tool can support medical specialists in the assessment and rating of PD patients in a real clinical scenario.

Keywords: Classification, Artificial neural network, Support vector machine, Feature selection, Parkinson’s disease, Gait analysis, Finger tapping, Foot tapping, MDS-UPDRS, Microsoft Kinect v2

Background

Neurological disorders are currently the leading cause of disability [1]. Among them, Parkinson’s disease (PD) affects more than six million people worldwide and is the fastest growing, with the number of affected people estimated to reach 13 million by 2040 [2]. PD is a neurodegenerative disorder caused by a substantial loss of dopamine in the forebrain. The exhibited signs and symptoms, which can differ from person to person, may include tremor, slowed movement, rigid muscles, impaired posture and balance, loss of automatic movements, and speech and writing changes [3, 4]. PD diagnosis is typically made by analyzing motor symptoms with clinical scales, such as the Movement Disorder Society – sponsored revision of Unified Parkinson’s Disease Rating Scale (MDS-UPDRS) [5] and the Hoehn & Yahr (HY) scale [6].

Although several scientific results support the validity of the MDS-UPDRS for rating, subjectivity and low efficiency are inevitable since most of the diagnostic criteria use descriptive symptoms, which cannot provide a quantified diagnostic basis. In fact, PD early signs may be mild and go unnoticed and symptoms often begin on one side of the body and usually remain worse on that side, even after symptoms begin to affect both sides. With this evidence, the development of computer-assisted diagnosis and computer-expert systems is very important [7, 8], especially when dealing with motor features. Hence, a tool that can help neurologists to objectively quantify small changes in motion performance is needed to have a quantitative assessment of the disease.

Related works

In recent years, machine learning (ML) techniques have been used and compared for PD classification [9], e.g. Support Vector Machine (SVM), Linear Discriminant Analysis (LDA), Artificial Neural Network (ANN), Decision Tree (DT) and Naïve Bayes. Most of the published studies investigate two-group classifications, i.e. PD patients vs healthy control subjects (HC), with promising results [10]. Few works, however, have presented multiclass classification among patients at different disease stages [9–11].

Researchers have also applied ML to classify PD patients and HC by extracting features related to motor abilities. The majority of the studies, based on the analysis of either the lower limb or the upper limb motor abilities, focus on a single exercise or a single symptom [12–31]. Different technologies have been exploited to capture the analyzed movements; the most used are optoelectronic systems, wearable sensors such as accelerometers and gyroscopes, and camera-based systems [32].

Objective and precise assessments of motor tasks are usually performed using large optoelectronic equipment (e.g., 3D-camera-based systems, instrumented walkways) that requires heavy installation and a large space to conduct the experiments [33]. Earlier efforts to develop clinic-based gait assessment tools for patients with PD have appeared in the literature over the past two decades. Muro-de-la-Herran et al. [34] and Tao et al. [35] reviewed the use of wearable sensors, such as accelerometers, gyroscopes, magnetoresistive sensors, flexible goniometers, electromagnetic tracking systems, and force sensors in gait analysis (including both kinematics and kinetics), and reported that they have the potential to play an important role in various clinical applications. Among the different proposed wearable sensors, inertial measurement units (IMU) have been widely used, even though several key limitations should be weighed before adopting wearable IMUs as a clinic-based tool, e.g. gyroscope-based assessment tools suffer from a drifting effect [36]. Systems based on low-cost cameras might represent a valid solution to overcome both the high cost and encumbrance of an optoelectronic system and the above-reported limitation of IMU-based systems. Since the release of the Microsoft Kinect SDK, the Kinect v2 sensor has been widely utilized for PD-related research. Several projects focused on rehabilitation and proposed experimental ways of monitoring patients’ activities [36–39]. Most of the cited works compared the Kinect v2 sensor against gold standards, such as optoelectronic systems, in order to test and quantify its accuracy.

According to the recent trends in the area of intelligent systems for personalized medicine [40–46], there is a clear need for new, low-cost, and accessible technologies to facilitate in-clinic and at-home assessment of motor alterations throughout the progression of PD [47]. In this context, we have proposed a low-cost camera-based system able to recognize and rate PD patients in a completely non-invasive manner. The main novel contributions with respect to the state of the art presented above are:

differently from already published studies, we also considered the classification of the PD severity;

we used the MS Kinect v2 system to investigate three motor exercises: gait, finger tapping and foot tapping;

we evaluated a large set of features extracted from the kinematic data (spatio-temporal parameters, frequency and postural variables);

differently from previous studies on gait analysis for PD classification [36, 37, 39, 48], we also considered postural oscillations and kinematics of upper body parts (trunk, neck and arms);

we have developed and compared two classifiers (SVM and ANN) able to assess and rate the movement impairment of PD patients using a specific set of features extracted by the recorded movements.

Our main goal is to design and test a mobile low-cost decision support system (DSS) that can be easily used both in specialized hospitals and at home, thus implementing the recent telemedicine paradigms. The system aims to detect the early symptoms of PD and to provide a tool able to monitor, assess and rate the disease in a non-invasive manner from the early stages.

Materials and methods

Participants

We recruited thirty elderly participants from a local clinical center (Medica Sud s.r.l., Bari, Italy): 14 healthy subjects (10 male and 4 female, 73.5 ±6.4 years, range 65-82 years) and 16 idiopathic Parkinson patients (13 male and 3 female, 74.9 ±7.6 years, range 63-87 years). The PD patients were examined by a medical doctor and rated according to MDS-UPDRS that considers a scoring with five levels, i.e. normal, slight, mild, moderate and severe. In detail, nine patients have been classified as mild (mean age 67.2 years, SD 9.8, age range 54-81) and seven were rated as moderate (mean age 74.1 years, SD 7.1, age range 63-87). None of the patients was classified as either slight or severe.

Experimental setups

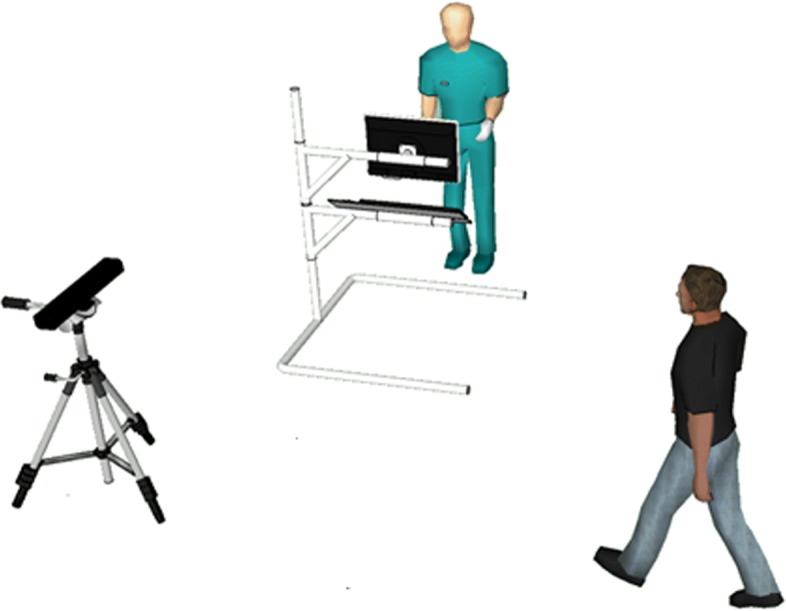

In this study, we considered three motor exercises that involve both the lower and upper extremities of the body: (1) gait, (2) finger tapping and (3) foot tapping. We designed and tested a specific experimental setup for each exercise. All the setups make use of the Microsoft Kinect v2 RGB-D camera, which acquired both color and depth data at 30 Hz. It is important to remark that a) the finger tapping and the foot tapping were performed in the same manner as described in Subsection III.4 and Subsection III.7 of the MDS-UPDRS, respectively, whereas b) the gait exercise was performed over a distance of about 2.5 m, which is shorter than the path length considered by Subsection III.10 (10 meters). The motivation for this choice is discussed in the following subsection.

In this study, right and left sides of each participant were independently considered, thus the final dataset is composed of 60 instances (32 from PD patients and 28 from healthy subjects).

Posture and gait

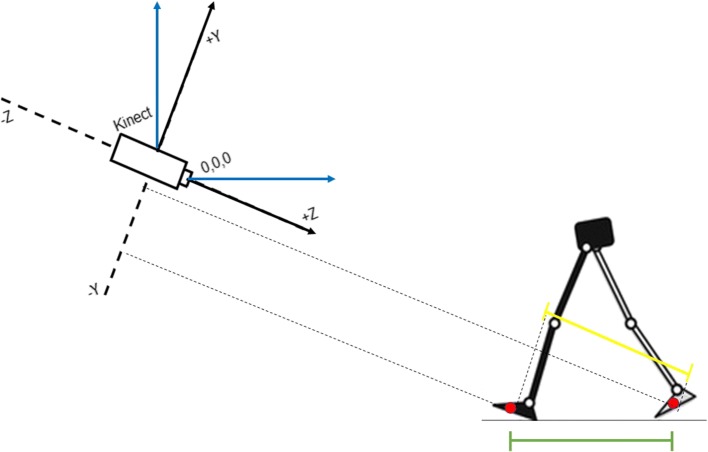

As described in Subsection III.10 of the MDS-UPDRS, we asked each participant to walk straight towards the camera at a natural walking pace (Fig. 1). Participants were asked to repeat the task several times in order to acquire at least one gait cycle (stance phase and swing phase) for each side. The task trial started with the subject standing in a T-pose for one second. Subjects then walked toward the Kinect sensor, which was placed 3.5 m away from the subject’s starting point at a height of 0.75 m. The 3.5 m distance was selected to guarantee that the recorded gait cycle, which began when the subject was about 2.5–3 m from the Kinect, did not include the acceleration/deceleration phases of walking that are anticipated during the initiation or completion of the gait task.

Fig. 1.

Setup for postural and gait analysis. Representation of the proposed set-up in the clinical center

During the walking, the human skeleton pose has been estimated and recorded by using the Microsoft SDK functions. The resulting human skeleton is represented by 25 nodes, also called control points, in the Kinect’s reference frame known as the skeleton space. Each node represents a specific joint with 3D position information in units of meters. The skeleton space uses a right-handed coordinate system: the Y axis lies in the vertical direction of the image plane, the Z axis extends in depth perpendicularly from the sensor, and the X axis is horizontal in the image plane and orthogonal to the Y and Z axes (Fig. 2). Even though the Subsection III.10 considers a walking distance equal to 10 meters, we used a reduced path length in order to facilitate the skeleton tracking thus reducing the error of the estimated skeleton pose at each frame.

Fig. 2.

Reference system for postural and gait data acquisition. Schematic Representation of the Global Reference System (the Kinect, black lines) and of the Subject’s Reference System (blue lines)

Finger tapping

The finger tapping test examines each hand separately. As described in Subsection III.4 of the MDS-UPDRS, the tested subject is seated in front of the camera and is instructed to tap the index finger on the thumb ten times as quickly and with as large an amplitude as possible. During the task the subject wears two thimbles made of reflective material, one on the index finger and one on the thumb (see Fig. 3).

Fig. 3.

Finger tapping and foot tapping setups. Left image shows a healthy subject wearing the two passive finger markers. The three images reported on the right show the foot of a subject doing the foot tapping exercise while he is wearing a passive marker on the toes

Foot tapping

The feet are tested separately. The tested subject sits in a straight-backed chair in front of the camera with both feet on the floor. The subject is then instructed to place the heel on the ground in a comfortable position and then tap the toes ten times, as fast and with as large an amplitude as possible. A system of straps with a reflective marker is positioned on the toes (see Fig. 3).

Movement estimation and feature extraction

For each acquired task, we developed a specific routine able to compute the trajectories of the moving links of the body, i.e. arms, legs, fingers and toes, and to extract a set of hand-crafted features.

Gait and postural analysis

As discussed in the experimental setup section, the human skeleton pose has been estimated by using functions of the Microsoft SDK, which automatically compute the 3D position of the 25 landmark points. Given the whole trajectory of each extracted point, three categories of features have been considered:

temporal features, e.g. duration of gait phases in seconds and in percentage compared to the duration of the gait cycle;

spatial features, e.g. estimated length, width and velocity of movements, normalized by the height or the lower limb length of the subject according to the specific feature;

angular features, e.g. the average angle of specific articulations to evaluate the posture of the body and the range of motions of some other skeletal joints.

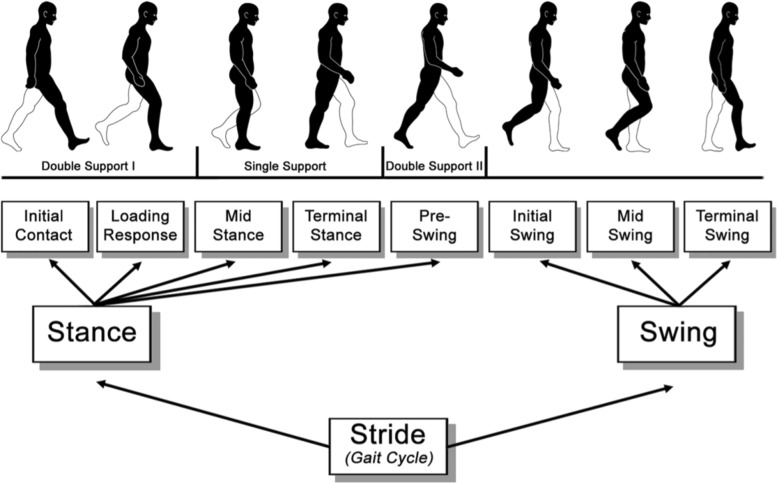

In detail, we first segmented each phase of the gait cycle, i.e. Loading Response (LR), Mid-STance (MST), Terminal Stance (TST), Pre-SWing (PSW), Initial SWing (ISW), Mid-SWing (MSW) and Terminal SWing (TSW) (Fig. 4), as proposed by Tupa et al. [38]. Given the exact start and end times of each gait cycle phase, we then extracted the spatio-temporal features reported in the first 12 rows of Table 1. Concerning the angular features, which are listed at the bottom of Table 1, we computed 1) the range of motion (ROM) of the arm swing along the sagittal plane, the average values of 2) the trunk and 3) the neck flexion angles along the sagittal plane during the whole gait cycle, and 4) the tonic lateral flexion of the trunk along the frontal plane (for more detail about the postural angle definitions please refer to the works of Seah et al. and Barone et al. [50, 51]). The mean and the standard deviation values of both postural and kinematic parameters for all the subjects are summarized in Table 2.
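As an illustration of how temporal and velocity features follow from the segmented gait events, the snippet below gives a minimal sketch. It assumes that the heel-strike and toe-off instants of one foot are already available from the segmentation step; the function name, argument names and the numeric example are hypothetical and are not taken from the study's implementation.

```python
def gait_temporal_features(heel_strike, toe_off, next_heel_strike, stride_length_m):
    """Temporal and velocity features for one gait cycle of one foot.

    Times are in seconds. The cycle runs from a heel strike to the next
    heel strike of the same foot; toe-off separates stance from swing.
    Dictionary keys reuse the acronyms of Table 1 for readability only.
    """
    if not heel_strike < toe_off < next_heel_strike:
        raise ValueError("expected heel_strike < toe_off < next_heel_strike")
    stride_time = next_heel_strike - heel_strike
    stance_time = toe_off - heel_strike
    swing_time = next_heel_strike - toe_off
    return {
        "STt": stance_time,                          # stance time [s]
        "SWt": swing_time,                           # swing time [s]
        "STDt": stride_time,                         # stride time [s]
        "STp": 100.0 * stance_time / stride_time,    # stance phase [%]
        "SWp": 100.0 * swing_time / stride_time,     # swing phase [%]
        "STDc": 60.0 / stride_time,                  # stride cadence [strides/min]
        "STDv": stride_length_m / stride_time,       # stride velocity [m/s]
    }


# Illustrative values only (not measured data).
features = gait_temporal_features(0.0, 0.78, 1.30, 0.713)
```

The normalization by body height or lower limb length mentioned above is deliberately left out of this sketch.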

Fig. 4.

Gait cycle. Breakdown of the gait cycle into phases. Contribution from the work of Stöckel et al. [49]

Table 1.

Postural and gait analysis

| Feature | Acronym | Domain | Unit | Selection |
|---|---|---|---|---|
| Stance Phase | STp | Temporal | % | |
| Swing Phase | SWp | Temporal | % | |
| Double Support Phase | DSp | Temporal | % | Case A,B |
| Stance Time | STt | Temporal | sec | |
| Swing Time | SWt | Temporal | sec | |
| Stride Time | STDt | Temporal | sec | Case B |
| Stride Cadence | STDc | Spatial | #/min | Case A |
| Stride Length | STDl | Spatial | cm | Case A,B |
| Step Length | SPl | Spatial | cm | |
| Step Width | SPw | Spatial | cm | |
| Stride Velocity | STDv | Spatial | m/sec | Case A |
| Swing Velocity | SWv | Spatial | m/sec | Case A |
| Trunk Flexion | TFlex | Angular | degree | Case A,B |
| Neck Flexion | NFlex | Angular | degree | Case A,B |
| Pisa Syndrome | PS | Angular | degree | Case A |
| Arm Swing | ASrom | Angular | degree | Case A,B |

Summary of the 16 Features and the Selected Features (9 features for the Case A and 6 features for the Case B) used by the Classification Algorithms

Table 2.

Postural and gait analysis

| Feature | Healthy | PD | Mild PD | Moderate PD |
|---|---|---|---|---|
| STp | 60.1 ±3.3 | 62.0 ±3.5 | 61.6 ±2.6 | 62.5 ±4.4 |
| SWp | 39.8 ±3.3 | 38.0 ±3.5 | 38.4 ±2.6 | 37.4 ±4.4 |
| DSp | 18.6 ±5.2 | 22.9 ±5.2 | 21.8 ±3.7 | 24.2 ±6.6 |
| STt | 0.8 ±0.1 | 0.8 ±0.1 | 0.9 ±0.1 | 0.8 ±0.1 |
| SWt | 0.5 ±0.1 | 0.5 ±0.1 | 0.6 ±0.1 | 0.5 ±0.1 |
| STDt | 1.3 ±0.1 | 1.4 ±0.2 | 1.5 ±0.2 | 1.3 ±0.2 |
| STDc | 45.0 ±5.2 | 44.9 ±8.3 | 41.8 ±5.1 | 48.9 ±10.0 |
| STDl | 71.3 ±11.0 | 56.9 ±15.1 | 57.3 ±15.3 | 50.3 ±19.8 |
| SPl | 35.8 ±6.2 | 28.4 ±7.8 | 28.6 ±7.9 | 25.1 ±10.0 |
| SPw | 8.8 ±2.6 | 9.7 ±1.9 | 9.6 ±1.9 | 10.1 ±2.0 |
| STDv | 0.5 ±0.1 | 0.4 ±0.1 | 0.4 ±0.1 | 0.4 ±0.1 |
| SWv | 1.2 ±0.3 | 1.0 ±0.2 | 1.0 ±0.2 | 0.9 ±0.3 |
| TFlex | 5.4 ±2.2 | 5.6 ±2.9 | 5.6 ±2.9 | 4.7 ±3.8 |
| NFlex | 7.9 ±2.2 | 8.1 ±2.9 | 8.0 ±2.9 | 7.2 ±3.8 |
| PS | 0.1 ±1.2 | -0.2 ±0.8 | -0.2 ±0.8 | -0.1 ±0.7 |
| ASrom | 16.1 ±7.8 | 11.0 ±6.3 | 10.7 ±5.3 | 10.9 ±8.9 |

Mean and Standard Deviation of Postural and Kinematic Features during Gait

Finger tapping and foot tapping

The acquisition of the finger and foot tapping is based on custom-made trackers covered by reflective material. Even though the two tapping exercises are different movements and rely on trackers of different shapes, a single algorithm has been used to extract the features related to both exercises. In detail, the procedure first extracts the reflective marker positions from the Microsoft Kinect v2 acquisitions and then computes all the features related to the acquired movement.

Image processing for movement tracking.

The two vision-based acquisition systems use passive reflective markers to track and record the position of the thumb, the index finger and the toes. A routine based on image processing techniques has been developed and employed to 1) recognize the markers in each acquired video frame and 2) compute the 3D position of a centroid point associated to the specific marker. As a first step, the blobs associated with the reflective markers have been segmented using the OpenCV library functions on each infrared image frame as follows:

Extraction of the pixels associated with the reflective passive markers with a thresholding operation;

Blurring and thresholding operations in sequence;

Eroding and dilating operations in sequence;

Dilating and eroding operations in sequence.

After the processing steps listed above, all the found blobs are extracted using an edge detection procedure. Only the blobs whose size is comparable with the markers’ size are kept for the subsequent analysis. As a final step, the centroid of each blob (only one blob for the foot tapping and two blobs for the finger tapping) is computed. Given the position of the centroid in the image frame, its depth information and the intrinsic parameters of the Kinect v2, we then computed the 3D position of the centroid associated with each tracked marker in the camera reference system. The centroid position has then been taken as the position of the specific fingertip or of the toes.
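The last step, from an image centroid to a 3D point in the camera frame, can be sketched with the standard pinhole camera model. This is a minimal sketch under stated assumptions: the intrinsic parameters below are placeholder values rather than a calibration of the sensor used in the study, lens distortion is ignored, and the function names are hypothetical.

```python
def blob_centroid(pixels):
    """Centroid (u, v) of a blob given as a list of (u, v) pixel coordinates."""
    if not pixels:
        raise ValueError("empty blob")
    n = len(pixels)
    u = sum(p[0] for p in pixels) / n
    v = sum(p[1] for p in pixels) / n
    return (u, v)


def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth Z (meters).

    fx, fy are the focal lengths in pixels and (cx, cy) is the principal
    point. Returns (X, Y, Z) in the camera frame; the sign convention of
    the image v axis is not adjusted here.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Placeholder intrinsics (assumed for illustration, not calibrated values).
FX, FY, CX, CY = 365.0, 365.0, 256.0, 212.0

centroid = blob_centroid([(10, 20), (12, 20), (10, 22), (12, 22)])
point = backproject(centroid[0], centroid[1], 0.8, FX, FY, CX, CY)
```

In practice the depth value would be read from the depth frame at the centroid location, and the intrinsics would come from the device or from a calibration procedure.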

Feature extraction.

As shown in Fig. 5, the reconstructed marker trajectories have been used to extract the following two signals over time:

d1(t) - the distance between the index fingers and the thumb markers (Finger Tapping);

d2(t) - the distance between the position of the toes’ marker and the position of the same marker when the toes lie on the ground (Foot Tapping).

Fig. 5.

Finger Tapping and Foot Tapping: movement extraction. a Finger tapping. The signal d1(t) is the distance between the two centroids (red filled circles) of the passive finger markers. b Foot tapping. The signal d2(t) is the distance between the centroid of the toes’ marker and the centroid of the same marker when the toes are completely on the ground

Both signals have been normalized to the range [0,1] since the absolute value of the movement amplitude is not meaningful [5]. Given the entire acquired signal, all the single trials (ten finger taps and ten foot taps) have been extracted for each side. We then implemented a simple procedure that automatically extracts the same set of features from both computed signals (d1(t) and d2(t)). The list of features in the time, spatial and frequency domains follows:

meanTime: averaged execution time of the single exercise trial;

varTime: variance of the execution time of the single exercise trial;

meanAmplitude: averaged space amplitude of the single exercise trial;

varAmplitude: variance of the space amplitude of the single exercise trial;

tremors: number of peaks detected during the entire acquisition;

hesitations: number of amplitude peaks detected in the velocity signal during the entire acquisition;

periodicity: periodicity of the exercise computed as reported in [52];

AxF (amplitude times frequency): the average, over the trials, of the ratio between the amplitude peak reached in a single exercise trial and the time duration of that trial.
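A subset of the features listed above can be sketched as follows. This is a minimal sketch, not the procedure used in the study: the trial segmentation rule (upward crossings of a fixed threshold), the threshold value and the use of the population variance are assumptions made for illustration, and the tremors, hesitations and periodicity features are omitted.

```python
import math
from statistics import mean, pvariance


def normalize(signal):
    """Min-max normalization of a distance signal to the range [0, 1]."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        raise ValueError("constant signal cannot be normalized")
    return [(s - lo) / (hi - lo) for s in signal]


def split_trials(norm, threshold=0.2):
    """(start, end) sample indices between consecutive upward threshold crossings.

    Assumed segmentation rule: one tap starts where the signal rises
    through the threshold and ends where the next tap starts.
    """
    starts = [i for i in range(1, len(norm)) if norm[i - 1] < threshold <= norm[i]]
    return list(zip(starts[:-1], starts[1:]))


def tapping_features(signal, fs=30.0, threshold=0.2):
    """Time and amplitude features of a tapping signal sampled at fs Hz."""
    norm = normalize(signal)
    trials = split_trials(norm, threshold)
    if not trials:
        raise ValueError("no complete trial found")
    durations = [(end - start) / fs for start, end in trials]
    amplitudes = [max(norm[start:end]) for start, end in trials]
    return {
        "meanTime": mean(durations),
        "varTime": pvariance(durations),        # population variance (assumption)
        "meanAmplitude": mean(amplitudes),
        "varAmplitude": pvariance(amplitudes),
        "AxF": mean(a / d for a, d in zip(amplitudes, durations)),
    }


# Synthetic 2 Hz tapping signal, 6 s at 30 Hz (illustrative, not recorded data).
fs = 30.0
signal = [0.5 * (1.0 - math.cos(2.0 * math.pi * 2.0 * n / fs)) for n in range(int(6 * fs))]
feats = tapping_features(signal, fs)
```

For this regular synthetic signal every trial lasts 0.5 s, so the time variance is zero and AxF is close to 2; an irregular or progressively shrinking movement would raise the variances and lower AxF.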

Classification

In addition to the classification of healthy subjects vs PD patients that has been quite deeply investigated in previous studies [9], in this work we also focused on the classification of PD patients affected by different disease severity. Then, the following two study cases have been conducted:

Case A: Healthy Subjects versus Parkinson’s Disease patients. The dataset consists of a total of 30 records, 16 PD patients (53.3%) and 14 older healthy subjects (46.7%). Right and left sides of each subject were considered separately, so the final dataset is composed of 60 instances.

Case B: Mild versus Moderate Parkinson’s Disease patients. The dataset consists of a total of 16 records, 9 mild (56.3%) and 7 moderate (43.7%) PD patients. Right and left sides of each patient were considered separately, so the final dataset is composed of 32 instances.

All the analyses have been conducted following two different strategies based on SVMs and ANNs, which represent state-of-the-art classifiers that have gained popularity in pattern recognition tasks [53–55] and have shown promising results in PD classification using kinematic data [9].

Considering the easy tuning of their training parameters, SVM classifiers [56, 57] have been used for a preliminary inspection of the processed data. An SVM is a classifier whose goal is to find the best decision hyperplane that separates the classes in the training feature space. SVMs have high generalization capability because they can be extended to separate a space of non-linear input features [58]. For the purpose of the present work, the training process was based on 5-fold cross-validation and evaluated in sequence the following types of SVM classifiers: (1) linear SVM, (2) quadratic SVM, (3) cubic SVM, (4) Gaussian SVM. We also tested evolutionary approaches, and optimization strategies based on probabilistic graphical models [59, 60], for the design of neural classification architectures [61–64]. In particular, we applied an improved version of the Genetic Algorithm (GA) reported in Bevilacqua et al. [65], where the fitness function maximized by the GA is the mean accuracy reached by each ANN-based classifier trained with a fixed number of iterations, validated and tested using a random permutation of the dataset instances.
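The 5-fold cross-validation scheme can be illustrated with a small index-splitting sketch. It is an assumption-laden illustration: the text does not state whether the folds were shuffled or stratified, so the shuffling, the seed and the function name below are hypothetical choices, and no classifier is trained here.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for a shuffled k-fold split.

    Each instance appears in exactly one test fold; the remaining
    k - 1 folds form the corresponding training set.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


# Case A has 60 instances, so each of the five test folds holds 12 of them.
splits = list(k_fold_indices(60, k=5))
```

A classifier would be fitted on each `train_idx` and scored on the matching `test_idx`, and the per-fold scores would then be averaged.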

Classification based on gait and postural analysis

Concerning the classification based on the features extracted from the gait exercise, for each case (Case A and Case B) we derived two subcases depending on the number of features considered for the classification step (see Table 1). In particular, we ran a correlation-based feature selection procedure (Weka - Attribute Evaluator: CfsSubsetEval - Search Method: BestFirst) that identified 9 features out of 16 for Case A and 6 features out of 16 for Case B:

Subcase A.1: all 16 features;

Subcase A.2: 9 selected features out of 16;

Subcase B.1: all 16 features;

Subcase B.2: 6 selected features out of 16.
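The idea behind correlation-based feature selection can be sketched through its subset merit, which rewards features correlated with the class and penalizes features correlated with each other: for a subset of k features, merit = k·r_cf / sqrt(k + k(k−1)·r_ff), where r_cf is the mean feature-class correlation and r_ff the mean feature-feature correlation. The snippet below is a minimal sketch that assumes absolute Pearson correlation as the correlation measure; it does not reproduce Weka's internal implementation or its BestFirst search, and the toy data are invented.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def cfs_merit(columns, labels):
    """CFS merit of a feature subset given as a list of feature columns."""
    k = len(columns)
    r_cf = sum(abs(pearson(c, labels)) for c in columns) / k
    if k == 1:
        return r_cf
    pairs = [(i, j) for i in range(k) for j in range(i + 1, k)]
    r_ff = sum(abs(pearson(columns[i], columns[j])) for i, j in pairs) / len(pairs)
    return k * r_cf / math.sqrt(k + k * (k - 1) * r_ff)


# Toy data (invented): one informative feature, a redundant copy, and noise.
labels = [0, 0, 0, 1, 1, 1]
informative = [1, 2, 3, 7, 8, 9]
redundant = [2 * v for v in informative]
noise = [5, 1, 4, 2, 6, 3]

merit_single = cfs_merit([informative], labels)
merit_redundant = cfs_merit([informative, redundant], labels)
merit_noisy = cfs_merit([informative, noise], labels)
```

In this toy case the redundant copy leaves the merit unchanged and the noise feature lowers it, which is why a search guided by this merit tends to return compact subsets.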

Classification based on finger tapping and foot tapping analysis

Regarding the classification based on the features extracted from both the finger and foot tapping, for each case (Case A and Case B) we derived three subcases depending on the number of features considered for the classification step. Here, we list all the analyzed subcases:

Subcase A.1: all the 8 finger tapping features;

Subcase A.2: all the 8 foot tapping features;

Subcase A.3: both finger and foot tapping features.

Subcase B.1: all the 8 finger tapping features;

Subcase B.2: all the 8 foot tapping features;

Subcase B.3: both finger and foot tapping features.

Classification evaluation metrics

Each analyzed classifier has been tested using 5-fold cross-validation and evaluated in terms of Accuracy (Eq. 1), Sensitivity (Eq. 2) and Specificity (Eq. 3), where True Positive (TP), True Negative (TN), False Positive (FP) and False Negative (FN) numbers are computed using the Confusion Matrix reported in Table 3 for a binary classifier example.

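$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

Table 3.

Confusion Matrix for performance evaluation of a binary classifier

| | True condition: Positive | True condition: Negative |
|---|---|---|
| Predicted condition: Positive | TP | FP |
| Predicted condition: Negative | FN | TN |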
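The three indices can be computed directly from the confusion matrix counts, using the standard definitions of accuracy, sensitivity and specificity. The sketch below is illustrative: the function name is hypothetical, and the example counts assume a classifier that assigns all 32 Case B instances to the positive class, with the 18 mild-PD instances taken as positives (an assumption about the class coding).

```python
def binary_metrics(tp, fp, fn, tn):
    """Return (accuracy, sensitivity, specificity) from confusion matrix counts."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("empty confusion matrix")
    accuracy = (tp + tn) / total
    # Guard against classes that are absent from the evaluated fold.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return accuracy, sensitivity, specificity


# All instances predicted positive: 18 true positives, 14 false positives.
acc, sens, spec = binary_metrics(tp=18, fp=14, fn=0, tn=0)
```

Such a classifier reaches a sensitivity of 1.0 and a specificity of 0.0, while its accuracy equals the share of the positive class (0.5625), so accuracy alone can hide a degenerate decision rule.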

Results

All the participants were able to complete both clinical and instrumented evaluations. Here we report the main achievements in terms of Accuracy, Sensitivity and Specificity for the classification algorithms.

Gait and postural analysis

We reported and compared the results obtained with both the best SVM-based and optimized ANN classifiers in Table 4. In detail, the comparison has been evaluated analyzing the average values of Accuracy, Sensitivity and Specificity across the 5-fold cross-validations.

Table 4.

Postural and gait analysis

| Subcase | Classifier | Accuracy [%] | Sensitivity [%] | Specificity [%] |
|---|---|---|---|---|
| A.1 | SVM | 73.4 ±4.3 | 78.0 ±5.4 | 68.2 ±7.3 |
| A.1 | ANN | 84.7 ±8.6 | 82.6 ±14.0 | 86.7 ±13.2 |
| A.2 | SVM | 78.5 ±3.4 | 81.7 ±4.9 | 74.8 ±5.5 |
| A.2 | ANN | 89.4 ±8.2 | 87.0 ±12.7 | 91.8 ±11.0 |
| B.1 | SVM | 83.6 ±3.9 | 67.3 ±6.8 | 96.3 ±4.4 |
| B.1 | ANN | 87.9 ±9.7 | 76.5 ±21.7 | 97.0 ±9.3 |
| B.2 | SVM | 88.7 ±3.9 | 78.9 ±6.0 | 96.3 ±5.1 |
| B.2 | ANN | 95.0 ±7.1 | 90.0 ±15.7 | 99.0 ±4.3 |

ANN and SVM performance comparison with all the features and selected features

In Table 4 we reported the classification performance comparison between the ANN and the best SVM-based classifiers for each studied subcase. The results showed that the ANN classifier performed the best for each considered subcase. In particular, when diagnosing PD (Case A), the ANN reached 89.4% (±8.2%) of Accuracy, 87.0% (±12.7%) of Sensitivity and 91.8% (±11.0%) of Specificity with only 9 selected features, while in classifying mild versus moderate PD patients (Case B) the ANN reached 95.0% (±7.1%) of Accuracy, 90.0% (±15.7%) of Sensitivity and 99.0% (±4.3%) of Specificity with the 6 selected features. The optimized topologies of the best ANN classifiers for each case are reported in Fig. 6.

Fig. 6.

Optimal ANN topologies. Optimal topologies for ANNs obtained with the procedure based on the genetic algorithm: (top) Case A.2: Dataset with only 9 Features, (bottom) Case B.2: Dataset with only 6 Features

Finger tapping and foot tapping analysis

Here we reported and compared the results obtained with the best SVM for each of the three presented subcases. In detail, the comparison has been evaluated analyzing the average values of Accuracy, Sensitivity and Specificity across the 5-fold cross-validations (see Table 5).

Table 5.

Finger tapping and foot tapping analysis

| Case | Subcase | Accuracy [%] | Sensitivity [%] | Specificity [%] |
|---|---|---|---|---|
| Case A | A.1 | 71.0 ±2.4 | 75.7 ±1.4 | 65.5 ±1.4 |
| Case A | A.2 | 85.5 ±1.7 | 91.0 ±4.2 | 79.0 ±5.2 |
| Case A | A.3 | 87.1 ±3.6 | 87.7 ±3.1 | 86.0 ±1.7 |
| Case B | B.1 | 57.0 ±2.3 | 100.0 | 0.0 |
| Case B | B.2 | 81.0 ±1.2 | 84.0 ±1.7 | 78.0 ±2.9 |
| Case B | B.3 | 78.0 ±5.2 | 89.0 ±4.2 | 64.0 ±3.7 |

Classification indices: performance comparison among the best classifiers trained for each studied sub-case

Considering Case A, i.e. "Healthy subjects vs PD patients" classification, we reported the results of the best trained SVM-based classifier: (sub-case A.1) the Gaussian SVM reached an accuracy of 71.0% (±2.4), a sensitivity of 75.7% (±1.4) and a specificity of 65.5% (±1.4); (sub-case A.2) the Gaussian SVM reached an accuracy of 85.5% (±1.7), a sensitivity of 91.0% (±4.2) and a specificity of 79.0% (±5.2); (sub-case A.3) the Quadratic SVM reached an accuracy of 87.1% (±3.6), a sensitivity of 87.7% (±3.1) and a specificity of 86.0% (±1.7). Likewise for Case B, i.e. "Mild PD patients vs Moderate PD patients" classification, we reported the results of the best trained SVM-based classifier: (sub-case B.1) the Gaussian SVM reached an accuracy of 57.0% (±2.3), a sensitivity of 100% and a specificity of 0.0%; (sub-case B.2) the Gaussian SVM reached an accuracy of 81.0% (±1.2), a sensitivity of 84.0% (±1.7) and a specificity of 78.0% (±2.9); (sub-case B.3) the Gaussian SVM reached an accuracy of 78.0% (±5.2), a sensitivity of 89.0% (±4.2) and a specificity of 64.0% (±3.7).

Discussion

In the last decade, ML techniques have been used and compared for PD classification [9]. However, most of the published studies investigate two-group classifications, i.e. PD patients vs healthy subjects, with good results obtained [10]. In this work, we have also focused on the classification between groups of patients featuring different severity levels.

The Microsoft Kinect v2 sensor has been widely utilized for PD-related research, however we noticed that most of the research studies focused on comparisons of the Kinect device with respect to optoelectronic systems. The previous studies that focus on the lower limbs usually evaluate only kinematic parameters, whereas we introduced a vision system that is able to recognize and rate PD’s motor features taking into account also postural oscillations and kinematics of upper body parts (trunk, neck and arms) while walking.

Gait and postural features have been organized by domains, and classification was carried out considering either the complete set of extracted features or a subset of them selected with a correlation based feature selection algorithm. We found out that:

the spatial and the angular domains were the most relevant in terms of information content after feature selection phase;

the reduced dataset for Case A (A.2) showed better results in terms of classification with 89.4% (±8.2%) of Accuracy, 87.0% (±12.7%) of Sensitivity and 91.8% (±11.0%) of Specificity;

the reduced dataset for Case B (B.2) performed the best in terms of classification with 95.0% (±7.1%) of Accuracy, 90.0% (±15.7%) of Sensitivity and 99.0% (±4.3%) of Specificity.

Our findings suggest that postural variables were the most relevant features associated with PD, which confirmed the importance of postural attitudes during walking in the neurodegeneration process. In fact, only 9 features were necessary, i.e. Double Support Phase, Stride Cadence, Stride Velocity, Swing Velocity, Stride Length, Trunk and Neck Flexion, Pisa Syndrome and Arm Swing, to diagnose PD (Case A) and only 6 features, i.e. Double Support Phase, Stride Time, Stride Length, Trunk and Neck Flexion and Arm Swing, were able to rate the severity level in PD patients (Case B).

Considering the classification based on the finger and foot tapping exercises, we first investigated the ability of the extracted features to distinguish between healthy subjects and PD patients using an SVM-based classifier. As a first step, we analyzed the finger tapping (FiT) and the foot tapping (FoT) features independently; we then analyzed the features extracted from both exercises together. The main findings of this analysis indicate that:

the features extracted from the foot tapping exercise lead to a better classification in terms of all the three computed indices when compared with the finger tapping: accuracy (FoT: 85.5% (±1.7) vs FiT: 71.0% (±2.4)), sensitivity (FoT: 91.0% (±4.2) vs FiT: 75.7% (±1.4)) and specificity (FoT: 79.0% (±5.2) vs FiT: 65.5% (±1.4));

using the features extracted from both exercises (FoT and FiT), the SVM classifier performs better overall than the classifiers based on only the FiT or only the FoT features. In particular, the classifier based on both feature sets reached a higher accuracy (87.1% (±3.6)) and a higher specificity (86.0% (±1.7)), with a slightly lower sensitivity (87.7% (±3.1)) than the FoT-only classifier.
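For reference, the three indices compared above follow directly from the confusion-matrix counts (TP, TN, FP, FN). The Python sketch below shows the computation and a mean (± standard deviation) summary over repeated runs. It assumes that PD patients are the positive class and that the ± values are standard deviations over repetitions; neither is stated in this section, and the counts are invented for illustration.

```python
from statistics import mean, stdev

def classification_indices(tp, tn, fp, fn):
    """Return (accuracy, sensitivity, specificity) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)  # true positive rate (TPR)
    specificity = tn / (tn + fp)  # true negative rate (TNR)
    return accuracy, sensitivity, specificity

def summarize(runs):
    """Mean and sample standard deviation of each index over repeated runs."""
    per_index = list(zip(*(classification_indices(*r) for r in runs)))
    names = ("accuracy", "sensitivity", "specificity")
    return {n: (mean(v), stdev(v)) for n, v in zip(names, per_index)}

# Invented (TP, TN, FP, FN) counts for three runs on 16 PD patients and 14 healthy subjects.
runs = [(14, 12, 2, 2), (13, 12, 2, 3), (15, 11, 3, 1)]
summary = summarize(runs)
for name, (m, s) in summary.items():
    print(f"{name}: {100 * m:.1f}% (±{100 * s:.1f})")
```

Reporting all three indices matters because accuracy alone can hide an imbalance between the two error types.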

Hence, the analysis of Case A indicates that the features we selected from the movements acquired during both the finger and foot tapping exercises can capture the abnormal motor activity of a PD patient with good classification performance. We also investigated the contribution of the features extracted from the finger and foot tapping exercises to distinguishing between mild and moderate PD patients using an SVM-based classifier. As for the "Healthy subjects vs PD patients" classification, we first analyzed the finger tapping (FiT) and the foot tapping (FoT) features independently, and then analyzed the features extracted from both movements together. The main findings of this analysis indicate that:

the FiT features are not representative of the difference between mild and moderate PD subjects (accuracy 57.0% (±2.3), sensitivity 100% and specificity 0.0%);

the extracted FoT features lead to the best accuracy (81.0% (±1.2)) and specificity (78.0% (±2.9));

the SVM classifier that uses both feature sets shows a slightly lower accuracy (78.0% (±5.2)) and a lower specificity (64.0% (±3.7)) than the SVM that uses only the FoT features, but the best sensitivity (89.0% (±4.2)).

Hence, the analysis of Case B indicates that the features we selected from the movements acquired during both the FoT and FiT exercises lead to good "Mild PD patients vs Moderate PD patients" classification results, although the scores are slightly lower than those of the "Healthy subjects vs PD patients" classification. It is also worth noting that the FoT features are the most important for achieving the best accuracy and specificity, and that the extracted FiT features are not representative of the motor differences between mild and moderate PD patients, since they lead to lower accuracy and specificity. Only when the FiT features are used together with the FoT features does the SVM classifier achieve a better sensitivity, at the expense of both accuracy and specificity.
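The FiT-only pattern reported above (sensitivity 100%, specificity 0.0%) is what one would expect from a classifier that assigns every test subject to the same class. The short Python sketch below illustrates this under that assumption: accuracy then simply equals the share of the positive class in the test set. The group sizes are hypothetical and are not the study's actual mild/moderate split.

```python
def always_positive_indices(n_positive, n_negative):
    """Indices of a classifier that labels every test subject as positive."""
    tp, fn = n_positive, 0  # every positive subject is detected
    tn, fp = 0, n_negative  # every negative subject is misclassified
    accuracy = (tp + tn) / (n_positive + n_negative)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, sensitivity, specificity

# Hypothetical test set with 5 positive and 3 negative subjects.
acc, sens, spec = always_positive_indices(n_positive=5, n_negative=3)
print(f"accuracy={acc:.3f}, sensitivity={sens:.1f}, specificity={spec:.1f}")
```

An accuracy above 50% in such a case therefore reflects class proportions rather than discriminative power, which is consistent with the conclusion that the FiT features alone do not separate mild from moderate patients.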

Even though the number of tested patients is comparable to that of previously published studies [9], the major limitation of this study is that only two levels of PD severity were analyzed. Future studies might consider not only mild and moderate PD patients but also slight and severe ones.

Conclusions

Parkinson’s Disease affects a large part of the worldwide population: about 1% of people over 55 years of age have the disease. Most current methods for evaluating PD, e.g. the UPDRS scale, rely heavily on human expertise. In this work we designed, implemented and tested a low-cost vision-based tool that automatically evaluates the motor abilities of PD patients in order to rate disease severity. We investigated the motor abilities of both the upper (finger and foot tapping analysis) and lower body (postural and gait analysis). Regarding the postural and gait analysis, we initially considered sixteen features: 7 temporal parameters (i.e., Stance phase %, Swing phase %, Double Support phase %, Stance time, Swing time, Stride time, and Stride cadence), 5 spatial parameters (i.e., Step length, Stride velocity, Swing velocity, Stride length, Step width), and 4 postural parameters (i.e., Average Trunk and Neck Flexion, Pisa Syndrome, Arm Swing Range of Motion). Results showed that the ANN classifier performed best, reaching 89.4% accuracy with only nine features in diagnosing PD and 95.0% accuracy with only six features in rating PD severity. We found that postural features were relevant in both cases and, to our knowledge, no previous study has investigated in depth the role of these components in the classification and rating of PD. Concerning the finger and foot tapping analysis, we extracted eight main features from the trajectories of the acquired movements using image processing techniques. Several SVM classifiers were trained and evaluated to investigate whether the selected sets of features can be used to detect the main differences between healthy subjects and PD patients, and then between mild and moderate PD patients. Results showed that an SVM using the features extracted from both the finger and foot tapping exercises can distinguish healthy subjects from PD patients with 87.1% accuracy, 86.0% specificity and 87.7% sensitivity. In the classification of mild vs moderate PD patients, the foot tapping features proved more representative than the finger tapping features: the SVM based on the foot tapping features reached the best accuracy (81.0%) and specificity (78.0%).

From our findings, we can conclude that an automatic vision system based on the Kinect v2 sensor, together with the selected features, could represent a valid tool to support the assessment of postural and spatio-temporal characteristics acquired from gait, finger tapping and foot tapping in participants with or without PD. In addition, the sensitivity of the Kinect v2 sensor could support medical specialists in the assessment and rating of PD patients. Finally, the low cost and the easy and fast setup of the designed and implemented tool encourage its use in a real clinical scenario. Future work will focus on the integrated analysis of the data acquired during the three exercises. Moreover, further analysis could consider a larger number of patients performing more kinds of exercises. Finally, deep learning techniques might be evaluated, considering the large amount of data that could be generated [66, 67].

Acknowledgements

We would like to thank all the health professionals at Medica Sud S.r.l. for their support and all the patients who volunteered to take part in the study.

About this supplement

This article has been published as part of BMC Medical Informatics and Decision Making Volume 19 Supplement 9, 2019: Proceedings of the 2018 International Conference on Intelligent Computing (ICIC 2018) and Intelligent Computing and Biomedical Informatics (ICBI) 2018 conference: medical informatics and decision making. The full contents of the supplement are available online at https://bmcmedinformdecismak.biomedcentral.com/articles/supplements/volume-19-supplement-9.

Abbreviations

- ANN: Artificial neural network

- FiT: Finger tapping

- FN: False negative

- FoT: Foot tapping

- FP: False positive

- GA: Genetic algorithm

- IMU: Inertial measurement unit

- ISW: Initial swing

- LR: Loading response

- ML: Machine learning

- MDS-UPDRS: Movement Disorder Society – Unified Parkinson’s Disease Rating Scale

- MST: Mid-stance

- MSW: Mid-swing

- PD: Parkinson’s disease

- PSW: Pre-swing

- ROM: Range of motion

- SVM: Support vector machine

- TN: True negative

- TNR: True negative rate

- TP: True positive

- TPR: True positive rate

- TST: Terminal stance

- TSW: Terminal swing

Authors’ contributions

The research question and design were largely determined by DB, IB and VB; the data collection grid was developed by all authors. DB, IB, GDC, GFT and AB took responsibility for the data collection. All authors participated in the data treatment. All authors took part in the interpretation of the results and made significant contributions to the manuscript, including drafting and revising it for critical intellectual content. All authors read and approved the final manuscript.

Funding

The activities of this work and the publication costs have been funded by the Italian project ROBOVIR (BRIC-INAIL-2016).

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available due to restrictions associated with the anonymity of participants, but are available from the corresponding author on reasonable request.

Ethics approval and consent to participate

The experimental procedures were conducted in accordance with the Declaration of Helsinki. All participants provided written informed consent.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Domenico Buongiorno, Email: domenico.buongiorno@poliba.it.

Ilaria Bortone, Email: ilaria.bortone@ifc.cnr.it.

Giacomo Donato Cascarano, Email: giacomodonato.cascarano@poliba.it.

Gianpaolo Francesco Trotta, Email: gianpaolofrancesco.trotta@poliba.it.

Antonio Brunetti, Email: antonio.brunetti@poliba.it.

Vitoantonio Bevilacqua, Email: vitoantonio.bevilacqua@poliba.it.

References

- 1.Feigin Valery L, Abajobir Amanuel Alemu, Abate Kalkidan Hassen, Abd-Allah Foad, Abdulle Abdishakur M, Abera Semaw Ferede, et al. Global, regional, and national burden of neurological disorders during 1990–2015: a systematic analysis for the Global Burden of Disease Study 2015. The Lancet Neurology. 2017;16(11):877–897. doi: 10.1016/S1474-4422(17)30299-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Dorsey E. Ray, Bloem Bastiaan R. The Parkinson Pandemic—A Call to Action. JAMA Neurology. 2018;75(1):9. doi: 10.1001/jamaneurol.2017.3299. [DOI] [PubMed] [Google Scholar]

- 3.Twelves D, Perkins KS, Counsell C. Systematic review of incidence studies of parkinson’s disease. Mov Disord Off J Mov Disord Soc. 2003;18(1):19–31. doi: 10.1002/mds.10305. [DOI] [PubMed] [Google Scholar]

- 4.Horváth K, Aschermann Z, Ács P, Deli G, Janszky J, Komoly S, Balázs É, Takács K, Karádi K, Kovács N. Minimal clinically important difference on the motor examination part of mds-updrs. Parkinsonism Relat Disord. 2015;21(12):1421–6. doi: 10.1016/j.parkreldis.2015.10.006. [DOI] [PubMed] [Google Scholar]

- 5.Goetz CG, Tilley BC, Shaftman SR, Stebbins GT, Fahn S, Martinez-Martin P, Poewe W, Sampaio C, Stern MB, Dodel R, et al. Movement disorder society-sponsored revision of the unified parkinson’s disease rating scale (mds-updrs): scale presentation and clinimetric testing results. Mov Disord Off J Mov Disord Soc. 2008;23(15):2129–70. doi: 10.1002/mds.22340. [DOI] [PubMed] [Google Scholar]

- 6.Hoehn MM, Yahr MD. Parkinsonism: onset, progression, and mortality. Neurology. 1967;17(5):427. doi: 10.1212/WNL.17.5.427. [DOI] [PubMed] [Google Scholar]

- 7.Morris M, Iansek R, McGinley J, Matyas T, Huxham F. Three-dimensional gait biomechanics in parkinson’s disease: Evidence for a centrally mediated amplitude regulation disorder. Mov Disord Off J Mov Disord Soc. 2005;20(1):40–50. doi: 10.1002/mds.20278. [DOI] [PubMed] [Google Scholar]

- 8.Bortone I, Argentiero A, Agnello N, Santo Sabato S, Bucciero A. A two-stage approach to bring the postural assessment to masses: the kiss-health project. In: Biomedical and Health Informatics (BHI), 2014 IEEE-EMBS International Conference On. IEEE: 2014. p. 371–4. 10.1109/bhi.2014.6864380. [DOI]

- 9.Rovini E, Maremmani C, Cavallo F. How wearable sensors can support parkinson’s disease diagnosis and treatment: A systematic review. Front Neurosci. 2017; 11:555. 10.3389/fnins.2017.00555. [DOI] [PMC free article] [PubMed]

- 10.Cavallo Filippo, Moschetti Alessandra, Esposito Dario, Maremmani Carlo, Rovini Erika. Upper limb motor pre-clinical assessment in Parkinson's disease using machine learning. Parkinsonism & Related Disorders. 2019;63:111–116. doi: 10.1016/j.parkreldis.2019.02.028. [DOI] [PubMed] [Google Scholar]

- 11.Benmalek Elmehdi, Elmhamdi Jamal, Jilbab Abdelilah. Multiclass classification of Parkinson’s disease using different classifiers and LLBFS feature selection algorithm. International Journal of Speech Technology. 2017;20(1):179–184. doi: 10.1007/s10772-017-9401-9. [DOI] [Google Scholar]

- 12.Djurić-Jovičić M, Bobić VN, Ječmenica-Lukić M, Petrović IN, Radovanović SM, Jovičić NS, Kostić VS, Popović MB. Implementation of continuous wavelet transformation in repetitive finger tapping analysis for patients with pd. In: 2014 22nd Telecommunications Forum Telfor (TELFOR): 2014. p. 541–4. 10.1109/TELFOR.2014.7034466. [DOI]

- 13.Yokoe M., Okuno R., Hamasaki T., Kurachi Y., Akazawa K., Sakoda S. Opening velocity, a novel parameter, for finger tapping test in patients with Parkinson's disease. Parkinsonism & Related Disorders. 2009;15(6):440–444. doi: 10.1016/j.parkreldis.2008.11.003. [DOI] [PubMed] [Google Scholar]

- 14.Rigas G., Tzallas A. T., Tsipouras M. G., Bougia P., Tripoliti E. E., Baga D., Fotiadis D. I., Tsouli S. G., Konitsiotis S. Assessment of Tremor Activity in the Parkinson’s Disease Using a Set of Wearable Sensors. IEEE Transactions on Information Technology in Biomedicine. 2012;16(3):478–487. doi: 10.1109/TITB.2011.2182616. [DOI] [PubMed] [Google Scholar]

- 15.Kostikis N., Hristu-Varsakelis D., Arnaoutoglou M., Kotsavasiloglou C. A Smartphone-Based Tool for Assessing Parkinsonian Hand Tremor. IEEE Journal of Biomedical and Health Informatics. 2015;19(6):1835–1842. doi: 10.1109/JBHI.2015.2471093. [DOI] [PubMed] [Google Scholar]

- 16.Djuric-Jovicic Milica D., Jovicic Nenad S., Radovanovic Sasa M., Stankovic Iva D., Popovic Mirjana B., Kostic Vladimir S. Automatic Identification and Classification of Freezing of Gait Episodes in Parkinson's Disease Patients. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2014;22(3):685–694. doi: 10.1109/TNSRE.2013.2287241. [DOI] [PubMed] [Google Scholar]

- 17.Tripoliti Evanthia E., Tzallas Alexandros T., Tsipouras Markos G., Rigas George, Bougia Panagiota, Leontiou Michael, Konitsiotis Spiros, Chondrogiorgi Maria, Tsouli Sofia, Fotiadis Dimitrios I. Automatic detection of freezing of gait events in patients with Parkinson's disease. Computer Methods and Programs in Biomedicine. 2013;110(1):12–26. doi: 10.1016/j.cmpb.2012.10.016. [DOI] [PubMed] [Google Scholar]

- 18.Tsanas A., Little M.A., McSharry P.E., Ramig L.O. Accurate Telemonitoring of Parkinson's Disease Progression by Noninvasive Speech Tests. IEEE Transactions on Biomedical Engineering. 2010;57(4):884–893. doi: 10.1109/TBME.2009.2036000. [DOI] [PubMed] [Google Scholar]

- 19.Mellone Sabato, Palmerini Luca, Cappello Angelo, Chiari Lorenzo. Hilbert–Huang-Based Tremor Removal to Assess Postural Properties From Accelerometers. IEEE Transactions on Biomedical Engineering. 2011;58(6):1752–1761. doi: 10.1109/TBME.2011.2116017. [DOI] [PubMed] [Google Scholar]

- 20.Heldman Dustin A., Espay Alberto J., LeWitt Peter A., Giuffrida Joseph P. Clinician versus machine: Reliability and responsiveness of motor endpoints in Parkinson's disease. Parkinsonism & Related Disorders. 2014;20(6):590–595. doi: 10.1016/j.parkreldis.2014.02.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Salarian Arash, Russmann Heike, Wider Christian, Burkhard Pierre R., Vingerhoets Franios J. G., Aminian Kamiar. Quantification of Tremor and Bradykinesia in Parkinson's Disease Using a Novel Ambulatory Monitoring System. IEEE Transactions on Biomedical Engineering. 2007;54(2):313–322. doi: 10.1109/TBME.2006.886670. [DOI] [PubMed] [Google Scholar]

- 22.Dai H, Lin H, Lueth TC. Quantitative assessment of parkinsonian bradykinesia based on an inertial measurement unit. BioMed Eng OnLine. 2015; 14(1):68. 10.1186/s12938-015-0067-8. [DOI] [PMC free article] [PubMed]

- 23.Griffiths Robert I., Kotschet Katya, Arfon Sian, Xu Zheng Ming, Johnson William, Drago John, Evans Andrew, Kempster Peter, Raghav Sanjay, Horne Malcolm K. Automated Assessment of Bradykinesia and Dyskinesia in Parkinson's Disease. Journal of Parkinson's Disease. 2012;2(1):47–55. doi: 10.3233/JPD-2012-11071. [DOI] [PubMed] [Google Scholar]

- 24.Buongiorno D, Trotta GF, Bortone I, Di Gioia N, Avitto F, Losavio G, Bevilacqua V. Assessment and rating of movement impairment in parkinson’s disease using a low-cost vision-based system. In: Huang D-S, Gromiha MM, Han K, Hussain A, editors. Intelligent Computing Methodologies. Cham: Springer; 2018. [Google Scholar]

- 25.Farran Mohamad, Al-Husseini Mohammed, Kabalan Karim, Capobianco Antonio-Daniele, Boscolo Stefano. Electronically reconfigurable parasitic antenna array for pattern selectivity. The Journal of Engineering. 2019;2019(1):1–5. doi: 10.1049/joe.2018.5141. [DOI] [Google Scholar]

- 26.Keijsers NL, Horstink MW, Gielen SC. Automatic assessment of levodopa-induced dyskinesias in daily life by neural networks. Mov Disord Off J Mov Disord Soc. 2003; 18(1):70–80. 10.1002/mds.10310. [DOI] [PubMed]

- 27.Lopane Giovanna, Mellone Sabato, Chiari Lorenzo, Cortelli Pietro, Calandra-Buonaura Giovanna, Contin Manuela. Dyskinesia detection and monitoring by a single sensor in patients with Parkinson's disease. Movement Disorders. 2015;30(9):1267–1271. doi: 10.1002/mds.26313. [DOI] [PubMed] [Google Scholar]

- 28.Saunders-Pullman Rachel, Derby Carol, Stanley Kaili, Floyd Alicia, Bressman Susan, Lipton Richard B., Deligtisch Amanda, Severt Lawrence, Yu Qiping, Kurtis Mónica, Pullman Seth L. Validity of spiral analysis in early Parkinson's disease. Movement Disorders. 2008;23(4):531–537. doi: 10.1002/mds.21874. [DOI] [PubMed] [Google Scholar]

- 29.Westin Jerker, Ghiamati Samira, Memedi Mevludin, Nyholm Dag, Johansson Anders, Dougherty Mark, Groth Torgny. A new computer method for assessing drawing impairment in Parkinson's disease. Journal of Neuroscience Methods. 2010;190(1):143–148. doi: 10.1016/j.jneumeth.2010.04.027. [DOI] [PubMed] [Google Scholar]

- 30.Bortone Ilaria, Quercia Marco Giuseppe, Ieva Nicola, Cascarano Giacomo Donato, Trotta Gianpaolo Francesco, Tatò Sabina Ilaria, Bevilacqua Vitoantonio. Intelligent Computing Theories and Application. Cham: Springer International Publishing; 2018. Recognition and Severity Rating of Parkinson’s Disease from Postural and Kinematic Features During Gait Analysis with Microsoft Kinect; pp. 613–618. [Google Scholar]

- 31.Loconsole C, Cascarano GD, Lattarulo A, Brunetti A, Trotta GF, Buongiorno D, Bortone I, De Feudis I, Losavio G, Bevilacqua V, Di Sciascio E. A comparison between ann and svm classifiers for parkinson’s disease by using a model-free computer-assisted handwriting analysis based on biometric signals. In: 2018 International Joint Conference on Neural Networks (IJCNN): 2018. p. 1–8. 10.1109/IJCNN.2018.8489293. [DOI]

- 32.Bortone I, Buongiorno D, Lelli G, Di Candia A, Cascarano GD, Trotta GF, Fiore P, Bevilacqua V. Gait analysis and parkinson’s disease: Recent trends on main applications in healthcare. In: Masia L, Micera S, Akay M, Pons JL, editors. Converging Clinical and Engineering Research on Neurorehabilitation III. Cham: Springer; 2019. [Google Scholar]

- 33.Bortone Ilaria, Trotta Gianpaolo Francesco, Brunetti Antonio, Cascarano Giacomo Donato, Loconsole Claudio, Agnello Nadia, Argentiero Alberto, Nicolardi Giuseppe, Frisoli Antonio, Bevilacqua Vitoantonio. Intelligent Computing Theories and Application. Cham: Springer International Publishing; 2017. A Novel Approach in Combination of 3D Gait Analysis Data for Aiding Clinical Decision-Making in Patients with Parkinson’s Disease; pp. 504–514. [Google Scholar]

- 34.Muro-De-La-Herran A, Garcia-Zapirain B, Mendez-Zorrilla A. Gait analysis methods: An overview of wearable and non-wearable systems, highlighting clinical applications. Sensors. 2014;14(2):3362–94. doi: 10.3390/s140203362. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Tao W, Liu T, Zheng R, Feng H. Gait analysis using wearable sensors. Sensors. 2012;12(2):2255–83. doi: 10.3390/s120202255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Eltoukhy M, Kuenze C, Oh J, Jacopetti M, Wooten S, Signorile J. Microsoft kinect can distinguish differences in over-ground gait between older persons with and without parkinson’s disease. Med Eng Phys. 2017;44:1–7. doi: 10.1016/j.medengphy.2017.03.007. [DOI] [PubMed] [Google Scholar]

- 37.Springer S, Yogev Seligmann G. Validity of the kinect for gait assessment: a focused review. Sensors. 2016;16(2):194. doi: 10.3390/s16020194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Ťupa O, Procházka A, Vyšata O, Schätz M, Mareš J, Vališ M, Mařík V. Motion tracking and gait feature estimation for recognising parkinson’s disease using ms kinect. Biomed Eng Online. 2015;14(1):97. doi: 10.1186/s12938-015-0092-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Xu X, McGorry RW, Chou L-S, Lin J-h, Chang C-c. Accuracy of the microsoft kinect™ for measuring gait parameters during treadmill walking. Gait Posture. 2015;42(2):145–51. doi: 10.1016/j.gaitpost.2015.05.002. [DOI] [PubMed] [Google Scholar]

- 40.Bao W, Jiang Z, Huang D-S. Novel human microbe-disease association prediction using network consistency projection. BMC Bioinformatics. 2017;18(16):543. doi: 10.1186/s12859-017-1968-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Shen Z, Bao W, Huang D-S. Recurrent neural network for predicting transcription factor binding sites. Sci Rep. 2018;8(1):15270. doi: 10.1038/s41598-018-33321-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Peng Chen, Zou Liang, Huang De-Shuang. Discovery of Relationships Between Long Non-Coding RNAs and Genes in Human Diseases Based on Tensor Completion. IEEE Access. 2018;6:59152–59162. doi: 10.1109/ACCESS.2018.2873013. [DOI] [Google Scholar]

- 43.Bevilacqua V, Salatino AA, Leo CD, Tattoli G, Buongiorno D, Signorile D, Babiloni C, Percio CD, Triggiani AI, Gesualdo L. Advanced classification of alzheimer’s disease and healthy subjects based on eeg markers. In: 2015 International Joint Conference on Neural Networks (IJCNN): 2015. p. 1–5. 10.1109/IJCNN.2015.7280463. [DOI]

- 44.Bevilacqua V, Buongiorno D, Carlucci P, Giglio F, Tattoli G, Guarini A, Sgherza N, Tullio GD, Minoia C, Scattone A, Simone G, Girardi F, Zito A, Gesualdo L. A supervised cad to support telemedicine in hematology. In: 2015 International Joint Conference on Neural Networks (IJCNN): 2015. p. 1–7. 10.1109/IJCNN.2015.7280464. [DOI]

- 45.Bevilacqua V, Brunetti A, Trotta GF, De Marco D, Quercia MG, Buongiorno D, D’Introno A, Girardi F, Guarini A. A novel deep learning approach in haematology for classification of leucocytes. Smart Innovation, Systems and Technologies. 2019; 103:265–74. 10.1007/978-3-319-95095-2-25. [DOI]

- 46.Bortone I, Trotta GF, Cascarano GD, Regina P, Brunetti A, De Feudis I, Buongiorno D, Loconsole C, Bevilacqua V. A supervised approach to classify the status of bone mineral density in post-menopausal women through static and dynamic baropodometry. In: 2018 International Joint Conference on Neural Networks (IJCNN): 2018. p. 1–7. 10.1109/IJCNN.2018.8489205. [DOI]

- 47.Bevilacqua Vitoantonio, Trotta Gianpaolo Francesco, Loconsole Claudio, Brunetti Antonio, Caporusso Nicholas, Bellantuono Giuseppe Maria, De Feudis Irio, Patruno Donato, De Marco Domenico, Venneri Andrea, Di Vietro Maria Grazia, Losavio Giacomo, Tatò Sabina Ilaria. Advances in Intelligent Systems and Computing. Cham: Springer International Publishing; 2017. A RGB-D Sensor Based Tool for Assessment and Rating of Movement Disorders; pp. 110–118. [Google Scholar]

- 48.Zhao J, Bunn FE, Perron JM, Shen E, Allison RS. Gait assessment using the kinect rgb-d sensor. In: Engineering in Medicine and Biology Society (EMBC), 2015 37th Annual International Conference of the IEEE. IEEE: 2015. p. 6679–83. 10.1109/embc.2015.7319925. [DOI] [PubMed]

- 49.Stöckel T, Jacksteit R, Behrens M, Skripitz R, Bader R, Mau-Moeller A. The mental representation of the human gait in young and older adults. Front Psychol. 2015; 6:943. 10.3389/fpsyg.2015.00943. [DOI] [PMC free article] [PubMed]

- 50.Seah Sofia H.H., Briggs Andrew M., O’Sullivan Peter B., Smith Anne J., Burnett Angus F., Straker Leon M. An exploration of familial associations in spinal posture defined using a clinical grouping method. Manual Therapy. 2011;16(5):501–509. doi: 10.1016/j.math.2011.05.002. [DOI] [PubMed] [Google Scholar]

- 51.Barone P, Santangelo G, Amboni M, Pellecchia MT, Vitale C. Pisa syndrome in parkinson’s disease and parkinsonism: clinical features, pathophysiology, and treatment. The Lancet Neurol. 2016;15(10):1063–74. doi: 10.1016/S1474-4422(16)30173-9. [DOI] [PubMed] [Google Scholar]

- 52.Kanjilal P.P., Palit S., Saha G. Fetal ECG extraction from single-channel maternal ECG using singular value decomposition. IEEE Transactions on Biomedical Engineering. 1997;44(1):51–59. doi: 10.1109/10.553712. [DOI] [PubMed] [Google Scholar]

- 53.Huang D-S. Systematic theory of neural networks for pattern recognition. Publ House Electron Ind China Beijing. 1996; 201.

- 54.Huang De-Shuang. Radial Basis Probabilistic Neural Networks: Model and Application. International Journal of Pattern Recognition and Artificial Intelligence. 1999;13(07):1083–1101. doi: 10.1142/S0218001499000604. [DOI] [Google Scholar]

- 55.Bevilacqua V, Tattoli G, Buongiorno D, Loconsole C, Leonardis D, Barsotti M, Frisoli A, Bergamasco M. A novel bci-ssvep based approach for control of walking in virtual environment using a convolutional neural network. In: 2014 International Joint Conference on Neural Networks (IJCNN): 2014. p. 4121–8. 10.1109/IJCNN.2014.6889955. [DOI]

- 56.Cortes C, Vapnik V. Support-vector networks. Mach Learn. 1995;20(3):273–97. [Google Scholar]

- 57.Suykens JA, Vandewalle J. Least squares support vector machine classifiers. Neural Process Lett. 1999;9(3):293–300. doi: 10.1023/A:1018628609742. [DOI] [Google Scholar]

- 58.Bevilacqua V, Pannarale P, Abbrescia M, Cava C, Paradiso A, Tommasi S. Comparison of data-merging methods with svm attribute selection and classification in breast cancer gene expression. In: BMC Bioinformatics: 2012. p. 9. BioMed Central. [DOI] [PMC free article] [PubMed]

- 59.Bevilacqua Vitoantonio, Costantino Nicola, Dotoli Mariagrazia, Falagario Marco, Sciancalepore Fabio. Strategic design and multi-objective optimisation of distribution networks based on genetic algorithms. International Journal of Computer Integrated Manufacturing. 2012;25(12):1139–1150. doi: 10.1080/0951192X.2012.684719. [DOI] [Google Scholar]

- 60.Bevilacqua V, Pacelli V, Saladino S. A novel multi objective genetic algorithm for the portfolio optimization. In: Huang D-S, Gan Y, Bevilacqua V, Figueroa JC, editors. Advanced Intelligent Computing. Berlin: Springer; 2012. [Google Scholar]

- 61. Huang De-Shuang, Du Ji-Xiang. A Constructive Hybrid Structure Optimization Methodology for Radial Basis Probabilistic Neural Networks. IEEE Transactions on Neural Networks. 2008;19(12):2099–2115. doi: 10.1109/TNN.2008.2004370.

- 62. De Stefano C, Fontanella F, Marrocco C, di Freca AS. A hybrid evolutionary algorithm for Bayesian networks learning: An application to classifier combination. In: European Conference on the Applications of Evolutionary Computation. Springer: 2010. p. 221–30.

- 63. Cordella Luigi P., De Stefano Claudio, Fontanella Francesco, Scotto di Freca Alessandra. A Weighted Majority Vote Strategy Using Bayesian Networks. In: Image Analysis and Processing – ICIAP 2013. Berlin, Heidelberg: Springer Berlin Heidelberg; 2013. pp. 219–228.

- 64. Bevilacqua V., Mastronardi G., Piscopo G. Evolutionary approach to inverse planning in coplanar radiotherapy. Image and Vision Computing. 2007;25(2):196–203. doi: 10.1016/j.imavis.2006.01.027.

- 65. Bevilacqua V, Brunetti A, Triggiani M, Magaletti D, Telegrafo M, Moschetta M. An optimized feed-forward artificial neural network topology to support radiologists in breast lesions classification. In: Proceedings of the 2016 on Genetic and Evolutionary Computation Conference Companion. GECCO ’16 Companion. New York: ACM: 2016. p. 1385–92. doi: 10.1145/2908961.2931733.

- 66. Yi H-C, You Z-H, Huang D-S, Li X, Jiang T-H, Li L-P. A deep learning framework for robust and accurate prediction of ncRNA-protein interactions using evolutionary information. Mol Ther-Nucleic Acids. 2018;11:337–44. doi: 10.1016/j.omtn.2018.03.001.

- 67. Chuai G, Ma H, Yan J, Chen M, Hong N, Xue D, Zhou C, Zhu C, Chen K, Duan B, Gu F, Qu S, Huang D, Wei J, Liu Q. DeepCRISPR: optimized CRISPR guide RNA design by deep learning. Genome Biol. 2018;19(1):80. doi: 10.1186/s13059-018-1459-4.

Data Availability Statement

The datasets generated and/or analyzed during the current study are not publicly available due to restrictions associated with the anonymity of participants, but are available from the corresponding author on reasonable request.